1. Introduction

Wind energy has received widespread attention because it is renewable, environmentally friendly, and sustainable. However, wind power is strongly intermittent and fluctuating, so its large-scale grid connection poses major challenges to the safe and stable operation of the power system, to dispatch optimization, and to energy planning [1]. Accurate wind power forecasting (WPF) can guide power dispatching and grid deployment [2,3], and it is therefore an effective way to mitigate the impact of wind power instability on power systems [4].

In recent years, deep learning has been widely used in wind power forecasting because of its powerful nonlinear fitting and complex data modeling capabilities. Liu et al. [5] proposed a short-term wind power forecasting framework based on wavelet transform (WT) denoising and multi-feature long short-term memory (LSTM) networks. Li et al. [6] decomposed wind power sequences using variational mode decomposition (VMD), grouped the resulting sequences by complexity, and then forecast them with a dual-channel network composed of an Informer and a temporal convolutional network (TCN). Wang et al. [7] partitioned wind farm clusters using empirical orthogonal functions and combined multi-level quantiles with a WaveNet equipped with multi-head self-attention to achieve ultra-short-term, multi-step interval power prediction. Zhu et al. [8] proposed a wind speed prediction method that combines a non-stationary Transformer with dynamic data distillation and wake effect correction. Although these methods have achieved some success in capturing the temporal dependencies of wind power data, wind power prediction is by no means a simple time series prediction problem: the power generated by a wind turbine is determined by both its own historical pattern and the conditions of related wind turbines [9]. The wind power prediction task is therefore essentially a spatiotemporal prediction problem that requires simultaneous characterization of temporal laws and spatial characteristics.

Owing to the advantages of graph neural networks (GNNs) in modeling spatial dependencies, many studies have used GNNs to capture dependencies between wind turbines [10]. These methods use gated recurrent units (GRUs), convolutional neural networks (CNNs), or their variants to capture temporal features, which are then combined with the spatial features captured by GNNs to form joint spatiotemporal features. For example, Daenens et al. [11] constructed a graph based on line-of-sight visibility and used generalized graph convolution (GENConv) and LSTM to extract spatiotemporal dependencies for offshore wind farm power prediction. An et al. [12] constructed a graph from electrical connection relationships and embedded diffusion graph convolution into a GRU to capture spatiotemporal dependencies for ultra-short-term power prediction. Yang et al. [13] partitioned wind farm clusters through deep attentional embedded graph clustering (DAEGC) and used TimesNet to capture multi-period temporal features after data correction and screening. Zhao et al. [14] constructed a multi-graph structure and integrated a TCN with a spatiotemporal attention mechanism to achieve interpretable ultra-short-term wind power prediction. Zhao et al. [15] modelled wind farms as nodes, calculated edge weights from distance and the Pearson correlation coefficient, and constructed a static adjacency matrix; they then used two spatiotemporal graph convolutional (STGCN) layers and an output layer for ultra-short-term wind power prediction. All of these methods construct static graphs whose nodes are wind turbines (or wind farms) and whose edges encode information such as correlation and geographic distance. However, the actual spatial connections between wind turbines are determined not only by their locations but also by time-varying meteorological conditions such as wind speed, wind direction, and humidity, and static graphs with a fixed structure and fixed edge weights may not capture these dynamic changes well.

Because static graphs struggle to capture time-varying spatiotemporal correlations, researchers have begun to explore dynamic graph models for wind power prediction. For example, the authors of [9] integrated geographic distance graphs, semantic distance graphs, and learnable parameter matrices, dynamically optimized the graph structure through a spatial attention mechanism, and then captured spatiotemporal dependencies by combining an Attentional Graph Convolutional Recurrent Network (AGCRN) with LSTM. Yang et al. [10] proposed an ultra-short-term wind power prediction method for large-scale wind farm clusters based on dynamic spatiotemporal graph convolution. This method abstracts the wind farm cluster as a dynamically adjusted graph, combines spatial graph convolution with temporal graph convolution to model spatial and temporal dependencies, and introduces multi-task learning (MTL) to output multi-step predictions for multiple wind farms simultaneously. Han et al. [16] used dynamic graph convolution to capture the dynamic spatial correlation of wind farm clusters and used BiLSTM and Transformer to mine the bidirectional temporal dependencies of wind power, thereby predicting the power of wind farm clusters. Song et al. [17] proposed a wind power forecasting method that uses a multi-resolution CNN and dynamic graph convolution to extract temporal and spatial features, respectively. Dong et al. [18] extracted the spatial and temporal features of wind power data through directed graph convolution and a TCN, respectively, to forecast the power of wind farm clusters. Although dynamic graphs can capture spatial features that change over time, recent studies on time series modeling have shown that temporal and spatial dependencies are heterogeneous [19]. Temporal dependencies usually appear as dynamics within the sequence of a single wind turbine variable (such as changes in wind speed, wind direction, or temperature over time), whereas spatial dependencies often manifest as cross-sequence relationships between wind turbine variables (such as the correlation between wind speed and wind direction) or as dependencies between wind turbines. Existing dynamic graph-based wind power forecasting methods often attempt to extract features from the coupled temporal and spatial dimensions simultaneously. Because these two types of dependencies differ fundamentally, modelling them jointly can entangle information in the learned dependency representation and thus limit the model’s expressive power. Furthermore, existing methods build adjacency graphs with numerous links, and the redundant, noisy edges in such graphs may interfere with the model’s learning of core dependencies and reduce forecasting performance.

To address the above issues, this research proposes a short-term wind power forecasting method based on a triple-flow dynamic graph convolutional network (TFDGCN). Our contributions are as follows:

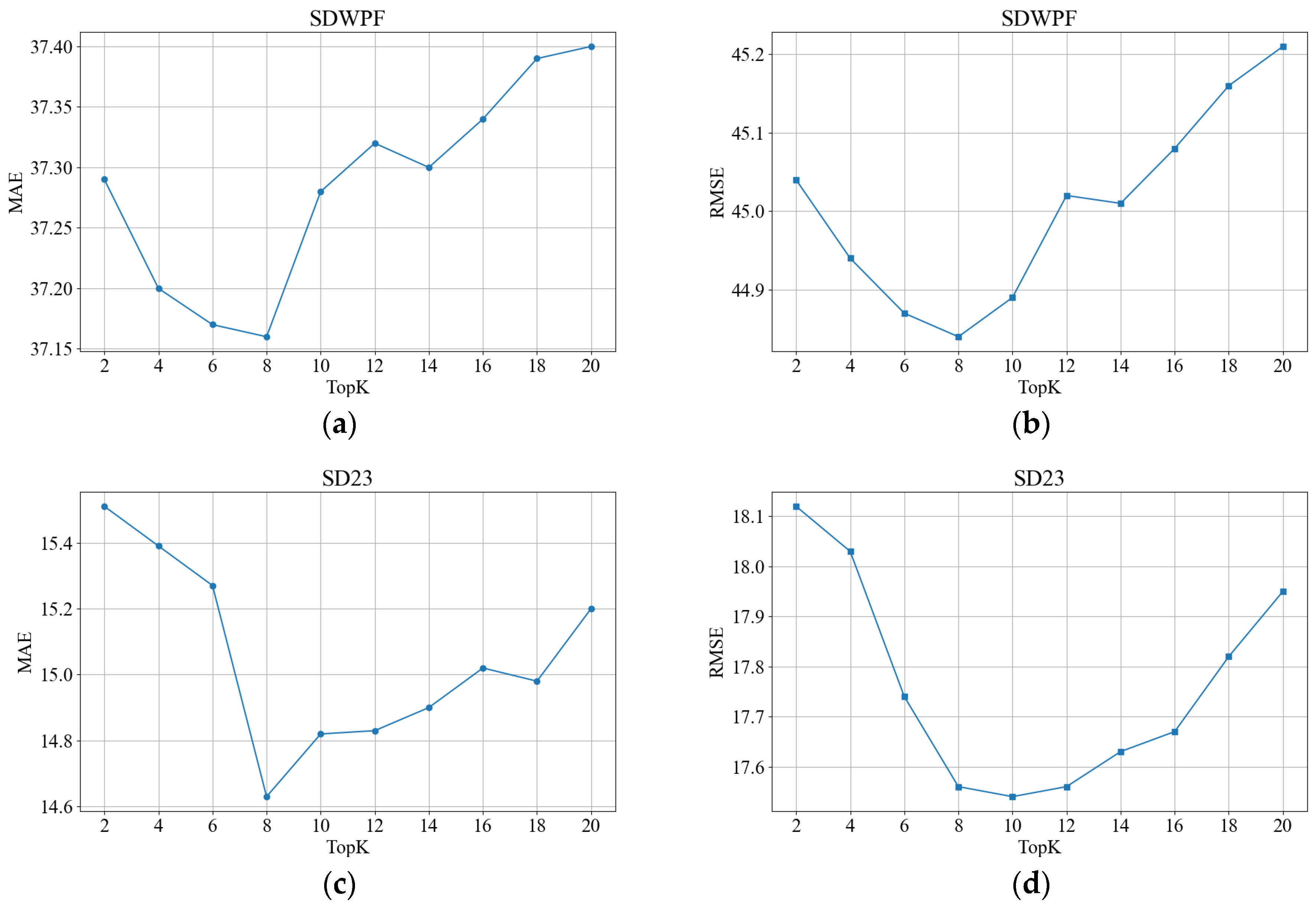

We propose a cosine similarity-based method for constructing dynamic sparse graphs of wind turbines. It dynamically constructs three sparse graphs from the similarities within variable sequences, between variable sequences, and between nodes (wind turbines), which effectively describe these three kinds of relationships while reducing computational complexity.

We propose TFDGCN, which features a symmetric triple-flow architecture. It decouples and learns relationships across three dimensions, namely, within variable sequences, between sequences, and between wind turbines, represented by three sparse dynamic graphs. TFDGCN also uses linear attention to capture global information across the three dimensions.

We conduct extensive experiments on two real-world wind farm datasets to validate the effectiveness of TFDGCN. TFDGCN outperforms the baseline methods on both wind farm datasets. Furthermore, we demonstrate the effectiveness of various components of TFDGCN through ablation experiments.

The rest of this paper is organized as follows: Section 2 details the problem studied in this research and formally defines the wind power prediction problem. The proposed TFDGCN is presented in Section 3, followed by the simulation results reported in Section 4. Finally, Section 5 concludes this paper.

2. Problem Statement

Wind power data from a wind farm exhibit three types of correlation: (1) Variable correlation: the characteristic variables of a wind turbine, such as wind speed, wind direction, and temperature, do not act independently; they are interrelated, influence each other, and jointly determine wind power. (2) Temporal correlation: consecutive wind power readings form a time series, so the data observed at the current moment are correlated with the data observed at past moments. This correlation makes it possible to forecast future power by analyzing historical data. (3) Spatial correlation: different wind turbine nodes within the same wind farm may have similar characteristics (e.g., wind speed, wind direction, temperature, and power) at the same moment, and the correlation between nodes with similar characteristics is essentially spatial correlation.

We capture these three types of correlation through three dynamic spatiotemporal graphs: one based on variable correlation, one based on temporal similarity, and one based on spatial correlation. The construction of these three dynamic graphs is detailed in Section 3.1.

Consider a multidimensional dataset observed in a wind farm. Its dimensions correspond to the wind turbines in the farm, the time steps of the observation period, and the measured variables of each wind turbine, so the slice belonging to one wind turbine is that turbine’s multivariate time series. Our goal is to learn a function f that takes the historical observations within an input time window of fixed length and predicts the wind power over a future time window of fixed length. Each output of f is the wind power predicted for a given wind turbine at a given step of the future time window following the current time.
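The mapping above can be illustrated with a minimal NumPy sketch of how supervised samples might be cut from the raw record. It assumes the dataset is stored as an array of shape (turbines, time steps, variables), that active power is the last variable, and that consecutive windows are taken with a stride of one step; `make_windows`, `input_len`, and `horizon` are hypothetical names, not the authors' code.

```python
import numpy as np


def make_windows(data: np.ndarray, input_len: int, horizon: int, target_idx: int = -1):
    """Slice a (turbines, time, variables) array into supervised samples.

    Returns X with shape (samples, turbines, input_len, variables) and
    Y with shape (samples, turbines, horizon) holding the target variable.
    """
    _, total_time, _ = data.shape
    n_samples = total_time - input_len - horizon + 1
    if n_samples <= 0:
        raise ValueError("series too short for the requested windows")
    # Sample s uses steps [s, s + input_len) as input and the next `horizon` steps as target.
    X = np.stack([data[:, s:s + input_len, :] for s in range(n_samples)])
    Y = np.stack([data[:, s + input_len:s + input_len + horizon, target_idx]
                  for s in range(n_samples)])
    return X, Y


# Toy data: 3 turbines, 20 time steps, 4 variables; the last variable stands in for active power.
rng = np.random.default_rng(0)
data = rng.normal(size=(3, 20, 4))
X, Y = make_windows(data, input_len=8, horizon=4)
print(X.shape, Y.shape)  # (9, 3, 8, 4) (9, 3, 4)
```

With 20 steps, an 8-step input window, and a 4-step horizon, 20 - 8 - 4 + 1 = 9 samples fit into the record.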

3. Methodology

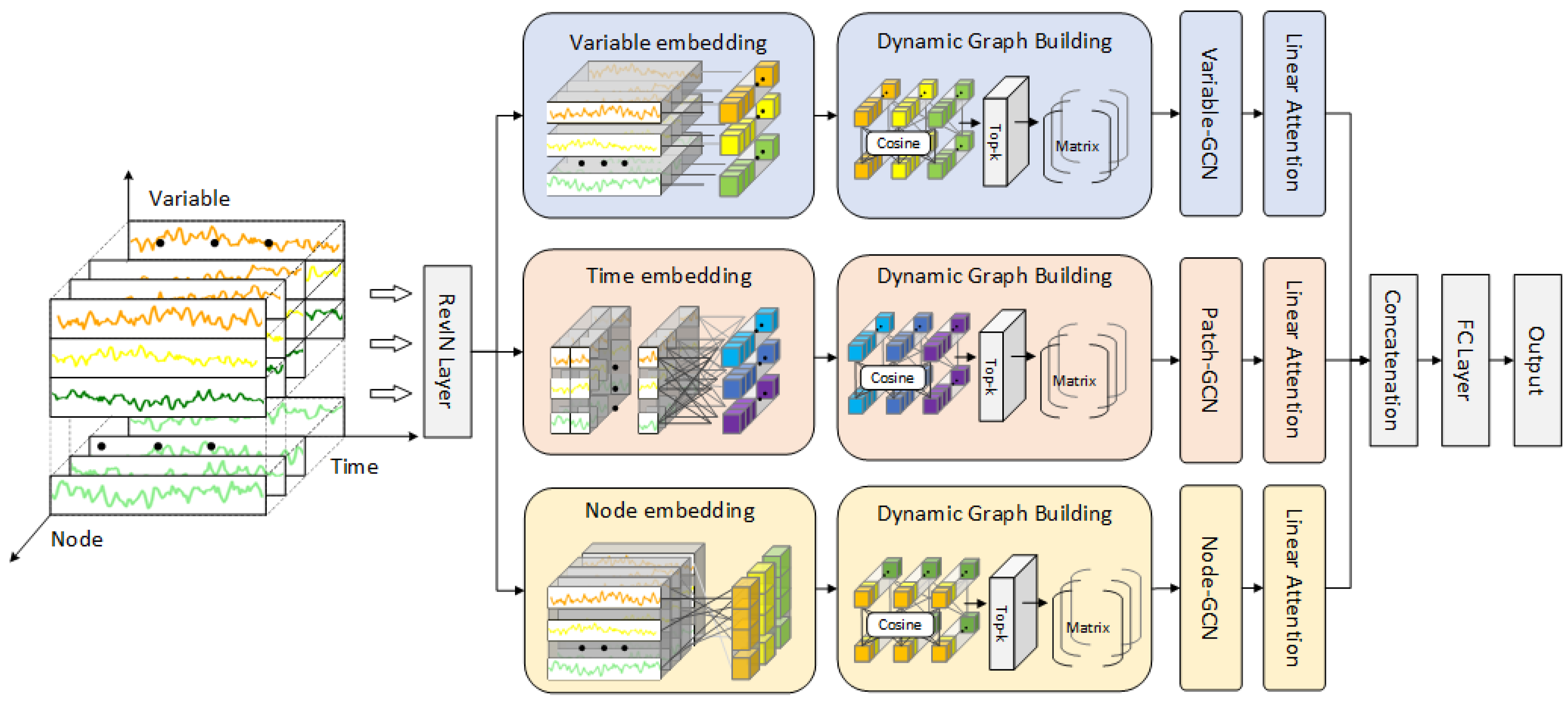

The proposed TFDGCN network architecture is shown in Figure 1. The input data for TFDGCN are multivariate wind power time series. The coordinate system in Figure 1 illustrates the variable, temporal, and spatial correlations inherent in these multivariate time series. In Figure 1, the Variable axis represents wind turbine time series variables such as wind speed, wind direction, ambient temperature, nacelle temperature, nacelle direction, blade pitch angle, reactive power, and active power; it describes the relationships between the multivariate time series. The Time axis represents the data sequence formed by the changes in wind turbine variables over time; it reflects the changes across time slices within a variable’s time series, that is, how the variable evolves over time. The Node axis represents the characteristics of wind turbine nodes, namely the wind turbine power at the current moment and the wind speed, wind direction, and temperature at its location; it reflects the spatial correlation between wind turbine nodes at the current moment.

TFDGCN is a three-stream graph convolutional network (GCN) consisting of a variable flow, a time flow, and a node flow, with a symmetric architecture that ensures consistent processing logic across all three branches. The statistical characteristics of time series data, such as mean and variance, change over time, which makes the distributions of training and test data inconsistent; this is known as distribution drift, and it can seriously degrade the generalization ability of the model. TFDGCN therefore first applies a reversible instance normalization (RevIN) layer to standardize the data and address distribution drift [20]. The standardized data are then fed into the three branches. In each branch, an embedding operation is performed first, and an adjacency graph representing the variable, time, or node relationships is constructed. TFDGCN then uses dynamic graph convolution to propagate and aggregate features over the dynamic graph and employs linear attention to capture long-range dependencies. The outputs of the three branches are concatenated and passed through a fully connected layer to produce the final prediction.
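A minimal NumPy sketch of reversible instance normalization (RevIN) as it is commonly formulated is shown below: each sample is standardized per variable with statistics computed over its own input window, an affine map (`gamma`, `beta`, learnable in practice but fixed here) is applied, and the stored statistics can later map forecasts back to the original scale. The class and method names are illustrative, and whether TFDGCN applies the reverse step to its output is an assumption not stated in this section.

```python
import numpy as np


class RevIN:
    """Reversible instance normalization, NumPy sketch (forward computation only).

    Statistics are computed per sample and per variable over the time axis;
    gamma and beta stand in for the learnable affine parameters.
    """

    def __init__(self, num_vars: int, eps: float = 1e-5):
        self.eps = eps
        self.gamma = np.ones(num_vars)
        self.beta = np.zeros(num_vars)

    def normalize(self, x: np.ndarray) -> np.ndarray:
        # x: (batch, time, variables); keep the statistics for the reverse step
        self.mean = x.mean(axis=1, keepdims=True)
        self.std = np.sqrt(x.var(axis=1, keepdims=True) + self.eps)
        return (x - self.mean) / self.std * self.gamma + self.beta

    def denormalize(self, y: np.ndarray) -> np.ndarray:
        # y: (batch, horizon, variables); undo the affine map, then restore scale and level
        y = (y - self.beta) / (self.gamma + self.eps ** 2)
        return y * self.std + self.mean


rng = np.random.default_rng(1)
x = 5.0 + 3.0 * rng.normal(size=(2, 48, 8))   # 2 samples, 48 steps, 8 variables
revin = RevIN(num_vars=8)
z = revin.normalize(x)
print(np.abs(z.mean(axis=1)).max() < 1e-9)              # True: zero mean per variable
print(np.allclose(revin.denormalize(z), x, atol=1e-6))   # True: the round trip recovers x
```

Because the statistics come from each input window itself, a level shift between training and test periods is removed before the data reach the three branches.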

3.1. Spatiotemporal Sequence Embedding and Construction of Dynamic Sparse Graph

We propose a method for constructing dynamic sparse graphs. By using variable embedding, time embedding, and node embedding, we represent variables, time, and nodes as sparse graphs, respectively, thereby decoupling the dependencies among the three dimensions of variables, time, and nodes.

3.1.1. Variable Embedding and Construction of Sparse Graph Between Sequences

As previously mentioned, the variables that affect wind power do not act independently; rather, they are interrelated and mutually influential, jointly determining the final wind power output. Variable embedding aims to represent the differences between the variables in the input data for wind power forecasting. Specifically, for the observed multidimensional dataset, each wind turbine treats each of its variables as a node of the graph, and the Embedding function maps each variable’s input sequence to a high-dimensional vector space through a linear transformation, producing one embedding vector for every variable of every wind turbine. Mapping the variables into a high-dimensional space enables the model to capture complex relationships that are difficult to represent in a low-dimensional space and improves the model’s understanding of the changing patterns of wind power.
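As a minimal sketch, assuming the Embedding function is a single linear layer shared by all variables and turbines (an iTransformer-style "variable token"), the variable embedding reduces to one matrix product over the time axis. The window length (96), embedding size (64), and random weights are hypothetical values chosen for illustration.

```python
import numpy as np


def variable_embedding(x: np.ndarray, weight: np.ndarray, bias: np.ndarray) -> np.ndarray:
    """Turn each variable's whole input window into one d-dimensional token.

    x: (turbines, input_len, variables); weight: (input_len, d); bias: (d,)
    returns (turbines, variables, d), i.e. one graph node per variable and turbine.
    """
    tokens = np.swapaxes(x, 1, 2)   # (turbines, variables, input_len)
    return tokens @ weight + bias   # the same linear map is applied to every variable


rng = np.random.default_rng(2)
x = rng.normal(size=(5, 96, 8))     # 5 turbines, 96 steps, 8 variables
w = rng.normal(scale=0.1, size=(96, 64))
b = np.zeros(64)
h_var = variable_embedding(x, w, b)
print(h_var.shape)  # (5, 8, 64)
```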

Dependencies between variables must be analyzed dynamically from the observational data rather than derived from preset assumptions or fixed rules. TFDGCN therefore uses cosine similarity to assess the similarity between variables and constructs a dynamic sparse graph between variable sequences. For each wind turbine, the cosine similarities between every pair of variable embeddings form that turbine’s cosine similarity matrix (Equation (5)), in which each entry is the cosine similarity between two variables identified by their variable indices. In this similarity matrix, a fixed number of the most relevant variables are selected for each variable (Equation (7)). These selections form the sparse graph between the variable sequences of that wind turbine, and the sparse graphs of all wind turbines form the inter-sequence sparse graph set in the variable dimension (Equation (8)).
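The similarity-then-selection procedure can be sketched in NumPy as follows. This is an illustration under stated assumptions, not the authors' implementation: the number of neighbours `k` is a free hyperparameter, a node's similarity to itself is excluded (self-loops are added later during GCN normalization), and selected edges get weight 1 rather than their similarity value. The helper names are hypothetical.

```python
import numpy as np


def cosine_similarity_matrix(h: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Pairwise cosine similarity between the rows of h: (..., n, d) -> (..., n, n)."""
    unit = h / (np.linalg.norm(h, axis=-1, keepdims=True) + eps)
    return unit @ np.swapaxes(unit, -1, -2)


def topk_sparse_graph(sim: np.ndarray, k: int) -> np.ndarray:
    """Keep, for every row, an edge of weight 1 to its k most similar other nodes."""
    sim = sim.copy()
    n = sim.shape[-1]
    idx = np.arange(n)
    sim[..., idx, idx] = -np.inf                  # exclude self-similarity
    top = np.argsort(-sim, axis=-1)[..., :k]      # indices of the k largest entries
    adj = np.zeros_like(sim)
    np.put_along_axis(adj, top, 1.0, axis=-1)
    return adj


# Three 2-D variable embeddings: the first two point in almost the same direction.
h = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]])
print(topk_sparse_graph(cosine_similarity_matrix(h), k=1))  # edges 0->1, 1->0, 2->1
```

Because each row keeps its own top-k neighbours, the resulting graph is generally directed (row i may select j without j selecting i). The two helpers work on any (..., n, d) array, so the same code applies to patch embeddings or turbine embeddings by changing which axis supplies the rows.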

3.1.2. Temporal Embedding and Construction of Sparse Graph Within Sequence

In wind power forecasting tasks, the goal of time embedding is to characterize the multivariate time series along the time dimension, including short-term power trends, intraday periodic fluctuations, and sudden short-term fluctuations. To capture these temporal characteristics, we draw on the PatchTST method [21] and divide the continuous time series into several locally correlated time patches. This approach focuses on the details of variable fluctuations within each time segment and also captures trend or cyclical patterns across time periods by modelling the correlations between patches. At the same time, segmentation shortens the sequence while retaining key temporal information, allowing the model to focus on the local characteristics that matter for short-term prediction and thereby improving the ability of the time embedding to characterize short-term variations in wind power. Specifically, a sequence covering the input time window is divided into subsequences of a fixed patch length, with padding used to complete the last patch. The time flow embedding then maps each subsequence of each wind turbine to an embedding vector of fixed dimension, integrating the wind power information of the patch into a high-dimensional space to capture time dimension features.
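The patching step can be sketched in NumPy under two assumptions that the text does not pin down: patches do not overlap (stride equal to patch length, whereas PatchTST also allows overlapping patches), and the last patch is completed by repeating the final observation. `time_embedding` further assumes that each patch token flattens all variables of a turbine within one time segment before a linear projection; all function names are hypothetical.

```python
import numpy as np


def make_patches(x: np.ndarray, patch_len: int) -> np.ndarray:
    """Split the last (time) axis into non-overlapping patches.

    If the window length is not a multiple of patch_len, the last value is
    repeated to complete the final patch. Returns (..., num_patches, patch_len).
    """
    length = x.shape[-1]
    num_patches = -(-length // patch_len)            # ceiling division
    pad = num_patches * patch_len - length
    if pad:
        tail = np.repeat(x[..., -1:], pad, axis=-1)  # replicate the last observation
        x = np.concatenate([x, tail], axis=-1)
    return x.reshape(*x.shape[:-1], num_patches, patch_len)


def time_embedding(x: np.ndarray, patch_len: int, weight: np.ndarray, bias: np.ndarray) -> np.ndarray:
    """x: (turbines, input_len, variables) -> (turbines, num_patches, d).

    weight: (variables * patch_len, d). Each token holds all variables of one time segment.
    """
    patches = make_patches(np.swapaxes(x, 1, 2), patch_len)   # (turbines, vars, patches, P)
    patches = np.transpose(patches, (0, 2, 1, 3))              # (turbines, patches, vars, P)
    flat = patches.reshape(*patches.shape[:2], -1)             # (turbines, patches, vars * P)
    return flat @ weight + bias


series = np.arange(10.0)                 # one variable, 10 time steps
print(make_patches(series, patch_len=4))
# [[0. 1. 2. 3.]
#  [4. 5. 6. 7.]
#  [8. 9. 9. 9.]]
```

With 90 input steps and a patch length of 16, the sequence is shortened to 6 tokens per turbine, which is the reduction in sequence length the paragraph above refers to.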

The sparse graph along the time dimension (intra-sequence sparse graph) is constructed in the same way as the inter-variable sparse graph. For each wind turbine, the cosine similarities between every pair of time slices (patch embeddings) form a similarity matrix (Equation (12)). In this matrix, a fixed number of the most relevant time slices are selected for each time slice (Equation (13)). These selections form the sparse graph within the variable sequences of that wind turbine, and the sparse graphs of all wind turbines form the sparse graph set in the time dimension (Equation (14)).

3.1.3. Node Embedding and Construction of Sparse Graph of Node Relationships

The purpose of node embedding is to learn the relative spatial relationships between wind turbines. A wind turbine’s own state parameters, such as the nacelle yaw angle and the blade pitch angle, reflect the real-time operating status of that single turbine, and their correlation with other wind turbines has no clear physical meaning. Using state parameters to define the connections between nodes would therefore produce complex, hard-to-interpret coupling mechanisms within the graph structure. Instead, we use environmental parameters such as wind direction and wind speed, together with the prediction target, as inputs for node embedding, because they more directly reflect the correlations between wind turbines caused by environmental factors. For example, the wind speed and power changes of adjacent wind turbines are often synchronous or propagate from one turbine to another. A graph structure built from these parameters is more consistent with the laws governing wind farm power and makes it easier for the model to capture the dynamic influence between nodes, thereby improving the node embedding’s ability to capture the coordinated operation of wind turbines. Node embedding takes the input time window tensor after the environmental features have been selected and maps the sequence of each wind turbine under each environmental variable to an embedding vector; together, these vectors form the node flow embedding.

When mapping correlations between wind turbines, previous studies often constructed geographic correlation graphs by presetting distance thresholds or attenuation functions. However, the influence between wind turbines is not determined solely by geographic distance; it changes dynamically with external conditions, and static distance graphs cannot reflect this time-varying dependence. In addition, some studies [22] randomly initialize an embedding matrix and learn the dynamic graph during training. This leads to insufficient convergence stability: the learned graph structure fluctuates considerably across training rounds, which reduces prediction stability. To address these problems, TFDGCN uses a data-driven approach to construct dynamic sparse graphs between wind turbine nodes. For each environmental variable, the cosine similarities between every pair of wind turbines form a similarity matrix (Equation (18)). In this matrix, a fixed number of the most relevant wind turbines are selected for each wind turbine (Equation (19)). These selections form the sparse graph between nodes under that environmental variable, and the sparse graphs under all environmental variables form the inter-node sparse graph set (Equation (20)).
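A compact NumPy sketch of the node-dimension graphs follows, assuming a shared linear embedding of each turbine's input window, one graph per environmental variable, and top-k selection that excludes self-similarity. The channel indices `[0, 1, 2, 7]` are hypothetical and follow the variable order listed in Section 3 (0 wind speed, 1 wind direction, 2 ambient temperature, 7 active power); the embedding width and `k` are illustrative.

```python
import numpy as np


def node_sparse_graphs(x: np.ndarray, env_idx: list, weight: np.ndarray, k: int) -> np.ndarray:
    """One top-k turbine graph per selected environmental variable.

    x: (turbines, input_len, variables); env_idx: channels treated as environmental;
    weight: (input_len, d) shared linear embedding.
    returns adjacency of shape (len(env_idx), turbines, turbines)
    """
    env = x[:, :, env_idx]                           # keep environmental channels only
    h = np.transpose(env, (2, 0, 1)) @ weight        # (env_vars, turbines, d) node embeddings
    h = h / (np.linalg.norm(h, axis=-1, keepdims=True) + 1e-8)
    sim = h @ np.swapaxes(h, -1, -2)                 # cosine similarity between turbines
    n = sim.shape[-1]
    sim[:, np.arange(n), np.arange(n)] = -np.inf     # a turbine is not its own neighbour
    top = np.argsort(-sim, axis=-1)[..., :k]         # k most similar turbines per row
    adj = np.zeros_like(sim)
    np.put_along_axis(adj, top, 1.0, axis=-1)
    return adj


rng = np.random.default_rng(5)
x = rng.normal(size=(10, 96, 8))                     # 10 turbines, 96 steps, 8 variables
graphs = node_sparse_graphs(x, env_idx=[0, 1, 2, 7], weight=rng.normal(size=(96, 32)), k=3)
print(graphs.shape)  # (4, 10, 10)
```

Since the graphs are recomputed from each input window, the neighbourhoods change with the observed wind conditions instead of being fixed by geography or by a randomly initialized embedding.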

3.2. Triple-Flow GCN

TFDGCN captures the dependencies along the variable, time patch, and node dimensions by feeding the three dynamic sparse graphs constructed in Section 3.1 into their corresponding GCNs.

Specifically, TFDGCN uses a variable graph convolutional network (Variable-GCN), a time patch graph convolutional network (Time patch-GCN), and a node graph convolutional network (Node-GCN) to capture the spatial dependencies between variables, the dynamic dependencies along the time dimension, and the dynamic dependencies between nodes, respectively. In this way, TFDGCN can effectively learn the spatiotemporal features of the data and improve forecasting performance.

3.2.1. Variable-GCN

Variable-GCN operates on the sparse graph between sequences constructed with the method described in Section 3.1.1. Through graph convolution, the features of each node are combined with those of its adjacent nodes by weighted averaging to produce an updated feature representation. The graph convolution uses the normalized adjacency matrix of the sparse graph between variable sequences with self-loops (Equation (22)), which is obtained by adding the identity matrix to the sparse graph set from Section 3.1.1 and normalizing the result with its degree matrix. This normalization helps stabilize the training of graph convolution and avoid vanishing or exploding gradients. The aggregated features are multiplied by a learnable parameter matrix and passed through an activation function, which introduces nonlinearity and enhances the expressive power of the model; the result is the feature learned through graph convolution.
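Graph convolution with a self-looped, degree-normalized adjacency matrix can be sketched as follows, assuming the common symmetric normalization and a ReLU activation (the section does not name the activation). Because top-k graphs are generally not symmetric, the sketch uses each node's out-degree (row sum); symmetrizing the graph first is another reasonable choice. Batching over leading axes lets the same function serve Variable-GCN, Time patch-GCN, and Node-GCN with one graph per turbine or per environmental variable.

```python
import numpy as np


def normalize_adjacency(adj: np.ndarray) -> np.ndarray:
    """Add self-loops and normalize symmetrically: D^-1/2 (A + I) D^-1/2."""
    n = adj.shape[-1]
    a_hat = adj + np.eye(n)
    deg = a_hat.sum(axis=-1)                   # degree of A + I, always >= 1
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    return d_inv_sqrt[..., :, None] * a_hat * d_inv_sqrt[..., None, :]


def gcn_layer(h: np.ndarray, adj: np.ndarray, weight: np.ndarray) -> np.ndarray:
    """One graph convolution, ReLU(A_norm H W), batched over leading axes.

    h: (..., n, d_in); adj: (..., n, n); weight: (d_in, d_out)
    """
    return np.maximum(normalize_adjacency(adj) @ h @ weight, 0.0)


# Two nodes connected in both directions: A + I is all ones, so every normalized entry is 1/2.
adj = np.array([[0.0, 1.0], [1.0, 0.0]])
h = np.array([[1.0], [3.0]])
print(gcn_layer(h, adj, np.eye(1)))  # each node becomes the average of 1 and 3, i.e. 2.0
```

An isolated node (no selected neighbours) keeps only its self-loop, so its normalized row is a single 1 on the diagonal and its own features pass through unchanged.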

3.2.2. Time Patch-GCN

Time patch-GCN operates on the sparse graph within the sequence constructed with the method in Section 3.1.2 and explores the dynamic dependencies between different time slices through graph convolution. As in Variable-GCN, the graph convolution uses the normalized adjacency matrix of the sparse graph within the variable sequence with self-loops (Equation (24)), obtained by adding the identity matrix to the sparse graph set from Section 3.1.2 and normalizing with the degree matrix. The aggregated features are multiplied by a learnable parameter matrix and passed through an activation function to give the features learned by graph convolution.

3.2.3. Node-GCN

Node-GCN operates on the sparse graph of node relationships constructed with the method in Section 3.1.3: the features of each wind turbine are combined with those of its neighbors by weighted averaging to produce updated features. The graph convolution uses the normalized adjacency matrix of the sparse graph between nodes with self-loops (Equation (26)), obtained by adding the identity matrix to the sparse graph set from Section 3.1.3 and normalizing with the degree matrix. The aggregated features are multiplied by a learnable parameter matrix and passed through an activation function to give the features learned by graph convolution.

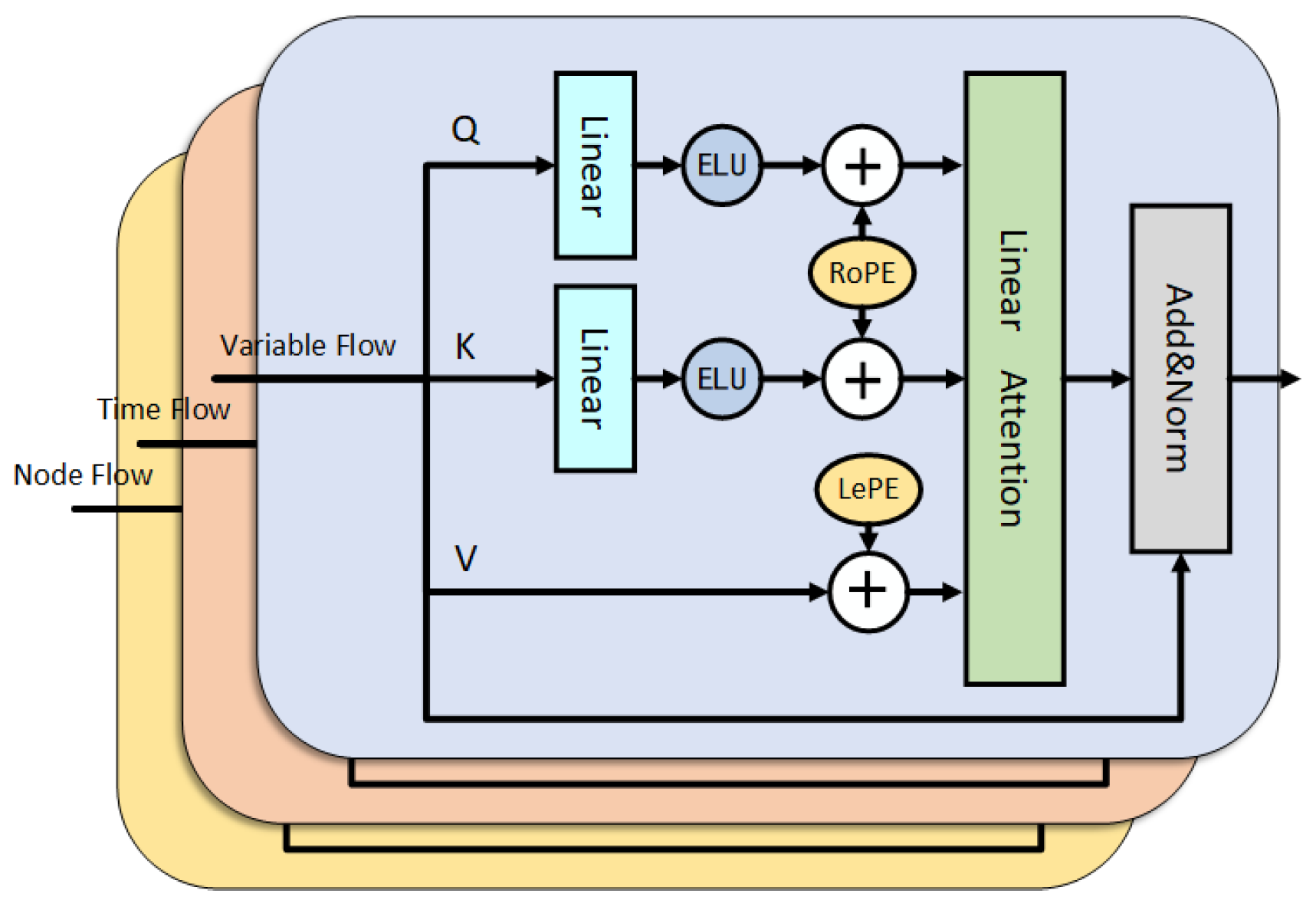

3.3. Triple-Flow Linear Attention Layer

Variable-GCN, Time patch-GCN, and Node-GCN are each followed by a linear attention encoder with rotary position encoding and local position encoding. The linear attention mechanism learns global dependencies between variable sequences, within variable sequences, and between wind turbines while keeping model complexity low, thereby improving the model’s representational capability. The detailed structure of the linear attention module is shown in Figure 2. The features learned by the dynamic graph convolution in Section 3.2 serve as the input for Q, K, and V. The query Q and key K are processed by linear layers and then activated by the ELU function. Position information is then added to Q and K through rotary position encoding, while local position encoding adds local spatial information to the value V, supplementing the global dependency modeling of the attention mechanism with local information [23]. After the attention computation, a residual connection and layer normalization produce the output.
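To make the complexity argument concrete, let n be the number of tokens (variables, time patches, or wind turbines), d the feature width, and φ a positive kernel feature map; these symbols are introduced here for illustration only. Softmax attention materializes an n × n score matrix, whereas kernelized linear attention first aggregates keys and values into a d × d matrix:

$$
\underbrace{\mathrm{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d}}\right)}_{n\times n} V \;\Rightarrow\; \mathcal{O}(n^{2}d),
\qquad
\phi(Q)\,\underbrace{\left(\phi(K)^{\top}V\right)}_{d\times d} \;\Rightarrow\; \mathcal{O}(nd^{2})
$$

For example, with n = 512 and d = 64 the dominant terms are n²d = 16,777,216 versus nd² = 2,097,152 multiply-adds, a factor of n/d = 8; when n is smaller than d, the quadratic form is actually the cheaper one.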

3.3.1. Variable Flow Attention Correlation

The linear attention mechanism [24,25] efficiently computes the global correlations between variables and performs weighted feature aggregation while reducing model complexity. Through this mechanism, the features learned by Variable-GCN in Section 3.2.1 are updated. Equation (28) gives the updated feature of each variable for each wind turbine, which incorporates a learnable local position encoding of that variable. The relationship coefficient, given in Equation (29), determines how much information each feature receives from the other variables; it is computed with rotary position encoding, a kernel function, and three learnable parameter matrices.
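The attention update can be sketched for a single head in NumPy under several assumptions: the kernel is φ(x) = ELU(x) + 1 (the feature map of Katharopoulos et al.'s linear transformer; the text only says ELU), rotary encoding is applied to φ(Q) and φ(K) in the numerator only so that the normalizer stays positive, and the local position encoding is a depthwise convolution over V in the style of LePE. Plain random arrays stand in for learned parameters, and the exact form of Equations (28)-(33) may differ.

```python
import numpy as np


def elu_plus_one(x):
    """Kernel feature map phi(x) = ELU(x) + 1, strictly positive."""
    return np.where(x > 0, x + 1.0, np.exp(np.minimum(x, 0.0)))


def rotary(x):
    """Rotary position encoding along the token axis; x: (n, d) with even d."""
    n, d = x.shape
    inv_freq = 1.0 / (10000 ** (np.arange(0, d, 2) / d))   # (d/2,) rotation speeds
    angles = np.arange(n)[:, None] * inv_freq[None, :]     # (n, d/2)
    cos, sin = np.cos(angles), np.sin(angles)
    x1, x2 = x[:, 0::2], x[:, 1::2]
    out = np.empty_like(x)
    out[:, 0::2] = x1 * cos - x2 * sin                     # rotate each feature pair
    out[:, 1::2] = x1 * sin + x2 * cos
    return out


def local_position_encoding(v, kernel):
    """Depthwise 1-D convolution over tokens (zero padding); v: (n, d), kernel: (k, d), k odd."""
    k = kernel.shape[0]
    pad = k // 2
    vp = np.pad(v, ((pad, pad), (0, 0)))
    return sum(vp[i:i + v.shape[0]] * kernel[i] for i in range(k))


def linear_attention(x, wq, wk, wv, lepe_kernel, eps=1e-6):
    """x: (n, d_model); wq, wk, wv: (d_model, d). Returns (n, d) in O(n d^2) time."""
    q = elu_plus_one(x @ wq)
    k = elu_plus_one(x @ wk)
    v = x @ wv
    z = 1.0 / (q @ k.sum(axis=0) + eps)        # normalizer from un-rotated, positive features
    kv = rotary(k).T @ v                        # (d, d) key-value summary
    out = (rotary(q) @ kv) * z[:, None]
    return out + local_position_encoding(v, lepe_kernel)


def layer_norm(x, eps=1e-5):
    mu = x.mean(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(x.var(axis=-1, keepdims=True) + eps)


def attention_block(x, wq, wk, wv, lepe_kernel):
    """Residual connection followed by layer normalization (requires d == d_model)."""
    return layer_norm(x + linear_attention(x, wq, wk, wv, lepe_kernel))


rng = np.random.default_rng(7)
tokens = rng.normal(size=(6, 16))               # e.g. 6 time-patch tokens of width 16
wq, wk, wv = (rng.normal(scale=0.2, size=(16, 16)) for _ in range(3))
out = attention_block(tokens, wq, wk, wv, lepe_kernel=rng.normal(scale=0.1, size=(3, 16)))
print(out.shape)  # (6, 16)
```

Computing `rotary(k).T @ v` first is what avoids the n × n score matrix; multiplying `rotary(q) @ rotary(k).T` explicitly and then by `v` gives the same numerator at quadratic cost.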

3.3.2. Time Flow Attention Correlation

The linear attention mechanism likewise computes the global correlations between time slices efficiently while reducing model complexity, and uses them for weighted feature aggregation. Equation (30) gives the updated feature of each wind turbine at each time slice, which incorporates the local position encoding of that time slice. The local position encoding helps the model capture short-term, high-frequency temporal dependencies, encouraging it to focus on the dependencies between nearby moments rather than being disturbed by irrelevant historical data in a long sequence. The relationship coefficient, given in Equation (31), determines how much information each feature receives from the other time slices; it is computed with rotary position encoding, a kernel function, and three learnable parameter matrices.

3.3.3. Node Flow Attention Correlation

The node flow also uses the linear attention mechanism. Equation (32) gives the updated feature of each wind turbine for each environmental variable, which incorporates the local position encoding of that wind turbine for the environmental variable. This encoding strengthens the local dependence between wind turbines, reflecting that nearby wind turbines influence one another much more strongly than distant ones. The relationship coefficient, given in Equation (33), determines how much information each feature receives from the other wind turbines; it is computed with rotary position encoding, a kernel function, and three learnable parameter matrices.

3.4. Fusion Prediction Module

After the triple-flow linear attention layers, we obtain features in three dimensions: variable, time, and node. The feature fusion module fuses these features to generate the final prediction by combining concatenation with fully connected layers. Before concatenation, the features of each dimension are mapped into the same dimensional space through linear transformations, which ensures that the concatenation is valid and that the subsequent fusion is stable. The three converted feature tensors are then concatenated along the second dimension to form the new fused feature. Finally, a fully connected layer maps the hidden dimension to the length of the output time window to obtain the final prediction. This design retains the feature information of the variable, time, and node flows, while the fully connected layer generates more expressive integrated features; together, these two aspects enhance the performance of the model.
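The fusion step can be sketched as follows. The per-flow shapes are hypothetical (8 variable tokens, 6 time-patch tokens, 4 environmental variables, width 64), the node-flow output is assumed to arrive as (environmental variables, turbines, width) and is transposed so that turbines come first, and each flow is flattened per turbine before its linear projection; the paper's exact target shapes are not reproduced in this section, and `fuse_and_predict` is an illustrative name.

```python
import numpy as np


def fuse_and_predict(flows, proj_weights, out_weight, out_bias):
    """Project each flow to a common width, concatenate, and map to the horizon.

    flows: list of arrays (turbines, tokens_f, d_f), one per branch
    proj_weights: list of matrices (tokens_f * d_f, hidden)
    out_weight: (len(flows) * hidden, horizon); out_bias: (horizon,)
    returns (turbines, horizon)
    """
    projected = []
    for feat, w in zip(flows, proj_weights):
        flat = feat.reshape(feat.shape[0], -1)        # (turbines, tokens_f * d_f)
        projected.append(flat @ w)                    # (turbines, hidden)
    fused = np.concatenate(projected, axis=1)         # concatenate along the second axis
    return fused @ out_weight + out_bias              # fully connected output layer


rng = np.random.default_rng(8)
n_turbines, hidden, horizon = 10, 32, 16
variable_flow = rng.normal(size=(n_turbines, 8, 64))                  # 8 variable tokens
time_flow = rng.normal(size=(n_turbines, 6, 64))                      # 6 time-patch tokens
node_flow = rng.normal(size=(4, n_turbines, 64)).transpose(1, 0, 2)   # 4 environmental variables
flows = [variable_flow, time_flow, node_flow]
proj = [rng.normal(scale=0.05, size=(f.shape[1] * f.shape[2], hidden)) for f in flows]
out_w = rng.normal(scale=0.05, size=(3 * hidden, horizon))
pred = fuse_and_predict(flows, proj, out_w, np.zeros(horizon))
print(pred.shape)  # (10, 16)
```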

3.5. Loss Function

TFDGCN uses the mean squared error (MSE) as its loss function. The loss averages the squared difference between the predicted wind power and the actual wind power (ETrue) over all wind turbines and over every step of the prediction time window.
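The loss reduces to the computation below; the shapes and numbers are a toy example, and during training the mean would also run over the mini-batch.

```python
import numpy as np


def mse_loss(pred: np.ndarray, target: np.ndarray) -> float:
    """Mean squared error averaged over every turbine and every forecast step."""
    if pred.shape != target.shape:
        raise ValueError("prediction and target shapes differ")
    return float(np.mean((pred - target) ** 2))


# 2 turbines x 2 forecast steps; the squared errors are 0, 4, 0, 4
pred = np.array([[1.0, 2.0], [3.0, 4.0]])
true = np.array([[1.0, 0.0], [3.0, 6.0]])
print(mse_loss(pred, true))  # 2.0
```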

5. Conclusions

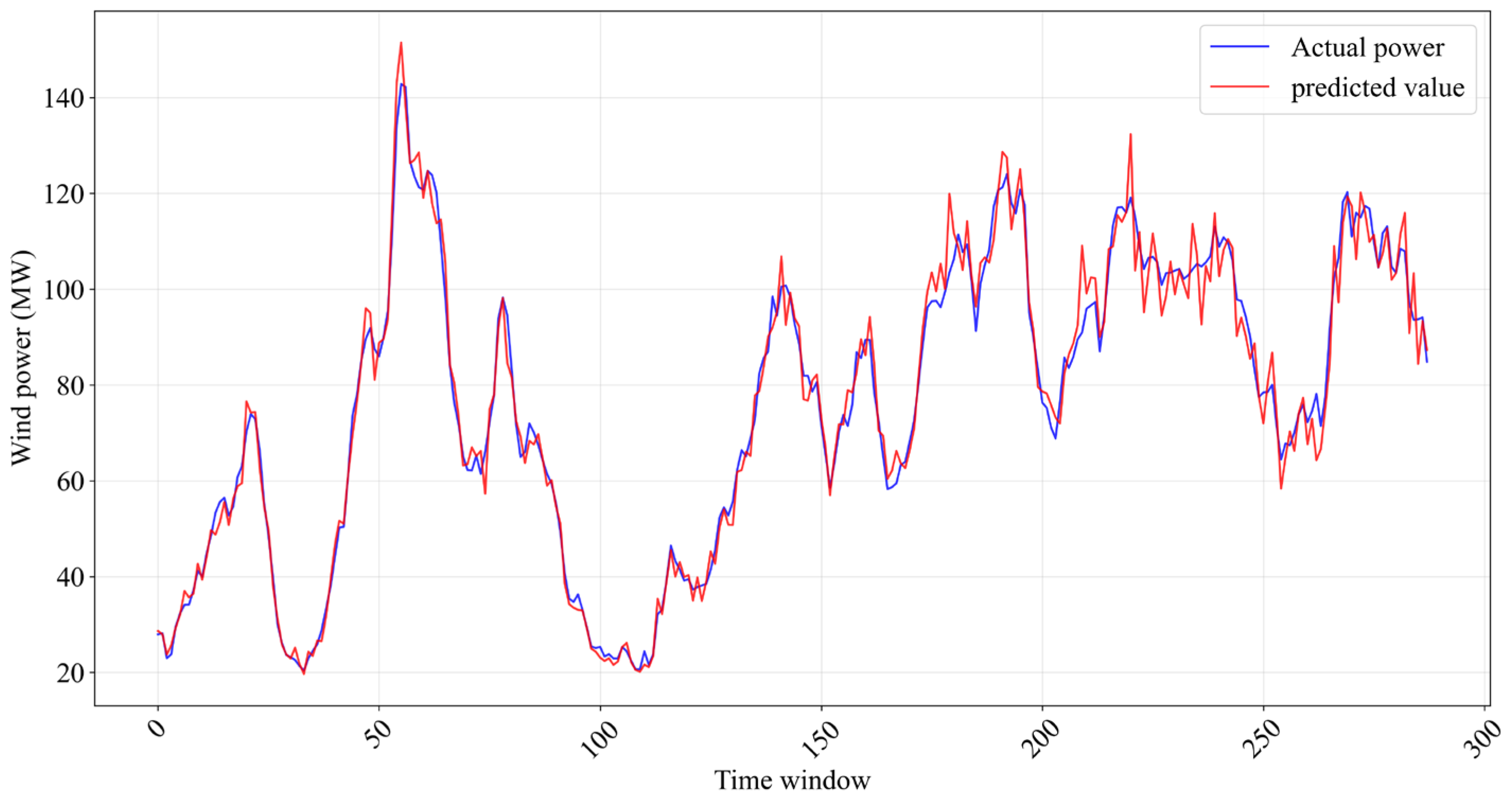

We propose TFDGCN, a novel spatiotemporal model for short-term wind power prediction. TFDGCN has a three-branch structure that models the variable, time, and node dimensions separately to capture heterogeneous dependencies in spatiotemporal sequence data. Experimental results show that TFDGCN outperforms traditional temporal models and the state-of-the-art method. The ablation experiments further confirm the effectiveness of the three-branch structure: removing any one of the three branches degrades model performance. Removing the linear attention module also reduces prediction accuracy, which demonstrates the role of the linear attention mechanism in extracting global dependencies.

Recent research shows that applying wind power prediction jointly with power scheduling optimization can improve the quality of scheduling strategies. In future work, we will study the synergy between wind power forecasting and power dispatch optimization to further reduce grid operating costs and ensure the safe and stable operation of the power system.