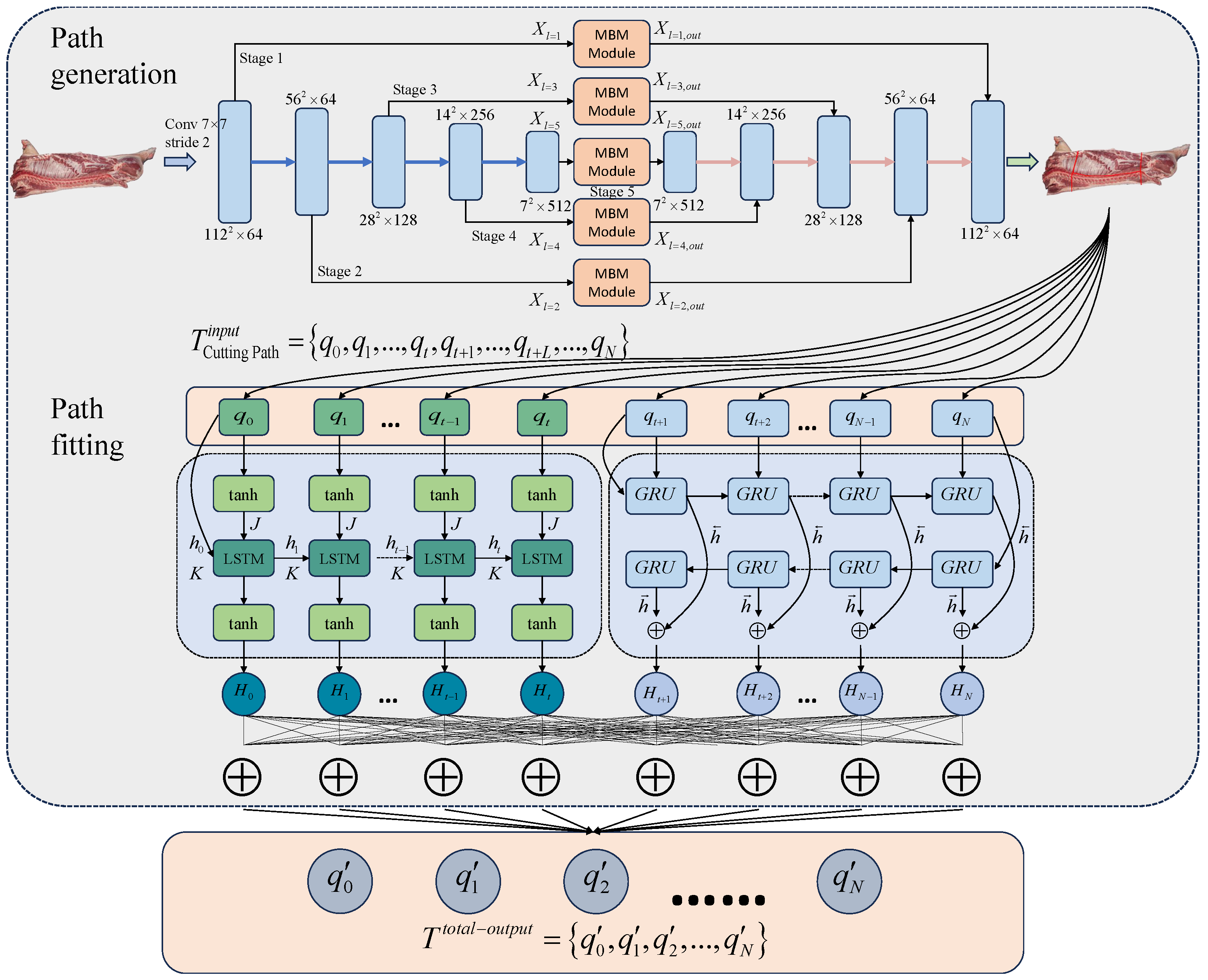

In this section, the generation and fitting optimization method for pig carcass cutting paths (PGF-Net) is described in detail. First, the boundary-constrained cutting path generation method (CGM) is introduced as a whole. Then, the main structure and working principle of the multi-scale boundary extraction module (MBM) are explained. Finally, the bifurcated cutting path fitting module (BFM) is proposed, and its two-branch recurrent network and implementation process are described in detail. The overall network framework of the proposed algorithm is shown in Figure 1.

2.1. Cutting Path Generation Method (CGM)

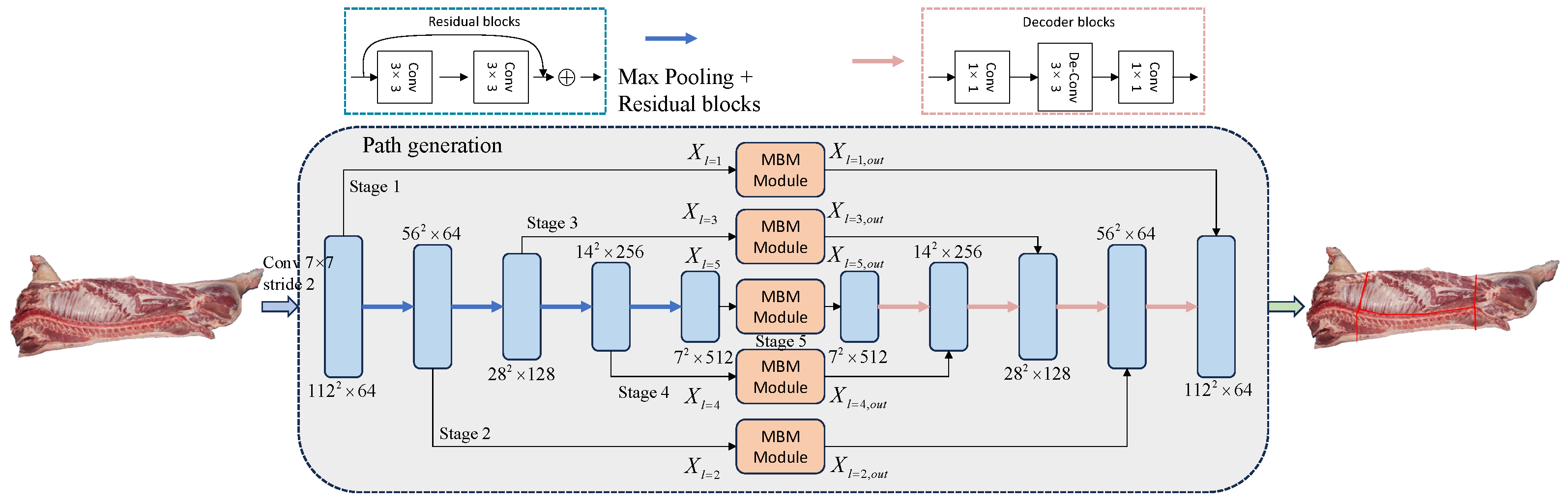

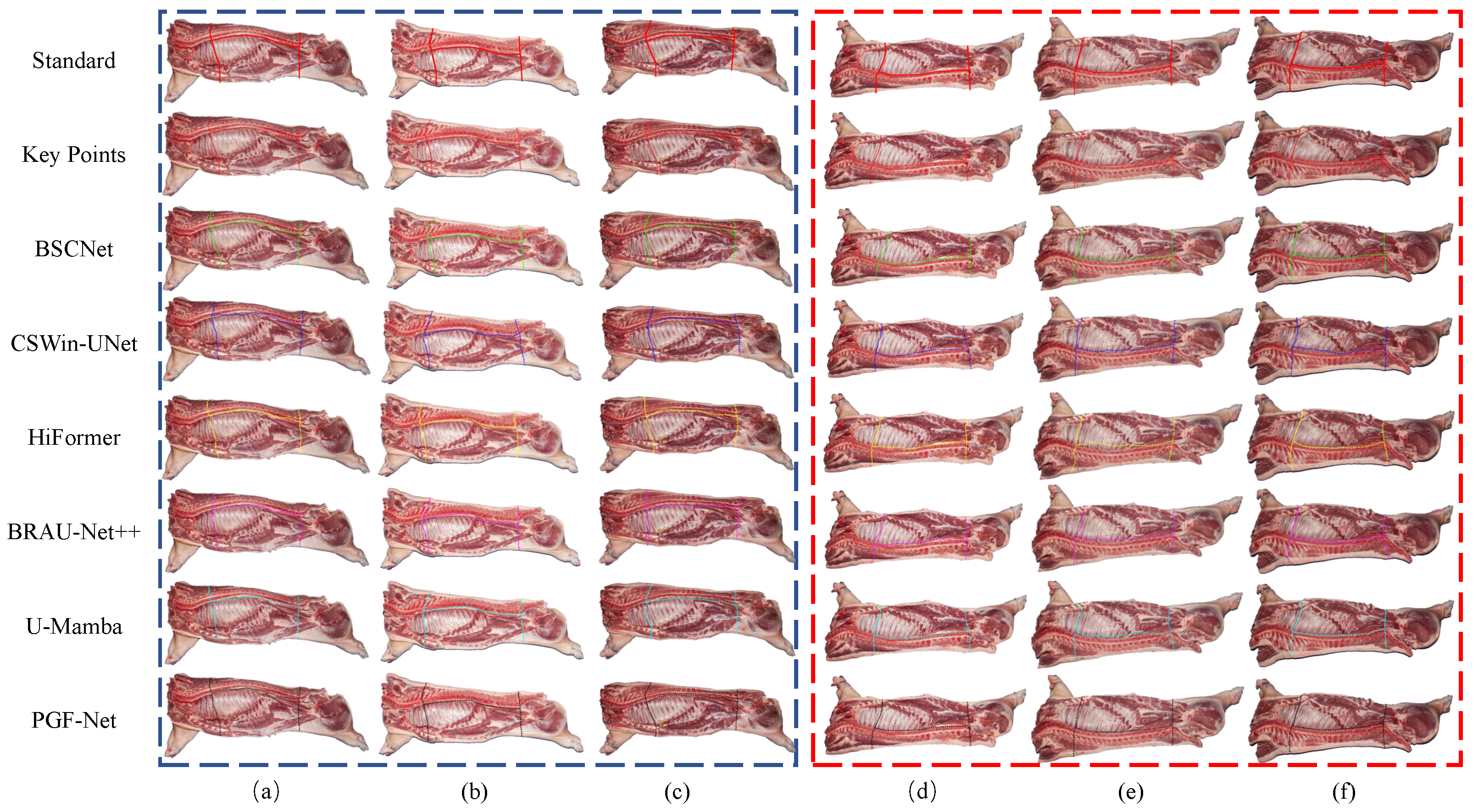

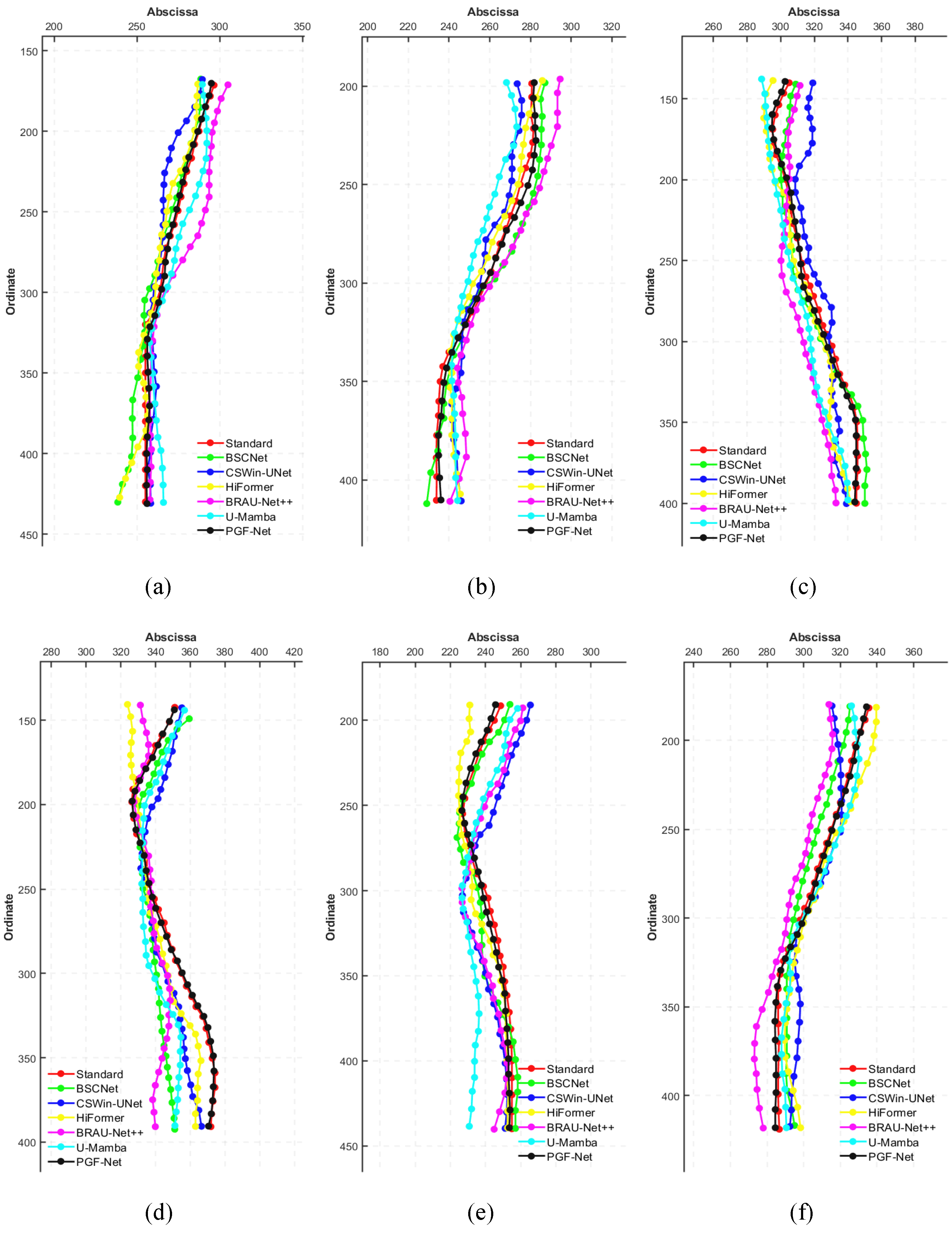

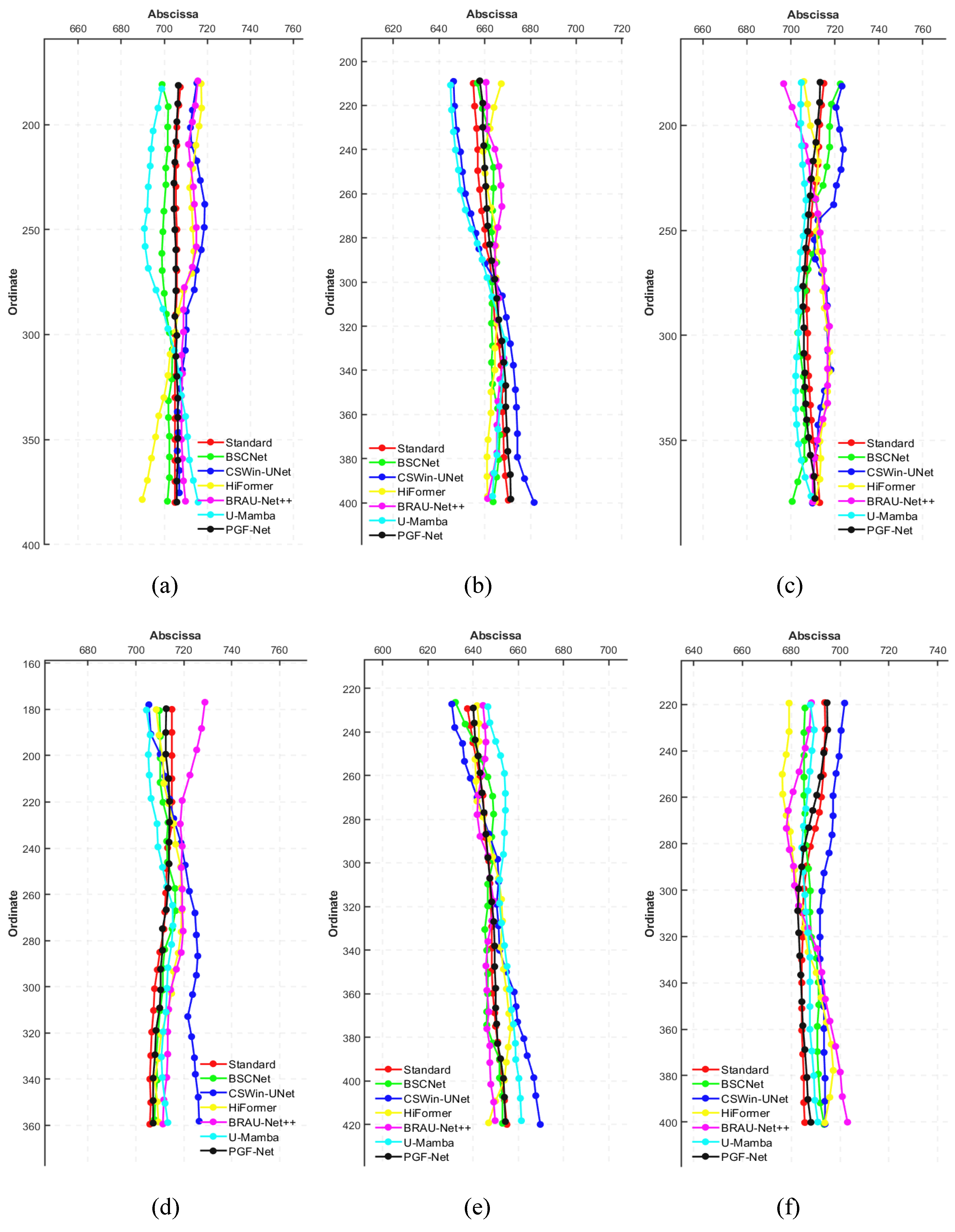

In this section, a coarse generation method for pig carcass cutting paths is proposed, as shown in Figure 2. The method mainly uses semantic segmentation to obtain the cutting boundary. First, a convolution with a stride of 2 is applied to the input image. The result is then fed into the coarse path generation network, where convolutions are performed in five successive stages. The feature map produced at each scale is passed separately through a multi-scale boundary extraction module to strengthen the extraction of boundary features. A residual connection, consisting mainly of two convolution blocks followed by feature summation, is used to avoid vanishing gradients. Within each multi-scale boundary extraction module, the features are split into five scales after a depthwise (DW) convolution, and a DW convolution is then applied to each of the five scales. Finally, the features are fused and restored to the original size by a convolution. The enhanced target features from the multi-scale boundary extraction module are fused with the decoding blocks of the corresponding scale, which yields richer boundary detail information.
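The stride-2 stem and the residual connection described above can be illustrated with a small pure-Python sketch. It assumes a single channel, 3×3 kernels, and hand-picked weights (the kernel sizes and channel counts of the actual network are not given here), and the helper names `conv2d` and `residual_block` are hypothetical. It only shows how a stride of 2 halves the spatial size and how the identity shortcut adds the block input back to the output of the two-convolution branch.

```python
from typing import List, Sequence

Grid = List[List[float]]

def conv2d(image: Grid, kernel: Sequence[Sequence[float]],
           stride: int = 1, padding: int = 0) -> Grid:
    """Single-channel 2D cross-correlation with zero padding and a stride."""
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    # Zero-pad the input on all four sides.
    padded = [[0.0] * (w + 2 * padding) for _ in range(h + 2 * padding)]
    for i in range(h):
        for j in range(w):
            padded[i + padding][j + padding] = image[i][j]
    out_h = (h + 2 * padding - kh) // stride + 1
    out_w = (w + 2 * padding - kw) // stride + 1
    out: Grid = []
    for oi in range(out_h):
        row = []
        for oj in range(out_w):
            acc = 0.0
            for a in range(kh):
                for b in range(kw):
                    acc += padded[oi * stride + a][oj * stride + b] * kernel[a][b]
            row.append(acc)
        out.append(row)
    return out

def relu(fmap: Grid) -> Grid:
    return [[max(0.0, v) for v in row] for row in fmap]

def add(a: Grid, b: Grid) -> Grid:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def residual_block(fmap: Grid, k1, k2) -> Grid:
    # Two 3x3 convolutions with padding 1 keep the spatial size, then the
    # block input is added back (identity shortcut), giving gradients a direct path.
    branch = conv2d(relu(conv2d(fmap, k1, padding=1)), k2, padding=1)
    return add(fmap, branch)

image = [[float(i * 8 + j) for j in range(8)] for i in range(8)]
box = [[1.0 / 9] * 3 for _ in range(3)]
stem = conv2d(image, box, stride=2, padding=1)   # 8x8 input -> 4x4 output
identity = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
zero = [[0.0] * 3 for _ in range(3)]
out = residual_block(stem, identity, zero)       # zero branch: output equals input
print(len(stem), len(stem[0]))  # 4 4
```

With the second kernel set to zero the branch contributes nothing and the block reduces to the identity, which is exactly the behavior the shortcut is meant to make easy to learn.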

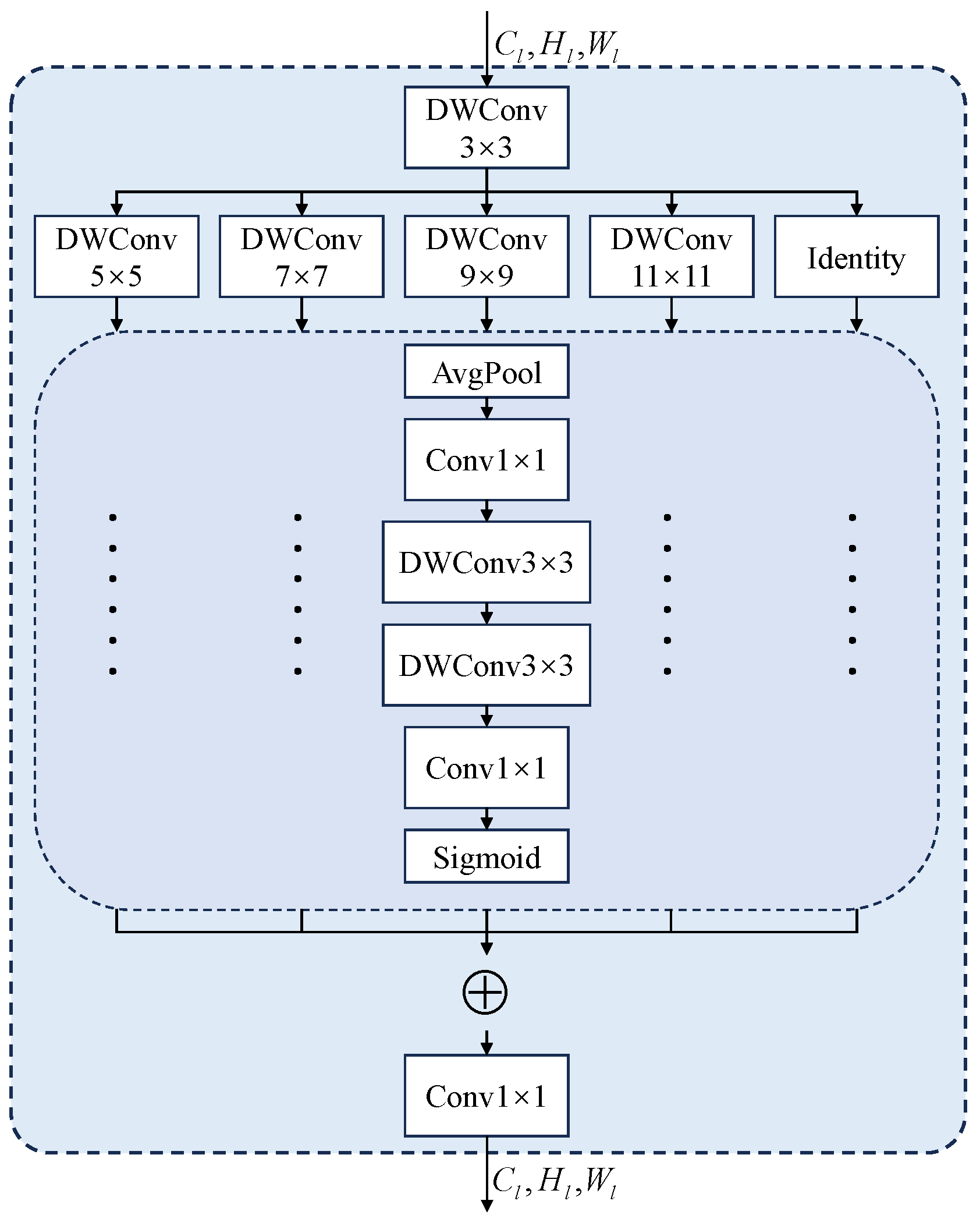

During the cutting of pig carcasses, the images suffer from large differences in body size, blood stains at critical parts, and missing boundary information caused by fascia coverage. In this paper, the MBM module, shown in Figure 3, is introduced to capture multi-scale information about key-part features. The MBM module first applies a small-kernel convolution to capture local information. A set of parallel depthwise convolutions then captures contextual information at multiple scales, and an Identity branch is included to keep the feature information consistent. In the n-th MBM block of stage l, the small-kernel convolution extracts a local feature from the block input, and each of the parallel depthwise convolutions extracts a contextual feature from this local feature. In this experiment, the settings of n and s correspond to each branch.

Taking the n-th branch of the l-th stage as an example, average pooling is applied first, followed by a convolution that extracts local region features:

$$F^{\mathrm{pool}}_{l,n} = \mathrm{Conv}\big(\mathcal{P}_{\mathrm{avg}}(X_{l,n})\big),$$

where $\mathcal{P}_{\mathrm{avg}}(\cdot)$ denotes the average pooling operation and $X_{l,n}$ is the input feature of the branch. Two depthwise strip convolutions are then applied as an approximation of a standard large-kernel depthwise convolution:

$$F^{w}_{l,n} = \mathrm{DWConv}_{1\times k_b}\big(F^{\mathrm{pool}}_{l,n}\big), \qquad F^{h}_{l,n} = \mathrm{DWConv}_{k_b\times 1}\big(F^{w}_{l,n}\big).$$

Depthwise strip convolution is chosen for two main reasons. First, strip convolution is lightweight: a pair of 1D depthwise kernels ($1\times k_b$ and $k_b\times 1$) achieves an effect similar to a traditional $k_b\times k_b$ 2D depthwise convolution with fewer parameters ($2k_b$ instead of $k_b^2$ weights per channel). Second, strip convolution is better suited to recognizing and extracting thin, strip-shaped structures (e.g., the loin). To adapt to different receptive fields and better extract multi-scale features, the strip kernel size $k_b$ is chosen so that more relevant semantic relationships between features are captured during feature extraction, while the strip design limits the growth in computational cost. Finally, an attention weight is generated to further enhance the output of the MBM module:

$$A_{l,n} = \mathrm{Sigmoid}\big(\mathrm{Conv}(F^{h}_{l,n})\big),$$

where the Sigmoid function keeps the attention map $A_{l,n}$ within the range $(0, 1)$. Let $P_{l,n}$ denote the output of the module at the n-th branch of the l-th stage. The output of the entire module is obtained by reweighting $P_{l,n}$ with $A_{l,n}$, and it is the feature that has been enhanced by the module.
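The two ideas in the MBM description, replacing a square depthwise kernel with a $1\times k_b$ and $k_b\times 1$ strip pair and gating features with a Sigmoid attention map, can be checked numerically with the sketch below. It works on a single channel, uses hypothetical helper names (`depthwise_conv2d`, `strip_conv`, `attention_gate`), reduces the 1×1 convolution before the Sigmoid to a scalar weight and bias, and assumes that the enhanced output is the element-wise product of the attention map and the branch output; the exact fusion used in the module is not reproduced here.

```python
import math
from typing import List, Sequence

Grid = List[List[float]]

def depthwise_conv2d(fmap: Grid, kernel: Sequence[Sequence[float]]) -> Grid:
    """'Same' zero-padded cross-correlation of one channel (odd kernel sizes)."""
    h, w = len(fmap), len(fmap[0])
    kh, kw = len(kernel), len(kernel[0])
    ph, pw = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for a in range(kh):
                for b in range(kw):
                    y, x = i + a - ph, j + b - pw
                    if 0 <= y < h and 0 <= x < w:
                        acc += fmap[y][x] * kernel[a][b]
            out[i][j] = acc
    return out

def strip_conv(fmap: Grid, row_k: Sequence[float], col_k: Sequence[float]) -> Grid:
    horizontal = depthwise_conv2d(fmap, [list(row_k)])          # 1 x k_b strip
    return depthwise_conv2d(horizontal, [[c] for c in col_k])   # k_b x 1 strip

def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))

def attention_gate(p: Grid, f_h: Grid, weight: float = 1.0, bias: float = 0.0):
    # Attention map in (0, 1); a one-channel 1x1 conv is just weight * v + bias.
    attn = [[sigmoid(weight * v + bias) for v in row] for row in f_h]
    # Assumed fusion: element-wise reweighting of the branch output P.
    enhanced = [[ai * pi for ai, pi in zip(ra, rp)] for ra, rp in zip(attn, p)]
    return attn, enhanced

k_b = 5
row_k = [1.0, 2.0, 3.0, 2.0, 1.0]
col_k = [0.5, 1.0, 2.0, 1.0, 0.5]
square_k = [[c * r for r in row_k] for c in col_k]  # rank-1 k_b x k_b kernel

fmap = [[math.sin(i + 2 * j) for j in range(9)] for i in range(9)]
strip = strip_conv(fmap, row_k, col_k)
square = depthwise_conv2d(fmap, square_k)
max_diff = max(abs(s - q) for rs, rq in zip(strip, square) for s, q in zip(rs, rq))
print("per-channel weights:", 2 * k_b, "vs", k_b * k_b)  # per-channel weights: 10 vs 25
# Here the input map stands in for the branch output P, purely for illustration.
attn, enhanced = attention_gate(fmap, strip, weight=0.1)
```

The strip pair reproduces the square kernel exactly only when that kernel is an outer product of a column and a row vector (rank 1); for a general learned $k_b\times k_b$ kernel it is an approximation, which is the trade-off accepted in exchange for $2k_b$ rather than $k_b^2$ weights per channel.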

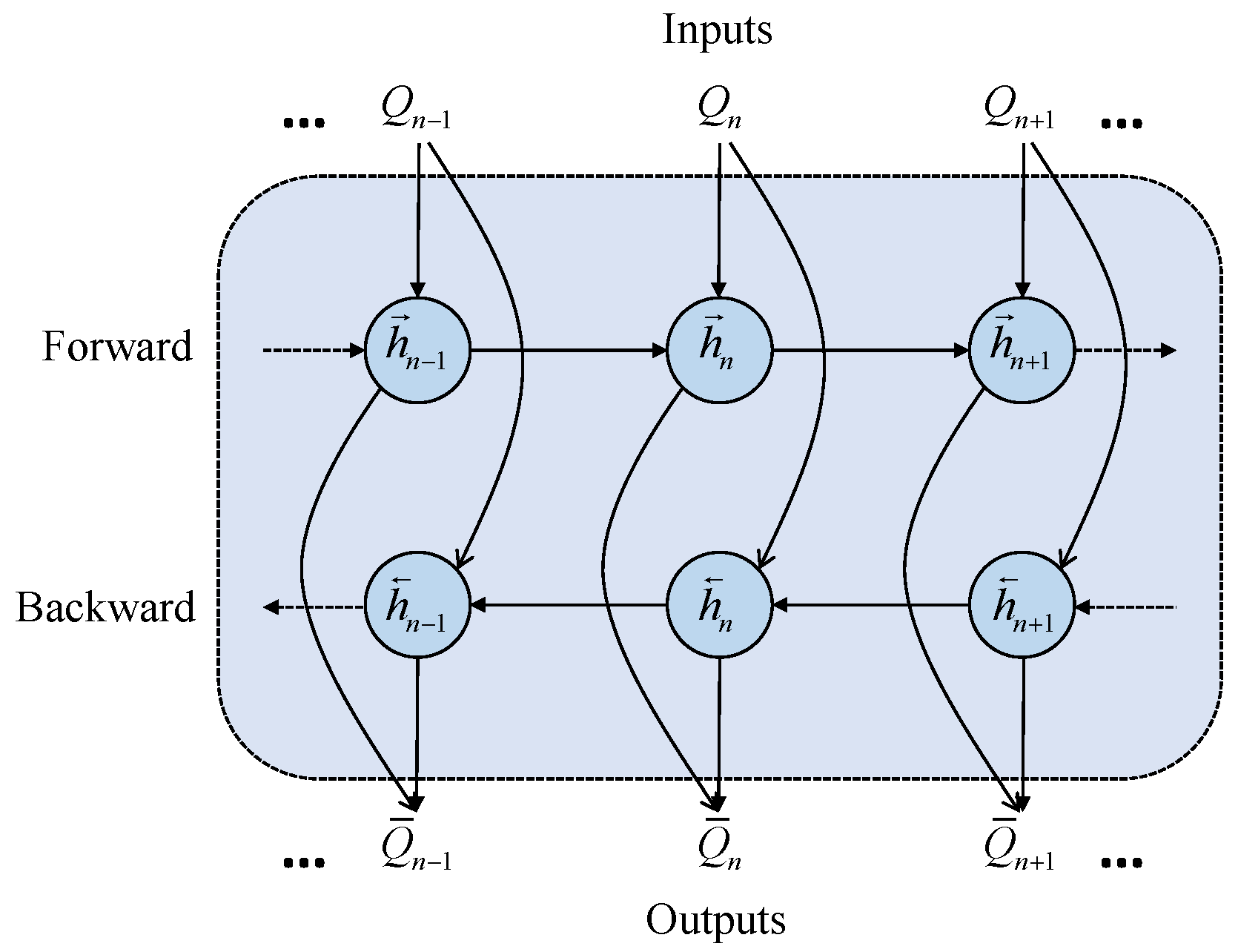

2.2. Bifurcated Cutting Path Fitting Module (BFM)

In this section, a double-ended GRU bifurcated cutting path fitting optimization model (BFM) based on forward and backward recurrent networks is proposed; its overall network structure is shown in Figure 4. The framework consists of three parts: (1) the sequence of cutting path key points extracted by the CGM module, which serves as the input data; (2) a bidirectional linear regression network layer; and (3) a fusion output layer that aggregates the outputs of the two branches. The main steps are input data preprocessing, dual-branch processing, and summation-based fusion of the outputs. Data preprocessing operates on the raw coarse segmentation path data: through data integration, the fused state information of the key segmentation points is converted into a sequence of segmentation path points. The forward recurrent network starts from the cutting origin and the backward recurrent network starts from the cutting endpoint; each extracts features from the information of neighboring points and fits the input cutting path sequence separately. Finally, the fusion layer sums the features of the two branches and outputs the sequence of fitted cutting path points. In the BFM module, cutting path fitting can be expressed as an optimization problem whose objective is to minimize the error between the predicted path and the actual path. The objective function is defined over the model parameters $\theta$ as the error between the predicted output $\hat{P}_t$ of the model at time $t$ and the true cutting waypoint $P_t$, accumulated over all time steps. By optimizing this objective function, the BFM module can fit the cutting path more accurately.
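As a concrete instance of the path-fitting objective, the sketch below assumes that the per-step error is the squared Euclidean distance between a predicted and a true waypoint, averaged over the sequence (mean squared error). The function name `path_mse` is hypothetical, and the actual error measure used in training may differ.

```python
from typing import Sequence

Point = Sequence[float]

def path_mse(predicted: Sequence[Point], actual: Sequence[Point]) -> float:
    """Mean squared Euclidean error between predicted and true waypoints.

    Assumption: 'error' is the squared distance per time step, averaged
    over all time steps of the cutting path.
    """
    if len(predicted) != len(actual) or not actual:
        raise ValueError("sequences must be non-empty and of equal length")
    total = 0.0
    for p, a in zip(predicted, actual):
        total += sum((pi - ai) ** 2 for pi, ai in zip(p, a))
    return total / len(actual)

actual = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)]
predicted = [(0.0, 0.0), (1.0, 2.0), (2.0, 2.0)]
print(path_mse(predicted, actual))  # 0.3333333333333333
```

During training, a gradient-based optimizer would adjust the network parameters to drive this quantity down over all training paths.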

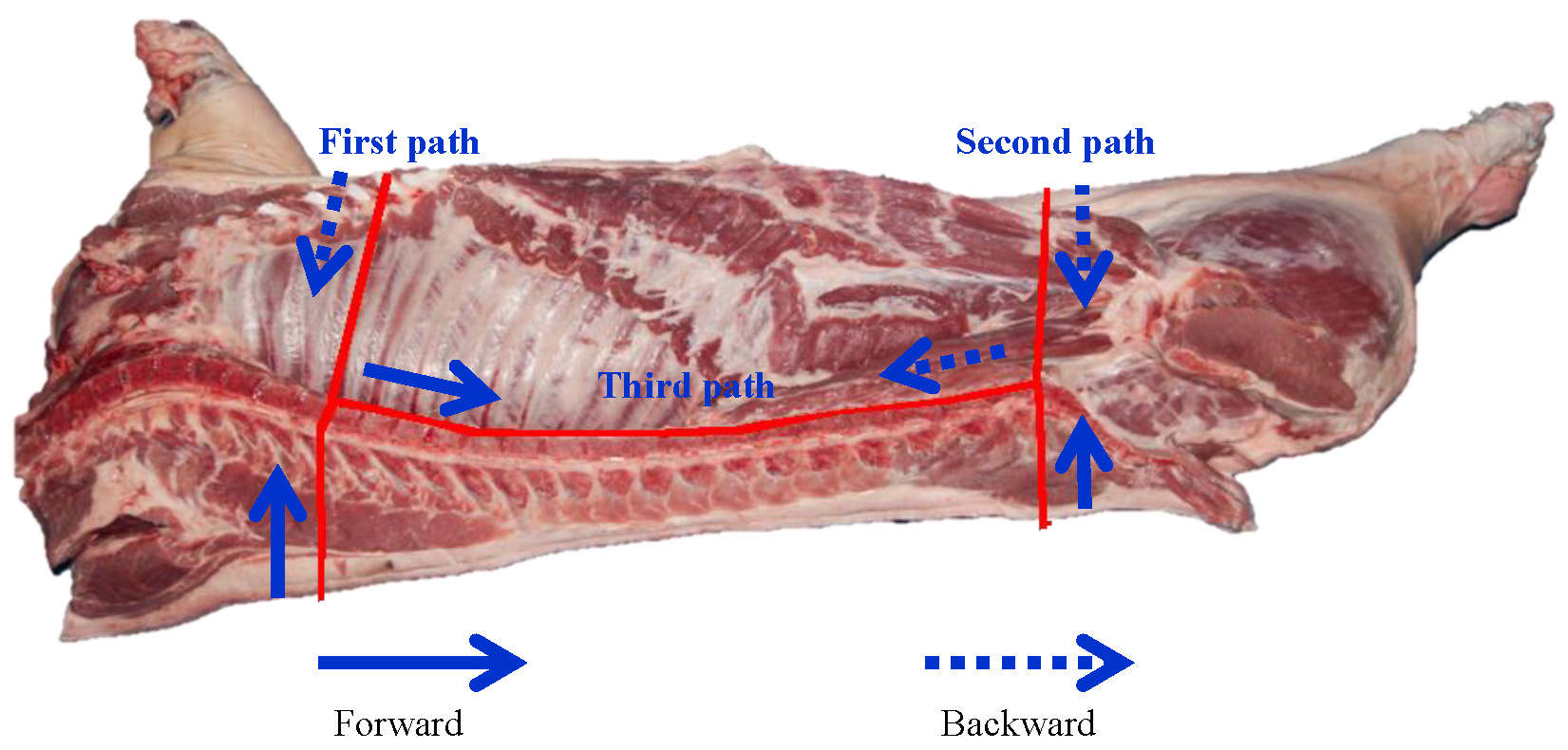

The coarse cutting paths produced by the pig carcass cutting path generation method contain only RGB image information, so they must be preprocessed before being input to the cutting path fitting optimization algorithm, as shown in Figure 5. The preset cutting paths are three paths with known state data, referred to as the first, second, and third paths. Each of these paths is merely a set of points on the RGB image, so the horizontal coordinate, vertical coordinate, moving speed, direction of travel, and relative time of the cutting device at moment $t$ during the cutting process need to be fused. The state of the cutting tool at moment $t$ can be represented as $P_t = (x_t, y_t, v_t, \alpha_t, \tau_t)$, where $x_t$, $y_t$, $v_t$, $\alpha_t$, and $\tau_t$ are the horizontal coordinate of the cutting position, its vertical coordinate, the moving speed of the cutting tool, its direction of travel, and the relative time at moment $t$, respectively.
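The fusion step turns an ordered list of image points into per-moment state tuples of position, speed, direction, and relative time. The sketch below assumes that each point has a known timestamp, estimates speed and heading by forward differences between consecutive points (the last point reuses the previous segment), and measures speed in pixels per unit time; the function name `build_state_sequence` is hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# (x, y, speed, heading in radians, relative time)
State = Tuple[float, float, float, float, float]

def build_state_sequence(points: Sequence[Tuple[float, float]],
                         timestamps: Sequence[float]) -> List[State]:
    if len(points) != len(timestamps) or len(points) < 2:
        raise ValueError("need at least two points with matching timestamps")
    t0 = timestamps[0]
    states: List[State] = []
    for k, (x, y) in enumerate(points):
        # Forward difference; the last point reuses the preceding segment.
        j = k if k < len(points) - 1 else k - 1
        dx = points[j + 1][0] - points[j][0]
        dy = points[j + 1][1] - points[j][1]
        dt = timestamps[j + 1] - timestamps[j]   # assumed strictly positive
        speed = math.hypot(dx, dy) / dt
        # Angle from the +x axis; in image coordinates (y pointing down)
        # positive angles correspond to clockwise rotation on screen.
        heading = math.atan2(dy, dx)
        states.append((x, y, speed, heading, timestamps[k] - t0))
    return states

path = [(10.0, 20.0), (13.0, 24.0), (16.0, 28.0)]
times = [0.0, 0.5, 1.0]
states = build_state_sequence(path, times)
for s in states:
    print(s)
```

Forward differences keep the sketch short; a smoothed or central-difference estimate would be less sensitive to jitter in the segmented points.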

During cutting, the feed point and the cutting direction of the front section are extremely important, which requires the forward network to handle complex long sequences well. Therefore, a standard LSTM network is used as the forward network. The pig carcass cutting path data from moment 0 to $t$ are organized into a data vector that serves as the input of the forward network. The forward network computes the hidden layer and combines the computed cell state and outputs into a state vector. $J$, $K$, and $S$ denote the weight matrices between successive layers. The hidden and output layers are computed from the current input, the state data at the previous moment $t-1$, the weight matrices $J$, $K$, and $S$, and the bias values of the hidden and output layers.

LSTM captures long-term dependencies better than traditional RNNs. It addresses the long-term dependency problem through the coordinated operation of its input gate, forget gate, output gate, and cell state, denoted i, f, o, and c, respectively. After receiving the previous state data, the forget gate decides which cutting path point information should be retained, which mainly serves to filter out interference from abnormal path points. The input gate determines which new data should be stored and updates the cell state. The output gate controls which information from the current time step is passed to the next time step or to the output layer. The cell state is the core of the network: it stores and transfers information and regulates how information flows and is updated.

The purpose of the forget gate is to minimize the interference of abnormal path points with the subsequently fitted cutting path; it also receives the data from the previous moment. The recursive input $\mathbf{h}_{t-1}$ and the current input $\mathbf{x}_t$ are each multiplied by their weights and passed to the sigmoid function, whose output is denoted $\mathbf{f}_t$. Because $\mathbf{f}_t$ lies in the range $(0, 1)$, it is multiplied element-wise with the previous cell state $\mathbf{c}_{t-1}$: if $\mathbf{f}_t$ is 1, the LSTM keeps this information in full, and if $\mathbf{f}_t$ is 0, the LSTM forgets it completely.
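The gate interplay described above follows the standard LSTM cell equations, which the sketch below implements for a scalar hidden state in plain Python. The parameter layout and helper names (`lstm_step`, `make_params`) are hypothetical, and the weights are hand-set only to show the forget gate at work: with the candidate content fixed at zero, a forget-gate pre-activation of +10 keeps the previous cell state almost intact, while −10 erases it.

```python
import math
from typing import Dict, Sequence, Tuple

# Per gate: (input weights, recurrent weight, bias)
GateParams = Tuple[Sequence[float], float, float]

def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))

def dot(w: Sequence[float], x: Sequence[float]) -> float:
    return sum(wi * xi for wi, xi in zip(w, x))

def lstm_step(x: Sequence[float], h_prev: float, c_prev: float,
              params: Dict[str, GateParams]) -> Tuple[float, float]:
    """One step of a standard LSTM cell with a scalar hidden state."""
    def pre(gate: str) -> float:
        w, u, b = params[gate]
        return dot(w, x) + u * h_prev + b

    i = sigmoid(pre("i"))       # input gate
    f = sigmoid(pre("f"))       # forget gate, in (0, 1)
    o = sigmoid(pre("o"))       # output gate
    g = math.tanh(pre("g"))     # candidate cell content, in (-1, 1)
    c = f * c_prev + i * g      # keep part of the old state, add new content
    h = o * math.tanh(c)
    return h, c

def make_params(forget_bias: float) -> Dict[str, GateParams]:
    zeros = [0.0, 0.0]
    # All weights zero, so the candidate g = tanh(0) = 0 and only f matters.
    return {"i": (zeros, 0.0, 0.0), "f": (zeros, 0.0, forget_bias),
            "o": (zeros, 0.0, 0.0), "g": (zeros, 0.0, 0.0)}

x_t = [0.3, -0.2]
_, c_keep = lstm_step(x_t, 0.0, 1.0, make_params(10.0))     # f close to 1
_, c_forget = lstm_step(x_t, 0.0, 1.0, make_params(-10.0))  # f close to 0
```

In a trained network these weights are learned, so the forget gate can learn to suppress the contribution of abnormal path points rather than having it fixed by hand as here.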

To balance the efficiency and accuracy of cutting path fitting, the backward recurrent network is chosen so that it preserves the correlation between preceding and subsequent moments while processing the data sequence efficiently. In this section, a double-ended GRU network is chosen to realize the fitting of the path. The cutting path data sequence has three characteristics: monotonically increasing time information, detailed path information, and small fluctuations between neighboring path points. These characteristics suit the GRU's handling of long data sequences and help keep the Sigmoid outputs of the update gate in an intermediate range rather than saturated at 0 or 1. As a result, each input contributes effectively to training, which reduces gradient vanishing and improves the accuracy of path fitting. In this paper, the input cutting path vector is processed by the bidirectional GRU, and the results are summed to output the combined state vector.

The update gate is computed from two fields of the cutting path data. When these two quantities change only slightly, the curvature of the cutting path also changes little, so the update gate takes a small value close to 0 and the corresponding weights should also be small. The update gate $z_t$ is computed from its weight $W_z$, the current input, and the state $\mathbf{h}_{t-1}$ at the previous path point. The tanh function used in the GRU takes values between −1 and 1.

The purpose of the reset gate is to minimize the interference of abnormal path points with the subsequently fitted path points. When the reset gate output $r_t$ is close to 0, the previous state is largely discarded when the candidate state is computed, which preserves the accuracy of cutting path fitting. The reset gate $r_t$ is computed from its weight $W_r$, the current input, and the state $\mathbf{h}_{t-1}$ of the previous path point.

The output variable denotes the output vector of the network model at moment $N$. The update-gate variable expresses the degree of dependency between the candidate state at the current moment and the cutting path state at the previous moment. The hidden state stores the position, attitude (direction), and velocity information of the cutting path and serves as an input at the next moment. These quantities are computed with the weight values of the GRU network and two bias terms, where ⊗ denotes element-wise multiplication.
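The update and reset gates discussed above can be made concrete with one step of a standard GRU cell (the convention of Cho et al., in which the new state is $(1-z_t)\,\mathbf{h}_{t-1} + z_t\,\tilde{\mathbf{h}}_t$). The sketch uses a scalar hidden state, hypothetical names (`gru_step`, `make_params`), and hand-set biases to show three regimes: an update gate near 0 keeps the previous state, a reset gate near 0 makes the candidate ignore the previous state, and both near 1 let the candidate use it. Some libraries swap the roles of $z_t$ and $1-z_t$, so the numeric meaning of "close to 0" depends on the convention used.

```python
import math
from typing import Any, Dict, Sequence, Tuple

def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))

def dot(w: Sequence[float], x: Sequence[float]) -> float:
    return sum(wi * xi for wi, xi in zip(w, x))

def gru_step(x: Sequence[float], h_prev: float,
             p: Dict[str, Any]) -> Tuple[float, float, float]:
    """One step of a standard GRU cell with a scalar hidden state."""
    z = sigmoid(dot(p["Wz"], x) + p["Uz"] * h_prev + p["bz"])   # update gate
    r = sigmoid(dot(p["Wr"], x) + p["Ur"] * h_prev + p["br"])   # reset gate
    # Candidate state: the reset gate scales the previous state element-wise.
    h_cand = math.tanh(dot(p["Wh"], x) + p["Uh"] * (r * h_prev) + p["bh"])
    h = (1.0 - z) * h_prev + z * h_cand
    return h, z, r

def make_params(bz: float, br: float) -> Dict[str, Any]:
    zeros = [0.0, 0.0]
    return {"Wz": zeros, "Uz": 0.0, "bz": bz,
            "Wr": zeros, "Ur": 0.0, "br": br,
            "Wh": zeros, "Uh": 5.0, "bh": 0.0}

x_t, h_prev = [0.1, 0.2], 0.8
h_keep, _, _ = gru_step(x_t, h_prev, make_params(bz=-10.0, br=10.0))   # z ~ 0
h_reset, _, _ = gru_step(x_t, h_prev, make_params(bz=10.0, br=-10.0))  # r ~ 0
h_use, _, _ = gru_step(x_t, h_prev, make_params(bz=10.0, br=10.0))     # z, r ~ 1
```

In the first case the state barely moves (suitable for low-curvature stretches of the path), in the second the previous state is effectively discarded, and in the third the candidate is driven by the previous state through the recurrent weight.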

The points of the first cutting path at successive moments are labeled in order from the first point to the last. The cutting path at a given time can therefore be fitted either forward, from the initial cut-in point up to that time, or backward, from the end of the cut back to that time. Finally, the result is fused with the cutting path features fitted by the double-ended GRU network. In the double-ended GRU network, $W$ denotes the weight matrix, and the hidden states and weight values of the forward and backward structures are denoted by $\overrightarrow{\mathbf{h}}_t$, $\overleftarrow{\mathbf{h}}_t$, $\overrightarrow{W}$, and $\overleftarrow{W}$, respectively. In this way, the temporal correlation of the input cutting path points can be extracted in both directions. The detailed framework is shown in Figure 6.
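The two-direction fitting and summation fusion can be sketched by running one GRU over the path from the cut-in point and a second GRU over the reversed path from the end point, re-aligning the backward states, and summing weighted states per point. Everything here is illustrative: a scalar hidden state, two-dimensional input points instead of the full state tuple, and hypothetical names and weights (`run_gru`, `bidirectional_fuse`, `w_fwd`, `w_bwd`).

```python
import math
from typing import Any, Dict, List, Sequence

Params = Dict[str, Any]

def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))

def dot(w: Sequence[float], x: Sequence[float]) -> float:
    return sum(wi * xi for wi, xi in zip(w, x))

def gru_step(x: Sequence[float], h: float, p: Params) -> float:
    # Standard GRU update (Cho et al. convention), scalar hidden state.
    z = sigmoid(dot(p["Wz"], x) + p["Uz"] * h + p["bz"])
    r = sigmoid(dot(p["Wr"], x) + p["Ur"] * h + p["br"])
    h_cand = math.tanh(dot(p["Wh"], x) + p["Uh"] * (r * h) + p["bh"])
    return (1.0 - z) * h + z * h_cand

def run_gru(seq: Sequence[Sequence[float]], p: Params) -> List[float]:
    h, states = 0.0, []
    for x in seq:
        h = gru_step(x, h, p)
        states.append(h)
    return states

def bidirectional_fuse(seq, p_fwd: Params, p_bwd: Params,
                       w_fwd: float = 1.0, w_bwd: float = 1.0) -> List[float]:
    forward = run_gru(seq, p_fwd)                # from the cut-in point onward
    backward = run_gru(seq[::-1], p_bwd)[::-1]   # from the end point, re-aligned
    # Fusion by weighted summation of the two directions at each path point.
    return [w_fwd * hf + w_bwd * hb for hf, hb in zip(forward, backward)]

def make_params(scale: float) -> Params:
    return {"Wz": [scale, 0.0], "Uz": 0.5, "bz": 0.0,
            "Wr": [0.0, scale], "Ur": 0.5, "br": 0.0,
            "Wh": [scale, -scale], "Uh": 1.0, "bh": 0.0}

path = [[0.0, 0.1], [0.2, 0.3], [0.4, 0.2], [0.6, 0.0]]
fused = bidirectional_fuse(path, make_params(1.0), make_params(-1.0))
```

At the first point the forward state has seen only that point, while the backward state has already seen the whole path, so the summed feature at every position carries context from both ends of the cut.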

2.3. Definition of a Loss Function

A semantic segmentation neural network is applied to the pig carcass cutting task. The main challenge is the lack of feature differentiation caused by the high similarity between the area to be cut and the background, which makes accurate cutting path segmentation difficult. Moreover, body size differs between pig carcasses, which leads to an uneven distribution of key-part categories in the segmentation process, and the generated cutting paths have difficulty adapting to this body size variability. Therefore, to optimize the proposed model, a cross-entropy loss $\mathcal{L}_{\mathrm{CE}}$ is applied between the segmentation result and the ground truth, and a dice loss $\mathcal{L}_{\mathrm{Dice}}$ is applied between the generated cutting path and the standard cutting path. Cross-entropy loss provides dense pixel-wise classification supervision and penalizes every misclassified pixel, including those of small or rare anatomical structures; its logarithmic term yields stable gradients during backpropagation. Dice loss directly optimizes the overlap between the predicted and ground-truth regions, which makes it suitable for enforcing spatial consistency in cutting path generation and mitigates the foreground/background imbalance caused by size variability among carcasses. The two losses therefore complement each other in addressing pig carcass segmentation and cutting path generation. In this paper, the total loss $\mathcal{L}_{\mathrm{total}}$, which combines the dice loss and the cross-entropy loss, is used to optimize the fitting of pig carcass cutting paths:

$$\mathcal{L}_{\mathrm{total}} = \lambda_{1}\,\mathcal{L}_{\mathrm{Dice}} + \lambda_{2}\,\mathcal{L}_{\mathrm{CE}},$$

where both terms are computed from the ground-truth image $y$ and the predicted probability map $p$ over the pixels $i = 1, \dots, N$, $N$ is the total number of pixels, and $\lambda_{1}$ and $\lambda_{2}$ are the balancing factors.
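A minimal sketch of the combined objective, assuming a binary setting (cutting-path pixel versus background) so that the cross-entropy term is binary cross-entropy, and using a soft dice loss with a small smoothing constant. The dice weight 0.6 and cross-entropy weight 0.4 correspond to the setting reported in Table 1; the function names and the smoothing and clipping constants are hypothetical choices for the sketch.

```python
import math
from typing import Sequence

def dice_loss(y_true: Sequence[float], p_pred: Sequence[float],
              eps: float = 1e-6) -> float:
    """Soft dice loss: 1 - 2|Y.P| / (|Y| + |P|), smoothed by eps."""
    inter = sum(y * p for y, p in zip(y_true, p_pred))
    denom = sum(y_true) + sum(p_pred)
    return 1.0 - (2.0 * inter + eps) / (denom + eps)

def bce_loss(y_true: Sequence[float], p_pred: Sequence[float],
             eps: float = 1e-7) -> float:
    """Binary cross-entropy averaged over the N pixels."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)   # clip to avoid log(0)
        total += -(y * math.log(p) + (1.0 - y) * math.log(1.0 - p))
    return total / len(y_true)

def total_loss(y_true: Sequence[float], p_pred: Sequence[float],
               lambda_dice: float = 0.6, lambda_ce: float = 0.4) -> float:
    return (lambda_dice * dice_loss(y_true, p_pred)
            + lambda_ce * bce_loss(y_true, p_pred))

# Flattened ground-truth mask and predicted probability map.
y = [1.0, 1.0, 0.0, 0.0, 1.0, 0.0]
p_good = [0.9, 0.8, 0.1, 0.2, 0.95, 0.05]
p_poor = [0.5] * len(y)
```

For a multi-class segmentation head the cross-entropy term would instead use softmax probabilities per class, and the dice term is commonly averaged over classes.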

A higher balancing factor for the dice loss prioritizes spatial overlap, which is critical for cutting paths, while the balancing factor for the cross-entropy loss refines pixel-level accuracy. The F1-score ranges from 0 to 1, where 1 indicates the best model output and 0 the worst. As shown in Table 1, the best F1-score (0.89) is obtained when the balancing factors of the dice loss and the cross-entropy loss are set to 0.6 and 0.4, respectively.
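The F1-score used to compare loss weightings can be computed pixel-wise from a binarized prediction. The sketch assumes a 0.5 threshold on the probability map and treats two empty masks as perfect agreement; both are conventions chosen for illustration, and the names `binarize` and `f1_score` are hypothetical. For binary masks, $F1 = 2TP/(2TP + FP + FN)$ is identical to the Dice coefficient, which is one reason the dice term in the loss aligns well with this metric.

```python
from typing import List, Sequence

def binarize(probs: Sequence[float], threshold: float = 0.5) -> List[int]:
    return [1 if p >= threshold else 0 for p in probs]

def f1_score(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    fp = sum(1 for t, p in zip(y_true, y_pred) if p and not t)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t and not p)
    if tp + fp + fn == 0:
        return 1.0   # both masks empty: counted as perfect agreement
    return 2 * tp / (2 * tp + fp + fn)

y_true = [1, 1, 0, 0, 1]
probs = [0.9, 0.3, 0.2, 0.7, 0.8]
print(f1_score(y_true, binarize(probs)))  # 0.6666666666666666
```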