1. Introduction

Microservice architecture has emerged as a leading paradigm for building scalable, modular, and maintainable distributed systems [1, 2]. By decomposing monolithic applications into loosely coupled, independently deployable services, microservices enable rapid development and continuous delivery. However, the dynamic and heterogeneous nature of these systems makes ensuring reliability a complex challenge [3]. Detecting anomalies in service call patterns is crucial, as these anomalies often indicate failures, misconfigurations, or security breaches that can propagate across the system.

A central challenge in microservice anomaly detection is the severe class asymmetry, referring to both the extreme disproportion between normal and anomalous instances and the heterogeneity of anomaly patterns caused by the scarcity of labeled anomaly data [4]. Normal instances dominate the dataset, while anomalies are rare, diverse, and often subtle in both structure and attributes. For example, in financial fraud detection scenarios, anomalous events may account for only 0.1%

Graph data augmentation has been explored as a solution to the asymmetry problem [5], with strategies such as edge addition, node feature swapping, and edge perturbation aiming to improve model generalization. Yet most existing approaches are random or predefined, failing to consider dataset-specific asymmetry patterns in anomaly distributions. Inappropriate transformations may disrupt critical structural patterns and degrade detection performance. Empirical studies show that augmentation effectiveness varies drastically across datasets, emphasizing the need for adaptive selection strategies to tailor transformations according to anomaly distribution characteristics.

To address this challenge, we propose Heterogeneous Graph Adaptive Augmentation (HGAA), a framework that dynamically adjusts augmentation strategies based on feedback from anomaly distributions. HGAA guides augmentation towards transformations that are likely to produce meaningful variations while avoiding operations that could introduce noise or distort structural patterns. Experiments on two real-world datasets demonstrate that HGAA consistently outperforms competitive baselines in AUC and AP, showing the potential of adaptive augmentation to enhance microservice anomaly detection in heterogeneous environments.

Our main contributions are summarized as follows:

We propose HGAA, a dataset-adaptive graph augmentation framework designed to improve anomaly detection under severe class asymmetry and anomaly-type asymmetry.

We design a filter network that learns anomaly distributions and selectively retains effective augmentations, thereby optimizing the training process.

We validate the effectiveness and efficiency of HGAA through extensive comparative and ablation studies on two real-world microservice datasets: TraceLog and FlowGraph.

This paper is organized as follows. Section 1 introduces the research background, the related tasks, and the main contributions of this work. Section 2 provides a review of Graph Neural Networks (GNNs), the task of graph-level anomaly detection, and related studies, while also discussing existing graph data augmentation methods and their limitations. Section 3 presents the proposed approach, which consists of three components: the graph augmentation method, the filter network, and the adaptive augmentation training strategy. Section 4 reports the experimental results, where the effectiveness of the proposed method is validated across two datasets and multiple models. Finally, Section 5 concludes the paper by summarizing the main methodology and findings.

2. Related Work

2.1. From General GNNs to Graph Anomaly Detection

Graph Neural Networks (GNNs) have become powerful tools for modeling structured data. As the foundational architecture for processing non-Euclidean graph data [6], representative models such as GCN [7], GraphSAGE [8], and GAT [9] learn node and graph embeddings by aggregating neighbor information or applying attention to capture structural dependencies. However, these homogeneous GNNs have inherent limitations: GCN struggles with scalability due to its full-graph convolution mechanism; GraphSAGE improves efficiency via neighborhood sampling but ignores semantic differences between edge types; and GAT employs attention to weigh neighbors yet cannot distinguish heterogeneous relations. These limitations restrict their performance in task-specific scenarios such as anomaly detection and under class imbalance [10, 11]. For heterogeneous graph scenarios (e.g., microservice architectures with diverse node/edge types), heterogeneous GNNs like HetGNN [10], HAN [11], and HGT [12] are more naturally aligned with practical needs. HetGNN encodes node attributes and structures separately but incurs high computational overhead; HAN uses meta-path attention but relies on manually predefined paths, risking missing anomaly-critical relations; HGT learns meta-relations automatically with linear complexity yet still struggles with imbalanced type distributions [5].

Graph-level anomaly detection aims to identify abnormal entire graphs or substructures, playing a crucial role in cybersecurity, fraud detection, and biochemical analysis [13]. Building upon foundational GNN architectures, researchers have developed specialized methods for this task. Early adaptations include knowledge distillation approaches such as GLocalKD [14], which transfers knowledge from complex teacher GNNs to compact student models while preserving performance on both local and global graph properties, and sequence-based methods like DeepLog [15] and DeepTraLog [1]. DeepLog applies LSTM models to sequential data (and can be adapted to graph-derived sequences for temporal anomaly detection), while DeepTraLog integrates gated GNNs with DeepSVDD (a deep one-class classification method) to address class asymmetry and temporal dynamics in graph anomaly detection [1]. For heterogeneous graphs, HRGCN [5] constructs relational hierarchies and applies meta-path-specific convolutions to better model multi-relational systems. However, these methods often assume either sufficient anomalous data or homogeneous relational semantics, which weakens their effectiveness under severe class asymmetry or heterogeneous constraints. Recent surveys further emphasize that detecting rare anomalies in dynamic, multi-relational graphs remains an open challenge [13, 16, 17, 18].

Gap

Most existing anomaly detection methods either (i) rely on abundant anomalous instances, (ii) overlook heterogeneity in graph relations (failing to leverage heterogeneous GNN advantages or address their limitations like HAN’s manual meta-paths), or (iii) neglect augmentation strategies that selectively amplify minority anomalous substructures. This motivates the need for augmentation-aware strategies tailored to imbalanced, heterogeneous anomaly detection.

2.2. Graph Data Augmentation

To improve robustness and generalization under limited or imbalanced training data (a core challenge in graph anomaly detection [19]), graph data augmentation (GDA) has become a central tool. Early GDA methods include GraphMix [20], which extends the Mixup technique from images to graphs by linearly interpolating existing node features to generate synthetic training examples with blended attributes; GraphSAINT [21], which adopts subgraph sampling to improve GNN scalability, a process that also serves as augmentation by exposing the model to diverse local graph views; and DropEdge [22], a simple yet effective regularization technique that randomly removes edges during training to prevent overfitting, though it risks deleting critical edges for anomaly distinction [23]. Contrastive learning frameworks (e.g., GraphCL [24]) further leverage augmentation by maximizing agreement between representations of two different augmented views of the same graph, aiming to learn rich, task-agnostic graph embeddings via positive/negative pair construction [24].

More recent and sophisticated advances in GDA emphasize adaptive and structure-aware strategies. Adversarial augmentation methods like FLAG [23] (Free Large-scale Adversarial Augmentation on Graphs) generate challenging training samples by adding small, structure-aware noise to node features (maximizing training loss to improve model robustness against adversarial attacks). Among structure-aware methods, GraphCrop [25] performs instance-level cropping (masking or removing parts of graph instances) to enforce model robustness to incomplete information, while Graph Transplant [26] generates diverse augmented graphs by transferring substructures between graphs to simulate unseen structural compositions [26]. Consistency-based models like GRAND [27] (Graph Random Neural Network) create multiple augmented graph views via random propagation and use consistency regularization to ensure similar model outputs for perturbed views [27].

Furthermore, adaptive augmentation approaches (e.g., [28]) move beyond fixed random policies, learning optimal augmentation parameters or strategies from data or task feedback to generate task-specific variations. Adversarial and structure-aware methods like GAUG [29] and Graph Structure Learning [30] focus on generating augmentations that either enhance robustness against specific perturbations (GAUG) or explicitly manipulate graph structures to create meaningful training variations (Graph Structure Learning) [29, 30]. For heterogeneous graphs, GAAD [31] combines augmentation with adaptive denoising, demonstrating that naive application of homogeneous augmentations may corrupt semantic constraints (e.g., invalid cross-type edges) and lose anomaly-relevant signals [31]. Despite these advancements, most adaptive methods still face limitations: they are designed under homogeneous graph assumptions [28, 29], lack relation awareness (failing to model type-specific dependencies), and optimize for general classification rather than amplifying minority anomalous substructures, all of which are critical gaps for heterogeneous anomaly detection [19].

Current augmentation strategies face three limitations: (i) they assume homogeneous graphs, risking semantic violations when applied to heterogeneous domains, (ii) they are relation-unaware, ignoring type-specific interaction patterns crucial for anomaly detection, and (iii) they optimize for general representation quality rather than emphasizing rare anomalous substructures.

2.3. Positioning of HGAA

Bringing together the above threads, we observe that general GNN models (both homogeneous and heterogeneous) excel at representation learning but struggle under class asymmetry [6, 11, 12]; anomaly detection methods (e.g., GLocalKD, DeepTraLog, HRGCN) capture abnormality patterns but neglect adaptive augmentation [1, 5, 14]; and adaptive augmentation methods (e.g., FLAG, GRAND, GAAD) improve robustness but often ignore heterogeneity and anomaly-specific objectives [23, 27, 31]. This motivates our proposed Heterogeneous Graph Adaptive Augmentation (HGAA) framework.

Unlike prior methods, HGAA (i) introduces relation-aware augmentation operators that preserve heterogeneity (addressing limitations of [28, 29]) while selectively amplifying minority anomalous substructures; (ii) employs a filter network to discard unrealistic or semantically invalid augmentations (solving the semantic corruption issue noted in [31]); and (iii) integrates augmentation-aware training strategies that adapt to imbalanced settings (tackling class asymmetry challenges in [1, 13]). In doing so, HGAA directly addresses the identified gaps in anomaly detection and adaptive augmentation, providing a principled solution for heterogeneous, imbalanced graph anomaly detection.

3. Methodology

In this section, the complete implementation process of the proposed method is systematically elaborated, which comprises three core components: the graph augmentation method, the filter network architecture, and the training algorithm. For the filter network design, knowledge distillation is innovatively incorporated into a heterogeneous Graph Neural Network (HGNN), enabling a graph augmentation framework tailored for heterogeneous graphs. Furthermore, to enhance the model’s adaptability and generalization across diverse application scenarios, two complementary training strategies are proposed.

A heterogeneous graph is denoted as $G = (V, E, \mathcal{T}_V, \mathcal{T}_E)$, where $v_i \in V$ represents the $i$-th node and $e_{ij} \in E$ denotes the directed edge from node $v_i$ to node $v_j$. The sets $\mathcal{T}_V$ and $\mathcal{T}_E$ contain the types of nodes and edges, respectively. Node indices start from 0. Each node $v_i$ is associated with a feature vector $x_i \in \mathbb{R}^n$, where $n$ is the feature dimension. The notation $\phi(v_i)$ indicates the type of node $v_i$, and $\psi(e_{ij})$ indicates the type of edge $e_{ij}$.

3.1. Graph Augmentation Methods

In this subsection, several graph augmentation techniques employed in this work are introduced, accompanied by their corresponding mathematical formulations.

The design of these graph augmentation techniques is motivated by the constraints of real-world edge systems.

3.1.1. Edge Addition

This method randomly adds new edges to the original graph to enhance connectivity and enable the model to access richer neighborhood information. Specifically, a fraction of the total edges is added by random sampling [5]. The types of the newly added edges are sampled from the set of edge types. To preserve dataset consistency, the types of sampled nodes and edges follow the original distributions.

In microservice systems, anomalies often arise when certain services lack sufficient connectivity information (e.g., sparse call traces or incomplete logs). Edge addition simulates missing or latent dependencies by enriching neighborhoods, allowing the model to better generalize in the presence of incomplete or asymmetric graph structures.
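A minimal sketch of edge addition is given below, under stated assumptions: the graph is stored as a list of directed `(src, dst)` pairs with a parallel list of edge-type labels, and the function name `add_edges` and its parameters are hypothetical. New edge types are drawn from the empirical type distribution of the existing edges; compatibility between node types and edge types is not checked in this simplified version.

```python
import random
from collections import Counter


def add_edges(edges, edge_types, fraction, num_nodes, seed=None):
    """Add about fraction * len(edges) new directed edges (illustrative only)."""
    rng = random.Random(seed)
    existing = set(edges)
    # Empirical distribution of edge types in the original graph.
    types, weights = zip(*Counter(edge_types).items())
    # Never request more edges than there are free (non-self-loop) slots.
    free_slots = num_nodes * (num_nodes - 1) - len(existing)
    n_new = min(round(fraction * len(edges)), free_slots)
    new_edges, new_types = [], []
    while len(new_edges) < n_new:
        u, v = rng.randrange(num_nodes), rng.randrange(num_nodes)
        if u == v or (u, v) in existing:
            continue  # skip self-loops and duplicates
        existing.add((u, v))
        new_edges.append((u, v))
        # The new edge type follows the original type distribution.
        new_types.append(rng.choices(types, weights=weights)[0])
    return edges + new_edges, edge_types + new_types
```

For a four-edge graph and `fraction=0.5`, the call returns six edges, with the original four unchanged at the front of the list.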

3.1.2. Node and Edge Type Swap

This augmentation randomly swaps the types of nodes or edges while keeping the graph's structure and node features unchanged. Given two distinct node types, the type labels of their corresponding nodes are exchanged. An analogous process applies to edge types.

In heterogeneous microservice graphs, anomaly detection is highly sensitive to type semantics (e.g., API calls vs. database queries). Type swap introduces controlled perturbations to simulate mislabeling or unexpected role changes of services and interactions. This challenges the model to distinguish between structural anomalies and benign type variations, improving robustness against asymmetric anomaly classes.
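The type swap can be sketched as a relabeling of two randomly chosen types. The helper below is illustrative only: `swap_types` is a hypothetical name, and it operates on a plain list of type labels, so the same function can be applied to node types or to edge types.

```python
import random


def swap_types(labels, rng=None):
    """Exchange two randomly chosen distinct type labels (illustrative only)."""
    rng = rng or random.Random()
    distinct = sorted(set(labels))
    if len(distinct) < 2:
        return list(labels)  # nothing to swap with a single type
    a, b = rng.sample(distinct, 2)
    mapping = {a: b, b: a}
    # Structure and features are untouched; only the labels change.
    return [mapping.get(t, t) for t in labels]
```

Because only labels are exchanged, the number of nodes (or edges) and the graph topology stay the same, while the per-type counts of the two chosen types are swapped.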

3.1.3. Heterogeneous Edge Perturbation

To simulate noise or uncertainty in heterogeneous graphs, this method perturbs edges by applying an XOR operation between the original adjacency matrix $A$ and a perturbation matrix $R$ generated from prior distributions [5]. In other words, an edge is flipped (added if absent, removed if present) wherever $R$ has a nonzero entry.

In distributed systems, anomalies can manifest as unexpected communication links (e.g., unauthorized service calls) or missing expected ones. Edge perturbation captures this by injecting or removing edges while respecting type distributions. It exposes the model to abnormal connectivity patterns that are especially relevant under severe class asymmetry, where rare anomalies may involve subtle topological changes.
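The XOR perturbation can be illustrated with a dense 0/1 adjacency matrix. In this sketch the perturbation matrix is drawn from a single Bernoulli distribution with parameter `flip_prob`; this is an assumption made for brevity, since the type-specific priors used by the method are not reproduced here, and the function name is hypothetical.

```python
import random


def perturb_adjacency(adj, flip_prob, seed=None):
    """Return adj XOR R, where R is a random 0/1 matrix (illustrative only)."""
    rng = random.Random(seed)
    n = len(adj)
    # R[i][j] = 1 marks an entry to flip; the diagonal is left untouched.
    r = [[1 if i != j and rng.random() < flip_prob else 0 for j in range(n)]
         for i in range(n)]
    # XOR adds an edge where none existed and removes one where it did.
    return [[adj[i][j] ^ r[i][j] for j in range(n)] for i in range(n)]
```

With `flip_prob=0` the graph is returned unchanged, and with `flip_prob=1` every off-diagonal entry is inverted, which brackets the range of perturbation strength.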

3.1.4. Node Feature Swap

This method swaps the feature vectors between randomly selected pairs of nodes, thereby diversifying feature representation while preserving the graph topology.

In microservice traces, feature attributes often correspond to runtime statistics such as latency, throughput, or error codes. Swapping node features simulates unexpected context shifts (e.g., low-latency services suddenly behaving like high-latency ones). This helps the model generalize across diverse runtime conditions and avoid overfitting to majority anomaly types.
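A short sketch of node feature swapping follows. The function name and the `num_pairs` parameter are hypothetical, and the sketch does not restrict swaps to nodes of the same type, which a heterogeneous implementation may need to do when feature dimensions differ across types.

```python
import random


def swap_node_features(features, num_pairs, seed=None):
    """Swap feature vectors between random node pairs (illustrative only)."""
    rng = random.Random(seed)
    out = [list(f) for f in features]  # copy so the input stays intact
    for _ in range(num_pairs):
        i, j = rng.sample(range(len(out)), 2)  # two distinct node indices
        out[i], out[j] = out[j], out[i]
    return out
```

The multiset of feature vectors is preserved; only their assignment to nodes changes, so the edge list can be reused as is.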

3.1.5. Edge Direction Swap

This augmentation reverses the direction of a fraction of randomly selected directed edges, helping the model better capture relational dynamics.

Microservice interactions are directional (e.g., client → service, service → database). Anomalies may occur when dependencies are reversed or misconfigured (e.g., a service calling its parent). Direction swap simulates these abnormal call patterns, making the model more sensitive to asymmetric anomalies that manifest as directional inconsistencies.
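Direction swapping can be sketched on an edge list as follows. The name `reverse_edges` and the rounding of the number of reversed edges are assumptions made for this illustration.

```python
import random


def reverse_edges(edges, fraction, seed=None):
    """Reverse a random fraction of directed edges (illustrative only)."""
    rng = random.Random(seed)
    k = round(fraction * len(edges))
    chosen = set(rng.sample(range(len(edges)), k))  # indices to reverse
    return [(v, u) if idx in chosen else (u, v)
            for idx, (u, v) in enumerate(edges)]
```

Edge order and edge count are preserved, so a parallel list of edge types remains aligned with the returned edges.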

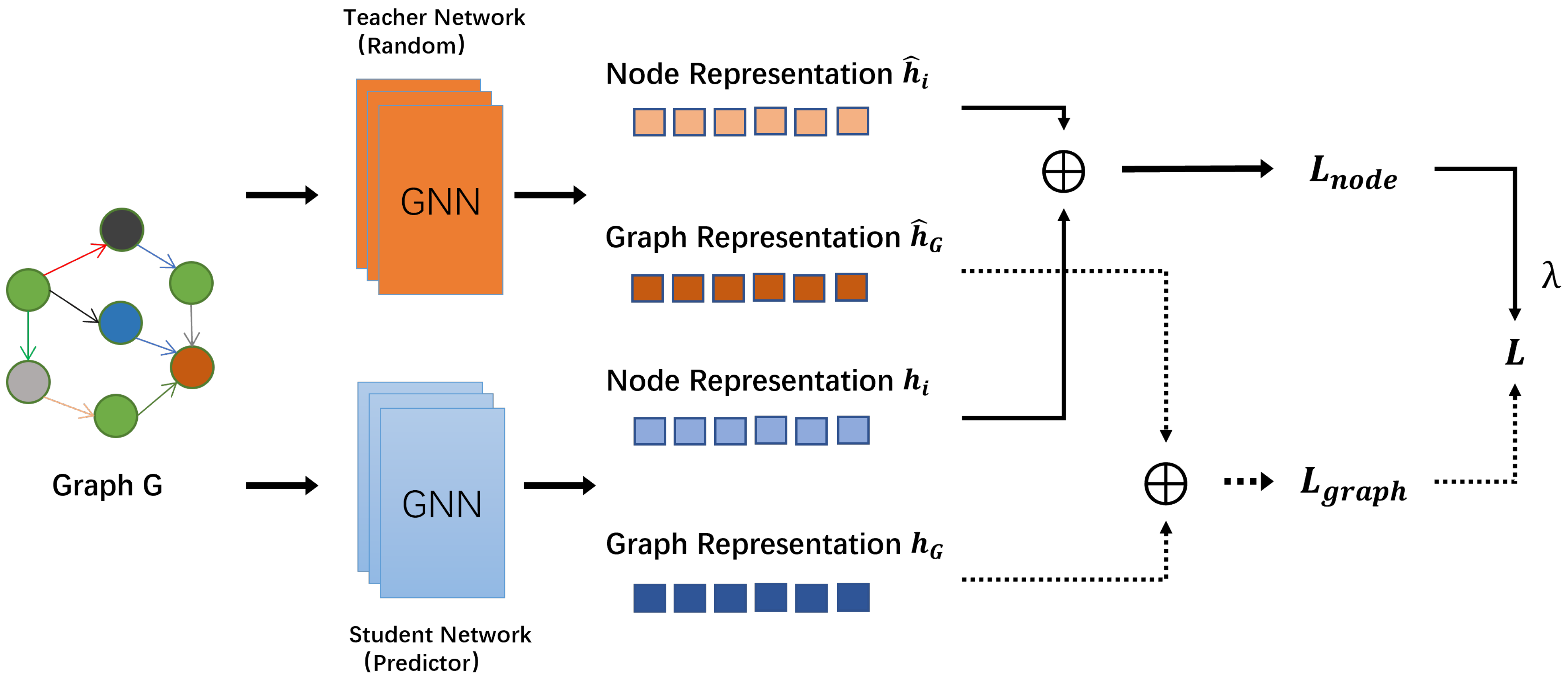

3.2. Filter Network

Graphs may exhibit two types of anomalies: local and global. Following [14], a graph is locally anomalous if it contains abnormal nodes, and globally anomalous if its overall properties deviate from the norm. To simultaneously capture both, a knowledge-distillation-based filter network using a heterogeneous Graph Neural Network is proposed.

The architecture consists of two networks (see Figure 1): a teacher network with fixed parameters and a student network that is initialized randomly and trained. Both share an identical structure, and each produces node-level and graph-level embeddings. The node-level loss is computed as the maximum discrepancy between the student and teacher embeddings across all nodes to emphasize extreme anomalies. This design differs from simpler anomaly scoring methods (e.g., one-class SVM, DeepSVDD, or autoencoders), which often focus only on either global consistency or local irregularities. By combining graph-level and node-level signals through knowledge distillation, the filter network ensures that both holistic distributional shifts and fine-grained deviations are simultaneously captured.

Here, a hyperparameter balances the importance of the node-level loss and the graph-level loss. This dual-objective formulation allows the model to highlight rare or extreme local anomalies without losing sensitivity to global graph-level irregularities, which is crucial for asymmetric anomaly distributions.

The anomaly score for a graph is defined in terms of the trainable (student) and fixed (teacher) parameters of the filter model. Importantly, the teacher–student paradigm also reduces computational overhead compared to adversarial or ensemble-based anomaly scoring approaches, making the framework more suitable for resource-constrained edge environments.
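For concreteness, one plausible form of the distillation objective and the resulting anomaly score is sketched below, written in the style of the GLocalKD formulation [14] that this section follows. Every symbol is an assumption introduced for illustration: $h_G^{S}, h_G^{T}$ are the student and teacher graph-level embeddings, $h_i^{S}, h_i^{T}$ the node-level embeddings, $\lambda$ the balancing hyperparameter, and $\theta$, $\hat{\theta}$ the trainable and fixed parameters. The choice of the squared Euclidean distance and the placement of $\lambda$ on the node-level term are likewise assumptions rather than the exact equations of the method.

```latex
\begin{aligned}
L_{\text{graph}}(G) &= \bigl\lVert h_G^{S} - h_G^{T} \bigr\rVert_2^2, \\
L_{\text{node}}(G)  &= \max_{v_i \in V} \bigl\lVert h_i^{S} - h_i^{T} \bigr\rVert_2^2, \\
L(G)                &= L_{\text{graph}}(G) + \lambda \, L_{\text{node}}(G), \\
\operatorname{score}(G) &= L_{\text{graph}}(G; \theta, \hat{\theta}) + \lambda \, L_{\text{node}}(G; \theta, \hat{\theta}).
\end{aligned}
```

Under this reading, a graph whose student embeddings deviate strongly from the teacher's, either globally or at a single node, receives a high anomaly score.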

3.3. Training Algorithm

3.3.1. Adaptive Augmentation and Training Strategy

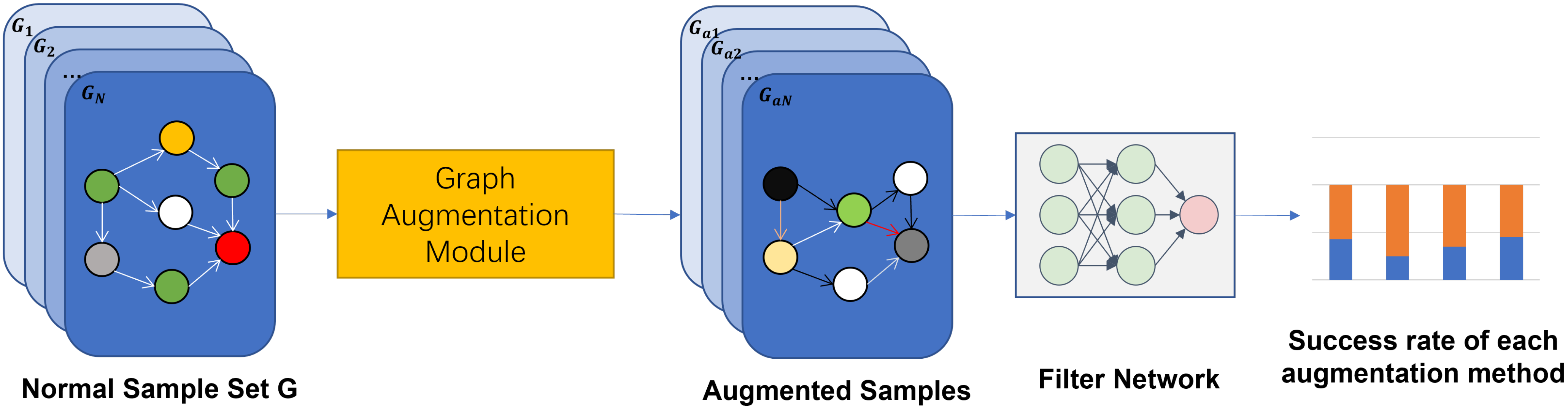

To ensure the quality and effectiveness of the augmented data used for anomaly detection, a filter-based adaptive augmentation strategy is designed. Each augmentation method is applied to a batch of normal graphs, resulting in a set of augmented graphs. These are input into a pre-trained filter network for inference, which outputs a prediction score for each augmented graph.

To assess the filtering quality of augmented samples, the precision metric is computed:

$$\text{Precision} = \frac{TP}{TP + FP},$$

where $TP$ denotes true positives (correctly accepted augmented samples), and $FP$ denotes false positives (incorrectly accepted samples).

The number of successful augmentations for each augmentation method $e$ is recorded, and the corresponding success rate is calculated as:

$$r_e = \frac{S_e}{N_e},$$

where $S_e$ represents the count of successful augmentations using method $e$ and $N_e$ is the total number of augmentations performed using method $e$.

The success rates are then normalized to form a sampling distribution over augmentation methods:

$$P(e) = \frac{r_e}{\sum_{e'} r_{e'}}.$$

This ensures that augmentation methods with higher success rates are more likely to be selected in future training iterations, while still preserving exploration of other methods. The overall pipeline is depicted in Figure 2.
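The conversion from per-method success counts to a sampling distribution can be sketched as follows. The function name, the dictionary layout, and the uniform fallback used when no method has succeeded yet are assumptions of this illustration.

```python
def sampling_distribution(successes, totals):
    """Normalize per-method success rates into selection probabilities."""
    # Success rate of each method: successful augmentations / attempts.
    rates = {m: (successes[m] / totals[m] if totals[m] else 0.0)
             for m in totals}
    z = sum(rates.values())
    if z == 0:
        # Assumed fallback: uniform selection when nothing has succeeded.
        return {m: 1.0 / len(rates) for m in rates}
    return {m: r / z for m, r in rates.items()}
```

For example, two methods with 8 and 2 successes out of 10 attempts each have success rates of 0.8 and 0.2, which are already normalized, so they are selected with probabilities 0.8 and 0.2.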

Training Workflow

Once the sampling distribution over augmentation methods is obtained, training is initiated with dynamically sampled augmentations. For each normal sample, an augmentation method E is selected according to this distribution. The augmented sample is then sent to the filter network with a checking probability determined by the success rate of E and a filtering hyperparameter. This hyperparameter controls the aggressiveness of filtering: lower success rates result in a higher checking probability. If the augmented sample fails the filter test, a new sample is selected and re-augmented using method E, repeating the process until a qualified sample is produced.

This probabilistic filtering mechanism balances the trade-off between maintaining data diversity and ensuring sample quality by dynamically adjusting the filtering intensity based on augmentation method performance. Augmentation methods with lower reliability are more strictly filtered, while high-performing methods contribute more samples to the training set.

As illustrated in Figure 3, the augmentation pipeline proceeds in a probabilistic yet structured manner. First, a normal graph sample g is randomly selected from the dataset. An augmentation method E is then chosen according to its selection probability, producing an augmented graph. Each augmented graph is passed to the filter network with a certain checking probability. If the augmented graph is not selected for filtering, it is directly added to the training batch. If it is sent to the filter network, the network evaluates whether the augmentation preserves task-relevant semantics. Augmented samples that pass this check are admitted into the batch, while those that fail are discarded, and the pipeline re-samples a new normal graph g to undergo augmentation with method E again. This iterative process ensures that each training batch is enriched with both diverse and semantically valid augmentations, while filtering out low-quality or misleading samples.
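The retry loop described above can be sketched as follows. Several parts are assumptions made for illustration: the checking probability is taken to be `(1 - success_rate) ** alpha`, which merely satisfies the stated property that lower success rates give a higher checking probability; `augment` and `passes_filter` are hypothetical callables standing in for the chosen augmentation method and the filter network; and `max_tries` is a safety bound added here that the described procedure does not have.

```python
import random


def check_probability(success_rate, alpha=1.0):
    """Assumed form: lower success rate -> higher checking probability."""
    return (1.0 - success_rate) ** alpha


def augment_with_filter(graphs, augment, passes_filter, success_rate,
                        alpha=1.0, rng=None, max_tries=100):
    """Return (normal graph, augmented graph) accepted by the pipeline."""
    rng = rng or random.Random()
    p_check = check_probability(success_rate, alpha)
    for _ in range(max_tries):
        g = rng.choice(graphs)      # sample a normal graph
        g_aug = augment(g)          # apply the selected method E
        # Skip the filter with probability 1 - p_check; otherwise the
        # augmented graph must pass the filter to be admitted.
        if rng.random() >= p_check or passes_filter(g_aug):
            return g, g_aug
    raise RuntimeError("no augmented sample passed the filter")
```

A method with a success rate of 1.0 is never checked under this assumed form, whereas a method with a success rate of 0.0 is always checked, so its samples enter the batch only if the filter accepts them.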

Batch Composition

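This process continues until the number of normal samples matches the number of augmented (pseudo-anomalous) samples in the batch:

$$N_{\text{orig}} = N_{\text{aug}},$$

where $N_{\text{orig}}$ and $N_{\text{aug}}$ are the counts of original and augmented samples, respectively.

Once this balance is reached, the batch is constructed as the union of the original normal samples and the augmented samples.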

This batch is then fed into the anomaly detection model for training. By continuously updating and incorporating high-quality samples, the model gradually learns to detect subtle anomalies while maintaining generalization.

3.3.2. Bias-Aware Augmentation for Rare Anomaly Types

In real-world anomaly detection, the most critical anomalies, such as security breaches or rare system faults, are often the most underrepresented in training data. This leads to poor model performance on the cases where accuracy matters most. This issue is especially acute in resource-constrained edge computing systems, where the training process must be highly efficient and explicitly focused on detecting these high-priority anomalies.

Therefore, a bias-aware augmentation strategy is further introduced within the smart filtering framework to increase the representation of such anomalies during training without sacrificing diversity or balance. The process begins with an anomaly-type classifier that assigns each anomaly sample to a predefined class. Based on this categorization, anomalies are grouped into two categories: (1) critical/rare anomalies, which are subjected to a biased augmentation procedure and processed through the filter network; (2) common anomalies, which follow standard augmentation and bypass the filter. This ensures that the model pays special attention to critical cases while still learning from a broad range of patterns.

To implement this bias, a modified augmentation selection process is introduced. Let $P(E)$ represent the original probability of selecting augmentation method $E$. For prioritized anomaly types, a bias factor $T$ that increases the likelihood of applying selected augmentation methods is defined. With probability $T$, a designated method $E^{*}$ is force-selected, while with probability $1 - T$, the method is sampled according to the original distribution. Formally, this becomes:

$$P'(E) = \begin{cases} T + (1 - T)\,P(E), & E = E^{*}, \\ (1 - T)\,P(E), & E \neq E^{*}. \end{cases}$$

Example 1. Suppose a dataset contains two anomaly types: latency spike anomalies (accounting for 80% of all anomalies) and rare dependency–failure anomalies (only 5% of all anomalies). With a uniform augmentation strategy, the model would predominantly encounter latency spike anomalies during training, while the rare dependency–failure cases would remain severely underrepresented.

The bias-aware augmentation addresses this limitation by introducing a forced selection mechanism for augmentation methods critical to rare anomalies. For instance, assume edge perturbation (denoted as Method A) is particularly effective for enhancing the detection of dependency–failure anomalies, but it would only be selected with a 10% probability under the original probabilistic distribution. To prioritize Method A for this rare class, a 40% bias factor T is assigned to it. Specifically, when processing a dependency–failure anomaly:

First, generate a random value; if it is less than T (40% chance), Method A is directly selected.

Only if the first condition fails (60% chance) does the process revert to the original probabilistic selection, where Method A still retains its base 10% probability alongside the other augmentation methods.

This two-step selection mechanism ensures Method A is prioritized for the rare dependency–failure class, giving an effective selection rate of 0.40 + 0.60 × 0.10 = 46%, well above the original 10%, while preserving stochastic sampling for other anomaly types. Practically, this strategy increases the effective representation of dependency–failure anomalies in training batches, enabling the model to learn more balanced decision boundaries and remain sensitive to critical but infrequent anomaly patterns.
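The two-step selection in Example 1 can be sketched in a few lines. The function names and the method labels are hypothetical; the logic follows the description above, with the forced selection tried first and the base distribution used as the fallback.

```python
import random


def effective_probability(base_prob, bias):
    """Selection probability of the preferred method after biasing."""
    return bias + (1.0 - bias) * base_prob


def select_method(base_probs, preferred, bias, rng=None):
    """Force the preferred method with probability `bias`, else fall back."""
    rng = rng or random.Random()
    if rng.random() < bias:
        return preferred  # step 1: forced selection
    methods = list(base_probs)
    # Step 2: sample from the original (unbiased) distribution.
    return rng.choices(methods, weights=[base_probs[m] for m in methods])[0]
```

Repeated calls with a bias of 0.4 and a base probability of 0.1 select the preferred method in roughly 46% of draws, matching `effective_probability(0.10, 0.40)`.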

After an augmentation method is selected, samples from biased (rare/critical) categories proceed through the smart filtering pipeline, whereas non-prioritized anomalies follow the original unbiased augmentation and filtering workflow. This selective process continues until the number of augmented anomaly samples equals the number of original normal samples in the training batch.

By embedding this bias-aware augmentation into the training loop, the model is better exposed to rare and significant anomaly types, improving detection robustness and enabling more effective learning from asymmetric datasets. The probabilistic bias mechanism also preserves augmentation diversity and provides fine-grained control over the training signal without introducing significant computational overhead (Algorithm 1).

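Algorithm 1. Adaptive graph augmentation with bias-aware sampling (simplified retry version)

Require: Normal graphs, augmentation set, filter network, success counts, totals, bias factor T for the preferred augmentation method

Ensure: Balanced training batches

- 1: Precompute success probabilities:
- 2: success rate of each method ← success count / total
- 3: selection probability of each method ← normalized success rate (base probabilities)
- 4: for each training iteration do
- 5: Initialize empty batches of normal and augmented graphs
- 6: while batch not full do
- 7: Sample a normal graph g
- 8: Generate a random number in [0, 1)
- 9: if the random number is less than T then
- 10: Select preferred augmentation method E (bias applied)
- 11: else
- 12: Sample E from the base probabilities (probabilistic fallback)
- 13: end if
- 14: repeat
- 15: Apply E to g to obtain an augmented graph
- 16: Score the augmented graph with the filter network
- 17: until rand() exceeds the checking probability or the augmented graph passes the filter
- 18: Add g to the batch of normal graphs
- 19: Add the augmented graph to the batch of augmented graphs
- 20: end while
- 21: Train_model(batch, labels)
- 22: end for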

4. Experiments

4.1. Datasets

Due to the lack of public asymmetric and heterogeneous graph datasets, two real-world datasets are employed for evaluation.

Train Ticket Graph Dataset (TraceLog): TraceLog [5] is a large-scale asymmetric and heterogeneous graph dataset from a train ticket booking microservice system [32]. This dataset is widely used for anomaly detection evaluation in system log sequences. TraceLog contains four main fault categories and fourteen specific subcategories, covering different types of system anomalies, such as asynchronous interactions, multi-instance issues, configuration-related failures, and single-point failures. In this dataset, all fourteen types of system failures are treated as anomalies relative to normal system traces.

4.2. Performance Analysis

Model performance is evaluated using four key metrics in anomaly detection (all range 0–1; higher values mean better performance):

AUC (Area Under ROC Curve): Reflects the model’s overall ability to distinguish between normal samples and anomalies (1 = perfect distinction, 0.5 = random guessing).

AP (Average Precision): Focuses on balancing “reducing false anomaly predictions” and “avoiding missing true anomalies,” especially critical for imbalanced anomaly detection scenarios.

Recall: Indicates how many true anomalies the model can successfully identify (higher values mean fewer true anomalies are missed).

F1-score: Synthesizes the balance between “reducing false anomaly predictions” and “avoiding missing true anomalies,” suitable for real-world deployment where a fixed classification threshold is needed.
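The four metrics can be computed from labels (1 = anomaly, 0 = normal) and model outputs as sketched below. This is a plain-Python illustration with hypothetical function names, not the evaluation code used in the experiments; AUC is computed by pairwise comparison, AP as the mean precision at the rank of each true anomaly (ties between scores are not treated specially), and recall and F1 from thresholded predictions.

```python
def roc_auc(labels, scores):
    """Fraction of (anomaly, normal) pairs ranked correctly; ties count 0.5.

    Requires at least one anomaly and one normal sample.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def average_precision(labels, scores):
    """Mean of the precision values at the rank of each true anomaly."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    hits, total = 0, 0.0
    for rank, i in enumerate(order, start=1):
        if labels[i] == 1:
            hits += 1
            total += hits / rank
    return total / hits if hits else 0.0


def recall_f1(labels, preds):
    """Recall and F1-score from binary predictions."""
    tp = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 1)
    fp = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 1)
    fn = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 0)
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return recall, f1
```

AUC and AP are threshold-free and operate on raw anomaly scores, whereas recall and F1 depend on the classification threshold used to produce `preds`.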

4.2.1. Augmented Effect Analysis

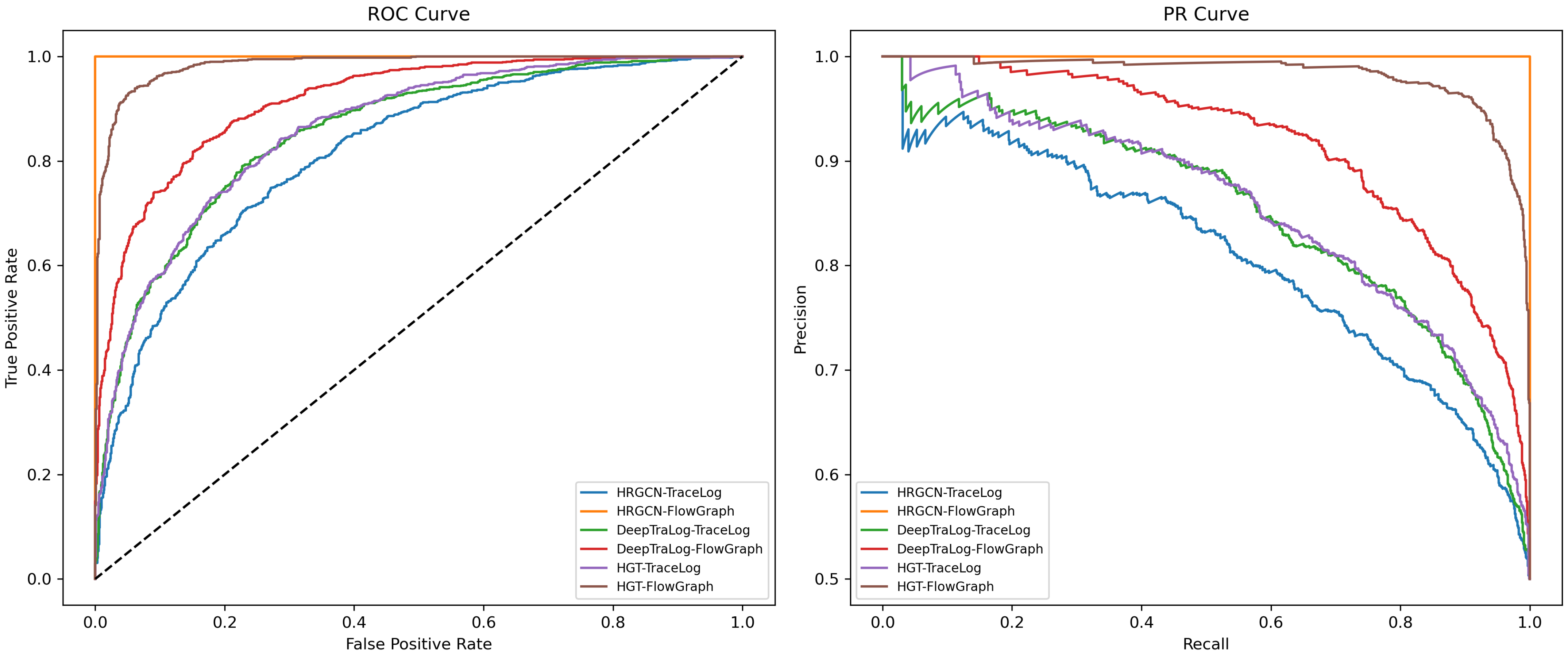

The proposed augmentation method was evaluated using three networks [1, 5, 12] on two datasets [32, 33]. The detailed results are presented in Table 1, where the best performance for each metric is highlighted in bold.

On the TraceLog dataset, our full method (ours) achieves notable improvements over the baseline: AUC increases from 0.703 to 0.820, AP from 0.655 to 0.756, F1-score from 0.742 to 0.823, and recall from 0.744 to 0.826. On FlowGraph, improvements are also observed, though less pronounced due to near-saturated baseline performance, e.g., AUC rises from 0.952 to 1.000 and AP from 0.954 to 1.000. These results confirm that the proposed method effectively enhances model performance under asymmetric anomaly distributions, with particularly strong gains on datasets where anomalies are underrepresented (Figure 4).

4.2.2. Ablation Studies and Efficiency Analysis

To further evaluate the contributions of each component in the proposed framework, we conducted a comprehensive ablation study across three models (HRGCN, DeepTraLog, and HGT) on the TraceLog and FlowGraph datasets. As shown in Table 1, the results are reported in terms of AUC, AP, F1-score, and recall, providing a detailed comparison of different variants. The ROC and PR curves are shown in Figure 4.

Filter network: Compared to the baseline, integrating the filter network consistently improves model performance by emphasizing high-quality augmented samples. On TraceLog, AUC increases by 5.5%–6.5% across different models (e.g., HRGCN: 0.703 → 0.768, HGT: 0.713 → 0.806). On FlowGraph, improvements are smaller (e.g., HRGCN: 0.952 → 0.988), indicating that filtering alone has limited impact when baseline performance is already high. However, relying solely on filtering can discard useful augmented samples, as evidenced by DeepTraLog’s AP on FlowGraph, which only rises from 0.622 to 0.689, lower than the 0.746 achieved by our adaptive method.

Percent-based selection: Using a fixed proportion of augmented samples without filtering results in moderate gains. On TraceLog with HGT, AUC improves from 0.788 (random) to 0.794 (percent), and on FlowGraph, AP reaches 0.899 compared to 0.891 (random). This demonstrates that controlling the selection ratio helps, but without filtering, low-quality or irrelevant augmentations limit effectiveness.

Bias-aware augmentation: Random augmentation produces inconsistent or marginal improvements and may reduce robustness. On TraceLog with HGT, random augmentation yields an AUC of 0.788, while bias-aware augmentation in the proposed framework achieves 0.859, a 7.1% absolute increase. On FlowGraph, AP improves from 0.891 (random) to 0.935 (ours). This confirms that accounting for anomaly type asymmetry is critical for stabilizing and enhancing performance.

Adaptive sampling: The proposed method (ours) leverages both the filter network and bias-aware probability adjustment. Compared to the filter variant, it improves AUC by an average of 4.5% and AP by 4.6% across all datasets and models. For instance, on TraceLog with DeepTraLog, AUC rises from 0.826 (filter) to 0.864 (ours), and AP from 0.743 to 0.782. On FlowGraph with HGT, AP increases from 0.905 (specific) to 0.935. These results indicate that adaptive sampling retains the benefits of filtering while avoiding excessive pruning, and effectively emphasizes rare or critical anomalies.

Performance trends across datasets and models: Improvements are more pronounced on TraceLog, which exhibits a more asymmetric anomaly distribution and lower baseline scores, highlighting the framework’s capability in handling challenging imbalanced scenarios. On FlowGraph, gains are smaller but still meaningful, particularly for AP and F1-score, demonstrating robustness and consistency of the adaptive mechanism.

As shown in Table 1, each component contributes to performance improvement. The filter, percent, and random variants all improve over the baseline, but the combination of filtering, bias-aware augmentation, and adaptive sampling in the proposed HGAA framework achieves the highest and most consistent performance across both datasets and all models. These results confirm that the framework effectively addresses asymmetric anomaly distributions in microservice-based systems while maintaining model generalization and robustness.

Time Efficiency Analysis

While the method is not optimal in terms of computational time (Table 2), it achieves a good balance between performance and efficiency. It is significantly faster than the Filter method, with an average time reduction of 71% on TraceLog and 8.6% on FlowGraph. This large difference stems from the characteristics of the datasets themselves. As shown in Figure 5, the TraceLog dataset has fewer successful augmentation samples, so if every sample must be tested by the filter before being sent to training, the running time is greatly extended. In contrast, the ratio of successful augmentation samples in the FlowGraph dataset is very high, and most of them pass the filter. The time saving on FlowGraph is therefore less pronounced than on TraceLog, but it is still an improvement.
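The dataset dependence of the time saving can be illustrated with a toy cost model: if each candidate passes the filter with probability p, roughly 1/p candidates must be generated and checked per accepted sample. The pass rates below are invented for illustration and are not measurements from the paper, and the function name `expected_candidates_per_accepted` is hypothetical.

```python
def expected_candidates_per_accepted(pass_rates, weights):
    """Expected number of generated candidates per accepted sample when
    methods are chosen with the given (unnormalized) weights."""
    total_weight = sum(weights)
    mean_pass_rate = sum(w * r for w, r in zip(weights, pass_rates)) / total_weight
    return 1.0 / mean_pass_rate


# Hypothetical pass rates: one partly useful method, five that rarely pass.
tracelog_like = [0.5, 0.02, 0.02, 0.02, 0.02, 0.02]
# Hypothetical pass rates: four supported methods that usually pass.
flowgraph_like = [0.9, 0.9, 0.9, 0.9]

for name, rates in [("TraceLog-like", tracelog_like),
                    ("FlowGraph-like", flowgraph_like)]:
    uniform = expected_candidates_per_accepted(rates, [1.0] * len(rates))
    # Weighting each method by its own pass rate mimics adaptive selection.
    adaptive = expected_candidates_per_accepted(rates, rates)
    print(f"{name}: uniform={uniform:.2f}, adaptive={adaptive:.2f}")
```

Under these assumed rates, reweighting cuts the wasted candidates sharply in the TraceLog-like case and changes nothing in the FlowGraph-like case, which is the qualitative pattern described above.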

Summary

The results show that our method outperforms other variants in terms of AUC, AP, F1-score, and recall, especially on the TraceLog dataset, where the performance improvement is most significant. The strategy of dynamically adjusting the success rate of the augmentation method enables the model to better adapt to the dataset, improves training effectiveness, and enhances generalization on asymmetric and unseen data. As shown in Table 3, the comparison between our method and the Specific method further demonstrates the superiority of our approach. Moreover, Table 4 validates the consistent improvements of our method over the base model across AUC, AP, F1, and Recall. In addition, Table 5 confirms the effectiveness of the Bias-Aware strategy in selecting appropriate augmentation methods. Furthermore, our method keeps time cost in balance with the limited resources of edge systems.

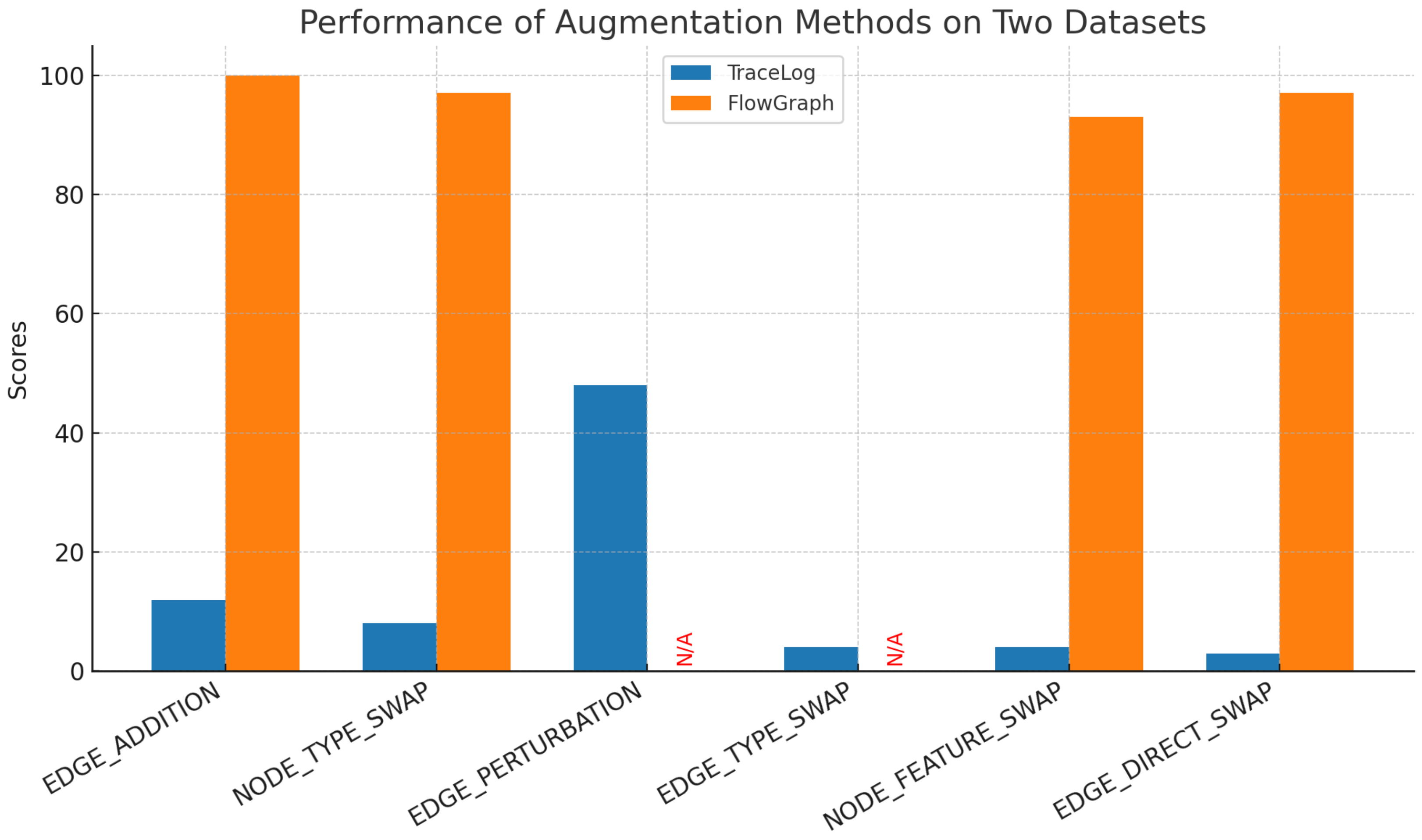

4.2.3. Performance Discrepancies of Augmentation Methods Across Datasets

The divergent effectiveness of augmentation methods between TraceLog and FlowGraph arises from their structural characteristics and method compatibility:

Edge Type Swap

This method is unsupported on FlowGraph due to its fixed edge type constraints: FlowGraph’s edges follow rigid service–data interaction rules, and swapping edge types would violate inherent semantic logic, resulting in invalid samples. On TraceLog, though technically supported, it performs poorly. TraceLog’s edges represent sequential API call relationships with weak type differentiation, so swapping edge types generates only meaningless perturbations that fail to form recognizable anomaly patterns.

Heterogeneous Edge Perturbation

This method is unsupported on FlowGraph. FlowGraph’s topology relies on fixed service–data dependencies, and any edge perturbation (addition/removal) would break its inherent structural integrity, producing samples that do not reflect real-world operations. On TraceLog, it is the only method with partial success. TraceLog’s sequential API call structure allows limited edge perturbations: about half of such operations retain temporal logic and simulate plausible anomalies such as incomplete call chains, which enables partial detection effectiveness.

Edge Addition, Node Type Swap, Node Feature Swap, Edge Direction Swap

These four methods are all supported on FlowGraph and perform well. FlowGraph’s clear node/edge semantics and fixed topology allow these methods to generate meaningful perturbations that simulate real anomalies (e.g., adding necessary service links, swapping node types to mimic misconfigurations). On TraceLog, while technically supported, all four methods perform poorly. TraceLog’s sequential log structure and weak semantic boundaries mean these perturbations disrupt temporal logic or create meaningless noise, failing to provide effective training signals for anomaly detection (Table 6).
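The compatibility described in this subsection can be summarized as a small lookup table. The sketch below encodes only what the text states; the identifier names (`AUGMENTATION_SUPPORT`, `supported_methods`) and the snake_case method keys are illustrative choices, not names from the HGAA code.

```python
# Support flags and qualitative outcomes as described in the text above.
AUGMENTATION_SUPPORT = {
    "edge_type_swap": {
        "FlowGraph": (False, "unsupported: fixed edge type constraints"),
        "TraceLog": (True, "performs poorly"),
    },
    "heterogeneous_edge_perturbation": {
        "FlowGraph": (False, "unsupported: fixed service-data dependencies"),
        "TraceLog": (True, "partial success"),
    },
    "edge_addition": {
        "FlowGraph": (True, "performs well"),
        "TraceLog": (True, "performs poorly"),
    },
    "node_type_swap": {
        "FlowGraph": (True, "performs well"),
        "TraceLog": (True, "performs poorly"),
    },
    "node_feature_swap": {
        "FlowGraph": (True, "performs well"),
        "TraceLog": (True, "performs poorly"),
    },
    "edge_direction_swap": {
        "FlowGraph": (True, "performs well"),
        "TraceLog": (True, "performs poorly"),
    },
}


def supported_methods(dataset: str) -> list[str]:
    """Return the augmentation methods that can be applied to `dataset`."""
    return [method for method, per_dataset in AUGMENTATION_SUPPORT.items()
            if per_dataset[dataset][0]]


print(supported_methods("FlowGraph"))
print(supported_methods("TraceLog"))
```

A table like this only captures whether a method can be applied; how often it yields useful samples on a given dataset is what the adaptive mechanism is meant to learn.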

5. Conclusions

Anomaly detection in microservice-based systems under edge intelligence remains a formidable challenge due to the joint constraints of asymmetric anomaly types and limited computational resources. This work proposed Heterogeneous Graph Adaptive Augmentation (HGAA), which integrates heterogeneous GNNs, knowledge distillation, and bias-aware augmentation to dynamically tailor graph transformations according to dataset-specific anomaly distributions.

The framework demonstrated consistent advantages across two real-world datasets. In particular, HGAA achieved on average 4.5% higher AUC and 4.6% higher AP than the strongest baselines, with even larger improvements in challenging scenarios (e.g., AUC gain of 14.6% on TraceLog under HGT). Beyond quantitative performance, HGAA proved more robust to anomaly heterogeneity and better adapted to dataset-specific structural characteristics, validating its ability to alleviate anomaly type asymmetry while remaining efficient in resource-constrained environments.

Despite these encouraging results, several limitations remain. First, HGAA assumes the availability of a modest number of labeled anomalies to guide bias-aware augmentation, which may not always hold in extremely sparse or fully unsupervised settings. Second, although our efficiency analysis shows reduced overhead compared to filter-based methods (e.g., 71% faster on TraceLog), adaptive augmentation can still incur costs when scaling to ultra-large graphs or real-time applications. Finally, the empirical evaluation is limited to TraceLog and FlowGraph due to the scarcity of heterogeneous microservice anomaly benchmarks, which constrains the generality of conclusions.

Future research will address these limitations by exploring semi-supervised and unsupervised bias-aware augmentation, optimizing adaptive mechanisms for deployment on highly resource-constrained devices, and extending evaluations to larger, more diverse datasets across domains such as IoT security, financial fraud detection, and industrial monitoring. Furthermore, integrating HGAA with automated augmentation policy search and meta-learning will be investigated to further enhance scalability, adaptability, and trustworthiness in edge intelligence ecosystems.

Author Contributions

Conceptualization: H.Z.; Methodology: H.Z., W.L., Z.Z. and C.G.; Validation: H.Z. and W.L.; Formal analysis: W.S.; Investigation: H.Z., W.L. and Z.Z.; Resources: J.C.; Writing—original draft: H.Z.; Writing—review and editing: W.L., C.G., W.S., Z.Z. and J.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the Science and Technology Project of State Grid Corporation of China (No. 5700-202440239A-1-1-ZN).

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

Author Jianfei Chen was employed by the company State Grid Shandong Electric Power Company and author Wei Liu was employed by the company NARI Group Corporation. The authors declare that this study received funding from State Grid Corporation of China. The funder was not involved in the study design, collection, analysis, interpretation of data, the writing of this article or the decision to submit it for publication. The remaining authors declare that the research was conducted without any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Zhang, C.; Peng, X.; Sha, C.; Zhang, K.; Fu, Z.; Wu, X.; Lin, Q.; Zhang, D. Deeptralog: Trace-log combined microservice anomaly detection through graph-based deep learning. In Proceedings of the 44th International Conference on Software Engineering, Pittsburgh, PA, USA, 21–29 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 623–634.

- Li, Y.; Tarlow, D.; Brockschmidt, M.; Zemel, R. Gated graph sequence neural networks. arXiv 2015, arXiv:1511.05493.

- Xie, Y.; Yu, B.; Lv, S.; Zhang, C.; Wang, G.; Gong, M. A survey on heterogeneous network representation learning. Pattern Recognit. 2021, 116, 107936.

- Liu, Z.; Li, Y.; Chen, N.; Wang, Q.; Hooi, B.; He, B. A survey of imbalanced learning on graphs: Problems, techniques, and future directions. arXiv 2023, arXiv:2308.13821.

- Li, J.; Pang, G.; Chen, L.; Namazi-Rad, M.R. HRGCN: Heterogeneous Graph-level Anomaly Detection with Hierarchical Relation-augmented Graph Neural Networks. In Proceedings of the 2023 IEEE 10th International Conference on Data Science and Advanced Analytics (DSAA), Thessaloniki, Greece, 9–13 October 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–10.

- Scarselli, F.; Gori, M.; Tsoi, A.C.; Hagenbuchner, M.; Monfardini, G. The graph neural network model. IEEE Trans. Neural Netw. 2008, 20, 61–80.

- Bruna, J.; Zaremba, W.; Szlam, A.; LeCun, Y. Spectral networks and locally connected networks on graphs. arXiv 2013, arXiv:1312.6203.

- Hamilton, W.; Ying, Z.; Leskovec, J. Inductive representation learning on large graphs. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Volume 30.

- Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; Bengio, Y. Graph attention networks. arXiv 2017, arXiv:1710.10903.

- Zhang, C.; Song, D.; Huang, C.; Swami, A.; Chawla, N.V. Heterogeneous graph neural network. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 793–803.

- Wang, X.; Ji, H.; Shi, C.; Wang, B.; Ye, Y.; Cui, P.; Yu, P.S. Heterogeneous graph attention network. In Proceedings of the World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019; pp. 2022–2032.

- Hu, Z.; Dong, Y.; Wang, K.; Sun, Y. Heterogeneous graph transformer. In Proceedings of the Web Conference 2020, Taipei, Taiwan, 20–24 April 2020; pp. 2704–2710.

- Ma, X.; Wu, J.; Xue, S.; Yang, J.; Zhou, C.; Sheng, Q.Z.; Xiong, H.; Akoglu, L. A comprehensive survey on graph anomaly detection with deep learning. IEEE Trans. Knowl. Data Eng. 2021, 35, 12012–12038.

- Ma, R.; Pang, G.; Chen, L.; van den Hengel, A. Deep graph-level anomaly detection by glocal knowledge distillation. In Proceedings of the 15th ACM International Conference on Web Search and Data Mining, Virtual Event, 21–25 February 2022; pp. 704–714.

- Du, M.; Li, F.; Zheng, G.; Srikumar, V. Deeplog: Anomaly detection and diagnosis from system logs through deep learning. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, Dallas, TX, USA, 30 October–3 November 2017; pp. 1285–1298.

- Qiao, H.; Tong, H.; An, B.; King, I.; Aggarwal, C.; Pang, G. Deep Graph Anomaly Detection: A Survey and New Perspectives. IEEE Trans. Knowl. Data Eng. 2025, 37, 5106–5126.

- Liu, Z.; Qiu, R.; Zeng, Z.; Yoo, H.; Zhou, D.; Xu, Z.; Zhu, Y.; Weldemariam, K.; He, J.; Tong, H. Class-Imbalanced Graph Learning without Class Rebalancing. arXiv 2023, arXiv:2308.14181.

- Xu, L.; Zhu, H.; Chen, J. Imbalanced graph learning via mixed entropy minimization. Sci. Rep. 2024, 14, 16724.

- Ding, K.; Xu, Z.; Tong, H.; Liu, H. Data augmentation for deep graph learning: A survey. ACM SIGKDD Explor. Newsl. 2022, 24, 61–77.

- Verma, V.; Qu, M.; Kawaguchi, K.; Lamb, A.; Bengio, Y.; Kannala, J.; Tang, J. Graphmix: Improved training of gnns for semi-supervised learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual Event, 2–9 February 2021; Volume 35, pp. 10024–10032.

- Zeng, H.; Zhou, H.; Srivastava, A.; Kannan, R.; Prasanna, V. Graphsaint: Graph sampling based inductive learning method. arXiv 2019, arXiv:1907.04931.

- Rong, Y.; Huang, W.; Xu, T.; Huang, J. Dropedge: Towards deep graph convolutional networks on node classification. arXiv 2019, arXiv:1907.10903.

- Kong, K.; Li, G.; Ding, M.; Wu, Z.; Zhu, C.; Ghanem, B.; Taylor, G.; Goldstein, T. Flag: Adversarial data augmentation for graph neural networks. arXiv 2020, arXiv:2010.09891.

- You, Y.; Chen, T.; Sui, Y.; Chen, T.; Wang, Z.; Shen, Y. Graph Contrastive Learning with Augmentations. In Proceedings of the Advances in Neural Information Processing Systems, Virtual Event, 6–12 December 2020; Volume 33, pp. 5812–5823.

- Wang, Y.; Wang, W.; Liang, Y.; Cai, Y.; Hooi, B. Graphcrop: Subgraph cropping for graph classification. arXiv 2020, arXiv:2009.10564.

- Park, J.; Shim, H.; Yang, E. Graph transplant: Node saliency-guided graph mixup with local structure preservation. In Proceedings of the 36th AAAI Conference on Artificial Intelligence, Virtual Event, 22 February–1 March 2022; Volume 36, pp. 7966–7974.

- Feng, W.; Zhang, J.; Dong, Y.; Han, Y.; Luan, H.; Xu, Q.; Yang, Q.; Kharlamov, E.; Tang, J. Graph random neural networks for semi-supervised learning on graphs. Adv. Neural Inf. Process. Syst. 2020, 33, 22092–22103.

- Zhu, Y.; Xu, Y.; Wang, F.; Wang, X.; He, X. Graph Contrastive Learning with Adaptive Augmentation. In Proceedings of the Web Conference 2021, Ljubljana, Slovenia, 19–23 April 2021; pp. 2069–2080.

- Zhao, T.; Akoglu, L.; Ahn, Y.Y. Data Augmentation for Graph Classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual Event, 2–9 February 2021; Volume 35, pp. 4019–4027.

- Jin, W.; Ma, Y.; Liu, X.; Tang, X.; Gao, J.; Tang, J. Graph Structure Learning for Robust Graph Neural Networks. In Proceedings of the International Conference on Machine Learning (ICML), Virtual Event, 13–18 July 2020; pp. 490–500.

- Lou, X.; Liu, G.; Li, J. Heterogeneous Graph Neural Network with Graph-data Augmentation and Adaptive Denoising. Appl. Intell. 2024, 54, 4411–4424.

- Zhou, X.; Peng, X.; Xie, T.; Sun, J.; Xu, C.; Ji, C.; Zhao, W. Benchmarking microservice systems for software engineering research. In Proceedings of the 40th International Conference on Software Engineering: Companion Proceedings, Gothenburg, Sweden, 27 May–3 June 2018; pp. 323–324.

- Manzoor, E.; Milajerdi, S.M.; Akoglu, L. Fast memory-efficient anomaly detection in streaming heterogeneous graphs. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 1035–1044.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).