Abstract

In this paper, we consider the symmetric matrix optimization problem arising in the process of unsupervised feature selection. By relaxing the orthogonal constraint, this problem is transformed into a constrained symmetric nonnegative matrix optimization problem, and an efficient algorithm is designed to solve it. The convergence theorem of the new algorithm is derived. Finally, some numerical examples show that the new method is feasible. Notably, some simulation experiments in unsupervised feature selection illustrate that our algorithm is more effective than the existing algorithms.

Keywords:

symmetric matrix optimization problem; numerical method; convergence analysis; unsupervised feature selection

MSC:

15A23; 65F30

1. Introduction

Throughout this paper, we use to denote the set of real matrices. We write if the matrix B is nonnegative. The symbols stand for the trace and transpose of the matrix B, respectively. The symbol stands for the -norm of the vector , i.e., . The symbol stands for the Frobenius norm of the matrix B. The symbol stands for the identity matrix. For the matrices A and B, denotes the Hadamard product of A and B. The symbol represents the greater of x and y.

In this paper, we consider the following symmetric matrix optimization problem in unsupervised feature selection.

Problem 1.

Given a matrix , consider the symmetric matrix optimization problem

Here is the data matrix, is the indicator matrix (feature weight matrix) and is the coefficient matrix.

Problem 1 arises in unsupervised feature selection, which is an important part of machine learning. Data from image processing, pattern recognition and machine learning are usually high-dimensional. Processing such data directly increases the computational complexity and memory requirements of an algorithm and, in particular, may cause a machine learning model to overfit. Feature selection is a common dimension reduction method whose goal is to find the most representative subset of the original features. That is, for a given high-dimensional data matrix A, we must determine the relationship between the original feature space and the subspace generated by the selected features. Feature selection can be formalized as follows.

where I denotes the index set of the selected features and Y is the coefficient matrix of the initial feature space in the selected features. From the viewpoint of matrix factorization, feature selection is expressed as follows

Considering that the data in practical problems are often nonnegative, we add a constraint to guarantee that any feature is described as the positive linear combination of the selected features, so the problem in (3) can be rewritten as in (1).
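To make the matrix-factorization view concrete, the following minimal sketch assumes an MFFS-style reconstruction error, the squared Frobenius norm of A − AXY, with A of size n × m, X of size m × p and Y of size p × m. This form and the helper names `selection_matrix` and `reconstruction_error` are assumptions for illustration, since the displayed equations are not reproduced in this text. When X is a 0/1 column-selection matrix, AX is exactly the sub-matrix of selected features and X has orthonormal columns.

```python
import numpy as np

def selection_matrix(m, selected):
    """Build an m x p 0/1 matrix whose j-th column picks feature selected[j]."""
    X = np.zeros((m, len(selected)))
    for j, idx in enumerate(selected):
        X[idx, j] = 1.0
    return X

def reconstruction_error(A, X, Y):
    """Assumed objective: squared Frobenius norm of A - A X Y."""
    return np.linalg.norm(A - A @ X @ Y, "fro") ** 2

rng = np.random.default_rng(0)
A = rng.random((6, 4))            # 6 samples, 4 features (hypothetical data)
selected = [0, 2]                 # hypothetical index set I
X = selection_matrix(4, selected)

# Coefficients for the fixed selection via unconstrained least squares;
# unlike in Problem 1, Y is not forced to be nonnegative here.
Y, *_ = np.linalg.lstsq(A @ X, A, rcond=None)
err = reconstruction_error(A, X, Y)
```

In this sketch the selected columns are reconstructed exactly, and `err` measures how well the remaining features are expressed through them.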

In the last few years, many numerical methods have been proposed for solving optimization problems with nonnegative constraints. These methods can be broadly classified into two categories: alternating gradient descent methods and alternating nonnegative least squares methods. The most commonly used alternating gradient descent method is the multiplicative update algorithm [1,2]. Although the multiplicative update algorithm is simple to implement, it lacks a convergence guarantee. The alternating nonnegative least squares method solves a sequence of nonnegative subproblems. Many numerical methods, such as the active set method [3], the projected gradient method [4,5], the projected Barzilai–Borwein method [6,7], the projected Newton method [8] and the projected quasi-Newton method [9,10], have been designed to solve these subproblems.

For optimization problems with orthogonal constraints, which are also known as optimization problems on manifolds, many algorithms are available. In general, they can be divided into two categories: feasible methods and infeasible methods. A feasible method requires that the iterate obtained after each iteration satisfy the orthogonal constraint. Many traditional optimization algorithms, such as the gradient method [11], the conjugate gradient method [12], the trust-region method [13], the Newton method [8] and the quasi-Newton method [14], can be used to deal with optimization problems on manifolds. Wen and Yin [15] proposed the CMBSS algorithm, which combines the Cayley transform and a curvilinear search approach with Barzilai–Borwein (BB) steps. However, the computational cost grows as the number of variables or the amount of data increases, which makes this algorithm inefficient. Infeasible methods can overcome this disadvantage when facing high-dimensional data. In 2013, Lai and Osher [16] proposed a splitting method for orthogonality constraint problems (SOC), based on Bregman iteration and the ADMM method. The SOC method is efficient for solving convex optimization problems, but its convergence has not yet been established. Thus, Chen et al. [17] put forward a proximal alternating augmented Lagrangian method to solve such optimization problems with a non-smooth objective function and non-convex constraint.

Some unsupervised feature selection algorithms based on matrix decomposition have been proposed and have achieved good performance, such as SOCFS, MFFS, RUFSM and OPMF. Based on the orthogonal basis clustering algorithm, SOCFS [18] does not explicitly use pre-computed local structure information of the data points as additional terms of its objective function; instead, it directly computes latent cluster information by means of the target matrix, conducting orthogonal basis clustering in a single unified term of the objective function. In 2017, Du et al. [19] proposed RUFSM, in which robust discriminative feature selection and robust clustering are performed simultaneously under the ℓ2,1-norm, while the local manifold structures of the data are preserved. MFFS [24] was developed from the viewpoint of subspace learning: it treats feature selection as a matrix factorization problem and introduces an orthogonal constraint into its objective function to select the most informative features from high-dimensional data.

OPMF [20] incorporates matrix factorization, ordinal locality structure preservation and inner-product regularization into a unified framework, which can not only preserve the ordinal locality structure of the original data, but also achieve sparsity and low redundancy among features.

However, research into Problem 1 is very scarce as far as we know. The greatest difficulty is how to deal with the nonnegative and orthogonal constraints, because the problem has highly structured constraints. In this paper, we first use the penalty technique to deal with the orthogonal constraint and reformulate Problem 1 as a minimization problem with nonnegative constraints. Then, we design a new method for solving this problem. Based on the auxiliary function, the convergence theorem of the new method is derived. Finally, some numerical examples show that the new method is feasible. In particular, some simulation experiments in unsupervised feature selection illustrate that our algorithm is more efficient than the existing algorithms.

The rest of this paper is organized as follows. A new algorithm is proposed to solve Problem 1 in Section 2 and the convergence analysis is given in Section 3. In Section 4, some numerical examples are reported. Numerical tests on the proposed algorithm applied to unsupervised feature selection are also reported in that section.

2. A New Algorithm for Solving Problem 1

In this section we first design a new algorithm for solving Problem 1; then, we present the properties of this algorithm.

Problem 1 is difficult to solve due to the orthogonal constraint . Fortunately, this difficulty can be overcome by adding a penalty term for the constraint. Therefore, Problem 1 can be transformed into the following form

where is a penalty coefficient. This extra term is used to penalize the divergence between and . The parameter is chosen by users to make a trade-off between making small, while ensuring that is not excessively large.
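The role of the penalty coefficient can be illustrated with a small sketch. It assumes a penalized objective of the form 0.5·‖A − AXY‖_F² + (ρ/4)·‖XᵀX − I‖_F²; the constants 0.5 and 1/4 and the name `penalized_objective` are assumptions, because the displayed form of (4) is not reproduced in this text. The penalty vanishes when X has orthonormal columns and grows with ρ otherwise.

```python
import numpy as np

def penalized_objective(A, X, Y, rho):
    """Assumed form: 0.5*||A - AXY||_F^2 + (rho/4)*||X^T X - I||_F^2."""
    p = X.shape[1]
    fit = 0.5 * np.linalg.norm(A - A @ X @ Y, "fro") ** 2
    penalty = 0.25 * rho * np.linalg.norm(X.T @ X - np.eye(p), "fro") ** 2
    return fit + penalty

rng = np.random.default_rng(1)
A = rng.random((5, 4))
Y = rng.random((3, 4))
X_orth = np.eye(4)[:, :3]      # orthonormal columns: the penalty term is zero
X_rand = rng.random((4, 3))    # generic nonnegative matrix: the penalty is active

same = np.isclose(penalized_objective(A, X_orth, Y, 0.0),
                  penalized_objective(A, X_orth, Y, 100.0))
grows = (penalized_objective(A, X_rand, Y, 10.0)
         > penalized_objective(A, X_rand, Y, 1.0))
```

A larger ρ pushes the iterates toward orthogonality at the expense of the reconstruction term, which is the trade-off described above.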

Let the Lagrange function of (4) be

where and are the Lagrangian multipliers of X and Y. It is straightforward to obtain its gradient functions as follows

Setting the partial derivatives of X and Y to zero, we obtain

which implies that

Noting that is the stationary point of (4), if it satisfies the KKT conditions

which implies

or

According to (6) and (7) we can obtain the following iterations

which are equivalent to the following update formulations

However, the iterative formulae (10) and (11) have two drawbacks, as follows.

- (1)

- (2)

In order to overcome these difficulties, we designed the following iterative methods

where

Here, is a small positive number which can guarantee the nonnegativity of every element of X and Y. So we can establish a new algorithm for solving Problem 1 as follows (Algorithm 1).

Algorithm 1: This algorithm attempts to solve Problem 1.

Input: Data matrix , the number of selected features p, parameters , and .

Output: An index set of selected features and

1. Initialize matrix and .
2. Set .
3. Repeat
4. Fix Y and update X by (2.9);
5. Fix X and update Y by (2.10);
6. Until convergence condition has been satisfied, otherwise set and turn to step 3.
7. End for
8. Compute and sort them in a descending order to choose the top p features.
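The alternating structure of Algorithm 1 can be sketched as follows. This is not the paper's exact update rule: the sketch substitutes Lee–Seung-style multiplicative updates derived from an assumed penalized objective 0.5·‖A − AXY‖_F² + (ρ/4)·‖XᵀX − I‖_F², floors every entry at a small `eps`, and scores features by the row norms of X. The objective form, the `eps` floor, the scoring rule and all function and parameter names are assumptions for illustration.

```python
import numpy as np

def objective(A, X, Y, rho):
    """Assumed penalized objective (constants are illustrative)."""
    p = X.shape[1]
    return (0.5 * np.linalg.norm(A - A @ X @ Y, "fro") ** 2
            + 0.25 * rho * np.linalg.norm(X.T @ X - np.eye(p), "fro") ** 2)

def select_features(A, p, rho=10.0, eps=1e-10, max_iter=500, tol=1e-8, seed=0):
    """Alternating multiplicative updates; a sketch, not the paper's rules."""
    rng = np.random.default_rng(seed)
    m = A.shape[1]
    X = rng.random((m, p))            # step 1: nonnegative initial matrices
    Y = rng.random((p, m))
    G = A.T @ A                       # Gram matrix, nonnegative when A >= 0
    history = [objective(A, X, Y, rho)]
    for _ in range(max_iter):
        # Fix Y, update X: ratio of the negative to the positive gradient part
        num_x = G @ Y.T + rho * X
        den_x = G @ X @ (Y @ Y.T) + rho * X @ (X.T @ X)
        X = np.maximum(X * num_x / (den_x + eps), eps)
        # Fix X, update Y
        num_y = X.T @ G
        den_y = X.T @ G @ X @ Y
        Y = np.maximum(Y * num_y / (den_y + eps), eps)
        history.append(objective(A, X, Y, rho))
        # Stop when the relative change of the objective is negligible
        if abs(history[-2] - history[-1]) <= tol * max(1.0, history[-2]):
            break
    scores = np.linalg.norm(X, axis=1)        # one score per original feature
    selected = np.argsort(-scores)[:p]        # top-p features, descending
    return selected, X, Y, history

rng = np.random.default_rng(42)
A = rng.random((20, 8))                       # hypothetical nonnegative data
selected, X, Y, history = select_features(A, p=3)
```

Because every factor in the updates is nonnegative when A is nonnegative, the iterates stay nonnegative, which mirrors the property the text attributes to the sequences generated by Algorithm 1.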

The sequences and generated by Algorithm 1 have the following property.

Theorem 1.

If and , then for arbitrary , we have and . If and , then for arbitrary , we have and .

Proof.

It is obvious that the conclusion is true when . Now we will consider the case .

Case I.

From it follows that and

Hence, if then and if then .

Case II.

From , it follows that and

Noting that and , we can easily conclude that if then and if then .

Case III.

From it follows that and

Thus, if then and if then .

Case IV.

From , it follows that and

Based on the fact that and , we can conclude that if then and if then . □

3. Convergence Analysis

In this section, we will give the convergence theorem for Algorithm 1. For the objective function

of Problem (4), we first prove that

where and are the k-th iteration of Algorithm 1, then obtain the limit point as the stationary point of Problem (4). In order to develop this section, we need a lemma.

Lemma 1

([21]). If there exists a function of satisfying

then is non-increasing under the update rule

Here is called an auxiliary function of if it satisfies (16).
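For reference, the standard formulation of this lemma in Lee and Seung [21] can be written as below. The symbols $F$, $G$, $h$ and $h^{(k)}$ follow that reference and are assumptions here, since the displayed formulas are not reproduced in this text.

```latex
\begin{align*}
  & G(h, h') \ge F(h), \qquad G(h, h) = F(h), \\
  & h^{(k+1)} = \arg\min_{h} \, G\bigl(h, h^{(k)}\bigr), \\
  & F\bigl(h^{(k+1)}\bigr) \le G\bigl(h^{(k+1)}, h^{(k)}\bigr)
      \le G\bigl(h^{(k)}, h^{(k)}\bigr) = F\bigl(h^{(k)}\bigr).
\end{align*}
```

The first inequality in the last line uses the majorization property, the second uses the fact that $h^{(k+1)}$ minimizes $G(\cdot, h^{(k)})$, and the final equality uses tightness at $h = h^{(k)}$.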

Theorem 2.

Fixing X, the objective function is non-increasing, that is

Proof.

Set and , then

Noting that

and when X is fixed we can ignore the constant term , then

If we need to prove , we must prove that

is a nonincreasing function. Noting that is a quadratic function and its second-order Taylor approximation at is as follows

where

and

Now we will construct a function

where is a diagonal matrix with

or

We begin to prove that is an auxiliary function of . It is obvious that is satisfied; now we prove that the inequality holds. Noting that

In fact, we can prove that the matrix is a positive semi-definite matrix.

Case I.

When or but , we have . For any nonzero vector , we have

The last inequality holds because X is nonnegative and because the entries of the data matrix A, which generally carry practical meaning, are usually nonnegative; therefore, the matrix is also a nonnegative matrix. Thus we obtain

Case II.

When and , we have , and the same technique can be used to verify that the matrix is positive semi-definite. According to Lemma 1, is a non-increasing function, so is non-increasing when X is fixed. Therefore, we can obtain

This completes the proof. □

Similarly, we can use the same method to verify that when Y is fixed, the function is also a non-increasing function. Thus, we have

Consequently, by (20) and (21), we obtain

Theorem 3.

The sequence generated by Algorithm 1 converges to the stationary point of Problem 1.

Proof.

Since is a decreasing sequence and it is bounded with the lower bound zero and the upper bound , and combining Theorem 1, there exist nonnegative matrices such that

Because of the continuity and monotonicity of the function F, we can obtain

Now we will prove the point is the stationary point of Problem 1, that is, we will prove that satisfies the KKT conditions (5). We first prove

and

Based on the definition of in (14)

so the sequence may have two convergent points or . We set

Furthermore, according to (25), we have

When , we have ; thereby it immediately implies which is consistent with (24). Now we begin to prove (25). If the result is not true, there exists such that

When k is large enough, we have and

Therefore

which is a contradiction of (26); hence, (25) holds. (24) and (25) imply that satisfies the KKT conditions (5). In a similar way, we can prove that satisfies the KKT conditions (5). Hence, is the stationary point of Problem 1. □

4. Numerical Experiments

In this section, we first present a simple example to illustrate that Algorithm 1 is feasible to solve Problem 1, and we apply Algorithm 1 to unsupervised feature selection. We also compare our algorithm with the MaxVar Algorithm [22], the UDFS Algorithm [23] and the MFFS Algorithm [24]. All experiments were performed in MATLAB R2014a on a PC with an Intel Core i5 processor at 2.50 GHz with a precision of . Set the gradient value

By the KKT conditions (5), if then is the stationary point of Problem 1. We therefore stop Algorithm 1 when either or the iteration step k reaches the upper limit of 500.

4.1. A Simple Example

Example 1.

Considering Problem 1 with n = 5, m = 4, p = 3 and

Set the initial matrices

We use Algorithm 1 to solve this problem. After 99 iterations, we get the solution of Problem 1 as follows

and

This example shows that Algorithm 1 is feasible to solve Problem 1.

4.2. Application to Unsupervised Feature Selection and Comparison with Existing Algorithms

4.2.1. Dataset

In the next stage of our study, we used standard databases to test the performance of our proposed algorithm. We first describe the four datasets used; sample images are shown in Figure 1, and the characteristics of the datasets are summarized in Table 1.

Figure 1.

Some images from different databases. (a) COIL20, (b) PIE, (c) ORL, (d) Yale.

Table 1.

Database Description.

1. COIL20 (http://www.cad.zju.edu.cn/home/dengcai/Data/MLData.html (accessed on 1 December 2021)): This dataset contains 20 objects. The images of each object were taken 5 degrees apart as the object was rotated on a turntable, and for each object there are 72 images. The size of each image is 32 × 32 pixels, with 256 grey levels per pixel. Thus, each image is represented by a 1024-dimensional vector.

2. PIE (http://archive.ics.uci.edu/ml/datasets.php (accessed on 1 December 2021)): This is a face image dataset with 53 different people; for each subject, 22 pictures were taken under different lighting conditions with different postures and expressions.

3. ORL (http://www.cad.zju.edu.cn/home/dengcai/Data/FaceData.html (accessed on 1 December 2021)): This dataset contains ten different images of each of 40 distinct subjects. For some subjects, the images were taken at different times, varying the lighting, facial expressions (open/closed eyes, smiling/not smiling) and facial details (glasses/no glasses). All the images were taken against a dark homogeneous background with the subjects in an upright, frontal position (with tolerance for some side movement).

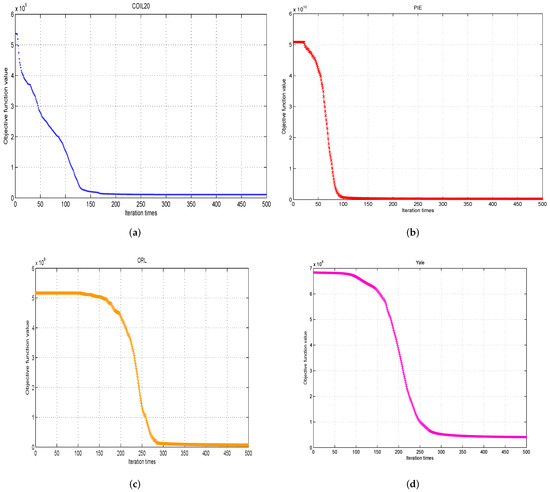

4. Yale (http://www.cad.zju.edu.cn/home/dengcai/Data/FaceData.html (accessed on 1 December 2021)): This dataset contains 165 grayscale images of 15 individuals. There are 11 images per subject, and they have different facial expressions (happy, sad, surprised, sleepy, normal and wink) or lighting conditions (center-light, left-light, right-light).

Using the above four datasets, we took the grayscale images as the data matrix A and, starting from the initial matrices X and Y, obtained a sequence of objective function values through the iterative updates of the algorithm. The resulting convergence curves for the four databases are shown in Figure 4.

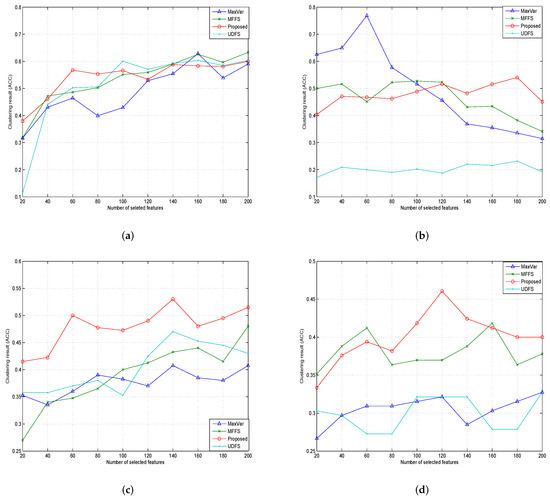

Figure 2.

Clustering results (ACC) of different feature selection algorithms. (a) COIL20, (b) PIE, (c) ORL, (d) Yale.

4.2.2. Comparison Methods

1. MaxVar [22]: selects features according to their variance. Features with a higher variance are considered more important.

2. UDFS [23]: ℓ2,1-norm regularized discriminative feature selection method. This method selects the most distinctive features through the local discrimination information of the data and the correlation of features.

3. MFFS [24]: unsupervised feature selection via matrix factorization, in which the objective function originates from the distance between two subspaces.
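As the simplest of the three baselines, the variance criterion described for MaxVar can be sketched in a few lines. The function name `maxvar_select` and the convention that rows are samples and columns are features are assumptions for illustration, not taken from [22].

```python
import numpy as np

def maxvar_select(A, p):
    """Return the indices of the p columns (features) of A with largest variance."""
    variances = A.var(axis=0)             # one variance per feature
    return np.argsort(-variances)[:p]     # descending order, top p

A = np.array([[1.0, 0.0,  5.0],
              [1.0, 1.0, -5.0],
              [1.0, 2.0,  5.0],
              [1.0, 3.0, -5.0]])
top2 = maxvar_select(A, 2)   # feature 2 (variance 25), then feature 1 (variance 1.25)
```

The constant first column has zero variance and is therefore never selected in this toy example.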

4.2.3. Parameter Settings

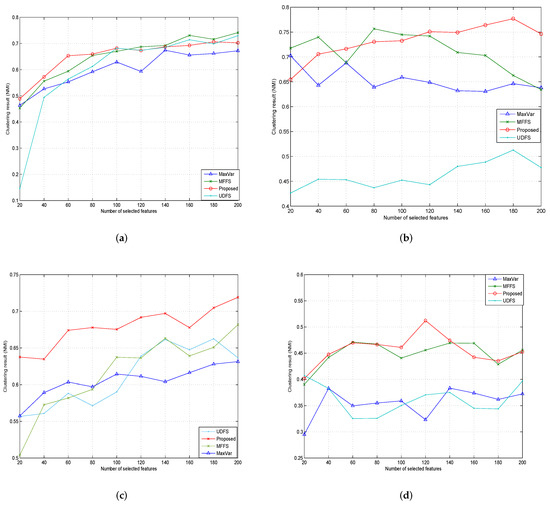

In our proposed method, the parameter was selected from the set . In the following experiments, we set the value of to be and for the COIL20, PIE, ORL and Yale databases. The numbers of selected features were taken from for all datasets. Then, we computed the average value of ACC and NMI when selecting different numbers of features. The maximum iteration number (maxiter) was set to 1000. For the sparsity parameters and in UDFS, we set . The value of parameter in MFFS was set as . We set in our proposed algorithm. The results of the comparison of MaxVar, UDFS, MFFS and our proposed algorithm are presented in Figure 2 and Figure 3. Table 2 and Table 3 give the average accuracy and normalized mutual information calculated using our proposed algorithm.

Figure 3.

Clustering results (NMI) of different feature selection algorithms. (a) COIL20, (b) PIE, (c) ORL, (d) Yale.

Figure 4.

The convergence curves of the proposed approach on four different databases. (a) , (b) , (c) , (d) .

4.2.4. Evaluation Metrics

Two metrics are used to measure the quality of the clustering obtained with the selected features: the accuracy of clustering (ACC) and the normalized mutual information (NMI). Both take values between 0 and 1, and a higher value indicates a better clustering result. Every dataset has two parts, fea and gnd. The fea data are used to perform the selection, and clustering on the selected features yields a clustering label, denoted by ; gnd is the true label, denoted by . The ACC is computed from the clustering label and the true label as follows.

where if ; if . The indicates a mapping that permutes the label of clustering result to match the true label as well as possible using the Kuhn–Munkres Algorithm [25]. For two variables P and Q, the NMI is defined in the following form:

where is the mutual information of P and Q, and and are the entropy of P and Q, respectively.
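Both metrics can be sketched in plain Python under two stated assumptions. First, the label mapping is found by brute force over permutations rather than by the Kuhn–Munkres algorithm, which gives the same optimum but is only practical for a handful of clusters. Second, the NMI is normalized by the geometric mean of the two entropies; the normalization used in the paper is not reproduced in this text, so this choice and all function names are illustrative.

```python
import math
from collections import Counter
from itertools import permutations

def clustering_accuracy(true_labels, cluster_labels):
    """ACC: best fraction of matches over all one-to-one label mappings."""
    clusters = sorted(set(cluster_labels))
    classes = sorted(set(true_labels))
    if len(classes) < len(clusters):
        # Pad so that every cluster can be mapped to some (possibly dummy) class
        classes = classes + [None] * (len(clusters) - len(classes))
    best = 0
    for perm in permutations(classes, len(clusters)):
        mapping = dict(zip(clusters, perm))
        hits = sum(mapping[c] == t for c, t in zip(cluster_labels, true_labels))
        best = max(best, hits)
    return best / len(true_labels)

def entropy(labels):
    """Shannon entropy of the empirical label distribution."""
    n = len(labels)
    return -sum((c / n) * math.log(c / n) for c in Counter(labels).values())

def mutual_information(p_labels, q_labels):
    """Mutual information of two labelings of the same samples."""
    n = len(p_labels)
    joint = Counter(zip(p_labels, q_labels))
    cp, cq = Counter(p_labels), Counter(q_labels)
    return sum((c / n) * math.log(c * n / (cp[a] * cq[b]))
               for (a, b), c in joint.items())

def nmi(p_labels, q_labels):
    """NMI with geometric-mean normalization (an assumed convention)."""
    hp, hq = entropy(p_labels), entropy(q_labels)
    if hp == 0 or hq == 0:
        return 0.0
    return mutual_information(p_labels, q_labels) / math.sqrt(hp * hq)

true = [0, 0, 1, 1, 2, 2]
pred = [1, 1, 0, 0, 2, 2]        # same partition, permuted label names
acc_perfect = clustering_accuracy(true, pred)
nmi_perfect = nmi(true, pred)
acc_partial = clustering_accuracy(true, [0, 0, 0, 1, 1, 1])
```

A clustering that reproduces the true partition up to a renaming of labels attains the maximum value of both metrics, which is why the mapping step is needed before counting matches.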

4.2.5. Experiments Results and Analysis

Figure 4 shows the objective function value against the iteration step when Algorithm 1 was run on the four datasets. We can see that the objective function value decreases as the iteration step increases and, after sufficiently many iterations, stabilizes.

In Table 2 and Table 3, we report the best clustering accuracy and the best normalized mutual information as the number of selected features varies. These tables show that Algorithm 1 outperformed the MaxVar Algorithm [22], the UDFS Algorithm [23] and the MFFS Algorithm [24] on all datasets, which shows the effectiveness and robustness of our proposed method.

Table 2.

Clustering results (average ACC) of different algorithms on different databases.

Table 3.

Clustering results (average NMI) of different algorithms on different databases.

In Figure 2 and Figure 3, we present the clustering accuracy and the normalized mutual information as the number of selected features varies. We can see that MFFS performed slightly better than Algorithm 1 on COIL20. However, on the other three datasets, Algorithm 1 performed better than the MaxVar Algorithm [22], the UDFS Algorithm [23] and the MFFS Algorithm [24], especially when the number of selected features was large.

5. Conclusions

The symmetric matrix optimization problem in the area of unsupervised feature selection is considered in this paper. By relaxing the orthogonal constraint, this problem is converted into a constrained symmetric nonnegative matrix optimization problem. An efficient algorithm was designed to solve this problem and its convergence theorem was also derived. Finally, a simple example was given to verify the feasibility of the new method. Some simulation experiments in unsupervised feature selection showed that our algorithm was more effective than the existing methods.

Author Contributions

Conceptualization, C.L.; methodology, W.W. All authors have read and agreed to the published version of the manuscript.

Funding

The work was supported by the National Natural Science Foundation of China (No. 11761024), and the Natural Science Foundation of Guangxi Province (No. 2017GXNSFBA198082).

Institutional Review Board Statement

Institutional review board approval of our school was obtained for this study.

Informed Consent Statement

Written informed consent was obtained from all the participants prior to the enrollment of this study.

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Lee, D.D.; Seung, H.S. Learning the parts of objects by non-negative matrix factorization. Nature 1999, 401, 788.

- Smaragdis, P.; Brown, J.C. Non-negative matrix factorization for polyphonic music transcription. In Proceedings of the 2003 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (IEEE Cat. No.03TH8684), New Paltz, NY, USA, 19–22 October 2003.

- Kim, H.; Park, H. Nonnegative Matrix Factorization Based on Alternating Nonnegativity Constrained Least Squares and Active Set Method. SIAM J. Matrix Anal. Appl. 2008, 30, 713–730.

- Lin, C. Projected Gradient Methods for Nonnegative Matrix Factorization. Neural Comput. 2007, 19, 2756–2779.

- Li, X.L.; Liu, H.W.; Zheng, X.Y. Non-monotone projection gradient method for non-negative matrix factorization. Comput. Optim. Appl. 2012, 51, 1163–1171.

- Han, L.X.; Neumann, M.; Prasad, A.U. Alternating projected Barzilai-Borwein methods for Nonnegative Matrix Factorization. Electron. Trans. Numer. Anal. 2010, 36, 54–82.

- Huang, Y.K.; Liu, H.W.; Zhou, S. An efficient monotone projected Barzilai-Borwein method for nonnegative matrix factorization. Appl. Math. Lett. 2015, 45, 12–17.

- Gong, P.H.; Zhang, C.S. Efficient Nonnegative Matrix Factorization via projected Newton method. Pattern Recognit. 2012, 45, 3557–3565.

- Kim, D.; Sra, S.; Dhillon, I.S. Fast Newton-type Methods for the Least Squares Nonnegative Matrix Approximation Problem. In Proceedings of the SIAM International Conference on Data Mining, Minneapolis, MN, USA, 26–28 April 2007.

- Zdunek, R.; Cichocki, A. Non-negative matrix factorization with quasi-newton optimization. In Proceedings of the International Conference on Artificial Intelligence and Soft Computing, Zakopane, Poland, 25–29 June 2006.

- Abrudan, T.E.; Eriksson, J.; Koivunen, V. Steepest descent algorithms for optimization under unitary matrix constraint. IEEE Trans. Signal Process. 2008, 56, 1134–1147.

- Abrudan, T.E.; Eriksson, J.; Koivunen, V. Conjugate gradient algorithm for optimization under unitary matrix constraint. Signal Process. 2009, 89, 1704–1714.

- Absil, P.A.; Baker, C.G.; Gallivan, K.A. Trust-Region methods on riemannian manifolds. Found. Comput. Math. 2007, 7, 303–330.

- Savas, B.; Lim, L.H. Quasi-Newton methods on grassmannians and multilinear approximations of tensors. SIAM J. Sci. Comput. 2010, 2, 3352–3393.

- Wen, Z.W.; Yin, W.T. A feasible method for optimization with orthogonality constraints. Math. Program. 2013, 142, 397–434.

- Lai, R.J.; Osher, S. A splitting method for orthogonality constrained problems. J. Sci. Comput. 2014, 58, 431–449.

- Chen, W.Q.; Ji, H.; You, Y.F. An augmented lagrangian method for l1-regularized optimization problems with orthogonality constraints. SIAM J. Sci. Comput. 2016, 38, 570–592.

- Han, D.; Kim, J. Unsupervised Simultaneous Orthogonal basis Clustering Feature Selection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–15 June 2015.

- Du, S.Q.; Ma, Y.D.; Li, S.L. Robust unsupervised feature selection via matrix factorization. Neurocomputing 2017, 241, 115–127.

- Yi, Y.G.; Zhou, W.; Liu, Q.H. Ordinal preserving matrix factorization for unsupervised feature selection. Signal Process. Image Commun. 2018, 67, 118–131.

- Lee, D.D.; Seung, H.S. Algorithms for non-negative matrix factorization. In Proceedings of the International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 3–8 December 2001.

- Lu, Y.J.; Cohen, I.; Zhou, X.S.; Tian, Q. Feature selection using principal feature analysis. In Proceedings of the 15th ACM International Conference on Multimedia, Augsburg, Germany, 24–29 September 2007.

- Yang, Y.; Shen, H.T.; Ma, Z.G. l21-norm regularized discriminative feature selection for unsupervised learning. In Proceedings of the Twenty-Second International Joint Conference on Artificial Intelligence, Barcelona, Spain, 16–22 July 2011.

- Wang, S.P.; Witold, P.; Zhu, Q.X. Subspace learning for unsupervised feature selection via matrix factorization. Pattern Recognit. 2015, 48, 10–19.

- Lovász, L.; Plummer, M.D. Matching Theory, 1st ed.; Elsevier Science Ltd.: Amsterdam, The Netherlands, 1986.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).