Abstract

Multi-Criteria Decision-Analysis (MCDA) methods are successfully applied in different fields and disciplines. However, in many studies, the problem of selecting the proper methods and parameters for the decision problems is raised. The paper undertakes an attempt to benchmark selected Multi-Criteria Decision Analysis (MCDA) methods. To achieve that, a set of feasible MCDA methods was identified. Based on reference literature guidelines, a simulation experiment was planned. The formal foundations of the authors’ approach provide a reference set of MCDA methods ( Technique for Order Preference by Similarity to Ideal Solution (TOPSIS), VlseKriterijumska Optimizacija I Kompromisno Resenje (VIKOR), Complex Proportional Assessment (COPRAS), and PROMETHEE II: Preference Ranking Organization Method for Enrichment of Evaluations) along with their similarity coefficients (Spearman correlation coefficients and WS coefficient). This allowed the generation of a set of models differentiated by the number of attributes and decision variants, as well as similarity research for the obtained rankings sets. As the authors aim to build a complex benchmarking model, additional dimensions were taken into account during the simulation experiments. The aspects of the performed analysis and benchmarking methods include various weighing methods (results obtained using entropy and standard deviation methods) and varied techniques of normalization of MCDA model input data. Comparative analyses showed the detailed influence of values of particular parameters on the final form and a similarity of the final rankings obtained by different MCDA methods.

1. Introduction

Making decisions is an integral part of human life. All such decisions are made based on the assessment of individual decision options, usually based on preferences, experience, and other data available to the decision maker. Formally, a decision can be defined as a choice made based on the available information, or a method of action aimed at solving a specific decision problem [1]. Taking into account the systematics of the decision problem itself and the classical paradigm of single criterion optimization, it should be noted that it is now widely accepted to extend the process of decision support beyond the classical model of single goal optimization described on the set of acceptable solutions [2]. This extension allows one to tackle multi-criteria problems with a focus on obtaining a solution that meets enough many, often contradictory, goals [3,4,5,6,7].

The concept of rational decisions is, at the same time, a paradigm of multi-criteria decision support and is the basis of the whole family of Multi-Criteria Decision-Analysis (MCDA) methods [8]. These methods aim to support the decision maker in the process of finding a solution that best suits their preferences. Such an approach is widely discussed in the literature. In the course of the research, whole groups of MCDA methods and even ”schools” of multi-criteria decision support have developed. There are also many different individual MCDA methods and their modifications developed so far. The common MCDA methods belonging to the American school include Technique for Order Preference by Similarity to Ideal Solution (TOPSIS) [9,10,11], VlseKriterijumska Optimizacija I Kompromisno Resenje (VIKOR) [12], Analytic Hierarchy Process (AHP) [13,14], and Complex Proportional Assessment (COPRAS) [15]. Examples of the most popular methods belonging to the European school are the ELECTRE [16,17] and Preference Ranking Organization Method for Enrichment of Evaluations (PROMETHEE) [18] method families. The third best-known group of methods are mixed approaches, based on the decision-making rules of [19,20,21]. The result of the research is also a dynamic development of new MCDA methods and extensions of existing methods [22].

Despite the large number of MCDA methods, it should be remembered that no method is perfect and cannot be considered suitable for use in every decision-making situation or for solving every decision problem [23]. Therefore, using different multi-criteria methods may lead to different decision recommendations [24]. It should be noted, however, that if different multi-criteria methods achieve contradictory results, then the correctness of the choice of each of them is questioned [25]. In such a situation, the choice of a decision support method appropriate to the given problem [22] becomes an important research issue, as only an adequately chosen method allows one to obtain a correct solution reflecting the preferences of the decision maker [2,26]. The importance of this problem is raised by Roy, who points out that in stage IV of the decision-making model defined by him, the choice of the calculation procedure should be made in particular [2]. This is also confirmed by Hanne [27], Cinelli et al. [26].

It should be noted that it is difficult to answer the question of which method is the most suitable to solve a specific type of problem [24]. This is related to the apparent universality of MCDA methods, because many methods meet the formal requirements of a given decision-making problem so that they can be selected independently of the specificity of a particular problem (e.g., the existence of a finite set of alternatives may be a determinant for the decision-maker when choosing a method) [27]. Therefore, Guitouni et al. recommend to study different methods and determine their areas of application by identifying their limitations and conditions of applicability [23]. This is very important because different methods can provide different solutions to the same problem [28]. Differences in results using different calculation procedures can be influenced by, for example, the following factors: Individual techniques use different weights of criteria in calculations, the algorithms of methods themselves differ significantly, and many algorithms try to scale the targets [24].

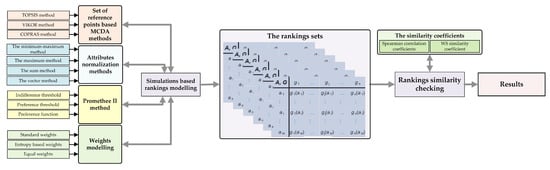

Many methodologies, frameworks, and formal approaches to identify a subset of MCDA methods suitable for the given problem have been developed in prior works [20,22]. The synthesis of available terms is presented later in the article. It should be noted that the assessment of accuracy and reliability of results obtained using various MCDA methods remains a separate research problem. The examples of work on the accuracy assessment and more broadly benchmarking of MCDA methods include Zanakis et al. [24], Chang et al. [29], Hajkowicz and Higgins [30], Żak [31], and others. Their broader discussion is also conducted later in the paper. The authors, focusing their research on selected MCDA methods, effectively use a simulation environment (e.g., Monte Carlo simulation) to generate a set of ranks. The authors most often use Spearman’s rank correlation coefficient to assess and analyze the similarity of the rankings. However, the shortcomings of the indicated approaches should be indicated. In the vast majority of the available literature, the approaches are focused on a given domain of the MCDA method (or a subset of methods) application. Thus, despite the use of a simulation environment, the studies are not comprehensive, limiting the range of obtained results. Most of the papers are focused on the assessment of only selected aspects of the accuracy of single MCDA methods or contain narrow comparative studies of selected MCDA methods. Another important challenge related to multi-criteria decision-making problems that are not included in the works mentioned above is the proper determination of weights [32]. It seems to be an investigation into how various methods to determine criteria weights (subjective weighting methods and objective weighting methods) affect final ranking [33]. In our research, we attempt a complex benchmarking for a subset of carefully selected MCDA methods. Taking care of the correctness and comprehensiveness of the conducted research and at the same time following the guidelines of the authors of publications in this area, we use a simulation environment and apply multiple model input parameters (see Figure 1). The aspects of the conducted analysis and benchmarking of methods include not only the generation of final rankings for a variable number of decision-making options, but also take into account different weighing methods (results obtained using entropy and standard deviation methods) and different techniques of normalizing input data of the MCDA model. The analysis of rank similarities obtained under different conditions and using different MCDA methods was carried out based on reference publications and using Spearman’s ranking correlation coefficient and coefficients.

Figure 1.

Methodical framework.

The study aims to analyze the similarity of rankings obtained using the selected four MCDA methods. It should be noted that in research, we analyze this problem only from the technical (algorithmic) point of view, leaving in the background the conceptual aspects of the method and the systemic assumptions of obtaining model input data. For each of these methods using a simulation environment, several rankings were calculated. The simulation itself was divided into two parts. The first part refers to a comparison of the similarity of the results separately for TOPSIS, VIKOR, COPRAS, and PROMETHEE II. In the second part, these methods were compared with each other. As illustrated in Figure 1), the calculation procedure took into account different normalization methods, weighting methods, preference thresholds, and preference functions. Thus, in the case of the TOPSIS method for each decision matrix, 12 separate rankings were created, where each of them is a different combination of a standardization method and a weighing method. Then, for the same matrix, a set of rankings using other MCDA methods (VIKOR, COPRAS, and PROMETHEE II) was created respectively. This procedure was repeated for different parameters of the decision matrix (in terms of the number of analyzed alternatives and number of criteria). In this way, the simulation provides a complex dataset in which similarities were analyzed using the Spearman and WS coefficients.

In this paper, we used several MCDA methods (TOPSIS, VIKOR, COPRAS, and PROMETHEE II) to make comparative tests. The choice of this set was dictated by the properties and popularity of these methods. These methods and their modifications found an application in many different domains such as sustainability assessment [34,35,36], logistics [37,38,39], supplier selection [7,40,41], manufacturing [42,43,44], environment management [45,46,47], waste management [48,49], energy management [50,51,52,53,54,55], chemical engineering [56,57,58], and many more [59,60]. The choice of a group of TOPSIS, VIKOR, and COPRAS methods is justified, as they form a coherent group of methods of the American MCDA school and are based on the same principles, using the concepts of the so-called reference points. At the same time, unlike other methods of the American school, they are not merely trivial (in the algorithmic sense) elaborations of the simple additional or multiplicative weighted aggregation. The choice of the PROMETHEE II method was dictated by the fact that this method belongs to the European school and that the PROMETHEE II algorithm implements the properties of other European school-based MCDA methods (outranking relations, thresholds, and different preference functions). It should be noted that for benchmarking purposes, the choice of this method has one more justification—unlike other methods of this school, the method provides a full, quantitative final ranking of decision-making options.

The rest of the article is structured as follows. Section 2.1.1 present the most important MCDA foundations. Operational point of view and preference aggregation techniques are presented in Section 2.1.2. Section 2.1.3 and Section 2.1.4 describe respectively American and European-based MCDA methods. Mixed and rule-based methods are shown in Section 2.1.5. Section 2.2 presents the MCDA method selection and benchmarking problem. Section 3.1.1 contains a description of the TOPSIS method and Section 3.1.2 describes the VIKOR method. A description of the COPRAS method can be found in Section 3.1.3 and a description of the PROMETHEE II method is in Section 3.1.4. Section 3.2 describes the normalization methods, which could be applied to data before executing any MCDA methods. Section 3.3 contains a description of weighting methods, which would be used in article. Section 3.4 describes the correlation coefficients that will be used to compare results. The research experiment and the numerical example are presented in Section 4. Section 5 provides the most relevant results and their discussion. Finally, the conclusions are formulated in Section 6.

2. Literature Review

2.1. MCDA State of the Art

In this section, we introduce the methodological assumptions of MCDA, taking into account the operational point of view and available data aggregation techniques. At the same time, we provide an outline of existing methods and decision-making schools.

2.1.1. MCDA Foundations

Almost in every case, the nature of the decision problem makes it a multi-criteria problem. This means that making a “good” decision requires considering many decision options, where each option should be considered in terms of many factors (criteria) that characterize its acceptability. The values of these factors may limit the number of variants in case their values exceed the assumed framework. They can also serve for grading the admissibility of variants in the situation when each of them is admissible, and the multi-criteria problem consists in choosing subjectively the best of them. For different decision makers, different criteria may have a different relevance, so in no case can a multi-criteria decision be considered as completely objective. Only the ranking of individual variants with given weights of individual criteria is objective here, as this ranking is usually generated using a specific multi-criteria method. Therefore, concerning recommended multi-criteria decisions, the term “optimal” is not used but ”most satisfactory decision-maker” [23] which means optimum in the sense of Pareto [2]. In conclusion, it can be concluded that multi-criteria models should take into account elements that can be described as a multi-criteria paradigm: The existence of multiple criteria, the existence of conflicts between criteria, and the complex, subjective, and poorly structured nature of the decision-making problem [61].

The MCDA methods are used to solve decision problems where there are many criteria. The literature provides various models of the decision-making process, e.g., Roy, Guitouni, and Keeney. For example, Guitouni’s model distinguishes five stages: Decision problem structuring, articulating and modeling preferences, preference aggregation, exploitation of aggregation, and obtaining a solution recommendation [62]. The essential elements of a multi-criteria decision problem are formed by a triangle (A, C, and E), where A defines a set of alternative decision options, C is a set of criteria, while E represents the criteria performance of the options [62]. Modeling of the decision maker’s preferences can be done in two ways, i.e., directly or indirectly, using the so-called disaggregation procedures [63]. The decision maker’s preferences are expressed using binary relations. When comparing decision options, two fundamental indifference relations ( I ) and strict preference ( P ) may occur. Moreover, the set of basic preferential relations may be extended by the relations of a weak preference of one of the variants to another and their incomparability (variants) [64], creating together with the basic relations the so-called outranking relation.

2.1.2. Operational Point of View and Preference Aggregation Techniques

The structured decision problem, for which the modeling of the decision maker’s preferences was carried out, is an input for the multi-criteria preference aggregation procedure (MCDA methods). This procedure should take into account the preferences of the decision-maker, modeled using the weighting of criteria, and preference thresholds. This procedure is responsible for aggregating the performance of the criteria of individual variants to obtain a global result of comparing the variants consistent with one of the multi-criteria issues. The individual aggregation procedures can be divided according to their operational approach. In the literature three main approaches exist [63]:

- The use of a single synthesized criterion: In this approach, the result of the variants’ comparisons is determined for each criterion separately. Then the results are synthesized into a global assessment. The full order of variants is obtained here [65];

- The synthesis of the criteria based on the relation of outranking: Due to the occurrence of incomparability relations, this approach allows for obtaining the partial order of variants [65];

- Aggregation based on decision rules [63]: This approach is based on the rough sets set theory [66]. It uses cases and reference ranking from which decision rules are generated [67].

The difference between the use of a single synthesized criterion and the synthesis based on the relation of exceedance is that in methods using synthesis to one criterion there is a compensation, while methods using the relation of exceedance by many researchers are considered uncompensated [68,69].

Research on multi-criteria decision support has developed two main groups of methods. These groups can be distinguished due to the operational approach used in them. These are the methods of the so-called American school of decision support and the methods of the European school [70,71]. There is also a group of so-called basic methods, most of which are similar in terms of the operational approach to the American school methods. Examples of basic methods are the lexicographic method, the ejection method for the minimum attribute value, the maximum method, or the additive weighting method. Besides, there is a group of methods combining elements of the American and European approaches, as well as methods based on the previously mentioned rule approach.

2.1.3. American School-Based MCDA Methods

The methods of the American school of decision support are based on a functional approach [63], namely, the use of value or usability. These methods usually do not take into account the uncertainty, inaccuracy, and uncertainty that can occur in data or decision-maker preferences [1]. This group of methods is strongly connected with the operational approach using a single synthesized criterion. The basic methods of the American school are MAUT, AHP, ANP, SMART, UTA, MACBETH (Measuring Attractiveness by a Categorical Based Evaluation Technique), or TOPSIS.

In the MAUT method, the most critical assumption is that the preferences of the decision-maker can be expressed using a global usability function, taking into account all the criteria taken into account. The AHP method is the best known and most commonly used functional method. This method allows one to prioritize the decision-making problem. The ANP method is a generalization of AHP. Instead of prioritizing the decision problem, it allows the building of a network model in which there may be links between criteria and variants and their feedback. In the SMART method, the criteria values of variants are converted to a common internal scale. This is done mathematically by the decision-maker, and the value function is used [72]. In the UTA method, the decision maker’s preferences are extracted from the reference set of variants [73]. The MACBETH method (Measuring Attractiveness by a Categorical Based Evaluation Technique) is based on qualitative evaluations. The individual variants are compared here in a comparison matrix in pairs. The criterion preferences of the variants are aggregated as a weighted average [74,75]. In the TOPSIS (Technique for Order Preference by Similarity to Ideal Solution) method, the decision options considered are compared for the ideal and the anti-ideal solution [76,77,78,79].

2.1.4. European School-Based MCDA Methods

The methods of the European School use a relational model. Thus, they use a synthesis of criteria based on the relation of outranking. This relation is characterized by transgression between pairs of decision options. Among the methods of the European School of Decision Support, the groups of ELECTRE and PROMETHEE methods should be mentioned above all [1].

ELECTRE I and ELECTRE Is methods are used to solve the selection problem. In the ELECTRE I method there is a true criterion (there are no indifference and preference thresholds), while in ELECTRE Is, pseudo-criteria have been introduced including thresholds. It should be noted here that indifference and preference thresholds can be expressed directly as fixed quantities for a given criterion or as functions, which would allow distinguishing the relation of weak and strong preferences. The relations of the excess occurring between the variants are presented on the graph and the best variants are those which are not exceeded by any other [80,81]. The ELECTRE II method is similar to the ELECTRE I since no indifference and preference thresholds are defined here as well, i.e., the true criteria are also present here. Furthermore, the calculation algorithm is the same almost throughout the procedure. However, the ELECTRE II method distinguishes weak and strong preference [82]. The ELECTRE III method is one of the most frequently used methods of multi-criteria decision support and it deals with the ranking problem. The ELECTRE IV method is similar to ELECTRE III in terms of using pseudo-criteria. Similarly, the final ranking of variants is also determined here. The ELECTRE IV method determines two orders (ascending and descending) from which the final ranking of variants is generated. However, the ELECTRE IV method does not define the weights of criteria, so all criteria are equal [83]. ELECTRE Tri is the last of the discussed ELECTRE family methods. It deals with the classification problem and uses pseudo-criteria. This method is very similar to ELECTRE III in procedural terms. However, in the ELECTRE Tri method, the decisional variants are compared with so-called variants’ profiles, i.e., “artificial variants” limiting particular quality classes [84].

PROMETHEE methods are used to determine a synthetic ranking of alternatives. Depending on the implementation, they operate on true or pseudo-criteria. The methods of this family combine most of the ELECTRE methods as they allow one to apply one of the six preference functions, reflecting, among others, the true criterion and pseudo-criteria. Moreover, they enrich the ELECTRE methodology at the stage of object ranking. These methods determine the input and output preference flows, based on which we can create a partial ranking in the PROMETHEE I method [85]. In contrast, in the PROMETHEE II method, the net preference flow values for individual variants are calculated based on input and output preference flows. Based on net values, a complete ranking of variants is determined [86,87].

NAIADE (Novel Approach to Imprecise Assessment and Decision Environment) methods are similar to PROMETHEE in terms of calculation because the ranking of variants is determined based on input and output preference flows [88]. However, when comparing the variants, six preferential relations defined based on trapezoidal fuzzy numbers are used (apart from indistinguishability of variants, weak, and strong preference is distinguished). The methods of this family do not define the weights of criteria [89].

Other examples of methods from the European MCDA field are ORESTE, REGIME, ARGUS, TACTIC, MELCHIOR, or PAMSSEM. The ORESTE method requires the presentation of variant evaluations and a ranking of criteria on an ordinal scale [90]. Then, using the distance function, the total order of variants is determined concerning the subsequent criteria [91]. The REGIME method is based on the analysis of variants’ compatibility. The probability of dominance for each pair of variants being compared is determined and on this basis the order of variants is determined [92]. In the ARGUS method for the representation of preferences on the order scale, qualitative measures are used [93]. The TACTIC method (Treatment of the Alternatives According To the Importance of Criteria) is based on quantitative assessments of alternatives and weights of criteria. Furthermore, it allows the use of true criteria and quasi-criteria, thus using the indistinguishability threshold as well as the veto. Similarly to ELECTRE I, TACTIC and ARGUS methods use preference aggregation based on compliance and non-compliance analysis [94]. In the MELCHIOR method pseudo-criteria are used, the calculation is similar to ELECTRE IV, while in the MELCHIOR method the order relationship between the criteria is established [93]. The PAMSSEM I and II methods are a combination of ELECTRE III, NAIADE, and Promethee and implement the computational procedure used in these methods [95,96].

2.1.5. Mixed and Rule-Based Methods

Many multi-criteria methods combine the approaches of the American and European decision support school. An example is the EVAMIX method [23], which allows taking into account both quantitative and qualitative criteria, using two separate measures of domination [97]. Another mishandled method is the QUALIFLEX method [98], which allows for the use of qualitative evaluations of variants and both quantitative and qualitative criteria weights [99]. PCCA (Pairwise Criterion Comparison Approach) methods can be treated as a separate group of multi-criteria methods. They focus on the comparison of variants concerning different pairs of criteria considered instead of single criteria. The partial results obtained in this way are then aggregated into the evaluation and final ranking [100]. The methods are based on the PCCA approach: MAPPAC, PRAGMA, PACMAN, and IDRA [101].

The last group of methods are methods based strictly on decision rules [102]. These are methods using fuzzy sets theory (COMET: Characteristic Objects Method) [22] and rough sets theory (DRSA: (Dominance-based Rough Set Approach) [103]. In the methods belonging to this group, the decision rules are initially built. Then, based on these rules, variants are compared and evaluated, and a ranking is generated.

The COMET (Characteristic Objects Method) requires giving fuzzy triangular numbers for each criterion [104], determining the degree of belonging of variants to particular linguistic values describing the criteria [105]. Then, from the values of vertices of particular fuzzy numbers, the characteristic variants are generated. These variants are compared in pairs by the decision-maker, and their model ranking is generated. These variants, together with their aggregated ranking values, create a fuzzy rule database [21]. After the decision system of the considered variants is given to the decision system, each of the considered variants activates appropriate rules and its aggregated rating is determined as the sum of the products of the degrees in which the variants activate individual rules [106,107].

The DRSA method (Dominance-based Rough Set Approach) is based on the rough set theory and requires the definition of a decision table taking into account the values of criteria and consequences of previous decisions (in other words, the table contains historical decision options together with their criteria assessments as well as their aggregated global assessments) [108]. The decision defines the relation of exceedance. The final assessment of a variant is determined as the number of variants which, based on the rules, the considered variant exceeds or is not exceeded by. Moreover, the number of variants that are exceeded or not exceeded by the considered variant is deducted from the assessment [109,110].

2.2. MCDA Methods Selection and Benchmarking Problem

The complexity of the problem of choosing the right multi-criteria method to solve a specific decision problem results in numerous works in the literature where this issue is addressed. These works can be divided according to their approach to method selection. Their authors apply approaches based on benchmarks, multi-criteria analysis, and informal and formal structuring of the problem or decision-making situation. An attempt at a short synthesis of available approaches to the selection of MCDA methods to a decision-making problem is presented below.

The selection of a method based on multi-criteria analysis requires defining criteria against which individual methods will be evaluated. This makes it necessary to determine the structure of the decision problem at least partially [111]. Moreover, it should be noted that treating the MCDA method selection based on the multi-criteria approach causes a looping of the problem, because for this selection problem, the appropriate multi-criteria method should also be chosen [112]. Nevertheless, the multi-criteria approach to MCDA method selection is used in the literature. Examples include works by Gershon [113], Al-Shemmeri et al. [111], and Celik and Deha Er [114].

The informal approach to method selection consists of selecting the method for a given decision problem based on heuristic analysis performed by the analyst/decision-maker [115]. This analysis is usually based on the author’s thoughts and unstructured description of the decision problem and the characteristics of particular methods. The methodological approach is similar to the semi-formal one, with the difference that the characteristics of individual MCDA methods are to some extent formalized here (e.g., table describing the methods). The informal approach was used in Adil et al. [115] and Bagheri Moghaddam et al. [116]. The semi-formal approach has been used in the works of Salinesi and Kornyshov [117], De Montis et al. [118], and Cinelli et al. [119].

In the formal approach to the selection of the MCDA method, the description of individual methods is fully structured (e.g., taxonomy or a table of features of individual MCDA methods) [120,121]. The decision problem and the method of selecting a single or group of MCDA methods from among those considered are formally defined (e.g., based on decision rules [122], artificial neural networks [123], or decision trees [23,124]). These are frameworks, which enable a selection of MCDA method based on the formal description of methods and decision problem. Such an approach is proposed, among others, in works: Hwang and Yoon [125], Moffett and Sarkar [124], Guitouni and Martel [23], Guitouni et al. [62], Wątróbski [120], Wątróbski and Jankowski [122], Celik and Topcu [126], Cicek et al. [127], and Ulengin et al. [123].

The benchmarking approach seems particularly important. It focuses on a comparison of the results obtained by individual methods. The main problem of applying this approach is to find a reference point against which the results of the examined multi-criteria methods would be compared. Some authors take the expert ranking as a point of reference whilst others compare the results to the performance of one selected method or examine the compliance of individual rankings obtained using particular MCDA methods. Examples of benchmark-based approach to selection/comparison of MCDA methods are works: Zanakisa et al. [24], Chang et al. [29] Hajkowicz and Higgins [30], and Żak [31].

The publication of Zanakis et al. [29] presents results of benchmarks for eight MCDA methods (Simple Additive Weighting, Multiplicative Exponential Weighting, TOPSIS, ELECTRE, and four AHP variants). In the simulation test scenario, randomly generated decision problems were assumed in which the number of variants was equal: 3, 5, 7, and 9; the number of criteria were: 5, 10, 15, and 20; and the weights of the criteria could be equal, have a uniform distribution in the range <0; 1> with a standard deviation of 1/12, or a beta U-shaped distribution in the range <0; 1> with a standard deviation of 1/24. Moreover, the assessments of alternatives were randomly generated according to a uniform distribution in the range <0; 1>. The number of repetitions was 100 for each combination of criteria, variants, and weights. Therefore, 4800 decision problems were considered in the benchmark (4 number of criteria × 4 number of variants × 3 weightings types × 100 repetitions) and 38,400 solutions were obtained in total (4800 problems × 8 MCDA methods). Within the tests, the average results of all rankings generated by each method were compared with the average results of rankings generated by the SAW method, which was the reference point. Comparisons were made using, among others, Spearman’s rank correlation coefficient for rankings.

Benchmark also examined the phenomenon of ranking reversal after introducing an additional non-dominant variant to the evaluation. The research on the problem of ranking reversal was carried out based on similar measures, with the basic ranking generated by a given MCDA method being the point of reference in this case. The authors of the study stated that the AHP method gives the rankings closest to the SAW method, while in terms of ranking reversal, the TOPSIS method turned out to be the best.

Chang et al. [29] took up a slightly different problem. They presented the procedure of selecting a group fuzzy multi-criteria method generating the most preferred group ranking for a given problem. The authors defined 18 fuzzy methods, which are combinations of two methods of group rating averaging (arithmetic and geometric mean), three multi-criteria methods (Simple Additive Weighting, Weighted Product, and TOPSIS), and three methods of results defuzzification (Center-of-area, graded mean integration, and metric distance). The best group ranking was selected by comparing each of the 18 rankings with nine individual rankings of each decision maker created using methods that are a combination of multi-criteria procedures and defuzzification methods. Spearman’s correlation was used to compare group and individual rankings.

In the work of Hajkowicz and Higgins [30] rankings were compared using five methods (Simple Additive Weighting, Range of Value Method, PROMETHEE II, Evamix, and Compromise programming). To compare the rankings, we used Spearman’s and Kendall’s correlations for full rankings and a coefficient that directly determines the compliance on the first three positions of each of the compared rankings. The study considered six decision-making problems, in the field of water resources management, undertaken in the scientific literature. Nevertheless, it should be noted that this statement is based on the analysis of features and possibilities offered by individual MCDA methods. However, it does not result from the conducted benchmark.

Another publication cited in which the benchmark was applied to the work of Żak [31]. The author considered five multi-criteria methods (ELECTRE, AHP, UTA, MAPPAC, and ORESTE). The study was based on the examination of the indicated methods concerning three decision-making problems related to transport, i.e., (I) evaluation of the public transportation system development scenarios, (II) ranking of maintenance and repair contractors in the public transportation system, and (III) selection of the means of transport used in the public transportation system. The benchmark uses expert evaluations for each of the methods in terms of versatility and relevance to the problem, computational performance, modeling capabilities for decision-makers, reliability, and usefulness of the ranking.

The problem of MCDA methods benchmarking is also addressed in many up-to-date studies. Thus in the paper [128] using building performance simulation reliability of rankings generated using AHP, TOPSIS, ELECTRE III, and PROMETHEE II methods was evaluated. In the paper [129] using Monte Carlo simulation, Weighted Sum, and Weighted Product Methods (WSM/WPM), TOPSIS, AHP, PROMETHEE I, and ELECTRE I were compared. The next study [130] adopted the same benchmarking environment as in the study [24]. The authors empirically compare the rankings produced by multi-MOORA, TOPSIS, and VIKOR methods. In the paper [131] another benchmark of selected MCDA methods was presented. AHP, Fuzzy AHP, TOPSIS, Fuzzy TOPSIS, and PROMETHEE I methods were used here. The similarity of rankings was evaluated using Spearman’s rank correlation coefficient. In the next paper [132], the impact of different uncertainty sources on the rankings of MCDA problems in the context of food safety was analyzed. In this study, MMOORA, TOPSIS, VIKOR, WASPAS, and ELECTRE II were compared. In the last example work [133], using a simulation environment, the impact of different standardization techniques in the TOPSIS method on the final form of final rankings was examined. The above literature analysis unambiguously shows the effectiveness of the simulation environment in benchmarking methods from the MCDA family. However, at the same time, it constitutes a justification for the authors of this article for the research methods used.

3. Preliminaries

3.1. MCDA Methods

In this section, we introduced formal foundations of MCDA methods used during the simulation. We selected three methods belonging to the American school (TOPSIS, VIKOR, and COPRAS) and one popular method of the European school called PROMETHEE II.

3.1.1. TOPSIS

The first one is Technique of Order Preference Similarity (TOPSIS). In this approach, we measure the distance of alternatives from the reference elements, which are respectively positive and negative ideal solution. This method was widely presented in [9,134]. The TOPSIS method is a simple MCDA technique used in many practical problems. Thanks to its simplicity of use, it is widely used in solving multi-criteria problems. Below we present its algorithm [9]. We assume that we have a decision matrix with m alternatives and n criteria is represented as .

Step 1. Calculate the normalized decision matrix. The normalized values calculated according to Equation (1) for profit criteria and (2) for cost criteria. We use this normalization method, because [11] shows that it performs better than classical vector normalization. Although, we can also use any other normalization method.

Step 2. Calculate the weighted normalized decision matrix according to Equation (3).

Step 3. Calculate Positive Ideal Solution (PIS) and Negative Ideal Solution (NIS) vectors. PIS is defined as maximum values for each criteria (4) and NIS as minimum values (5). We do not need to split criteria into profit and cost here, because in step 1 we use normalization which turns cost criteria into profit criteria.

Step 4. Calculate distance from PIS and NIS for each alternative. As shown in Equations (6) and (7).

Step 5. Calculate each alternative’s score according to Equation (8). This value is always between 0 and 1, and the alternatives which have values closer to 1 are better.

3.1.2. VIKOR

VIKOR is an acronym in Serbian that stands for VlseKriterijumska Optimizacija I Kompromisno Resenje. The decision maker chooses an alternative that is the closest to the ideal and the solutions are assessed according to all considered criteria. The VIKOR method was originally introduced by Opricovic [135] and the whole algorithm is presented in [134]. These both methods are based on closeness to the ideal objects [136]. However, they differ in their operational approach and how these methods consider the concept of proximity to the ideal solutions.

The VIKOR method, similarly to the TOPSIS method, is based on distance measurements. In this approach a compromise solution is sought. The description of the method will be quoted according to [135,136]. Let us say that we have a decision matrix with m alternatives and n criteria is represented as . Before actually applying this method, the decision matrix can be normalized with one of the methods described in Section 3.2.

Step 1. Determine the best and the worth values for each criteria functions. Use (9) for profit criteria and (10) for cost criteria.

Step 3. Compute the values using Equation (13).

where

and v is introduced as a weigh for the strategy “majority of criteria”. We use here.

Step 4. Rank alternatives, sorting by the values S, R, and Q in ascending order. Result is three ranking lists.

Step 5. Normally, we should use S, R, and Q ranking lists to propose the compromise solution or set of compromise solutions, as shown in [134,136]. However, in this paper would use only the Q ranking list.

3.1.3. COPRAS

Third used method is a COPRAS (Complex Proportional Assessment), introduced by Zavadskas [137,138]. This approach assumes a direct and proportional relationship of the importance of investigated variants on a system of criteria adequately describing the decision variants and on values and weights of the criteria [139]. This method ranks alternatives based on their relative importance (weight). Final ranking is creating using the positive and negative ideal solutions [138,140]. Assuming, that we have a decision matrix with m alternatives and n criteria is represented as , the COPRAS method is defined in five steps:

Step 1. Calculate the normalized decision matrix using Equation (14).

Step 2. Calculate the difficult normalized decision matrix, which represents a multiplication of the normalized decision matrix elements with the appropriate weight coefficients using Equation (15).

Step 3. Determine the sums of difficult normalized values, which was calculated previously. Equation (16) should be used for profit criteria and Equation (17) for cost criteria.

where k is the number of attributes that must be maximized. The rest of attributes from to n prefer lower values. The and values show the level of the goal achievement for alternatives. A higher value of means that this alternative is better and the lower value of also points to better alternative.

Step 4. Calculate the relative significance of alternatives using Equation (18).

Step 5. Final ranking is performed according values (19).

where stands for the maximum value of the utility function. Better alternatives have a higher value, e.g., the best alternative would have .

3.1.4. PROMETHEE II

The Preference Ranking Organization Method for Enrichment of Evaluations (PROMETHEE) is a family of MCDA methods developed by Brans [18,141]. It is similar to other methods input data, but it optionally requires to choose preference function and some other variables. In this article we use PROMETHEE II method, because the output of this method has a full ranking of the alternatives. It is the approach where a complete ranking of the actions is based on the multi-criteria net flow. It includes preferences and indifferences (preorder) [142]. According to [134,141], PROMETHEE II is designed to solve the following multicriteria problems:

where A is a finite set of alternatives and is a set of evaluation criteria either to be maximized or minimized. In other words, is a value of criteria i for alternative . With these values and weights we can define evaluation table (see Table 1).

Table 1.

Evaluation table.

Step 1. After defining the problem as described above, calculate the preference function values. It is defined as (21) for profit criteria.

where is the difference between two actions (pairwise comparison):

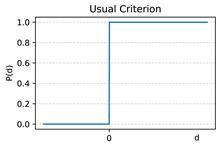

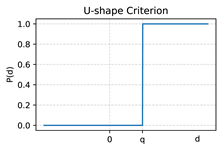

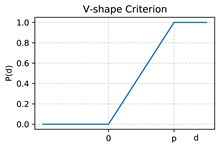

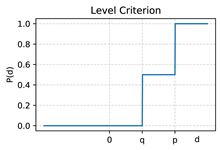

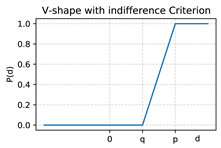

and the value of the preference function P is always between 0 and 1 and it is calculating for each criterion. The Table 2 presents possible preference functions.

Table 2.

PROMETHEE (Preference Ranking Organization Method for Enrichment of Evaluations) preference functions.

This preference functions and variables such as p and q allows one to customize the PROMETHEE model. For our experiment these variables are calculated according to Equations (23) and (24):

where D is a positive value of the d values (22) for each criterion and k is a modifier. In our experiments .

Step 2. Calculate the aggregated preference indices (25).

where a and b are alternatives and shows how much alternative a is preferred to b over all of the criteria. There are some properties (26) which must be true for all alternatives set A.

Step 4. In this article we will use only PROMETHEE II, which results in a complete ranking of alternatives. Ranking is based on the net flow (29).

Larger value of means better alternative.

3.2. Normalization Methods

In the literature, there is no clear assignment to which decision-makers’ methods of data normalization are used. This situation poses a problem, as it is necessary to consider the influence of a particular normalization on the result. The most common normalization methods in MCDA methods can be divided into two groups [143], i.e., methods designed to profit (30), (32), (34) and (36) and cost criteria (31), (33), (35), and (37).

The minimum-maximum method: In this approach, the greatest and the least values in the considered set are used. The formulas are described as follows (30) and (31):

The maximum method: In this technique, only the greatest value in the considered set is used. The formulas are described as follows (32) and (33):

3.3. Weighting Methods

In this section, we present three popular methods related to objective criteria weighting. These are the most popular methods currently found in the literature. In the future, this set should be extended to other methods.

3.3.1. Equal Weights

The first and least effective weighted method is the equal weight method. All criteria’s weights are equal and calculated by Equation (38), where n is the number of criteria.

3.3.2. Entropy Method

3.3.3. Standard Deviation Method

3.4. Correlation Coefficients

Correlation coefficients make it possible to compare obtained results and determine how similar they are. In this paper we compare ranking lists obtained by several MCDA methods using Spearman rank correlation coefficient (44), weighted Spearman correlation coefficient (46), and rank similarity coefficient (47).

3.4.1. Spearman’s Rank Correlation Coefficient

3.4.2. Weighted Spearman’s Rank Correlation Coefficient

For a sample of size N, rank values and are defined as (46). In this approach, the positions at the top of both rankings are more important. The weight of significance is calculated for each comparison. It is the element that determines the main difference to the Spearman’s rank correlation coefficient, which examines whether the differences appeared and not where they appeared.

3.4.3. Rank Similarity Coefficient

For a samples of size N, the rank values and is defined as (47) [144]. It is an asymmetric measure. The weight of a given comparison is determined based on the significance of the position in the first ranking, which is used as a reference ranking during the calculation.

4. Study Case and Numerical Examples

The main goal of the experiments is to test if the MCDA method or weight calculation method has an impact on the final ranking and how significant this impact is. For this purpose, we applied four commonly used classical MCDA methods, which are listed in Section 3.1. In the case of TOPSIS and VIKOR methods, we would use different normalization methods, and for the PROMETHEE method, we use various preference function and use different p and q values. The primary way to analyze the results obtained from numerical experiments is to use selected correlation coefficients, described in Section 3.4.

Algorithm 1 presents the simplified pseudo-code of the experiment, where we process matrices with different number of criteria and alternatives. The number of criteria changed from 2 to 5 and the number of the alternatives belongs to the set . For each of the 20 combinations, the number of alternatives and criteria was generated after 1000 random decision matrices. They contain attribute values for all analyzed alternatives for all analyzed criteria. The preference values of the drawn alternatives are not known, but three different vectors of criteria weights are derived from this data. Rankings are then calculated using these different methods with different settings. This way, we obtain research material in the form of rankings calculated using different approaches. Analyzing the results using similarity coefficients, we try to determine the similarity of the obtained results. For each matrix we perform the following steps:

- Step 1.

- Calculate 3 vectors of weights, using equations described in Section 3.3;

- Step 2.

- Split criteria into profit and cost criteria: Assuming we have n criteria, first are considered to be profit criteria and the rest ones are considered to be cost;

- Step 3.

- Compute 3 rankings using MCDA methods listed in Section 3.1 and three different weighting vectors.

| Algorithm 1 Research algorithm |

|

The further part of this section presents two examples that are intended to explain our simulation study better. The sample data and how it is handled in the following section will be reviewed. Due to the vast number of generated results, some figures have been placed in the Appendix A for clarity.

4.1. Decision Matrices

We have chosen two random matrices with three criteria and five alternatives to show how exactly these matrices are processed during the experiment. These matrices were chosen to demonstrate how many different or similar the rankings obtained with different MCDA methods in particular cases could be. Table 3 and Table A1 contain chosen matrices and their weights which was calculated with three different methods described in Section 3.3. In the following sections, the example from Table 3 will be discussed separately for clarity. The results for the second example (Table A1) will be shown in the Appendix B.

Table 3.

The first numerical example of a decision matrix with three different criteria weighting vectors.

4.2. TOPSIS

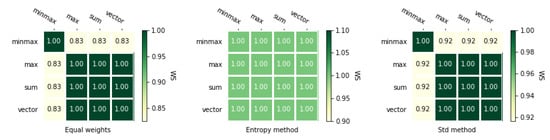

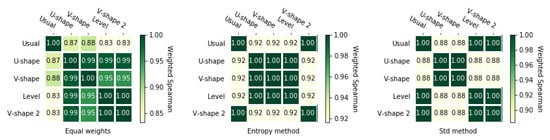

Processing a matrix with the TOPSIS method, using four different normalization methods and three different weighting methods gives us 12 rankings. Table 4 presents all rankings for the analyzed example showed in Table 4, and rankings for the second example are shown in Table A2. The orders in both cases are not identical and we can observe that the impact of different parameters varies for almost every case in the first example (Table 3). It depends mainly on the applied normalization method and selected weight vector. However, we need to determine exactly the similarity of considered rankings. Therefore, we use coefficients similarity, which are presented in heat maps in Figure 2 and Figure 3. The results for the second numerical example is presented in Figure A1 and Figure A2. These figures show exactly how different these rankings are using the and coefficients described in Section 3.4. Each figure shows three heat maps corresponding to three weighting methods.

Table 4.

TOPSIS (Technique for Order Preference by Similarity to Ideal Solution) rankings for matrix 1: (a) Equal weights, (b) entropy method, and (c) std method.

Figure 2.

correlations heat map for TOPSIS with different normalization methods (matrix 1).

Figure 3.

correlations heat map for TOPSIS with different normalization methods (matrix 1).

Figure 2 and Figure 3 presents and correlations between rankings obtained using different normalization methods with TOPSIS method. The most significant difference is obtained for the ranking calculated using the entropy method and sum-based normalization. Only a single discrepancy appears for the alternatives and while comparing this ranking with the rest of the rankings. There is a change in the ranking on positions 2 and 5. These figures also show that the coefficient is asymmetrical as opposed to . Therefore both coefficients will also be used in further analyses.

Next, Figure A1 and Figure A2 show and correlations for the second matrix. Rankings obtained using different normalization methods with TOPSIS are far more correlated than for the first matrix. The entropy methods weights gave as equal rankings and the ranking for the minmax normalization method is slightly different for equal weights and standard deviation weighting method.

4.3. VIKOR

Next, we calculate rankings for both matrices using the VIKOR method in combination with four different normalization methods and three weighting methods. Besides, we also use VIKOR without normalization (represented by “none” in the tables and on the figures). Rankings are shown in Table 5 and Table A3. These rankings have more differences between themselves than the rankings obtained using TOPSIS method with different normalization methods.

Table 5.

VIKOR (VlseKriterijumska Optimizacija I Kompromisno Resenje) rankings for matrix 1: (a) Equal weights, (b) entropy method, and (c) std method.

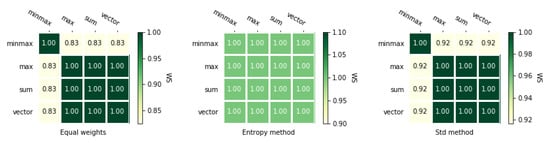

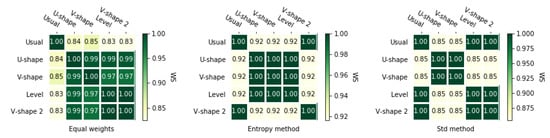

Figure 4 and Figure 5 with and correlation coefficients show us that in this case the entropy weighting method performed worse than the other two weighting methods. In addition, it is noticeable how small size of the correlation between VIKOR without normalization. For the entropy weighting method, the value is which means that rankings obtained with VIKOR without normalization are reversed to VIKOR with any other normalization methods.

Figure 4.

correlations heat map for VIKOR with different normalization methods (matrix 1).

Figure 5.

correlations heat map for VIKOR with different normalization methods (matrix 1).

The rankings calculated for the second matrix are more correlated between themselves, which is presented on heat maps Figure A2 and Figure A3. Similarly to TOPSIS, the entropy weighted method gives perfectly correlated rankings and the other two weighting method gives us fewer correlated rankings. Moreover, it is noticeable that the ranking obtained using VIKOR without normalization is less correlated to VIKOR with normalization methods.

4.4. PROMETHEE II

For exemplary purposes, we use the PROMETHEE II method with five different preference functions, and the q and p values for them are calculated as follows:

where D stands for positive values of the differences (see Section 3.1.4 for a more detailed explanation). As previously mentioned, Table 6 and Table A4 contains rankings obtained using different preference functions and different weighting methods.

Table 6.

PROMETHEE II rankings for matrix 1: (a) Equal weights, (b) entropy method, and (c) std method.

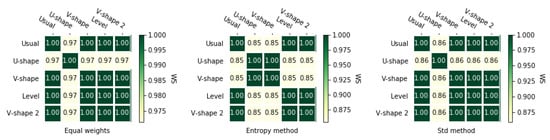

According to Figure 6 and Figure 7, entropy weighting methods give slightly less correlated rankings than the two other methods. It is noticeable that for equal weights and for std-based method ranking obtained using U-shape preference function was not the same as for other rankings.

Figure 6.

correlations heat map for PROMETHEE II with different preference functions (matrix 1).

Figure 7.

correlations heat map for PROMETHEE II with different preference functions (matrix 1).

For the second matrix, rankings obtained with the usual preference function and equal weights is quite different than other rankings which is shown in Figure A5 and Figure A6. For the entropy weighting method, we could see that the rankings obtained with the usual preference function and the v-shape 2 preference function are equal, but applying other preference functions gave us slightly different rankings. In the case of the standard weighting methods, preference functions usual, level, and v-shape 2 have equal rankings, but, as previously mentioned, they are different from the rankings obtained by other preference functions.

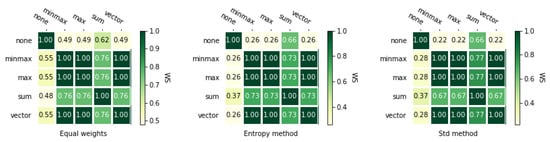

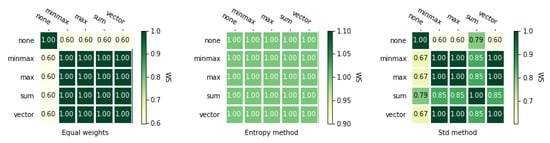

4.5. Different Methods

In this part, we show how different rankings could be obtained by different methods. We compare rankings obtained by TOPSIS with minmax-based normalization, VIKOR without normalization, PROMETHEE II with the usual preference function, and COPRAS. Obtained rankings for first and second matrices are shown in Table 7 and Table A5 accordingly.

Table 7.

Rankings obtained with different Multi-Criteria Decision-Analysis (MCDA) methods for matrix 1: (a) Equal weights, (b) entropy method, and (c) std method.

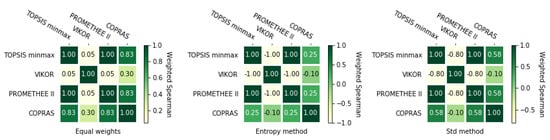

Correlation heat maps are shown in Figure 8 and Figure 9. The differences between rankings in the case of the first example are the smallest. It can be seen that the ranking obtained using VIKOR and entropy weighting method is reversed to rankings obtained using TOPSIS minmax and PROMETHEE II methods. It also poorly correlated with the ranking obtained with the COPRAS method. This is also noticeable that for other two weighting methods, with the VIKOR ranking being much less correlated with other rankings.

Figure 8.

correlations heat map for PROMETHEE II with different preference functions (matrix 1).

Figure 9.

correlations heat map for PROMETHEE II with different preference functions (matrix 1).

4.6. Summary

Based on the results of the two numerical examples presented in Section 4.1, Section 4.2, Section 4.3, Section 4.4 and Section 4.5, it can be seen that the selection of the MCDA method is important for the results in the final ranking. Both numerical examples were presented to show positive and negative cases. In both samples, it can be seen that once a given method is selected, the weighing method and the normalization used in this method play a key role. The impact of parameters in the decision-making process in the discussed examples was different. Therefore, simulations should be performed in order to examine the typical similarity between the received rankings concerning a different number of alternatives. In Section 4.5, comparisons are made between methods using the most common configuration, where each of the analyzed scenarios has a huge impact on the final ranking. Therefore, simulation studies will be conducted to show the similarity of the rankings examined in the next section.

5. Results and Discussion

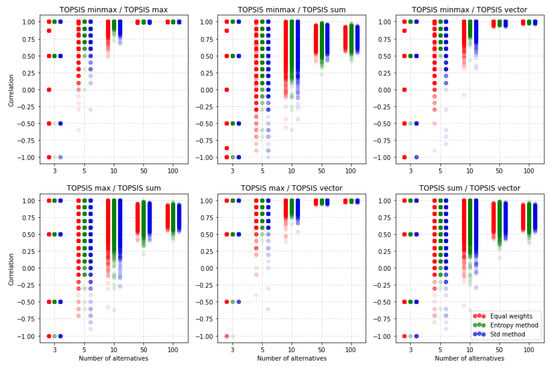

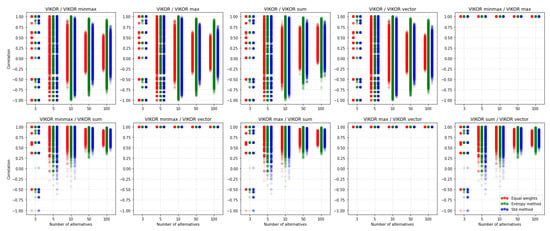

5.1. TOPSIS

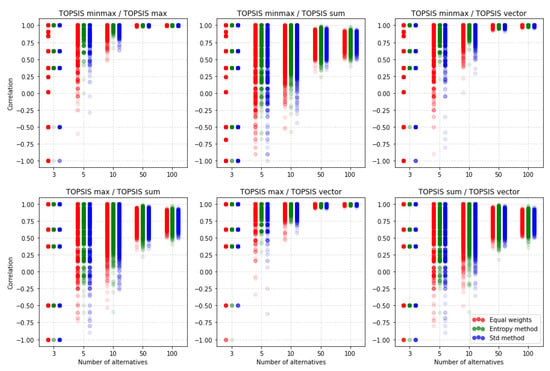

In this section, we compare TOPSIS between different normalization methods. Figure 10, Figure 11 and Figure 12 contain results of simulations. Each dot represents the correlation between two rankings for certain random matrix. The color of the dot shows which weighting method it is. For TOPSIS we compare rankings with three correlation coefficients described in Section 3.4, but other methods would be compared only using the and correlation coefficients because the Spearman correlation has some limitations. At first, we could not use the correlation for rankings where standard deviations are 0. It is frequent occurrences for the VIKOR method for decision matrices with a small number of alternatives. The second reason is that the cardinality of the Spearman correlation coefficient values set is strictly less than the cardinality of possible values sets of other two correlation coefficients. It is perfectly seen in Figure 10 for five alternative.

Figure 10.

Comparison of the similarity coefficient for the TOPSIS method with different normalization methods.

Figure 11.

Comparison of the similarity coefficient for the TOPSIS method with different normalization methods.

Figure 12.

Comparison of the similarity coefficient for the TOPSIS method with different normalization methods.

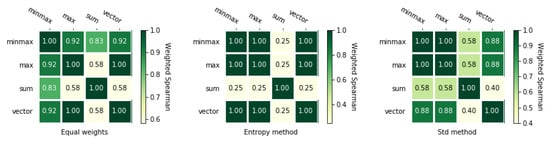

Figure 11 shows the correlation between rankings obtained using the TOPSIS method with different normalization methods. We have some reversed rankings for matrices with three alternatives but with a greater number of alternatives, rankings become increasingly similar. It is noticeable that for a big number of alternatives, such as 50 or 100, rankings obtained using minmax vs. max normalization, minmax vs. vector normalization, and max vs. vector normalization methods are almost perfectly correlated. It is clearly seen in Table A6, which contains mean values of this correlations. The table gives details of the average correlation values for the number of alternatives (3, 5, 10, 50, or 100) and the number of criteria (2, 3, 4, and 5). Overall, the value of reaches the lowest values for five criteria and three alternatives. The closest results are obtained for the max and minmax normalizations, but when not applying an equal distribution of criteria weights. Then, in the worst case, we get the value of the coefficient 0.897, where for the method of equal distribution of weights we get the result 0.735. Other pairs of normalizations reach, in the worst case, the correlation of 0.571. It is also visible that rankings obtained using the sum normalization method are less correlated to rankings obtained with other normalization methods. Herein we can conclude that sum normalization is performing poorly with TOPSIS compared to other normalization methods.

On Figure 12, we see similar results to . Rankings obtained using sum normalization are less correlated with other rankings. It is noticeable that according to the similarity coefficient, there are more poorly correlated rankings for the sum vs. vector normalization methods case than for correlation. A problem of interpretation may be that both coefficients have a different domain. Nevertheless, this is the only case where the number of alternatives is increasing and there is no improvement in the similarity of the rankings obtained. The detailed data in Table A7 also confirm this. It may also come as a big surprise that the choice of the weighting method is not so important. Relatively similar results are obtained regardless of the method used.

In general, it follows that with a small number of alternatives that do not exceed 10 decision-making options, the rankings may vary considerably depending on the normalization chosen. Thus, this shows that a problem as important as the choice of the method of weighting is the choice of the method used to normalize the input data. For larger groups of alternatives, the differences are also significant, although a smaller difference can be expected. The analysis of the data in Table A6 and Table A7 shows that the increase in the complexity of the problem, understood as an increase in the number of criteria, practically always results in the decrease in similarity between the results obtained as a result of different normalizations applied.

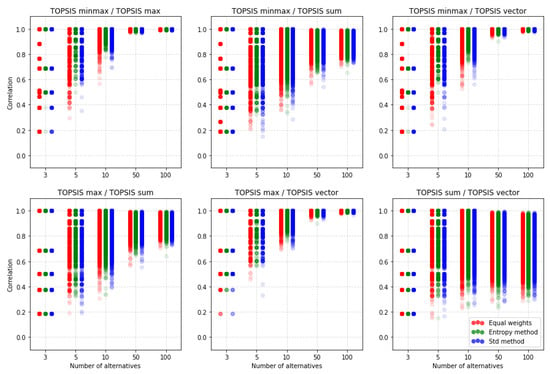

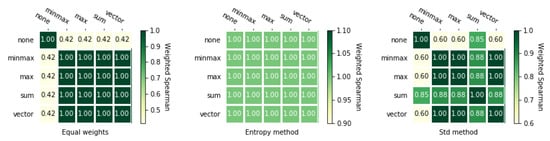

5.2. VIKOR

As mentioned previously, we would use only and correlation coefficients for the following comparisons. Figure 13 shows correlation between rankings obtained using VIKOR with different normalization and without normalization. It is clearly seen that VIKOR without normalization is poorly correlated to VIKOR when normalization is applied. In Table A8, we could see that mean values of the correlation values for VIKOR without normalization with cases where normalization was applied is around 0. It is also noticeable that VIKOR with minmax, max, and vector normalization gives very similar rankings.

Figure 13.

Comparison of the similarity coefficient for the VIKOR method with different normalization methods.

This is similar to the TOPSIS method, where sum normalization has less similar rankings than other methods. Interestingly, correlations of rankings in the case of VIKOR without normalization vs. VIKOR with any normalization have a mean around zero, but rankings obtained with equal weight have less variance. In this case, less variability may be of concern, as it means that it is not possible to get rankings that are compatible. In addition to the three exceptions mentioned earlier, it should be noted that the choice of normalization has a significantly stronger impact on the similarity of rankings than in the TOPSIS method.

We can observe a similar situation on Figure 14, where similar coefficient values are presented. It confirms the results obtained using correlation coefficient: Rankings obtained using VIKOR without normalization is less correlated with rankings obtained using VIKOR with normalization than other rankings correlated between themselves. It is also noticeable that in the sum vs. vector normalization methods case, the similarity coefficient values are also visibly smaller, as it was for TOPSIS. Therefore, we can conclude that rankings obtained using the sum and vector normalization method usually should be quite different.

Figure 14.

Comparison of the similarity coefficient for the VIKOR method with different normalization methods.

A detailed analysis of the results of mean values of (Table A8) and mean (Table A9) show that the mean significance of similarities is significantly lower than in the TOPSIS method. Besides, the dependence of the change in the values of both coefficients in the tables is more random and more challenging to predict. Nevertheless, it means that the final result of the ranking is significant whether or not we apply the method with or without normalization, and which normalization we apply. Again, the influence of the selection of the method of criteria weighting was not as significant as one might expect. Generally, the proof is shown here that standardization can have a considerable impact on the final result.

5.3. PROMETHEE II

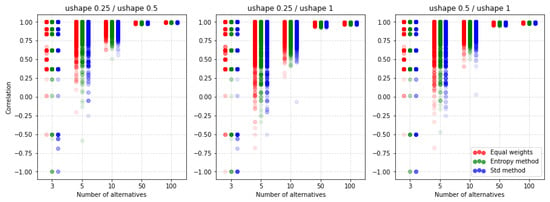

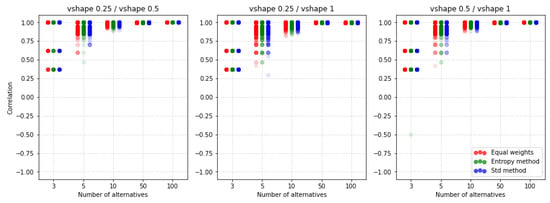

For PROMETHEE II, the normalization of the decision matrix does not apply. That is why this section will analyze receiving the rankings with different values of parameters p, q, and preference function. Figure 15 shows the results of the factor for the U-shape preference function concerning different techniques of criteria weighting. The values p and q are calculated automatically and we only scale them. The differences between the individual rankings are very similar. If we analyze up to 10 alternatives, using any scaling value, we get significantly different results. As the number of alternatives increases, the spread of possible correlation values decreases. However, when analyzing the values contained in Table A10 and Table A11 we see that the average values indicate a much smaller impact than in the case of the selection of standardization methods in the two previous MCDA methods.

Figure 15.

Comparison of the similarity coefficient for the PROMETHEE II method with U-shape preference function and different k values.

For comparison, we present Figure 16 where another preference function is shown but also for the ratio. It turns out that by choosing the V-shape preference function instead of the U-shape we get more of a similarity of results. Again, it turns out that the methods of weighting do not have such a significant impact on the similarity results obtained.

Figure 16.

Comparison of the similarity coefficient for the PROMETHEE II method with V-shape preference function and different k values.

An important observation is that despite the lack of possibilities to normalize input data, there are still differences depending on the selected parameters and functions of preferences. The other two preference functions for the ratio are shown in Figure A9 and Figure A10. The results also indicate a similar level of similarity between the rankings. The coefficient for the corresponding cases is shown in Figure A11, Figure A12, Figure A13 and Figure A14. Both coefficients indicate the same nature of the influence of the applied parameters on the similarity of rankings. To sum up, in all the discussed cases, we see significant differences between the rankings obtained. Thus, not only normalization but other parameters are important for the results obtained and it is always necessary to make an in-depth analysis by selecting a specific method and assumptions, i.e., such as normalization, etc.

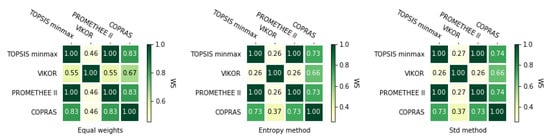

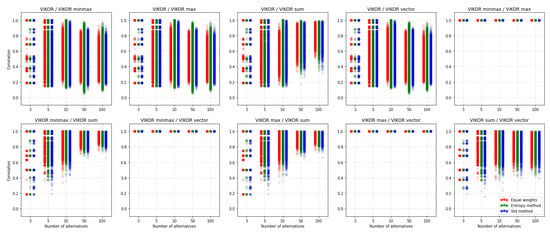

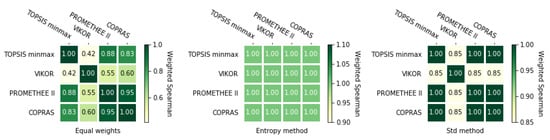

5.4. Comparison of the MCDA Methods

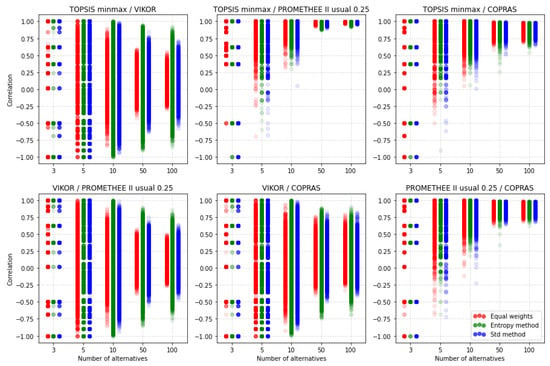

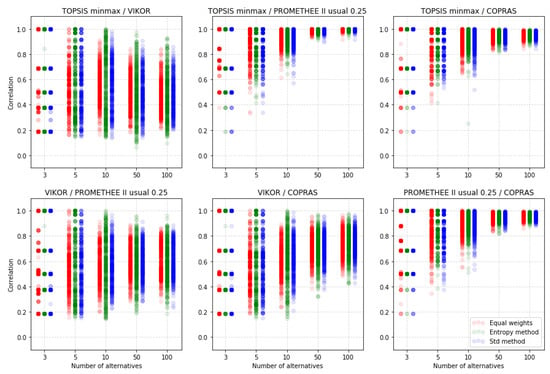

Figure 17 shows how much correlated rankings obtained by four different methods could be: TOPSIS with minmax normalization, VIKOR without normalization, PROMETHEE II with usual preference function, and COPRAS. We can see that VIKOR has quite different rankings in comparison to other methods.

Figure 17.

Comparison of the similarity coefficient for the different MCDA methods.

Table A12 shows that the mean values of the correlation between VIKOR rankings and other methods’ rankings are around zero. It is also noticeable that for cases VIKOR vs. other method correlation for rankings obtained using equal weights has a smaller variance in comparison to the other two weighting methods. Next, we could see that mostly correlated rankings could be obtained with TOPSIS minmax and PROMETHEE II usual methods. The comparisons between TOPSIS minmax vs. COPRAS and PROMETHEE II usual vs. COPRAS look quite similar and this methods’ rankings are less correlated between themselves than TOPSIS minmax and PROMETHEE II usual methods’ rankings.

The general situation is quite similar for correlation coefficient, as it shows Figure 18. The rankings obtained by VIKOR are far less correlated to rankings obtained using the other three methods than these rankings between themselves. Similarly to , points that rankings obtained using TOPSIS minmax and PROMETHEE II usual methods have a strong correlation between themselves. Rankings obtained by TOPSIS minmax, COPRAS, and PROMETHEE II with the usual preference function are slightly less correlated. However, correlations between them are stronger than in the case of VIKOR vs. other methods.

Figure 18.

Comparison of the similarity coefficient for the different MCDA methods.

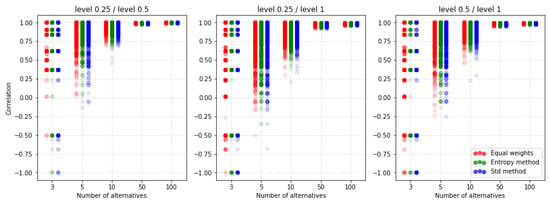

5.5. Dependence of Ranking Similarity Coefficients on the Distance between Weight Vectors

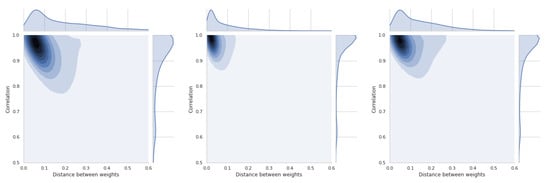

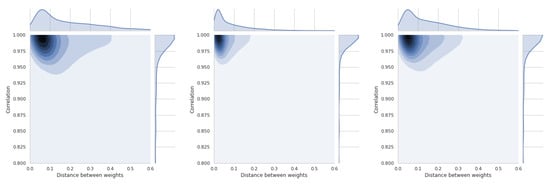

The last section is devoted to a short presentation of distance distributions between weight vectors and similarity coefficients. All these distributions are asymmetric and will be briefly discussed for each of the four methods used. Thus, Figure 19 shows the relations between coefficient and TOPSIS method. The smallest difference in the distance between the vectors of weights is between the equal weights and those obtained by the std method. These values refer to all simulations that have been performed and cannot be generalized to the whole space. In Figure A15, there is a distribution for the coefficient, which confirms that there is a moderate relation between the distance of weight vectors and the similarity coefficient of obtained rankings.

Figure 19.

Relationship between the euclidean distance of weights and similarity coefficient for rankings obtained by the TOPSIS method with different weighting methods, where (left) equal/entropy, (center) equal/std, and (right) std/entropy.

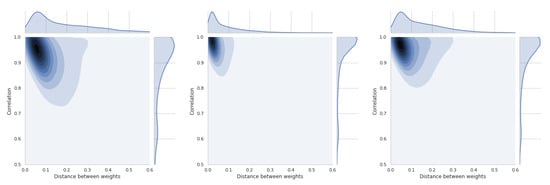

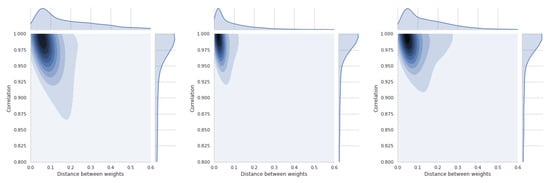

In Figure 20, the results of the VIKOR method are presented. The distribution of similarity coefficient is very similar to the distribution obtained for the TOPSIS method. Distance distributions between vectors of respective methods are the same for all presented graphs. Figure A16 shows the relationships for the VIKOR method and WS coefficient.

Figure 20.

Relationship between the euclidean distance of weights and similarity coefficient for rankings obtained by the VIKOR method with different weighting methods, where (left) equal/entropy, (center) equal/std, and (right) std/entropy.

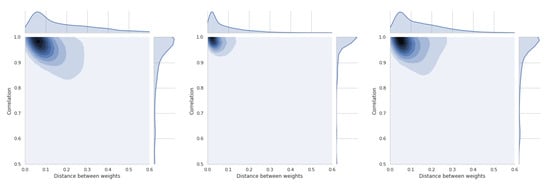

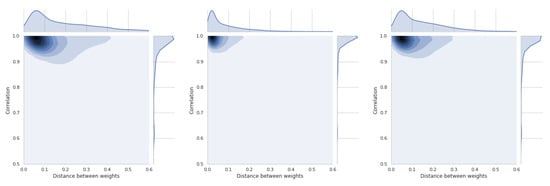

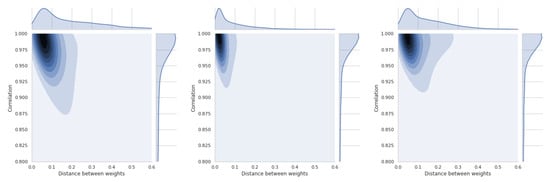

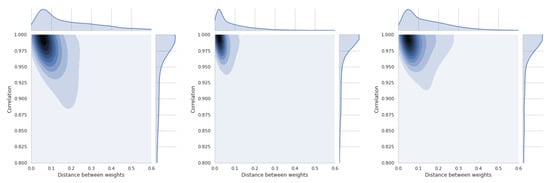

Using the PROMETHEE II method (usual), we can observe changes in the relation which is presented in Figure 21 and Figure A17. This means that the use of different weights in the presented simulation was less important in the PROMETHEE II method than in the TOPSIS or VIKOR method. The distance between the scale vectors was the least important in the COPRAS method, the results of which are presented in Figure 22 and Figure A18.

Figure 21.

Relationship between the euclidean distance of weights and similarity coefficient for rankings obtained by the PROMETHEE II (usual) method with different weighting methods, where (left) equal/entropy, (center) equal/std, and (right) std/entropy.

Figure 22.

Relationship between the euclidean distance of weights and similarity coefficient for rankings obtained by the COPRAS method with different weighting methods, where (left) equal/entropy, (center) equal/std, and (right) std/entropy.

These results are important preliminary research on whether the weights and their differences always have a important influence on the compliance of the obtained rankings. TOPSIS and VIKOR have the highest sensitivity in the presented experiment, and COPRAS has the lowest sensitivity.

6. Conclusions

The results of the conducted research indicate that when choosing the MCDA method, not only the method itself but also the method of normalization and other parameters should be carefully selected. Almost every combination of the method and its parameters may bring us different results. In our study, we have checked how much influence these decisions can have on the ranking of the decision problem. As it turns out, it may weigh not only the correct identification of the best alternative but also the whole ranking.

For the TOPSIS method, rankings obtained using minmax, max, and vector normalization method could be quite similar, especially for the big number of alternatives. In this case, equal weights performed worse than entropy or standard deviation method. Furthermore, with these normalization methods, correlation of rankings had a smaller variance when the entropy weighting method was used. For VIKOR, rankings obtained using any normalization methods could be even reversed in comparison to rankings obtained using VIKOR without normalization. Thus, although it was not necessary to apply normalization when using VIKOR, applying one could be noticeable to improve rankings and the overall performance of the method. Equal weights performed better with VIKOR. The PROMETHEE II method, despite the lack of use of normalization, returned quite different results depending on the set of parameters used and it clearly showed that the choice of the method and its configuration for the decision-making problem was important and should be the subject of further benchmarking. In four different method comparison, rankings obtained using VIKOR without normalization were very different from rankings obtained by other methods. Some equal rankings for the small number of alternatives was achieved. However, for a greater number of alternatives, correlations between VIKOR’s rankings and other methods rankings had oscillated around zero, which means that there was no correlation between these rankings. Most similar rankings were obtained using TOPSIS minmax and PROMETHEE II usual methods. In this case, equal weighting methods performed slightly better. This proves that it is worthwhile to research in order to develop reliable and generalized benchmarks.

The direction of future work should be focused on the development of an algorithm for estimation of accuracy for multi-criteria decision analysis method and estimating the accuracy of selected multi-criteria decision-making methods. However, special attention should be paid to the family of fuzzy set-based MCDA methods and group decision making methods as well. Another challenge is to create a method that, based on the results of the benchmarks, will be able to recommend a proper solution.

Author Contributions

Conceptualization, A.S. and W.S.; methodology, A.S. and W.S.; software, A.S.; validation, A.S., J.W., and W.S.; formal analysis, A.S., J.W., and W.S.; investigation, A.S. and W.S.; resources, A.S.; data curation, A.S.; writing—original draft preparation, A.S. and W.S.; writing—review and editing, A.S., J.W., and W.S.; visualization, A.S. and W.S.; supervision, W.S.; project administration, W.S.; funding acquisition, W.S. All authors have read and agreed to the published version of the manuscript.

Funding

The work was supported by the National Science Centre, Decision number UMO-2018/29/B/HS4/02725.

Acknowledgments

The authors would like to thank the editor and the anonymous reviewers, whose insightful comments and constructive suggestions helped us to significantly improve the quality of this paper.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| TOPSIS | Technique for Order of Preference by Similarity to Ideal Solution |

| VIKOR | VlseKriterijumska Optimizacija I Kompromisno Resenje (Serbian) |

| COPRAS | Complex Proportional Assessment |

| PROMETHEE | Preference Ranking Organization Method for Enrichment of Evaluation |

| MCDA | Multi Criteria Decision Analysis |

| MCDM | Multi Criteria Decision Making |

| PIS | Positive Ideal Solution |

| NIS | Negative ideal Solution |

| SD | Standard Deviation |

Appendix A. Figures

Figure A1.

correlations heat map for TOPSIS with different normalization methods (matrix 2).

Figure A2.

correlations heat map for TOPSIS with different normalization methods (matrix 2).

Figure A3.

correlations heat map for VIKOR with different normalization methods (matrix 2).

Figure A4.

correlations heat map for VIKOR with different normalization methods (matrix 2).

Figure A5.

correlations heat map for PROMETHEE II with different preference functions (matrix 2).

Figure A6.

correlations heat map for PROMETHEE II with different preference functions (matrix 2).

Figure A7.

correlations heat map for PROMETHEE II with different preference functions (matrix 2).

Figure A8.

correlations heat map for PROMETHEE II with different preference functions (matrix 2).

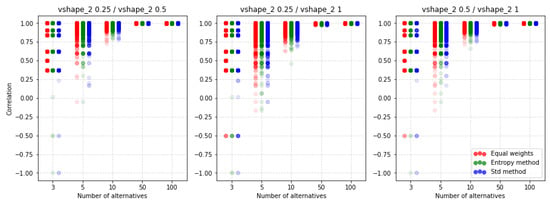

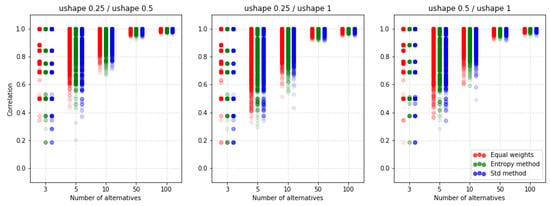

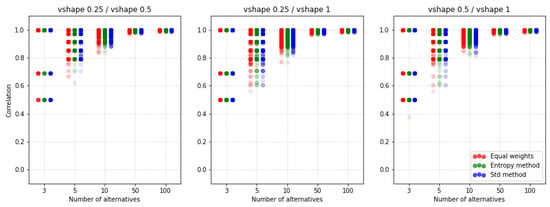

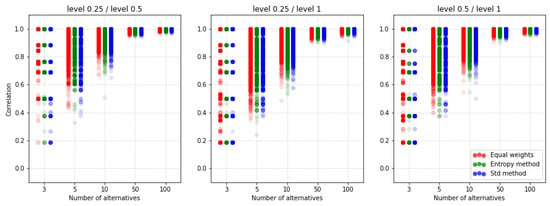

Figure A9.

Comparison of the similarity coefficient for the PROMETHEE II method with level preference function and different k values.

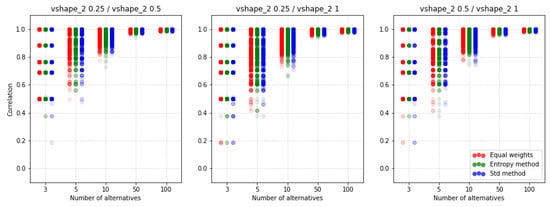

Figure A10.

Comparison of the similarity coefficient for the PROMETHEE II method with V-shape 2 preference function and different k values.

Figure A11.

Comparison of the similarity coefficient for the PROMETHEE II method with U-shape preference function and different k values.

Figure A12.

Comparison of the similarity coefficient for the PROMETHEE II method with V-shape preference function and different k values.

Figure A13.

Comparison of the similarity coefficient for the PROMETHEE II method with level preference function and different k values.

Figure A14.

Comparison of the similarity coefficient for the PROMETHEE II method with V-shape 2 preference function and different k values.

Figure A15.

Relationship between the euclidean distance of weights and similarity coefficient for rankings obtained by the TOPSIS method with different weighting methods, where (left) equal/entropy, (center) equal/std, and (right) std/entropy.

Figure A16.