Dihedral Group D4—A New Feature Extraction Algorithm

Abstract

1. Introduction

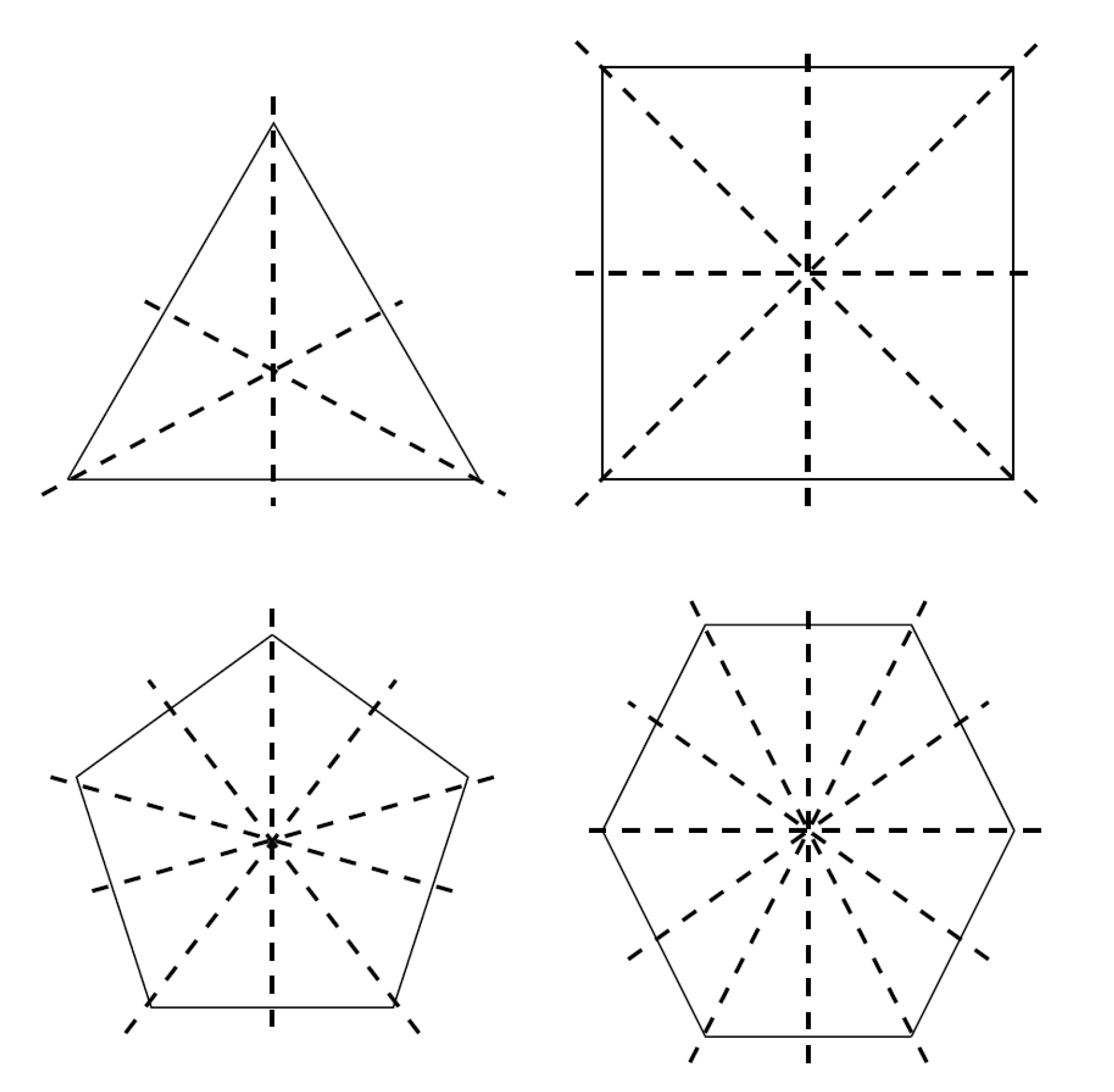

2. Theory

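A set G together with a binary operation ∗ is a group if the following four axioms hold:

- (i) G must be closed under ∗, that is, for every pair of elements $g_1, g_2$ in G we must have that $g_1 \ast g_2$ is again an element in G.

- (ii) The operation ∗ must be associative, that is, for all elements $g_1, g_2, g_3$ in G we must have that $g_1 \ast (g_2 \ast g_3) = (g_1 \ast g_2) \ast g_3$.

- (iii) There is an element e in G, called the identity element, such that for all $g$ in G we have that $e \ast g = g \ast e = g$.

- (iv) For every element g in G there is an element $g^{-1}$ in G, called the inverse of g, such that $g \ast g^{-1} = g^{-1} \ast g = e$.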
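The four axioms can be checked mechanically for any finite set with a binary operation. The following is a minimal sketch, not taken from the article: it assumes the symmetries of a square are encoded as permutations of its four vertices, and the names `compose`, `generate`, and `is_group` are hypothetical helpers introduced only for illustration.

```python
from itertools import product

# Vertices of a square are labelled 0..3 counter-clockwise. A symmetry is
# stored as a tuple p, meaning vertex i is sent to vertex p[i].
IDENTITY = (0, 1, 2, 3)
ROT90 = (1, 2, 3, 0)    # rotation by 90 degrees
REFLECT = (1, 0, 3, 2)  # reflection swapping 0<->1 and 2<->3


def compose(p, q):
    """Return the permutation 'apply q first, then p'."""
    return tuple(p[q[i]] for i in range(len(q)))


def generate(generators):
    """Collect every element reachable by composing the generators."""
    elements = {IDENTITY}
    frontier = [IDENTITY]
    while frontier:
        g = frontier.pop()
        for s in generators:
            h = compose(s, g)
            if h not in elements:
                elements.add(h)
                frontier.append(h)
    return elements


def is_group(elements, op, identity):
    """Check axioms (i)-(iv) by brute force over a finite set."""
    closed = all(op(a, b) in elements
                 for a, b in product(elements, repeat=2))
    associative = all(op(op(a, b), c) == op(a, op(b, c))
                      for a, b, c in product(elements, repeat=3))
    has_identity = identity in elements and all(
        op(identity, a) == a == op(a, identity) for a in elements)
    has_inverses = all(
        any(op(a, b) == identity == op(b, a) for b in elements)
        for a in elements)
    return closed and associative and has_identity and has_inverses


D4 = generate([ROT90, REFLECT])
print(len(D4), is_group(D4, compose, IDENTITY))
```

Under these assumptions the generated set has eight elements and passes all four checks, while a subset such as `{IDENTITY, ROT90}` fails closure because two quarter turns give a half turn that is not in the subset.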

The Group D4
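D4 is the symmetry group of the square and has eight elements: four rotations and four reflections. As a rough illustration of how these elements can act on a square block of pixel values, the sketch below enumerates the eight images of a block. It is an assumption-laden example, not the article's implementation: the block is a plain list of rows, and `rot90`, `flip_horizontal`, and `d4_orbit` are hypothetical helper names.

```python
def rot90(block):
    """Rotate a square block (list of rows) 90 degrees counter-clockwise."""
    return [list(row) for row in zip(*block)][::-1]


def flip_horizontal(block):
    """Mirror a block left-to-right."""
    return [row[::-1] for row in block]


def d4_orbit(block):
    """Return the eight D4 images of a square block: the four rotations of
    the block followed by the four rotations of its mirror image."""
    images = []
    for start in (block, flip_horizontal(block)):
        current = [list(row) for row in start]
        for _ in range(4):
            images.append(current)
            current = rot90(current)
    return images


block = [[1, 2],
         [3, 4]]
for image in d4_orbit(block):
    print(image)
```

A block with no symmetry of its own, such as the one above, yields eight distinct images, whereas a constant block yields eight identical ones. How much a block changes under these transformations is one way to quantify its asymmetry.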

3. Method

3.1. De-Correlated Color Space

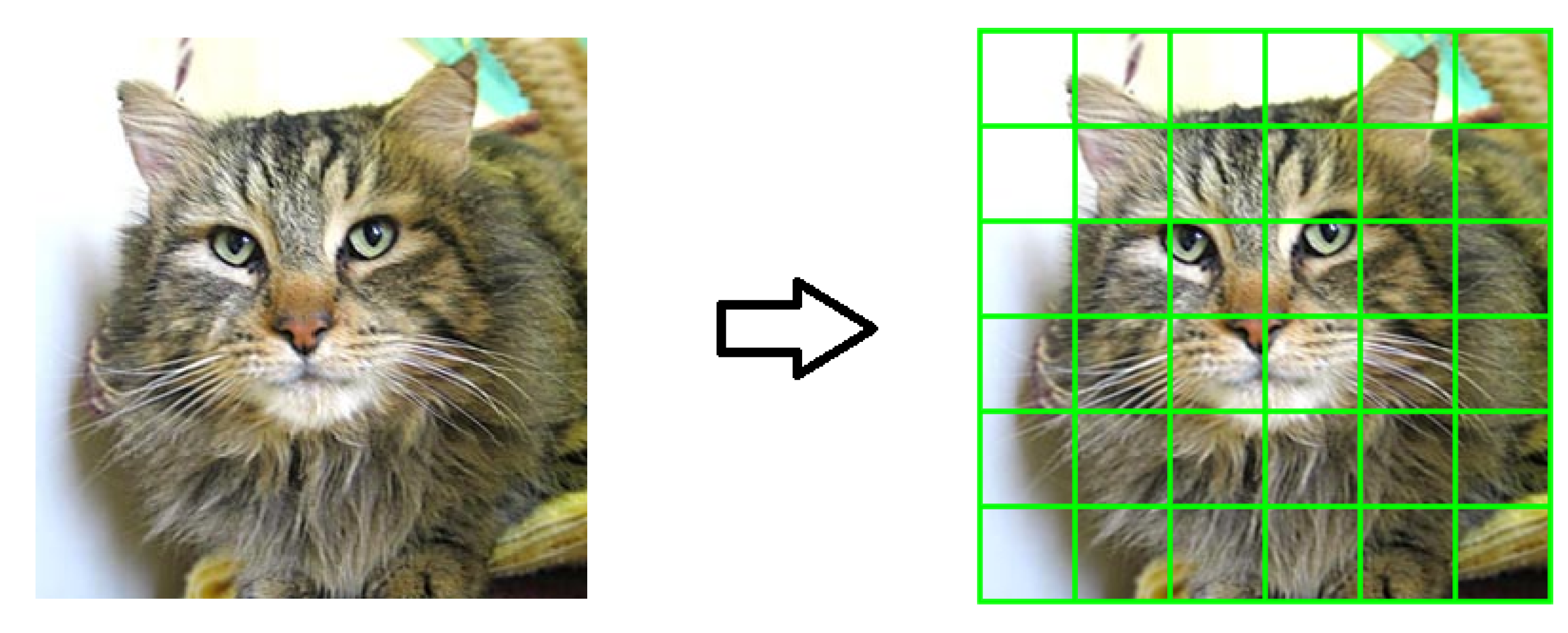

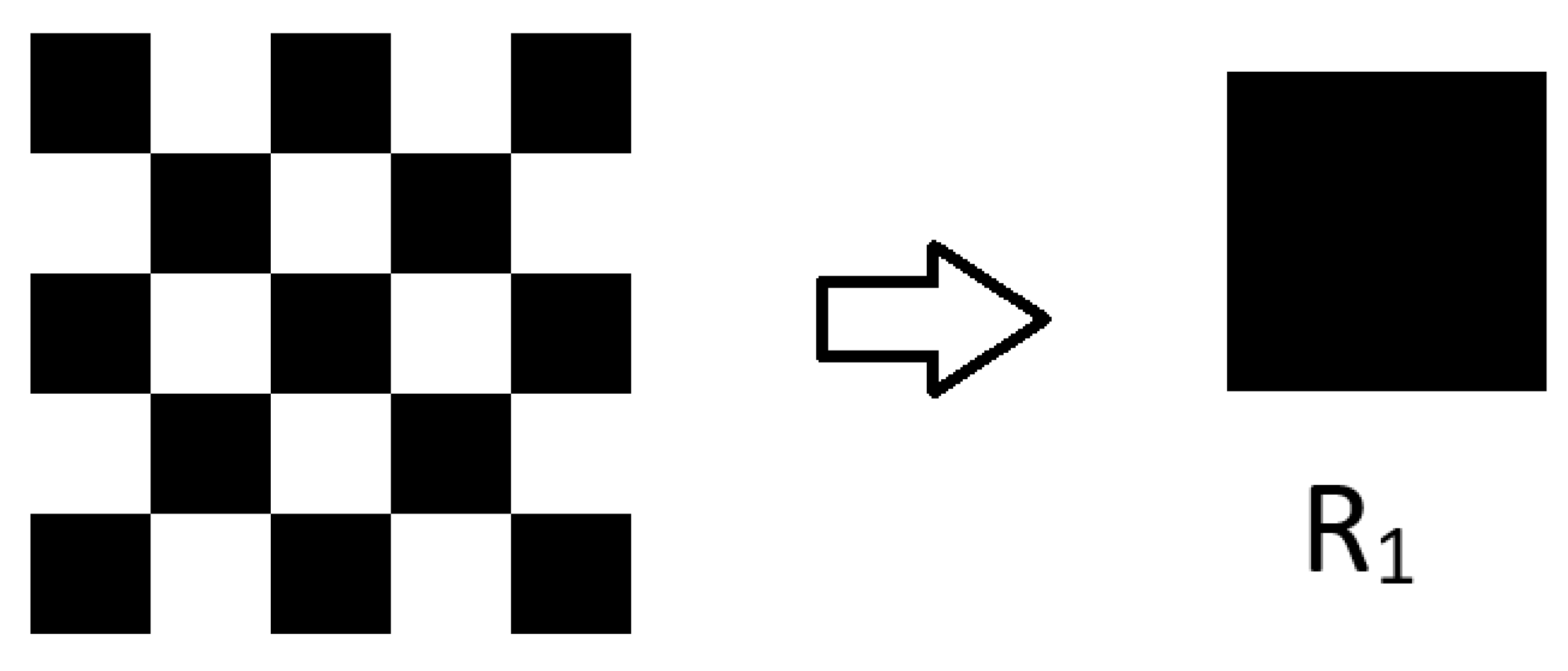

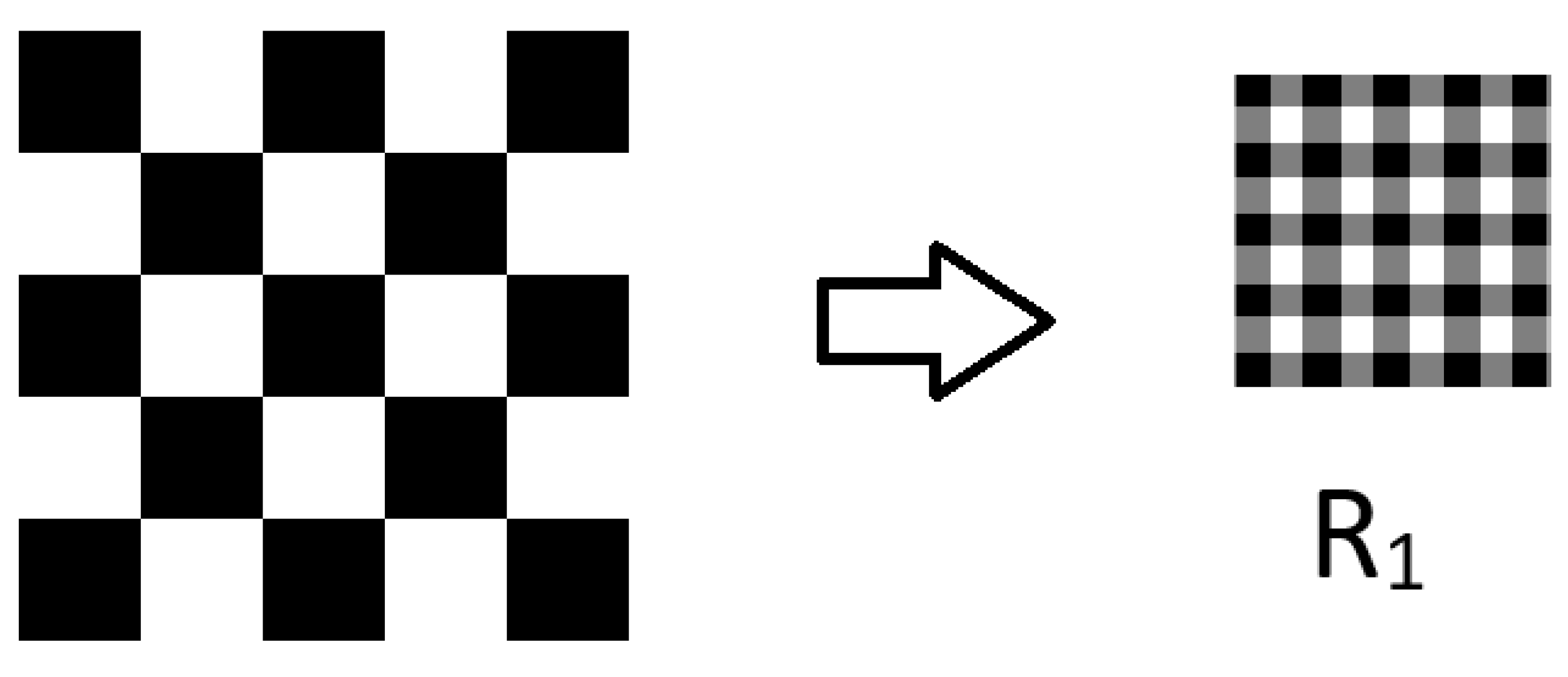

3.2. Proposed D4 Model

Special Case

3.3. Databases

3.4. Procedure for Analysis

ECOC Algorithm
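Error-correcting output codes (ECOC) reduce a multiclass problem to several binary ones: each class is assigned a binary codeword, one binary learner is trained per codeword bit, and a test sample is assigned to the class whose codeword is closest to the predicted bit string. The sketch below illustrates only the decoding step. The 7-bit code for four classes and the names `CODE_MATRIX`, `hamming_distance`, and `ecoc_decode` are assumptions made for illustration; they are not the coding design or classifier settings used in this article.

```python
def hamming_distance(a, b):
    """Number of positions at which two equal-length bit tuples differ."""
    return sum(x != y for x, y in zip(a, b))


def ecoc_decode(code_matrix, predicted_bits):
    """Return the class label whose codeword is nearest in Hamming
    distance to the bits predicted by the binary learners."""
    distances = {label: hamming_distance(word, predicted_bits)
                 for label, word in code_matrix.items()}
    return min(distances, key=distances.get)


# Hypothetical code: one row per class, one column per binary learner.
# Any two rows differ in four positions, so a single wrong bit is corrected.
CODE_MATRIX = {
    "class_0": (1, 1, 1, 1, 1, 1, 1),
    "class_1": (0, 0, 0, 0, 1, 1, 1),
    "class_2": (0, 0, 1, 1, 0, 0, 1),
    "class_3": (0, 1, 0, 1, 0, 1, 0),
}

# The learners' output matches class_2 except for an error in the first bit.
noisy_prediction = (1, 0, 1, 1, 0, 0, 1)
print(ecoc_decode(CODE_MATRIX, noisy_prediction))
```

Because the rows are pairwise four bits apart, a prediction with one wrong bit is at distance 1 from the correct codeword and at least 3 from every other, so the decoder still recovers the intended class.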

4. Results

4.1. Colorspace Selection

4.2. Norm Function Selection

4.3. Comparison for Different Databases

5. Discussion

6. Conclusions

Funding

Acknowledgments

Conflicts of Interest

References

1. Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 886–893.

2. Ma, Y.; Chen, X.; Chen, G. Pedestrian Detection and Tracking Using HOG and Oriented-LBP Features. In Network and Parallel Computing; Altman, E., Shi, W., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; pp. 176–184.

3. Jia, W.; Hu, R.; Lei, Y.; Zhao, Y.; Gui, J. Histogram of Oriented Lines for Palmprint Recognition. IEEE Trans. Syst. Man Cybern. Syst. 2014, 44, 385–395.

4. Hu, R.; Collomosse, J. A performance evaluation of gradient field HOG descriptor for sketch based image retrieval. Comput. Vis. Image Underst. 2013, 117, 790–806.

5. Tian, S.; Lu, S.; Su, B.; Tan, C.L. Scene Text Recognition Using Co-occurrence of Histogram of Oriented Gradients. In Proceedings of the 2013 12th International Conference on Document Analysis and Recognition, Washington, DC, USA, 25–28 August 2013; pp. 912–916.

6. Kassani, P.H.; Hyun, J.; Kim, E. Application of soft Histogram of Oriented Gradient on traffic sign detection. In Proceedings of the 2016 13th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), Xian, China, 19–22 August 2016; pp. 388–392.

7. Cheng, R.; Wang, K.; Yang, K.; Long, N.; Bai, J.; Liu, D. Real-time pedestrian crossing lights detection algorithm for the visually impaired. Multimed. Tools Appl. 2017, 77.

8. Mao, L.; Xie, M.; Huang, Y.; Zhang, Y. Preceding vehicle detection using Histograms of Oriented Gradients. In Proceedings of the 2010 International Conference on Communications, Circuits and Systems (ICCCAS), Chengdu, China, 28–30 July 2010; pp. 354–358.

9. Mohan, A.; Papageorgiou, C.; Poggio, T. Example-Based Object Detection in Images by Components. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 349–361.

10. Ke, Y.; Sukthankar, R. PCA-SIFT: A more distinctive representation for local image descriptors. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2004), Washington, DC, USA, 27 June–2 July 2004; Volume 2, p. 2.

11. Belongie, S.; Malik, J.; Puzicha, J. Matching shapes. In Proceedings of the Eighth IEEE International Conference on Computer Vision (ICCV 2001), Vancouver, BC, Canada, 7–14 July 2001; Volume 1, pp. 454–461.

12. Lenz, R. Using representations of the dihedral groups in the design of early vision filters. In Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP-93), Minneapolis, MN, USA, 27–30 April 1993; pp. 165–168.

13. Lenz, R. Investigation of Receptive Fields Using Representations of the Dihedral Groups. J. Vis. Commun. Image Represent. 1995, 6, 209–227.

14. Foote, R.; Mirchandani, G.; Rockmore, D.N.; Healy, D.; Olson, T. A wreath product group approach to signal and image processing. I. Multiresolution analysis. IEEE Trans. Signal Process. 2000, 48, 102–132.

15. Lenz, R.; Bui, T.H.; Takase, K. A group theoretical toolbox for color image operators. In Proceedings of the ICIP 2005 IEEE International Conference on Image Processing, Genova, Italy, 14 September 2005; Volume 3, pp. 557–560.

16. Sharma, P.; Eiksund, O. Group Based Asymmetry–A Fast Saliency Algorithm. In Advances in Visual Computing; Bebis, G., Boyle, R., Parvin, B., Koracin, D., Pavlidis, I., Feris, R., McGraw, T., Elendt, M., Kopper, R., Ragan, E., et al., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 901–910.

17. Sharma, P. Modeling Bottom-Up Visual Attention Using Dihedral Group D4. Symmetry 2016, 8, 79.

18. Dummit, D.S.; Foote, R.M. Abstract Algebra; John Wiley & Sons: Hoboken, NJ, USA, 2004.

19. Elson, J.; Douceur, J.J.; Howell, J.; Saul, J. Asirra: A CAPTCHA that Exploits Interest-Aligned Manual Image Categorization. In Proceedings of the 14th ACM Conference on Computer and Communications Security (CCS), Alexandria, VA, USA, 29 October–2 November 2007.

20. Xiao, H.; Rasul, K.; Vollgraf, R. Fashion-MNIST: A Novel Image Dataset for Benchmarking Machine Learning Algorithms. arXiv 2017, arXiv:1708.07747.

21. Sharma, P.; Dalin, P.; Mann, I. Towards a Framework for Noctilucent Cloud Analysis. Remote Sens. 2019, 11, 2743.

22. Trosten, D.J.; Sharma, P. Unsupervised Feature Extraction–A CNN-Based Approach. In Image Analysis; Felsberg, M., Forssén, P.E., Sintorn, I.M., Unger, J., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 197–208.

23. Dietterich, T.G.; Bakiri, G. Solving Multiclass Learning Problems via Error-correcting Output Codes. J. Artif. Int. Res. 1995, 2, 263–286.

24. Sejnowski, T.J.; Rosenberg, C.R. Neurocomputing: Foundations of Research; Chapter NETtalk: A Parallel Network That Learns to Read Aloud; MIT Press: Cambridge, MA, USA, 1988; pp. 661–672.

25. Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

26. Bagheri, M.; Montazer, G.A.; Escalera, S. Error correcting output codes for multiclass classification: Application to two image vision problems. In Proceedings of the 16th CSI International Symposium on Artificial Intelligence and Signal Processing (AISP 2012), Fars, Iran, 2–3 May 2012; pp. 508–513.

27. Escalera, S.; Pujol, O.; Radeva, P. Separability of ternary codes for sparse designs of error-correcting output codes. Pattern Recognit. Lett. 2009, 30, 285–297.

28. Ballesteros, G.; Salgado, L. Optimized HOG for on-road video based vehicle verification. In Proceedings of the 2014 22nd European Signal Processing Conference (EUSIPCO), Lisbon, Portugal, 1–5 September 2014; pp. 805–809.

29. Bilgic, B.; Horn, B.K.P.; Masaki, I. Fast human detection with cascaded ensembles on the GPU. In Proceedings of the 2010 IEEE Intelligent Vehicles Symposium, San Diego, CA, USA, 21–24 June 2010; pp. 325–332.

30. Liu, H.; Xu, T.; Wang, X.; Qian, Y. Related HOG Features for Human Detection Using Cascaded Adaboost and SVM Classifiers. In Advances in Multimedia Modeling; Li, S., El Saddik, A., Wang, M., Mei, T., Sebe, N., Yan, S., Hong, R., Gurrin, C., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; pp. 345–355.

| Dataset | Image Size (pixels) | Channels | Samples | Classes |
|---|---|---|---|---|
| Cats and Dogs [19] | 60 × 60 | 3 (RGB) | 8192 | 2 |
| Fashion-MNIST [20] | 28 × 28 | 1 (Gray) | 60,000 | 10 |
| Person [1] | 64 × 128 | 3 (RGB) | 7264 | 2 |
| NLC [21] | 50 × 50 | 3 (RGB) | 24,000 | 4 |

| Colorspace | N | Accuracy (in %) |
|---|---|---|
| RGB | 16 | 94.80 |
| L*a*b* | 16 | 97.07 |
| HSV | 16 | 97.51 |
| De-Corr | 16 | 96.20 |

| Norm | N | Accuracy (in %) |
|---|---|---|
| | 16 | 95.74 |
| | 16 | 95.91 |
| As defined in Equation (4) | 16 | 97.51 |

| Model | N | Feature Vector Size | Database | Accuracy |
|---|---|---|---|---|
| D4 | 8 | 4725 | Cats and Dogs [19] | 67.43% |
| HOG [1] | 8 | 3888 | Cats and Dogs [19] | 68.19% |
| D4 | 16 | 1029 | Cats and Dogs [19] | 69.21% |
| HOG [1] | 16 | 432 | Cats and Dogs [19] | 69.66% |
| D4 + HOG | 16 | 1461 | Cats and Dogs [19] | 73.76% |
| D4 | 16 | 2205 | Person [1] | 97.51% |
| HOG [1] | 16 | 2268 | Person [1] | 96.98% |
| D4 + HOG | 16 | 4473 | Person [1] | 98.09% |
| D4 | 4 | 1183 | Fashion-MNIST [20] | 90.61% |
| HOG [1] | 4 | 1296 | Fashion-MNIST [20] | 90.61% |
| D4 + HOG | 4 | 2479 | Fashion-MNIST [20] | 91.50% |
| D4 | 8 | 3549 | NLC [21] | 89.55% |
| HOG [1] | 8 | 2700 | NLC [21] | 84.11% |
| D4 | 16 | 1029 | NLC [21] | 86.35% |
| HOG [1] | 16 | 432 | NLC [21] | 80.94% |
| D4 + HOG | 16 | 1461 | NLC [21] | 92.63% |

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Sharma, P. Dihedral Group D4—A New Feature Extraction Algorithm. Symmetry 2020, 12, 548. https://doi.org/10.3390/sym12040548
