Abstract

This paper is concerned with the least squares estimation of drift parameters for the Cox–Ingersoll–Ross (CIR) model driven by small symmetrical α-stable noises from discrete observations. A contrast function is introduced to obtain the explicit formula of the estimators, and the estimation error is given. The consistency and the rate of convergence of the estimators are proved. The asymptotic distribution of the estimators is studied as well. Finally, some numerical examples and simulations are given.

1. Introduction

Stochastic differential equations driven by Brownian motion are widely used to model phenomena influenced by stochastic factors, such as molecular thermal motion and short-term interest rates [1,2]. When establishing a pricing formula, the parameters in stochastic models describe the relevant asset dynamics. In most cases, however, these parameters are unknown. Over the past few decades, many authors have studied the parameter estimation problem by maximum likelihood estimation [3,4,5], least squares estimation [6,7,8], and Bayes estimation [9,10]. However, non-Gaussian noise such as α-stable noise can more accurately reflect practical random perturbations. Therefore, stochastic differential equations driven by α-stable noise have been investigated by many authors in recent years. In particular, the parameter estimation problem has been discussed as well [11,12].

The Cox–Ingersoll–Ross (CIR) model [13,14], introduced in 1985, is an extension of the Vasicek model [15]; it is mean-reverting and remains non-negative. The parameter estimation problem for the CIR model has been well studied [16,17]. However, many financial processes exhibit discontinuous sample paths and heavy-tailed properties (e.g., certain moments are infinite). These features cannot be captured by the CIR model driven by Brownian motion. Therefore, it is natural to replace the Brownian motion by an α-stable process. In recent years, parameter estimation problems for the Lévy-type CIR model have been discussed in the literature. For example, Ma and Yang [18] used least squares methods to study the parameter estimation problem for the CIR model driven by α-stable noises. Li and Ma [19] derived the conditional least squares estimators for a stable CIR model. However, the asymptotic distribution of the estimators has not been discussed in the literature. Asymptotic properties of estimators, such as consistency, the asymptotic distribution of estimation errors, and hypothesis tests, reflect the effectiveness of the estimators and estimation methods, which helps to obtain a more reasonable economic model structure and to grasp the dynamics of related assets more accurately. Therefore, it is of great importance to study these topics.

The parameter estimation problem for the discretely observed CIR model with small symmetrical α-stable noises is studied in this article. A contrast function is introduced to obtain the least squares estimators. The consistency and asymptotic distribution of the estimators are derived using the Markov inequality, the Cauchy–Schwarz inequality, and Gronwall’s inequality. Some numerical examples and simulations are given as well.

The structure of this paper is as follows. In Section 2, we introduce the CIR model driven by small symmetrical α-stable noises and obtain the explicit formula of the least squares estimators. In Section 3, the consistency and asymptotic distribution of the estimators are studied. In Section 4, simulation results are presented. The conclusions are given in Section 5.

2. Problem Formulation and Preliminaries

In this paper, the notation “$\xrightarrow{P}$” denotes “convergence in probability” and the notation “⇒” denotes “convergence in distribution”. We write “$\overset{d}{=}$” for equality in distribution.

Let $(\Omega, \mathcal{F}, \mathbb{P})$ be a basic probability space equipped with a right continuous and increasing family of σ-algebras $\{\mathcal{F}_t\}_{t \ge 0}$, and let $Z = \{Z_t\}_{t \ge 0}$ be a strictly symmetric α-stable Lévy motion.

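A random variable $\eta$ is said to have a stable distribution with index of stability $\alpha \in (0, 2]$, scale parameter $\sigma \in (0, \infty)$, skewness parameter $\beta \in [-1, 1]$, and location parameter $\mu \in (-\infty, \infty)$ if it has the following characteristic function:

$$
\phi_\eta(u) = \mathbb{E}\exp\{iu\eta\} =
\begin{cases}
\exp\left\{-\sigma^{\alpha}|u|^{\alpha}\left(1 - i\beta\,\mathrm{sgn}(u)\tan\dfrac{\alpha\pi}{2}\right) + i\mu u\right\}, & \alpha \neq 1,\\[2mm]
\exp\left\{-\sigma|u|\left(1 + i\beta\,\dfrac{2}{\pi}\,\mathrm{sgn}(u)\log|u|\right) + i\mu u\right\}, & \alpha = 1.
\end{cases}
$$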

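We denote $\eta \sim S_\alpha(\sigma, \beta, \mu)$. When $\mu = 0$, we say that $\eta$ is strictly α-stable; if, in addition, $\beta = 0$, we call $\eta$ symmetrical α-stable. Throughout this paper, it is assumed that the α-stable motion is strictly symmetrical and $\alpha \in (1, 2)$.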
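Symmetric α-stable random variables of the kind described above can be sampled with the Chambers–Mallows–Stuck method. The following Python sketch is illustrative only and is not part of the paper's derivation: the function name `sample_symmetric_stable` is hypothetical, and unit scale, zero skewness, and zero location are assumed.

```python
import numpy as np


def sample_symmetric_stable(alpha, size, rng=None):
    """Draw samples from a symmetric alpha-stable law with unit scale.

    Uses the Chambers-Mallows-Stuck representation for skewness 0 and
    location 0 (an assumption of this sketch).
    """
    if not 0 < alpha <= 2:
        raise ValueError("alpha must lie in (0, 2]")
    rng = np.random.default_rng() if rng is None else rng
    v = rng.uniform(-np.pi / 2, np.pi / 2, size)  # uniform angle
    w = rng.exponential(1.0, size)                # unit-mean exponential
    if alpha == 1.0:
        return np.tan(v)                          # Cauchy case
    return (np.sin(alpha * v) / np.cos(v) ** (1 / alpha)
            * (np.cos((1 - alpha) * v) / w) ** ((1 - alpha) / alpha))
```

Two sanity checks follow from the formula: for `alpha = 2` it reduces to a Gaussian variable with variance 2, and for `alpha = 1` it reduces to a standard Cauchy variable.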

In this paper, we study the parametric estimation problem for the Cox–Ingersoll–Ross model driven by small α-stable noises described by the following stochastic differential equation:

where and are unknown parameters. We assume that .

To get the least squares estimators, we introduce the following contrast function:

where . Then, the least squares estimators and are defined as follows:

It is easy to obtain the least squares estimators:
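To illustrate how a quadratic contrast function leads to closed-form estimators, the following Python sketch minimises an unweighted sum of squared one-step residuals. It rests on assumptions that are not taken from the paper: the drift is written as `a - b*x`, the observations are equally spaced with step `dt`, and no weighting is applied, so the paper's contrast function may differ. The name `lse_linear_drift` is hypothetical.

```python
import numpy as np


def lse_linear_drift(x, dt):
    """Minimise sum_i (x[i] - x[i-1] - (a - b*x[i-1])*dt)**2 over (a, b).

    Assumed setting (not the paper's exact contrast function): linear
    drift a - b*x, equally spaced observations, unweighted residuals.
    """
    x = np.asarray(x, dtype=float)
    prev, incr = x[:-1], np.diff(x)
    n = prev.size
    s_x, s_xx = prev.sum(), (prev ** 2).sum()
    s_y, s_xy = incr.sum(), (prev * incr).sum()
    denom = n * s_xx - s_x ** 2
    if denom == 0:
        raise ValueError("constant observations: (a, b) is not identifiable")
    # Normal equations of the regression incr = a*dt - b*dt*prev.
    a_hat = (s_xx * s_y - s_x * s_xy) / (dt * denom)
    b_hat = (s_x * s_y - n * s_xy) / (dt * denom)
    return a_hat, b_hat
```

Because the residual is linear in `(a, b)`, setting the two partial derivatives to zero gives a 2×2 linear system, which is why the estimators are explicit.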

3. Main Results and Proofs

Let be the solution to the underlying ordinary differential equation under the true value of the parameters:
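As a worked illustration only, suppose the drift is the linear mean-reverting function $a - bx$ with $b \neq 0$. The symbols $a$, $b$, $x_0$, and $X_t^0$ are placeholders chosen here and may differ from the paper's notation. Under that assumption, the noise-free equation can be solved in closed form:

```latex
\frac{dX_t^0}{dt} = a - bX_t^0, \quad X_0^0 = x_0
\quad\Longrightarrow\quad
X_t^0 = \frac{a}{b} + \left(x_0 - \frac{a}{b}\right)e^{-bt}.
```

When $b > 0$, this deterministic path converges to the level $a/b$ as $t \to \infty$, which is the mean-reverting behaviour of the model with the noise removed.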

Note that

Then, we can give a more explicit decomposition for and as follows:

Before giving the theorems, we need to establish some preliminary results.

Lemma 1.

[20] Let be a strictly α-stable Lévy process and , where is the family of all real-valued predictable processes on such that for every , Then,

If is symmetric, that is, , then there exists some α-stable Lévy process , such that

Lemma 2.

When, we have

Proof.

Observe that

By using the Cauchy–Schwarz inequality, we find

According to Gronwall’s inequality, we obtain

Then, it follows that

Assume that By the Markov inequality, for any given , when , we have

where C is a constant.

Therefore, it is easy to check that

The proof is complete.□

Remark 1.

In Lemma 2, the following moment inequalities for stable stochastic integrals have been used to obtain the results:

The above moment inequalities for stable stochastic integrals were established in Theorems 3.1 and 3.2 of [21].

Proposition 1.

When, we have

Proof.

Since

it is clear that

According to Lemma 2, when , we have

Therefore, we obtain

The proof is complete.□

Proposition 2.

When, we have

Proof.

Since

it is clear that

According to Lemma 2, when , we have

Therefore, we obtain

The proof is complete.□

In the following theorem, the consistency of the least squares estimators is proved.

Theorem 1.

When and , the least squares estimators and are consistent, namely

Proof.

According to Propositions 1 and 2, it is clear that

When , it can be checked that

and

According to Lemma 2, we have

By the Markov inequality, we have

where is constant and implies that as and .

With the results of Proposition 1 and (16), we have

since

By the Markov inequality, we have

which implies that as and .

According to Lemma 2, when , it is obvious that

Then, we have

Therefore, by (16), (19), and (22), when and , we have

Using the same methods in Theorem 1, it can be easily checked that

Then, according to (16), we have

Together with the results that

when and , we have

The proof is complete.□

Theorem 2.

When and ,

Proof.

According to the explicit decomposition for , it is obvious that

From Lemma 2, when and ,

then, it is easy to check that

Together with (12) and (16), we have

and

since

Using the Markov inequality and Hölder’s inequality, for any given , we have

as and .

Moreover,

where .

Since

it is clear that

It immediately follows that

Then, from (12), (16), and (33), we have

as

According to the above results, it is obvious that

and

Then, we have

The proof is complete.□

4. Simulation

In this experiment, we generate a discrete sample and compute and from the sample. We let and . For every given true value of the parameters , the sample size is denoted by “Size n” and given in the first column of each table. In Table 1, , the size increases from 1000 to 5000. In Table 2, , the size increases from 10,000 to 50,000. Each reported value is the average over ten simulated samples. The tables list the least squares estimator (LSE) of (“”) and (“”), together with the absolute error (AE) and the relative error (RE) of the least squares estimator.
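An experiment of this kind can be sketched in Python as follows. This is a minimal illustration under stated assumptions and not the author's code: the model is taken to be `dX = (a - b*X) dt + eps*sqrt(X) dZ` with a symmetric α-stable driver, the path is generated with an Euler scheme, the estimator is an unweighted least squares fit, and every function name and default parameter value is hypothetical.

```python
import numpy as np


def stable_increments(alpha, n, dt, rng):
    """Symmetric alpha-stable increments over steps of length dt."""
    v = rng.uniform(-np.pi / 2, np.pi / 2, n)
    w = rng.exponential(1.0, n)
    if alpha == 1.0:
        s = np.tan(v)
    else:  # Chambers-Mallows-Stuck formula, skewness 0
        s = (np.sin(alpha * v) / np.cos(v) ** (1 / alpha)
             * (np.cos((1 - alpha) * v) / w) ** ((1 - alpha) / alpha))
    return dt ** (1 / alpha) * s  # self-similar scaling of the increments


def simulate_path(a, b, eps, alpha, x0, T, n, rng):
    """Euler scheme for the assumed model on [0, T] with n steps."""
    dt = T / n
    dz = stable_increments(alpha, n, dt, rng)
    x = np.empty(n + 1)
    x[0] = x0
    for i in range(n):
        # Truncating at zero inside the root is a convenience of this sketch.
        noise = eps * np.sqrt(max(x[i], 0.0)) * dz[i]
        x[i + 1] = x[i] + (a - b * x[i]) * dt + noise
    return x, dt


def estimate(x, dt):
    """Unweighted least squares fit of increments on the previous state."""
    slope, intercept = np.polyfit(x[:-1], np.diff(x), 1)
    return intercept / dt, -slope / dt


def run_experiment(a=2.0, b=1.0, eps=0.001, alpha=1.8, x0=0.5,
                   T=1.0, n=5000, reps=10, seed=0):
    """Average the estimates over `reps` simulated samples."""
    rng = np.random.default_rng(seed)
    est = np.array([estimate(*simulate_path(a, b, eps, alpha, x0, T, n, rng))
                    for _ in range(reps)])
    a_hat, b_hat = est.mean(axis=0)
    return {
        "a_hat": a_hat, "b_hat": b_hat,
        "AE_a": abs(a_hat - a), "AE_b": abs(b_hat - b),
        "RE_a": abs(a_hat - a) / abs(a), "RE_b": abs(b_hat - b) / abs(b),
    }
```

Calling `run_experiment()` for several values of `n` and `eps` gives averaged estimates with their absolute and relative errors, in the same layout as the columns described above.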

Table 1.

Least squares estimator simulation results of and .

Table 2.

Least squares estimator simulation results of and .

The two tables indicate that, for any given true parameter value, the absolute error between the estimator and the true value depends on the sample size. In Table 1, when n = 5000, the relative error of the estimators does not exceed 7%. In Table 2, when n = 50,000, the relative error of the estimators does not exceed 0.2%. The estimators therefore perform well in these experiments.

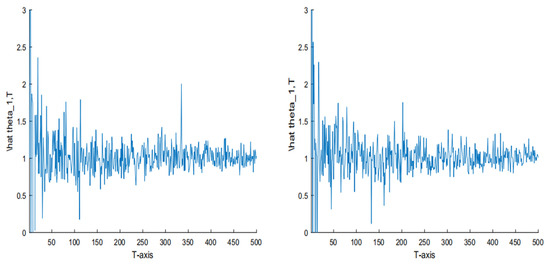

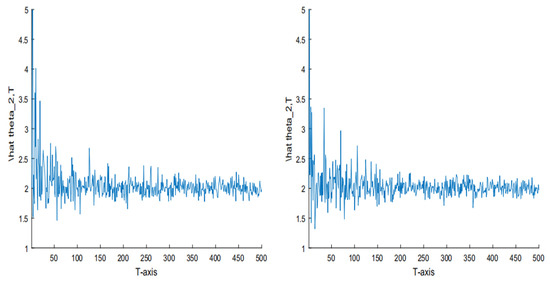

In Figure 1, we let under T = 500, and , respectively. In Figure 2, we let under T = 500, and , respectively. The two figures indicate that when T is fixed and is small, the obtained estimators are closer to the true parameter value than when is large. When T is large enough and is small enough, the obtained estimators are very close to the true parameter value. If we let T tend to infinity and tend to zero, the two estimators converge to the true value.

Figure 1.

The simulation of the estimator with T = 500 and under and , respectively.

Figure 2.

The simulation of the estimator and with T = 500 and under and , respectively.

5. Conclusions

The aim of this paper was to study the parameter estimation problem for the Cox–Ingersoll–Ross model driven by small symmetrical α-stable noises from discrete observations. A contrast function was introduced to obtain the explicit formula of the least squares estimators, and the estimation error was given. The consistency and the rate of convergence of the least squares estimators were proved using the Markov inequality, the Cauchy–Schwarz inequality, and Gronwall’s inequality. The asymptotic distribution of the estimators was discussed as well.

Funding

This research was funded by the National Natural Science Foundation of China under Grant Nos. 61403248 and U1604157.

Conflicts of Interest

The author declares no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

1. Bishwal, J.P.N. Parameter Estimation in Stochastic Differential Equations; Springer: Berlin, Germany, 2008.

2. Protter, P.E. Stochastic Integration and Differential Equations: Stochastic Modelling and Applied Probability, 2nd ed.; Applications of Mathematics (New York) 21; Springer: Berlin, Germany, 2004.

3. Lauritzen, S.; Uhler, C.; Zwiernik, P. Maximum likelihood estimation in Gaussian models under total positivity. Ann. Stat. 2019, 47, 1835–1863.

4. Wen, J.H.; Wang, X.J.; Mao, S.H.; Xiao, X.P. Maximum likelihood estimation of McKean–Vlasov stochastic differential equation and its application. Appl. Math. Comput. 2015, 274, 237–246.

5. Wei, C.; Shu, H.S. Maximum likelihood estimation for the drift parameter in diffusion processes. Stoch. Int. J. Probab. Stoch. Process. 2016, 88, 699–710.

6. Lu, W.; Ke, R. A generalized least squares estimation method for the autoregressive conditional duration model. Stat. Pap. 2019, 60, 123–146.

7. Mendy, I. Parametric estimation for sub-fractional Ornstein-Uhlenbeck process. J. Stat. Plan. Inference 2013, 143, 663–674.

8. Skouras, K. Strong consistency in nonlinear stochastic regression models. Ann. Stat. 2000, 28, 871–879.

9. Deck, T. Asymptotic properties of Bayes estimators for Gaussian Itô processes with noisy observations. J. Multivar. Anal. 2006, 97, 563–573.

10. Kan, X.; Shu, H.S.; Che, Y. Asymptotic parameter estimation for a class of linear stochastic systems using Kalman-Bucy filtering. Math. Probl. Eng. 2012, 2012, 1–12.

11. Long, H.W. Least squares estimator for discretely observed Ornstein-Uhlenbeck processes with small Lévy noises. Stat. Probab. Lett. 2009, 79, 2076–2085.

12. Long, H.W.; Shimizu, Y.; Sun, W. Least squares estimators for discretely observed stochastic processes driven by small Lévy noises. J. Multivar. Anal. 2013, 116, 422–439.

13. Cox, J.; Ingersoll, J.; Ross, S. An intertemporal general equilibrium model of asset prices. Econometrica 1985, 53, 363–384.

14. Cox, J.; Ingersoll, J.; Ross, S. A theory of the term structure of interest rates. Econometrica 1985, 53, 385–408.

15. Vasicek, O. An equilibrium characterization of the term structure. J. Financ. Econ. 1977, 5, 177–186.

16. Bibby, B.; Sørensen, M. Martingale estimation functions for discretely observed diffusion processes. Bernoulli 1995, 1, 17–39.

17. Wei, C.; Shu, H.S.; Liu, Y.R. Gaussian estimation for discretely observed Cox-Ingersoll-Ross model. Int. J. Gen. Syst. 2016, 45, 561–574.

18. Ma, C.H.; Yang, X. Small noise fluctuations of the CIR model driven by α-stable noises. Stat. Probab. Lett. 2014, 94, 1–11.

19. Li, Z.H.; Ma, C.H. Asymptotic properties of estimators in a stable Cox-Ingersoll-Ross model. Stoch. Process. Appl. 2015, 125, 3196–3233.

20. Kallenberg, O. Some time change representations of stable integrals, via predictable transformations of local martingales. Stoch. Process. Appl. 1992, 40, 199–223.

21. Rosinski, J.; Woyczynski, W.A. Moment inequalities for real and vector p-stable stochastic integrals. In Probability in Banach Spaces V; Lecture Notes in Math; Springer: Berlin, Germany, 1985; Volume 1153, pp. 369–386.

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).