Abstract

Many problems in diverse disciplines such as applied mathematics, mathematical biology, chemistry, economics, and engineering, to mention a few, reduce to solving a nonlinear equation or a system of nonlinear equations. Various iterative methods are then used to generate a sequence of approximations converging to a solution of such problems. The goal of this article is two-fold. On the one hand, we present a correct convergence criterion for the Newton–Hermitian and skew-Hermitian splitting (NHSS) method under the Kantorovich theory, since the criterion given in Numer. Linear Algebra Appl., 2011, 18, 299–315 is not correct. Indeed, the radius of convergence cannot be defined under the given criterion, since the discriminant of the quadratic polynomial from which this radius is derived is negative (see Remark 1 and the conclusions of the present article for more details). On the other hand, we extend the corrected convergence criterion using our idea of recurrent functions. Numerical examples involving convection–diffusion equations further validate the theoretical results.

1. Introduction

Numerous problems in computational disciplines can be reduced, using mathematical modelling [1,2,3,4,5,6,7,8,9,10,11], to solving a system of nonlinear equations with n equations in n variables like

$$F(x) = 0.$$

Here, F is a continuously differentiable nonlinear mapping defined on a convex subset $\Omega$ of the n-dimensional complex linear space $\mathbb{C}^n$ into $\mathbb{C}^n$. In general, the corresponding Jacobian matrix is sparse, non-symmetric and positive definite. The solution methods for the nonlinear problem are iterative in nature, since an exact solution can be obtained only in a few special cases. In the rest of the article, some well established and standard results and notations are used to establish our results (see [3,4,5,6,10,11,12,13,14] and the references therein). Some of the best known methods for generating a sequence that approximates a solution of this system are the inexact Newton (IN) methods [1,2,3,5,6,7,8,9,10,11,12,13,14]. The IN algorithm involves the following steps:

Algorithm IN [6]

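- Step 1: Choose initial guess $x_0$, tolerance value $tol$; Set $k = 0$.

- Step 2: While $\|F(x_k)\| > tol \cdot \|F(x_0)\|$, Do

- Choose $\eta_k \in [0, 1)$. Find $d_k$ so that $\|F(x_k) + F'(x_k) d_k\| \le \eta_k \|F(x_k)\|$.

- Set $x_{k+1} = x_k + d_k$; $k = k + 1$.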
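The steps of Algorithm IN can be sketched in Python as follows. This is only an illustration under stated assumptions, not the implementation used in [6]: the inner solver (a minimal residual iteration), the names `inexact_newton`, `F_demo` and `J_demo`, the fixed forcing term `eta`, and the small test system are all hypothetical choices made for this example.

```python
import numpy as np

def inexact_newton(F, J, x0, tol=1e-10, eta=0.1, max_outer=50, max_inner=500):
    """Inexact Newton sketch: accept any step d with
    ||F(x) + J(x) d|| <= eta * ||F(x)|| (eta is kept fixed here)."""
    x = np.asarray(x0, dtype=float)
    f0 = np.linalg.norm(F(x))
    for k in range(max_outer):
        Fx = F(x)
        nFx = np.linalg.norm(Fx)
        if nFx <= tol * f0:          # outer stopping test of Step 2
            return x, k
        A = J(x)
        d = np.zeros_like(x)
        r = -Fx                      # residual of A d = -F(x) at d = 0
        for _ in range(max_inner):
            if np.linalg.norm(r) <= eta * nFx:
                break                # inexact Newton condition satisfied
            # Minimal residual step (assumed inner solver); it reduces ||r||
            # when the symmetric part of A is positive definite.
            Ar = A @ r
            step = (r @ Ar) / (Ar @ Ar)
            d += step * r
            r -= step * Ar
        x = x + d
    return x, max_outer

# Hypothetical test system with solution (1, 1): F(x) = M x + x^3 - b.
M = np.array([[4.0, 1.0], [-1.0, 3.0]])
b = np.array([6.0, 3.0])
F_demo = lambda x: M @ x + x**3 - b
J_demo = lambda x: M + np.diag(3.0 * x**2)
x_in, outer_in = inexact_newton(F_demo, J_demo, np.zeros(2))
```

The inner loop never solves the Newton equation exactly; it stops as soon as the linear residual falls below `eta` times the current nonlinear residual, which is the defining feature of the IN family.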

Furthermore, if A is sparse, non-Hermitian and positive definite, the Hermitian and skew-Hermitian splitting (HSS) algorithm [4] for solving the linear system $Ax = b$ is given by:

Algorithm HSS [4]

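- Step 1: Choose initial guess $x_0$, tolerance value $tol$ and $\alpha > 0$; Set $l = 0$.

- Step 2: Set $H = \frac{1}{2}(A + A^*)$ and $S = \frac{1}{2}(A - A^*)$, where H is the Hermitian and S is the skew-Hermitian part of A.

- Step 3: While $\|b - A x_l\| > tol \cdot \|b - A x_0\|$, Do

- Solve $(\alpha I + H)\, x_{l+1/2} = (\alpha I - S)\, x_l + b$

- Solve $(\alpha I + S)\, x_{l+1} = (\alpha I - H)\, x_{l+1/2} + b$

- Set $l = l + 1$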
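A minimal dense sketch of the HSS iteration for a linear system $Ax = b$ is given below. It assumes the standard two half-step form of the method from [4]; the function name `hss_solve`, the zero initial guess, the test matrix and the value of `alpha` are illustrative assumptions, and the two shifted systems are solved with a direct dense solver purely for brevity.

```python
import numpy as np

def hss_solve(A, b, alpha, tol=1e-10, max_iter=500):
    """HSS sketch: alternate between the shifted Hermitian and
    shifted skew-Hermitian systems until the relative residual is small."""
    A = np.asarray(A, dtype=complex)
    b = np.asarray(b, dtype=complex)
    n = A.shape[0]
    I = np.eye(n)
    H = (A + A.conj().T) / 2         # Hermitian part of A
    S = (A - A.conj().T) / 2         # skew-Hermitian part of A
    x = np.zeros(n, dtype=complex)   # initial guess (assumed zero)
    r0 = np.linalg.norm(b - A @ x)
    for l in range(max_iter):
        if np.linalg.norm(b - A @ x) <= tol * r0:
            return x, l
        x_half = np.linalg.solve(alpha * I + H, (alpha * I - S) @ x + b)
        x = np.linalg.solve(alpha * I + S, (alpha * I - H) @ x_half + b)
    return x, max_iter

# Hypothetical non-symmetric matrix whose Hermitian part diag(4, 3) is positive definite.
A_demo = np.array([[4.0, 1.0], [-1.0, 3.0]])
b_demo = np.array([6.0, 3.0])
x_hss, its_hss = hss_solve(A_demo, b_demo, alpha=3.5)
```

In practice the attraction of the splitting is that each half-step involves a matrix with favourable structure (Hermitian positive definite, or a shifted skew-Hermitian matrix), so specialised solvers can replace the dense `np.linalg.solve` calls used here.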

The Newton–HSS algorithm [5] combines the IN and HSS methods for the solution of large nonlinear systems of equations with positive definite Jacobian matrices. The algorithm is as follows:

Algorithm NHSS (The Newton–HSS method [5])

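- Step 1: Choose initial guess $x_0$, positive constants $\alpha$ and $tol$; Set $k = 0$.

- Step 2: While $\|F(x_k)\| > tol \cdot \|F(x_0)\|$

- Compute Jacobian $J_k = F'(x_k)$.

- Set $H_k(x_k) = \frac{1}{2}(J_k + J_k^*)$ and $S_k(x_k) = \frac{1}{2}(J_k - J_k^*)$, (2) where $H_k$ is the Hermitian and $S_k$ is the skew-Hermitian part of $J_k$.

- Set $d_{k,0} = 0$; $l = 0$.

- While $\|F(x_k) + J_k d_{k,l}\| \ge \eta_k \|F(x_k)\|$ ($\eta_k \in [0, 1)$) Do {

- Solve sequentially: $(\alpha I + H_k)\, d_{k,l+1/2} = (\alpha I - S_k)\, d_{k,l} - F(x_k)$ and $(\alpha I + S_k)\, d_{k,l+1} = (\alpha I - H_k)\, d_{k,l+1/2} - F(x_k)$.

- Set $l = l + 1$ }

- Set $x_{k+1} = x_k + d_{k,l}$; $k = k + 1$.

- Compute $J_k$, $H_k$ and $S_k$ for the new $x_k$.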
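The outer Newton loop and the inner HSS loop of Algorithm NHSS can be combined in a short Python sketch. This is an illustration under stated assumptions rather than the authors' code: it is restricted to real systems, keeps the forcing term `eta` fixed instead of letting it vary with k, solves the shifted systems densely, and uses a hypothetical two-variable test problem. The names `newton_hss`, `F_demo` and `J_demo` are invented for this example.

```python
import numpy as np

def newton_hss(F, J, x0, alpha, tol=1e-10, eta=0.1, max_outer=50, max_inner=200):
    """Newton-HSS sketch for real systems: the Newton equation
    J(x_k) d = -F(x_k) is solved approximately by HSS sweeps."""
    x = np.asarray(x0, dtype=float)
    I = np.eye(x.size)
    f0 = np.linalg.norm(F(x))
    inner_total = 0
    for k in range(max_outer):
        Fx = F(x)
        nFx = np.linalg.norm(Fx)
        if nFx <= tol * f0:                  # outer stopping test
            return x, k, inner_total
        Jk = J(x)
        H = (Jk + Jk.T) / 2                  # symmetric part of the Jacobian
        S = (Jk - Jk.T) / 2                  # skew-symmetric part
        d = np.zeros_like(x)                 # d_{k,0} = 0
        for _ in range(max_inner):
            if np.linalg.norm(Fx + Jk @ d) < eta * nFx:
                break                        # inner stopping test
            d_half = np.linalg.solve(alpha * I + H, (alpha * I - S) @ d - Fx)
            d = np.linalg.solve(alpha * I + S, (alpha * I - H) @ d_half - Fx)
            inner_total += 1
        x = x + d
    return x, max_outer, inner_total

# Hypothetical test system with solution (1, 1); its Jacobian has a
# positive definite symmetric part for every x.
M = np.array([[4.0, 1.0], [-1.0, 3.0]])
b = np.array([6.0, 3.0])
F_demo = lambda x: M @ x + x**3 - b
J_demo = lambda x: M + np.diag(3.0 * x**2)
x_nhss, outer_nhss, inner_nhss = newton_hss(F_demo, J_demo, np.zeros(2), alpha=4.0)
```

The function returns the outer and total inner iteration counts separately, mirroring the way the numerical section reports inner iterations, outer iterations and CPU time.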

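Please note that $\eta_k$ varies in each iterative step, unlike the fixed positive constant used in [5]. Further observe that if $d_{k,l_k}$ in (6), the inner iterate obtained after $l_k$ HSS steps, is given in terms of $d_{k,0}$, we get

$$d_{k,l_k} = -(I - T_k^{l_k})(I - T_k)^{-1} B_k^{-1} F(x_k),$$

where $T_k := T(\alpha, x_k)$, $B_k := B(\alpha, x_k)$ and

$$T(\alpha, x) = B(\alpha, x)^{-1} C(\alpha, x), \quad B(\alpha, x) = \frac{1}{2\alpha}(\alpha I + H(x))(\alpha I + S(x)), \quad C(\alpha, x) = \frac{1}{2\alpha}(\alpha I - H(x))(\alpha I - S(x)).$$

Using the above expressions for $T_k$ and $d_{k,l_k}$, together with $F'(x_k) = B_k - C_k = B_k (I - T_k)$, we can write the Newton–HSS in (6) as

$$x_{k+1} = x_k - (I - T_k^{l_k}) F'(x_k)^{-1} F(x_k).$$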

A Kantorovich-type semi-local convergence analysis was presented in [7] for NHSS. However, there are shortcomings:

- (i) The semi-local sufficient convergence criterion provided in (15) of [7] is false. The details are given in Remark 1. Accordingly, Theorem 3.2 in [7], as well as all subsequent results based on (15) in [7], are inaccurate. Further, the upper bound function (to be defined later) on the norm of the initial point is not the best that can be used under the conditions given in [7].

- (ii) The convergence domain of NHSS is small in general, even if we use the corrected sufficient convergence criterion (12). That is why, using our technique of recurrent functions, we present a new semi-local convergence criterion for NHSS, which improves the corrected convergence criterion (12) (see also Section 3 and Section 4, Example 4.4).

- (iii) Example 4.5, taken from [7], is provided to show, as in [7], that convergence can be attained even if these criteria are not checked or not satisfied, since these criteria are not necessary. The convergence criteria presented here are only sufficient.

Moreover, to avoid repetition, we refer the reader to [3,4,5,6,7,8,9,10,11,13,14] and the references therein for the importance of these methods for solving large systems of equations.

The rest of the note is organized as follows. Section 2 contains the semi-local convergence analysis of NHSS under the Kantorovich theory. In Section 3, we present the semi-local convergence analysis using our idea of recurrent functions. Numerical examples are discussed in Section 4. The article ends with a few concluding remarks.

2. Semi-Local Convergence Analysis

To make the paper as self-contained as possible we present some results from [3] (see also [7]). The semi-local convergence of NHSS is based on the conditions (). Let and be differentiable on an open neighborhood on which is continuous and positive definite. Suppose where and are as in (2) with

- 1)

- There exist positive constants and such that

- 2)

- There exist nonnegative constants and such that for all

Next, we present the corrected version of Theorem 3.2 in [7].

Theorem 1.

Assume that conditions () hold with the constants satisfying

where with

and with satisfying (Here represents the largest integer less than or equal to the corresponding real number) and

Then, the iteration sequence generated by Algorithm NHSS is well defined and converges to so that

Proof.

We simply follow the proof of Theorem 3.2 in [7] but use the correct function instead of the incorrect function defined in the following remark. □

Remark 1.

The corresponding result in [7] used the function bound

instead of in (12) (see the bottom of the first page of the proof of Theorem 3.2 in [7]), i.e., the inequality they considered is

However, condition (16) does not necessarily imply which means that does not necessarily exist (see (13) where is needed) and the proof of Theorem 3.2 in [7] breaks down. As an example, choose then and for we have Notice that our condition (12) is equivalent to Hence, our version of Theorem 3.2 is correct. Notice also that

so (12) implies (16) but not necessarily vice versa.

3. Semi-Local Convergence Analysis II

We need to define some parameters and a sequence needed for the semi-local convergence of NHSS using recurrent functions.

Let be positive constants and Then, there exists such that Set Define parameters and by

and

Moreover, define scalar sequence by

We need to show the following auxiliary result of majorizing sequences for NHSS using the aforementioned notation.

Lemma 1.

Proof.

Estimate (27) holds true for by the initial data and since it reduces to showing which is true by (20). Then, by (21) and (27), we have

and

Suppose that (26),

and

hold true. Next, we shall show that they are true for k replaced by It suffices to show that

or

or

or

(since ) or

Estimate (30) motivates us to introduce recurrent functions defined on the interval by

Then, we must show instead of (30) that

We need a relationship between two consecutive functions

where

Then, since

it suffices to show

instead of (32). We get by (31) that

so, we must show that

which reduces to showing that

which is true by (22). Hence, the induction for (26), (28) and (29) is completed. It follows that sequence is nondecreasing, bounded above by and as such it converges to its unique least upper bound which satisfies (25). □

We need the following result.

Lemma 2

([14]). Suppose that conditions () hold. Then, the following assertions also hold:

- (i)

- (ii)

- (iii)

- If then is nonsingular and satisfieswhere

Next, we show how to improve Lemma 2 and the rest of the results in [3,7]. Notice that it follows from (i) in Lemma 2 that there exists such that

We have that

holds true and can be arbitrarily large [2,12]. Then, we have the following improvement of Lemma 2.

Lemma 3.

Suppose that conditions () hold. Then, the following assertions also hold:

- (i)

- (ii)

- (iii)

- If then is nonsingular and satisfies

Proof.

(ii) We have

(iii)

It follows from the Banach lemma on invertible operators [1] that is nonsingular, so that (44) holds. □

Remark 2.

The new estimates (ii) and (iii) are more precise than the corresponding ones in Lemma 2, if

Next, we present the semi-local convergence of NHSS using the majorizing sequence introduced in Lemma 1.

Theorem 2.

Assume that conditions (), (22) and (23) hold. Let with

and is as in Lemma 1 and with satisfying (Here represents the largest integer less than or equal to the corresponding real number) and

Then, the sequence generated by Algorithm NHSS is well defined and converges to so that

Proof.

If we follow the proof of Theorem 3.2 in [3,7] but use (44) instead of (41) for the upper bound on the norms we arrive at

where

so by (21)

We also have that It follows from Lemma 1 and (49) that sequence is a Cauchy sequence in a Banach space and as such it converges to some (since is a closed set).

However, [4] and NHSS, we deduce that □

Remark 3.

- (a) The point can be replaced by (given in closed form by (24)) in Theorem 2.

- (b) Suppose there exist nonnegative constants such that for all and Set Define Replace condition () by () There exist nonnegative constants and such that for all Set Notice that since Denote the conditions () and () by (). Then, clearly the results of Theorem 2 hold with conditions (), replacing conditions (), and respectively (since the iterates remain in which is a more precise location than ). Moreover, the results can be improved even further, if we use the more accurate set containing iterates defined by Denote corresponding to constant by and corresponding conditions to () by (). Notice that (see also the numerical examples) In view of (50), the results of Theorem 2 are improved at the same computational cost.

- (c) The same improvements as in (b) can be made in the case of Theorem 1.

The majorizing sequence in [3,7] is defined by

Next, we show that our sequence is tighter than

Proposition 1.

Under the conditions of Theorems 1 and 2, the following items hold

- (i)

- (ii)

- and

- (iii)

Remark 4.

Majorizing sequences using or are even tighter than sequence .

4. Special Cases and Numerical Examples

Example 1.

The semi-local convergence of inexact Newton methods was presented in [14] under the conditions

and

where

More recently, Shen and Li [11] substituted with where

Estimate (22) can be replaced by a stronger one but directly comparable to (20). Indeed, let us define a scalar sequence (less tight than ) by

where Moreover, define recurrent functions on the interval by

and function Set Moreover, define function on the interval by

Then, following the proof of Lemma 1, we obtain:

Lemma 4.

Let be positive constants and Suppose that

Then, sequence defined by (52) is nondecreasing, bounded from above by

and converges to its unique least upper bound which satisfies

Proposition 2.

Suppose that conditions () and (54) hold with Then, sequence generated by algorithm NHSS is well defined and converges to which satisfies

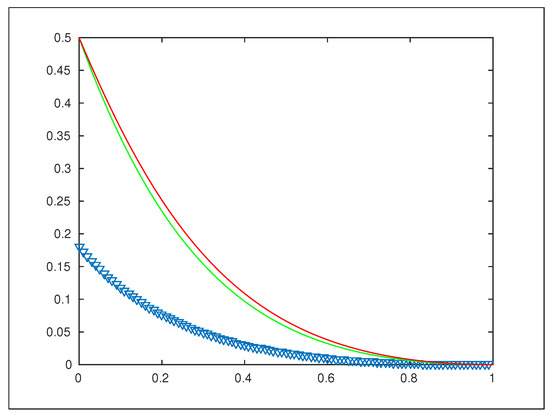

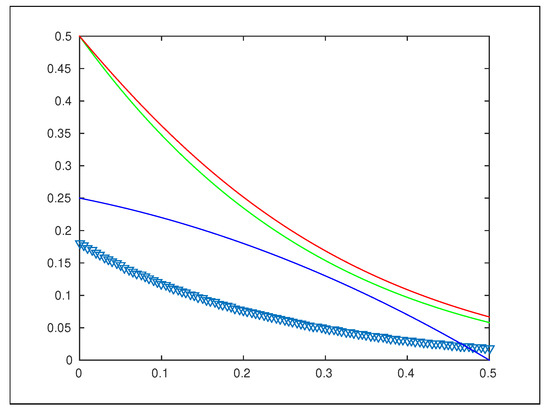

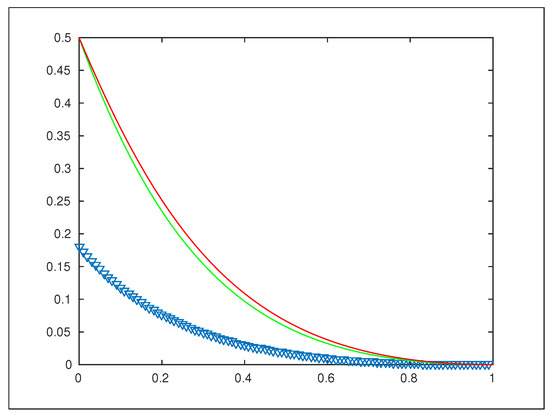

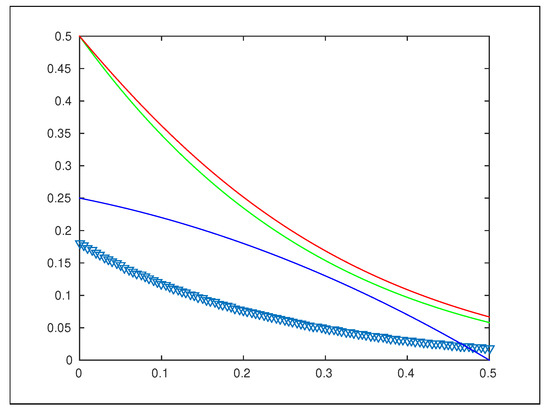

These bound functions are used to obtain semi-local convergence results for the Newton–HSS method as a subclass of these techniques. In Figure 1 and Figure 2, we can see the graphs of the four bound functions and Clearly our bound function improves all the earlier results. Moreover, as noted before, function cannot be used, since it is an incorrect bound function.

Figure 1.

Graphs of (Violet), (Green), (Red).

Figure 2.

Graphs of (Violet), (Green), (Red) and (Blue).

Example 2.

Let Define function F on Ω by

The next example is used for the reason already mentioned in (iii) of the introduction.

Example 3.

Consider the two-dimensional nonlinear convection–diffusion equation [7]

where and is the boundary of Here is a constant to control the magnitude of the convection terms (see [7,15,16]). As in [7], we use classical five-point finite difference scheme with second order central difference for both convection and diffusion terms. If N defines number of interior nodes along one co-ordinate direction, then and denotes the equidistant step-size and the mesh Reynolds number, respectively. Applying the above scheme to (56), we obtain the following system of nonlinear equations:

where the coefficient matrix is given by

Here, ⊗ is the Kronecker product, and are the tridiagonal matrices

In our computations, N is chosen as 99 so that the total number of nodes are . We use as in [7] and we consider two choices for i.e., and for all k.
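The discretized system of this example can be assembled along the following lines. This is a sketch under explicit assumptions, since the displayed equations are not reproduced here: it assumes the model problem $-(u_{xx} + u_{yy}) + q(u_x + u_y) = -e^u$ on the unit square with homogeneous Dirichlet data, step size $h = 1/(N+1)$, mesh Reynolds number $Re = qh/2$, and a symmetric split of the diagonal between the two tridiagonal factors. The name `convection_diffusion_system` is hypothetical, and dense matrices are used only for readability; for N = 99 a sparse format would be needed.

```python
import numpy as np

def convection_diffusion_system(N, q):
    """Five-point central-difference discretization (assumed form):
    F(u) = M u + h^2 exp(u), with M = T (x) I + I (x) T."""
    h = 1.0 / (N + 1)                # equidistant step size
    Re = q * h / 2.0                 # mesh Reynolds number
    # Tridiagonal factor: diffusion gives (-1, 2, -1), convection adds -/+ Re.
    T = (np.diag(2.0 * np.ones(N))
         + np.diag((-1.0 - Re) * np.ones(N - 1), k=-1)
         + np.diag((-1.0 + Re) * np.ones(N - 1), k=1))
    I = np.eye(N)
    M = np.kron(T, I) + np.kron(I, T)    # Kronecker-sum coefficient matrix
    F = lambda u: M @ u + h**2 * np.exp(u)
    J = lambda u: M + h**2 * np.diag(np.exp(u))
    return M, F, J

# Small hypothetical instance: N = 4 interior nodes per direction, q = 10.
M_cd, F_cd, J_cd = convection_diffusion_system(4, 10.0)
```

With this construction the symmetric part of `M_cd` is the standard discrete Laplacian, which is positive definite, while the convection term contributes only to the skew-symmetric part; this is the structure that the HSS inner iteration relies on.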

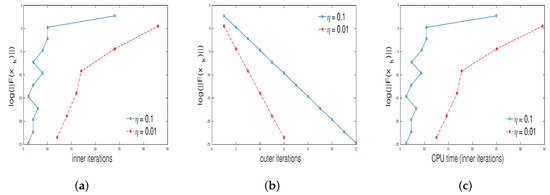

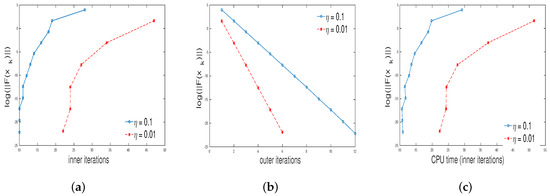

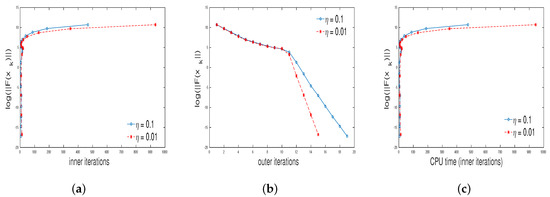

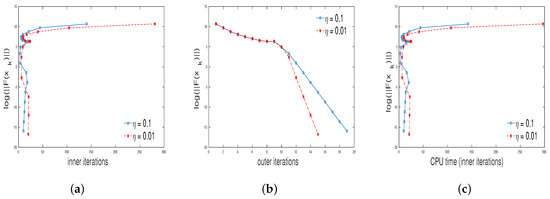

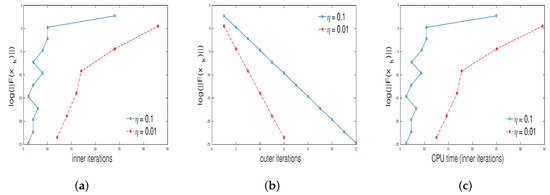

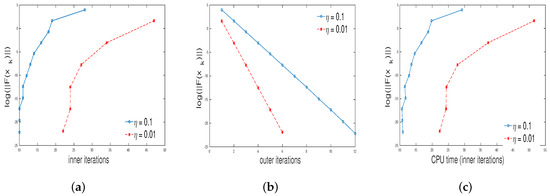

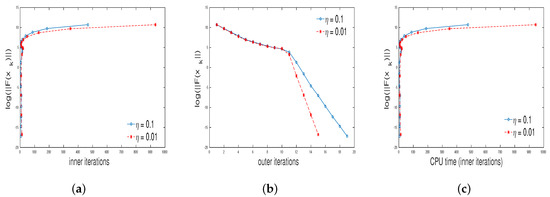

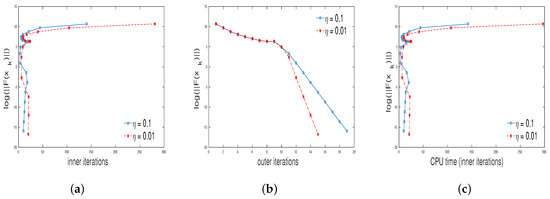

The results of our computations are given in Figure 3, Figure 4, Figure 5 and Figure 6. The total number of inner iterations is denoted by , the total number of outer iterations is denoted by and the total CPU time is denoted by t.

Figure 3.

Plots of (a) inner iterations vs. , (b) outer iterations vs. , (c) CPU time vs. for and .

Figure 4.

Plots of (a) inner iterations vs. , (b) outer iterations vs. , (c) CPU time vs. for and .

Figure 5.

Plots of (a) inner iterations vs. , (b) outer iterations vs. , (c) CPU time vs. for and .

Figure 6.

Plots of (a) inner iterations vs. , (b) outer iterations vs. , (c) CPU time vs. for and .

5. Conclusions

A major problem for iterative methods is that the convergence domain is small in general, which limits the applicability of these methods. This is true, in particular, for the Newton–Hermitian and skew-Hermitian splitting methods and their variants, such as NHSS and other related methods [4,5,6,11,13,14]. Motivated by the work in [7] (see also [4,5,6,11,13,14]) we:

- (a)

- (b)

- The sufficient convergence criterion (16) given in [7] is false. Therefore, the rest of the results based on (16) do not hold. We have revisited the proofs to rectify this problem. Fortunately, the results hold if (16) is replaced with (12). This can easily be observed in the proof of Theorem 3.2 in [7]. Notice that the issue related to criterion (16) does not show up in Example 4.5, where convergence is established because the validity of (16) is not checked. The convergence criteria obtained here are sufficient but not necessary. Along the same lines, our technique in Section 3 can be used to extend the applicability of other iterative methods discussed in [1,2,3,4,5,6,8,9,12,13,14,15,16].

Author Contributions

Conceptualization: I.K.A., S.G.; Editing: S.G., C.G.; Data curation: C.G. and A.A.M.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Argyros, I.K.; Szidarovszky, F. The Theory and Applications of Iteration Methods; CRC Press: Boca Raton, FL, USA, 1993.

- Argyros, I.K.; Magreñán, A.A. A Contemporary Study of Iterative Methods; Elsevier (Academic Press): New York, NY, USA, 2018.

- Argyros, I.K.; George, S. Local convergence for an almost sixth order method for solving equations under weak conditions. SeMA J. 2018, 75, 163–171.

- Bai, Z.Z.; Golub, G.H.; Ng, M.K. Hermitian and skew-Hermitian splitting methods for non-Hermitian positive definite linear systems. SIAM J. Matrix Anal. Appl. 2003, 24, 603–626.

- Bai, Z.Z.; Guo, X.P. The Newton-HSS methods for systems of nonlinear equations with positive-definite Jacobian matrices. J. Comput. Math. 2010, 28, 235–260.

- Dembo, R.S.; Eisenstat, S.C.; Steihaug, T. Inexact Newton methods. SIAM J. Numer. Anal. 1982, 19, 400–408.

- Guo, X.P.; Duff, I.S. Semi-local and global convergence of the Newton-HSS method for systems of nonlinear equations. Numer. Linear Algebra Appl. 2011, 18, 299–315.

- Magreñán, A.A. Different anomalies in a Jarratt family of iterative root finding methods. Appl. Math. Comput. 2014, 233, 29–38.

- Magreñán, A.A. A new tool to study real dynamics: The convergence plane. Appl. Math. Comput. 2014, 248, 29–38.

- Ortega, J.M.; Rheinboldt, W.C. Iterative Solution of Nonlinear Equations in Several Variables; Academic Press: New York, NY, USA, 1970.

- Shen, W.P.; Li, C. Kantorovich-type convergence criterion for inexact Newton methods. Appl. Numer. Math. 2009, 59, 1599–1611.

- Argyros, I.K. Local convergence of inexact Newton-like-iterative methods and applications. Comput. Math. Appl. 2000, 39, 69–75.

- Eisenstat, S.C.; Walker, H.F. Choosing the forcing terms in an inexact Newton method. SIAM J. Sci. Comput. 1996, 17, 16–32.

- Guo, X.P. On semilocal convergence of inexact Newton methods. J. Comput. Math. 2007, 25, 231–242.

- Axelsson, O.; Carey, G.F. On the numerical solution of two-point singularly perturbed value problems. Comput. Methods Appl. Mech. Eng. 1985, 50, 217–229.

- Axelsson, O.; Nikolova, M. Avoiding slave points in an adaptive refinement procedure for convection-diffusion problems in 2D. Computing 1998, 61, 331–357.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).