1. Introduction

Underwater wireless sensor networks (UWSN) are often used in environment monitoring where they review how human activities affect marine ecosystems, undersea explorations such as detecting oilfields, for disaster prevention, e.g., when monitoring ocean currents, in assisted navigation for the location of dangerous rocks in shallow waters, or for disturbed tactical surveillance for intrusion detection.

The fact that such wireless sensor networks are deployed underwater results in profound differences from terrestrial wireless sensor networks. The key aspects that are different include the communication method, i.e., radio waves vs. acoustic signals, cost (while terrestrial networks experience decreasing prices of components, underwater sensors are still expensive devices), memory capacity (because water is a problematic medium resulting in the loss of large quantities of data), power limitations due to the nature of the signal and longer distances handled, as well as problems related to the deployment of the network, i.e., issues connected to static or dynamic deployment. In underwater sensor networks, we commonly face challenges of limited bandwith, high bit error rates, large propagation delays, and limited battery resources caused by the fact that in an underwater environment, sensor batteries are impossible to recharge especially because no solar energy is available underwater. The power losses, which cannot be avoided, result in the need to reconfigure the network topology in order to maintain network connectivity and communication between sensor nodes. Thus, size of the UWSN coverage area and efficiency of data aggregation are affected. Obviously, efficiency in battery use influences network lifetime without sacrificing system performances. These differences are shown in

Table 1.

We use different protocols for discovering and maintaining routes between sensor nodes. As mentioned in Novák, Křehlík, and Ovaliadis [

1], the most commonly used routing protocols are: Flooding, multipath, cluster, and miscellaneous protocols, see Wahid and Dongkyun [

2]. In the flooding approach, the transmitters send a packet to all nodes within the transmission range. In the multipath approach, source sensor nodes establish more than one path towards sink nodes on the surface. Finally, in the clustering approach the sensor nodes are grouped together in a cluster. For an easy-to-follow reading on how UWSN’s work and on advantages of clustering see Domingo and Prior [

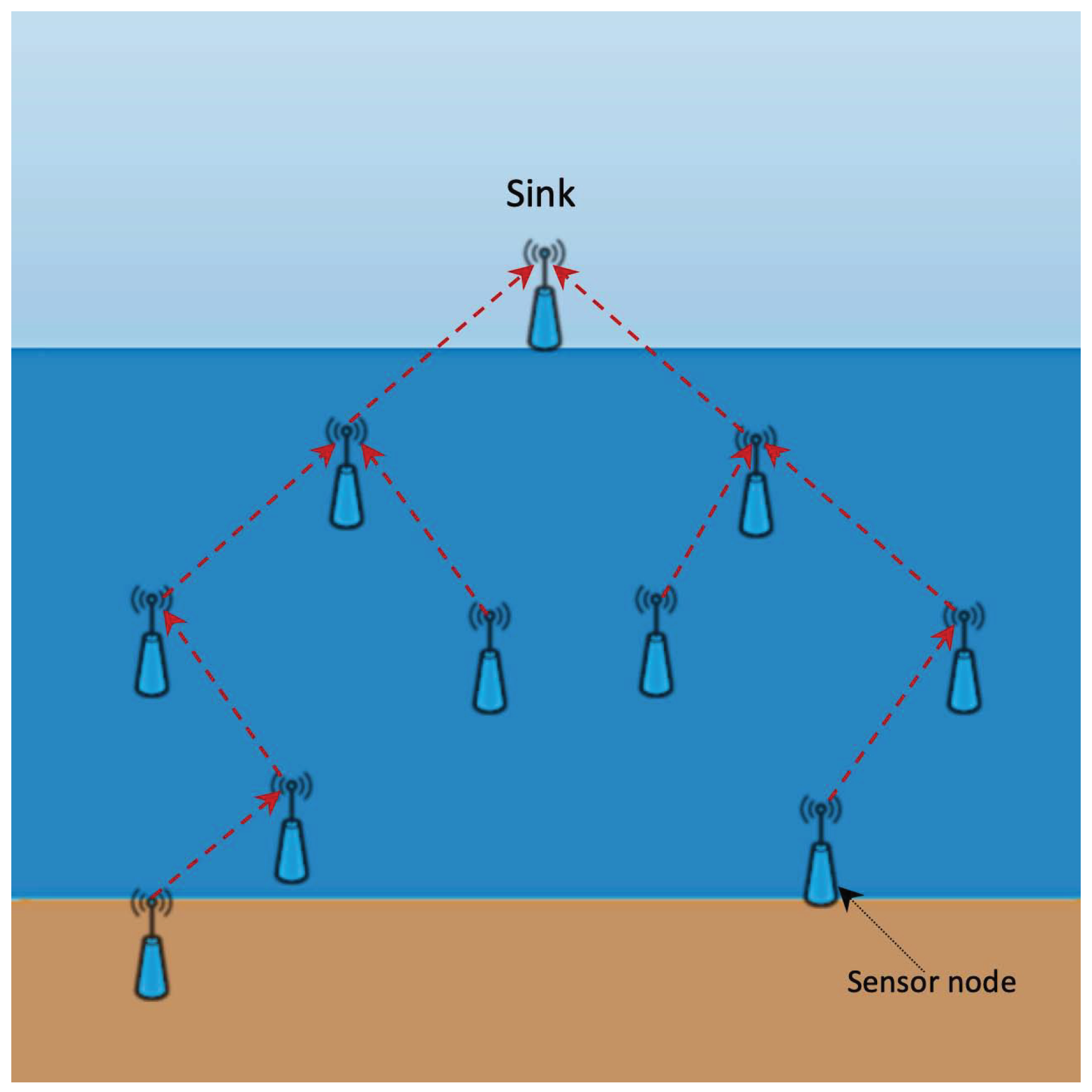

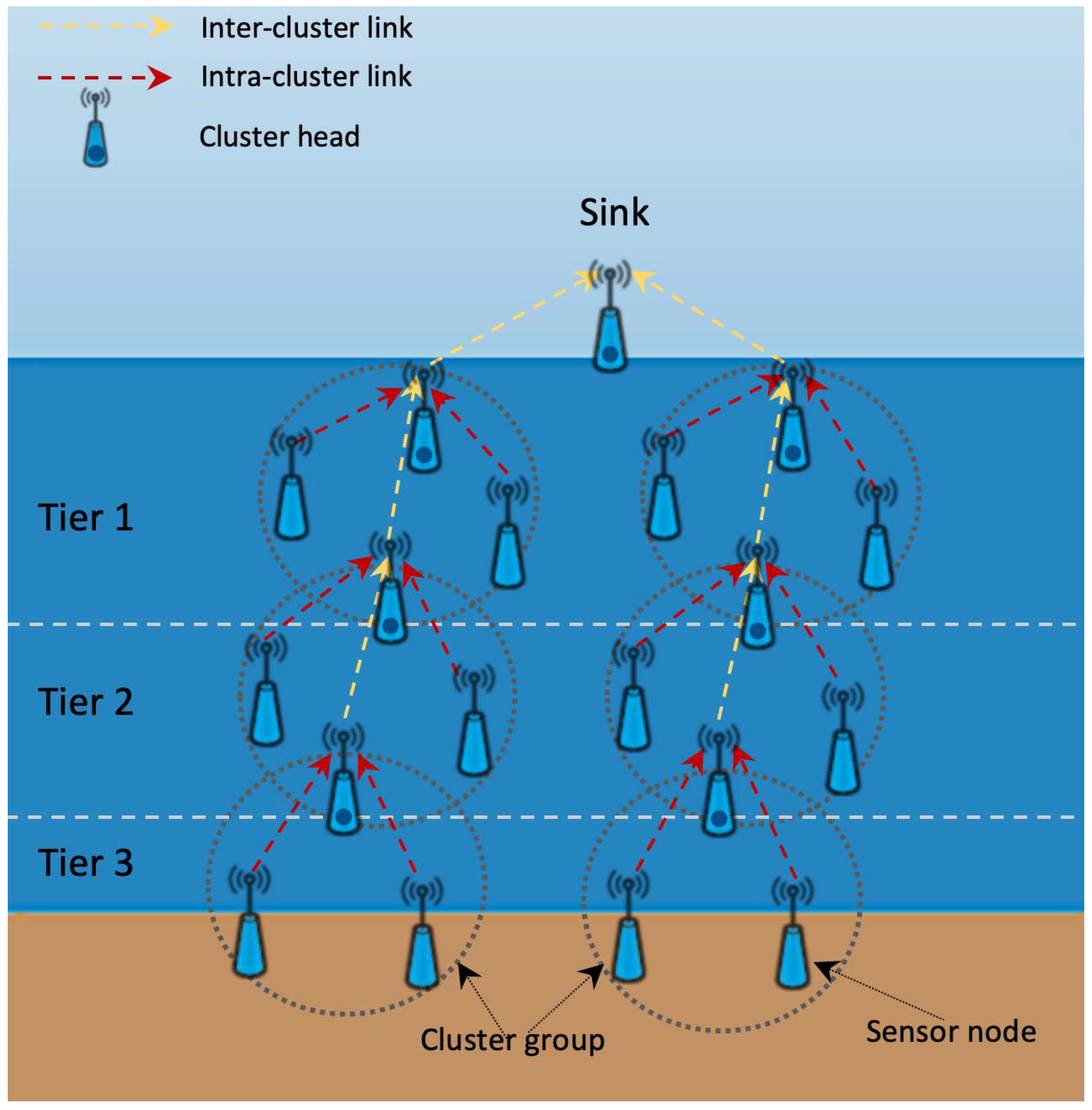

3], the basic idea is shown in

Figure 1 and

Figure 2.

Recent research shows that the cluster based protocols give a great contribution towards the concept of energy efficient networks, see Ayaz et al. [

4], Ovaliadis and Savage [

5], or Rault, Abdelmadjid, and Yacine [

6]. A common cluster based network consists of a centralized station deployed at the surface of the sea called a sink (or surface station) and sensor nodes deployed at various tiers inside the sea environment. These are grouped into clusters. In this architecture, each cluster has a head sensor node called a cluster head (

). The cluster head is assumed to be inside the transmission range of all sensor nodes that belong to its cluster. Every cluster head operates as a coordinator for its cluster, performing significant tasks such as cluster maintenance, transmission arrangements, data aggregation, and data routing (

Figure 2).

Mathematical Background of the Model

In the UWSN topology, several aspects are important for successful data aggregation. First of all, there must exist a path linking every element of the network to the surface station. However, these paths need not be unique as there might be multiple possible paths which the data from a given element can use to reach the surface station. Second, there always exists a cetain kind of ordering of the set of the network elements. They can be ordered with respect to their physical depth, with respect to their importance, with respect to communication priority, remaining battery power, etc. Finally, as data are collected, they are combined in the "upwards" elements in order to be sent further on.

Thus one may employ techniques of algebra or graph theory in the description of the data aggregation process as has been recently done by Aboyamita et al., Domingo, or Jiang et al. [

7,

8,

9]. However, given the multivalued nature of data aggregation (multiple paths, more than one possible links of elements, etc.), it seems relevant to make use of the elements of the algebraic hyperstructure theory. Notice that while in "classical" algebra, we regard operations, i.e., mappings

, in the algebraic hyperstructure theory we work with hyperoperations, i.e., mappings

, where

is the power set of

H with ∅ excluded (one need not consider this exclusion though). For the general introduction to the theory as well as definitions of concepts not explicitly defined further on, see Corsini and Leoreanu [

10].

In the algebraic hyperstructure theory, there are several concepts which make use of the aspect of ordering. A small selection includes Comer, Corsini, Cristea, De Salvo et al. [

11,

12,

13,

14,

15]. Further on we discuss three of these:

–hyperstructures, quasi-order hypergroups, and ordered hyperstructures. Each of these concepts uses somewhat different background and assumptions:

–hyperstructures are constructed from pre- and partially-ordered semigroups, i.e., the hyperoperation is defined using an operation and a relation compatible with it;

Quasi-order hypergroups are constructed from pre-ordered sets, i.e., the hyperoperation is defined using a relation only;

Ordered hyperstructures are algebraic hyperstructures on which a relation compatible with the hyperoperation is defined.

All of these have been studied in depth and numerous results have been achieved in their respective theories. The idea of

–hyperstructures has been implicitely present in a number of works since at least the 1960s, for example Pickett [

16]. The definition and first results were given by Chvalina [

17] and the theory has been elaborated by Novák (later jointly with Chvalina, Křehlík, and Cristea) in a series of papers including [

18,

19,

20,

21,

22]. It is to be noted that, since the class of

–hyperstructures is rather broad, the aim of many theorems included in some of those papers was to establish a common ground for some already existing ad hoc derived results. Recently, some examples concerning various types of cyclicity in hypergroups have been constructed using

–hyperstructures, see Novák, Křehlík and Cristea [

23].

The idea of quasi-order hypergroups was proposed by Chvalina in [

17,

24,

25]. Some results achieved with the help of this concept are included in Corsini and Leoreanu [

10]. Not to be missed are results concerning the theory of automata collected in Chvalina and Chvalinová [

25]. It should be stressed that these results were motivated by Comer [

26] and Massouros and Mittas [

27].

Ordered hyperstructures were introduced by Heidari and Davvaz [

28]. Numerous results have been published since, mainly by Iranian authors.

For the following set of basic definitions see Novák, Křehlík, and Ovaliadis [

1].

Definition 1. By an –semihypergroup we mean a semihypergroup, in which, for all , there is , where is a quasi-ordered semigroup.

Proposition 1. [20,22] If, for all , there is , then the –semihypergroup is a hypergroup. If is a partially ordered group, then its –hypergroup is a join space. Definition 2. Let be a hypergroupoid. We say that H is a quasi-order hypergroup, i.e., a hypergroup determined by a quasi-order, if, for all , , and . Moreover, if holds for all , then is called an order hypergroup.

Proposition 2. [10] A hypergroupoid is a quasi-order hypergroup if and only if there exists a quasi-order "≤" on the set H such that, for all , there is Definition 3. An ordered semihypergroup is a semihypergroup together with a partial ordering "⪯" which is compatible with the hyperoperation, i.e., for all . By we mean that for every there exists such that .

Notation. Further on, for some , by means the set . For this reason, closed intervals will not be denoted by but by .

2. Mathematical Model

The mathematical model presented in this section was published as an extended abstract of the conference contribution Novák, Ovaliadis, and Křehlík [1] presented by the authors of this paper at International Conference on Numerical Analysis and Applied Mathematics (ICNAAM 2017). UWSNs consist of elements of different types: First, we have surface stations, which pass data to a ship or to a data-collecting station located on the sea shore; second, we have sensor nodes deployed at various tiers in water or at the sea bed. The sensors, which are deployed in water, can function as sensors measuring the requested data or as transporters of information from seabed sensors. In any case, information collected from all sensors must be passed to surface stations. From these it can be collected either by a ship passing by or, alternatively, transmitted to a data-collecting station located on the sea shore. The ship or the data-collecting stations are central nodes.

Denote

H the set of all elements of an arbitrary UWSN. Suppose that all elements are capable of handling (i.e., receiving or transmitting) data in the same way. Also suppose that they perform the same set of tasks. Thus they are, from the mathematical point of view, interchangeable and equal (of course, with respect to their functionality as sinks and sensor nodes). The aim of the system is to collect information. Therefore, our elements of

H must communicate data. This should be done ideally upwards, towards the surface. As we have mentioned above, there are different ways of passing information. In our model we concentrate on multipath and cluster routing approach (see

Figure 1 and

Figure 2). For details concerning these see Ayaz et al. and Li et al. [

4,

29]. Multipath routing protocols (

Figure 1), forward the data packets to the sink via other nodes while in cluster based routing protocols (

Figure 2), data packets are first aggregated to the respective cluster heads and only then forwarded via other cluster heads to the sink. For our purposes, we denote the

i–th cluster by

. Its cluster head will be denoted by

. We call non-

nodes

ordinary and sinks will be treated as cluster heads.

Now, suppose that the elements of our system are clustered. In other words, some elements of H function as cluster heads, i.e., masters, while others are ordinary. The data aggregation process goes as follows: Within their cluster, the ordinary elements pass information to their cluster head while between clusters, i.e., supposedly over longer distances, only cluster heads communicate. At a given point in time, each cluster has the unique cluster head, and each element can belong to exactly one cluster. We denote the i–th cluster by and its cluster head by .

Now, for a given pair

, regard a binary hyperoperation, where

is, for arbitrary

, defined by:

By

we mean a set

, where

is a result of a single-valued binary operation such that

is, for arbitrary

, defined by:

and

is such a cluster head that

,

, where

is a relation between elements of

H such that: (1)

,

and

for all clusters

, (2) within the same cluster

we have

for all

while mutually different ordinary elements of the cluster are incomparable, (3) between clusters for

,

the fact that

means that the tier of

b (measured towards the surface) is smaller than or equal to the tier of

a, and (4) in all other cases

a and

b are not related. By

above we mean a cluster head on the closest tier above both

and

. Of course,

always exists yet need not be unique as there may be more cluster heads at this closest tier. In such a case, we choose the most suitable one or regard all cluster heads as equal. Notice that, in our definitions, the fact that

and simultaneously

does not mean that

, rather it only means that

and

are on the same tier. If we are able to chose the most suitable cluster head (further on we remark that we are), the relation "≤" (restricted to

) becomes partial ordering and we can write

(with respect to the relation "≤"). Finally, the element

s is an element of

H reserved for situations when

a and

b fail to communicate. It is artificially added to our set of elements

H or we can agree that one (given the actual sensor deployment is of course carefully chosen) of elements of

H will be

s. In this way, technically speaking, we should in fact write

, where

could mean "expanded". Of course, if we choose the option of

, then

.

Under these definitions, is the element in which the data from a and b meet, and is the path in which the data from both a and b can spread. The facts that or or all stand for communication failure.

Lemma 1. [

1]

is a quasi-ordered set. Suppose now that we have arbitrary . Since the result of is such an element of H in which the data from a and b meet, it is natural to suppose that , i.e., that "·" is commutative. However, we can suppose this only on condition that there exists such an algorithms that for arbitrary clusters . Further on suppose that such an algorithm exists, i.e., that is a commutative groupoid. The following lemma is obvious.

Lemma 2. [

1]

If is a commutative groupoid, then is a commutative hypergroupoid. In the following lemma notice that weak associativity of the hyperoperation is defined as for all ; a quasi-hypergroup is a reproductive hypergroupoid.

Lemma 3. [

1]

The hypergroupoid is a –group, i.e., a weak associative quasi-hypergroup. Lemma 4. [

1]

The quasi-ordering "≤" and the operation "·" are compatible, i.e., for all such that and an arbitrary there is and . Now, denote

the set of cluster heads. This notation enables us to regard both clustering based systems and multipath systems because the fact that

means that every element of

H is a cluster head, i.e., the system is in fact a multipath one. In such a case the model simplifies substantially. This is because there is no need for the special element

s and we do not distinguish between communication within and between clusters. The operation "·" defined by Equation (

2) reduces to

(we still suppose that it is commutative) and, consequently, the hyperoperation Equation (

1) reduces to

, in both cases for all

.

Lemma 5. [

1]

If we are able to uniquely identify in Equation (2), then is a partially ordered semigroup. Finally, what is

? This means that

, i.e., that the data from the element

a reach the element

x. Thus, if

x is a sink, than the fact that

means that the data from

a can be successfully collected. What we want is that, if we denote

S the set of all sinks, for all

there exists at least one

such that

, which means that data from all elements of our network

H can be successfully collected. Of course, in order to achieve this, it is crucial to have an algorithm for unique determination of

in Equation (

2). Yet clustering algorithms such as the Distributed Underwater Clustering Scheme (DUCS) [

3] or Low Energy Adaptive Clustering Hierarchy (LEACH) protocol can provide this.

3. Use of the Theory of Quasi-Automata

In Definition 2, the concept of quasi-order hypergroup is defined. Chvalina and Chvalinová [

25] relate these to the theory of quasi-automata, i.e., automata without output. For an automaton they construct a quasi-order hypergroup of its state set and show that the automaton is connected if and only if the state hypergroup is inner irreducible as well as strogly connected, i.e., we can reach any state from any other state, if and only if the state hypergroup is (in a special way) cyclic. In other words, if we look at the problem of data aggregation from the point of view of the automata theory, where every step is an application of the transition function with the initial state "data aggregation to begin" and the desirable state "data from all elements collected" (or rather "useful data from all elements sent" since every

not only receives data but also separates useful data from useless ones), we should be interested in constructing such automata or studying their properties.

We call the concept defined below quasi-automaton even though this term is not much frequent (we do this to be consistent with some earlier papers on hyperstructure theory). In fact, we could speak of semiautomata or deterministic finite automata (the below mentioned paper Chvalina and Chvalinová [

25] uses a general term automaton; however, notice that [

25], p. 107, plain text, defines automaton in the way of Definition 4, which is a definition adopted by the authors of [

25] in later years). For an overall discussion of the concepts and the reasons for our choice of the name see Novák et al. [

30]. For some further reading and applications see also Hošková et al. [

31,

32,

33].

Definition 4. By a quasi–automaton we mean a structure such that is a monoid, and satisfies the following condition:

- 1.

There exists an element such that for any state ;

- 2.

for any pair and any state

The set I is called the input set or input alphabet, the set S is called the state set and the mapping δ is called next-state or transition function. Condition 2 is called GMAC (Generalized Mixed Associativity Condition).

In [

25], Chvalina and Chvalinová defined what they called a state hypergroup of an automaton. This is in fact a state set with a special hyperoperation, defined by means of the transition function. In this way, the concept of a state hypergroup is fixed to the automata theory. However, the way of defining this concept is a parallel to the concept of quasi-order hypergroups, which means that state hypergroups of quasi-automata are quasi-order hypergroups. The fact that the below defined

is a hypergroup, or rather quasi-order hypergroup, (hence the name state hypergroup) was proved in [

25]. (Notice that in [

25]

I and

S are swapped.)

Definition 5. Let be an automaton. We define a binary hyperoperation "∘" on the state set S by:for any pair of states , where is a free monoid of words over the (non-empty) alphabet A. The hyperstructure is called state hypergroup of the automaton . Some properties of automata following from properties of its state hypergroup are proved in [

25]. This includes the properties of being connected or separated.

Definition 6. Let be a quasi-automaton. A quasi-automaton such that and is a restriction of δ on and for any state and any word , is called a sub quasi-automaton of . A sub quasi-automaton of a quasi-automaton is called separated if . A quasi-automaton is called connected if it does not posses any separated proper subautomaton. A quasi-automaton is called strongly connected if for any states there exists a word such that .

If in quasi–automata we suppose that the input set

I is a semihypergroup instead of a free monoid, we arrive at the concept of a quasi–multiautomaton. When defining this concept, caution must be exercised when adjusting the conditions imposed on the transition function

as on the left-hand side of condition 2 we get a state while on the right-hand side we get

a set of states. However, in the dichotomy

deterministic —

nondeterministic, quasi–multiautomata still are deterministic because the range of

is

S. The difference between the transition function of a quasi-automaton and the transition function of a quasi-multiautomaton is that in quasi-automata the state achieved by applying

y in a state, which is the result of application of

x in

s, is the same as the state achieved by applying

in

s, while condition (

4) says that it is one of the many states achievable by applying any command from

in state

s.

Definition 7. A quasi–multiautomaton is a triad , where is a semihypergroup, S is a non–empty set, and is a transition map satisfying the condition:The hyperstructure is called the input semihypergroup of the quasi–multiautomaton (I alone is called the input set or input alphabet), the set S is called the state set of the quasi–multiautomaton , and δ is called next-state or transition function. Elements of the set S are called states, elements of the set I are called input symbols. Further on, we will make use of the above mentioned concepts to model the process of data aggregation. Notice that in Novák et al. [

30], Cartesian composition of automata resulting in a quasi-multiautomaton is used to describe a task from collective robotics. Moreover, in Chvalina et al. [

18], the issue of state sets and input sets having the form of vectors and matrices (of both numbers and special classes of functions) is discussed in the context of quasi-multiautomata.

In

Figure 2 we can see that the elements of the UWSN are divided into several tiers. Also, the nodes are grouped into clusters. The process of data aggregation happens as follows: First, data is collected in cluster heads and then transmitted between cluster heads towards the surface, i.e., "upwards". Obviously, we can only transmit the amount of data that the capacity of available memory allows. Suppose that clusters cover areas of more or less the same size, i.e., it does not matter how many nodes there are in respective clusters. Now, regard a set of vectors:

of such a number of components that corresponds to the number of tiers (with index 1 meaning surface and index

n meaning seabed or the deepest tier). The components

carry information about how much total memory all cluster heads at a given tier has been used. In other words,

means that at the second tier

memory capacity has been used, regardless of whether this

means that every cluster head at this level has

free capacity or whether 6 out of 10 cluster heads already have no available memory while 4 are

free.

The process of data aggregation starts with

, i.e., at the moment when all cluster heads have empty memory. Since, at first data are collected within clusters,

immediately becomes non-zero. The process of communication between cluster heads is described by the change of

by means of multiplying

by a square matrix of real numbers:

where

is the column norm of

. In other words,

is a subset of the set of upper triangular matrices, i.e., we can also write:

Now, these two sets

and

will be linked with a transition function

by:

for all

and all

. If we regard the usual matrix multiplication (only swapped), i.e.,

for all

, then

is a monoid such that the free monoid

. Thus we can regard the triple

and study whether it is a quasi-automaton. In our context, the operation of creating words from input symbols of our alphabet

I will be matrix multiplication, i.e., a word will be a product of matrices, i.e., again a matrix.

Theorem 1. The triple is a quasi-automaton.

Proof. The identity matrix

is the neutral element of

. Property 1 of Definition 4 holds trivially. Verification of Property 2 is also straightforward:

□

The set consists of matrices such that the column norm is at most one. In the following Remark, we show that without this condition the set would not be closed with respect to "⊙".

Remark 1. Suppose two matrices . If we denote:then,and it is obvious that the column norm will not exceed 1, i.e., . Indeed, suppose that is an all-ones matrix upper triangular matrix (which, of course violates the condition that ). Then all the sums in reduce to which are (due to the fact that ) smaller than 1. If moreover , none of the sums becomes greater. Of course, in we could have used the row norm instead of column one with the same result. Example 1. Regard sensor nodes deployed under water, which are divided into four tiers with tier 1 being , tier 2 being , tier 3 being , and tier 4 being under water. Every tier has an arbitrary number of clusters, i.e., an arbitrary number of cluster heads, each with the same memory capacity. Then by vector e.g., , we describe such a state of the system that, at a certain point in time, data has been collected (or rather, memory capacity has been used) from tier 1 with the numbers being from tier 2, from tier 3, and from tier 4. Now regard a matrix:which we apply on . Then , which describes the new state of the system. Of course, the first of data (represented by the first component of ) did not get lost, which we can regard as output, i.e., to each quasi-automaton we can assign output, meaning that data from the upmost tier 1 have been processed, sent out of the system. Furthermore, in our example, data from tier 2 were transferred to tier 1 (and left in tier 2, i.e., in this case we have a backup copy). Also, data from tier 4 were transferred to tier 3, which had been empty, and simultaneously deleted in tier 4. It can be easily observed that the issue of suitable construction of matrices used for quasi-automata inputs is a topic for further research which will be closely linked to various aspects of optimization theory. When describing the state hypergroup of

, we must first of all decide whether or not

defined by Equation (

3) is trivial. However, this task is rather simple.

Lemma 6. The state hypergroup of is not trivial, i.e., there exist states such that .

Proof. The transition function

is defined on

by

for all

and all

. Moreover, by Equation (

5), components of vectors from

are real numbers from interval

, and by (

6) matrices from

are upper triangular with entries taken from the same interval. Finally, in a text preceding Theorem 1 we have already mentioned that

. Thus, for all

and all

, we have that:

Now suppose that . Obviously in this case there cannot be because we are not able to find such a matrix that as this would require which is out of the interval in which all entries of should be. Naturally, there are infinitely many of these choices. The fact that is obvious. □

Regard now the set which will be different from because the condition of the column norm will be dropped and we will assume that for all and , i.e., that each diagonal element will be greater than all other elements in the given row.

On

we define a hyperoperation "*" by:

for all

, where "·" is the usual multiplication of real numbers and

belongs to a closed interval bounded by the product

and either 1 or the product of the corresponding diagonal elements.

Remark 2. Notice that by removing the assumption regarding the column norm we are making our model more real-life because we regard memory losses and overflows. Indeed, suppose we have a vector e.g., and a matrix e.g., . Then , which (since vector components are taken from the interval because they describe percentages of memory capacity) must be reduced to .

Theorem 2. The pair is a commutative hypergroup.

Proof. Commutativity of the hyperoperation "∗" is obvious because we work with entry-wise multiplication of real numbers.

For the test of associativity, i.e.,

for all triples

, recall that we will be comparing sets of matrices of the same dimension, elements of which fall within intervals defined by Equation (

8). As far as diagonal elements are concerned, there is obviously no problem because we make use of the multiplication of real numbers. For the non-zero, non-diagonal elements the lower bounds of the intervals are the products of their smallest elements, i.e.,

, while the upper bounds of the intervals are products of their greatest possible, i.e., diagonal, elements i.e.,

. Thus, in all cases, associativity of the hyperoperation follows from the associativity of (positive) real numbers (smaller than 1).

Reproductive axiom is also obviously valid because since the null matrix is an element of and . □

Notice that in the following theorem, is the usual vector and matrix multiplication with the additional condition that no diagonal component is allowed to exceed 1. For its motivation see Remark 2.

Theorem 3. The triple is a quasi-multiautomaton, where, for all and all ,where in case that for some , we set, by default, . Proof. Thanks to Theorem 2, we shall verify condition Equation (

4) only. The left-hand side of the condition is:

which, if we regard that

are upper triangular matrices, is:

On the right-hand side of the condition we get:

which is a vector of intervals upper-bounded by 1 such that their lower bounds are sums of lower bounds of respective intervals in the definition of the hyperoperation in Equation (

8) after multiplication by vector

has been performed. (Notice that we work with non-negative matrix and vector entries only.) We have to show that:

i.e.,

Now, if we move the sums on the left-hand side of the inequalities to the right-hand side and expand all the sums, we get for the first sum, i.e., for the first component of the vectors:

which, after we put elements

,

in front of the brackets, means that:

which, since all numbers are non-negative, will be greater than zero if

for all

. In a completely analogous manner we get for the second sum, i.e., for the second component of the vectors:

which will give us condition that

for all

, etc. until the

component will result in condition

for

; and the first and the last condition

for all

hold trivially.

Thus, altogether we get that for all and . In other words, each diagonal element must be greater than all other elements in the given row, which is exactly what we suppose to be. □

Remark 3. If we want to find out whether we are able to reach any state from any other state with the help of our transition functions (i.e., test strong conectedness), we must take into account that the fact that we regard diagonal matrices means that for known vectors and an unknown matrix the equation results in a linear system in the row echelon form and the task of finding the unknown matrix is equivalent to finding its solution. Of course, we have to consider the fact that might have components being zero, which will affect the solvability of the linear system.

Example 2. The hyperoperation on the input set, i.e., the fact that we work with hypergroups, gives us a better tool for controlling the process of data aggregation. Suppose that we have the same vector as in Example 1, i.e., . Furthermore, assume the same context as in Example 1. Let the matrix be: Suppose that in our quasi-multiautomaton this matrix is applied on vector , i.e. Now, focus on the multiplication of and the first column of . We get that:which means that the capacity of tier 1 is filled by in such a way that the original was accompanied by further from tier 2 (which had originally been filled up by , i.e., we have a transmission loss), from tier 3, and from tier 4. In this case it is obvious that the transmission from tier 4 is extremely difficult with a substantial data loss. Theoretically, we could model the process of data aggregation with matrices with all-one columns. However, this would not be a real-life case. When looking for an optimal transition input (i.e., an optimal matrix), we could regard matrices such as those in Equation (8) with increasing entries. The definition of the hyperoperation (8) means that from a certain moment on, the memory capacity could be filled to maximum and data will start being lost, i.e., not stored in cluster heads on upper tiers anymore. However, this depends also on the initial state of the system, i.e., on the initial vector. In the case of our vector, , even matrix:i.e., a matrix with maximal possible entries will secure successful data aggregation because vector suggests that the process started when all tiers had enough free memory capacity in all cluster heads without any risk of memory overflow.