1. Introduction

As mentioned in the PhD thesis [1], neurons are the atoms of neural computation. All neural networks are built up from these simple computational units. The output computed by a neuron can be expressed using two functions, $y = g(f(\mathbf{w}, \mathbf{x}))$. The computation consists of several steps. In the first step, the input to the neuron, $\mathbf{x} = (x_1, \dots, x_n)$, is associated with the weights of the neuron, $\mathbf{w} = (w_1, \dots, w_n)$, by the so-called propagation function $f$. This can be thought of as computing the activation potential from the pre-synaptic activities. Then, from that result, the so-called activation function $g$ computes the output of the neuron. The weights, which mimic synaptic strength, constitute the adjustable internal parameters of the neuron. The process of adapting the weights is called learning [1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18].

From the biological point of view it is appropriate to use an integrative propagation function. Therefore, a convenient choice is the weighted sum of the input
$$f(\mathbf{w}, \mathbf{x}) = \sum_{i=1}^{n} w_i x_i = \mathbf{w}^{\mathsf{T}}\mathbf{x},$$
that is, the activation potential equals the scalar product of input and weights. This has, in fact, been the most popular propagation function since the dawn of neural computation. However, it is often used in a slightly different form:
$$f(\mathbf{w}, \mathbf{x}) = \sum_{i=1}^{n} w_i x_i + w_0 = \mathbf{w}^{\mathsf{T}}\mathbf{x} + w_0.$$
The special weight $w_0$ is called the bias. Applying $g(x) = 1$ for $x \ge 0$ and $g(x) = 0$ for $x < 0$ as the above activation function yields the famous perceptron of Rosenblatt. In that case the function $g$ works as a threshold.
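To make the two-stage computation concrete, the following minimal sketch implements the weighted-sum propagation function with bias $w_0$ and the threshold activation $g$ described above. The function names and the AND-gate weights are our own illustrative choices, not taken from the cited sources.

```python
def propagation(w, x, w0):
    """Propagation function f(w, x) = sum_i w_i * x_i + w_0 (w_0 is the bias)."""
    if len(w) != len(x):
        raise ValueError("weights and inputs must have the same length")
    return sum(wi * xi for wi, xi in zip(w, x)) + w0


def threshold(v):
    """Activation g(v) = 1 for v >= 0 and g(v) = 0 for v < 0."""
    return 1 if v >= 0 else 0


def perceptron(w, x, w0):
    """Rosenblatt-style perceptron output g(f(w, x))."""
    return threshold(propagation(w, x, w0))


# Illustrative weights (our choice): with them the perceptron computes logical AND.
W, W0 = (1.0, 1.0), -1.5
truth_table = {(a, b): perceptron(W, (a, b), W0) for a in (0, 1) for b in (0, 1)}
print(truth_table)  # {(0, 0): 0, (0, 1): 0, (1, 0): 0, (1, 1): 1}
```

The bias shifts the point at which the threshold fires: only the input $(1, 1)$ lifts the potential $x_1 + x_2 - 1.5$ above zero.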

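Let $F\colon \mathbb{R} \to \mathbb{R}$ be a general non-linear (or piece-wise linear) transfer function. Then the action of a neuron can be expressed by
$$y(k) = F\left(\sum_{i=0}^{m} w_i(k)\, x_i(k) + b\right),$$
where $x_i(k)$ is the input value in discrete time $k$, with $i$ going from $0$ to $m$; $w_i(k)$ is the weight value in discrete time $k$, with $i$ going from $0$ to $m$; $b$ is the bias; and $y(k)$ is the output value in discrete time $k$.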

Notice that in some very special cases the transfer function $F$ can also be linear. The transfer function defines the properties of the artificial neuron and can be any mathematical function. Usually it is chosen on the basis of the problem that the artificial neuron (artificial neural network) needs to solve, and in most cases it is taken (as mentioned above) from the following set of functions: the step function, the linear function and the non-linear (sigmoid) function [1,2,5,7,9,12,16,19].
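A short sketch of the discrete-time neuron $y(k) = F\left(\sum_i w_i(k)\, x_i(k) + b\right)$ with each of the three usual transfer functions may help. The weight and input sequences below are hypothetical values chosen only so that the potential crosses zero between time steps.

```python
import math


def step(v):
    return 1.0 if v >= 0 else 0.0


def linear(v):
    return v


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def neuron_output(F, w_k, x_k, b):
    """y(k) = F(sum_i w_i(k) * x_i(k) + b) at one discrete time step k."""
    if len(w_k) != len(x_k):
        raise ValueError("weights and inputs must have the same length")
    return F(sum(w * x for w, x in zip(w_k, x_k)) + b)


# Hypothetical weight and input values for k = 0, 1, 2 (only the weights change).
weights = [(0.5, -1.0), (0.5, -0.5), (0.5, 0.0)]
inputs = [(1.0, 1.0)] * 3
b = 0.25

outputs = {
    F.__name__: [neuron_output(F, w, x, b) for w, x in zip(weights, inputs)]
    for F in (step, linear, sigmoid)
}
print(outputs["step"])    # [0.0, 1.0, 1.0]
print(outputs["linear"])  # [-0.25, 0.25, 0.75]
```

The same potentials $-0.25, 0.25, 0.75$ are passed through each $F$; only the transfer function decides whether the output is binary, unbounded, or squashed into $(0, 1)$.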

In what follows we will consider a certain generalization of the classical artificial neurons mentioned above, such that the inputs $x_i$ and the weights $w_i$ will be functions of an argument $t$ belonging to a linearly ordered (tempus) set $T$ with a least element. As the index set we use the set $C(J)$ of all continuous functions defined on an open interval $J \subseteq \mathbb{R}$. So, denote by $W$ the set of all non-negative functions $w\colon T \to \mathbb{R}$, forming a subsemiring of the ring of all real functions of one real variable. Denote by $Ne(\mathbf{w}_r) = Ne(w_{r1}, \dots, w_{rn})$, for $r \in C(J)$ and $n \in \mathbb{N}$, the mapping
$$y_r(t) = \sum_{k=1}^{n} w_{rk}(t)\, x_{rk}(t) + b_r,$$
which will be called the artificial neuron with the bias $b_r \in \mathbb{R}$. By $\mathbb{AN}(T)$ we denote the collection of all such artificial neurons.

Neurons are usually denoted by capital letters $X, Y$ or $X_i, Y_i$; nevertheless, we also use the notation $Ne(\mathbf{w})$, where $\mathbf{w} = (w_1, \dots, w_n)$ is the vector of weights [20,21,22].

We suppose, for the sake of simplicity, that the transfer functions (activation functions) are the same for all neurons from the collection and that the role of this function is played by the identity function.
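A time-varying neuron with the identity transfer function can be sketched as a small Python class whose weights are callables of $t$. The class name `TimeVaryingNeuron` and the particular weight and input functions are hypothetical illustrations, not part of the construction above.

```python
import math
from dataclasses import dataclass
from typing import Callable, Sequence

TimeFn = Callable[[float], float]  # a real function of the time argument t


@dataclass(frozen=True)
class TimeVaryingNeuron:
    """A neuron whose weights w_k(t) depend on time; the bias b is constant.

    The transfer function is the identity, so y(t) = sum_k w_k(t) * x_k(t) + b.
    """
    weights: Sequence[TimeFn]
    bias: float

    def output(self, inputs: Sequence[TimeFn], t: float) -> float:
        if len(inputs) != len(self.weights):
            raise ValueError("need exactly one input function per weight")
        return sum(w(t) * x(t) for w, x in zip(self.weights, inputs)) + self.bias


# Hypothetical non-negative weights and inputs, chosen only for illustration.
neuron = TimeVaryingNeuron(weights=(lambda t: 1.0 + t, lambda t: math.exp(-t)), bias=0.5)
inputs = (lambda t: 2.0, lambda t: 1.0)
print(neuron.output(inputs, 0.0))  # 3.5
```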

Feedforward multilayer networks are architectures in which the neurons are assembled into layers and the links between the layers go in only one direction, from the input layer to the output layer. There are no links between neurons in the same layer. There may also be one or several hidden layers between the input and the output layer [5,9,16].
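The one-directional flow of information in a feedforward multilayer network can be illustrated by a plain forward pass. This is a minimal sketch under our own assumptions: fully connected layers stored as `(W, b)` pairs, a shared transfer function (the identity by default), and a hypothetical 2-2-1 network.

```python
def forward(layers, x, F=lambda v: v):
    """Propagate the input vector x through fully connected layers.

    layers is a list of (W, b) pairs: W has one row of weights per neuron of the
    layer, b one bias per neuron. Each neuron sees only the previous layer's
    outputs, so information flows strictly from the input to the output layer.
    F is the transfer function (identity by default).
    """
    a = list(x)
    for W, b in layers:
        if len(W) != len(b) or any(len(row) != len(a) for row in W):
            raise ValueError("layer shape does not match its input")
        a = [F(sum(w * ai for w, ai in zip(row, a)) + bi) for row, bi in zip(W, b)]
    return a


# A hypothetical 2-2-1 network: one hidden layer between input and output.
layers = [
    ([[1.0, 2.0], [0.0, 1.0]], [0.0, 1.0]),  # hidden layer (2 neurons)
    ([[1.0, -1.0]], [0.5]),                  # output layer (1 neuron)
]
print(forward(layers, (1.0, 1.0)))  # [1.5]
```

Neurons in the same layer are computed independently from the previous layer only, which reflects the absence of intra-layer links.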

2. Preliminaries on Hyperstructures

From an algebraic point of view, it is useful to describe the terms and concepts used in the field of algebraic structures. A hypergroupoid is a pair $(H, \cdot)$, where $H$ is a (nonempty) set and $\cdot\colon H \times H \to \mathcal{P}^*(H)$ is a binary hyperoperation on the set $H$. If
$$a \cdot (b \cdot c) = (a \cdot b) \cdot c$$
for all $a, b, c \in H$ (the associativity axiom), then the hypergroupoid $(H, \cdot)$ is called a semihypergroup. A semihypergroup is said to be a hypergroup if the axiom
$$a \cdot H = H = H \cdot a$$
is satisfied for all $a \in H$ (the reproduction axiom). Here, for sets $A, B \subseteq H$, $A \neq \emptyset \neq B$, we define as usual
$$A \cdot B = \bigcup \{a \cdot b;\ a \in A,\ b \in B\}.$$
Thus, the hypergroups considered in this paper are hypergroups in the sense of F. Marty [23,24]. In some constructions it is useful to apply the following lemma (also called the Ends-lemma, which has many applications; cf. [25,26,27,28,29]). Recall first that by a (quasi-)ordered semigroup we mean a triad $(S, \cdot, \le)$, where $(S, \cdot)$ is a semigroup and $(S, \le)$ is a (quasi-)ordered set, i.e., a set $S$ endowed with a reflexive and transitive binary relation "≤", such that for all triads of elements $a, b, c \in S$ the implication
$$a \le b \implies a \cdot c \le b \cdot c \ \text{ and } \ c \cdot a \le c \cdot b$$
holds.

Lemma 1 (Ends-Lemma). Let $(S, \cdot, \le)$ be a (quasi-)ordered semigroup. Define a binary hyperoperation
$$a * b = [a \cdot b)_{\le} = \{x \in S;\ a \cdot b \le x\}.$$
Then $(S, *)$ is a semihypergroup. Moreover, if the semigroup $(S, \cdot)$ is commutative, then the semihypergroup $(S, *)$ is also commutative, and if $(S, \cdot, \le)$ is a (quasi-)ordered group, then the semihypergroup $(S, *)$ is a hypergroup.

Notice that if $(H_1, \cdot)$ and $(H_2, *)$ are (semi-)hypergroups, then a mapping $h\colon H_1 \to H_2$ is said to be a homomorphism of $(H_1, \cdot)$ into $(H_2, *)$ if for any pair $a, b \in H_1$ we have
$$h(a \cdot b) \subseteq h(a) * h(b).$$
If for any pair $a, b \in H_1$ the equality $h(a \cdot b) = h(a) * h(b)$ holds, the homomorphism $h$ is called a good (or strong) homomorphism; cf. [30,31]. By $\operatorname{End} G$ we denote the endomorphism monoid of a semigroup (group) $G$. Concerning the basics of hypergroup theory see also [23,25,26,27,28,32,33,34,35,36,37,38,39,40,41].
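The Ends-lemma can be checked by brute force on a small finite example. The sketch below uses our own choice of a (quasi-)ordered semigroup: $S = \{0, \dots, 5\}$ with saturating addition $a \cdot b = \min(a + b, 5)$ and the usual order, which is compatible with the operation. It verifies that $a * b = \{x \in S;\ a \cdot b \le x\}$ is associative and commutative, while the reproduction axiom fails, as expected, because $(S, \cdot)$ is not a group.

```python
from itertools import product

N = 6
S = range(N)  # carrier set {0, 1, ..., 5}


def op(a, b):
    """Saturating addition min(a + b, 5): a commutative semigroup compatible with <=."""
    return min(a + b, N - 1)


def ends(a, b):
    """Ends-lemma hyperoperation a * b = {x in S : a.b <= x}."""
    return frozenset(x for x in S if op(a, b) <= x)


def extend(A, B):
    """A * B = union of a * b over a in A and b in B."""
    return frozenset().union(*(ends(a, b) for a in A for b in B))


associative = all(
    extend(ends(a, b), {c}) == extend({a}, ends(b, c)) for a, b, c in product(S, repeat=3)
)
commutative = all(ends(a, b) == ends(b, a) for a, b in product(S, repeat=2))
full = frozenset(S)
reproductive = all(extend({a}, full) == full == extend(full, {a}) for a in S)
print(associative, commutative, reproductive)  # True True False
print(sorted(ends(2, 1)))                      # [3, 4, 5]
```

For instance, $1 * S = \{x;\ x \ge 1\} \neq S$, so only the semihypergroup part of the lemma applies here.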

Linear differential operators described in this article and used, e.g., in [29,42] are built on the following notions:

Definition 1. Let $J \subseteq \mathbb{R}$ be an open interval and $C(J)$ the ring of all continuous functions $\varphi\colon J \to \mathbb{R}$. For $n \in \mathbb{N}$ we define
$$C^n(J) = \{f \in C(J);\ f^{(n)} \in C(J)\}$$
(the ring of all smooth functions up to order $n$, i.e., having derivatives up to order $n$ defined on the interval $J$).

Definition 2 ([41,49]). Let $(G, \cdot)$ be a semigroup and $P \subseteq G$, $P \neq \emptyset$. A hyperoperation $*_P$ defined by $x *_P y = x \cdot P \cdot y$ for any pair $x, y \in G$ is said to be the P-hyperoperation in $G$. If
$$x *_P (y *_P z) = (x *_P y) *_P z$$
holds for any triad $x, y, z \in G$, the P-hyperoperation is associative. If the axiom of reproduction is also satisfied, the hypergroupoid $(G, *_P)$ is said to be a P-hypergroup. Evidently, if $(G, \cdot)$ is a group, then $(G, *_P)$ is also a P-hypergroup. If the set $P$ is a singleton, then the P-hyperoperation is a usual single-valued operation.

Definition 3. A subset $G' \subseteq G$ is said to be a sub-P-hypergroup of $(G, *_P)$ if $P \subseteq G'$ and $(G', *_P)$ is a hypergroup.

Now, similarly as in the case of the collection of linear differential operators [29], we will construct a group and a hypergroup of artificial neurons; cf. [29,32,42,43,44].

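Denote by $\delta_{ij}$ the Kronecker delta, i.e., $\delta_{ii} = 1$ and $\delta_{ij} = 0$ whenever $i \neq j$. Suppose $Ne(\mathbf{w}), Ne(\mathbf{u}) \in \mathbb{AN}(T)$, where $\mathbf{w} = (w_1, \dots, w_n)$, $\mathbf{u} = (u_1, \dots, u_n)$ and $n \in \mathbb{N}$. Let $m \in \mathbb{N}$, $1 \le m \le n$, be an integer such that $w_m(t) > 0$ for all $t \in T$, and denote by $\mathbb{AN}(T)_m$ the set of all neurons with this property. We define
$$Ne(\mathbf{w}) \cdot_m Ne(\mathbf{u}) = Ne(\mathbf{w} \cdot_m \mathbf{u}),$$
where
$$\mathbf{w} \cdot_m \mathbf{u} = (v_1, \dots, v_n), \qquad v_k(t) = w_m(t)\, u_k(t) + (1 - \delta_{km})\, w_k(t), \quad t \in T,$$
and, of course, the neuron $Ne(\mathbf{w} \cdot_m \mathbf{u})$ is defined as the mapping
$$y(t) = \sum_{k=1}^{n} v_k(t)\, x_k(t) + b, \quad t \in T,$$
where $b$ is the bias of the resulting neuron.

Further, for a pair $Ne(\mathbf{w}), Ne(\mathbf{u})$ of neurons from $\mathbb{AN}(T)_m$ we put $Ne(\mathbf{w}) \le_m Ne(\mathbf{u})$ if $w_k(t) \le u_k(t)$ for all $k = 1, \dots, n$ and $w_m(t) = u_m(t)$ for each $t \in T$, and both neurons have the same bias. Evidently, $(\mathbb{AN}(T)_m, \le_m)$ is an ordered set. The relationship (compatibility) between the binary operation "$\cdot_m$" and the ordering $\le_m$ on $\mathbb{AN}(T)_m$ is given by the following assertion, analogous to Lemma 2 in [29].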

Lemma 2. The triad $(\mathbb{AN}(T)_m, \cdot_m, \le_m)$ (an algebraic structure with an ordering) is a non-commutative ordered group.

A sketch of the proof was published in [21].
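The group and order axioms of Lemma 2 can be spot-checked numerically. This sketch rests on stated assumptions: the product is taken to be $v_k = w_m u_k + (1 - \delta_{km}) w_k$ (the rule used for the analogous groups of differential operators), each weight function is evaluated at one fixed $t$ so a neuron becomes a vector of exact rationals, biases are ignored, and the index $m$ is 0-based in the code. Random samples are a sanity check, not a proof.

```python
import random
from fractions import Fraction as Q


def mul(w, u, m):
    """Assumed product w ._m u: v_k = w_m*u_k + (1 - delta_km)*w_k (m is 0-based)."""
    return tuple(w[m] * uk + (0 if k == m else w[k]) for k, uk in enumerate(u))


def identity(n, m):
    return tuple(Q(1) if k == m else Q(0) for k in range(n))


def inverse(w, m):
    """Solves w ._m u = e: u_m = 1/w_m and u_k = -w_k/w_m for k != m."""
    return tuple(1 / w[m] if k == m else -w[k] / w[m] for k in range(len(w)))


def leq(w, u, m):
    """w <=_m u: componentwise <= and equal m-th components."""
    return w[m] == u[m] and all(a <= b for a, b in zip(w, u))


rng = random.Random(0)


def sample(n, m):
    """Random weight vector (at one fixed t) with a positive m-th component."""
    return tuple(Q(rng.randint(1, 9)) if k == m else Q(rng.randint(-5, 5)) for k in range(n))


n, m = 3, 1
e = identity(n, m)
triples = [(sample(n, m), sample(n, m), sample(n, m)) for _ in range(200)]

associative = all(mul(mul(a, b, m), c, m) == mul(a, mul(b, c, m), m) for a, b, c in triples)
group = all(
    mul(a, e, m) == a == mul(e, a, m) and mul(a, inverse(a, m), m) == e == mul(inverse(a, m), a, m)
    for a, _, _ in triples
)


def bump(a):
    """A vector above a in <=_m: raise every component except the m-th."""
    return tuple(x if k == m else x + rng.randint(0, 3) for k, x in enumerate(a))


compatible = all(
    leq(a, b, m) and leq(mul(c, a, m), mul(c, b, m), m) and leq(mul(a, c, m), mul(b, c, m), m)
    for a, _, c in triples
    for b in [bump(a)]
)
w, u = (Q(1), Q(2), Q(0)), (Q(3), Q(1), Q(0))
print(mul(w, u, m) == mul(u, w, m))  # False: the group is not commutative
```

Compatibility on the left relies on $c_m > 0$, which is why the construction requires a positive $m$-th weight.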

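Denoting
$$\mathbb{AN}_1(T)_m = \{Ne(\mathbf{w}) \in \mathbb{AN}(T)_m;\ w_m(t) = 1,\ t \in T\},$$
we get the following assertion:

Proposition 1 (Prop. 1 in [21], p. 239). Let $T$ be the time set introduced above. Then for any positive integer $n \ge 2$ and for any integer $m$ such that $1 \le m \le n$, the semigroup $(\mathbb{AN}_1(T)_m, \cdot_m)$ is an invariant subgroup of the group $(\mathbb{AN}(T)_m, \cdot_m)$.

Proposition 2 (Prop. 2 in [21], p. 240). Let $m, n$ be integers such that $n \ge 2$ and $1 \le m \le n - 1$, and denote by $\mathbb{AN}(T)_{n,m}$ the group $(\mathbb{AN}(T)_m, \cdot_m)$ of neurons with $n$ weights. Define a mapping $\psi_n\colon \mathbb{AN}(T)_{n,m} \to \mathbb{AN}(T)_{n-1,m}$ by this rule: for an arbitrary neuron $Ne(\mathbf{w}) \in \mathbb{AN}(T)_{n,m}$, where $\mathbf{w} = (w_1, \dots, w_n)$, we put $\psi_n(Ne(\mathbf{w})) = Ne(w_1, \dots, w_{n-1})$ with the action
$$y(t) = \sum_{k=1}^{n-1} w_k(t)\, x_k(t) + b.$$
Then the mapping $\psi_n$ is a homomorphism of the group $(\mathbb{AN}(T)_{n,m}, \cdot_m)$ into the group $(\mathbb{AN}(T)_{n-1,m}, \cdot_m)$.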
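Both propositions can be spot-checked under the same assumptions as before (product $v_k = w_m u_k + (1 - \delta_{km}) w_k$, weights evaluated at a fixed $t$, biases ignored, 0-based $m$). The reason behind Proposition 1 is visible in the code: the $m$-th component multiplies, $(\mathbf{w} \cdot_m \mathbf{u})_m = w_m u_m$, so $\{w_m = 1\}$ is the kernel of a homomorphism into a multiplicative group and is closed under conjugation. Dropping the last weight is a homomorphism as long as the $m$-th weight is not the dropped one, and it also preserves $\le_m$.

```python
import random
from fractions import Fraction as Q


def mul(w, u, m):
    """Assumed product w ._m u with v_k = w_m*u_k + (1 - delta_km)*w_k (0-based m)."""
    return tuple(w[m] * uk + (0 if k == m else w[k]) for k, uk in enumerate(u))


def inverse(w, m):
    return tuple(1 / w[m] if k == m else -w[k] / w[m] for k in range(len(w)))


def leq(w, u, m):
    return w[m] == u[m] and all(a <= b for a, b in zip(w, u))


def truncate(w):
    """Drop the last weight: a vector of n weights becomes one of n - 1 weights."""
    return w[:-1]


rng = random.Random(1)


def sample(n, m):
    return tuple(Q(rng.randint(1, 9)) if k == m else Q(rng.randint(-5, 5)) for k in range(n))


n, m = 4, 1  # 0-based m < n - 1, so truncation keeps the m-th weight
pairs = [(sample(n, m), sample(n, m)) for _ in range(200)]

# Conjugating an element with m-th weight 1 gives again m-th weight g_m * 1 / g_m = 1.
normal = all(
    mul(mul(g, h1, m), inverse(g, m), m)[m] == 1
    for g, h in pairs
    for h1 in [h[:m] + (Q(1),) + h[m + 1:]]
)
homomorphism = all(
    truncate(mul(a, b, m)) == mul(truncate(a), truncate(b), m) for a, b in pairs
)
order_preserving = all(
    leq(truncate(a), truncate(b), m)
    for a, _ in pairs
    for b in [tuple(x if k == m else x + 1 for k, x in enumerate(a))]
)
print(normal, homomorphism, order_preserving)  # True True True
```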

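Now, using the construction described in Lemma 1, we obtain the final transposition hypergroup (also called a non-commutative join space). Denote by $\mathcal{P}^*(\mathbb{AN}(T)_m)$ the power set of $\mathbb{AN}(T)_m$ consisting of all nonempty subsets of the last set and define a binary hyperoperation
$$*_m\colon \mathbb{AN}(T)_m \times \mathbb{AN}(T)_m \to \mathcal{P}^*(\mathbb{AN}(T)_m)$$
by the rule
$$Ne(\mathbf{w}) *_m Ne(\mathbf{u}) = \{Ne(\mathbf{v});\ Ne(\mathbf{w}) \cdot_m Ne(\mathbf{u}) \le_m Ne(\mathbf{v})\}$$
for all pairs $Ne(\mathbf{w}), Ne(\mathbf{u}) \in \mathbb{AN}(T)_m$. In more detail, if $\mathbf{a} = \mathbf{w} \cdot_m \mathbf{u}$, then $Ne(\mathbf{v}) \in Ne(\mathbf{w}) *_m Ne(\mathbf{u})$ if $v_m(t) = a_m(t)$ and $v_k(t) \ge a_k(t)$ for all $k$ and all $t \in T$, and the bias of $Ne(\mathbf{v})$ equals that of the product. Then $(\mathbb{AN}(T)_m, *_m)$ is a non-commutative hypergroup. We say that this hypergroup is constructed by using the Ends Lemma (cf., e.g., [8,25,29]). These hypergroups can be called EL-hypergroups. The above defined invariant (also called normal) subgroup $(\mathbb{AN}_1(T)_m, \cdot_m)$ of the group $(\mathbb{AN}(T)_m, \cdot_m)$ is the carrier set of a subhypergroup of the hypergroup $(\mathbb{AN}(T)_m, *_m)$, and it has certain significant properties.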
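Membership in an EL-hyperproduct reduces to one product and one order test, which the following sketch makes explicit. As before, the product rule, the fixed evaluation time, the omission of biases and the 0-based index $m$ are our assumptions. The example shows one neuron lying in $Ne(\mathbf{u}) *_m Ne(\mathbf{w})$ but not in $Ne(\mathbf{w}) *_m Ne(\mathbf{u})$, and the two witness functions show why reproduction holds: every group element is reached as a product, hence lies in the corresponding hyperproduct.

```python
from fractions import Fraction as Q


def mul(w, u, m):
    """Assumed product w ._m u, v_k = w_m*u_k + (1 - delta_km)*w_k (0-based m)."""
    return tuple(w[m] * uk + (0 if k == m else w[k]) for k, uk in enumerate(u))


def inverse(w, m):
    return tuple(1 / w[m] if k == m else -w[k] / w[m] for k in range(len(w)))


def leq(w, u, m):
    return w[m] == u[m] and all(a <= b for a, b in zip(w, u))


def in_hyperproduct(v, w, u, m):
    """v belongs to w *_m u exactly when w ._m u <=_m v (Ends-lemma construction)."""
    return leq(mul(w, u, m), v, m)


def left_witness(a, b, m):
    """x with b in a *_m x: x = a^{-1} ._m b gives a ._m x = b <=_m b."""
    return mul(inverse(a, m), b, m)


def right_witness(a, b, m):
    """y with b in y *_m a: y = b ._m a^{-1} gives y ._m a = b."""
    return mul(b, inverse(a, m), m)


m = 1
w, u = (Q(1), Q(2), Q(0)), (Q(3), Q(1), Q(0))
v = (Q(5), Q(2), Q(0))
print(in_hyperproduct(v, u, w, m), in_hyperproduct(v, w, u, m))  # True False
```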

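Using a certain generalization of the methods from [42] (p. 283), we obtain, after investigating the constructed structures, the following result:

Theorem 1. Let $T$ be the time set introduced above. Then for any positive integer $n \ge 2$ and for any integer $m$ such that $1 \le m \le n$, the hypergroup $(\mathbb{AN}(T)_m, *_m)$, where
$$Ne(\mathbf{w}) *_m Ne(\mathbf{u}) = \{Ne(\mathbf{v});\ Ne(\mathbf{w}) \cdot_m Ne(\mathbf{u}) \le_m Ne(\mathbf{v})\},$$
is a transposition hypergroup (i.e., a non-commutative join space) such that $(\mathbb{AN}_1(T)_m, *_m)$ is its subhypergroup, which is (with $a / b = \{x;\ a \in x *_m b\}$ and $b \backslash a = \{x;\ a \in b *_m x\}$):

- invertible (i.e., $Ne(\mathbf{v}) / Ne(\mathbf{w}) \cap \mathbb{AN}_1(T)_m \neq \emptyset$ implies $Ne(\mathbf{w}) / Ne(\mathbf{v}) \cap \mathbb{AN}_1(T)_m \neq \emptyset$, and $Ne(\mathbf{w}) \backslash Ne(\mathbf{v}) \cap \mathbb{AN}_1(T)_m \neq \emptyset$ implies $Ne(\mathbf{v}) \backslash Ne(\mathbf{w}) \cap \mathbb{AN}_1(T)_m \neq \emptyset$ for all pairs of neurons $Ne(\mathbf{v}), Ne(\mathbf{w}) \in \mathbb{AN}(T)_m$);
- closed (i.e., $Ne(\mathbf{v}) / Ne(\mathbf{w}) \subseteq \mathbb{AN}_1(T)_m$ and $Ne(\mathbf{w}) \backslash Ne(\mathbf{v}) \subseteq \mathbb{AN}_1(T)_m$ for all pairs $Ne(\mathbf{v}), Ne(\mathbf{w}) \in \mathbb{AN}_1(T)_m$);
- reflexive (i.e., $Ne(\mathbf{w}) \backslash \mathbb{AN}_1(T)_m = \mathbb{AN}_1(T)_m / Ne(\mathbf{w})$ for any neuron $Ne(\mathbf{w}) \in \mathbb{AN}(T)_m$);
- normal (i.e., $Ne(\mathbf{w}) *_m \mathbb{AN}_1(T)_m = \mathbb{AN}_1(T)_m *_m Ne(\mathbf{w})$ for any neuron $Ne(\mathbf{w}) \in \mathbb{AN}(T)_m$).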

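Remark 1. A certain generalization of the formal (artificial) neuron can be obtained from the expression of a linear differential operator of the $n$-th order. Recall the expression of a formal neuron with inner potential $y_{in} = \sum_{k=1}^{n} w_k x_k$, where $\mathbf{x} = (x_1, \dots, x_n)$ is the vector of inputs and $\mathbf{w} = (w_1, \dots, w_n)$ is the vector of weights. Using the bias $b$ of the considered neuron and the transfer function $\sigma$, we can express the output as
$$y = \sigma\left(\sum_{k=1}^{n} w_k x_k + b\right).$$
Now consider a tribal function $u\colon J \to \mathbb{R}$, where $J \subseteq \mathbb{R}$ is an open interval; the inputs are derived from $u$ as follows:
$$x_1 = u(t),\quad x_2 = \frac{du(t)}{dt},\quad \dots,\quad x_n = \frac{d^{n-1}u(t)}{dt^{n-1}},\quad n \in \mathbb{N}.$$
Further, the bias is $b = b_0 \dfrac{d^n u(t)}{dt^n}$. As weights we use the continuous functions $w_k\colon J \to \mathbb{R}$, $k = 1, \dots, n$. Then the formula
$$y(t) = \sigma\left(\sum_{k=1}^{n} w_k(t)\, \frac{d^{k-1}u(t)}{dt^{k-1}} + b_0 \frac{d^n u(t)}{dt^n}\right)$$
is a description of the action of the neuron, which will be called a formal (artificial) differential neuron. This approach allows us to use the solution spaces of the corresponding linear differential equations.

Proposition 3 ([41], p. 16). Let $(G_1, \cdot)$, $(G_2, \cdot)$ be two groups, $f\colon G_1 \to G_2$ a group homomorphism and $\emptyset \neq P \subseteq G_1$. Then the homomorphism $f$ is a good homomorphism between the P-hypergroups $(G_1, *_P)$ and $(G_2, *_{f(P)})$.

Concerning the discussed theme see [26,27,28,30,32,36,39,45]. Now denote by $P \subseteq \mathbb{AN}(T)_m$ an arbitrary nonempty subset and let $Ne(\mathbf{w}), Ne(\mathbf{u}) \in \mathbb{AN}(T)_m$. Then, defining
$$Ne(\mathbf{w}) *_P Ne(\mathbf{u}) = Ne(\mathbf{w}) \cdot_m P \cdot_m Ne(\mathbf{u})$$
for any pair of neurons $Ne(\mathbf{w}), Ne(\mathbf{u}) \in \mathbb{AN}(T)_m$, we obtain a P-hypergroup of artificial time-varying neurons. If $P$ is a singleton, i.e., a one-element subset of $\mathbb{AN}(T)_m$, the obtained structure is a variant of $(\mathbb{AN}(T)_m, \cdot_m)$. Notice that any $\varphi \in \operatorname{End} G$ for a group $G$ induces a good homomorphism of the P-hypergroups $(G, *_P)$ and $(G, *_{\varphi(P)})$, and any automorphism creates an isomorphism between the above P-hypergroups.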
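Proposition 3 can be illustrated on a small finite group standing in for the (infinite) group of neurons. In this sketch, which is our own example, $G_1$ is the symmetric group $S_3$ (non-commutative, like $(\mathbb{AN}(T)_m, \cdot_m)$), $f$ is the sign homomorphism onto $(\{1, -1\}, \cdot)$, and $P$ is an arbitrarily chosen set of two transpositions. The code checks associativity and reproduction of $*_P$ and the equality $f(x *_P y) = f(x) *_{f(P)} f(y)$ that defines a good homomorphism.

```python
from itertools import permutations, product

G1 = list(permutations(range(3)))  # the symmetric group S_3


def compose(p, q):
    """Group operation p . q = p o q (apply q first, then p)."""
    return tuple(p[q[i]] for i in range(len(q)))


def sign(p):
    """Sign homomorphism from S_3 onto the multiplicative group {1, -1}."""
    inv = sum(1 for i in range(len(p)) for j in range(i + 1, len(p)) if p[i] > p[j])
    return -1 if inv % 2 else 1


def times(a, b):
    return a * b


def p_hyper(x, y, P, op):
    """P-hyperoperation x *_P y = x . P . y = {x.p.y : p in P}."""
    return frozenset(op(op(x, p), y) for p in P)


def extend(A, B, P, op):
    return frozenset().union(*(p_hyper(a, b, P, op) for a in A for b in B))


P = [(1, 0, 2), (0, 2, 1)]  # an arbitrary nonempty subset: two transpositions
fP = {sign(p) for p in P}   # its image f(P) = {-1}

associative = all(
    extend(p_hyper(x, y, P, compose), {z}, P, compose)
    == extend({x}, p_hyper(y, z, P, compose), P, compose)
    for x, y, z in product(G1, repeat=3)
)
reproductive = all(
    extend({x}, G1, P, compose) == frozenset(G1) == extend(G1, {x}, P, compose) for x in G1
)
good = all(
    frozenset(sign(z) for z in p_hyper(x, y, P, compose)) == p_hyper(sign(x), sign(y), fP, times)
    for x, y in product(G1, repeat=2)
)
print(associative, reproductive, good)  # True True True
```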

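Let $(\mathbb{Z}, +)$ be the additive group of all integers. Let $Ne(\mathbf{w}^0) \in \mathbb{AN}(T)_m$ be an arbitrary but fixed artificial neuron with the output function
$$y^0(t) = \sum_{k=1}^{n} w_k^0(t)\, x_k(t) + b^0.$$
Denote by $\lambda\colon \mathbb{AN}(T)_m \to \mathbb{AN}(T)_m$ the left translation within the group of time-varying neurons determined by $Ne(\mathbf{w}^0)$, i.e.,
$$\lambda(Ne(\mathbf{w})) = Ne(\mathbf{w}^0) \cdot_m Ne(\mathbf{w})$$
for any neuron $Ne(\mathbf{w}) \in \mathbb{AN}(T)_m$. Further, denote by $\lambda^r$ the $r$-th iteration of $\lambda$ for $r \in \mathbb{Z}$ (negative iterations being those of the inverse mapping $\lambda^{-1}$). Define the projection $\pi\colon \mathbb{AN}(T)_m \times \mathbb{Z} \to \mathbb{AN}(T)_m$ by
$$\pi(Ne(\mathbf{w}), r) = \lambda^r(Ne(\mathbf{w})).$$
It is easy to see that we get a usual (discrete) transformation group, i.e., the action of $(\mathbb{Z}, +)$ (as the phase group) on the group $\mathbb{AN}(T)_m$. Thus the following two requirements are satisfied:

1. $\pi(Ne(\mathbf{w}), 0) = Ne(\mathbf{w})$ for any neuron $Ne(\mathbf{w}) \in \mathbb{AN}(T)_m$;
2. $\pi(Ne(\mathbf{w}), r + s) = \pi(\pi(Ne(\mathbf{w}), r), s)$ for any integers $r, s \in \mathbb{Z}$ and any artificial neuron $Ne(\mathbf{w}) \in \mathbb{AN}(T)_m$.

Notice that in dynamical system theory this structure is called a cascade.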
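The two cascade axioms can be verified numerically for the left translation by a fixed neuron. This is a sketch under our usual assumptions (product $v_k = w_m u_k + (1 - \delta_{km}) w_k$, weights at a fixed $t$, no biases, 0-based $m$); the generating neuron `w0` and the sample points are hypothetical. Negative iterations translate by the group inverse of `w0`, which is what makes the action of all of $\mathbb{Z}$ well defined.

```python
import random
from fractions import Fraction as Q


def mul(w, u, m):
    """Assumed product w ._m u, v_k = w_m*u_k + (1 - delta_km)*w_k (0-based m)."""
    return tuple(w[m] * uk + (0 if k == m else w[k]) for k, uk in enumerate(u))


def inverse(w, m):
    return tuple(1 / w[m] if k == m else -w[k] / w[m] for k in range(len(w)))


def pi(w, r, w0, m):
    """pi(w, r) = lambda^r(w), lambda(w) = w0 ._m w; negative r uses w0^{-1}."""
    step = w0 if r >= 0 else inverse(w0, m)
    for _ in range(abs(r)):
        w = mul(step, w, m)
    return w


m = 1
w0 = (Q(1), Q(2), Q(-1))  # hypothetical fixed neuron generating the cascade
rng = random.Random(2)
points = [tuple(Q(rng.randint(1, 9)) if k == m else Q(rng.randint(-5, 5)) for k in range(3))
          for _ in range(20)]

identity_axiom = all(pi(w, 0, w0, m) == w for w in points)
composition_axiom = all(
    pi(w, r + s, w0, m) == pi(pi(w, r, w0, m), s, w0, m)
    for w in points for r in range(-3, 4) for s in range(-3, 4)
)
print(identity_axiom, composition_axiom)  # True True
```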

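On the phase set we will define a binary hyperoperation. For any pair of neurons $Ne(\mathbf{w}), Ne(\mathbf{u}) \in \mathbb{AN}(T)_m$ define
$$Ne(\mathbf{w}) * Ne(\mathbf{u}) = \pi(Ne(\mathbf{w}), \mathbb{Z}) \cup \pi(Ne(\mathbf{u}), \mathbb{Z}),$$
i.e., the union of the orbits of both neurons. Then $*$ is a commutative binary hyperoperation and, since $Ne(\mathbf{w}), Ne(\mathbf{u}) \in Ne(\mathbf{w}) * Ne(\mathbf{u})$, we obtain that the hypergroupoid $(\mathbb{AN}(T)_m, *)$ is a commutative, extensive hypergroup [20,27,29,30,31,34,35,38,43,46,47]. Using its properties, we can characterize certain properties of the cascade $(\mathbb{AN}(T)_m, \mathbb{Z}, \pi)$. The hypergroup $(\mathbb{AN}(T)_m, *)$ can be called the phase hypergroup of the given cascade.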

Recall now the concept of invariant subsets of the phase set of a cascade and the concept of a critical point. A subset $M$ of a phase set $X$ of the cascade $(X, \mathbb{Z}, \pi)$ is called invariant whenever $\pi(x, r) \in M$ for all $x \in M$ and all $r \in \mathbb{Z}$. A critical point of a cascade is an invariant singleton. It is evident that a subset $M \subseteq \mathbb{AN}(T)_m$ of neurons is invariant in the cascade $(\mathbb{AN}(T)_m, \mathbb{Z}, \pi)$ whenever it is the carrier set of a subhypergroup of the hypergroup $(\mathbb{AN}(T)_m, *)$, i.e., $M$ is closed with respect to the hyperoperation $*$, which means $M * M \subseteq M$. Moreover, the union or intersection of an arbitrary nonempty system of invariant subsets is also invariant.
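The phase hypergroup and the correspondence between invariant subsets and closed subsets can be checked exhaustively on a finite cascade. The sketch below is a stand-in of our own choosing, not the neuron cascade itself: the phase set is $\mathbb{Z}_9$ with $\lambda(x) = x + 3 \pmod 9$, and the hyperoperation is taken as the union of full orbits. It confirms commutativity, extensivity, associativity and reproduction, and that a nonempty subset is invariant exactly when $M * M \subseteq M$.

```python
from itertools import product

N, SHIFT = 9, 3  # hypothetical phase set Z_9 with lambda(x) = x + 3 (mod 9)
X = range(N)


def pi(x, r):
    """r-th iterate of the translation (r may be negative)."""
    return (x + SHIFT * r) % N


def orbit(x):
    """Full orbit pi(x, Z); on a finite cycle r in range(N) already covers it."""
    return frozenset(pi(x, r) for r in range(N))


def star(x, y):
    """Phase hyperoperation: the union of the orbits of x and y."""
    return orbit(x) | orbit(y)


def star_sets(A, B):
    return frozenset().union(*(star(a, b) for a in A for b in B))


commutative = all(star(x, y) == star(y, x) for x, y in product(X, repeat=2))
extensive = all({x, y} <= star(x, y) for x, y in product(X, repeat=2))
associative = all(
    star_sets(star(x, y), {z}) == star_sets({x}, star(y, z)) for x, y, z in product(X, repeat=3)
)
reproductive = all(star_sets({x}, X) == frozenset(X) for x in X)


def invariant(M):
    return all(pi(x, r) in M for x in M for r in range(-N, N + 1))


def closed(M):
    return star_sets(M, M) <= M


subsets = [frozenset(x for x in X if mask >> x & 1) for mask in range(1, 1 << N)]
agree = all(invariant(M) == closed(M) for M in subsets)
invariant_subsets = [M for M in subsets if invariant(M)]
critical_points = [x for x in X if invariant(frozenset({x}))]
print(len(invariant_subsets), critical_points)  # 7 []
```

The seven invariant subsets are the nonempty unions of the three orbits; there are no critical points because a nontrivial translation fixes no element.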

3. Main Results

Now we will construct series of groups and hypergroups of artificial neurons, using a certain analogy with the series of groups of differential operators described in [29].

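We denote by $\mathbb{LA}_n(J)$ (for an open interval $J \subseteq \mathbb{R}$) the set of all linear differential operators
$$L(p_0, \dots, p_{n-1})\colon C^n(J) \to C(J), \qquad p_k \in C(J),$$
i.e., with coefficients from the ring of all continuous functions defined on the interval $J$, acting as
$$L(p_0, \dots, p_{n-1})\, y = \frac{d^n y}{dx^n} + \sum_{k=0}^{n-1} p_k(x)\, \frac{d^k y}{dx^k},$$
where $p_m(x) > 0$ on $J$, and endowed with the binary operation
$$L(\mathbf{p}) \circ_m L(\mathbf{q}) = L(\mathbf{u}), \qquad u_k(x) = p_m(x)\, q_k(x) + (1 - \delta_{km})\, p_k(x).$$
Now denote by $\overline{\mathbb{LA}}_n(J)$ the set of all operators $\overline{L}(p_0, \dots, p_{n-1})\colon C^{n-1}(J) \to C(J)$ acting as
$$\overline{L}(p_0, \dots, p_{n-1})\, y = \sum_{k=0}^{n-1} p_k(x)\, \frac{d^k y}{dx^k},$$
with similarly defined binary operations, such that $(\mathbb{LA}_n(J), \circ_m)$ and $(\overline{\mathbb{LA}}_n(J), \circ_m)$ are noncommutative groups. Define mappings $F_n\colon \mathbb{LA}_n(J) \to \mathbb{LA}_{n-1}(J)$ by
$$F_n(L(p_0, \dots, p_{n-1})) = L(p_0, \dots, p_{n-2})$$
and $\varphi_n\colon \mathbb{LA}_n(J) \to \overline{\mathbb{LA}}_n(J)$ by
$$\varphi_n(L(p_0, \dots, p_{n-1})) = \overline{L}(p_0, \dots, p_{n-1}).$$
It can be easily verified that both $F_n$ and $\varphi_n$, for an arbitrary $n \ge 2$, are group homomorphisms.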

Evidently,

for all

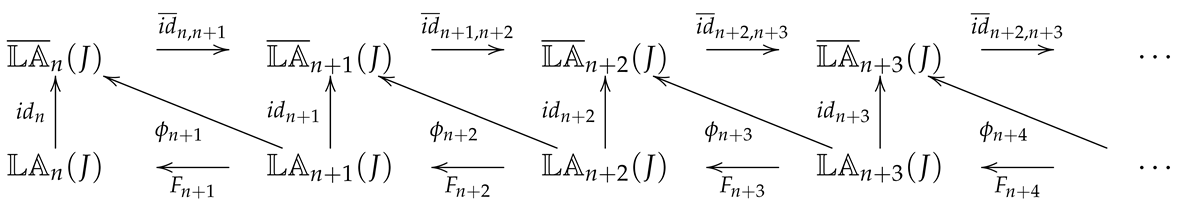

. Thus we obtain complete sequences of ordinary linear differential operators with linking homomorphisms

![Symmetry 11 00927 i001 Symmetry 11 00927 i001]()

Now consider the groups of time-varying neurons

from Proposition 3 and above defined homomorphism of the group

into the group

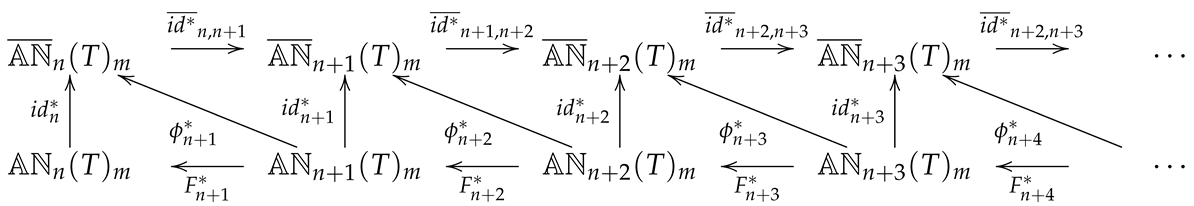

. Then we can change the diagram in the following way:

![Symmetry 11 00927 i002 Symmetry 11 00927 i002]()

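Using the Ends lemma and results of the theory of linear operators, we can also describe the mapping morphisms in sequences of groups of linear differential operators:

Theorem 2. Let $m, n \in \mathbb{N}$ be such that $n \ge 2$ and $1 \le m \le n - 1$. Let $(\mathbb{AN}(T)_{n,m}, *_m)$ be the hypergroup obtained from the group $(\mathbb{AN}(T)_{n,m}, \cdot_m)$ by Lemma 1. Suppose that $\psi_n\colon \mathbb{AN}(T)_{n,m} \to \mathbb{AN}(T)_{n-1,m}$ are the surjective group homomorphisms defined in Proposition 2. Then the mappings $\psi_n$ are surjective homomorphisms of hypergroups.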

Remark 2. The second sequence of (2) can thus be bijectively mapped onto the sequence of hypergroups with the linking surjective homomorphisms $\psi_n$. Therefore, the bijective mapping of the above mentioned sequences is functorial.

Now we shift to the concept of an automaton. It was developed as a mathematical interpretation of real-life systems that work on a discrete time-scale. Using the binary operation of concatenation of chains of input symbols, we obtain automata with input alphabets endowed with the structure of a semigroup or a group. Considering mainly the structure given by the transition function and neglecting output functions with output sets, we reach a very useful generalization of the concept of automaton called a quasi-automaton [29,31,48,49]. Let us introduce the concept of automata as an action of time-varying neurons. A system $\mathcal{A} = (A, S, \delta)$ consisting of a nonempty time-varying neuron set of states $A \subseteq \mathbb{AN}(T)_m$, an arbitrary semigroup of inputs $S$ and a mapping $\delta\colon A \times S \to A$ fulfilling the condition
$$\delta(\delta(a, x), y) = \delta(a, x \cdot y)$$
for arbitrary $a \in A$ and $x, y \in S$ can be understood as an analogy of the concept of a quasi-automaton, i.e., a generalization of the Mealy-type automaton. The above condition is sometimes called the Mixed Associativity Condition (MAC).
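The Mixed Associativity Condition can be tested directly. In this sketch the state set and the input semigroup are both taken from the group of neurons (under our usual assumptions: product $v_k = w_m u_k + (1 - \delta_{km}) w_k$, weights at a fixed $t$, no biases, 0-based $m$), and the transition function $\delta(a, x) = a \cdot_m x$ is a hypothetical choice made for illustration. Right multiplication satisfies MAC by associativity, whereas left multiplication violates it because the inputs are then applied in reverse order.

```python
from fractions import Fraction as Q


def mul(w, u, m):
    """Assumed product w ._m u, v_k = w_m*u_k + (1 - delta_km)*w_k (0-based m)."""
    return tuple(w[m] * uk + (0 if k == m else w[k]) for k, uk in enumerate(u))


def delta_right(state, inp, m):
    """Hypothetical transition function: the state is multiplied by the input on the right."""
    return mul(state, inp, m)


def delta_left(state, inp, m):
    """Contrasting choice: multiplication on the left."""
    return mul(inp, state, m)


def satisfies_mac(delta, states, inputs, m):
    """MAC: delta(delta(a, x), y) == delta(a, x . y) for all states a and inputs x, y."""
    return all(
        delta(delta(a, x, m), y, m) == delta(a, mul(x, y, m), m)
        for a in states for x in inputs for y in inputs
    )


m = 1
e = (Q(0), Q(1), Q(0))  # identity element (weights at a fixed t)
inputs = [(Q(1), Q(2), Q(0)), (Q(3), Q(1), Q(0)), (Q(-2), Q(4), Q(5))]
states = [e] + inputs
print(satisfies_mac(delta_right, states, inputs, m))  # True
print(satisfies_mac(delta_left, states, inputs, m))   # False
```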

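Definition 4. Let $A$ be a nonempty set, $(H, \cdot)$ a semihypergroup and $\delta\colon A \times H \to A$ a mapping satisfying the condition
$$\delta(\delta(s, a), b) \in \delta(s, a \cdot b)$$
for any triad $(s, a, b) \in A \times H \times H$, where $\delta(s, a \cdot b) = \{\delta(s, x);\ x \in a \cdot b\}$. The triad $(A, H, \delta)$ is called a quasi-multiautomaton with the state set $A$ and the input semihypergroup $(H, \cdot)$. The mapping $\delta$ is called the transition function (or next-state function) of the quasi-multiautomaton $(A, H, \delta)$. The above condition is called the Generalized Mixed Associativity Condition (GMAC). The structures just defined are also called actions of semihypergroups on sets $A$ (called state sets).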

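The neuron $Ne(\mathbf{w})$ acts as described above:
$$y(t) = \sum_{i=0}^{n} w_i(t)\, x_i(t) + b,$$
where $i$ goes from $0$ to $n$, $x_i(t)$ is the input value in continuous time $t$, $w_i(t)$ is the weight value in continuous time $t$, $b$ is a bias and $y(t)$ is the output value in continuous time $t$. Here the transfer function $F$ is the identity function.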

Now suppose that the input functions are differentiable up to an arbitrary order.

We consider linear differential operators

defined

Then we denote by

the additive Abelian group of linear differential operators

, where for

with the bias b we define

where

Suppose that

and define

by

are weights corresponding with inputs and b is the bias of a neuron corresponding to the operator

.

Theorem 3. Let be the above defined structures and be the above defined mapping. Then the triad is an action of the group on the group , i.e., a quasi-automaton with the state space and with the alphabet with the group structure of artificial neurons.

Proof. We are going to verify the mixed associativity condition (MAC). Suppose

and

. Then we have

thus MAC is satisfied. □

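Consider an interval $J \subseteq \mathbb{R}$ and the ring $C(J)$ of all continuous functions defined on this interval. Let $\{\rho_n;\ n \in \mathbb{N}\}$ be a sequence of ring endomorphisms of $C(J)$. Denote by $(\mathbb{AN}(J)_n, *_m)$ the EL-hypergroup of artificial neurons constructed above, with vectors of weights of dimension $n$. Let $C(J)^n$ be the $n$-dimensional Cartesian cube. Denote by $\rho_n^*\colon C(J)^n \to C(J)^n$ the extension of $\rho_n$ such that
$$\rho_n^*(w_1, \dots, w_n) = (\rho_n(w_1), \dots, \rho_n(w_n)).$$
Let us denote by $\hat\rho_n$ the mapping defined by
$$\hat\rho_n(Ne(\mathbf{w})) = Ne(\rho_n^*(\mathbf{w})),$$
with the bias of $Ne(\mathbf{w})$ left unchanged. Consider the underlying sets of the hypergroups $(\mathbb{AN}(J)_n, *_m)$ endowed with the above defined ordering relation: we have $Ne(\mathbf{w}) \le_m Ne(\mathbf{u})$ if $w_k \le u_k$ for all $k$ and $w_m = u_m$. Now, for $Ne(\mathbf{w}), Ne(\mathbf{u})$ such that $Ne(\mathbf{w}) \le_m Ne(\mathbf{u})$, which means $w_k \le u_k$ for $k = 1, \dots, n$, $w_m = u_m$, and the biases of the corresponding neurons are the same, we have $\rho_n(w_k) \le \rho_n(u_k)$ and $\rho_n(w_m) = \rho_n(u_m)$, which implies $\hat\rho_n(Ne(\mathbf{w})) \le_m \hat\rho_n(Ne(\mathbf{u}))$. Indeed, every ring endomorphism of $C(J)$ preserves the pointwise order: if $f \ge 0$, then $f = (\sqrt{f})^2$ with $\sqrt{f} \in C(J)$, hence $\rho_n(f) = \rho_n(\sqrt{f})^2 \ge 0$; applying this to $f = u_k - w_k$ yields the inequality.

Consequently, the mapping $\hat\rho_n$ is order-preserving, i.e., it is an order-homomorphism of hypergroups. The final result of our considerations is the following sequence of hypergroups of artificial neurons and linking homomorphisms: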