Over the years, researchers have been attracted to study the scientific problems modelled in fractional differential equations due to their constant appearance in the different disciplines of mathematical sciences and engineering such as fluid mechanics, viscoelasticity, mathematical physics, mathematical biology, system identification, control theory, electrochemistry and signal processing [

1,

2,

3,

4,

5,

6]. Several analytical and numerical techniques have been developed to solve such kind of equations in the literature. Among these methods, Li and Sun [

7] derived the generalized block pulse operational matrix to find the solution of fractional differential equations in terms of block pulse function. A truncated Legendre series together with generalized Legendre operational matrix is used to solve fractional differential equations by Saadatmandi and Dehgan in [

8] and they also have presented shifted Legendre-tau method for finding the solution of fractional diffusion equations with variable coefficients in [

9]. Doha et al. [

10] used the shifted Jacobi operational matrix of fractional derivatives applied together with spectral tau-method for solving fractional differential equations. Kazem et al. [

11] constructed an orthogonal fractional order Legendre function based on Legendre polynomials to solve fractional differential equations. Mokhtary et al. [

12] provided the operational tau method based on Muntz–Legendre polynomials for solving fractional differential equations. Bernoulli wavlet operational matrix of fractional order integration has been derived to approximate the numerical solution of fractional differential equations in [

13]. Fractional-order Lagrange polynomials have been proposed to solve the fractional differential equations in [

14]. Albadarneh et al. [

15] adopted the fractional finite difference method for solving linear and nonlinear fractional differential equations. Garrappa [

16] provided a detailed survey on the two methods, i.e., product integration rules (PI) and Lubich’s fractional linear multi step methods (FLMMs) for solving fractional differential equations. Dehghan et al. [

17] adopted homotopy analysis method to solve linear fractional partial differential equations, a meshless approximation strategy for solving fractional partial differential equations based on radial basis function is used in [

18]. Haar wavlets have been employed to obtain the solutions of boundary value problems for linear fractional partial differential equations by Rehman and Khan [

19]. Li et al. [

20] solved the linear fractional partial differential equations based on operational matrix of fractional Bernstein polynomials. Yang et al. [

21] discussed the solution of fractional order diffusion equations within the negative Prabhakar kernel comprise with the Laplace transform and the series solutions in terms of general Mittag–Leffler functions. In [

22], a new factorization technique has been adopted for nonlinear differential equations involving local fractional derivatives and found the exact solutions for nonlinear local fractional FitzHugh–Nagumo and Newell–Whitehead equations. Cesarano [

23] proposed the Hermite polynomials based operational method to solve fractional diffusive equations. From the last few decades, the Laplace transform method has become popular and adopted by many researchers to solve differential and integral equations. Since then it is necessary to find the inverse Laplace transform for finding the solution in its original domain. There exist a number of analytical and numerical methods for inverting a Laplace transform. For details one can refer [

24,

25,

26,

27,

28,

29,

30,

31,

32,

33,

34,

35,

36,

37,

38,

39,

40,

41]. The Laplace transform method reduces the differential or integral equation into a system of algebraic equations due to the Heaviside’s operational method. Further, Hasio and Chen [

42] extended the idea of Heaviside’s operational method to operational matrix of integration. In the literature, few papers reported the use of operational matrices for inversion of Laplace transform: Chen et al. [

39] obtained the walsh operational matrices and applied to distributed system, Wu et al. [

40] adopted Haar wavlet operational matrices for numerical inverse Laplace transform of certain functions, Aznam and Hussin [

38] modified Haar wavlet operational matrices by using generalized block pulse functions, Babolian and Shamloo [

24] used operational matrices based on piecewise-constant block pulse function to invert the Laplace transform and solved Volterra integral equations, Maleknejad and Nouri [

25] improved the operational matrices based on block pulse functions and Shamloo et al. [

41] adopted this method to solve first kind of integral equations. The main purpose of using an operational matrix is that it converts the differential or integral equations into a system of algebraic equations that is simple and easy to solve.

Bernstein polynomials and its operational matrix is used to solve many differential, integral and integro-differential equations [

43,

44,

45,

46,

47,

48,

49]. But these are not adopted with Laplace transform for inverting the Laplace transform. A Bernstein operational matrix of integration has been developed to find the numerical inverse Laplace transform of certain functions in our paper [

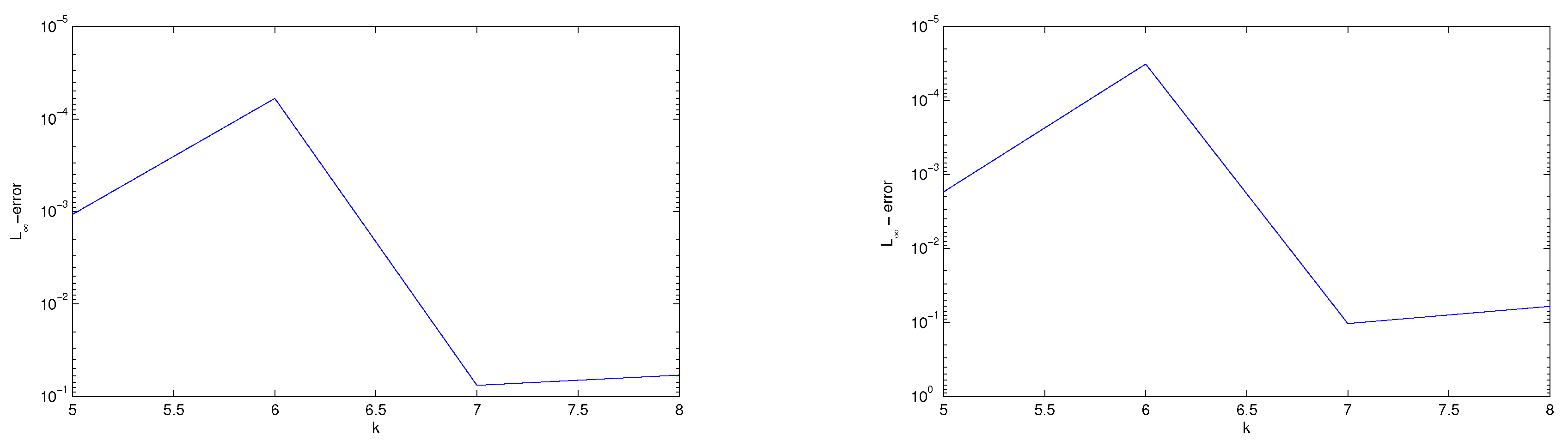

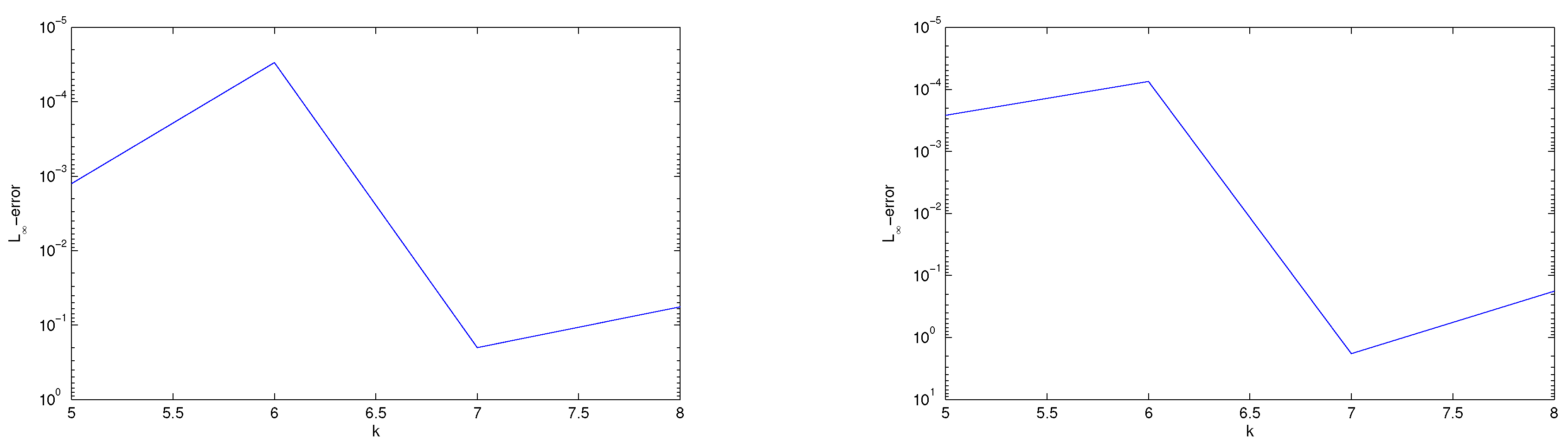

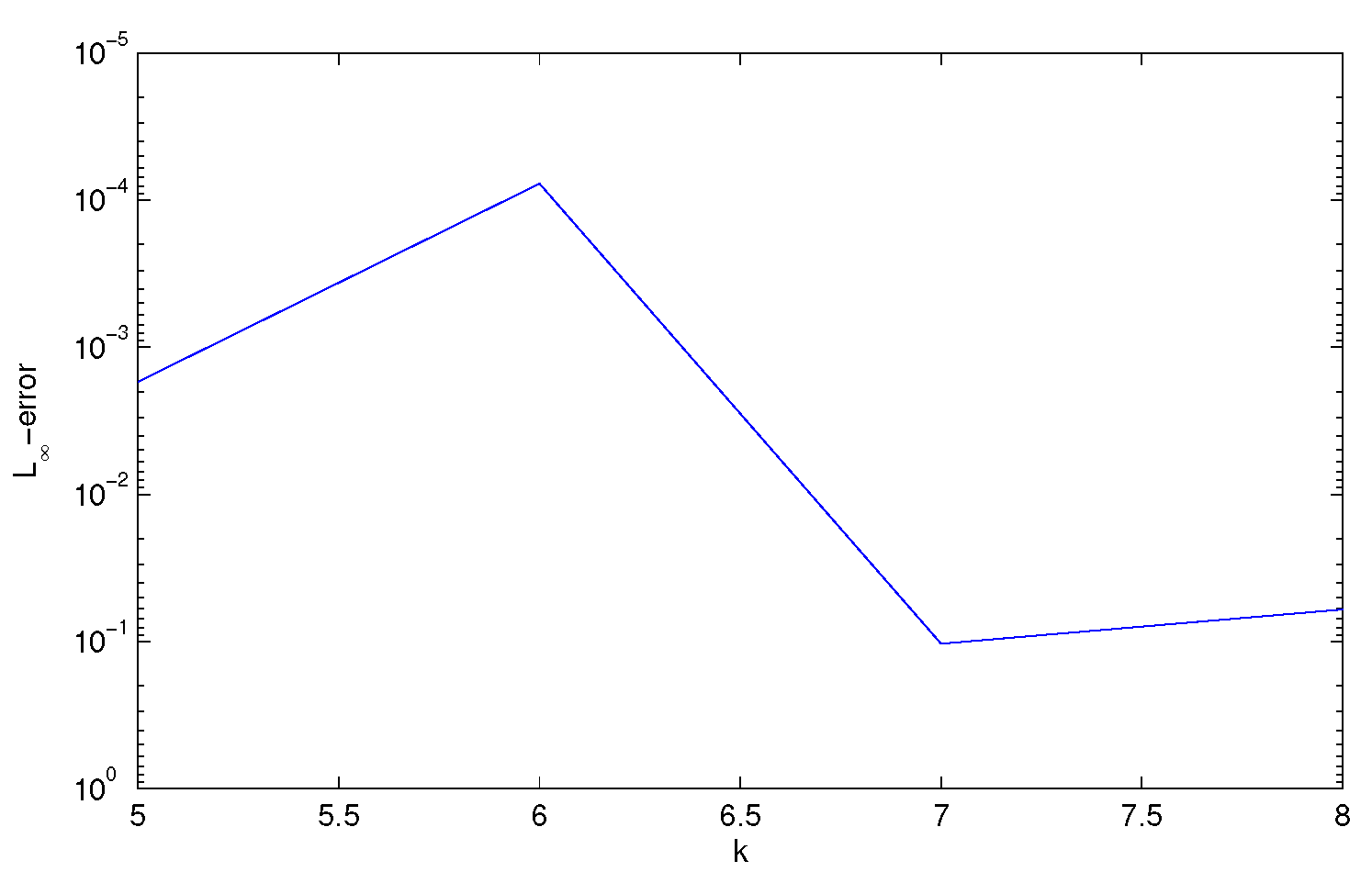

50]. The proposed method expresses the solution of equations in terms of truncated Bernstein expansion and then using its operational matrix of integration, numerical inverse Laplace transform is obtained. The operational matrix of integration of Bernstein polynomials is easily calculated using a single formula of integration rather than Haar or block pulse function where the order of matrix is taken too large, i.e.,

. Here in our method, we achieve the accuracy using a matrix of order 6 or 7. In the present research, our aim is to find the numerical solutions of linear fractional ordinary and partial differential equations, nonlinear fractional differential equations and system of fractional differential equations using numerical inverse Laplace transform method based on Bernstein operational matrix of integration developed in [

50]. At first, for linear problems, we transform the fractional differential equations to system of linear algebraic equations using Laplace transform then the numerical approach for calculating the inverse Laplace transform is used to retrieve the time-domain. Here, we extend our numerical approach to solve some nonlinear fractional differential equations together with a well-known iterative method, i.e., a Laplace Adomian decomposition method [

51] (briefly explained in the

Section 4). One more advantage of using our proposed method is that there is no need of any fractional order matrix of integration for numerical inversion to solve fractional order differential equations.

The paper is organized as follows: In

Section 2, some necessary definitions and preliminaries of the fractional calculus theory and Laplace transform are given.

Section 3 reveals the basics of Bernstein polynomials, derivation of operational matrix of integration and function approximation in Bernstein polynomials. In

Section 4, the proposed method is explained and presented the application to a class of fractional differential equations.

Section 5 represents the error estimation and convergence analysis. In

Section 6 the illustrative examples are given to show the applicability of the method.

Section 7 is refers to conclusions.