Automatic Defect Inspection for Coated Eyeglass Based on Symmetrized Energy Analysis of Color Channels

Abstract

1. Introduction

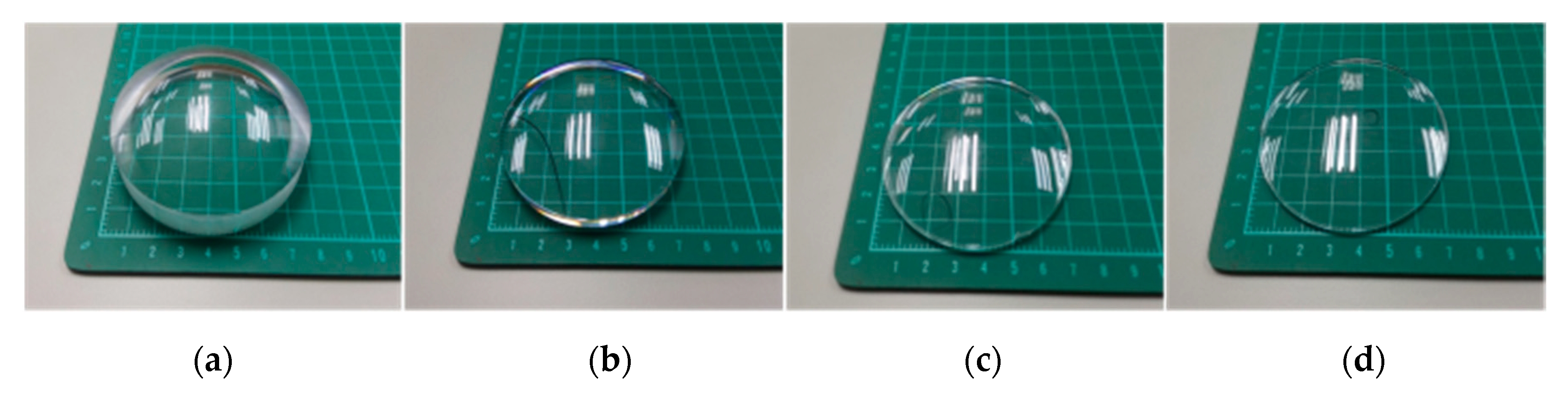

2. Overview of Eyeglass Types and Their Coating Process

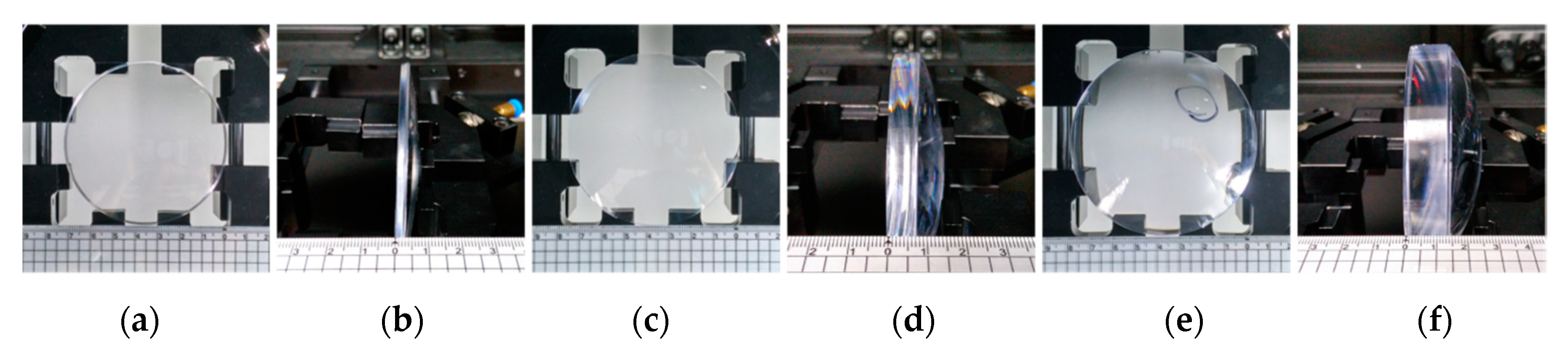

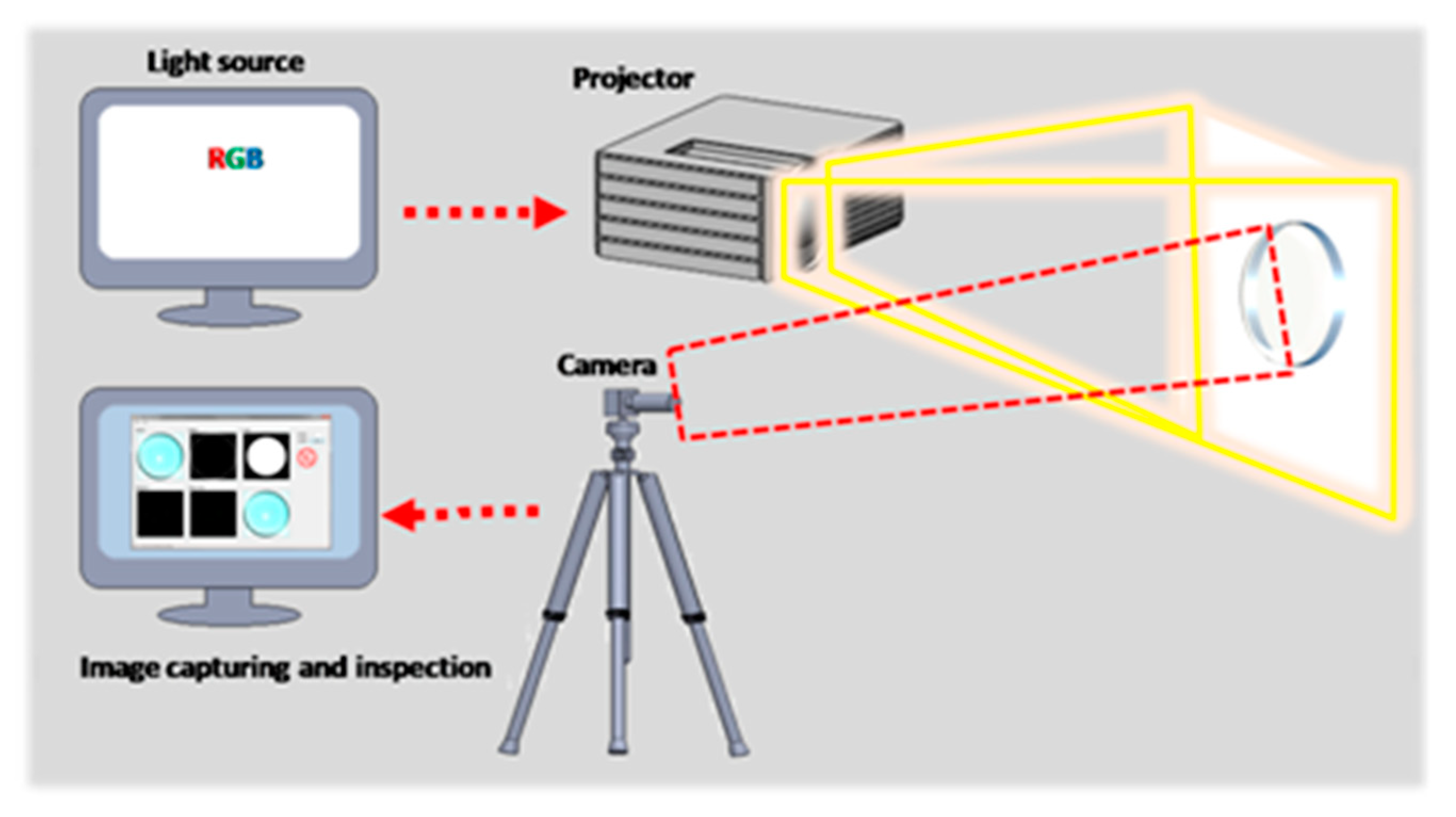

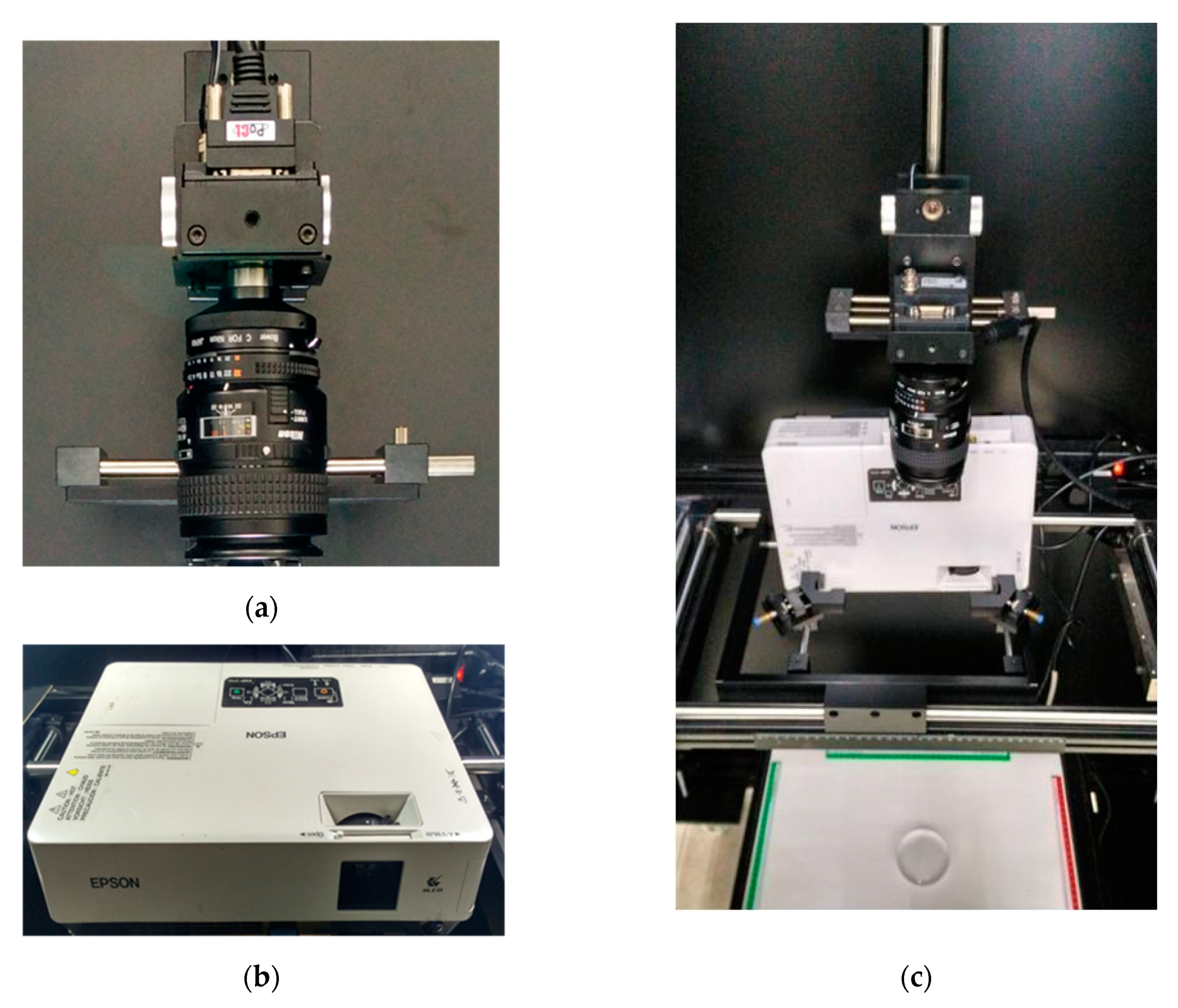

3. Image Acquisition System

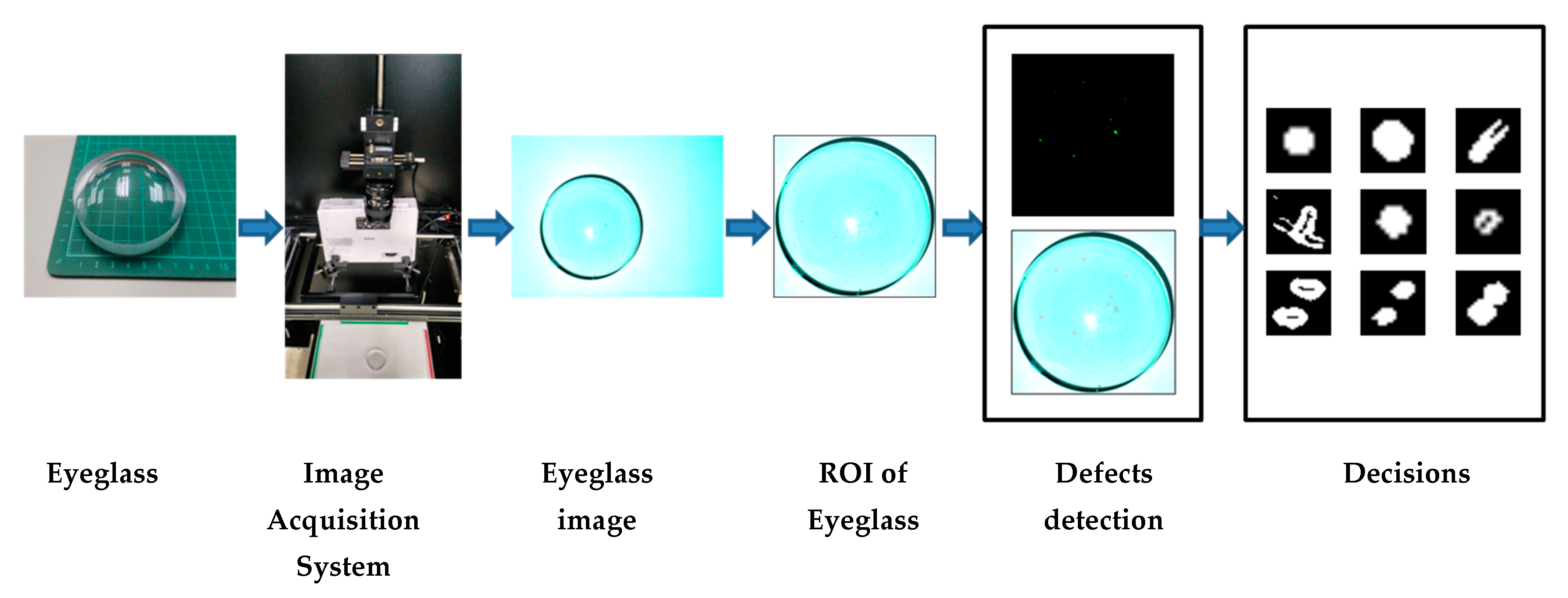

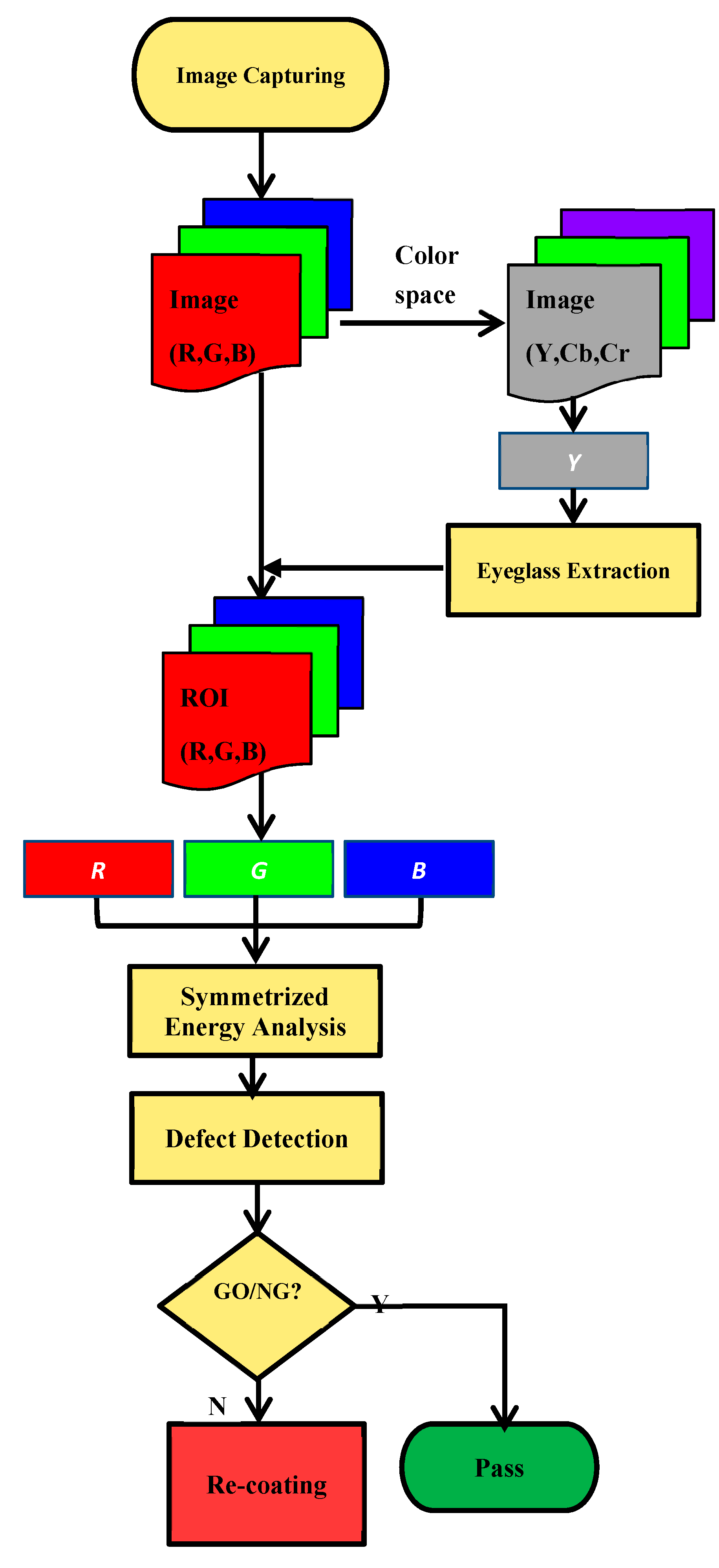

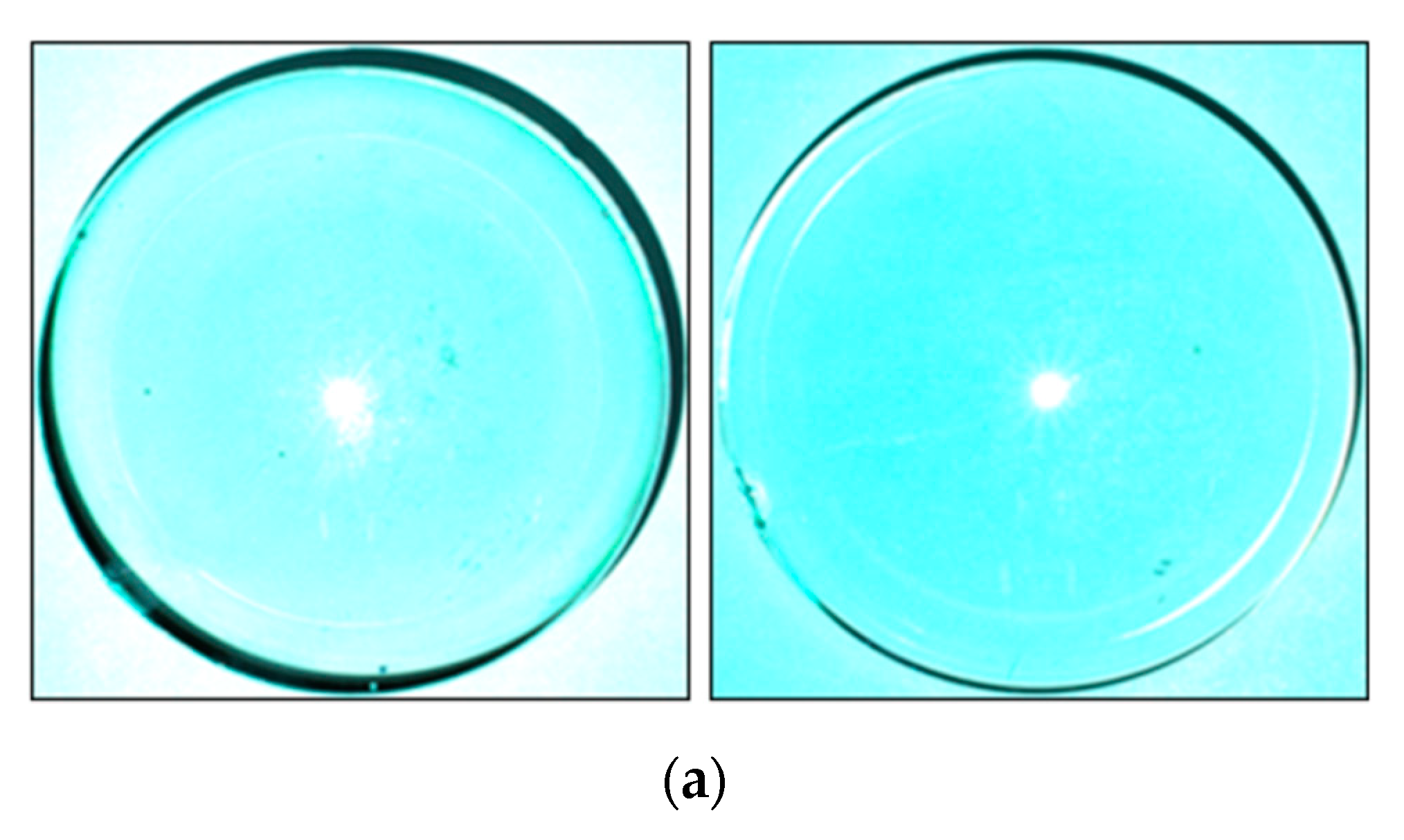

4. CEDDS

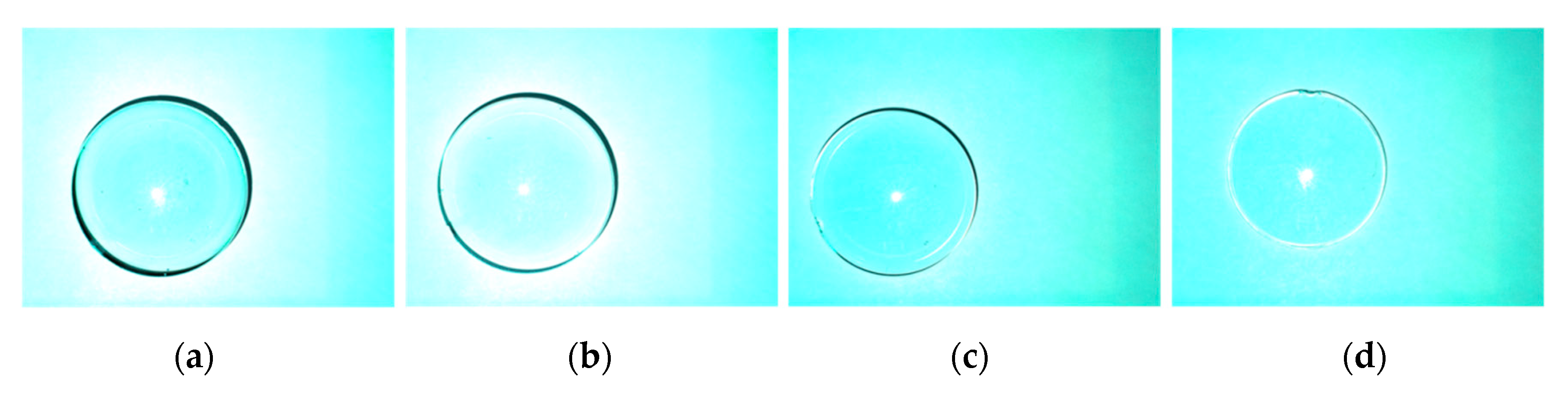

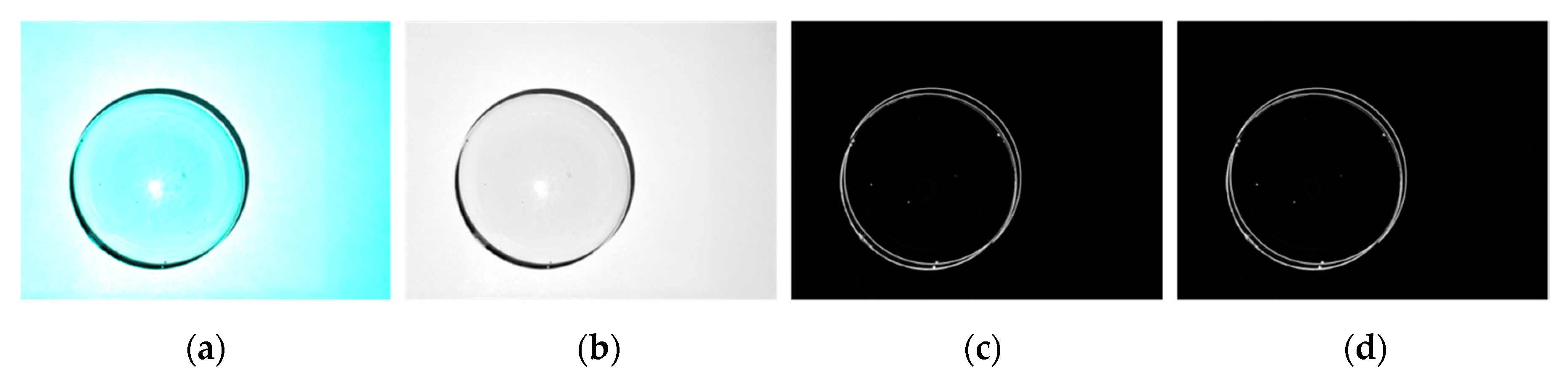

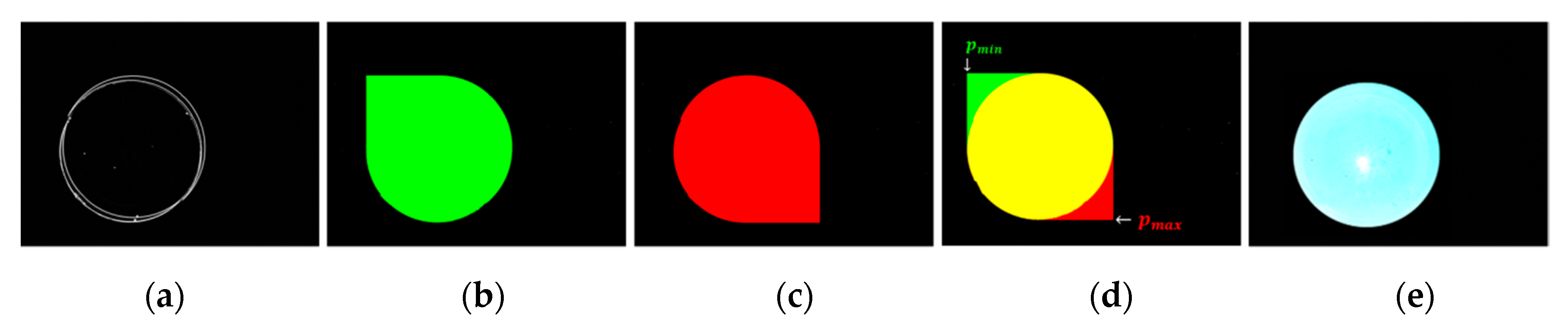

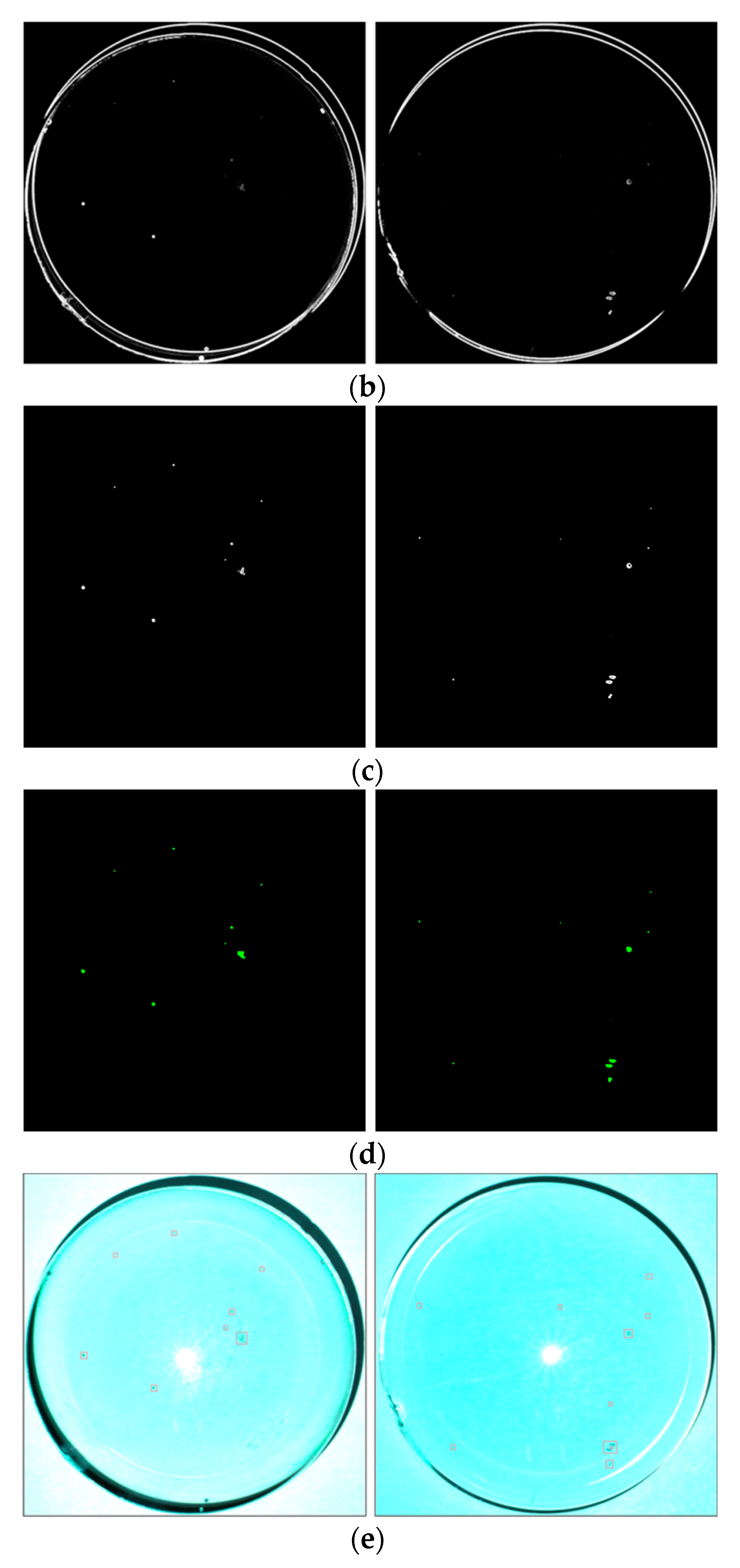

4.1. Eyeglass Extraction

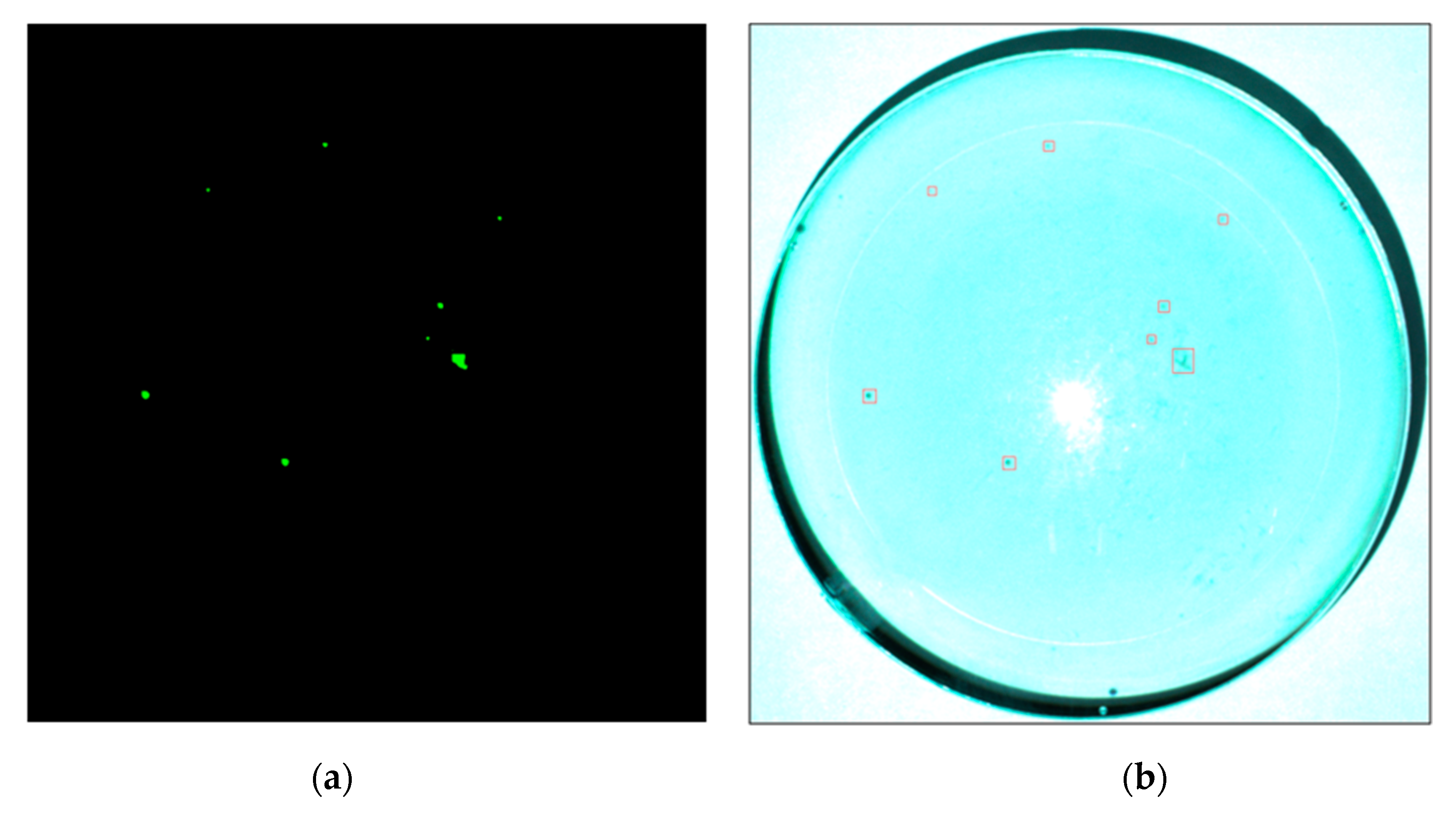
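Eyeglass extraction generally means separating the lens region from its background. The reference list cites Otsu's histogram-based threshold selection, and the comparison method of Pugin and Zhiznyakov is also a histogram binarization technique. The following is a minimal NumPy sketch of Otsu's method, included as background only. It assumes an 8-bit grayscale input in which lens and background fall into two separable intensity classes, and it assumes the class of interest is the brighter one. It is an illustration of the general technique, not the extraction procedure used in CEDDS; the helper names `otsu_threshold` and `foreground_mask` are hypothetical.

```python
import numpy as np


def otsu_threshold(gray: np.ndarray) -> int:
    """Return Otsu's threshold t for an 8-bit grayscale image.

    Pixels with value <= t form one class and pixels > t the other;
    t maximizes the between-class variance (Otsu, 1979).
    """
    if gray.dtype != np.uint8:
        raise ValueError("expected an 8-bit (uint8) grayscale image")
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    p = hist / hist.sum()                    # normalized histogram p_i
    omega = np.cumsum(p)                     # probability of class 0 for each t
    mu = np.cumsum(p * np.arange(256))       # cumulative first moment up to t
    mu_t = mu[-1]                            # global mean intensity
    denom = omega * (1.0 - omega)
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b2 = (mu_t * omega - mu) ** 2 / denom
    sigma_b2[denom == 0] = 0.0               # thresholds that leave a class empty
    return int(np.argmax(sigma_b2))


def foreground_mask(gray: np.ndarray) -> np.ndarray:
    """Boolean mask of pixels brighter than the Otsu threshold.

    Assumption: the object of interest is the brighter class.
    """
    return gray > otsu_threshold(gray)


# Synthetic two-level image: dark left half, bright right half.
img = np.full((10, 10), 50, dtype=np.uint8)
img[:, 5:] = 200
t = otsu_threshold(img)
mask = foreground_mask(img)
print(t, int(mask.sum()))  # prints: 50 50
```

The threshold maximizes the between-class variance σ_B²(t) = (μ_T ω(t) − μ(t))² / (ω(t)(1 − ω(t))). For an image containing only the values 50 and 200, every t in [50, 200) separates the classes equally well, and `np.argmax` returns the first such value. Whether the lens appears brighter or darker than the background depends on the acquisition setup, so the comparison in `foreground_mask` may need to be inverted.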

4.2. Defect Detection Based on Symmetrized Energy Analysis of Three Color Channels

5. Experimental Results and Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Moganti, M.; Ercal, F. Automatic PCB inspection systems. IEEE Potentials 1995, 14, 6–10.

- Kaur, K. Various techniques for PCB defect detection. Int. J. Eng. Sci. 2016, 17, 175–178.

- Ong, A.T.; Ibrahim, Z.B.; Ramli, S. Computer machine vision inspection on printed circuit boards flux defects. Am. J. Eng. Appl. Sci. 2013, 6, 263–273.

- Mar, N.; Yarlagadda, P.; Fookes, C. Design and development of automatic visual inspection system for PCB manufacturing. Robot. Comput. Integr. Manuf. 2011, 27, 949–962.

- Yun, J.P.; Kim, D.; Kim, K.H.; Lee, S.J.; Park, C.H.; Kim, S.W. Vision-based surface defect inspection for thick steel plates. Opt. Eng. 2017, 56, 053108.

- Luo, Q.; He, Y. A cost-effective and automatic surface defect inspection system for hot-rolled flat steel. Robot. Comput. Integr. Manuf. 2016, 38, 16–30.

- Zhao, Y.J.; Yan, Y.H.; Song, K.C. Vision-based automatic detection of steel surface defects in the cold rolling process: Considering the influence of industrial liquids and surface textures. Int. J. Adv. Manuf. Technol. 2016, 90, 1–14.

- Yun, J.P.; Choi, D.C.; Jeon, Y.J.; Park, C.Y.; Kim, S.W. Defect inspection system for steel wire rods produced by hot rolling process. Int. J. Adv. Manuf. Technol. 2014, 70, 1625–1634.

- Kang, D.; Jang, Y.J.; Won, S. Development of an inspection system for planar steel surface using multispectral photometric stereo. Opt. Eng. 2013, 52, 039701.

- Li, Q.; Ren, S. A real-time visual inspection system for discrete surface defects of rail heads. IEEE Trans. Instrum. Meas. 2012, 61, 2189–2199.

- Pan, R.; Gao, W.; Liu, J.; Wang, H. Automatic recognition of woven fabric pattern based on image processing and BP neural network. J. Text. Inst. 2011, 102, 19–30.

- Kumar, A. Computer-vision-based fabric defect detection: A survey. IEEE Trans. Ind. Electron. 2008, 55, 348–363.

- Yang, X.; Pang, G.; Yung, N. Robust fabric defect detection and classification using multiple adaptive wavelets. IEE Proc. Vis. Image Signal Process. 2005, 152, 715–723.

- Tsai, D.-M.; Li, G.-N.; Li, W.-C.; Chiu, W.-Y. Defect detection in multi-crystal solar cells using clustering with uniformity measures. Adv. Eng. Inform. 2015, 29, 419–430.

- Li, W.-C.; Tsai, D.-M. Automatic saw-mark detection in multicrystalline solar wafer images. Sol. Energy Mater. Sol. Cells 2011, 95, 2206–2220.

- Chiou, Y.-C.; Liu, F.-Z. Micro crack detection of multi-crystalline silicon solar wafer using machine vision techniques. Sens. Rev. 2011, 31, 154–165.

- Tsai, D.-M.; Chang, C.-C.; Chao, S.-M. Micro-crack inspection in heterogeneously textured solar wafers using anisotropic diffusion. Image Vis. Comput. 2010, 28, 491–501.

- Fuyuki, T.; Kitiyanan, A. Photographic diagnosis of crystalline silicon solar cells utilizing electroluminescence. Appl. Phys. A 2009, 96, 189–196.

- Michaeli, W.; Berdel, K. Inline inspection of textured plastics surfaces. Opt. Eng. 2011, 50, 027205.

- Liu, B.; Wu, S.J.; Zou, S.F. Automatic detection technology of surface defects on plastic products based on machine vision. In Proceedings of the 2010 International Conference on Mechanic Automation and Control Engineering, Wuhan, China, 26–28 June 2010; pp. 2213–2216.

- Gan, Y.; Zhao, Q. An effective defect inspection method for LCD using active contour model. IEEE Trans. Instrum. Meas. 2013, 62, 2438–2445.

- Bi, X.; Zhuang, C.G.; Ding, H. A new Mura defect inspection way for TFT-LCD using level set method. IEEE Signal Process. Lett. 2009, 16, 311–314.

- Chen, S.L.; Chou, S.T. TFT-LCD Mura defect detection using wavelet and cosine transforms. J. Adv. Mech. Des. Syst. Manuf. 2008, 2, 441–453.

- Lee, J.Y.; Yoo, S.I. Automatic detection of region-Mura defect in TFT-LCD. IEICE Trans. Inf. Syst. 2004, 87, 2371–2378.

- Peng, X.; Chen, Y.; Yu, W.; Zhou, Z.; Sun, G. An online defects inspection method for float glass fabrication based on machine vision. Int. J. Adv. Manuf. Technol. 2008, 39, 1180–1189.

- Adamo, F.; Attivissimo, F.; Nisio, A.D.; Savino, M. An online defects inspection system for satin glass based on machine vision. In Proceedings of the 2009 IEEE Instrumentation and Measurement Technology Conference, Singapore, 5–7 May 2009; pp. 288–293.

- Zhou, X.; Wang, Y.; Xiao, C.; Zhu, Q.; Lu, X.; Zhang, H.; Ge, J.; Zhao, H. Automated visual inspection of glass bottle bottom with saliency detection and template matching. IEEE Trans. Instrum. Meas. 2019, 1–15.

- Liang, Q.; Xiang, S.; Long, J.; Sun, W.; Wang, Y.; Zhang, D. Real-time comprehensive glass container inspection system based on deep learning framework. Electron. Lett. 2018, 55, 131–132.

- Jin, Y.; Wang, Z.; Zhu, L.; Yang, J. Research on in-line glass defect inspection technology based on dual CCFL. Proc. Eng. 2011, 15, 1797–1801.

- Adamo, F.; Attivissimo, F.; Nisio, A.D.; Savino, M. An automated visual inspection system for the glass industry. In Proceedings of the 16th IMEKO TC4 International Symposium, Florence, Italy, 22–24 September 2008.

- Liu, Y.; Yu, F. Automatic inspection system of surface defects on optical IR-CUT filter based on machine vision. Opt. Laser Eng. 2014, 55, 243–257.

- Gonzalez, R.C.; Woods, R.E. Digital Image Processing, 3rd ed.; Prentice-Hall: Englewood Cliffs, NJ, USA, 2008.

- Lu, S.; Wang, Z.; Shen, J. Neuro-fuzzy synergism to the intelligent system for edge detection and enhancement. Pattern Recognit. 2008, 36, 2395–2409.

- Lu, D.S.; Chen, C.C. Edge detection improvement by ant colony optimization. Pattern Recognit. Lett. 2008, 29, 416–425.

- Santosh, K.C.; Wendling, L.; Antani, S.; Thoma, G.R. Overlaid arrow detection for labeling regions of interest in biomedical images. IEEE Intell. Syst. 2016, 31, 66–75.

- Santosh, K.C.; Alam, N.; Roy, P.P.; Wendling, L.; Antani, S.; Thoma, G.R. A simple and efficient arrowhead detection technique in biomedical images. Int. J. Pattern Recognit. Artif. Intell. 2016, 30, 1657002.

- Santosh, K.C.; Roy, P.P. Arrow detection in biomedical images using sequential classifier. Int. J. Mach. Learn. Cybern. 2018, 9, 993–1006.

- Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Pugin, E.; Zhiznyakov, A. Histogram Method of Image Binarization based on Fuzzy Pixel Representation. In Proceedings of the Dynamics of Systems, Mechanisms and Machines, Omsk, Russia, 14–16 November 2017.

| Object (distance between two objects) | Projector to Screen | CCD Camera to Screen | Sample to Screen |
|---|---|---|---|
| Distance | 680 mm | 715 mm | 0 mm |

| Mode | Balanced Ratio (R) | Balanced Ratio (G) | Balanced Ratio (B) | Exposure Time |
|---|---|---|---|---|
| E.T. 13.0, E.T. 7.0 | 120 | 80 | 120 | 60 ms |
| E.T. 3.2, E.T. 2.9 | 120 | 80 | 120 | 56 ms |

| No. | Defect Size (E.T. 13.0) | Defect Size (E.T. 7.0) | Defect Size (E.T. 3.2) | Defect Size (E.T. 2.9) |
|---|---|---|---|---|
| 1 | 7 × 8 | 5 × 5 | 7 × 5 | 27 × 20 |
| 2 | 5 × 5 | 6 × 12 | 5 × 3 | 18 × 9 |
| 3 | 6 × 6 | - | 6 × 6 | 5 × 6 |
| 4 | 9 × 9 | - | 17 × 17 | 17 × 17 |
| 5 | 5 × 5 | - | 28 × 33 | 5 × 5 |
| 6 | 31 × 26 | - | 5 × 6 | 15 × 14 |
| 7 | 13 × 13 | - | 15 × 13 | 4 × 5 |
| 8 | 12 × 12 | - | - | 14 × 11 |

| Mode | Defect Detection Rate (Pugin and Zhiznyakov Method) | Defect Detection Rate (Our Method) | Go/NG Decision Time | Total Running Time |
|---|---|---|---|---|
| E.T. 13.0, E.T. 7.0 | 98.6% | 100% | 1.583 s/pc. | 6.022 s/pc. |
| E.T. 3.2, E.T. 2.9 | 98.6% | 100% | 1.596 s/pc. | 6.102 s/pc. |
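The per-piece timings in the results table can be turned into rough throughput figures. The short sketch below does that arithmetic under two labeled assumptions: pieces are inspected strictly one after another with no handling time beyond the reported total running time, and the Go/NG decision time is part of that total. The names `TIMINGS`, `pieces_per_hour`, and `go_ng_share` are hypothetical.

```python
# Per-piece timings copied from the results table (seconds per piece).
TIMINGS = {
    "E.T. 13.0, E.T. 7.0": {"go_ng_s": 1.583, "total_s": 6.022},
    "E.T. 3.2, E.T. 2.9": {"go_ng_s": 1.596, "total_s": 6.102},
}


def pieces_per_hour(total_s: float) -> float:
    """Throughput, assuming pieces are processed strictly one after another."""
    return 3600.0 / total_s


def go_ng_share(go_ng_s: float, total_s: float) -> float:
    """Fraction of the running time spent on the Go/NG decision.

    Assumption: the Go/NG decision time is included in the total running time.
    """
    return go_ng_s / total_s


for mode, t in TIMINGS.items():
    print(f"{mode}: {pieces_per_hour(t['total_s']):.0f} pcs/h, "
          f"Go/NG share {go_ng_share(t['go_ng_s'], t['total_s']):.1%}")
```

Under these assumptions, both modes come to roughly 590 to 600 pieces per hour, and the Go/NG decision accounts for about a quarter of each piece's running time.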

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Le, N.T.; Wang, J.-W.; Wang, C.-C.; Nguyen, T.N. Automatic Defect Inspection for Coated Eyeglass Based on Symmetrized Energy Analysis of Color Channels. Symmetry 2019, 11, 1518. https://doi.org/10.3390/sym11121518
