Abstract

Nowadays, video surveillance has become ubiquitous with the rapid development of artificial intelligence. Multi-object detection (MOD) is a key step in video surveillance and has been widely studied for a long time. The majority of existing MOD algorithms follow the “divide and conquer” pipeline and utilize popular machine learning techniques to optimize algorithm parameters. However, this pipeline is usually suboptimal, since it decomposes the MOD task into several sub-tasks and does not optimize them jointly. In addition, the frequently used supervised learning methods rely on labeled data, which are scarce and expensive to obtain. Thus, we propose an end-to-end Unsupervised Multi-Object Detection framework for video surveillance, where a neural model learns to detect objects from each video frame by minimizing the image reconstruction error. Moreover, we propose a Memory-Based Recurrent Attention Network to ease detection and training. The proposed model was evaluated on both synthetic and real datasets, demonstrating its potential.

1. Introduction

Video surveillance aims to analyze video data recorded by cameras. It has been widely used in crime prevention, industrial processes, traffic monitoring, sporting events, etc. A key step in video surveillance is object detection, i.e., locating multiple objects with bounding boxes in each video frame. This is crucial for downstream tasks such as recognition, tracking, behavior analysis, and event parsing.

Multi-object detection (MOD) from visual data has been extensively studied for many years by the computer vision community. Classical methods such as Deformable Part Models (DPMs) [1] follow the “divide and conquer” pipeline, in which a sliding-window approach is first used to generate image regions, then a classifier (e.g., a Support Vector Machine [2]) is employed to categorize each region as object/non-object, and finally post-processing is applied to refine the bounding boxes of object regions (e.g., removing outliers, merging duplicates, and rectifying boundaries). To improve both the efficiency and performance of MOD, methods based on Region-based Convolutional Neural Networks (R-CNNs) [3,4,5,6] were proposed and perform well on various popular object detection datasets [7,8,9,10,11]. In contrast to previous methods, they selectively generate only a small number of region proposals and use Convolutional Neural Networks (CNNs) [12,13] as more expressive classifiers. However, as this “divide and conquer” pipeline breaks the MOD problem down into several sub-problems and optimizes them separately, the resulting solutions are usually sub-optimal. To optimize the MOD problem jointly, Huang et al. [14], Redmon et al. [15], and Liu et al. [16] formulated object detection as a single regression problem that directly maps the image to object bounding boxes, achieving end-to-end learning, which greatly simplifies the MOD process.

Nevertheless, all the above methods rely on supervised learning, which requires labeled data, and manually labeling object bounding boxes is very expensive. Moreover, unlike general MOD tasks, video surveillance is usually concerned with a specific class of objects, and the background usually does not change over time, so it can be easily extracted to ease detection. To this end, we propose a novel framework that achieves unsupervised end-to-end learning of MOD for video surveillance. We summarize our contributions as follows:

- We propose an Unsupervised Multi-Object Detection (UMOD) framework, where a neural model learns to detect objects from each video frame by minimizing the image reconstruction error.

- We propose a Memory-Based Recurrent Attention Network to improve detection efficiency and ease model training.

- We assess the proposed model on both the synthetic dataset (Sprites) and the real dataset (DukeMTMC [17]), exhibiting its advantages and practicality.

2. Unsupervised Multi-Object Detection

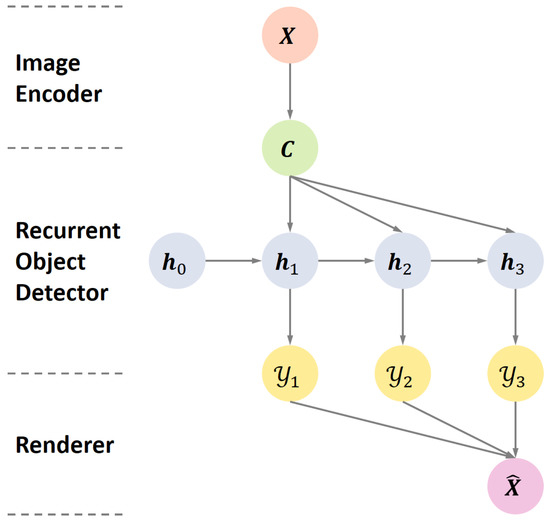

The UMOD framework is composed of four modules, including: (i) an image encoder extracting input features from the input image; (ii) a recurrent object detector recursively detecting objects using the input features; (iii) a parameter-free renderer reconstructing the input image using the detector outputs; and (iv) a reconstruction loss driving the learning of Modules (i) and (ii), in an unsupervised and end-to-end fashion.

2.1. Image Encoder

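Firstly, we use a neural image encoder $\mathrm{NN}_{\mathrm{enc}}$ to compress the input image $\mathbf{X}$ into the input feature $\mathbf{C}$:

$$\mathbf{C} = \mathrm{NN}_{\mathrm{enc}}(\mathbf{X}; \theta_{\mathrm{enc}}) \tag{1}$$

where $\mathbf{X} \in [0,1]^{H \times W \times D}$ has height $H$, width $W$, and channel number $D$; $\mathbf{C} \in \mathbb{R}^{M \times N \times S}$ has height $M$, width $N$, and channel number $S$; and $\theta_{\mathrm{enc}}$ denotes the network parameters to be learned. Because $\mathbf{C}$ contains significantly fewer elements than $\mathbf{X}$ and is used as the input to the succeeding modules, the computational complexity of object detection is greatly reduced.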

2.2. Recurrent Object Detector

Based on the observation that different objects usually share common patterns in video surveillance MOD tasks, we iteratively apply the same neural model, namely the recurrent object detector, to extract objects from the input feature $\mathbf{C}$. This not only regularizes the model but also reduces the number of parameters, thereby maintaining learning efficiency as the number of objects increases.

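The Recurrent Object Detector consists of a recurrent module $R$ and a neural decoder $\mathrm{NN}_{\mathrm{dec}}$. In the $t$-th iteration ($t = 1, 2, \ldots, T$, where $T$ is the maximum detection step), the detector first updates its state vector $\mathbf{h}_t$ (throughout this paper, we assume vectors are in row form) via $R$ (parameterized by $\theta_R$):

$$\mathbf{h}_t = R(\mathbf{h}_{t-1}, \mathbf{C}; \theta_R) \tag{2}$$

Although $R$ could naturally be implemented as a Recurrent Neural Network (RNN) [18,19,20] (in which case $\mathbf{C}$ needs to be vectorized), we model $R$ using a novel architecture to improve network efficiency, which is discussed in Section 3.

Given $\mathbf{h}_t$, the detector output $\mathcal{Y}_t$ can then be generated through a neural decoder $\mathrm{NN}_{\mathrm{dec}}$ (parameterized by $\theta_{\mathrm{dec}}$):

$$\mathcal{Y}_t = \mathrm{NN}_{\mathrm{dec}}(\mathbf{h}_t; \theta_{\mathrm{dec}}) \tag{3}$$

where the quintuple

$$\mathcal{Y}_t = \{ y^c_t, \mathbf{y}^l_t, \mathbf{y}^p_t, \mathbf{Y}^s_t, \mathbf{Y}^a_t \} \tag{4}$$

is a mid-level representation of the object. Concretely, $y^c_t \in [0,1]$ is the object confidence denoting its existence; $\mathbf{y}^l_t \in \{0,1\}^I$ is an $I$-dimensional one-hot vector denoting which image layer the object occupies; $\mathbf{y}^p_t = [\hat{s}^x_t, \hat{s}^y_t, t^x_t, t^y_t] \in [-1,1]^4$ is the object pose containing a normalized scale $[\hat{s}^x_t, \hat{s}^y_t]$ and translation $[t^x_t, t^y_t]$, where the real scale is produced by $s^x_t = 1 + \eta^x \hat{s}^x_t$ and $s^y_t = 1 + \eta^y \hat{s}^y_t$, and $\eta^x, \eta^y$ are constants; $\mathbf{Y}^s_t \in \{0,1\}^{U \times V \times 1}$ is the mask representing the object shape; and $\mathbf{Y}^a_t \in [0,1]^{U \times V \times D}$ is the object appearance. To obtain output variables in the desired ranges, in the final layer of $\mathrm{NN}_{\mathrm{dec}}$ we use the sigmoid function to generate $y^c_t$ and $\mathbf{Y}^a_t$, use the tanh function to generate $\mathbf{y}^p_t$, and sample $\mathbf{y}^l_t$ and $\mathbf{Y}^s_t$ from the Categorical and Bernoulli distributions, respectively. As the sampling process is not differentiable, a Straight-Through Gumbel–Softmax estimator [21] is employed to reparameterize both distributions so that backpropagation can still be applied. We have found experimentally that discretizing $\mathbf{y}^l_t$ and $\mathbf{Y}^s_t$ is crucial for obtaining interpretable output variables.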
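To make the output activations concrete, the following NumPy sketch maps hypothetical raw decoder outputs to the quintuple. It is a forward-pass-only illustration under stated assumptions: the dictionary keys, the temperature `tau`, and the scale coefficients `eta_x = eta_y = 0.5` are placeholders chosen for the example, and because NumPy has no automatic differentiation, the straight-through gradient path of the Gumbel–Softmax estimator [21] is only described in a comment. The Bernoulli case uses the two-class (binary concrete) form of the same relaxation.

```python
import numpy as np

rng = np.random.default_rng(0)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def st_gumbel_categorical(logits, tau=1.0):
    """Gumbel-Softmax sample over the last axis (forward pass only).

    Returns (hard, soft). With autodiff, the straight-through estimator outputs
    hard + soft - stop_gradient(soft): one-hot forward, soft gradient backward.
    """
    u = rng.uniform(1e-10, 1.0, size=logits.shape)
    z = (logits - np.log(-np.log(u))) / tau        # add Gumbel(0, 1) noise
    z = z - z.max(axis=-1, keepdims=True)          # numerical stability
    soft = np.exp(z) / np.exp(z).sum(axis=-1, keepdims=True)
    hard = np.eye(logits.shape[-1])[soft.argmax(axis=-1)]
    return hard, soft


def st_gumbel_bernoulli(logits, tau=1.0):
    """Two-class (binary concrete) relaxation, used here for the shape mask."""
    u = rng.uniform(1e-10, 1.0 - 1e-10, size=logits.shape)
    soft = sigmoid((logits + np.log(u) - np.log(1.0 - u)) / tau)  # logistic noise
    hard = (soft > 0.5).astype(float)
    return hard, soft


def decode_heads(raw, eta_x=0.5, eta_y=0.5):
    """Map raw final-layer outputs to (y_c, y_l, y_p, Y_s, Y_a) plus real scales."""
    y_c = sigmoid(raw["conf"])                      # confidence in [0, 1]
    y_l, _ = st_gumbel_categorical(raw["layer"])    # one-hot over the I layers
    y_p = np.tanh(raw["pose"])                      # [sx_hat, sy_hat, tx, ty] in [-1, 1]
    y_s, _ = st_gumbel_bernoulli(raw["shape"])      # binary U x V x 1 mask
    y_a = sigmoid(raw["appearance"])                # U x V x D appearance in [0, 1]
    s_x = 1.0 + eta_x * y_p[0]                      # real scales from normalized ones
    s_y = 1.0 + eta_y * y_p[1]
    return y_c, y_l, y_p, y_s, y_a, (s_x, s_y)
```

In a framework with automatic differentiation, only the hard samples would be passed to the renderer, while gradients would flow through the soft relaxations.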

The mid-level representation defined above is both flexible and interpretable. As shown below, the output variables can be directly used to reconstruct the input image, which enforces their interpretability.

2.3. Renderer

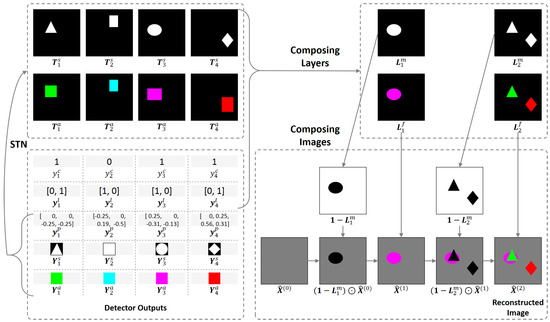

Given the detector outputs without training labels, how can we define a training objective? Our solution is to first convert all these outputs into a reconstructed image using a differentiable renderer and then use backpropagation to minimize the reconstruction error. To force the model to learn to produce the desired detector outputs, we make the renderer deterministic and parameter-free. In this case, correct detector outputs correspond to a correct reconstruction.

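Firstly, we use the object pose $\mathbf{y}^p_t$ to scale and shift its shape $\mathbf{Y}^s_t$ and appearance $\mathbf{Y}^a_t$ using a Spatial Transformer Network (STN) [22]:

$$\mathbf{T}^s_t = \mathrm{STN}(\mathbf{Y}^s_t, \mathbf{y}^p_t), \qquad \mathbf{T}^a_t = \mathrm{STN}(\mathbf{Y}^a_t, \mathbf{y}^p_t) \tag{5}$$

where $\mathbf{T}^s_t \in [0,1]^{H \times W \times 1}$ is the transformed shape and $\mathbf{T}^a_t \in [0,1]^{H \times W \times D}$ is the transformed appearance.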
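A spatial transformer is normally implemented with a differentiable sampling grid inside a deep learning framework. The sketch below shows only the forward computation, in NumPy, under one plausible convention that we assume for illustration: scale 1 draws the U × V patch at its native pixel size, the translation is the patch centre in normalized canvas coordinates in [−1, 1], and each canvas pixel is inverse-mapped into the patch and bilinearly interpolated. The function name `place_patch` and this coordinate convention are hypothetical, not necessarily the exact parameterization used in the model.

```python
import numpy as np


def place_patch(patch, s_x, s_y, t_x, t_y, H, W):
    """Forward STN-style warp of a U x V x C patch onto an H x W canvas.

    Assumed convention (hypothetical): canvas and patch coordinates both run
    over [-1, 1]; scale 1 draws the patch at its native pixel size, and
    (t_x, t_y) is the patch centre on the canvas. Every canvas pixel is mapped
    back into the patch and bilinearly interpolated; pixels that land outside
    the patch are set to zero.
    """
    U, V, _ = patch.shape                      # requires U, V >= 2
    gy, gx = np.meshgrid(np.linspace(-1.0, 1.0, H),
                         np.linspace(-1.0, 1.0, W), indexing="ij")
    # Half-extent of the drawn patch in canvas coordinates.
    half_h = s_y * (U - 1) / (H - 1)
    half_w = s_x * (V - 1) / (W - 1)
    # Inverse affine map (canvas -> patch), then to continuous pixel indices.
    fy = ((gy - t_y) / half_h + 1.0) * (U - 1) / 2.0
    fx = ((gx - t_x) / half_w + 1.0) * (V - 1) / 2.0
    inside = (fy >= 0) & (fy <= U - 1) & (fx >= 0) & (fx <= V - 1)
    # Bilinear interpolation between the four neighbouring patch pixels.
    y0 = np.clip(np.floor(fy).astype(int), 0, U - 2)
    x0 = np.clip(np.floor(fx).astype(int), 0, V - 2)
    wy = np.clip(fy - y0, 0.0, 1.0)[..., None]
    wx = np.clip(fx - x0, 0.0, 1.0)[..., None]
    out = ((1 - wy) * (1 - wx) * patch[y0, x0]
           + (1 - wy) * wx * patch[y0, x0 + 1]
           + wy * (1 - wx) * patch[y0 + 1, x0]
           + wy * wx * patch[y0 + 1, x0 + 1])
    return out * inside[..., None]
```

The same call, with the same pose, would be applied to both the shape mask and the appearance so that the two stay aligned on the canvas.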

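Then, using the object confidences $y^c_t$ and layers $\mathbf{y}^l_t$, we compose $I$ image layers ($i = 1, 2, \ldots, I$), where the $i$-th layer can be occupied by several objects and is obtained by:

$$\mathbf{L}^m_i = \min\left(1, \sum_{t=1}^{T} y^c_t \, y^l_{t,i} \, \mathbf{T}^s_t\right) \tag{6}$$

$$\mathbf{L}^f_i = \sum_{t=1}^{T} y^c_t \, y^l_{t,i} \, \mathbf{T}^s_t \odot \mathbf{T}^a_t \tag{7}$$

where $\mathbf{L}^m_i \in [0,1]^{H \times W \times 1}$ and $\mathbf{L}^f_i \in \mathbb{R}^{H \times W \times D}$ are the layer's foreground mask and foreground, respectively, $y^l_{t,i}$ is the $i$-th element of $\mathbf{y}^l_t$, and $\odot$ denotes element-wise multiplication (if the operands have different sizes, we simply broadcast them).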
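Because every object contributes to its layer through a weighted sum, the composition of all I layers can be written as a single tensor contraction. The NumPy sketch below assumes that transformed shapes have one channel that broadcasts against D-channel appearances, and it clips each mask to [0, 1] so that overlapping objects in the same layer do not produce mask values above 1; the function name `compose_layers` and this clipping are illustrative assumptions.

```python
import numpy as np


def compose_layers(y_c, y_l, T_s, T_a):
    """Compose I image layers from T transformed objects in one vectorized pass.

    y_c: (T,) confidences; y_l: (T, I) one-hot layer assignments;
    T_s: (T, H, W, 1) transformed shapes; T_a: (T, H, W, D) transformed appearances.
    Returns masks L_m of shape (I, H, W, 1) and foregrounds L_f of shape (I, H, W, D).
    """
    weights = y_c[:, None] * y_l                        # (T, I): confidence x layer indicator
    # Masks: sum the shapes assigned to each layer, clipped to [0, 1] (assumption).
    L_m = np.minimum(1.0, np.einsum("ti,thwc->ihwc", weights, T_s))
    # Foregrounds: shape-masked appearances; the 1-channel shape broadcasts over D.
    L_f = np.einsum("ti,thwc->ihwc", weights, T_s * T_a)
    return L_m, L_f
```

Since the contraction runs over all objects and layers at once, this step contains no sequential loop.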

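Finally, using these layers, the input image can be reconstructed iteratively, i.e., for $i = 1, 2, \ldots, I$:

$$\hat{\mathbf{X}}^{(i)} = (1 - \mathbf{L}^m_i) \odot \hat{\mathbf{X}}^{(i-1)} + \mathbf{L}^f_i \tag{8}$$

where we initialize $\hat{\mathbf{X}}^{(0)}$ as the background $\mathbf{X}^b$ (note that the background is assumed to be easy to extract or known in advance), and take $\hat{\mathbf{X}} = \hat{\mathbf{X}}^{(I)}$ as the final reconstructed image. Illustrations of the UMOD framework and the renderer are shown in Figure 1 and Figure 2, respectively.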

Figure 1.

Illustration of the Unsupervised Multi-Object Detection (UMOD) framework, where the detection step .

Figure 2.

Illustration of the renderer that converts the detector outputs to the reconstructed image, where the detection step and the layer number .
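The back-to-front compositing of the layers onto the background can be sketched as follows. This NumPy sketch assumes that each foreground has already been multiplied by its mask and that a layer with a larger index occludes the layers with smaller indices; the function name `render` is hypothetical.

```python
import numpy as np


def render(background, L_m, L_f):
    """Composite I layers over the background, from the first layer to the last.

    background: (H, W, D); L_m: (I, H, W, 1) masks in [0, 1];
    L_f: (I, H, W, D) foregrounds already multiplied by their masks.
    """
    x_hat = background
    for m, f in zip(L_m, L_f):          # sequential: layer i covers layers 1..i-1
        x_hat = (1.0 - m) * x_hat + f   # keep uncovered pixels, paint covered ones
    return x_hat
```

Only this loop over layers is sequential, which is why a small number of layers keeps rendering cheap.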

Note that the rendering process can be accelerated, since the composition of layers (defined in Equations (6) and (7)) can be parallelized with matrix operations. Although handling occlusion requires iterations (defined in Equation (8)) that cannot be parallelized, we can reduce their number by using fewer layers, i.e., a smaller $I$. This is reasonable because occlusion usually involves only a few objects, so it is unnecessary to allocate a layer for each object (non-occluded objects can share the same layer).

2.4. Loss

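With the reconstructed image $\hat{\mathbf{X}}$, we can then define the loss $l$ for each sample to drive the learning of the image encoder and the recurrent object detector:

$$l = \mathrm{MSE}(\hat{\mathbf{X}}, \mathbf{X}) + \lambda \cdot \frac{1}{T} \sum_{t=1}^{T} s^x_t \, s^y_t \tag{9}$$

where $\mathrm{MSE}(\cdot, \cdot)$ is the mean squared error for reconstruction, and $\lambda$ is the coefficient of the tightness constraint, which penalizes object scales in order to avoid loose bounding boxes.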
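As a small worked example of the loss, the sketch below assumes that the tightness term is the mean product of the real scales over the T detection steps and uses a hypothetical weight λ = 0.1. For an all-zero target and a constant prediction of 0.5, the MSE is 0.25; with scales (1.0, 0.5) and (1.0, 2.0), both products equal 1.0, so the loss is 0.25 + 0.1 × 1.0 = 0.35.

```python
import numpy as np


def umod_loss(x_hat, x, s_x, s_y, lam):
    """Reconstruction MSE plus an (assumed) tightness penalty on object scales.

    x_hat, x: (H, W, D) images; s_x, s_y: (T,) real scales; lam: penalty weight.
    """
    mse = np.mean((x_hat - x) ** 2)
    tightness = np.mean(s_x * s_y)      # average box-area proxy over T steps
    return mse + lam * tightness


x = np.zeros((2, 2, 1))
x_hat = np.full((2, 2, 1), 0.5)
print(umod_loss(x_hat, x, np.array([1.0, 0.5]), np.array([1.0, 2.0]), lam=0.1))
```

A larger λ pushes the detector toward smaller scales, trading a little reconstruction accuracy for tighter boxes.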

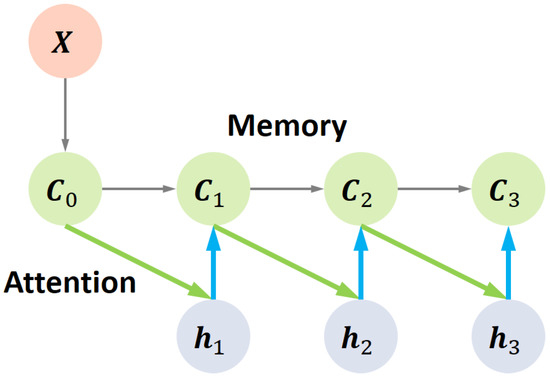

3. Memory-Based Recurrent Attention Networks

When an RNN is used to model the recurrent module $R$ defined in Equation (2), two issues arise: (i) to avoid repeated detection, the detector state $\mathbf{h}_t$ must carry information from the previous detections $\mathcal{Y}_{t'}$ (for $t' < t$), which couples memory and computation and thereby makes the detection of the current object less effective; and (ii) to extract features for a specific object, the detector must learn to focus on a local area of the input feature $\mathbf{C}$, which makes training more difficult. To this end, we propose a Memory-Based Recurrent Attention Network (MRAN), which overcomes Issue (i) by directly taking the input feature as an external memory and overcomes Issue (ii) by explicitly employing an attention mechanism.

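Concretely, we initialize the memory as $\mathbf{C}_0 = \mathbf{C}$, which is then sequentially read and written by the recurrent object detector, so that all messages from the past $t$ detections are recorded by $\mathbf{C}_t$ instead of $\mathbf{h}_t$. In iteration $t$, the detector first reads from the previous memory $\mathbf{C}_{t-1}$, then updates its state $\mathbf{h}_t$, and finally writes new contents into the current memory $\mathbf{C}_t$. Thus, in contrast to Equation (2), the recurrent module has the form:

$$(\mathbf{h}_t, \mathbf{C}_t) = R(\mathbf{h}_{t-1}, \mathbf{C}_{t-1}; \theta_R) \tag{10}$$

We implement $R$ defined in Equation (10) as an MRAN, in which location-based addressing is first adopted to explicitly impose attention on the input feature. The attention weight $\mathbf{A}_t$ is generated by an attention network $\mathrm{NN}_{\mathrm{att}}$:

$$\mathbf{A}_t = \mathrm{NN}_{\mathrm{att}}(\mathbf{h}_{t-1}) \tag{11}$$

where $\mathbf{A}_t \in [0,1]^{M \times N}$ satisfies $\sum_{m=1}^{M} \sum_{n=1}^{N} a_{t,m,n} = 1$ (ensured by a softmax output layer).

Then, letting $\mathbf{c}_{t-1,m,n} \in \mathbb{R}^{S}$ be the feature vector of $\mathbf{C}_{t-1}$ at position $(m, n)$, we define the read operation as:

$$\mathbf{r}_t = \sum_{m=1}^{M} \sum_{n=1}^{N} a_{t,m,n} \, \mathbf{c}_{t-1,m,n} \tag{12}$$

where the read vector $\mathbf{r}_t \in \mathbb{R}^{S}$ represents the attended input features relevant to the current detection.

Next, the detector state is updated through a linear transformation followed by a tanh function, where $\mathbf{r}_t$ is taken as the input feature (instead of $\mathbf{C}$):

$$\mathbf{h}_t = \tanh\left(\mathbf{r}_t \mathbf{W}^r + \mathbf{h}_{t-1} \mathbf{W}^h + \mathbf{b}^h\right) \tag{13}$$

Finally, we use $\mathbf{h}_t$ to generate an erase vector $\mathbf{e}_t \in [0,1]^{S}$ and a write vector $\mathbf{v}_t \in \mathbb{R}^{S}$:

$$\mathbf{e}_t = \sigma\left(\mathbf{h}_t \mathbf{W}^e + \mathbf{b}^e\right), \qquad \mathbf{v}_t = \mathbf{h}_t \mathbf{W}^v + \mathbf{b}^v \tag{14}$$

where $\sigma$ is the sigmoid function and all $\mathbf{W}$ and $\mathbf{b}$ terms are learnable parameters, and define the write operation as:

$$\mathbf{c}_{t,m,n} = \left(1 - a_{t,m,n} \, \mathbf{e}_t\right) \odot \mathbf{c}_{t-1,m,n} + a_{t,m,n} \, \mathbf{v}_t \tag{15}$$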

An illustration of the MRAN is shown in Figure 3.

Figure 3.

Illustration of the Memory-Based Recurrent Attention Network (MRAN), where the detection step and the green/blue bold lines denote the attentive read/write operations on the memory.
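The attend, read, update, erase, and write steps of one MRAN iteration can be sketched in NumPy. The sizes, the single linear layer standing in for the attention network, the vanilla-RNN form of the tanh state update, and the random parameters are all assumptions for illustration; in the model, such parameters would be learned end-to-end with the rest of the network. The memory starts as the input feature map, and each step erases and rewrites the attended cells, so the detection history lives in the memory rather than in the state.

```python
import numpy as np

rng = np.random.default_rng(0)
M, N, S, H_DIM = 4, 4, 8, 16                    # hypothetical sizes

# Hypothetical parameters, randomly initialized here (learned in practice).
W_att = rng.normal(0.0, 0.1, (H_DIM, M * N))    # one-layer attention network
W_r = rng.normal(0.0, 0.1, (S, H_DIM))
W_h = rng.normal(0.0, 0.1, (H_DIM, H_DIM))
W_e = rng.normal(0.0, 0.1, (H_DIM, S))
W_v = rng.normal(0.0, 0.1, (H_DIM, S))
b_h, b_e, b_v = np.zeros(H_DIM), np.zeros(S), np.zeros(S)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def softmax(z):
    z = z - z.max()
    return np.exp(z) / np.exp(z).sum()


def mran_step(h_prev, mem_prev):
    """One iteration: attend, read, update state, then erase and write memory."""
    a = softmax(h_prev @ W_att).reshape(M, N)           # location-based attention, sums to 1
    r = np.einsum("mn,mns->s", a, mem_prev)             # read vector (weighted sum of cells)
    h = np.tanh(r @ W_r + h_prev @ W_h + b_h)           # state update from the read vector
    e = sigmoid(h @ W_e + b_e)                          # erase vector in (0, 1)^S
    v = h @ W_v + b_v                                   # write vector
    mem = (1.0 - a[..., None] * e) * mem_prev + a[..., None] * v
    return h, mem, a


C = rng.normal(size=(M, N, S))                  # input feature map = initial memory
h, mem = np.zeros(H_DIM), C.copy()
for t in range(3):                              # three detection steps (hypothetical T)
    h, mem, a = mran_step(h, mem)
```

Cells with high attention are both read and overwritten in the same step, which is what lets the memory, rather than the state, keep track of which regions have already been explained.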

Although attention is now imposed on the input feature, we would like to further impose it on the input image so that the detector depends only on a local image region rather than on the whole image. Therefore, we use a Fully Convolutional Network (FCN) [23] (i.e., a network without fully connected layers) as the image encoder. By controlling the receptive field of the encoder, the input image can also be attentively accessed by the detector. Another advantage of using an FCN is that, through parameter sharing, it can capture the regularity among different objects, which usually have similar patterns.
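How much of the input image each feature cell can see is determined by the encoder's receptive field, which follows from the standard recursion r_out = r_in + (k − 1)·j_in and j_out = j_in·s for a layer with kernel size k and stride s, where j is the distance in input pixels between adjacent output positions. The layer list in the usage example (three Conv 3 × 3, stride 1 → Pool 2 × 2, stride 2 blocks) is hypothetical; ReLU does not change the receptive field.

```python
def receptive_field(layers):
    """Receptive field and cumulative stride of a stack of layers.

    layers: sequence of (kernel_size, stride) pairs, applied in order.
    Returns (r, j): the receptive field in input pixels and the distance
    (in input pixels) between adjacent output positions.
    """
    r, j = 1, 1
    for k, s in layers:
        r += (k - 1) * j   # each layer widens the field by (k - 1) output steps
        j *= s             # strides compound multiplicatively
    return r, j


# Three hypothetical Conv(3x3, stride 1) -> MaxPool(2x2, stride 2) blocks.
print(receptive_field([(3, 1), (2, 2)] * 3))   # (22, 8)
```

For this hypothetical stack, each feature cell sees a 22 × 22 input window and adjacent cells are 8 pixels apart; for comparison, a Sprites patch is 21 × 21 before scaling.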

4. Experiments

The goals of our experiments were: (i) to investigate the importance of the layered representation and MRAN in our model (note that setting up a supervised counterpart of our model would be difficult, as computing the supervised loss requires finding the best matching between the detector outputs and the ground truth, which is itself an optimization problem); and (ii) to test whether our model is well suited to video surveillance data captured by cameras. For Goal (i), we created a synthetic dataset, namely Sprites, and compared UMOD-MRAN (UMOD with MRAN as the recurrent module) with the following configurations:

- UMOD-MRAN-noOcc. UMOD-MRAN without occlusion reasoning, which is achieved by fixing the layer number I to 1.

- UMOD-RNN. UMOD with an RNN, obtained by implementing the recurrent module as a Gated Recurrent Unit [20] as described in Section 2.2, which disables the external memory and attention.

- AIR. Our implementation of the generative model proposed in [26], which can be used for MOD through inference.

For Goal (ii), we evaluated UMOD-MRAN on the challenging DukeMTMC dataset [17] and compared the results with those of state-of-the-art methods.

There are some common settings for the implementation of the above configurations. For the image encoder defined in Equation (1), we used an FCN in which each convolution layer was composed as Convolution→Pooling→ReLU, with the convolution stride set to 1 for all layers. For the decoder defined in Equation (3), we used a fully connected network (FC) with ReLU as the activation function of each hidden layer. We also set the object scale coefficients . For the renderer, we set the number of image layers (except for UMOD-MRAN-noOcc and AIR, where $I = 1$). For the loss defined in Equation (9), we set . To train the model, we minimized the averaged loss on the training set with respect to all network parameters using Adam [27] with a learning rate of . Early stopping was used to terminate training.
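The Convolution→Pooling→ReLU block described above can be sketched in NumPy to show how it shrinks the spatial size of the input. The kernel size (3 × 3), the zero "same" padding, the 2 × 2 max pooling with stride 2, and the 16 output channels are illustrative choices, not necessarily the settings used in these experiments.

```python
import numpy as np


def conv2d_same(x, k):
    """Stride-1 convolution (cross-correlation) with zero 'same' padding.

    x: (H, W, D_in) input; k: (kh, kw, D_in, D_out) kernel.
    """
    kh, kw, _, d_out = k.shape
    H, W, _ = x.shape
    ph, pw = kh // 2, kw // 2
    xp = np.pad(x, ((ph, kh - 1 - ph), (pw, kw - 1 - pw), (0, 0)))
    out = np.zeros((H, W, d_out))
    for i in range(kh):
        for j in range(kw):
            out += xp[i:i + H, j:j + W, :] @ k[i, j]   # (H, W, D_in) @ (D_in, D_out)
    return out


def max_pool2(x):
    """2 x 2 max pooling with stride 2 (H and W assumed even)."""
    H, W, D = x.shape
    return x.reshape(H // 2, 2, W // 2, 2, D).max(axis=(1, 3))


def encoder_block(x, k):
    """One Convolution -> Pooling -> ReLU block, in the order described in the text."""
    return np.maximum(max_pool2(conv2d_same(x, k)), 0.0)


rng = np.random.default_rng(0)
x = rng.uniform(size=(128, 128, 3))            # a Sprites-sized input image
k = rng.normal(0.0, 0.1, size=(3, 3, 3, 16))   # hypothetical 3x3 kernel, 16 channels
print(encoder_block(x, k).shape)               # (64, 64, 16)
```

Each such block halves the height and width, so stacking blocks is one way to obtain a feature map that is much smaller than the input image.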

4.1. Sprites

As a toy example, we wanted to see whether the model could robustly handle occlusion and infer object existence, position, scale, shape, and appearance, thereby generating accurate object bounding boxes. Therefore, we created a new Sprites dataset composed of 1M color images, each of size 128 × 128 × 3, comprising a black background and 0–3 sprites that can occlude each other. Each sprite is a 21 × 21 × 3 color patch with a random scale, position, shape (diamond/rectangle/triangle/circle), and color (cyan/magenta/yellow/blue/green/red).

For the UMOD configurations, we set the detection step $T = 4$ and the background $\mathbf{X}^b = \mathbf{0}$ (i.e., black). Please refer to Table 1 for the other hyper-parameters. The mini-batch size was set to 128 for training.

Table 1.

Model hyper-parameters for Sprites and DukeMTMC.

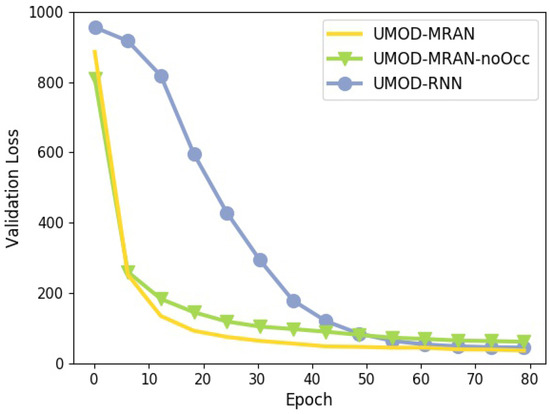

Figure 4 shows the training curves. UMOD-MRAN and UMOD-MRAN-noOcc converge significantly faster than UMOD-RNN, indicating that, with MRAN, UMOD can be trained more easily. In terms of final validation loss, however, UMOD-MRAN and UMOD-RNN are slightly better than UMOD-MRAN-noOcc, indicating that without the layered representation, which models occlusion, the input images cannot be reconstructed as well.

Figure 4.

Training curves of different configurations on Sprites (Note that we do not compare the loss of AIR since it uses a different training objective).

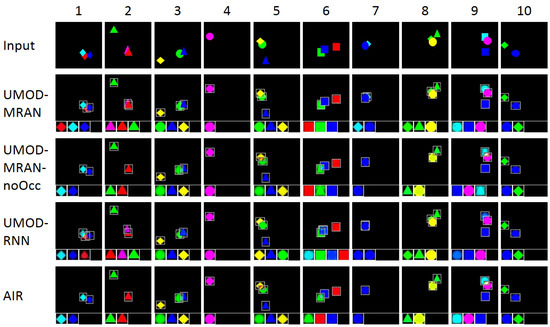

To visualize detection performance, UMOD-MRAN was compared against the other configurations on sampled images. Qualitative results are shown in Figure 5. UMOD-MRAN performs well and robustly infers the existence, layer, position, scale, shape, and appearance of the objects. UMOD-RNN performs slightly worse than UMOD-MRAN, since it sometimes fails to recover the occlusion order (Columns 2, 5, and 7). With a layer number of $I = 1$, UMOD-MRAN-noOcc and AIR perform even worse, since they cannot handle occlusion (Columns 3, 5, 6, and 9) and sometimes miss detections (Columns 1, 2, 7, and 8). We conjecture that these models have learned to suppress occluded outputs, as adding their pixel values to a single layer probably causes a high reconstruction error.

Figure 5.

Qualitative results of different configurations on Sprites. For each configuration, the reconstructed images are shown, with the detector outputs on the bottom (produced at detection Steps 1–4 from left to right).

To quantitatively assess the model, we also evaluated the different configurations with commonly used MOD metrics, including Average Precision (AP) [9], Multi-Object Detection Accuracy (MODA), Multi-Object Detection Precision (MODP) [28], the average number of False Alarms per Frame (FAF), the total numbers of True Positives (TP), False Positives (FP), and False Negatives (FN), Precision ($\mathrm{TP}/(\mathrm{TP}+\mathrm{FP})$), and Recall ($\mathrm{TP}/(\mathrm{TP}+\mathrm{FN})$). Results are presented in Table 2. UMOD-MRAN outperforms all other configurations on all metrics. Without the layered representation, the performance of UMOD-MRAN-noOcc and AIR is largely degraded by higher FNs (1702 and 1964, respectively), which again suggests that detector outputs are suppressed when only a single image layer is used. Moreover, without explicitly modeled attention and external memory, UMOD-RNN and AIR perform slightly worse (on all metrics) than UMOD-MRAN and UMOD-MRAN-noOcc, respectively, which suggests that incorporating these two priors regularizes the model so that it learns to extract more desirable outputs.

Table 2.

Detection performances of different configurations on Sprites.
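The count-based metrics can be illustrated with hypothetical totals. The sketch below uses the CLEAR-style definition of MODA with unit costs for misses and false positives, computes FAF as false positives per frame, and takes the number of ground-truth objects as TP + FN; AP and MODP, which require matching detections to the ground truth by overlap, are not covered. The counts in the example are made up and are unrelated to Table 2.

```python
def detection_metrics(tp, fp, fn, num_frames):
    """Count-based detection metrics from totals accumulated over all frames."""
    num_gt = tp + fn                       # every ground-truth object is either hit or missed
    return {
        "precision": tp / (tp + fp),
        "recall": tp / num_gt,
        "moda": 1.0 - (fn + fp) / num_gt,  # unit cost for each miss and each false alarm
        "faf": fp / num_frames,            # average false alarms per frame
    }


# Hypothetical totals: 90 TP, 10 FP, 20 FN over 50 frames.
m = detection_metrics(90, 10, 20, 50)
print({k: round(v, 3) for k, v in m.items()})
# {'precision': 0.9, 'recall': 0.818, 'moda': 0.727, 'faf': 0.2}
```

Because MODA charges both misses and false alarms against the ground-truth count, a model that suppresses occluded objects is penalized through FN even when its precision stays high.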

4.2. DukeMTMC

To examine the performance of our model on real-world data, which are highly variable and complex, we assessed UMOD-MRAN on the challenging DukeMTMC dataset [17]. It is a video surveillance dataset comprising eight videos at 60 fps with a resolution of 1080 × 1920, collected from eight fixed cameras that record people’s movements at different places at Duke University. Each video is divided into a training set (50 min), a hard test set (10 min), and an easy test set (25 min).

For UMOD-MRAN, the detection step T was set to 10, and the IMBS algorithm [29] was used to extract the background $\mathbf{X}^b$. Please see Table 1 for the other hyper-parameters. For training, we set the mini-batch size to 32. To ease processing, we resized the input images to 108 × 192. We trained a single model and evaluated it on the easy test sets of all scenarios. Note that we did not evaluate our model on the hard test sets, as their data statistics are very different from those of the training sets.
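IMBS [29] is a multimodal background-subtraction method. As a much simpler stand-in that is explicitly not IMBS, a per-pixel temporal median over frames from a fixed camera often yields a usable background when the objects keep moving. The function name `median_background` and the toy scene below are hypothetical.

```python
import numpy as np


def median_background(frames):
    """Per-pixel temporal median of a (num_frames, H, W, D) stack from a fixed camera.

    This is a simple stand-in, not IMBS: where moving objects cover a pixel in
    fewer than half of the frames, the median returns the static background.
    """
    return np.median(frames, axis=0)


frames = np.zeros((5, 4, 4, 3))     # black static scene
for t in range(5):
    frames[t, t % 4, 1] = 1.0       # a bright "object" moving down one column
background = median_background(frames)
```

A median fails where an object stays still for most of the sequence, which is one reason dedicated methods such as IMBS model several background modes per pixel.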

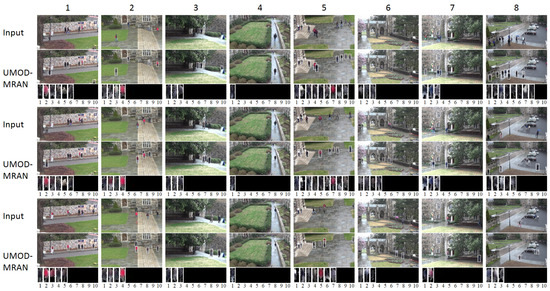

Qualitative results are shown in Figure 6. UMOD-MRAN performs well in various scenarios: (i) a few people (Column 4 in Rows 1–3); (ii) many people (Column 5 in Rows 1 and 2); (iii) occluded people (Column 2 in Row 2); (iv) people near the camera (Column 4 in Row 1); (v) people far from the camera (Column 6 in Row 2); (vi) people with different shapes/appearances (Column 8 in Row 1); and (vii) people who are hard to distinguish from the background (Column 6 in Row 1).

Figure 6.

Qualitative results in different scenarios on DukeMTMC.

Table 3 reports the quantitative results. UMOD-MRAN outperforms the DPM [1] on all metrics. It reaches an AP of 87.2%, which is significantly higher than that of the DPM (79.3% AP), and is also very competitive with the recently proposed Faster R-CNN [5] (89.7% AP). Although CRAFT [30] and RRC [31] perform best (with APs of 91.1% and 92.0%, respectively), our model is the first that is free of any training labels or extracted features.

Table 3.

Detection performance of the UMOD-MRAN compared with those of the state-of-the-art methods on DukeMTMC.

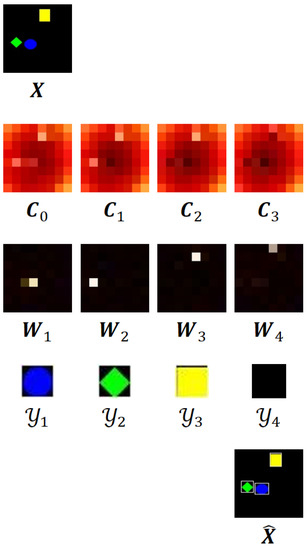

4.3. Visualizing the UMOD-MRAN

To further understand the model, we visualize the inner workings of UMOD-MRAN on Sprites, as shown in Figure 7. Both the memory and the attention weight are visualized as $M \times N$ matrices (brighter pixels indicate higher values), where the memory matrix consists of the mean values of the memory along its last (channel) dimension. The detector output is visualized as . At detection step t, the memory produces an attention weight, through which the detector first reads from the previous memory and then writes to the current memory. We can see that at each detection step, the memory content related to the associated object (the bright region in the memory) is erased (becomes dark) by the write operation, which prevents the detector from reading it again in the next detection step.

Figure 7.

Visualization of the UMOD-MRAN on Sprites, where the detection step .

5. Related Work

Recently, unsupervised learning has been used in several works to extract desired patterns from images. For example, Kulkarni et al. [32], Chen et al. [33], and Rolfe [34] focused on finding lower-level disentangled factors; Le Roux et al. [35], Moreno et al. [36], and Huang and Murphy [37] focused on extracting mid-level semantics; and Eslami et al. [26], Yan et al. [38], Rezende et al. [39], Stewart and Ermon [40], and Wu et al. [41] focused on discovering higher-level semantics. Unlike these methods, the proposed UMOD-MRAN focuses on MOD tasks: it uses a novel rendering scheme to handle occlusion and integrates memory and attention to improve efficiency, which makes it well suited to real applications such as video surveillance.

6. Conclusions

In this paper, we propose a novel UMOD framework to tackle the MOD task for video surveillance. The main advantage of our model over other popular methods is that it is free of any training labels or extracted features. Another important advantage is that the MRAN module can substantially improve detection efficiency and ease model training. The proposed model was evaluated on both synthetic and real datasets, demonstrating its superiority and practicality.

For future work, we would like to extend our model in two directions. First, it would be useful to incorporate the idea of “adaptive computation time” [42] into our framework so that the recurrent object detector can adaptively choose an appropriate detection step T for efficiency. Second, it would be interesting to model object dynamics by employing temporal RNNs so that our model can directly handle multi-object tracking problems in video surveillance.

Author Contributions

Methodology, Z.H. and H.H.; Software, Z.H.; Validation, Z.H.; Investigation, Z.H.; Writing—Original Draft Preparation, Z.H.; Writing—Review & Editing, Z.H. and H.H.; Visualization, Z.H.; Supervision, H.H.; Project Administration, Z.H. and H.H.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Felzenszwalb, P.F.; Girshick, R.B.; McAllester, D.; Ramanan, D. Object detection with discriminatively trained part-based models. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1627–1645.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 91–99.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Enzweiler, M.; Gavrila, D.M. Monocular pedestrian detection: Survey and experiments. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 31, 2179–2195.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Li, F.-F. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009.

- Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The PASCAL visual object classes (VOC) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The KITTI dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2014; pp. 740–755.

- LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1989, 1, 541–551.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Huang, L.; Yang, Y.; Deng, Y.; Yu, Y. DenseBox: Unifying landmark localization with end to end object detection. arXiv, 2015; arXiv:1509.04874.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 21–37.

- Ristani, E.; Solera, F.; Zou, R.; Cucchiara, R.; Tomasi, C. Performance measures and a data set for multi-target, multi-camera tracking. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 17–35.

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

- Gers, F.A.; Schmidhuber, J.; Cummins, F. Learning to forget: Continual prediction with LSTM. Neural Comput. 2000, 12, 2451–2471.

- Cho, K.; Van Merriënboer, B.; Gulcehre, C.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning phrase representations using RNN encoder-decoder for statistical machine translation. arXiv, 2014; arXiv:1406.1078.

- Jang, E.; Gu, S.; Poole, B. Categorical reparameterization with Gumbel-Softmax. arXiv, 2016; arXiv:1611.01144.

- Jaderberg, M.; Simonyan, K.; Zisserman, A. Spatial transformer networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 2017–2025.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Graves, A.; Wayne, G.; Danihelka, I. Neural Turing machines. arXiv, 2014; arXiv:1410.5401.

- Graves, A.; Wayne, G.; Reynolds, M.; Harley, T.; Danihelka, I.; Grabska-Barwińska, A.; Colmenarejo, S.G.; Grefenstette, E.; Ramalho, T.; Agapiou, J.; et al. Hybrid computing using a neural network with dynamic external memory. Nature 2016, 538, 471–476.

- Eslami, S.A.; Heess, N.; Weber, T.; Tassa, Y.; Szepesvari, D.; Hinton, G.E. Attend, infer, repeat: Fast scene understanding with generative models. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 3225–3233.

- Kingma, D.; Ba, J. Adam: A method for stochastic optimization. arXiv, 2014; arXiv:1412.6980.

- Stiefelhagen, R.; Bernardin, K.; Bowers, R.; Garofolo, J.; Mostefa, D.; Soundararajan, P. The CLEAR 2006 evaluation. In International Evaluation Workshop on Classification of Events, Activities and Relationships; Springer: Berlin/Heidelberg, Germany, 2006; pp. 1–44.

- Bloisi, D.; Iocchi, L. Independent multimodal background subtraction. In CompIMAGE; CRC Press: Boca Raton, FL, USA, 2012.

- Yang, B.; Yan, J.; Lei, Z.; Li, S.Z. CRAFT objects from images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 6043–6051.

- Ren, J.; Chen, X.; Liu, J.; Sun, W.; Pang, J.; Yan, Q.; Tai, Y.-W.; Xu, L. Accurate single stage detector using recurrent rolling convolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Kulkarni, T.D.; Whitney, W.F.; Kohli, P.; Tenenbaum, J. Deep convolutional inverse graphics network. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 2539–2547.

- Chen, X.; Duan, Y.; Houthooft, R.; Schulman, J.; Sutskever, I.; Abbeel, P. InfoGAN: Interpretable representation learning by information maximizing generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 2172–2180.

- Rolfe, J.T. Discrete variational autoencoders. arXiv, 2016; arXiv:1609.02200.

- Le Roux, N.; Heess, N.; Shotton, J.; Winn, J. Learning a generative model of images by factoring appearance and shape. Neural Comput. 2011, 23, 593–650.

- Moreno, P.; Williams, C.K.; Nash, C.; Kohli, P. Overcoming occlusion with inverse graphics. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 170–185.

- Huang, J.; Murphy, K. Efficient inference in occlusion-aware generative models of images. arXiv, 2015; arXiv:1511.06362.

- Yan, X.; Yang, J.; Yumer, E.; Guo, Y.; Lee, H. Perspective transformer nets: Learning single-view 3D object reconstruction without 3D supervision. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 1696–1704.

- Rezende, D.J.; Eslami, S.A.; Mohamed, S.; Battaglia, P.; Jaderberg, M.; Heess, N. Unsupervised learning of 3D structure from images. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 4996–5004.

- Stewart, R.; Ermon, S. Label-free supervision of neural networks with physics and domain knowledge. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

- Wu, J.; Tenenbaum, J.B.; Kohli, P. Neural scene de-rendering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Graves, A. Adaptive computation time for recurrent neural networks. arXiv, 2016; arXiv:1603.08983.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).