The Potential of Deep Learning for Studying Wilderness with Copernicus Sentinel-2 Data: Some Critical Insights

Abstract

1. Introduction

- Different deep learning architectures usable in standard use cases and with limited computing resources. For this reason, we focused on the well-known and widely used architectures ResNet, U-Net, FCN, and DeepLabV3 and did not consider more recent implementations.

- Impact of data dimensionality, seeking a trade-off between accuracy and computational load.

- Impact of spectral setup, assessing the effectiveness of transfer learning (pre-trained networks) in real-world scenarios.

2. Materials and Methods

2.1. Study Area

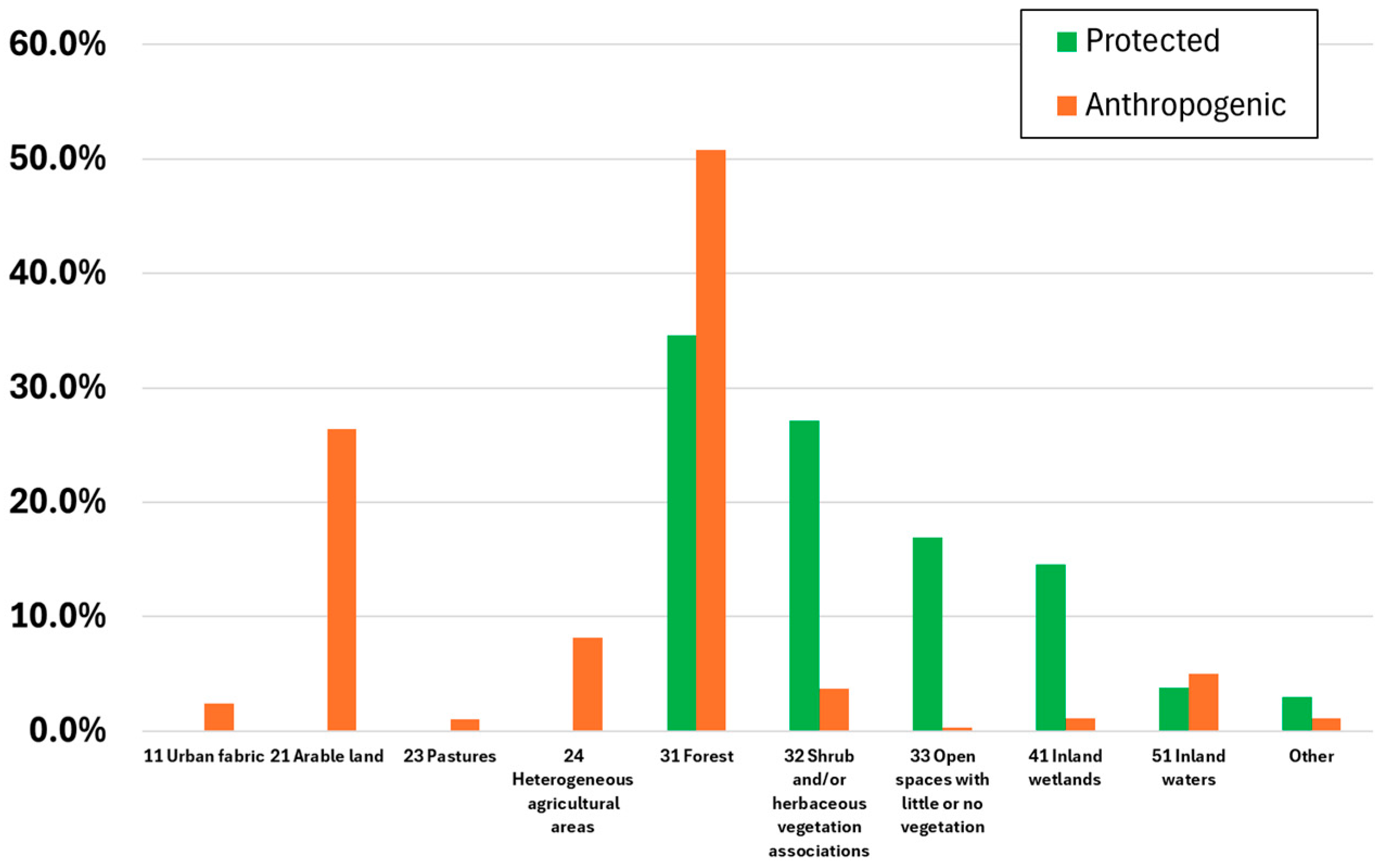

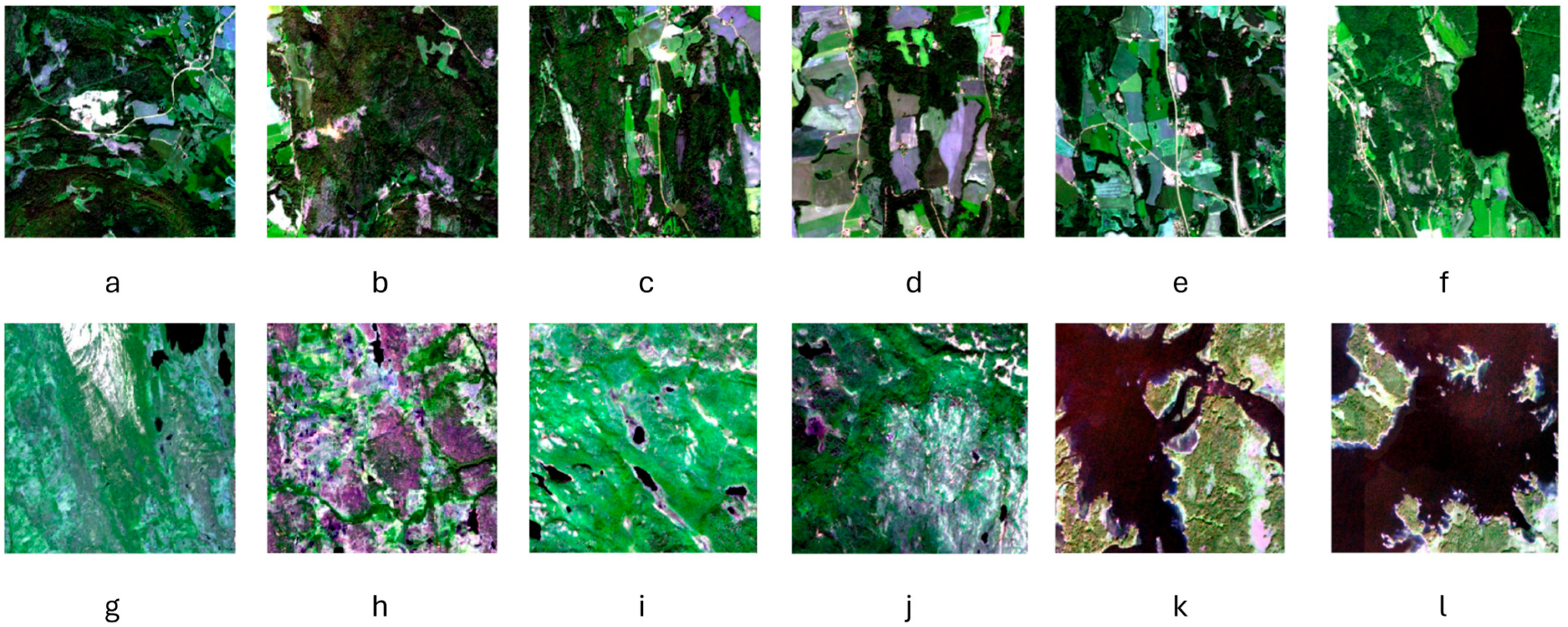

2.2. AnthroProtect Dataset

2.3. Methods

- Image classification: A machine learning task that uses a model to map the input to a discrete output [47]. This task labels each image tile of the AnthroProtect dataset as ‘wild’ or ‘anthropogenic’.

- Semantic segmentation: A deep learning task aiming to label every image pixel or image object/segment (i.e., a group of image pixels with similar features) into different land cover categories [48]. This task assigns each image pixel of the AnthroProtect dataset to one of the 44 thematic classes of the CORINE Land Cover (Figure 2).

- Batch sizes;

- Architectures;

- Spectral band setups.

2.3.1. Data Pre-Processing

2.3.2. Image Classification Task

Architecture

- ResNet18: A network with 18 layers;

- ResNet50: A network with 50 layers.
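The layer counts in the two names can be checked with a little arithmetic. The sketch below assumes the standard configurations from the original ResNet paper by He et al. (two-convolution basic blocks for ResNet18, three-convolution bottleneck blocks for ResNet50, and the stage sizes shown); the function name `resnet_depth` is illustrative only.

```python
def resnet_depth(blocks_per_stage, convs_per_block):
    """Count weighted layers: stem convolution + block convolutions + final dense layer."""
    stem_conv = 1
    final_fc = 1
    return stem_conv + convs_per_block * sum(blocks_per_stage) + final_fc


# Assumed standard configurations (not restated in this article).
resnet18_depth = resnet_depth(blocks_per_stage=[2, 2, 2, 2], convs_per_block=2)
resnet50_depth = resnet_depth(blocks_per_stage=[3, 4, 6, 3], convs_per_block=3)

print(resnet18_depth, resnet50_depth)
```

Under these assumptions, the deeper variant has roughly three times as many weighted layers, which is consistent with its longer training times.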

Batch Size

- Computational efficiency and memory requirements: Larger batch sizes process more data simultaneously. This can lead to faster training because fewer iterations are needed to process the entire dataset. However, memory use increases with batch size. Smaller batch sizes, on the other hand, require less memory but need more iterations to process the whole dataset, so the training time increases significantly.

- Convergence: Larger batch sizes might lead to faster training per epoch. However, they do not always converge more rapidly to a high-accuracy solution because the optimiser may settle in poor local minima. Smaller batch sizes, on the other hand, might converge faster because they can avoid poor local minima (though this does not always hold).

- Stability, quality of learning, and generalisation capabilities: Larger batch sizes might lead to a more stable and reliable gradient estimate since the average is taken over a larger amount of data, which can result in smoother convergence. However, as stated before, training might also get stuck in poor local minima, which harms generalisation. Smaller batch sizes, on the other hand, could introduce more noise into the training process. This can be beneficial because it acts as a form of regularisation and can lead to better generalisation on unseen data (i.e., validation and testing datasets); nevertheless, the noise might also make the process less stable.

- Medium batch size (64): This is a good trade-off between computational efficiency and the stochasticity of gradient updates.

- Medium-large batch size (128): This is still a good trade-off between computational efficiency and the stochasticity of gradient updates and reduces the computation time.

- Large batch size (256): This size can lead to faster convergence but requires GPU processing, distributed computing (not always available), and careful tuning of hyperparameters.
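The trade-offs listed above can be made concrete with a small numerical sketch. It assumes a hypothetical dataset of 10,000 tiles (not the actual AnthroProtect size) and uses synthetic unit-variance numbers as a stand-in for per-sample gradients; all names are illustrative.

```python
import math
import random
import statistics

# Hypothetical dataset size, chosen only for illustration.
NUM_TILES = 10_000
BATCH_SIZES = (64, 128, 256)


def steps_per_epoch(num_samples: int, batch_size: int) -> int:
    """Number of weight updates needed to see every sample once."""
    return math.ceil(num_samples / batch_size)


def minibatch_mean_spread(batch_size: int, num_batches: int = 500, seed: int = 0) -> float:
    """Standard deviation of mini-batch averages of synthetic 'gradients'."""
    rng = random.Random(seed)
    means = [
        statistics.fmean([rng.gauss(0.0, 1.0) for _ in range(batch_size)])
        for _ in range(num_batches)
    ]
    return statistics.stdev(means)


steps = {b: steps_per_epoch(NUM_TILES, b) for b in BATCH_SIZES}
spread = {b: minibatch_mean_spread(b) for b in BATCH_SIZES}

for b in BATCH_SIZES:
    print(f"batch {b:>3}: {steps[b]:>3} steps/epoch, gradient-noise proxy {spread[b]:.3f}")
```

Doubling the batch size roughly halves the number of updates per epoch, while the spread of the averaged gradient shrinks in expectation as the inverse square root of the batch size. This is the sense in which larger batches give a smoother estimate and smaller ones inject more noise.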

Spectral Band Setup

- RGB: Sentinel-2 bands B2, B3, and B4.

- RGB + NIR (RGBN): Sentinel-2 bands B2, B3, B4, and B8.

- Full spectrum: Sentinel-2 bands B2, B3, B4, B5, B6, B7, B8, B8A, B11, and B12.
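The three setups can be expressed as channel selections from a single ten-band stack. This is a minimal sketch, assuming each tile is stored band-first in the full-spectrum order listed above; the storage layout and the helper names are assumptions, not details taken from the dataset documentation.

```python
FULL_SPECTRUM = ["B2", "B3", "B4", "B5", "B6", "B7", "B8", "B8A", "B11", "B12"]

BAND_SETUPS = {
    "RGB": ["B2", "B3", "B4"],
    "RGBN": ["B2", "B3", "B4", "B8"],
    "Full spectrum": FULL_SPECTRUM,
}


def band_indices(setup: str) -> list:
    """Positions of the requested bands inside the full-spectrum stack."""
    return [FULL_SPECTRUM.index(band) for band in BAND_SETUPS[setup]]


def select_bands(tile: list, setup: str) -> list:
    """Keep only the bands of one setup; `tile` is a list of 2-D band arrays."""
    return [tile[i] for i in band_indices(setup)]


# Toy 2 x 2 tile in which every pixel of band k holds the value k.
toy_tile = [[[k, k], [k, k]] for k in range(len(FULL_SPECTRUM))]
rgbn_tile = select_bands(toy_tile, "RGBN")
print(band_indices("RGBN"), len(rgbn_tile))
```

Keeping one stack and slicing it per experiment means the three setups share identical pixels and differ only in the number of input channels seen by the network.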

2.3.3. Semantic Segmentation Task

Architecture

- U-Net;

- DeepLabV3;

- FCN.

Batch Size

- 2;

- 4;

- 8.
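These batch sizes are far smaller than the 64 to 256 used for image classification. One plausible reason is the size of the network output: a classifier emits one score per class per tile, whereas a segmentation network emits one score per class per pixel. The sketch below compares only the final output tensors, assuming 32-bit floats and 256 × 256 pixel tiles (the tile size is an assumption); intermediate feature maps, which usually dominate memory use, are ignored.

```python
BYTES_PER_FLOAT32 = 4
NUM_LAND_COVER_CLASSES = 44  # CORINE Land Cover classes used for segmentation
TILE_SIDE = 256              # assumed tile width and height in pixels


def classification_output_bytes(batch_size: int, num_classes: int = 2) -> int:
    """One score per class for each tile ('wild' vs 'anthropogenic')."""
    return batch_size * num_classes * BYTES_PER_FLOAT32


def segmentation_output_bytes(batch_size: int,
                              num_classes: int = NUM_LAND_COVER_CLASSES,
                              side: int = TILE_SIDE) -> int:
    """One score per class for each pixel of each tile."""
    return batch_size * num_classes * side * side * BYTES_PER_FLOAT32


print("classification, batch 256:", classification_output_bytes(256), "bytes")
for batch_size in (2, 4, 8):
    mib = segmentation_output_bytes(batch_size) / 2**20
    print(f"segmentation, batch {batch_size}: {mib:.0f} MiB")
```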

Spectral Band Setup

2.4. Software Implementation

2.5. Computation Constraints and Experimental Setup

3. Results

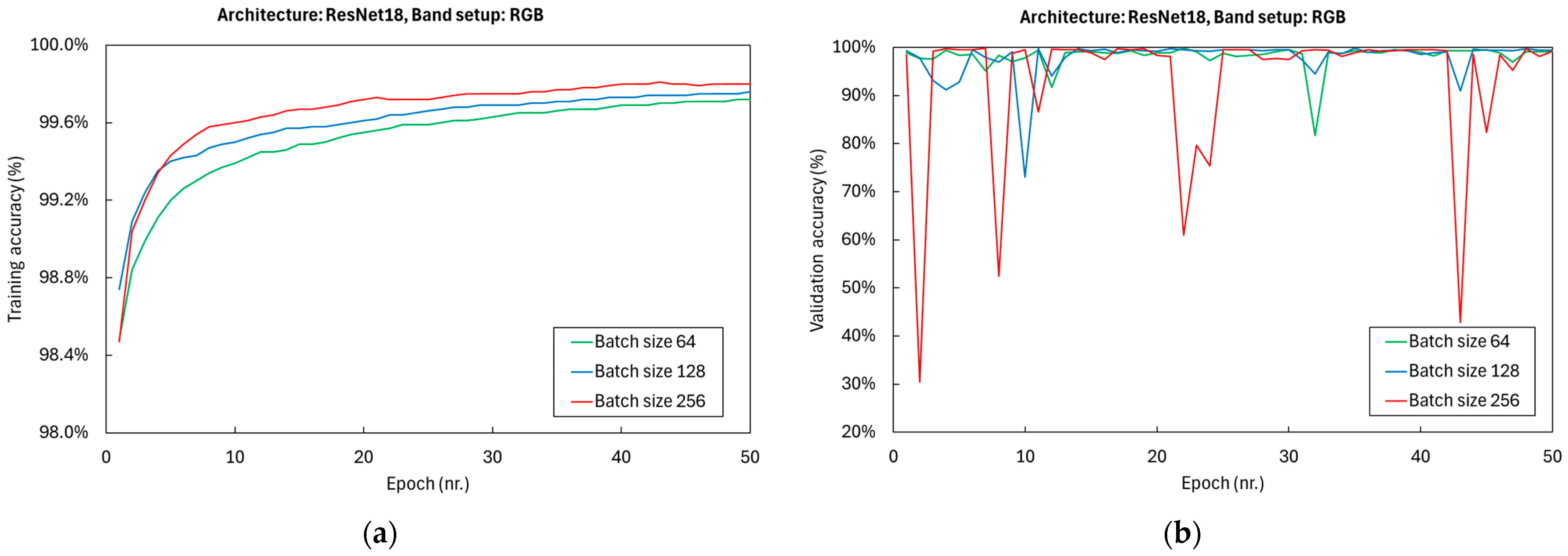

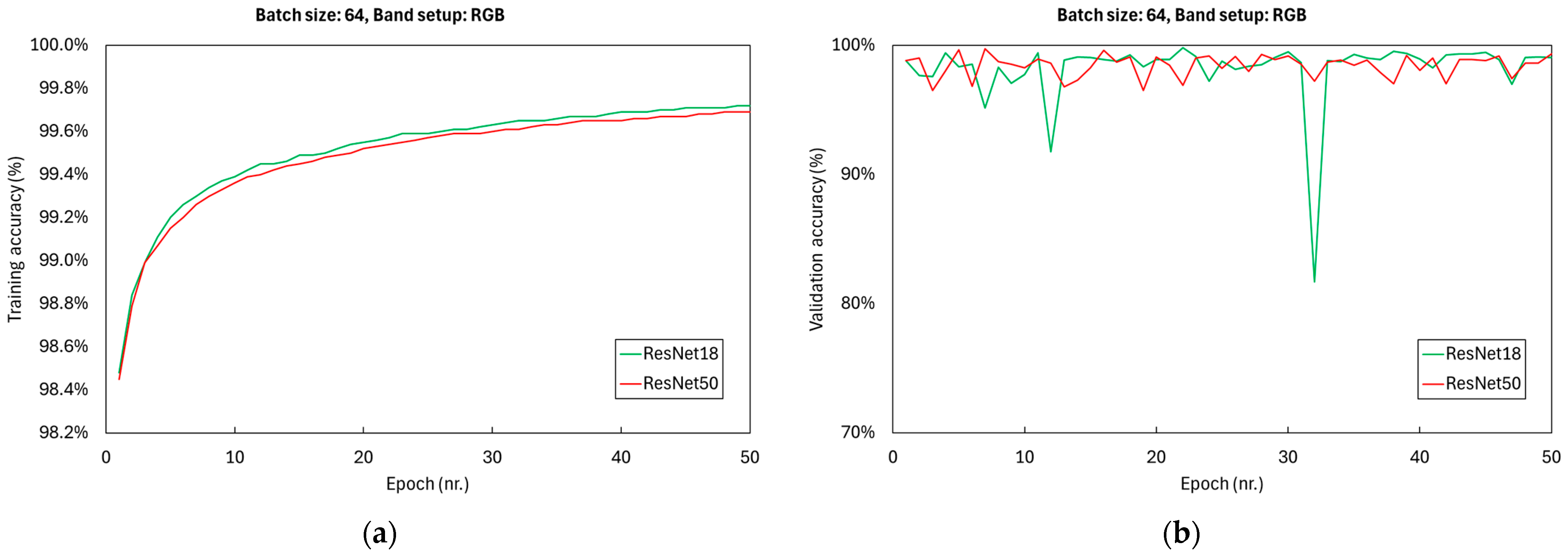

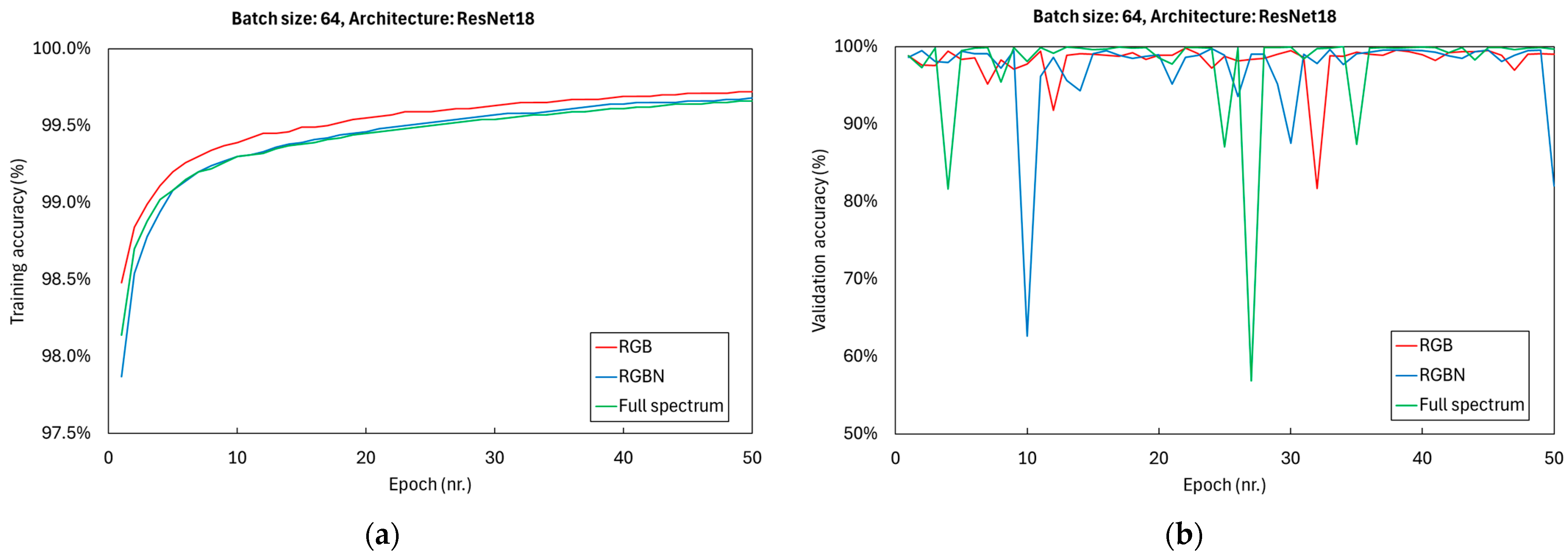

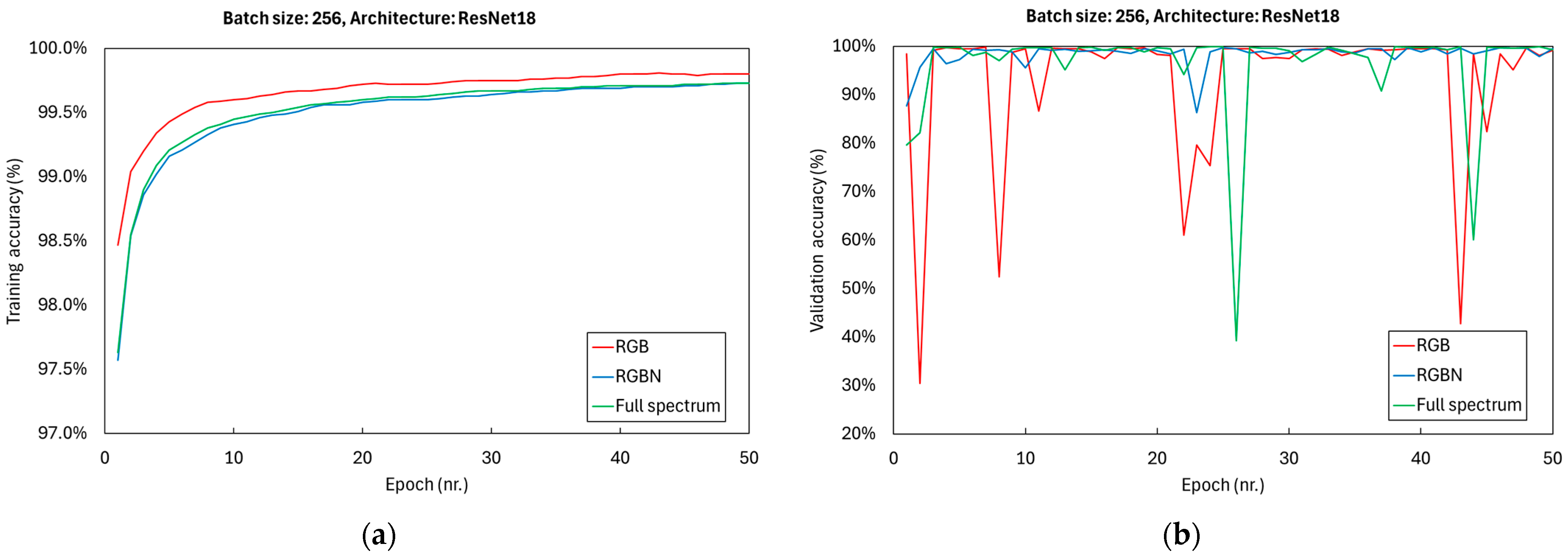

3.1. Image Classification Task

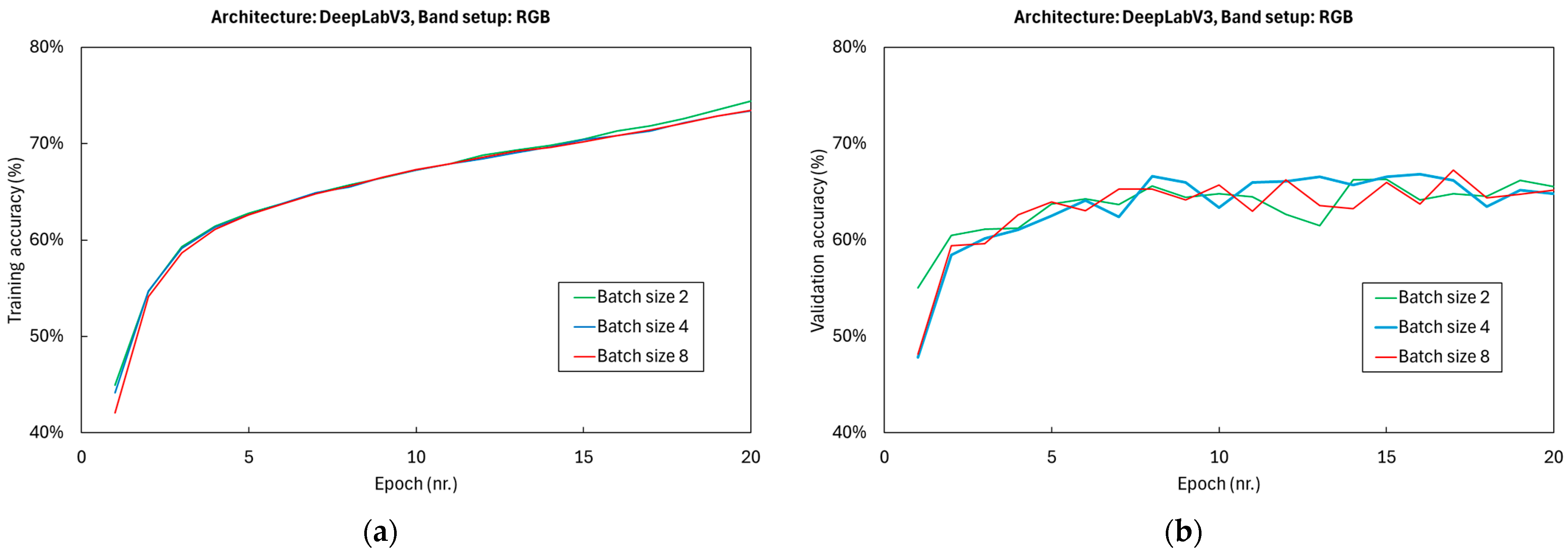

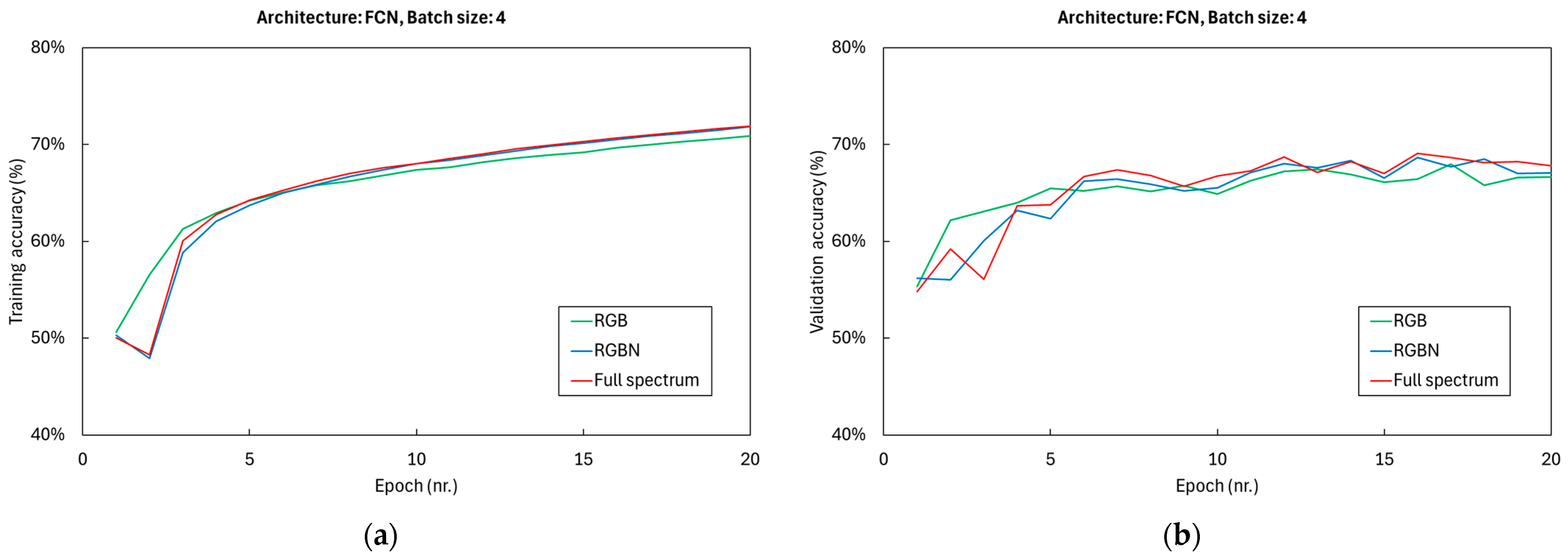

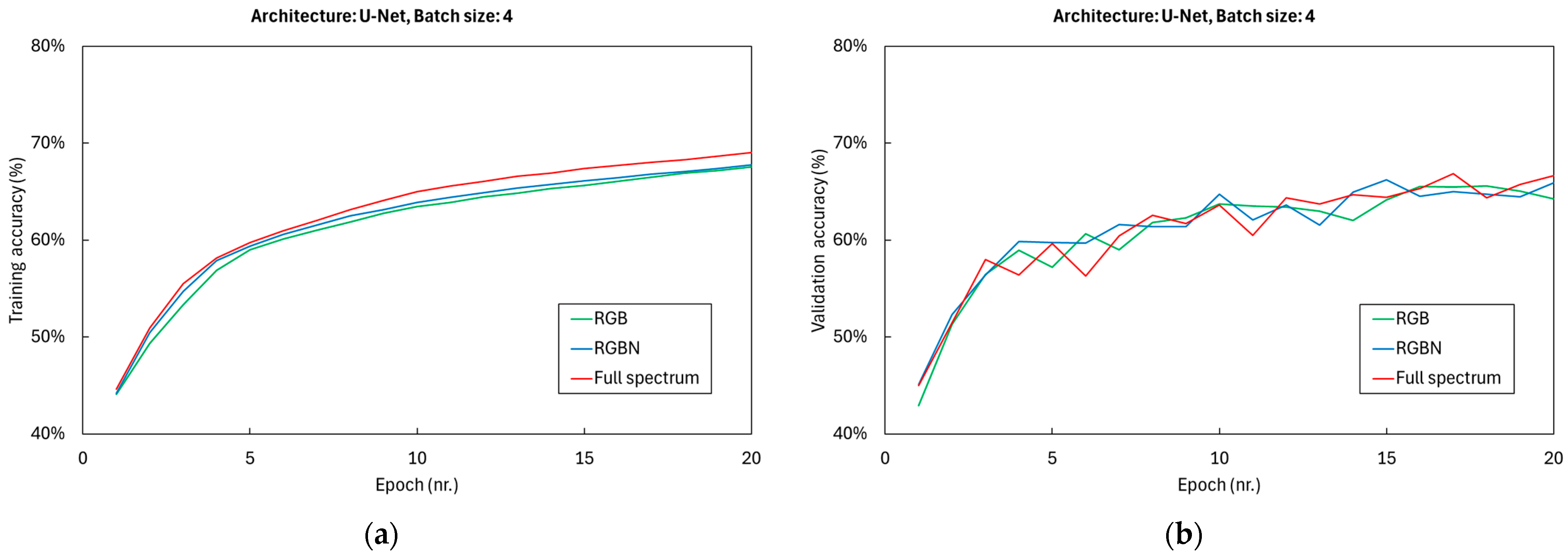

3.2. Semantic Segmentation

4. Discussion

4.1. Image Classification Task

4.1.1. Impact of Batch Size

4.1.2. Impact of Architecture

4.1.3. Impact of Spectral Band Setup

4.2. Semantic Segmentation Task

4.2.1. Impact of Batch Size

4.2.2. Impact of Architecture

4.2.3. Impact of Spectral Band Setup

4.3. Comparison with Similar Studies

- The vegetation was similar, with many species of birch, aspen, and needle leaf in both biomes [76];

- The presence of different degrees of forestry management activities;

- The use of RGB and RGBN optical images;

- The use of the ResNet architecture with batches of 256 images.

4.4. Current Limitations and Future Perspectives

- Computational resources: Running all the experiments with the demanding ResNet50 architecture would have required more computational power. Likewise, limited computational resources slowed processing and restricted the semantic segmentation experiments. Overall, with more resources and faster data processing, we could have tested more combinations of parameters. However, our focus was on limited computational resources.

- Dataset: This study focused only on the AnthroProtect dataset, which was built ad hoc to study wilderness. Unfortunately, we could not test the architectures on other data. This limitation is also due to the enormous effort required to build such a dataset.

- The contribution of larger batch sizes.

- The contribution of each spectral band, with a specific focus on its individual contribution to accuracy. Also, the architectures used in this study have been optimised to work on RGB images. It would be interesting to investigate what this means when using a multiclass and multispectral dataset and whether the architectures could be modified to fully exploit the spectral richness of the input data.

- Linked to the previous point, the impact of hyperspectral data on wilderness studies.

- The investigation of more recent and less commonly used neural networks, such as EfficientNets or Vision Transformers (ViTs), which might offer improvements in both image classification and semantic segmentation. However, our focus was on already well-known and used architectures.

- A deeper investigation of the trade-off between training efficiency and final accuracy. In this regard, it would make sense to explore techniques such as adaptive batch sizing or optimisation algorithms that can dynamically adjust during training.

- Finally, testing deep learning on different wilderness datasets. Ongoing research on foundation models increasingly focuses on their applications in satellite imaging, which is an interesting direction that warrants further investigation.
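Regarding the point above on architectures optimised for RGB images, one heuristic sometimes used to reuse RGB-pretrained weights with extra spectral bands is to widen the first convolution and initialise each new input channel with the mean of the three RGB kernels. The sketch below illustrates that idea on plain nested lists; it is not the method used in this study, and the function name and weight layout are assumptions.

```python
def expand_first_conv(rgb_filters: list, num_bands: int) -> list:
    """Widen first-layer filters from 3 input channels to `num_bands`.

    `rgb_filters` is laid out as [out_channel][in_channel][row][col].
    The RGB kernels are copied unchanged; every extra channel receives
    the element-wise mean of the three RGB kernels.
    """
    expanded = []
    for out_filter in rgb_filters:
        rows = len(out_filter[0])
        cols = len(out_filter[0][0])
        mean_kernel = [
            [sum(out_filter[c][i][j] for c in range(3)) / 3 for j in range(cols)]
            for i in range(rows)
        ]
        new_filter = [[row[:] for row in kernel] for kernel in out_filter]
        for _ in range(num_bands - 3):
            new_filter.append([row[:] for row in mean_kernel])
        expanded.append(new_filter)
    return expanded


# One toy filter with 1 x 1 kernels for the three RGB input channels.
toy_rgb_filters = [[[[1.0]], [[2.0]], [[3.0]]]]
rgbn_filters = expand_first_conv(toy_rgb_filters, num_bands=4)
print(rgbn_filters)
```

Whether such an initialisation actually helps on Sentinel-2 data is an empirical question that fits the band-contribution analysis proposed above.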

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Di Marco, M.; Ferrier, S.; Harwood, T.D.; Hoskins, A.J.; Watson, J.E.M. Wilderness areas halve the extinction risk of terrestrial biodiversity. Nature 2019, 573, 582–585. [Google Scholar] [CrossRef]

- Watson, J.E.M.; Evans, T.; Venter, O.; Williams, B.; Tulloch, A.; Stewart, C.; Thompson, I.; Ray, J.C.; Murray, K.; Salazar, A.; et al. The exceptional value of intact forest ecosystems. Nat. Ecol. Evol. 2018, 2, 599–610. [Google Scholar] [CrossRef]

- Maxwell, S.L.; Cazalis, V.; Dudley, N.; Hoffmann, M.; Rodrigues, A.S.L.; Stolton, S.; Visconti, P.; Woodley, S.; Kingston, N.; Lewis, E.; et al. Area-based conservation in the twenty-first century. Nature 2020, 586, 217–227. [Google Scholar] [CrossRef] [PubMed]

- Allan, J.R.; Venter, O.; Watson, J.E.M. Temporally inter-comparable maps of terrestrial wilderness and the Last of the Wild. Sci. Data 2017, 4, 170187. [Google Scholar] [CrossRef]

- Ekim, B.; Stomberg, T.T.; Roscher, R.; Schmitt, M. MapInWild: A remote sensing dataset to address the question of what makes nature wild [Software and Data Sets]. IEEE Geosci. Remote Sens. Mag. 2023, 11, 103–114. [Google Scholar] [CrossRef]

- Emam, A.; Farag, M.; Roscher, R. Confident Naturalness Explanation (CNE): A Framework to Explain and Assess Patterns Forming Naturalness Motivation. IEEE Geosci. Remote Sens. Lett. 2024, 21, 8500505. [Google Scholar] [CrossRef]

- Emam, A.; Stomberg, T.; Roscher, R. Leveraging Activation Maximization and Generative Adversarial Training to Recognize and Explain Patterns in Natural Areas in Satellite Imagery Motivation. IEEE Geosci. Remote Sens. Lett. 2024, 21, 8500105. [Google Scholar] [CrossRef]

- Raiyani, K.; Gonçalves, T.; Rato, L.; Salgueiro, P.; Marques da Silva, J.R. Sentinel-2 Image Scene Classification: A Comparison between Sen2Cor and a Machine Learning Approach. Remote Sens. 2021, 13, 300. [Google Scholar] [CrossRef]

- Cheng, G.; Xie, X.; Han, J.; Guo, L.; Xia, G.-S. Remote Sensing Image Scene Classification Meets Deep Learning: Challenges, Methods, Benchmarks, and Opportunities. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 3735–3756. [Google Scholar] [CrossRef]

- Saha, S.; Shahzad, M.; Mou, L.; Song, Q.; Zhu, X.X. Unsupervised Single-Scene Semantic Segmentation for Earth Observation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5228011. [Google Scholar] [CrossRef]

- Khan, S.D.; Basalamah, S. Multi-Branch Deep Learning Framework for Land Scene Classification in Satellite Imagery. Remote Sens. 2023, 15, 3408. [Google Scholar] [CrossRef]

- Ouma, Y.O.; Keitsile, A.; Nkwae, B.; Odirile, P.; Moalafhi, D.; Qi, J. Urban land-use classification using machine learning classifiers: Comparative evaluation and post-classification multi-feature fusion approach. Eur. J. Remote Sens. 2023, 56, 2173659. [Google Scholar] [CrossRef]

- Vali, A.; Comai, S.; Matteucci, M. Deep Learning for Land Use and Land Cover Classification Based on Hyperspectral and Multispectral Earth Observation Data: A Review. Remote Sens. 2020, 12, 2495. [Google Scholar] [CrossRef]

- Dimitrovski, I.; Kitanovski, I.; Kocev, D.; Simidjievski, N. Current Trends in Deep Learning for Earth Observation: An Open-Source Benchmark Arena for Image Classification. ISPRS J. Photogramm. Remote Sens. 2023, 197, 18–35. [Google Scholar] [CrossRef]

- Hoeser, T.; Kuenzer, C. Object Detection and Image Segmentation with Deep Learning on Earth Observation Data: A Review-Part I: Evolution and Recent Trends. Remote Sens. 2020, 12, 1667. [Google Scholar] [CrossRef]

- Yu, X.; Li, S.; Zhang, Y. Incorporating convolutional and transformer architectures to enhance semantic segmentation of fine-resolution urban images. Eur. J. Remote Sens. 2024, 57, 2361768. [Google Scholar] [CrossRef]

- El Sakka, M.; Mothe, J.; Ivanovici, M. Images and CNN applications in smart agriculture. Eur. J. Remote Sens. 2024, 57, 2352386. [Google Scholar] [CrossRef]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef]

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536. [Google Scholar] [CrossRef]

- Liang, J.; Xu, J.; Shen, H.; Fang, L. Land-use classification via constrained extreme learning classifier based on cascaded deep convolutional neural networks. Eur. J. Remote Sens. 2020, 53, 219–232. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the 26th Annual Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; ISBN 9781627480031. Available online: https://proceedings.neurips.cc/paper/2012 (accessed on 20 September 2025).

- Coţolan, L.; Moldovan, D. Applicability of pre-trained CNNs in temperate deforestation detection. Eur. J. Remote Sens. 2024, 57, 2367221. [Google Scholar] [CrossRef]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the 3rd International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015; pp. 1–14. Available online: https://dblp.org/db/conf/iclr/iclr2015.html (accessed on 20 September 2025).

- Gao, Y.; Shi, J.; Li, J.; Wang, R. Remote sensing scene classification based on high-order graph convolutional network. Eur. J. Remote Sens. 2021, 54 (Suppl. S1), 141–155. [Google Scholar] [CrossRef]

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar] [CrossRef]

- Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017. [Google Scholar] [CrossRef]

- Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 10–15 June 2019; Available online: https://proceedings.mlr.press/v97/ (accessed on 20 September 2025).

- Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440. [Google Scholar] [CrossRef]

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495. [Google Scholar] [CrossRef]

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Volume 9351, pp. 234–241. [Google Scholar] [CrossRef]

- Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848. [Google Scholar] [CrossRef]

- Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818. [Google Scholar]

- Skidmore, A.K.; Pettorelli, N.; Coops, N.C.; Geller, G.N.; Hansen, M.; Lucas, R.; Mücher, C.A.; O’Connor, B.; Paganini, M.; Pereira, H.M.; et al. Environmental Science: Agree on Biodiversity Metrics to Track from Space. Nature 2015, 523, 403–405. [Google Scholar] [CrossRef]

- Luque, S.; Pettorelli, N.; Vihervaara, P.; Wegmann, M. Improving Biodiversity Monitoring Using Satellite Remote Sensing to Provide Solutions Towards the 2020 Conservation Targets. Methods Ecol. Evol. 2018, 9, 1784–1786. [Google Scholar] [CrossRef]

- Giuliani, G.; Egger, E.; Italiano, J.; Poussin, C.; Richard, J.-P.; Chatenoux, B. Essential Variables for Environmental Monitoring: What Are the Possible Contributions of Earth Observation Data Cubes? Data 2020, 5, 100. [Google Scholar] [CrossRef]

- Ustin, S.L.; Middleton, E.M. Current and near-term advances in Earth observation for ecological applications. Ecol. Process. 2021, 10, 1. [Google Scholar] [CrossRef] [PubMed]

- Šandera, J.; Štych, P. Mapping changes of grassland to arable land using automatic machine learning of stacked ensembles and H2O library. Eur. J. Remote Sens. 2023, 57, 2294127. [Google Scholar] [CrossRef]

- Li, W.; Zuo, X.; Liu, Z.; Nie, L.; Li, H.; Wang, J.; Cui, L. Predictions of Spartina alterniflora leaf functional traits based on hyperspectral data and machine learning models. Eur. J. Remote Sens. 2023, 57, 2294951. [Google Scholar] [CrossRef]

- Morais, T.G.; Rodrigues, N.R.; Gama, I.; Domingos, T.; Teixeira, R.F.M. Development of an algorithm for identification of sown biodiverse pastures in Portugal. Eur. J. Remote Sens. 2023, 56, 2238878. [Google Scholar] [CrossRef]

- Dang, K.B.; Nguyen, M.H.; Nguyen, D.A.; Phan, T.T.H.; Giang, T.L.; Pham, H.H.; Nguyen, T.N.; Tran, T.T.V.; Bui, D.T. Coastal Wetland Classification with Deep U-Net Convolutional Networks and Sentinel-2 Imagery: A Case Study at the Tien Yen Estuary of Vietnam. Remote Sens. 2020, 12, 3270. [Google Scholar] [CrossRef]

- Fisher, M.; The Wildland Research Institute. Review of Status and Conservation of Wild Land in Europe. 2010. Available online: http://www.self-willed-land.org.uk/rep_res/0109251.pdf (accessed on 20 September 2025).

- Sanderson, E.W.; Jaiteh, M.; Levy, M.A.; Redford, K.H.; Wannebo, A.V.; Woolmer, G. The Human Footprint and the Last of the Wild: The Human Footprint is a Global Map of Human Influence on the Land Surface, Which Suggests That Human Beings are Stewards of Nature, Whether We Like It or Not. BioScience 2002, 52, 891–904. [Google Scholar] [CrossRef]

- University of Bonn, Remote Sensing Group. AnthroProtect Dataset. Available online: https://rs.ipb.uni-bonn.de/data/anthroprotect/ (accessed on 20 September 2025).

- Stomberg, T.T.; Leonhardt, J.; Weber, I.; Roscher, R. Recognizing protected and anthropogenic patterns in landscapes using interpretable machine learning and satellite imagery. Front. Artif. Intell. 2023, 6, 1278118. [Google Scholar] [CrossRef] [PubMed]

- Gorelick, N.; Hancher, M.; Dixon, M.; Ilyushchenko, S.; Thau, D.; Moore, R. Google Earth Engine: Planetary-scale geospatial analysis for everyone. Remote Sens. Environ. 2017, 202, 18–27. [Google Scholar] [CrossRef]

- Zeng, D.; Liao, M.; Tavakolian, M.; Guo, Y.; Zhou, B.; Hu, D.; Pietikäinen, M.; Liu, L. Deep Learning for Scene Classification: A Survey. arXiv 2021, arXiv:2101.10531. [Google Scholar] [CrossRef]

- Mo, Y.; Wu, Y.; Yang, X.; Liu, F.; Liao, Y. Review the State-of-the-Art Technologies of Semantic Segmentation Based on Deep Learning. Neurocomputing 2022, 493, 626–646. [Google Scholar] [CrossRef]

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 448–456. [Google Scholar]

- Shehab, L.H.; Al-Ani, A.; Al-Ani, T.; Jassim, S.; Hussain, A. An efficient brain tumor image segmentation based on deep residual networks (ResNets). J. King Saud Univ. Eng. Sci. 2021, 33, 404–412. [Google Scholar] [CrossRef]

- Smith, S.L.; Kindermans, P.-J.; Ying, C.; Le, Q.V. Don’t Decay the Learning Rate, Increase the Batch Size. arXiv 2018, arXiv:1711.00489. [Google Scholar] [CrossRef]

- Wilson, D.R.; Martinez, T.R. The general inefficiency of batch training for gradient descent learning. Neural Netw. 2003, 16, 1429–1451. [Google Scholar] [CrossRef]

- Bengio, Y. Practical Recommendations for Gradient-Based Training of Deep Architectures. In Neural Networks: Tricks of the Trade; Lecture Notes in Computer Science; Montavon, G., Orr, G.B., Müller, K.R., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; Volume 7700, pp. 437–478. [Google Scholar] [CrossRef]

- Goyal, P.; Dollár, P.; Girshick, R.; Noordhuis, P.; Wesolowski, L.; Kyrola, A.; Tulloch, A.; Jia, Y.; He, K. Accurate, Large Minibatch SGD: Training ImageNet in 1 Hour. arXiv 2018, arXiv:1706.02677. [Google Scholar] [CrossRef]

- Keskar, N.S.; Nocedal, J.; Tang, P.T.P.; Mudigere, D.; Smelyanskiy, M. On large-batch training for deep learning: Generalization gap and sharp minima. In Proceedings of the 5th International Conference on Learning Representations, Toulon, France, 24–26 April 2017; Available online: https://dblp.org/db/conf/iclr/iclr2017.html (accessed on 20 September 2025).

- Cheng, K.; Scott, G.J. Deep Seasonal Network for Remote Sensing Imagery Classification of Multi-Temporal Sentinel-2 Data. Remote Sens. 2023, 15, 4705. [Google Scholar] [CrossRef]

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 2009; pp. 248–255. [Google Scholar] [CrossRef]

- Le, Q.T.; Dang, K.B.; Giang, T.L.; Tong, T.H.A.; Nguyen, V.G.; Nguyen, T.D.L.; Yasir, M. Deep Learning Model Development for Detecting Coffee Tree Changes Based on Sentinel-2 Imagery in Vietnam. IEEE Access 2022, 10, 109097–109107. [Google Scholar] [CrossRef]

- Yan, C.; Fan, X.; Fan, J.; Yu, L.; Wang, N.; Chen, L.; Li, X. HyFormer: Hybrid Transformer and CNN for Pixel-Level Multispectral Image Land Cover Classification. Int. J. Environ. Res. Public Health 2023, 20, 3059. [Google Scholar] [CrossRef]

- Astola, H.; Seitsonen, L.; Halme, E.; Molinier, M.; Lönnqvist, A. Deep Neural Networks with Transfer Learning for Forest Variable Estimation Using Sentinel-2 Imagery in Boreal Forest. Remote Sens. 2021, 13, 2392. [Google Scholar] [CrossRef]

- Orynbaikyzy, A.; Gessner, U.; Mack, B.; Conrad, C. Crop Type Classification Using Fusion of Sentinel-1 and Sentinel-2 Data: Assessing the Impact of Feature Selection, Optical Data Availability, and Parcel Sizes on the Accuracies. Remote Sens. 2020, 12, 2779. [Google Scholar] [CrossRef]

- Praticò, S.; Solano, F.; Di Fazio, S.; Modica, G. Machine Learning Classification of Mediterranean Forest Habitats in Google Earth Engine Based on Seasonal Sentinel-2 Time-Series and Input Image Composition Optimisation. Remote Sens. 2021, 13, 586. [Google Scholar] [CrossRef]

- Chaves, M.E.D.; Picoli, M.C.A.; Sanches, D.I. Recent Applications of Landsat 8/OLI and Sentinel-2/MSI for Land Use and Land Cover Mapping: A Systematic Review. Remote Sens. 2020, 12, 3062. [Google Scholar] [CrossRef]

- Abdi, A.M. Land Cover and Land Use Classification Performance of Machine Learning Algorithms in a Boreal Landscape Using Sentinel-2 Data. GIScience Remote Sens. 2020, 57, 1–20. [Google Scholar] [CrossRef]

- Zhang, T.; Su, J.; Liu, C.; Chen, W.-H.; Liu, H.; Liu, G. Band Selection in Sentinel-2 Satellite for Agriculture Applications. In Proceedings of the 23rd International Conference on Automation and Computing, Huddersfield, UK, 7–8 September 2017; pp. 1–6. [Google Scholar] [CrossRef]

- Simón Sánchez, A.-M.; González-Piqueras, J.; De La Ossa, L.; Calera, A. Convolutional Neural Networks for Agricultural Land Use Classification from Sentinel-2 Image Time Series. Remote Sens. 2022, 14, 5373. [Google Scholar] [CrossRef]

- Gaia, V.; Emam, A.; Farag, M.; Genzano, N.; Roscher, R.; Gianinetto, M. Source code for the optimisation of deep learning parameters for classification and segmentation of wilderness areas with Copernicus Sentinel-2 images. Zenodo 2024. [Google Scholar] [CrossRef]

- Kingma, D.P.; Ba, J.L. Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, Conference Track Proceedings, San Diego, CA, USA, 7–9 May 2015; Volume 1. [Google Scholar]

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980. [Google Scholar] [CrossRef]

- Wagner, F.H.; Sanchez, A.; Takahashi, F.; Latorraca, M.; Ferreira, M.; Valeriano, D.; Rigueira, D.; Latorraca, L.; Lefebvre, J.; Salgado, C.; et al. Using the U-net convolutional network to map forest types and disturbance in the Atlantic rainforest with very high resolution images. Remote Sens. Ecol. Conserv. 2019, 5, 360–375. [Google Scholar] [CrossRef]

- Hızal, C.; Gülsu, G.; Akgün, H.Y.; Kulavuz, B.; Bakırman, T.; Aydın, A.; Bayram, B. Forest Semantic Segmentation Based on Deep Learning Using Sentinel-2 Images. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2024, XLVIII-4/W9, 229–236. [Google Scholar] [CrossRef]

- Waldeland, A.U.; Trier, Ø.D.; Salberg, A.-B. Forest mapping and monitoring in Africa using Sentinel-2 data and deep learning. Int. J. Appl. Earth Obs. Geoinf. 2022, 111, 102840. [Google Scholar] [CrossRef]

- Brandt, M.; Tucker, C.J.; Kariryaa, A.; Rasmussen, K.; Abel, C.; Small, J.; Chave, J.; Saatchi, S.; Meyfroidt, P.; Fanin, T.; et al. An unexpectedly large count of trees in the West African Sahara and Sahel. Nature 2020, 587, 78–82. [Google Scholar] [CrossRef]

- De Bem, P.P.; Mello, M.P.; Formaggio, A.R.; Fernandes, R.; Rudorff, B.F.T.; Oliveira, C.G.; Berveglieri, A. Change detection of deforestation in the Brazilian Amazon using Landsat data and convolutional neural networks. Remote Sens. 2020, 12, 901. [Google Scholar] [CrossRef]

- Sylvain, J.-D.; Drolet, G.; Brown, N. Mapping dead forest cover using a deep convolutional neural network and digital aerial photography. ISPRS J. Photogramm. Remote Sens. 2019, 156, 14–26. [Google Scholar] [CrossRef]

- Breidenbach, J.; Puliti, S.; Solberg, S.; Næsset, E.; Bollandsås, O.M.; Gobakken, T. National mapping and estimation of forest area by dominant tree species using Sentinel-2 data. Can. J. For. Res. 2021, 51, 365–379. [Google Scholar] [CrossRef]

| Architecture | Batch Size | Band Setup | Calculation Time | Training Accuracy | Validation Accuracy | Testing Accuracy | F1 Score (Testing Set) |
|---|---|---|---|---|---|---|---|
| ResNet18 | 64 | RGB | 0 d 11 h 5 min | 99.72% | 99.04% | 99.54% | 99.60% |
| ResNet18 | 64 | RGBN | 0 d 11 h 8 min | 99.68% | 82.02% | 90.41% | N/A |
| ResNet18 | 64 | Full spectrum | 0 d 11 h 17 min | 99.66% | 99.71% | 99.79% | 99.74% |
| ResNet18 | 128 | RGB | 0 d 5 h 58 min | 99.76% | 99.37% | 99.54% | 99.36% |
| ResNet18 | 256 | RGB | 0 d 3 h 24 min | 99.80% | 99.16% | 99.67% | 99.54% |
| ResNet18 | 256 | RGBN | 0 d 3 h 13 min | 99.73% | 99.46% | 99.92% | 99.94% |
| ResNet18 | 256 | Full spectrum | 0 d 3 h 49 min | 99.73% | 99.25% | 99.75% | 99.88% |
| ResNet50 | 64 | RGB | 1 d 1 h 56 min | 99.69% | 99.33% | 99.79% | 99.71% |
| ResNet50 | 64 | Full spectrum | 1 d 2 h 12 min | 99.62% | 98.95% | 99.21% | 98.65% |
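The F1 score in the last column is the harmonic mean of precision and recall. The following is a minimal sketch for the binary wild/anthropogenic case, using made-up labels; treating 'wild' as the positive class is an assumption, and the function is illustrative rather than the code used to produce the table.

```python
def f1_score(y_true: list, y_pred: list, positive: str = "wild") -> float:
    """Harmonic mean of precision and recall for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Made-up labels for six tiles.
y_true = ["wild", "wild", "wild", "anthropogenic", "anthropogenic", "wild"]
y_pred = ["wild", "wild", "anthropogenic", "anthropogenic", "wild", "wild"]
toy_f1 = f1_score(y_true, y_pred)
print(toy_f1)
```

In this toy case there are three true positives, one false positive, and one false negative, so precision, recall, and F1 all equal 0.75.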

| Architecture | Batch Size | Band Setup | Calculation Time | Training Accuracy | Validation Accuracy | Testing Accuracy | mIoU |
|---|---|---|---|---|---|---|---|
| DeepLabV3 | 2 | RGB | 1 d 15 h 3 min | 74.39% | 65.54% | 66.80% | 87.65% |
| DeepLabV3 | 4 | RGB | 1 d 15 h 3 min | 74.39% | 65.54% | 66.80% | 87.65% |
| DeepLabV3 | 4 | RGBN | 1 d 16 h 4 min | 72.60% | 64.80% | 67.72% | 87.46% |
| DeepLabV3 | 4 | Full spectrum | 1 d 16 h 21 min | 74.32% | 66.82% | 67.19% | 87.95% |
| DeepLabV3 | 8 | RGB | 1 d 15 h 7 min | 73.46% | 66.92% | 66.92% | 88.28% |
| FCN | 4 | RGB | 1 d 5 h 0 min | 70.90% | 66.62% | 66.94% | 88.39% |
| FCN | 4 | RGBN | 1 d 8 h 28 min | 71.92% | 67.79% | 68.14% | 89.08% |
| FCN | 4 | Full spectrum | 1 d 7 h 12 min | 74.32% | 66.82% | 67.19% | 87.94% |
| U-Net | 4 | RGB | 1 d 0 h 7 min | 67.57% | 64.23% | 65.69% | 87.53% |
| U-Net | 4 | RGBN | 1 d 1 h 8 min | 67.73% | 65.90% | 66.54% | 88.48% |
| U-Net | 4 | Full spectrum | 1 d 1 h 8 min | 74.32% | 66.82% | 67.19% | 87.94% |
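The mIoU column reports the mean intersection over union across classes. The sketch below shows one common way to compute it for a single pair of label masks, skipping classes that appear in neither mask; the exact averaging convention behind the table is not stated here, so this should be read as an illustration with made-up masks.

```python
def mean_iou(true_mask: list, pred_mask: list, num_classes: int) -> float:
    """Average per-class intersection over union for two 2-D label masks."""
    ious = []
    for cls in range(num_classes):
        intersection = 0
        union = 0
        for true_row, pred_row in zip(true_mask, pred_mask):
            for t, p in zip(true_row, pred_row):
                in_true = t == cls
                in_pred = p == cls
                intersection += in_true and in_pred
                union += in_true or in_pred
        if union:  # ignore classes absent from both masks
            ious.append(intersection / union)
    return sum(ious) / len(ious) if ious else 0.0


# Made-up 2 x 2 masks with two classes.
true_mask = [[0, 0], [1, 1]]
pred_mask = [[0, 1], [1, 1]]
toy_miou = mean_iou(true_mask, pred_mask, num_classes=2)
print(toy_miou)
```

Here class 0 has an IoU of 1/2 and class 1 an IoU of 2/3, so the mean is 7/12.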

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Vallarino, G.; Genzano, N.; Gianinetto, M. The Potential of Deep Learning for Studying Wilderness with Copernicus Sentinel-2 Data: Some Critical Insights. Land 2025, 14, 2333. https://doi.org/10.3390/land14122333