1. Introduction

Because laser light is highly monochromatic, coherent, and collimated, lidar technology has developed rapidly. As an active measurement method, lidar is widely used in atmospheric remote sensing and environmental monitoring owing to its high temporal and spatial resolution. In particular, it has enabled important progress in the fine detection of atmospheric aerosol optical and microphysical properties, atmospheric temperature, relative humidity, and other parameters, and it has become an important tool for studying atmospheric environmental parameters and their spatiotemporal evolution. In practice, however, the lidar return signal is strongly affected by noise. As the detection range increases, the return signal weakens, and the far-field signal is easily submerged in noise [1]. Reducing the noise of the return signal is therefore of great importance.

Many methods have been proposed for denoising lidar return signals. In traditional signal processing, the Fourier transform has been widely used for signal denoising. This approach separates the useful signal on the principle that its frequency is lower than that of the noise, and it is effective for linear, stationary signals. However, the lidar signal is nonlinear and nonstationary. Processing it with the traditional Fourier transform causes significant distortion [2], which makes the noise-reduction effect unsatisfactory.
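The frequency-separation principle described above can be sketched as a hard low-pass filter in the Fourier domain. This is only an illustrative sketch: the function name `fourier_lowpass`, the `keep_fraction` parameter, the toy range-decaying return, and the noise level are all assumptions introduced here, not quantities from this paper.

```python
import numpy as np

def fourier_lowpass(signal, keep_fraction=0.1):
    """Zero every FFT bin above a cutoff, then transform back."""
    signal = np.asarray(signal, dtype=float)
    spectrum = np.fft.rfft(signal)
    # Number of low-frequency bins to keep (at least the DC bin).
    cutoff = max(1, int(len(spectrum) * keep_fraction))
    spectrum[cutoff:] = 0.0
    return np.fft.irfft(spectrum, n=len(signal))

# Toy example (hypothetical values): a smooth, range-decaying return plus noise.
rng = np.random.default_rng(0)
r = np.linspace(1.0, 10.0, 1024)             # hypothetical range axis (km)
clean = np.exp(-0.3 * r) / r**2              # toy range-decaying return
noisy = clean + rng.normal(0.0, 0.05, r.size)
denoised = fourier_lowpass(noisy, keep_fraction=0.05)
```

Because the toy return is neither periodic nor stationary, a hard cutoff of this kind tends to introduce ringing near the ends of the profile, which is one way the distortion mentioned above can appear.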

The wavelet transform overcomes some shortcomings of the traditional Fourier transform and has been widely used in signal noise reduction. When the wavelet transform is used to denoise data, the signal is divided into a low-frequency part and a high-frequency part. Useful signals are mostly concentrated in the low-frequency part, and the high-frequency part is treated as noise, so only the low-frequency part is reconstructed as the useful signal. This improves the denoising effect, but useful signal components in the high-frequency part are discarded, which can also cause significant distortion. In 2011, building on the wavelet denoising algorithm, J. Mao et al. used wavelet packet analysis to denoise the lidar signal by reconstructing both the high- and low-frequency components, thereby retrieving a more accurate extinction coefficient profile [3]. In 2016, X. Qin et al. employed an adaptive method that combined the advantages of wavelet analysis and neural networks to denoise lidar return signals and obtained better denoising results [4].
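The difference between reconstructing only the low-frequency part and keeping the large high-frequency coefficients can be sketched with a one-level Haar transform. This is a minimal illustration that assumes an even-length signal; the Haar basis, the threshold value, and the toy profile with a narrow feature are assumptions made for this example and are not the wavelets or settings used in the cited works.

```python
import numpy as np

def haar_forward(x):
    """One-level orthonormal Haar transform: (approximation, detail)."""
    x = np.asarray(x, dtype=float)
    even, odd = x[0::2], x[1::2]
    approx = (even + odd) / np.sqrt(2.0)   # low-frequency part
    detail = (even - odd) / np.sqrt(2.0)   # high-frequency part
    return approx, detail

def haar_inverse(approx, detail):
    """Invert haar_forward and interleave the samples again."""
    out = np.empty(2 * len(approx))
    out[0::2] = (approx + detail) / np.sqrt(2.0)
    out[1::2] = (approx - detail) / np.sqrt(2.0)
    return out

def soft_threshold(coeffs, t):
    """Shrink coefficients toward zero by t; small ones become zero."""
    return np.sign(coeffs) * np.maximum(np.abs(coeffs) - t, 0.0)

# Toy profile (hypothetical): smooth decay plus a narrow sharp-edged feature.
rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, 512)
clean = np.exp(-4.0 * x)
clean[201:210] += 0.5
noisy = clean + rng.normal(0.0, 0.02, x.size)

approx, detail = haar_forward(noisy)
# Low-frequency-only reconstruction: every detail coefficient is discarded.
low_only = haar_inverse(approx, np.zeros_like(detail))
# Threshold reconstruction: large detail coefficients (sharp edges) survive.
thresholded = haar_inverse(approx, soft_threshold(detail, 0.06))
```

The threshold of 0.06 is three times the assumed noise standard deviation; in practice the threshold and the number of decomposition levels would have to be chosen for the data at hand.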

In recent years, empirical mode decomposition (EMD) algorithms have also been applied to lidar signal denoising. In 1998, Huang et al. proposed the Hilbert–Huang transform, which comprises EMD and Hilbert spectral analysis and decomposes a signal layer by layer into intrinsic mode functions (IMFs) [5]. In 2009, F. Zheng et al. applied the EMD algorithm to lidar signal filtering and achieved remarkable results [6]. In 2020, X. Cheng et al. proposed a denoising method based on ensemble empirical mode decomposition (EEMD) combined with segmented singular value decomposition and the lifting wavelet transform, which is more suitable for denoising lidar return signals [7]. Moreover, in 2014, K. Dragomiretskiy et al. proposed the variational mode decomposition (VMD) method, which has clear advantages in processing nonlinear and nonstationary signals [8]. In 2018, F. Xu et al. successfully applied VMD to denoise lidar return signals, with results significantly better than those of wavelet analysis and other methods [9].

In addition, noise reduction for photon-counting lidar signals has been studied, for example by exploiting the Poisson distribution of the counts. In 2016, W. Marais et al. proposed a new method for the effective inversion of high-resolution, low signal-to-noise-ratio observations in nonuniform scenes. By using the spatial and temporal correlation in the image together with a Poisson-distributed noise model, the method produced better inversion results and preserved the spatial and temporal resolution while significantly reducing the noise [10]. In 2017, they considered the denoising and reconstruction of images corrupted by Poisson noise, proposed a regularized maximum-likelihood formulation for reconstructing Poisson images, and showed that this formulation can be solved by a coarse-to-fine proximal gradient optimization algorithm and generalizes more easily to inverse-problem settings than the BM3D denoising method [11]. In 2020, to suppress the random noise of photon-counting lidar signals, M. Hayman et al. introduced Poisson thinning to generate statistically independent profiles from photon-counting data, which makes it possible to tune the signal processing and to optimize the smoothing kernel for a specific photon-counting scene to achieve optimal filtering [12].
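The idea of generating statistically independent profiles from a single photon-counting profile can be sketched as a binomial split of the counts (commonly called Poisson thinning). If the count in a bin is Poisson distributed with mean λ and each photon is assigned to the first profile with probability p, the two resulting profiles are independent Poisson variables with means pλ and (1 − p)λ. The function name `poisson_thin`, the toy rate, and the use of one profile for fitting and the other for checking are assumptions for illustration, not the implementation of the cited work.

```python
import numpy as np

def poisson_thin(counts, p=0.5, rng=None):
    """Split integer photon counts into two independent Poisson profiles."""
    rng = np.random.default_rng() if rng is None else rng
    counts = np.asarray(counts, dtype=np.int64)
    # Each detected photon goes to the first profile with probability p.
    first = rng.binomial(counts, p)
    second = counts - first
    return first, second

# Toy photon-counting profile (hypothetical mean rate per range bin).
rng = np.random.default_rng(0)
rate = 50.0 * np.exp(-np.linspace(0.0, 5.0, 200))
counts = rng.poisson(rate)
fit_profile, check_profile = poisson_thin(counts, p=0.5, rng=rng)
```

One profile can then be smoothed with a candidate kernel while the other serves as an independent check on the result, so that filter settings can be compared without a noise-free reference.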

In recent years, deep learning has become a popular technology that is widely used in speech recognition, computer vision, natural language processing, and other fields [13]. Deep learning methods are also used in the lidar field. In 2016, G. Jorge et al. carried out a preliminary study on the applicability of deep learning to improving lidar-based biomass estimation, and the results showed that an autoencoder statistically improved the quality of multiple linear regression estimation [14]. In 2020, S. Jennifer et al. used machine learning methods to detect boundary layer heights from the lidar backscatter signal [15]. In 2021, A. Andreas et al. proposed a new data-driven lidar waveform processing method, which extracts depth information using convolutional neural networks and generates realistic waveform data sets based on specific experimental parameters or large-scale synthetic scenes [16].

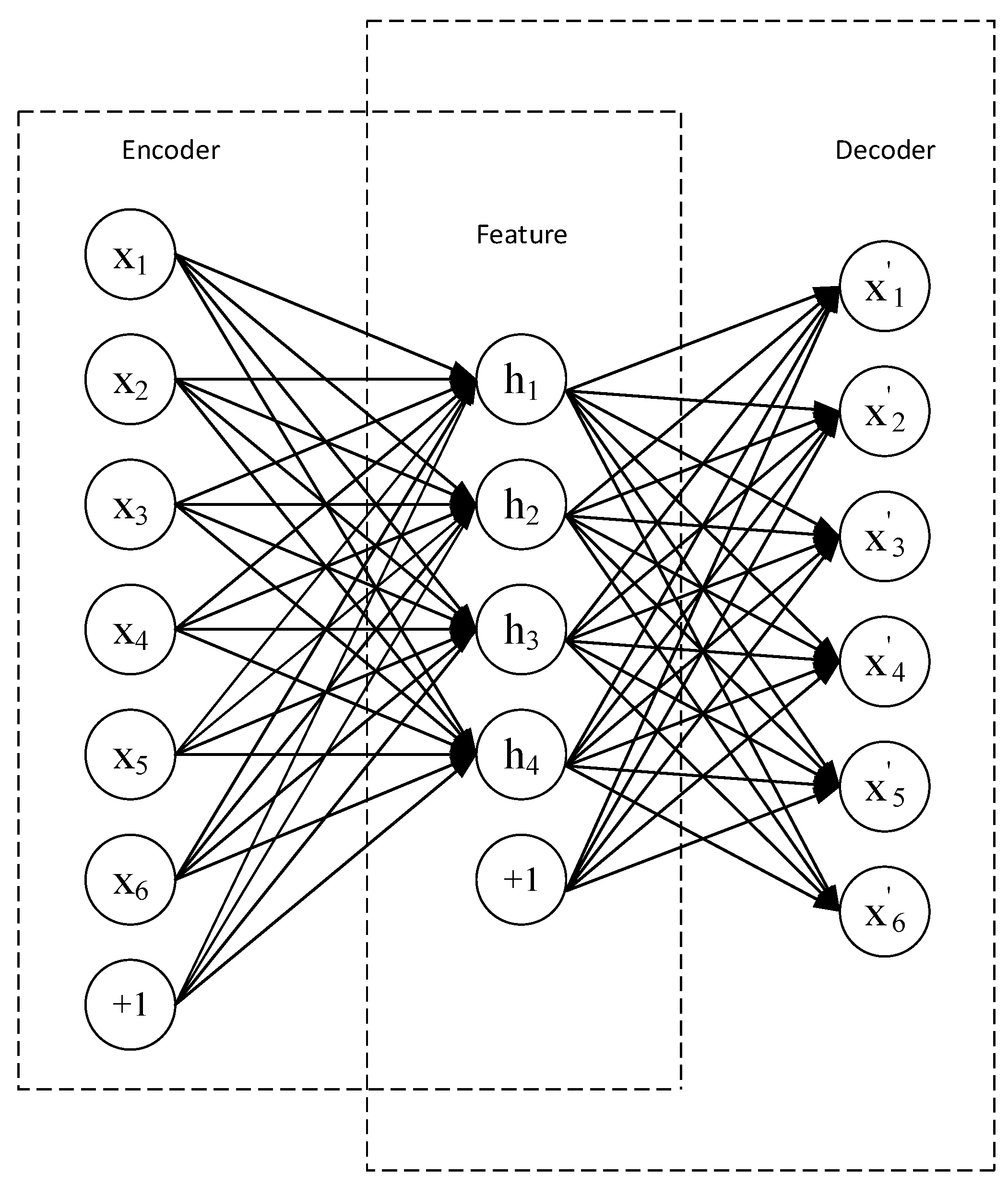

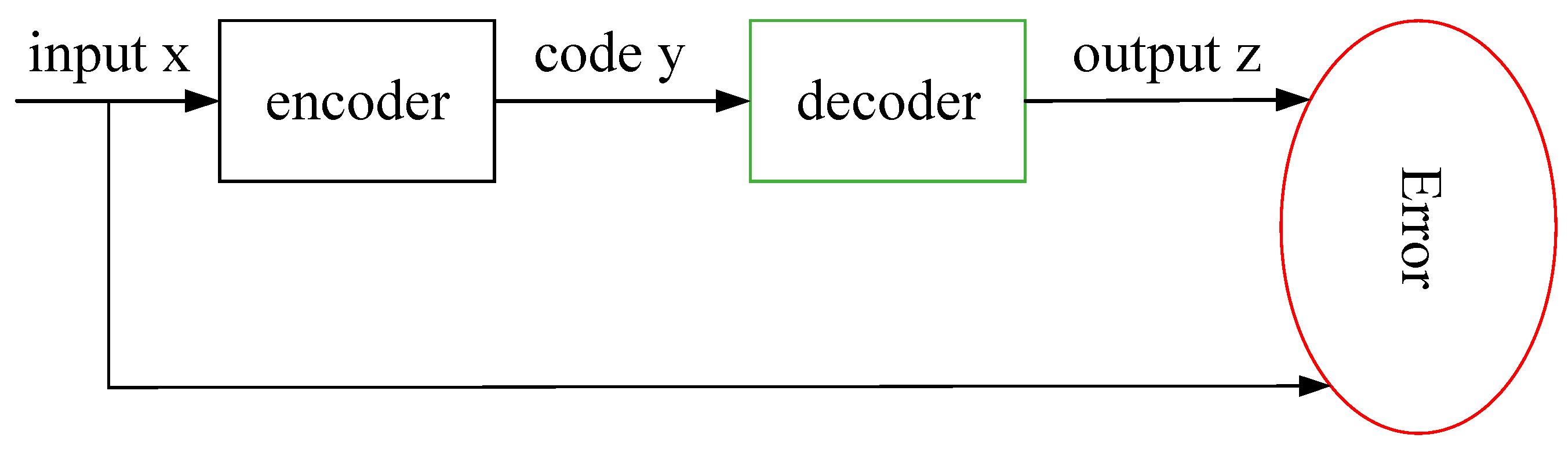

Although researchers have developed a large number of denoising methods, few have applied deep learning to denoising lidar signals. At present, the wavelet neural network algorithm is used for signal denoising, but such an adaptive algorithm usually requires a given tutor (reference) signal, which is difficult to provide in actual lidar measurements. An adaptive deep learning denoising method that does not require a tutor signal therefore has good potential. As an unsupervised learning algorithm, the autoencoder meets this requirement well. The autoencoder is a type of neural network used for feature extraction and data dimensionality reduction, and it consists of two parts: encoding and decoding [17]. The encoding part produces a sparse representation of the input data, and the decoding part reconstructs the data. The autoencoder developed from an initial data dimensionality reduction method into a data generation model, and it has evolved into several variants, such as the denoising autoencoder, the sparse autoencoder, the convolutional autoencoder, the contractive autoencoder, and the variational autoencoder [18]. Among them, the convolutional autoencoder was proposed by Masci et al. in 2011 to build convolutional neural networks [19]. While retaining the advantages of traditional autoencoders, convolutional autoencoders add the strong feature-extraction capability of convolutional neural networks, which makes them more suitable for feature extraction from lidar return signals.
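The encoding and decoding structure described above can be sketched as the forward pass of a very small one-dimensional convolutional autoencoder. This is a sketch under strong simplifying assumptions: a single channel, one convolutional layer on each side, random untrained kernels, and hypothetical names such as `encode`, `decode`, and `reconstruction_loss`. It is not the network architecture used in this paper.

```python
import numpy as np

def conv1d_same(x, kernel):
    """1-D convolution that keeps the input length."""
    return np.convolve(x, kernel, mode="same")

def encode(x, kernel):
    """Convolution + ReLU, then max-pooling with stride 2 (even length assumed)."""
    h = np.maximum(conv1d_same(x, kernel), 0.0)
    return h.reshape(-1, 2).max(axis=1)

def decode(z, kernel):
    """Nearest-neighbour upsampling by 2, then a linear convolution."""
    up = np.repeat(z, 2)
    return conv1d_same(up, kernel)

def reconstruction_loss(x, target, enc_kernel, dec_kernel):
    """Mean squared error between the reconstruction of x and a target."""
    recon = decode(encode(x, enc_kernel), dec_kernel)
    return float(np.mean((recon - target) ** 2))

rng = np.random.default_rng(0)
enc_kernel = rng.normal(0.0, 0.5, 5)    # untrained, hypothetical weights
dec_kernel = rng.normal(0.0, 0.5, 5)
profile = np.exp(-np.linspace(0.0, 4.0, 256))            # toy return signal
noisy = profile + rng.normal(0.0, 0.05, profile.size)

code = encode(noisy, enc_kernel)        # compressed representation, length 128
recon = decode(code, dec_kernel)        # reconstruction, length 256
# A plain autoencoder uses its own input as the reconstruction target.
loss = reconstruction_loss(noisy, noisy, enc_kernel, dec_kernel)
```

Training would adjust the kernels to minimize this loss over many signals; that step is omitted here, so the reconstruction produced by the random kernels is not meaningful in itself.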

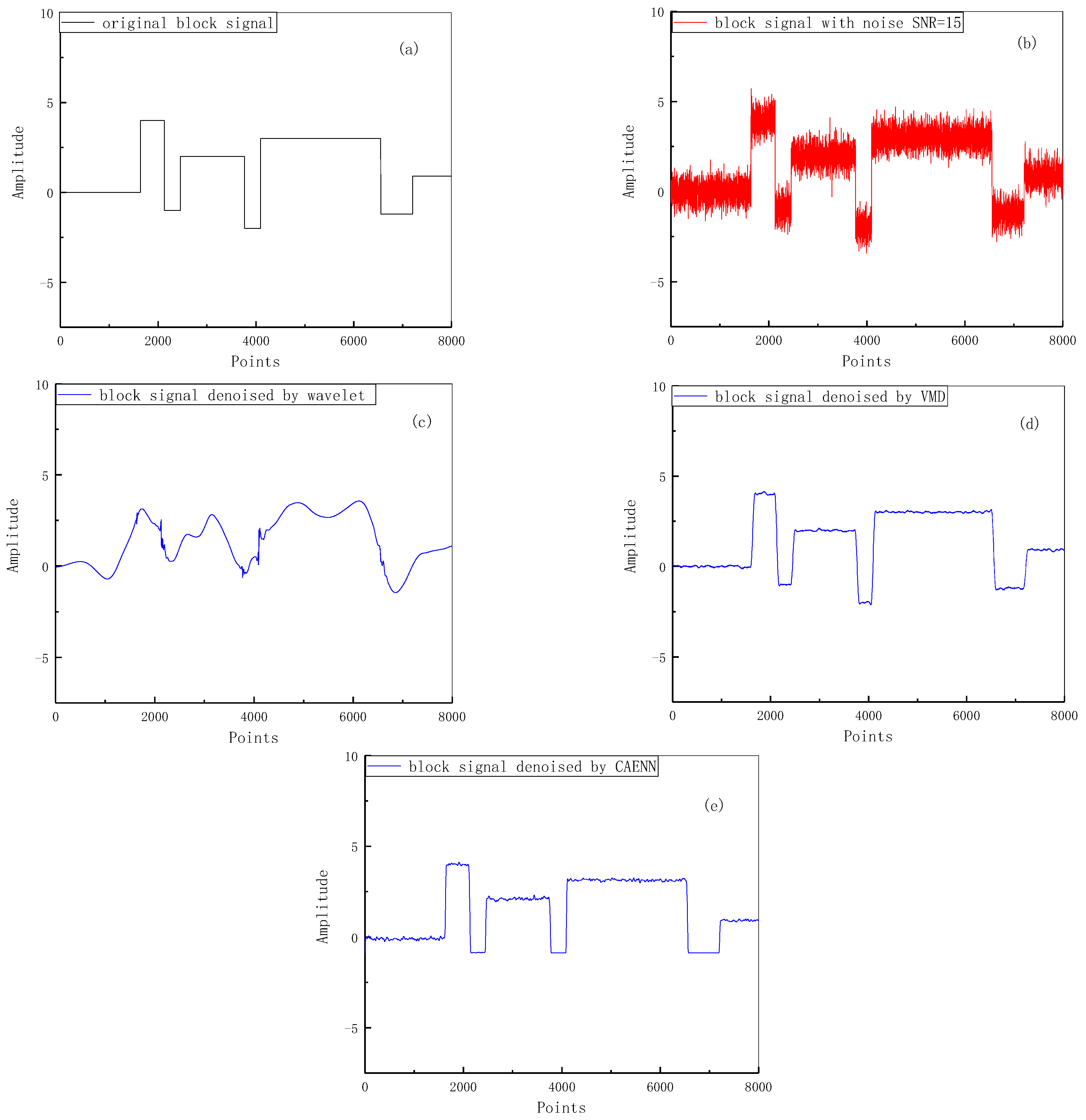

In this paper, a method based on convolutional autoencoding neural networks (CAENN) is proposed for denoising the lidar return signal. The method uses the encoding and decoding characteristics of the autoencoder to construct a deep learning network that learns the mapping from noisy return signals to clean return signals. A large number of return signals measured by a Mie-scattering lidar developed at North Minzu University were used to train the network to perform automatic feature extraction and denoising of return signals. Several simulations and real-data experiments were performed, and the feasibility and practicability of the proposed CAENN method were demonstrated by comparison with other methods, including the wavelet threshold and VMD methods.

5. Conclusions

In this paper, a method for denoising lidar return signals based on CAENN is proposed. It uses the encoding and decoding characteristics of the autoencoder to extract the deep features of lidar return signals layer by layer through convolutional neural networks. Through the encoding–decoding process, the CAENN obtains a sparse representation of the lidar return signal and converts the original noisy return signal into one that retains only the effective signal, thereby filtering out the noise. The encoding part extracts features through the convolutional network and suppresses the noise at the same time, while the decoding part reconstructs the data. To verify the feasibility of the proposed method, simulations and experiments were carried out. The results show that the signal processed by the CAENN method has the highest SNR and the smallest MSE compared with the wavelet threshold and VMD methods, so its denoising effect is the most pronounced. The proposed algorithm also has strong adaptive ability. However, because data recorded in special weather are scarce in the data sets, processing such data may leave a small amount of noise in the reconstructed signal. In future work, we will collect more lidar signals in special weather to expand the data sets, retrain the network, and optimize the model, thus further improving its generality. Overall, the proposed method effectively improves the SNR of the lidar return signal while retaining the complete characteristics of the signal, which demonstrates its effectiveness in denoising lidar return signals.
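The two evaluation metrics named above can be computed as follows. The exact definitions used in the experiments are not restated in this section, so this sketch assumes the common forms: MSE as the mean squared difference between a reference signal and the denoised signal, and SNR in decibels as the ratio of reference power to residual power. The function names `mse` and `snr_db` are illustrative.

```python
import numpy as np

def mse(reference, estimate):
    """Mean squared error between a reference signal and an estimate."""
    reference = np.asarray(reference, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    return float(np.mean((reference - estimate) ** 2))

def snr_db(reference, estimate):
    """SNR in dB: reference power over residual (reference - estimate) power."""
    reference = np.asarray(reference, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    signal_power = np.sum(reference ** 2)
    noise_power = np.sum((reference - estimate) ** 2)
    return float(10.0 * np.log10(signal_power / noise_power))

# Toy comparison (hypothetical values): a closer estimate scores better on both.
reference = np.array([1.0, 0.8, 0.6, 0.4])
rough = reference + np.array([0.10, -0.10, 0.10, -0.10])
close = reference + np.array([0.01, -0.01, 0.01, -0.01])
```

With these definitions a better denoising result gives a higher `snr_db` and a lower `mse` against the same reference, which is the sense in which the methods are ranked above.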