Sensitivity of a Bowing Mesoscale Convective System to Horizontal Grid Spacing in a Convection-Allowing Ensemble

Abstract

1. Introduction

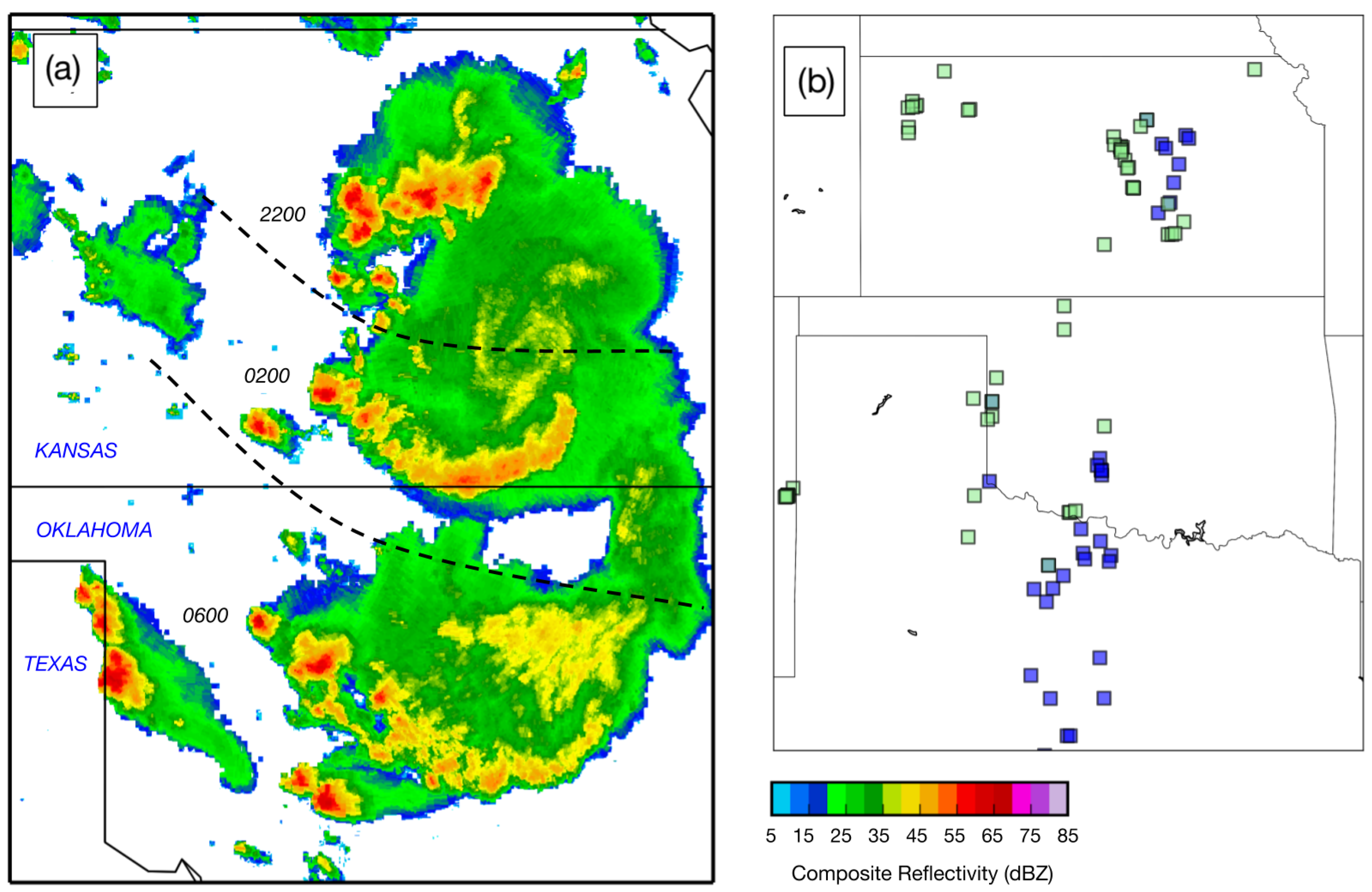

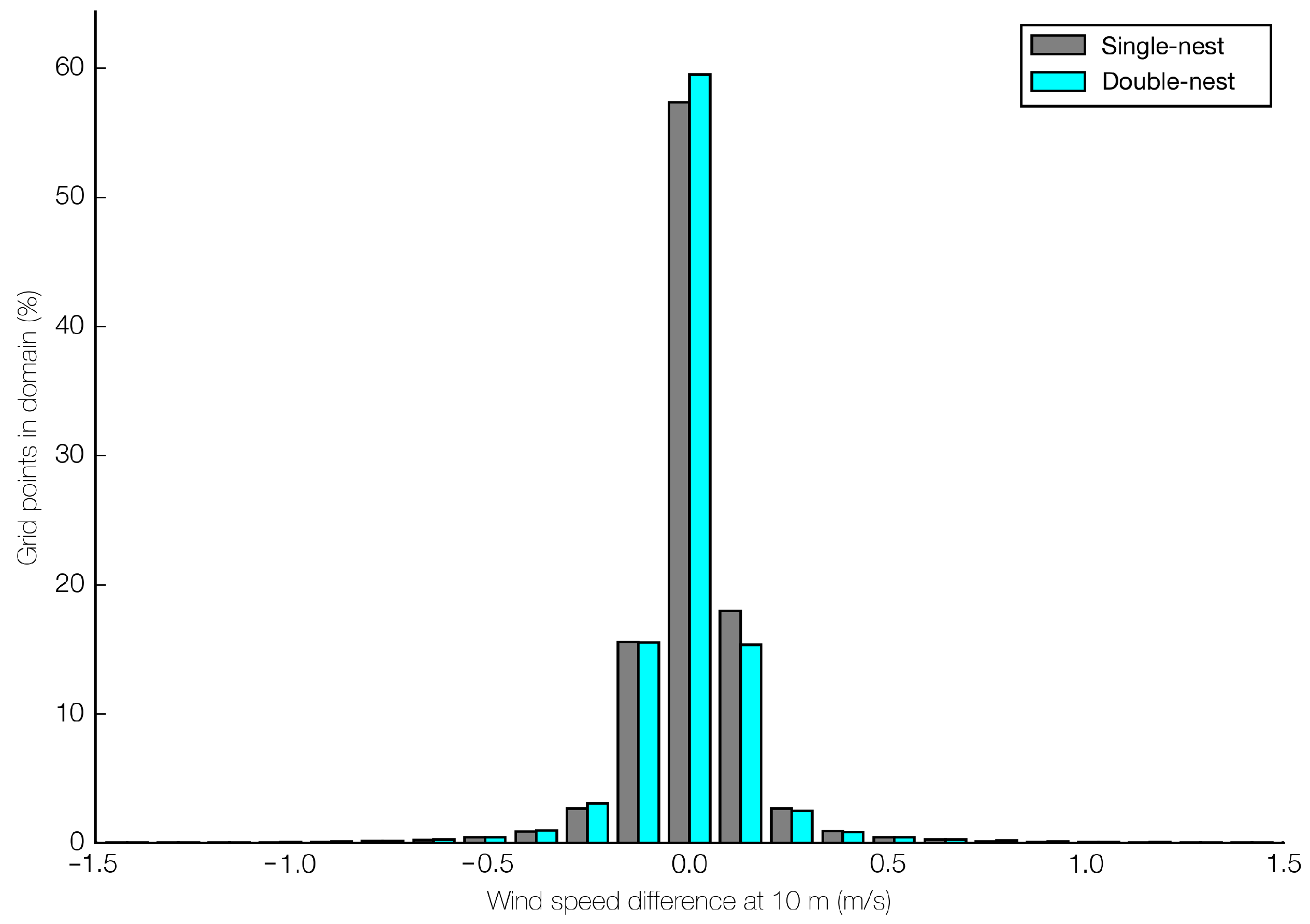

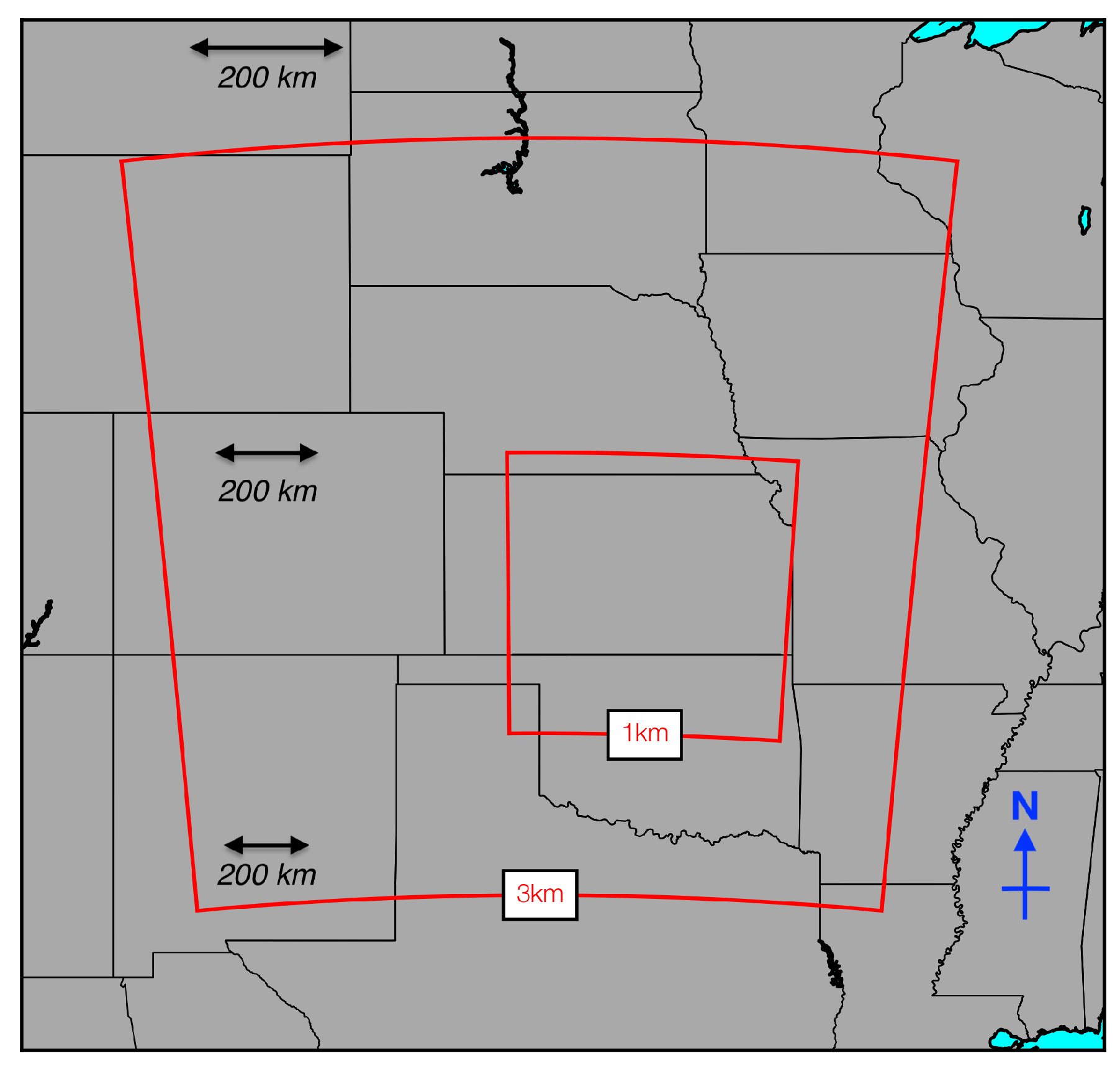

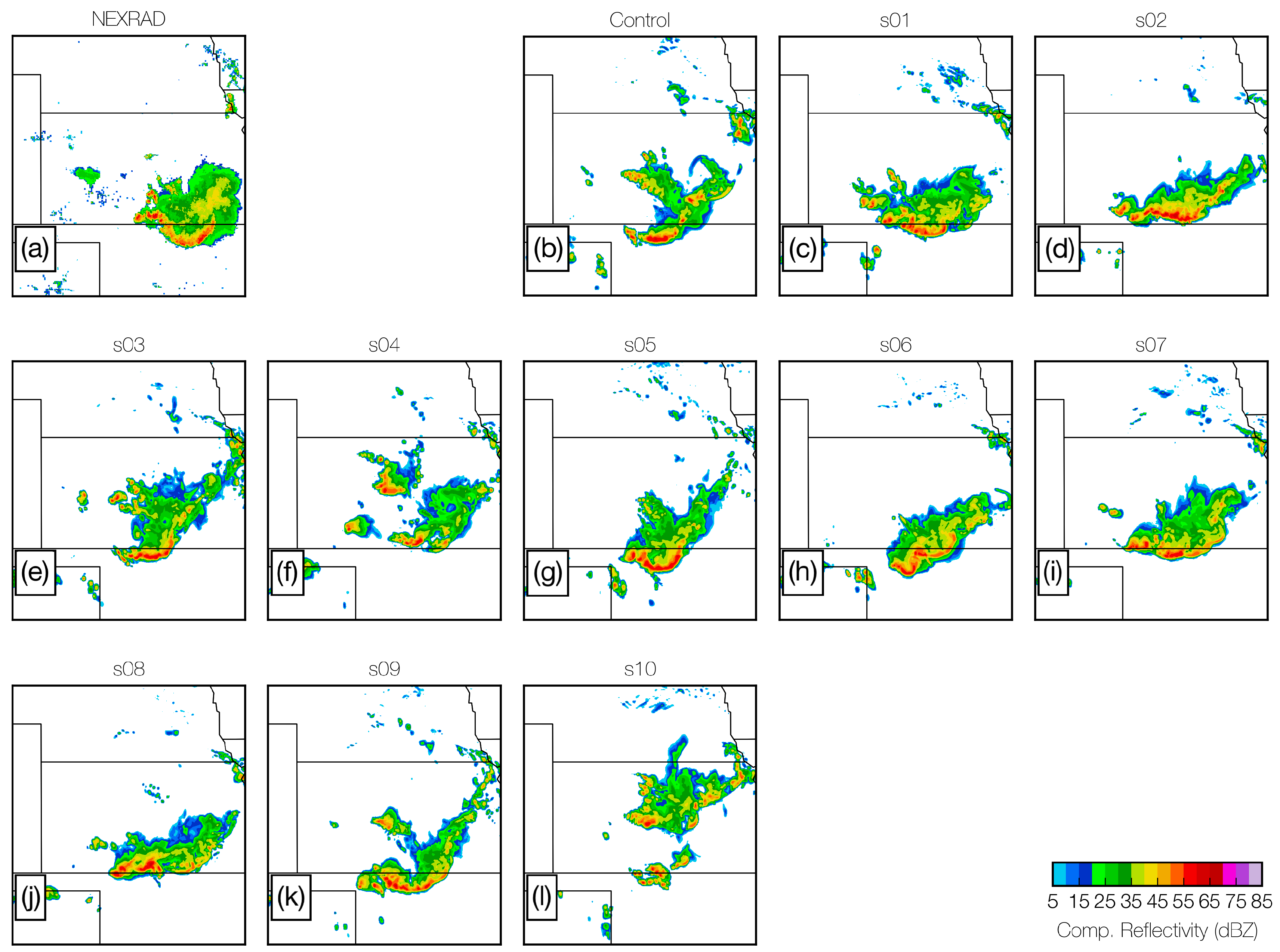

2. Data and Methods

Verification and Spread

- Structure (S), between −2 and +2. A positive value indicates simulated objects are too large and/or too flat;

- Amplitude (A), between −2 and +2. A positive value indicates the simulation has overestimated the domain-averaged variable (precipitation, reflectivity, etc.);

- Location (L), between 0 and +2. A positive value indicates a displacement of simulated objects from those observed.
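As a rough illustration of how two of these components get their ranges, the sketch below computes the amplitude component and the first (centroid-distance) part of the location component for a pair of 2-D fields, following the standard SAL definitions of Wernli et al. (2008). It is a minimal sketch, not the verification code used in this study: the function names are hypothetical, the fields are assumed to be non-negative with a non-zero total, and the normalizing distance is assumed to be the diagonal of a rectangular grid measured in grid points. The structure component and the second part of L both require object identification and are omitted.

```python
import numpy as np


def amplitude(mod, obs):
    """A component: normalized difference of domain means, bounded by [-2, +2]."""
    d_mod = float(np.mean(mod))
    d_obs = float(np.mean(obs))
    # Dividing by the average of the two means is what bounds A at +/-2
    # for non-negative fields.
    return (d_mod - d_obs) / (0.5 * (d_mod + d_obs))


def center_of_mass(field):
    """Intensity-weighted centroid (row, col) in grid-point units."""
    rows, cols = np.indices(field.shape)
    total = field.sum()
    return np.array([(rows * field).sum() / total,
                     (cols * field).sum() / total])


def location_l1(mod, obs):
    """First part of L: centroid separation scaled by the domain diagonal, in [0, 1]."""
    ny, nx = obs.shape
    # Assumption: the largest distance in the domain is the grid diagonal.
    d_max = np.hypot(ny - 1, nx - 1)
    separation = np.linalg.norm(center_of_mass(mod) - center_of_mass(obs))
    return float(separation / d_max)


# Synthetic example: one observed cell, one stronger simulated cell displaced east.
obs = np.zeros((10, 10))
obs[2, 2] = 1.0
mod = np.zeros((10, 10))
mod[2, 5] = 3.0

print(amplitude(mod, obs))    # positive: the simulation overestimates the domain mean
print(location_l1(mod, obs))  # positive: the simulated centroid is displaced
```

The full L score adds a second term, also bounded by 1, that compares the scatter of individual objects about the centroid, which is why L spans 0 to +2 rather than 0 to +1.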

3. Results

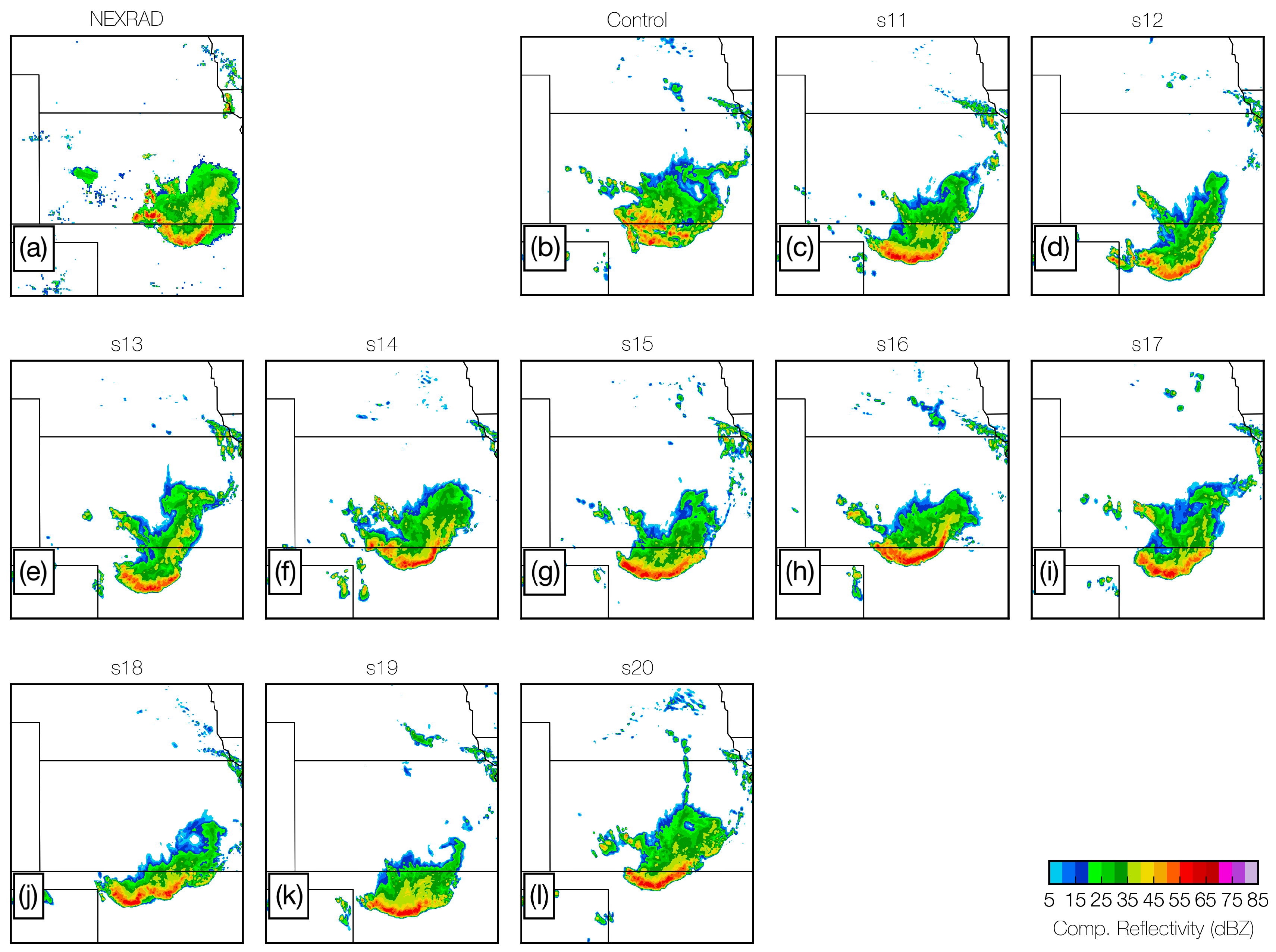

3.1. Sensitivity of Structure to Δx

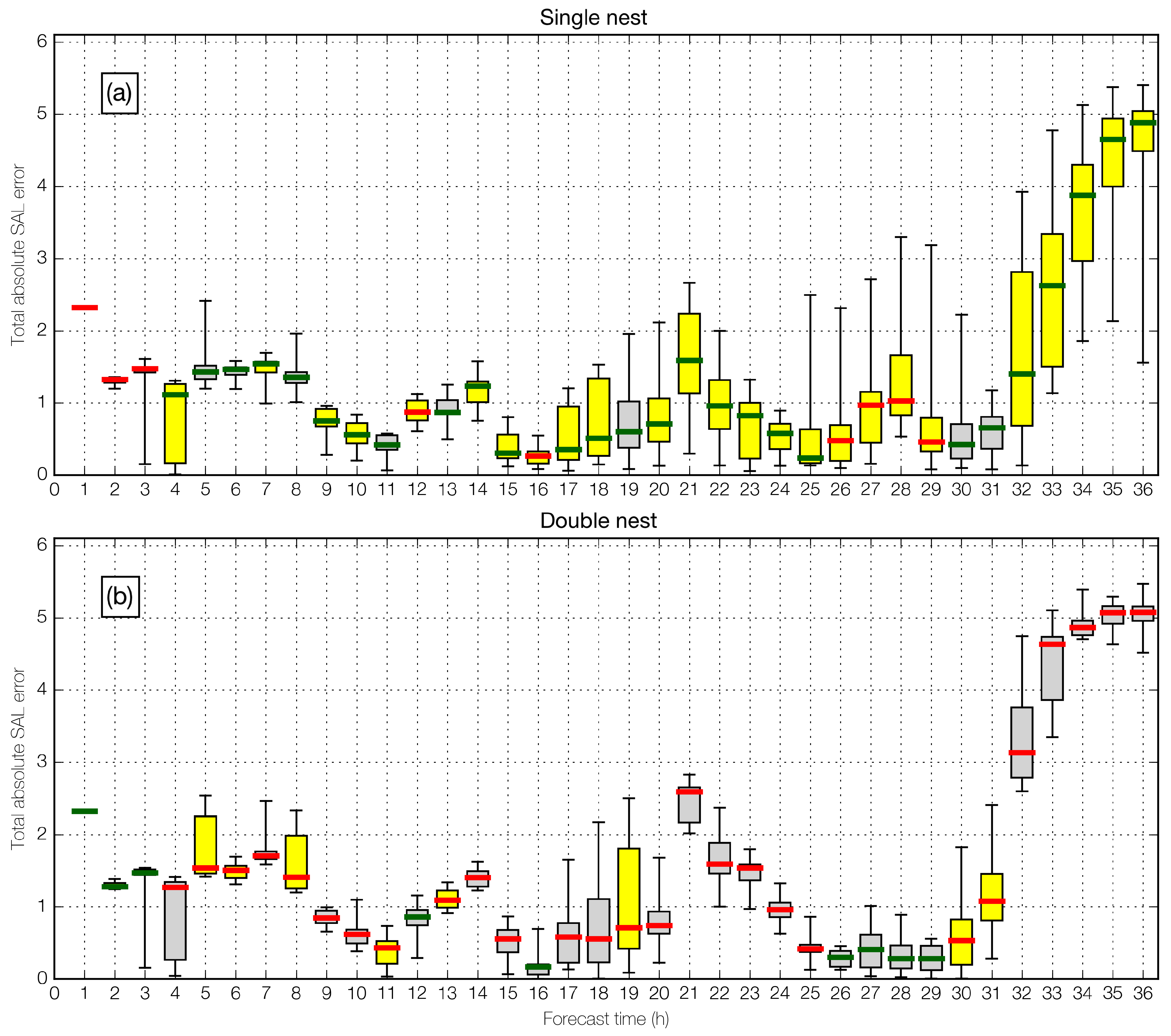

3.2. Sensitivity of Spread and Skill to Δx

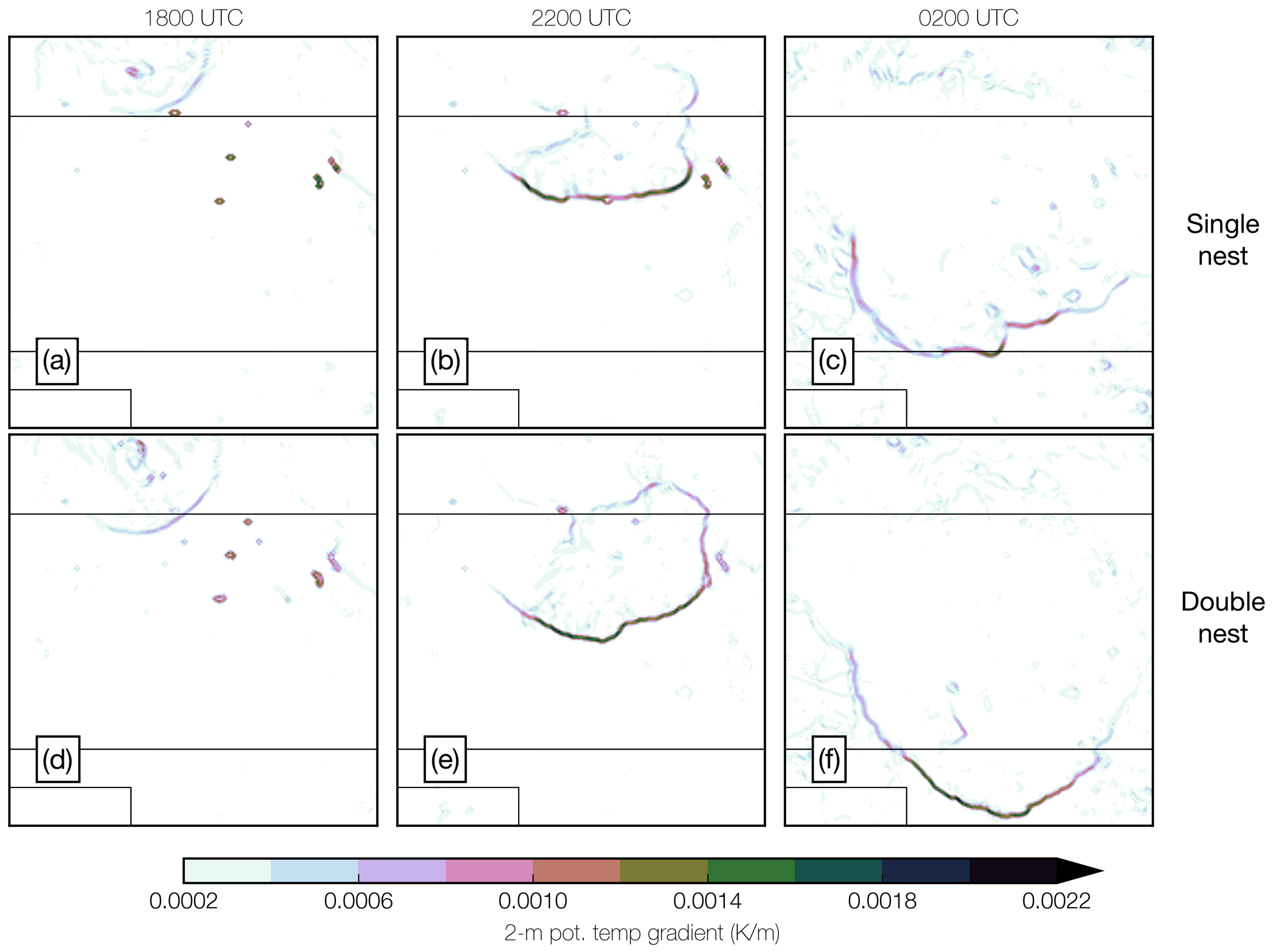

3.3. Sensitivity of System Speed to Δx

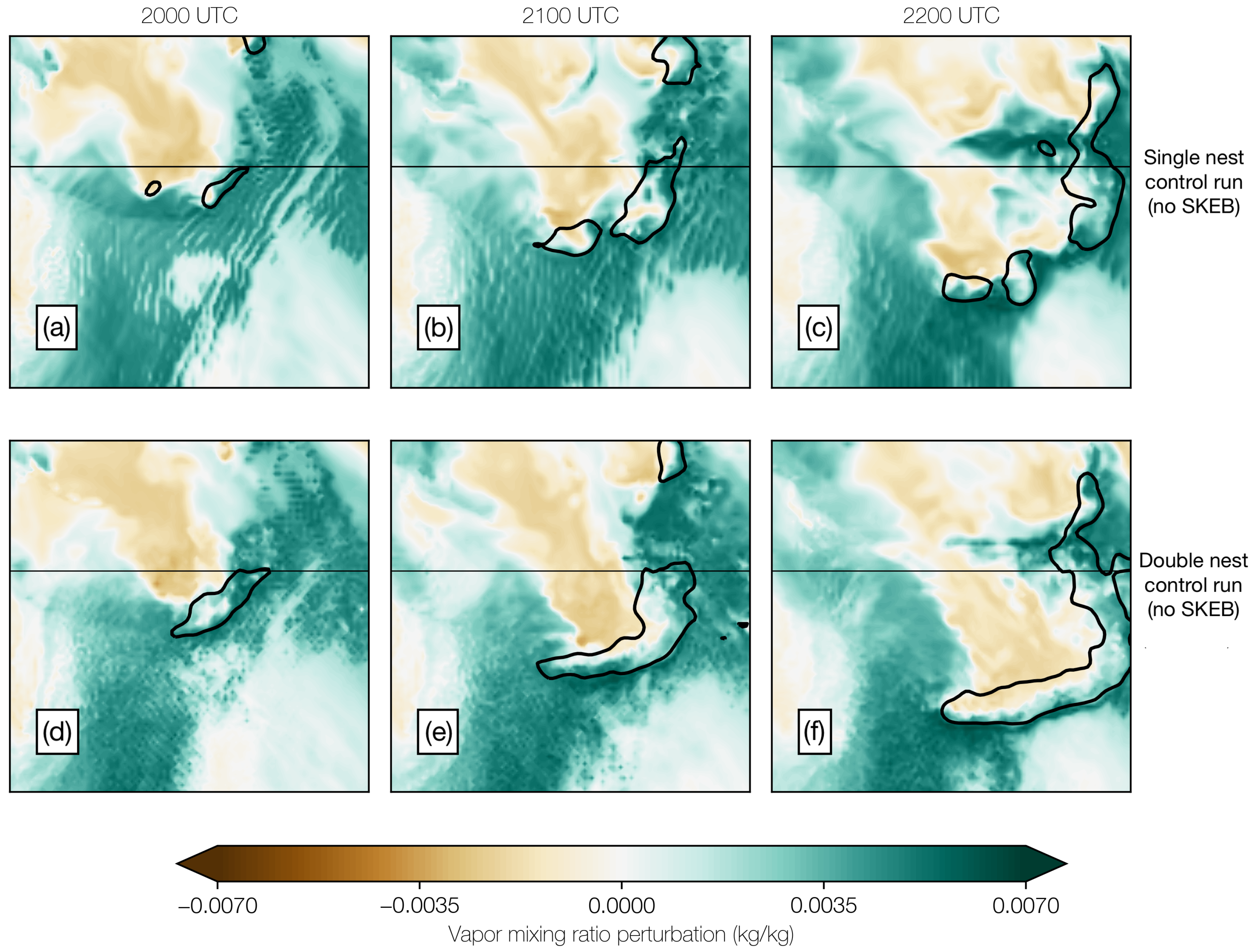

3.4. Sensitivity of System Speed to SKEB

4. Summary and Conclusions

- The spread of taSAL scores is larger in the single-nest ensemble. This contradicts the hypothesis that spread increases as Δx decreases;

- Skill is higher in the single-nest ensemble, as measured objectively using the ensemble median; however, MCS structure in simulated reflectivity is subjectively more realistic in the double-nest ensemble, as expected from the nesting of a higher-resolution domain;

- While both taSAL-measured spread and skill are higher in the single-nest ensemble overall, there is a lack of correlation between the two over the hourly forecast times, in both ensembles.
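One simple way to examine the spread-skill relationship described in the last point is to correlate the two hourly series. The sketch below uses the Pearson coefficient. This is an assumption, since the text here does not state which correlation measure was used, and the function name and the numbers in the example are synthetic placeholders rather than results from this study.

```python
import numpy as np


def spread_skill_correlation(spread, error):
    """Pearson correlation between hourly ensemble spread and forecast error."""
    spread = np.asarray(spread, dtype=float)
    error = np.asarray(error, dtype=float)
    if spread.shape != error.shape or spread.size < 2:
        raise ValueError("need two equal-length series with at least two times")
    # np.corrcoef returns the 2x2 correlation matrix; take the off-diagonal term.
    return float(np.corrcoef(spread, error)[0, 1])


# Synthetic placeholder values, one per hourly forecast time.
hourly_spread = [0.8, 1.1, 0.9, 1.4, 1.0, 1.2]
hourly_error = [1.3, 0.7, 1.5, 0.9, 0.6, 1.4]
print(spread_skill_correlation(hourly_spread, hourly_error))
```

A coefficient near zero would correspond to the lack of correspondence noted above, while a value near +1 would indicate that larger spread reliably accompanies larger error.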

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| NWP | Numerical weather prediction |
| MCS | Mesoscale convective system |
| MCV | Mesoscale convective vortex |
| GEFS/R2 | Global Ensemble Forecast System, Reforecast version 2 |
| WRF | Weather Research and Forecasting (model) |
| Δx | Horizontal grid spacing |


| Parameterization | Scheme |
|---|---|
| Microphysics | Thompson |
| Longwave Radiation | RRTM |
| Shortwave Radiation | Dudhia |
| Surface Layer | MYNN |
| Land Surface | Noah |
| Planetary Boundary Layer | MYNN Level 2.5 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lawson, J.R.; Gallus, W.A., Jr.; Potvin, C.K. Sensitivity of a Bowing Mesoscale Convective System to Horizontal Grid Spacing in a Convection-Allowing Ensemble. Atmosphere 2020, 11, 384. https://doi.org/10.3390/atmos11040384
