1. Introduction

Pineapple is a commercially significant crop cultivated predominantly in tropical and subtropical agro-ecological zones [1]. Commercial orchards are typically established on undulating or mountainous terrain with irregular topography. Planting is usually high-density, and plant height varies substantially from plant to plant [2]. These environmental and agronomic factors markedly constrain the operational efficacy of conventional agricultural machinery. Moreover, machinery moving across sloped surfaces frequently damages immature inflorescences, thereby compromising subsequent yield cycles. Consequently, despite progressive advances in automation technologies, pineapple harvesting remains overwhelmingly dependent on manual labor. Manual operations are not only labor-intensive and skill-intensive but also entail significant occupational hazards, including lacerations from foliar spines, pericarp abrasion, and puncture injuries; together, these factors elevate production costs and diminish harvesting efficiency [3,4]. Empirical surveys [5] indicate that labor costs associated with pineapple harvesting account for 42.71% of total production expenditures. Mechanized harvesting technologies tailored specifically to pineapple production are therefore needed.

Rapid advancements in machine vision and robot-control technologies have driven innovations in agricultural automation, including robotic harvesting systems for various fruits such as apples, citrus, and bananas [6,7,8]. For pineapples, however, robotic harvesting solutions remain comparatively scarce. Previous research has explored diverse designs, such as flexible finger rollers for manual-like picking [9,10], three-degree-of-freedom robotic arms for continuous harvesting [11], and double-gantry frameworks for specialized equipment [12]. These robots typically integrate key components, including robotic arms, end-effectors, vision systems, and mobility devices. Roller-type harvesters and gantry-based mobile frameworks encounter significant operational constraints in hilly or mountainous terrains; furthermore, software-level picking solutions tailored to the specific growth environment of pineapple remain scarce. This study employs a tracked mountain chassis as the mobile platform and focuses on the development of vision-based recognition and robotic arm path-planning algorithms for harvesting.

Early-stage fruit-picking robots predominantly relied on traditional image-processing algorithms, utilizing manually crafted features such as color, shape, and texture for detection [13]. Despite achieving reasonable recognition rates, these methods often suffered from slow processing speeds and poor generalization, rendering them unsuitable for real-time applications in dynamic orchard environments. Modern deep learning-based detectors, such as the YOLO series and SSD models, have significantly improved detection efficiency and robustness, especially under challenging field conditions such as variable lighting and heavy occlusion. Recent deep learning research on pineapple detection has produced several model enhancements. Liu [14] enhanced YOLOv5s by incorporating CBAM and Ghost-CBAM attention mechanisms along with GhostConv to reduce model parameters, achieving a recognition accuracy of 91.89%. Li [15] adapted YOLOv7 using a MobileOne backbone and a slender-neck architecture, raising detection speed to 17.52 FPS for real-time applications. Similarly, Lai [16] refined YOLOv7 by integrating SimAM attention mechanisms, MPConv, and Soft-NMS, resulting in a mAP of 95.82% and a recall of 89.83%. Chen [17] further embedded the ECA attention mechanism into RetinaNet, achieving a recognition rate of 94.75% even under heavily occluded conditions. These models demonstrate the capacity of deep learning-based methods to address challenges in agricultural environments, particularly with respect to robustness and precision.

Robotic arms have become essential for fruit harvesting, including harvesting of apples, citrus, and tomatoes. A critical aspect of their functionality is path planning, which aims to create safe, collision-free trajectories from a start point to a goal point within a constrained workspace, optimizing for both distance and time [18,19]. Widely employed methods for path planning include Ant Colony Optimization [20], Artificial Potential Field (APF) [21], A* [22], and the Rapidly-exploring Random Tree (RRT). However, the inherent randomness of RRT can often lead to suboptimal solutions. To mitigate this, researchers have proposed various improvements tailored to agricultural scenarios. Zhang [23] introduced a Cauchy goal-biased bidirectional RRT algorithm, which leverages a Cauchy distribution to enhance sampling efficiency and integrates a goal-attraction mechanism to guide tree expansion; this algorithm was specifically designed for multi-DOF manipulators. Ma [24] developed an improved RRT-Connect algorithm by incorporating guidance points derived from prior knowledge of the configuration space, significantly enhancing its performance in obstacle avoidance. While the aforementioned approaches reduce the stochasticity of the original algorithmic framework, several investigators have sought superior planning performance through hybrid strategies. Yin [25] introduced GI-RRT, which combines deep learning-based grasp-pose estimation with the RRT algorithm to attain precise and efficient grasping in complex agricultural scenarios. Xiong [26] fused the artificial potential-field method with reinforcement learning, substantially enhancing convergence efficiency. Hybrid planning paradigms consistently demonstrate heightened robustness and adaptability in intricate environments. In the highly unstructured settings characteristic of agriculture, traditional robotic-arm path-planning algorithms alone prove insufficient to satisfy harvesting requirements.
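
To make the role of random sampling and goal bias concrete, the following minimal Python sketch implements a basic goal-biased RRT in a 2D plane. It is illustrative only and does not reproduce the method of any cited work; the function name `plan_rrt`, the circular obstacle model, and all parameter values are assumptions introduced here.

```python
import math
import random


def plan_rrt(start, goal, obstacles, bounds, step=0.5, goal_bias=0.1,
             goal_tol=0.5, max_iters=3000, seed=0):
    """Goal-biased RRT in 2D (illustrative sketch).

    obstacles: list of circles (cx, cy, r); bounds: (xmin, xmax, ymin, ymax).
    Returns a list of waypoints from start to goal, or None if none is found.
    """
    rng = random.Random(seed)
    nodes = [start]
    parent = {0: None}

    def collides(p, q, samples=10):
        # Test evenly spaced points on the segment p->q against every circle.
        for i in range(samples + 1):
            t = i / samples
            x = p[0] + t * (q[0] - p[0])
            y = p[1] + t * (q[1] - p[1])
            for cx, cy, r in obstacles:
                if math.hypot(x - cx, y - cy) <= r:
                    return True
        return False

    for _ in range(max_iters):
        # Goal bias: occasionally sample the goal instead of a random point.
        if rng.random() < goal_bias:
            target = goal
        else:
            target = (rng.uniform(bounds[0], bounds[1]),
                      rng.uniform(bounds[2], bounds[3]))

        # Extend the nearest tree node by at most `step` toward the target.
        idx = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], target))
        near = nodes[idx]
        d = math.dist(near, target)
        if d == 0:
            continue
        scale = min(step, d) / d
        new = (near[0] + scale * (target[0] - near[0]),
               near[1] + scale * (target[1] - near[1]))
        if collides(near, new):
            continue

        nodes.append(new)
        parent[len(nodes) - 1] = idx

        # Finish when the new node can be joined to the goal directly.
        if math.dist(new, goal) <= goal_tol and not collides(new, goal):
            path = [goal]
            i = len(nodes) - 1
            while i is not None:
                path.append(nodes[i])
                i = parent[i]
            return path[::-1]
    return None
```

Because each extension follows a random sample, the returned path is usually feasible but jagged and longer than necessary, which is the suboptimality that the goal-biased, bidirectional, and hybrid variants discussed above try to reduce.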

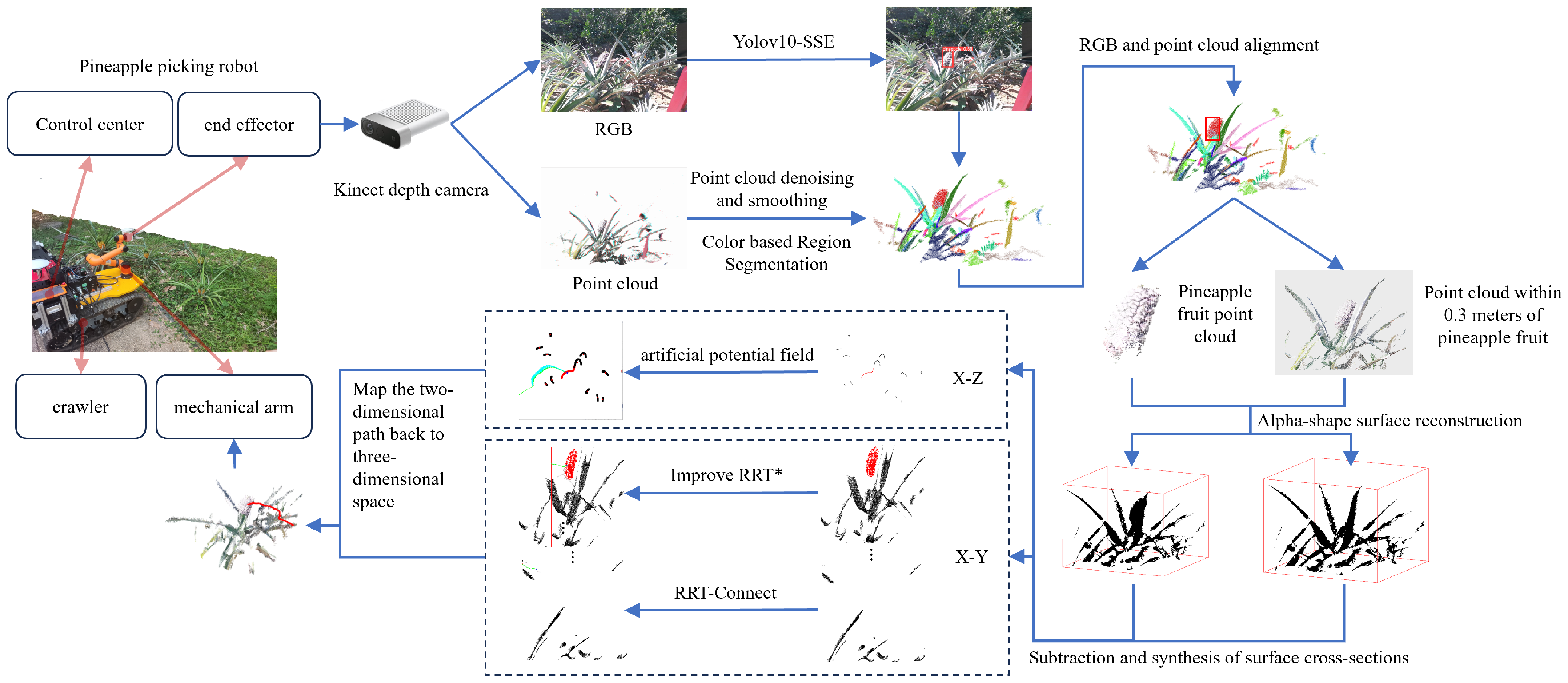

In summary, this study addresses two primary challenges in the deployment of pineapple-harvesting robots: robust visual detection and planning of efficient robotic arm motion. To enhance detection reliability in complex field environments, an improved YOLOv10n model is proposed. This model incorporates advanced feature extraction and attention mechanisms for higher accuracy and computational efficiency. For motion planning, the 3D pathfinding problem is simplified to a 2D plane through preprocessing of point cloud data, enabling more efficient trajectory generation. A hybrid algorithm combining the APF method with an improved RRT* algorithm is employed to ensure optimal collision-free path planning. This integrated approach provides a scalable solution for automated pineapple harvesting and mitigates key challenges in the deployment of agricultural robotics. A detailed workflow for this methodology is illustrated in Figure 1.
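
As a rough illustration of how a potential field can guide tree expansion, the Python sketch below computes a classical APF force and blends its direction with the direction toward a random sample. This section does not specify how the two methods are actually combined, so the blending rule, the function names `apf_force` and `steer_with_apf`, the circular obstacle model, and the gains `k_att`, `k_rep`, and `d0` are all assumptions made for illustration.

```python
import math


def apf_force(p, goal, obstacles, k_att=1.0, k_rep=1.0, d0=2.0):
    """Classical APF force at point p (illustrative sketch).

    obstacles: list of circles (cx, cy, r); d0 is the influence distance
    measured from each obstacle surface.
    """
    # Attractive term: pulls linearly toward the goal.
    fx = k_att * (goal[0] - p[0])
    fy = k_att * (goal[1] - p[1])
    for cx, cy, r in obstacles:
        dc = math.hypot(p[0] - cx, p[1] - cy)   # distance to obstacle center
        d = max(dc - r, 1e-6)                   # distance to obstacle surface
        if d < d0 and dc > 0:
            # Repulsive term: grows sharply as the surface is approached.
            mag = k_rep * (1.0 / d - 1.0 / d0) / (d * d)
            fx += mag * (p[0] - cx) / dc
            fy += mag * (p[1] - cy) / dc
    return fx, fy


def _unit(v):
    n = math.hypot(v[0], v[1])
    return (v[0] / n, v[1] / n) if n > 0 else (0.0, 0.0)


def steer_with_apf(near, sample, goal, obstacles, step=0.5, weight=0.5):
    """Extend from `near` along a blend of the sample and APF directions.

    weight=0 gives a plain RRT extension; weight=1 follows the field only.
    """
    rand_dir = _unit((sample[0] - near[0], sample[1] - near[1]))
    field_dir = _unit(apf_force(near, goal, obstacles))
    blended = _unit(((1 - weight) * rand_dir[0] + weight * field_dir[0],
                     (1 - weight) * rand_dir[1] + weight * field_dir[1]))
    return (near[0] + step * blended[0], near[1] + step * blended[1])
```

In a sketch of this kind, `weight` trades exploration against goal-directed growth: larger values speed convergence in open space but make the tree more prone to stalling in local minima of the field, which random sampling helps it escape.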

4. Conclusions

This study addresses critical challenges in automated pineapple harvesting posed by high occlusion rates and complex terrains in natural orchard environments. By integrating enhanced visual detection and efficient path planning methodologies, the proposed approach demonstrates significant improvements in both detection accuracy and trajectory generation for the robotic arm.

The visual detection model, YOLOv10n-SSE, achieves precision, recall, and mAP values of 93.8%, 84.9%, and 91.8%, respectively, outperforming several baseline models in occlusion-heavy conditions. These results highlight its applicability for robust fruit detection in real-world field environments, where traditional methods often struggle under fluctuating lighting and dense foliage. The integration of split convolution, squeeze-and-excitation attention, and multi-scale attention modules further reduces computational complexity, enabling real-time deployment on resource-constrained systems.
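
For readers unfamiliar with squeeze-and-excitation attention, the following dependency-free Python sketch shows the generic mechanism: per-channel global average pooling, a two-layer bottleneck, and channel-wise rescaling. It is not the module used in the proposed model; the function name `se_block`, the nested-list tensor layout, and the omission of biases are simplifying assumptions.

```python
import math


def se_block(feature_map, w1, w2):
    """Generic squeeze-and-excitation step (illustrative sketch).

    feature_map: list of C channels, each an H x W list of lists.
    w1: (C // r) x C reduction weights; w2: C x (C // r) expansion weights.
    Biases are omitted for brevity.
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    squeezed = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
                for ch in feature_map]

    # Excitation: bottleneck layer with ReLU, then expansion with sigmoid.
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed)))
              for row in w1]
    gates = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
             for row in w2]

    # Scale: multiply every value in a channel by that channel's gate.
    return [[[g * v for v in row] for row in ch]
            for ch, g in zip(feature_map, gates)]
```

Each gate lies in (0, 1), so informative channels can be emphasized and less useful ones suppressed at the cost of only two small matrix products per feature map.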

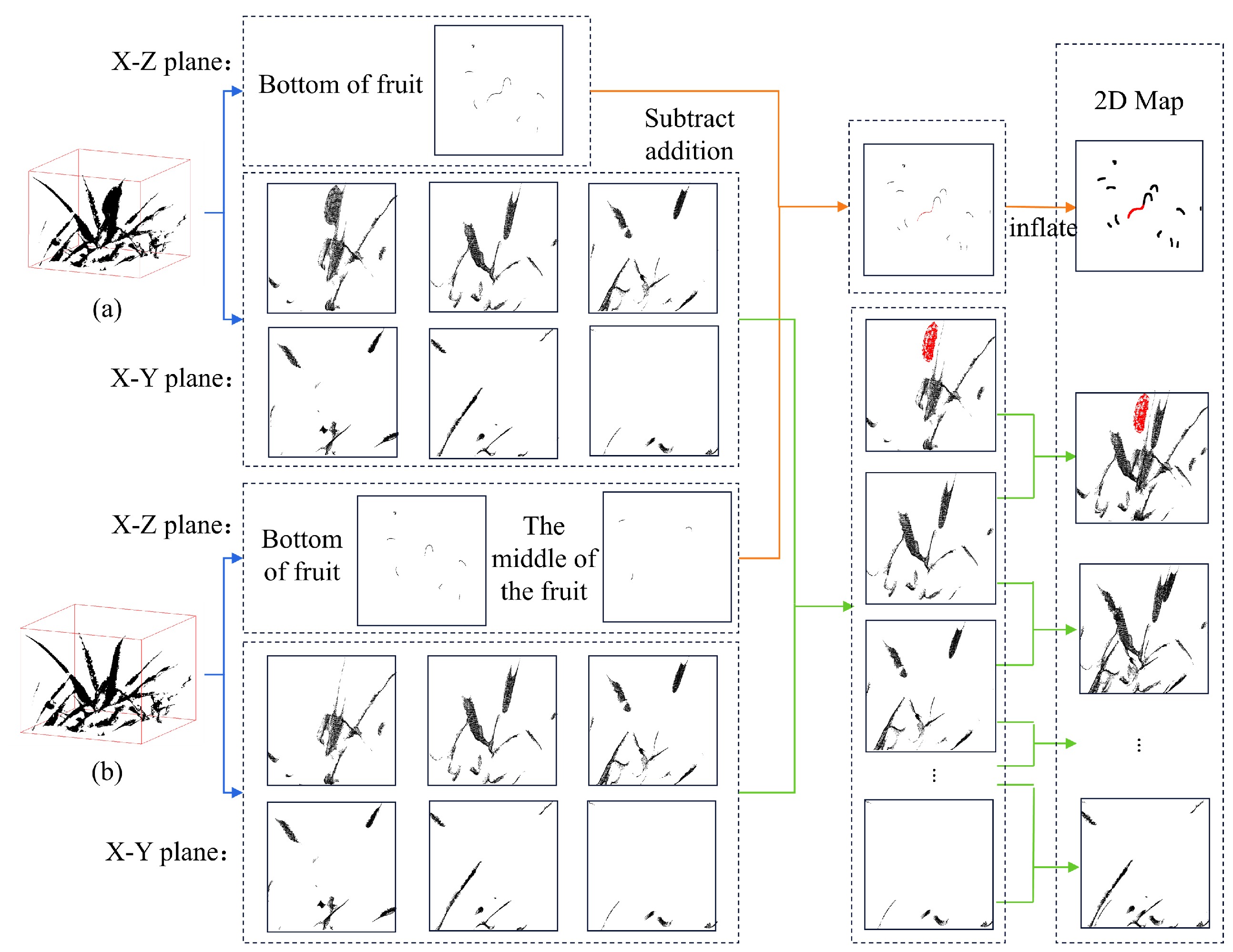

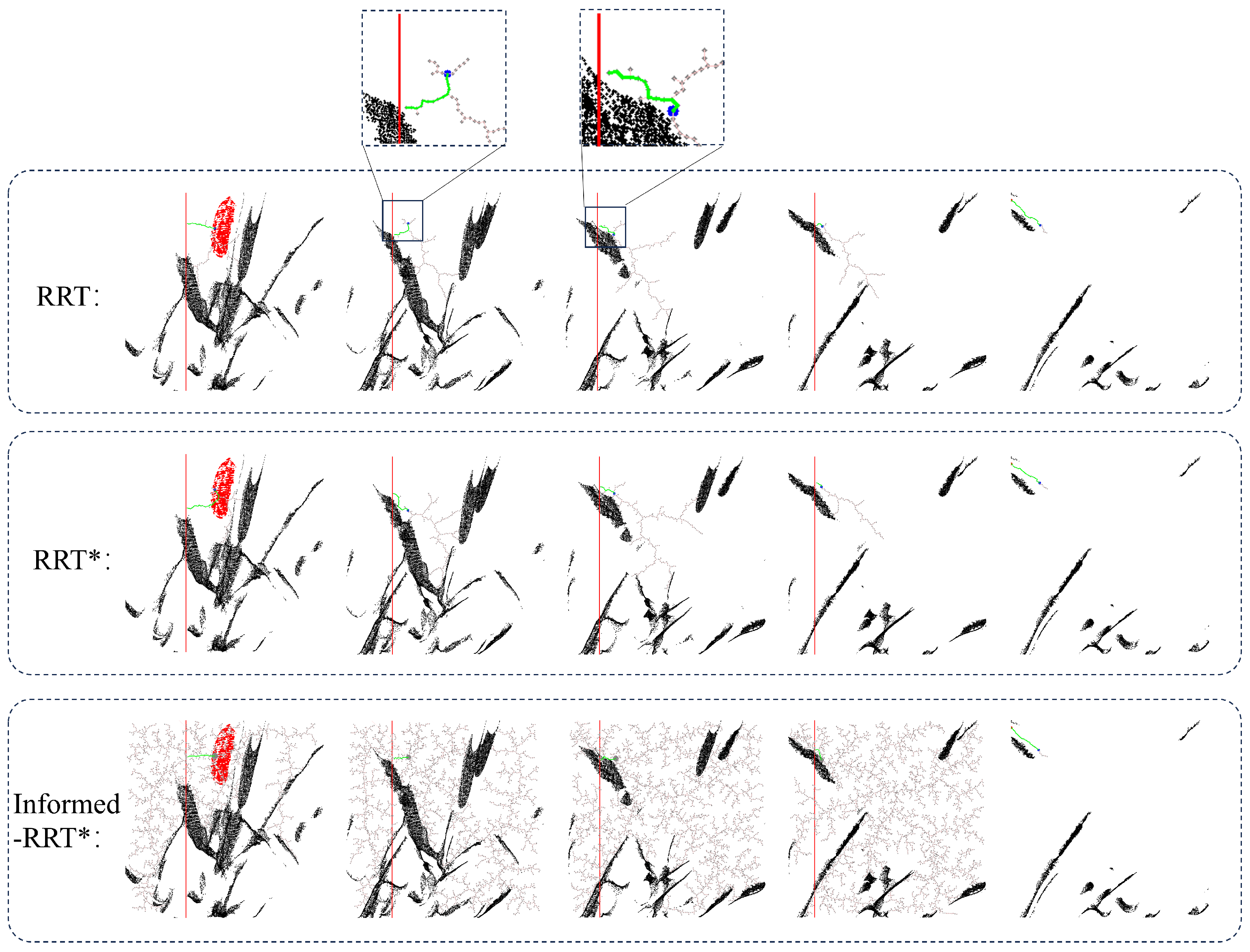

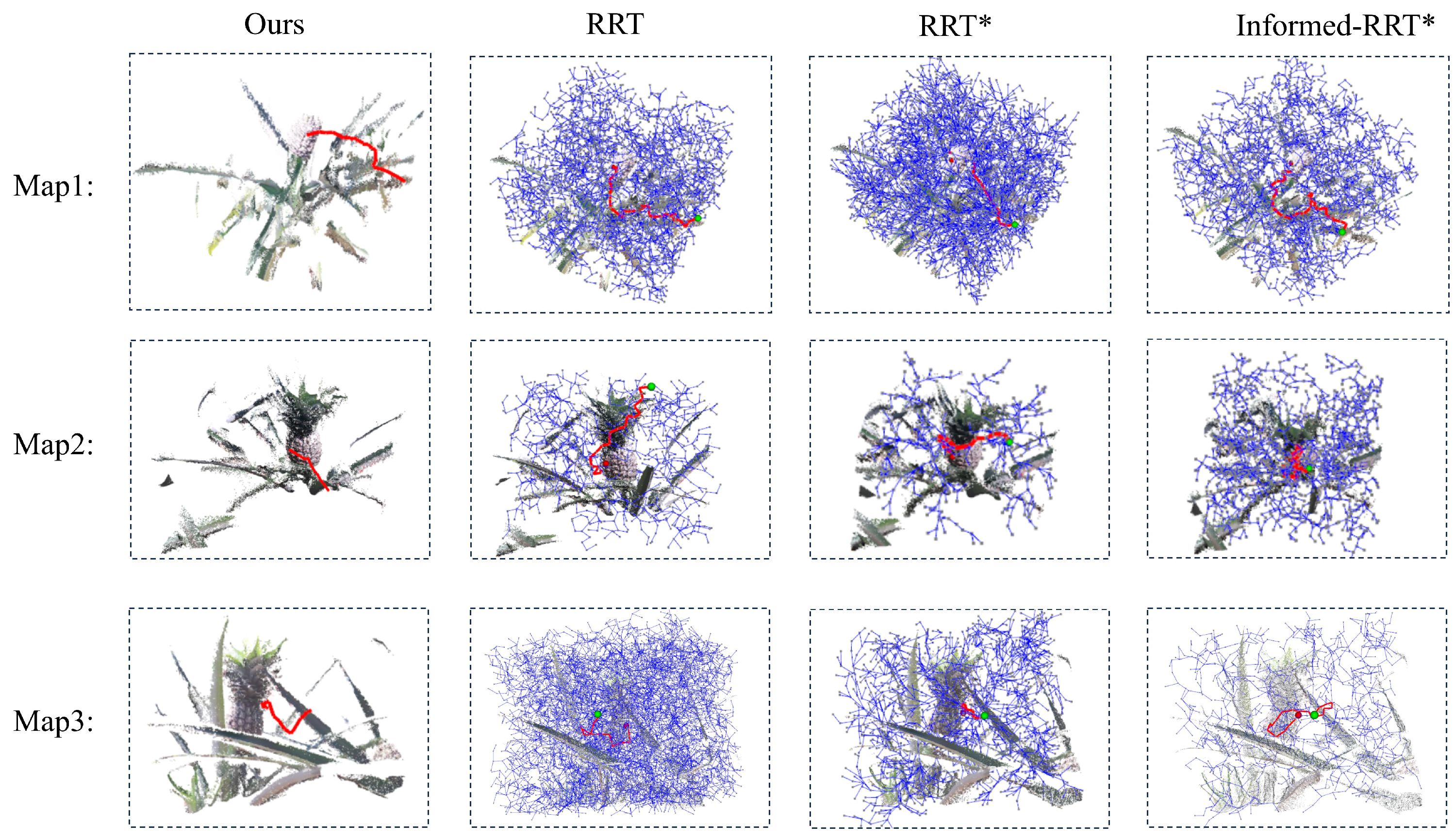

A novel dimensionality-reduction strategy was introduced to simplify 3D path planning into a tractable 2D image-space task using depth camera point clouds. By combining the APF method with an improved RRT* algorithm, the proposed approach ensures the generation of collision-free paths while achieving faster convergence and stable trajectory planning. Experimental results show an 18.7% reduction in computation time compared to standard RRT algorithms and a high success rate in generating obstacle-free paths. Validation in ROS-based robotic arm simulations confirms the feasibility of the methodology for real-world applications, demonstrating scalability across different types of agricultural tasks.
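
One simple way to picture a 3D-to-2D reduction of this kind is to rasterize the point cloud into a 2D occupancy grid on which a planar planner can run. The Python sketch below does this by dropping the z coordinate after an optional depth filter. The projection plane, the filter, and the names `project_to_grid`, `x_range`, `y_range`, `shape`, and `z_range` are assumptions for illustration, not the preprocessing actually used in this study.

```python
def project_to_grid(points, x_range, y_range, shape, z_range=None):
    """Rasterize 3D points into a 2D occupancy grid (illustrative sketch).

    points: iterable of (x, y, z) tuples in the camera frame.
    x_range, y_range: (min, max) extents covered by the grid.
    shape: (rows, cols) of the output grid.
    z_range: optional (min, max) depth window; points outside are ignored.
    Returns a rows x cols list of lists with 1 for occupied cells.
    """
    rows, cols = shape
    grid = [[0] * cols for _ in range(rows)]
    x0, x1 = x_range
    y0, y1 = y_range
    for x, y, z in points:
        # Keep only points inside the depth window of interest.
        if z_range is not None and not (z_range[0] <= z <= z_range[1]):
            continue
        # Discard points that fall outside the grid extents.
        if not (x0 <= x < x1 and y0 <= y < y1):
            continue
        # Map metric coordinates to cell indices, clamped against rounding.
        c = min(cols - 1, int((x - x0) / (x1 - x0) * cols))
        r = min(rows - 1, int((y - y0) / (y1 - y0) * rows))
        grid[r][c] = 1
    return grid
```

A grid of this form can be passed to a 2D planner as an obstacle map; in practice the occupied cells would usually also be inflated by the arm's clearance radius before planning.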

The integration of advanced visual detection and hybrid path planning algorithms offers a viable technical solution for automated pineapple harvesting. Beyond increasing operational efficiency, this study establishes foundational methodologies that can be adapted for harvesting other fruits or operations in highly cluttered agricultural environments. The findings support a broader transformation of traditional agricultural practices into sustainable, automated systems, addressing labor shortages, reducing costs, and enhancing productivity in precision farming.

Despite the promising results achieved in this study, several limitations remain that warrant further investigation. Specifically, scenarios in which pineapples are partially outside the camera’s field of view or subject to severe occlusion pose challenges that require additional solutions. In addition, depth estimation inaccuracies arising from environmental influences on the depth camera necessitate further refinement. These issues will be addressed in future work to enhance the robustness and applicability of the proposed methodologies.