Revolutionizing Data Exchange Through Intelligent Automation: Insights and Trends

Abstract

1. Introduction

2. Background

2.1. Main Definitions

- Data Exchange: The transfer of data between systems, platforms, or organizations. It involves not only the physical transmission of data but also the transformation of data formats and the bridging of differences in protocols and system architectures [8].

- Intelligent Automation: The application of advanced technologies, such as artificial intelligence (AI), machine learning (ML), and robotic process automation (RPA), to automate complex tasks traditionally performed by humans. Its core aim is to improve operational efficiency and productivity [9].

- Blockchain: A decentralized digital ledger that records transactions and replicates them across multiple nodes. It provides transparency and helps preserve data integrity, especially where mutual trust between parties is limited [10].

- FPGA: A field-programmable gate array (FPGA) is an integrated circuit that can be configured after manufacturing. FPGAs are often used in specialized hardware to accelerate data processing [11].

- Data Integrity: The accuracy, consistency, and reliability of data throughout its lifecycle. Ensuring that data remains unaltered and dependable is vital in databases and other data structures [12].

- Data Privacy: The appropriate handling of data in accordance with applicable data protection laws and ethical guidelines. It is particularly important when processing personal or sensitive information [13].

- Interoperability: The ability of heterogeneous computer systems and software applications to seamlessly exchange and utilize information. It is a critical requirement for efficient and effective data sharing [14].
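The tamper-evidence that the blockchain and data integrity definitions rely on comes from hash chaining: each entry stores a digest of its predecessor, so altering an entry invalidates its recorded digest, and recomputing that digest breaks the link stored in the next entry. The minimal Python sketch below illustrates only that mechanism, under stated assumptions: `Block`, `Ledger`, and the sample payloads are hypothetical, everything runs on a single node, and consensus, networking, and digital signatures are omitted.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass
class Block:
    """One ledger entry: a payload plus the digest of the previous entry."""
    index: int
    payload: dict
    prev_digest: str
    digest: str = ""

    def compute_digest(self) -> str:
        # Deterministic serialization (sorted keys) so identical content always
        # yields the same SHA-256 digest.
        body = json.dumps(
            {"index": self.index, "payload": self.payload, "prev": self.prev_digest},
            sort_keys=True,
        )
        return hashlib.sha256(body.encode("utf-8")).hexdigest()


class Ledger:
    """Append-only, hash-chained log kept on a single node (no consensus)."""

    def __init__(self) -> None:
        genesis = Block(index=0, payload={}, prev_digest="0" * 64)
        genesis.digest = genesis.compute_digest()
        self.blocks = [genesis]

    def append(self, payload: dict) -> Block:
        prev = self.blocks[-1]
        block = Block(index=prev.index + 1, payload=payload, prev_digest=prev.digest)
        block.digest = block.compute_digest()
        self.blocks.append(block)
        return block

    def verify(self) -> bool:
        """True only if every stored digest and every back-link is intact."""
        for i, block in enumerate(self.blocks):
            if block.digest != block.compute_digest():
                return False  # content changed after its digest was recorded
            if i > 0 and block.prev_digest != self.blocks[i - 1].digest:
                return False  # link to the previous entry is broken
        return True


ledger = Ledger()
ledger.append({"sender": "hospital_a", "receiver": "lab_b", "record": "r-001"})
ledger.append({"sender": "lab_b", "receiver": "hospital_a", "record": "r-002"})
print(ledger.verify())  # True

ledger.blocks[1].payload["record"] = "r-999"  # simulate tampering with an entry
print(ledger.verify())  # False
```

On a single node, an attacker who rewrites every digest from the altered entry onward would still pass `verify()`; replicating the ledger across multiple nodes, as in the blockchain definition, is what makes such silent rewrites detectable.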

2.2. Data Exchange Evolution

2.3. Main Issues

2.4. Automation and Data Sharing

3. Research Methodology

- Planning the Review: A detailed protocol was established to define the scope and objectives of the study. It specified the research questions, the inclusion and exclusion criteria, and the databases and keywords to be searched. The protocol was designed to cover critical aspects of data exchange systems, including methodologies, tools, challenges, and emerging trends.

- Conducting the Review: A comprehensive search was conducted across multiple academic databases, including IEEE Xplore, ACM Digital Library, and SpringerLink, targeting publications from 2020 to 2024. Keywords such as “data exchange”, “blockchain in data processing”, “FPGA for data handling”, and “AI in secure data sharing” were used to retrieve relevant articles. In addition, snowballing was applied to identify key studies cited in the primary sources, broadening coverage.

- Data Extraction and Synthesis: The selected studies were critically evaluated to extract relevant data on methodologies, tools, frameworks, and challenges. Each study was cataloged using reference management software, allowing for consistent organization and traceability. The extracted data was systematically synthesized to identify common trends, gaps, and innovative solutions in the field of data exchange.

- Quality Assessment and Validation: Each study was assessed against predefined quality criteria, including methodological rigor, relevance to the research objectives, and contribution to the field. To strengthen validity, findings were cross-referenced with related works and corroborated through domain expertise.
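The screening logic described in the protocol can be expressed as a small filter pipeline. The Python sketch below is illustrative only and is not the authors' tooling: the `Study` record, DOI-based deduplication, literal substring matching on titles and abstracts, the 0 to 2 rating per quality criterion, and the threshold of 4 are all assumptions, and the DOIs in the sample pool are placeholders. Only the search strings and the 2020 to 2024 window come from the text.

```python
from dataclasses import dataclass

# Search strings quoted in the review protocol. Matching them as literal,
# case-insensitive substrings of title + abstract is a simplifying assumption.
KEYWORDS = (
    "data exchange",
    "blockchain in data processing",
    "fpga for data handling",
    "ai in secure data sharing",
)
YEAR_RANGE = (2020, 2024)  # publication window stated in the protocol
QUALITY_THRESHOLD = 4      # hypothetical cut-off on a 0-6 scale


@dataclass(frozen=True)
class Study:
    title: str
    abstract: str
    year: int
    doi: str
    # Hypothetical 0-2 ratings for the three stated quality criteria.
    rigor: int = 0
    relevance: int = 0
    contribution: int = 0

    def quality_score(self) -> int:
        return self.rigor + self.relevance + self.contribution


def matches_keywords(study: Study) -> bool:
    """Inclusion criterion: at least one search string occurs in the text."""
    text = f"{study.title} {study.abstract}".lower()
    return any(keyword in text for keyword in KEYWORDS)


def screen(candidates: list[Study]) -> list[Study]:
    """Deduplicate by DOI, apply the inclusion criteria, then the quality gate."""
    seen: set[str] = set()
    selected: list[Study] = []
    for study in candidates:
        if study.doi in seen:
            continue  # same record retrieved from several databases or by snowballing
        seen.add(study.doi)
        if not YEAR_RANGE[0] <= study.year <= YEAR_RANGE[1]:
            continue  # outside the publication window
        if not matches_keywords(study):
            continue  # off-topic
        if study.quality_score() >= QUALITY_THRESHOLD:
            selected.append(study)
    return selected


pool = [
    Study("Blockchain framework for data exchange", "", 2022, "10.0/a", 2, 2, 1),
    Study("Blockchain framework for data exchange", "", 2022, "10.0/a", 2, 2, 1),  # duplicate
    Study("An early survey of data exchange", "", 2018, "10.0/b", 2, 2, 2),  # too old
    Study("FPGA sorting networks", "", 2023, "10.0/c", 2, 2, 2),  # no keyword match
    Study("Data exchange in IoT", "", 2021, "10.0/d", 1, 1, 1),  # below threshold
]
print([s.doi for s in screen(pool)])  # ['10.0/a']
```

Keeping each criterion as a separate early `continue` makes it straightforward to record why a record was excluded, which fits the traceability goal of the data extraction step.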

4. Technological Approaches to Data Exchange

4.1. Technology and Infrastructure

4.2. Security, Privacy, and Compliance

4.3. AI Impact, Applications, and Ethical Considerations

4.4. Emerging Challenges and Technological Responses

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Tenopir, C.; Rice, N.; Allard, S.L.; Baird, L.; Borycz, J.; Christian, L.; Grant, B.; Olendorf, R.; Sandusky, R.J. Data sharing, management, use, and reuse: Practices and perceptions of scientists worldwide. PLoS ONE 2020, 15, e0229003.

- Stach, C. Data Is the New Oil-Sort of: A View on Why This Comparison Is Misleading and Its Implications for Modern Data Administration. Future Internet 2023, 15, 71.

- Fischer, R.P.; Schnicke, F.; Beggel, B.; Antonino, P. Historical Data Storage Architecture Blueprints for the Asset Administration Shell. In Proceedings of the 2022 IEEE 27th International Conference on Emerging Technologies and Factory Automation (ETFA), Stuttgart, Germany, 6–9 September 2022; pp. 1–8.

- Derakhshannia, M.; Gervet, C.; Hajj-Hassan, H.; Laurent, A.; Martin, A. Data Lake Governance: Towards a Systemic and Natural Ecosystem Analogy. Future Internet 2020, 12, 126.

- Chamoli, S. Big Data with Cloud Computing: Discussions and Challenges. Math. Stat. Eng. Appl. 2021, 5, 32–40.

- Ge, L.; YanLi, P. Research on Network Data Monitoring and Legal Evidence Integration Based on Cloud Computing. Mob. Inf. Syst. 2022, 2022, 1544981.

- Gao, J. Computer Data Processing Mode in the era of Big Data: From Feature Analysis to Comprehensive Mining. In Proceedings of the 2021 6th International Conference on Inventive Computation Technologies (ICICT), Coimbatore, India, 20–22 January 2021; pp. 614–617.

- Contreras, J.P.; Majumdar, S.; El-Haraki, A. Methods for Transferring Data from a Compute to a Storage Cloud. In Proceedings of the 2022 9th International Conference on Future Internet of Things and Cloud (FiCloud), Rome, Italy, 22–24 August 2022; pp. 67–74.

- Siderska, J.; Aunimo, L.; Süße, T.; von Stamm, J.; Kedziora, D.; Aini, S.N.B.M. Towards Intelligent Automation (IA): Literature Review on the Evolution of Robotic Process Automation (RPA), its Challenges, and Future Trends. Eng. Manag. Prod. Serv. 2023, 15, 90–103.

- Abdu, N.A.A.; Wang, Z. Blockchain Framework for Collaborative Clinical Trials Auditing. Wirel. Pers. Commun. 2023, 132, 39–65.

- Moore, C.H.; Lin, W. FPGA Correlator for Applications in Embedded Smart Devices. Biosensors 2022, 12, 236.

- Medileh, S.; Laouid, A.; Hammoudeh, M.; Kara, M.; Bejaoui, T.; Eleyan, A.; Al-Khalidi, M. A Multi-Key with Partially Homomorphic Encryption Scheme for Low-End Devices Ensuring Data Integrity. Information 2023, 14, 263.

- OneTrust. Data Privacy Program Guide: How to Build a Privacy Program that Inspires Trust and Achieves Compliance; OneTrust White Paper: Atlanta, GA, USA, 2019.

- Liu, X. Design and implementation of heterogeneous data exchange platform based on web technology. In Proceedings of the International Conference on Intelligent Systems, Communications, and Computer Networks (ISCCN 2022), Chengdu, China, 17–19 June 2022; Volume 12332, p. 123320M.

- Hasan, H.; Ali, M. A Modern Review of EDI: Representation, Protocols and Security Considerations. In Proceedings of the 2019 2nd IEEE Middle East and North Africa COMMunications Conference (MENACOMM), Manama, Bahrain, 19–21 November 2019; pp. 1–5.

- Blakely, B.E.; Pawar, P.; Jololian, L.; Prabhaker, S. The Convergence of EDI, Blockchain, and Big Data in Health Care. In Proceedings of the SoutheastCon 2021, Atlanta, GA, USA, 10–13 March 2021; pp. 1–5.

- Oleiwi, R. The Impact of Electronic Data Interchange on Accounting Systems. Int. J. Prof. Bus. Rev. 2023, 8, e01163.

- Kuhn, M.; Franke, J. Data continuity and traceability in complex manufacturing systems: A graph-based modeling approach. Int. J. Comput. Integr. Manuf. 2021, 34, 549–566.

- Andrianov, A.M. Analysing Technologies for the Comprehensive Digitalisation of Hightech Industrial Production in an «Industry 4.0» Paradigm. Sci. Work Free Econ. Soc. Russ. 2021, 228, 298–317.

- Fadhel, S.A.; Jameel, E.A. A comparison between NOSQL and RDBMS: Storage and retrieval. MINAR Int. J. Appl. Sci. Technol. 2022, 4, 172–184.

- Dhasmana, G.; J, P.G.; S, G.M.; R, P.K.H. SQL and NOSQL Databases in the Application of Business Analytics. In Proceedings of the 2023 International Conference on Computer Science and Emerging Technologies (CSET), Bangalore, India, 10–12 October 2023; pp. 1–5.

- Kanungo, S.; Morena, R.D. Concurrency versus consistency in NoSQL databases. J. Auton. Intell. 2023.

- Karunarathne, S.M.; Saxena, N.; Khan, M. Security and Privacy in IoT Smart Healthcare. IEEE Internet Comput. 2021, 25, 37–48.

- Alzahrani, A.G.; Alhomoud, A.; Wills, G. A Framework of the Critical Factors for Healthcare Providers to Share Data Securely Using Blockchain. IEEE Access 2022, 10, 41064–41077.

- Spengler, H.; Gatz, I.; Kohlmayer, F.; Kuhn, K.A.; Prasser, F. Improving data quality in medical research: A monitoring architecture for clinical and translational data warehouses. In Proceedings of the 2020 IEEE 33rd International Symposium on Computer-Based Medical Systems (CBMS), Rochester, MN, USA, 28–30 July 2020; IEEE: New York, NY, USA, 2020; pp. 415–420.

- Hawig, D.; Zhou, C.; Fuhrhop, S.; Fialho, A.S.; Ramachandran, N. Designing a distributed ledger technology system for interoperable and general data protection regulation–compliant health data exchange: A use case in blood glucose data. J. Med. Internet Res. 2019, 21, e13665.

- Rahmatulloh, A.; Nugraha, F.; Gunawan, R.; Darmawan, I. Event-Driven Architecture to Improve Performance and Scalability in Microservices-Based Systems. In Proceedings of the 2022 International Conference Advancement in Data Science, E-learning and Information Systems (ICADEIS), Bandung, Indonesia, 23–24 November 2022; pp. 1–6.

- Rafik, H.; Maizate, A.; Ettaoufik, A. Data Security Mechanisms, Approaches, and Challenges for e-Health Smart Systems. Int. J. Online Biomed. Eng. 2023, 19, 42–66.

- Williamson, S.; Vijayakumar, K. Artificial intelligence techniques for industrial automation and smart systems. Concurr. Eng. 2021, 29, 291–292.

- Quispe, J.F.P.; Diaz, D.Z.; Choque-Flores, L.; León, A.L.C.; Carbajal, L.V.R.; Serquen, E.E.P.; García-Huamantumba, A.; García-Huamantumba, E.; García-Huamantumba, C.F.; Paredes, C.E.G. Quantitative Evaluation of the Impact of Artificial Intelligence on the Automation of Processes. Data Metadata 2023.

- Kulakov, Y.; Korenko, D. Methods of applying artificial intelligence in software-defined networks. Probl. Informatiz. Manag. 2023.

- Abiteboul, S.; Stoyanovich, J. Transparency, fairness, data protection, neutrality: Data management challenges in the face of new regulation. J. Data Inf. Qual. 2019, 11, 1–9.

- Voss, W.G. The CCPA and the GDPR Are Not the Same: Why You Should Understand Both. AARN Law Technol. 2021. Available online: https://www.competitionpolicyinternational.com/wp-content/uploads/2021/01/1-The-CCPA-and-the-GDPR-Are-Not-the-Same-Why-You-Should-Understand-Both-By-W.-Gregory-Voss.pdf (accessed on 1 January 2025).

- Kitchenham, B.; Charters, S.M. Guidelines for Performing Systematic Literature Reviews in Software Engineering; ver. 2.3 EBSE Technical Report; EBSE: Rio de Janeiro, Brazil, 2007.

- Bobda, C.; Mbongue, J.; Chow, P.; Ewais, M. The Future of FPGA Acceleration in Datacenters and the Cloud. Commun. ACM 2022, 15, 1–42.

- Weber, L.; Sommer, L.; Solis-Vasquez, L.; Vinçon, T.; Knödler, C.; Bernhardt, A.; Petrov, I.; Koch, A. A Framework for the Automatic Generation of FPGA-based Near-Data Processing Accelerators in Smart Storage Systems. In Proceedings of the 2021 IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW), Portland, OR, USA, 17–21 June 2021; pp. 136–143.

- Liu, J.; Dragojević, A.; Flemming, S.; Katsarakis, A.; Korolija, D.; Zablotchi, I.; Ng, H.c.; Kalia, A.; Castro, M. Honeycomb: Ordered key-value store acceleration on an FPGA-based SmartNIC. IEEE Trans. Comput. 2023, 73, 857–871.

- Hu, Y.; Yao, X.; Zhang, R.; Zhang, Y. Freshness Authentication for Outsourced Multi-Version Key-Value Stores. IEEE Trans. Dependable Secur. Comput. 2023, 20, 2071–2084.

- Zhang, T.; Wang, J.; Cheng, X.; Xu, H.; Yu, N.; Huang, G.; Zhang, T.; He, D.; Li, F.; Cao, W.; et al. FPGA-Accelerated Compactions for LSM-based Key-Value Store. In Proceedings of the 18th USENIX Conference on File and Storage Technologies, FAST 2020, Santa Clara, CA, USA, 24–27 February 2020; Noh, S.H., Welch, B., Eds.; USENIX Association: Berkeley, CA, USA, 2020; pp. 225–237.

- Siddiqui, M.F.; Ali, F.; Javed, M.A.; Khan, M.B.; Saudagar, A.K.J.; Alkhathami, M.; Abul Hasanat, M.H. An FPGA-Based Performance Analysis of Hardware Caching Techniques for Blockchain Key-Value Database. Appl. Sci. 2023, 13, 4092.

- Cai, M.; Jiang, X.; Shen, J.; Ye, B. SplitDB: Closing the Performance Gap for LSM-Tree-Based Key-Value Stores. IEEE Trans. Comput. 2024, 73, 206–220.

- Li, L.; Yue, Z.; Wu, G. Electronic Medical Record Sharing System Based on Hyperledger Fabric and InterPlanetary File System. In Proceedings of the 2021 ACM Symposium on Document Engineering (DocEng ’21), Sanya, China, 2–4 February 2021.

- Ali, M.; Vecchio, M.; Antonelli, F. A Blockchain-Based Framework for IoT Data Monetization Services. Comput. J. 2020, 64, 195–210.

- Kumar, A.; Kumar, V.P. An Approach to Secure Decentralized Storage System Using Blockchain and Interplanetary File System. In Proceedings of the 2023 International Conference on Big Data & Smart Systems (ICBDS), New Raipur, India, 6–8 October 2023.

- Sentausa, D.; Hareva, D.H. Decentralize Application for Storing Personal Health Record using Ethereum Blockchain and Interplanetary File System. In Proceedings of the 2022 International Conference on Telecommunications, Information, and Internet of Things Applications (ICTIIA), Tangerang, Indonesia, 23 September 2022.

- Ugochukwu, N.A.; Goyal, S.B.; Rajawat, A.; Verma, C.; Illés, Z. Enhancing Logistics With the Internet of Things: A Secured and Efficient Distribution and Storage Model Utilizing Blockchain Innovations and Interplanetary File System. IEEE Access 2023, 12, 4139–4152.

- Popchev, I.; Radeva, I.; Doukovska, L.; Dimitrova, M. A Web Application for Data Exchange Blockchain Platform. In Proceedings of the 2023 International Conference on Big Data, Knowledge and Control Systems Engineering (BdKCSE), Sofia, Bulgaria, 2–3 November 2023; pp. 1–7.

- AlSobeh, A.M.; Magableh, A.A. BlockASP: A framework for AOP-based model checking blockchain system. IEEE Access 2023, 11, 115062–115075.

- Azzopardi, S.; Ellul, J.; Falzon, R.; Pace, G.J. AspectSol: A solidity aspect-oriented programming tool with applications in runtime verification. In Proceedings of the International Conference on Runtime Verification; Springer: Berlin/Heidelberg, Germany, 2022; pp. 243–252.

- AlSobeh, A.; Shatnawi, A.; Al-Ahmad, B.; Aljmal, A.; Khamaiseh, S. AI-Powered AOP: Enhancing Runtime Monitoring with Large Language Models and Statistical Learning. Int. J. Adv. Comput. Sci. Appl. 2024, 15.

- Rajesh, S.C.; Borada, D. AI-Powered Solutions for Proactive Monitoring and Alerting in Cloud-Based Architectures. Int. J. Res. Mod. Eng. Emerg. Technol. (IJRMEET) 2024, 12, 208–215.

- Walker, D.; Tarver, W.; Jonnalagadda, P.; Ranbom, L.; Ford, E.W.; Rahurkar, S. Perspectives on Challenges and Opportunities for Interoperability. JMIR Med. Inform. 2023, 11, e43848.

- Goel, A.K.; Campbell, W.S.; Moldwin, R. Structured Data Capture for Oncology. JCO Clin. Cancer Inform. 2021, 5, 194–201.

- Jaffe, C.; Vreeman, D.; Kaminker, D.; Nguyen, V. Implementing HL7 FHIR. J. Healthc. Manag. Stand. 2023, 2, 1–8.

- Dullabh, P.; Hovey, L.; Heaney-Huls, K.; Rajendran, N.; Wright, A.; Sittig, D.F. Application Programming Interfaces in Health Care. Appl. Clin. Inform. 2020, 11, 59–69.

- Akhtar, S.; Rauf, A.; Abbas, H.; Amjad, M.F. Inter Cloud Interoperability Use Cases and Gaps in Corresponding Standards. In Proceedings of the 2020 IEEE Intl Conf on Dependable, Autonomic and Secure Computing, Calgary, AB, Canada, 17–22 August 2020; pp. 585–592.

- Rutz, A.; Sorokina, M.; Galgonek, J.; Mietchen, D.; Willighagen, E.; Gaudry, A.; Graham, J.; Stephan, R.; Page, R.; Vondrášek, J.; et al. The LOTUS initiative for open knowledge management in natural products research. eLife 2022, 11, e70780.

- Narkhede, N.; Shapira, G.; Palino, T. Kafka: A Distributed Messaging System for Log Processing; O’Reilly Media: Sebastopol, CA, USA, 2017.

- Carlson, J.L. Redis: Lightweight Key/Value Store That Exceeds Expectations; Apress: New York, NY, USA, 2013.

- Deyhim, P. The Tale of Two Messaging Platforms: Apache Kafka and Amazon Kinesis. Amaz. Web Serv. Blog. 2016. Available online: https://aws.amazon.com/blogs/startups/the-tale-of-two-messaging-platforms-apache-kafka-and-amazon-kinesis/ (accessed on 1 January 2025).

- Morais, J.; George, J.; Rojas, R.L. Low Latency Real-Time Cache Updates with Amazon ElastiCache for Redis and Confluent Cloud Kafka. AWS Blog. 2021. Available online: https://aws.amazon.com/blogs/apn/low-latency-real-time-cache-updates-with-amazon-elasticache-for-redis-and-confluent-cloud-kafka/ (accessed on 1 January 2025).

- Bowen, J. Data Integration with Talend Open Studio; Packt Publishing: Birmingham, UK, 2012.

- Dossot, D.; D’Emic, J. Mule in Action; Manning Publications: Shelter Island, NY, USA, 2014.

- Bende, B.; Payne, M.; Dyer, J. Dataflow Management with Apache NiFi; Packt Publishing: Birmingham, UK, 2017.

- Nwokike, C. Dell Boomi AtomSphere: A Cloud-Based Integration Platform. J. Cloud Comput. 2013.

- Feinman, T. Data Loss Prevention: Protecting Sensitive Data in the Enterprise; McGraw-Hill Education: Columbus, OH, USA, 2010.

- Chen, W.-J.; Barkai, B.; DiPietro, J.M.; Langman, V.; Perlov, D.; Riah, R.; Rozenblit, Y.; Santos, A. Implementing IBM InfoSphere Guardium; IBM Redbooks: New York, NY, USA, 2015.

- Santos, O. Cisco CyberOps Associate CBROPS 200–201 Official Cert Guide; Cisco Press: San Jose, CA, USA, 2020.

- Palo Alto Networks. Prisma Access SASE Security: Secure Your Remote Workforce. Palo Alto Netw. White Pap. 2020.

- Zadeh, R.B.; Ramsundar, B. Machine Learning with TensorFlow on Google Cloud Platform; O’Reilly Media: Sebastopol, CA, USA, 2017.

- Barnes, J. Azure Machine Learning: Microsoft Azure Essentials; Microsoft Press: Redmond, WA, USA, 2015.

- SAS Institute. White Paper, North Carolina; SAS White Paper: Cary, NC, USA, 2016.

- Bakshi, T.; Gaikwad, A. Getting Started with IBM Watson: How to Build and Deploy AI Models; Packt Publishing: Birmingham, UK, 2020.

- Calderon, G.; Campo, G.D.; Saavedra, E.; Santamaria, A. Management and Monitoring IoT Networks through an Elastic Stack-based Platform. In Proceedings of the 2021 8th International Conference on Future Internet of Things and Cloud (FiCloud), Rome, Italy, 23–25 August 2021; pp. 184–191.

- Bano, S. PhD Forum Abstract: Efficient Computing and Communication Paradigms for Federated Learning Data Streams. In Proceedings of the 2021 IEEE International Conference on Smart Computing (SMARTCOMP), Irvine, CA, USA, 23–27 August 2021; pp. 410–411.

- Vyas, S.; Tyagi, R.; Jain, C.; Sahu, S. Performance Evaluation of Apache Kafka—A Modern Platform for Real Time Data Streaming. In Proceedings of the 2022 2nd International Conference on Innovative Practices in Technology and Management (ICIPTM), Gautam Buddha Nagar, India, 23–25 February 2022; pp. 465–470.

- Aung, T.; Min, H.Y.; Maw, A. CIMLA: Checkpoint Interval Message Logging Algorithm in Kafka Pipeline Architecture. In Proceedings of the 2020 International Conference on Advanced Information Technologies (ICAIT), Yangon, Myanmar, 4–5 November 2020; pp. 30–35.

- Wang, G.; Chen, L.; Dikshit, A.; Gustafson, J.; Chen, B.S.; Sax, M.; Roesler, J.; Blee-Goldman, S.; Cadonna, B.; Mehta, A.; et al. Consistency and Completeness: Rethinking Distributed Stream Processing in Apache Kafka. In Proceedings of the 2021 International Conference on Management of Data, Virtual, 20–25 June 2021.

- Zhang, H.; Fang, L.; Jiang, K.; Zhang, W.; Li, M.; Zhou, L. Secure Door on Cloud: A Secure Data Transmission Scheme to Protect Kafka’s Data. In Proceedings of the 2020 IEEE 26th International Conference on Parallel and Distributed Systems (ICPADS), Hong Kong, China, 2–4 December 2020; pp. 406–413.

- Song, R.; Gao, S.; Song, Y.; Xiao, B. A Traceable and Privacy-Preserving Data Exchange Scheme based on Non-Fungible Token and Zero-Knowledge. In Proceedings of the IEEE 42nd International Conference on Distributed Computing Systems (ICDCS), Bologna, Italy, 10–13 July 2022.

- Shalannanda, W. Using Zero-Knowledge Proof in Privacy-Preserving Networks. In Proceedings of the 2023 17th International Conference on Telecommunication Systems, Services, and Applications (TSSA), Lombok, Indonesia, 12–13 October 2023.

- Nasri, J.Z.; Rais, H. zk-BeSC: Confidential Blockchain Enabled Supply Chain Based on Polynomial Zero-Knowledge Proofs. In Proceedings of the IEEE 19th International Wireless Communications and Mobile Computing Conference (IWCMC), Marrakesh, Morocco, 19–23 June 2023.

- Cabot-Nadal, M.A.; Playford, B.; Payeras-Capellà, M.; Gerske, S.; Mut-Puigserver, M.; Pericàs-Gornals, R. Private Identity-Related Attribute Verification Protocol Using SoulBound Tokens and Zero-Knowledge Proofs. In Proceedings of the IEEE International Symposium on Networks, Computers and Communications (ISNCC), Montreal, QC, Canada, 16–18 October 2023.

- Fotiou, N.; Pittaras, I.; Chadoulos, S.; Siris, V.; Polyzos, G.; Ipiotis, N.; Keranidis, S. Authentication, Authorization, and Selective Disclosure for IoT data sharing using Verifiable Credentials and Zero-Knowledge Proofs. arXiv 2022, arXiv:2209.00586.

- Cuzzocrea, A.; Damiani, E. Privacy-Preserving Big Data Exchange: Models, Issues, Future Research Directions. In Proceedings of the 2021 IEEE International Conference on Big Data (Big Data), Orlando, FL, USA, 15–18 December 2021; pp. 5081–5084.

- Madan, S. SABPP: Privacy-Preserving Data Exchange in The Big Data Market Using The Smart Contract Approach. Indian J. Sci. Technol. 2023, 16, 4388–4400.

- Li, T.; Ren, W.; Xiang, Y.; Zheng, X.; Zhu, T.; Choo, K.-K.R.; Srivastava, G. FAPS: A fair, autonomous and privacy-preserving scheme for big data exchange based on oblivious transfer, Ether cheque and smart contracts. Inf. Sci. 2021, 544, 469–484.

- Jena, L.; Mohanty, R.; Mohanty, M.N. Privacy-Preserving Cryptographic Model for Big Data Analytics. In Privacy and Security Issues in Big Data; Springer: Singapore, 2021.

- Kulkarni, A.; Manjunath, T.N. Hybrid Cloud-Based Privacy Preserving Clustering as Service for Enterprise Big Data. Int. J. Recent Technol. Eng. 2023, 11, 146–156.

- Azam, N.; Michala, A.L.; Ansari, S.; Truong, N.B. Modelling Technique for GDPR-Compliance: Toward a Comprehensive Solution. In Proceedings of the GLOBECOM 2023—2023 IEEE Global Communications Conference, Kuala Lumpur, Malaysia, 4–8 December 2023; pp. 3300–3305.

- Merlec, M.; Lee, Y.; Hong, S.; In, H. A Smart Contract-Based Dynamic Consent Management System for Personal Data Usage under GDPR. Sensors 2021, 21, 7994.

- Kaal, W. AI Governance via Web3 Reputation System. Stan. J. Blockchain L. Pol’y 2025, 8, 1.

- Radanliev, P. AI Ethics: Integrating Transparency, Fairness, and Privacy in AI Development. Appl. Artif. Intell. 2025, 39, 2463722.

- Dotan, R.; Blili-Hamelin, B.; Madhavan, R. Evolving AI Risk Management: A Maturity Model Based on the NIST AI Risk Management Framework. arXiv 2024, arXiv:2401.15229.

- Nam, Y.; Shin, E.; Lee, S.; Jung, S.; Bae, Y.; Kim, J. Global-scale GDPR Compliant Data Sharing System. In Proceedings of the 2020 International Conference on Electronics, Information, and Communication (ICEIC), Barcelona, Spain, 19–22 January 2020.

- Kim, D.-Y.; Elluri, L.; Joshi, K. Trusted Compliance Enforcement Framework for Sharing Health Big Data. In Proceedings of the IEEE International Conference on Big Data (Big Data), Orlando, FL, USA, 15–18 December 2021.

- Anonymous. Advancing Through Data: A Critical Review of the Evolution of Medical Information Management Systems. Int. J. Biomed. Med. Eng. Health Sci. 2023, 11, 73–78.

- Babu, M.S.; Raj, K.J.; Devi, D. Data Security and Sensitive Data Protection using Privacy by Design Technique. In Proceedings of the 2nd EAI International Conference on Big Data Innovation for Sustainable Cognitive Computing (BDCC 2019), Coimbatore, India, 12–13 December 2019.

- Arbabi, M.S.; Lal, C.; Veeraragavan, N.; Marijan, D.; Nygård, J.; Vitenberg, R. A Survey on Blockchain for Healthcare: Challenges, Benefits, and Future Directions. IEEE Commun. Surv. Tutor. 2022, 25, 386–424.

- Seth, M. Mulesoft—Salesforce Integration Using Batch Processing. In Proceedings of the 2018 5th International Conference on Computational Science/Intelligence and Applied Informatics (CSII), Yonago, Japan, 10–12 July 2018; pp. 7–14.

- Madhavi, T.; Rithvik, M.; Sindhura, N. A CONJOIN Approach to IOT and Software Engineering Using TIBCO B.W. Int. J. Eng. Technol. Manag. Res. 2020, 4, 36–42.

- Gregg, T.A.; Pandey, K.M.; Errickson, R.K. The Integrated Cluster Bus for the IBM S/390 Parallel Sysplex. IBM J. Res. Dev. 1999, 43, 795–806.

- Rawat, S.; Narain, A. Introduction to Azure Data Factory. In Understanding Azure Data Factory; Apress: Berkeley, CA, USA, 2018.

- Pogiatzis, A.; Samakovitis, G. An Event-Driven Serverless ETL Pipeline on AWS. Appl. Sci. 2020, 11, 191.

- Ebert, N.; Weber, K.; Koruna, S. Integration Platform as a Service. Bus. Inf. Syst. Eng. 2017, 59, 375–379.

- Wang, H.; Cen, Y. Implementation of Information Integration Platform in Chinese Tobacco Industry Enterprise Based on SOA. Adv. Mater. Res. 2013, 765–767, 1360–1364.

- Faruk, M.J.H.; Saha, B.; Basney, J. A Comparative Analysis Between SciTokens, Verifiable Credentials, and Smart Contracts: Novel Approaches for Authentication and Secure Access to Scientific Data. In Proceedings of the 2023 ACM Symposium, Portland, OR, USA, 23–27 July 2023.

- Westergaard, G.; Erden, U.; Mateo, O.A.; Lampo, S.M.; Akinci, T.C.; Topsakal, O. Time Series Forecasting Utilizing Automated Machine Learning (AutoML): A Comparative Analysis Study on Diverse Datasets. Information 2024, 15, 39.

- Nedosnovanyi, O.; Cherniak, O.; Golinko, V. Comparative Analysis of Cloud Services for Geoinformation Data Processing. Inf. Technol. Comput. Eng. 2023, 57, 50–57.

- Parhad, O.; Naik, V. Comparative analysis of Data Extraction for Qualcomm based android devices. In Proceedings of the ICCCNT, Delhi, India, 6–8 July 2023.

- Robu, E. Enhancing data security and protection in marketing: A comparative analysis of Golang and PHP approaches. Ecosoen 2024, 19–30.

- Rathod, P.; Hamalainen, T. Leveraging the Benefits of Big Data with Fast Data for Effective and Efficient Cybersecurity Analytics Systems: A Robust Optimisation Approach. In Proceedings of the 15th International Conference on Cyber Warfare and Security (ICCWS 2020), Norfolk, VA, USA, 12–13 March 2020; Payne, B.K., Wu, H., Eds.; Academic Conferences International: South Oxfordshire, UK, 2020; pp. 290–298.

- Islam, M.; Aktheruzzaman, K. An Analysis of Cybersecurity Attacks against Internet of Things and Security Solutions. J. Comput. Commun. 2020, 8, 11–25.

- Altulaihan, E.; Almaiah, M.; Aljughaiman, A. Cybersecurity Threats, Countermeasures and Mitigation Techniques on the IoT: Future Research Directions. Electronics 2022, 11, 3330.

- Sukumaran, R.P.; Benedict, S. Survey on Blockchain Enabled Authentication for Industrial Internet of Things. Comput. Commun. 2021, 182, 1–14.

- Verma, P.; Sharma, R.; Mistry, M. A Paradigm Shift in IoT Cyber-Security: A Systematic Review. Int. J. Res. Appl. Sci. Eng. Technol. 2022, 10, 1024–1034.

- Mishra, S.; Sharma, S.; Alowaidi, M.A. Multilayer Self-defense System to Protect Enterprise Cloud. Comput. Mater. Contin. 2020, 66, 71–85.

- Mathew, A. The Power of Cybersecurity Data Science in Protecting Digital Footprints. Cogniz. J. Multidiscip. Stud. 2023, 3, 1–4.

- Allouche, Y.; Tapas, N.; Longo, F.; Shabtai, A.; Wolfsthal, Y. TRADE: TRusted Anonymous Data Exchange: Threat Sharing Using Blockchain Technology. arXiv 2021, arXiv:2103.13158.

- Santoso, K.; Muin, M.; Mahmudi, M. Implementation of AES cryptography and twofish hybrid algorithms for cloud. J. Phys. Conf. Ser. 2020, 1517, 012099.

- Stodt, J.; Reich, C. Data Confidentiality In P2P Communication And Smart Contracts Of Blockchain In Industry 4.0. arXiv 2020, arXiv:2020.101001.

- Shahmoradi, L.; Ebrahimi, M.; Shahmoradi, S.; Farzanehnejad, A.R.; Moammaie, H.; Koolaee, M.H. Usage of Standards to Integration of Hospital Information Systems. Front. Health Inform. 2020, 9, 28.

- Abdellatif, A.A.; Samara, L.; Mohamed, A.M.; Erbad, A.; Chiasserini, C.F.; Guizani, M.; O’Connor, M.D.; Laughton, J. MEdge-Chain: Leveraging Edge Computing and Blockchain for Efficient Medical Data Exchange. IEEE Internet Things J. 2021, 8, 15762–15775.

- Shmatko, O.; Kliuchka, Y. A novel architecture of a secure medical system using dag. InterConf 2022.

- Salomi, M.; Claro, P.B. Adopting Healthcare Information Exchange among Organizations, Regions, and Hospital Systems toward Quality, Sustainability, and Effectiveness. Technol. Investig. 2020, 11, 58–97.

- Tariq, F.; Khan, Z.; Sultana, T.; Rehman, M.; Shahzad, Q.; Javaid, N. Leveraging Fine-Grained Access Control in Blockchain-Based Healthcare System. In Proceedings of the 34th International Conference on Advanced Information Networking and Applications (AINA 2020), Caserta, Italy, 15–17 April 2020; pp. 106–115.

- Ecarot, T.; Fraikin, B.; Ouellet, F.; Lavoie, L.; McGilchrist, M.; Éthier, J.F. Sensitive Data Exchange Protocol Suite for Healthcare. In Proceedings of the 2020 IEEE Symposium on Computers and Communications (ISCC), Rennes, France, 7–10 July 2020; pp. 1–7.

- Alsaleem, M.; Hasoon, S.O. Comparison of DT & GBDT Algorithms for Predictive Modeling Of Currency Exchange Rates. Ureka Phys. Eng. 2020, 1, 56–61.

- Fallucchi, F.; Coladangelo, M.; Giuliano, R.; Luca, E.D. Predicting Employee Attrition Using Machine Learning Techniques. Computers 2020, 9, 86.

- Bag, S.; Gupta, S.; Kumar, A.; Sivarajah, U. An integrated artificial intelligence framework for knowledge creation and B2B marketing rational decision making for improving firm performance. Ind. Mark. Manag. 2021, 92, 178–189.

- Yang, N. Financial Big Data Management and Control and Artificial Intelligence Analysis Method Based on Data Mining Technology. Wirel. Commun. Mob. Comput. 2022, 2022, 7596094.

- Xiong, X.; Wei, W.; Zhang, C. Dynamic User Allocation Method and Artificial Intelligence in the Information Industry Financial Management System Application. In Proceedings of the 2022 6th International Conference on Intelligent Computing and Control Systems (ICICCS), Madurai, India, 25–27 May 2022; pp. 982–985.

- Tedeschi, L. ASAS-NANP Symposium: Mathematical Modeling in Animal Nutrition: The progression of data analytics and artificial intelligence in support of sustainable development in animal science. J. Anim. Sci. 2022, 100, skac111.

- Badyal, S.; Kumar, R. Insightful Business Analytics Using Artificial Intelligence—A Decision Support System for E-Businesses. In Proceedings of the 2021 3rd International Conference on Advances in Computing, Communication Control and Networking (ICAC3N), Greater Noida, India, 17–18 December 2021; pp. 109–115.

- Lepenioti, K.; Bousdekis, A.; Apostolou, D.; Mentzas, G. Human-Augmented Prescriptive Analytics With Interactive Multi-Objective Reinforcement Learning. IEEE Access 2021, 9, 100677–100693.

- Mijwil, M.M. Has the Future Started? The Current Growth of Artificial Intelligence, Machine Learning, and Deep Learning. Iraqi J. Comput. Sci. Math. 2022, 3, 13.

- Lakhan, N. Applications of Data Science and AI in Business. Int. J. Res. Appl. Sci. Eng. Technol. 2022, 10, 4115–4118.

- Hayashi, T.; Ohsawa, Y. Variable-Based Network Analysis of Datasets on Data Exchange Platforms. arXiv 2020, arXiv:2003.05109.

- Nair, S. A review on ethical concerns in big data management. Int. J. Big Data Manag. 2020, 1, 8.

- Bernasconi, L.; Sen, S.; Angerame, L.; Balyegisawa, A.P.; Hui, D.H.Y.; Hotter, M.; Hsu, C.Y.; Ito, T.; Jorger, F.; Krassnitzer, W.; et al. Legal and ethical framework for global health information and biospecimen exchange—An international perspective. BMC Med. Ethics 2020, 21, 8.

- Morrison, M. Research using free text data in medical records could benefit from dynamic consent and other tools for responsible governance. J. Med. Ethics 2020, 46, 380–381.

- Benson, T.; Grieve, G. Privacy and Consent; Springer: Cham, Switzerland, 2020; pp. 363–378.

- Brewer, S.; Pearson, S.; Maull, R.; Godsiff, P.; Frey, J.G.; Zisman, A.; Parr, G.; McMillan, A.; Cameron, S.; Blackmore, H.; et al. A trust framework for digital food systems. Nat. Food 2021, 2, 543–545.

- Garcia, R.; Ramachandran, G.S.; Jurdak, R.; Ueyama, J. A Blockchain-based Data Governance with Privacy and Provenance: A case study for e-Prescription. In Proceedings of the 2022 IEEE International Conference on Blockchain and Cryptocurrency (ICBC), Shanghai, China, 2–5 May 2022; pp. 1–5.

- Milne, R.; Brayne, C. We need to think about data governance for dementia research in a digital era. Alzheimer’s Res. Ther. 2020, 12, 17.

- Lucivero, F.; Samuel, G.; Blair, G.; Darby, S.J. Data-Driven Unsustainability? An Interdisciplinary Perspective on Governing the Environmental Impacts of a Data-Driven Society; SSRN: Rochester, NY, USA, 2020.

- Wang, Z.; Chen, C.; Guo, C. Dynamic Resources Management Under Limited Communication Based on Multi-level Agent System. In Proceedings of the 2023 IEEE 6th International Conference on Electronic Information and Communication Technology (ICEICT), Qingdao, China, 21–24 July 2023; IEEE: New York, NY, USA, 2023; pp. 846–849.

- Joshi, N.; Srivastava, S. Online Task Allocation and Scheduling in Fog IoT using Virtual Bidding. In Proceedings of the 2022 IEEE 10th Region 10 Humanitarian Technology Conference (R10-HTC), Hyderabad, India, 16–18 September 2022; IEEE: New York, NY, USA, 2022; pp. 81–86.

- Hu, G.; Zhu, Y.; Zhao, D.; Zhao, M.; Hao, J. Event-triggered communication network with limited-bandwidth constraint for multi-agent reinforcement learning. IEEE Trans. Neural Netw. Learn. Syst. 2021, 34, 3966–3978.

- Dos Santos, L.M.; Gracioli, G.; Kloda, T.; Caccamo, M. On the design and implementation of real-time resource access protocols. In Proceedings of the 2020 X Brazilian Symposium on Computing Systems Engineering (SBESC), Florianopolis, Brazil, 24–27 November 2020; IEEE: New York, NY, USA, 2020; pp. 1–8.

- Kim, M.; Sinha, S.; Orso, A. Adaptive REST API Testing with Reinforcement Learning. In Proceedings of the 2023 38th IEEE/ACM International Conference on Automated Software Engineering (ASE), Echternach, Luxembourg, 11–15 November 2023; IEEE: New York, NY, USA, 2023; pp. 446–458.

- Noel, R.R.; Mehra, R.; Lama, P. Towards self-managing cloud storage with reinforcement learning. In Proceedings of the 2019 IEEE International Conference on Cloud Engineering (IC2E), Prague, Czech Republic, 24–27 June 2019; IEEE: New York, NY, USA, 2019; pp. 34–44.

- Banno, R.; Shudo, K. Adaptive topology for scalability and immediacy in distributed publish/subscribe messaging. In Proceedings of the 2020 IEEE 44th Annual Computers, Software, and Applications Conference (COMPSAC), Madrid, Spain, 13–17 July 2020; IEEE: New York, NY, USA, 2020; pp. 575–583.

- Dincă, A.M.; Axinte, S.D.; Bacivarov, I.C.; Petrică, G. Reliability enhancements for high-availability systems using distributed event streaming platforms. In Proceedings of the 2023 IEEE 29th International Symposium for Design and Technology in Electronic Packaging (SIITME), Craiova, Romania, 18–20 October 2023; IEEE: New York, NY, USA, 2023; pp. 41–46.

- Kozhaya, D.; Decouchant, J.; Rahli, V.; Esteves-Verissimo, P. Pistis: An event-triggered real-time byzantine-resilient protocol suite. IEEE Trans. Parallel Distrib. Syst. 2021, 32, 2277–2290.

- Štufi, M.; Bačić, B. Designing a real-time iot data streaming testbed for horizontally scalable analytical platforms: Czech post case study. arXiv 2021, arXiv:2112.03997.

- Otavio Chervinski, J.; Kreutz, D.; Yu, J. Towards Scalable Cross-Chain Messaging. arXiv 2023, arXiv:2310.10016.

- Vimal, S.; Vadivel, M.; Baskar, V.V.; Sivakumar, V.; Srinivasan, C. Integrating IoT and Machine Learning for Real-Time Patient Health Monitoring with Sensor Networks. In Proceedings of the 2023 4th International Conference on Smart Electronics and Communication (ICOSEC), Trichy, India, 20–22 September 2023; IEEE: New York, NY, USA, 2023; pp. 574–578.

- Im, J.; Lee, J.; Lee, S.; Kwon, H.Y. Data pipeline for real-time energy consumption data management and prediction. Front. Big Data 2024, 7, 1308236.

- Reddy, K.P.; Satish, M.; Prakash, A.; Babu, S.M.; Kumar, P.P.; Devi, B.S. Machine Learning Revolution in Early Disease Detection for Healthcare: Advancements, Challenges, and Future Prospects. In Proceedings of the 2023 IEEE 5th International Conference on Cybernetics, Cognition and Machine Learning Applications (ICCCMLA), Hamburg, Germany, 7–8 October 2023; IEEE: New York, NY, USA, 2023; pp. 638–643.

- Sedaghat, A.; Arbabkhah, H.; Jafari Kang, M.; Hamidi, M. Deep Learning Applications in Vessel Dead Reckoning to Deal with Missing Automatic Identification System Data. J. Mar. Sci. Eng. 2024, 12, 152.

- Chang, A.; Wu, X.; Liu, K. Deep learning from latent spatiotemporal information of the heart: Identifying advanced bioimaging markers from echocardiograms. Biophys. Rev. 2024, 5, 011304.

- Yin, K.; Huang, H.; Liang, W.; Xiao, H.; Wang, L. Network Coding for Efficient File Transfer in Narrowband Environments. Inf. Technol. Control 2023, 52.

- Hao, Q.; Qin, L. The design of intelligent transportation video processing system in big data environment. IEEE Access 2020, 8, 13769–13780.

- Van Besien, W.L.; Ferris, B.; Dudish, J. Reliable, Efficient Large-File Delivery over Lossy, Unidirectional Links. In Proceedings of the 2021 IEEE Aerospace Conference (50100), Big Sky, MT, USA, 6–13 March 2021; IEEE: New York, NY, USA, 2021; pp. 1–10.

- He, Q.; Gao, P.; Zhang, F.; Bian, G.; Zhang, W.; Li, Z. Design and optimization of a distributed file system based on RDMA. Appl. Sci. 2023, 13, 8670.

- AbuDaqa, A.A.; Mahmoud, A.; Abu-Amara, M.; Sheltami, T. Survey of network coding based P2P file sharing in large scale networks. Appl. Sci. 2020, 10, 2206.

| Phase | Description |

|---|---|

| 1. Planning the Review | Defined the review scope and objectives, formulated research questions, and established inclusion/exclusion criteria. Targeted studies published between 2020–2024 in English. Followed the guidelines proposed by Keele [34]. |

| 2. Article Retrieval | Searched IEEE Xplore, ACM Digital Library, and SpringerLink using terms such as “data exchange”, “blockchain in data processing”, “AI in secure data sharing”, and “FPGA for data handling”. Snowballing was applied to capture additional relevant studies. |

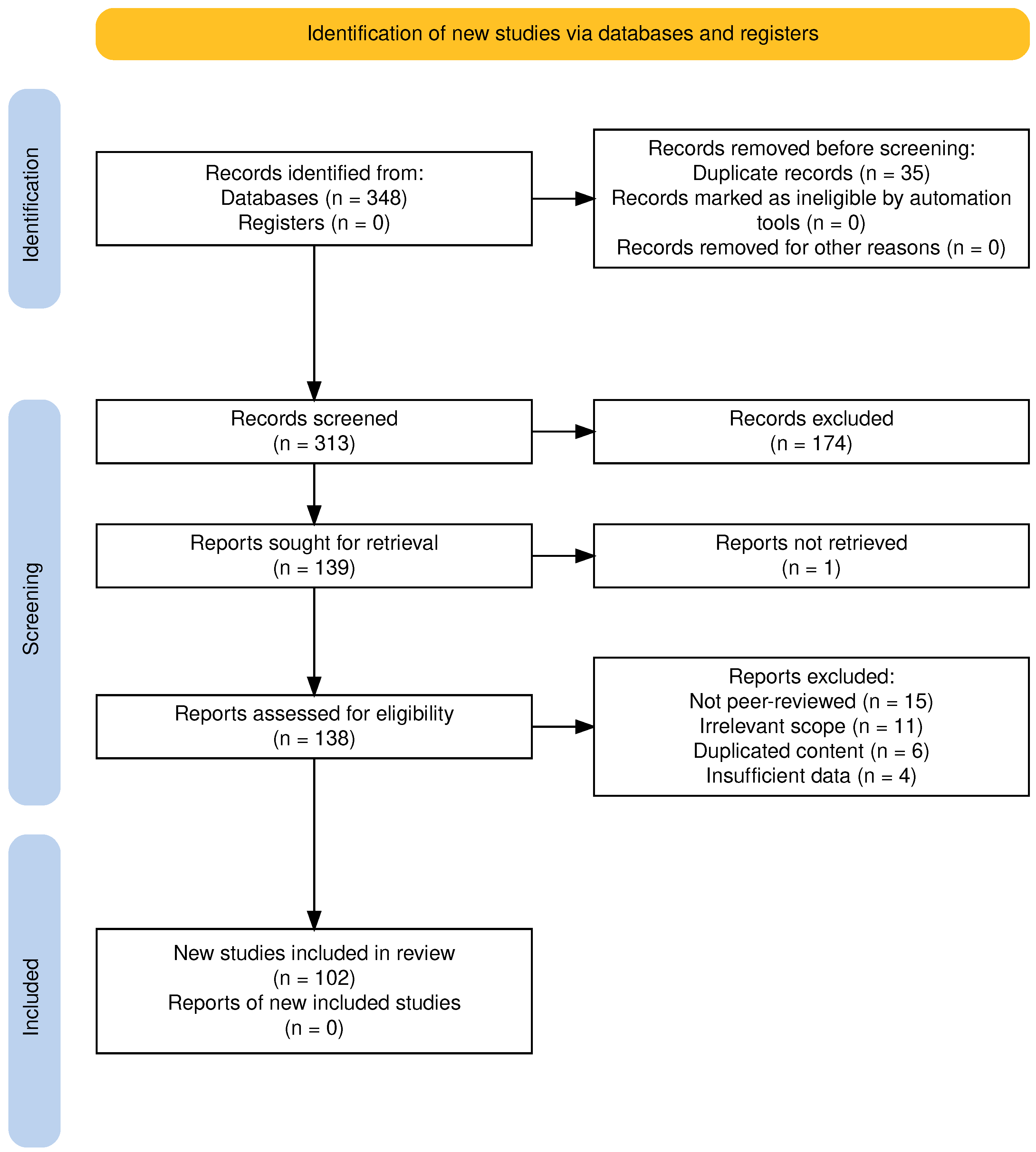

| 3. Screening and Eligibility | Removed duplicates and screened 313 articles by title, abstract, and full text. Applied PRISMA 2020 framework (Figure 1). Retained 102 eligible studies for full review. |

| 4. Data Extraction and Analysis | Reviewed each article to extract methodological details, challenges addressed, and domain relevance. 92 studies were classified thematically (Table 2) and 74 by problem area (Table 3). Quality assessment ensured analytical rigor. |
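
The deduplication and eligibility steps of phases 1 and 3 can be sketched in code. This is a minimal illustration under stated assumptions, not the authors' tooling: the `Record` type, the use of a DOI or a normalized title as the duplicate key, and the sample records are all hypothetical. Only the 2020–2024 publication window and the English-language criterion come from the table; the title, abstract, and full-text screening itself remains a manual judgment.

```python
import re
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Record:
    """Hypothetical bibliographic record returned by a database search."""
    title: str
    year: int
    language: str
    doi: Optional[str] = None


def normalize_title(title: str) -> str:
    # Lowercase and collapse punctuation/whitespace so near-identical titles match.
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def deduplicate(records: List[Record]) -> List[Record]:
    # A record is a duplicate if its DOI or its normalized title was already seen,
    # because different databases may export the same paper with or without a DOI.
    seen_dois, seen_titles, unique = set(), set(), []
    for rec in records:
        title_key = normalize_title(rec.title)
        doi_key = rec.doi.lower() if rec.doi else None
        if title_key in seen_titles or (doi_key is not None and doi_key in seen_dois):
            continue
        seen_titles.add(title_key)
        if doi_key is not None:
            seen_dois.add(doi_key)
        unique.append(rec)
    return unique


def is_eligible(rec: Record, first_year: int = 2020, last_year: int = 2024) -> bool:
    # Inclusion criteria from the planning phase: publication window and English language.
    return first_year <= rec.year <= last_year and rec.language == "en"


def screen(records: List[Record]) -> List[Record]:
    return [rec for rec in deduplicate(records) if is_eligible(rec)]


# Hypothetical search results pooled from several databases.
results = [
    Record("Study A: Secure Data Exchange", 2021, "en", "10.0000/example-a"),
    Record("study a - secure data exchange", 2021, "en"),  # same paper, exported without a DOI
    Record("Study B: Streaming Pipelines", 2019, "en", "10.0000/example-b"),  # outside the window
    Record("Study C: Interoperabilidad", 2022, "es"),  # not in English
    Record("Study D: FPGA Data Handling", 2023, "en", "10.0000/example-d"),
]
kept = screen(results)
print([rec.title for rec in kept])  # ['Study A: Secure Data Exchange', 'Study D: FPGA Data Handling']
```

Matching on either key is a deliberately conservative choice; a real review would still inspect borderline matches by hand before discarding them.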

| Topic | Number of References |
|---|---|
| Blockchain and Distributed Ledger | 16 |
| Data Privacy and Security | 14 |
| Healthcare and Medical Data | 10 |
| AI and Machine Learning Applications | 9 |
| Ethical and Legal Frameworks | 9 |
| IoT (Internet of Things) | 7 |
| Big Data and Analytics | 7 |
| Zero-Knowledge Proofs | 6 |
| Cybersecurity | 5 |
| FPGA-Based Acceleration | 5 |
| Digital Food Systems | 4 |

| Problem Addressed | Number of Papers |
|---|---|
| Interoperability and Standardization | 14 |
| Scalability and Performance | 11 |
| Data Security and Privacy | 23 |
| Real-Time Processing | 9 |
| Regulatory Compliance | 10 |
| Ethical and Governance Aspects | 7 |
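
The counts in the two classification tables can be checked against the totals reported in the data extraction phase (92 thematic and 74 problem-area classifications). The short check below transcribes the figures from the tables and also derives each category's share of its table; those percentages are computed here and are not reported in the source.

```python
# Counts transcribed from the thematic and problem-area tables.
topics = {
    "Blockchain and Distributed Ledger": 16,
    "Data Privacy and Security": 14,
    "Healthcare and Medical Data": 10,
    "AI and Machine Learning Applications": 9,
    "Ethical and Legal Frameworks": 9,
    "IoT (Internet of Things)": 7,
    "Big Data and Analytics": 7,
    "Zero-Knowledge Proofs": 6,
    "Cybersecurity": 5,
    "FPGA-Based Acceleration": 5,
    "Digital Food Systems": 4,
}
problems = {
    "Interoperability and Standardization": 14,
    "Scalability and Performance": 11,
    "Data Security and Privacy": 23,
    "Real-Time Processing": 9,
    "Regulatory Compliance": 10,
    "Ethical and Governance Aspects": 7,
}


def shares(counts):
    """Percentage of the table total for each category, rounded to one decimal."""
    total = sum(counts.values())
    return {name: round(100 * n / total, 1) for name, n in counts.items()}


print(sum(topics.values()), sum(problems.values()))  # 92 74
top_problem = max(problems, key=problems.get)
print(top_problem, shares(problems)[top_problem])  # Data Security and Privacy 31.1
```

Both tables sum exactly to the totals stated in the text, and security and privacy accounts for roughly a third of the problem-area classifications.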

| Technology | Security | Scalability | Latency (lower is better) | Regulatory Compliance |
|---|---|---|---|---|
| Blockchain | High | Medium | High | Strong (e.g., GDPR-ready) |
| FPGA-Based Systems | Medium | High | Low | Low |
| Zero-Knowledge Proofs | Very High | Low | High | Very Strong |
| IoT Architectures | Low | Very High | Medium | Weak |
| AI-Driven Solutions | Variable | High | Medium | Medium |
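
The qualitative ratings in the comparison table can serve as a rough screening aid when matching a technology to a set of requirements. The sketch below maps the labels onto an assumed ordinal scale (Low/Weak = 1 up to Very High/Very Strong = 4), treats latency as lower-is-better, and skips AI-driven solutions because their security rating is listed as variable. The scale, the thresholds, and the `shortlist` helper are illustrative assumptions rather than a method proposed in the review.

```python
# Assumed ordinal scale for the qualitative labels used in the comparison table.
LEVEL = {"Low": 1, "Weak": 1, "Medium": 2, "High": 3, "Strong": 3,
         "Very High": 4, "Very Strong": 4}

# (security, scalability, latency, regulatory compliance), transcribed from the table;
# "Strong (e.g., GDPR-ready)" is recorded simply as "Strong".
TECHNOLOGIES = {
    "Blockchain": ("High", "Medium", "High", "Strong"),
    "FPGA-Based Systems": ("Medium", "High", "Low", "Low"),
    "Zero-Knowledge Proofs": ("Very High", "Low", "High", "Very Strong"),
    "IoT Architectures": ("Low", "Very High", "Medium", "Weak"),
    "AI-Driven Solutions": ("Variable", "High", "Medium", "Medium"),
}


def shortlist(min_security="Low", min_scalability="Low",
              max_latency="Very High", min_compliance="Weak"):
    """Return the technologies that meet every threshold (latency: lower is better)."""
    selected = []
    for name, (sec, scal, lat, comp) in TECHNOLOGIES.items():
        if sec not in LEVEL:
            # "Variable" security depends on the deployment, so it cannot be ranked here.
            continue
        if (LEVEL[sec] >= LEVEL[min_security]
                and LEVEL[scal] >= LEVEL[min_scalability]
                and LEVEL[lat] <= LEVEL[max_latency]
                and LEVEL[comp] >= LEVEL[min_compliance]):
            selected.append(name)
    return selected


# Low-latency workloads that still need moderate security and scalability.
print(shortlist(min_security="Medium", min_scalability="Medium", max_latency="Medium"))
# Compliance-driven workloads that can tolerate higher latency.
print(shortlist(min_security="High", max_latency="High", min_compliance="Strong"))
```

The two queries return the FPGA-based systems alone and the pair of blockchain and zero-knowledge proofs, respectively, which mirrors the trade-off the table implies between latency and compliance strength.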

| Category | Tool (Reference) | Key Features | Applications in Industries |
|---|---|---|---|
| Real-Time Data Management | Apache Kafka [58] | Real-time streaming • High-throughput • Fault-tolerant | Telecommunications, Finance |
| | Redis [59] | In-memory • Low latency • Message broker | E-commerce, Gaming |
| | Amazon Kinesis [60] | Scalable • Real-time • Analytics-ready | Log analysis, Media monitoring |
| | Confluent Platform [61] | Kafka-enhanced • Secure • Manageable | Recommendation systems, IoT |
| Data Integration | Talend [62] | ETL • Cloud/on-premise • Transformations | Healthcare, Finance |
| | MuleSoft [63] | API-based • Multi-environment • Connectivity | Retail, Financial services |
| | Apache NiFi [64] | Visual flows • Routing • Real-time | Government, Cybersecurity |
| | Dell Boomi [65] | Low-code • Visual workflows • iPaaS | Education, Healthcare |
| Data Security | Symantec Data Loss Prevention [66] | Data protection • Monitoring • Prevention | Corporate IT, Legal |
| | IBM Guardium [67] | Threat protection • Monitoring • Compliance | Finance, Healthcare |
| | Cisco SecureX [68] | Unified visibility • Threat response | Corporate, Critical infrastructure |
| | Palo Alto Networks Prisma Access [69] | Cloud-delivered • Remote access • Secure | Healthcare, Government |
| Predictive Analysis and Machine Learning | Google Cloud AI Platform [70] | End-to-end ML • Scalable • Deployment | Retail, Technology |
| | Microsoft Azure Machine Learning [71] | Cloud-based • Workflow management • Scalable | Healthcare, Finance |
| | SAS Viya [72] | Advanced ML • Unified platform • Analytics | Retail, Financial services |
| | IBM Watson [73] | AI+NLP • Analytics • Automation | Healthcare, Education |
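
Several of the real-time tools listed above (Apache Kafka, Amazon Kinesis, Confluent Platform) are organized around a partitioned, append-only log that consumers read by offset. The toy in-memory version below illustrates only that model; `PartitionedLog` and its methods are hypothetical and do not reproduce any product's API. Routing by key keeps all records with the same key, in order, within one partition, and each consumer group tracks its own read position. Kafka's default partitioner hashes keys with murmur2; SHA-256 is substituted here purely to keep the example dependency-free.

```python
import hashlib
from collections import defaultdict
from typing import Dict, List, Tuple


class PartitionedLog:
    """Toy in-memory, append-only log split into partitions (hypothetical, not a product API)."""

    def __init__(self, num_partitions: int) -> None:
        self.partitions: List[List[Tuple[str, str]]] = [[] for _ in range(num_partitions)]
        # Next offset to read, tracked separately for each (consumer group, partition).
        self.offsets: Dict[Tuple[str, int], int] = defaultdict(int)

    def partition_for(self, key: str) -> int:
        # Stable hash, so every record with the same key lands in the same partition.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        return int.from_bytes(digest[:4], "big") % len(self.partitions)

    def produce(self, key: str, value: str) -> Tuple[int, int]:
        partition = self.partition_for(key)
        self.partitions[partition].append((key, value))
        return partition, len(self.partitions[partition]) - 1  # (partition, offset)

    def consume(self, group: str, partition: int, max_records: int = 10) -> List[Tuple[str, str]]:
        start = self.offsets[(group, partition)]
        batch = self.partitions[partition][start:start + max_records]
        # Advance this group's offset immediately after reading.
        self.offsets[(group, partition)] = start + len(batch)
        return batch


log = PartitionedLog(num_partitions=3)
for i in range(3):
    log.produce("sensor-42", f"reading-{i}")

p = log.partition_for("sensor-42")
first = log.consume("analytics", p)   # the three readings, in production order
again = log.consume("analytics", p)   # empty: this group's offset has moved past them
replay = log.consume("billing", p)    # a second group reads the same records from offset 0
```

Here the offset advances as soon as records are returned, which gives at-most-once delivery: a consumer that fails after reading but before processing skips those records. Advancing the offset only after processing trades this for at-least-once delivery with possible duplicates.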

| Integration Technology (Reference) | Scalability | Native Integration | Protocol Support | Regulatory Compliance |
|---|---|---|---|---|
| MuleSoft Anypoint [100] | Vertical/Horizontal | Salesforce, SAP | HTTP, REST, SOAP | GDPR, HIPAA |
| TIBCO [101] | Vertical | Salesforce | HTTP, REST, JMS | GDPR |
| IBM Integration Bus [102] | Vertical | IBM Cloud | HTTP, REST, SOAP, MQTT | GDPR, HIPAA |
| Microsoft Azure Data Factory [103] | Horizontal | Azure services | HTTP, REST | GDPR, Azure Policy |
| AWS Data Pipeline [104] | Horizontal | AWS services | AWS SDK | GDPR, HIPAA |
| Apache Kafka [58] | Horizontal | Hadoop, Spark | Kafka Protocol | - |
| Dell Boomi [105] | Vertical | Salesforce, SAP | HTTP, REST | GDPR, HIPAA |
| SAP Data Services [106] | Vertical | SAP | HTTP, REST, SOAP | GDPR, SAP Policy |
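
Most of the integration platforms compared above automate some variant of an extract-transform-load (ETL) flow. The sketch below shows the three stages using only the Python standard library: rows are extracted from CSV text, malformed rows are rejected and the direct identifier is replaced with a keyed-hash token, and the result is loaded into an in-memory SQLite table. The sample data, the key, and the function names are hypothetical. Keyed hashing is pseudonymization rather than anonymization, so under GDPR the output still counts as personal data; the sketch is not a compliance mechanism.

```python
import csv
import hashlib
import hmac
import io
import sqlite3
from typing import Dict, List, Tuple

# Hypothetical source extract; the second row has a malformed measurement.
RAW_CSV = """patient_id,measured_at,heart_rate
P001,2024-03-01T10:00:00,72
P002,2024-03-01T10:05:00,not-a-number
P001,2024-03-01T10:10:00,75
"""

# Hypothetical secret; in practice it would come from a key-management service.
PSEUDONYM_KEY = b"example-key-do-not-use"


def extract(text: str) -> List[Dict[str, str]]:
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: List[Dict[str, str]]) -> List[Tuple[str, str, int]]:
    clean = []
    for row in rows:
        try:
            heart_rate = int(row["heart_rate"])
        except ValueError:
            continue  # reject malformed rows rather than loading them
        # Replace the direct identifier with a stable keyed-hash token (HMAC-SHA256).
        token = hmac.new(PSEUDONYM_KEY, row["patient_id"].encode("utf-8"),
                         hashlib.sha256).hexdigest()[:16]
        clean.append((token, row["measured_at"], heart_rate))
    return clean


def load(rows: List[Tuple[str, str, int]], conn: sqlite3.Connection) -> None:
    conn.execute("CREATE TABLE IF NOT EXISTS readings "
                 "(subject TEXT, measured_at TEXT, heart_rate INTEGER)")
    conn.executemany("INSERT INTO readings VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
loaded, subjects = conn.execute(
    "SELECT COUNT(*), COUNT(DISTINCT subject) FROM readings").fetchone()
print(loaded, subjects)  # 2 1
```

Because the token is stable for a given key, the two valid readings from the same patient remain linkable after loading, which is what downstream analytics usually needs.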

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

MDPI and ACS Style: Cardona-Álvarez, Y.N.; Álvarez-Meza, A.M.; Castellanos-Dominguez, G. Revolutionizing Data Exchange Through Intelligent Automation: Insights and Trends. Computers 2025, 14, 194. https://doi.org/10.3390/computers14050194

AMA Style: Cardona-Álvarez YN, Álvarez-Meza AM, Castellanos-Dominguez G. Revolutionizing Data Exchange Through Intelligent Automation: Insights and Trends. Computers. 2025; 14(5):194. https://doi.org/10.3390/computers14050194

Chicago/Turabian Style: Cardona-Álvarez, Yeison Nolberto, Andrés Marino Álvarez-Meza, and German Castellanos-Dominguez. 2025. "Revolutionizing Data Exchange Through Intelligent Automation: Insights and Trends" Computers 14, no. 5: 194. https://doi.org/10.3390/computers14050194

APA Style: Cardona-Álvarez, Y. N., Álvarez-Meza, A. M., & Castellanos-Dominguez, G. (2025). Revolutionizing Data Exchange Through Intelligent Automation: Insights and Trends. Computers, 14(5), 194. https://doi.org/10.3390/computers14050194