1. Introduction

In today’s digital era, technologies such as smartphones and smart devices have become essential tools in many aspects of our lives. With the development of Internet technology, attackers tend to launch increasingly sophisticated malware programs whose malicious behavior can devastate victims’ systems. The AV-TEST Institute registers more than 450,000 new malware samples and potentially unwanted applications (PUAs) every day [1]. Traditionally, malware has relied primarily on infecting executable files, which leaves a clear footprint for antivirus software to detect. However, the static and heuristic analysis techniques traditionally used by antivirus software have well-known limitations in detecting modern, sophisticated malware. These methods often fail when confronted with obfuscation or encryption, which conceal malicious behavior and allow malware to evade detection systems [2,3]. Recognizing these weaknesses, attackers have increasingly adopted fileless malware, which operates entirely in system memory rather than relying on executable files. Because it leaves no traces on the disk, this type of malware renders conventional file-based detection approaches largely ineffective.

To address these challenges, researchers and practitioners have turned to advanced detection strategies, including memory forensics and image-based analysis, which analyze in-memory artifacts and visual patterns of malicious activity. These emerging techniques have shown strong potential for identifying and mitigating the growing threat of fileless and obfuscated malware [4,5,6,7].

Memory forensics focuses on acquiring and examining the volatile data stored in a computer’s Random Access Memory (RAM). Unlike data on a hard drive, information in RAM is temporary and disappears once the system is powered off, which makes it an appealing location for attackers to conceal malicious activity. To study such behavior safely, malware is often executed in a controlled environment, or sandbox, such as Cuckoo Sandbox [8], which allows analysts to observe the running malware and capture memory artifacts without risking the integrity of real systems. To identify memory-resident and fileless malware, researchers can then collect the processes, network connections, open files, and registry modifications produced by the malware. A notable work in this direction was performed by Ilker Kara [9], who reviewed recent work on detecting and preventing memory-resident malware executables in the Windows operating system and proposed a novel method for evaluating the features of fileless malware samples in terms of the nature and method of the attack. Because fileless malware infects RAM, the method analyzes RAM content by collecting dump files for each process using FTK Imager with an intentional 5000 ms delay, to ensure that only Volatility and the malicious program are running. Several tools are then applied to the dump files: the “string” tool extracts ASCII strings to reveal anything of interest, the “netstat” tool identifies unknown connections through which the malware may try to communicate, and OllyDbg is used to obtain key information.

Image-based approaches transform raw OS memory dumps into image representations that capture complex relationships in memory data. These images are used to train machine learning models that learn relevant features, enhancing the analysis process without depending on manual feature selection [10,11]. Research in this direction shows that features extracted from memory dumps can be combined with machine learning algorithms to classify malware with high accuracy [12,13,14,15]. However, extracting relevant features requires domain-specific knowledge of memory forensics. Moreover, identifying the right time to capture a memory dump and extract features is crucial for real-time threat detection.

Machine learning and deep learning algorithms have been used effectively in malware detection [16,17,18,19,20]. Moreover, machine learning algorithms are increasingly employed in image-based malware detection to analyze visualized memory dumps and extract relevant features, such as those derived from texture analysis [21,22]. Texture analysis focuses on the repetitive patterns and surface characteristics within an image. Using texture analysis, several researchers have reported that malware images of the same family often exhibit similar patterns and textures, which makes these characteristics valuable for malware detection in memory forensics [23,24]. That is, the texture patterns visible in visualized memory dumps can be associated with specific malware families.

This paper examines in depth several parameters that affect the effectiveness of machine learning models for image-based malware detection using memory dumps. It evaluates several local and global image descriptors, individually and in combination, at different image resolutions to determine which combination of features, resolution, and machine learning model yields the best classification performance at a low computational cost. However, texture analysis of malware images can require substantial computational effort. To address this challenge, this paper proposes a LIME-based region-of-interest approach that identifies the image regions that most strongly affect classification results and crops the images to those regions. The key contributions are:

Application of LIME for feature region identification: The key contribution of this study is the application of LIME to memory forensics images to dynamically identify, focus on, and crop the important regions of the images.

Investigating the effectiveness of local and global descriptors: This study investigates the use of various local (HOG, LBP, SIFT, ORB) and global (GLCM, GIST, DWT) texture descriptors for feature extraction from memory dump images. These descriptors are analyzed individually and in combination at different resolutions and sizes.

Extracting two datasets of cropped images based on LIME’s regions of interest using the ResNet50 and MobileNet pretrained models: This study applied LIME to the Dumpware10 images of each family to create two new datasets, which are smaller and more efficient to process because irrelevant regions are cropped out.

2. Related Work

Memory analysis is a useful technique for learning about the behavior and patterns of malware, since malware leaves specific traces in computer memory. Malicious programs residing in memory present a significant challenge. This section discusses related work on analyzing memory-resident malware and extracting discriminative features to facilitate malware detection and classification. Related work falls into two categories: approaches that analyze features extracted directly from the memory dump files of memory-resident software, and image-based approaches that convert dump files into images.

2.1. Feature-Based Extraction from Memory Dumps

Sihwail et al. [25] proposed a technique for detecting malware by analyzing memory dump files using Volatility, a memory forensics tool. The dataset consisted of 3468 malicious and benign executable files. The samples were executed in the Cuckoo Sandbox to obtain a dump file for every sample. Next, various features that reveal malicious behavior were extracted, such as DLL, API, process handle, privilege, network, and code injection features. These features were then transformed into binary vectors and used for classification with machine learning techniques. Two feature selection methods, information gain (IG) and correlation, were applied to remove irrelevant features from the dataset. The model achieved a high classification accuracy of 98.5% using the SVM classifier.

Mosli et al. [26] proposed a framework that detects malicious applications by analyzing the characteristics of handle usage. They extracted data from memory dump files using the Volatility tool and captured the handle data with the Handles plugin. The dataset they analyzed consisted of 3139 malicious and 1157 benign software instances. To classify software, they saved the handle table of every process as a text file and used the TF-IDF approach to extract features from these files: they extracted the vocabulary of handle types from the handle text files and computed the frequency of each handle type for each process. The resulting data were then used to train machine learning classifiers. The study reported that their model achieved 91% accuracy using a Random Forest classifier. Moreover, they reported that the primary object type used by malware programs was Mutants, with an average of 21.84.

Lashkari et al. [27] introduced a Python tool to extract critical features from a memory dump during malware execution. Using the Volatility tool, it extracted thirty-six features from the memory dump of each program, covering nine categories: processes, DLLs, handles, loaded modules, code injections, connections, sockets, services, drivers, and callbacks. The results showed that the model achieved 93% accuracy using a Random Forest classifier.

Dener et al. [28] explored the use of memory data in malware detection within a big data environment. Nine different deep learning and machine learning algorithms were applied to the CIC-MalMem-2022 dataset using the Apache Spark platform on Google Colaboratory. The highest accuracy, 99.97%, was achieved using the Logistic Regression algorithm. The authors showed different approaches for memory-based malware detection and provided a foundation for future research on memory data.

By analyzing features extracted directly from memory dump files, machine learning algorithms can be trained to differentiate benign software from malicious code residing in memory. Moreover, these techniques reduce the computational cost of processing memory dumps by focusing on specific information that can accurately identify malicious programs. However, detection accuracy depends on which features of memory-resident malware are selected, and extracting features that still expose malware that obfuscates them requires expert knowledge of memory analysis. Furthermore, existing detection tools do not fully exploit the information available in memory data.

2.2. Image-Based Memory Forensics Malware Detection

Classical malware detection methods rely on extracting specific features or analyzing raw data in memory dump files. However, these methods may not fully capture the complex relationships and patterns found in memory dumps. Recent research explores how to transform memory dumps into visual representations that are suitable for computer vision and image processing methods.

Dai et al. [29] considered other forms of malware, including ransomware and boot sector malware. The authors developed a framework for extracting memory dump files and converting them to grayscale images. The grayscale images were then resized to a fixed size, relevant features were extracted with the HOG technique, and classification was performed with a multilayer perceptron classifier. They reported that their method achieved high detection accuracy and a maximum precision of 95% in malware classification, outperforming the state-of-the-art methods they considered.

Bozkir et al. [30] proposed a method that uses memory dump data to detect obfuscated or encrypted malware. In the first phase, the memory dumps of suspicious processes were collected as PE files for ten different malware families and benign files. Next, the memory dumps were converted into RGB images using different rendering schemes, such as 224 px, 300 px, 4096 px, and square-root schemes, to differentiate malware files from benign ones. The features extracted from the images using the GIST and HOG descriptors were then fed into machine learning algorithms. The approach achieved an accuracy of 96.39% using the SMO algorithm on the combined GIST+HOG feature vectors. Moreover, Uniform Manifold Approximation and Projection (UMAP) for dimension reduction was applied to detect unknown malware files through binary classification as malware or benign. For this experiment, the dataset was split into three folds, each using seven malware families for training and three for testing as unknown malware. The results showed that accuracy increased by 21% using the SVM algorithm.

Zhang et al. [31] proposed a convolutional neural network approach to detect memory-resident malware executables based on memory segments at different locations and lengths, rather than analyzing the whole PE file. First, the binary file is converted into decimal numbers between 0 and 255 by reading every 8 bits as one value, which makes the data suitable as pixel intensities of an image. The results show that, using only 4096-byte header fragments of a PE file, the maximum accuracy reached about 97.48%. The authors also demonstrated the effectiveness of the proposed model in detecting fileless malware PE files.
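The byte-to-pixel mapping described for Zhang et al. can be illustrated with a short NumPy sketch. It reads the first 4096 bytes of a binary, treats each byte (8 bits) as a grayscale intensity between 0 and 255, and reshapes the result into a 64 × 64 image. The 64-pixel width and the zero-padding of short files are illustrative assumptions, not details reported by the authors.

```python
import numpy as np

def bytes_to_grayscale(data: bytes, n_bytes: int = 4096, width: int = 64) -> np.ndarray:
    """Map the first n_bytes of a binary file to a 2-D uint8 image.

    Each byte (8 bits) becomes one pixel with intensity 0-255.
    Inputs shorter than n_bytes are zero-padded (illustrative assumption).
    """
    fragment = np.frombuffer(data[:n_bytes], dtype=np.uint8)
    padded = np.zeros(n_bytes, dtype=np.uint8)
    padded[: fragment.size] = fragment
    height = n_bytes // width
    return padded[: height * width].reshape(height, width)

# Example: a fake header fragment that starts with the "MZ" signature of PE files.
sample = b"MZ" + bytes(range(256)) * 20
img = bytes_to_grayscale(sample)
print(img.shape, img[0, 0], img[0, 1])  # (64, 64) 77 90
```

The first two pixels are simply the ASCII codes of "M" (77) and "Z" (90), which shows that no information is lost in the conversion; only the layout changes.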

Kosmidis and Kalloniatis [32] proposed a malware detection approach using GIST texture feature extraction. Using the Malimg dataset, the Random Forest classifier achieved about 91.6% detection accuracy. These methods show the crucial role that image-based feature extraction techniques can play in malware detection. However, their dependence on RGB images may raise concerns about computational efficiency, which is a major consideration in memory forensics and malware detection.

Liu et al. [23] proposed MRm-DLDet, a deep learning framework for detecting memory-resident malware. In this approach, memory-resident malware is visualized by capturing memory dumps and converting them into high-resolution RGB images. The images were then divided into sub-images using a sliding window, and the size of the dump file was reduced by removing duplicate memory pages. Next, a neural network extracted 512 features from each sub-image for malware classification. The features extracted from the sub-images were divided into training, validation, and test sets, and a GRU layer processed them as a sequence of vectors. An attention layer then calculated the relevance of these features to prevent crucial information from being lost, and a voting layer scored the overall classification result for each memory file. MRm-DLDet achieved a detection accuracy of about 98.34%.

2.3. LIME Explanation Method

LIME (Local Interpretable Model-agnostic Explanations) is a method designed to make machine learning models more understandable by providing clear, human-interpretable explanations for individual predictions. It does this by approximating the complex model locally, using a simple interpretable model such as a linear regressor, to explain how the original model behaves in the neighborhood of a specific instance. One of LIME’s main strengths is its model-agnostic nature: it can be applied to any type of machine learning model, regardless of its complexity or architecture [33].
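For reference, the general LIME objective can be written as below. Here $f$ is the black-box model, $g$ is an interpretable surrogate drawn from a family $G$ (for example, sparse linear models), $z$ is a perturbed sample with interpretable binary representation $z'$, $\pi_x$ is a proximity kernel around the instance $x$, and $\Omega(g)$ penalizes surrogate complexity. The squared loss and the exponential kernel with distance $D$ and width $\sigma$ are the commonly used choices, stated here as assumptions rather than settings reported in this paper.

```latex
\xi(x) = \operatorname*{arg\,min}_{g \in G} \; \mathcal{L}(f, g, \pi_x) + \Omega(g),
\qquad
\mathcal{L}(f, g, \pi_x) = \sum_{z \in Z} \pi_x(z)\,\bigl(f(z) - g(z')\bigr)^2,
\qquad
\pi_x(z) = \exp\!\left(-\frac{D(x, z)^2}{\sigma^2}\right)
```

For images, each entry of $z'$ indicates whether a given image segment is kept or hidden, so the coefficients of a linear $g$ directly rank the segments by their influence on the prediction.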

LIME has been widely used across domains such as healthcare, finance, and cybersecurity, where understanding why a model makes a certain decision is essential [33]. By providing localized explanations, LIME helps researchers and practitioners identify potential biases, increase transparency, and support more informed, trustworthy decision-making.

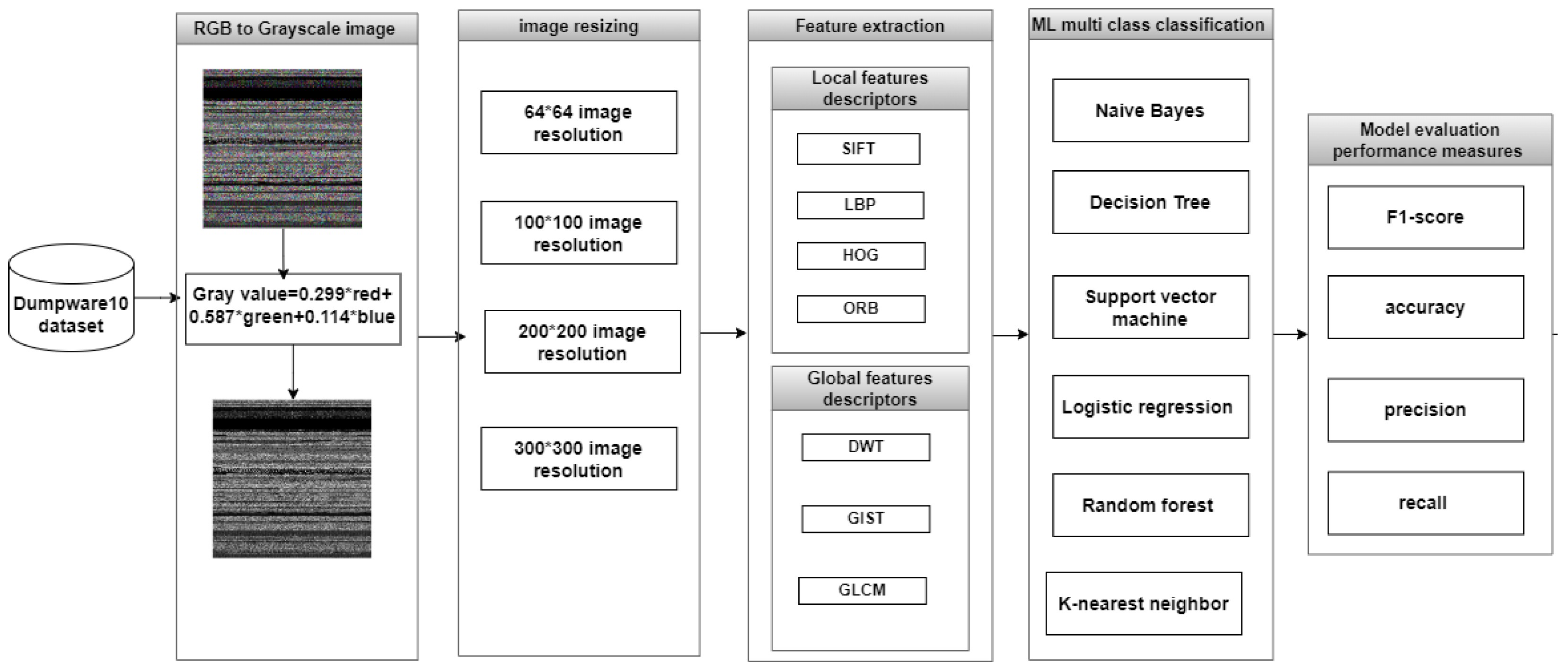

3. Methodology

This section describes the proposed approach in detail. The proposed method performs memory forensics on memory dump images to identify and classify malware families. The methodology is organized into two main parts. First, an intensive analysis is conducted over various image resolutions and local and global texture descriptors to identify the combinations of these parameters that achieve the best accuracy in fileless malware detection while using minimal resources. Following this investigation, a novel approach is proposed that leverages LIME-based feature extraction to focus on the most informative regions in malware images, thereby enhancing the efficiency and performance of the model.

3.1. Texture-Based Detection

The proposed texture-based approach follows a memory forensics and pattern detection pipeline consisting of four steps. First, it converts the RGB images extracted from memory dumps into grayscale images, because the texture descriptors applied in this study operate on intensity variations rather than color information; this conversion also regularizes the data for pattern analysis. Second, it resizes the grayscale images from their original resolution of 300 × 300 pixels to 64 × 64, 100 × 100, and 200 × 200 pixels in order to optimize computational efficiency and compare performance across image scales. These resolutions were selected to examine the impact of image size on performance and computational cost: the 100 × 100 resolution corresponds to the LIME-cropped output size, while 200 × 200 serves as an intermediate level between compact and full-resolution images. Third, it extracts local and global texture features from the resized grayscale images using Local Binary Patterns (LBP), Histogram of Oriented Gradients (HOG), Scale-Invariant Feature Transform (SIFT), Oriented FAST and Rotated BRIEF (ORB), Discrete Wavelet Transform (DWT), Gray-Level Co-occurrence Matrix (GLCM), and GIST descriptors. Fourth, the extracted features, individually and in various combinations, are used to train and evaluate several machine learning classifiers (Decision Trees, Support Vector Machine (SVM), Naïve Bayes, Random Forest, K-Nearest Neighbor (KNN), and Logistic Regression) to determine the best classifier for malware family detection and classification.

Figure 1 summarizes the framework of texture analysis.
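The multi-resolution step can be sketched as follows. The paper does not state which interpolation method was used for resizing, so this sketch assumes simple nearest-neighbour sampling in NumPy for transparency; in practice a library routine such as OpenCV's cv2.resize with cv2.INTER_AREA interpolation is a more typical choice for downscaling. The random input merely stands in for a 300 × 300 grayscale Dumpware10 image.

```python
import numpy as np

RESOLUTIONS = (64, 100, 200, 300)  # square side lengths compared in this study

def resize_nearest(gray: np.ndarray, side: int) -> np.ndarray:
    """Nearest-neighbour resize of a 2-D grayscale image to side x side pixels."""
    h, w = gray.shape
    rows = (np.arange(side) * h) // side   # source row for each output row
    cols = (np.arange(side) * w) // side   # source column for each output column
    return gray[rows[:, None], cols[None, :]]

def multi_resolution(gray: np.ndarray) -> dict:
    """Return {side: resized image} for every studied resolution."""
    return {side: resize_nearest(gray, side) for side in RESOLUTIONS}

rng = np.random.default_rng(0)
gray = rng.integers(0, 256, size=(300, 300), dtype=np.uint8)  # stand-in image
for side, img in multi_resolution(gray).items():
    print(side, img.shape)
```

Each downstream descriptor is then computed once per resolution, which is what allows accuracy to be compared against computational cost at each image scale.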

3.1.1. Data Collection and Processing

This paper utilized the open-source Dumpware10 dataset [30]. The dataset comprises 4294 RGB images generated by converting malware binary files into images using the bin2png Python 3.10 tool [34]: 3686 malware images and 608 images of benign programs. The dataset is organized into two directories, one for training and one for testing. The training directory is used to train the classifiers, while the testing directory is used to evaluate the performance of the proposed approach. Cross-validation was not applied because the LIME-based segmentation and cropping steps are computationally intensive and would need to be repeated for every fold, greatly increasing runtime. Instead, we used an 80%/20% train/test split to preserve the class balance and to ensure a fair, consistent, and reproducible comparison between the texture-based and LIME-based approaches. The original images have a resolution of 300 × 300 pixels. The dataset includes the following primary malware families and their corresponding subfamilies:

Adware: (Adposhel, Amonetize, InstallCore.C, MultiPlug, and BrowseFox).

Worm: (Allaple.A and AutoRun-PU).

Trojan: (Dinwod and Vilsel).

Macro Malware: (VBA).
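A class-preserving 80%/20% split of the kind described above can be sketched in plain Python by splitting each class separately. The shuffling seed and the rounding rule are illustrative assumptions; scikit-learn's train_test_split with its stratify argument provides equivalent behaviour.

```python
import random
from collections import defaultdict

def stratified_split(labels, test_fraction=0.2, seed=42):
    """Return (train_idx, test_idx) so that each class is split ~80/20."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)                 # fixed seed for reproducibility (assumption)
    train, test = [], []
    for label in sorted(by_class):
        idxs = by_class[label][:]
        rng.shuffle(idxs)
        n_test = round(len(idxs) * test_fraction)
        test.extend(idxs[:n_test])            # first 20% of the shuffled class -> test
        train.extend(idxs[n_test:])           # remaining 80% -> train
    return sorted(train), sorted(test)

labels = ["Adposhel"] * 100 + ["benign"] * 50  # toy label list
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))  # 120 30
```

Splitting per class keeps the proportion of each family (and of benign programs) the same in both subsets, which matters here because the families are unevenly sized.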

The RGB images were converted to grayscale for feature extraction, since the intensity information retained by this conversion is sufficient for the texture descriptors used. OpenCV [35], a widely used open-source computer vision library, provides the cv2.cvtColor function for converting RGB images to grayscale. This process removes the color components and retains only the intensity of each pixel, which represents its brightness level; that is, the conversion discards hue and saturation and keeps only the luminance values that define the grayscale image. The formula used for this conversion is shown in Figure 2, where the computed gray value is the grayscale intensity of each pixel in the original image.
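OpenCV documents the RGB-to-gray conversion as the weighted sum Y = 0.299·R + 0.587·G + 0.114·B. The NumPy sketch below reproduces that weighted sum so the arithmetic is visible; cv2.cvtColor(img, cv2.COLOR_RGB2GRAY) computes the same quantity with fixed-point arithmetic, so results may differ slightly due to rounding. The sketch assumes RGB channel order; images loaded with cv2.imread are in BGR order, in which case cv2.COLOR_BGR2GRAY is the matching flag.

```python
import numpy as np

# Luminance weights documented by OpenCV for RGB -> gray (ITU-R BT.601).
WEIGHTS = np.array([0.299, 0.587, 0.114])

def rgb_to_gray(rgb: np.ndarray) -> np.ndarray:
    """Convert an H x W x 3 RGB uint8 image to an H x W uint8 grayscale image."""
    gray = rgb.astype(np.float64) @ WEIGHTS        # weighted sum over the channel axis
    return np.clip(np.rint(gray), 0, 255).astype(np.uint8)

# Pure red, green, blue, and white pixels.
px = np.array([[[255, 0, 0], [0, 255, 0], [0, 0, 255], [255, 255, 255]]], dtype=np.uint8)
print(rgb_to_gray(px))  # [[ 76 150  29 255]]
```

The example shows why green contributes most to perceived brightness: a saturated green pixel maps to 150, while a saturated blue pixel maps to only 29.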

3.1.2. Texture Features Overview

The primary goal of feature extraction in images is to identify distinctive patterns or characteristics and represent them as numerical feature vectors. Texture is one of the key visual properties describing the surface structure and appearance of an image, and it plays an important role in many computer vision tasks such as image classification, object recognition, and content-based image retrieval. Along with texture, features such as color and shape can also be extracted using various image descriptors, which combine quantitative and qualitative analyses to capture meaningful information from visual data.

In malware detection, images from the same malware family often exhibit consistent patterns and textures. Although these images may not display repetitive patterns in a strictly mathematical sense, they have distinct “textures” that are valuable for automated categorization, and malware images from the same family typically show clear visual similarities. To improve malware classification efficiency, this paper uses several global and local texture descriptors chosen for their reliability and computational cost-effectiveness.

Global Texture Descriptors

This type of descriptor extracts statistical properties of the entire image or of a large region within it, without focusing on the details of pixel arrangement. Analyzing the statistical properties of the whole image is computationally less expensive than analyzing many smaller portions, and it provides a high-level overview. However, these descriptors may miss some important details. In this work, three global descriptors were applied individually and in combination to capture the most discriminative features.

Table 1 summarizes the properties and configuration of the global feature extraction methods.

Gray-Level Co-occurrence Matrix (GLCM): It characterizes how pixel intensities are arranged in space across the entire image by considering all pixel pairs within the image. The GLCM computation starts by converting the image to grayscale, defining a specific neighborhood size (e.g., 4 × 4 pixels), calculating the co-occurrence matrix for all possible gray-level pairs, and extracting general texture features, such as contrast, homogeneity, or entropy, from the co-occurrence matrix. These features represent the statistical distribution of spatial pixel relationships throughout the entire image.
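A minimal NumPy sketch of the GLCM computation follows. It counts gray-level pairs at a single pixel offset (dx, dy), which is the usual way the spatial relationship is parameterized; the offset, the quantization to 8 gray levels, and the symmetric counting are illustrative assumptions rather than the configuration listed in Table 1. scikit-image provides graycomatrix and graycoprops for production use, and the homogeneity formula below follows the graycoprops definition.

```python
import numpy as np

def glcm(gray: np.ndarray, dx: int = 1, dy: int = 0, levels: int = 8) -> np.ndarray:
    """Symmetric, normalized gray-level co-occurrence matrix for offset (dx, dy) >= 0."""
    if dx < 0 or dy < 0 or (dx == 0 and dy == 0):
        raise ValueError("this sketch supports non-negative, non-zero offsets only")
    q = (gray.astype(np.int64) * levels) // 256      # quantize 0-255 to 0..levels-1
    h, w = q.shape
    ref = q[: h - dy, : w - dx]                      # reference pixels
    nbr = q[dy:, dx:]                                # neighbours at (row + dy, col + dx)
    m = np.zeros((levels, levels), dtype=np.float64)
    np.add.at(m, (ref.ravel(), nbr.ravel()), 1)      # count each gray-level pair
    m = m + m.T                                      # count both directions (symmetric)
    return m / m.sum()

def glcm_features(p: np.ndarray) -> dict:
    """Contrast, homogeneity, and entropy of a normalized GLCM."""
    i, j = np.indices(p.shape)
    nz = p[p > 0]
    return {
        "contrast": np.sum(p * (i - j) ** 2),
        "homogeneity": np.sum(p / (1.0 + (i - j) ** 2)),
        "entropy": -np.sum(nz * np.log2(nz)),
    }

stripes = np.tile([0, 255], (4, 4))                  # alternating dark/bright columns
print(glcm_features(glcm(stripes)))
```

On the striped example every horizontal neighbour pair jumps between the lowest and highest levels, so contrast is maximal (49 for 8 levels), whereas a flat image yields zero contrast and zero entropy.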

Generic Image Descriptor (GIST): It extracts the dominant structures and the general spatial layout of the image. GIST first filters the image with Gabor filters at different orientations and scales. The filter responses are then pooled over different spatial regions, which summarizes the information from the full image. Finally, the pooled responses are normalized to create a compact descriptor vector representing the global structure of the image.

Figure 3 shows a visual representation of GIST features extracted from a malware image belonging to the Adposhel malware family.

Figure 3.

GIST features visualization.


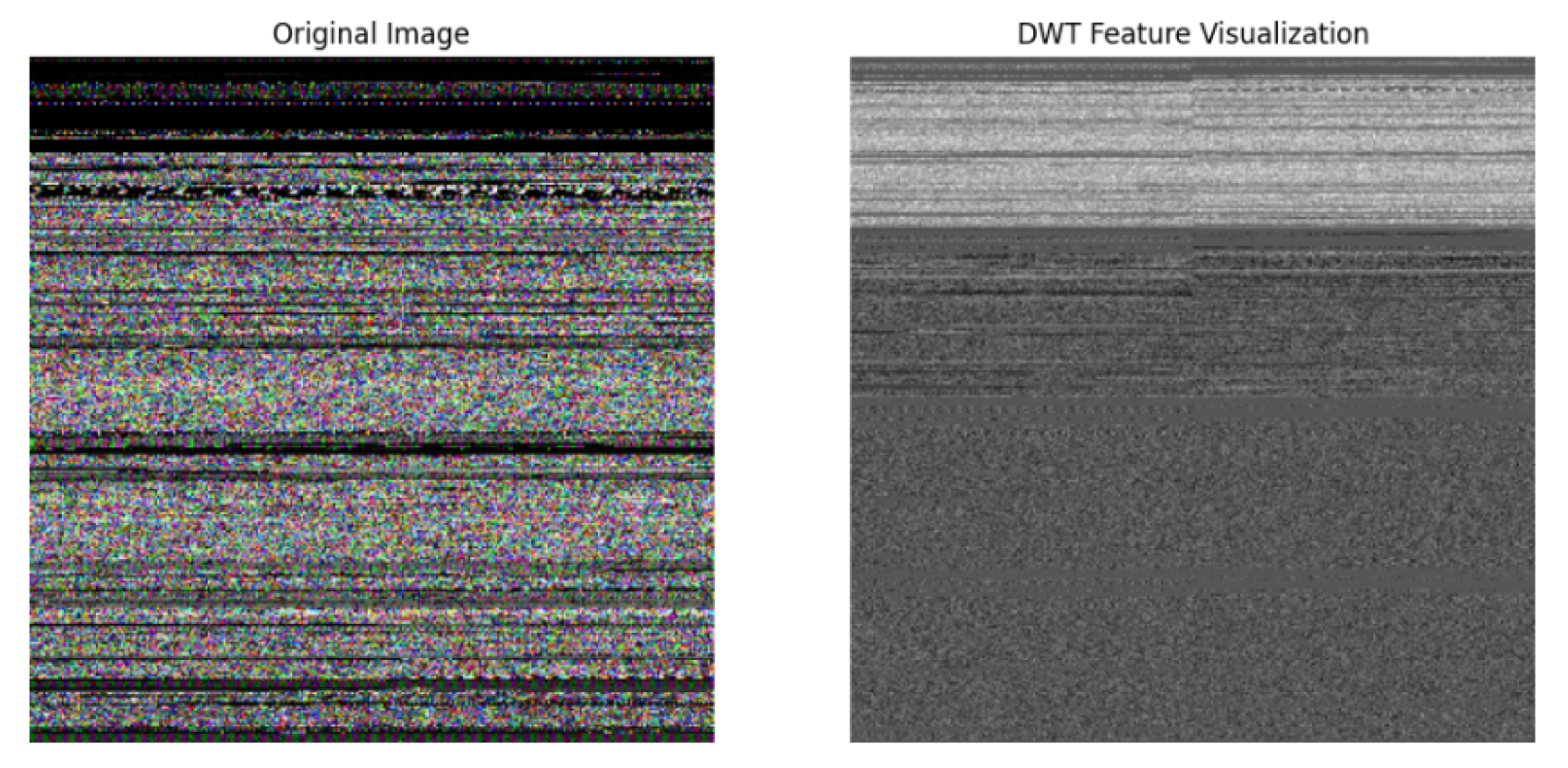
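The GIST steps described above (Gabor filtering at several orientations and scales, spatial pooling, normalization) can be sketched as a simplified, GIST-like descriptor in NumPy. The two wavelengths, four orientations, 4 × 4 pooling grid, 15-pixel kernels, and the use of the rectified real Gabor response are all assumptions for illustration; the original GIST implementation also applies prefiltering and uses different filter parameters, and none of these values is the configuration in Table 1.

```python
import numpy as np

def gabor_kernel(size, wavelength, theta, sigma):
    """Real part of a Gabor filter: a Gaussian-windowed cosine oriented at angle theta."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    x_rot = x * np.cos(theta) + y * np.sin(theta)
    gauss = np.exp(-(x ** 2 + y ** 2) / (2 * sigma ** 2))
    return gauss * np.cos(2 * np.pi * x_rot / wavelength)

def centered_fft(kern, shape):
    """FFT of a kernel placed with its centre at pixel (0, 0) so responses stay aligned."""
    pad = np.zeros(shape)
    k = kern.shape[0]
    pad[:k, :k] = kern
    return np.fft.fft2(np.roll(pad, (-(k // 2), -(k // 2)), axis=(0, 1)))

def gist_like(gray, wavelengths=(4, 8), n_orient=4, grid=4, ksize=15):
    """Gabor responses averaged over a grid x grid layout, then L2-normalized."""
    img = gray.astype(np.float64)
    img = (img - img.mean()) / (img.std() + 1e-8)   # contrast normalization
    F = np.fft.fft2(img)
    feats = []
    for lam in wavelengths:                          # scales
        for k in range(n_orient):                    # orientations
            kern = gabor_kernel(ksize, lam, np.pi * k / n_orient, sigma=0.5 * lam)
            # circular convolution via FFT; keep the rectified real response
            resp = np.abs(np.fft.ifft2(F * centered_fft(kern, img.shape)).real)
            for band in np.array_split(resp, grid, axis=0):
                for cell in np.array_split(band, grid, axis=1):
                    feats.append(cell.mean())        # spatial pooling
    v = np.asarray(feats)
    return v / (np.linalg.norm(v) + 1e-8)

g = np.random.default_rng(1).integers(0, 256, size=(64, 64))
print(gist_like(g).shape)  # (128,)
```

The descriptor length is scales × orientations × grid cells (2 × 4 × 16 = 128 here), independent of the input resolution, which is what makes GIST convenient for comparing images of different sizes.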

Discrete Wavelet Transform (DWT): It breaks the image into distinct frequency subbands, providing information at different scales and orientations. This decomposition offers a multi-scale analysis for feature extraction. Strictly speaking, the DWT is not a global descriptor in the same sense as GLCM or GIST; however, it provides a basis for extracting other features that are useful for image analysis, such as malware identification in memory dump visualizations.

Figure 4 shows a visual representation of DWT features extracted from a malware image belonging to the Adposhel malware family.

Figure 4.

DWT features visualization.
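As an illustration of the subband decomposition, the sketch below implements one level of the orthonormal 2-D Haar DWT directly on 2 × 2 pixel blocks and derives simple subband statistics over two levels. The Haar wavelet, the two levels, and the mean/standard-deviation statistics are assumptions for illustration, not the configuration in Table 1; PyWavelets (pywt.dwt2) is the usual library choice and produces the same subbands up to sign and naming conventions.

```python
import numpy as np

def haar_dwt2(img):
    """One level of the orthonormal 2-D Haar DWT; returns (LL, (LH, HL, HH)).

    Odd-sized inputs are cropped by one pixel so the image tiles into 2 x 2 blocks.
    """
    x = img.astype(np.float64)
    h, w = (x.shape[0] // 2) * 2, (x.shape[1] // 2) * 2
    x = x[:h, :w]
    a, b = x[0::2, 0::2], x[0::2, 1::2]   # top-left, top-right pixel of each block
    c, d = x[1::2, 0::2], x[1::2, 1::2]   # bottom-left, bottom-right pixel
    ll = (a + b + c + d) / 2.0            # approximation (low-pass in both directions)
    lh = (a + b - c - d) / 2.0            # detail: top rows minus bottom rows
    hl = (a - b + c - d) / 2.0            # detail: left columns minus right columns
    hh = (a - b - c + d) / 2.0            # diagonal detail
    return ll, (lh, hl, hh)

def dwt_features(img, levels=2):
    """Mean absolute value and std of each detail subband per level, plus final LL stats."""
    feats, ll = [], img
    for _ in range(levels):
        ll, details = haar_dwt2(ll)       # decompose the approximation again
        for band in details:
            feats.extend([np.abs(band).mean(), band.std()])
    feats.extend([ll.mean(), ll.std()])
    return np.asarray(feats)

r = np.random.default_rng(0).normal(size=(16, 16))
print(dwt_features(r).shape)  # (14,)
```

Because the transform is orthonormal, the total energy of the image equals the summed energy of its four subbands, so the detail statistics measure how much of the image's variation sits at each scale and orientation.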


Local Texture Descriptors

Local texture descriptors provide detailed information on local structure by extracting spatial patterns and textural characteristics within specific regions of the image, giving a more detailed view of the image content than global descriptors. Some descriptors, such as SIFT and SURF, are robust to variations in lighting, scale, and rotation. Although some descriptors are computationally expensive, others, such as LBP, are very efficient. However, because they focus on specific regions, local descriptors may miss information about the broader context of the image, and some of them are sensitive to noise. The following local texture descriptors were used in this work.

Table 2 summarizes the properties and configuration of local feature extraction methods.

Scale-Invariant Feature Transform (SIFT): SIFT excels at identifying and characterizing key points within an image, providing robust features that are invariant to changes in scale and rotation and robust to changes in lighting. SIFT uses a scale-space technique to identify locations with significant changes in intensity or curvature by progressively blurring the image at various scales and analyzing the differences between adjacent scales. After candidate key points are found, SIFT refines them by fitting a model function to the local image information, which ensures that each key point is accurately localized even under slight variations.

Figure 5 shows an example of SIFT key points extracted from a malware image belonging to the Adposhel malware family. Because SIFT yields a variable number of descriptors per image, we used the Bag-of-Visual-Words (BoVW) approach to convert them into a fixed-length representation. The SIFT descriptors extracted from the training images are clustered using the K-Means algorithm to build a visual vocabulary, where each cluster center represents a visual word. Each image is then encoded as a histogram counting the occurrences of these visual words, which effectively captures the distribution of its local patterns.

Figure 5.

SIFT feature visualization.
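The Bag-of-Visual-Words encoding can be sketched as follows. SIFT extraction itself is left to a library (for example, OpenCV's cv2.SIFT_create().detectAndCompute); the random 128-dimensional arrays below only stand in for SIFT descriptors, and the vocabulary size k = 32, the plain Lloyd's k-means, and the L1-normalized histograms are assumptions rather than the paper's settings.

```python
import numpy as np

def kmeans(X, k, iters=20, seed=0):
    """Plain Lloyd's k-means; returns (k, d) cluster centres (the visual words)."""
    rng = np.random.default_rng(seed)
    centres = X[rng.choice(len(X), size=k, replace=False)].astype(np.float64)
    for _ in range(iters):
        dists = ((X[:, None, :] - centres[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)              # assign each descriptor to nearest word
        for j in range(k):
            members = X[labels == j]
            if len(members):                       # keep the old centre if a cluster empties
                centres[j] = members.mean(axis=0)
    return centres

def bovw_histogram(descriptors, centres):
    """Encode one image's variable-length descriptor set as a fixed-length histogram."""
    k = len(centres)
    if len(descriptors) == 0:                      # image with no detected key points
        return np.zeros(k)
    dists = ((descriptors[:, None, :] - centres[None, :, :]) ** 2).sum(axis=2)
    counts = np.bincount(dists.argmin(axis=1), minlength=k).astype(np.float64)
    return counts / counts.sum()                   # L1-normalize

rng = np.random.default_rng(0)
train_desc = rng.normal(size=(500, 128))           # stand-in for pooled training descriptors
vocab = kmeans(train_desc, k=32)
hist = bovw_histogram(rng.normal(size=(37, 128)), vocab)  # one image with 37 key points
print(hist.shape)  # (32,)
```

Whatever the number of key points in an image, the histogram always has k entries, which is what lets SIFT features be fed to the same classifiers as the fixed-length descriptors.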


ORB (Oriented FAST and Rotated BRIEF) Descriptor: ORB uses the FAST algorithm to detect key points. FAST is very efficient, making it suitable for real-time tasks. It finds key points by comparing the intensity of a pixel with the intensities of surrounding pixels arranged in a circle, and ORB then computes the orientation of each key point using the intensity centroid method. ORB is more efficient than SIFT and is designed to be robust to changes in scale and rotation, making it reliable across different views of an image.

Figure 6 shows an example of ORB key points visualization extracted from a malware image belonging to the Adposhel malware family.

Figure 6.

ORB features visualization.


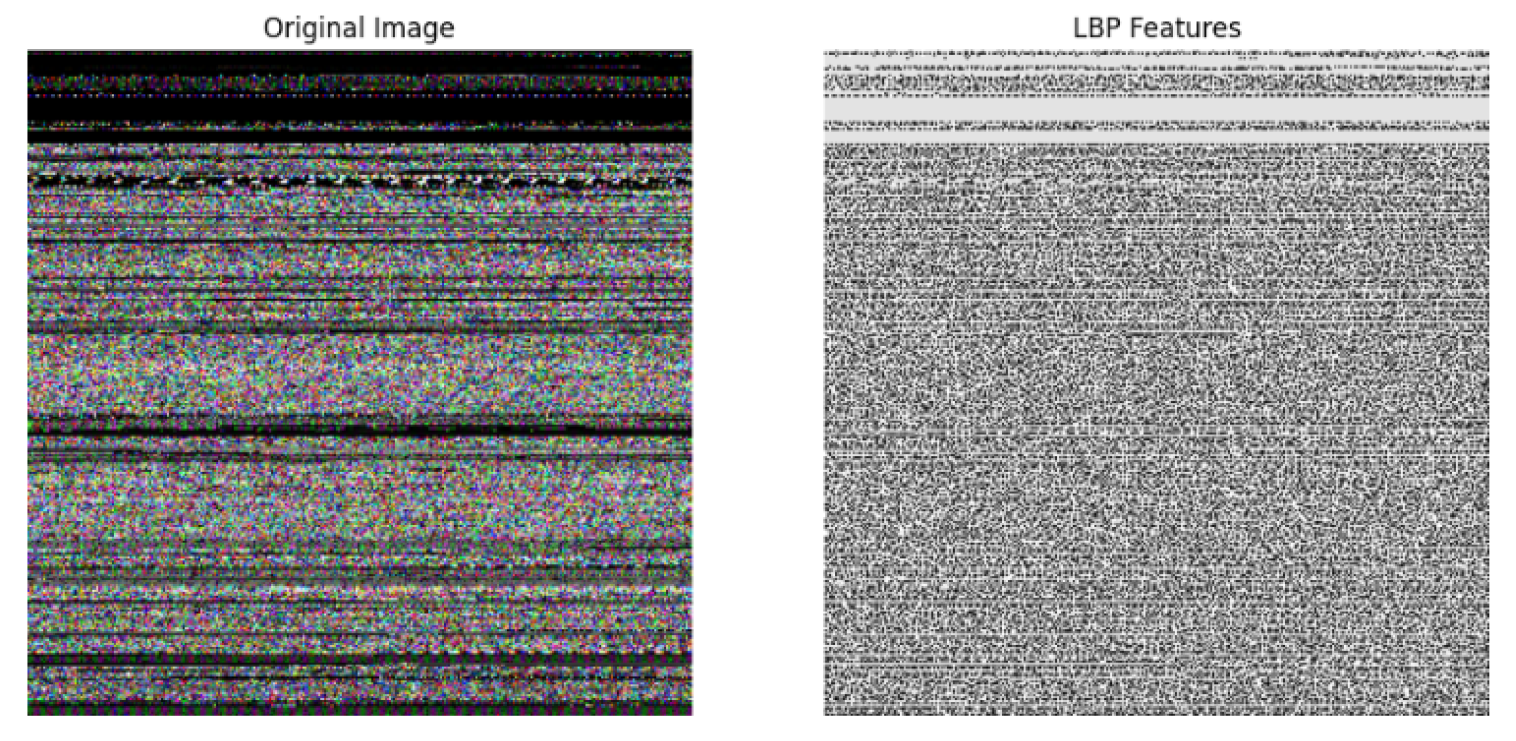
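The intensity centroid step that gives ORB key points their orientation can be illustrated in NumPy: the angle of the vector from the patch centre to its intensity-weighted centroid, θ = atan2(m01, m10). This sketch uses a square patch for simplicity, whereas ORB restricts the moments to a circular region; FAST detection and the rotated BRIEF descriptor are left to a library such as OpenCV's cv2.ORB_create.

```python
import numpy as np

def intensity_centroid_angle(patch):
    """Orientation of a key-point patch: theta = atan2(m01, m10), measured in radians
    with x pointing right and y pointing down (image coordinates)."""
    p = patch.astype(np.float64)
    h, w = p.shape
    y, x = np.mgrid[:h, :w]
    x = x - (w - 1) / 2.0          # coordinates relative to the patch centre
    y = y - (h - 1) / 2.0
    m10 = np.sum(x * p)            # first-order moment along x
    m01 = np.sum(y * p)            # first-order moment along y
    return np.arctan2(m01, m10)

right = np.zeros((31, 31))
right[:, 16:] = 255                # brighter on the right -> angle close to 0
bottom = np.zeros((31, 31))
bottom[16:, :] = 255               # brighter at the bottom -> angle close to pi/2
print(intensity_centroid_angle(right), intensity_centroid_angle(bottom))
```

Rotating the patch by this angle before computing the binary BRIEF tests is what makes the resulting ORB descriptor approximately rotation invariant.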

Local Binary Patterns (LBP): LBP is a straightforward approach that captures the spatial patterns of pixel intensities within a small neighborhood around a central pixel. It compares the intensity of the central pixel with that of each neighbor in a predefined neighborhood. If a neighbor’s intensity is greater than or equal to the central pixel’s, the corresponding bit in a binary string is set to 1; otherwise, it is set to 0. Concatenating these bits yields a single descriptor value (the LBP code) for the central pixel; with eight neighbors, this code ranges from 0 to 255.

Figure 7 shows an example of LBP features visualization extracted from a malware image belonging to the Adposhel malware family.

Figure 7.

LBP features visualization.
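The basic LBP operator described above can be sketched in NumPy for a 3 × 3 neighbourhood (radius 1, eight neighbours), followed by the 256-bin code histogram typically used as the feature vector. The clockwise neighbour ordering, which fixes the bit positions, is an arbitrary but consistent choice here; scikit-image's local_binary_pattern also offers rotation-invariant and uniform variants, and the settings actually used are listed in Table 2.

```python
import numpy as np

# (row, col) offsets of the 8 neighbours, clockwise from the top-left pixel;
# neighbour i sets bit i of the code.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]

def lbp_image(gray):
    """Basic 3x3 LBP: bit i is 1 if neighbour i >= centre pixel (border pixels skipped)."""
    g = gray.astype(np.int32)
    h, w = g.shape
    centre = g[1:h - 1, 1:w - 1]
    codes = np.zeros_like(centre)
    for bit, (dr, dc) in enumerate(OFFSETS):
        neighbour = g[1 + dr:h - 1 + dr, 1 + dc:w - 1 + dc]
        codes |= (neighbour >= centre).astype(np.int32) << bit
    return codes.astype(np.uint8)

def lbp_histogram(gray):
    """256-bin normalized histogram of LBP codes, used as the texture feature vector."""
    hist = np.bincount(lbp_image(gray).ravel(), minlength=256).astype(np.float64)
    return hist / hist.sum()

spot = np.zeros((3, 3))
spot[1, 1] = 5                     # centre pixel
spot[1, 2] = 9                     # only the right neighbour (bit 3) is >= centre
print(lbp_image(spot))  # [[8]]
```

Flat regions map to code 255 (all neighbours equal the centre), while edges and corners produce characteristic codes, so the histogram summarizes how often each micro-pattern occurs in the memory image.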


Histogram of Oriented Gradients (HOG): HOG captures the distribution of oriented gradients within specific image regions. It divides the image into a rectangular grid of cells and calculates the gradient direction and magnitude for each pixel in those cells, which reveals the edges and shapes of the local area. For each cell, a histogram then records how these gradients are distributed over a set of orientation bins (for example, horizontal, vertical, and diagonal directions). Neighboring cells are then grouped into blocks, and their histograms are normalized. Finally, the normalized block histograms are concatenated into the overall feature vector.

Figure 8 shows an example of HOG features visualization extracted from a malware image belonging to the Adposhel malware family.

Figure 8.

HOG features visualization.
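The HOG steps can be condensed into the following NumPy sketch. It uses 8 × 8-pixel cells, nine unsigned orientation bins, and 2 × 2-cell blocks with L2 normalization; these are common defaults but are assumptions here (Table 2 lists the configuration actually used). Unlike skimage.feature.hog, this sketch assigns each gradient to a single bin without interpolation.

```python
import numpy as np

def hog_descriptor(gray, cell=8, bins=9, block=2, eps=1e-6):
    """Simplified HOG: unsigned gradient orientations, magnitude-weighted cell
    histograms, and L2-normalized overlapping blocks of block x block cells."""
    g = gray.astype(np.float64)
    gx = np.zeros_like(g)
    gy = np.zeros_like(g)
    gx[:, 1:-1] = g[:, 2:] - g[:, :-2]              # centred differences [-1, 0, 1]
    gy[1:-1, :] = g[2:, :] - g[:-2, :]
    mag = np.hypot(gx, gy)
    ang = np.rad2deg(np.arctan2(gy, gx)) % 180.0    # unsigned orientation in [0, 180)
    bin_idx = np.minimum((ang // (180.0 / bins)).astype(int), bins - 1)
    n_cy, n_cx = g.shape[0] // cell, g.shape[1] // cell
    hist = np.zeros((n_cy, n_cx, bins))
    for cy in range(n_cy):
        for cx in range(n_cx):
            sl = (slice(cy * cell, (cy + 1) * cell), slice(cx * cell, (cx + 1) * cell))
            hist[cy, cx] = np.bincount(bin_idx[sl].ravel(), weights=mag[sl].ravel(),
                                       minlength=bins)
    feats = []
    for by in range(n_cy - block + 1):              # overlapping blocks, stride one cell
        for bx in range(n_cx - block + 1):
            v = hist[by:by + block, bx:bx + block].ravel()
            feats.append(v / np.sqrt(np.sum(v ** 2) + eps ** 2))
    return np.concatenate(feats)

rng = np.random.default_rng(0)
f = hog_descriptor(rng.integers(0, 256, (64, 64)))
print(f.shape)  # (1764,)
```

For a 64 × 64 image this gives 7 × 7 blocks of 4 cells × 9 bins, that is, 1764 values, which illustrates how quickly HOG vectors grow with resolution and why smaller images reduce computational cost.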


3.2. Machine Learning Classifiers and Performance Evaluation Metrics

The classification performance in memory forensics malware detection is critical for accurately distinguishing between benign and malicious software and correctly classifying malware into its respective family. In this work, we evaluated several machine learning algorithms to identify the most effective algorithm for this task. The models considered in this work are Support Vector Machine (SVM), Random Forest (RF), Decision Tree (DT), Naïve Bayes, K-Nearest Neighbors (KNN), and Logistic Regression.

No separate validation set was used for hyperparameter tuning. To ensure consistency and avoid bias, default hyperparameters commonly adopted in related studies were applied for traditional classifiers such as SVM and Random Forest. This setup emphasizes the comparative impact of the proposed LIME-based preprocessing rather than fine-tuning model configurations.

To evaluate the proposed approach, we used a variety of evaluation metrics to provide a comprehensive assessment of the classification models. While accuracy is a common measure, it does not fully capture a model’s performance, especially when datasets are imbalanced. Therefore, in addition to accuracy, we compute precision, recall, and F1 score. These metrics, derived from the confusion matrix presented in Figure 9, offer a more nuanced evaluation by considering both the quality of positive predictions and the balance between precision and recall. This ensures that the effectiveness of our model is evaluated comprehensively, not by accuracy alone.
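For a multi-class problem such as malware family classification, the metrics can be computed per class from the confusion matrix in a one-vs-rest fashion, with precision = TP/(TP + FP), recall = TP/(TP + FN), and F1 as their harmonic mean, and then averaged. Whether macro, weighted, or micro averaging is used is not specified here, so the macro averaging in this plain-Python sketch is an assumption; the small two-class matrix is a toy example.

```python
def classification_metrics(cm):
    """cm[i][j] = number of samples of true class i predicted as class j.

    Returns (accuracy, per-class [(precision, recall, f1)], macro-averaged tuple).
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(n))
    per_class = []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp   # predicted k, actually another class
        fn = sum(cm[k]) - tp                        # actually k, predicted another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        per_class.append((precision, recall, f1))
    macro = tuple(sum(m[i] for m in per_class) / n for i in range(3))
    return correct / total, per_class, macro

cm = [[50, 10],
      [5, 35]]
acc, per_class, macro = classification_metrics(cm)
print(acc)  # 0.85
```

In this toy matrix the accuracy of 0.85 hides the fact that the second class has noticeably lower precision (about 0.78) than the first (about 0.91), which is exactly the kind of imbalance the additional metrics are meant to expose.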

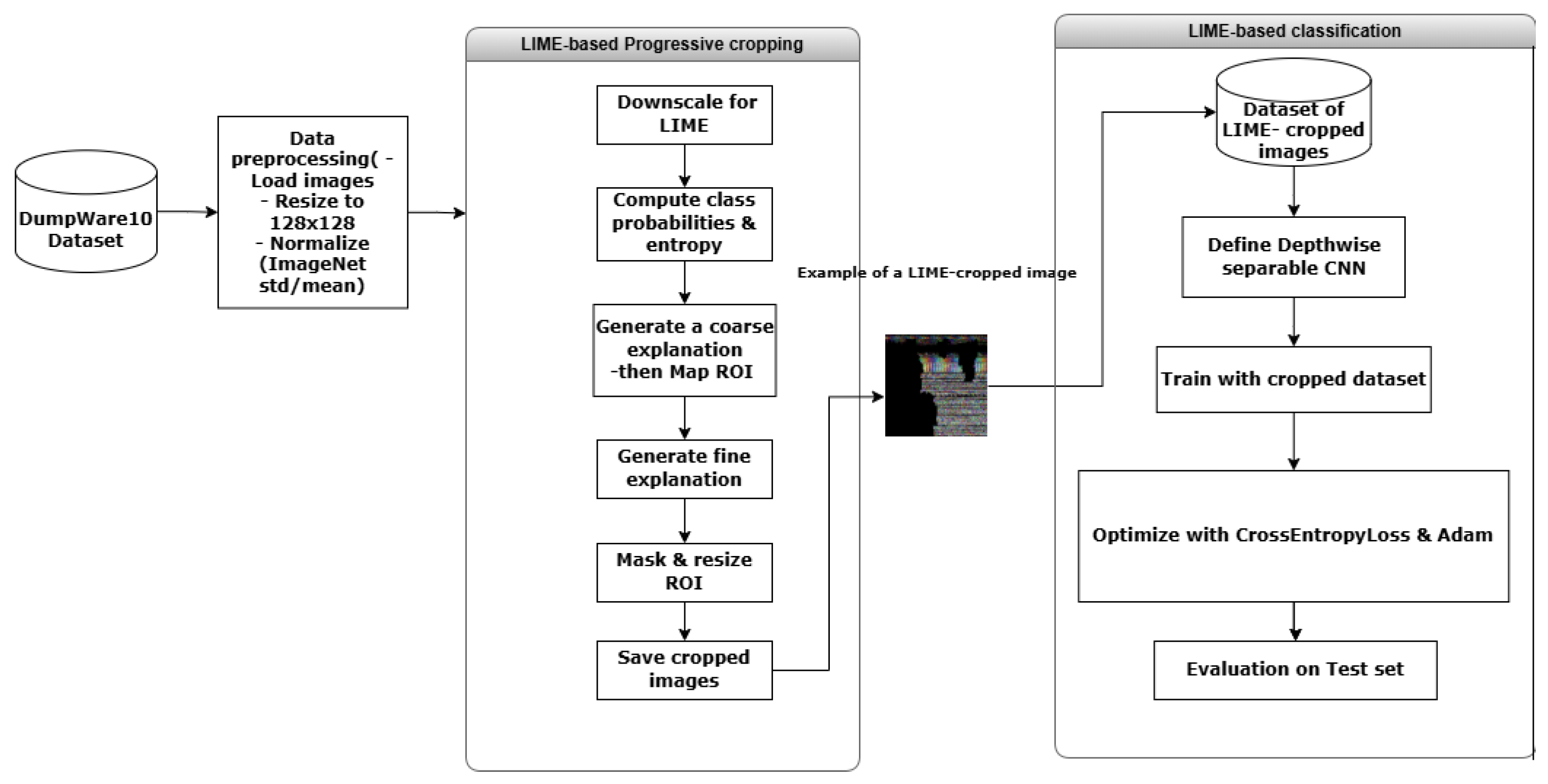

3.3. LIME-Based Detection

Local Interpretable Model-Agnostic Explanations (LIME) is an explainable AI technique that explains the predictions of any classifier in a local, interpretable, and model-agnostic manner. These properties allow the method to explain the model’s prediction for each image separately, in a human-understandable way, for complex machine learning algorithms, without depending on their internal workings. LIME estimates the importance of image regions by generating perturbed samples of the same instance and observing the model’s predictions on those samples. In this paper, LIME is applied to identify the most informative regions of each image, and the images are cropped based on the identified regions. The cropped images are then fed to a new CNN for evaluation. The following steps describe the main phases of the proposed LIME-based malware classification, as illustrated in Figure 10.

3.3.1. Model Selection and Preprocessing

In this study, two pretrained models, ResNet50 and MobileNet, are used separately to identify the most informative features with the LIME method for the Dumpware10 dataset in RGB format, allowing the results to be compared. These models were selected for their effectiveness in image classification, having been trained on the large and diverse ImageNet dataset. Moreover, to evaluate the LIME-based method fairly, the two models were chosen to represent opposite ends of the accuracy-efficiency trade-off. ResNet50 has a deep residual architecture, which makes it highly reliable for extracting deep and complex visual features, whereas MobileNet is designed for computational efficiency and suits lightweight environments owing to its depthwise separable convolutions. Both pretrained models were set to evaluation mode to produce consistent and reliable results; this ensures that batch normalization layers use the learned population statistics and that stochastic training components such as dropout are disabled. Each image in the Dumpware10 dataset is preprocessed so that its format and distribution match the data the model was trained on, which supports accurate inference. The preprocessing normalizes pixel values using ImageNet statistics and converts the images into tensors compatible with the model. ResNet50 and MobileNet then separately process the preprocessed images to generate the predictions on which the LIME-based feature identification is based.
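A minimal sketch of the preprocessing step, assuming torchvision-style ImageNet normalization (per-channel mean 0.485, 0.456, 0.406 and standard deviation 0.229, 0.224, 0.225) applied to an RGB image already resized to the model's input size. It uses NumPy only; the function name `preprocess` is hypothetical. In a PyTorch pipeline, the resulting array would typically be wrapped with `torch.from_numpy` and passed to a model on which `model.eval()` has been called.

```python
import numpy as np

# Standard ImageNet channel statistics (RGB order), as used by torchvision-style pretrained models.
IMAGENET_MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
IMAGENET_STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def preprocess(image_uint8):
    """Convert an H x W x 3 uint8 RGB image into a 1 x 3 x H x W float32 array."""
    x = image_uint8.astype(np.float32) / 255.0   # scale pixel values to [0, 1]
    x = (x - IMAGENET_MEAN) / IMAGENET_STD        # per-channel normalization
    x = np.transpose(x, (2, 0, 1))                # HWC -> CHW (channels first)
    return x[np.newaxis, ...]                     # add a batch dimension
```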

3.3.2. Entropy-Based Sampling Strategy

To reduce computational cost while maintaining the quality of LIME explanations, the number of perturbed samples in this study is generated dynamically based on the entropy of the model's predictions. The entropy value serves as an indicator of the model's confidence: high entropy indicates low confidence and therefore demands more perturbation samples, whereas low entropy indicates high confidence and requires fewer perturbations. Let $p = (p_1, p_2, \ldots, p_C)$ be the model's output probabilities for an image, where $p_i$ is the softmax probability of class $i$ and $C$ is the total number of classes. The Shannon entropy $H(p)$ is defined as:

$$H(p) = -\sum_{i=1}^{C} p_i \log\left(p_i + \epsilon\right),$$

where $\epsilon$ is a small constant added to prevent numerical instability.

The number of perturbation samples $N_s$ is then calculated dynamically as:

$$N_s = N_{\min} + \frac{H(p)}{H_{\max}} \left(N_{\max} - N_{\min}\right),$$

where $H_{\max} = \log C$ is the maximum possible entropy, and $N_{\min}$ and $N_{\max}$ denote the lower and upper sampling limits (set to 20 and 150, respectively). This adaptive approach reduces redundant perturbations when the model is highly confident.
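The entropy-based sample budget can be sketched in a few lines of Python. This sketch assumes natural logarithms, a stabilizing constant of 1e-12, a linear interpolation between the lower limit (20) and upper limit (150) according to entropy normalized by its maximum, and rounding to the nearest integer; the function names are illustrative.

```python
import math


def shannon_entropy(probs, eps=1e-12):
    """H(p) = -sum_i p_i * log(p_i + eps); eps guards against log(0)."""
    return -sum(p * math.log(p + eps) for p in probs)


def num_lime_samples(probs, n_min=20, n_max=150):
    """Scale the LIME sample budget linearly with normalized entropy."""
    h_max = math.log(len(probs))  # entropy of the uniform distribution over C classes
    ratio = min(max(shannon_entropy(probs) / h_max, 0.0), 1.0)  # clip rounding effects
    return int(round(n_min + ratio * (n_max - n_min)))
```

For example, a confident prediction such as `[1.0, 0.0, 0.0, 0.0]` receives the minimum of 20 samples, whereas a uniform prediction over four classes receives 150.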

3.3.3. Two-Stage Segmentation

To accurately and efficiently identify the most informative regions in each image, we apply a two-stage progressive process consisting of coarse and fine segmentation steps. This approach balances computational cost and interpretability and gives more accurate region localization than a single-stage approach.

Coarse Segmentation:

In this step, the images, resized to 128 × 128, are segmented into $K_c$ coarse superpixels using the Simple Linear Iterative Clustering (SLIC) method. Dividing the image into broad regions makes the explanation easier and faster to compute and captures general high-level features. LIME then perturbs these coarse superpixels and generates an interpretable explanation from a limited number of samples (up to 50, depending on the entropy value). The resulting mask highlights the broad regions that most strongly influence the model's prediction. Formally, the image is represented as

$$x = \{s_1, s_2, \ldots, s_{K_c}\},$$

where each $s_j$ is a coarse superpixel.

LIME perturbs combinations of these superpixels and fits a local linear model $g$ that approximates the classifier $f$ in the neighborhood of the image:

$$g(z') = w_0 + \sum_{j=1}^{K_c} w_j z'_j,$$

where $w_j$ is the importance weight of segment $s_j$ and $z'_j \in \{0, 1\}$ indicates the presence (1) or masking (0) of superpixel $s_j$.

The region of interest (ROI) $\mathcal{R}$, which contains the broad image regions most responsible for the model's decision, is defined by the top $k$ segments with the largest $w_j$ values:

$$\mathcal{R} = \bigcup_{j \in \operatorname{Top}_k(w)} s_j.$$

The identified ROI is then mapped back to the original image size for the second-stage refinement.
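The coarse stage can be illustrated with a simplified, NumPy-only sketch of LIME on superpixels. To stay self-contained, it assumes a single-channel image, replaces SLIC with a regular grid of segments (`grid_segments` is a hypothetical stand-in; scikit-image's `slic` would be used in practice), masks a superpixel by setting it to zero, and fits the weighted linear surrogate by weighted least squares with an exponential kernel on the fraction of masked segments, rather than the ridge regression and cosine distance used by the reference `lime` package. `predict_fn` is any function returning the classifier's probability for the class being explained.

```python
import numpy as np


def grid_segments(h, w, n_rows, n_cols):
    """Stand-in for SLIC: label pixels by a regular n_rows x n_cols grid of cells."""
    rows = np.minimum(np.arange(h) * n_rows // h, n_rows - 1)
    cols = np.minimum(np.arange(w) * n_cols // w, n_cols - 1)
    return rows[:, None] * n_cols + cols[None, :]


def lime_weights(image, segments, predict_fn, n_samples, kernel_width=0.25, seed=0):
    """Fit g(z') = w0 + sum_j w_j z'_j on random superpixel masks (LIME-style)."""
    rng = np.random.default_rng(seed)
    n_seg = segments.max() + 1
    z = rng.integers(0, 2, size=(n_samples, n_seg))
    z[0] = 1                                    # include the unperturbed image
    preds = np.empty(n_samples)
    for i, mask in enumerate(z):
        perturbed = image * mask[segments]      # masked superpixels are set to 0
        preds[i] = predict_fn(perturbed)
    # Proximity kernel: samples with fewer masked segments get larger weights.
    dist = 1.0 - z.mean(axis=1)
    pi = np.exp(-(dist ** 2) / kernel_width ** 2)
    X = np.hstack([np.ones((n_samples, 1)), z])  # intercept column + indicators
    sw = np.sqrt(pi)[:, None]
    coef, *_ = np.linalg.lstsq(X * sw, preds * sw[:, 0], rcond=None)
    return coef[1:]                              # drop the intercept w0


def top_k_roi(segments, weights, k):
    """Boolean mask of the union of the k superpixels with the largest weights."""
    top = np.argsort(weights)[::-1][:k]
    return np.isin(segments, top)
```

With a toy `predict_fn` that depends only on the top-left quadrant of an 8 × 8 image split into a 2 × 2 grid, the largest weight falls on that quadrant's segment, and `top_k_roi(..., k=1)` returns exactly that region.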

Fine Segmentation:

The ROI identified in the coarse stage is extracted from the original image, and a second SLIC segmentation is applied with a larger number of superpixels ($K_f = 100$). This finer segmentation isolates more specific important regions. LIME is applied again with the entropy-based sampling procedure to produce a refined explanation mask that captures the critical subregions, and the masked region is mapped back to its original image size to preserve spatial detail. The ROI retrieved from the coarse stage is represented as

$$\mathcal{R} = \{\tilde{s}_1, \tilde{s}_2, \ldots, \tilde{s}_{K_f}\},$$

where each $\tilde{s}_m$ is a fine superpixel. A new LIME-based explanation $\tilde{g}$ is then fitted to approximate the classifier $f$:

$$\tilde{g}(\tilde{z}) = \tilde{w}_0 + \sum_{m=1}^{K_f} \tilde{w}_m \tilde{z}_m,$$

where $\tilde{w}_m$ is the importance weight of fine segment $\tilde{s}_m$ and $\tilde{z}_m \in \{0, 1\}$ specifies whether fine superpixel $\tilde{s}_m$ is visible (1) or masked (0).

The result is a fine-grained explanation mask that emphasizes the most significant subregions within $\mathcal{R}$. The final cropped region used for model training and classification is then extracted by applying this mask to the original image.

The final cropped images, which contain the most important visual regions, are resized to 100 × 100. Algorithm 1 summarizes the complete process, from image preprocessing and entropy-based sample adjustment through coarse and fine segmentation to final image cropping. The entire process is parallelized for efficient processing.

Algorithm 1: Two-stage progressive LIME-based cropping with entropy-based sampling.
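The final cropping step can be sketched as follows, assuming a single-channel image and a boolean explanation mask already mapped to the original image size. In this sketch, pixels outside the mask are zeroed, the image is cropped to the mask's bounding box, and the crop is resized to 100 × 100 with nearest-neighbour sampling; zeroing, bounding-box cropping, and the resize method are illustrative assumptions, and `crop_and_resize` is a hypothetical name.

```python
import numpy as np


def crop_and_resize(image, mask, out_size=100):
    """Zero pixels outside the mask, crop to its bounding box, resize to out_size x out_size."""
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        region = image                            # empty mask: fall back to the full image
    else:
        masked = np.where(mask, image, 0)         # keep only the explained pixels
        region = masked[ys.min():ys.max() + 1, xs.min():xs.max() + 1]
    h, w = region.shape[:2]
    # Nearest-neighbour sampling: map each output row/column to a source index.
    rows = np.arange(out_size) * h // out_size
    cols = np.arange(out_size) * w // out_size
    return region[rows[:, None], cols[None, :]]
```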

3.3.4. Evaluation of LIME-Based Cropped Images

The evaluation model implemented in this study is a lightweight convolutional neural network that is trained and tested on the LIME-based cropped images. To improve efficiency and reduce computational effort by reducing the number of parameters while maintaining effective feature extraction, the network uses depthwise separable convolutions, which split a standard convolution into a depthwise convolution and a pointwise convolution. The architecture consists of four depthwise separable convolution layers, followed by batch normalization and ReLU activation. The number of feature channels increases progressively while the spatial dimensions are downsampled. Finally, an average pooling layer summarizes the features into a fixed-size vector, which is fed into a fully connected layer that outputs class probabilities.
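The parameter savings that motivate depthwise separable convolutions can be checked with simple arithmetic. The sketch below counts weights (ignoring biases and batch-normalization parameters) for a standard k × k convolution and for its depthwise-plus-pointwise replacement; the channel widths are hypothetical, since the exact widths of the four layers are not stated here.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights of a standard k x k convolution (biases ignored)."""
    return k * k * c_in * c_out


def separable_conv_params(k, c_in, c_out):
    """Weights of a depthwise k x k convolution followed by a 1 x 1 pointwise convolution."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


# Hypothetical channel widths for four separable layers (not taken from the paper).
channels = [3, 32, 64, 128, 256]
k = 3
std_total = sum(standard_conv_params(k, a, b) for a, b in zip(channels, channels[1:]))
sep_total = sum(separable_conv_params(k, a, b) for a, b in zip(channels, channels[1:]))
```

For these hypothetical widths, the separable layers need 45,147 weights instead of 387,936, roughly 12% of the standard count; per layer, the ratio is approximately 1/c_out + 1/k².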

4. Results and Discussion

The experiments are divided into two parts. First, a comparative analysis was performed using local descriptors (HOG, LBP, SIFT, and ORB) and global descriptors (DWT, GIST, and GLCM), individually and in combination, at different image resolutions. The goal is to identify the best descriptor or combination, the optimal resolution, and the most effective classifier for malware detection based on texture-based memory forensics. Features extracted by each descriptor (e.g., HOG, SIFT, GLCM) were first standardized individually using z-score normalization and then concatenated into a single composite feature vector, which ensures scale consistency and a fair contribution of each descriptor to the classification. Second, the LIME-based malware classification is applied to assess how effectively this explainable approach identifies influential regions in malware and benign images.
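A minimal NumPy sketch of the per-descriptor standardization and concatenation described above. Each descriptor block is an (n_samples × n_features) array; the small constant added to the standard deviation, which guards against constant features, and the function name `zscore_concat` are assumptions.

```python
import numpy as np


def zscore_concat(*descriptor_blocks, eps=1e-8):
    """Standardize each descriptor block column-wise, then concatenate horizontally."""
    standardized = []
    for block in descriptor_blocks:
        block = np.asarray(block, dtype=np.float64)
        mean = block.mean(axis=0)
        std = block.std(axis=0)
        standardized.append((block - mean) / (std + eps))  # z-score per feature column
    return np.hstack(standardized)
```

The sketch computes statistics over whatever matrix it receives; in a train/test setting, one would typically compute the mean and standard deviation on the training split only and reuse them for the test split to avoid information leakage.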

All experiments were conducted on a consistent hardware setup: Intel Core i7-10750H CPU, 16 GB RAM, NVIDIA RTX 2060 GPU, SSD storage, with batch size = 32 and parallel data loading enabled. Reported throughput values were averaged over five independent runs, with less than ±3% variance.

In this section, the tables report all results, while the figures highlight only the best results to identify which combination performs best. That is, the figures show the best result achieved by each texture descriptor at each resolution, regardless of the classifier used, because the decision boundaries of each classifier vary with the features used for classification. Moreover, the proposed approach uses LIME to identify and highlight the most important regions used for classification, and we investigate whether integrating LIME improves classification performance by focusing only on the key features it identifies. In addition, experiments were conducted to assess the effect on accuracy of resizing images based on these identified regions. This section consists of five subsections: local descriptor results, global descriptor results, results of combining local and global descriptors, results of integrating Local Interpretable Model-agnostic Explanations (LIME), and the time efficiency of the LIME approach.

4.1. Local Descriptors Results

4.1.1. Multi-Class Classification Weighted Results Using Local Feature Descriptors and 64 × 64 Image Resolution

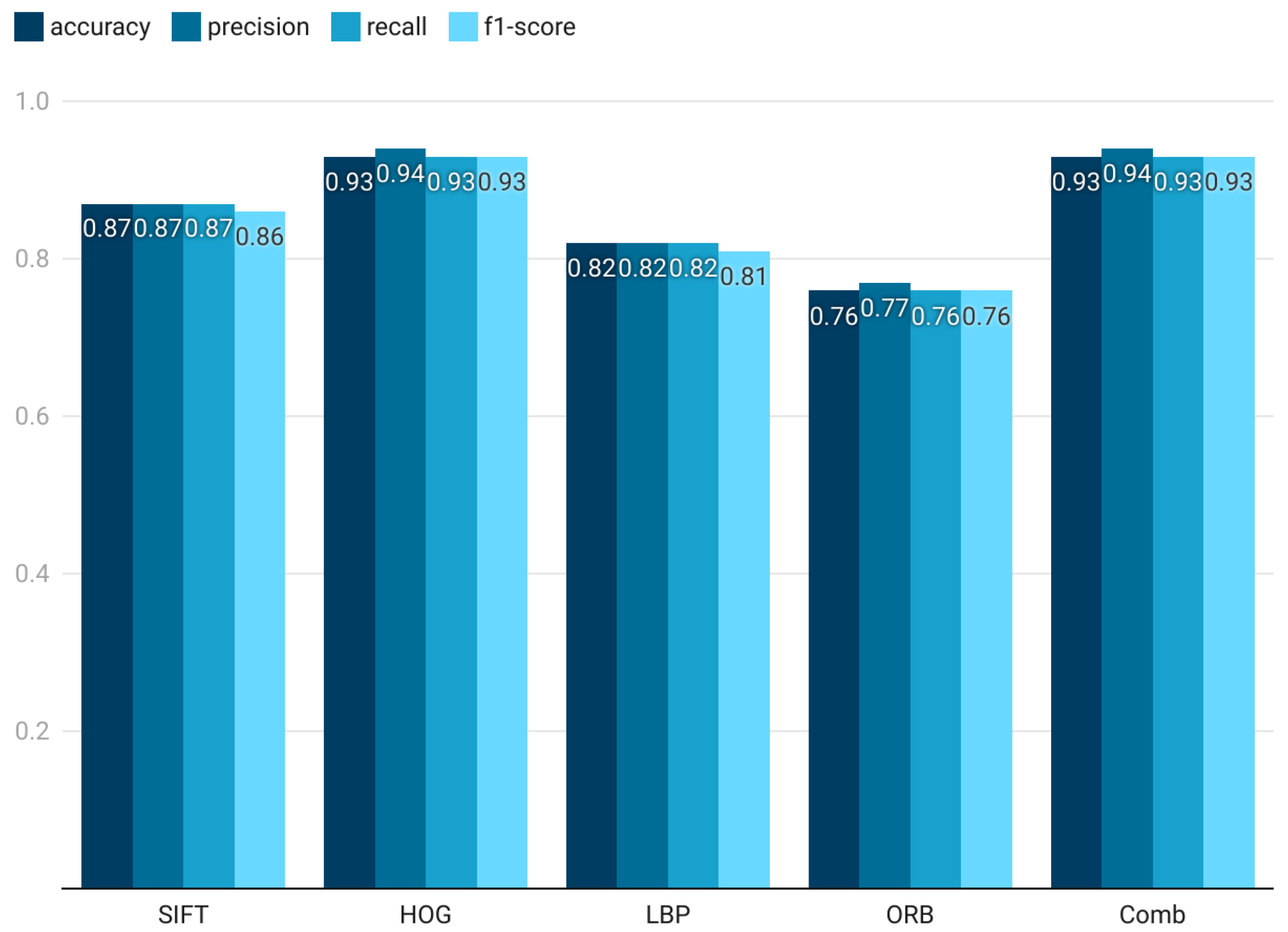

Table 3 summarizes the accuracy, precision, recall, and F1-score achieved by different machine learning classifiers for image-based memory forensics tasks using local feature descriptors extracted from 64 × 64 images, and Figure 11 shows the best result achieved for each descriptor and classifier. The HOG and SIFT descriptors, used with SVM classifiers, outperform the other descriptors, achieving F1-scores of 74% and 70%, respectively. This indicates that SIFT and HOG capture distinctive features for memory forensics classification even at low resolution, likely because they encode edge gradients and essential spatial information. In contrast, LBP and ORB performed worse across all classifiers, which reflects their limited ability to distinguish malware families from each other and from benign programs. LBP achieved a maximum accuracy of 61% with RF, while ORB performed considerably worse, with an F1-score of only 22%. This performance gap underscores the importance of choosing feature descriptors that capture the subtleties of memory forensics images, particularly when computational constraints limit the resolution to 64 × 64. Combining the descriptors raised the SVM F1-score to 80%, the highest of all combinations and 6 percentage points above the best individual descriptor (HOG). The combined approach provides a more comprehensive feature representation and improves classification performance despite the low resolution. Overall, these results show the importance of selecting robust feature descriptors such as HOG and SIFT for low-resolution image analysis in memory forensics, balancing computational efficiency with classification performance.

4.1.2. Multi-Class Classification Weighted Results Using Local Feature Descriptors and 100 × 100 Image Resolution

Table 4 summarizes the accuracy, precision, recall, and F1-score achieved by the machine learning classifiers for image-based memory forensics tasks. Local feature descriptors were extracted from images of 100 × 100 resolution to train the classifiers.

Figure 12 presents the best performance results obtained for each texture descriptor. Among them, the HOG descriptor combined with the SVM classifier achieved the highest performance, reaching an F1-score of 91%. This result highlights the ability of HOG (and, to some extent, ORB) to take advantage of higher image resolutions by capturing finer details that improve multi-class classification performance. In particular, HOG is consistently effective at resolutions above 64 × 64, where richer spatial information becomes available.

The ORB descriptor also showed noticeable improvement at higher resolutions, although it did not reach the same performance level as HOG. This indicates that ORB benefits from increased image detail but remains less discriminative than HOG in complex classification scenarios. By contrast, SIFT and LBP achieved relatively stable results across both 64 × 64 and 100 × 100 resolutions, suggesting that these descriptors are less sensitive to additional spatial detail.

When multiple descriptors were combined, the overall classification performance improved, particularly when using an SVM classifier, which achieved an F1-score of 92%. This finding emphasizes the advantage of integrating multiple feature descriptors to form a richer and more comprehensive feature representation, ultimately enhancing classification performance at higher resolutions.

4.1.3. Multi-Class Classification Weighted Results Using Local Feature Descriptors and 200 × 200 Image Resolution

Table 5 summarizes the results of malware classification in memory forensics using 200 × 200 images, while Figure 13 illustrates the best performance achieved by each classifier across the different feature descriptors. Overall, performance improves clearly at 200 × 200 resolution for all descriptors. The figure particularly highlights the strength of the HOG descriptor, which achieved an F1-score of 93%, demonstrating its ability to capture spatial structure and edge-gradient information.

Similarly, the combined feature approach produced excellent results, with both SVM and Logistic Regression classifiers reaching an F1-score of 93%. Its strong performance across resolutions suggests that integrating multiple descriptors improves classification accuracy by providing a richer and more diverse feature representation.

These findings indicate that SVM and Logistic Regression consistently deliver the highest performance when paired with the HOG and combined feature sets, underscoring their effectiveness in analyzing high-resolution images for malware classification in memory forensics tasks.

4.1.4. Multi-Class Classification Weighted Results Using Local Feature Descriptors on Original Image Resolution

Table 6 summarizes the results of memory forensics malware classification on images with original resolutions.

Figure 14 illustrates the best results achieved by the classifiers for each feature descriptor. Using the original image size of 300 × 300 pixels slightly improves or maintains performance compared with 200 × 200 pixels, with one exception: the F1-score of the SIFT descriptor decreased from 90% at 200 × 200 to 86% at 300 × 300. This reduction is potentially due to the increased complexity and noise in larger images, which can negatively affect feature extraction and classification performance.

4.2. Global Descriptors Results

4.2.1. Multi-Class Classification Weighted Results Using Global Features Descriptors and 64 × 64 Image Resolution

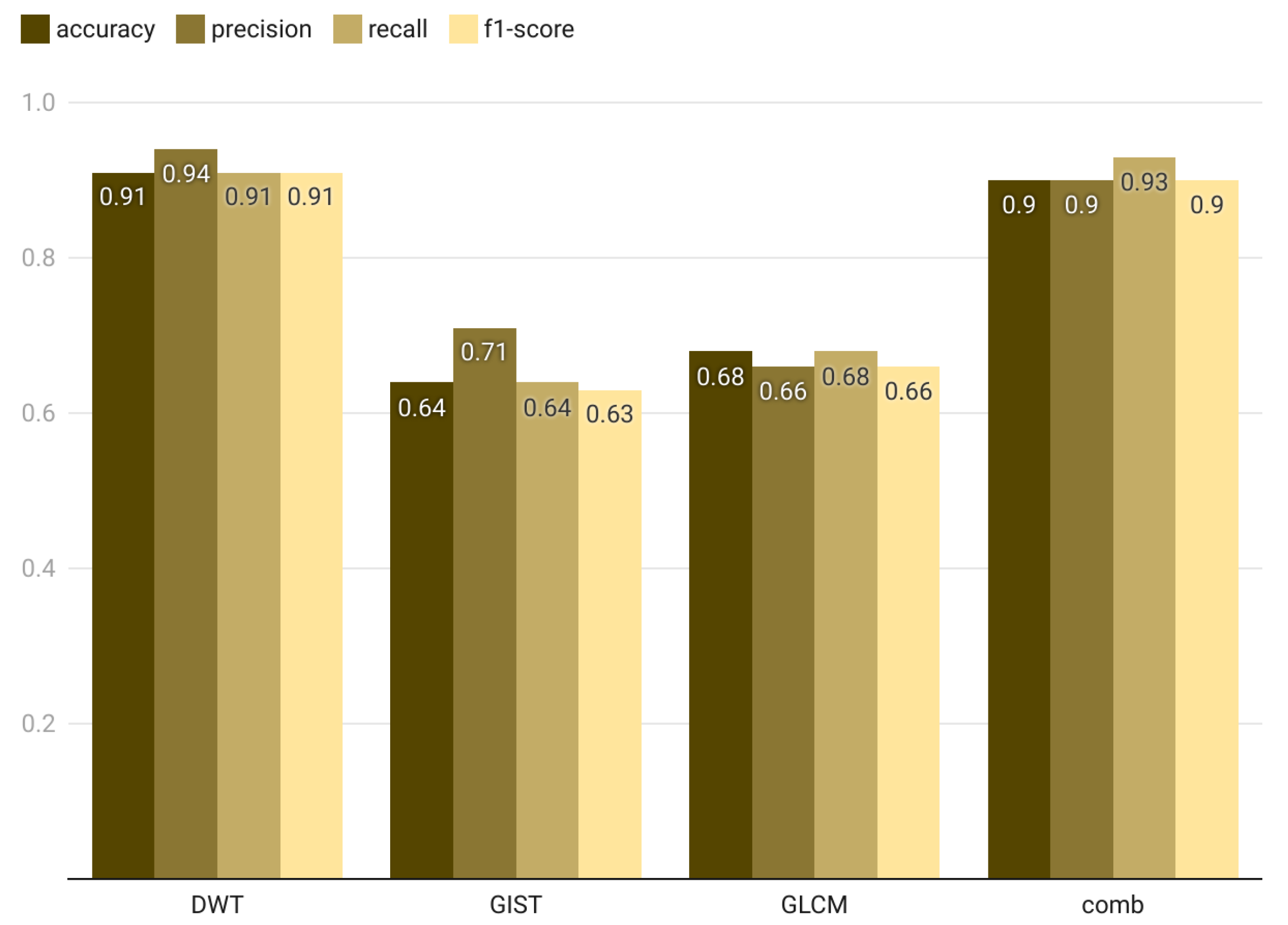

The results of applying global descriptors to 64 × 64 memory forensics images using various machine learning classifiers are presented in Table 7, and Figure 15 highlights the classifier that achieved the highest performance for each descriptor. The Discrete Wavelet Transform (DWT) achieves an outstanding F1-score of 90%, underscoring its effectiveness in capturing global image properties crucial for memory forensics classification, even at low resolution. This strong performance is likely due to DWT's ability to represent spatial-frequency information effectively. In contrast, the GIST and GLCM descriptors achieved moderate F1-scores (around 65–69%), showing their limitations in capturing discriminative features at this resolution. Interestingly, the combination of feature descriptors also achieved an F1-score of 90% but did not surpass DWT, possibly because incorporating multiple features introduces noise that slightly reduces overall performance. Compared with the best local descriptor at 64 × 64 resolution (HOG, with a 74% F1-score), the global descriptor DWT performs considerably better, with an F1-score of 90%.

4.2.2. Multi-Class Classification Weighted Results Using Global Features Descriptors and 100 × 100 Image Resolution

Table 8 and Figure 16 present the results of applying global image descriptors to 100 × 100 memory forensics malware images. Increasing the resolution from 64 × 64 to 100 × 100 yields no significant improvement. DWT maintains exceptional performance with a 91% F1-score, suggesting that it effectively captures informative global image characteristics for memory forensics tasks because it represents both spatial and frequency information. GIST and GLCM, however, remain moderate even at 100 × 100. These descriptors primarily capture statistical properties of the entire image, which might not fully reflect the spatial details crucial for differentiating malware families, especially at a still-limited resolution of 100 × 100. Finally, although the combination of global feature descriptors at 100 × 100 performs well, it does not exceed the individual performance of DWT.
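To make the spatial-frequency argument concrete, the following sketch computes a one-level 2D Haar DWT with NumPy and summarizes each sub-band by its mean and standard deviation. The wavelet family, decomposition depth, and sub-band statistics used in the experiments are not specified here, so all three are assumptions, and the function names are illustrative; in practice, a library such as PyWavelets (`pywt.dwt2`) would typically be used.

```python
import numpy as np


def haar_dwt2(img):
    """One-level orthonormal 2D Haar DWT; returns four half-resolution sub-bands."""
    x = np.asarray(img, dtype=np.float64)
    x = x[: x.shape[0] // 2 * 2, : x.shape[1] // 2 * 2]  # trim to even dimensions
    a = x[0::2, 0::2]  # top-left pixel of each 2 x 2 block
    b = x[0::2, 1::2]  # top-right
    c = x[1::2, 0::2]  # bottom-left
    d = x[1::2, 1::2]  # bottom-right
    approx = (a + b + c + d) / 2.0       # low-pass in both directions
    detail_rows = (a + b - c - d) / 2.0  # difference between adjacent rows
    detail_cols = (a - b + c - d) / 2.0  # difference between adjacent columns
    detail_diag = (a - b - c + d) / 2.0  # diagonal difference
    return approx, detail_rows, detail_cols, detail_diag


def dwt_features(img):
    """Mean and standard deviation of each sub-band -> 8-dimensional feature vector."""
    return np.array([f(band) for band in haar_dwt2(img) for f in (np.mean, np.std)])
```

The factor of 1/2 makes the transform orthonormal, so the total energy (sum of squared pixel values) is preserved across the four sub-bands while the approximation band keeps the coarse spatial layout.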

4.2.3. Multi-Class Classification Weighted Results Using Global Features Descriptors and 200 × 200 Image Resolution

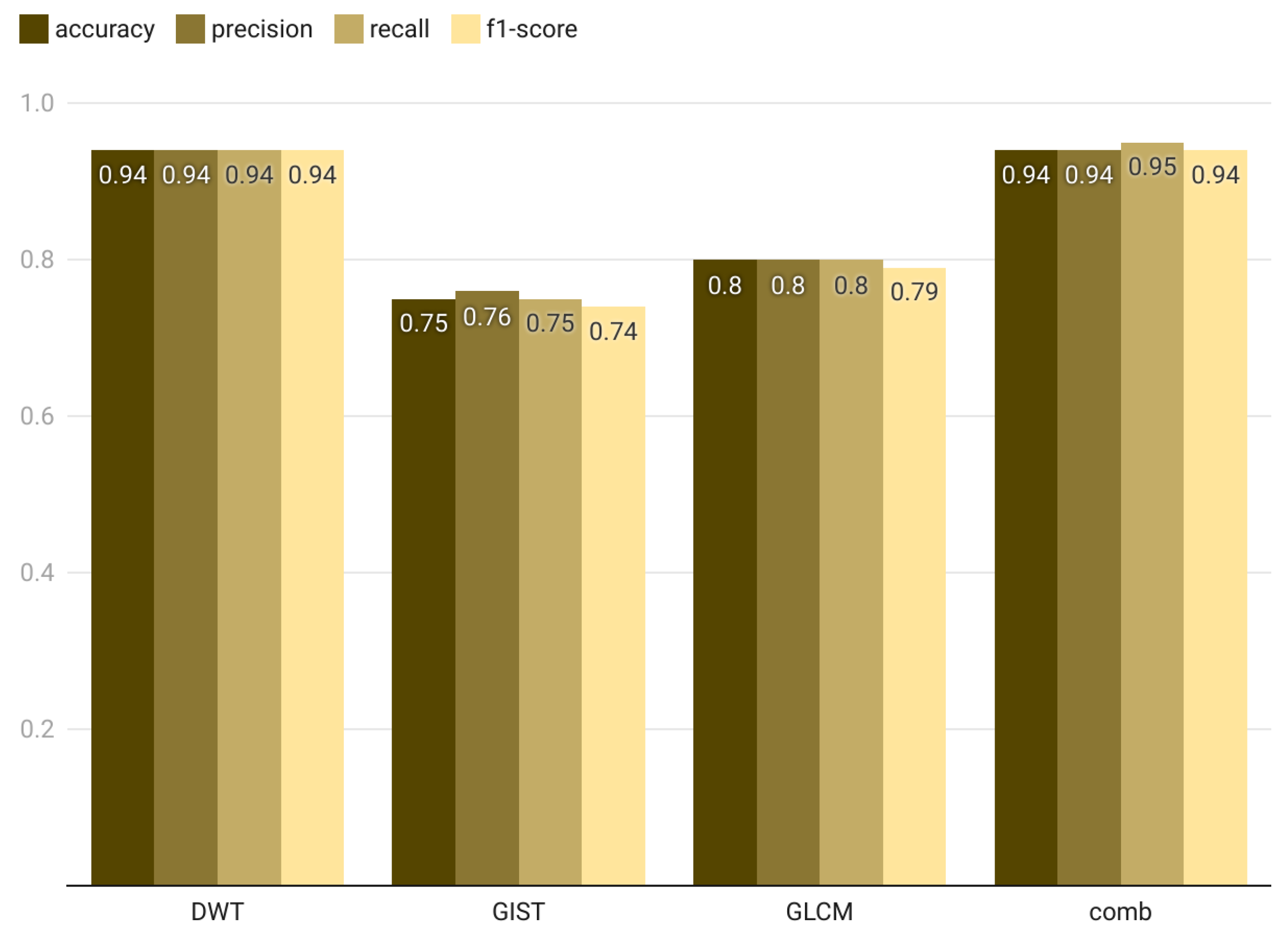

The results of extracting global feature descriptors from 200 × 200 images are shown in Table 9 and Figure 17. DWT maintains high performance, consistently capturing relevant global image characteristics for memory forensics tasks: it achieves an F1-score of 94% at 200 × 200, up from 91% at 100 × 100. GIST and GLCM also improve relative to the 64 × 64 and 100 × 100 results. For example, the F1-score of the GIST descriptor improved from 63% at 100 × 100 to 75% at 200 × 200, and that of GLCM improved from 68% to 79%. These improvements suggest that higher resolutions help GIST and GLCM capture the spatial details needed for effective memory forensics classification.

4.2.4. Multi-Class Classification Weighted Results Using Global Feature Descriptors and Original Image Resolution

The results of extracting global feature descriptors from the original image size (300 × 300 pixels) are presented in Table 10, and Figure 18 highlights the best result each descriptor achieves, regardless of the classifier used. Compared with the 200 × 200 resolution, the original resolution yields improved or nearly unchanged performance across the global descriptors, both individually and in combination. For example, the F1-score of the GIST descriptor increased from 74% at 200 × 200 pixels to 76% at 300 × 300 pixels, demonstrating the advantage of retaining higher resolution. Moreover, combining features led to a slight improvement over the best individual descriptor: DWT, which achieved an F1-score of 93% when used alone, improved to 94% when combined with other features.

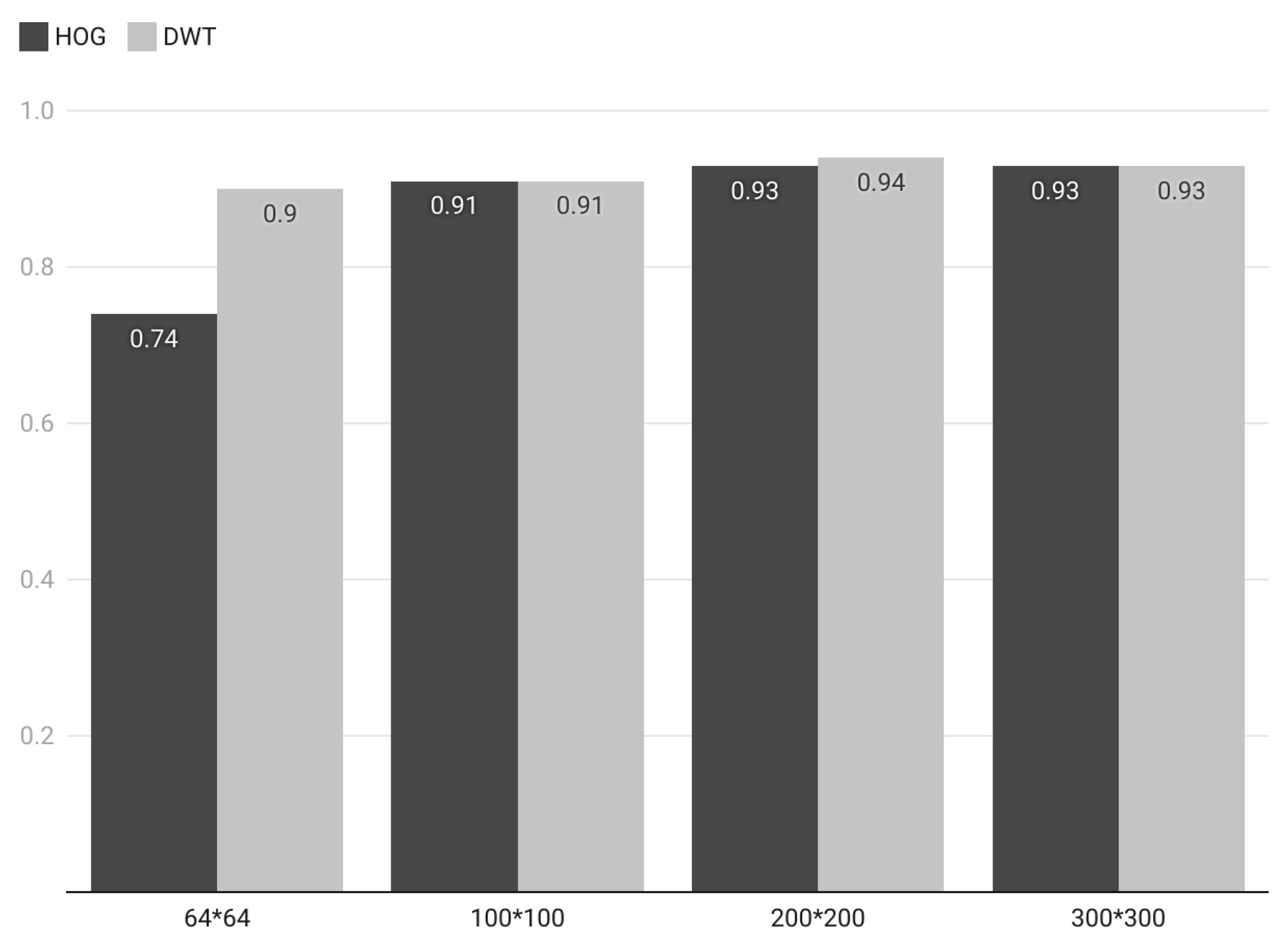

4.2.5. Comparative Analysis Between DWT and HOG Descriptors

Figure 19 analyzes the impact of image resolution on F1-scores in a memory forensics image classification task using HOG (a local descriptor) and DWT (a global descriptor), which were selected because they achieved the highest performance in our classification task. The HOG descriptor benefits substantially from higher image resolutions, with its F1-score increasing from 74% at 64 × 64 to 93% at 300 × 300. This indicates that HOG captures discriminative features for memory forensics classification by leveraging the additional spatial information provided by higher resolutions. In contrast, the global descriptor DWT maintains consistently high F1-scores across all resolutions, ranging from 90% to 94%, which suggests that DWT captures discriminative features even at lower resolutions, likely because it integrates both spatial and frequency information. However, at 300 × 300 resolution (the original image size), neither descriptor improves significantly, and DWT's F1-score slightly decreases from 94% to 93%.

4.3. Local and Global Descriptors Combination Results

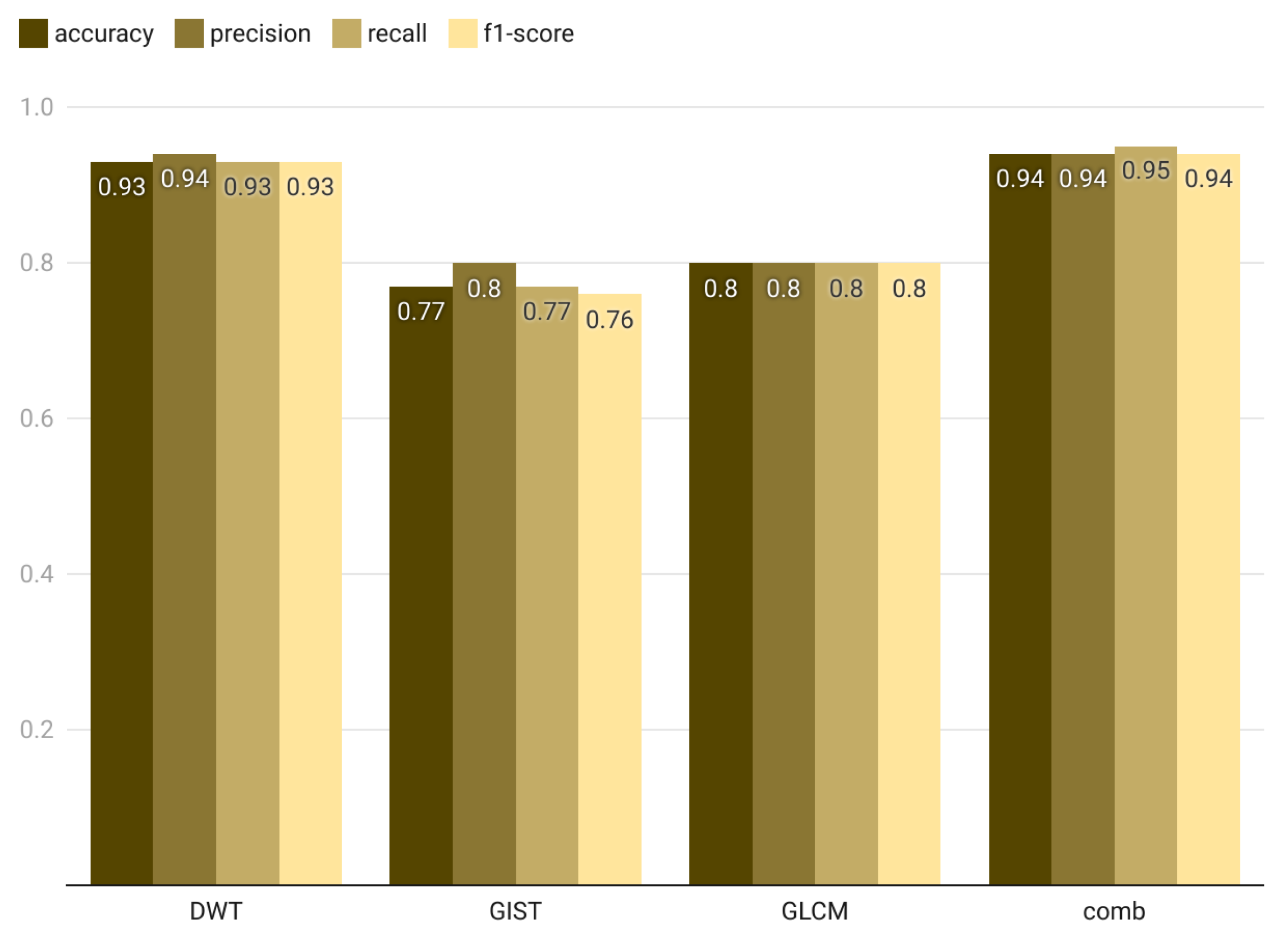

Table 11 summarizes the accuracy, precision, recall, and F1-score achieved by various machine learning classifiers with the combined local and global descriptors (SIFT, HOG, ORB, LBP, GLCM, GIST, DWT) for image-based memory forensics tasks.

Figure 20a–d illustrates the best results achieved by the top-performing classifier at each resolution. The effectiveness of the classifiers varies with image resolution when local and global descriptors are combined for malware classification. All classifiers exhibit high performance at the original resolution of 300 × 300, while at lower resolutions, combining local and global descriptors notably improves classification performance. This enhancement is particularly evident for SVM, Random Forest, and Logistic Regression, which handle the increased feature complexity and dependencies well. Logistic Regression, in particular, achieves an F1-score of 95% at 200 × 200 resolution, up from 87% at 64 × 64 and 91% at 100 × 100, indicating that it benefits considerably from the combined features. Random Forest also performs well, with F1-scores of 90% at 64 × 64, 84% at 100 × 100, and 94% at 200 × 200, and SVM maintains high accuracy across resolutions, reflecting its robustness to varying image sizes. In contrast, Naïve Bayes and KNN perform comparatively poorly. Naïve Bayes achieves F1-scores of 35% at 64 × 64, 62% at 100 × 100, and 37% at 200 × 200, likely because its assumption of feature independence does not capture the complex feature correlations in image data. KNN, with F1-scores of 26% at 64 × 64, 37% at 100 × 100, and 77% at 200 × 200, outperforms Naïve Bayes only at 200 × 200 and remains limited by its sensitivity to the choice of distance metric.

Figure 21 shows the ROC curves for the multiclass classification of malware using a Logistic Regression classifier with combined global and local features at 300 × 300 resolution. The high AUC values show that the proposed method performs well across the memory-resident malware families and the benign programs.
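Per-class AUC values for a multiclass ROC analysis are commonly computed one-vs-rest. The dependency-free sketch below uses the rank interpretation of AUC (the probability that a randomly chosen positive sample scores higher than a randomly chosen negative one, with ties counted as one half). It assumes one probability vector per sample, its function names are illustrative, and it is quadratic in the number of samples, so it is meant for illustration rather than large test sets.

```python
def binary_auc(scores, labels):
    """AUC as the fraction of (positive, negative) pairs ranked correctly; ties count 1/2."""
    pos = [s for s, y in zip(scores, labels) if y]
    neg = [s for s, y in zip(scores, labels) if not y]
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))


def one_vs_rest_auc(prob_rows, y_true, n_classes):
    """Per-class AUC, treating class c as positive and all other classes as negative."""
    return [binary_auc([row[c] for row in prob_rows], [y == c for y in y_true])
            for c in range(n_classes)]
```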

4.4. Results of Integrating Local Interpretable Model-Agnostic Explanations (LIME)

The analysis of LIME-based cropped images using a depthwise neural network is conducted with parameters of 20 epochs, a learning rate of

, Adam optimizer, and a cross-entropy loss function. Experiments are conducted for multiclass classification and for binary classification of each malware family paired with the benign class, to demonstrate the effectiveness of LIME-based features in assigning images to the appropriate family and their robustness in distinguishing malware from benign samples. The new datasets of cropped regions are constructed from LIME explanations generated using the predictions of ResNet50 and MobileNet, and they are analyzed to determine which model architecture is more appropriate for classifying in-memory malware families. The results demonstrate the effectiveness and efficiency of using LIME-identified regions for in-memory malware classification.

Table 12 and Table 13 present the evaluation metrics obtained using cropped images based on the ResNet50 and MobileNet models for the multiclass classification task. The MobileNet-based model achieved an overall accuracy of 85.02%, outperforming ResNet50, which achieved 80.49%. At the class level, MobileNet outperforms ResNet50 in most categories; for example, its F1-scores are higher for Adposhel (0.90 vs. 0.81), Allaple (0.91 vs. 0.85), and BrowseFox (0.83 vs. 0.70). A notable exception is the 'Other' class, which represents benign images, where MobileNet scores lower (0.60 vs. 0.71). Overall, the MobileNet-based LIME-cropped images yield better accuracy, consistency, and robustness than ResNet50 across both frequent and less frequent malware families in the dataset. Furthermore, Table 14 and Table 15 report the performance of the proposed LIME-based cropping approach on the binary classification task.

4.5. Time Efficiency of the LIME Approach

The LIME approach offers a lightweight and fast solution for classifying memory-resident malware. To assess its time efficiency, we compared it with the texture-based approach, as shown in Table 16. The table compares the computational time of the texture-based analysis using the combination of local and global descriptors at 200 × 200 resolution with that of the LIME-based approach using the MobileNet model. The results highlight the efficiency of classifying LIME-based cropped images with the proposed depthwise network compared with traditional texture analysis. Although the LIME-based approach achieves lower accuracy than the texture analysis, it reduces processing time by more than 98%. This balance between speed and accuracy makes it well suited to real-time, large-scale, or resource-limited in-memory malware detection, where interpretability and efficiency are key priorities.

The proposed approach reports runtime measured after image preprocessing, focusing on the feature extraction and classification phases for both the original and LIME-cropped images. The LIME preprocessing step (sampling, perturbation, and segmentation) is executed once during dataset preparation and excluded from the per-image throughput measurement.

5. Comparison to the State-of-the-Art Approaches

In this section, we compare the proposed work with state-of-the-art (SOTA) methods. SOTA works have achieved high results for memory-resident malware detection; for example, Bozkir et al. [30] achieved 96.39% accuracy using HOG+GIST features. This work, however, advances the field by integrating model interpretability with feature localization through LIME. Unlike the approach in [30], which relied on full RGB images at various resolutions, our approach focuses only on the most important regions of interest, which reduces computational effort by substantially reducing the input features and noise. Moreover, some studies, such as the work by Liu et al. [23], used deep learning approaches or local and global texture descriptors with sliding windows, which lack explainability. In contrast, the proposed work enables more adaptive analysis through a two-stage segmentation approach based on entropy-driven LIME explanations, offering a suitable solution for resource-constrained environments. Furthermore, the LIME-based cropped images use a very low resolution (100 × 100) compared with other works, which indicates that the strategy remains effective even at low resolution. Finally, to the best of our knowledge, adjusting the computational load of LIME through an entropy-based sampling strategy has not been explored in prior work.

The results demonstrate that integrating LIME and pretrained models into memory-resident malware detection is effective and efficient, achieving up to 85% accuracy at very low resolution with reduced computational effort in both time and resources.

Table 17 comprehensively compares the proposed method with the state-of-the-art approaches in terms of computational resources and performance metrics.

These comparisons show that the proposed method achieves a favorable balance between computational efficiency and classification performance, making it a feasible alternative to existing state-of-the-art approaches.

6. Conclusions

Memory-resident or fileless malware forms a serious threat to computer systems since it cannot be detected using disk scans. Therefore, memory forensics is crucial in detecting such types of malware. Extracting memory dumps and converting them to images to be used later with machine learning classifiers is one of the promising approaches to the detection of memory-resident or fileless malware. However, processing high-resolution RGB images and extracting relevant features is costly due to the high computations it requires. Moreover, selecting relevant features while reducing the classification computations is challenging.

In this paper, we have presented a comprehensive methodology for detecting memory-resident malware using memory forensics images. Using the Dumpware10 dataset, we analyzed and compared local and global descriptors (HOG, LBP, SIFT, ORB, DWT, GIST, and GLCM), individually and in combination, at image resolutions of 64 × 64, 100 × 100, 200 × 200, and 300 × 300, together with several machine learning classifiers. The best performance was achieved by the local descriptor HOG and the global descriptor DWT, with F1-scores of 93% and 94%, respectively. DWT achieved an F1-score of 90% even at 64 × 64 resolution, highlighting its reliable performance at very low resolutions. In contrast, HOG works very well at high resolutions, achieving an F1-score of 93% at 200 × 200, but performs poorly at low resolutions, with an F1-score of 74% at 64 × 64. Notably, at 300 × 300 resolution (the original image size), neither DWT nor HOG improves significantly, and DWT's F1-score slightly decreases from 94% to 93%. This suggests that 200 × 200 offers the best balance between performance and computational efficiency.

To further reduce the computational cost while preserving the relevant features, we converted the RGB images to grayscale. The LIME explainability technique was applied to identify the most relevant regions in each image based on the predictions of two pretrained models (ResNet50 and MobileNet), used separately. The depthwise separable CNN was trained and evaluated on the resulting cropped images as two separate datasets. Even at a low resolution (100 × 100), the proposed approach achieved competitive classification performance (85% accuracy using the MobileNet-based LIME regions) with a notable reduction in processing time. In conclusion, this paper has highlighted the importance of balancing resolution, feature selection, and model interpretability to optimize performance in memory forensics image classification.

In future work, we plan to execute a new set of malware samples and capture memory dumps at different time windows, in order to determine the best timing for acquiring memory dumps and to extract the most relevant features from memory dump images, thereby enhancing malware detection using memory forensics. We will also apply LIME with different parameter settings and at higher resolutions to improve accuracy.

Author Contributions

Conceptualization, Q.M.Y.; methodology, Q.M.Y.; software, E.O.; validation, E.O., Q.M.Y., and M.A.; formal analysis, Q.M.Y. and E.O.; data curation, E.O.; writing—original draft preparation, E.O.; writing—review and editing, Q.M.Y., M.A., and S.F.; visualization, S.F.; supervision, Q.M.Y., M.A., and S.F.; project administration, Q.M.Y.; funding acquisition, Q.M.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Ajman University, United Arab Emirates, under Grant 2023-IRG-ENIT-28.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

1. AV-TEST. Malware Statistics. 2024. Available online: https://www.av-test.org/en/statistics/malware/ (accessed on 5 September 2024).

2. Hasan, S.M.R.; Dhakal, A. Obfuscated Malware Detection: Investigating Real-world Scenarios through Memory Analysis. arXiv 2024, arXiv:2404.02372.

3. Qawasmeh, E.; Al-Saleh, M.I. On Producing Events Timeline for Memory Forensics: An Experimental Study. In Proceedings of the 2020 Seventh International Conference on Information Technology Trends (ITT), Abu Dhabi, United Arab Emirates, 25–26 November 2020; pp. 1–5.

4. Case, A.; Richard, G.G. Memory forensics: The path forward. Digit. Investig. 2017, 20, 23–33.

5. Nataraj, L.; Karthikeyan, S.; Jacob, G.; Manjunath, B.S. Malware images: Visualization and automatic classification. In Proceedings of the Visualization for Computer Security, Pittsburgh, PA, USA, 20 July 2011.

6. Odeh, A.; Taleb, A.A.; Alhajahjeh, T.; Navarro, F. Advanced memory forensics for malware classification with deep learning algorithms. Clust. Comput. 2025, 28, 353.

7. Fahad Malik, M.; Gul, A.; Saadia, A.; Alserhani, F.M. Few-Shot Learning with Prototypical Networks for Improved Memory Forensics. IEEE Access 2025, 13, 79397–79409.

8. Cuckoo Sandbox Developers. Cuckoo Sandbox: Automated Malware Analysis. 2010. Available online: https://cuckoosandbox.org/ (accessed on 23 August 2025).

9. Kara, I. Fileless malware threats: Recent advances, analysis approach through memory forensics and research challenges. Expert Syst. Appl. 2023, 214, 119133.

10. Pant, D.; Bista, R. Image-Based Malware Classification Using Deep Convolutional Neural Network and Transfer Learning. In Proceedings of the 2021 3rd International Conference on Advanced Information Science and System (AISS 2021), Sanya, China, 26–28 November 2021.

11. Shah, S.S.H.; Jamil, N.; Khan, A.u.R. Memory Visualization-Based Malware Detection Technique. Sensors 2022, 22, 7611.

12. Ucci, D.; Aniello, L.; Baldoni, R. Survey of machine learning techniques for malware analysis. Comput. Secur. 2019, 81, 123–147.

13. Souri, A.; Hosseini, R. A state-of-the-art survey of malware detection approaches using data mining techniques. Hum.-Cent. Comput. Inf. Sci. 2018, 8, 3.

14. Ali-Gombe, A.; Sudhakaran, S.; Vijayakanthan, R.; Richard, G.G. cRGBMem: At the intersection of memory forensics and machine learning. Forensic Sci. Int. Digit. Investig. 2023, 45, 301564.

15. Daoudi, N.; Samhi, J.; Kabore, A.K.; Allix, K.; Bissyandé, T.F.; Klein, J. DexRay: A Simple, Yet Effective Deep Learning Approach to Android Malware Detection Based on Image Representation of Bytecode. Commun. Comput. Inf. Sci. 2021, 1482, 81–106.

16. Yaseen, Q.M. The Effect of the Ransomware Dataset Age on the Detection Accuracy of Machine Learning Models. Information 2023, 14, 193.

17. AlOmari, H.; Yaseen, Q.M.; Al-Betar, M.A. A Comparative Analysis of Machine Learning Algorithms for Android Malware Detection. Procedia Comput. Sci. 2023, 220, 763–768.

18. AlJarrah, M.N.; Yaseen, Q.M.; Mustafa, A.M. A Context-Aware Android Malware Detection Approach Using Machine Learning. Information 2022, 13, 563.

19. Aldiabat, M.; Yaseen, Q.M.; Abu Ein, Q. An Efficient Random Forest Classifier for Detecting Malicious Docker Images in Docker Hub Repository. IEEE Access 2024, 12, 185586–185604.

20. Odat, E.; Yaseen, Q.M. A Novel Machine Learning Approach for Android Malware Detection Based on the Co-Existence of Features. IEEE Access 2023, 11, 15471–15484.

21. Bai, W.; Zhang, Z.; Li, B.; Wang, P.; Li, Y.; Zhang, C.; Hu, W. Robust Texture-Aware Computer-Generated Image Forensic: Benchmark and Algorithm. IEEE Trans. Image Process. 2021, 30, 8439–8453.

22. Shakir Hameed Shah, S.; Jamil, N.; ur Rehman Khan, A.; Mohd Sidek, L.; Alturki, N.; Muhammad Zain, Z. MalRed: An innovative approach for detecting malware using the red channel analysis of color images. Egypt. Inform. J. 2024, 26, 100478.

23. Liu, J.; Feng, Y.; Liu, X.; Zhao, J.; Liu, Q. MRm-DLDet: A memory-resident malware detection framework based on memory forensics and deep neural network. Cybersecurity 2023, 6, 21.

24. Sudhakar; Kumar, S. An emerging threat Fileless malware: A survey and research challenges. Cybersecurity 2020, 3, 1.

25. Sihwail, R.; Omar, K.; Arifin, K.A.Z. An Effective Memory Analysis for Malware Detection and Classification. Comput. Mater. Contin. 2021, 67, 2301–2320.

26. Mosli, R.; Li, R.; Yuan, B.; Pan, Y. A Behavior-Based Approach for Malware Detection. In Advances in Digital Forensics XIII; Peterson, G., Shenoi, S., Eds.; Springer: Cham, Switzerland, 2017; pp. 187–201.

27. Lashkari, A.H.; Li, B.; Carrier, T.L.; Kaur, G. VolMemLyzer: Volatile Memory Analyzer for Malware Classification Using Feature Engineering. In Proceedings of the 2021 Reconciling Data Analytics, Automation, Privacy, and Security: A Big Data Challenge (RDAAPS), Hamilton, ON, Canada, 18–19 May 2021; pp. 1–8.

28. Dener, M.; Ok, G.; Orman, A. Malware Detection Using Memory Analysis Data in Big Data Environment. Appl. Sci. 2022, 12, 8604.

29. Dai, Y.; Li, H.; Qian, Y.; Lu, X. A malware classification method based on memory dump grayscale image. Digit. Investig. 2018, 27, 30–37.

30. Bozkir, A.S.; Tahillioglu, E.; Aydos, M.; Kara, I. Catch them alive: A malware detection approach through memory forensics, manifold learning and computer vision. Comput. Secur. 2021, 103, 102166.

31. Zhang, S.; Hu, C.; Wang, L.; Mihaljevic, M.J.; Xu, S.; Lan, T. A Malware Detection Approach Based on Deep Learning and Memory Forensics. Symmetry 2023, 15, 758.

32. Kosmidis, K.; Kalloniatis, C. Machine Learning and Images for Malware Detection and Classification. In Proceedings of the 21st Pan-Hellenic Conference on Informatics, PCI’17, New York, NY, USA, 28–30 September 2017.

33. Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why Should I Trust You?”: Explaining the Predictions of Any Classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, KDD’16, New York, NY, USA, 13–17 August 2016; pp. 1135–1144.

34. Cbhua. GitHub-cbhua/binary-to-png: A Useful Tool for Extracting Images from Binary Files. Available online: https://github.com/cbhua/binary-to-png (accessed on 20 August 2025).

35. Bradski, G.; Kaehler, A. Learning OpenCV—Computer Vision with the OpenCV Library: Software That Sees; O’Reilly: Springfield, MO, USA, 2008; ISBN 978-0-596-51613-0.

Figure 1.

Texture-based analysis.

Figure 2.

Formula for converting an image from RGB to grayscale.

Figure 9.

Confusion matrix.

Figure 10.

LIME-based classification.

Figure 11.

The best results of local descriptors at 64 × 64 resolution.

Figure 12.

The best results of local descriptors at 100 × 100 resolution.

Figure 13.

The best results of local descriptors at 200 × 200 resolution.

Figure 14.

The best results of local descriptors at 300 × 300 resolution.

Figure 15.

The best results of global descriptors at 64 × 64 resolution.

Figure 16.

The best results of global descriptors at 100 × 100 resolution.

Figure 17.

The best results of global descriptors at 200 × 200 resolution.

Figure 18.

The best results of global descriptors at 300 × 300 resolution.

Figure 19.

F1-score comparison between DWT and HOG.

Figure 20.

Local and global combination performance at different image resolutions. (a) Local and global combination performance at 64 × 64 resolution. (b) Local and global combination performance at 100 × 100 resolution. (c) Local and global combination performance at 200 × 200 resolution. (d) Local and global combination performance at 300 × 300 resolution.

Figure 21.

ROC curves for multiclass classification using the Logistic Regression classifier with combined global and local features at 300 × 300 resolution.

Table 1.

Properties and configuration of global feature extraction methods.

| Method | Main Parameters/Settings | Key Properties Leveraged |
|---|---|---|
| DWT (Discrete Wavelet Transform) | Wavelet family = ‘db1’ (Daubechies); decomposition level = 2 | Decomposes images into sub-bands that capture both spatial and frequency information; sensitive to structural and textural changes in the spatial–frequency domain. |
| GIST Descriptor | Gabor filter bank: distance = 1; angle = 0° | Summarizes frequency content and global spatial layout; provides a comprehensive depiction of scene structure and texture distribution. |
| GLCM (Gray-Level Co-occurrence Matrix) | Distance = 1; angle = 0°; levels = 256; symmetric and normalized; extracted features: contrast, dissimilarity, homogeneity, energy, correlation, ASM | Quantifies surface characteristics such as contrast, smoothness, and homogeneity; models second-order statistical relationships between pixel intensities. |
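The GLCM configuration in Table 1 (distance 1, angle 0°, symmetric, normalized) can be illustrated with the following minimal pure-Python sketch. It uses the standard Haralick-style definitions of the six listed properties, as also used by scikit-image's `graycoprops`; the function names are hypothetical, the matrix is stored sparsely for readability, and the implementation used in this work may differ in details such as how a constant texture is handled.

```python
import math
from collections import Counter
from typing import Dict, Sequence, Tuple

GLCM = Dict[Tuple[int, int], float]


def glcm_horizontal(img: Sequence[Sequence[int]], levels: int = 256) -> GLCM:
    """Symmetric, normalized GLCM for distance = 1 and angle = 0 degrees.

    Each pixel is paired with its right-hand neighbour. The matrix is returned
    sparsely as {(i, j): probability}; absent pairs have probability 0.
    """
    counts: Counter = Counter()
    for row in img:
        for left, right in zip(row, row[1:]):
            if not (0 <= left < levels and 0 <= right < levels):
                raise ValueError("pixel value outside [0, levels)")
            counts[(left, right)] += 1
            counts[(right, left)] += 1  # symmetric: also count the reversed pair
    total = sum(counts.values())
    if total == 0:
        raise ValueError("image needs at least two columns")
    return {pair: n / total for pair, n in counts.items()}


def glcm_properties(p: GLCM) -> Dict[str, float]:
    """Haralick-style texture properties of a normalized GLCM."""
    contrast = sum(v * (i - j) ** 2 for (i, j), v in p.items())
    dissimilarity = sum(v * abs(i - j) for (i, j), v in p.items())
    homogeneity = sum(v / (1 + (i - j) ** 2) for (i, j), v in p.items())
    asm = sum(v * v for v in p.values())  # angular second moment
    mean_i = sum(v * i for (i, _), v in p.items())
    mean_j = sum(v * j for (_, j), v in p.items())
    var_i = sum(v * (i - mean_i) ** 2 for (i, _), v in p.items())
    var_j = sum(v * (j - mean_j) ** 2 for (_, j), v in p.items())
    if var_i == 0 or var_j == 0:
        correlation = 1.0  # constant texture: set to 1 by convention in this sketch
    else:
        cov = sum(v * (i - mean_i) * (j - mean_j) for (i, j), v in p.items())
        correlation = cov / math.sqrt(var_i * var_j)
    return {
        "contrast": contrast,
        "dissimilarity": dissimilarity,
        "homogeneity": homogeneity,
        "energy": math.sqrt(asm),
        "correlation": correlation,
        "ASM": asm,
    }


if __name__ == "__main__":
    image = [[0, 0, 1, 1], [0, 0, 1, 1], [0, 2, 2, 2], [2, 2, 3, 3]]
    props = glcm_properties(glcm_horizontal(image, levels=4))
    print(round(props["contrast"], 4))  # 0.5833 (= 14/24)
```

With 256 gray levels, a dense GLCM has 65,536 cells, so a sparse or vectorized representation keeps the per-image cost manageable.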

Table 2.

Properties and configuration of local feature extraction methods.

| Method | Main Parameters/Settings | Key Properties Leveraged |
|---|---|---|
| HOG (Histogram of Oriented Gradients) | Orientations = 9; cell size = 8 × 8; block size = 2 × 2 cells; block normalization = L2-Hys; transform_sqrt=True | Captures gradient structures and local edge directions; robust to illumination and geometric changes. |
| LBP (Local Binary Pattern) | P = 16 sampling points; radius R = 2; method = ‘uniform’; 18-bin normalized histogram | Encodes micro-texture patterns by comparing each pixel with its neighbors; robust to illumination changes. |
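The settings in Table 2 fix the size of each descriptor's output, which the following arithmetic sketch makes explicit. The parameter names (transform_sqrt, L2-Hys, method ‘uniform’) match those of scikit-image's `hog` and `local_binary_pattern`, so this sketch assumes scikit-image's conventions: HOG blocks slide one cell at a time, border pixels that do not fill a whole cell are dropped, and ‘uniform’ LBP is the rotation-invariant uniform variant. The helper names are hypothetical.

```python
def hog_descriptor_length(height: int, width: int, cell: int = 8,
                          block: int = 2, orientations: int = 9) -> int:
    """HOG feature length when blocks slide one cell at a time.

    Assumes border pixels that do not fill a whole cell are dropped.
    """
    cells_y, cells_x = height // cell, width // cell
    blocks_y, blocks_x = cells_y - block + 1, cells_x - block + 1
    if blocks_y < 1 or blocks_x < 1:
        raise ValueError("image too small for one block")
    # every block contributes block x block cells, each with `orientations` bins
    return blocks_y * blocks_x * block * block * orientations


def lbp_uniform_bins(points: int) -> int:
    """Histogram bins for rotation-invariant uniform LBP with P sampling points.

    Uniform patterns are labelled by their number of 1-bits (0..P, i.e. P + 1
    labels); all non-uniform patterns share one extra label.
    """
    return points + 2


if __name__ == "__main__":
    for side in (64, 100, 200, 300):
        print(side, hog_descriptor_length(side, side))
    # 64 1764 / 100 4356 / 200 20736 / 300 46656
    print(lbp_uniform_bins(16))  # 18, matching the 18-bin histogram in Table 2
```

Under these assumptions, a 64 × 64 image yields a 1,764-dimensional HOG vector versus 20,736 at 200 × 200, whereas the LBP histogram stays at 18 bins regardless of resolution.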