1. Introduction

Financial fraud refers to the use of deceptive or illegal practices to obtain unauthorized financial gains [1]. This broad category includes credit card fraud, tax evasion, financial statement manipulation, money laundering, and synthetic identity creation [2]. Among these, credit card fraud remains one of the most pervasive and damaging forms, costing businesses, consumers, and governments billions of dollars annually. The scope and sophistication of fraud have escalated sharply in recent years, driven by the widespread adoption of digital technologies and the emergence of advanced tools such as generative artificial intelligence.

According to the European Central Bank and European Banking Authority, over 70% of fraudulent payment transactions across the EU in recent years were associated with digital channels, including phishing attacks, account takeovers, and card-not-present (CNP) fraud scenarios [3]. This trend underscores the growing exposure of electronic payment systems to cyber-enabled threats. In a global context, the Nilson Report highlights a significant imbalance in fraud distribution. Although U.S.-issued cards accounted for just 25.29% of global transaction volume in 2023, they were responsible for a disproportionate 42.32% of total card fraud losses worldwide [4]. This reflects a persistent vulnerability within U.S. payment infrastructure, where fraud losses remain concentrated despite widespread implementation of Europay, Mastercard, and Visa (EMV) technology and stronger authentication measures. Total global fraud losses exceeded $34 billion in 2023, marking the highest level in the past seven years and illustrating the continued escalation of sophisticated, cross-border financial crime. The Entrust 2025 Identity Fraud Report further emphasizes the exponential growth of AI-assisted fraud, with digital document forgeries increasing 244% year-over-year and deepfakes now comprising 40% of biometric fraud incidents [5].

As the digital economy expands, so too does the complexity of fraud, necessitating advanced, scalable, and explainable detection systems. Traditional manual detection mechanisms are no longer sufficient. In response, the financial industry is increasingly turning toward machine learning (ML) and explainable artificial intelligence (XAI) technologies to enhance fraud detection and resilience [6,7,8]. These intelligent systems are capable of processing high-dimensional data, identifying complex fraud patterns, and offering interpretable insights critical for compliance and trustworthiness.

Despite the growing body of research in financial fraud detection, many existing review studies have addressed specific domains such as online banking fraud [9,10], payment card misuse [11,12], or healthcare fraud [13,14]. At the same time, relatively few have provided a consolidated view focusing exclusively on credit card fraud detection through ML approaches [1,15,16]. Furthermore, a significant portion of the reviewed works rely on oversampled or synthetically balanced datasets, which do not fully reflect real-world transaction environments where fraud cases remain extremely rare [17].

This review addresses a critical gap by synthesizing machine learning approaches for credit card fraud detection under the original class distribution, without applying over- or undersampling during training or evaluation, and drawing on recent studies published between 2019 and 2025. Using a Kitchenham-style protocol [18], we systematically screened and extracted evidence from the literature, retaining only studies that reported at least Precision and Recall alongside a minimum set of evaluation metrics to enable comparability. The analysis quantifies patterns in dataset usage, model choice, and evaluation practices under true class priors, and assesses the extent to which interpretability is incorporated to support operational deployment.

The remainder of this article is structured as follows.

Section 2 describes the review methodology, including the search strategy, screening protocol, inclusion and exclusion criteria, and quality assessment procedures.

Section 3 presents the results of the systematic review, organized thematically to address dataset usage, algorithmic approaches, evaluation practices, and the integration of interpretability techniques.

Section 4 offers a critical discussion of the implications for both research and practice.

Section 5 outlines the study’s limitations and threats to validity.

Section 6 concludes with key takeaways and directions for future research.

2. Methodology

This research adopts a systematic literature review (SLR) framework to collect, evaluate, and synthesize existing research on ML methods for credit card fraud detection. The purpose of using an SLR approach is to ensure that the review process is transparent, unbiased, and replicable, allowing for a structured analysis of relevant literature that directly addresses the defined research questions. By aggregating high-quality, peer-reviewed sources, this review aims to offer evidence-based insights and minimize potential researcher bias throughout the selection and interpretation phases.

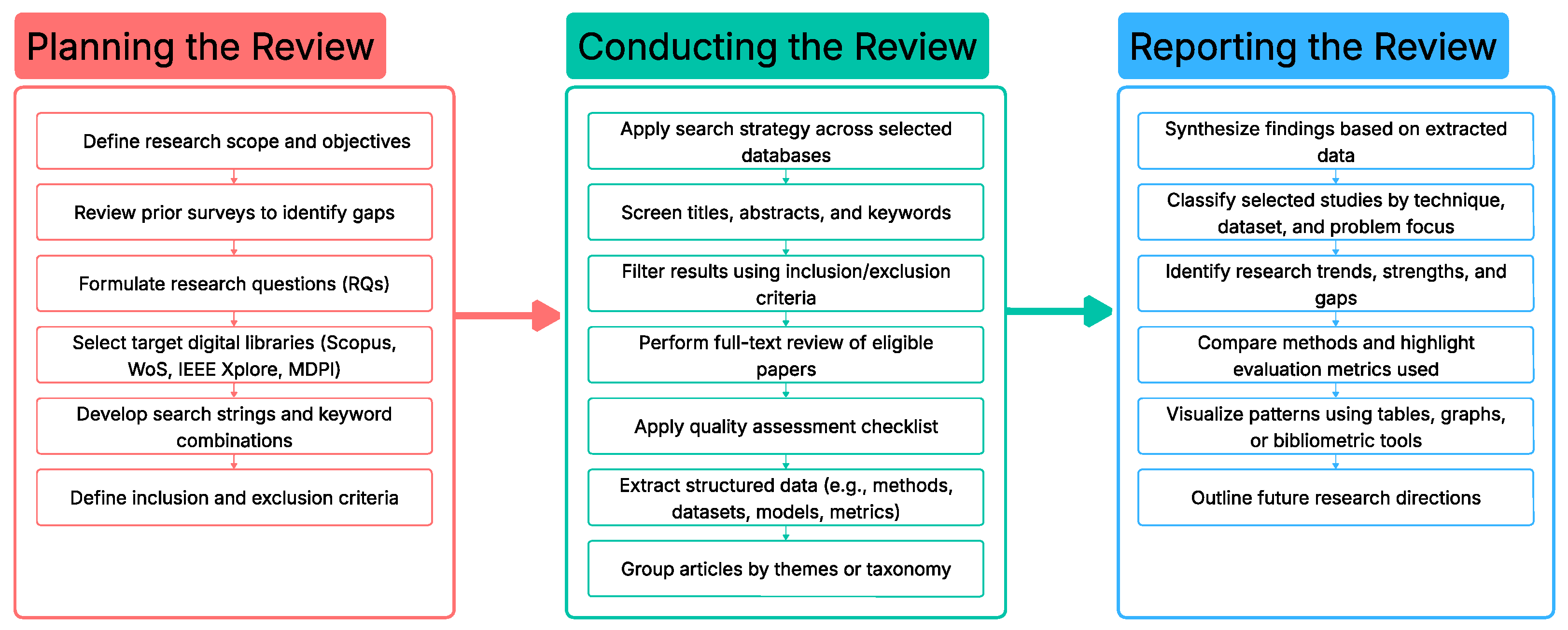

The methodology employed in this review follows the guidelines outlined by Kitchenham and colleagues [18], which organize the SLR process into three primary phases: planning the review, conducting the review, and reporting the review. Each phase involves specific tasks that ensure the integrity and comprehensiveness of the review process, as illustrated in Figure 1.

The primary objective of this review is to provide a comprehensive synthesis of ML-based approaches for credit card fraud detection, with particular emphasis on:

Techniques that address highly imbalanced datasets;

Models that prioritize Recall, area under the precision–recall curve (AUC-PR), and explainability;

The use of Shapley additive explanations (SHAP) and other XAI methods for fraud interpretability.

This study does not focus on generic financial fraud domains such as insurance, telecom, or health fraud, but instead narrows the scope to transaction-level fraud in credit card systems.

As part of the review planning phase, we conducted an exploratory analysis of existing literature surveys in the domain of financial fraud detection. We identified several review articles related to credit card fraud detection [1,2,15,19]. However, none of them emphasized studies that retain the original class imbalance typically present in real-world fraud datasets. This review aims to address that gap by focusing on models evaluated under naturally imbalanced conditions, where fraud remains a rare event.

Moreover, prior surveys commonly rely on general metrics and the area under the receiver operating characteristic curve (AUC-ROC), which may not adequately reflect performance in imbalanced settings. In contrast, this review prioritizes AUC-PR, which is more appropriate than AUC-ROC for evaluating fraud detection models under class imbalance. Additionally, the role of model interpretability, crucial in financial applications, has received limited attention in prior reviews [1,2,15,16].
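The difference between the two curve metrics can be illustrated with a small, self-contained sketch. The data below are hypothetical (990 legitimate and 10 fraudulent transactions with hand-constructed scores), and the helper names `roc_auc` and `average_precision` are illustrative rather than taken from any reviewed study. Average precision is used here as a common summary of the precision–recall curve.

```python
def roc_auc(y_true, scores):
    """ROC AUC as the probability that a random fraud outranks a random legitimate case."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(pos) * len(neg))


def average_precision(y_true, scores):
    """Average precision: mean of the precision measured at the rank of each fraud case."""
    ranked = sorted(zip(scores, y_true), key=lambda pair: pair[0], reverse=True)
    hits, precision_sum = 0, 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / hits


# Hypothetical data: 990 legitimate and 10 fraudulent transactions (1% fraud).
# Every fraud case scores high, but about ten legitimate cases outrank each one.
legit_scores = [i / 1000 for i in range(990)]
fraud_scores = [0.9005 + 0.01 * k for k in range(10)]
y_true = [0] * 990 + [1] * 10
scores = legit_scores + fraud_scores

print(f"ROC AUC:           {roc_auc(y_true, scores):.3f}")
print(f"Average precision: {average_precision(y_true, scores):.3f}")
```

On this toy data the ROC AUC is roughly 0.96 while average precision is roughly 0.21: a handful of false alerts per fraud case barely moves the false-positive rate when negatives are abundant, but it sharply lowers precision.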

This study addresses these gaps by focusing on ML-based credit card fraud detection methods that preserve dataset imbalance, apply suitable evaluation metrics, and incorporate explainability techniques such as SHAP.

To guide the structure and focus of this systematic review, we formulated a set of research questions (RQs). These questions were designed to capture the core aspects of ML-based credit card fraud detection, with a particular emphasis on real-world challenges such as class imbalance, metric selection, and model interpretability.

Table 1 outlines the four main research questions along with their corresponding motivations, which serve as the foundation for article selection, categorization, and analysis in subsequent sections.

The search process was guided by a structured keyword query combining domain-specific and technical terms. Boolean logic (AND, OR) was used to form robust search strings across multiple digital libraries. The detailed criteria and sources are summarized in Table 2.

The systematic search was conducted in July 2025 across four major scholarly databases: Scopus, Web of Science, IEEE Xplore, and MDPI. The search strategy and eligibility criteria (outlined in Table 2) were developed in accordance with the PRISMA 2020 guidelines [20] to identify relevant studies on machine learning applications in credit card fraud detection. Study screening involved title and abstract filtering, exclusion of irrelevant publication types, and full-text review of eligible articles.

To illustrate the temporal distribution of retrieved publications, Figure 2 presents results from the Scopus database as a representative example. The number of publications related to credit card fraud detection using machine learning increased consistently from 2019 to 2024, indicating growing academic and industrial interest in combating fraud with AI-driven techniques. The peak in 2024 reflects heightened attention to this domain, while preliminary data for 2025 suggest sustained research activity.

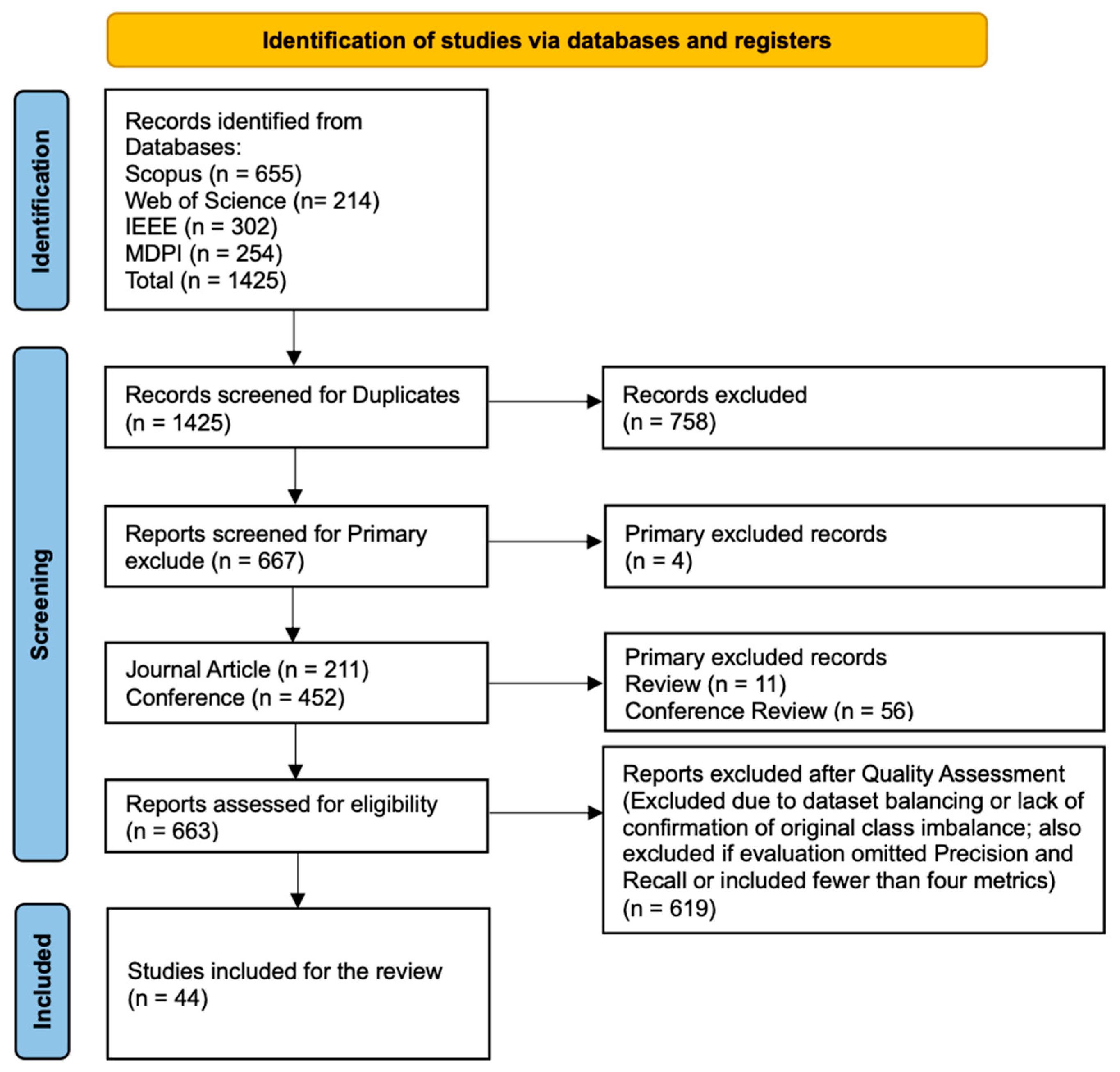

The study selection process, along with the number of records included and excluded at each stage, is illustrated in the PRISMA 2020 flow diagram (Figure 3) and is further described in Section 3.

3. Results

3.1. Study Selection

The systematic search across the four digital libraries yielded a total of 1425 records (Scopus: 655; Web of Science: 214; IEEE Xplore: 302; MDPI: 254). After removing 758 duplicates and irrelevant entries, 667 records remained for further screening.

An additional four records were excluded at the preliminary stage due to incorrect metadata or inaccessible full texts. The remaining 663 records (211 journal articles and 452 conference papers) were subjected to full-text assessment against the inclusion and exclusion criteria (outlined in Table 2).

Following a rigorous quality assessment, 619 records were excluded due to:

Resampling or synthetic augmentation (e.g., SMOTE/ADASYN, random over/undersampling, GAN-based generation);

Absence of confirmation that the original class imbalance was retained;

Insufficient evaluation: studies that did not report both Recall and Precision, or reported fewer than four evaluation metrics;

Lack of dataset transparency, such as unnamed or proprietary datasets without a clear description.

The final set of included studies comprised 44 peer-reviewed publications, including 26 conference papers and 18 journal articles. These studies specifically addressed credit card fraud detection using machine learning while retaining the original class imbalance and reporting at least four evaluation metrics, including both Recall and Precision. A visual summary of the selection process is shown in Figure 3, following the PRISMA 2020 flow diagram.
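The record counts reported in this subsection can be cross-checked with a few lines of arithmetic. The sketch below uses only the figures stated in the text; the function name `prisma_flow` and the dictionary keys are illustrative.

```python
def prisma_flow():
    """Recompute the record counts at each screening stage from the reported figures."""
    retrieved = {"Scopus": 655, "Web of Science": 214, "IEEE Xplore": 302, "MDPI": 254}
    identified = sum(retrieved.values())
    after_deduplication = identified - 758   # duplicates and irrelevant entries removed
    full_text_assessed = after_deduplication - 4  # incorrect metadata or inaccessible full text
    included = full_text_assessed - 619      # excluded after quality assessment
    return {
        "identified": identified,
        "after_deduplication": after_deduplication,
        "full_text_assessed": full_text_assessed,
        "included": included,
        "inclusion_rate": included / full_text_assessed,
    }


flow = prisma_flow()
for stage, value in flow.items():
    print(stage, value)
```

The stages reproduce the reported 1425, 667, 663, and 44 records, and the final inclusion rate of 44 out of 663 is about 6.6%.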

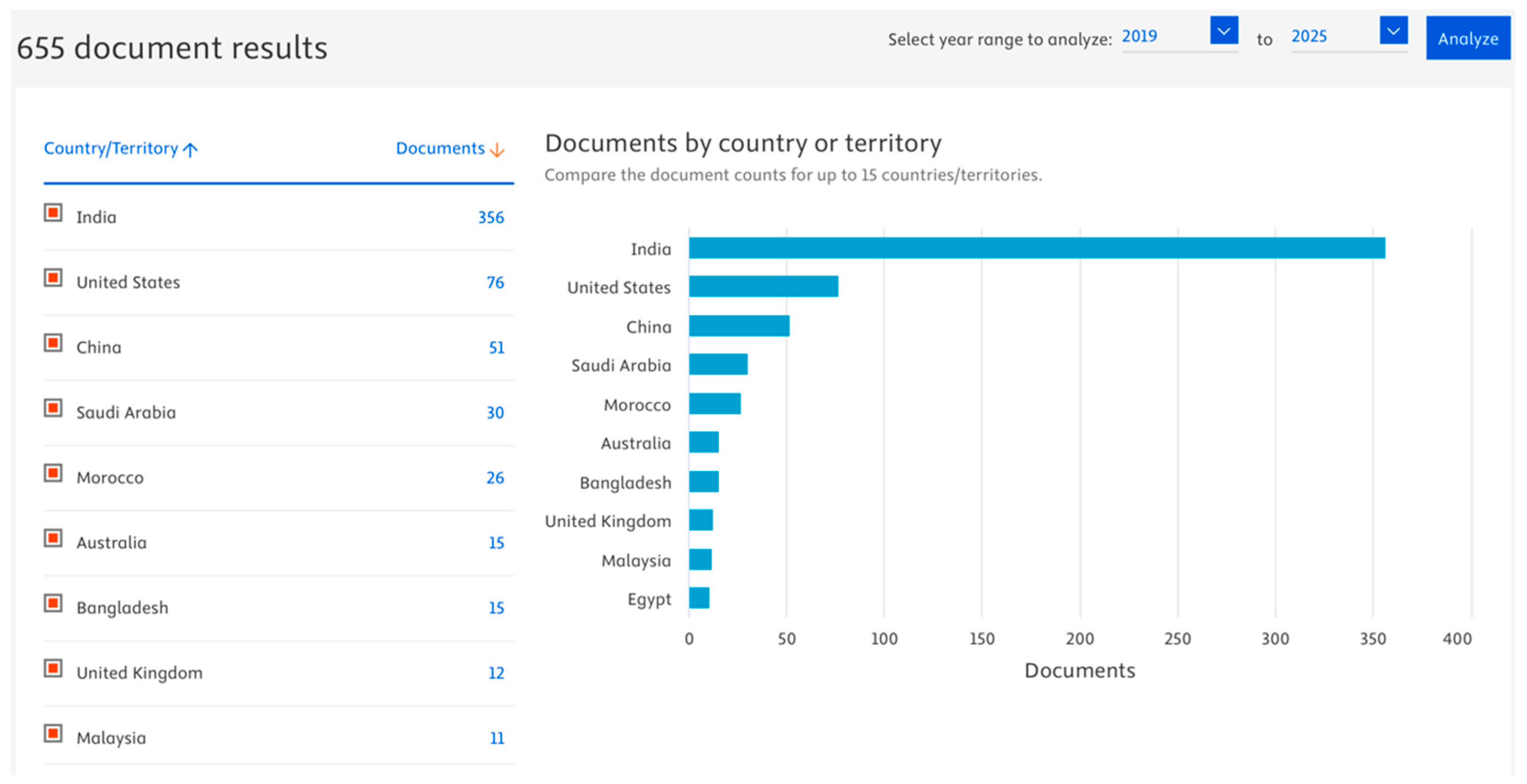

To complement the PRISMA analysis and provide an overview of the research landscape, Figure 4 and Figure 5 summarize the country-wise and document-type distributions of the retrieved Scopus records (n = 655), prior to quality assessment and inclusion.

As shown in Figure 4, India led in the number of publications (n = 356), followed by the United States (n = 76) and China (n = 51), indicating regional research concentration in fraud detection using ML.

In terms of publication type, Figure 5 illustrates that conference proceedings constituted the majority (69.5%, n = 455), while journal articles accounted for 30.5% (n = 200). This dominance of conference papers suggests rapid dissemination of evolving methodologies in this fast-paced research domain.

3.2. Results Organized by Research Questions

This section presents the findings of the systematic literature review, organized by the four predefined research questions (RQs). Each subsection synthesizes the relevant evidence extracted from the included studies.

3.2.1. RQ1: Which Publicly Available or Private Datasets Are Most Frequently Used in Credit Card Fraud Detection Studies That Retain the Original Class Imbalance Without Applying Resampling Techniques

A review of the 44 included studies shows that a relatively small set of benchmark datasets dominates research in credit card fraud detection when the original class distribution is preserved. These datasets span real-world and synthetic sources and vary in size, feature semantics, and fraud prevalence, but all are used without oversampling or undersampling during training or evaluation.

Publicly available datasets remain the primary empirical basis. In particular, the European Credit Card Fraud dataset is used in 32 of the 44 studies (72.7%) and continues to serve as the benchmark due to its accessibility, realistic prevalence (~0.17% fraud), and long-standing role in comparative evaluation. The next most frequently used public sources are the IEEE-CIS/Vesta e-commerce dataset, employed by three of the 44 studies (6.8%), and the Credit Card Transactions Fraud Detection Dataset (Sparkov dataset), also used by three of the 44 studies (6.8%). IEEE-CIS provides large-scale, multi-table online payment records with identity and device attributes. In contrast, the Sparkov dataset offers a synthetic, privacy-preserving transaction stream with documented class prevalence suitable for rare-event benchmarking.

Private datasets appear less often because of access constraints but add functional heterogeneity: a Brazilian bank dataset features in three studies (6.8%), while the UCSD–FICO 2009 dataset and the Greek financial institution dataset each appear in only one study (2.3%). Among synthetic sources beyond Sparkov, BankSim and PaySim each appear in two studies (4.5%), and IBM TabFormer is used in one study (2.3%). These synthetic resources support controlled experimentation and privacy protection, but inevitably abstract away operational semantics and merchant- or customer-level context.

Table 3 summarizes the key datasets identified in the reviewed studies, including their source, size, fraud ratio, availability, and frequency of use in the literature.

Following Table 3, authors commonly justify retaining the native class skew to mirror operational conditions and to ensure that reported performance reflects deployment realities. Many note that training on the original distribution avoids synthetic noise and preserves decision boundaries better than resampling, while others caution that oversampling, particularly before train-validation splitting, can induce information leakage and inflate reported results.
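The leakage risk mentioned above can be made concrete with a deterministic toy example. This is a minimal sketch under stated assumptions: rows are hypothetical `(id, label)` pairs, oversampling is simulated by plain duplication of fraud rows, and the split takes every third row as the held-out set. None of the helper names come from the reviewed studies.

```python
def oversample_minority(rows, factor):
    """Duplication-based oversampling: each fraud row appears `factor` times in total."""
    minority = [row for row in rows if row[1] == 1]
    return rows + minority * (factor - 1)


def interleaved_split(rows, test_every=3):
    """Deterministic split: every `test_every`-th row goes to the held-out set."""
    train = [row for i, row in enumerate(rows) if i % test_every != 0]
    test = [row for i, row in enumerate(rows) if i % test_every == 0]
    return train, test


def leaked_fraud_rows(train, test):
    """Count held-out fraud rows whose identical copy is also present in the training set."""
    train_ids = {row_id for row_id, _ in train}
    return sum(1 for row_id, label in test if label == 1 and row_id in train_ids)


# Hypothetical data: 90 legitimate and 10 fraudulent transactions.
rows = [(f"legit-{i}", 0) for i in range(90)] + [(f"fraud-{i}", 1) for i in range(10)]

# Problematic order: oversample first, then split.
train_a, test_a = interleaved_split(oversample_minority(rows, factor=5))
leak_before = leaked_fraud_rows(train_a, test_a)

# Safer order: split first, then oversample the training portion only.
train_b, test_b = interleaved_split(rows)
train_b = oversample_minority(train_b, factor=5)
leak_after = leaked_fraud_rows(train_b, test_b)

print("leaked fraud rows (oversample, then split):", leak_before)
print("leaked fraud rows (split, then oversample):", leak_after)
```

When duplication precedes the split, copies of the same fraud case land on both sides, so the held-out score partly measures memorization. Splitting first keeps the held-out fraud cases unseen.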

While concentration on the European dataset has facilitated reproducibility and comparability, heavy reliance on a single anonymized benchmark risks narrowing insights into robustness and transferability. Future progress would benefit from public, semantics-preserving benchmarks that balance privacy with interpretability, thereby enabling stronger XAI, more realistic threshold selection, and more explicit cross-method comparisons.

3.2.2. RQ2: What Types of Machine Learning Algorithms Are Most Frequently Applied in Credit Card Fraud Detection Studies That Retain the Original Class Imbalance

Across the 44 included studies that preserve the original class distribution, a wide range of ML algorithms have been employed, spanning classical supervised learners, tree-based ensembles, deep and hybrid models, and unsupervised anomaly detection methods. In these works, class imbalance is addressed primarily through model-level strategies, such as class/cost weighting [

7,

23,

26,

61,

69], custom loss functions [

29], and decision-threshold tuning [

7,

26,

48,

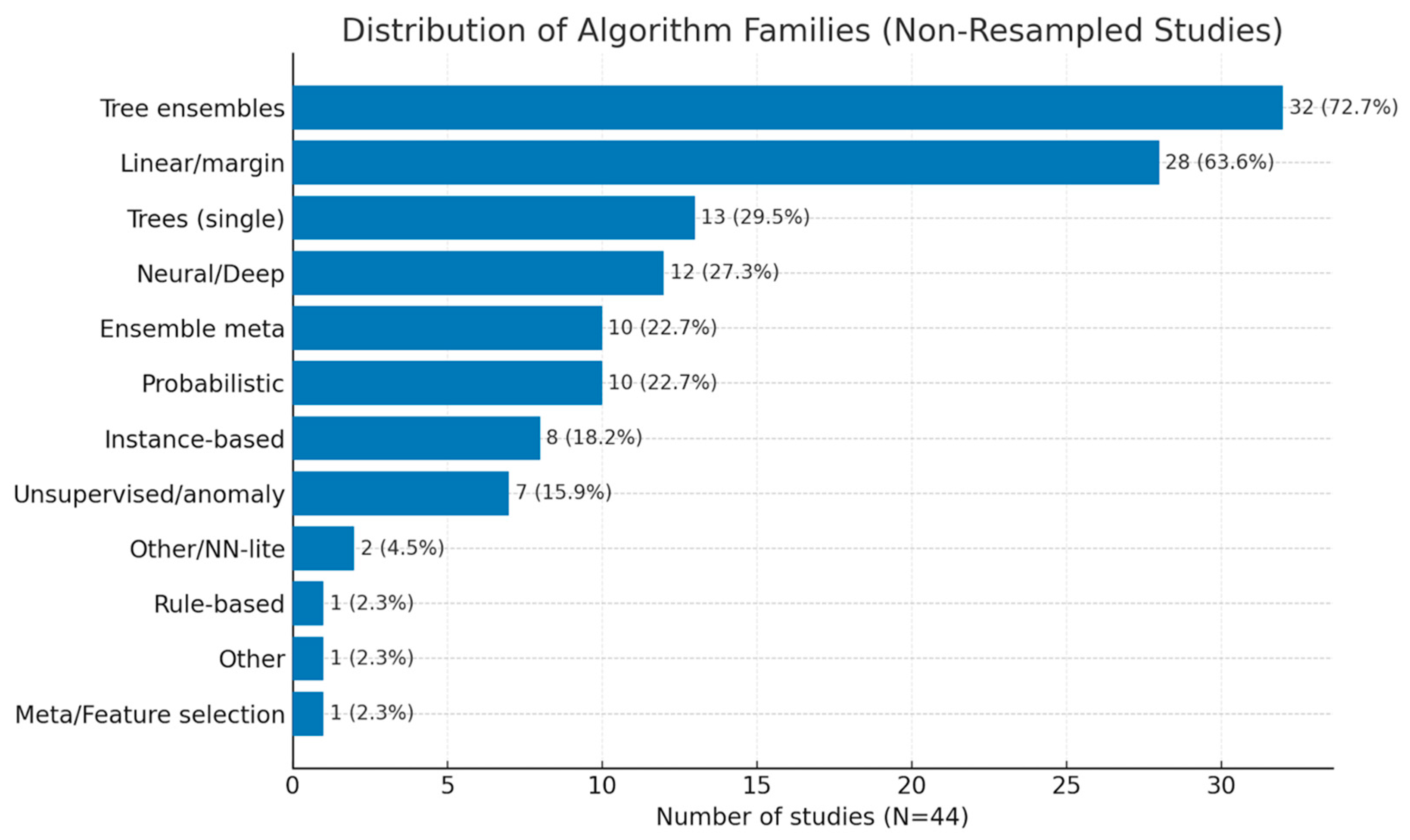

49], rather than oversampling or undersampling. Tree-based ensembles are the most prevalent family, appearing in thirty-two of the forty-four studies (72.7%). Linear/margin models (primarily Logistic Regression and Support Vector Machines (SVM)) are also widespread, followed by single decision trees and neural/deep architectures. Secondary families include ensemble meta-strategies such as bagging/stacking and probabilistic methods (Naïve Bayes/Gaussian Mixture Models (GMM)). Instance-based methods (k-NN) and unsupervised/anomaly detectors (Isolation Forest, Local Outlier Factor (LOF), One-Class SVM, Autoencoders) are less common.
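As an illustration of the class-weighting strategy mentioned in this subsection, the following sketch computes inverse-frequency class weights and a class-weighted log loss in plain Python. The weighting rule n / (k · n_c) is one common heuristic and is an assumption here, not the scheme of any particular reviewed study; the data and function names are hypothetical.

```python
import math


def balanced_class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * count of that class)."""
    classes = sorted(set(labels))
    n = len(labels)
    return {c: n / (len(classes) * labels.count(c)) for c in classes}


def weighted_log_loss(labels, probs, weights, eps=1e-12):
    """Binary cross-entropy where each sample is weighted by its class weight."""
    total, norm = 0.0, 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        w = weights[y]
        total += -w * (y * math.log(p) + (1 - y) * math.log(1 - p))
        norm += w
    return total / norm


# Hypothetical data: 998 legitimate and 2 fraudulent transactions.
labels = [0] * 998 + [1] * 2
weights = balanced_class_weights(labels)

# A model that assigns a 0.1% fraud probability to every transaction.
probs = [0.001] * len(labels)
plain = weighted_log_loss(labels, probs, {0: 1.0, 1: 1.0})
weighted = weighted_log_loss(labels, probs, weights)

print("class weights:", weights)
print(f"unweighted loss: {plain:.4f}, class-weighted loss: {weighted:.4f}")
```

The unweighted loss looks small because the majority class dominates, whereas the class-weighted loss penalizes the two missed fraud cases heavily. That is the effect model-level weighting relies on in place of resampling.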

Figure 6 visualizes the distribution of algorithm families, while the detailed per-algorithm counts and references are presented across Table 4, Table 5, Table 6 and Table 7, grouped by tree-based ensembles, linear/probabilistic models, neural network approaches, and emerging methods.

Across individual algorithms, Random Forest (RF) is most common (24 studies; 54.5%), followed by Logistic Regression (LR) (19 studies; 43.2%) and Support Vector Machines (SVM) (14 studies; 31.8%). Among the boosting variants, XGBoost appears in twelve studies (27.3%), LightGBM in ten (22.7%), and CatBoost in five (11.4%). This pattern reflects the field’s preference for tree ensembles on tabular transactions, while retaining LR and SVM as strong, interpretable baselines.

Deep and hybrid models are reported in twelve studies (27.3%), including feed-forward neural networks (MLP/ANN), convolutional networks (CNNs), recurrent networks (LSTM/GRU), CNN–BiLSTM hybrids, graph neural networks (GNNs), and tabular attention models such as TabTransformer. These architectures are often paired with boosted trees in stacked ensembles and trained with weighted losses and regularization to mitigate bias toward the majority class. Unsupervised and semi-supervised anomaly detection remains less common (approximately 16% of studies), leveraging Isolation Forest, LOF, One-Class SVM, and Autoencoders to model legitimate behavior and flag deviations. Several papers integrate these detectors into hybrid pipelines in which a supervised model subsequently classifies anomalies identified in an unsupervised stage.

Overall, the evidence indicates a clear methodological concentration on tree-based ensembles, particularly Random Forest and gradient-boosting methods, supported by interpretable linear baselines and a growing, though still modest, adoption of deep, attention-based, and graph-oriented architectures. This pattern reflects a pragmatic trade-off between achieving high recall-oriented performance under heavy class imbalance and retaining computational efficiency and interpretability for operational deployment.

3.2.3. RQ3: In Studies That Retain the Original Class Imbalance, Which Evaluation Metrics Are Prioritized, and How Frequently Are Recall and AUC-PR Favored over Accuracy or AUC-ROC

Selecting appropriate performance metrics is critical for financial fraud detection, where the positive class is exceedingly rare and the misclassification costs are highly asymmetric [2,74]. Under such conditions, overall Accuracy can be misleading. In contrast, metrics conditioned on the positive class, such as Recall, Precision, F1-score, and the area under the Precision–Recall curve (AUC-PR), provide a more faithful characterization of detection capability and support principled threshold selection for deployment [7,75,76,77]. Nevertheless, there is no universally accepted evaluation standard [2,16]. Recent studies report a heterogeneous mix of scalar and curve-based indicators, and only a small subset incorporates cost- or profit-based measures aligned with operational risk.

Table 8 details each metric’s formula and representative references.

In fraud detection, Accuracy quantifies the proportion of correctly classified transactions overall, while Precision reflects the reliability of positive (fraud) alerts. Recall (Sensitivity/TPR) measures the share of truly fraudulent transactions that are correctly identified, and Specificity (TNR) measures the share of legitimate transactions correctly rejected [2]. Because Precision and Recall typically move in opposite directions as the decision threshold is varied, practitioners face the well-known precision–recall trade-off. A common scalar compromise is the F1-score, the harmonic mean of Precision and Recall [78]. In practice, varying the threshold and examining the induced sequence of confusion matrices constitutes parametric evaluation, which supports the selection of an operating point consistent with business constraints.
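The threshold-dependent metrics described above can be sketched in a few lines. The ten transactions and their scores below are hypothetical, and the helper names are illustrative. The point is only to show how one set of scores yields different confusion matrices, and hence different Precision and Recall, at different thresholds.

```python
def confusion(y_true, scores, threshold):
    """Return (tp, fp, tn, fn) when scores >= threshold are flagged as fraud."""
    tp = fp = tn = fn = 0
    for y, s in zip(y_true, scores):
        flagged = s >= threshold
        if flagged and y == 1:
            tp += 1
        elif flagged and y == 0:
            fp += 1
        elif not flagged and y == 0:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn


def metrics(tp, fp, tn, fn):
    """Standard confusion-matrix metrics; undefined ratios are reported as 0.0."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "specificity": specificity,
            "accuracy": accuracy, "f1": f1}


# Hypothetical scores for eight legitimate (0) and two fraudulent (1) transactions.
y_true = [0, 0, 0, 0, 0, 0, 0, 1, 0, 1]
scores = [0.05, 0.10, 0.20, 0.30, 0.35, 0.40, 0.60, 0.65, 0.70, 0.90]

for threshold in (0.5, 0.8):
    counts = confusion(y_true, scores, threshold)
    print(threshold, counts, metrics(*counts))
```

At a threshold of 0.5 both fraud cases are caught at the price of two false alerts (Precision 0.5, Recall 1.0). Raising the threshold to 0.8 removes the false alerts but misses one fraud case (Precision 1.0, Recall 0.5).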

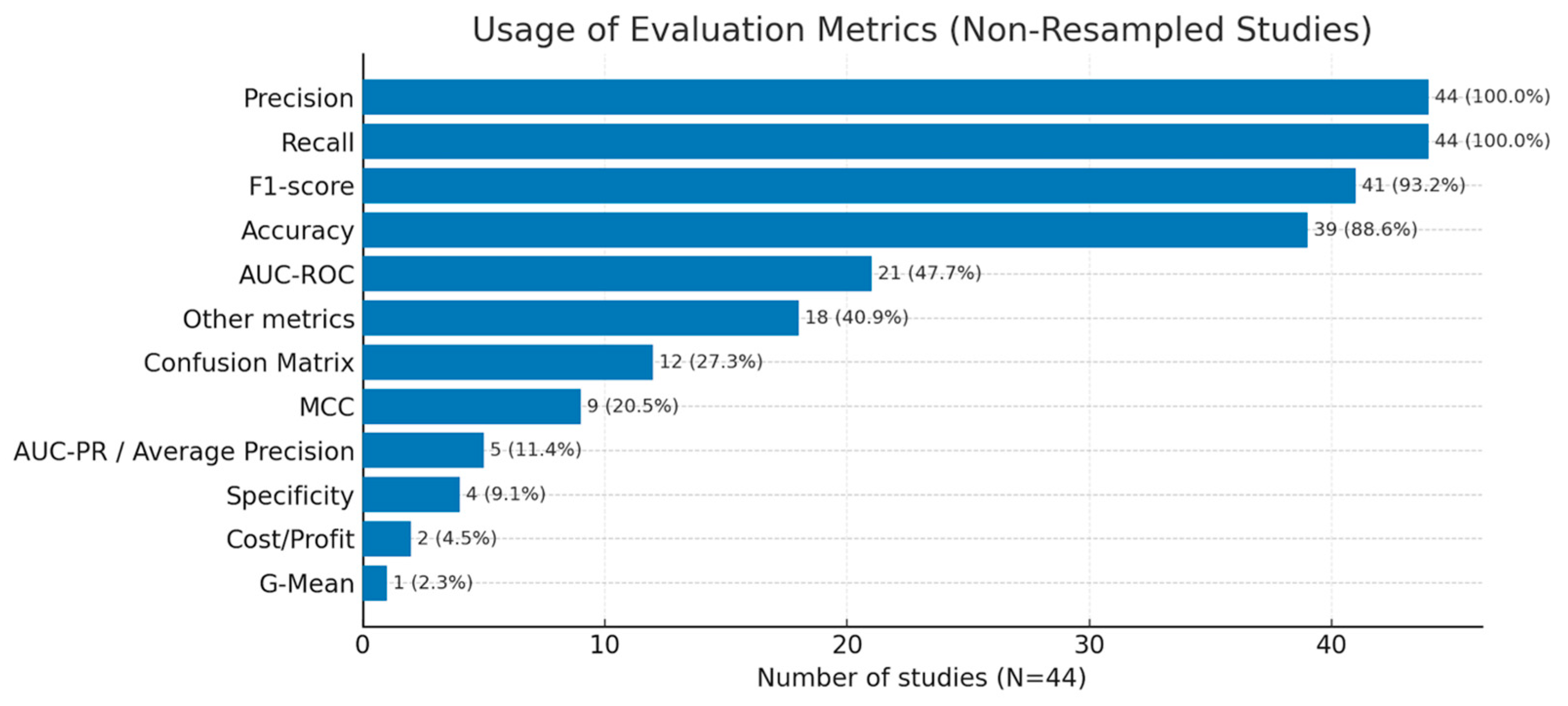

To ensure comparability, our inclusion criteria required studies to report both Precision and Recall, which are therefore present in all 44 studies (100%). Beyond these two, reporting practices concentrate on F1-score in 41 studies (93.2%) and Accuracy in 39 studies (88.6%). Curve-based metrics are less uniform. AUC-ROC appears in 21 studies (47.7%), whereas AUC-PR (or Average Precision) appears in only five studies (11.4%), typically where authors explicitly foreground severe class imbalance or adopt anomaly-detection framings [7,28,38,59,64]. Additional reported measures include Matthews Correlation Coefficient (MCC) (20.5%) and Specificity (TNR) (9.1%). At the same time, several papers provide confusion-matrix counts and, more occasionally, cost/profit or alert-rate summaries to support operational decision-making.
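To show why Accuracy alone can mislead and what MCC adds, the sketch below evaluates two hypothetical confusion matrices over 10,000 transactions at roughly the 0.17% fraud prevalence quoted for the European dataset. The counts are invented for illustration, and the convention of returning 0 when the MCC denominator vanishes is an assumption.

```python
import math


def accuracy(tp, fp, tn, fn):
    """Share of all transactions that are classified correctly."""
    return (tp + tn) / (tp + fp + tn + fn)


def mcc(tp, fp, tn, fn):
    """Matthews Correlation Coefficient; returns 0.0 when the denominator is zero."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0


# Hypothetical case 1: a model that labels every transaction as legitimate.
always_legit = dict(tp=0, fp=0, tn=9983, fn=17)
# Hypothetical case 2: a detector that catches 12 of the 17 fraud cases with 6 false alerts.
detector = dict(tp=12, fp=6, tn=9977, fn=5)

for name, cm in (("always legitimate", always_legit), ("detector", detector)):
    print(f"{name}: accuracy={accuracy(**cm):.4f}, mcc={mcc(**cm):.3f}")
```

Both cases reach an Accuracy above 99.8%, yet the first detects no fraud at all and has an MCC of 0, while the second has an MCC of roughly 0.69.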

Figure 7 provides an overview of how often each evaluation metric is reported across the included studies.

Because Recall and Precision were mandatory for inclusion, all studies report them. However, the selection narratives are generally recall-oriented, balancing Recall with Precision or F1-score on the premise that missed fraud is costlier than extra alerts [25,31,32,33,36,37,38,39,42,50]. Accuracy is typically kept for completeness rather than for decision-making. Although many authors note that AUC-PR is more appropriate under severe imbalance, AUC-ROC persists as the default curve metric, mainly for historical and tooling reasons. Only five studies treat AUC-PR as a co-primary indicator alongside Recall and F1-score [7,28,38,59,64]. Several studies explicitly caution that AUC-ROC can overstate performance in rare-event regimes [31,36,37,38,42,48,55,69,72]. Only a small minority connect statistical metrics to business outcomes, with explicit cost or profit reporting limited to total processing cost (TPC) in a cost-sensitive boosting setting [23] and total cost under cost-aware ensembles [62]. Taken together, the literature favors recall-conscious, precision–recall (PR)-aware evaluation, but there remains significant scope for standardizing the reporting of AUC-PR, threshold-dependent summaries, confusion matrix disclosure, MCC, and cost-linked measures to better support deployment decisions.
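A cost-linked summary of the kind called for above can be sketched as follows. This is a generic illustration and not the TPC measure of [23] or the total-cost formulation of [62]. The per-error costs, candidate thresholds, and data are all hypothetical.

```python
def total_cost(y_true, scores, threshold, cost_fn, cost_fp):
    """Sum of misclassification costs when scores >= threshold are flagged as fraud."""
    missed = sum(1 for y, s in zip(y_true, scores) if y == 1 and s < threshold)
    false_alerts = sum(1 for y, s in zip(y_true, scores) if y == 0 and s >= threshold)
    return missed * cost_fn + false_alerts * cost_fp


def cheapest_threshold(y_true, scores, candidates, cost_fn, cost_fp):
    """Pick the candidate threshold with the lowest total cost."""
    return min(candidates, key=lambda t: total_cost(y_true, scores, t, cost_fn, cost_fp))


# Hypothetical scores for eight legitimate (0) and two fraudulent (1) transactions.
y_true = [0, 0, 0, 0, 0, 0, 0, 1, 0, 1]
scores = [0.05, 0.10, 0.20, 0.30, 0.35, 0.40, 0.60, 0.65, 0.70, 0.90]
candidates = [0.5, 0.8]

# Assumed costs: a missed fraud costs 100 units, a false alert costs 5 units.
fraud_heavy = cheapest_threshold(y_true, scores, candidates, cost_fn=100, cost_fp=5)
# Reversed assumption: false alerts are the expensive error.
alert_heavy = cheapest_threshold(y_true, scores, candidates, cost_fn=5, cost_fp=100)

print("preferred threshold when missed fraud is costly:", fraud_heavy)
print("preferred threshold when false alerts are costly:", alert_heavy)
```

The same scores lead to different operating points depending on the assumed cost ratio, which is why reporting costs alongside statistical metrics makes results easier to translate into deployment decisions.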

3.2.4. RQ4: How Are Interpretability Techniques Operationalized in Non-Resampled Credit-Card Fraud Studies to Explain Predictions and Support Operational Deployment

Explainable artificial intelligence (XAI) aims to make the behavior of ML models transparent and understandable, particularly for end-users, regulatory authorities, organizational personnel, and other stakeholders [79]. In credit card fraud detection, this involves producing explanations that data scientists can validate, business users and investigators can act upon, and risk managers or regulators can audit. Interest in XAI has grown alongside the increasing deployment of ML in finance, driven by the need for trust, accountability, robustness, and compliance in high-stakes environments [80].

Within the reviewed literature, only two studies implemented operational interpretability, and both adopted SHAP [7,73]. In the FraudX AI framework [7], SHAP was applied to provide global and local attributions over PCA-anonymized features. Summary plots and feature-importance rankings supported auditor understanding and regulatory transparency, although the authors note that anonymization restricted semantic interpretation despite stable ranking of influential components.

In [73], an unsupervised, production-proximate study using a Greek bank’s transaction data also integrated SHAP to generate both global and per-transaction explanations. Force and decision plots highlighted the drivers behind anomaly scores, aiding analysts in prioritization and reducing cognitive load. Interviews with monitoring staff indicated improved case review efficiency when SHAP outputs accompanied model alerts.

Across both implementations, SHAP is positioned as a model-agnostic interpretability layer serving two primary purposes:

Global governance—identifying the most influential features driving fraud predictions;

Local justification—providing case-level explanations to support analyst workflows and post hoc threshold adjustments.
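The local attributions that SHAP approximates are Shapley values. The sketch below computes them exactly by enumerating feature coalitions for a tiny, hypothetical scoring function. It does not use the SHAP library and does not reproduce the pipelines of [7] or [73]. Replacing "absent" features with a fixed baseline value is a simplifying assumption, and the model, feature values, and names are illustrative.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley attributions; features outside a coalition take their baseline value."""
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Weight of a coalition of this size in the Shapley average.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                members = set(subset)
                phi[i] += weight * (value(members | {i}) - value(members))
    return phi


def risk_score(z):
    """Hypothetical fraud score with an interaction between the first two features."""
    return z[0] + 2 * z[1] + z[0] * z[1] - z[2]


x = [3.0, 1.0, 2.0]         # transaction being explained
baseline = [1.0, 0.0, 2.0]  # reference transaction

phi = shapley_values(risk_score, x, baseline)
print("attributions:", phi)
print("sum of attributions:", sum(phi))
print("score difference:   ", risk_score(x) - risk_score(baseline))
```

The attributions sum to the difference between the explained score and the baseline score, which is the additivity property that makes per-transaction explanations auditable. Exact enumeration grows exponentially with the number of features, which is why practical tools rely on approximations.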

Two additional studies did not implement XAI but explicitly identified it as planned work. Renganathan et al. [46] suggested that adding explainability could enhance model transparency, foster user trust, and mitigate bias. Jain et al. [58] likewise proposed adopting SHAP and other model-agnostic methods to illuminate decision processes. Although not counted as operational XAI, these proposals reflect growing awareness of the need for explanation in governance and auditability, especially under original imbalanced distributions.

Overall, SHAP emerges as the predominant technique for operationalizing interpretability in non-resampled credit card fraud detection studies, valued for its model-agnostic design and ability to deliver both governance-level summaries and transaction-level justifications. However, adoption remains rare, constrained by: (i) semantic loss from anonymized feature spaces; (ii) lack of analyst-friendly explanation interfaces; and (iii) concerns about explanation stability under data drift and evolving fraud tactics.

While SHAP dominates current implementations, other model-agnostic explainability techniques are emerging that could also be relevant for fraud detection. Local interpretable model-agnostic explanations (LIME), for instance, approximates complex model decisions with local surrogate models and has been applied in adjacent financial risk settings [81]. More recent approaches such as counterfactual explanations, which identify minimal feature changes that would alter a model’s decision, are gaining attention as actionable tools for providing recourse in fraud detection. Diverse counterfactual explanations (DiCE) have been benchmarked alongside SHAP, LIME, and Anchors in ANN-based fraud models, showing complementary strengths and challenges [82]. Advances such as conformal prediction interval counterfactuals (CPICFs) further illustrate how individualized counterfactuals can reduce uncertainty and improve interpretability in transaction fraud datasets [83]. In parallel, attention-based interpretability mechanisms have been applied in online banking fraud detection, where hierarchical attention models can highlight both the most suspicious transactions and the most informative features, offering human-understandable explanations [84]. Recent studies further demonstrate the value of combining SHAP and LIME with human-in-the-loop oversight to support auditability and regulatory transparency in financial fraud detection frameworks [85].

Although not yet adopted in the non-resampled credit card fraud detection studies we reviewed, these techniques represent promising directions for extending explainability beyond SHAP in future research and deployment. This points to a concrete research agenda: (a) retain or document semantics-preserving feature mappings so attributions remain actionable; (b) provide threshold-dependent summaries alongside explanations to inform policy-setting; (c) assess the fidelity and robustness of explanations under temporal drift; and (d) evaluate the operational impact of XAI, measuring its influence on triage speed, alert quality, and analyst consensus in addition to standard statistical performance metrics.
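The idea behind counterfactual explanations, finding a small feature change that would alter the decision, can be sketched with a brute-force search over single-feature changes. This is a deliberately simplified illustration and not an implementation of DiCE or CPICFs. The rule-based scorer, its feature names, weights, and the candidate values are all hypothetical.

```python
def is_flagged(tx):
    """Hypothetical rule-based scorer: flag when the integer risk score exceeds 350."""
    score = tx["amount"] + 200 * tx["foreign"] + 100 * tx["night"]
    return score > 350


def single_feature_counterfactuals(tx, candidates):
    """For each feature, find the candidate value closest to the original that flips the decision."""
    original = is_flagged(tx)
    result = {}
    for name, values in candidates.items():
        flipping = [v for v in values if is_flagged({**tx, name: v}) != original]
        if flipping:
            result[name] = min(flipping, key=lambda v: abs(v - tx[name]))
    return result


# Hypothetical flagged transaction and the alternative values considered per feature.
transaction = {"amount": 200, "foreign": 1, "night": 0}
candidates = {"amount": [0, 50, 100, 150], "foreign": [0], "night": [0, 1]}

counterfactuals = single_feature_counterfactuals(transaction, candidates)
print("flagged:", is_flagged(transaction))
print("smallest single-feature changes that clear the alert:", counterfactuals)
```

Here the alert would be cleared by lowering the amount to 150 or by a domestic rather than foreign transaction, while changing the time-of-day flag alone would not help. Dedicated counterfactual methods additionally handle multi-feature changes, plausibility constraints, and diversity among the returned alternatives.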

4. Discussion

This review highlights the close interplay between dataset availability, model choice, evaluation practice, and explainability in credit card fraud detection studies that preserve the original class imbalance. The European Credit Card Fraud dataset overwhelmingly dominates as the benchmark of choice, appearing in nearly three-quarters of the included studies (32 of 44), providing a shared basis for cross-paper comparability but also imposing constraints on interpretability due to PCA-based anonymization of features. Public datasets like IEEE-CIS and PaySim appear far less frequently. In contrast, private datasets from financial institutions remain valuable but are typically accessible only through collaborations or competition-based platforms [24,73,86]. Synthetic datasets such as BankSim and PaySim are leveraged where privacy concerns or controlled experimentation are priorities, though they often cannot fully capture evolving fraud behaviors.

Across the included studies, tree-based ensembles, notably RF and gradient boosting, are the most frequently applied algorithms. Their dominance is unsurprising for high-dimensional, heterogeneous tabular data, where they offer strong handling of mixed feature types, built-in support for class weighting, and competitive precision–recall trade-offs. Linear and margin-based baselines (LR, SVM) remain common for interpretability and benchmarking, while deep and hybrid architectures (CNN–BiLSTM, TabTransformer) are emerging in contexts where temporal or relational patterns are essential. Notably, most studies handle imbalance at the model level, using class weights, focal or modified loss functions, or threshold calibration, rather than applying resampling techniques such as SMOTE or undersampling. This reflects production realities, where preserving the actual fraud prior facilitates integration with downstream controls, prevents oversampling artifacts, and avoids leakage risks.

Evaluation practices in the reviewed literature are uniformly oriented toward the positive class, with all studies reporting Recall and Precision, as required by the inclusion criteria. However, AUC-ROC remains more frequently reported than AUC-PR, despite the latter’s greater relevance under extreme skew. For future research, AUC-PR should be treated as the primary evaluation metric, with AUC-ROC retained only as a supplementary indicator. Threshold-dependent reporting, such as confusion matrix counts and operating-point precision/recall, is also essential for transparency. Beyond statistical measures, cost-sensitive and profit-based metrics should be incorporated into future studies to reflect the asymmetric risks of false negatives and false positives in real-world deployment. Such adoption would significantly improve the comparability, operational realism, and policy relevance of fraud detection research.

Explainability remains underdeveloped: only two studies operationalize model interpretability, and both adopt SHAP. In these implementations, SHAP serves as a model-agnostic layer for generating global feature rankings to support governance and local transaction-level explanations to aid case review. While both report positive analyst feedback and improved triage efficiency, the utility of explanations is limited by anonymized feature spaces and the lack of user-friendly analyst interfaces. A small number of additional studies acknowledge the importance of explainability and propose integrating it in future work, underscoring its perceived but unrealized value in the field.

Taken together, these findings suggest several methodological and practical priorities for advancing credit card fraud detection research under non-resampled conditions:

Dataset diversification and transparency. Broaden the set of publicly available benchmark datasets, ideally with non-anonymized, privacy-preserving features to enable more actionable explainability and cross-study comparability;

Balanced model exploration. Maintain strong tree-based ensemble baselines while expanding evaluation of sequence, graph, and attention-based models in scenarios where temporal and relational structures are prominent;

Evaluation alignment with deployment needs. Prioritize PR-space metrics, threshold-specific performance, and cost/profit analysis to bridge model evaluation with operational decision-making;

Operational explainability. Preserve or securely map feature semantics to enable actionable attributions, design analyst-friendly explanation interfaces, and assess explanation robustness under data drift.

By integrating methodological rigor with operational realism, future studies can better align academic advances with the needs of fraud detection teams, regulators, and financial institutions, ultimately enhancing both the performance and trustworthiness of deployed systems.

5. Limitations and Threats to Validity

Several limitations should be acknowledged when interpreting this review. First, the evidence base is heavily skewed toward the European Credit Card Fraud dataset, which facilitates reproducibility but restricts external validity and constrains explainability due to PCA-based anonymization.

Second, our inclusion criteria, requiring explicit reporting of both Precision and Recall and at least four evaluation metrics, may bias the corpus toward studies with more comprehensive reporting practices, potentially excluding otherwise relevant work. Of the 663 reports assessed in full text, only 44 (6.6%) satisfied all quality requirements, indicating how few studies in the literature currently meet these reporting standards.

Third, heterogeneity in experimental protocols (data splits, cross-validation folds, temporal ordering, hyperparameter tuning strategies) limits the strict comparability of reported results across studies.

Fourth, the body of published studies may be subject to publication bias, as works with negative or inconclusive findings are less likely to be reported, which may limit the visibility of alternative approaches or experimental failures.

Finally, limited transparency in code, seeds, and preprocessing steps in many studies restricts the ability to replicate results, assess robustness, and conduct fair head-to-head comparisons.

These limitations also affect the generalizability of our findings. Heavy reliance on the European Credit Card dataset may restrict the applicability of observed methodological trends to other domains such as mobile payments or online banking, where transaction structures and fraud patterns differ. Likewise, inclusion and reporting biases may overrepresent well-documented or positive outcomes, overstating the maturity of the field. While the trends identified here are robust within the reviewed corpus, caution is warranted when generalizing these findings to the broader landscape of fraud detection research under class imbalance.

6. Conclusions

Financial fraud remains a persistent threat across banking and e-commerce, with losses amplified by the rarity and evolving nature of fraudulent transactions. Modern ML methods offer scalable screening of high-volume transaction streams, but their operational value depends on faithfully reflecting real-world class imbalance, applying rigorous evaluation protocols, and delivering outputs that stakeholders can interpret and act upon.

This review systematically examined forty-four primary studies that retained the native class distribution. The evidence is grouped into four categories. Datasets: a small set of public benchmarks, most notably the European Credit Card Fraud dataset, anchors much of the literature, enabling replication but constraining semantic interpretability due to anonymization; private and synthetic datasets appear less frequently and are inconsistently documented. Algorithms: tree-based ensembles dominate non-resampled settings, with LR and SVM serving as standard baselines; deep and hybrid architectures (sequence, convolutional, attention-based, graph) are present but less common. Imbalance handling occurs primarily at the model/threshold level (class/cost weighting, modified losses, operating-point calibration) rather than via resampling. Evaluation: Precision and Recall are consistently reported, F1-score and Accuracy are frequent, while AUC-PR, more suitable for rare-event regimes, remains underused compared to AUC-ROC. Links between statistical performance and business outcomes are rare. Explainability: operational XAI adoption is limited; where present, SHAP is used for global governance and local triage, but anonymized features limit business-semantic insight.

The findings suggest clear priorities for future work. Data assets would benefit from semantics-preserving benchmarks or secure feature dictionaries to support interpretable modeling and policy translation. Methodologically, evaluation protocols must move beyond over-reliance on AUC-ROC. AUC-PR should be treated as the primary evaluation metric, complemented by threshold-specific reporting. In addition, cost- or profit-sensitive measures should become mandatory standards in fraud detection research under non-resampled conditions. This shift would better align academic benchmarks with the realities of operational deployment, where precision–recall trade-offs and asymmetric error costs dictate effectiveness.