ViT-DCNN: Vision Transformer with Deformable CNN Model for Lung and Colon Cancer Detection

Simple Summary

Abstract

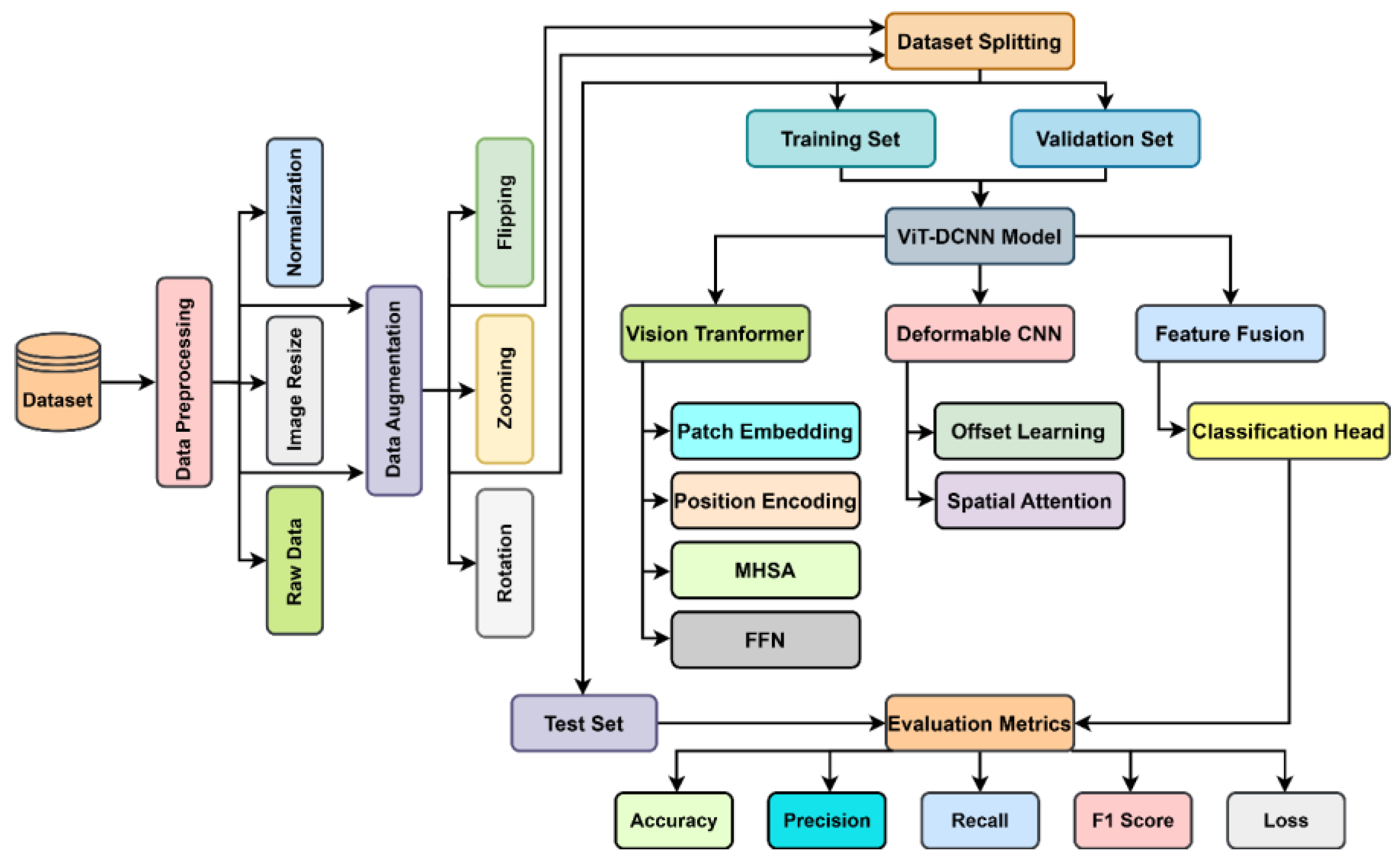

1. Introduction

- Integrated ViT-DCNN Model: The study proposes a novel integrated model combining Vision Transformer (ViT) with Deformable Convolutional Neural Network (DCNN), leveraging ViT’s self-attention for global contextual feature extraction and DCNN’s adaptive receptive fields for capturing fine-grained, localized spatial details in histopathological images.

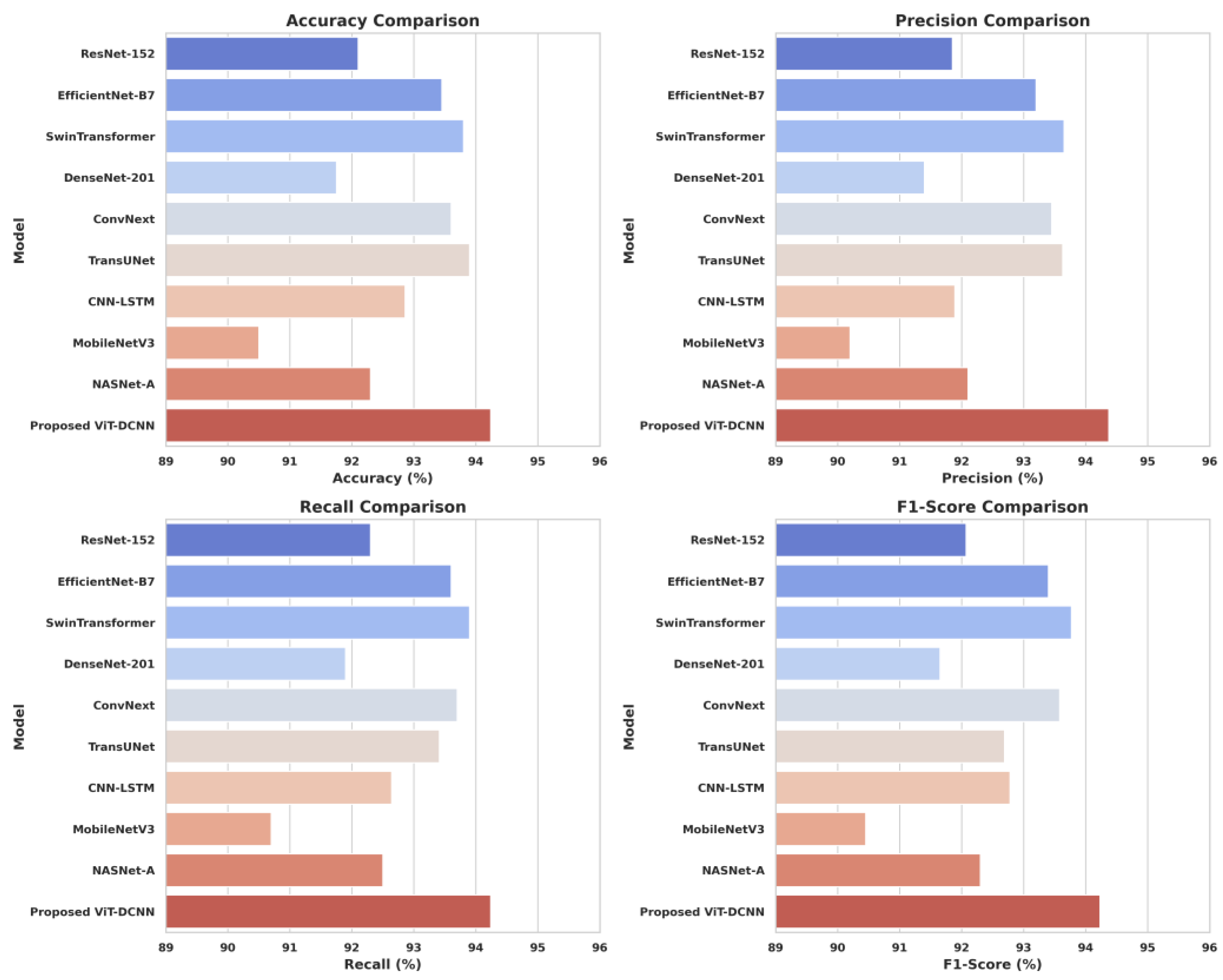

- Superior Performance Metrics: The ViT-DCNN model achieves a test accuracy of 94.24%, precision of 94.37%, recall of 94.24%, and F1-score of 94.23%, outperforming state-of-the-art models such as ResNet-152 (92.10% accuracy), SwinTransformer (93.80% accuracy), and TransUNet (93.90% accuracy) on all four metrics.

- Hierarchical Feature Fusion (HFF): The model introduces an HFF module with a Squeeze-and-Excitation (SE) block to effectively combine global features from ViT and local features from DCNN, enhancing feature representation and improving classification accuracy for lung and colon cancer detection.

- Robust Data Preprocessing: The study employs comprehensive preprocessing methods, including resizing images to 224 × 224 pixels, min-max normalization, and data augmentation (rotation, zooming, and flipping), to improve model generalization and reduce overfitting on the Lung and Colon Cancer Histopathological Images dataset.

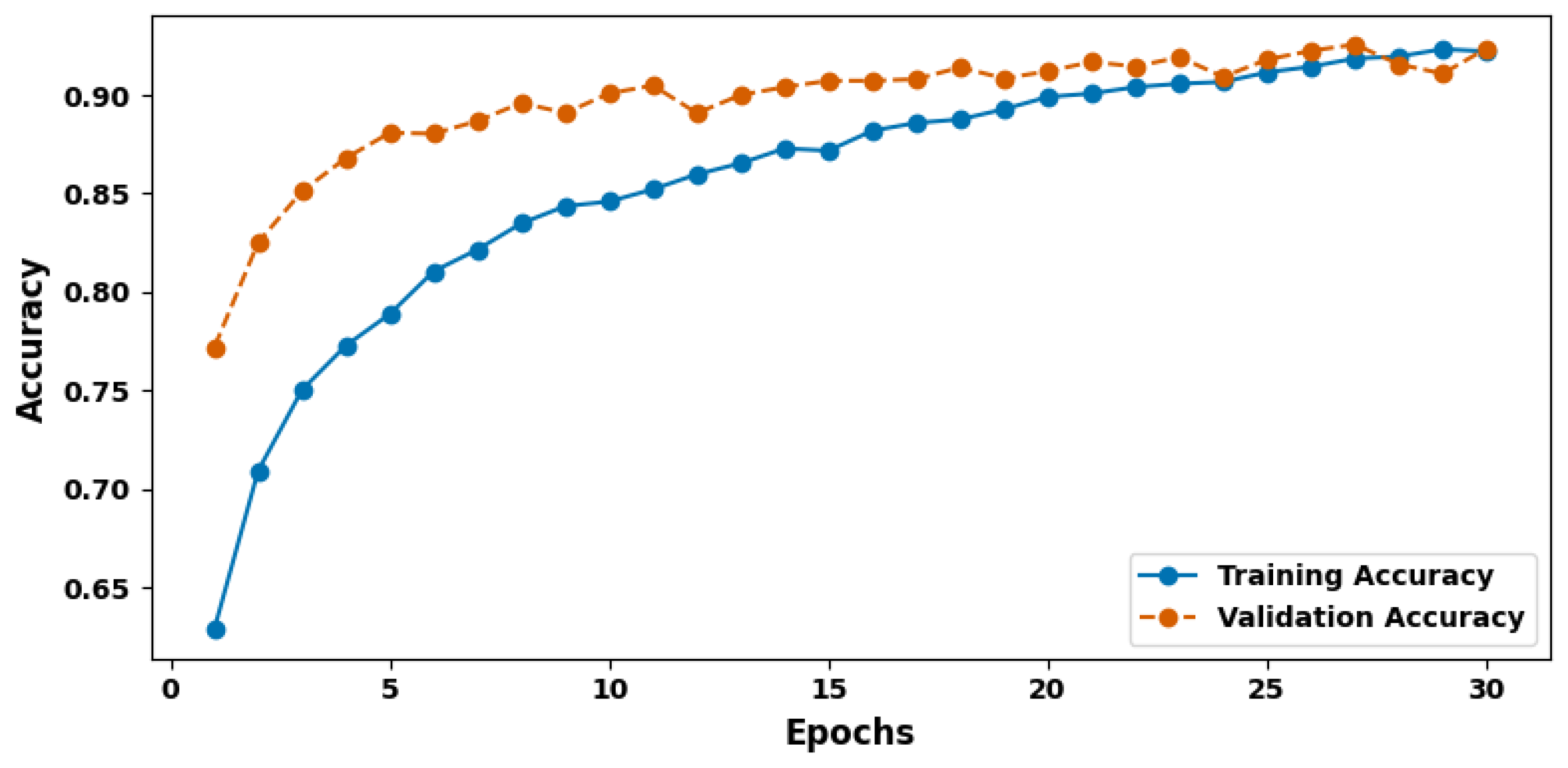

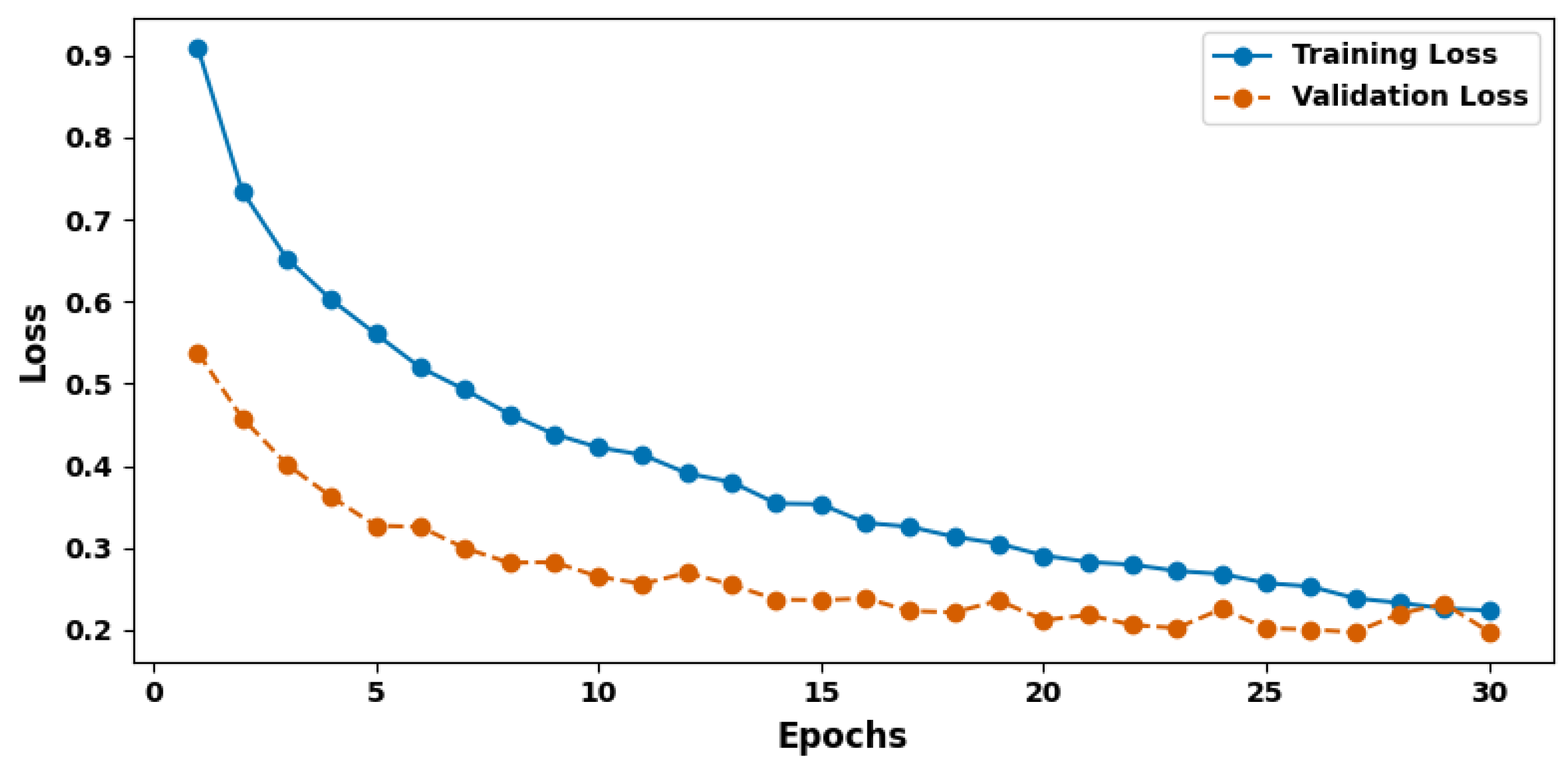

- Effective Training Strategy: The model is trained with the AdamW optimizer at a learning rate of 1 × 10⁻⁵ for up to 50 epochs, with early stopping after five epochs without improvement in validation accuracy. Training converges stably and the model generalizes well (validation accuracy of 92.04%).

- Clinical Relevance: The model’s high precision and recall minimize false positives and negatives, making it a reliable tool for efficient lung and colon cancer detection, with the potential to assist radiologists in clinical settings by improving diagnostic accuracy and patient outcomes.

2. Materials and Methods

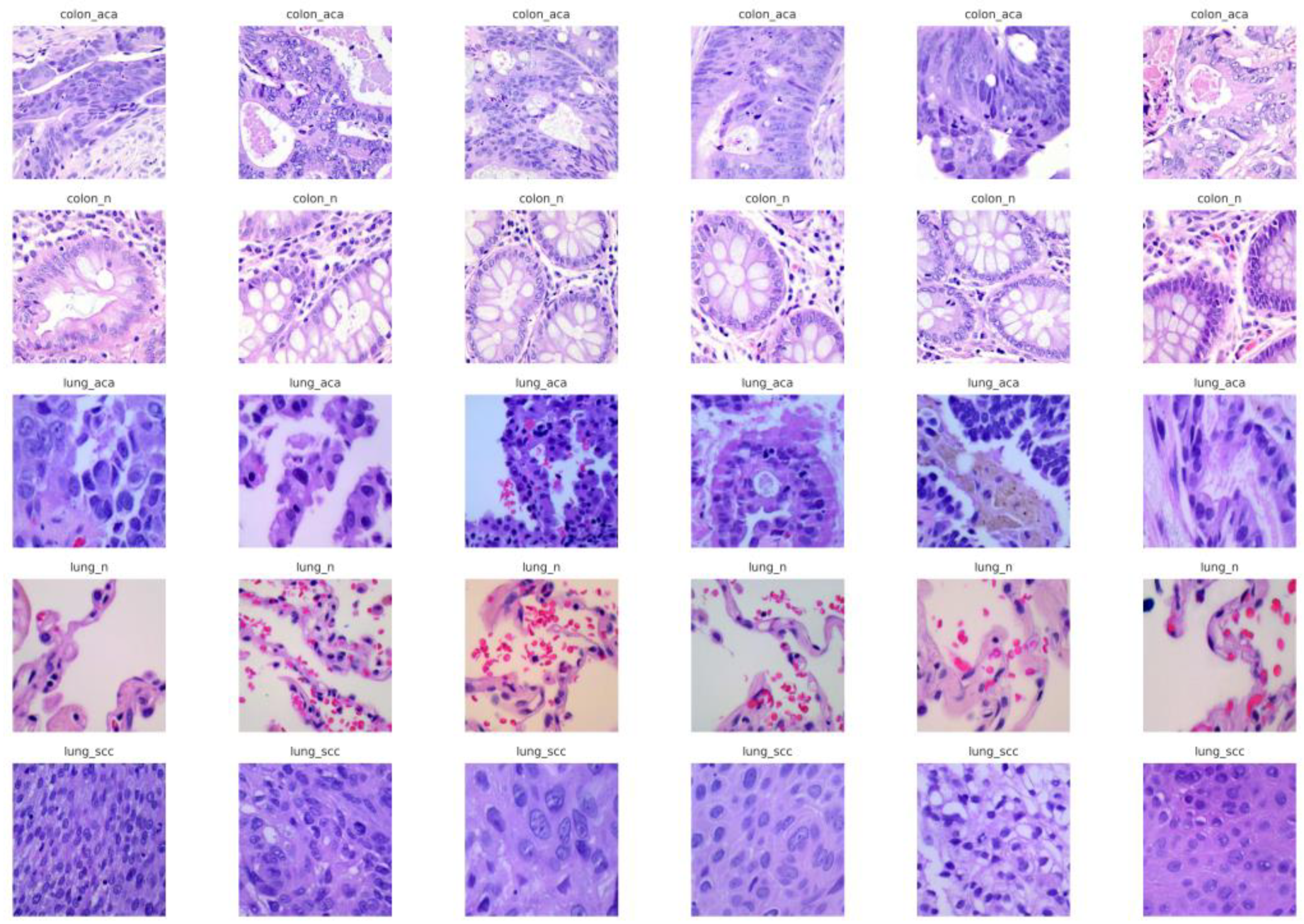

2.1. Dataset

2.2. Data Preprocessing

2.2.1. Image Resizing

2.2.2. Normalization
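Min-max normalization, listed among the preprocessing steps, rescales each intensity x to (x − min) / (max − min). The snippet below is a minimal sketch that assumes per-image scaling over a flat list of pixel values; whether the study normalizes per image or over the whole dataset is not stated in this text, and the function name is illustrative.

```python
def min_max_normalize(pixels):
    """Scale a flat list of pixel intensities to the range [0, 1]."""
    lo, hi = min(pixels), max(pixels)
    if hi == lo:  # constant image: avoid division by zero
        return [0.0 for _ in pixels]
    return [(p - lo) / (hi - lo) for p in pixels]


normalized = min_max_normalize([0, 64, 128, 255])
```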

2.2.3. Data Augmentation

- Rotation: Images were rotated randomly by angles of up to 20 degrees. The rotation matrix R(θ) used for this technique is defined as: $R(\theta) = \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix}$

- Zooming: The model was trained to recognize cancerous patterns at different scales by applying random zoom in/out with a magnification factor up to 20%. This is represented as:

- Flipping: Images were flipped horizontally and vertically in random order, emulating different orientations of the tissue samples. Together, these augmentations enlarge the training set with more diverse images, which helps the model learn more robust features.

- Stratified Splitting: To ensure that the training, validation, and test sets all preserved the class distribution, the data was split into a training set (80%), validation set (10%), and test set (10%) using stratified random sampling. Each class is therefore represented proportionally in every split, which matters particularly where class imbalances exist, as is common in routine medical diagnosis [31]. After resizing, normalization, augmentation, and stratified splitting, the dataset is ready to be fed into the deep learning model, and these steps improve both its performance and its ability to generalize [32].
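The augmentation settings listed above can be sketched as follows. This is an illustration only: the symmetric ±20 degree range, the 0.8 to 1.2 zoom interval, and the 50% flip probabilities are assumptions, since the text gives only the upper limits, and all function names are hypothetical.

```python
import math
import random


def rotation_matrix(theta_deg):
    """2x2 matrix R(theta) for a counter-clockwise rotation by theta degrees."""
    t = math.radians(theta_deg)
    return [[math.cos(t), -math.sin(t)],
            [math.sin(t), math.cos(t)]]


def hflip(img):
    """Mirror each row (left-right flip)."""
    return [row[::-1] for row in img]


def vflip(img):
    """Reverse the row order (top-bottom flip)."""
    return img[::-1]


def sample_augmentation(rng):
    """Draw one random augmentation setting within the stated limits."""
    return {
        "angle_deg": rng.uniform(-20.0, 20.0),  # rotation of up to 20 degrees
        "zoom": rng.uniform(0.8, 1.2),          # zoom in/out by up to 20%
        "hflip": rng.random() < 0.5,            # flip probabilities are assumed
        "vflip": rng.random() < 0.5,
    }


rng = random.Random(0)
params = sample_augmentation(rng)
img = [[1, 2], [3, 4]]
```

In practice an image library would apply the sampled angle and zoom to the pixel grid; the sketch only shows how the parameters are drawn and what the flips do.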

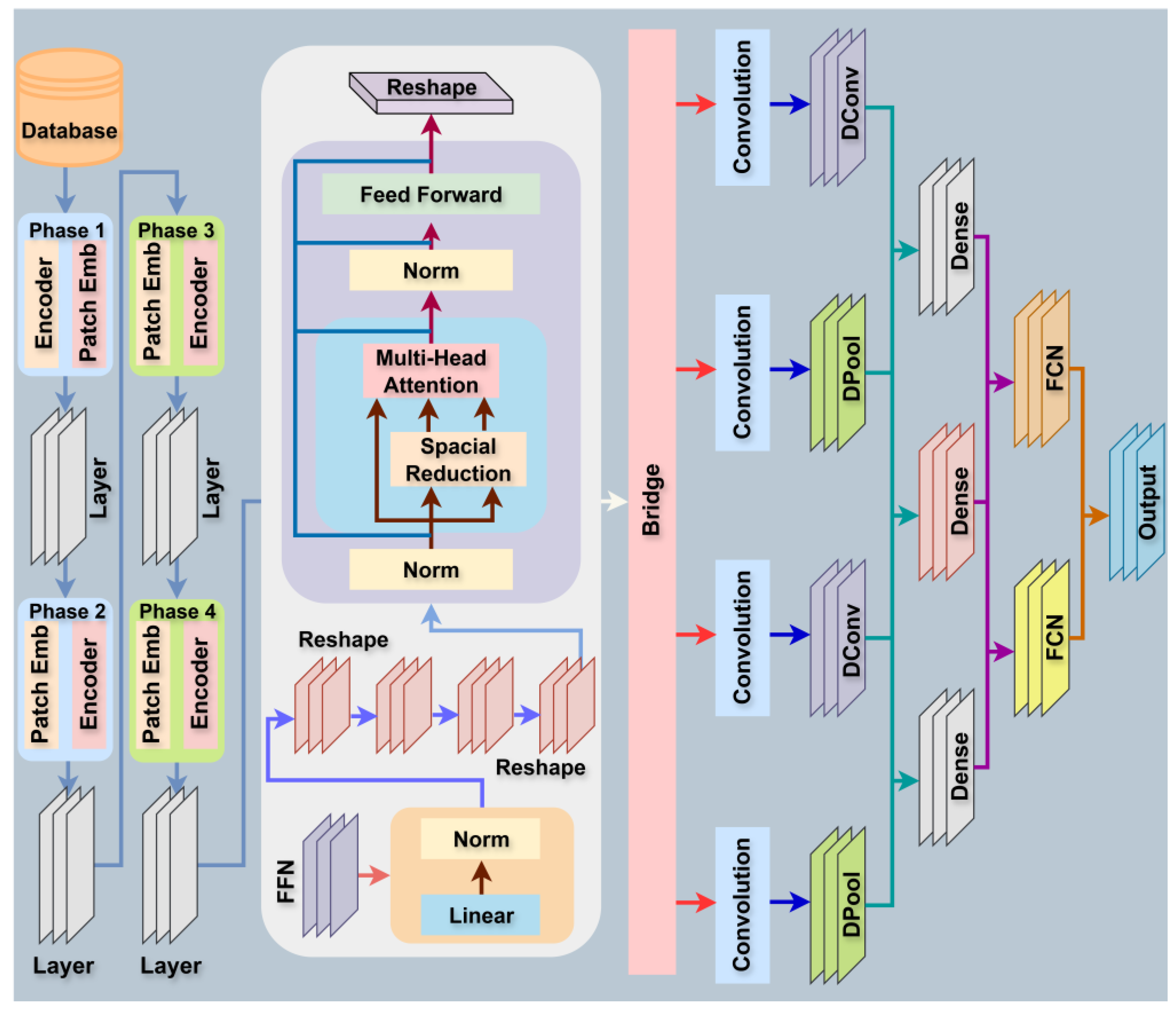
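The stratified 80/10/10 split described in Section 2.2.3 can be sketched as below. The helper name, the rounding rule, and the toy labels are assumptions for illustration; they are not taken from the study's code.

```python
import random
from collections import defaultdict


def stratified_split(labels, fractions=(0.8, 0.1, 0.1), seed=0):
    """Return (train, val, test) index lists that preserve per-class proportions."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)
    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_train = int(round(fractions[0] * len(indices)))
        n_val = int(round(fractions[1] * len(indices)))
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


labels = ["a"] * 50 + ["b"] * 50  # toy labels, not the real dataset
train_idx, val_idx, test_idx = stratified_split(labels)
```

Splitting each class separately is what keeps the class ratio identical across the three sets, which is the point of stratification.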

2.3. Model Design and Description

2.3.1. Vision Transformer (ViT) Backbone

- Pi is the ith patch embedding.

- Wemb is the learnable projection matrix.

- bemb is the bias term.

- Xi denotes the ith image patch.
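The symbols above suggest a linear patch projection. The sketch below assumes the affine form Pi = Wemb · Xi + bemb (the equation itself is missing from this text) and uses a toy 4 × 4 single-channel image with 2 × 2 patches; the patch size, weights, and function names are hypothetical.

```python
def extract_patches(image, patch):
    """Split an H x W image (nested lists) into flattened patch x patch blocks."""
    h, w = len(image), len(image[0])
    patches = []
    for top in range(0, h - patch + 1, patch):
        for left in range(0, w - patch + 1, patch):
            patches.append([image[top + r][left + c]
                            for r in range(patch) for c in range(patch)])
    return patches


def embed(x, weight, bias):
    """Assumed linear projection P_i = W_emb x_i + b_emb for one flattened patch."""
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(weight, bias)]


# Toy 4x4 image with 2x2 patches -> 4 patches of length 4.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
patches = extract_patches(image, patch=2)
w_emb = [[1, 0, 0, 0], [0, 0, 0, 1]]  # hypothetical 2 x 4 projection matrix
b_emb = [0.5, -0.5]
embeddings = [embed(p, w_emb, b_emb) for p in patches]
```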

2.3.2. Positional Encoding

- PEi is the positional encoding, computed as:

2.3.3. Multi-Head Self-Attention (MHSA)

- Q, K, and V are the query, key, and value matrices derived from the input embeddings Z.

- dk is the dimensionality of the keys and queries.

- dv is the dimensionality of the values.
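For orientation, a single attention head can be sketched with the standard scaled dot-product form, softmax(Q Kᵀ / √dk) V. The attention equation is missing from this text, so treating it as the standard formulation is an assumption; the helper names and toy matrices are illustrative. Multi-head attention runs several such heads in parallel and concatenates their outputs.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v):
    """Compute softmax(Q K^T / sqrt(d_k)) V for one head; also return the weights."""
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]
    scores = matmul(q, k_t)
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


q = [[1.0, 0.0], [0.0, 1.0]]
k = [[1.0, 0.0], [0.0, 1.0]]
v = [[1.0, 2.0], [3.0, 4.0]]
out, weights = attention(q, k, v)
```

Each row of `weights` sums to one, so every output token is a convex combination of the value vectors.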

2.3.4. Feed-Forward Network (FFN)

- W1 and W2 are the weights.

- b1 and b2 are the biases.

- dff is the size of the hidden layer.
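The feed-forward step stated in Algorithm 1, FFN(Z) = max(0, ZW1 + b1) W2 + b2, can be checked on a small example. The sketch below uses nested lists and made-up weights, with dff = 3 and a one-dimensional output chosen only for brevity.

```python
def ffn(z, w1, b1, w2, b2):
    """Position-wise FFN(Z) = max(0, Z W1 + b1) W2 + b2 on nested lists."""
    def affine(x, w, b):
        # zip(*w) iterates over the columns of w.
        return [[sum(xi * wij for xi, wij in zip(row, col)) + bj
                 for col, bj in zip(zip(*w), b)] for row in x]

    hidden = [[max(0.0, h) for h in row] for row in affine(z, w1, b1)]
    return affine(hidden, w2, b2)


z = [[1.0, -1.0]]                         # one token, model width 2
w1 = [[1.0, 0.0, -1.0], [0.0, 1.0, 1.0]]  # 2 x d_ff, with d_ff = 3
b1 = [0.0, 0.0, 0.0]
w2 = [[1.0], [1.0], [1.0]]                # d_ff x 1 output for brevity
b2 = [0.5]
result = ffn(z, w1, b1, w2, b2)
```

Here ZW1 + b1 = [1, −1, −2], the ReLU keeps only the first entry, and the second layer returns 1 + 0.5 = 1.5.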

2.3.5. Deformable Convolutional Neural Network (Deformable CNN)

2.3.6. Deformable Convolution Layer (DConv)

- x is the input feature map.

- w is the convolution filter.

- Δmij, Δnij are the learned spatial offsets at each location (i, j).
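A deformable convolution samples the input at positions shifted by learned offsets. The sketch below assumes the form yij = Σm Σn wmn · x(i + m + Δmij, j + n + Δnij), with one offset pair per output location as the symbols above suggest, bilinear interpolation for fractional positions, and zero padding outside the map. The equation is missing from this text, so these choices are assumptions, and in a real layer the offsets would be predicted by a separate convolution rather than supplied by hand.

```python
import math


def bilinear(x, r, c):
    """Sample feature map x at fractional (r, c); positions outside count as 0."""
    r0, c0 = math.floor(r), math.floor(c)
    value = 0.0
    for rr in (r0, r0 + 1):
        for cc in (c0, c0 + 1):
            if 0 <= rr < len(x) and 0 <= cc < len(x[0]):
                weight = (1 - abs(r - rr)) * (1 - abs(c - cc))
                value += weight * x[rr][cc]
    return value


def deform_conv2d(x, w, dm, dn):
    """y[i][j] = sum over (m, n) of w[m][n] * x(i + m + dm[i][j], j + n + dn[i][j])."""
    kh, kw = len(w), len(w[0])
    out_h, out_w = len(x) - kh + 1, len(x[0]) - kw + 1
    y = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            for m in range(kh):
                for n in range(kw):
                    y[i][j] += w[m][n] * bilinear(
                        x, i + m + dm[i][j], j + n + dn[i][j])
    return y


x = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
w = [[1.0, 0.0], [0.0, 1.0]]
zeros = [[0.0, 0.0], [0.0, 0.0]]
plain = deform_conv2d(x, w, zeros, zeros)    # zero offsets: ordinary convolution
shift = [[0.5, 0.5], [0.5, 0.5]]
shifted = deform_conv2d(x, w, zeros, shift)  # sample half a pixel to the right
```

With all offsets at zero the result matches a regular convolution, which makes a convenient sanity check for any deformable implementation.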

2.3.7. Offset Learning via Deformable Convolutions

2.3.8. Spatial Attention for Deformable Convolutions

- σ is the sigmoid activation function.

- Conv(Fij) applies a convolutional filter to the feature map Fij to generate attention weights.
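The spatial attention step can be sketched as Aij = σ(Conv(Fij)) followed by an elementwise reweighting of the feature map. The 1 × 1 kernel (a single scalar weight and bias) and the product Frefined = A ⊙ F are assumptions made for this illustration; the text does not give the kernel size or the exact refinement rule.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def spatial_attention(f, weight=1.0, bias=0.0):
    """A = sigmoid(Conv(F)) with a 1x1 kernel, then F_refined = A * F elementwise."""
    attn = [[sigmoid(weight * v + bias) for v in row] for row in f]
    refined = [[a * v for a, v in zip(arow, frow)]
               for arow, frow in zip(attn, f)]
    return attn, refined


f_map = [[0.0, 2.0], [-2.0, 4.0]]
attn, refined = spatial_attention(f_map)
```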

2.3.9. Hierarchical Feature Fusion (HFF) Module

2.3.10. Feature Concatenation

2.3.11. Squeeze-and-Excitation (SE) Block
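Feature concatenation followed by an SE block can be sketched as below. The code follows the usual Squeeze-and-Excitation recipe (global average pooling, a bottleneck of two fully connected layers with ReLU and sigmoid, then channel-wise rescaling); whether the study uses exactly this bottleneck is an assumption, and the feature maps and weights are toy values.

```python
import math


def se_block(channels, w1, w2):
    """channels: list of C feature maps (each H x W). Returns (scales, rescaled maps)."""
    # Squeeze: global average pooling gives one number per channel.
    squeezed = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in channels]
    # Excitation: fully connected -> ReLU -> fully connected -> sigmoid.
    hidden = [max(0.0, sum(w * z for w, z in zip(row, squeezed))) for row in w1]
    scales = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
              for row in w2]
    # Scale: reweight each channel by its learned importance.
    rescaled = [[[s * v for v in row] for row in ch]
                for s, ch in zip(scales, channels)]
    return scales, rescaled


f_vit = [[[1.0, 1.0], [1.0, 1.0]]]    # toy stand-in for ViT features (1 channel)
f_dconv = [[[2.0, 4.0], [6.0, 8.0]]]  # toy stand-in for deformable-CNN features
f_concat = f_vit + f_dconv            # channel-wise concatenation
w1 = [[1.0, 1.0]]                     # 2 channels -> 1 hidden unit (hypothetical)
w2 = [[0.0], [1.0]]                   # 1 hidden unit -> 2 channel scales
scales, f_se = se_block(f_concat, w1, w2)
```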

2.3.12. Classification Head

2.3.13. Output Layer
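The classification head and output layer follow the pattern named in Algorithm 1: global average pooling, a softmax layer, and an argmax for the predicted class. A minimal sketch, assuming a single linear layer before the softmax and three hypothetical classes:

```python
import math


def classify(channels, weight, bias):
    """GAP over each channel, linear layer, softmax, then argmax."""
    z = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in channels]
    logits = [sum(w * v for w, v in zip(row, z)) + b
              for row, b in zip(weight, bias)]
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    return probs, probs.index(max(probs))


features = [[[1.0, 3.0], [5.0, 7.0]], [[0.0, 0.0], [0.0, 2.0]]]  # 2 channels
weight = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # 3 hypothetical classes
bias = [0.0, 0.0, 0.0]
probs, predicted = classify(features, weight, bias)
```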

2.3.14. Loss Function

- yij is the binary indicator (0 or 1) of whether class label j is the correct classification for observation i.

- pij is the predicted probability of observation i being classified as class j.
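With a one-hot indicator and a predicted probability per class, the categorical cross-entropy can be sketched as below. Averaging over observations and clipping probabilities with a small epsilon are assumptions; the loss equation itself is missing from this text.

```python
import math


def cross_entropy(y_true, y_prob, eps=1e-12):
    """Mean over observations of -sum_j y_ij * log(p_ij)."""
    total = 0.0
    for y_row, p_row in zip(y_true, y_prob):
        total -= sum(y * math.log(max(p, eps)) for y, p in zip(y_row, p_row))
    return total / len(y_true)


y_true = [[1, 0, 0], [0, 1, 0]]
y_prob = [[0.5, 0.25, 0.25], [0.25, 0.5, 0.25]]
loss = cross_entropy(y_true, y_prob)
```

Only the probability assigned to the correct class contributes, so both toy observations add −log(0.5) and the mean loss is log 2.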

2.3.15. Training Strategy
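The stopping rule described for training (up to 50 epochs, stop after five epochs without improvement in validation accuracy) can be sketched independently of the model. The function name and the validation curve below are made up to exercise the logic; in real training each accuracy would come from evaluating the model after an epoch.

```python
def early_stopping_summary(val_accuracies, max_epochs=50, patience=5):
    """Return (best_epoch, best_accuracy, epochs_run) for a validation curve."""
    best_acc, best_epoch, stale = float("-inf"), 0, 0
    epochs_run = 0
    for epoch, acc in enumerate(val_accuracies[:max_epochs], start=1):
        epochs_run = epoch
        if acc > best_acc:
            best_acc, best_epoch, stale = acc, epoch, 0
        else:
            stale += 1
            if stale >= patience:  # no improvement for `patience` epochs
                break
    return best_epoch, best_acc, epochs_run


# Made-up validation curve, only to exercise the logic.
history = [0.80, 0.85, 0.90, 0.89, 0.90, 0.88, 0.87, 0.86, 0.95]
best_epoch, best_acc, epochs_run = early_stopping_summary(history)
```

In this toy run the best score occurs at epoch 3 and training halts at epoch 8, so the later value of 0.95 is never reached; that is the usual trade-off of a patience-based rule.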

| Algorithm 1: ViT-DCNN (Vision Transformer with Deformable Convolution) for Lung and Colon Cancer Classification |

| 1: Input: D = {(Xi, Yi)}, α, T, B, θViT, θDConv, N |

| 2: Initialize: θViT, θDConv |

| 3: for epoch = 1 to T do |

| 4: for batch = 1 to ⌈N/B⌉ do |

| 5: Extract mini-batch: |

| 6: |

| 7: Apply Data Augmentation: |

| 8: |

| 9: Vision Transformer (ViT) Forward Pass: |

| 10: Patch Embedding: |

| 11: |

| 12: Positional Encoding: |

| 13: Zi = Pi + PEi |

| 14: Multi-Head Self-Attention: |

| 15: |

| 16: Feed-Forward Network: |

| 17: FFN(Z) = max(0, ZW1 + b1) W2 + b2 |

| 18: Deformable Convolution Forward Pass: |

| 19: Deformable Convolution: |

| 20: |

| 21: Offset Learning: |

| 22: |

| 23: Spatial Attention: |

| 24: |

| 25: Hierarchical Feature Fusion (HFF): |

| 26: Concatenate Vision Transformer and Deformable CNN Features: |

| 27: Fconcat = concat(FViT, FDConv) |

| 28: Squeeze-and-Excitation (SE) Block: |

| 29: |

| 30: Refined Feature Map: |

| 31: |

| 32: Prediction and Softmax Activation: |

| 33: Global Average Pooling: |

| 34: |

| 35: Softmax Layer: |

| 36: |

| 37: Predicted Class: |

| 38: |

| 39: Compute Loss: |

| 40: Cross-Entropy Loss: |

| 41: |

| 42: Gradient Computation: |

| 43: |

| 44: Parameter Update (Using AdamW optimizer): |

| 45: Update the Vision Transformer parameters: |

| 46: |

| 47: Update the Deformable CNN parameters: |

| 48: |

| 49: end for |

| 50: end for |

| 51: Output: |

| 52: Trained ViT-Deformable CNN model with updated parameters θViT and θDConv |

2.4. Evaluation Metrics

- TP (True Positive) is the number of correctly predicted positive instances (lung and colon cancer cases).

- TN (True Negative) is the number of correctly predicted negative instances (non-cancer cases).

- FP (False Positive) is the number of incorrectly predicted positive instances.

- FN (False Negative) is the number of incorrectly predicted negative instances.

- yij is the binary indicator (0 or 1) of whether class label j is the correct classification for observation i.

- pij is the predicted probability of observation i being classified as class j.
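From the four counts defined above, the standard accuracy, precision, recall, and F1 formulas can be computed as in this sketch. The counts are invented for illustration and are not the study's confusion matrix; for a multi-class problem the same formulas would be applied per class and then averaged.

```python
def classification_metrics(tp, tn, fp, fn):
    """Accuracy, precision, recall, and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


metrics = classification_metrics(tp=90, tn=85, fp=10, fn=15)
```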

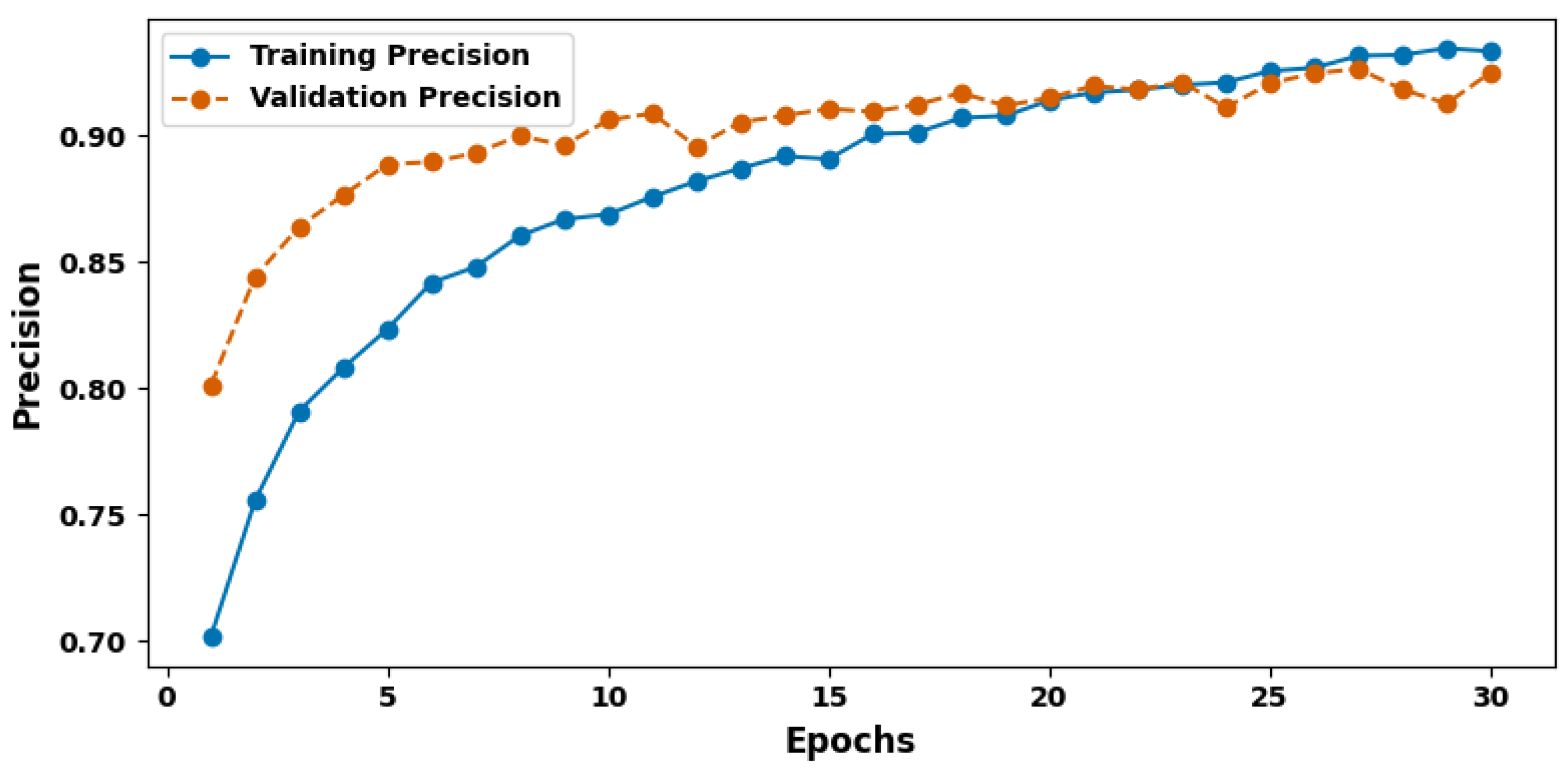

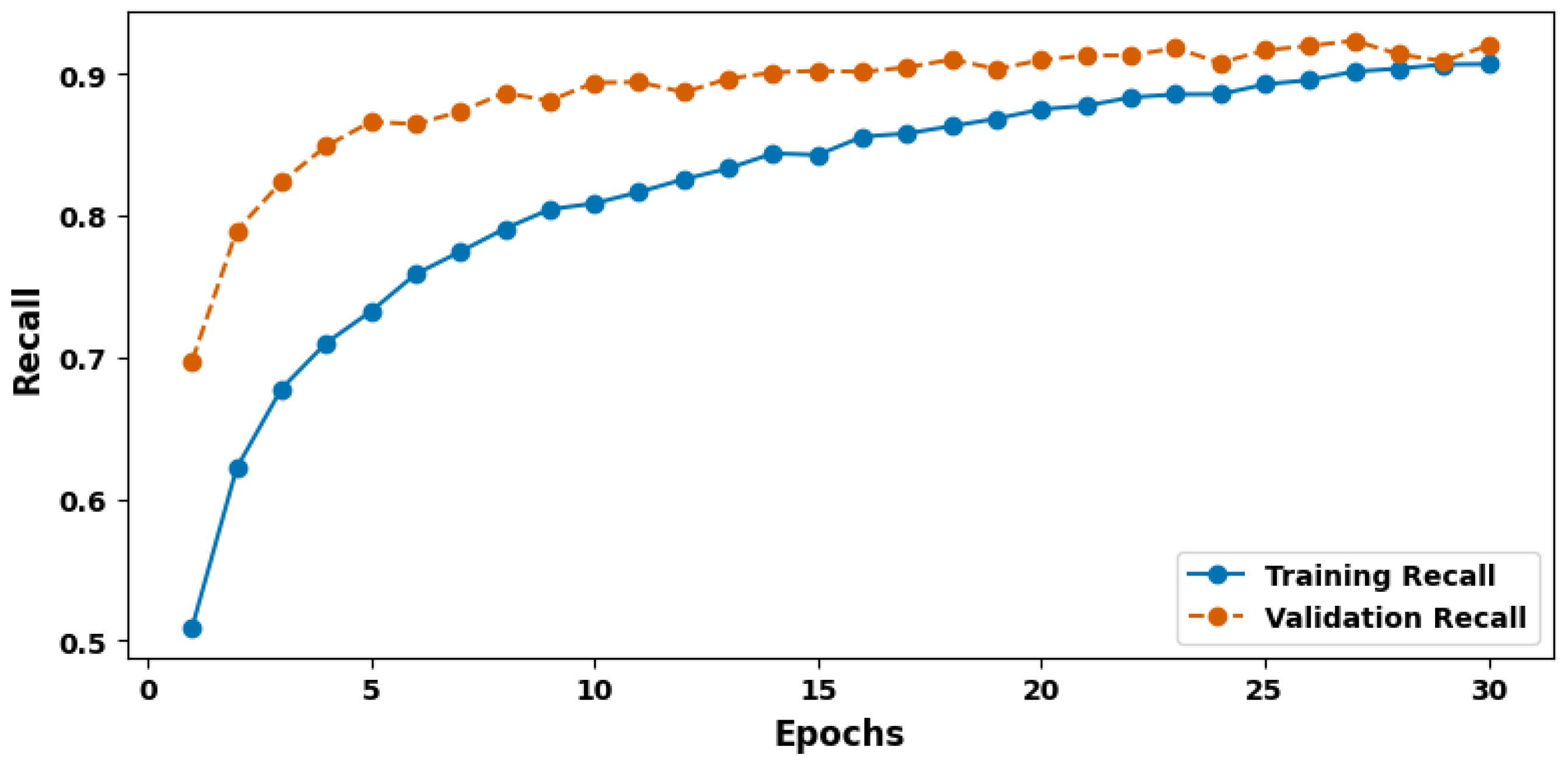

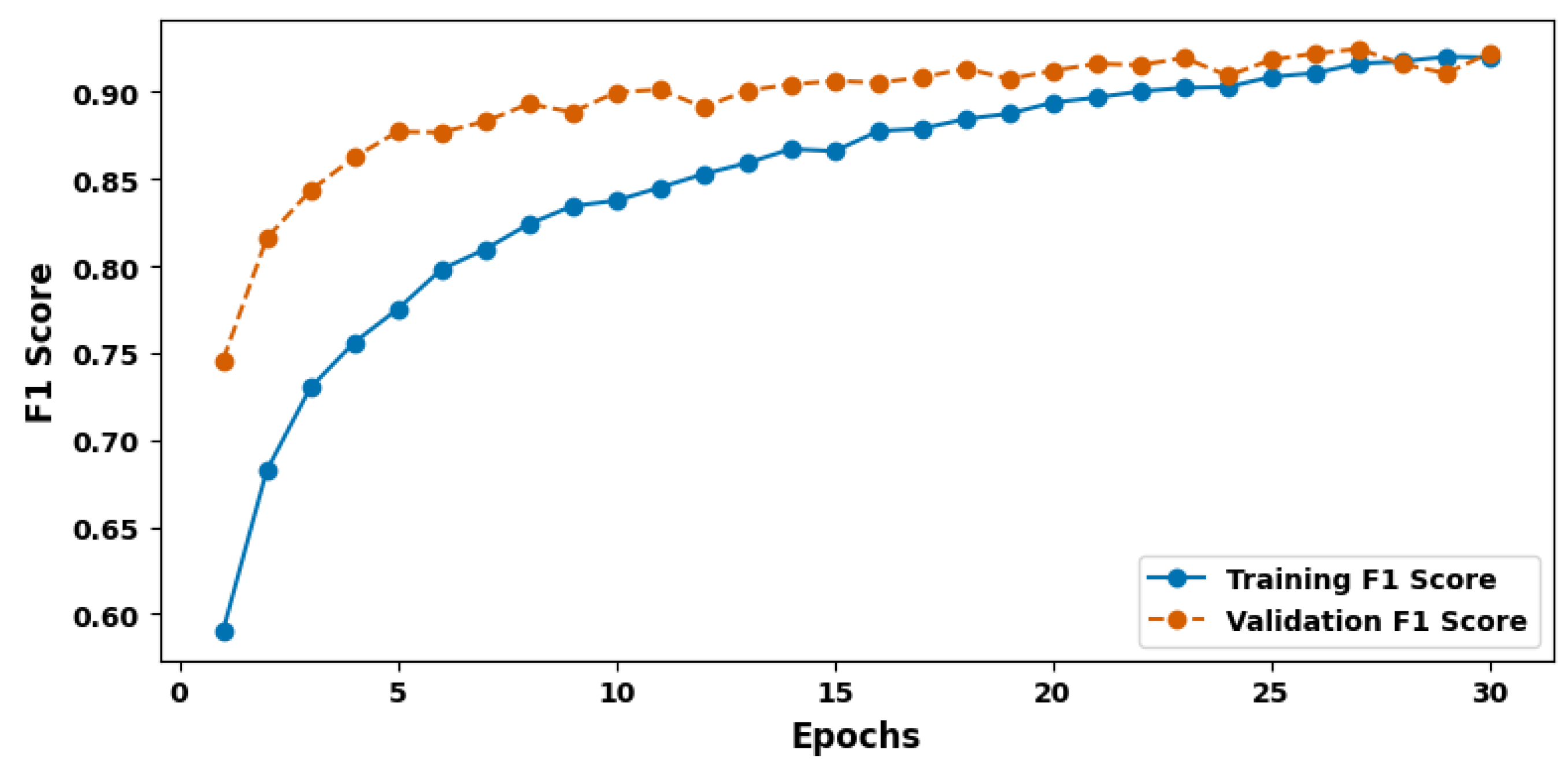

3. Experimental Results

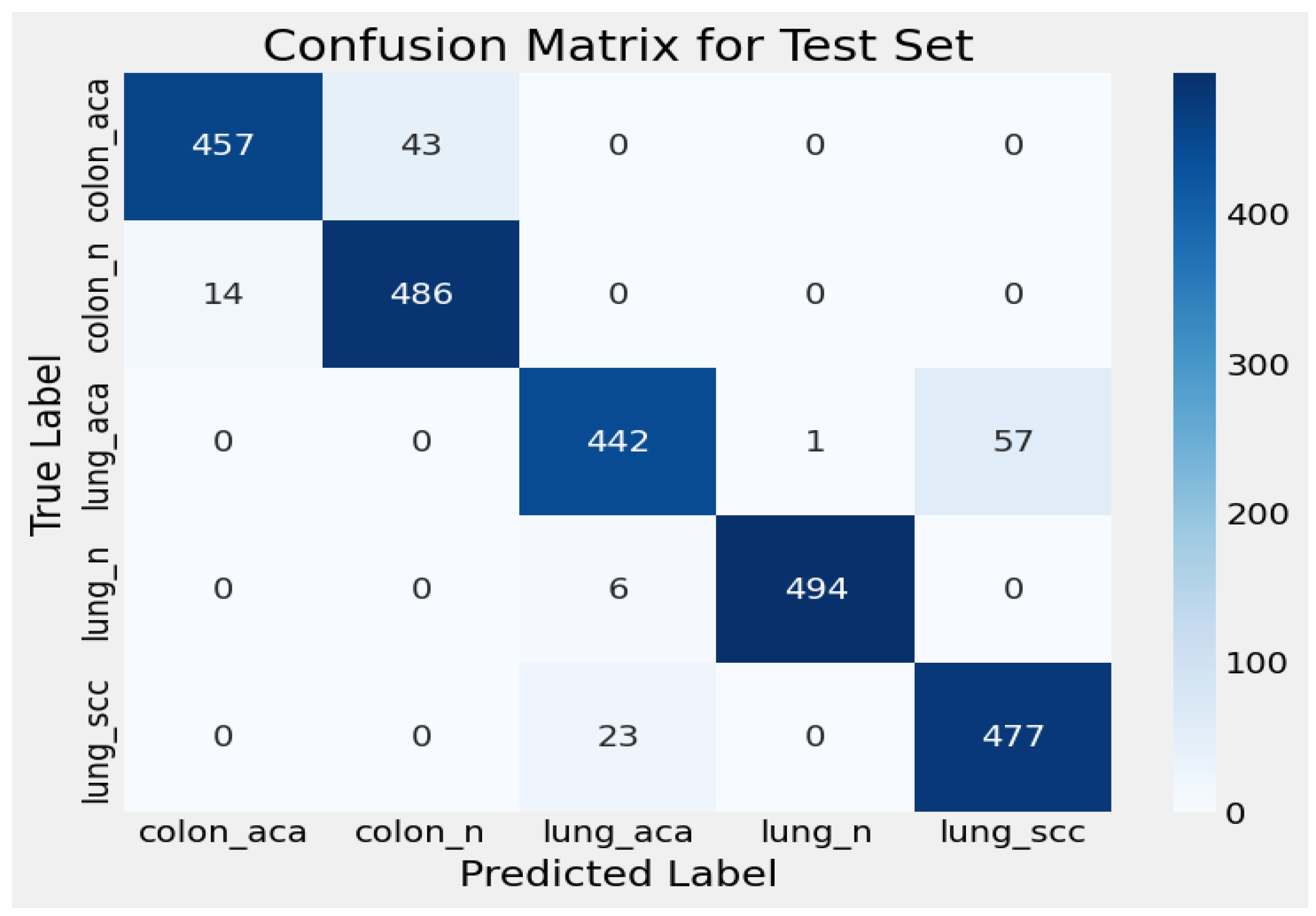

3.1. Confusion Matrix for Test Set

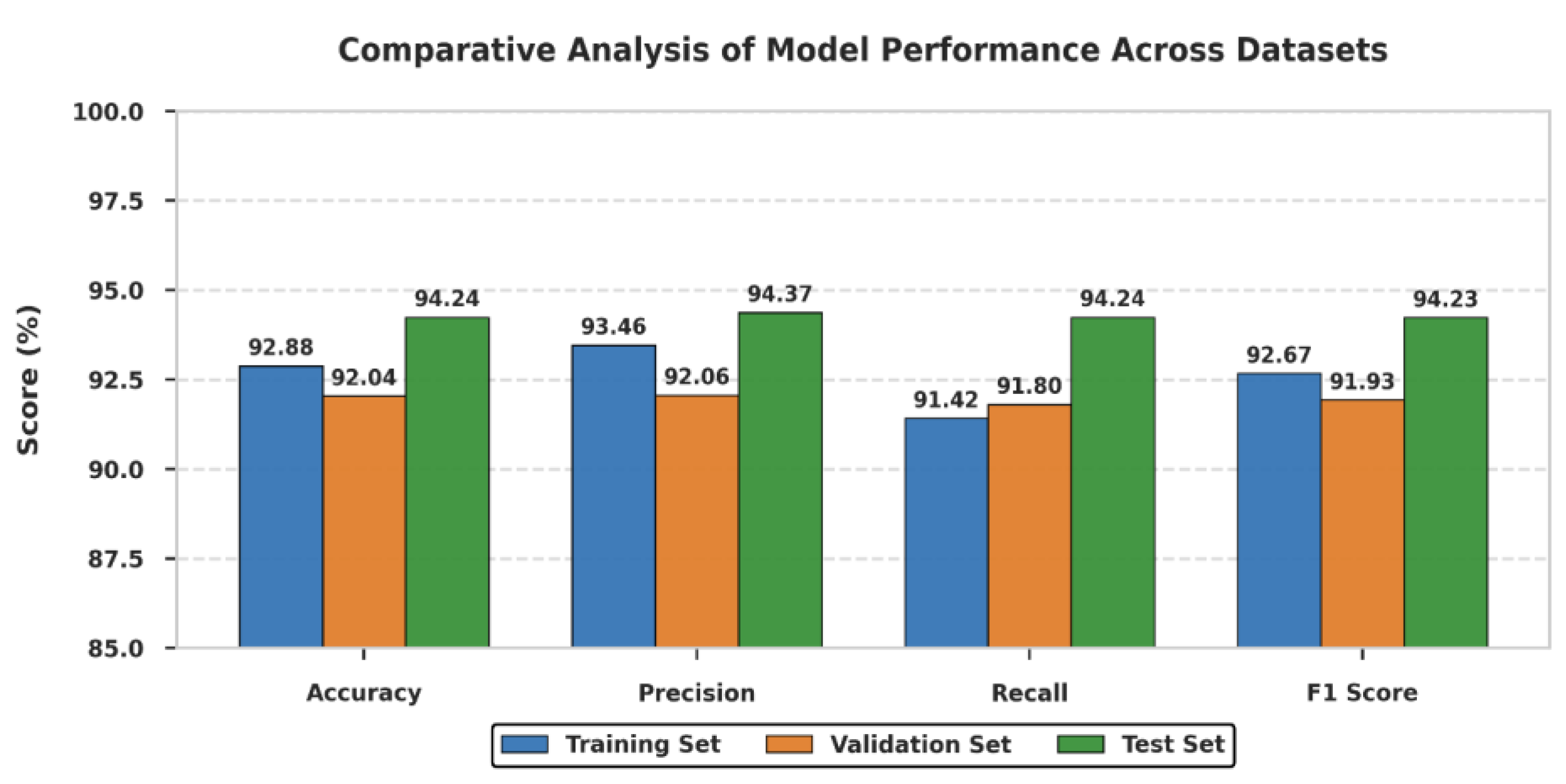

3.2. Model Evaluation Metric Comparison

4. Discussion

SWOT Analysis of Proposed ViT-DCNN Model

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Nomenclature

| Symbols | Description |

| D | Dataset consisting of image-label pairs |

| α | Learning rate |

| T | Total number of epochs |

| B | Batch size |

| θViT | Weights of the Vision Transformer model |

| N | Total number of samples |

| θDConv | Weights of the Deformable Convolutional model |

| Xbatch | A mini-batch of input images extracted for training |

| Ybatch | Corresponding labels of the images in the mini-batch |

| Xaug | Augmented version of the mini-batch images |

| Yaug | Labels corresponding to the augmented images |

| Pi | Patch embedding of input image |

| Wemb, bemb | Patch embedding weight and bias |

| Zi | Patch embedding with positional encoding added (Zi = Pi + PEi) |

| PEi | Positional encoding vector |

| Q, K, V | Query, Key, and Value matrices in Multi-Head Self-Attention |

| dk | Dimension of the key vectors in attention mechanism |

| Attention (Q, K, V) | Multi-Head Self-Attention mechanism |

| W1, W2, b1, b2 | Weights and biases for Feed-Forward Network (FFN) in ViT |

| yij | Output of the Deformable Convolution at position (i, j) |

| x(i + m + Δmij, j + n + Δnij) | Input feature at the position shifted by the learned offsets |

| wmn | Deformable convolution kernel weights |

| [Δmij, Δnij] | Learned spatial offsets in deformable convolution |

| Fij | Input feature map at position (i, j) |

| Aij | Attention weight for spatial feature refinement |

| Frefined | Output of spatial attention mechanism |

| FViT | Features extracted from Vision Transformer |

| FDConv | Features extracted from Deformable CNN |

| Fconcat | Concatenated feature map from ViT and Deformable CNN |

| s | Squeeze-and-Excitation scaling factor |

| σ | Activation function (Sigmoid in SE block) |

| Fse | Refined feature map after SE block |

| Z | Global Average Pooled (GAP) feature vector |

| H, W | Height and width of the feature map |

| GAP (F) | Global Average Pooling operation |

| Pc | Probability distribution over classes (softmax output) |

| W, b | Weights and bias for classification layer |

| ŷ | Predicted class label (argmax of softmax probabilities) |

| Lcross | Cross-Entropy loss function |

| ∇θViT Lcross | Gradient of the loss with respect to Vision Transformer weights |

| ∇θDConv Lcross | Gradient of the loss with respect to Deformable CNN weights |

References

1. Siegel, R.L.; Miller, K.D.; Jemal, A. Cancer statistics, 2019. CA Cancer J. Clin. 2019, 69, 7–34.

2. Purandare, N.C.; Rangarajan, V. Imaging of lung cancer: Implications on staging and management. Indian J. Radiol. Imaging 2015, 25, 109–120.

3. Li, Y.; Wu, X.; Yang, P.; Jiang, G.; Luo, Y. Machine Learning for Lung Cancer Diagnosis, Treatment, and Prognosis. Genom. Proteom. Bioinform. 2022, 20, 850–866.

4. Liu, M.; Wu, J.; Wang, N.; Zhang, X.; Bai, Y.; Guo, J.; Zhang, L.; Liu, S.; Tao, K. The value of artificial intelligence in the diagnosis of lung cancer: A systematic review and meta-analysis. PLoS ONE 2023, 18, e0273445.

5. Thanoon, M.A.; Zulkifley, M.A.; Mohd Zainuri, M.A.A.; Abdani, S.R. A Review of Deep Learning Techniques for Lung Cancer Screening and Diagnosis Based on CT Images. Diagnostics 2023, 13, 2617.

6. Nanglia, P.; Kumar, S.; Mahajan, A.N.; Singh, P.; Rathee, D. A hybrid algorithm for lung cancer classification using SVM and Neural Networks. ICT Express 2021, 7, 335–341.

7. Wang, L. Deep Learning Techniques to Diagnose Lung Cancer. Cancers 2022, 14, 5569.

8. Ausawalaithong, W.; Thirach, A.; Marukatat, S.; Wilaiprasitporn, T. Automatic Lung Cancer Prediction from Chest X-ray Images Using the Deep Learning Approach. In Proceedings of the 2018 11th Biomedical Engineering International Conference (BMEiCON), Chiang Mai, Thailand, 21–24 November 2018; IEEE: New York, NY, USA, 2018; pp. 1–5.

9. Lu, Y.; Aslani, S.; Zhao, A.; Shahin, A.; Barber, D.; Emberton, M.; Alexander, D.C.; Jacob, J. A hybrid CNN-RNN approach for survival analysis in a Lung Cancer Screening study. Heliyon 2023, 9, e18695.

10. Grenier, P.A.; Brun, A.L.; Mellot, F. The Potential Role of Artificial Intelligence in Lung Cancer Screening Using Low-Dose Computed Tomography. Diagnostics 2022, 12, 2435.

11. Dehdar, S.; Salimifard, K.; Mohammadi, R.; Marzban, M.; Saadatmand, S.; Fararouei, M.; Dianati-Nasab, M. Applications of different machine learning approaches in prediction of breast cancer diagnosis delay. Front. Oncol. 2023, 13, 1103369.

12. Qarmiche, N.; Chrifi Alaoui, M.; El Kinany, K.; El Rhazi, K.; Chaoui, N. Soft-Voting colorectal cancer risk prediction based on EHLI components. Inform. Med. Unlocked 2022, 33, 101070.

13. Hoang, P.H.; Landi, M.T. DNA Methylation in Lung Cancer: Mechanisms and Associations with Histological Subtypes, Molecular Alterations, and Major Epidemiological Factors. Cancers 2022, 14, 961.

14. Ye, Q.; Falatovich, B.; Singh, S.; Ivanov, A.V.; Eubank, T.D.; Guo, N.L. A Multi-Omics Network of a Seven-Gene Prognostic Signature for Non-Small Cell Lung Cancer. Int. J. Mol. Sci. 2021, 23, 219.

15. Rajasekar, V.; Vaishnnave, M.P.; Premkumar, S.; Sarveshwaran, V.; Rangaraaj, V. Lung cancer disease prediction with CT scan and histopathological images feature analysis using deep learning techniques. Results Eng. 2023, 18, 101111.

16. Firmino, M.; Morais, A.H.; Mendoça, R.M.; Dantas, M.R.; Hekis, H.R.; Valentim, R. Computer-aided detection system for lung cancer in computed tomography scans: Review and future prospects. Biomed. Eng. Online 2014, 13, 41.

17. Palani, D.; Venkatalakshmi, K. An IoT Based Predictive Modelling for Predicting Lung Cancer Using Fuzzy Cluster Based Segmentation and Classification. J. Med. Syst. 2019, 43, 21.

18. Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.A.W.M.; van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

19. Shah, A.A.; Malik, H.A.M.; Muhammad, A.; Alourani, A.; Butt, Z.A. Deep learning ensemble 2D CNN approach towards the detection of lung cancer. Sci. Rep. 2023, 13, 2987.

20. Cai, L.; Gao, J.; Zhao, D. A review of the application of deep learning in medical image classification and segmentation. Ann. Transl. Med. 2020, 8, 713.

21. Shen, D.; Wu, G.; Suk, H.-I. Deep Learning in Medical Image Analysis. Annu. Rev. Biomed. Eng. 2017, 19, 221–248.

22. Shorten, C.; Khoshgoftaar, T.M. A survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 60.

23. Klang, E.; Soroush, A.; Nadkarni, G.; Sharif, K.; Lahat, A. Deep Learning and Gastric Cancer: Systematic Review of AI-Assisted Endoscopy. Diagnostics 2023, 13, 3613.

24. Chandrashekar, G.; Sahin, F. A survey on feature selection methods. Comput. Electr. Eng. 2014, 40, 16–28.

25. Mallick, S.; Paul, S.; Sen, A. A Novel Approach to Breast Cancer Histopathological Image Classification Using Cross-Colour Space Feature Fusion and Quantum–Classical Stack Ensemble Method. In Proceedings of the ICADCML 2024: 5th International Conference on Advances in Distributed Computing and Machine Learning, Andhra Pradesh, India, 5–6 January 2024; pp. 15–26.

26. Anaya-Isaza, A.; Mera-Jiménez, L.; Zequera-Diaz, M. An overview of deep learning in medical imaging. Inform. Med. Unlocked 2021, 26, 100723.

27. Dash, C.S.K.; Behera, A.K.; Dehuri, S.; Ghosh, A. An outliers detection and elimination framework in classification task of data mining. Decis. Anal. J. 2023, 6, 100164.

28. Yang, S.; Xiao, W.; Zhang, M.; Guo, S.; Zhao, J.; Shen, F. Image Data Augmentation for Deep Learning: A Survey. arXiv 2022, arXiv:2204.08610.

29. Castaldo, R.; Pane, K.; Nicolai, E.; Salvatore, M.; Franzese, M. The Impact of Normalization Approaches to Automatically Detect Radiogenomic Phenotypes Characterizing Breast Cancer Receptors Status. Cancers 2020, 12, 518.

30. Galić, I.; Habijan, M.; Leventić, H.; Romić, K. Machine Learning Empowering Personalized Medicine: A Comprehensive Review of Medical Image Analysis Methods. Electronics 2023, 12, 4411.

31. Zakaria, R.; Abdelmajid, H.; Zitouni, D. Deep Learning in Medical Imaging: A Review. In Applications of Machine Intelligence in Engineering; CRC Press: New York, NY, USA, 2022; pp. 131–144.

32. Haixiang, G.; Yijing, L.; Shang, J.; Mingyun, G.; Yuanyue, H.; Bing, G. Learning from class-imbalanced data: Review of methods and applications. Expert Syst. Appl. 2017, 73, 220–239.

33. Lecun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

34. Dong, Y.; Zhang, M.; Qiu, L.; Wang, L.; Yu, Y. An Arrhythmia Classification Model Based on Vision Transformer with Deformable Attention. Micromachines 2023, 14, 1155.

35. Ji, M.; Zhao, G. DEViT: Deformable Convolution-Based Vision Transformer for Bearing Fault Diagnosis. IEEE Trans. Instrum. Meas. 2024, 73, 1–13.

36. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

37. Alfatih, M.I.; Wibowo, S.A. Star Classifier Head on Deformable Attention Vision Transformer for Small Datasets. IEEE Access 2025, 13, 145680–145689.

38. Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 7–9 July 2015.

39. Padshetty, S.; Ambika. A novel twin vision transformer framework for crop disease classification with deformable attention. Biomed. Signal Process. Control 2025, 105, 107551.

40. Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 2021, 8, 53.

41. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2017, arXiv:1412.6980.

42. Sokolova, M.; Lapalme, G. A systematic analysis of performance measures for classification tasks. Inf. Process. Manag. 2009, 45, 427–437.

43. Davis, J.; Goadrich, M. The relationship between Precision-Recall and ROC curves. In Proceedings of the 23rd International Conference on Machine Learning-ICML’06, Pittsburgh, PA, USA, 25–29 June 2006; ACM Press: New York, NY, USA, 2006; pp. 233–240.

44. Tharwat, A. Classification assessment methods. Appl. Comput. Inform. 2021, 17, 168–192.

45. Kuhn, M.; Johnson, K. Applied Predictive Modeling; Springer: New York, NY, USA, 2013; ISBN 978-1-4614-6848-6.

46. Du, Y.; Hu, L.; Wu, G.; Tang, Y.; Cai, X.; Yin, L. Diagnoses in multiple types of cancer based on serum Raman spectroscopy combined with a convolutional neural network: Gastric cancer, colon cancer, rectal cancer, lung cancer. Spectrochim. Acta Part A Mol. Biomol. Spectrosc. 2023, 298, 122743.

47. Depciuch, J.; Jakubczyk, P.; Paja, W.; Pancerz, K.; Wosiak, A.; Kula-Maximenko, M.; Yaylım, İ.; Gültekin, G.İ.; Tarhan, N.; Hakan, M.T.; et al. Correlation between human colon cancer specific antigens and Raman spectra. Attempting to use Raman spectroscopy in the determination of tumor markers for colon cancer. Nanomed. Nanotechnol. Biol. Med. 2023, 48, 102657.

48. Azar, A.T.; Tounsi, M.; Fati, S.M.; Javed, Y.; Amin, S.U.; Khan, Z.I.; Alsenan, S.; Ganesan, J. Automated System for Colon Cancer Detection and Segmentation Based on Deep Learning Techniques. Int. J. Sociotechnol. Knowl. Dev. 2023, 15, 1–28.

49. Mehan, V. Advanced artificial intelligence driven framework for lung cancer diagnosis leveraging SqueezeNet with machine learning algorithms using transfer learning. Med. Nov. Technol. Devices 2025, 27, 100383.

50. Murthy, N.N.; Thippeswamy, K. TPOT with SVM hybrid machine learning model for lung cancer classification using CT image. Biomed. Signal Process. Control 2025, 104, 107465.

| Evaluation Criteria | Training Set | Validation Set | Test Set |
|---|---|---|---|
| Accuracy (%) | 92.88 | 92.04 | 94.24 |
| Precision (%) | 93.46 | 92.06 | 94.37 |
| Recall (%) | 91.42 | 91.80 | 94.24 |
| F1 Score (%) | 92.67 | 91.93 | 94.23 |

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| ResNet-152 | 92.10 | 91.85 | 92.30 | 92.07 |
| EfficientNet-B7 | 93.45 | 93.20 | 93.60 | 93.40 |
| SwinTransformer | 93.80 | 93.65 | 93.90 | 93.77 |
| DenseNet-201 | 91.75 | 91.40 | 91.90 | 91.65 |
| ConvNext | 93.60 | 93.45 | 93.70 | 93.58 |
| TransUNet | 93.90 | 93.63 | 93.41 | 92.69 |
| CNN-LSTM | 92.86 | 91.89 | 92.64 | 92.78 |
| MobileNetV3 | 90.50 | 90.20 | 90.70 | 90.45 |
| NASNet-A | 92.30 | 92.10 | 92.50 | 92.30 |
| Proposed ViT-DCNN | 94.24 | 94.37 | 94.24 | 94.23 |

| References | Purpose | Method | Key Metrics (%) | Challenges |
|---|---|---|---|---|
| [6] | Multi-class lung cancer detection and prediction | SVM classifier-based approach using MATLAB version 9.8.0.1417392 (R2020a) for image processing | Precision: 94.68; Recall: 92.84 | Binarization may oversimplify complex predictions, limiting generalizability |
| [46] | Develop low-cost, non-destructive cancer screening using serum Raman spectroscopy | Raman spectra database with 1D-CNN for classifying gastric, colon, rectal, and lung cancers | Accuracy: 94.5; Precision: 94.7; Recall: 94.5; F1 Score: 94.5; Kappa Coefficient: 93 | CNN interpretability remains limited |
| [8] | Lung cancer prediction using chest imaging | DenseNet-121 with transfer learning on Chest X-ray 14 and JSRT databases | Accuracy: 74.43 ± 6.01; Specificity: 74.96 ± 9.85; Sensitivity: 74.68 ± 15.33 | Computational complexity due to deep network layers |
| [9] | Risk assessment and estimation using pulmonary cancer CT images | CNN for feature extraction, fine-tuning ResNet18, and training with Cox model | AUC: 76; F1 Score: 63; Matthews Correlation Coefficient: 42 | Requires large, annotated datasets to train effectively |
| [47] | Rapid colon cancer detection with tumor markers and spectroscopy | Serum Raman spectroscopy with ELISA for tumor markers and machine learning for classification | Accuracy: 95 | Limited biomarker correlation explored |
| [48] | Explore deep learning techniques for colon cancer classification | Compared optimizers such as SGD, Adamax, AdaDelta, RMSprop, Adam, and Nadam on CNN models | Accuracy: 90; Precision: 89; Recall: 87; F1 Score: 87 | Optimizer performance varied between datasets |
| [12] | Colon cancer risk prediction | Soft-Voting classifier with CatBoost, LightGBM, and Gradient Boosting | Accuracy: 65.8 ± 5.4; Recall: 69.5 ± 6.8; F1 Score: 67.3 ± 2.5; Precision: 66.2 ± 8.3 | Requires optimization for increased accuracy |
| [15] | Improve recognition accuracy for lung cancer from histopathological slides | Evaluated six deep learning models including CNN, CNN-GD, VGG16, VGG19, InceptionV3, and ResNet-50 | Accuracy: 96.52; Precision: 92.14; Sensitivity: 93.71; Specificity: 92.91; F1 Score: 94.21 | Algorithms lacked sufficient explainability |
| [49] | Enhance lung cancer detection using hybrid deep learning and machine learning pipeline | Used SqueezeNet for feature extraction followed by machine learning classifiers on chest CT scans | Accuracy: 92.9; Precision: 92.8; Recall: 92.9; F1 Score: 92.8 | Dataset size was relatively small |
| [50] | Improve early lung cancer classification using TPOT SVM | CT images processed through AMF preprocessing and M-SegNet segmentation followed by feature extraction and TPOT SVM classifier | Accuracy: 91.77; True Positive Rate: 94.79; False Positive Rate: 11.24 | False positive rate remained comparatively high |
| Proposed ViT-DCNN model | To revolutionize efficient and accurate detection of lung and colon cancers by leveraging advanced deep learning architectures for improved clinical decision making | Integrated (ViT-DCNN) Vision Transformer with Deformable CNN for feature extraction and classification | Accuracy: 94.24; Precision: 94.37; Recall: 94.24; F1 Score: 94.23 | Offers opportunities for broader external validation and enhanced interpretability through future explainable AI integration |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pal, A.; Rai, H.M.; Yoo, J.; Lee, S.-R.; Park, Y. ViT-DCNN: Vision Transformer with Deformable CNN Model for Lung and Colon Cancer Detection. Cancers 2025, 17, 3005. https://doi.org/10.3390/cancers17183005
