Early-Stage Melanoma Benchmark Dataset

Simple Summary

Abstract

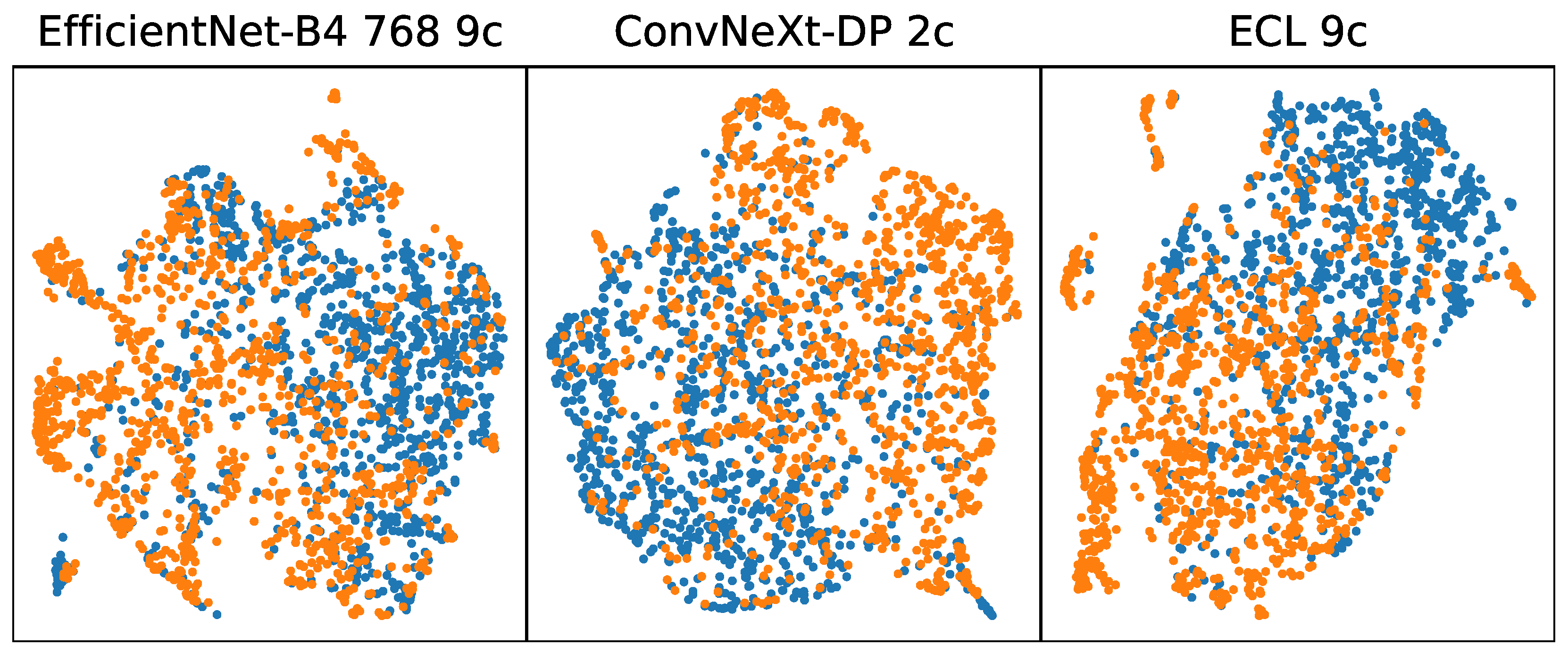

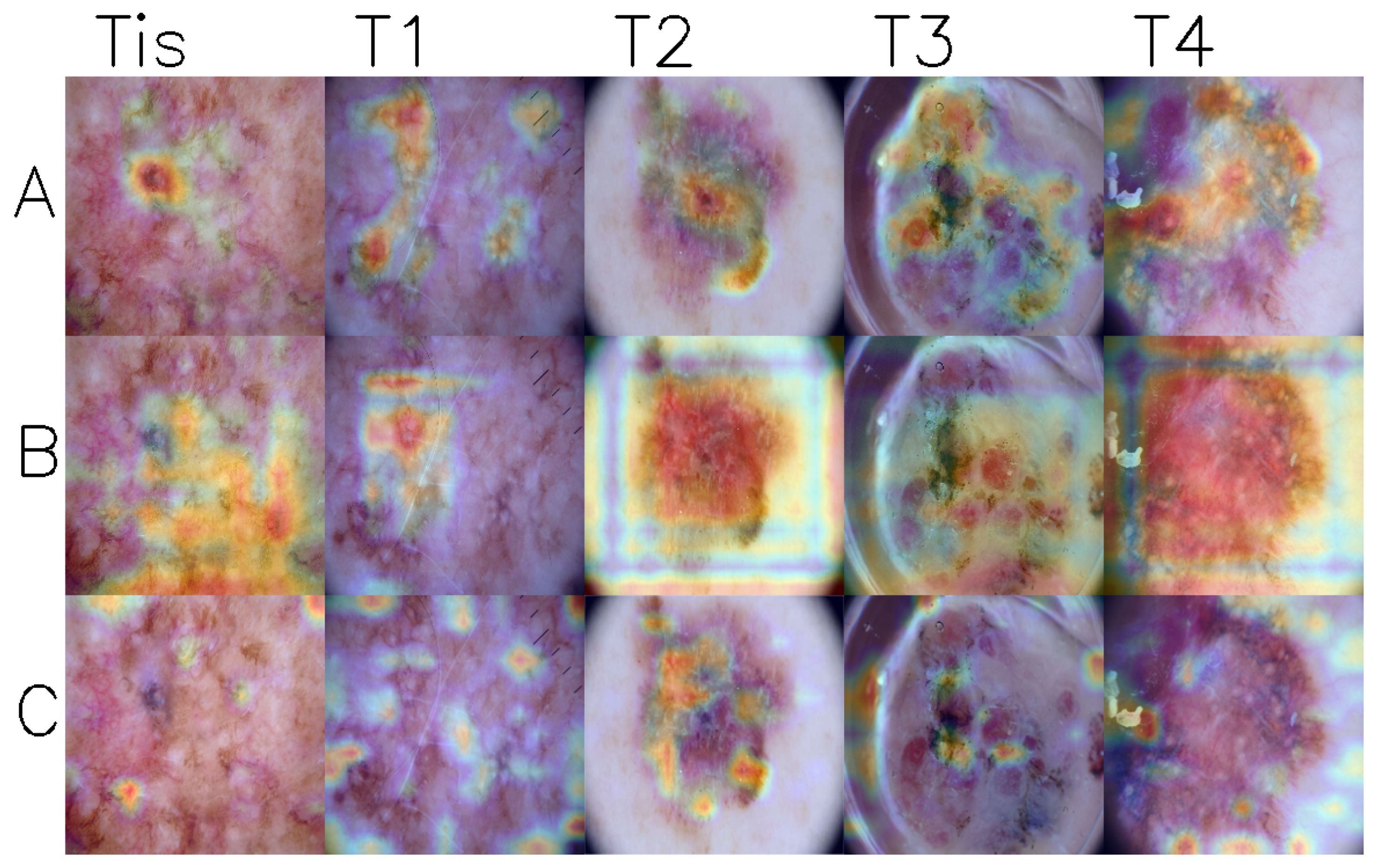

1. Introduction

2. Methods

2.1. Data Collection

2.2. T-Category Label

3. Results

4. Limitations and Future Work

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ISIC | The International Skin Imaging Collaboration |
| UMAP | Uniform Manifold Approximation and Projection for Dimension Reduction |
| EMB | Early Melanoma Benchmark |
| Grad-CAM | Gradient-weighted Class Activation Mapping |
| DMP | Deep Mask Pixel-wise Supervision |
| ECL | Class-Enhancement Contrastive Learning for Long-tailed Skin Lesion Classification |

References

- Five-Year Survival Rates | SEER Training. Available online: https://training.seer.cancer.gov/melanoma/intro/survival.html (accessed on 13 January 2025).

- Xu, R.; Wang, C.; Zhang, J.; Xu, S.; Meng, W.; Zhang, X. SkinFormer: Learning Statistical Texture Representation with Transformer for Skin Lesion Segmentation. IEEE J. Biomed. Health Inform. 2024, 28, 6008–6018.

- Pandimurugan, V.; Ahmad, S.; Prabu, A.V.; Rahmani, M.K.I.; Abdeljaber, H.A.M.; Eswaran, M.; Nazeer, J. CNN-Based Deep Learning Model for Early Identification and Categorization of Melanoma Skin Cancer Using Medical Imaging. SN Comput. Sci. 2024, 5, 911.

- Lin, T.L.; Lu, C.T.; Karmakar, R.; Nampalley, K.; Mukundan, A.; Hsiao, Y.P.; Hsieh, S.C.; Wang, H.C. Assessing the Efficacy of the Spectrum-Aided Vision Enhancer (SAVE) to Detect Acral Lentiginous Melanoma, Melanoma In Situ, Nodular Melanoma, and Superficial Spreading Melanoma. Diagnostics 2024, 14, 1672.

- Elshahawy, M.; Elnemr, A.; Oproescu, M.; Schiopu, A.G.; Elgarayhi, A.; Elmogy, M.M.; Sallah, M. Early Melanoma Detection Based on a Hybrid YOLOv5 and ResNet Technique. Diagnostics 2023, 13, 2804.

- Patel, R.H.; Foltz, E.A.; Witkowski, A.; Ludzik, J. Analysis of Artificial Intelligence-Based Approaches Applied to Non-Invasive Imaging for Early Detection of Melanoma: A Systematic Review. Cancers 2023, 15, 4694.

- Alenezi, F.; Armghan, A.; Polat, K. A Novel Multi-Task Learning Network Based on Melanoma Segmentation and Classification with Skin Lesion Images. Diagnostics 2023, 13, 262.

- Akash R J, N.; Kaushik, A.; Sivaswamy, J. Evidence-Driven Differential Diagnosis of Malignant Melanoma. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2023 Workshops, Proceedings of the MICCAI 2023, Vancouver, BC, Canada, 8–12 October 2023; Celebi, M.E., Salekin, M.S., Kim, H., Albarqouni, S., Barata, C., Halpern, A., Tschandl, P., Combalia, M., Liu, Y., Zamzmi, G., et al., Eds.; Springer Nature: Cham, Switzerland, 2023; Volume 14393, pp. 57–66.

- Mahmud, M.A.A.; Afrin, S.; Mridha, M.F.; Alfarhood, S.; Che, D.; Safran, M. Explainable Deep Learning Approaches for High Precision Early Melanoma Detection Using Dermoscopic Images. Sci. Rep. 2025, 15, 24533.

- Yu, Z.; Nguyen, J.; Nguyen, T.D.; Kelly, J.; Mclean, C.; Bonnington, P.; Zhang, L.; Mar, V.; Ge, Z. Early Melanoma Diagnosis with Sequential Dermoscopic Images. IEEE Trans. Med. Imaging 2022, 41, 633–646.

- Polesie, S.; Gillstedt, M.; Kittler, H.; Rinner, C.; Tschandl, P.; Paoli, J. Assessment of Melanoma Thickness Based on Dermoscopy Images: An Open, Web-based, International, Diagnostic Study. J. Eur. Acad. Dermatol. Venereol. 2022, 36, 2002.

- Dominguez-Morales, J.P.; Hernández-Rodríguez, J.C.; Duran-Lopez, L.; Conejo-Mir, J.; Pereyra-Rodriguez, J.J. Melanoma Breslow Thickness Classification Using Ensemble-Based Knowledge Distillation with Semi-Supervised Convolutional Neural Networks. IEEE J. Biomed. Health Inform. 2025, 29, 443–455.

- Jaworek-Korjakowska, J.; Kleczek, P.; Gorgon, M. Melanoma Thickness Prediction Based on Convolutional Neural Network with VGG-19 Model Transfer Learning. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Long Beach, CA, USA, 15–20 June 2019; pp. 2748–2756.

- Nogales, M.; Acha, B.; Alarcón, F.; Pereyra, J.; Serrano, C. Robust Melanoma Thickness Prediction via Deep Transfer Learning Enhanced by XAI Techniques. arXiv 2024, arXiv:2406.13441.

- Kawahara, J.; Daneshvar, S.; Argenziano, G.; Hamarneh, G. 7-Point Checklist and Skin Lesion Classification Using Multi-Task Multi-Modal Neural Nets. IEEE J. Biomed. Health Inform. 2018, 23, 538–546.

- Ferrara, G.; Argenziano, G. The WHO 2018 Classification of Cutaneous Melanocytic Neoplasms: Suggestions From Routine Practice. Front. Oncol. 2021, 11, 675296.

- Mirikharaji, Z.; Barata, C.; Abhishek, K.; Bissoto, A.; Avila, S.; Valle, E.; Celebi, M.E.; Hamarneh, G. A Survey on Deep Learning for Skin Lesion Segmentation. arXiv 2022, arXiv:2206.00356.

- Daneshjou, R.; Smith, M.P.; Sun, M.D.; Rotemberg, V.; Zou, J. Lack of Transparency and Potential Bias in Artificial Intelligence Data Sets and Algorithms: A Scoping Review. JAMA Dermatol. 2021, 157, 1362.

- Hendrycks, D.; Dietterich, T. Benchmarking Neural Network Robustness to Common Corruptions and Perturbations. arXiv 2019, arXiv:1903.12261.

- Groh, M.; Harris, C.; Soenksen, L.; Lau, F.; Han, R.; Kim, A.; Koochek, A.; Badri, O. Evaluating Deep Neural Networks Trained on Clinical Images in Dermatology with the Fitzpatrick 17k Dataset. arXiv 2021, arXiv:2104.09957.

- Daneshjou, R.; Vodrahalli, K.; Novoa, R.A.; Jenkins, M.; Liang, W.; Rotemberg, V.; Ko, J.; Swetter, S.M.; Bailey, E.E.; Gevaert, O.; et al. Disparities in Dermatology AI Performance on a Diverse, Curated Clinical Image Set. Sci. Adv. 2022, 8, eabq6147.

- ISIC Archive. Available online: https://gallery.isic-archive.com/#!/topWithHeader/onlyHeaderTop/gallery?filter=%5B%5D (accessed on 12 November 2024).

- Dermoscopy Atlas | Home. Available online: https://www.dermoscopyatlas.com/ (accessed on 12 November 2024).

- Gutman, D.; Codella, N.C.F.; Celebi, E.; Helba, B.; Marchetti, M.; Mishra, N.; Halpern, A. Skin Lesion Analysis toward Melanoma Detection: A Challenge at the International Symposium on Biomedical Imaging (ISBI) 2016, Hosted by the International Skin Imaging Collaboration (ISIC). arXiv 2016, arXiv:1605.01397.

- Codella, N.C.F.; Gutman, D.; Celebi, M.E.; Helba, B.; Marchetti, M.A.; Dusza, S.W.; Kalloo, A.; Liopyris, K.; Mishra, N.; Kittler, H.; et al. Skin Lesion Analysis Toward Melanoma Detection: A Challenge at the 2017 International Symposium on Biomedical Imaging (ISBI), Hosted by the International Skin Imaging Collaboration (ISIC). arXiv 2018, arXiv:1710.05006.

- Codella, N.; Rotemberg, V.; Tschandl, P.; Celebi, M.E.; Dusza, S.; Gutman, D.; Helba, B.; Kalloo, A.; Liopyris, K.; Marchetti, M.; et al. Skin Lesion Analysis Toward Melanoma Detection 2018: A Challenge Hosted by the International Skin Imaging Collaboration (ISIC). arXiv 2019, arXiv:1902.03368.

- Combalia, M.; Codella, N.C.F.; Rotemberg, V.; Helba, B.; Vilaplana, V.; Reiter, O.; Carrera, C.; Barreiro, A.; Halpern, A.C.; Puig, S.; et al. BCN20000: Dermoscopic Lesions in the Wild. arXiv 2019, arXiv:1908.02288.

- Tschandl, P.; Rosendahl, C.; Kittler, H. The HAM10000 Dataset, a Large Collection of Multi-Source Dermatoscopic Images of Common Pigmented Skin Lesions. Sci. Data 2018, 5, 180161.

- The ISIC 2020 Challenge Dataset. Available online: https://challenge2020.isic-archive.com/ (accessed on 8 January 2023).

- Cassidy, B.; Kendrick, C.; Brodzicki, A.; Jaworek-Korjakowska, J.; Yap, M.H. Analysis of the ISIC Image Datasets: Usage, Benchmarks and Recommendations. Med. Image Anal. 2022, 75, 102305.

- Mikrut, R. Qarmin/Czkawka. 2025. Available online: https://github.com/qarmin/czkawka (accessed on 8 January 2025).

- Keung, E.Z.; Gershenwald, J.E. The Eighth Edition American Joint Committee on Cancer (AJCC) Melanoma Staging System: Implications for Melanoma Treatment and Care. Expert Rev. Anticancer. Ther. 2018, 18, 775.

- Ha, Q.; Liu, B.; Liu, F. Identifying Melanoma Images Using EfficientNet Ensemble: Winning Solution to the SIIM-ISIC Melanoma Classification Challenge. arXiv 2020, arXiv:2010.05351.

- Zhang, Y.; Chen, J.; Wang, K.; Xie, F. ECL: Class-Enhancement Contrastive Learning for Long-tailed Skin Lesion Classification. arXiv 2023, arXiv:2307.04136.

- Dzieniszewska, A.; Garbat, P.; Piramidowicz, R. Deep Pixel-Wise Supervision for Skin Lesion Classification. Comput. Biol. Med. 2025, 193, 110352.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-based Localization. Int. J. Comput. Vis. 2020, 128, 336–359.

| T-Category | Tis | T1 | T2 | T3 | T4 |
|---|---|---|---|---|---|
| Breslow thickness [mm] | 0 | ≤1.0 | >1.0–2.0 | >2.0–4.0 | >4.0 |
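The thickness bands in the table above amount to a simple threshold rule. The Python sketch below is a hypothetical helper (`t_category` is an illustrative name, not code from the paper) that applies those bands. It assumes a thickness of exactly 0 mm denotes an in situ lesion, as the table lists it, reads each band as upper-inclusive (for example, T2 covers values above 1.0 mm up to and including 2.0 mm), and ignores the a/b subcategories that the AJCC staging system adds on top of these bands.

```python
def t_category(breslow_mm: float) -> str:
    """Return the T-category for a Breslow thickness given in millimetres.

    Hypothetical helper: follows the thickness bands of the T-category table,
    treats 0 mm as melanoma in situ (Tis), and ignores AJCC a/b subcategories.
    """
    if breslow_mm < 0:
        raise ValueError("Breslow thickness cannot be negative")
    if breslow_mm == 0:
        return "Tis"  # the table lists in situ lesions with 0 mm thickness
    # Upper bounds are inclusive: T1 <= 1.0, T2 in (1.0, 2.0], T3 in (2.0, 4.0]
    for upper, label in ((1.0, "T1"), (2.0, "T2"), (4.0, "T3")):
        if breslow_mm <= upper:
            return label
    return "T4"  # anything thicker than 4.0 mm


if __name__ == "__main__":
    for mm in (0.0, 0.8, 1.5, 3.2, 6.0):
        print(f"{mm:.1f} mm -> {t_category(mm)}")
```

Checking the boundaries with `<=` keeps a lesion measured at exactly 1.0, 2.0, or 4.0 mm in the lower category, matching the "≤1.0" and ">1.0–2.0" notation of the table.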

| Model | Rep. | All | Tis | T1 | T2 | T3 | T4 |
|---|---|---|---|---|---|---|---|
| ConvNeXt-DP 224 9c | 0.880 | 0.445 | 0.428 | 0.433 | 0.603 | 0.471 | 0.565 |
| ConvNeXt-DMP 224 9c | 0.880 | 0.488 | 0.455 | 0.490 | 0.630 | 0.588 | 0.826 |
| ConvNeXt-DP 224 2c | 0.890 | 0.414 | 0.365 | 0.440 | 0.589 | 0.588 | 0.565 |
| ConvNeXt-DMP 224 2c | 0.907 | 0.551 | 0.532 | 0.534 | 0.740 | 0.529 | 0.739 |
| EfficientNet-B4 448 9c | 0.974 | 0.477 | 0.431 | 0.505 | 0.644 | 0.529 | 0.652 |
| EfficientNet-B4 896 9c | 0.974 | 0.455 | 0.418 | 0.446 | 0.685 | 0.588 | 0.739 |
| EfficientNet-B4 640 9c | 0.977 | 0.486 | 0.469 | 0.456 | 0.699 | 0.588 | 0.696 |
| EfficientNet-B4 768 9c | 0.977 | 0.542 | 0.519 | 0.521 | 0.726 | 0.824 | 0.696 |
| EfficientNet-B5 640 4c | 0.977 | 0.485 | 0.463 | 0.472 | 0.658 | 0.647 | 0.609 |
| EfficientNet-B5 640 9c | 0.977 | 0.524 | 0.494 | 0.518 | 0.740 | 0.529 | 0.739 |
| EfficientNet-B5 448 9c | 0.975 | 0.482 | 0.461 | 0.469 | 0.644 | 0.588 | 0.652 |
| EfficientNet-B6 448 9c | 0.974 | 0.510 | 0.488 | 0.500 | 0.671 | 0.588 | 0.696 |
| EfficientNet-B6 576 9c | 0.976 | 0.467 | 0.446 | 0.443 | 0.658 | 0.647 | 0.696 |
| EfficientNet-B6 640 9c | 0.976 | 0.463 | 0.421 | 0.474 | 0.685 | 0.588 | 0.565 |
| EfficientNet-B7 576 9c | 0.976 | 0.477 | 0.456 | 0.469 | 0.630 | 0.647 | 0.565 |
| EfficientNet-B7 640 9c | 0.975 | 0.482 | 0.455 | 0.477 | 0.603 | 0.824 | 0.652 |
| ResNeSt-101 640 9c | 0.973 | 0.471 | 0.436 | 0.469 | 0.658 | 0.529 | 0.783 |
| SE-ResNeXt-101 640 9c | 0.974 | 0.501 | 0.479 | 0.497 | 0.671 | 0.529 | 0.565 |
| ECL 224 9c | 0.861 | 0.395 | 0.364 | 0.391 | 0.548 | 0.588 | 0.652 |
| ECL 224 8c | 0.872 | 0.214 | 0.172 | 0.223 | 0.397 | 0.353 | 0.478 |
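To compare the models in the results table programmatically, the rows can be loaded as data and scanned for the top value in each column. The sketch below is hypothetical (`ROWS`, `HEADER`, and `best_per_column` are illustrative names), copies the values verbatim from the table, and assumes that a higher value is better in every column. This excerpt does not define the "Rep." column or the "2c"/"4c"/"8c"/"9c" suffixes, so the sketch treats the model names as opaque labels.

```python
# Column names and scores copied from the results table; higher is assumed better.
HEADER = ["Rep.", "All", "Tis", "T1", "T2", "T3", "T4"]
ROWS = {
    "ConvNeXt-DP 224 9c": [0.880, 0.445, 0.428, 0.433, 0.603, 0.471, 0.565],
    "ConvNeXt-DMP 224 9c": [0.880, 0.488, 0.455, 0.490, 0.630, 0.588, 0.826],
    "ConvNeXt-DP 224 2c": [0.890, 0.414, 0.365, 0.440, 0.589, 0.588, 0.565],
    "ConvNeXt-DMP 224 2c": [0.907, 0.551, 0.532, 0.534, 0.740, 0.529, 0.739],
    "EfficientNet-B4 448 9c": [0.974, 0.477, 0.431, 0.505, 0.644, 0.529, 0.652],
    "EfficientNet-B4 896 9c": [0.974, 0.455, 0.418, 0.446, 0.685, 0.588, 0.739],
    "EfficientNet-B4 640 9c": [0.977, 0.486, 0.469, 0.456, 0.699, 0.588, 0.696],
    "EfficientNet-B4 768 9c": [0.977, 0.542, 0.519, 0.521, 0.726, 0.824, 0.696],
    "EfficientNet-B5 640 4c": [0.977, 0.485, 0.463, 0.472, 0.658, 0.647, 0.609],
    "EfficientNet-B5 640 9c": [0.977, 0.524, 0.494, 0.518, 0.740, 0.529, 0.739],
    "EfficientNet-B5 448 9c": [0.975, 0.482, 0.461, 0.469, 0.644, 0.588, 0.652],
    "EfficientNet-B6 448 9c": [0.974, 0.510, 0.488, 0.500, 0.671, 0.588, 0.696],
    "EfficientNet-B6 576 9c": [0.976, 0.467, 0.446, 0.443, 0.658, 0.647, 0.696],
    "EfficientNet-B6 640 9c": [0.976, 0.463, 0.421, 0.474, 0.685, 0.588, 0.565],
    "EfficientNet-B7 576 9c": [0.976, 0.477, 0.456, 0.469, 0.630, 0.647, 0.565],
    "EfficientNet-B7 640 9c": [0.975, 0.482, 0.455, 0.477, 0.603, 0.824, 0.652],
    "ResNeSt-101 640 9c": [0.973, 0.471, 0.436, 0.469, 0.658, 0.529, 0.783],
    "SE-ResNeXt-101 640 9c": [0.974, 0.501, 0.479, 0.497, 0.671, 0.529, 0.565],
    "ECL 224 9c": [0.861, 0.395, 0.364, 0.391, 0.548, 0.588, 0.652],
    "ECL 224 8c": [0.872, 0.214, 0.172, 0.223, 0.397, 0.353, 0.478],
}


def best_per_column(rows):
    """Map each column name to (top score, sorted list of models reaching it)."""
    best = {}
    for i, column in enumerate(HEADER):
        top = max(scores[i] for scores in rows.values())
        # Keep every model that ties for the top score instead of picking one.
        winners = sorted(name for name, scores in rows.items() if scores[i] == top)
        best[column] = (top, winners)
    return best


if __name__ == "__main__":
    for column, (score, models) in best_per_column(ROWS).items():
        print(f"{column}: {score:.3f} ({', '.join(models)})")
```

Ties are reported rather than broken arbitrarily: for instance, the T3 column has two models at 0.824 (EfficientNet-B4 768 9c and EfficientNet-B7 640 9c), and both appear in the output.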

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dzieniszewska, A.; Garbat, P.; Pietkiewicz, P.; Piramidowicz, R. Early-Stage Melanoma Benchmark Dataset. Cancers 2025, 17, 2476. https://doi.org/10.3390/cancers17152476


Chicago/Turabian Style: Dzieniszewska, Aleksandra, Piotr Garbat, Paweł Pietkiewicz, and Ryszard Piramidowicz. 2025. "Early-Stage Melanoma Benchmark Dataset" Cancers 17, no. 15: 2476. https://doi.org/10.3390/cancers17152476
