Simple Summary

The Internet of Things (IoT) uses connected devices and sensors, like high-resolution cameras and specific sensors in wearable devices, for the collection of skin images with abnormalities. Skin cancer detection is difficult because of differences in lesion size, shape, and lighting conditions. To address this, an innovative approach called “ODL-SCDC”, combining deep learning with IoT technology, is developed. The proposed model uses advanced techniques like hyperparameter selection and feature extraction to improve skin cancer classification. The results show that ODL-SCDC outperforms other methods in accurately identifying skin lesions, which could have a significant impact on early cancer detection in the medical field.

Abstract

Internet of Things (IoT)-assisted skin cancer recognition integrates several connected devices and sensors for supporting the primary analysis and monitoring of skin conditions. A preliminary analysis of skin cancer images is extremely difficult because of factors such as distinct sizes and shapes of lesions, differences in color illumination, and light reflections on the skin surface. In recent times, IoT-based skin cancer recognition utilizing deep learning (DL) has been used for enhancing the early analysis and monitoring of skin cancer. This article presents an optimal deep learning-based skin cancer detection and classification (ODL-SCDC) methodology in the IoT environment. The goal of the ODL-SCDC technique is to exploit metaheuristic-based hyperparameter selection approaches with a DL model for skin cancer classification. The ODL-SCDC methodology involves an arithmetic optimization algorithm (AOA) with the EfficientNet model for feature extraction. For skin cancer detection, a stacked denoising autoencoder (SDAE) classification model has been used. Lastly, the dragonfly algorithm (DFA) is utilized for the optimal hyperparameter selection of the SDAE algorithm. The simulation validation of the ODL-SCDC methodology has been tested on a benchmark ISIC skin lesion database. The extensive outcomes reported a better solution of the ODL-SCDC methodology compared with other models, with a maximum sensitivity of 97.74%, specificity of 99.71%, and accuracy of 99.55%. The proposed model can assist medical professionals, specifically dermatologists and potentially other healthcare practitioners, in the skin cancer diagnosis process.

1. Introduction

The Internet of Things (IoT) interconnects devices over the Internet using modern communication technology so that they can share data [1]. Recently, IoT has been widely deployed in applications such as vehicular ad hoc networks, smart grids, body sensor networks, smart cities, and smart homes [2,3]. IoT development depends on diverse advanced technologies, namely, wireless sensor networks (WSNs), cloud computing (CC), and information sensing [4]. The IoT is often used to enhance medical systems because it integrates effectively with infrastructure resources and delivers essential data to users [5]. Medical systems transmit a considerable quantity of data through WSNs when delivering e-health services such as electronic health records, remote patient monitoring, and medical platforms [6]. Skin cancer is considered the sixth major cancer variety, and its incidence is rising around the world. The skin contains three main types of cells, melanocytes, basal cells, and squamous cells, each of which can give rise to cancer [7,8]. Hence, there are different skin tumors, namely, basal cell carcinoma (BCC), melanoma, and squamous cell carcinoma (SCC), which can be serious forms of cancer. Skin cancer is particularly prevalent in Australia and the United States [9]. Diagnosing skin cancer at an early stage is challenging for dermatologists, which motivates researchers to develop simplified, automated detectors that identify skin cancer early [10].

Dermoscopy improves melanoma diagnostic accuracy; however, some cancers remain difficult to analyze accurately, especially early melanomas that lack distinctive dermoscopic features [11]. Although dermoscopy analyzes skin cancers with better accuracy, it is not appropriate for identifying featureless melanoma, and higher accuracy is required to improve patient survival rates [12,13]. These difficulties, together with the need for more accurate identification of skin tumors, lay the groundwork for computer-aided detection (CAD) techniques for analyzing skin cancers [14]. In general, computer-aided skin cancer analysis has five stages: image acquisition, preprocessing, segmentation, feature extraction, and classification [15]. The most important stages in the CAD of skin cancers are segmentation and classification [16]. However, identifying skin cancer with CAD is not simple, and numerous aspects must be considered for accurate identification; for instance, artefacts such as ruler signs, dark corners, ink marks, water bubbles, hairs, and marker signs may lead to incorrect segmentation and misclassification of skin cancers [17,18]. Among computer-aided techniques, deep learning (DL)-based algorithms provide promising outcomes for the classification and segmentation of skin cancers because they can extract complex features from skin cancer images for highly specific diagnosis [19]. Also, DL methods learn task-specific features and are more effective than other techniques.

This article presents an optimal deep learning-based skin cancer detection and classification (ODL-SCDC) algorithm in the IoT environment. The goal of the ODL-SCDC technique is to exploit metaheuristic-based hyperparameter selection approaches with a DL model for skin cancer classification. To achieve this, the ODL-SCDC technique first preprocesses the input images using a Wiener filtering (WF) system. Moreover, the ODL-SCDC algorithm involves an arithmetic optimization algorithm (AOA) with an EfficientNet model for feature extraction. For skin cancer detection, a stacked denoising autoencoder (SDAE) classification model is used. Lastly, the dragonfly algorithm (DFA) is utilized for the optimal hyperparameter selection of the SDAE algorithm. The simulation validation of the ODL-SCDC algorithm has been tested on a benchmark skin lesion database. The key contributions of the paper are summarized as follows.

- Develop an automated ODL-SCDC technique comprising WF-based preprocessing, AOA with EfficientNet-based feature extraction, an SDAE classifier, and DFA-based hyperparameter tuning. To the best of our knowledge, the proposed ODL-SCDC technique has not previously been reported in the literature.

- Propose AOA with the EfficientNet model for feature extraction, a critical aspect of skin cancer classification. The AOA-based fine-tuning process is crucial for optimizing the performance of the classification model.

- Present an SDAE classifier for skin cancer classification, with DFA employed for optimal hyperparameter selection of the SDAE model. Hyperparameter optimization of the SDAE model using DFA with cross-validation helps to boost the predictive outcome of the proposed model on unseen data.

2. Related Works

In [20], a skin cancer identification model was presented that improves accuracy by learning and extracting significant image representations through a MobileNetV3 framework. Subsequently, the extracted features were employed as input to an adapted Hunger Games Search (HGS) based on Dynamic-Opposite Learning (DOLHGS) and PSO. Ramya and Sathiyabhama [21] aimed to create an ensemble ML approach with an improved genetic algorithm (GA) to attain higher accuracy than existing methods in the early-stage prognosis of skin cancers. Feature selection (FS) was implemented with an Enhanced GA (EGA), which generates improved solutions through processes such as ensemble, mutation, and crossover, combined with an ELM (EGA-ELM) for classifying the images as cancerous or non-cancerous. Abd Elaziz et al. [22] designed a robust technique for skin cancer diagnosis in which a DL-based MobileNetV3 framework extracts the features that support the diagnosis. Further, an innovative technique named the Improved Artificial Rabbits Optimization (IARO) was presented, which exploits the crossover operator and Gaussian mutation to discard irrelevant features extracted by the MobileNetV3 framework.

Khamparia et al. [23] introduced a new DL Internet of Health and Things (IoHT)-driven model for classifying skin cancers in skin images by implementing the transfer learning (TL) method. In this model, features are automatically extracted from images by various pretrained frameworks, namely, SqueezeNet, VGG19, Inception V3, and ResNet50, and fed to the fully connected layer (FCL) of a CNN, which classifies malignant and benign skin cells using dense and max pooling operations. The authors of [24] suggested a novel skin cancer detection technique named DL with Evolutionary Algorithm Image Segmentation (DL-EAIS) for IoT and cloud-based smart medical fields. Firstly, dermoscopic images are captured by IoT devices and transferred to cloud servers for further identification. Secondly, the shallow CNN (SCNN) framework is exploited for feature extraction. Moreover, the Deep-Kernel-ELM (D-KELM) algorithm is utilized as a classification technique for identifying the class labels of dermoscopic images. In [25], a DL technique (CNN) was utilized to develop a computer-based method for predicting new cases of skin cancer. The resulting CNN contains four fully connected layers, three convolution layers, and three max pooling layers. Adjobo et al. [26] implemented a Gabor Convolutional Network (GCN) method to enhance the effectiveness of automatic skin tumor analysis. This algorithm integrates a CNN with Gabor filtering (GF) and comprises three operations: the construction of GF banks, a CNN model, and filter injection. In [27], a DL-assisted hybrid optimizer was employed for identifying skin cancer and segmenting lesions. Two optimization techniques were implemented for diagnosing cancers and segmenting skin lesions. The MultiScale Residual Fusion Network (MSRFNet) was exploited for the segmentation of skin cancer and was trained by the developed Average Subtraction Student Psychology-Based Optimizer (ASSPBO) technique.

3. The Proposed Model

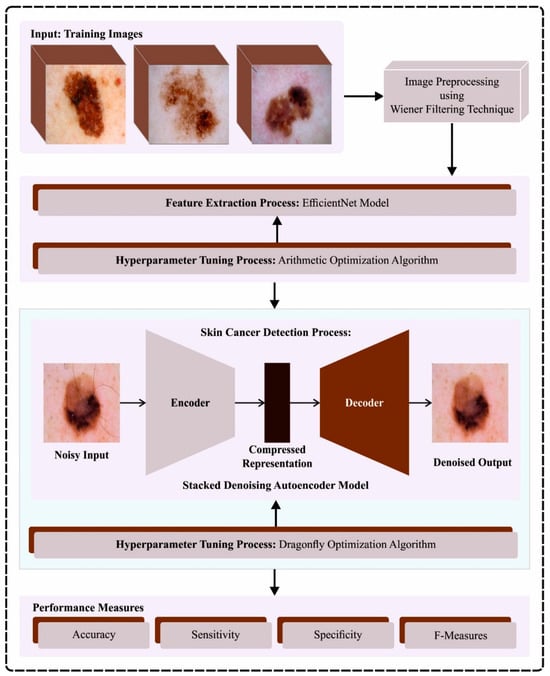

In this article, we have designed and developed an automated skin cancer classification and detection model using the ODL-SCDC technique in the IoT environment. The goal of the ODL-SCDC technique is to exploit metaheuristic-based hyperparameter selection approaches with a DL model for skin cancer classification. To achieve this, the ODL-SCDC technique performs a series of processes such as WF-based processing, EfficientNet-based feature extraction, AOA-based hyperparameter tuning, SDAE-based classification, and DFA-based parameter tuning. Figure 1 depicts the entire process of the ODL-SCDC approach.

Figure 1.

Overall process of ODL-SCDC algorithm.

3.1. Image Preprocessing

To preprocess the input images, the WF approach is used. The WF, named after Norbert Wiener, is a mathematical model for signal processing and filtering [28]. It is mainly utilized in statistics, engineering, and image processing to estimate an unknown signal or system by minimizing the mean squared error (MSE) between the estimated signal and the true signal. The WF is particularly beneficial when dealing with noisy signals whose noise characteristics are known. It usually involves convolution, spectral analysis, and statistical estimation. The filtering is executed in either the time (spatial) or frequency domain, depending on the nature of the problem and the available data.
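As an illustration of the preprocessing idea, the following is a minimal sketch of a pixel-wise adaptive Wiener filter for a grayscale image. It is not the authors' implementation: the function name `adaptive_wiener`, the window size, and the way the noise variance is estimated (as the mean of the local variances) are assumptions made for this example.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def adaptive_wiener(image, window=3, noise_var=None):
    """Pixel-wise adaptive Wiener filter for a 2-D grayscale image.

    `window` must be odd. If `noise_var` is None, it is estimated as the
    mean of the local variances (an assumption of this sketch).
    """
    img = np.asarray(image, dtype=np.float64)
    pad = window // 2
    padded = np.pad(img, pad, mode="reflect")
    # One (window x window) patch per pixel of the original image.
    patches = sliding_window_view(padded, (window, window))
    local_mean = patches.mean(axis=(-2, -1))
    local_var = patches.var(axis=(-2, -1))
    if noise_var is None:
        noise_var = local_var.mean()
    # Where the local variance does not exceed the noise level, the gain is 0
    # and the output falls back to the local mean.
    gain = np.maximum(local_var - noise_var, 0.0) / np.maximum(local_var, 1e-12)
    return local_mean + gain * (img - local_mean)


# Hypothetical usage on a synthetic image: a bright square plus Gaussian noise.
rng = np.random.default_rng(0)
clean = np.zeros((32, 32))
clean[8:24, 8:24] = 1.0
noisy = clean + rng.normal(0.0, 0.1, clean.shape)
denoised = adaptive_wiener(noisy, window=5)
```

Flat regions are replaced by their local mean, while high-variance regions such as lesion borders keep more of the original pixel value, which is why this kind of filter is often preferred to plain averaging for edge-sensitive images.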

3.2. Feature Extraction Using EfficientNet Model

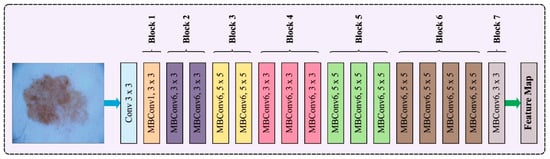

In this work, the EfficientNet approach is applied for feature extraction. A model scaling algorithm is used to enhance the accuracy and speed of the model. To accomplish this, the scaling dimensions suggested by earlier work, namely the width, depth, and resolution of the network, are re-examined [29]. Earlier research typically focused on expanding only one of these dimensions to enhance performance, whereas the authors of EfficientNet recognized through experiments that the dimensions influence one another. Figure 2 represents the architecture of EfficientNet. In particular, they formulated the problem of exploring the relationship between the width, depth, and resolution of the network and the accuracy of the model. Consider the entire net as $N$, and the $i$th layer is formulated by $Y_i = F_i(X_i)$, where $F_i$ represents the operator, $Y_i$ denotes the output tensor, and $X_i$ indicates the input tensor. Where $N$ has $k$ convolution layers, $N = F_k \odot \cdots \odot F_2 \odot F_1(X_1)$. The convolution layers are generally divided into stages of similar architecture; hence, $N$ is formulated as:

$$N = \bigodot_{i=1 \ldots s} F_i^{L_i}\left(X_{\langle H_i, W_i, C_i \rangle}\right) \tag{1}$$

In Equation (1), $i$ refers to the stage index, $F_i^{L_i}$ denotes the convolution layer $F_i$ of stage $i$ repeated $L_i$ times, and $\langle H_i, W_i, C_i \rangle$ shows the shape (height, width, and channels) of the input tensor.

Figure 2.

Architecture of EfficientNet.

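The researchers established some constraints: the fundamental architecture of the network is fixed, every layer is scaled by the same ratio, and computation and memory constraints are incorporated to reduce the search space. Consequently, the network is scaled by multiplying the baseline network, described by $\hat{F}_i$, $\hat{L}_i$, $\hat{H}_i$, $\hat{W}_i$, and $\hat{C}_i$, with constant magnification ratios:

$$
\begin{aligned}
\max_{d, w, r} \quad & Accuracy\big(N(d, w, r)\big) \\
\text{s.t.} \quad & N(d, w, r) = \bigodot_{i=1 \ldots s} \hat{F}_i^{\,d \cdot \hat{L}_i}\left(X_{\langle r \cdot \hat{H}_i,\; r \cdot \hat{W}_i,\; w \cdot \hat{C}_i \rangle}\right) \\
& Memory(N) \le target\_memory \\
& FLOPS(N) \le target\_flops
\end{aligned} \tag{2}
$$

In Equation (2), $w$, $d$, and $r$ signify the coefficients for scaling the width, depth, and resolution of the network.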

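The authors presented a compound scaling method after conducting experiments that modified only one dimension at a time, along with experiments that adjusted all three dimensions simultaneously. This technique uses a compound coefficient $\phi$ to uniformly scale the resolution, width, and depth of the network:

$$d = \alpha^{\phi}, \quad w = \beta^{\phi}, \quad r = \gamma^{\phi}, \quad \text{s.t.} \;\; \alpha \cdot \beta^{2} \cdot \gamma^{2} \approx 2, \;\; \alpha \ge 1, \; \beta \ge 1, \; \gamma \ge 1 \tag{3}$$

In Equation (3), $\alpha$, $\beta$, and $\gamma$ are constants determined by a small grid search.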
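The compound scaling rule can be made concrete with a short calculation. The sketch below assumes the constants reported in the original EfficientNet paper ($\alpha = 1.2$, $\beta = 1.1$, $\gamma = 1.15$); the present article does not state which values or which EfficientNet variant were used, and the function name `compound_scale` is hypothetical.

```python
def compound_scale(phi, alpha=1.2, beta=1.1, gamma=1.15):
    """Return depth, width, and resolution multipliers for a compound coefficient phi.

    The default constants are those reported for EfficientNet; they are an
    assumption here, not values taken from this article.
    """
    depth = alpha ** phi
    width = beta ** phi
    resolution = gamma ** phi
    # FLOPS grow roughly with depth * width^2 * resolution^2, i.e. about 2^phi.
    flops_factor = (alpha * beta ** 2 * gamma ** 2) ** phi
    return depth, width, resolution, flops_factor


for phi in range(4):
    d, w, r, flops = compound_scale(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, resolution x{r:.2f}, FLOPS x{flops:.2f}")
```

Because $\alpha \cdot \beta^{2} \cdot \gamma^{2}$ is close to 2, each unit increase of $\phi$ roughly doubles the computational cost, which makes the budget of a scaled network easy to predict.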

3.3. Hyperparameter Tuning Using AOA

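To adjust the parameters related to the EfficientNet, the AOA is used. The concept behind the AOA technique is to perform the search with the arithmetic operators of addition (A), subtraction (S), multiplication (M), and division (D) [30]. AOA has a simple structure with low computational complexity, and it can be compared with the sine-cosine algorithm (SCA). Because the M and D operators produce large steps in each iteration, they carry out most of the work in the exploration stage:

$$x_{i,j}(t+1) = \begin{cases} best(x_j) \div (MOP + \epsilon) \times \big((UB_j - LB_j) \times \mu + LB_j\big), & r_2 < 0.5 \\ best(x_j) \times MOP \times \big((UB_j - LB_j) \times \mu + LB_j\big), & \text{otherwise} \end{cases} \tag{4}$$

where $\epsilon$, referring to a small positive number, and the constant coefficient $\mu$ (0.499) are two factors of the model; $best(x_j)$ is the $j$th component of the best solution found so far; $UB_j$ and $LB_j$ are the upper and lower bounds of the $j$th dimension; and $r_2$ is a random number in $[0, 1]$. The math optimizer probability (MOP) decreases nonlinearly from 1 to 0 as the iterations progress:

$$MOP(t) = 1 - \frac{t^{1/\alpha}}{T^{1/\alpha}} \tag{5}$$

where $\alpha$ is a constant value fixed as 5, $t$ is the current iteration, and $T$ is the maximum number of iterations. Note that both the D and M operators in Equation (4) generate widely dispersed candidate positions around the best search agent. At the same time, the S and A operators are employed to devote greater attention to local exploitation, taking smaller steps within the search space.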

The right balance between exploitation and exploration is critical to achieving maximum efficiency in any model. The math optimizer accelerated (MOA) parameter is utilized to switch between exploration and exploitation at each iteration:

$$MOA(t) = Min + t \times \frac{Max - Min}{T} \tag{6}$$

In Equation (6), $Min$ and $Max$ indicate constant values, $t$ is the current iteration, and $T$ is the maximum number of iterations. According to Equation (6), MOA increases linearly from Min to Max. Thus, the search agents have a greater chance to explore the search range in early iterations; later, they are more likely to search near the optimal position.

The AOA technique derives a fitness function (FF) to enhance classification accuracy. It defines a positive value that describes the quality of a candidate solution. The classifier error rate is taken as the FF, and its minimization is the goal:

$$fitness(x_i) = ClassifierErrorRate(x_i) = \frac{\text{number of misclassified samples}}{\text{total number of samples}} \times 100 \tag{7}$$
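To show how the operators and the exploration/exploitation switch interact, the following is a minimal sketch of an AOA-style minimizer, following the commonly published form of the algorithm. It is not the authors' code: the function name `aoa_minimize`, the default `moa_min`/`moa_max` values, the greedy acceptance of candidates, and the toy sphere objective (standing in for the classifier error rate) are assumptions of this example.

```python
import numpy as np


def aoa_minimize(fitness, lb, ub, n_agents=20, max_iter=200,
                 moa_min=0.2, moa_max=1.0, alpha=5.0, mu=0.499, seed=0):
    """Minimize `fitness` inside the box [lb, ub] with an AOA-style search."""
    rng = np.random.default_rng(seed)
    lb = np.asarray(lb, dtype=float)
    ub = np.asarray(ub, dtype=float)
    dim = lb.size
    eps = np.finfo(float).eps

    X = rng.uniform(lb, ub, size=(n_agents, dim))
    fit = np.array([fitness(x) for x in X])
    best = X[fit.argmin()].copy()
    best_fit = float(fit.min())

    for t in range(1, max_iter + 1):
        moa = moa_min + t * (moa_max - moa_min) / max_iter           # grows linearly
        mop = 1.0 - (t ** (1.0 / alpha)) / (max_iter ** (1.0 / alpha))  # shrinks to 0
        scale = (ub - lb) * mu + lb
        for i in range(n_agents):
            r1, r2, r3 = rng.random((3, dim))
            # Exploration: division or multiplication (large steps).
            explore = np.where(r2 < 0.5, best / (mop + eps) * scale, best * mop * scale)
            # Exploitation: subtraction or addition (small steps).
            exploit = np.where(r3 < 0.5, best - mop * scale, best + mop * scale)
            cand = np.clip(np.where(r1 > moa, explore, exploit), lb, ub)
            f = float(fitness(cand))
            if f < fit[i]:            # greedy acceptance (a choice of this sketch)
                X[i], fit[i] = cand, f
                if f < best_fit:
                    best, best_fit = cand.copy(), f
    return best, best_fit


# Hypothetical usage: a sphere function stands in for the classifier error rate.
def sphere(x):
    return float(np.sum(x ** 2))


lower, upper = np.full(3, -5.0), np.full(3, 5.0)
best_x, best_f = aoa_minimize(sphere, lower, upper, n_agents=10, max_iter=50, seed=1)
```

In a hyperparameter-tuning setting, each position vector would encode candidate hyperparameter values, and `fitness` would train and validate the model and return its error rate.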

3.4. Skin Cancer Detection Using Optimal SDAE Model

For skin cancer classification, the SDAE model is applied. Autoencoders (AEs) convert high-dimensional input data into low-dimensional feature representations [31]. To improve the robustness of the AE, the denoising autoencoder (DAE) maps each real data instance $x$ to a corrupted instance $\tilde{x}$ and learns to reconstruct $x$ from it. Stacking multiple DAEs compresses the input data through successive hidden spaces so that in-depth features can be extracted. Therefore, the resultant layer of the SDAE is expressed as Equation (8):

$$h^{(l)} = f\left(W^{(l)} h^{(l-1)} + b^{(l)}\right), \quad h^{(0)} = \tilde{x}, \quad l = 1, \ldots, L+1 \tag{8}$$

where $\tilde{x}$ denotes the corrupted input for $x$, $W^{(l)}$ implies the weight matrix, $b^{(l)}$ represents the bias vector, and $f$ stands for an activation function such as ReLU, Sigmoid, or Tanh. $L$ signifies the count of hidden layers (HLs), with $l = 0$ for the input layer and $l = L+1$ for the resultant layer. By diminishing the error between the original input and the reconstructed output, the objective function of the SDAE is expressed as Equation (9):

$$J(W, b) = \frac{1}{n} \sum_{i=1}^{n} \left\| x_i - \hat{x}_i \right\|^{2}, \quad \hat{x}_i = h_i^{(L+1)} \tag{9}$$

where $n$ is the number of training samples. Compared to the simple AE and DAE, the SDAE is a hierarchical model that learns deep feature representations from the corrupted input.
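The data flow through a stacked denoising autoencoder can be sketched in a few lines of NumPy. The example below only illustrates the corruption step, the layer-by-layer forward pass, and the reconstruction error; it performs no training and is not the authors' model. The masking-noise corruption, the layer sizes, the sigmoid activation, and all function names are assumptions of this sketch.

```python
import numpy as np


def corrupt(x, drop_prob, rng):
    """Masking noise: set each input entry to zero with probability drop_prob."""
    mask = rng.random(x.shape) >= drop_prob
    return x * mask


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def init_sdae(layer_sizes, rng):
    """Small random weights and zero biases for consecutive layer sizes."""
    weights = [rng.normal(0.0, 0.1, size=(n_in, n_out))
               for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])]
    biases = [np.zeros(n_out) for n_out in layer_sizes[1:]]
    return weights, biases


def sdae_forward(x_tilde, weights, biases, activation=sigmoid):
    """Apply h = f(h_prev @ W + b) layer by layer, starting from the corrupted input."""
    h = x_tilde
    for W, b in zip(weights, biases):
        h = activation(h @ W + b)
    return h


def reconstruction_loss(x, x_hat):
    """Mean squared reconstruction error against the clean input."""
    return float(np.mean(np.sum((x - x_hat) ** 2, axis=1)))


# Hypothetical usage: 5 feature vectors of length 16, encoder 16-8-4, decoder 4-8-16.
rng = np.random.default_rng(0)
x = rng.random((5, 16))
weights, biases = init_sdae([16, 8, 4, 8, 16], rng)
x_hat = sdae_forward(corrupt(x, 0.3, rng), weights, biases)
loss = reconstruction_loss(x, x_hat)
```

Note that the loss compares the reconstruction with the clean input `x`, not with the corrupted copy; this is what forces the hidden layers to learn features that are robust to noise. For classification, a softmax layer would typically be placed on top of the encoder and fine-tuned with labels.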

Finally, the DFA is utilized for the hyperparameter selection of the SDAE model. DFA is a recent metaheuristic technique that draws its inspiration from the static and dynamic swarming behaviors of dragonflies (DFs) [32]. These two behaviors correspond to the two phases of a metaheuristic algorithm, exploration and exploitation. In a static swarm, DFs form small groups and fly over different regions, which is the desired behavior for the exploration stage. In a dynamic swarm, DFs fly in one direction and in large groups, which is the desired behavior for the exploitation stage. To model the behaviors of DFs, five fundamental rules are used, three of which were developed by Reynolds and two of which are novel concepts:

Separation, which represents the avoidance of collisions between an individual and its neighbors, is given as follows.

$$S_i = -\sum_{j=1}^{N} \left(X - X_j\right) \tag{10}$$

where $X$ signifies the location of the current individual, $X_j$ refers to the location of the $j$th neighboring individual, and $N$ shows the number of neighboring individuals.

Alignment: This implies matching the velocity of an individual to that of the other individuals in its neighborhood.

$$A_i = \frac{\sum_{j=1}^{N} V_j}{N} \tag{11}$$

In Equation (11), $V_j$ denotes the velocity of the $j$th neighboring individual and $N$ refers to the number of neighbors.

Cohesion: This implies the tendency of an individual to move toward the center of mass of its neighborhood.

$$C_i = \frac{\sum_{j=1}^{N} X_j}{N} - X \tag{12}$$

In Equation (12), $X_j$ denotes the location of the $j$th neighbor and $X$ shows the location of the current individual.

Attraction: The principal objective is to maintain survival; consequently, each individual should be attracted to the food sources:

$$F_i = X^{+} - X \tag{13}$$

In Equation (13), $X^{+}$ denotes the location of the food source and $X$ is the location of the current individual.

Distraction: This means staying away from the enemy, as shown below.

$$E_i = X^{-} + X \tag{14}$$

In Equation (14), $X^{-}$ denotes the location of the enemy and $X$ shows the location of the individual.

The position vector $X$ and the step length vector $\Delta X$ are the two vectors considered for updating the location of artificial DFs and simulating their movement.

$$\Delta X_{t+1} = \left(s S_i + a A_i + c C_i + f F_i + e E_i\right) + w \Delta X_t \tag{15}$$

In Equation (15), the step length vector plays the same role as the velocity vector in PSO, and the DFA is developed on the basis of the PSO framework. $s$ denotes the separation weight and $S_i$ the separation of the $i$th individual; $a$ denotes the alignment weight and $A_i$ the alignment; $c$ shows the cohesion coefficient and $C_i$ the cohesion; $f$ represents the food factor and $F_i$ the food source; $e$ is the enemy factor and $E_i$ the position of the enemy; $w$ implies the inertia weight; and $t$ shows the iteration counter. After evaluating the step vector, the position vector is evaluated using the following expression.

$$X_{t+1} = X_t + \Delta X_{t+1} \tag{16}$$
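The five swarming rules and the step update can be sketched as a single vectorized function. This is an illustration of the standard dragonfly update, not the authors' implementation: the function name `dfa_step`, the default weights, and the simplification that the whole swarm forms one neighborhood are assumptions of this example.

```python
import numpy as np


def dfa_step(X, dX, food, enemy, s=0.1, a=0.1, c=0.7, f=1.0, e=1.0, w=0.9):
    """One dragonfly update for all individuals.

    X, dX : arrays of shape (n, dim) with positions and step vectors.
    food, enemy : arrays of shape (dim,), best and worst positions found so far.
    Simplification of this sketch: every individual is a neighbor of every other.
    """
    n = X.shape[0]
    S = -(n * X - X.sum(axis=0))                    # separation: -sum_j (X_i - X_j)
    A = np.broadcast_to(dX.mean(axis=0), X.shape)   # alignment: mean step of neighbors
    C = X.mean(axis=0) - X                          # cohesion: pull to center of mass
    F = food - X                                    # attraction toward the food source
    E = enemy + X                                   # distraction away from the enemy
    dX_new = s * S + a * A + c * C + f * F + e * E + w * dX
    return X + dX_new, dX_new


# Hypothetical usage: with only the food term active, every dragonfly
# moves directly onto the food source in a single step.
positions = np.array([[0.0, 0.0], [2.0, 2.0]])
steps = np.zeros_like(positions)
new_positions, new_steps = dfa_step(
    positions, steps, food=np.array([1.0, 1.0]), enemy=np.zeros(2),
    s=0.0, a=0.0, c=0.0, f=1.0, e=0.0, w=0.0,
)
```

For hyperparameter selection, each row of `X` would encode one candidate set of SDAE hyperparameters (for example, learning rate and dropout), `food` would be the candidate with the lowest validation error so far, and `enemy` the one with the highest.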

4. Results Analysis

The proposed model is implemented in Python 3.8.5 on a PC with the following specifications: i5-8600k, GeForce 1050 Ti 4 GB, 16 GB RAM, 250 GB SSD, and 1 TB HDD. The parameter settings are given as follows: learning rate: 0.01, dropout: 0.5, batch size: 5, epoch count: 50, and activation: ReLU. For experimental validation, 80:20 and 70:30 ratios of training/testing data are used.

In this study, the performance validation of the ODL-SCDC algorithm has been tested on the ISIC database including distinct classes, namely, Angioma (ANG) (21 images), Nevus (NEV) (46 images), Lentigo NOS (LNOS) (41 images), Solar Lentigo (SLG) (68 images), Melanoma (MEL) (51 images), Seborrheic Keratosis (SKT) (54 images), and Basal Cell Carcinoma (BCC) (37 images). Table 1 represents the details of the database.

Table 1.

Database details.

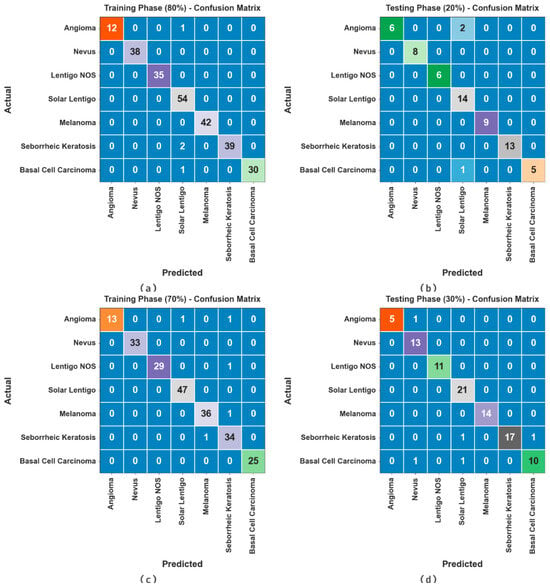

Figure 3 exhibits the confusion matrices attained by the ODL-SCDC methodology at 80:20 and 70:30 of the TR phase/TS phase. The outcomes indicate the effective recognition and classification of all seven classes.

Figure 3.

Confusion matrices at (a,b) 80:20 of TR phase/TS phase and (c,d) 70:30 of TR phase/TS phase.

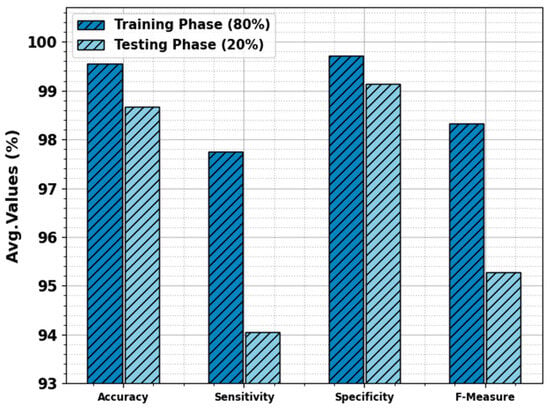

The skin cancer classification result of the ODL-SCDC technique at 80:20 of the TR phase/TS phase is provided in Table 2 and Figure 4. The experimental values indicate that the ODL-SCDC technique achieves enhanced performance under all classes. With 80% of the TR phase, the ODL-SCDC technique offers average accuracy, sensitivity, specificity, and F-score of 99.55%, 97.74%, 99.71%, and 98.33%, respectively. Additionally, with 20% of the TS phase, the ODL-SCDC system gains average accuracy, sensitivity, specificity, and F-score of 98.66%, 94.05%, 99.14%, and 95.28%, correspondingly.
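Class-wise measures of this kind are usually derived from the confusion matrix in a one-vs-rest fashion and then averaged over the classes. The sketch below shows that computation under the assumption that the reported averages are macro-averages of accuracy, sensitivity, specificity, and F-score; the function names and the small two-class matrix are hypothetical and do not reproduce the values reported in this article.

```python
import numpy as np


def per_class_metrics(cm):
    """One-vs-rest measures from a confusion matrix (rows: true, columns: predicted)."""
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    tp = np.diag(cm)
    fn = cm.sum(axis=1) - tp
    fp = cm.sum(axis=0) - tp
    tn = total - tp - fn - fp
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "f_score": 2 * tp / (2 * tp + fp + fn),
    }


def macro_average(metrics):
    """Unweighted mean of each measure over the classes."""
    return {name: float(values.mean()) for name, values in metrics.items()}


# Hypothetical two-class example.
example_cm = [[5, 1],
              [2, 4]]
class_metrics = per_class_metrics(example_cm)
averages = macro_average(class_metrics)
```

With one-vs-rest accuracy, the large number of true negatives in a seven-class problem pushes accuracy and specificity well above sensitivity, which matches the pattern of the reported averages.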

Table 2.

Skin cancer classifier outcome of ODL-SCDC algorithm at 80:20 of TR phase/TS phase.

Figure 4.

Average outcome of ODL-SCDC algorithm at 80:20 of TR phase/TS phase.

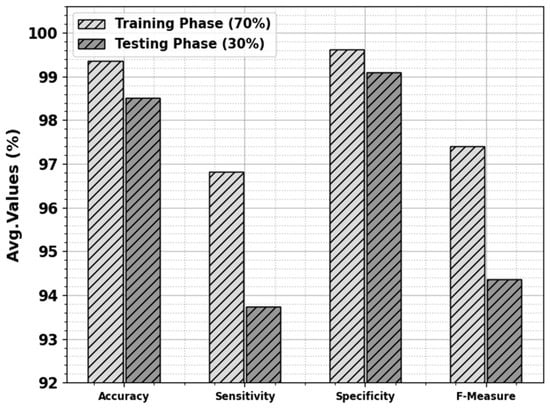

The skin cancer classification outcome of the ODL-SCDC technique at 70:30 of the TR phase/TS phase is provided in Table 3 and Figure 5. The simulation values indicate that the ODL-SCDC method obtains high outcomes under all classes. With 70% of the TR phase, the ODL-SCDC system attains average accuracy, sensitivity, specificity, and F-score of 99.36%, 96.82%, 99.61%, and 97.41%, correspondingly. Furthermore, with 30% of the TS phase, the ODL-SCDC algorithm gains average accuracy, sensitivity, specificity, and F-score of 98.51%, 93.73%, 99.10%, and 94.37%, correspondingly.

Table 3.

Skin cancer classifier outcome of ODL-SCDC algorithm at 70:30 of TR phase/TS phase.

Figure 5.

Average outcome of ODL-SCDC algorithm at 70:30 of TR phase/TS phase.

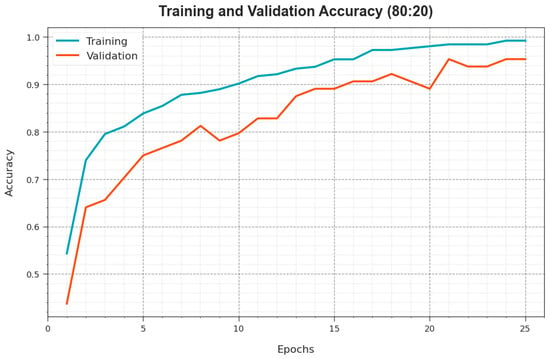

To evaluate the performance of the ODL-SCDC approach at 80:20 of the TR phase/TS phase, TR and TS accuracy curves are determined, as revealed in Figure 6. The TR and TS accuracy curves show the performance of the ODL-SCDC model over several epochs. The figure provides meaningful details regarding the learning behavior and generalisation abilities of the ODL-SCDC model. As the epoch count increases, the TR and TS accuracy curves improve. It is observed that the ODL-SCDC algorithm obtains better testing accuracy, indicating its capability of recognizing the patterns in the TR and TS data.

Figure 6.

Accuracy curve of ODL-SCDC approach at 80:20 of TR phase/TS phase.

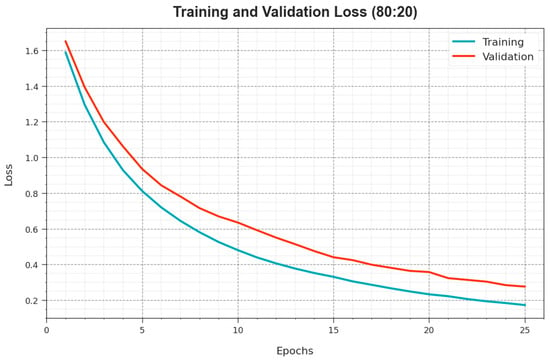

Figure 7 exhibits the overall TR and TS loss values of the ODL-SCDC algorithm at 80:20 of the TR phase/TS phase over epochs. The TR loss shows that the model loss diminishes over epochs. Primarily, the loss values decrease as the model modifies its weights to minimize the prediction error on the TR and TS data. The loss curves demonstrate the extent to which the model fits the training data. The TR and TS loss decrease steadily, which indicates that the ODL-SCDC system effectually learns the patterns exhibited in the TR and TS data. It is also observed that the ODL-SCDC methodology adjusts its parameters to decrease the discrepancy between the predictions and the original training labels.

Figure 7.

Loss curve of ODL-SCDC approach at 80:20 of TR phase/TS phase.

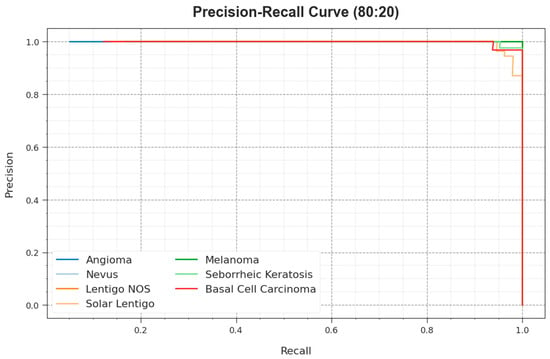

The precision–recall curve of the ODL-SCDC system at 80:20 of the TR phase/TS phase, obtained by plotting precision against recall, is shown in Figure 8. The outcome confirms that the ODL-SCDC approach reaches high precision–recall values under all classes. The figure indicates that the model learns to recognize the various classes. The ODL-SCDC model accomplishes improved results in the recognition of positive instances with minimal false positives.

Figure 8.

PR curve of ODL-SCDC system at 80:20 of TR phase/TS phase.

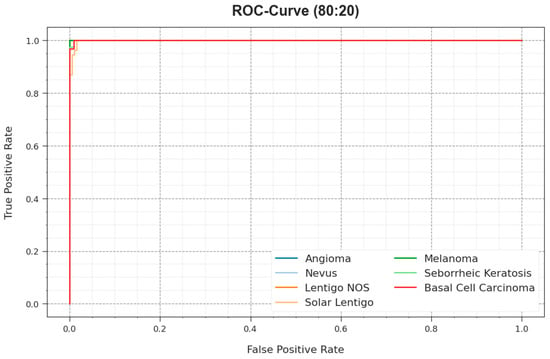

The ROC curves offered by the ODL-SCDC model at 80:20 of the TR phase/TS phase are illustrated in Figure 9 and show its ability to discriminate between the class labels. The figure provides valuable insights into the trade-off between the true positive rate (TPR) and false positive rate (FPR) over distinct classification thresholds and varying numbers of epochs. It presents the accurate predictive performance of the ODL-SCDC system on the classification of various classes.

Figure 9.

ROC curve of ODL-SCDC approach at 80:20 of TR phase/TS phase.

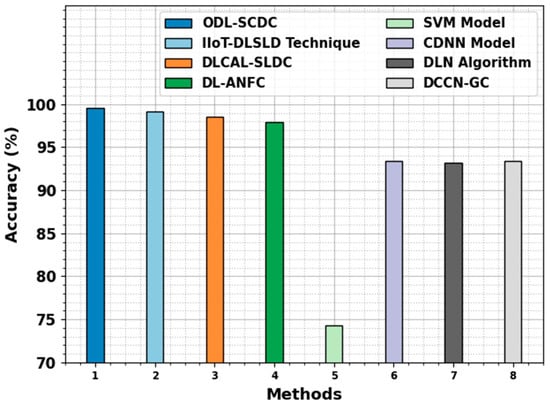

In Table 4, a comprehensive comparison study of the ODL-SCDC technique with existing models is made [1]. Figure 10 represents the comparative results of the ODL-SCDC technique in terms of accuracy. The ODL-SCDC technique achieves a higher accuracy of 99.55%, whereas the IIoT-DLSLD, DLCAL-SLDC, DL-ANFC, SVM, CDNN, DLN, and DCCN-GC models obtain lower values of 99.20%, 98.50%, 97.90%, 74.30%, 93.40%, 93.20%, and 93.40%, respectively.

Table 4.

Comparative outcome of ODL-SCDC algorithm with other approaches.

Figure 10.

Accuracy outcome of ODL-SCDC algorithm with other approaches.

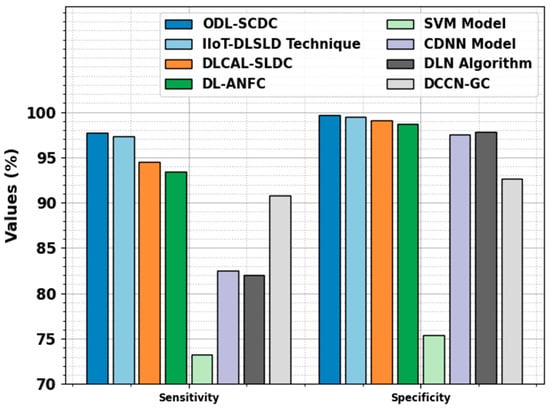

Figure 11 signifies the comparative outcomes of the ODL-SCDC approach in terms of sensitivity and specificity. Based on sensitivity, the ODL-SCDC technique gains a higher value of 97.74%, whereas the IIoT-DLSLD, DLCAL-SLDC, DL-ANFC, SVM, CDNN, DLN, and DCCN-GC systems obtain lower values of 97.30%, 94.50%, 93.40%, 73.20%, 82.50%, 82%, and 90.80%, correspondingly. Based on specificity, the ODL-SCDC methodology achieves a higher value of 99.71%, whereas the IIoT-DLSLD, DLCAL-SLDC, DL-ANFC, SVM, CDNN, DLN, and DCCN-GC algorithms obtain lower values of 99.50%, 99.10%, 98.70%, 75.40%, 97.50%, 97.80%, and 92.70%, correspondingly.

Figure 11.

Sensitivity and specificity outcomes of ODL-SCDC algorithm with other approaches.

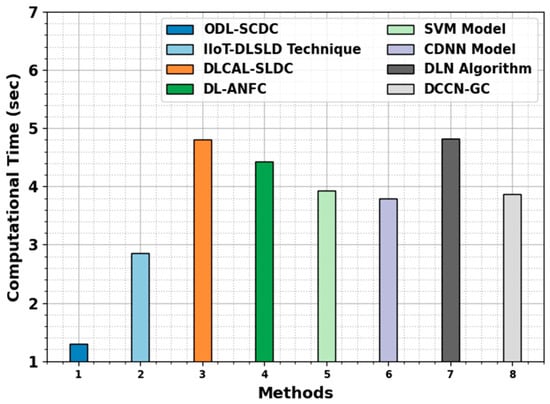

Lastly, the computation time (CT) results of the ODL-SCDC technique are compared with recent models in Table 5 and Figure 12. The experimental outcomes show that the ODL-SCDC technique attains the lowest CT value of 1.30 s. In contrast, the IIoT-DLSLD, DLCAL-SLDC, DL-ANFC, SVM, CDNN, DLN, and DCCN-GC models obtain higher CT values. Therefore, the ODL-SCDC technique exhibits effectual performance in skin cancer classification.

Table 5.

CT outcome of ODL-SCDC algorithm with other approaches.

Figure 12.

CT outcome of ODL-SCDC algorithm with other approaches.

5. Conclusions

In this article, we have designed and developed an automated skin cancer classification and detection model using the ODL-SCDC technique in the IoT environment. The goal of the ODL-SCDC technique is to exploit metaheuristic-based hyperparameter selection approaches with a DL model for skin cancer classification. To achieve this, the ODL-SCDC technique performs a series of processes: WF-based preprocessing, EfficientNet-based feature extraction with AOA-based hyperparameter tuning, and SDAE-based classification with DFA-based hyperparameter tuning. The simulation validation of the ODL-SCDC algorithm has been tested on a benchmark skin lesion database. The extensive results demonstrated the enhanced performance of the ODL-SCDC technique over other approaches with respect to distinct measures.

Author Contributions

Conceptualization, M.O. and M.A.A.; Methodology, M.O., M.A.A., N.S.A. and M.A.S.; Software, S.S.A.; Validation, N.S.A., S.S.A. and A.S.; Investigation, M.O.; Resources, N.S.A.; Data curation, N.S.A.; Writing—original draft, M.O., M.A.A., M.A.S. and A.S.; Writing—review & editing, M.O., M.A.A., N.S.A., S.S.A., M.A.S. and A.S.; Visualization, N.S.A. and S.S.A.; Project administration, M.A.A.; Funding acquisition, M.O. and M.A.A. All authors have read and agreed to the published version of the manuscript.

Funding

The authors extend their appreciation to the Deanship of Scientific Research at King Khalid University for funding this work through a large group Research Project under grant number (RGP2/61/44). This work was also supported by the Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2023R203), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia, and by the Research Supporting Project number (RSPD2023R521), King Saud University, Riyadh, Saudi Arabia. The authors would like to thank the Deanship of Scientific Research at Majmaah University for supporting this work under Project Number R-2023-656. This study is partially funded by the Future University in Egypt (FUE).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing does not apply to this article as no datasets were generated during the current study.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Asiri, Y.; Halawani, H.T.; Algarni, A.D.; Alanazi, A.A. IoT-enabled healthcare environment using intelligent deep learning enabled skin lesion diagnosis model. Alex. Eng. J. 2023, 78, 35–44. [Google Scholar] [CrossRef]

- Riaz, L.; Qadir, H.M.; Ali, G.; Ali, M.; Raza, M.A.; Jurcut, A.D.; Ali, J. A Comprehensive Joint Learning System to Detect Skin Cancer. IEEE Access 2023, 11, 79434–79444. [Google Scholar] [CrossRef]

- Shinde, R.K.; Alam, S.; Hossain, B.; Imtiaz, S.M.; Kim, J.; Padwal, A.A.; Kim, N. Squeeze-mnet: Precise skin cancer detection model for low computing IOT devices using transfer learning. Cancers 2022, 15, 12. [Google Scholar] [CrossRef] [PubMed]

- Abdelhafeez, A.; Mohamed, H.K. Skin Cancer Detection using Neutrosophic c-means and Fuzzy c-means Clustering Algorithms. J. Intell. Syst. Internet Things 2023, 8, 33–42. [Google Scholar] [CrossRef]

- Singh, B.; Ebrahim, A.M.A.; Rajan, R.; Gupta, S.; Babu, D.V. February. IoT enabled Primary Skin Cancer Prediction Using Pigmented Lesions. In Proceedings of the 2022 Second International Conference on Artificial Intelligence and Smart Energy (ICAIS), Coimbatore, India, 23–25 February 2022; IEEE: New York, NY, USA, 2022; pp. 1315–1319. [Google Scholar]

- Singh, S.K.; Abolghasemi, V.; Anisi, M.H. Fuzzy Logic with Deep Learning for Detection of Skin Cancer. Appl. Sci. 2023, 13, 8927. [Google Scholar] [CrossRef]

- Alenezi, F.; Armghan, A.; Polat, K. A multi-stage melanoma recognition framework with deep residual neural network and hyperparameter optimization-based decision support in dermoscopy images. Expert Syst. Appl. 2023, 215, 119352. [Google Scholar] [CrossRef]

- Kaur, R.; GholamHosseini, H.; Sinha, R.; Lindén, M. Melanoma classification using a novel deep convolutional neural network with dermoscopic images. Sensors 2022, 22, 1134. [Google Scholar] [CrossRef]

- Tajjour, S.; Garg, S.; Chandel, S.S.; Sharma, D. A novel hybrid artificial neural network technique for the early skin cancer diagnosis using color space conversions of original images. Int. J. Imaging Syst. Technol. 2023, 33, 276–286. [Google Scholar] [CrossRef]

- Jojoa Acosta, M.F.; Caballero Tovar, L.Y.; Garcia-Zapirain, M.B.; Percybrooks, W.S. Melanoma diagnosis using deep learning techniques on dermatoscopic images. BMC Med. Imaging 2021, 21, 6. [Google Scholar] [CrossRef]

- Meenakshi, K.; Adepu, A.; Nagandla, V.V.T.; Agarwal, S. A Machine learning based melanoma skin cancer using hybrid texture features. In Proceedings of the 2023 3rd International Conference on Intelligent Technologies (CONIT), Hubballi, India, 23–25 June 2023; IEEE: New York, NY, USA, 2023; pp. 1–5. [Google Scholar]

- Mehr, R.A.; Ameri, A. Skin Cancer Detection Based on Deep Learning. J. Biomed. Phys. Eng. 2022, 12, 559. [Google Scholar]

- Khan, M.A.; Akram, T.; Zhang, Y.; Alhaisoni, M.; Al Hejaili, A.; Shaban, K.A.; Tariq, U.; Zayyan, M.H. SkinNet-ENDO: Multiclass skin lesion recognition using deep neural network and Entropy-Normal distribution optimization algorithm with ELM. Int. J. Imaging Syst. Technol. 2023, 33, 1275–1292. [Google Scholar] [CrossRef]

- Malibari, A.A.; Alzahrani, J.S.; Eltahir, M.M.; Malik, V.; Obayya, M.; Al Duhayyim, M.; Neto, A.V.L.; de Albuquerque, V.H.C. Optimal deep neural network-driven computer aided diagnosis model for skin cancer. Comput. Electr. Eng. 2022, 103, 108318. [Google Scholar] [CrossRef]

- Veeraiah, V.; Ravikaumar, G.K.; Kalpana, R.; Sreenivasulu, K.; Singh, Y.; Shukla, S.K. Medical Internet of Things using Deep Learning Techniques for Skin Cancer Detection. In Proceedings of the 2022 5th International Conference on Contemporary Computing and Informatics (IC3I), Uttar Pradesh, India, 14–16 December 2022; IEEE: New York, NY, USA, 2022; pp. 317–321. [Google Scholar]

- Sivakumar, N.R.; Ghorashi, S.A.; Karim, F.K.; Alabdulkreem, E.; Al-Rasheed, A. MIoT Based Skin Cancer Detection Using Bregman Recurrent Deep Learning. Comput. Mater. Contin. 2022, 73, 6253–6267. [Google Scholar] [CrossRef]

- Pushpa, B. An Efficient Internet of Things (IoT)-Enabled Skin Lesion Detection Model using Hybrid Feature Extraction with Extreme Machine Learning Model. In Proceedings of the International Conference on Intelligent Computing, Information and Control Systems: ICICCS 2020, Madurai, India, 13–15 May 2020; Springer: Singapore, 2021; pp. 275–282. [Google Scholar]

- Ananth, C.; Therese, M.J. A Survey on Melanoma: Skin Cancer through Computerized Diagnosis. 2020. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3551811 (accessed on 8 October 2023).

- Linsangan, N.B.; Adtoon, J.J. Skin cancer detection and classification for moles using k-nearest neighbor algorithm. In Proceedings of the 5th International Conference on Bioinformatics Research and Applications, Hong Kong, 27–29 December 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 47–51. [Google Scholar]

- Dahou, A.; Aseeri, A.O.; Mabrouk, A.; Ibrahim, R.A.; Al-Betar, M.A.; Elaziz, M.A. Optimal Skin Cancer Detection Model Using Transfer Learning and Dynamic-Opposite Hunger Games Search. Diagnostics 2023, 13, 1579. [Google Scholar] [CrossRef]

- Ramya, P.; Sathiyabhama, B. Skin Cancer Prediction using Enhanced Genetic Algorithm with Extreme Learning Machine. J. Trends Comput. Sci. Smart Technol. 2023, 5, 1–13. [Google Scholar] [CrossRef]

- Abd Elaziz, M.; Dahou, A.; Mabrouk, A.; El-Sappagh, S.; Aseeri, A.O. An efficient artificial rabbits optimization based on mutation strategy for skin cancer prediction. Comput. Biol. Med. 2023, 163, 107154. [Google Scholar] [CrossRef]

- Khamparia, A.; Singh, P.K.; Rani, P.; Samanta, D.; Khanna, A.; Bhushan, B. An internet of health things-driven deep learning framework for detection and classification of skin cancer using transfer learning. Trans. Emerg. Telecommun. Technol. 2021, 32, e3963. [Google Scholar] [CrossRef]

- Anupama, C.S.S.; Natrayan, L.; Lydia, E.L.; Sait, A.W.; Escorcia-Gutierrez, J.; Gamarra, M.; Mansour, R.F. Deep learning with backtracking search optimization based skin lesion diagnosis model. Comput. Mater. Contin. 2021, 70, 1297–1313. [Google Scholar] [CrossRef]

- Ottom, M.A. Convolutional Neural Network for diagnosing skin cancer. Int. J. Adv. Comput. Sci. Appl. 2019, 10, 333–338. [Google Scholar] [CrossRef]

- Adjobo, E.C.; Mahama, A.T.S.; Gouton, P.; Tossa, J. Proposition of convolutional neural network based system for skin cancer detection. In Proceedings of the 2019 15th International Conference on Signal-Image Technology & Internet-Based Systems (SITIS), Sorrento, Italy, 26–29 November 2019; IEEE: New York, NY, USA, 2019; pp. 35–39. [Google Scholar]

- Baskaran, D.; Nagamani, Y.; Merugula, S.; Premnath, S.P. MSRFNet for skin lesion segmentation and deep learning with hybrid optimization for skin cancer detection. Imaging Sci. J. 2023, 71, 616–635. [Google Scholar] [CrossRef]

- Luo, J.; Fan, Y.; Jiang, P.; He, Z.; Xu, P.; Li, X.; Yang, W.; Zhou, W.; Ma, S. Vehicle platform attitude estimation method based on adaptive Kalman filter and sliding window least squares. Meas. Sci. Technol. 2020, 32, 035007. [Google Scholar] [CrossRef]

- Chen, S.W.; Chen, J.K.; Hsieh, Y.H.; Chen, W.H.; Liao, Y.H.; Lin, Y.C.; Chen, M.C.; Tsai, C.T.; Chai, J.W.; Yuan, S.M. Improving Patient Safety in the X-ray Inspection Process with EfficientNet-Based Medical Assistance System. Healthcare 2023, 11, 2068. [Google Scholar] [CrossRef]

- Jajam, N.; Challa, N.P.; Prasanna, K.S.; Deepthi, C.H.V.S. Arithmetic Optimization with Ensemble Deep Learning SBLSTM-RNN-IGSA model for Customer Churn Prediction. IEEE Access 2023, 11, 93111–93128. [Google Scholar] [CrossRef]

- Fei, Z.; Wang, J.; Liu, K.; Attahi, E.; Huang, B. Deep feature fusion-based stacked denoising autoencoder for tag recommendation systems. IET Cyber-Syst. Robot. 2023, 5, e12095. [Google Scholar] [CrossRef]

- Rahmati, K.; Taherinasab, S. The importance of reconfiguration of the distribution network to achieve minimization of energy losses using the dragonfly algorithm. e-Prime-Adv. Electr. Eng. Electron. Energy 2023, 5, 100270. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).