Over-Detection of Melanoma-Suspect Lesions by a CE-Certified Smartphone App: Performance in Comparison to Dermatologists, 2D and 3D Convolutional Neural Networks in a Prospective Data Set of 1204 Pigmented Skin Lesions Involving Patients’ Perception

Simple Summary

Abstract

1. Introduction

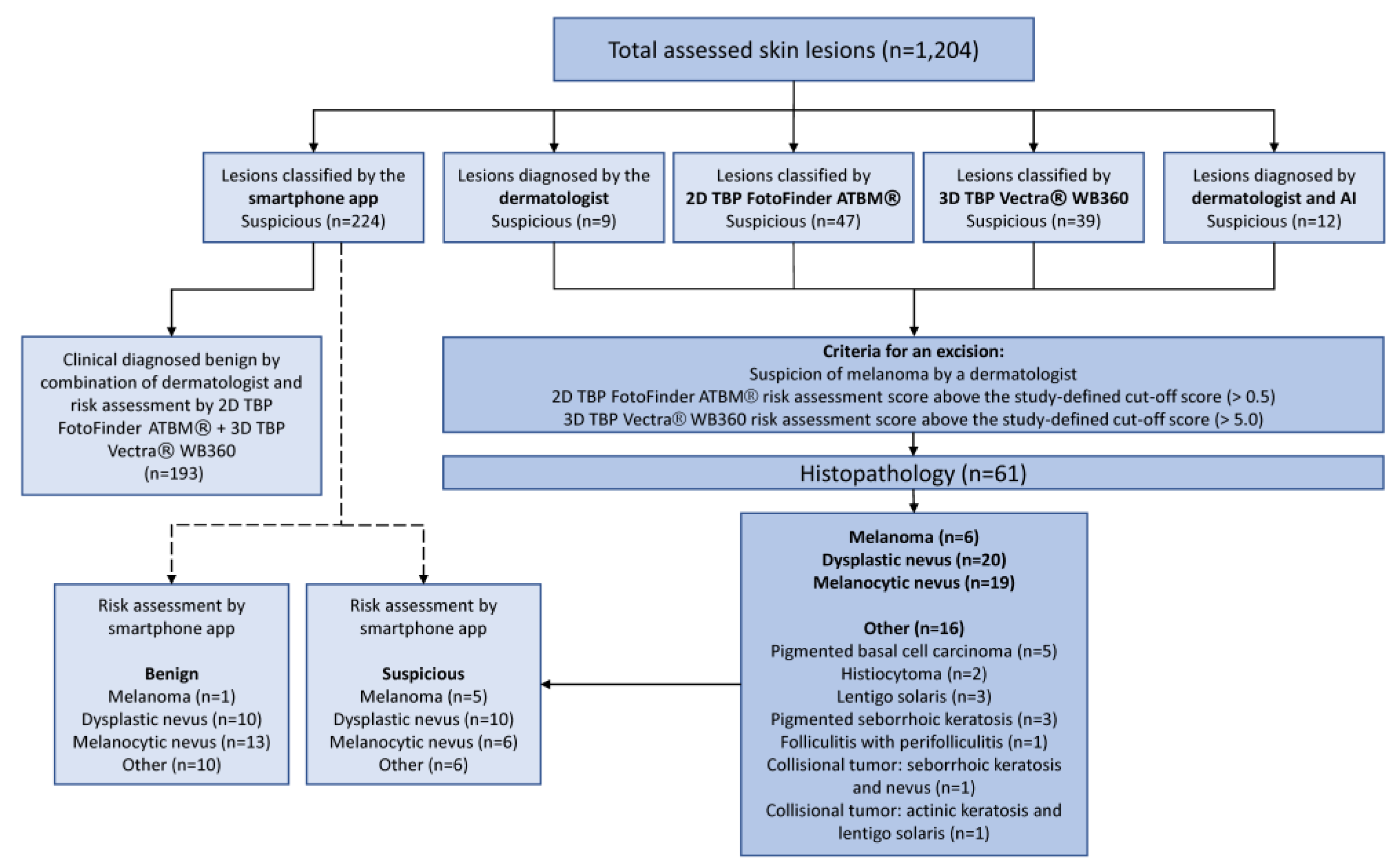

2. Materials and Methods

2.1. Study Design and Participants

2.2. Procedures

2.3. Statistical Analysis

2.4. Ethics

3. Results

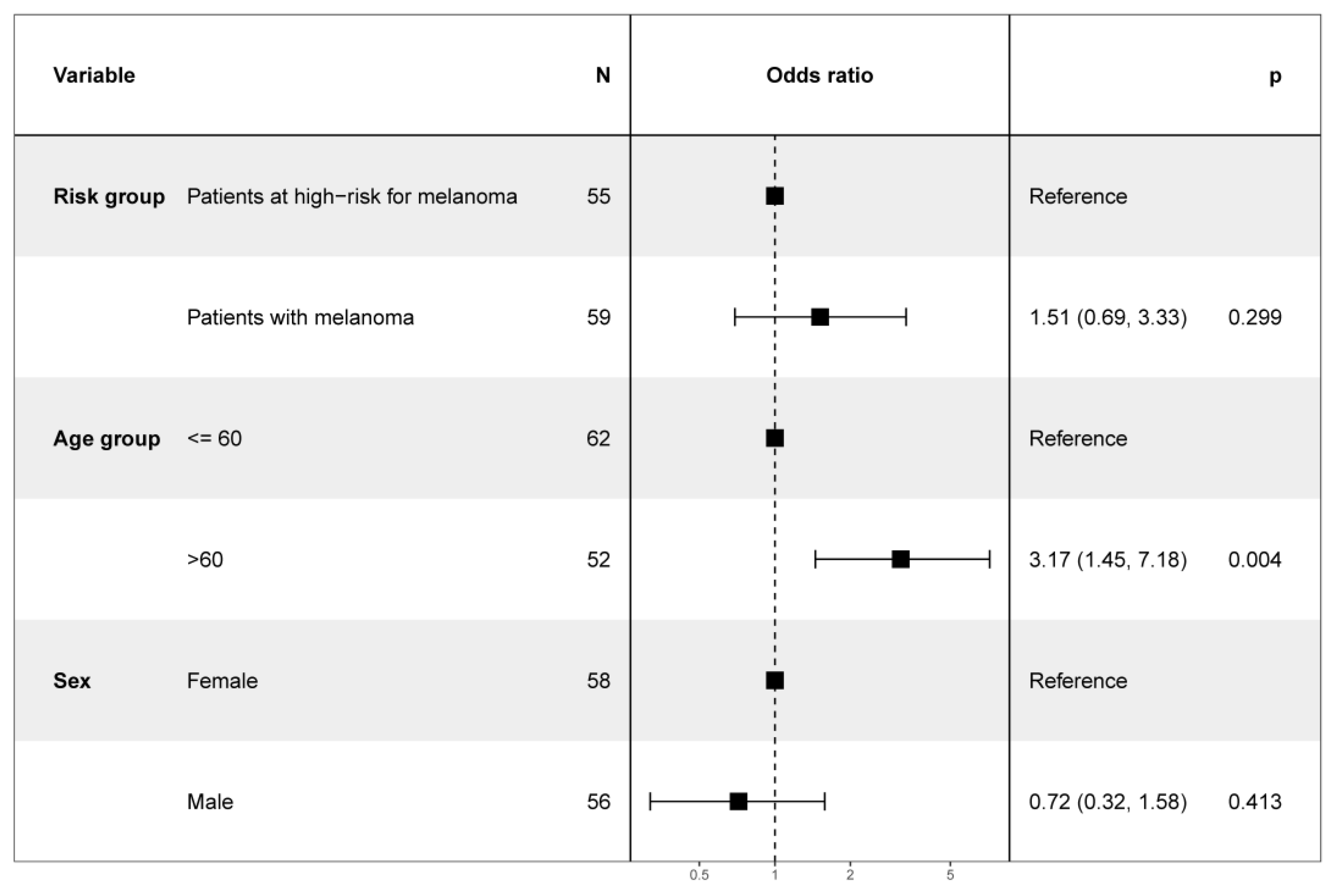

3.1. Study Population

3.2. Diagnostic Accuracy and Performance of the Smartphone App SkinVision®

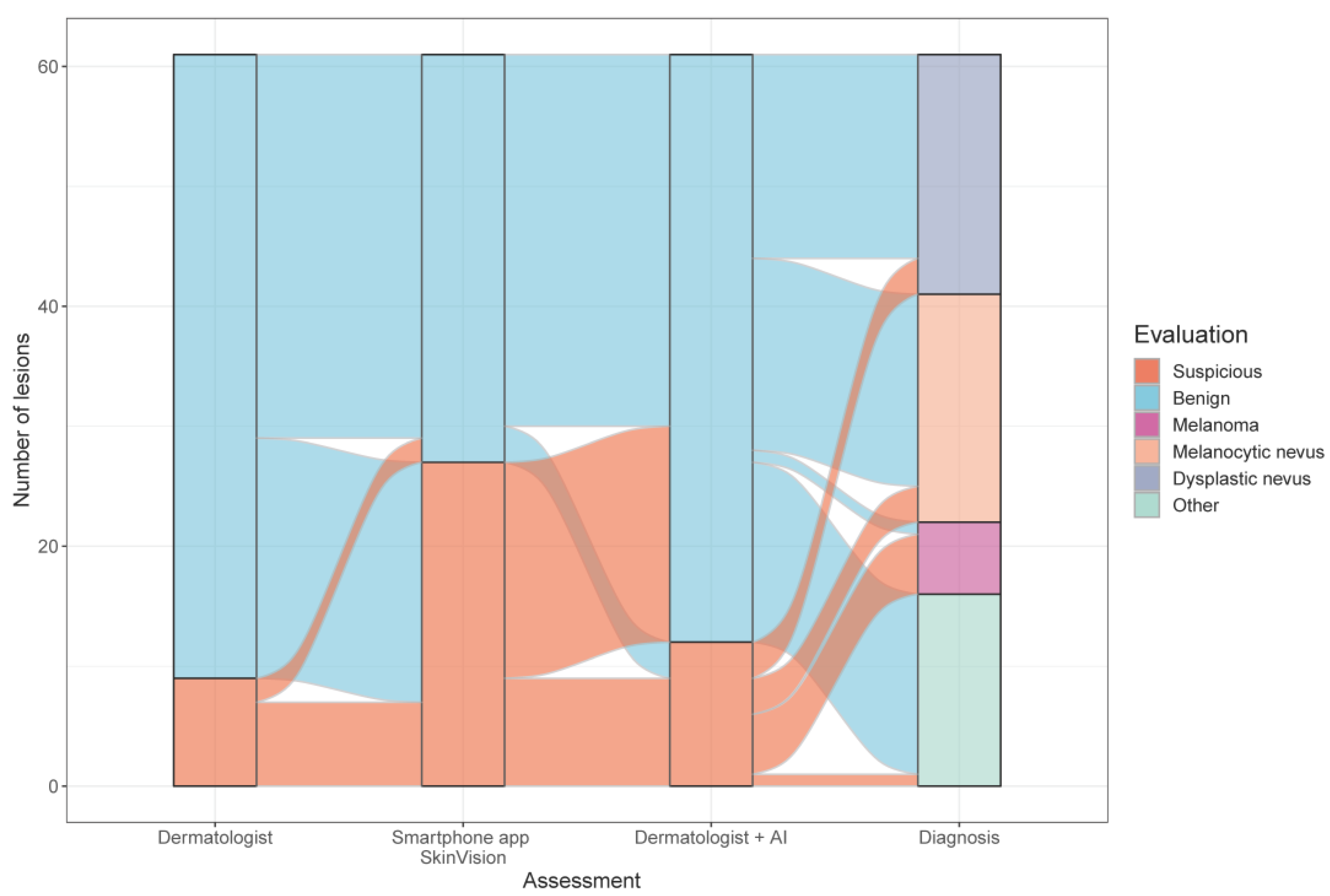

3.2.1. Comparison of All Risk Assessments

3.2.2. Diagnostic Accuracy of the Smartphone App Based on the Combination of the Dermatologist’s Evaluation plus the AI Risk-Assessment Scores of Two Independent Medical Devices

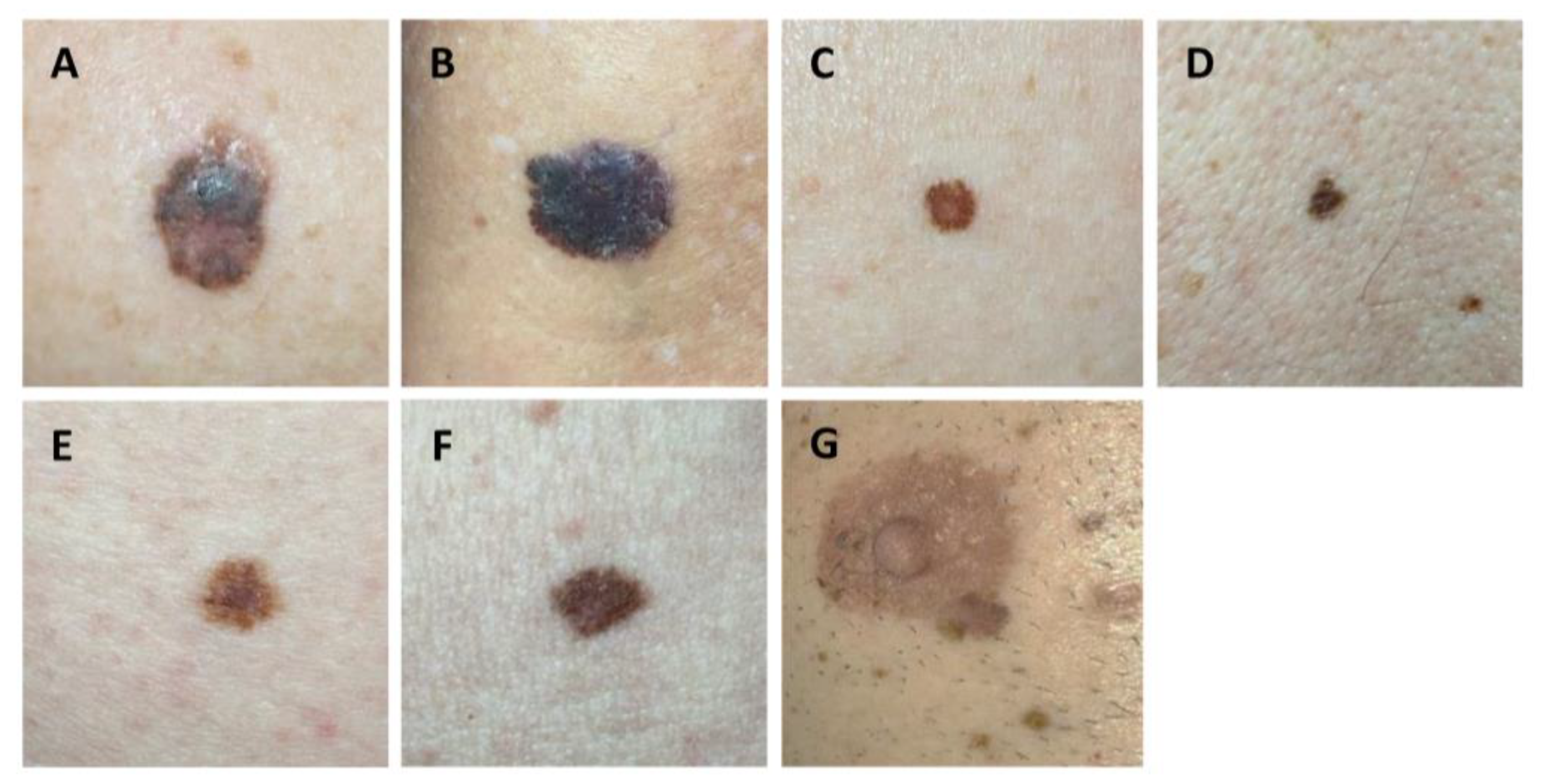

3.2.3. Diagnostic Accuracy of the Smartphone App Based on Histopathology

3.3. Patient Perspective on AI in Melanoma Screening

3.3.1. Confidence in Dermatologists vs. Smartphone App

3.3.2. Trustworthiness of the Smartphone App

3.3.3. Impact of AI vs. Dermatologists’ Examination on Patients’ Fear of Developing Skin Cancer

3.3.4. Patients’ Subjective Assessment of the Accuracy of AI vs. Dermatologists

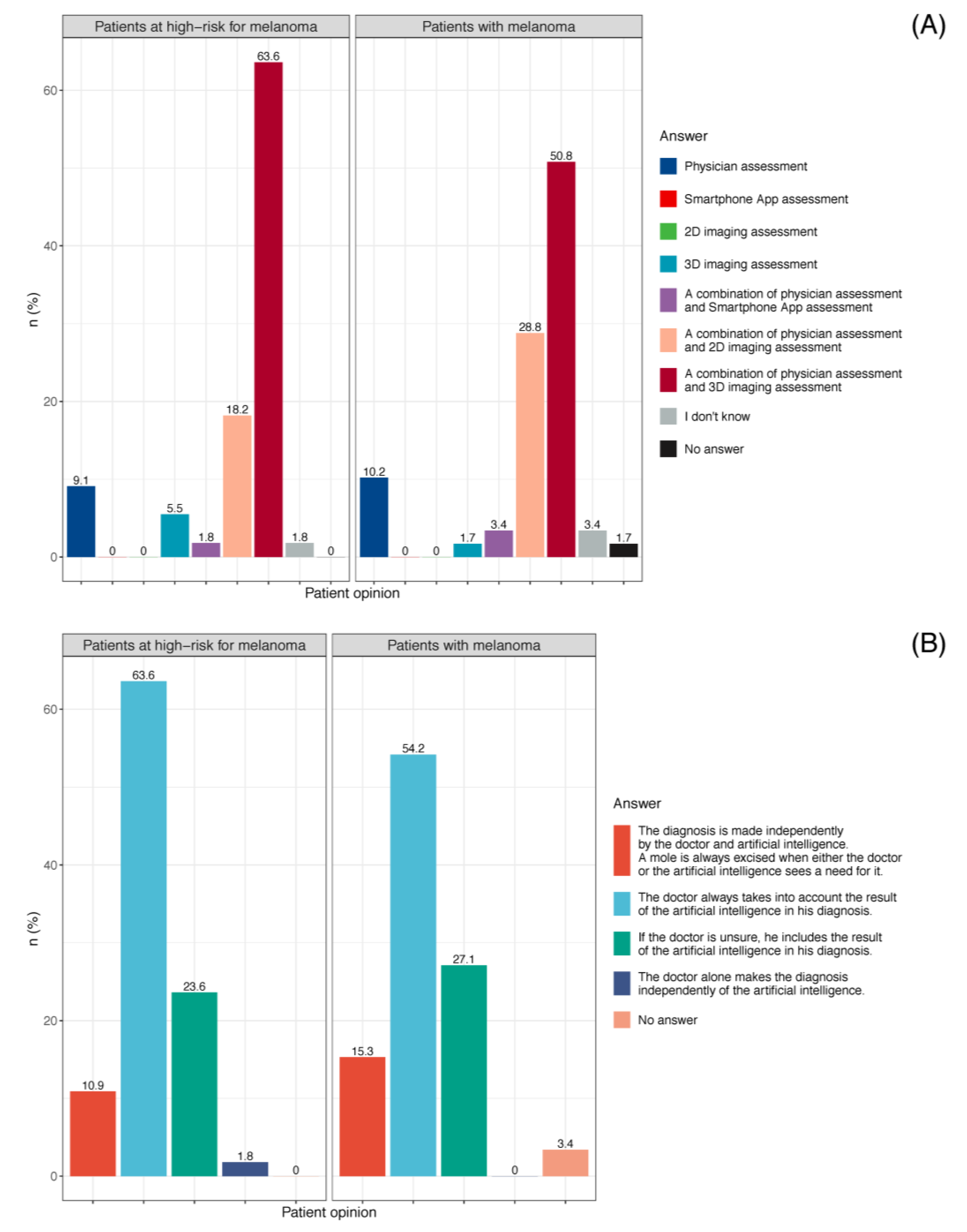

3.3.5. Patient Preference for Skin Cancer Screening

3.3.6. Dermatologists’ Perspective on Smartphone Apps for Melanoma Screening

4. Discussion

4.1. Diagnostic Accuracy and Potential Consequences of the Smartphone App SkinVision®

4.2. The Lay and Dermatologist Perspectives on the Use of Smartphone Apps and Other AI Devices in Melanoma Screening

4.3. Strengths and Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Characteristic | All Patients, N = 114¹ | Patients with Melanoma, N = 59¹ | Patients at High-Risk for Melanoma, N = 55¹ |
|---|---|---|---|
| Age, years (range) | 59 (22–85) | 60 (29–81) | 55 (22–85) |
| Sex, n (%) | | | |
| Female | 58 (51%) | 32 (54%) | 26 (47%) |
| Male | 56 (49%) | 27 (46%) | 29 (53%) |
| Risk profile, n (%) | | | |
| Multiple melanocytic nevi (≥100) and/or dysplastic nevi (≥5) and/or positive family history for melanoma and/or diagnosis of dysplastic nevus syndrome and/or CDKN2A mutation | 55 (48%) | 0 (0%) | 55 (100%) |
| Previously resected melanoma in situ or primary cutaneous melanoma | 57 (50%) | 57 (97%) | 0 (0%) |
| Metastatic melanoma | 2 (1.8%) | 2 (3.4%) | 0 (0%) |
| Positive family history for melanoma, n (%) | 42 (37%) | 11 (19%) | 31 (56%) |
| Frequency of skin cancer screening, n (%) | | | |
| Several times per year | 40 (35%) | 34 (58%) | 6 (11%) |
| Every 12 months | 39 (34%) | 16 (27%) | 23 (42%) |
| Every 1–2 years | 8 (7%) | 4 (6.8%) | 4 (7.3%) |
| Every 2 years | 9 (7.9%) | 2 (3.4%) | 7 (13%) |
| Less than every 2 years | 14 (12%) | 3 (5.1%) | 11 (20%) |
| Never | 4 (3.5%) | 0 (0%) | 4 (7.3%) |
| History of sunburns in childhood, n (%) | 70 (61%) | 32 (54%) | 38 (69%) |
| Frequency of sunburns in childhood (patients with childhood sunburns), n (%) | | | |
| Rarely (less than once per year) | 44 (63%) | 20 (62%) | 24 (63%) |
| Regularly (once per year) | 22 (31%) | 10 (31%) | 12 (32%) |
| Often (more than once per year) | 4 (5.7%) | 2 (6.2%) | 2 (5.3%) |
| History of sunburns in adulthood, n (%) | 39 (34%) | 18 (31%) | 21 (38%) |
| Frequency of sunburns in adulthood (patients with adult sunburns), n (%) | | | |
| Rarely (less than once per year) | 38 (97%) | 18 (100%) | 20 (95%) |
| Regularly (once per year) | 0 (0%) | 0 (0%) | 0 (0%) |
| Often (more than once per year) | 1 (2.6%) | 0 (0%) | 1 (4.8%) |
| Previous tanning in a solarium, n (%) | 38 (33%) | 13 (22%) | 25 (45%) |
| Sunscreen used (SPF), n (%) | | | |
| SPF 6–10 | 2 (1.8%) | 1 (1.7%) | 1 (1.8%) |
| SPF 15–25 | 10 (8.8%) | 3 (5.1%) | 7 (13%) |
| SPF 30–50 | 64 (56%) | 30 (51%) | 34 (62%) |
| SPF 50+ | 38 (33%) | 25 (42%) | 13 (24%) |

| Characteristic | N = 1204¹ |
|---|---|
| Smartphone app SkinVision® | |
| benign | 980 (81%) |
| suspicious | 224 (19%) |
| 2D Imaging FotoFinder ATBM® | |
| benign | 1157 (96%) |
| suspicious | 47 (3.9%) |
| 3D Imaging VECTRA® WB360 | |
| benign | 1165 (97%) |
| suspicious | 39 (3.2%) |
| Dermatologists | |
| benign | 1195 (99%) |
| suspicious | 9 (0.7%) |
| Dermatologists informed about risk assessment scores by FotoFinder ATBM® + VECTRA® WB360 | |
| benign | 1192 (99%) |
| suspicious | 12 (1.0%) |
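The over-detection named in the title can be made concrete with a little arithmetic on the lesion-level counts above (N = 1204). The Python sketch below is illustrative only: the dictionary keys are shortened, hypothetical labels, and the ratio to the dermatologists' ratings is a simple descriptive comparison, not a statistic reported by the authors.

```python
"""Share of lesions flagged as suspicious by each rating method.

Counts are copied from the lesion-level table (N = 1204). The method labels
are shortened, hypothetical identifiers chosen for this sketch.
"""

# (suspicious, benign) counts per rating method
COUNTS: dict[str, tuple[int, int]] = {
    "SkinVision app": (224, 980),
    "2D FotoFinder ATBM": (47, 1157),
    "3D VECTRA WB360": (39, 1165),
    "Dermatologists": (9, 1195),
    "Dermatologists + AI scores": (12, 1192),
}


def suspicious_rate(suspicious: int, benign: int) -> float:
    """Fraction of all rated lesions that a method called suspicious."""
    return suspicious / (suspicious + benign)


def flag_ratio(method: str, reference: str = "Dermatologists") -> float:
    """How many times more lesions `method` flagged than `reference`."""
    return COUNTS[method][0] / COUNTS[reference][0]


if __name__ == "__main__":
    for name, (sus, ben) in COUNTS.items():
        print(f"{name:28s} {sus:4d}/{sus + ben}  {suspicious_rate(sus, ben):.1%}")
    print(f"App vs. dermatologists: {flag_ratio('SkinVision app'):.1f}x as many flags")
```

Run as a script, this shows the app flagging 18.6% of lesions against 0.7% for the dermatologists, roughly 25 times as many suspicious ratings on the same lesion set.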

| Method / Histopathologic Diagnosis | N | Melanocytic Nevus, N = 19¹ | Dysplastic Nevus, N = 20¹ | Melanoma, N = 6¹ | Other*, N = 16¹ |
|---|---|---|---|---|---|
| Smartphone app SkinVision® | 61 | | | | |
| benign | | 13 (68%) | 10 (50%) | 1 (17%) | 10 (62%) |
| suspicious | | 6 (32%) | 10 (50%) | 5 (83%) | 6 (38%) |
| 2D imaging FotoFinder ATBM® | 61 | | | | |
| benign | | 7 (37%) | 11 (55%) | 1 (17%) | 4 (25%) |
| suspicious | | 12 (63%) | 9 (45%) | 5 (83%) | 12 (75%) |
| 3D imaging VECTRA® WB360 | 61 | | | | |
| benign | | 18 (95%) | 9 (45%) | 1 (17%) | 8 (50%) |
| suspicious | | 1 (5.3%) | 11 (55%) | 5 (83%) | 8 (50%) |
| Dermatologists | 61 | | | | |
| benign | | 17 (89%) | 18 (90%) | 1 (17%) | 16 (100%) |
| suspicious | | 2 (11%) | 2 (10%) | 5 (83%) | 0 (0%) |
| Beginner: <2 years’ work experience | 45 | N = 15 | N = 12 | N = 5 | N = 13 |
| benign | | 14 (93%) | 10 (83%) | 1 (20%) | 13 (100%) |
| suspicious | | 1 (6.7%) | 2 (17%) | 4 (80%) | 0 (0%) |
| Intermediate: 2–5 years’ work experience | 5 | N = 2 | N = 3 | N = 0 | N = 0 |
| benign | | 1 (50%) | 3 (100%) | 0 (0%) | 0 (0%) |
| suspicious | | 1 (50%) | 0 (0%) | 0 (0%) | 0 (0%) |
| Experts: >5 years’ work experience | 11 | N = 2 | N = 5 | N = 1 | N = 3 |
| benign | | 2 (100%) | 5 (100%) | 0 (0%) | 3 (100%) |
| suspicious | | 0 (0%) | 0 (0%) | 1 (100%) | 0 (0%) |
| Dermatologists informed about AI scores² | 61 | | | | |
| benign | | 16 (84%) | 17 (85%) | 1 (17%) | 15 (94%) |
| suspicious | | 3 (16%) | 3 (15%) | 5 (83%) | 1 (6.2%) |
| Beginner: <2 years’ work experience | | N = 15 | N = 12 | N = 5 | N = 13 |
| benign | | 13 (87%) | 9 (75%) | 1 (20%) | 12 (92%) |
| suspicious | | 2 (13%) | 3 (25%) | 4 (80%) | 1 (7.7%) |
| Intermediate: 2–5 years’ work experience | | N = 2 | N = 3 | N = 0 | N = 0 |
| benign | | 1 (50%) | 3 (100%) | 0 (0%) | 0 (0%) |
| suspicious | | 1 (50%) | 0 (0%) | 0 (0%) | 0 (0%) |
| Experts: >5 years’ work experience | | N = 2 | N = 5 | N = 1 | N = 3 |
| benign | | 2 (100%) | 5 (100%) | 0 (0%) | 3 (100%) |
| suspicious | | 0 (0%) | 0 (0%) | 1 (100%) | 0 (0%) |
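The histopathology table above can be collapsed into a 2×2 confusion matrix per method to obtain sensitivity, specificity and positive predictive value (PPV). The following Python sketch does this under one loudly stated assumption: histopathologic melanoma is treated as the positive class and every other diagnosis (melanocytic nevus, dysplastic nevus, other) as negative. The article may define its accuracy metrics differently, so the figures below are a recomputation for illustration, not the authors' reported results; the dictionary keys are hypothetical short labels.

```python
"""Melanoma sensitivity, specificity and PPV on the histopathology subset.

ASSUMPTION of this sketch: histopathologic melanoma is the positive class and
every other class (melanocytic nevus, dysplastic nevus, other) is negative.
"""
from dataclasses import dataclass

# Number of excised lesions per histopathologic diagnosis (61 in total).
CLASS_SIZES = {"nevus": 19, "dysplastic": 20, "melanoma": 6, "other": 16}

# Lesions rated "suspicious" per method and diagnosis, copied from the table.
SUSPICIOUS = {
    "SkinVision app": {"nevus": 6, "dysplastic": 10, "melanoma": 5, "other": 6},
    "2D FotoFinder ATBM": {"nevus": 12, "dysplastic": 9, "melanoma": 5, "other": 12},
    "3D VECTRA WB360": {"nevus": 1, "dysplastic": 11, "melanoma": 5, "other": 8},
    "Dermatologists": {"nevus": 2, "dysplastic": 2, "melanoma": 5, "other": 0},
    "Dermatologists + AI scores": {"nevus": 3, "dysplastic": 3, "melanoma": 5, "other": 1},
}


@dataclass(frozen=True)
class Accuracy:
    sensitivity: float  # TP / (TP + FN)
    specificity: float  # TN / (TN + FP)
    ppv: float          # TP / (TP + FP)


def binary_accuracy(flags: dict[str, int], positive: str = "melanoma") -> Accuracy:
    """Collapse per-class 'suspicious' counts into a 2x2 confusion matrix."""
    tp = flags[positive]                        # melanomas rated suspicious
    fn = CLASS_SIZES[positive] - tp             # melanomas rated benign
    fp = sum(n for cls, n in flags.items() if cls != positive)
    negatives = sum(size for cls, size in CLASS_SIZES.items() if cls != positive)
    tn = negatives - fp                         # non-melanomas rated benign
    return Accuracy(tp / (tp + fn), tn / (tn + fp), tp / (tp + fp))


if __name__ == "__main__":
    for name, flags in SUSPICIOUS.items():
        acc = binary_accuracy(flags)
        print(f"{name:28s} sens {acc.sensitivity:.1%}  "
              f"spec {acc.specificity:.1%}  PPV {acc.ppv:.1%}")
```

Under this assumption every method rates 5 of the 6 histologically confirmed melanomas as suspicious (sensitivity 83.3%), so the methods differ mainly in specificity: 40.0% for 2D imaging (22/55), 60.0% for the app (33/55) and 92.7% for unaided dermatologists (51/55).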

| Characteristic | N | Patients with Melanoma, N = 59¹ | Patients at High-Risk for Melanoma, N = 55¹ | p-Value² |
|---|---|---|---|---|
| The following examination was trustworthy: | | | | |
| Smartphone app assessment | 114 | | | 0.3 |
| Yes | | 29 (49%) | 20 (36%) | |
| No | | 5 (8.5%) | 8 (15%) | |
| I don’t know | | 23 (39%) | 22 (40%) | |
| No answer | | 2 (3.4%) | 5 (9.1%) | |
| Dermatologist assessment | 114 | | | |
| Yes | | 59 (100%) | 55 (100%) | |
| No | | 0 (0%) | 0 (0%) | |
| I don’t know | | 0 (0%) | 0 (0%) | |
| No answer | | 0 (0%) | 0 (0%) | |
| 2D TBP assessment | 114 | | | 0.3 |
| Yes | | 52 (88%) | 51 (93%) | |
| No | | 0 (0%) | 0 (0%) | |
| I don’t know | | 7 (12%) | 3 (5.5%) | |
| No answer | | 0 (0%) | 1 (1.8%) | |
| 3D TBP assessment | 114 | | | 0.3 |
| Yes | | 53 (90%) | 50 (91%) | |
| No | | 0 (0%) | 0 (0%) | |
| I don’t know | | 6 (10%) | 3 (5.5%) | |
| No answer | | 0 (0%) | 2 (3.6%) | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jahn, A.S.; Navarini, A.A.; Cerminara, S.E.; Kostner, L.; Huber, S.M.; Kunz, M.; Maul, J.-T.; Dummer, R.; Sommer, S.; Neuner, A.D.; Levesque, M.P.; Cheng, P.F.; Maul, L.V. Over-Detection of Melanoma-Suspect Lesions by a CE-Certified Smartphone App: Performance in Comparison to Dermatologists, 2D and 3D Convolutional Neural Networks in a Prospective Data Set of 1204 Pigmented Skin Lesions Involving Patients’ Perception. Cancers 2022, 14, 3829. https://doi.org/10.3390/cancers14153829