Control of a Drone in Virtual Reality Using MEMS Sensor Technology and Machine Learning

Abstract

1. Introduction

- A remote training simulator for drone flight is proposed, built as a virtual reality application in the Unity development environment. The drone in the simulator is controlled remotely over Wi-Fi using a microcontroller development board that includes a Wi-Fi transmission module. For precise drone control, MEMS sensor technology is used: a bracelet was built that contains a microcontroller and a sensor module, with which the user controls the drone.

- To connect the bracelet to the computer, a user interface was developed in the Visual Studio development environment using the C# programming language. The connection with the virtual reality simulator is also made through this user interface.

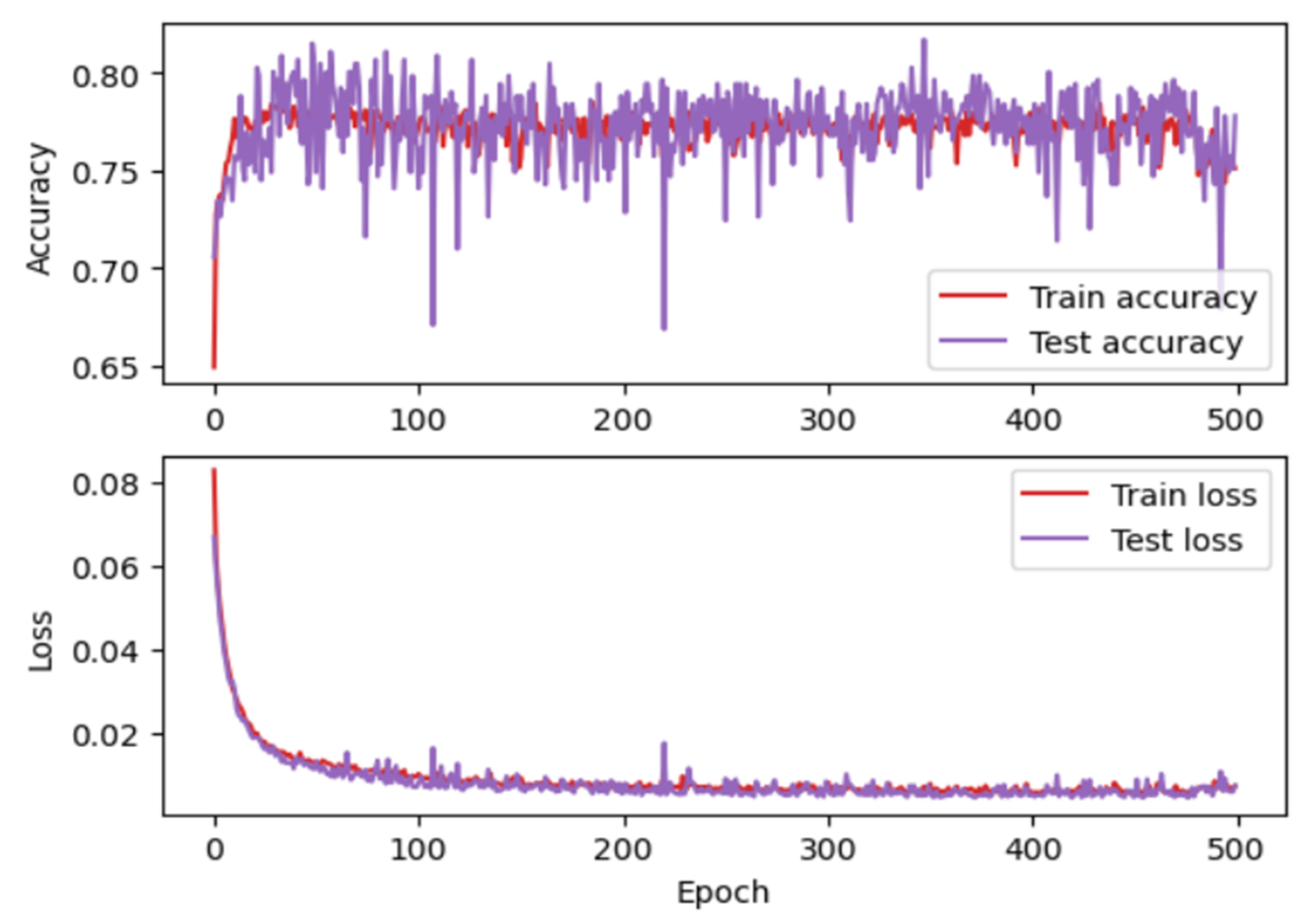

- Machine learning methods are used to help control the drone. Using the Keras library [3] from TensorFlow, an MLP neural network with four inputs and three outputs was implemented to guide the movement of the drone.

2. Background

3. Introduction to MEMS Sensors That Are Used in the Application

- MEMS accelerometer: an electromechanical device that converts mechanical force into an electrical signal. It measures acceleration by measuring a change in capacitance; its microstructure can be seen in Figure 1. A mass attached to a spring is constrained to move along one direction relative to fixed outer plates, so that when acceleration is applied in that direction, the mass moves and the capacitance between the mass and the plates changes. This device can be used in civilian and military applications [23].

- MEMS magnetometer: the operation of this device is based on measuring the earth’s magnetic field using the Hall effect or the magneto-resistive effect. Almost 90% of the sensors on the market work based on the Hall effect. In these sensors, electrons flow from one side of a conductive plate to the other (Figure 2a). If a magnetic field is brought close to the plate, the flow of electrons is disturbed: they are deflected, and negative and positive charges separate to opposite sides of the plate (Figure 2b). If a multimeter is connected across the two sides, a voltage is measured. The other 10% of sensors on the market use the magneto-resistive effect (Figure 3). These sensors use materials that are sensitive to the magnetic field and are usually made of iron and nickel. When these materials are exposed to a magnetic field, their resistance changes [24].

- MEMS gyroscope: this device (Figure 4) measures the angular rate using the Coriolis effect. A mass moves in a certain direction at a certain speed; when an external angular rate is applied (yellow arrow), a force appears (blue arrow) that displaces the mass perpendicular to its motion. As in the accelerometer, this displacement causes a change in capacitance, which is measured and corresponds to a certain angular rate [25].
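The three sensing principles above can be illustrated with their textbook relations: the parallel-plate capacitance C = εA/d for the accelerometer, the Hall voltage V_H = IB/(nqt) for the magnetometer, and the Coriolis force F = −2m(Ω × v) for the gyroscope. The following is a minimal sketch under idealized assumptions; the function names and all numeric values are hypothetical and are not taken from the sensor used in this work.

```python
EPSILON_0 = 8.8541878128e-12         # vacuum permittivity, F/m
ELEMENTARY_CHARGE = 1.602176634e-19  # charge of one electron, C


def plate_capacitance(area_m2, gap_m):
    """Capacitance of an ideal parallel-plate capacitor in vacuum: C = eps0 * A / d."""
    return EPSILON_0 * area_m2 / gap_m


def differential_capacitance(area_m2, rest_gap_m, displacement_m):
    """Accelerometer: the mass sits between two fixed plates. Moving it by
    `displacement_m` shrinks one gap and widens the other; return C1 - C2."""
    if abs(displacement_m) >= rest_gap_m:
        raise ValueError("displacement must be smaller than the rest gap")
    c1 = plate_capacitance(area_m2, rest_gap_m - displacement_m)
    c2 = plate_capacitance(area_m2, rest_gap_m + displacement_m)
    return c1 - c2


def hall_voltage(current_a, field_t, carrier_density_m3, thickness_m):
    """Magnetometer: Hall voltage across the plate, V_H = I * B / (n * q * t)."""
    return current_a * field_t / (
        carrier_density_m3 * ELEMENTARY_CHARGE * thickness_m
    )


def coriolis_force(mass_kg, velocity_m_s, angular_rate_rad_s):
    """Gyroscope: Coriolis force F = -2 m (omega x v) on the moving mass."""
    wx, wy, wz = angular_rate_rad_s
    vx, vy, vz = velocity_m_s
    cross = (wy * vz - wz * vy, wz * vx - wx * vz, wx * vy - wy * vx)
    return tuple(-2.0 * mass_kg * c for c in cross)


# Hypothetical example: mass moving along X while the device rotates about Z.
force = coriolis_force(1.0, (1.0, 0.0, 0.0), (0.0, 0.0, 1.0))
```

In the example call, the resulting force points along the Y axis, perpendicular to the motion of the mass, which is the displacement direction the gyroscope detects capacitively.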

4. Materials and Methods

4.1. Components and Connections in the System

- (1) Device power supply: 5 V DC;

- (2) Microcontroller ESP32, a development board with the following specifications:

  - 240 MHz dual-core microcontroller, Tensilica LX6 (Texas Instruments, Dallas, TX, USA);

  - 520 KB SRAM memory;

  - Bluetooth dual mode (classic and BLE);

  - 16 MB flash memory;

  - Working temperature: −40 °C to 125 °C;

  - PCB antenna (has connector for external antenna);

  - Wireless transfer rates: 150 Mbps@11n HT40, 72 Mbps@11n HT20, 54 Mbps@11g and 11 Mbps@11b;

  - 135 Mbps UDP connectivity;

  - Average operating current: 80 mA;

  - Wi-Fi: 802.11 b/g/n (802.11n up to 150 Mbps);

  - Bluetooth: Bluetooth v4.2 BR/EDR and BLE;

- (3) Absolute orientation sensor, IMU BNO055 (Bosch Sensortec, Beijing, China), with the following characteristics:

  - Chip: BNO055;

  - Built-in nine-axis sensor and MCU resources;

  - Power supply: 3–5 V (internal low-dropout voltage regulator);

  - Absolute orientation (Euler vector, 100 Hz): orientation data on three axes based on a 360° sphere;

  - Absolute orientation (quaternion, 100 Hz): four-point quaternion output for more accurate data handling;

  - Angular velocity vector (100 Hz): three axes for rotational speed in rad/s;

  - Acceleration vector (100 Hz): three axes for acceleration (gravity + linear motion) in m/s²;

  - Magnetic field strength vector (20 Hz): three axes for magnetic field strength in microtesla (µT);

  - Linear acceleration vector (100 Hz): three axes for linear acceleration (acceleration minus gravity) in m/s²;

  - Gravity vector (100 Hz): three axes for gravitational acceleration (minus any motion) in m/s²;

  - Communication mode: standard I2C (IIC)/serial communication protocol.
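Since the sensor provides absolute orientation both as a quaternion and as Euler angles, the relation between the two outputs can be sketched as follows. This is an illustrative conversion that assumes the common aerospace ZYX (roll, pitch, yaw) convention; the axis convention of the sensor's own Euler output may differ, and the function name is hypothetical.

```python
import math


def quaternion_to_euler(w, x, y, z):
    """Convert a quaternion (w, x, y, z) to (roll, pitch, yaw) in degrees,
    assuming the ZYX rotation order."""
    norm = math.sqrt(w * w + x * x + y * y + z * z)
    if norm == 0.0:
        raise ValueError("zero-length quaternion")
    # Normalize so that small sensor scaling errors do not distort the angles.
    w, x, y, z = (c / norm for c in (w, x, y, z))

    roll = math.atan2(2.0 * (w * x + y * z), 1.0 - 2.0 * (x * x + y * y))
    # Clamp to [-1, 1] so rounding errors cannot push asin out of its domain.
    sin_pitch = max(-1.0, min(1.0, 2.0 * (w * y - z * x)))
    pitch = math.asin(sin_pitch)
    yaw = math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
    return tuple(math.degrees(angle) for angle in (roll, pitch, yaw))


# A quaternion describing a quarter turn about the Z axis.
roll, pitch, yaw = quaternion_to_euler(0.7071067811865476, 0.0, 0.0, 0.7071067811865476)
```

The quaternion form avoids the singularity that Euler angles have when the pitch approaches ±90°, which is one reason a quaternion output can be easier to process reliably.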

4.2. Software Application Development

4.3. C# Software Application

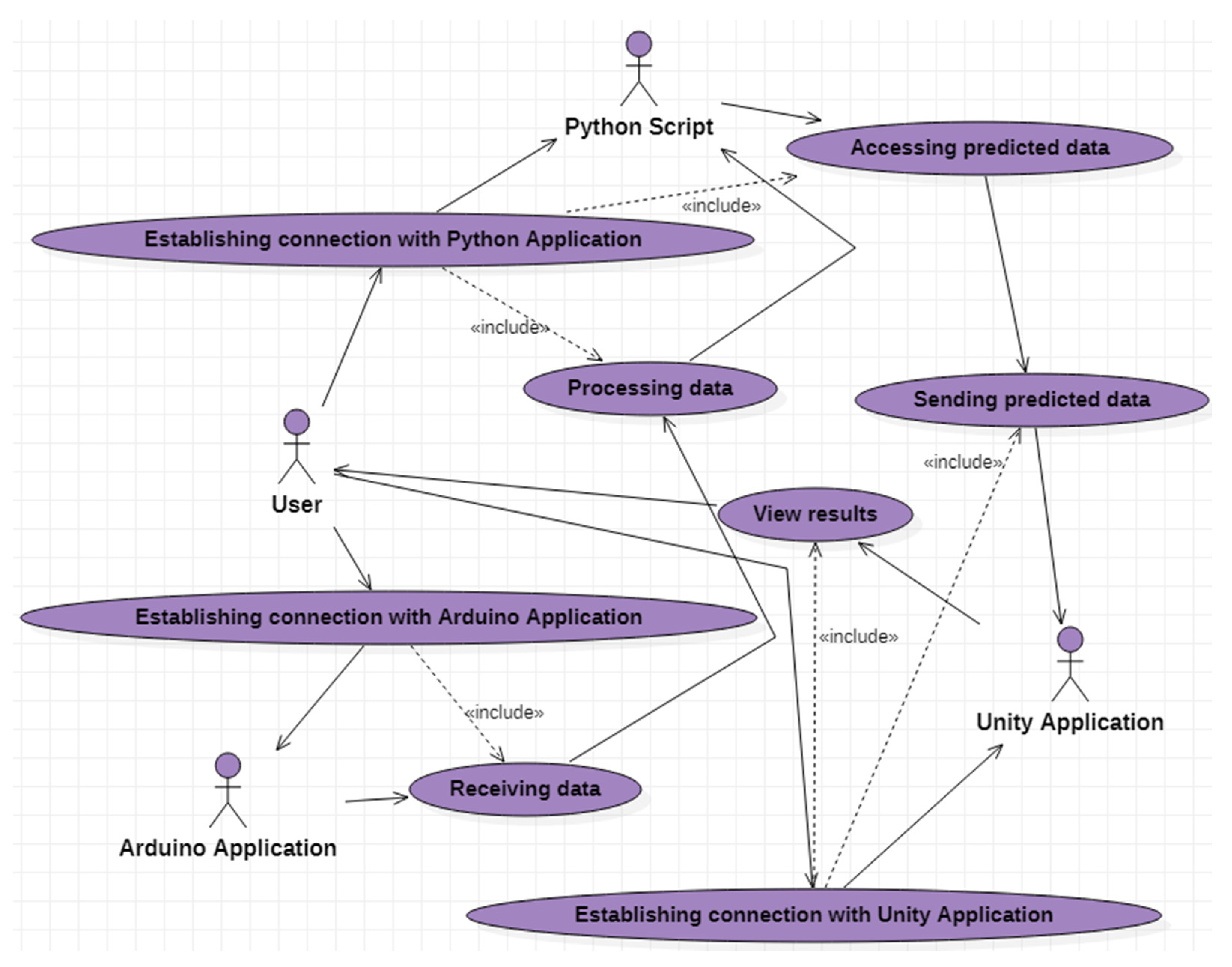

- eight use cases that describe the functionalities of the software application;

- four actors:

  - the user, who represents the external entity with which the C# application interacts;

  - the application implemented in Arduino;

  - the Python script;

  - the Unity application;

- relations between the user and the use cases (association relations), as well as relations between the use cases (dependency relations).

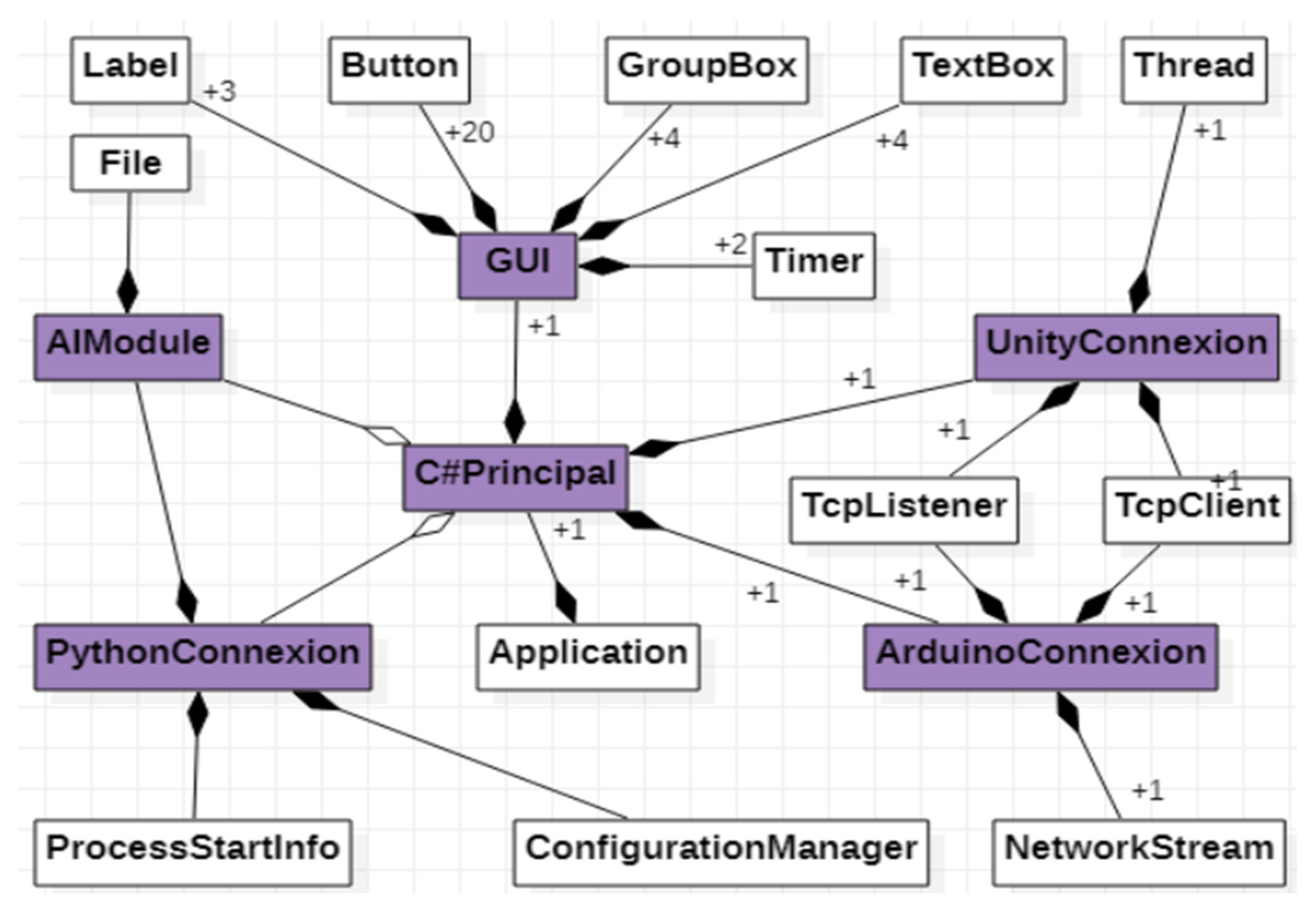

- GUI class: allows interaction with the application user.

- ArduinoConnexion class: makes the connection with the application implemented in Arduino, allowing the sensor values to be read.

- PythonConnexion class: makes the connection with the script implemented in Python, allowing both the transmission of input data to the artificial neural network [34] and the retrieval of the values predicted by the network.

- UnityConnexion class: makes the connection with the virtual application implemented in Unity.

- C#Principal class: represents the main class of the application, composed of one object of each previously specified class.

4.3.1. Graphical User Interface

- First, the type of control is chosen, which can be manual or automatic. For manual control, press the “Manual” button (Figure 11, 1); this activates the user interface for manual control of the drone, while automatic control is inactive. The following commands are used for manual drone control:

  - to begin, press the “ConnectVirtualRealityApp” button (Figure 11, 2) to make the connection between the C# application that contains the user interface and the virtual reality application via the TCP/IP protocol;

  - pressing the “StartApp” button (Figure 11, 3) starts the data communication between the C# application and the virtual reality application;

  - the following buttons (Figure 11, 4) control the drone:

    - when the “up” button is pressed, the drone rises in the vertical plane;

    - when the “down” button is pressed, the drone descends in the vertical plane;

    - when the “front” button is pressed, the drone moves forward in a horizontal plane;

    - to move the drone back horizontally, the “down” button is pressed;

    - when the “turnLeft” button is pressed, the drone rotates counterclockwise;

    - when the “turnRight” button is pressed, the drone rotates clockwise;

    - via the “clockwise rotation” button, the drone makes a clockwise turn;

    - via the “counterclockwise rotation” button, the drone makes a counterclockwise turn.

- pressing the “ConnectionESP32/DisconnectionESP32” button (Figure 12, 6) makes a Wi-Fi connection between the C# program containing the user interface and the Arduino program running on the ESP32 microcontroller;

- pressing the “Start/Stop” button (Figure 12, 7) starts the data transfer via Wi-Fi between the C# application and the Arduino application;

- before the sensor device is used, the sensors must first be calibrated; once they have been calibrated, the status “ON” is displayed on a yellow background in the user interface (Figure 12, 8);

- after the sensors have been calibrated, they can be used to orient the drone on the X, Y and Z axes (Figure 12, 9);

- to connect the C# application to the virtual reality application via the TCP/IP protocol, press the “ConnectionVirtualRealityApp/DisconnectionVirtualRealityApp” button (Figure 12, 10);

- to start the data transfer between the C# application and the virtual reality application, press the “StartApp/StopApp” button (Figure 12, 11);

- to control the drone directly through the sensor device attached to the upper limb, without using neural networks, the “No RN” button is used (Figure 12, 12);

- when the “RN” button is pressed (Figure 12, 13), the data received from the sensors are first passed through the neural network algorithm and are then sent to the virtual reality application to help control the drone;

- the “Level” field (Figure 12, 14) is used to display the level traveled by the drone.
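Because TCP delivers a continuous byte stream rather than discrete messages, orientation values sent between the applications need some form of message framing. The sketch below illustrates one simple option, newline-delimited text lines; the message format (three comma-separated angles) and all names are assumptions made for illustration and are not the format used by the C# and Unity applications.

```python
def encode_orientation(x_deg, y_deg, z_deg):
    """Serialize one orientation sample as a newline-terminated ASCII line."""
    return f"{x_deg:.2f},{y_deg:.2f},{z_deg:.2f}\n".encode("ascii")


class LineDecoder:
    """Reassemble complete samples from arbitrary chunks of a byte stream."""

    def __init__(self):
        self._buffer = b""

    def feed(self, chunk):
        """Add received bytes; return the samples completed by this chunk."""
        self._buffer += chunk
        # Everything before the last newline is complete; the rest is kept
        # in the buffer until more bytes arrive.
        *lines, self._buffer = self._buffer.split(b"\n")
        return [
            tuple(float(value) for value in line.decode("ascii").split(","))
            for line in lines
            if line
        ]


# Simulate a stream that is split in the middle of the first message.
stream = encode_orientation(1.0, -2.5, 90.0) + encode_orientation(0.0, 0.0, 0.0)
decoder = LineDecoder()
first = decoder.feed(stream[:5])
second = decoder.feed(stream[5:])
```

With a real connection, the sender would pass the encoded bytes to `socket.sendall` and the receiver would feed each result of `socket.recv` to the decoder, so that samples are recovered correctly regardless of how the network splits the data.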

4.4. Arduino Software Application

- Wire.h: through this library, the IMU sensor BNO055 communicates with the ESP32 development board via the I2C protocol;

- WiFi.h: library used to connect the microcontroller development board to the internet via the TCP/IP protocol;

- Adafruit_Sensor.h, Adafruit_BNO055.h: these libraries contain methods that are used in writing the program for obtaining data from the BNO055 sensor;

- utility/imumaths.h: library containing mathematical methods for inertial measurement units.
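The sensors must be calibrated before use, and the BNO055 reports its calibration state as a single status byte with a two-bit level (0 to 3) for the system, gyroscope, accelerometer and magnetometer. The following sketch shows how such a byte can be decoded. It is written in Python for illustration rather than as part of the Arduino program, the function names are hypothetical, and the bit layout is assumed from the BNO055 calibration status register and should be checked against the datasheet.

```python
def decode_calibration_status(calib_stat):
    """Split the calibration status byte into its four two-bit levels.

    Assumed layout: bits 7-6 system, 5-4 gyroscope, 3-2 accelerometer,
    1-0 magnetometer; level 3 means fully calibrated.
    """
    if not 0 <= calib_stat <= 0xFF:
        raise ValueError("status must fit in one byte")
    return {
        "system": (calib_stat >> 6) & 0x03,
        "gyroscope": (calib_stat >> 4) & 0x03,
        "accelerometer": (calib_stat >> 2) & 0x03,
        "magnetometer": calib_stat & 0x03,
    }


def is_fully_calibrated(calib_stat):
    """Return True only when every subsystem reports level 3."""
    return all(level == 3 for level in decode_calibration_status(calib_stat).values())


# Example: system and gyroscope are ready, the other two sensors are not yet.
status = decode_calibration_status(0b11110100)
```

A check of this kind could drive the calibration indicator in the user interface, switching it on only when all four levels have reached their maximum.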

4.5. Python Software Application

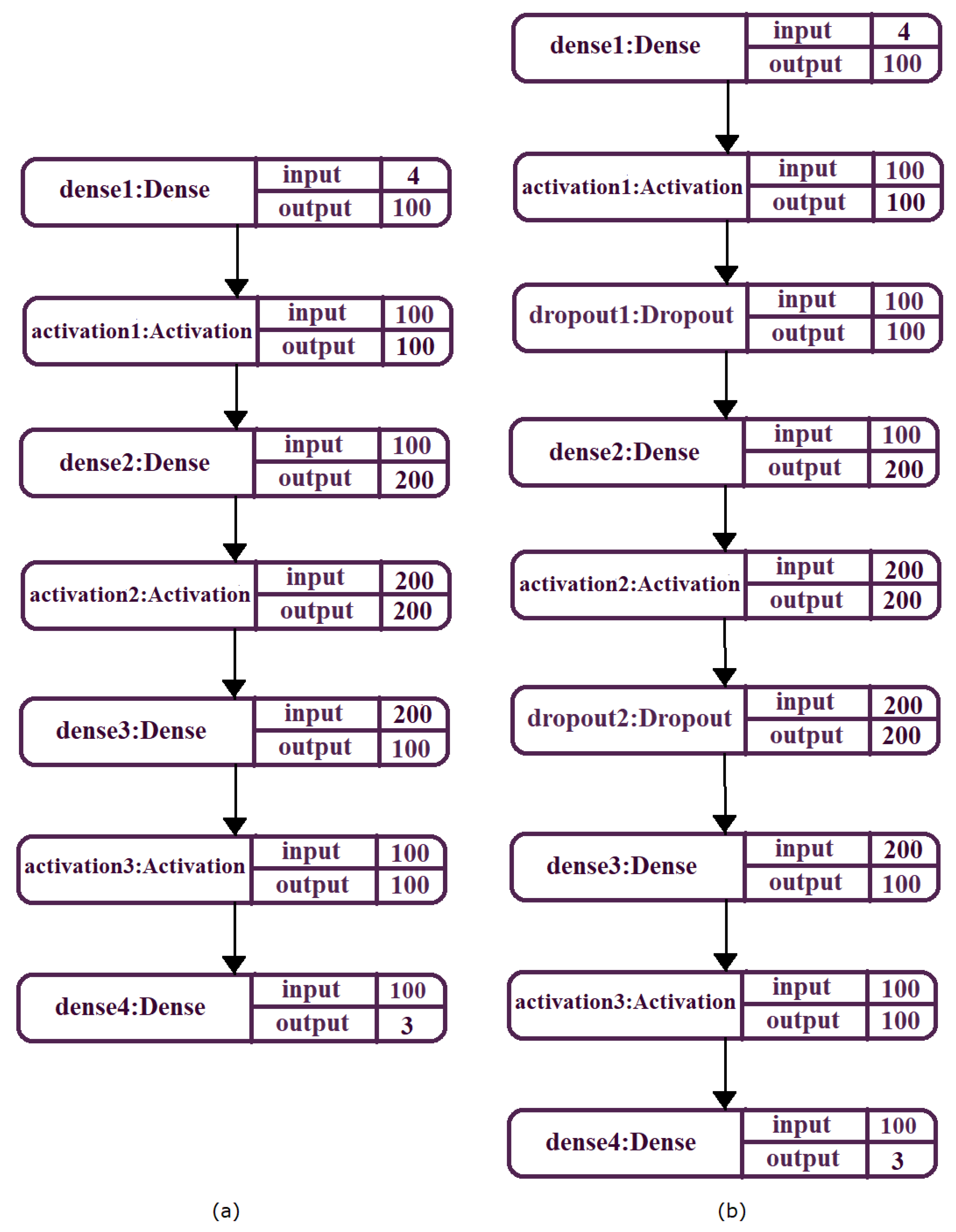

- a layer corresponding to the input data;

- a layer corresponding to the output data;

- one or more hidden layers that represent the computational engine of the MLP [38] network.
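The layered structure listed above can be sketched as a forward pass through a small MLP with four inputs and three outputs, the sizes stated in the Introduction. This is a dependency-free illustration and not the trained Keras model: the single hidden layer of eight neurons, the tanh activation, the linear output and the random weights are all assumptions.

```python
import math
import random


def init_layer(n_in, n_out, rng):
    """Return (weights, biases): small random weights and zero biases."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def dense(inputs, weights, biases, activation=None):
    """One fully connected layer: activation(W . x + b) for each neuron."""
    outputs = []
    for row, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(row, inputs)) + bias
        outputs.append(activation(total) if activation else total)
    return outputs


def mlp_forward(inputs, layers):
    """Propagate the inputs through all layers.

    Hidden layers use tanh; the last (output) layer is left linear.
    """
    values = list(inputs)
    for index, (weights, biases) in enumerate(layers):
        is_output = index == len(layers) - 1
        values = dense(values, weights, biases, None if is_output else math.tanh)
    return values


rng = random.Random(0)  # fixed seed so the sketch is reproducible
layers = [init_layer(4, 8, rng), init_layer(8, 3, rng)]  # 4 -> 8 -> 3
prediction = mlp_forward([0.1, -0.2, 0.3, 0.0], layers)
```

In Keras, the same topology would be expressed as a stack of `Dense` layers, with the weights obtained by training instead of random initialization.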

4.6. Unity Application

- position 1: the drone remains in a static position above the earth’s surface;

- position 2: moving the drone in the forward direction;

- position 3: lifting the drone vertically;

- position 4: lowering the drone vertically;

- position 5: rotating the drone in the counterclockwise direction;

- position 6: rotating the drone in the clockwise direction;

- position 7: turning the drone in the counterclockwise direction;

- position 8: moving the drone in the forward direction;

- position 9: turning the drone in the clockwise direction;

- position 10: moving the drone in the forward direction.
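The ten positions above can be represented as an ordered list of maneuvers. The sketch below assumes that the positions are performed one after another and that progress advances only when the expected maneuver is carried out; this sequencing logic and all names are hypothetical and do not describe the Unity implementation.

```python
from enum import Enum


class Maneuver(Enum):
    HOVER = "hover"
    FORWARD = "forward"
    UP = "up"
    DOWN = "down"
    ROTATE_CCW = "rotate counterclockwise"
    ROTATE_CW = "rotate clockwise"
    TURN_CCW = "turn counterclockwise"
    TURN_CW = "turn clockwise"


# Positions 1 to 10, in the order listed in the text.
ROUTE = [
    Maneuver.HOVER, Maneuver.FORWARD, Maneuver.UP, Maneuver.DOWN,
    Maneuver.ROTATE_CCW, Maneuver.ROTATE_CW, Maneuver.TURN_CCW,
    Maneuver.FORWARD, Maneuver.TURN_CW, Maneuver.FORWARD,
]


class RouteTracker:
    """Track which position of the route is expected next."""

    def __init__(self, route):
        self._route = list(route)
        self._index = 0

    @property
    def current_position(self):
        """1-based number of the position to be performed next."""
        return self._index + 1

    @property
    def finished(self):
        return self._index >= len(self._route)

    def report(self, maneuver):
        """Advance if `maneuver` is the expected one; return True if it was."""
        if self.finished or maneuver is not self._route[self._index]:
            return False
        self._index += 1
        return True


tracker = RouteTracker(ROUTE)
tracker.report(Maneuver.HOVER)  # position 1 completed
tracker.report(Maneuver.UP)     # ignored: position 2 expects FORWARD
```

After these two calls, `tracker.current_position` is 2, since only the first maneuver matched the route.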

4.7. Experimental Validation

4.7.1. Participants

4.7.2. Performance Evaluation

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Zhu, J.; Liu, X.; Shi, Q.; He, T.; Sun, Z.; Guo, X.; Liu, W.; Sulaiman, O.B.; Dong, B.; Lee, C. Development Trends and Perspectives of Future Sensors and MEMS/NEMS. Micromachines 2020, 11, 7.

2. Fraune, M.R.; Khalaf, A.S.; Zemedie, M.; Pianpak, P.; Mianji, Z.N.; Alharthi, S.A.; Dolgov, I.; Hamilton, B.; Tran, S.; Toups, Z.O. Developing Future Wearable Interfaces for Human-Drone Teams through a Virtual Drone Search Game. Int. J. Hum. Comput. Stud. 2021, 147, 102573.

3. Ullo, S.L.; Del Rosso, M.P.; Sebastianelli, A.; Puglisi, E.; Bernardi, M.L.; Cimitile, M. How to develop your network with Python and Keras. Artif. Intell. Appl. Satell. Based Remote Sens. Data Earth Obs. 2021, 98, 131–158.

4. Covaciu, F.; Pisla, A.; Vaida, C.; Gherman, B.; Pisla, D. Development of a Virtual Reality Simulator for a Lower Limb Rehabilitation. In Proceedings of the 2020 IEEE International Conference on Automation, Quality and Testing, Robotics (AQTR), Cluj-Napoca, Romania, 21–23 May 2020; pp. 1–6.

5. Covaciu, F.; Gherman, B.; Pisla, A.; Carbone, G.; Pisla, D. Rehabilitation System with Integrated Visual Stimulation. In New Trends in Mechanism and Machine Science, Proceedings of the European Conference on Mechanism Science (EuCoMeS); Springer: Cham, Switzerland, 2020; pp. 131–137.

6. Li, Y.; Karim, M.M.; Qin, R. A Virtual Reality-based Training and Assessment System for Bridge Inspectors with an Assistant Drone. arXiv 2021, arXiv:2109.02705v3.

7. Koç, D.; Seçkin, A.Ç.; Satı, Z.E. Evaluation of Participant Success in Gamified Drone Training Simulator Using Brain Signals and Key Logs. Brain Sci. 2021, 11, 1024.

8. Nguyen, V.T.; Jung, K.; Dang, T. DroneVR: A Web Virtual Reality Simulator for Drone Operator. In Proceedings of the International Conference on Artificial Intelligence and Virtual Reality (AIVR), San Diego, CA, USA, 9–11 December 2019; pp. 257–262.

9. Rognon, C.; Mintchev, S.; Agnola, F.; Cherpillod, A.; Atienza, D.; Floreano, D. FlyJacket: An Upper Body Soft Exoskeleton for Immersive Drone Control. IEEE Robot. Autom. Lett. 2018, 3, 2362–2369.

10. Covaciu, F.; Pisla, A.; Iordan, A.E. Development of a Virtual Reality Simulator for an Intelligent Robotic System Used in Ankle Rehabilitation. Sensors 2021, 21, 1537.

11. Jiang, C.; Chen, Y.; Chen, S.; Bo, Y.; Li, W.; Tian, W.; Guo, J. A Mixed Deep Recurrent Neural Network for MEMS Gyroscope Noise Suppressing. Electronics 2019, 8, 181.

12. Rybarczyk, D. Application of the MEMS Accelerometer as the Position Sensor in Linear Electrohydraulic Drive. Sensors 2021, 21, 1479.

13. Nevlydov, I.; Filipenko, O.; Volkova, M.; Ponomaryova, G. MEMS-Based Inertial Sensor Signals and Machine Learning Methods for Classifying Robot Motion. In Proceedings of the Second International Conference on Data Stream Mining & Processing (DSMP), Lviv, Ukraine, 21–25 August 2018; pp. 13–16.

14. Xing, H.; Hou, B.; Lin, Z.; Guo, M. Modeling and Compensation of Random Drift of MEMS Gyroscopes Based on Least Squares Support Vector Machine Optimized by Chaotic Particle Swarm Optimization. Sensors 2017, 17, 2335.

15. Tsinganos, P.; Skodras, A. On the Comparison of Wearable Sensor Data Fusion to a Single Sensor Machine Learning Technique in Fall Detection. Sensors 2018, 18, 592.

16. Vaida, C.; Birlescu, I.; Pisla, A.; Ulinici, I.; Tarnita, D.; Carbone, G.; Pisla, D. Systematic Design of a Parallel Robot for Lower Limb Rehabilitation. IEEE Access 2020, 8, 34522–34537.

17. Tucan, P.; Gherman, B.; Major, K.; Vaida, C.; Major, Z.; Plitea, N.; Carbone, G.; Pisla, D. Fuzzy Logic-Based Risk Assessment of a Parallel Robot for Elbow and Wrist Rehabilitation. Int. J. Environ. Res. Public Health 2020, 17, 654.

18. Major, Z.Z.; Vaida, C.; Major, K.A.; Tucan, P.; Simori, G.; Banica, A.; Brusturean, E.; Burz, A.; Craciunas, R.; Ulinici, I.; et al. The Impact of Robotic Rehabilitation on the Motor System in Neurological Diseases. A Multimodal Neurophysiological Approach. Int. J. Environ. Res. Public Health 2020, 17, 6557.

19. Tucan, P.; Vaida, C.; Ulinici, I.; Banica, A.; Burz, A.; Pop, N.; Birlescu, I.; Gherman, B.; Plitea, N.; Antal, T.; et al. Optimization of the ASPIRE Spherical Parallel Rehabilitation Robot Based on Its Clinical Evaluation. Int. J. Environ. Res. Public Health 2021, 18, 3281.

20. Esteves, R.A.; Wang, C.; Kraft, M. Python-Based Open-Source Electro-Mechanical Co-Optimization System for MEMS Inertial Sensors. Micromachines 2022, 13, 1.

21. Smart Sensor: BNO055. Available online: www.bosch-sensortec.com/products/smart-sensors/bno055/ (accessed on 16 December 2021).

22. Odemis, E.; Baysal, C.V. Development of a participation assessment system based on multimodal evaluation of user responses for upper limb rehabilitation. Biomed. Signal Process. Control 2021, 70, 103066.

23. Dong, X.; Huang, Y.; Lai, P.; Huang, Q.; Su, W.; Li, S.; Xu, W. Research on Decomposition of Offset in MEMS Capacitive Accelerometer. Micromachines 2021, 12, 1000.

24. Wu, L.; Tian, Z.; Ren, D.; You, Z. A Miniature Resonant and Torsional Magnetometer Based on Lorentz Force. Micromachines 2018, 9, 666.

25. Gu, H.; Su, W.; Zhao, B.; Zhou, H.; Liu, X. A Design Methodology of Digital Control System for MEMS Gyroscope Based on Multi-Objective Parameter Optimization. Micromachines 2020, 11, 75.

26. Grattarola, D.; Alippi, C. Graph Neural Networks in TensorFlow and Keras with Spectral. IEEE Comput. Intell. Mag. 2021, 16, 99–106.

27. Awar, N.; Zhu, S.; Biros, G.; Gligoric, M. A performance portability framework for Python. In Proceedings of the ACM International Conference on Supercomputing, Virtual Event USA, 14–17 June 2021; pp. 467–478.

28. Rob, R.; Tirian, G.O.; Panoiu, C. Intelligent Acquisition System used in Mechanical Laboratory. In Proceedings of the International Conference on Circuits, Systems, Communications and Computers, Corfu, Greece, 14–17 July 2016; p. 76.

29. Padaliya, K. C# Programming with .Net Framework; Bharti Publication: New Delhi, India, 2019.

30. Iordan, A.; Panoiu, M.; Muscalagiu, I.; Rob, R. Object Oriented Development of an Interactive Software for Studying of Circle using UML. In Proceedings of the 5th European Computing Conference, Paris, France, 28–30 April 2011; pp. 291–296.

31. Skeet, J.; Lippert, E. C# in Depth; Manning Publications: Shelter Island, NY, USA, 2019.

32. Ivanova, L.S.; Sokolov, D.A.; Zmeev, O.A. UML Representation of Object-Oriented Design Antipatterns. In Proceedings of the International Conference on Information Technology, Amman, Jordan, 14–15 July 2021; p. 170653.

33. Sergievskiy, M.; Kirpichnikova, K. Optimizing UML Class Diagrams. ITM Web Conf. 2018, 18, 03003.

34. Panoiu, C.; Panoiu, M.; Muscalagiu, I.; Iordan, A. Visual Interactive Environment for Study the Power Electronics using PSCAD-EMTDC Simulation Program. Comput. Appl. Eng. Educ. 2010, 18, 469–475.

35. Iordan, A.E. Usage of Stacked Long Short-Term Memory for Recognition of 3D Analytic Geometry Elements. In Proceedings of the 14th International Conference on Agents and Artificial Intelligence, Lisbon, Portugal, 3–5 February 2022; pp. 745–752.

36. Panoiu, M.; Ghiormez, L.; Panoiu, C.; Iordan, A. A Numerical Modelling of Nonlinear Load Behaviour using Artificial Neural Networks. In Proceedings of the 11th International Conference of Numerical Analysis and Applied Mathematics, Rhodes, Greece, 21–27 September 2013; pp. 1337–1340.

37. Cuntan, C.D.; Baciu, I.; Osaci, M. Operational Study of a Frequency Converter with a Control sequence utilizing Xilinx Software. Acta Polytech. Hung. 2015, 12, 201–212.

38. Stoica, A.; Kadar, T.; Lemnaru, C.; Potolea, R.; Dinsoreanu, M. Intend Detection and Slot Filling with Capsule Net Architectures for a Romanian Home Assistant. Sensors 2021, 21, 1230.

39. Jin, H.; Song, Q.; Hu, X. Auto-Keras: An Efficient Neural Architecture Search System. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 1946–1956.

40. Jha, A.; Ruwali, A.; Prakash, K.; Kanagachidambaresan, G. Tensorflow basics. Innov. Commun. Comput. 2021, 11, 5–13.

41. Hunt, J. Introduction to Matplotlib. In Advanced Guide to Python 3 Programming; Springer: Cham, Switzerland, 2019; Volume 5, pp. 35–42.

42. Czako, Z.; Sebestyen, G.; Hangan, A. AutomaticAI—A hybrid approach for automatic artificial intelligence algorithm selection and hyperparameter tuning. Expert Syst. Appl. 2021, 182, 115225.

| Subject | Age | Gender | Forearm Length (mm) |
|---|---|---|---|
| 1 | 31 | m | 265.50 |
| 2 | 27 | f | 270 |
| 3 | 35 | m | 260 |
| 4 | 42 | f | 260 |
| 5 | 41 | m | 270.50 |
| 6 | 42 | m | 270 |
| 7 | 36 | m | 260 |
| 8 | 30 | m | 250 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Covaciu, F.; Iordan, A.-E. Control of a Drone in Virtual Reality Using MEMS Sensor Technology and Machine Learning. Micromachines 2022, 13, 521. https://doi.org/10.3390/mi13040521
