A Class-Aware Unsupervised Domain Adaptation Framework for Cross-Continental Crop Classification with Sentinel-2 Time Series

Highlights

- A novel spatio-temporal feature extractor that incorporates a class-aware feature alignment strategy effectively mitigates the domain shift challenge in cross-continental adaptation of crop classification.

- A new unsupervised framework shows robust cross-continental adaptation on difficult categories, boosting the Macro F1-score from 65.50% to 96.56%.

- This new approach makes the model more reliable, providing a foundation for achieving high accuracy across diverse agricultural systems.

- This robust performance makes it practical to automatically map large crop areas without needing costly local data, which helps support global food security assessments.

Abstract

1. Introduction

- Architectural Contribution: We propose a novel spatio-temporal feature extractor named PSAE-LTAE. This architecture innovatively incorporates a Pixel-Set Attention Encoder (PSAE) based on a self-attention mechanism. Compared to traditional statistical pooling methods, it can more robustly extract parcel representations, effectively mitigating the interference from mixed pixels and noise.

- Methodological Contribution: We propose and validate a class-aware MMD alignment strategy. We demonstrate the limitations of global alignment in crop classification tasks and innovatively refine the application of MMD loss from the domain level to the category level. This strategy significantly enhances alignment precision, effectively mitigating negative transfer, and is key to achieving high-performance cross-domain classification.

- Empirical Contribution: We thoroughly validate the framework’s effectiveness through a cross-continental, cross-year experiment. In a challenging transfer task from the US study area to Wensu, Xinjiang, China, our method (PLCM) outperforms multiple state-of-the-art UDA methods. Furthermore, comprehensive ablation studies systematically demonstrate the necessity and superiority of each innovative component.

2. Materials

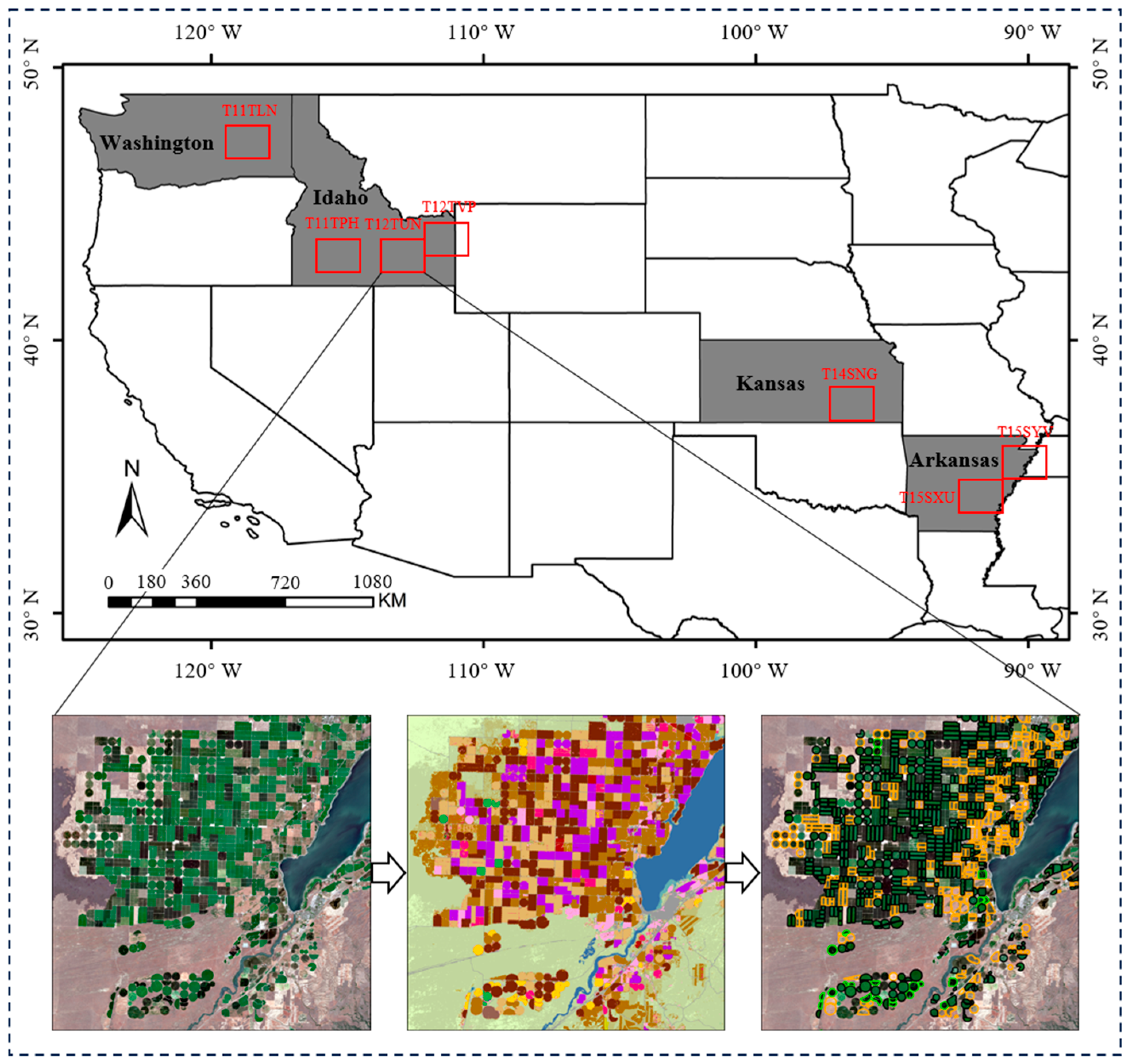

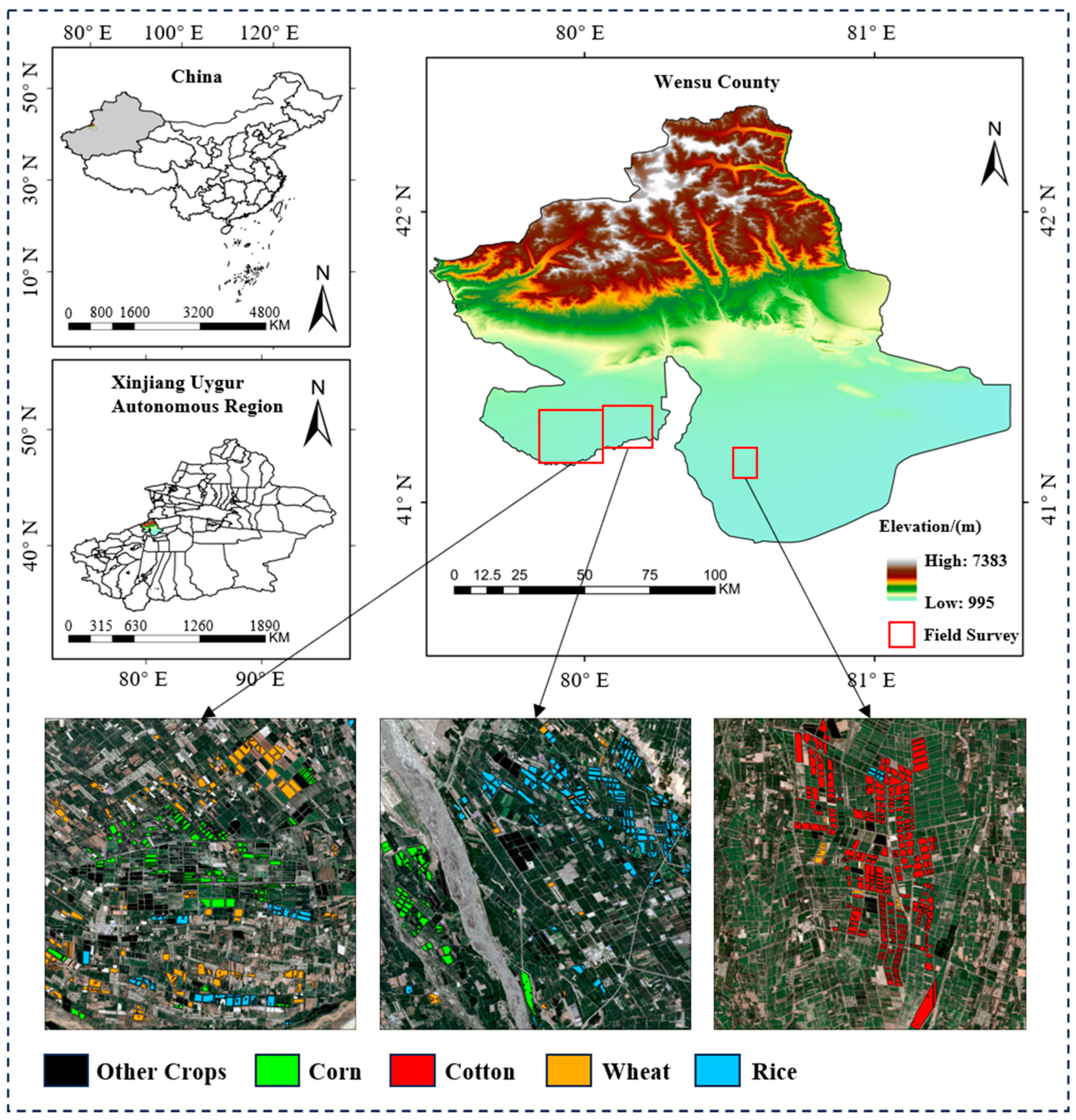

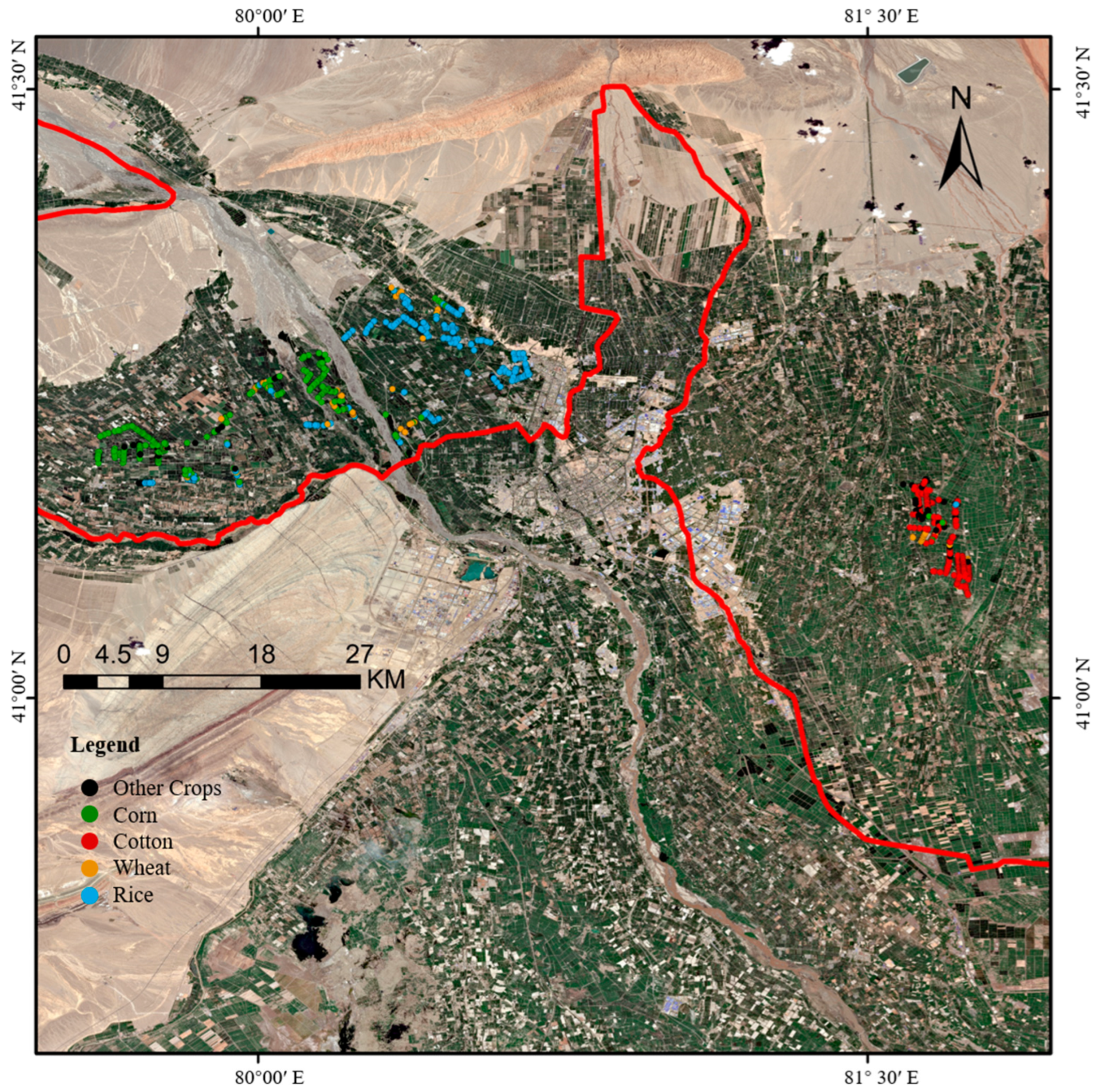

2.1. Study Area

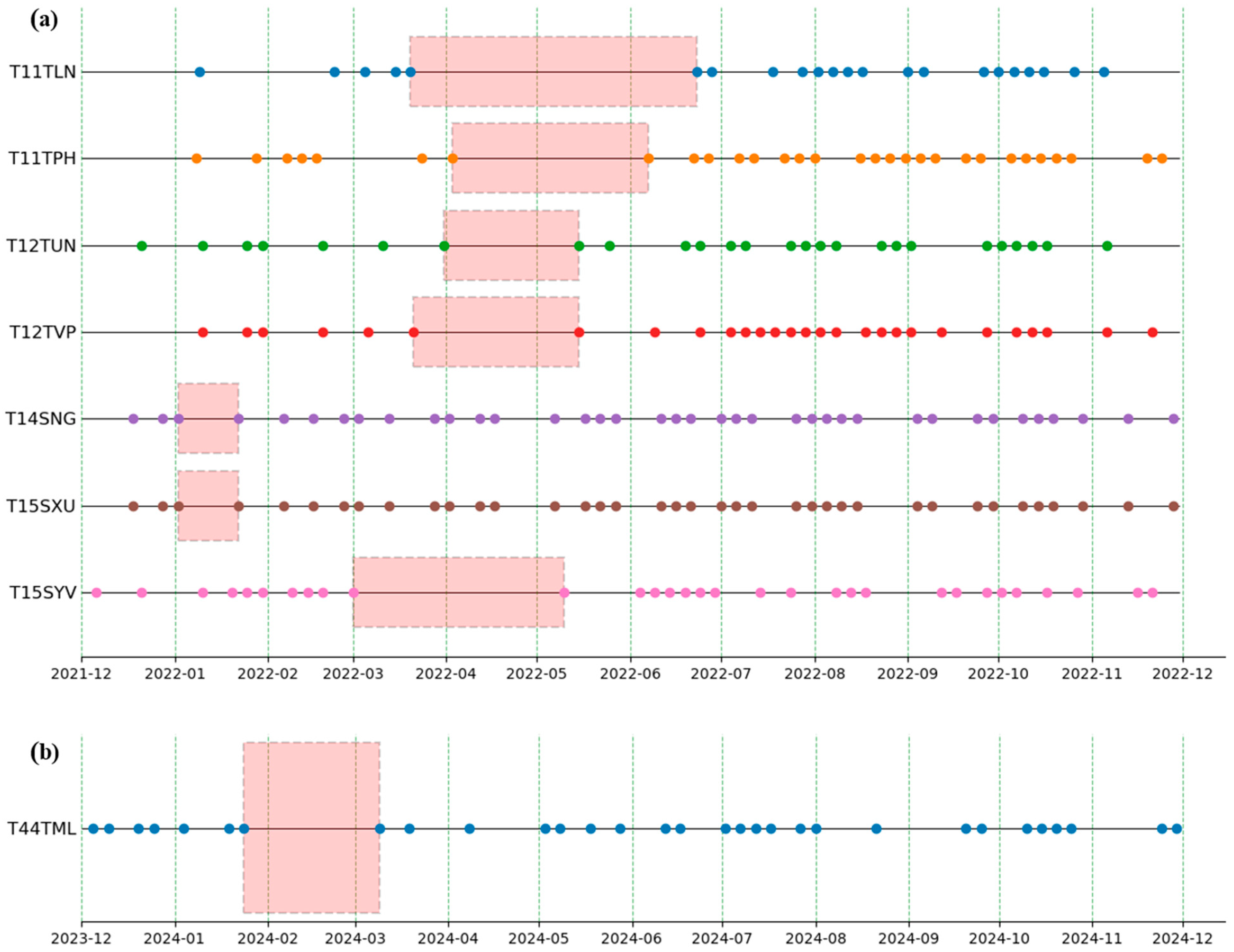

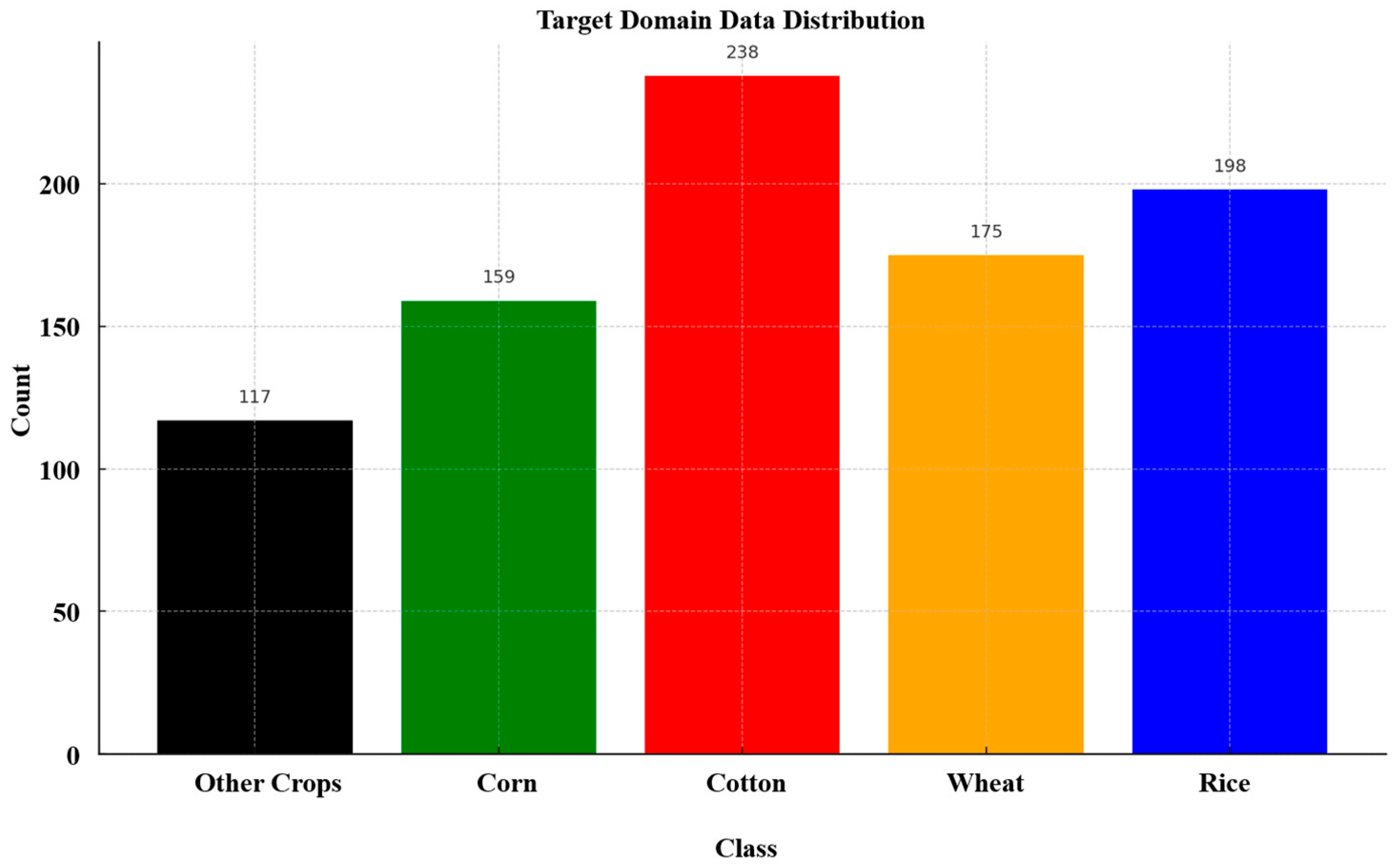

2.2. Data and Preprocessing

2.2.1. Remote Sensing Imagery

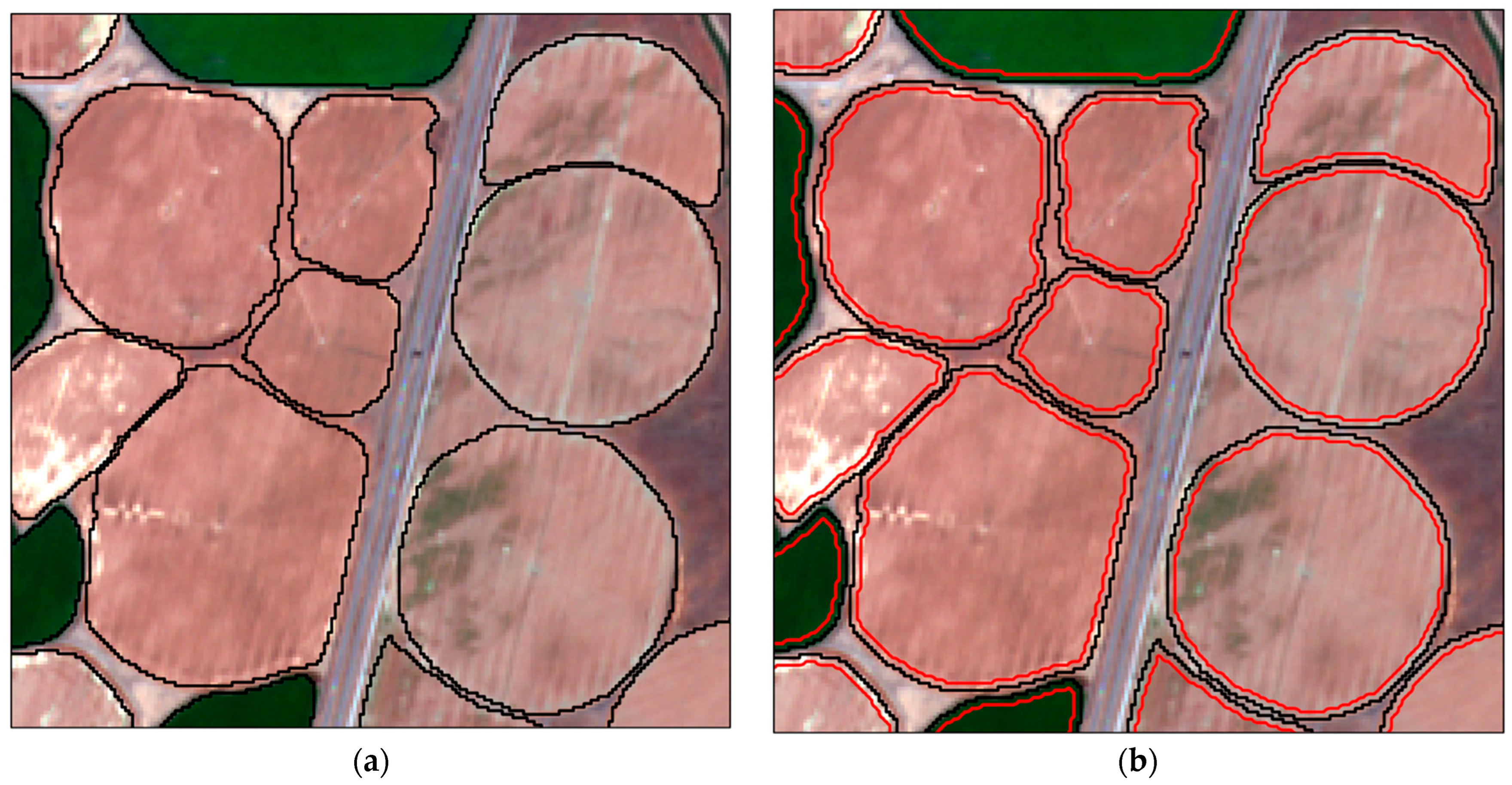

2.2.2. Ground Reference Data and Parcel Delineation

3. Methods

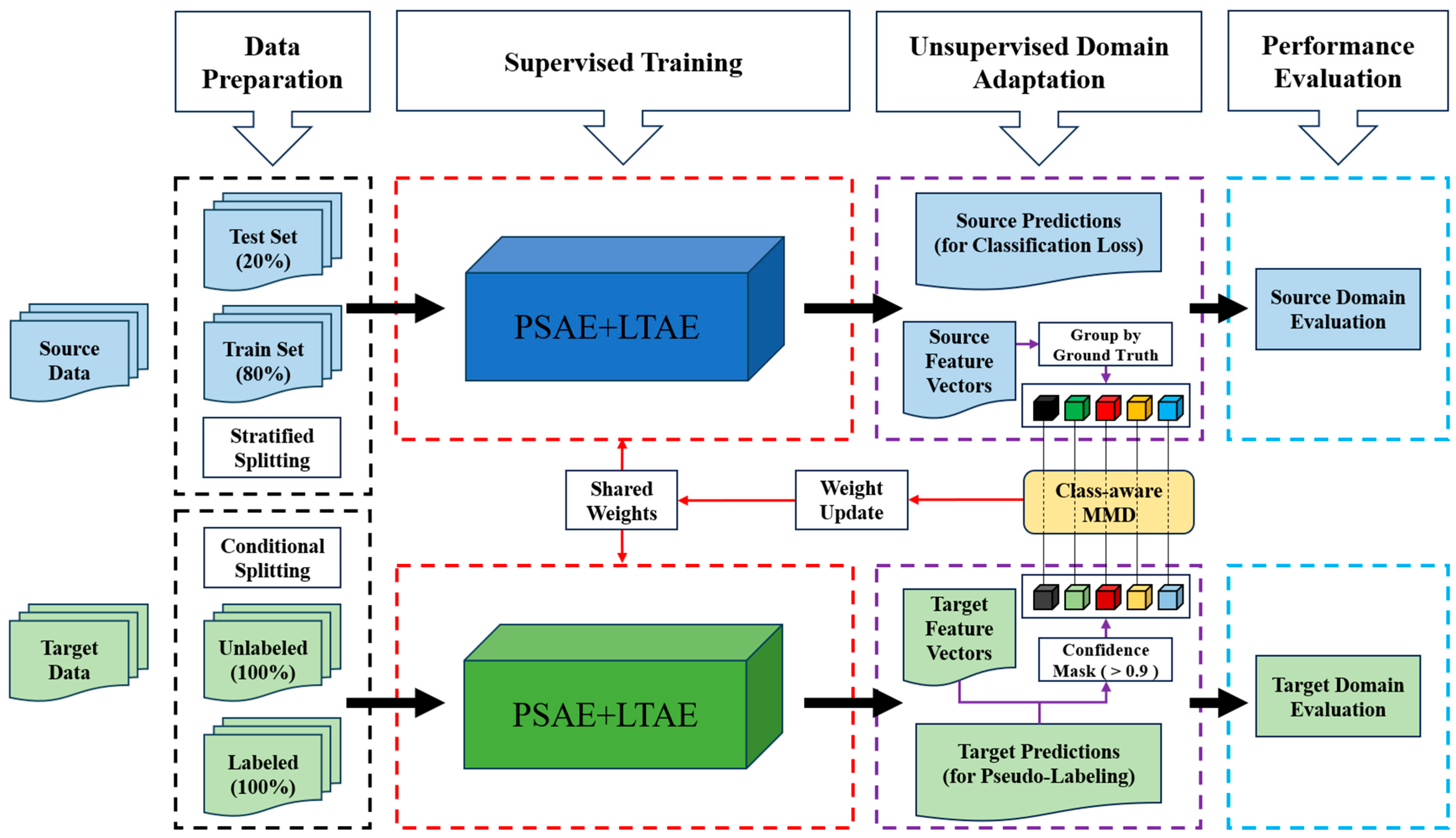

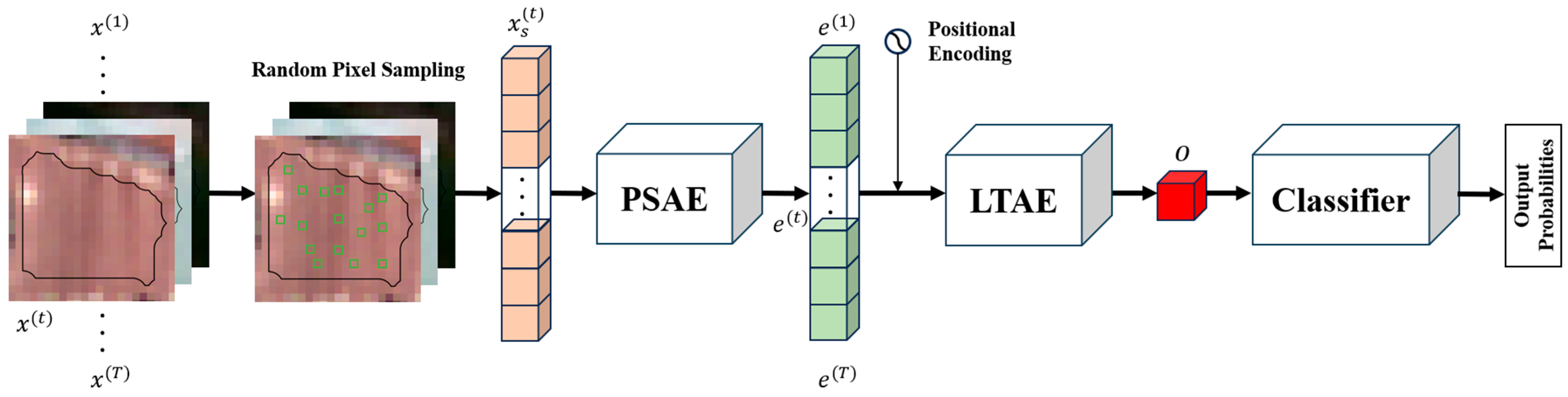

3.1. Methodological Framework

- Supervised Pre-training: In this initial stage, the core PSAE-LTAE model is trained exclusively on the labeled source dataset using a standard supervised learning approach. The PSAE-LTAE, a spatio-temporal feature extractor, first aggregates pixel-level information with its spatial encoder and then models temporal dynamics with its temporal encoder (detailed in Section 3.2). This stage is designed to learn a robust representation of crop spatio-temporal features from the source domain. The model weights that achieve the best performance on a held-out source validation set are saved for the next stage.

- UDA Fine-tuning and Prediction: Using the pre-trained weights as a starting point, the model is then fine-tuned with both the labeled source domain and the unlabeled target domain. The objective of this stage is to minimize a joint loss function that combines the source domain classification loss with a domain alignment loss, which reduces the discrepancy between the source and target feature distributions. After fine-tuning, the final model is deployed to perform inference and generate class predictions for the target domain samples.
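
The joint objective of the fine-tuning stage can be written compactly. The following is a sketch only: the symbols $\mathcal{D}_s$, $\mathcal{D}_t$, $\mathcal{L}_{\mathrm{cls}}$, $\mathcal{L}_{\mathrm{align}}$ and the trade-off weight $\lambda$ are notation assumed here for illustration, and the weighted-sum form is an assumption about how the two losses are combined.

```latex
% Assumed notation: D_s = labeled source set, D_t = unlabeled target set,
% lambda >= 0 = hypothetical trade-off weight between the two terms.
\mathcal{L}_{\mathrm{total}}
  = \mathcal{L}_{\mathrm{cls}}(\mathcal{D}_s)
  + \lambda \, \mathcal{L}_{\mathrm{align}}(\mathcal{D}_s, \mathcal{D}_t)
```

With $\lambda = 0$ this form reduces to supervised training on the source domain alone, which corresponds to the Source-Only setting.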

3.2. Backbone Network: PSAE + LTAE Architecture

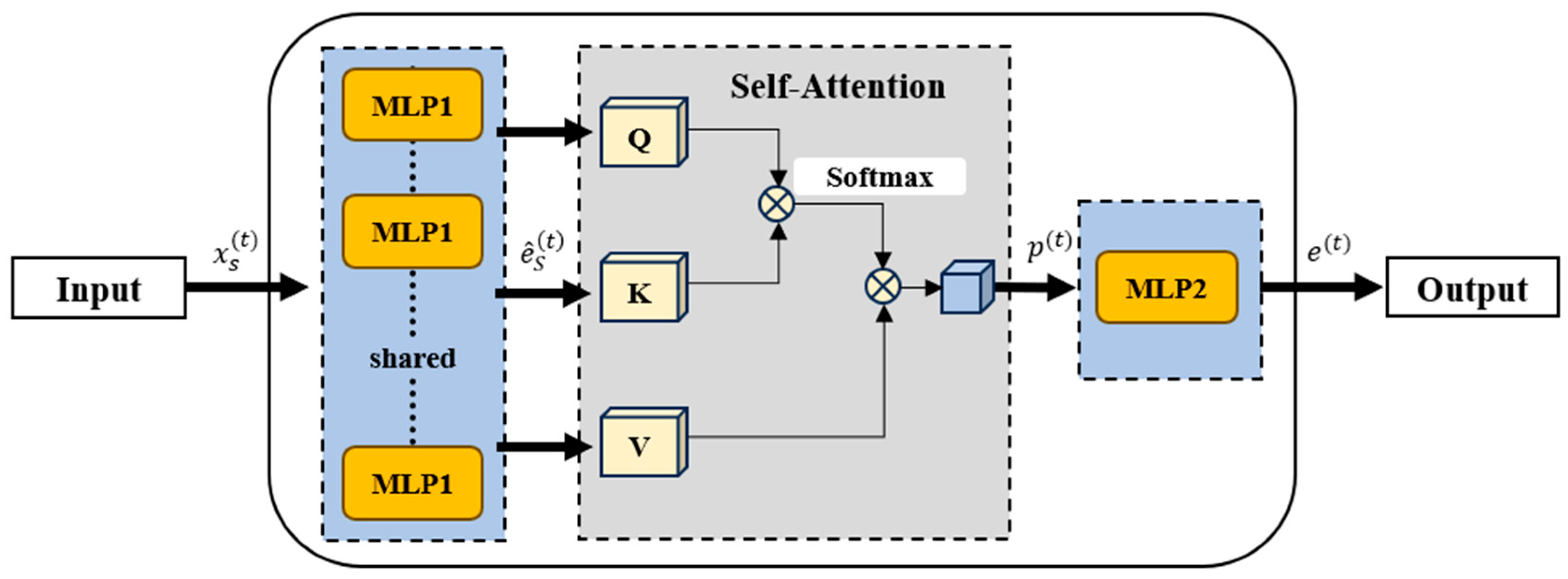

3.2.1. Spatial Encoder: PSAE

- (1) For the set of pixels at a given time point, a weight-sharing MLP1 is used to extract deep features for each pixel, yielding an enhanced feature set.

- (2) The enhanced feature set is processed by a self-attention pooling module, which aggregates the context-aware pixel features into a single vector.

- (3) The aggregated vector is then passed through another MLP2 for a final transformation, producing the spatial feature output for that time point.
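
The three steps above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' implementation: the single-layer MLPs, the scaled dot-product form of the self-attention, the scoring vector `w_pool`, and all parameter names and sizes are assumptions made for the example.

```python
import numpy as np

def softmax(z, axis=-1):
    """Numerically stable softmax along the given axis."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def mlp(x, w, b):
    """One linear layer with ReLU, used as a stand-in for MLP1 and MLP2."""
    return np.maximum(x @ w + b, 0.0)

def init_psae_params(rng, n_bands, d_hidden, d_key, d_out):
    """Random weights for the sketch (hypothetical shapes and scale)."""
    s = 0.1
    return {
        "w1": rng.normal(0, s, (n_bands, d_hidden)), "b1": np.zeros(d_hidden),
        "wq": rng.normal(0, s, (d_hidden, d_key)),
        "wk": rng.normal(0, s, (d_hidden, d_key)),
        "w_pool": rng.normal(0, s, d_hidden),
        "w2": rng.normal(0, s, (d_hidden, d_out)), "b2": np.zeros(d_out),
    }

def psae_step(pixels, params):
    """Encode the pixel set of one parcel at one time point.

    pixels: (n_pixels, n_bands) array.
    Returns the spatial feature vector and the per-pixel pooling weights.
    """
    # (1) Shared MLP1 applied to every pixel independently.
    h = mlp(pixels, params["w1"], params["b1"])              # (n_pixels, d_hidden)
    # (2) Self-attention makes each pixel feature context-aware,
    #     then a learned score per pixel drives a weighted pooling.
    q, k = h @ params["wq"], h @ params["wk"]
    ctx = softmax(q @ k.T / np.sqrt(k.shape[1])) @ h         # (n_pixels, d_hidden)
    weights = softmax(ctx @ params["w_pool"], axis=0)        # (n_pixels,)
    pooled = weights @ ctx                                   # (d_hidden,)
    # (3) MLP2 produces the spatial feature for this time point.
    return mlp(pooled, params["w2"], params["b2"]), weights
```

Because the pooling is a weighted sum over pixels, the output does not depend on pixel order, and pixels that receive low weights (for example mixed boundary pixels) contribute little to the parcel representation.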

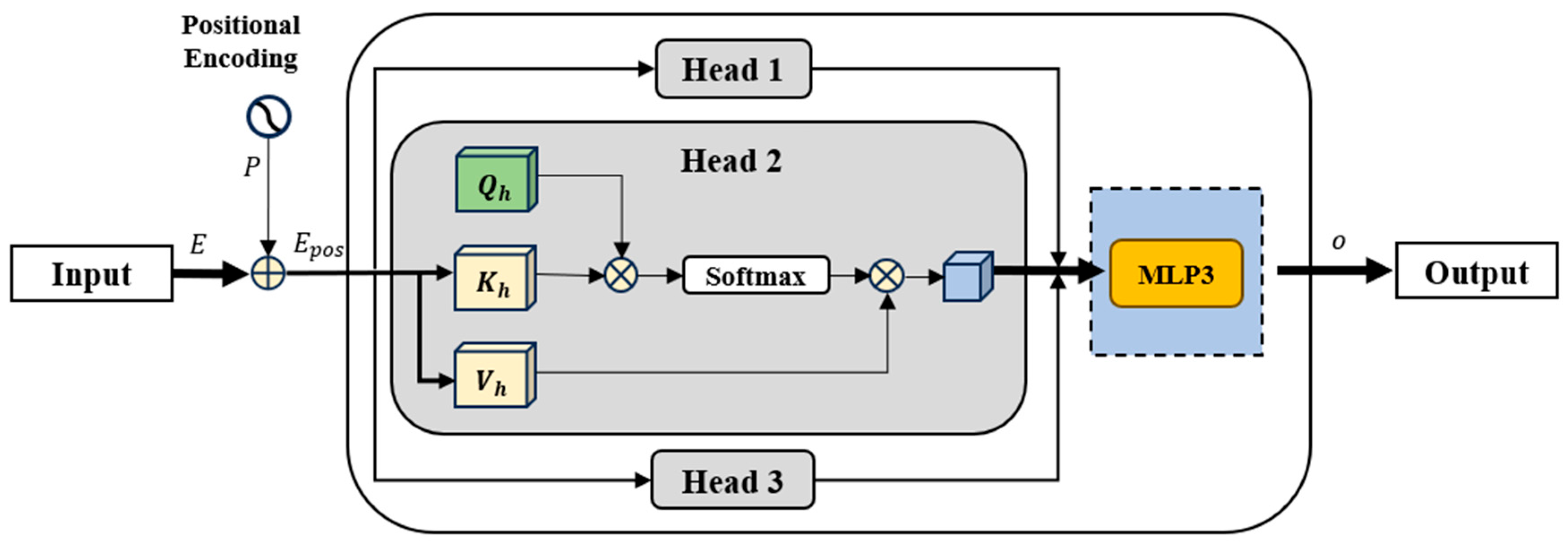

3.2.2. Time Encoder: LTAE

- (1) A positional encoding, corresponding to the timestamps of the feature vector sequence, is added to the sequence to inject temporal order information.

- (2) The core of LTAE is an efficient, lightweight multi-head attention module. Unlike standard attention mechanisms, its Query (Q) is a learnable global parameter rather than being generated dynamically from the input data. This allows the model to efficiently capture the global temporal patterns most critical to the classification task. The global Q interacts with the Key (K) vectors, which are generated from the input sequence at each time step, to compute an importance weight for each point in time. To further improve efficiency and reduce the parameter count, the module employs a channel grouping strategy that distributes the input feature channels among parallel attention heads. It uses the input features themselves as the Value (V) for weighted aggregation, ultimately producing a single feature vector that fuses information from the entire sequence.

- (3) The output of the attention module is finally passed through MLP3 to generate the final feature vector, which represents the entire spatio-temporal sequence.
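
A compact NumPy sketch of the temporal encoder described above is given here. It is illustrative only: the sinusoidal form of the positional encoding, its period `T`, the single-layer MLP3, and every parameter name and dimension are assumptions rather than details taken from this paper.

```python
import numpy as np

def softmax(z, axis=-1):
    """Numerically stable softmax along the given axis."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def positional_encoding(days, d_model, T=1000.0):
    """Sinusoidal encoding of acquisition days, shape (n_steps, d_model)."""
    i = np.arange(d_model)
    angles = np.asarray(days, dtype=float)[:, None] / T ** (2 * (i // 2) / d_model)
    return np.where(i % 2 == 0, np.sin(angles), np.cos(angles))

def ltae(seq, days, params, n_heads):
    """Aggregate a (n_steps, d_model) sequence of spatial features into one vector."""
    n_steps, d_model = seq.shape
    assert d_model % n_heads == 0, "channels must split evenly across heads"
    # (1) Add the positional encoding to inject temporal order.
    x = seq + positional_encoding(days, d_model)
    # (2) Keys come from the input; the query is a learned parameter per head.
    d_k = params["query"].shape[1]
    keys = (x @ params["w_k"]).reshape(n_steps, n_heads, d_k)
    scores = np.einsum("thk,hk->ht", keys, params["query"]) / np.sqrt(d_k)
    attn = softmax(scores, axis=1)                           # (n_heads, n_steps)
    # Channel grouping: each head pools its own slice of the input channels,
    # and the input itself serves as the values.
    values = x.reshape(n_steps, n_heads, d_model // n_heads)
    pooled = np.einsum("ht,thc->hc", attn, values).reshape(d_model)
    # (3) MLP3 (a single ReLU layer here) yields the final feature vector.
    return np.maximum(pooled @ params["w3"] + params["b3"], 0.0), attn
```

Since the query is not computed from the input, no query projection is needed per sequence, and because each head only sees its own channel group, no value projection is needed either; both choices keep the parameter count low.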

3.2.3. Output Module: Decoder

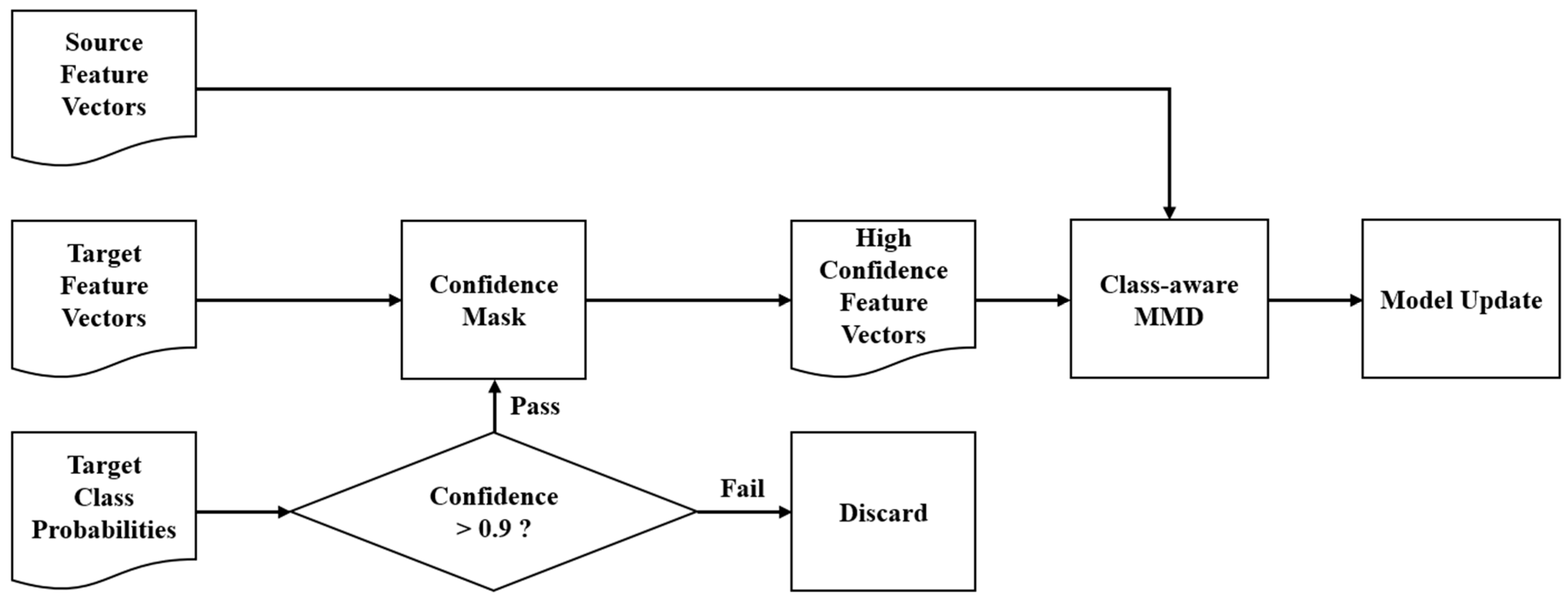

3.3. Class-Aware MMD for Domain Adaptation

- (1) For the target domain features, we first obtain their predicted probabilities from the model’s classifier.

- (2) We set a confidence threshold τ and select only those high-confidence samples whose predicted probabilities are greater than τ, along with their pseudo-labels, to be used in the MMD loss calculation.

- (3) For each class, we separately calculate the Gaussian kernel MMD distance between the source and target features belonging to that class. The final class-aware MMD loss is the average of the MMD losses across all matched categories.
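
The procedure above can be sketched in NumPy as follows. This is a minimal sketch under stated assumptions: it uses a single-bandwidth Gaussian kernel with a hypothetical `sigma`, the biased MMD estimator, and treats a class as "matched" when both domains have at least one sample for it in the batch. The function and argument names are illustrative.

```python
import numpy as np

def gaussian_mmd2(x, y, sigma=1.0):
    """Biased estimate of the squared MMD between samples x and y (RBF kernel)."""
    def kernel(a, b):
        d2 = ((a[:, None, :] - b[None, :, :]) ** 2).sum(-1)
        return np.exp(-d2 / (2.0 * sigma ** 2))
    return kernel(x, x).mean() + kernel(y, y).mean() - 2.0 * kernel(x, y).mean()

def class_aware_mmd(src_feat, src_labels, tgt_feat, tgt_probs, tau=0.9, sigma=1.0):
    """Average per-class MMD between source features and confident target features."""
    # (1) Predicted probabilities give a confidence and a pseudo-label per target sample.
    conf = tgt_probs.max(axis=1)
    pseudo = tgt_probs.argmax(axis=1)
    # (2) Keep only target samples whose confidence exceeds the threshold.
    keep = conf > tau
    # (3) Per-class MMD, averaged over classes present in both domains.
    losses = []
    for c in np.unique(src_labels):
        xs = src_feat[src_labels == c]
        xt = tgt_feat[keep & (pseudo == c)]
        if len(xs) > 0 and len(xt) > 0:
            losses.append(gaussian_mmd2(xs, xt, sigma))
    # With no matched class there is nothing to align, so the loss is zero.
    return float(np.mean(losses)) if losses else 0.0
```

Note that when no target sample passes the threshold, the loss is zero and the alignment term provides no gradient, which is the situation the confidence threshold trades off against pseudo-label noise.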

3.4. Experimental Setup

3.4.1. Baselines

- Source-Only: This model is trained exclusively on labeled data from the source domain and then directly applied to the target domain for evaluation. It represents the performance lower bound without any domain adaptation, and its results intuitively measure the extent of the domain shift between the source and target domains.

- Target-Only: This model is trained on the labeled training set of the target domain and evaluated on its test set. It represents the potential performance upper bound on this dataset, providing an ideal benchmark for all UDA methods.

- MMD [23]: A classic discrepancy-based UDA method. It achieves domain alignment by minimizing the distribution distance between source and target features in a Reproducing Kernel Hilbert Space (RKHS).

- DANN [24]: A classic adversarial learning-based UDA method. It introduces a domain discriminator that is trained adversarially against the feature extractor. A Gradient Reversal Layer (GRL) compels the feature extractor to learn domain-invariant features that are indistinguishable to the discriminator.

- CDAN+E [25]: An advanced variant of DANN. It constructs a conditional adversarial network by applying a multilinear map to the features and the classifier’s predicted probabilities, enabling a more refined distribution alignment. Additionally, it incorporates an entropy minimization loss on the target domain to encourage high-confidence predictions.

- ALDA [26]: Another adversarial UDA method distinguished by its weighted discriminator loss function. It assigns higher weights to hard-to-distinguish samples (i.e., those with prediction probabilities close to 0.5), thereby guiding the feature extractor to prioritize improvements on these challenging samples.

- JUMBOT [43]: A UDA method based on optimal transport theory. It aligns domains by computing and minimizing the optimal transport distance between the joint distributions of features and labels, and is particularly effective at handling class distribution imbalances.

3.4.2. Experimental Details

3.4.3. Evaluation Metrics

4. Results

4.1. Main Results

4.2. Ablation Study

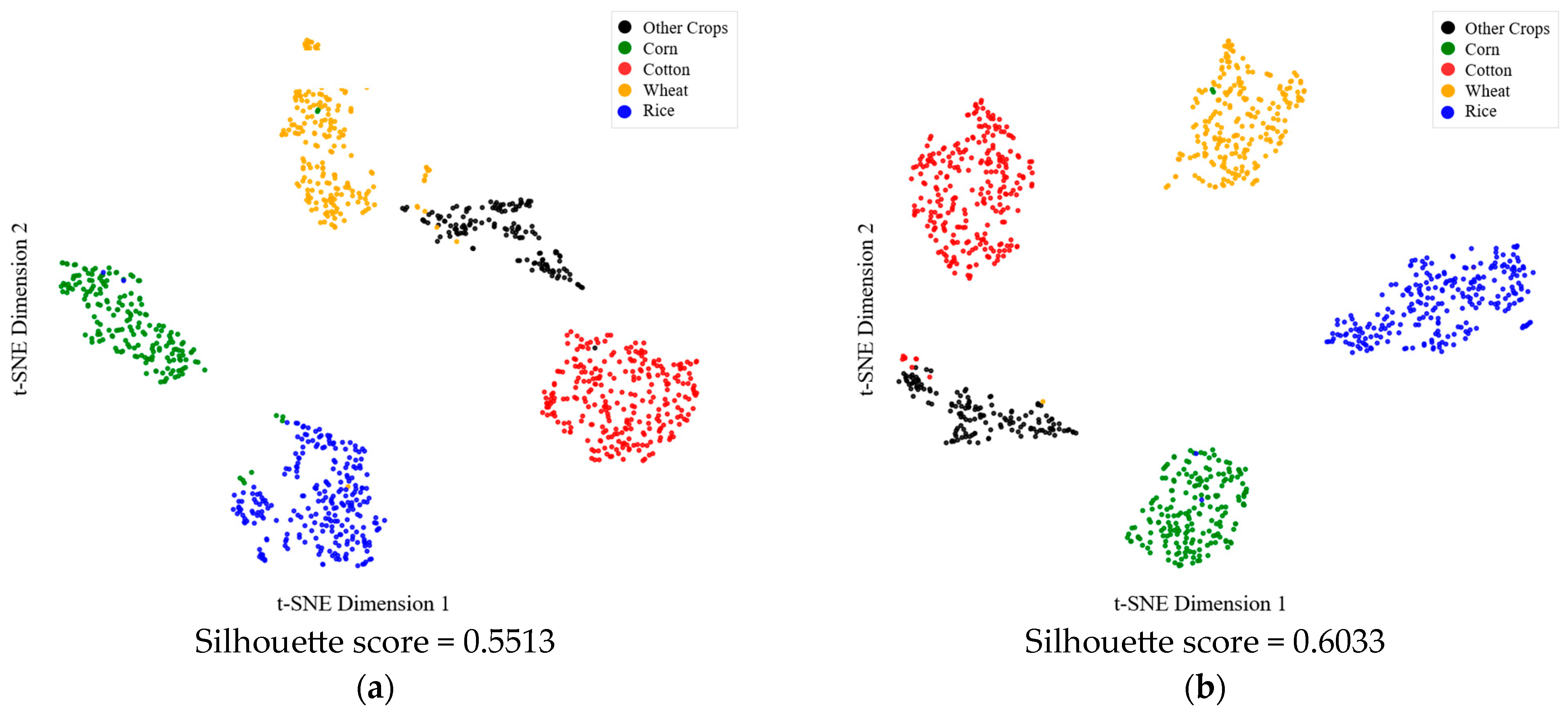

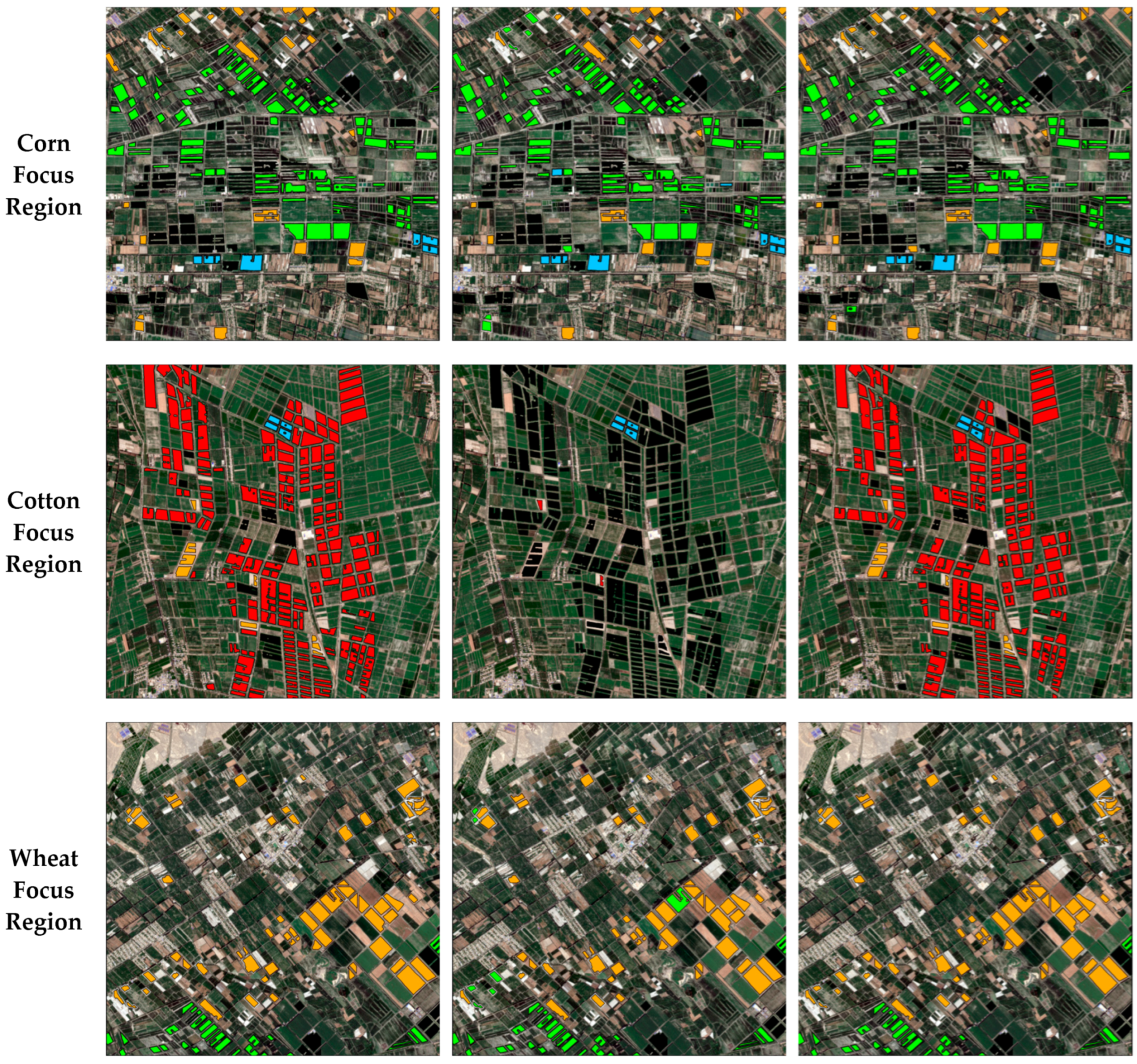

4.3. Visual Analysis

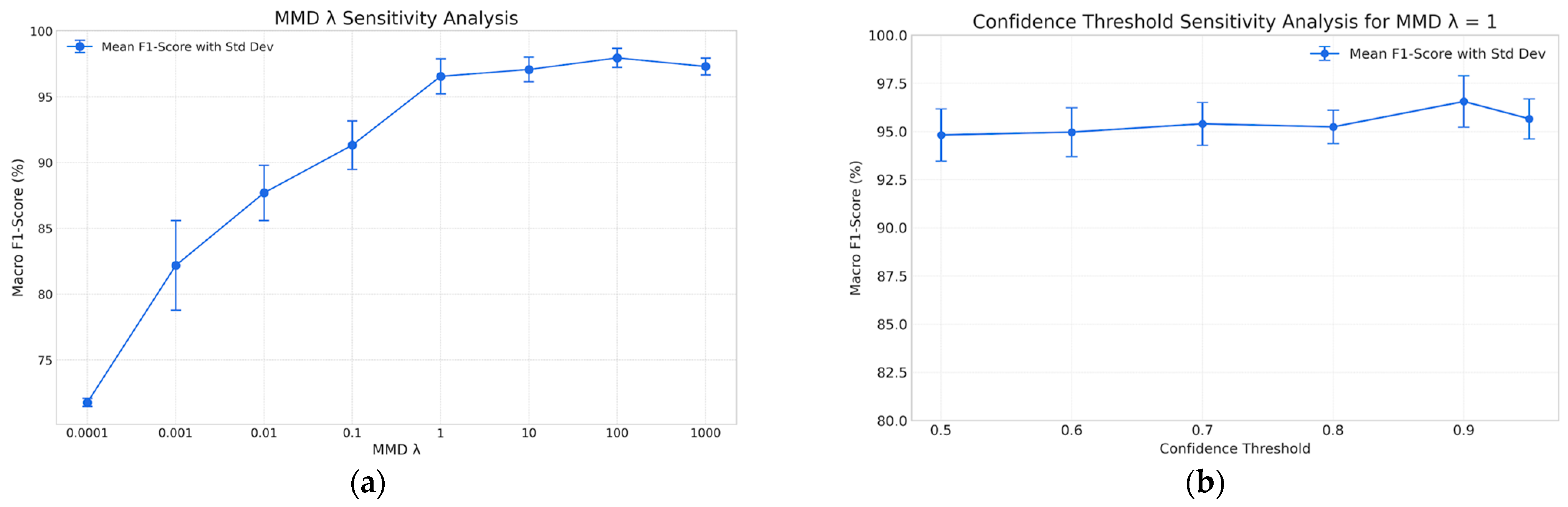

4.4. Sensitivity Analysis

5. Discussion

5.1. Overall Performance and Principal Advantages

5.2. Ablation Study: Component Necessity and Contributions

5.3. Comparison with State-of-the-Art Methods

5.4. Limitations and Future Work

- Complexity of the “Other Crops” Category, Label Shift, and Semantic Shift: A significant limitation inherent in this study relates to the constitution of the “Other Crops” category. In this work, all non-target crops were merged into this single class. It is important to clarify that this category encompasses other agricultural crops, rather than non-agricultural features (such as buildings or water bodies). Notably, the specific crop types within this category differ substantially between the domains: the source domain primarily includes soybeans, potatoes, and fallow land, whereas the target domain comprises entirely different types like peppers and tomatoes. Consequently, bundling these fundamentally distinct crops, which possess diverse phenological characteristics, into one macro-class introduces not only a complex form of label shift but also a significant semantic shift. This methodological choice simplifies the classification challenge by circumventing the task of aligning categories that lack direct semantic correspondence across domains. Therefore, while the achieved Macro F1-score of 96.56% indicates strong adaptation capability for the shared major crop types, caution is warranted when interpreting this result as a measure of overall generalization performance. It may not fully reflect the model’s ability to handle fine-grained classification tasks where precise alignment is required for all categories. The framework’s performance under conditions of strict one-to-one class correspondence remains an open question requiring further investigation. Addressing scenarios with mismatched class sets is crucial for advancing real-world applications. Future research should explore more advanced techniques specifically designed to handle such label space discrepancies. 
For instance, Partial Domain Adaptation (PDA) frameworks address situations where the target label set is a subset of the source [44], while Open Set Domain Adaptation (OSDA) approaches [45] explicitly account for unknown or outlier classes in the target domain. These paradigms offer theoretically more sound methodologies for tackling the “Other Crops” challenge. Furthermore, alternative strategies like Zero-Shot Learning (ZSL) adapted for remote sensing time series [46], which aim to classify unseen categories based on semantic descriptions (e.g., phenological attributes), might provide potential pathways for identifying specific minor crop types even without direct target labels. Exploring these directions constitutes a promising avenue for future investigation.

- Inconsistency of Parcel Data Sources and Potential Bias: A methodological consideration in this study arises from the different origins and delineation processes of the parcel data for the source and target domains. The source domain parcels were generated using a semi-automated approach combining automated segmentation (Geo-SAM plugin in QGIS) with manual refinement based on high-resolution imagery and Sentinel-2 data (as described in Section 2.2.2), resulting in relatively precise, pixel-level boundaries. This approach was feasible given the generally larger and more regular parcel shapes in the source regions. In contrast, the target domain parcels, characterized by smaller sizes and more complex boundaries, were initially derived from a pre-existing high-precision cropland product [36]. To enhance the reliability of the target domain ground reference data, a crucial quality control step was implemented: data from a field survey (RTK coordinates and sketches) conducted in 2024 were spatially matched with this 2020 vector dataset, and only those parcels exhibiting high boundary consistency between the field data and the existing product were retained for analysis. This filtering process undoubtedly improved the quality and accuracy of the target parcel data used in our experiments, mitigating potential issues like contamination from incorrectly merged parcels affecting the PSAE module. However, despite this quality control, a fundamental inconsistency remains due to the different origins and initial delineation methodologies of the source and target parcel datasets. This difference still introduces a potential source of bias that could influence the study’s findings. Specifically, the distinct characteristics inherent in the two sets of boundaries might artificially influence the perceived domain shift between the source and target regions, independent of true geographical or phenological variations. 
Furthermore, while filtering improved the quality of the utilized samples, it also means that our evaluation might not fully reflect the model’s performance when applied directly to the unfiltered high-precision cropland product across the entire target region. Assessing this broader applicability requires further investigation. Therefore, interpreting the magnitude of the domain shift and the absolute performance gains should still consider this potential methodological bias stemming from the inconsistent data generation pathways. Future work should address the challenge of validating model performance on target regions using readily available but potentially less curated parcel datasets, while simultaneously developing methods robust to varying levels of parcel boundary accuracy. Employing consistent, high-quality parcel delineation methods across both domains (though potentially at a higher cost) remains a viable option. Alternatively, investigating the integration of parcel extraction models directly into the crop classification framework, potentially leveraging unsupervised domain adaptation techniques specifically designed for segmentation [47], could lead to more robust, end-to-end systems less sensitive to inconsistencies in pre-defined parcel inputs. Addressing the challenge posed by limited ground truth for validating target parcel accuracy also remains an important direction.

- Dependence on Pseudo-Label Quality and Robustness to Large Domain Shifts: A core component of our class-aware MMD strategy is its reliance on pseudo-labels generated for the target domain to guide category-level feature alignment. To mitigate noise, we employ a high confidence threshold (τ = 0.9), aiming to select only reliable pseudo-labels for the adaptation process. However, this reliance introduces a potential limitation, particularly in scenarios involving substantial domain shifts. When transferring models across geographically distant regions, different years, or significantly varied agricultural systems, the initial performance of the Source-Only model on the target domain might be considerably low. This low performance can be exacerbated by challenges that become particularly acute under domain shift, such as the spectral mixing of crops and non-crop herbaceous vegetation (e.g., weeds). This ambiguity is often heightened in imagery captured during rainy seasons, which promotes similar vigorous growth in both crops and weeds, thereby confusing the model’s initial predictions. Under such challenging conditions, the model may fail to generate sufficient high-confidence pseudo-labels above the threshold τ, leading to a “cold-start” problem where the adaptation process cannot be effectively initiated or bootstrapped. The framework’s robustness under these extreme domain shift scenarios was not explicitly tested in this study and warrants further investigation. Future research should focus on enhancing the robustness of the adaptation process by exploring strategies that leverage limited target supervision. These include semi-supervised domain adaptation approaches utilizing a small amount of fully labeled target data [21,48], as well as weakly supervised methods that learn from more easily obtainable signals like sparse point samples [49]. 
Alternatively, integrating techniques like active learning [50] to intelligently select informative target samples for labeling could also improve performance and reduce reliance solely on high-confidence pseudo-labels derived from the source model. Investigating methods that can adapt effectively even with very weak initial target predictions is an essential area for future work.
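
One way to detect the cold-start situation described in the last limitation is to count, before fine-tuning, how many target samples per class pass the confidence threshold. The helper below is a hypothetical diagnostic written for illustration and is not part of the published framework; only the threshold value 0.9 comes from the text.

```python
import numpy as np

def pseudo_label_coverage(tgt_probs, tau=0.9):
    """Count confident pseudo-labels per class and the overall confident fraction.

    tgt_probs: (n_samples, n_classes) predicted probabilities on target data.
    """
    conf = tgt_probs.max(axis=1)
    pseudo = tgt_probs.argmax(axis=1)
    confident = conf > tau
    counts = np.bincount(pseudo[confident], minlength=tgt_probs.shape[1])
    return counts, float(confident.mean())
```

A class whose count is zero contributes nothing to category-level alignment, so a coverage vector with several zeros suggests that the adaptation may not bootstrap for those classes.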

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ALDA | Adversarial-Learned Loss for Domain Adaptation |
| CDAN+E | Conditional Adversarial Domain Adaptation with Entropy |
| CDL | Cropland Data Layer |
| CNN | Convolutional Neural Network |
| DANN | Domain-Adversarial Neural Network |
| ESA | European Space Agency |
| GRL | Gradient Reversal Layer |
| JUMBOT | Joint Unbalanced Mini-batch Optimal Transport |
| LTAE | Lightweight Temporal Attention Encoder |
| LSTM | Long Short-Term Memory |
| MMD | Maximum Mean Discrepancy |
| OSDA | Open Set Domain Adaptation |
| PAN | Phenology Alignment Network |
| PDA | Partial Domain Adaptation |
| PLCM | PSAE-LTAE + Class-aware MMD |
| PSAE | Pixel-Set Attention Encoder |
| PSE | Pixel-Set Encoder |
| RKHS | Reproducing Kernel Hilbert Space |
| RTK | Real-Time Kinematic |
| RNN | Recurrent Neural Network |
| SAM | Segment Anything Model |
| SITS | Satellite Image Time Series |
| SOTA | State-of-the-Art |
| UDA | Unsupervised Domain Adaptation |
| USDA | United States Department of Agriculture |
| ZSL | Zero-Shot Learning |

References

1. Mohammadi, S.; Belgiu, M.; Stein, A. A Source-Free Unsupervised Domain Adaptation Method for Cross-Regional and Cross-Time Crop Mapping from Satellite Image Time Series. Remote Sens. Environ. 2024, 314, 114385.

2. Belgiu, M.; Marshall, M.; Boschetti, M.; Pepe, M.; Stein, A.; Nelson, A. PRISMA and Sentinel-2 Spectral Response to the Nutrient Composition of Grains. Remote Sens. Environ. 2023, 292, 113567.

3. Shen, Y.; Wang, H.; Zhang, Y.; Du, X.; Dong, Q.; Li, Q.; Wang, Y.; Zhang, S.; Dong, Y.; Xiao, J.; et al. Accurate Identification of Seed Maize Fields Based on Histogram of Stripe Slopes. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 18278–18290.

4. Zhen, Z.; Chen, S.; Yin, T.; Gastellu-Etchegorry, J.-P. Globally Quantitative Analysis of the Impact of Atmosphere and Spectral Response Function on 2-Band Enhanced Vegetation Index (EVI2) over Sentinel-2 and Landsat-8. ISPRS J. Photogramm. Remote Sens. 2023, 205, 206–226.

5. Zhang, H.; Zhang, Y.; Gao, T.; Lan, S.; Tong, F.; Li, M. Landsat 8 and Sentinel-2 Fused Dataset for High Spatial-Temporal Resolution Monitoring of Farmland in China’s Diverse Latitudes. Remote Sens. 2023, 15, 2951.

6. Yang, G.; Li, X.; Xiong, Y.; He, M.; Zhang, L.; Jiang, C.; Yao, X.; Zhu, Y.; Cao, W.; Cheng, T. Annual Winter Wheat Mapping for Unveiling Spatiotemporal Patterns in China with a Knowledge-Guided Approach and Multi-Source Datasets. ISPRS J. Photogramm. Remote Sens. 2025, 225, 163–179.

7. Zhang, H.; Kang, J.; Xu, X.; Zhang, L. Accessing the Temporal and Spectral Features in Crop Type Mapping Using Multi-Temporal Sentinel-2 Imagery: A Case Study of Yi’an County, Heilongjiang Province, China. Comput. Electron. Agric. 2020, 176, 105618.

8. You, N.; Dong, J.; Huang, J.; Du, G.; Zhang, G.; He, Y.; Yang, T.; Di, Y.; Xiao, X. The 10-m Crop Type Maps in Northeast China during 2017–2019. Sci. Data 2021, 8, 41.

9. Lin, C.; Zhong, L.; Song, X.-P.; Dong, J.; Lobell, D.B.; Jin, Z. Early- and in-Season Crop Type Mapping without Current-Year Ground Truth: Generating Labels from Historical Information via a Topology-Based Approach. Remote Sens. Environ. 2022, 274, 112994.

10. Yan, S.; Yao, X.; Zhu, D.; Liu, D.; Zhang, L.; Yu, G.; Gao, B.; Yang, J.; Yun, W. Large-Scale Crop Mapping from Multi-Source Optical Satellite Imageries Using Machine Learning with Discrete Grids. Int. J. Appl. Earth Obs. Geoinf. 2021, 103, 102485.

11. Gill, H.S.; Bath, B.S.; Singh, R.; Riar, A.S. Wheat Crop Classification Using Deep Learning. Multimed. Tools Appl. 2024, 83, 82641–82657.

12. Lu, T.; Wan, L.; Wang, L. Fine Crop Classification in High Resolution Remote Sensing Based on Deep Learning. Front. Environ. Sci. 2022, 10, 991173.

13. Fan, J.; Bai, J.; Li, Z.; Ortiz-Bobea, A.; Gomes, C.P. A GNN-RNN Approach for Harnessing Geospatial and Temporal Information: Application to Crop Yield Prediction. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual Event, 22 February–1 March 2022; Volume 36, pp. 11873–11881.

14. Yi, Z.; Jia, L.; Chen, Q. Crop Classification Using Multi-Temporal Sentinel-2 Data in the Shiyang River Basin of China. Remote Sens. 2020, 12, 4052.

15. Wang, H.; Ye, Z.; Wang, Y.; Liu, X.; Zhang, X.; Zhao, Y.; Li, S.; Liu, Z.; Zhang, X. Improving the Crop Classification Performance by Unlabeled Remote Sensing Data. Expert Syst. Appl. 2024, 236, 121283.

16. Li, G.; Cui, J.; Han, W.; Zhang, H.; Huang, S.; Chen, H.; Ao, J. Crop Type Mapping Using Time-Series Sentinel-2 Imagery and U-Net in Early Growth Periods in the Hetao Irrigation District in China. Comput. Electron. Agric. 2022, 203, 107478.

17. Hu, Y.; Zeng, H.; Tian, F.; Zhang, M.; Wu, B.; Gilliams, S.; Li, S.; Li, Y.; Lu, Y.; Yang, H. An Interannual Transfer Learning Approach for Crop Classification in the Hetao Irrigation District, China. Remote Sens. 2022, 14, 1208.

18. Hao, P.; Di, L.; Zhang, C.; Guo, L. Transfer Learning for Crop Classification with Cropland Data Layer Data (CDL) as Training Samples. Sci. Total Environ. 2020, 733, 138869.

19. Ge, S.; Zhang, J.; Pan, Y.; Yang, Z.; Zhu, S. Transferable Deep Learning Model Based on the Phenological Matching Principle for Mapping Crop Extent. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102451.

20. Antonijević, O.; Jelić, S.; Bajat, B.; Kilibarda, M. Transfer Learning Approach Based on Satellite Image Time Series for the Crop Classification Problem. J. Big Data 2023, 10, 54.

21. Nyborg, J.; Pelletier, C.; Lefèvre, S.; Assent, I. TimeMatch: Unsupervised Cross-Region Adaptation by Temporal Shift Estimation. ISPRS J. Photogramm. Remote Sens. 2022, 188, 301–313.

22. Xu, Y.; Ebrahimy, H.; Zhang, Z. Bayesian Joint Adaptation Network for Crop Mapping in the Absence of Mapping Year Ground-Truth Samples. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–20.

23. Tzeng, E.; Hoffman, J.; Zhang, N.; Saenko, K.; Darrell, T. Deep Domain Confusion: Maximizing for Domain Invariance. arXiv 2014, arXiv:1412.3474.

24. Ganin, Y.; Lempitsky, V. Unsupervised Domain Adaptation by Backpropagation. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 6–11 July 2015; JMLR: Cambridge, MA, USA, 2015; Volume 37, pp. 1180–1189.

25. Long, M.; Cao, Z.; Wang, J.; Jordan, M.I. Conditional Adversarial Domain Adaptation. In Proceedings of the 32nd International Conference on Neural Information Processing Systems, Montréal, Canada, 3–8 December 2018; Curran Associates Inc.: Red Hook, NY, USA, 2018; Volume 31, pp. 1647–1657.

26. Chen, M.; Zhao, S.; Liu, H.; Cai, D. Adversarial-Learned Loss for Domain Adaptation. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 3521–3528.

27. Zhang, Y.; Hao, X.; Li, F.; Wang, Z.; Li, D.; Li, M.; Mao, R. Unsupervised Domain Adaptation Semantic Segmentation Method for Wheat Disease Detection Based on UAV Multispectral Images. Comput. Electron. Agric. 2025, 236, 110473.

28. Wang, Y.; Feng, L.; Sun, W.; Zhang, Z.; Zhang, H.; Yang, G.; Meng, X. Exploring the Potential of Multi-Source Unsupervised Domain Adaptation in Crop Mapping Using Sentinel-2 Images. GIScience Remote Sens. 2022, 59, 2247–2265.

29. Wang, Y.; Feng, L.; Zhang, Z.; Tian, F. An Unsupervised Domain Adaptation Deep Learning Method for Spatial and Temporal Transferable Crop Type Mapping Using Sentinel-2 Imagery. ISPRS J. Photogramm. Remote Sens. 2023, 199, 102–117.

30. Wang, H.; Yao, Y.; Liu, J.; Zhang, X.; Zhao, Y.; Li, S.; Liu, Z.; Zhang, X.; Zeng, Y. Unsupervised Cross-Regional and Cross-Year Adaptation by Climate Indicator Discrepancy for Crop Classification. J. Remote Sens. 2025, 5, 0439.

31. Wang, Z.; Zhang, H.; He, W.; Zhang, L. Cross-Phenological-Region Crop Mapping Framework Using Sentinel-2 Time Series Imagery: A New Perspective for Winter Crops in China. ISPRS J. Photogramm. Remote Sens. 2022, 193, 200–215.

32. Hersbach, H.; Bell, B.; Berrisford, P.; Biavati, G.; Horányi, A.; Muñoz Sabater, J.; Nicolas, J.; Peubey, C.; Radu, R.; Rozum, I.; et al. ERA5 Hourly Data on Single Levels from 1940 to Present. Copernicus Climate Change Service (C3S) Climate Data Store (CDS). 2023. Available online: https://cds.climate.copernicus.eu/datasets/reanalysis-era5-single-levels?tab=overview (accessed on 10 November 2025).

33. U.S. Department of Agriculture (USDA), National Agricultural Statistics Service (NASS). Cropland Data Layer. Available online: https://www.nass.usda.gov/Research_and_Science/Cropland/Release/index.php (accessed on 1 December 2024).

34. Awada, H.; Ciraolo, G.; Maltese, A.; Provenzano, G.; Moreno Hidalgo, M.A.; Còrcoles, J.I. Assessing the Performance of a Large-Scale Irrigation System by Estimations of Actual Evapotranspiration Obtained by Landsat Satellite Images Resampled with Cubic Convolution. Int. J. Appl. Earth Obs. Geoinf. 2019, 75, 96–105.

35. Zhao, Z.; Fan, C.; Liu, L. Geo SAM: A QGIS Plugin Using Segment Anything Model (SAM) to Accelerate Geospatial Image Segmentation (1.1.0). Zenodo. 2023. Available online: https://zenodo.org/records/8191039 (accessed on 10 November 2025).

36. Jiang, H.; Ku, M.; Zhou, X.; Zheng, Q.; Liu, Y.; Xu, J.; Li, D.; Wang, C.; Wei, J.; Zhang, J.; et al. CropLayer: A High-Accuracy 2-Meter Resolution Cropland Mapping Dataset for China in 2020 Derived from Mapbox and Google Satellite Imagery Using Data-Driven Approaches. Earth Syst. Sci. Data Discuss. 2025. preprint.

37. Lei, L.; Wang, X.; Zhong, Y.; Zhang, L. FineCrop: Mapping Fine-Grained Crops Using Class-Aware Feature Decoupling and Parcel-Aware Class Rebalancing with Sentinel-2 Time Series. ISPRS J. Photogramm. Remote Sens. 2025, 228, 785–803.

38. Chen, R.; Xiong, S.; Zhang, N.; Fan, Z.; Qi, N.; Fan, Y.; Feng, H.; Ma, X.; Yang, H.; Yang, G.; et al. Fine-Scale Classification of Horticultural Crops Using Sentinel-2 Time-Series Images in Linyi Country, China. Comput. Electron. Agric. 2025, 236, 110425.

39. Sainte Fare Garnot, V.; Landrieu, L.; Giordano, S.; Chehata, N. Satellite Image Time Series Classification with Pixel-Set Encoders and Temporal Self-Attention. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 12322–12331.

40. Sainte Fare Garnot, V.; Landrieu, L. Lightweight Temporal Self-Attention for Classifying Satellite Image Time Series. In Advanced Analytics and Learning on Temporal Data; Lemaire, V., Malinowski, S., Bagnall, A., Guyet, T., Tavenard, R., Ifrim, G., Eds.; Springer International Publishing: Cham, Switzerland, 2020; Volume 12588, pp. 171–181.

41. Nelder, J.A.; Wedderburn, R.W.M. Generalized Linear Models. J. R. Stat. Soc. A. 1972, 135, 370–384.

42. Xu, J.; Zhu, Y.; Zhong, R.; Lin, Z.; Xu, J.; Jiang, H.; Huang, J.; Li, H.; Lin, T. DeepCropMapping: A Multi-Temporal Deep Learning Approach with Improved Spatial Generalizability for Dynamic Corn and Soybean Mapping. Remote Sens. Environ. 2020, 247, 111946.

43. Fatras, K.; Séjourné, T.; Courty, N.; Flamary, R. Unbalanced Minibatch Optimal Transport; Applications to Domain Adaptation. In Proceedings of the 38th International Conference on Machine Learning, Virtual Event, 18–24 July 2021; Volume 139, pp. 3186–3197.

44. Cao, Z.; Ma, L.; Long, M.; Wang, J. Partial Adversarial Domain Adaptation. In Computer Vision—ECCV 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer: Cham, Switzerland, 2018; Volume 11212, pp. 139–155.

45. Busto, P.P.; Gall, J. Open Set Domain Adaptation. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 754–763.

46. Wen, S.; Zhao, W.; Ji, F.; Peng, R.; Zhang, L.; Wang, Q. “Phenology Description Is All You Need!” Mapping Unknown Crop Types with Remote Sensing Time-Series and LLM Generated Text Alignment. ISPRS J. Photogramm. Remote Sens. 2025, 228, 141–165.

47. Wei, R.; Yang, L.; Li, X.; Zhu, C.; Zhang, L.; Wang, J.; Liu, J.; Zhu, L.; Zhou, C. Breaking the Limitations of Scenes and Sensors Variability: A Novel Unsupervised Domain Adaptive Instance Segmentation Framework for Agricultural Field Extraction. Remote Sens. Environ. 2025, 331, 115051.

48. Wang, G.; Wang, Y.; Zhang, J.; Wang, X.; Pan, Z. Cross-Domain Self-Supervised Few-Shot Learning via Multiple Crops with Teacher-Student Network. Eng. Appl. Artif. Intell. 2024, 132, 107892.

49. Cai, Z.; Xu, B.; Yu, Q.; Zhang, X.; Yang, J.; Wei, H.; Li, S.; Song, Q.; Xiong, H.; Wu, H.; et al. A Cost-Effective and Robust Mapping Method for Diverse Crop Types Using Weakly Supervised Semantic Segmentation with Sparse Point Samples. ISPRS J. Photogramm. Remote Sens. 2024, 218, 260–276.

50. Hamrouni, Y.; Paillassa, E.; Chéret, V.; Monteil, C.; Sheeren, D. From Local to Global: A Transfer Learning-Based Approach for Mapping Poplar Plantations at National Scale Using Sentinel-2. ISPRS J. Photogramm. Remote Sens. 2021, 171, 76–100.

| Method | Macro F1-Score (%) |
|---|---|
| Source-Only | 65.50 ± 2.45 |
| MMD | 85.45 ± 5.56 |
| DANN | 94.82 ± 1.04 |
| CDAN+E | 95.65 ± 1.89 |
| ALDA | 94.29 ± 1.15 |
| JUMBOT | 93.06 ± 1.82 |
| PLCM | 96.56 ± 1.33 |
| Target-Only * | 99.17 ± 0.20 |

| Method | Other Crops | Corn | Cotton | Wheat | Rice | Macro F1-Score (%) |
|---|---|---|---|---|---|---|
| Source-Only | 49.31 ± 0.09 | 91.36 ± 5.43 | 0.00 | 88.49 ± 5.91 | 98.35 ± 0.99 | 65.50 ± 2.45 |
| MMD | 75.52 ± 9.22 | 90.07 ± 3.66 | 80.44 ± 8.60 | 90.60 ± 3.43 | 90.62 ± 3.73 | 85.45 ± 5.56 |
| CDAN+E | 89.72 ± 6.74 | 98.43 ± 0.10 | 93.30 ± 2.46 | 97.79 ± 1.12 | 99.00 ± 0.57 | 95.65 ± 1.89 |
| PLCM | 91.19 ± 4.18 | 97.93 ± 0.55 | 96.32 ± 2.23 | 98.15 ± 0.21 | 99.19 ± 0.17 | 96.56 ± 1.33 |
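
As a quick consistency check on the per-class table above, the Macro F1-score is the unweighted mean of the five per-class F1-scores. The short script below recomputes it from the reported class means; the dictionary layout and variable names are illustrative only.

```python
# Per-class mean F1 (%) in the order: Other Crops, Corn, Cotton, Wheat, Rice.
per_class_f1 = {
    "Source-Only": [49.31, 91.36, 0.00, 88.49, 98.35],
    "MMD": [75.52, 90.07, 80.44, 90.60, 90.62],
    "CDAN+E": [89.72, 98.43, 93.30, 97.79, 99.00],
    "PLCM": [91.19, 97.93, 96.32, 98.15, 99.19],
}

# Macro F1 gives every class the same weight regardless of its sample count.
macro_f1 = {method: sum(f1) / len(f1) for method, f1 in per_class_f1.items()}

for method, score in macro_f1.items():
    print(f"{method}: {score:.2f}")
```

The recomputed values agree with the reported Macro F1 column to two decimals (65.50, 85.45, 95.65, 96.56). The check also shows why the Source-Only score is so low: Cotton alone contributes 0.00 to a five-class average.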

| Ablation | Macro F1-Score (%) | Magnitude of Decline (%) |
|---|---|---|
| PLCM | 96.56 ± 1.33 | - |
| No UDA | 65.50 ± 2.45 | ↓31.06 * |
| No balanced batch sampler | 75.43 ± 8.22 | ↓21.13 |
| Use LSTM | 75.81 ± 4.77 | ↓20.75 |
| Use PSE | 78.44 ± 4.77 | ↓18.12 |
| Use MMD | 85.45 ± 5.56 | ↓11.11 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, S.; Liu, L.; Huo, J.; Li, S.; Yin, Y.; Ma, Y. A Class-Aware Unsupervised Domain Adaptation Framework for Cross-Continental Crop Classification with Sentinel-2 Time Series. Remote Sens. 2025, 17, 3762. https://doi.org/10.3390/rs17223762
