DeepSwinLite: A Swin Transformer-Based Light Deep Learning Model for Building Extraction Using VHR Aerial Imagery

Abstract

1. Introduction

- i.

- Novel Architecture: We introduced a Swin Transformer-based network with three modules: MLFP fuses multi-level features, MSFA aggregates multi-scale context, and AuxHead provides auxiliary supervision to stabilize training. Together, they preserve spatial detail and improve building-boundary segmentation in VHR imagery.

- ii.

- Comprehensive Benchmarking: We conducted DeepSwinLite against both classical baselines (U-Net, DeepLabV3+, SegFormer) and recent SOTA models (LiteST-Net, SCTM, MAFF-HRNet) on the Massachusetts and WHU datasets, considering both accuracy and computational efficiency (parameters, FLOPs).

- iii.

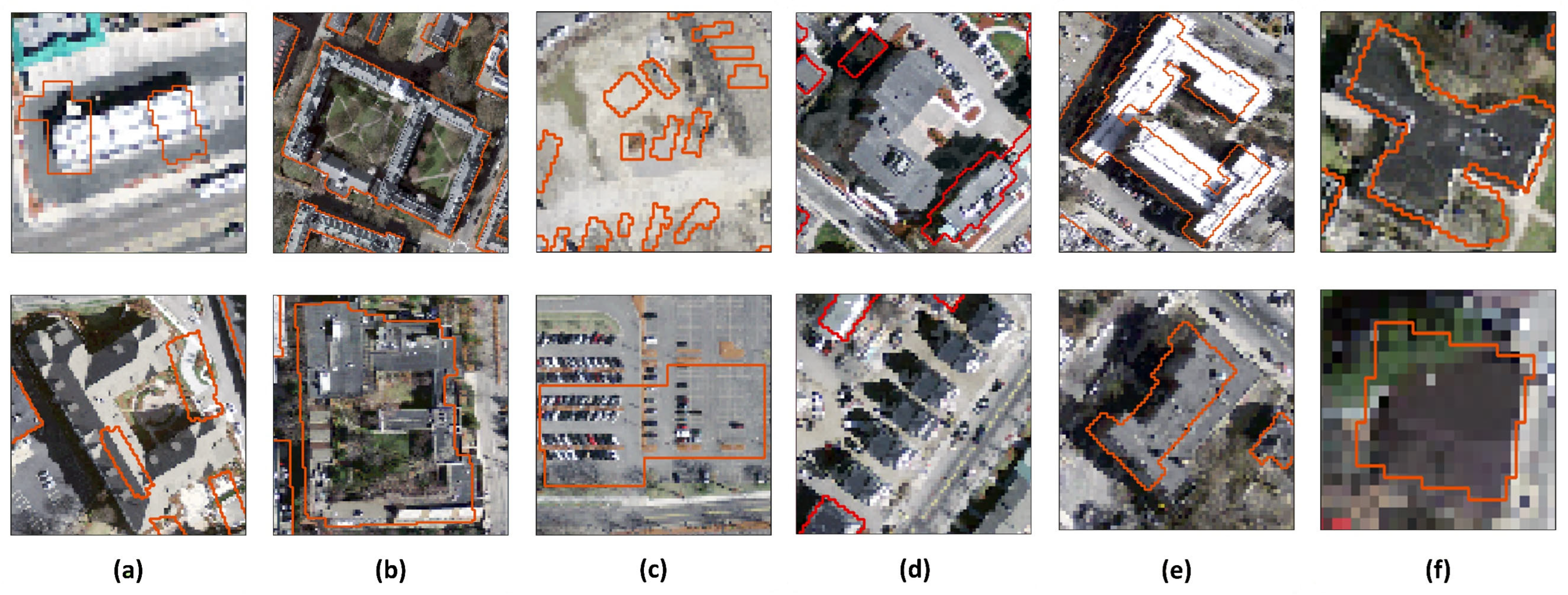

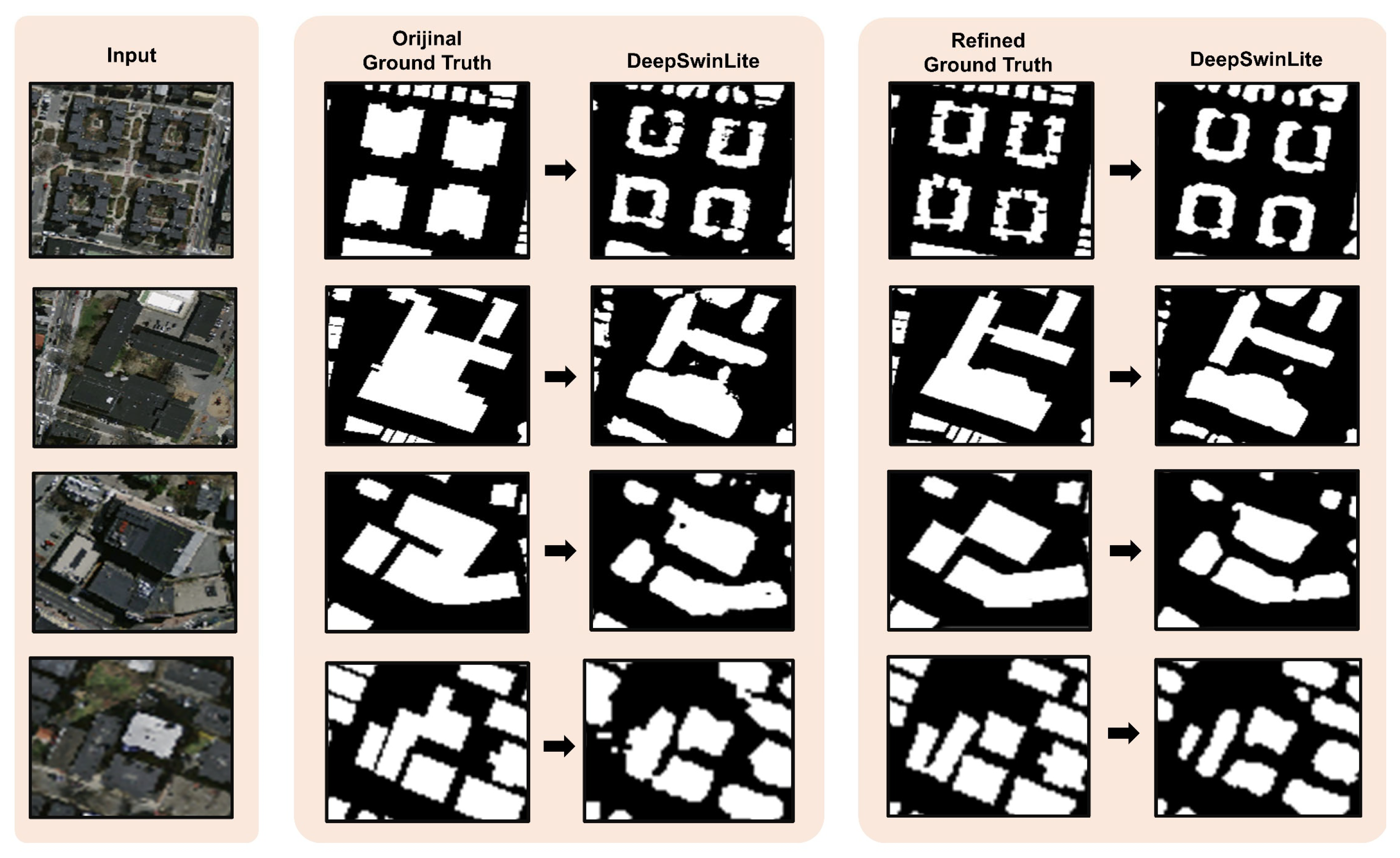

- Refined Dataset and Reproducibility: We identified and corrected annotation errors in the original Massachusetts dataset and released the refined version with source code to ensure reproducibility and foster further research.

2. Datasets

3. Methodology

3.1. Architecture Overview

3.1.1. Swin Transformer Backbone

3.1.2. Multi-Level Feature Pyramid (MLFP)

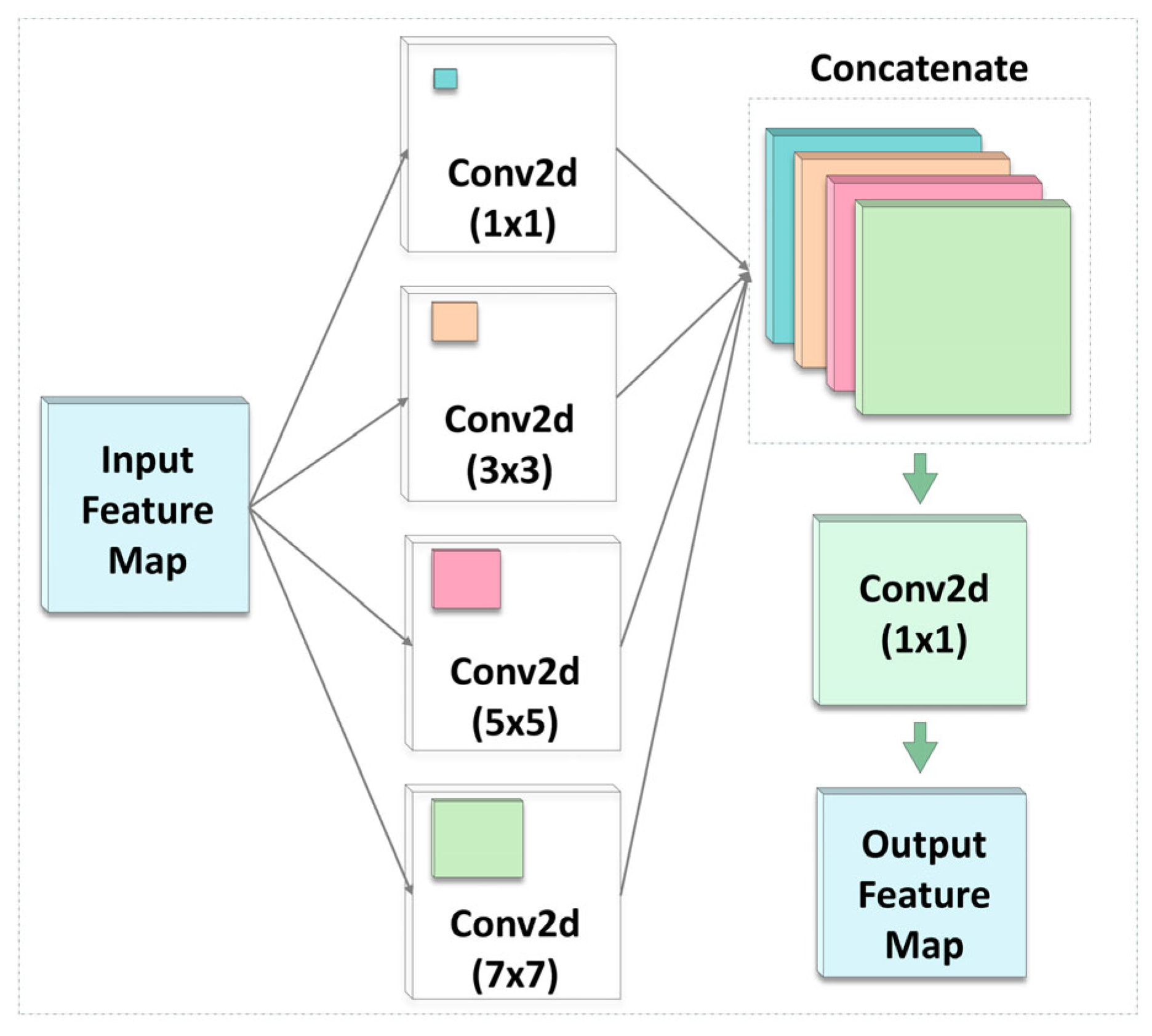

3.1.3. Multi-Scale Feature Aggregation (MSFA)

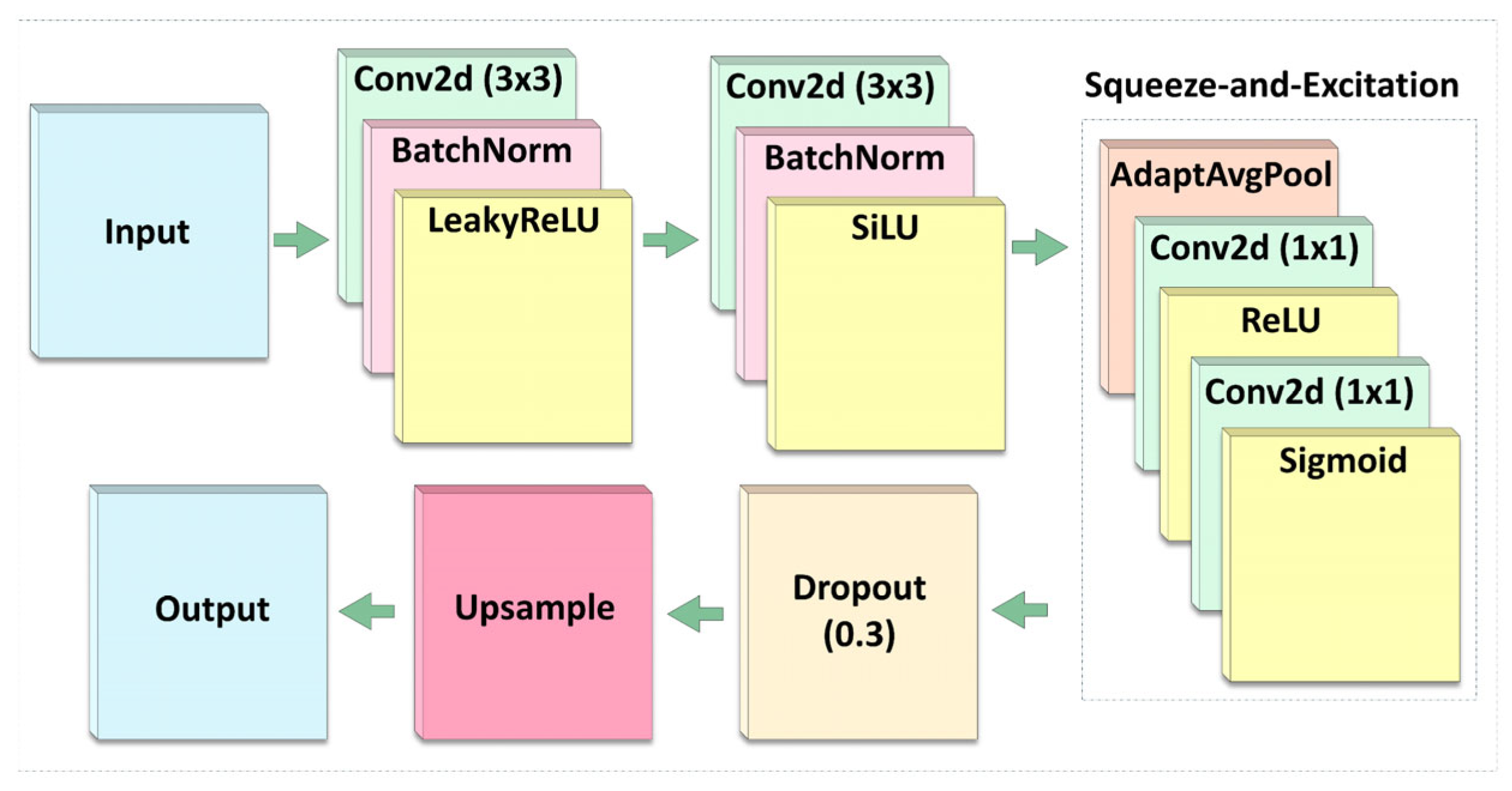

3.1.4. Decoder

3.1.5. AuxHead Module

3.2. Loss Function
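
As a rough illustration of how auxiliary supervision can enter a training objective, the sketch below adds a weighted auxiliary cross-entropy (CE) term to the main CE term. The binary (building vs. background) formulation, the function names, and the auxiliary weight of 0.4 are assumptions made for illustration; they are not taken from the DeepSwinLite implementation.

```python
import math


def cross_entropy(probs, labels, eps=1e-12):
    """Mean binary cross-entropy over pixels.

    probs  : predicted building probabilities in [0, 1]
    labels : ground-truth labels (1 = building, 0 = background)
    """
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(labels)


def total_loss(main_probs, aux_probs, labels, aux_weight=0.4):
    """Main-head CE plus a down-weighted auxiliary-head CE.

    aux_weight is a hypothetical value chosen for this sketch.
    """
    main_term = cross_entropy(main_probs, labels)
    aux_term = cross_entropy(aux_probs, labels)
    return main_term + aux_weight * aux_term


# Toy example with two pixels: a confident main head and an uninformative auxiliary head.
labels = [1, 0]
loss = total_loss([0.9, 0.1], [0.5, 0.5], labels)
print(f"total loss = {loss:.4f}")
```

In a setup like this, the auxiliary term only shapes the gradients during training; at inference time only the main prediction would be used.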

3.3. Evaluation Metrics
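
Precision, recall, F1-score, IoU, and overall accuracy (OA) are conventionally derived from the pixel-level counts of TP, FP, FN, and TN. The following is a minimal sketch of those standard definitions; the function name and the example counts are hypothetical, and any class-level or image-level averaging behind the reported tables is not reproduced here.

```python
def segmentation_metrics(tp, fp, fn, tn):
    """Standard confusion-matrix metrics for a single class, as fractions."""

    def safe_div(num, den):
        # Return 0.0 when the denominator is empty (e.g. no predicted positives).
        return num / den if den else 0.0

    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    f1 = safe_div(2 * precision * recall, precision + recall)
    iou = safe_div(tp, tp + fp + fn)
    oa = safe_div(tp + tn, tp + fp + fn + tn)
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou, "oa": oa}


# Hypothetical counts for a 1000-pixel tile.
metrics = segmentation_metrics(tp=80, fp=10, fn=20, tn=890)
for name, value in metrics.items():
    print(f"{name}: {100 * value:.2f}")
```

Note that IoU penalizes both false positives and false negatives in a single ratio, so it is always less than or equal to the F1-score computed from the same counts.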

4. Experimental Results and Evaluation

4.1. Experimental Setup

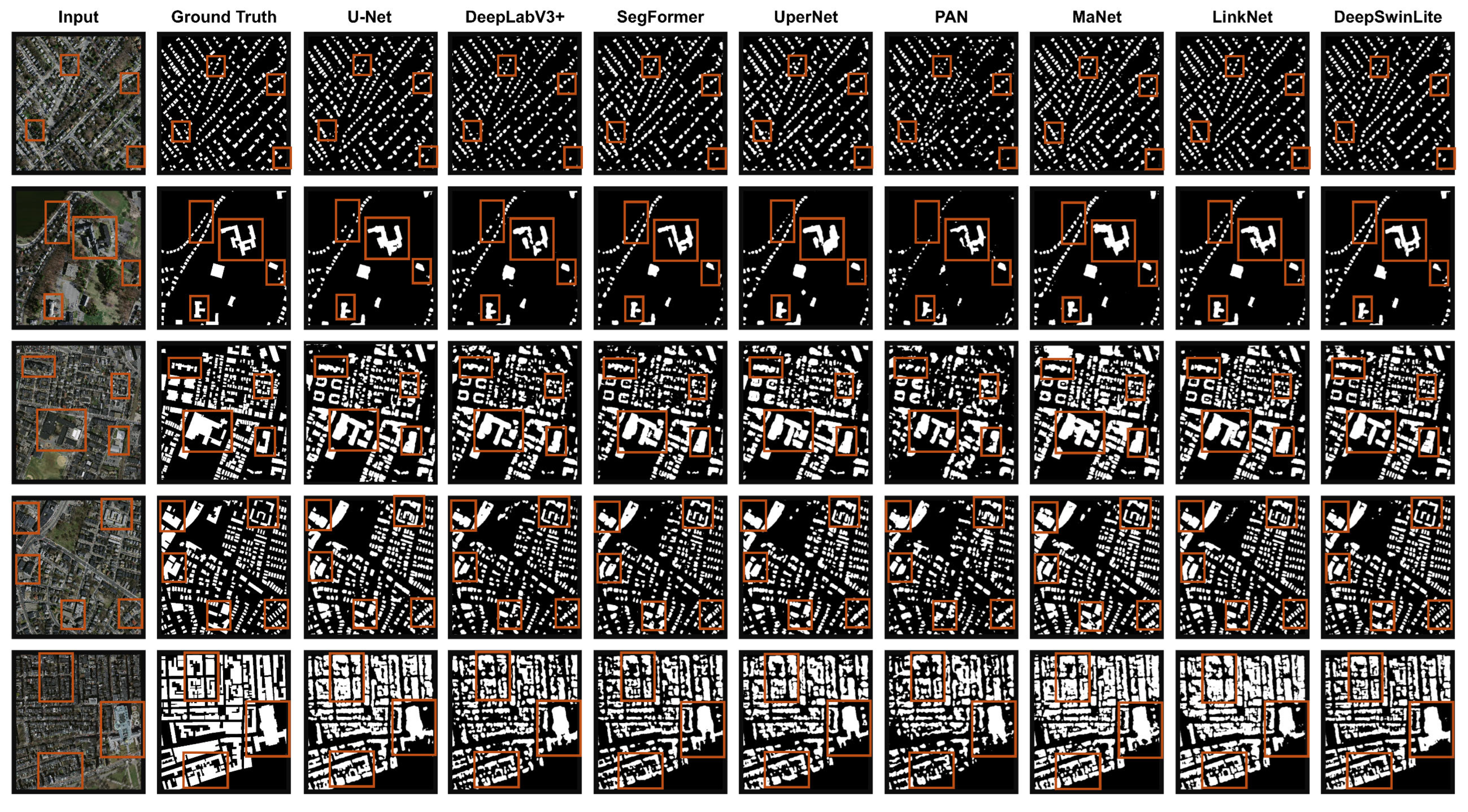

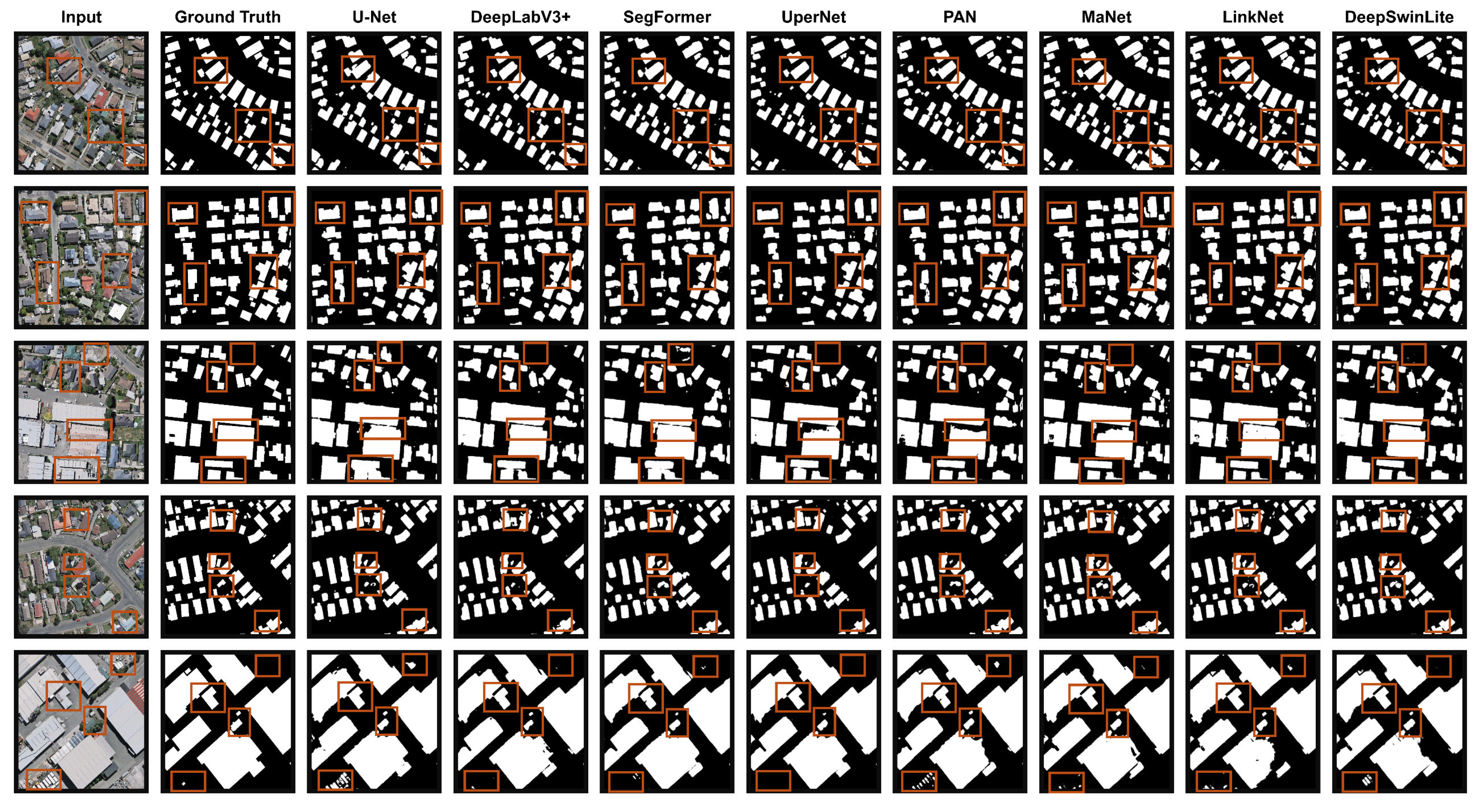

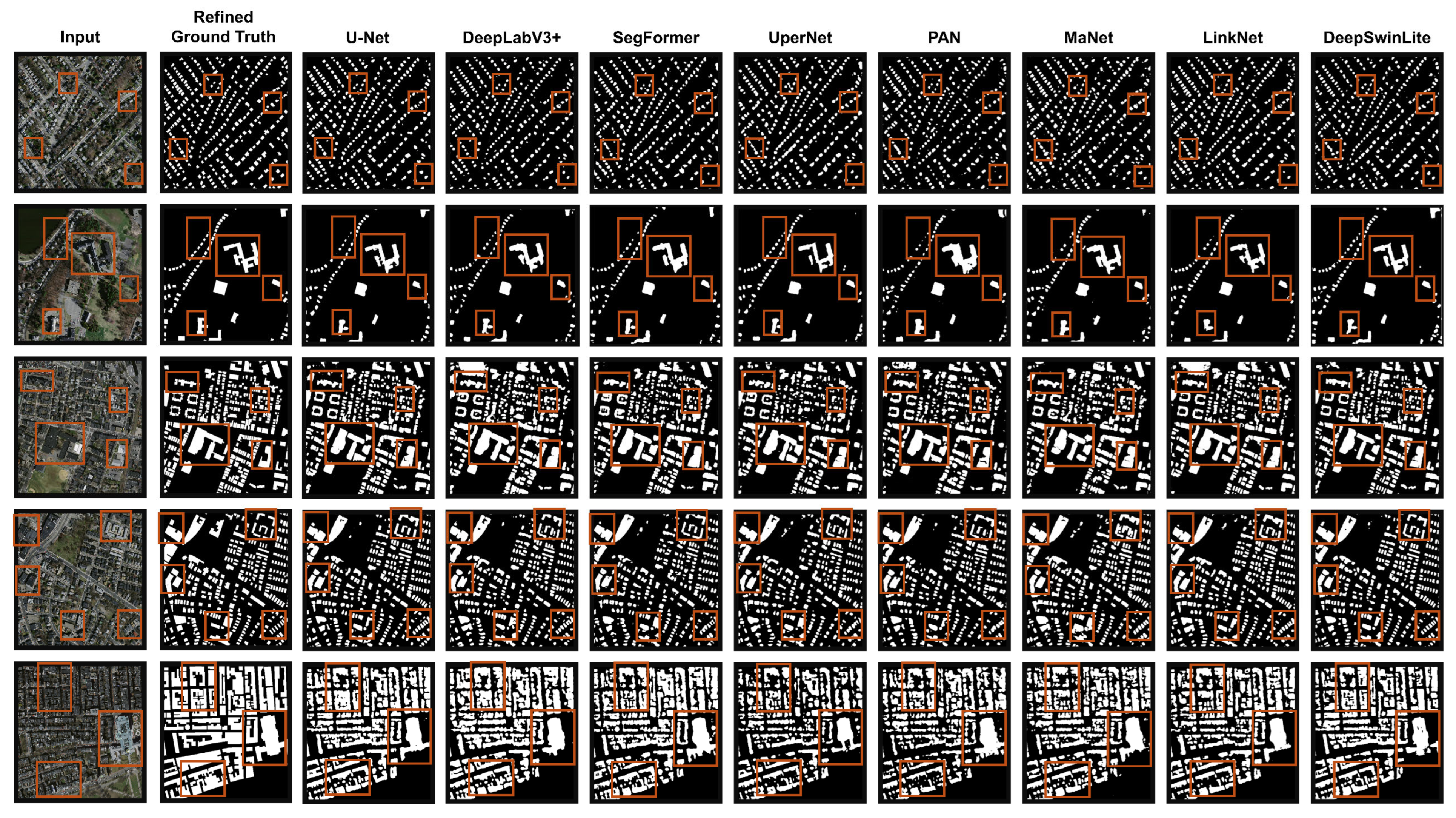

4.2. Experimental Results

4.3. Computational Cost Analysis

4.4. Ablation Study

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AF | Activation Function |
| APP | Adaptive Average Pooling |
| CE | Cross Entropy |
| CNN | Convolutional Neural Network |
| DL | Deep Learning |
| FLOPs | Floating-Point Operations |
| FN | False Negative |
| FP | False Positive |
| G | Giga |
| IoU | Intersection over Union |
| M | Millions |
| MLFP | Multi-Level Feature Pyramid |
| MSFA | Multi-Scale Feature Aggregation |
| OA | Overall Accuracy |
| OSM | OpenStreetMap |
| RGB | Red-Green-Blue |
| RS | Remote Sensing |
| SDGs | Sustainable Development Goals |
| s | Seconds |
| SOTA | State-of-the-Art |
| TN | True Negative |
| TP | True Positive |
| VHR | Very High-Resolution |

References

- Chen, C.; Deng, J.; Lv, N. Illegal Constructions Detection in Remote Sensing Images Based on Multi-Scale Semantic Segmentation. In Proceedings of the IEEE International Conference on Smart Internet of Things, Beijing, China, 14–16 August 2020; pp. 300–303.

- Hu, Q.; Zhen, L.; Mao, Y.; Zhou, X.; Zhou, G. Automated Building Extraction Using Satellite Remote Sensing Imagery. Autom. Constr. 2021, 123, 103509.

- Zhou, W.; Song, Y.; Pan, Z.; Liu, Y.; Hu, Y.; Cui, X. Classification of Urban Construction Land with Worldview-2 Remote Sensing Image Based on Classification and Regression Tree Algorithm. In Proceedings of the IEEE International Conference on Computational Science and Engineering, Guangzhou, China, 21–24 July 2017; pp. 277–283.

- Pan, X.Z.; Zhao, Q.G.; Chen, J.; Liang, Y.; Sun, B. Analyzing the Variation of Building Density Using High Spatial Resolution Satellite Images: The Example of Shanghai City. Sensors 2008, 8, 2541–2550.

- Li, R.; Zheng, S.; Zhang, C.; Duan, C.; Su, J.; Wang, L.; Atkinson, P.M. Multiattention Network for Semantic Segmentation of Fine-Resolution Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5607713.

- Rahnemoonfar, M.; Chowdhury, T.; Murphy, R. RescueNet: A High-Resolution UAV Semantic Segmentation Dataset for Natural Disaster Damage Assessment. Sci. Data 2023, 10, 913.

- Huang, J.; Yang, G. Research on Urban Renewal Based on Semantic Segmentation and Spatial Syntax: Taking Wuyishan City as an Example. In Proceedings of the International Conference on Smart Transportation and City Engineering, Chongqing, China, 6–8 December 2024; pp. 1179–1185.

- Ma, S.; Zhang, X.; Fan, H.; Li, T. An Infrastructure Segmentation Neural Network for UAV Remote Sensing Images. In Proceedings of the International Conference on Robotics, Intelligent Control and Artificial Intelligence, Hangzhou, China, 1–3 December 2023; pp. 226–231.

- Wang, H.; Chen, Y.; Cai, Y.; Chen, L.; Li, Y.; Sotelo, M.A.; Li, Z. SFNet-N: An Improved SFNet Algorithm for Semantic Segmentation of Low-Light Autonomous Driving Road Scenes. IEEE Trans. Intell. Transp. Syst. 2022, 23, 21405–21417.

- Chen, J.; Jiang, Y.; Luo, L.; Gong, W. ASF-Net: Adaptive Screening Feature Network for Building Footprint Extraction from Remote-Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4706413.

- Haghighi Gashti, E.; Delavar, M.R.; Guan, H.; Li, J. Semantic Segmentation Uncertainty Assessment of Different U-Net Architectures for Extracting Building Footprints. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2024, 10, 141–148.

- Herfort, B.; Lautenbach, S.; Porto de Albuquerque, J.; Anderson, J.; Zipf, A. A Spatio-Temporal Analysis Investigating Completeness and Inequalities of Global Urban Building Data in OpenStreetMap. Nat. Commun. 2023, 14, 3985.

- Fang, F.; Zheng, K.; Li, S.; Xu, R.; Hao, Q.; Feng, Y.; Zhou, S. Incorporating Superpixel Context for Extracting Building from High-Resolution Remote Sensing Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 17, 1176–1190.

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep Learning in Remote Sensing: A Comprehensive Review and List of Resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

- United Nations. Transforming Our World: The 2030 Agenda for Sustainable Development. Available online: https://sdgs.un.org/2030agenda (accessed on 30 June 2025).

- Lv, J.; Shen, Q.; Lv, M.; Li, Y.; Shi, L.; Zhang, P. Deep Learning-Based Semantic Segmentation of Remote Sensing Images: A Review. Front. Ecol. Evol. 2023, 11, 1201125.

- Huang, L.; Jiang, B.; Lv, S.; Liu, Y.; Fu, Y. Deep-Learning-Based Semantic Segmentation of Remote Sensing Images: A Survey. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 17, 8370–8396.

- Chen, Y.; Bruzzone, L. Toward Open-World Semantic Segmentation of Remote Sensing Images. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Pasadena, CA, USA, 16–21 July 2023.

- Liu, S.; Ding, W.; Liu, C.; Liu, Y.; Wang, Y.; Li, H. ERN: Edge Loss Reinforced Semantic Segmentation Network for Remote Sensing Images. Remote Sens. 2018, 10, 1339.

- Zhao, D.; Wang, C.; Gao, Y.; Shi, Z.; Xie, F. Semantic Segmentation of Remote Sensing Image Based on Regional Self-Attention Mechanism. IEEE Geosci. Remote Sens. Lett. 2021, 19, 8010305.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the 18th International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 801–818.

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 12077–12090.

- Li, H.; Xiong, P.; An, J.; Wang, L. Pyramid Attention Network for Semantic Segmentation. arXiv 2018, arXiv:1805.10180.

- Fan, T.; Wang, G.; Li, Y.; Wang, H. MA-Net: A Multi-Scale Attention Network for Liver and Tumor Segmentation. IEEE Access 2020, 8, 179656–179665.

- Chaurasia, A.; Culurciello, E. LinkNet: Exploiting Encoder Representations for Efficient Semantic Segmentation. In Proceedings of the IEEE Visual Communications and Image Processing, Petersburg, FL, USA, 10–13 December 2017; pp. 1–4.

- Xiao, T.; Liu, Y.; Zhou, B.; Jiang, Y.; Sun, J. Unified Perceptual Parsing for Scene Understanding. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 418–434.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems 30, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

- Xiao, X.; Guo, W.; Chen, R.; Hui, Y.; Wang, J.; Zhao, H. A Swin Transformer-Based Encoding Booster Integrated in U-Shaped Network for Building Extraction. Remote Sens. 2022, 14, 2611.

- Zhang, R.; Zhang, Q.; Zhang, G. SDSC-UNet: Dual Skip Connection ViT-Based U-Shaped Model for Building Extraction. IEEE Geosci. Remote Sens. Lett. 2023, 20, 6005005.

- Che, Z.; Shen, L.; Huo, L.; Hu, C.; Wang, Y.; Lu, Y.; Bi, F. MAFF-HRNet: Multi-Attention Feature Fusion HRNet for Building Segmentation in Remote Sensing Images. Remote Sens. 2023, 15, 1382.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 9993–10002.

- Guo, H.; Du, B.; Zhang, L.; Su, X. A Coarse-to-Fine Boundary Refinement Network for Building Footprint Extraction from Remote Sensing Imagery. ISPRS J. Photogramm. Remote Sens. 2022, 183, 240–252.

- Li, Y.; Hong, D.; Li, C.; Yao, J.; Chanussot, J. HD-Net: High-Resolution Decoupled Network for Building Footprint Extraction via Deeply Supervised Body and Boundary Decomposition. ISPRS J. Photogramm. Remote Sens. 2024, 209, 51–65.

- Tang, Q.; Li, Y.; Xu, Y.; Du, B. Enhancing Building Footprint Extraction with Partial Occlusion by Exploring Building Integrity. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5650814.

- Ji, S.; Wei, S.; Lu, M. Fully Convolutional Networks for Multi-Source Building Extraction from an Open Aerial and Satellite Imagery Dataset. IEEE Trans. Geosci. Remote Sens. 2018, 57, 547–586.

- Mnih, V. Machine Learning for Aerial Image Labeling. Ph.D. Thesis, University of Toronto, Toronto, ON, Canada, 2013.

- Haklay, M.; Weber, P. OpenStreetMap: User-Generated Street Maps. IEEE Pervasive Comput. 2008, 7, 12–18.

- Khalel, A.; El-Saban, M. Automatic Pixelwise Object Labeling for Aerial Imagery Using Stacked U-Nets. arXiv 2018, arXiv:1803.04953.

- Kavzoglu, T.; Tso, B.; Mather, P.M. Classification Methods for Remotely Sensed Data, 3rd ed.; CRC Press: Boca Raton, FL, USA, 2024; pp. 1–350.

- Kavzoglu, T. Increasing the Accuracy of Neural Network Classification Using Refined Training Data. Environ. Model. Softw. 2009, 24, 850–858.

- Yang, D.; Gao, X.; Yang, Y.; Guo, K.; Han, K.; Xu, L. Advances and Future Prospects in Building Extraction from High-Resolution Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 6994–7016.

- Yu, Y.; Wang, C.; Kou, R.; Wang, H.; Yang, B.; Xu, J.; Fu, Q. Enhancing Building Segmentation with Shadow-Aware Edge Perception. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 1–12.

- Han, J.; Zhan, B. MDBES-Net: Building Extraction from Remote Sensing Images Based on Multiscale Decoupled Body and Edge Supervision Network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 17, 519–534.

- Yuan, W.; Zhang, X.; Shi, J.; Wang, J. LiteST-Net: A Hybrid Model of Lite Swin Transformer and Convolution for Building Extraction from Remote Sensing Image. Remote Sens. 2023, 15, 1996.

- Yan, G.; Jing, H.; Li, H.; Guo, H.; He, S. Enhancing Building Segmentation in Remote Sensing Images: Advanced Multi-Scale Boundary Refinement with MBR-HRNet. Remote Sens. 2023, 15, 3766.

- Zhang, B.; Huang, J.; Wu, F.; Zhang, W. OCANet: An Overcomplete Convolutional Attention Network for Building Extraction from High-Resolution Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 18427–18443.

- Ran, S.; Gao, X.; Yang, Y.; Li, S.; Zhang, G.; Wang, P. Building Multi-Feature Fusion Refined Network for Building Extraction from High-Resolution Remote Sensing Images. Remote Sens. 2021, 13, 2794.

- Li, S.; Bao, T.; Liu, H.; Deng, R.; Zhang, H. Multilevel Feature Aggregated Network with Instance Contrastive Learning Constraint for Building Extraction. Remote Sens. 2023, 15, 2585.

- Li, Y.; Li, Y.; Zhu, X.; Fang, H.; Ye, L. A Method for Extracting Buildings from Remote Sensing Images Based on 3DJA-UNet3+. Sci. Rep. 2024, 14, 19067.

- Yang, D.; Gao, X.; Yang, Y.; Jiang, M.; Guo, K.; Liu, B. CSA-Net: Complex Scenarios Adaptive Network for Building Extraction for Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 938–953.

- Wang, Z.; Liu, Y.; Li, J.; Zhang, X.; Zhang, Y.; Wu, Q.; Wang, H. SCANet: Split Coordinate Attention Network for Building Footprint Extraction. arXiv 2025, arXiv:2507.20809.

- OuYang, C.; Li, H. BuildNext-Net: A Network Based on Self-Attention and Equipped with an Efficient Decoder for Extracting Buildings from High-Resolution Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 16385–16402.

- Chen, Z.; Chen, W.; Zheng, J.; Ding, Y. CRU-Net: An Innovative Network for Building Extraction from Remote Sensing Images Based on Channel Enhancement and Multiscale Spatial Attention with ResNet. Concurr. Comput. Pract. Exp. 2025, 37, e70249.

- Chen, J.; Liu, B.; Yu, A.; Quan, Y.; Li, T.; Guo, W. Depth Feature Fusion Network for Building Extraction in Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 16577–16591.

- Teke, A.; Kavzoglu, T. Exploring the Decision-Making Process of Ensemble Learning Algorithms in Landslide Susceptibility Mapping: Insights from Local and Global Explainable AI Analyses. Adv. Space Res. 2024, 74, 3765–3785.

- Kavzoglu, T.; Bilucan, F. Effects of Auxiliary and Ancillary Data on LULC Classification in A Heterogeneous Environment Using Optimized Random Forest Algorithm. Earth Sci. Inform. 2023, 16, 415–435.

- Kavzoglu, T.; Uzun, Y.K.; Berkan, E.; Yilmaz, E.O. Global-Scale Explainable AI Assessment for OBIA-Based Classification Using Deep Learning and Machine Learning Methods. Adv. Geod. Geoinf. 2025, 74, e62.

- Yilmaz, E.O.; Uzun, Y.K.; Berkan, E.; Kavzoglu, T. Integration of LIME with Segmentation Techniques for SVM Classification in Sentinel-2 Imagery. Adv. Geod. Geoinf. 2025, 74, e61.

| Model | Precision | Recall | IoU | F1-Score | Accuracy |
|---|---|---|---|---|---|
| U-Net [21] | 87.26 | 86.05 | 77.47 | 86.64 | 92.27 |
| DeepLabV3+ [22] | 86.14 | 81.60 | 73.36 | 83.60 | 90.95 |
| SegFormer [23] | 87.19 | 83.42 | 75.41 | 85.13 | 91.70 |
| UperNet [27] | 86.36 | 84.42 | 75.66 | 85.34 | 91.62 |
| PAN [24] | 82.81 | 77.17 | 69.78 | 80.21 | 92.26 |
| MANet [25] | 85.65 | 86.87 | 76.85 | 86.24 | 91.78 |
| LinkNet [26] | 87.10 | 84.54 | 76.22 | 85.74 | 91.91 |
| DeepSwinLite | 87.98 | 86.03 | 77.94 | 86.96 | 92.54 |

| Model | Precision | Recall | IoU | F1-Score | Accuracy |
|---|---|---|---|---|---|
| U-Net [21] | 93.89 | 95.92 | 90.52 | 94.87 | 97.93 |
| DeepLabV3+ [22] | 95.16 | 94.82 | 90.72 | 94.99 | 98.03 |
| SegFormer [23] | 93.77 | 94.73 | 89.45 | 94.24 | 97.70 |
| UperNet [27] | 96.40 | 93.69 | 90.73 | 94.99 | 98.08 |
| PAN [24] | 95.53 | 93.62 | 89.96 | 94.54 | 97.89 |
| MANet [25] | 94.29 | 95.69 | 90.69 | 94.97 | 97.98 |
| LinkNet [26] | 95.38 | 94.69 | 90.79 | 95.03 | 98.05 |
| DeepSwinLite | 95.47 | 96.02 | 92.02 | 95.74 | 98.32 |

| Model | Precision | Recall | IoU | F1-Score | Accuracy |
|---|---|---|---|---|---|
| U-Net [21] | 89.68 | 85.69 | 79.03 | 87.53 | 94.35 |
| DeepLabV3+ [22] | 88.84 | 83.87 | 77.06 | 86.11 | 93.79 |
| SegFormer [23] | 88.35 | 84.93 | 77.61 | 86.52 | 93.85 |
| UperNet [27] | 88.68 | 84.13 | 77.18 | 86.20 | 93.80 |
| PAN [24] | 87.59 | 83.13 | 75.76 | 85.15 | 93.33 |
| MANet [25] | 87.62 | 83.78 | 76.29 | 85.55 | 93.45 |
| LinkNet [26] | 88.13 | 85.29 | 77.75 | 86.63 | 93.86 |
| DeepSwinLite | 90.44 | 86.14 | 79.86 | 88.11 | 94.63 |

| Model | FLOPs (G) | Param. (M) | Epoch Time, Massachusetts (s/epoch) | Epoch Time, WHU (s/epoch) |
|---|---|---|---|---|
| U-Net [21] | 42.87 | 32.52 | 130.0 | 346.0 |
| DeepLabV3+ [22] | 36.91 | 26.68 | 119.0 | 326.0 |
| SegFormer [23] | 21.19 | 24.70 | 149.0 | 583.0 |
| UperNet [27] | 29.01 | 25.57 | 112.0 | 586.0 |
| PAN [24] | 34.92 | 24.26 | 93.0 | 310.0 |
| MANet [25] | 74.65 | 147.44 | 98.0 | 335.0 |
| LinkNet [26] | 43.15 | 31.18 | 120.0 | 350.0 |
| DeepSwinLite | 37.17 | 7.81 | 96.0 | 304.0 |
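
To make the efficiency comparison concrete, the short sketch below computes how much lower (or higher) the DeepSwinLite parameter count and FLOPs are relative to each baseline. The FLOPs and parameter values are copied from the table above; the dictionary layout and the helper name `relative_reduction` are assumptions made for this illustration.

```python
# (FLOPs in G, parameters in M), copied from the computational cost table.
COSTS = {
    "U-Net": (42.87, 32.52),
    "DeepLabV3+": (36.91, 26.68),
    "SegFormer": (21.19, 24.70),
    "UperNet": (29.01, 25.57),
    "PAN": (34.92, 24.26),
    "MANet": (74.65, 147.44),
    "LinkNet": (43.15, 31.18),
}
OURS = (37.17, 7.81)  # DeepSwinLite


def relative_reduction(baseline: float, ours: float) -> float:
    """Percentage by which `ours` is lower than `baseline` (negative if higher)."""
    return 100.0 * (1.0 - ours / baseline)


for name, (flops, params) in COSTS.items():
    param_cut = relative_reduction(params, OURS[1])
    flops_cut = relative_reduction(flops, OURS[0])
    print(f"{name:11s} params: {param_cut:6.1f}%   FLOPs: {flops_cut:6.1f}%")
```

With these figures, DeepSwinLite has roughly 76% fewer parameters than U-Net. Its FLOPs are lower than those of U-Net, MANet, and LinkNet but higher than those of DeepLabV3+, SegFormer, UperNet, and PAN, so the parameter savings do not translate uniformly into FLOPs savings.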

| Model Configuration | Precision | Recall | IoU | F1-Score | Accuracy | FLOPs (G) | Param. (M) | Epoch Time (s/epoch) |
|---|---|---|---|---|---|---|---|---|
| DeepSwinLite | 87.98 | 86.03 | 77.94 | 86.96 | 92.54 | 37.17 | 7.81 | 96.0 |
| w/o AuxHead | 88.44 | 84.20 | 76.77 | 86.10 | 92.28 | 35.96 | 7.74 | 114.0 |
| w/o MLFP | 83.66 | 78.89 | 69.97 | 80.95 | 89.59 | 6.44 | 10.33 | 98.0 |
| w/o MSFA | 86.72 | 84.65 | 76.69 | 85.64 | 90.06 | 13.54 | 6.37 | 85.0 |
| w/ AuxHead only | 84.53 | 80.54 | 72.33 | 82.36 | 92.92 | 5.61 | 6.96 | 69.4 |
| w/ MLFP only | 87.11 | 83.06 | 75.60 | 84.92 | 93.93 | 12.34 | 6.30 | 77.2 |
| w/ MSFA only | 83.97 | 81.66 | 72.18 | 82.74 | 90.19 | 6.42 | 10.25 | 66.0 |

| Model | Precision | Recall | F1-Score | Accuracy | IoU | Param. (M) | FLOPs (G) |
|---|---|---|---|---|---|---|---|
| DeepSwinLite | 87.98 | 86.03 | 86.96 | 92.54 | 77.94 | 7.81 | 37.17 |
| SCTM [43] | - | - | - | 87.66 | 69.71 | 271.52 | - |
| MDBES-Net [44] | 86.88 | 84.21 | 85.52 | - | 75.55 | 26.42 | - |
| LiteST-Net [45] | - | - | 76.10 | 92.50 | 76.50 | 18.03 | - |
| MBR-HRNet [46] | 86.40 | 80.85 | 83.53 | - | 70.97 | 31.02 | 68.71 |
| OCANet [47] | 84.48 | 81.42 | 82.92 | - | 70.82 | 38.42 | 68.45 |
| BMFR-Net [48] | 85.39 | 84.89 | 85.14 | 94.46 | 74.12 | 20.00 | - |
| MFA-Net [49] | 87.11 | 83.84 | 85.44 | - | 74.58 | - | - |
| 3DJA-UNet3+ [50] | - | - | 86.96 | 92.04 | 77.86 | 16.05 | - |
| MAFF-HRNet [31] | 83.15 | 79.29 | 81.17 | - | 68.32 | - | - |
| CSA-Net [51] | 87.27 | 82.44 | 84.79 | 94.47 | 73.59 | 20.00 | 40.10 |
| SCANet [52] | 88.38 | 84.30 | 86.29 | - | 75.49 | 73.2 | - |
| BuildNext-Net [53] | 88.01 | 84.92 | 86.44 | 95.27 | 76.12 | 31.65 | 38.45 |
| CRU-Net [54] | 86.78 | 81.77 | 84.20 | - | 72.71 | - | - |
| DFF-Net [55] | 87.20 | 81.30 | 84.20 | - | 72.60 | 32.15 | 222.49 |

| Model | Precision | Recall | F1-Score | Accuracy | IoU | Param. (M) | FLOPs (G) |
|---|---|---|---|---|---|---|---|
| DeepSwinLite | 95.47 | 96.02 | 95.74 | 98.32 | 92.02 | 7.81 | 37.17 |
| SCTM [43] | - | - | - | 89.75 | 78.17 | 271.52 | - |
| MDBES-Net [44] | 95.74 | 95.63 | 95.68 | - | 91.78 | 26.42 | - |
| LiteST-Net [45] | - | - | 92.50 | 98.40 | 92.10 | 18.30 | - |
| MBR-HRNet [46] | 95.48 | 94.88 | 95.18 | - | 91.31 | 31.02 | 68.71 |
| OCANet [47] | 95.40 | 94.53 | 94.96 | - | 90.41 | 38.42 | 68.45 |
| BMFR-Net [48] | 94.31 | 94.42 | 94.36 | 98.74 | 89.32 | 20.00 | - |
| MFA-Net [49] | 94.64 | 96.02 | 95.33 | - | 91.07 | - | - |
| 3DJA-UNet3+ [50] | - | - | 95.15 | 98.07 | 91.13 | 16.05 | - |
| MAFF-HRNet [31] | 95.90 | 95.43 | 95.66 | - | 91.69 | - | - |
| CSA-Net [51] | 95.41 | 93.80 | 94.60 | 98.81 | 89.75 | 20.00 | 40.10 |
| SCANet [52] | 95.92 | 95.67 | 95.79 | - | 91.61 | 73.2 | - |
| BuildNext-Net [53] | 95.31 | 95.49 | 95.40 | 98.97 | 91.21 | 31.65 | 38.45 |
| CRU-Net [54] | 93.15 | 97.47 | 95.26 | - | 90.95 | - | - |
| DFF-Net [55] | 95.40 | 94.60 | 95.00 | - | 90.50 | 32.15 | 222.49 |

| Model A | Model B | b (A✓, B✗) | c (A✗, B✓) | χ² (cc) | p-Value | Significance |
|---|---|---|---|---|---|---|
| DeepSwinLite | DeepLabV3+ | 86,106 | 58,044 | 5462.50 | <0.001 | Yes |
| DeepSwinLite | PAN | 65,277 | 119,177 | 26,762.05 | <0.001 | Yes |
| DeepSwinLite | UperNet | 79,164 | 60,011 | 2635.52 | <0.001 | Yes |
| DeepSwinLite | MANet | 80,934 | 63,512 | 31,347.21 | <0.001 | Yes |
| DeepSwinLite | SegFormer | 71,113 | 99,987 | 592.21 | <0.001 | Yes |
| DeepSwinLite | LinkNet | 63,144 | 120,670 | 542.15 | <0.001 | Yes |
| DeepSwinLite | U-Net | 61,117 | 60,298 | 5.51 | 0.0189 | Yes |

| Model A | Model B | b (A✓, B✗) | c (A✗, B✓) | χ² (cc) | p-Value | Significance |
|---|---|---|---|---|---|---|
| DeepSwinLite | PAN | 25,157 | 17,386 | 1419.10 | <0.001 | Yes |
| DeepSwinLite | SegFormer | 25,206 | 18,250 | 1113.12 | <0.001 | Yes |
| DeepSwinLite | U-Net | 22,508 | 18,613 | 368.74 | <0.001 | Yes |
| DeepSwinLite | MANet | 16,609 | 20,249 | 359.27 | <0.001 | Yes |
| DeepSwinLite | UperNet | 20,318 | 22,355 | 97.14 | <0.001 | Yes |
| DeepSwinLite | DeepLabV3+ | 19,878 | 18,441 | 53.81 | <0.001 | Yes |
| DeepSwinLite | LinkNet | 17,706 | 18,260 | 8.50 | 0.0035 | Yes |
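
The column headers b (A✓, B✗), c (A✗, B✓), and χ² (cc) appear to correspond to McNemar's test with continuity correction, where b and c count the samples on which exactly one of the two models is correct. A minimal sketch of that computation follows, assuming the statistic is (|b − c| − 1)² / (b + c) with one degree of freedom; the function name `mcnemar_cc` is hypothetical.

```python
import math


def mcnemar_cc(b: int, c: int) -> tuple[float, float]:
    """Continuity-corrected McNemar chi-square statistic and its p-value.

    b : samples classified correctly by model A but not by model B
    c : samples classified correctly by model B but not by model A
    """
    if b + c == 0:
        raise ValueError("b + c must be positive")
    chi2 = (abs(b - c) - 1) ** 2 / (b + c)
    # For one degree of freedom, the chi-square survival function
    # reduces to erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p_value


# Discordant counts for DeepSwinLite vs. U-Net from the first table above.
chi2, p = mcnemar_cc(61_117, 60_298)
print(f"chi2 = {chi2:.2f}, p = {p:.4f}")
```

For the U-Net row this should reproduce the tabulated χ² of 5.51 and a p-value close to 0.0189. Because the test uses only the discordant counts, a small p-value indicates that the two models disagree asymmetrically, and the sign of b − c shows which model is correct more often on the discordant samples.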

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yilmaz, E.O.; Kavzoglu, T. DeepSwinLite: A Swin Transformer-Based Light Deep Learning Model for Building Extraction Using VHR Aerial Imagery. Remote Sens. 2025, 17, 3146. https://doi.org/10.3390/rs17183146
