1. Introduction

The integration of remote sensing (RS) techniques with Deep Learning (DL) methodologies has emerged as a highly effective approach for two-dimensional (2D) and three-dimensional (3D) building feature extraction [1,2]. Still, accurately estimating 3D changes in urban environments remains challenging due to factors such as occlusion by neighboring structures, variations in building materials, and complex urban geometries [3,4].

Focusing on 3D feature extraction, many building height estimation methods rely on stereo imagery and Digital Elevation Models (DEMs) [5,6,7]. These approaches leverage elevation differences to extract structural information, enabling the estimation of building heights and urban morphology. However, they depend on the availability and accuracy of high-quality elevation data, which may not always be accessible or up to date.
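As a minimal illustration of how elevation differences yield heights, the sketch below subtracts a digital terrain model (DTM) from a digital surface model (DSM) to obtain a normalized DSM (nDSM) and takes the median over a building footprint. The function names, the toy grids, and the choice of the median as the per-building statistic are illustrative assumptions, not the procedure of any cited work.

```python
from statistics import median

def ndsm(dsm, dtm):
    """Normalized DSM: per-pixel height above terrain (DSM minus DTM)."""
    return [[s - t for s, t in zip(row_s, row_t)] for row_s, row_t in zip(dsm, dtm)]

def building_height(dsm, dtm, footprint_mask):
    """Median nDSM value over the pixels flagged True in the footprint mask."""
    heights = ndsm(dsm, dtm)
    inside = [h for row_h, row_m in zip(heights, footprint_mask)
              for h, m in zip(row_h, row_m) if m]
    return median(inside)

# Toy 2 x 3 grids (metres): the left 2 x 2 block is a building roof.
dsm = [[120.0, 121.0, 100.5],
       [119.5, 120.5, 100.2]]
dtm = [[100.0, 100.0, 100.0],
       [100.0, 100.0, 100.0]]
mask = [[True, True, False],
        [True, True, False]]

print(building_height(dsm, dtm, mask))  # 20.25
```

The median makes the estimate less sensitive to roof superstructures and edge pixels than the mean, but the result is still only as good as the DSM and DTM, which is the dependency on elevation data noted above.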

More recent approaches to building height estimation can be grouped into three main categories according to the imagery they use:

Multimodal fusion techniques that combine radar and optical data to enable the simultaneous extraction of complementary features from both image sources. This integration allows for a geometric analysis of the interactions between sunlight, buildings, and shadows in optical imagery, while also taking advantage of the all-weather, day-and-night imaging capabilities of radar data.

Methodologies that rely on a time series of images from a single data source, either radar or optical. This strategy is motivated by the desire to simplify pre-processing, reduce computational and storage overhead, and minimize the errors that can arise during data fusion.

Methodologies that rely solely on a single image from a single data source, aiming at the best trade-off between the simplicity of data acquisition and the detailed structural information necessary for precise analysis.

With respect to the first group of approaches, a good example is [8], which introduces the Building Height Estimating Network (BHE-NET) to process Sentinel-1 and Sentinel-2 data in parallel. The network is built on the U-Net architecture [9]. Similarly, [10] proposes the Multimodal Building Height Regression Network (MBHR-Net), a DL model specifically designed to process and analyze data from the same two sources. The core innovation of [11], instead, is a multi-level cross-fusion approach that integrates features from synthetic aperture radar (SAR) and electro-optical (EO) images. The model incorporates semantic information, which is particularly beneficial in densely built environments because it helps differentiate building structures and their respective heights. Finally, [12] introduces the Temporally Attentive and Swin Transformer-enhanced dual-task UNet model (T-SwinUNet), which simultaneously performs feature extraction, building height estimation, and building segmentation. The model leverages complex multimodal time series, specifically 12 months of Sentinel-1 SAR and Sentinel-2 MSI imagery including both ascending and descending passes, to capture local and global spatial patterns.

Among the second group of approaches, [13] introduces a novel methodology to estimate building heights using only Sentinel-1 Ground Range Detected (GRD) data. By combining the VVH indicator with reference building heights derived from airborne LiDAR data, the authors developed a calibrated building height estimation model grounded on reliable reference points from seven U.S. cities. As another example, [14] discusses the difficulty that satellite-based altimetry methods have in distinguishing photons reflected by buildings from those reflected by other objects, and proposes a self-adaptive method that uses Ice, Cloud and Land Elevation Satellite (ICESat-2) and Global Ecosystem Dynamics Investigation (GEDI) data to estimate building heights in Los Angeles and New York.

In the third group, some methodologies group 2D primitives, such as edges and segments, to infer building heights and delineate urban structures [15]. However, these approaches generally rely on auxiliary cues, such as shadows and wall evidence, to infer heights. As a result, they are highly dependent on specific imaging conditions, such as oblique viewing angles or particular times of day, when shadows and structural details are more pronounced. This dependency can limit their applicability, since variations in sun position, atmospheric conditions, and sensor orientation may affect the consistency and reliability of the height estimates.
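The dependency on imaging conditions can be made concrete with the classic shadow-based height relation for optical imagery. Under simplifying assumptions (flat terrain, near-nadir view, a fully visible shadow measured along the sun azimuth), a building of height h casts a shadow of length L = h / tan(α), where α is the sun elevation, so h = L · tan(α). The sketch below is a textbook approximation, not the method of [15]; the function names are hypothetical.

```python
import math

def height_from_shadow(shadow_length_m, sun_elevation_deg):
    """h = L * tan(alpha): flat terrain, near-nadir view, fully visible shadow."""
    return shadow_length_m * math.tan(math.radians(sun_elevation_deg))

def shadow_from_height(height_m, sun_elevation_deg):
    """Inverse relation: shadow length cast by a building of the given height."""
    return height_m / math.tan(math.radians(sun_elevation_deg))

# The same 30 m building casts very different shadows at different times of day.
for elevation in (20.0, 45.0, 70.0):
    length = shadow_from_height(30.0, elevation)
    print(f"sun elevation {elevation:4.1f} deg -> shadow {length:5.1f} m")
```

At high sun elevations the shadow shrinks to a few metres and may be hidden by neighbouring buildings, which is one reason these methods are sensitive to acquisition time.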

Another interesting development is the work described in [16], which integrates 2D and 3D contextual information through a contour-based grouping hierarchy derived from the principles of perceptual organization. Using a Markov Random Field (MRF) model, this approach estimates building heights from a single remote sensing image and generates rooftop hypotheses without requiring explicit 3D data, although its final accuracy is limited. More recent works [17,18] demonstrate the feasibility of estimating building heights from single very-high-resolution (VHR) SAR images. In particular, [17] proposes a DL model, trained on over 50 TerraSAR-X images from eight cities, that reconstructs urban height maps from single VHR SAR images by integrating sensor-specific knowledge. The method converts SAR intensity images into shadow maps to filter out low-intensity pixels, then applies mosaicking and filtering to refine the height estimates. Complementarily, [18] introduces an object-based approach that estimates building heights via bounding-box regression between the footprint and building bounding boxes, an instance-based formulation that is particularly effective for structures with clearly defined footprints.
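The shadow-map step attributed to [17] can be pictured as thresholding calibrated intensity: radar shadow returns almost no energy, so pixels whose backscatter in decibels falls below a cutoff are flagged. The sketch below assumes linear-scale intensity and an arbitrary -15 dB cutoff; both the threshold and the function names are hypothetical, and the actual procedure in [17] (including mosaicking and filtering) is more elaborate.

```python
import math

def to_db(intensity, floor=1e-10):
    """Convert linear intensity to decibels, clamping zeros to a small floor."""
    return 10.0 * math.log10(max(intensity, floor))

def shadow_mask(intensity_image, threshold_db=-15.0):
    """True where the pixel is darker than the threshold (candidate radar shadow)."""
    return [[to_db(v) < threshold_db for v in row] for row in intensity_image]

# Toy intensity patch (linear scale): bright facade on the left, dark shadow on the right.
patch = [[0.50, 0.010, 0.001],
         [1.20, 0.020, 0.000]]
print(shadow_mask(patch))  # [[False, True, True], [False, True, True]]
```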

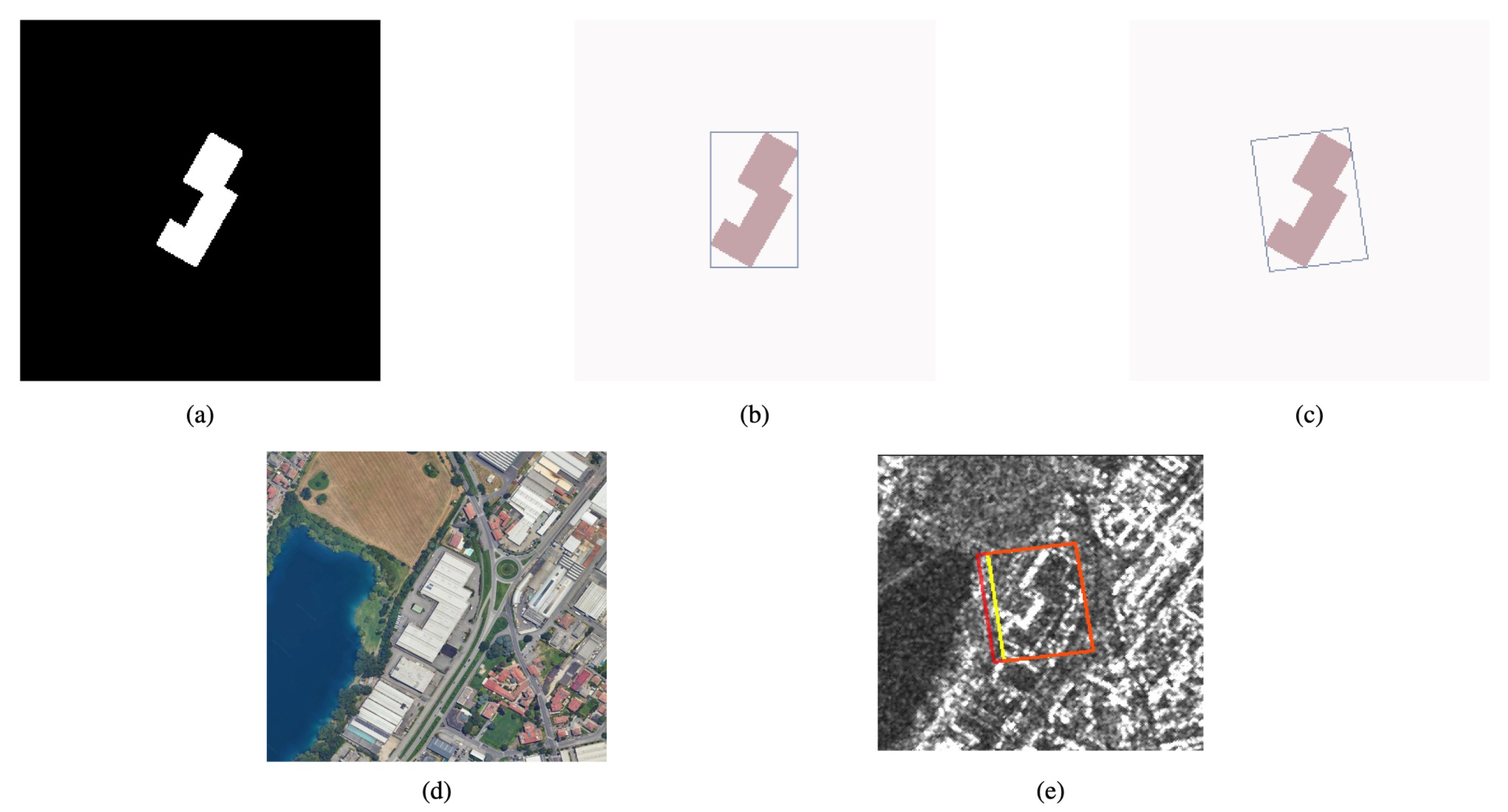

Building upon the strategies described above, this paper aims to harness the detailed information in single VHR SAR images to deliver accurate and efficient 3D urban structure analysis without the complexities of multi-source data fusion or multi-temporal analysis. An object-based approach is thus proposed, which uses footprint geometries to perform instance-based height estimation. Like Sun et al. [18], the method exploits building boundaries, but it introduces several key distinctions. First, while Sun et al. employed four input features, including the bounding box center coordinates, this approach uses only the bounding box dimensions (length and width), because we found that the center coordinates contribute only marginally to height prediction when the box is well aligned with the footprint, as shown later. This simplification reduces model complexity and the risk of overfitting to spatial patterns. Second, unlike [18], the methodology presented in this paper explicitly operates in the ground range domain, avoiding the need to reproject data into the SAR image (slant range) plane. Instead of computing bounding boxes aligned with the slant-range geometry, as in Sun et al., our method accounts for the orbit geometry and incidence angle by directly rotating and adjusting the footprint-aligned bounding box (FBB) in ground coordinates. This preserves geometric consistency with the SAR acquisition geometry while simplifying the processing chain.
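To make the ground-range formulation concrete, the sketch below expresses a footprint polygon in along-track (azimuth) and across-track (ground-range) axes by projecting its vertices onto unit vectors derived from the satellite heading, and returns the extent along each axis as the FBB length and width. This is an illustrative reading only: the heading convention (clockwise from north), the right-looking assumption, and the function name `fbb_dimensions` are hypothetical, and the incidence-angle adjustment described in the text is not modelled here.

```python
import math

def fbb_dimensions(footprint_en, heading_deg):
    """Return (length, width) of a footprint's bounding box in SAR-aligned axes.

    footprint_en: (east, north) vertex coordinates in metres, local projected frame.
    heading_deg: satellite flight direction, clockwise from north (assumed convention).
    A right-looking sensor is assumed, so ground range points 90 deg clockwise
    of the flight direction.
    """
    psi = math.radians(heading_deg)
    azimuth_axis = (math.sin(psi), math.cos(psi))   # along-track unit vector
    range_axis = (math.cos(psi), -math.sin(psi))    # across-track unit vector
    az = [e * azimuth_axis[0] + n * azimuth_axis[1] for e, n in footprint_en]
    rg = [e * range_axis[0] + n * range_axis[1] for e, n in footprint_en]
    return max(az) - min(az), max(rg) - min(rg)

# A 40 m (east-west) by 20 m (north-south) rectangular footprint.
footprint = [(0.0, 0.0), (40.0, 0.0), (40.0, 20.0), (0.0, 20.0)]

print(fbb_dimensions(footprint, 0.0))  # (20.0, 40.0): flying due north
length, width = fbb_dimensions(footprint, 45.0)
print(f"heading 45 deg: length={length:.2f} m, width={width:.2f} m")
```

The box dimensions change with orbit direction even though the building does not, which is why, in this reading, they are computed from the acquisition geometry rather than from the footprint alone.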

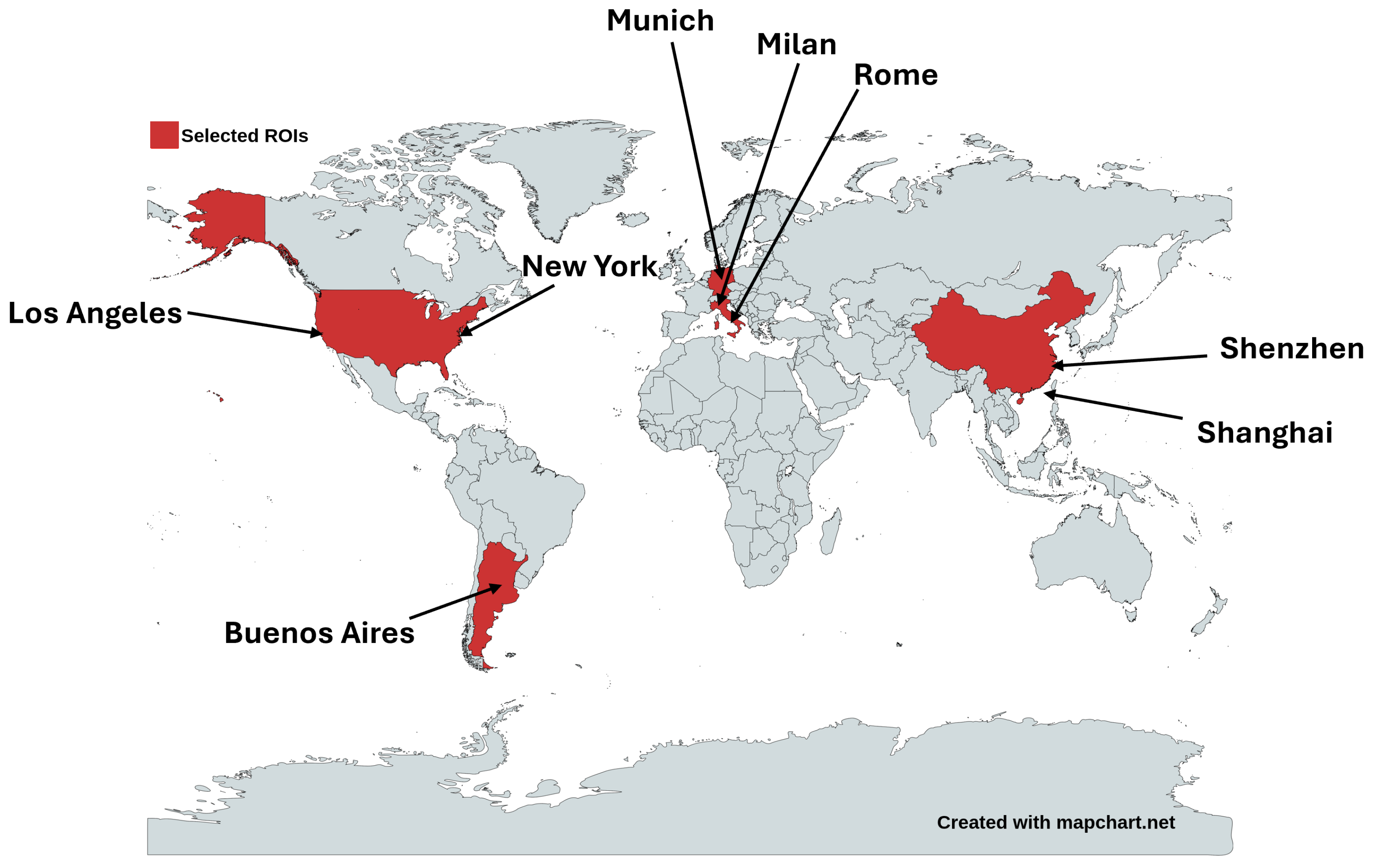

To test the proposed methodology, this study systematically evaluates its strengths and limitations across different urban morphologies. The approach is applied to COSMO-SkyMed VHR radar images acquired over a geographically diverse set of cities: Buenos Aires, Los Angeles, Milan, Munich, New York, Rome, Shanghai, and Shenzhen. Because these cities span a wide spectrum of urban environments, and the COSMO-SkyMed data share a consistent orbital direction and acquisition mode (as detailed in Table 1), the dataset supports training a robust and generalizable model.
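Reading the evaluation as out-of-distribution (each test city is excluded from training, as stated in the Discussion), the eight cities naturally give a leave-one-city-out protocol. The generator below is a minimal sketch of that split under this assumption; it does not reproduce the authors' data loading, and the name `leave_one_city_out` is hypothetical.

```python
from typing import Iterator, List, Tuple

CITIES = ["Buenos Aires", "Los Angeles", "Milan", "Munich",
          "New York", "Rome", "Shanghai", "Shenzhen"]

def leave_one_city_out(cities: List[str]) -> Iterator[Tuple[List[str], str]]:
    """Yield (training cities, held-out test city) pairs, one per city."""
    for test_city in cities:
        train_cities = [c for c in cities if c != test_city]
        yield train_cities, test_city

for train_cities, test_city in leave_one_city_out(CITIES):
    print(f"test: {test_city:<12} | train: {', '.join(train_cities)}")
```

Because no building from the test city is seen during training, a city whose height distribution differs strongly from the others is effectively evaluated outside the training range.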

The rest of the article is organized as follows. Section 2 provides an overview of the study area and a detailed description of the datasets, including reference data acquisition, VHR SAR image selection, and pre-processing steps; Section 2.2 presents the proposed object-based methodology for building height estimation. Section 3 reports a detailed analysis of the performance of the proposed method in estimating building heights in different cities. Section 4 discusses the results and compares them with state-of-the-art approaches, and finally, Section 5 summarizes the main findings of the study.

4. Discussion

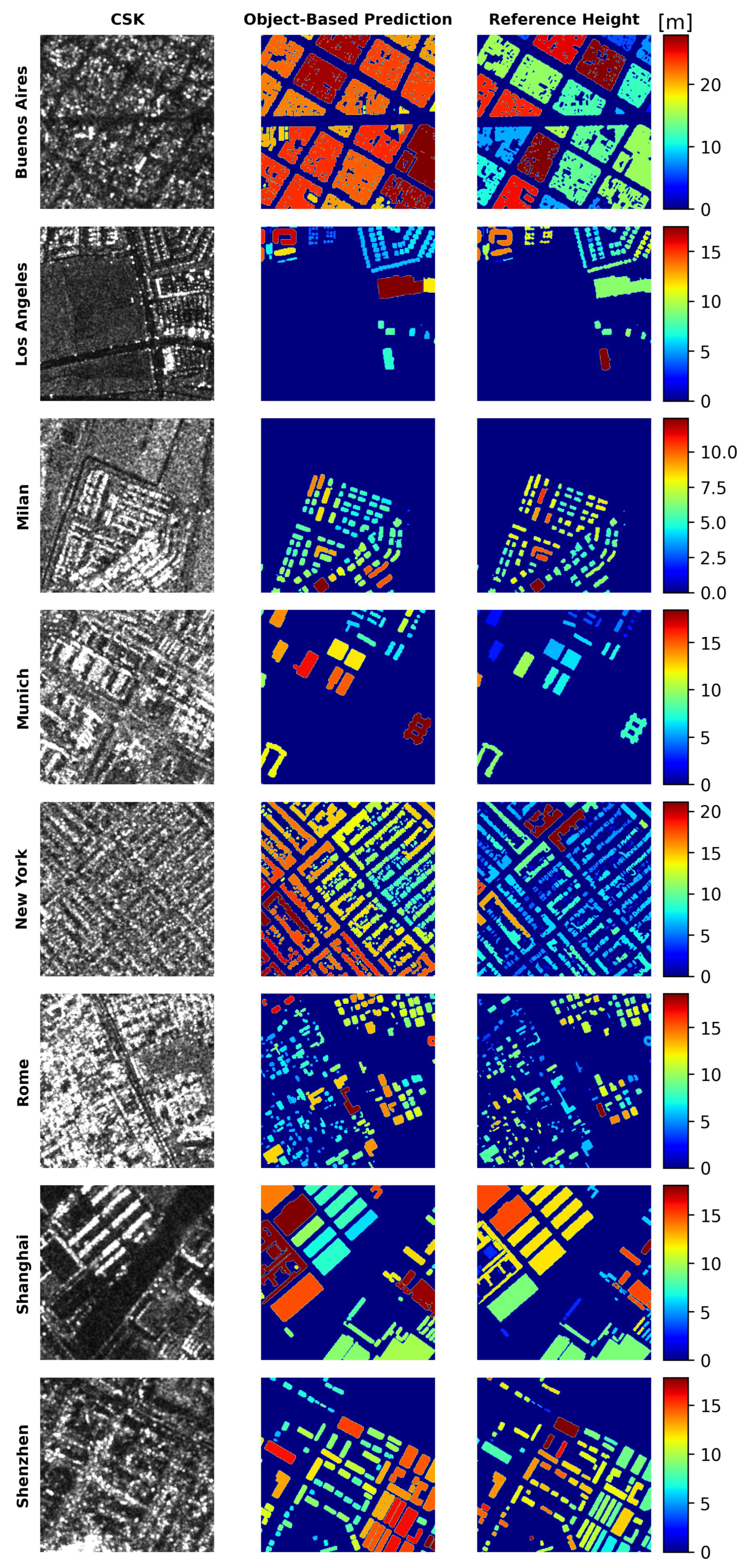

The results reveal a complex interplay between methodology and urban environment. In Buenos Aires, the relatively poor performance of the method can likely be attributed to the exceptionally high building density, which complicates object identification and delineation in SAR imagery. The extensive overlap of structures poses a significant challenge for any object-based methodology, which relies on accurate building bounding box detection. A similar behavior is observed for New York, where a contributing factor is the exceptionally high density of very tall buildings in specific areas of the city. For instance, Manhattan has one of the highest densities of buildings exceeding 40 m in height, a feature not equally present in the other cities analyzed in this study.

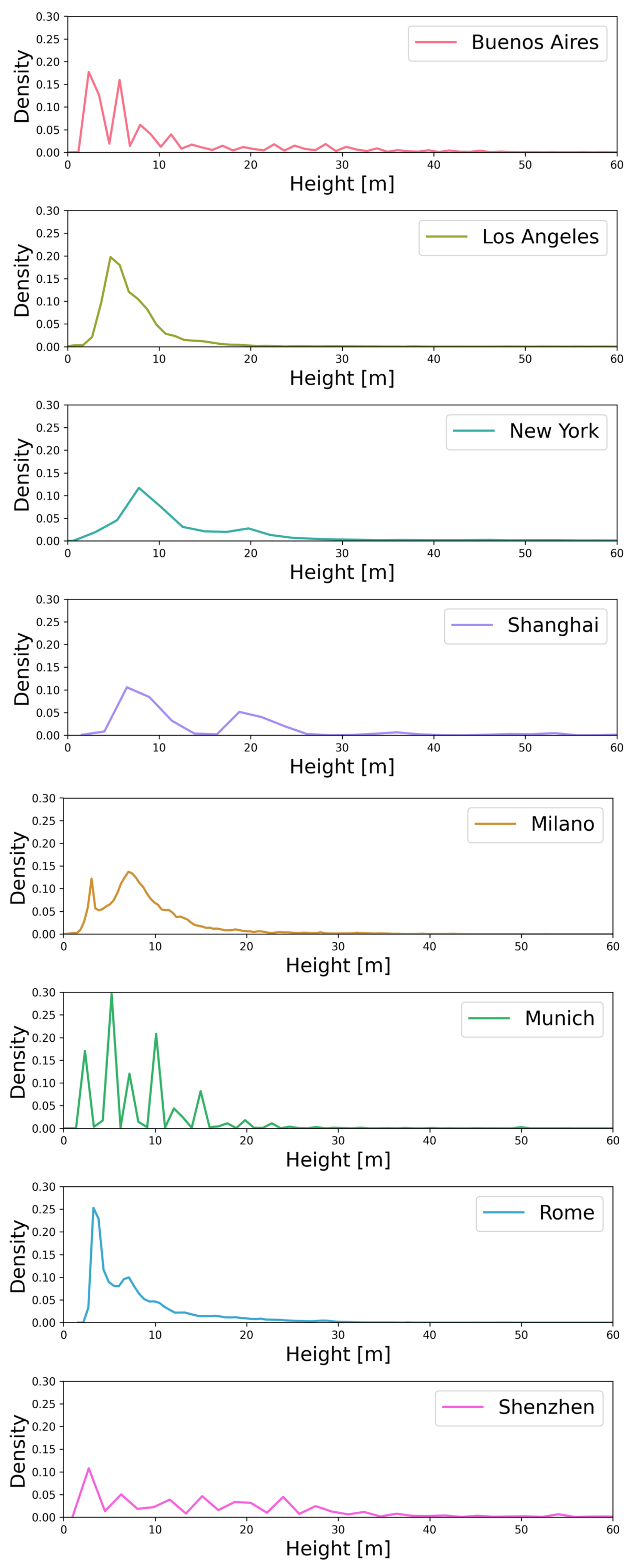

Conversely, the proposed approach performs well in Los Angeles, whose urban landscape, characterized by more distinct building separation than that of Buenos Aires, likely facilitates more accurate object delineation and thus benefits the object-based approach. In Milan, the approach achieves excellent results (MAE: 2.26 m, RMSE: 3.67 m), suggesting that object-based approaches work well in cities with moderate building density and complexity. However, it struggles in cities with a high prevalence of tall buildings and complex architectural diversity, such as Shenzhen (MAE: 10.51 m, RMSE: 16.35 m) and Shanghai. The uneven distribution of building heights in the training data, skewed towards lower buildings (as illustrated in Figure 2), likely hindered the model's ability to generalize to these cities with a high proportion of skyscrapers. Furthermore, the intricate architectural styles and high building density of these Asian megacities introduce significant challenges for accurate height estimation.

Figure 7 presents a detailed spatial analysis of the absolute height estimation errors across the eight cities, offering insights beyond the aggregate metrics reported in Table 5. The high concentration of points with relatively small errors, particularly for buildings under 40 m, which the height distribution in Table 2 shows to be the most common, suggests consistent and reliable performance for the bulk of the building stock. This spatial coherence in error magnitudes reinforces the overall robustness of the method in low- to mid-rise urban environments.

At the same time, the scatter plots reveal notable regions of divergence. The increased presence of points with larger errors in areas with taller or more architecturally complex structures visually confirms the challenges the method faces as building height increases. These observations are consistent with the higher error values reported in Table 5 for buildings exceeding 40 m.
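For reference, the two error metrics used throughout are MAE = (1/N) Σ |ĥ_i - h_i| and RMSE = sqrt((1/N) Σ (ĥ_i - h_i)²), where h_i is the reference height and ĥ_i the estimate. The sketch below computes them overall and separately for buildings up to and above 40 m, mirroring the height split discussed above; the function names and toy values are illustrative and are not results from this study.

```python
import math

def mae(errors):
    """Mean absolute error."""
    return sum(abs(e) for e in errors) / len(errors)

def rmse(errors):
    """Root mean square error."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))

def stratified_metrics(y_true, y_pred, threshold_m=40.0):
    """(MAE, RMSE) for all buildings and split at a reference-height threshold."""
    low_key, high_key = f"<={threshold_m:g} m", f">{threshold_m:g} m"
    groups = {"all": [], low_key: [], high_key: []}
    for t, p in zip(y_true, y_pred):
        err = p - t
        groups["all"].append(err)
        groups[high_key if t > threshold_m else low_key].append(err)
    return {k: (mae(v), rmse(v)) for k, v in groups.items() if v}

# Toy reference and predicted heights in metres.
y_true = [10.0, 15.0, 22.0, 60.0, 120.0]
y_pred = [11.0, 14.0, 24.0, 50.0, 95.0]
for group, (m, r) in stratified_metrics(y_true, y_pred).items():
    print(f"{group:>7}: MAE={m:.2f} m, RMSE={r:.2f} m")
```

In this toy example the two tall buildings dominate the overall RMSE, because squaring weights large errors more heavily; aggregate metrics alone can therefore hide the contrast between low-rise and high-rise performance.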

A more in-depth analysis of the scatter plots further suggests that specific urban morphologies or building typologies within individual cities may systematically lead to larger estimation discrepancies. This raises important questions about the influence of factors such as building density, architectural diversity, or even the imaging conditions and limitations inherent to SAR data in different geographic contexts. Gaining a deeper understanding of these interactions could help identify the operational boundaries of the proposed methodology and guide future improvements for enhanced adaptability across diverse urban landscapes.

As a final test, the results of the proposed methodology are compared with those of state-of-the-art approaches in the same cities, as reported in Table 6. Given the range and diversity of cities considered in this study, no single reference could serve as a universal benchmark across all experimental settings. Comparisons were therefore performed city by city, using the best results reported for each city by other scientifically validated techniques, which rely on different input datasets.

In Los Angeles, the proposed model outperforms the results reported in [14], achieving an MAE of 2.24 m vs. 4.66 m and an RMSE of 3.29 m vs. 6.42 m. In contrast, for New York City, compared with the same study, the performance was somewhat worse (MAE: 7.43 m vs. 6.24 m; RMSE: 9.36 m vs. 8.28 m).

In the case of Milan, the T-SwinUNet model from [12] was used for comparison. Yadav et al. reported an MAE of 3.54 m and an RMSE of 4.54 m, whereas the proposed method yields better results (MAE: 2.26 m; RMSE: 3.67 m). It must be noted that [12] exploits multimodal and multitemporal data from Sentinel-1 and Sentinel-2, thus incorporating additional input information and spatio-temporal attention mechanisms. Nevertheless, our method achieves lower errors, an improvement that can be partly attributed to the use of VHR SAR images (COSMO-SkyMed vs. Sentinel-1).

The last city for which we compared our estimation results with those reported in the literature is Shenzhen. For comparison, we used the study in [26], where the authors developed an effective Building Height Estimation method by synergizing Photogrammetry and Deep learning (BHEPD) to leverage Chinese multi-view GaoFen-7 (GF-7) images for high-resolution building height estimation. BHEPD outperforms our model, with an MAE of 4.00 m and an RMSE of 6.72 m, against 10.51 m and 16.35 m for the proposed method. This is the worst result obtained by the proposed method in this study, and it is reported for a fair comparison and evaluation. This outlier can be attributed to the unique characteristics of Shenzhen: as shown in Figure 2, this city has a markedly higher frequency of buildings taller than 40 m. Consequently, when Shenzhen is excluded from the training set and used as test data (recall that our experiments are out-of-distribution, OOD), the model has limited exposure to tall buildings during training, resulting in poorer height estimates. Furthermore, the impact of building footprint dimensions on the accuracy of the object-based methodology must be considered. In these urban settings, very tall buildings such as skyscrapers often have narrow footprints, which complicates estimation in SAR (i.e., slanted) images, since layover displaces the signal of a tall building well beyond its narrow footprint.

This city-specific comparison illustrates how the proposed methodology performs under various urban conditions. In Los Angeles and New York, it achieves accuracy competitive with benchmark studies. In Milan, the use of VHR SAR images yields higher accuracy than state-of-the-art models that rely on multimodal and multitemporal composites, while in Shenzhen, the prevalence of tall buildings with narrow footprints poses significant challenges and limits model performance.
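Expressing the city-by-city comparison as relative changes makes the size of each gap easier to judge. The snippet below uses only the MAE and RMSE values quoted in this section (the Shenzhen figures for the proposed method are those reported earlier in this Discussion); the dictionary layout and the name `relative_change` are illustrative.

```python
# (proposed, reference) error pairs in metres, as reported in this section.
COMPARISONS = {
    "Los Angeles vs [14]": {"MAE": (2.24, 4.66), "RMSE": (3.29, 6.42)},
    "New York vs [14]": {"MAE": (7.43, 6.24), "RMSE": (9.36, 8.28)},
    "Milan vs [12]": {"MAE": (2.26, 3.54), "RMSE": (3.67, 4.54)},
    "Shenzhen vs [26]": {"MAE": (10.51, 4.00), "RMSE": (16.35, 6.72)},
}

def relative_change(proposed, reference):
    """Percent change of the proposed error vs. the reference (negative = lower error)."""
    return 100.0 * (proposed - reference) / reference

for pair, metrics in COMPARISONS.items():
    changes = ", ".join(f"{name} {relative_change(p, r):+.1f}%"
                        for name, (p, r) in metrics.items())
    print(f"{pair:<20} {changes}")
```

For instance, the Los Angeles MAE is about 52% lower than in [14], the New York MAE about 19% higher, and the Shenzhen MAE roughly 2.6 times that of BHEPD.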

Finally, Table 7 summarizes the key characteristics of the object-based building height estimation methodology proposed in this work. Among the reported metrics, inference speed, measured in frames per second (FPS), is of particular importance. In this context, FPS denotes the number of building-specific image patches processed per second during inference, a metric widely adopted in the remote sensing literature to assess computational efficiency in large-scale data processing scenarios [27].

The proposed approach is designed to operate on a per-object basis, whereby individual buildings are treated as separate instances. It requires as input a single VHR SAR image, a building footprint mask, and the corresponding footprint bounding box (FBB). Each building instance is processed independently, and a single height value is estimated using a deep convolutional network based on the ResNet-101 architecture.
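The per-object formulation can be sketched as a loop over building instances: for each one, the SAR image and footprint mask are cropped to the FBB window and passed, together with the FBB length and width, to a regressor that returns one height. In the sketch, `BuildingInstance`, `crop`, `estimate_heights`, and the stand-in `toy_model` are hypothetical; in the paper the regressor is a ResNet-101-based network, which is not reproduced here.

```python
from dataclasses import dataclass
from typing import Callable, List

Grid = List[List[float]]

@dataclass
class BuildingInstance:
    row0: int         # top row of the FBB window in image pixels
    col0: int         # left column of the FBB window
    n_rows: int
    n_cols: int
    length_m: float   # FBB length, passed as an extra scalar feature
    width_m: float    # FBB width, passed as an extra scalar feature

def crop(grid: Grid, b: BuildingInstance) -> Grid:
    """Cut the FBB window out of a 2D grid (image or mask)."""
    return [row[b.col0:b.col0 + b.n_cols] for row in grid[b.row0:b.row0 + b.n_rows]]

def estimate_heights(sar: Grid, mask: Grid, buildings: List[BuildingInstance],
                     model: Callable[[Grid, Grid, float, float], float]) -> List[float]:
    """Run the regressor independently on each building instance."""
    return [model(crop(sar, b), crop(mask, b), b.length_m, b.width_m) for b in buildings]

def toy_model(sar_patch: Grid, mask_patch: Grid, length_m: float, width_m: float) -> float:
    """Stand-in regressor: mean masked intensity times 10 (ignores the FBB features)."""
    values = [v for rs, rm in zip(sar_patch, mask_patch) for v, m in zip(rs, rm) if m]
    return 10.0 * sum(values) / len(values) if values else 0.0

sar = [[0.1, 0.2, 0.3, 0.4],
       [0.5, 0.6, 0.7, 0.8],
       [0.9, 1.0, 1.1, 1.2]]
mask = [[0, 1, 1, 0],
        [0, 1, 1, 0],
        [0, 0, 0, 0]]
buildings = [BuildingInstance(0, 1, 2, 2, length_m=20.0, width_m=15.0)]
print(estimate_heights(sar, mask, buildings, toy_model))
```

Because each instance is handled independently, the loop parallelizes trivially and never touches pixels outside the building windows.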

Despite the model’s complexity (over 42 million trainable parameters), it demonstrates competitive computational efficiency, achieving an average inference throughput of 390.06 FPS and a training time per epoch of approximately six minutes. This efficiency is largely attributable to the object-level formulation, which eliminates the need for dense, pixel-wise prediction over large image areas.
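Throughput in this per-object sense can be measured by timing a pass over a set of building patches and dividing the number of patches by the elapsed time. The sketch below does this for an arbitrary `predict` callable (hypothetical; single-patch, CPU-side timing, whereas a GPU benchmark would typically batch inputs and synchronize the device before reading the clock) and converts the reported 390.06 FPS into a per-building latency and an example processing time for a hypothetical 100,000 buildings.

```python
import time
from typing import Callable, Sequence

def measure_fps(predict: Callable[[object], float], patches: Sequence[object],
                warmup: int = 5) -> float:
    """Patches processed per second, excluding a few warm-up calls."""
    for patch in patches[:warmup]:
        predict(patch)
    start = time.perf_counter()
    for patch in patches:
        predict(patch)
    elapsed = time.perf_counter() - start
    return len(patches) / elapsed

def processing_time_s(n_buildings: int, fps: float) -> float:
    """Wall-clock time to process n_buildings at a given per-object throughput."""
    return n_buildings / fps

reported_fps = 390.06
print(f"{1000.0 / reported_fps:.2f} ms per building")  # 2.56 ms per building
print(f"{processing_time_s(100_000, reported_fps) / 60:.1f} min for 100,000 buildings")  # 4.3 min

dummy_patches = [[float(i)] * 64 for i in range(200)]
print(f"dummy model: {measure_fps(sum, dummy_patches):.0f} patches/s")
```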

Nevertheless, the method relies on the availability of building footprint data at both the training and inference stages, which can limit its applicability in scenarios where such ancillary information is incomplete or unavailable. Moreover, its performance may degrade in densely built-up areas, where structural complexity and SAR-specific imaging artifacts hinder accurate footprint delineation.

It should be noted that the proposed method is not limited to COSMO-SkyMed data and can be applied to data from other satellites with only minor modifications. Specifically, a single adjustment is required to ensure compatibility. In the proposed framework, satellite acquisition parameters are used to calculate the length and width of the FBB, which are incorporated as additional input features. These geometric features are derived from the incidence angle and orbital path of the satellite. Consequently, when the method is applied to imagery from a different satellite, these features must be recalculated to reflect the specific sensor geometry and acquisition parameters, since variations in these factors can significantly influence the accuracy of the estimated heights. This adaptability suggests that the approach can scale to different SAR platforms.
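Why the geometric features must be recomputed for another sensor can be seen from the basic flat-terrain SAR relations: in ground range, the top of a building of height h is displaced towards the sensor by a layover extent of h / tan(θ), and the building casts a radar shadow of length h · tan(θ), where θ is the incidence angle. The same building therefore occupies a different ground-range extent in images acquired at different incidence angles. The snippet assumes flat terrain and a vertical facade facing the sensor; the function names are hypothetical and the incidence angles are arbitrary examples, not the parameters of COSMO-SkyMed or any other specific mission.

```python
import math

def layover_ground_range(height_m, incidence_deg):
    """Ground-range displacement of the roof edge towards the sensor: h / tan(theta)."""
    return height_m / math.tan(math.radians(incidence_deg))

def shadow_ground_range(height_m, incidence_deg):
    """Ground-range length of the radar shadow behind the building: h * tan(theta)."""
    return height_m * math.tan(math.radians(incidence_deg))

height = 30.0  # metres, example building
for theta in (25.0, 35.0, 45.0):  # hypothetical incidence angles in degrees
    print(f"theta={theta:4.1f} deg  layover={layover_ground_range(height, theta):5.1f} m  "
          f"shadow={shadow_ground_range(height, theta):5.1f} m")
```

Orbit direction matters as well: ascending and descending passes view a building from roughly opposite sides, so layover and shadow fall on opposite sides of the footprint.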

Despite these limitations, the object-based approach remains a robust and efficient solution for building height estimation in settings where high-quality footprint data are available. Its ability to deliver fast inference and precise, instance-level predictions makes it particularly well suited for large-scale urban analysis.

5. Conclusions

This study presents and thoroughly evaluates an object-based methodology for the challenging task of building height estimation from single VHR SAR imagery. The main goals of this paper were to reduce time and computational costs by using a single VHR SAR acquisition per city and to train a scalable model that can be applied across different continents with acceptable errors.

Eight test sites (Buenos Aires, Los Angeles, Milan, Munich, New York, Rome, Shanghai, and Shenzhen) were selected to provide diverse training and test sets, given their different distributions of built-up structures and their different cultural and historical urban settings. The experiments provided transparent and critical insights into the strengths and weaknesses of the proposed approach under realistic conditions, especially in the OOD experiments.

A key highlight of this research is the strong generalization capability demonstrated for the European cities. Achieving an accuracy of approximately one building story in an out-of-distribution setting, surpassing that of recent methodologies, underscores the effectiveness of the proposed DL framework and demonstrates its potential for deployment in geographically and architecturally similar urban environments, even when city-specific training data are not available. While performance on North and South American cities remained competitive, the noticeable decrease in accuracy for the more recently built Chinese cities (Shanghai and Shenzhen) points to the challenges of achieving robust cross-continental generalization. Factors such as distinct architectural styles, varying building densities, and the prevalence of very high-rise structures appear to pose a greater challenge for current methodologies. This suggests that, while the foundational framework shows significant promise for transfer learning, further research is needed to close the performance gap across highly diverse global urban landscapes.

Looking ahead, future work could focus on enhancing the model's ability to generalize across diverse global urban landscapes, particularly in cities with a high prevalence of tall buildings and complex architectural diversity. In addition, ascending-orbit images could be incorporated and data fusion methods explored to enhance the reliability of the approach. It would also be interesting to first compare and then possibly combine object-based and pixel-based methodologies to exploit their respective strengths. Finally, fine-tuning techniques could be explored to adapt pre-trained models to new cities more effectively, improving generalization in specific urban landscapes.