1. Introduction

Urbanization is a defining feature of the Anthropocene [1,2,3], reshaping landscapes and creating unprecedented challenges for sustainable development. As the global urban population surpasses 4 billion and continues to grow [3], it brings challenges such as resource scarcity, climate change, and socioeconomic disparities, necessitating innovative approaches to urban management and planning if the Sustainable Development Goals (SDGs) are to be achieved. Addressing these challenges requires a robust framework to monitor and analyze urban systems, capturing not only their physical characteristics but also the interactions between the built environment, natural systems, and human activities. Remote sensing, with its ability to observe large-scale spatial and temporal patterns, has long served as a cornerstone of urban studies, enabling the systematic analysis of land use, environmental change, and urban expansion [4,5,6,7,8]. The field has evolved rapidly, especially during the past decade with the advent of the “Big Data Era” [4,9,10], incorporating increasingly sophisticated tools and methodologies and setting the stage for new paradigms that extend its scope and application to emerging societal dimensions.

Remote sensing has been integral to the monitoring and analysis of urban systems since the early 1960s [1]. With its foundation in the use of electromagnetic waves, remote sensing captures spectral, spatial, and temporal information about Earth’s surface, enabling detailed observations of urban morphology, land-use changes, vegetation dynamics, and environmental processes. Through analyses of these land surface traits, it also provides a practical means to estimate socioeconomic characteristics [11,12]. Technologies such as optical sensing (e.g., Landsat), multispectral and hyperspectral imaging, Synthetic Aperture Radar (SAR), and Light Detection and Ranging (LiDAR) have provided data of increasingly high resolution, which in turn has transformed how scholars approach urban studies and the urban landscape, broadly defined [1,13]. The integration of remote sensing technologies in urban studies has been critical in monitoring phenomena such as urban sprawl [14,15,16], infrastructure development [17,18,19], urban population distribution [20,21,22], and ecological transformations [6,23,24,25], among many other urban system phenomena.

However, as urban systems grow more complex and interconnected, the limitations of traditional remote sensing, which relies on relatively sophisticated equipment and data integration techniques, have become evident. Remote sensing excels at capturing static physical characteristics of urban environments, and certain social aspects of urban systems can even be deduced from remotely captured images [1,8,26,27,28,29]. It struggles, however, to account for the dynamic human dimensions (social behaviors, economic activities, and cultural interactions) that shape urban systems. These human-centric elements are critical to understanding how urban areas function and evolve, particularly in response to crises, policy interventions, or socio-environmental challenges.

To address this gap, remote sensing has increasingly interfaced with other data sources and analytical paradigms, as discussed in Blaschke et al. [4] and Yu and Fang [1]. The emergence of the “Big Data Era” [10] has catalyzed a transformation in the field, enabling the integration of diverse datasets, including crowdsourced and human-generated information [4,30,31,32]. This evolution sets the stage for a new paradigm in urban observation, where the physical and societal dimensions of urban systems are analyzed in tandem to provide a more holistic understanding [1].

The term “social sensing” appeared in academic studies in the early 2010s, primarily among computer scientists [33,34]. It was later discussed systematically in the seminal book Social Sensing: Building Reliable Systems on Unreliable Data [35] and by geographers [36]. Social sensing quickly became an essential component of this evolution: although “unreliable” at first glance [35], social sensing data, combined with advanced data mining and analytical technologies, offer real-time insights into human activities, perceptions, and needs as reported or generated by individuals and communities [35]. For instance, during disasters, remote sensing can map flood extents or assess infrastructure damage, but it is social sensing, via geolocated tweets, mobile applications, or crowdsourced reports, that pinpoints where people are stranded or urgently need assistance. Still, research on such integration remains limited, and considerably more work is needed.

Integrating traditional remote sensing with new social sensing data offers great advantages for urban studies, but it also raises significant technical (e.g., data heterogeneity, scalability) [35] and ethical (e.g., privacy, bias) challenges that demand careful consideration [1,37,38,39].

Despite the existence of several literature reviews on remote sensing or social sensing in urban studies, and quite a few studies integrating the two [1,4,8,24,29,30,40,41,42,43,44], a critical gap persists: the integration of these domains remains largely ad hoc, driven by technological possibility rather than by a coherent theoretical or ethical foundation for urban governance. The result is a patchwork of case studies whose insights are difficult to scale or generalize. This paper addresses the gap not merely by offering another summary but by proposing a solution. We develop and articulate an integrated theoretical–methodological framework for a “hybrid urban observatory”, a model designed to move the field from disconnected applications toward a unified, systems-based paradigm for urban science. To that end, this review synthesizes advancements in remote sensing and social sensing technologies, emphasizing their convergence and implications for urban sustainability, and explicitly identifies the unresolved challenge of integrating human-centered and physical-environment data streams for real-time urban governance. It provides a historical overview of the evolution of remote sensing technologies, explores the emergence of social sensing, and presents case studies in which the two approaches have been successfully integrated. It also highlights theoretical gaps and proposes pathways for future research, focusing on methodological innovations, ethical considerations, and policy implications. In doing so, this review not only surveys past advances but also contributes a novel conceptual synthesis: the notion of an integrated “hybrid urban observatory” that unifies remote and social sensing approaches, offering a new lens for holistic urban analysis and aiming to advance both methodological rigor and actionable guidance for the field.

Following this Introduction, Section 2 details how the articles were selected and synthesized in this review. Section 3 discusses traditional urban remote sensing technology, while Section 4 introduces the new social sensing framework. Section 5 attempts to establish an integrated framework for remote and social sensing. This is followed by the theoretical build-up of such a framework in Section 6. I conclude by summarizing the key findings from this review and potential future directions.

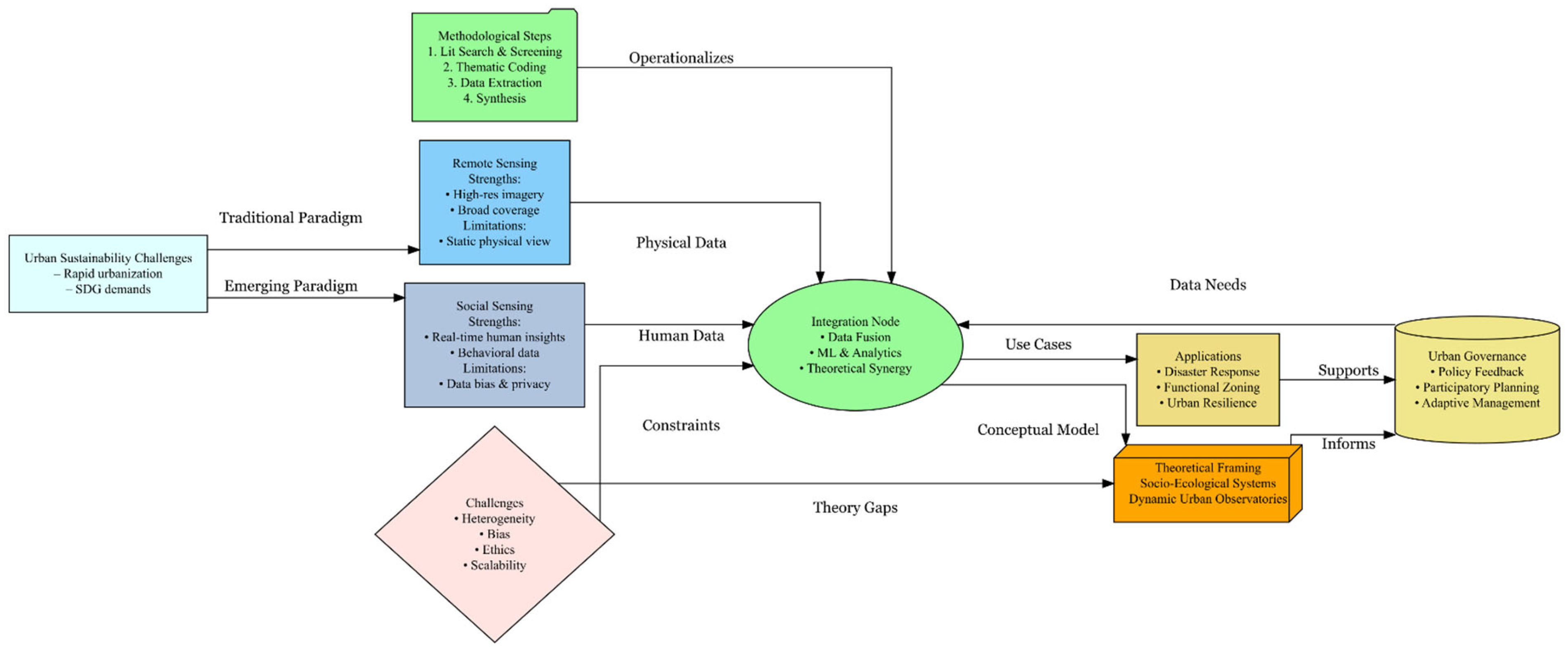

Figure 1 presents a graphical outline of this review’s plan.

2. Materials and Methods

This review employs a mixed-methods approach, synthesizing insights from both quantitative and qualitative perspectives to explore the integration of remote sensing and social sensing technologies in urban studies, with the aim of informing the development of urban science, particularly urban data science. I strive to provide a comprehensive understanding of advancements, applications, and challenges in these fields while ensuring that the findings are replicable and transparent. First, a systematic review outlines the rapidly evolving landscape of remote sensing technologies (including both traditional and social sensing) in urban studies. Then, a thematic analysis delves deeper into the application scenarios of these technologies. Central to this review are the identification and analysis of key themes and gaps in the existing literature, with a focus on the intersection of technological advancements and their practical implications for urban science. This approach captures the breadth of technological evolution and contextual applications, offering a holistic perspective on how these technologies contribute to the development of urban science.

2.1. Literature Search Strategy

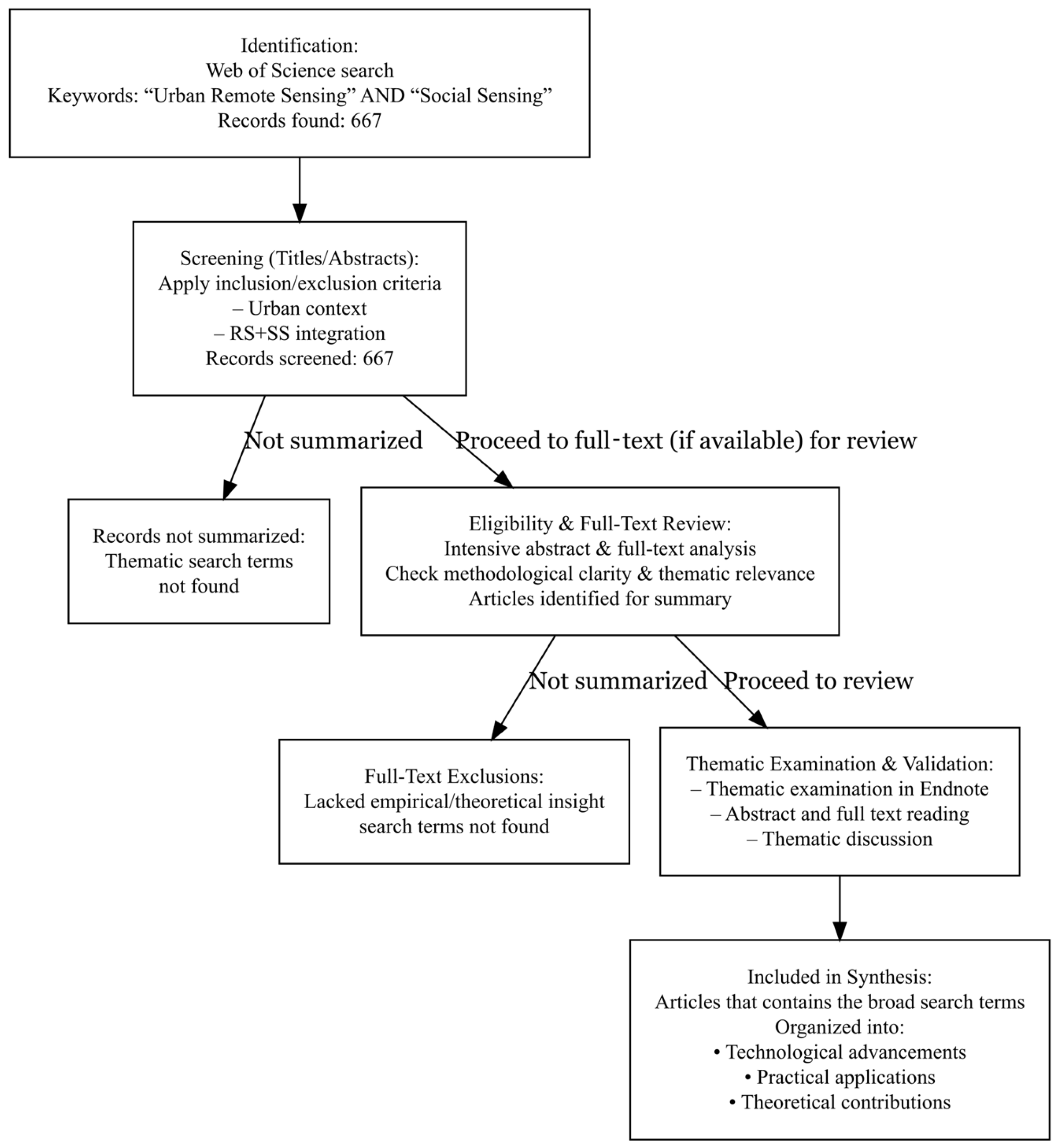

A comprehensive and systematic search of the literature was conducted using the Web of Science database, which is widely recognized for its extensive coverage of high-impact, peer-reviewed research. The keywords “Urban Remote Sensing” and “Social Sensing” were used separately (both enclosed in quotation marks to ensure precise results and avoid including less relevant studies). This focused approach allowed identification of articles specifically addressing the integration of these technologies in urban studies, rather than generic remote sensing or social media applications.

The search was conducted across all fields within the database, ensuring a broad yet targeted scope. A total of 667 peer-reviewed journal articles were identified as of 29 December 2024, spanning a range of disciplines, including geography, environmental science, computer science, and urban planning. The inclusion criteria required studies to explicitly discuss the applications, advancements, or challenges of remote sensing and social sensing in urban contexts. Articles were excluded if they lacked methodological clarity, focused solely on non-urban applications, or did not provide empirical or theoretical insights relevant to the integration of these technologies.

To ensure a comprehensive review, thematic coding was applied during the screening process. The 667 articles were categorized based on their primary focus: (1) technological advancements, which include studies exploring innovations like machine learning applications in remote sensing or the use of Multimodal Data Fusion Frameworks; (2) practical applications, which include research detailing the use of these technologies in areas such as disaster response, urban heat island mapping, or land-use classification; and (3) theoretical contributions, which include articles proposing new frameworks or paradigms for integrating remote sensing and social sensing in urban studies.
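The three-way coding described above can be illustrated with a minimal sketch. It assumes a simple keyword-count rule; the keyword lists, the function names `code_article` and `tally`, and the sample records are all hypothetical, since the review does not publish its coding rules and the actual screening may well have been done by hand.

```python
from collections import Counter

# Hypothetical keyword lists; the review does not publish its coding rules.
THEME_KEYWORDS = {
    "technological advancements": ["machine learning", "deep learning", "data fusion", "sensor"],
    "practical applications": ["disaster", "heat island", "land-use classification", "flood"],
    "theoretical contributions": ["framework", "paradigm", "conceptual", "theory"],
}


def code_article(title, abstract):
    """Return the theme whose keywords occur most often, or 'uncoded' if none match."""
    text = f"{title} {abstract}".lower()
    scores = {
        theme: sum(text.count(keyword) for keyword in keywords)
        for theme, keywords in THEME_KEYWORDS.items()
    }
    best = max(scores, key=scores.get)  # ties resolve to the first theme listed
    return best if scores[best] > 0 else "uncoded"


def tally(records):
    """Count themes over an iterable of (title, abstract) pairs."""
    return Counter(code_article(title, abstract) for title, abstract in records)


# Invented sample records, for illustration only.
records = [
    ("Deep learning for building extraction", "A machine learning pipeline for SAR imagery."),
    ("Mapping the urban heat island", "Land surface temperature during a flood season."),
    ("Toward a new paradigm", "A conceptual framework for urban observatories."),
]

for theme, count in tally(records).items():
    print(f"{theme}: {count}")
```

A rule of this kind is only a first pass: articles that touch several themes, or none of the listed keywords, would still need manual review.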

Initial search results were screened using titles, abstracts, and keywords to ensure alignment with this review’s scope. Full-text articles, when available, were subsequently analyzed to extract key information, including methodologies employed, thematic focus, contributions to urban science/studies, and identified research gaps or limitations. All 667 articles are stored in an EndNote® group and serve as the literature pool for the ensuing detailed literature review.

This systematic and thematic approach ensured the inclusion of diverse perspectives while maintaining the relevance and quality of selected studies. In addition to the database search, references within selected articles were examined to identify any additional relevant studies that may not have been captured in the initial search. This iterative process added depth and context to this review, allowing for a comprehensive synthesis of existing knowledge.

2.2. Data Extraction and Synthesis

This review adopted a systematic and structured approach to extract and synthesize key information from the 667 articles. To move beyond a purely descriptive summary, our synthesis of the 667 articles was guided by an analytical goal: to distill the core methodological debates, theoretical tensions, and unresolved ethical questions at the confluence of remote and social sensing. The literature pool served as the raw material from which we identified recurring patterns and contradictions (e.g., data-driven vs. theory-driven fusion; scalability vs. accuracy). This review is therefore a synthesis of these critical issues, illustrated with key examples, rather than an exhaustive catalog of every study. Data extraction focused on identifying studies that addressed methodological frameworks, integration approaches, real-world applications, and challenges associated with using remote sensing and social sensing in urban science. This approach was designed to ensure that this review not only captured advancements in these fields but also critically analyzed their implications for the development of urban science.

In the first stage of data extraction, studies were closely examined for their contributions to methodological innovations in urban science. Particular attention was given to research that introduced advanced data fusion techniques, hybrid methodologies, or machine learning-driven frameworks. Search terms such as “methods”, “data fusion”, and “machine learning” were used in the EndNote group to find relevant studies, and their abstracts were carefully examined. Where the full text was available, its methods section was examined as well. These studies provided valuable insights into how the integration of remote and social sensing data could enhance the accuracy and scalability of urban monitoring. For instance, studies utilizing Multimodal Data Fusion Frameworks (MDFFs) demonstrated the feasibility of combining diverse datasets, such as satellite imagery, social media posts, and mobile GPS data, to produce more nuanced urban analyses [30,32,44,45,46,47].

This review also prioritized studies that highlighted integration approaches. Search terms including “integration”, “collective”, and “bridging” were used in the EndNote group to identify relevant studies, and abstracts of the identified articles were again examined to determine their relevance. The focus here was on understanding how different types of data, ranging from optical and SAR satellite imagery to geolocated social media data, were combined to provide a more comprehensive view of urban systems. This review paid particular attention to frameworks that bridged the gap between traditional physical observations and emerging data streams that captured human-centric dynamics, as this integration represents a significant advancement in the development of urban science, with applications such as disaster response [44,48,49,50,51] and urban functional zoning [32,52,53,54,55,56,57].

This review also considered the integrated applications in the context of their contributions to the theoretical and empirical growth of urban science. Search terms such as “theoretical”, “empirical”, and “applications” were used in the EndNote group. This review emphasized studies that applied these integrated technologies to enhance understanding of urban complexity, such as modeling urban systems as socio-ecological networks [1,7], identifying functional zones [32,54,57,58,59,60], or analyzing urban resilience [61,62,63]. These applications offered insights into the broader implications of sensing technologies for theory building and empirical research in urban science.

In addition to discussing progress in integration, this review systematically analyzed challenges and limitations to identify areas requiring further investigation. “Challenge” and “limitations” were the main terms used to search for relevant articles in the EndNote group. Abstracts were examined first; where needed, the full articles, specifically their conclusion sections, were then examined to identify possible challenges and limitations. Key challenges include the inherent heterogeneity of data types, issues of scalability, and ethical concerns related to the use of human-generated data. Highlighting these barriers points to critical areas for future research and methodological development, emphasizing the need for robust frameworks that can address these complexities while advancing urban science.

To structure the synthesis, this review organized the extracted data into thematic categories reflecting the main contributions of the literature. These themes included technological advancements, methodological innovations, and theoretical perspectives. This thematic organization helped identify recurring patterns, such as the increasing role of machine learning in data integration and the emergence of frameworks conceptualizing cities as dynamic, multi-scalar systems. Importantly, the synthesis also revealed gaps in the literature, such as the underrepresentation of longitudinal studies, the lack of integration between urban theory and sensing technologies, and the reluctance to accept social sensing as a growing branch of remote sensing.

Figure 2 provides a PRISMA-like flowchart to summarize the literature screening and data extraction process used in this review.

By systematically extracting and synthesizing data, the review not only provides a comprehensive overview of the state of the field but also identifies key directions for the continued development of urban science. This approach ensures that the findings contribute to both theoretical and methodological advancements, supporting the evolution of a more integrated and dynamic urban science framework.

2.3. Theoretical Framing

The theoretical framework of this review is anchored in a socio-ecological systems perspective of urban science, which views cities as dynamic, complex entities shaped by intricate interactions in which the couplings among population, behavior, and biophysical processes are mediated by both technological and policy interventions. This perspective recognizes that urban systems are not merely spatial constructs but dynamic networks where social, economic, technological, and ecological processes are deeply interwoven [1,25,64]. Under this theoretical framework, we contend that cities are better understood through “hybrid urban observatories”, which we define as integrated data platforms that combine remote sensing (satellite/aerial) and social sensing (citizen- or device-generated) to monitor, model, and manage urban systems in real time. This perspective aligns with emerging frameworks in urban science, which emphasize the need for integrated methodologies to address the complexities of urbanization.

The socio-ecological systems framework provides a robust foundation for this integration. It enables researchers to explore cities as interdependent systems where human behaviors influence and are influenced by spatial and environmental conditions. For instance, the combination of remote sensing and social sensing has been shown to effectively model urban sprawl by capturing both physical expansion and its socio-environmental impacts [16]. Similarly, semantic analysis of social sensing data has been demonstrated to enrich urban functional zone classification, thereby bridging the gap between spatial patterns and human-centric dynamics [65].

This framework also emphasizes temporal dynamism, which is a critical dimension often underrepresented in traditional urban observatory theories. By incorporating temporal patterns, such as diurnal changes in human mobility or seasonal variations in land use, the socio-ecological systems approach enables a deeper understanding of urban rhythms and their implications for policy and planning. For example, the integration of temporal activity data with high-resolution imagery has been shown to significantly enhance urban land-use classification accuracy, as evidenced by recent studies employing machine learning and probabilistic models [66,67].
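To make the idea of combining temporal activity data with imagery concrete, the following is a minimal sketch of feature-level fusion. It is not the method of the cited studies, which employ machine learning and probabilistic models; a plain nearest-centroid classifier is swapped in for brevity. The function names, the two-band spectral features, the three-slot activity counts (morning, midday, evening), and all values are hypothetical.

```python
import math


def fuse(spectral, activity):
    """Concatenate spectral features with a normalized diurnal activity profile."""
    total = sum(activity)
    profile = [a / total for a in activity] if total else [0.0] * len(activity)
    return list(spectral) + profile


def centroids(samples):
    """Compute the mean feature vector per label from (vector, label) pairs."""
    sums, counts = {}, {}
    for vec, label in samples:
        acc = sums.setdefault(label, [0.0] * len(vec))
        for i, value in enumerate(vec):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def classify(vec, cents):
    """Assign the label of the nearest centroid (Euclidean distance)."""
    return min(cents, key=lambda label: math.dist(vec, cents[label]))


# Invented parcels: identical spectral signatures, different activity rhythms.
training = [
    (fuse([0.3, 0.4], [10, 2, 8]), "residential"),  # busy morning and evening
    (fuse([0.3, 0.4], [2, 10, 3]), "commercial"),   # busy at midday
]
cents = centroids(training)
query = fuse([0.3, 0.4], [9, 3, 8])
print(classify(query, cents))
```

In this toy case the two training parcels are spectrally indistinguishable, so only the activity profile separates them, which is the intuition behind adding temporal human-activity data to image-based classification.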

A central objective of this theoretical framing is to advance urban science by moving beyond static representations of cities. It seeks to conceptualize urban areas as living systems characterized by feedback loops, emergent behaviors, and multi-scalar interactions. This approach facilitates a more comprehensive exploration of key urban phenomena, including resilience, inequality, and adaptation to environmental changes. By framing the analysis within this dynamic and integrative paradigm, this review aims to contribute to the evolution of urban science as a discipline better equipped to address the challenges of the 21st century. The synthesis in subsequent sections is organized around this theoretical anchor, tracing methodological, empirical, and policy developments along each dimension.

2.4. Limitations

While this review strives to be comprehensive and thorough in analyzing the integration and application of remote sensing and social sensing for urban science, certain limitations are acknowledged. First, this review utilized only the Web of Science database. Although Web of Science is extensive and well-regarded for peer-reviewed literature, relevant studies published in other databases or gray literature might have been missed, potentially limiting the scope of coverage.

Second, the rapid development in remote and social sensing fields introduces potential bias from publication lag. Cutting-edge advancements and emerging practices might not yet be sufficiently represented in peer-reviewed publications. Thus, interpretations and conclusions drawn herein reflect the state of research at the time of this review, and continuous updating will be essential as the field evolves.

Furthermore, the thematic categorization and analysis of the literature, while structured and systematic, may inherently include subjective judgment in defining thematic boundaries and categorizing studies. Although measures were taken to minimize this bias, such as clear inclusion and exclusion criteria, multiple reviews, and thematic validation, the potential for interpretative subjectivity remains.

Finally, despite careful attention to methodological and theoretical gaps identified through the literature synthesis, practical constraints on article availability and access may lead to inadvertent omissions, particularly of non-English or regionally specific research. This limitation highlights the importance of future reviews incorporating a broader linguistic and geographic scope to ensure inclusivity and comprehensiveness in urban science research.

3. Urban Remote Sensing: Understanding Cities from Afar

Urban remote sensing has significantly advanced our understanding of urban environments by providing detailed, frequent, and large-scale observational data. Over the decades, it has transformed from basic aerial surveys to sophisticated satellite technologies capable of capturing detailed urban dynamics. This technological evolution has not only enriched urban science but also fundamentally reshaped urban monitoring and management practices.

3.1. Early Applications

3.1.1. Evolution from Low-Resolution Imagery to Advanced Satellite Systems

Urban remote sensing originated with aerial photography and relatively low-resolution satellite imagery, which provided foundational yet limited insights into urban structures and dynamics. The advent of advanced satellite systems like Landsat, launched in the early 1970s, marked a critical turning point. Landsat’s multispectral capabilities and consistent revisits enabled urban researchers to systematically monitor processes such as urban sprawl and land-use changes [14,15]. For instance, Landsat data have been extensively employed to model urban growth patterns, such as the urbanization and urban sprawl process in the northeastern USA [68], providing valuable information to urban planners about spatial–temporal land-use transformations [69].

The MODIS (Moderate Resolution Imaging Spectroradiometer) instrument, carried aboard NASA’s Terra and Aqua satellites and introduced later, significantly enriched urban remote sensing by enabling near-daily global observations. MODIS data have been particularly useful for monitoring large-scale urban phenomena such as urban heat islands (UHIs) and seasonal vegetation dynamics, playing an essential role in urban climatology studies [20]. Through the integration of MODIS imagery, scholars effectively explored ecological impacts associated with urban expansion, particularly its implications for regional climates and vegetation health [6].
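Two quantities commonly derived from such imagery can be sketched briefly. The source does not specify which metrics the cited studies used, so this is an illustration under stated assumptions: vegetation condition is summarized with the Normalized Difference Vegetation Index, NDVI = (NIR - Red) / (NIR + Red), and surface UHI intensity is taken as the difference between mean urban and mean rural land surface temperature (LST). The function names and sample values are hypothetical.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index from NIR and red reflectance."""
    total = nir + red
    if total == 0:
        return 0.0  # avoid division by zero for empty or masked pixels
    return (nir - red) / total


def uhi_intensity(urban_lst, rural_lst):
    """Surface UHI intensity: mean urban LST minus mean rural LST (same units)."""
    urban_mean = sum(urban_lst) / len(urban_lst)
    rural_mean = sum(rural_lst) / len(rural_lst)
    return urban_mean - rural_mean


# Invented sample values: reflectances are unitless, LST is in kelvin.
print(round(ndvi(0.5, 0.1), 3))
print(uhi_intensity([305.0, 307.0], [300.0, 302.0]))
```

How the urban and rural pixel sets are delineated (for example, by a land-cover mask or a buffer around the city) is a design choice that strongly affects the resulting intensity and is left open here.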

3.1.2. Key Contributions to Urban Planning and Ecological Monitoring

Remote sensing has been pivotal in providing essential data to support urban planning and ecological monitoring. For instance, the utilization of satellite imagery facilitated the accurate and timely measurement of urban sprawl [15], guiding planners in resource allocation and land-use planning decisions [14]. High-resolution imagery also enabled detailed analyses of urban infrastructure development, significantly contributing to the management and maintenance of urban amenities such as roads, bridges, and utility networks [17,18].

Moreover, remote sensing has substantially enhanced ecological monitoring by providing spatially explicit data on vegetation coverage, urban forests, and green space distributions. High-resolution remote sensing techniques have improved estimations of carbon storage in urban forests [70], directly informing urban sustainability policies and strategies to mitigate climate change [7]. Similarly, the increasing availability of hyperspectral and multispectral imagery has enabled detailed mapping of urban vegetation types and conditions, directly supporting biodiversity conservation efforts within urban contexts [23,24].

Although only a few representative applications are presented here, these early applications collectively established remote sensing as a fundamental tool for urban planning and environmental management. At the same time, their focus on physical metrics underscored the need to capture social and behavioral dimensions through integrated approaches, a gap that later innovations aimed to address.

3.2. Technological Advancements

3.2.1. Emergence of Hyperspectral and LiDAR Data

As remote sensing technology advanced, significant improvements emerged in spatial, spectral, and temporal resolutions, markedly enhancing urban observation capabilities. Hyperspectral imaging, which captures hundreds of narrow spectral bands across electromagnetic wavelengths, emerged as a powerful tool for identifying materials and urban features with unprecedented accuracy. For example, Heldens et al. [23] emphasized how hyperspectral imaging from platforms like the (then future) EnMAP mission (launched in 2022) can contribute to nuanced urban applications by discriminating subtle variations in surface materials and vegetation conditions (such as roofing types or pavement conditions). These applications include detailed land-use classification (e.g., differentiating various types of residential areas or identifying specific industrial materials) and urban ecological assessments (e.g., mapping different vegetation species or stress conditions in urban parks).

Similarly, Light Detection and Ranging (LiDAR), which utilizes laser pulses to create high-resolution three-dimensional (3D) representations of urban structures, substantially advanced urban morphological analyses. LiDAR has proven particularly effective in delineating building footprints, mapping urban canopy structures, and capturing infrastructure details. Kostrikov [8] showcased how LiDAR technology facilitated smart city initiatives by enabling precise mapping of urban infrastructure and real-time monitoring for urban planning and management. Moreover, the ability of LiDAR to accurately capture vertical profiles revolutionized urban forestry and carbon storage assessments, making urban ecological studies increasingly precise and actionable [7]. However, deploying these advanced sensors also introduced challenges: hyperspectral data are high-dimensional, and LiDAR collection can be resource-intensive, necessitating sophisticated processing and analysis to translate raw data into actionable urban insights.

3.2.2. Machine Learning Applications for Urban Feature Extraction

Parallel to sensor advancements, the application of artificial intelligence, particularly machine learning algorithms, significantly elevated the analytical capabilities of urban remote sensing. Traditional remote sensing techniques, while powerful, were often constrained by the complexity of urban landscapes and manual interpretation requirements. The integration of machine learning techniques, including supervised classification (where models learn from labeled examples), deep learning (a class of ML using multilayered neural networks), and convolutional neural networks (CNNs, particularly effective for image analysis), has markedly improved the automation, accuracy, and scalability of urban analyses.

Neupane et al. [29] conducted a comprehensive review highlighting the effectiveness of deep learning algorithms in semantic segmentation of urban features. They demonstrated how these techniques substantially improved the extraction accuracy of urban elements such as buildings, roads, and vegetation from high-resolution satellite imagery. Similarly, Deng and Wang [5] employed sophisticated machine learning-based approaches using polarimetric Synthetic Aperture Radar (SAR) data to enhance the accuracy of urban building extraction. Their methodology underscored the transformative potential of machine learning in dealing with complex and heterogeneous urban scenes.

Further, advanced data fusion frameworks combining remote sensing imagery and machine learning techniques provided innovative solutions to urban classification challenges. For instance, Bao et al. [32] integrated CNNs and multisource geographic data, significantly improving urban functional zone identification. This integration facilitated comprehensive mapping of urban regions by linking spatial information from remote sensing images with contextual insights from georeferenced datasets such as points of interest (POIs), greatly enriching the capacity of urban planners and researchers to understand urban dynamics. Notwithstanding these improvements, machine learning models rely on substantial training data and careful tuning, and their often-opaque decision-making processes require careful validation to ensure they generalize reliably across different urban contexts.
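One simple way to link image-derived information with POI context is decision-level (late) fusion, sketched below. This is not the approach of Bao et al., whose architecture is not described in this section; it is a hypothetical illustration in which class probabilities from an image classifier are averaged with the share of POIs in each category. The function names, the fusion weight, the direct mapping of POI categories onto zone classes, and all values are assumptions.

```python
def poi_shares(poi_categories, classes):
    """Share of POIs per class; uniform if the parcel contains no matching POIs."""
    counts = {c: 0 for c in classes}
    for category in poi_categories:
        if category in counts:
            counts[category] += 1
    total = sum(counts.values())
    if total == 0:
        return {c: 1.0 / len(classes) for c in classes}
    return {c: n / total for c, n in counts.items()}


def late_fusion(image_probs, poi_categories, weight=0.5):
    """Weighted average of image class probabilities and POI category shares."""
    classes = list(image_probs)
    shares = poi_shares(poi_categories, classes)
    fused = {c: weight * image_probs[c] + (1 - weight) * shares[c] for c in classes}
    return max(fused, key=fused.get), fused


# Invented example: the image alone slightly favors "residential",
# but the POIs inside the parcel are mostly commercial.
image_probs = {"residential": 0.5, "commercial": 0.4, "industrial": 0.1}
pois = ["commercial", "commercial", "residential", "commercial"]
label, fused = late_fusion(image_probs, pois)
print(label, fused)
```

The weight controls how much the POI evidence can override the image classifier; in practice it would need to be tuned or learned against labeled zones rather than fixed at 0.5.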

3.3. Social and Ecological Focus

More recently, urban remote sensing research has increasingly focused on pressing societal and ecological challenges associated with rapid urbanization, particularly climate resilience and sustainable resource management. Remote sensing’s ability to monitor and predict environmental phenomena such as urban heat islands, flooding events, and vegetation stress has positioned it as an essential component of urban resilience frameworks.

Studies such as [6] highlighted the critical contributions of remote sensing to global environmental change research, emphasizing its role in monitoring urban resilience and supporting adaptive management strategies. Similarly, Shao et al. [16] demonstrated how integrating remote sensing and social media data could elucidate the complex interactions between urban sprawl (quantified through remote sensing-derived land-cover change metrics) and sustainable development, providing empirical support for urban policies aimed at resilience-building.

Urban remote sensing has also facilitated the monitoring of resources critical to urban sustainability, such as water availability and quality, energy usage patterns, and vegetation health. Zhou et al. [26], for example, effectively linked remotely sensed anthropogenic heat discharge (measured as surface temperature anomalies) with urban energy consumption patterns, illustrating how remote sensing can inform energy-efficient urban design. Moreover, Du et al. [24] underscored the utility of remote sensing image interpretation in comprehensive urban environment analyses, advocating for its continued application in sustainable urban resource management.

While the core of urban remote sensing has historically centered on monitoring the physical environment, there is growing recognition that many urban challenges, such as climate adaptation, resource equity, and population vulnerability, cannot be fully addressed through physical data alone. As cities evolve into increasingly complex socio-ecological systems, there is a pressing need for remote sensing approaches that account for human behavior, perception, and lived experience. This recognition has spurred interest in more integrated sensing paradigms that can bridge physical and social observations. Such integration remains emergent, representing a critical frontier in remote sensing research. The following sections explore how this evolution unfolds through the rise of Big Data and the ensuing social sensing, and how its convergence with traditional methods is reshaping the future of urban observation.

4. The Big Data Era: Social Sensing as a New Paradigm

The era of Big Data, characterized by vast volumes of data produced at unprecedented speed and variety [

10], has significantly reshaped the methodologies and conceptual frameworks within urban science. Initially, urban Big Data research focused heavily on large-scale, often structured datasets such as satellite imagery, sensor network outputs, or administrative data. However, urban researchers have increasingly recognized that these conventional datasets, despite their scale and precision, offer limited insight into the nuanced human dynamics and complex social interactions that shape cities. This recognition has encouraged scholars to look toward alternative sources of information, particularly unstructured digital traces produced directly by individuals through their everyday interactions. This evolution marks the emergence of the social sensing paradigm. Social sensing specifically refers to the systematic collection, analysis, and interpretation of spontaneous, user-generated digital traces, such as posts on social media platforms or location data from smartphones [

33,

34]. Unlike traditional remote sensing that relies on structured electromagnetic signals captured by satellite or aerial platforms, social sensing leverages vast and largely unstructured human-generated data streams, enabling real-time insights into dynamic urban behaviors and activities [

35].

4.1. Conceptual Framework of Social Sensing

The conceptual foundation of social sensing is rooted in treating individuals as active “human sensors” [

71,

72,

73], harnessing the potential of data generated from their daily digital interactions with the urban environment. This new conceptualization significantly expands the analytical scope and depth of urban science, enabling researchers to capture and interpret complex social behaviors, human mobility, and urban dynamics that traditional remote sensing methods might miss [

9].

4.1.1. Humans as Sensors: A Fundamental Paradigm Shift

The foundational studies of Ali, et al. [

33] and Madan, et al. [

34] initially introduced the concept of social sensing within computing and software engineering communities. These scholars characterized social sensing as an innovative mechanism where spontaneously generated user data, such as social media posts, GPS logs from smartphones, or data from other mobile applications, can be systematically aggregated and analyzed to reveal deeper insights into urban phenomena. This shift is revolutionary because it transforms citizens from passive subjects of urban analysis into active participants who continually generate valuable, real-time data, consistent with the view that urban citizens are an integral part of the urban environment.

In urban studies, this conceptual shift profoundly impacts the granularity and timeliness of data available for analysis. For example, Ferreira, et al. [

74] employed social sensing data derived from smartphone applications and social media platforms to effectively characterize the spatiotemporal mobility patterns of tourists. Their study demonstrated that such data could highlight distinctive behavioral differences influenced by cultural and regional factors, patterns that traditional remote sensing technologies alone could not reliably reveal.

Similarly, Fan, et al. [

48] developed a social sensing-based digital twin framework that integrated textual, visual, and geospatial content from social media to significantly improve situational awareness during urban disruptions and disaster events. Their work highlighted the robustness of social sensing data in capturing real-time societal impacts, emphasizing the practical value and reliability of this human-centered data approach in crisis management. Collectively, these examples illustrate that treating humans as sensors provides insights into urban conditions—such as real-time behaviors and experiences—that are largely inaccessible to conventional remote sensing alone.

4.1.2. From Unstructured Data to Actionable Urban Intelligence

Initially, social sensing data was criticized as unreliable due to its inherently unstructured and spontaneous nature, raising concerns about its validity and representativeness. However, rapid advancements in Big Data analytics and artificial intelligence, particularly machine learning and natural language processing (NLP), have markedly improved the capacity to extract reliable, meaningful insights from these large and complex datasets [

35].

Zhang, et al. [

37], for instance, systematically reviewed passive social sensing using smartphone-derived data, emphasizing the critical importance of advanced data analytics methods in extracting meaningful information from highly unstructured user-generated content. Their analysis concluded that techniques such as NLP and deep learning effectively address initial reliability concerns by systematically filtering, categorizing, and interpreting data, thus transforming seemingly chaotic social media feeds into actionable urban intelligence.

Moreover, Yu and Wang [

44] showcased the capability of multimodal social sensing frameworks that integrate textual, visual, and geospatial data from multiple platforms to accurately track the spatiotemporal evolution of natural disasters. Their study clearly illustrated how analytical advancements have significantly increased the validity and practical utility of seemingly unstructured social sensing data in urban disaster response scenarios, reinforcing the robustness of this emerging analytical paradigm. In effect, social sensing data have evolved from being considered “unreliable noise” to serving as a credible layer of urban information thanks to these advanced analytics.

4.1.3. Integrative Framework: Bridging Physical and Social Dimensions

The integration of social sensing with traditional remote sensing frameworks is increasingly advocated to establish a more holistic understanding of urban systems. This integrated approach recognizes cities as dynamic socio-ecological systems where physical environments are deeply intertwined with human behaviors and activities. The conceptual synergy of integrating these two distinct sensing paradigms allows researchers to investigate urban phenomena with unprecedented depth and clarity.

For example, Shao, et al. [

16] demonstrated how the combination of remote sensing imagery (used to classify and map physical urban expansion over time in Morogoro) and social media-derived data (Twitter usage proxying both urbanization and urban infrastructure) could elucidate the complex interactions underlying urban sprawl and its socio-environmental consequences. They provided compelling evidence that integrating human-centric social sensing data with physically oriented remote sensing data significantly enhanced urban planning and policymaking processes by offering multidimensional insights into urban dynamics.

Similarly, Bao, et al. [

32] effectively utilized deep learning frameworks to integrate remote sensing images (very-high-spatial-resolution images for building extraction) with social sensing data, primarily points of interest (POI) data that convey the functional semantics of urban space. Their research significantly improved urban functional zone classification, offering a robust example of how these sensing modalities could be harmonized effectively to support urban science and planning.

These examples underscore the potential gains from blending physical and social data into unified analyses. However, such integrated frameworks remain nascent, and scaling them from case studies to common practice will require further research and methodological refinement.

4.2. Data Modalities

The analytical power of social sensing in urban science stems from its ability to capture real-time, granular, and human-centered data from diverse digital sources. These data modalities differ not only in their structures and formats but also in the behavioral dimensions and spatial–temporal resolutions they capture. Unlike traditional remote sensing data that are well-structured, periodic, and often top-down in nature, social sensing modalities are characterized by spontaneity, heterogeneity, and bottom-up user generation [

35]. This section delineates the major data modalities within social sensing and their roles in advancing urban science.

4.2.1. Geolocated Social Media Platforms

Social media platforms, such as Twitter, Weibo, and Instagram, among many others, have emerged as primary sources of geolocated user-generated content that reflect public activity, sentiment, and spatial behaviors. These platforms allow users to attach location tags and timestamps to their textual or visual posts, generating vast volumes of spatially explicit behavioral data.

For instance, Fan, et al. [

48] introduced a social sensing framework that integrates textual, visual, and geographic content from social media to enhance situational awareness during urban disruptions. Their study demonstrated the effectiveness of using Twitter data for detecting the spatial spread and impact of built environment disruptions in real time, significantly augmenting conventional disaster assessment tools. Similarly, Zhang, et al. [

50] developed a semi-automated social media analytics system to quantify the societal impacts of urban disasters by mapping disruptions and community-level responses through geolocated posts. Zhu, et al. [

75] employed geotagged social media data to assess how flooding events disrupted access to emergency shelters, offering a vivid account of how physical barriers translate into social vulnerability.

These studies showcase the value of geolocated social media data as a vital resource in urban science, offering insights that are timely, granular, and reflective of real-world human experiences. By capturing immediate, localized human responses and behaviors, such data complement traditional urban monitoring approaches and enable more responsive, adaptive urban management practices, particularly in dynamic or crisis contexts.

4.2.2. Mobile Phone and GPS Trajectory Data

Mobile phone data, particularly GPS trajectories collected through smartphone applications and cellular towers, constitute another vital modality in social sensing. These data enable high-resolution tracking of human mobility patterns, allowing researchers to model commuting behaviors, activity spaces, and urban functional dynamics.

Qian, et al. [

58] demonstrated how satellite imagery can be coupled with taxi GPS trajectory data to identify urban functional areas. By using high-resolution (0.5 m/pixel) RGB satellite images from a Baidu map in 2018, their study leveraged the temporal frequency of taxi movement patterns to classify land-use types at high spatial resolution, illustrating the unique value of combining high-resolution satellite imagery with GPS data in capturing both the form and function of urban space. Likewise, Chang, et al. [

59] used Sentinel-2 imagery (Level 1C) in combination with social sensing (POI data from Baidu and mobile phone signaling data from China Unicom) and GPS data to delineate functional zones in Changchun, China, highlighting the integrative potential of trajectory-based movement data with traditional remote sensing.

These applications underscore how mobility-derived social sensing data allow urban scientists to monitor behavioral rhythms, detect land-use anomalies, and validate planning assumptions in ways that would be difficult with remote sensing alone.

4.2.3. Points of Interest (POIs) and Volunteered Geographic Information (VGI)

Another rich source of social sensing data arises from digital spatial annotations contributed by users, such as points of interest (POIs), OpenStreetMap entries, and other volunteered geographic information (VGI). These user-contributed datasets reflect the collective cognition and usage of urban space, often revealing underlying functional structures and spatial semantics invisible to sensors [

67,

76].

Bao, et al. [

32] developed a deep learning convolutional neural network (DFCNN) model that integrated remote sensing imagery with POI data for semantic recognition of urban functional zones. Their results showed that POI data significantly improved classification accuracy by contextualizing spectral information with real-world functional attributes. In the same vein, Xu, et al. [

53] proposed the RPF (Remote Sensing and POI Fused) model to identify urban functional regions, arguing that POIs serve as effective proxies for economic and social activity clusters when combined with spectral features from imagery.
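The general idea of pairing image-derived features with POI information can be illustrated with a minimal sketch. It is not the DFCNN or RPF implementation: it assumes a simple early-fusion scheme in which each zone's mean band values are concatenated with normalized POI category frequencies. The function names, the category vocabulary, and the numeric values are all hypothetical and chosen only for illustration.

```python
from collections import Counter

# Hypothetical POI category vocabulary; real studies define their own.
CATEGORY_VOCAB = ["residential", "commercial", "industrial", "education"]


def poi_feature_vector(poi_categories, category_vocab):
    """Return the normalized frequency of each POI category within one zone."""
    counts = Counter(poi_categories)
    total = sum(counts[c] for c in category_vocab)
    if total == 0:
        # A zone without any known POIs contributes an all-zero vector.
        return [0.0] * len(category_vocab)
    return [counts[c] / total for c in category_vocab]


def fuse_features(spectral_means, poi_categories, category_vocab):
    """Early fusion: concatenate image features with POI frequency features."""
    return list(spectral_means) + poi_feature_vector(poi_categories, category_vocab)


# Toy zone: three made-up mean band values and four made-up POIs.
zone_features = fuse_features(
    spectral_means=[0.21, 0.35, 0.18],
    poi_categories=["commercial", "commercial", "education", "residential"],
    category_vocab=CATEGORY_VOCAB,
)
```

The fused vector could then be passed to any classifier. Deep learning models such as those cited above typically learn the image features rather than relying on band averages.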

The use of VGI is particularly relevant in under-mapped or rapidly evolving urban contexts where official datasets may be outdated or incomplete. Zhai, et al. [

52] introduced a hybrid Place2vec approach to extract functional regions by embedding semantic relationships between POIs and urban morphology, revealing deeper layers of spatial organization often missed in traditional cartographic representations.

POI and VGI datasets uniquely enrich social sensing by integrating human knowledge and urban semantics directly into spatial analysis. This integration not only improves urban functional zone classification but also provides critical insights for urban areas where traditional data sources may be limited, outdated, or insufficiently detailed. Still, each social sensing modality has inherent biases: social media usage may not equally represent all populations or places, mobility datasets can raise privacy concerns, and VGI contributions might be uneven. These limitations necessitate careful validation and integration strategies when using social data for urban insights.

4.3. Integrative Applications: Advancing Urban Science Through Social Sensing

The distinctive value of social sensing lies not only in its data diversity but also in its transformative potential when integrated fully into urban science frameworks. While previous discussions have detailed the methodologies, data modalities, and individual use cases of social sensing, this section highlights broader integrative applications. Specifically, it explores how social sensing can fundamentally reshape urban analysis, decision-making, and governance when treated not merely as supplementary data but as a central form of urban intelligence.

4.3.1. From Supplementary Data to Central Urban Intelligence

Social sensing data, initially viewed primarily as a supplementary source of insight, has begun evolving into a fundamental component of urban intelligence [

37,

41]. Traditional urban remote sensing that is grounded largely in structured data from remote sensors often misses critical aspects of lived experience, social vulnerability, and real-time behavioral responses. Social sensing directly fills these gaps by capturing immediate and nuanced human interactions, perceptions, and sentiments.

For example, studies using geolocated tweets provide rapid situational awareness during urban disruptions, substantially augmenting traditional remote sensing [

48,

77]. Rather than merely supplementing physical observations, these social data streams offer new analytical dimensions, such as emotional and behavioral contexts, that fundamentally reshape urban disaster response frameworks.

The critical step forward now lies in explicitly positioning social sensing as a central analytical layer within urban monitoring and data collection systems. This requires urban scientists and policymakers to evolve beyond viewing social data as merely complementary and instead recognize its integral value in capturing real-time urban dynamics.

4.3.2. Enhancing Urban Governance Through Real-Time Decision-Making

Integrating social sensing into urban governance promises to make city management more adaptive and responsive. Unlike conventional governance that relies on periodic surveys or delayed datasets, social sensing offers immediate insights into urban phenomena, enabling quicker, more effective policy responses.

Zhu, et al. [

75] illustrated how social sensing data could pinpoint vulnerabilities in real time during flooding events, rapidly informing emergency shelter allocations and highlighting inequalities in urban resilience. This capacity for immediate feedback, grounded in human experience, provides cities with a powerful tool for crisis management, resource allocation, and real-time decision-making.

Further advancing this capability requires urban governance structures to not only integrate real-time sensing platforms but also to develop policy infrastructures responsive to such data. Policymakers will need to learn to interpret, validate, and incorporate social sensing inputs effectively and ethically into their decision-making processes.

4.3.3. Addressing Complex Urban Challenges: Sustainability, Equity, and Public Health

Perhaps the greatest integrative potential for social sensing lies in addressing the increasingly complex, interrelated urban challenges of sustainability, equity, and public health. Urban systems are characterized by dynamic feedback loops and cross-sectoral interactions; traditional sensing methodologies, while effective in isolation, often struggle to reflect these systemic complexities.

Social sensing’s integrative capability can bridge physical and human dimensions of urban challenges. Studies employing POI data and volunteered geographic information (VGI) [

32,

53,

67] demonstrate how these datasets can enrich functional zone identification—informing policies on sustainability and land use in rapidly evolving cities.

Moreover, the ability of social sensing to capture and map public sentiment, mobility patterns, and behavior trends provides powerful support for public health interventions, equity assessments, and resilience planning [

34,

78]. It becomes clearer now that social sensing data, with the advent of more advanced analytical methodologies, can predict public responses to health interventions or policy changes, further enabling more proactive and tailored urban management.

4.3.4. Remaining Challenges and Future Directions

Despite the potential outlined above, significant methodological and ethical challenges remain, and urban scientists must address them explicitly. Representation bias [

79] remains a substantial barrier, particularly in ensuring equitable urban governance, because digital data often underrepresent certain groups, especially disadvantaged communities. However, an analytical focus on these biases can itself yield valuable insights into digital divides and differential community engagement, thereby identifying areas for targeted outreach or alternative data collection strategies. Similarly, spatial inaccuracies in social sensing data [

80] continue to complicate its direct operational use. Conversely, these perceived inaccuracies can sometimes reflect vernacular or activity-based geographies that differ from formal administrative boundaries, offering unique perspectives on how spaces are actually used and experienced.
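One simple way to make representation bias measurable, offered here as an illustrative sketch rather than a method from the cited studies, is to compare each district's share of social sensing records with its share of the resident population. A ratio below 1 suggests under-representation. The function name, district labels, and counts are hypothetical.

```python
def representation_ratio(post_counts, population):
    """Ratio of each district's share of posts to its share of population.

    Values below 1 suggest the district is under-represented in the social
    sensing data; values above 1 suggest over-representation.
    """
    total_posts = sum(post_counts.values())
    total_pop = sum(population.values())
    ratios = {}
    for district, pop in population.items():
        post_share = post_counts.get(district, 0) / total_posts
        pop_share = pop / total_pop
        ratios[district] = post_share / pop_share
    return ratios


# Made-up counts for three hypothetical districts.
post_counts = {"north": 600, "south": 300, "east": 100}
population = {"north": 250, "south": 250, "east": 500}
ratios = representation_ratio(post_counts, population)
```

In this toy case the "east" district holds half the population but only a tenth of the posts, so its ratio falls well below 1.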

Ethical concerns are paramount when working with social sensing data [

38,

39]. Social sensing, inherently human-centered, involves sensitive data that raises multiple interconnected ethical issues. Key among these concerns are the following: (1) data privacy and re-identification risks stemming from the fusion of multiple datasets, potentially undermining individual confidentiality; (2) surveillance risks, arising when urban observatories continuously monitor individual behaviors and collective activities, raising significant questions about consent and freedom; (3) algorithmic bias, particularly in automated data classification systems, which might unintentionally reinforce existing social inequalities or obscure underlying patterns of urban disparity; and (4) citizen agency and participatory governance, highlighting the necessity for transparency, accountability, and democratic control of sensing practices.

To robustly address these ethical concerns, urban scientists and planners must adopt integrated approaches that combine both technical and procedural safeguards. Technical solutions include systematic anonymization processes, robust encryption standards, and rigorous bias audits within data analytics and machine learning algorithms. Procedural safeguards entail engaging local communities directly in consultation processes, establishing open and transparent data governance frameworks, and involving residents meaningfully in data interpretation and decision-making processes. Developing transparent policies regarding data use, strengthening privacy protections, and creating participatory platforms will be vital to ensure that social sensing practices do not perpetuate existing inequalities or erode public trust. Furthermore, challenges around scalability and data standardization [

81] must be addressed before social sensing can consistently guide urban policy and governance. Developing standardized methods and ethical protocols will be crucial to ensure that social sensing matures from promising innovation to trusted urban intelligence. Overcoming these challenges will demand collaborative progress in technical standards, rigorous ethical protocols, and strategies to broaden public engagement in data collection and interpretation.

5. Integration of Remote and Social Sensing: Towards a New Urban Observational Framework

As discussed above, social sensing has already begun reshaping urban science by providing rich, human-centered data streams that complement traditional observation methods. Yet the true potential of social sensing emerges when explicitly integrated with traditional remote sensing techniques, forging a comprehensive observational framework. This integration represents a critical theoretical and methodological shift, moving from separate modalities toward a unified urban sensing paradigm. I will now focus on the progress of theoretical foundations, methodological frameworks, and practical challenges associated with integrating these two distinct but complementary sensing technologies.

5.1. Theoretical Synergies

The theoretical basis for integrating remote and social sensing stems from their complementary epistemologies. Remote sensing provides high-frequency, high-accuracy data on physical surfaces, land use, built infrastructure, and ecological conditions. Social sensing, on the other hand, introduces human agency into the observation process, capturing mobility, perception, sentiment, and informal activities that elude traditional sensing platforms.

For example, Ouyang, et al. [

82] argued compellingly for the semantic fusion of remote sensing with social datasets like POIs and mobility patterns, transforming urban spaces from simply physical entities into socially meaningful zones shaped by human intentions and behaviors. Yu and Wang [

44] demonstrated the complementary nature of remote and social sensing in disaster contexts, highlighting that physical observations become significantly more meaningful when contextualized by real-time social insights. These theoretical synergies suggest that urban science should evolve from siloed data paradigms towards a genuinely integrative approach where physical and social realities are co-analyzed and co-understood. Developing a truly unified theory of urban observation that seamlessly merges these epistemologies remains an open challenge, as current efforts are still bridging disciplinary perspectives. Even so, these theoretical synergies are not merely abstract: they form the foundation for the development of concrete hybrid methodologies, have been demonstrated in successful integrated applications with measurable outcomes, and drive the conceptualization of new, practical metrics for urban analysis. I will turn to these particular aspects next.

5.2. Hybrid Methodologies

Building upon the theoretical synergies outlined above and leveraging the remote sensing technologies and social sensing data modalities (including POIs and AI-driven analytics) already introduced, this section details recent methodological innovations developed to effectively operationalize their integration.

These hybrid methodologies seek to reconcile the distinct data structures, resolutions, and semantic interpretations associated with each sensing modality, emphasizing interpretability, uncertainty quantification, and temporal responsiveness.

One prevalent methodological approach is advanced data fusion techniques, which often incorporate deep learning, ensemble modeling, and probabilistic frameworks. These techniques are designed to combine structured remote sensing data (e.g., satellite imagery, LiDAR scans) with unstructured or semi-structured social sensing inputs (e.g., social media posts, POI databases, volunteered geographic information). For instance, Multisource Dynamic Fusion Networks (MDFNs) have shown considerable promise, integrating imagery from platforms like Sentinel satellites with POIs and building footprints to create dynamic, multilayered urban functional zone maps [

56]. The strength of such networks lies not only in their classification accuracy but also in their interpretative depth, capturing the interactions between physical urban form and human activity.

Another critical methodological advancement in integrated sensing is the incorporation of uncertainty analysis, which explicitly addresses conflicting signals and data ambiguity between remote and social sensing modalities [

47]. Uncertainty quantification methods allow researchers to identify, weigh, and reconcile differences in spatial, temporal, and thematic information between data sources. They prove particularly valuable in transitional urban zones, where physical structures may not clearly indicate actual land use or human dynamics, and they help urban planners interpret spatial divergences between appearance-based land-use categories and behavior-driven functional classifications [

47].
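A minimal sketch of how conflicting signals between the two modalities might be quantified is given below. It is not the method of the cited work: it assumes that each modality yields a class-probability vector for a zone, averages the two vectors, and reports the normalized Shannon entropy of the fused vector together with the total variation distance between the sources. All function names and probability values are hypothetical.

```python
import math


def normalized_entropy(probs):
    """Shannon entropy scaled to [0, 1]; 1 means maximally uncertain."""
    k = len(probs)
    if k < 2:
        return 0.0
    h = -sum(p * math.log(p) for p in probs if p > 0)
    return h / math.log(k)


def fuse_with_uncertainty(rs_probs, ss_probs):
    """Average two class-probability vectors and summarize their conflict."""
    fused = [(a + b) / 2 for a, b in zip(rs_probs, ss_probs)]
    rs_label = max(range(len(rs_probs)), key=rs_probs.__getitem__)
    ss_label = max(range(len(ss_probs)), key=ss_probs.__getitem__)
    # Total variation distance: 0 for identical vectors, 1 for disjoint ones.
    conflict = 0.5 * sum(abs(a - b) for a, b in zip(rs_probs, ss_probs))
    return {
        "fused": fused,
        "entropy": normalized_entropy(fused),
        "sources_disagree": rs_label != ss_label,
        "conflict": conflict,
    }


# Toy transitional zone: imagery suggests class 0, activity suggests class 1.
result = fuse_with_uncertainty([0.8, 0.1, 0.1], [0.1, 0.8, 0.1])
```

Zones with high entropy or high conflict could be flagged for manual review rather than assigned a single label. Simple averaging is only one of many possible fusion rules.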

Furthermore, temporal data fusion methods represent another significant methodological development. By aligning synchronous but heterogeneous data streams, such as satellite imagery and real-time citizen-contributed content, the integrated sensing strategy facilitates highly responsive urban monitoring, especially critical during urban crises or disaster scenarios [

46].
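The alignment step underlying temporal fusion can be sketched as a simple time-window match between a satellite acquisition and citizen-contributed posts. This is a deliberately simplified illustration, not the approach of the cited study. The function name, the six-hour window, and the example posts are assumptions introduced here.

```python
from datetime import datetime, timedelta


def posts_near_acquisition(acquisition_time, posts, window_hours=6):
    """Return posts whose timestamps fall within a window around an image."""
    window = timedelta(hours=window_hours)
    return [p for p in posts if abs(p["time"] - acquisition_time) <= window]


# Made-up acquisition time and posts for illustration only.
acquisition = datetime(2024, 7, 1, 10, 30)
posts = [
    {"time": datetime(2024, 7, 1, 9, 0), "text": "street flooded"},
    {"time": datetime(2024, 7, 1, 15, 0), "text": "water receding"},
    {"time": datetime(2024, 7, 2, 12, 0), "text": "road reopened"},
]
matched = posts_near_acquisition(acquisition, posts)
```

Here the first two posts fall within six hours of the acquisition and are retained, while the third is excluded. An operational system would also need spatial matching and timezone handling.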

These methodological innovations illustrate a broader shift toward interpretive, adaptive, and responsive urban sensing systems. However, the transition from research prototypes to operational implementation remains challenging. The potential of these hybrid methods will only be fully realized once integrated systematically into practical urban governance frameworks, informed by both technical feasibility and grounded urban needs.

5.3. Successful Integrations of Multiple Sensing Technologies

The integration of traditional remote sensing and the emerging social sensing has seen application since scholars first realized the potential of social sensing in urban studies [

4,

72,

83,

84]. For instance, Chen, et al. [

85] offer an illustrative example through their development of a novel methodological framework that integrates remote sensing and social sensing data for enhanced urban green space (UGS) mapping; they used OpenStreetMap data to assign social functions to physically derived vegetation patches. Their study allowed for the fairly accurate mapping of UGS not merely from a physical perspective but also from the crucial but often overlooked social function perspective.

The resulting classification yielded impressive overall accuracy of 92.48% for Level I and 88.76% for Level II social function types per their definition. This integration exemplifies the potential of combining remote sensing’s ability to capture physical landscape details with social sensing’s capacity to reflect socioeconomic characteristics and urban functions.

A more recent work by Cheng, et al. [

30] further illustrates the effectiveness of integrating multisource sensing technologies through the development of an advanced multimodel fusion neural network approach for fine-resolution population mapping. This fusion approach simultaneously incorporates both local spatial details and broader geographic context. By merging convolutional neural networks (CNNs) with multilayer perceptrons (MLPs), their study effectively leveraged multisource data to create highly accurate population distribution maps at a fine spatial resolution (100 m) using Shenzhen, China, as an example (R-squared = 0.77 at the township level, as compared to the commonly used WorldPop dataset, where R-squared = 0.51, and the MLP-based model, where R-squared = 0.63). This improved accuracy facilitates more reliable resource allocation, urban planning, and disaster management, demonstrating the broader potential of integrating multisource sensing technologies in urban analysis.
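For readers less familiar with this kind of evaluation, the sketch below shows one common way a township-level R-squared can be obtained: gridded population predictions are summed per township and compared with reference counts using the coefficient of determination. Whether the cited study used exactly this definition is not stated here, so the sketch is an assumption. The function names and numbers are hypothetical and do not reproduce any reported result.

```python
def aggregate_to_units(cell_values, cell_unit_ids):
    """Sum grid-cell predictions into totals per administrative unit."""
    totals = {}
    for value, unit in zip(cell_values, cell_unit_ids):
        totals[unit] = totals.get(unit, 0.0) + value
    return totals


def r_squared(observed, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


# Made-up grid predictions assigned to three hypothetical townships.
cell_predictions = [10, 20, 30, 40, 50, 60]
cell_townships = ["A", "A", "B", "B", "C", "C"]
reference_counts = {"A": 30, "B": 80, "C": 100}

township_totals = aggregate_to_units(cell_predictions, cell_townships)
units = sorted(reference_counts)
r2 = r_squared(
    [reference_counts[u] for u in units],
    [township_totals[u] for u in units],
)
```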

In another study, Zhang, et al. [

86] attempted to address the robustness and accuracy challenges inherent in multimodal data fusion with remote and social sensing data. In their work on urban region function recognition, they proposed a sophisticated multimodal fusion network guided by feature co-occurrence between remote sensing and social sensing features. This approach allows the integrated data fusion framework to suppress the many noise features that are often inevitable in the integrated remote and social sensing framework. The application of graph convolutional networks with specialized node weighting and interactive update layers enabled the extraction of valuable periodic features from social sensing data, greatly improving both the robustness and the precision of the integration.

The application of integrated sensing technology is only starting. Still, these applications, while demonstrating the power of integration, also exemplify a common first-generation integration model: social data is often used as a supplementary layer to enrich a physical baseline. It answers the question “what is this place used for?” but does not fundamentally alter the analysis of the urban system itself. These foundational successes highlight the potential of fusion but also underscore the need for a more deeply integrated, systemic approach—a theme we develop in our proposed framework.

5.4. Challenges and Constraints

Despite clear theoretical and empirical advances, the integration of remote and social sensing remains fraught with challenges, many of which reflect deeper tensions in urban science itself. First, data heterogeneity is a persistent technical barrier. As Salentinig and Gamba [

87] noted in their work on SAR image fusion, even integrating multi-resolution physical data is non-trivial; adding social data introduces additional variability in scale, frequency, and reliability. Most fusion models still require careful pre-processing and manual calibration, limiting scalability and reproducibility.

Second, representational bias remains a structural issue. Knoble and Yu [

88] demonstrated that even when social sensing is employed to measure environmental justice outcomes, it often underrepresents communities most vulnerable to environmental harm—especially those less digitally connected. Integrating remote sensing does not resolve this problem; in some cases, it amplifies it by overlaying high-resolution spatial data onto incomplete social baselines.

Third, privacy and ethics must be at the forefront. Fuller, et al. [

39] raised important concerns about passive data collection, particularly when GPS traces or inferred activity patterns are used without explicit consent. Lee, et al. [

38] expanded on this concern, calling for full-lifecycle ethical frameworks covering data acquisition, algorithmic interpretation, and downstream application. When integrated with remote sensing, which already raises surveillance concerns, the ethical stakes become even higher.

Finally, standardization and transferability remain elusive. Many successful integration frameworks, such as the one developed by Qiao, et al. [

56], are context-specific, dependent on local data availability and platform setups. Without open standards, APIs, and shared benchmarks, it is difficult to compare results across cities or scale up successful models. Progress will require both technical and institutional innovations: improved algorithms to handle heterogeneous data, broader and more inclusive data collection to mitigate bias, robust privacy frameworks, and the development of open standards that enable sharing and scaling of integrated sensing solutions.

These identified methodological and practical challenges underscore deeper theoretical considerations necessary for integrated urban sensing frameworks. To meaningfully address these barriers, the next section explores the essential theoretical advancements required to transition urban sensing from siloed methodologies towards a unified, system-based conceptual paradigm.

6. Towards a Theoretical Advancement in Urban Remote Sensing

The integration of remote sensing and social sensing is more than a methodological innovation; it fundamentally reshapes the theoretical foundation of urban observation. The conceptual foundation of our proposed framework rests on rethinking the nature of urban observation. Historically, urban remote sensing has prioritized quantifiable, physical characteristics visible from above, such as built-up density, surface temperature, vegetation cover, and infrastructure footprint, often neglecting dynamic human dimensions. Social sensing, by contrast, privileges the lived, experiential, and behavioral side of urban life, such as mobility flows, public sentiment, functional usage, and social vulnerability, creating opportunities for a holistic theoretical understanding of cities as dynamic socio-ecological systems. This section moves from critique to construction by systematically building our proposed systems-based framework for an integrated hybrid urban observatory. This integrated approach compels urban scholars to reconceptualize what constitutes urban knowledge as something that brings structure and agency, matter and meaning, and human behaviors and the environment into the same analytical space [

89].

6.1. Rethinking Urban Observations

Conventional urban remote sensing is often critiqued for its assumptions of visibility and fixity [

18]. It emphasizes mapping what is there (land surfaces, rooftops, and street patterns) rather than what is happening or how it is experienced. Yet cities are no longer adequately described through static geographies. They are fluid, multi-scalar systems in which the observable is shaped by, and shapes, socio-political processes.

Recent works reflect an emerging discomfort with this limitation. For instance, Wentz, et al. [

6] argued for urban remote sensing to move beyond structural analysis toward supporting research in environmental and social change. Liu, et al. [

90] and Liu, et al. [

9] advanced social sensing as a corrective, bringing attention to the behavioral rhythms and adaptive strategies that define urban life. Ouyang, et al. [

82] explicitly proposed “semantic fusion” to bridge the physical and social readings of urban space, recognizing that neither is complete on its own.

These perspectives suggest that urban sensing must evolve from a focus on what is visible to a framework that interprets what is meaningful, hence the rationale for integrating social sensing into the broader remote sensing theoretical framework. A rethought urban observation model would prioritize hybridity, not only of data sources but of theoretical assumptions. Cities are not just assemblages of buildings and infrastructure but also patterns of use, emotion, exclusion, and resilience and, above all, communities of people: the urban dwellers. To operationalize this rethought urban observation model, the framework requires a new class of metrics capable of capturing these multifaceted urban realities. The following section delves into the development of new, practical metrics and “tools” that do so by synthesizing remote and social sensing data.

6.2. Building New Metrics for a Hybrid Paradigm

To support this new observational logic, urban remote sensing science must develop new metrics that synthesize remote and social data, not simply overlay them. These new metrics must go beyond traditional land-use classifications or image-derived indices (e.g., NDVI, NDBI) to reflect urban functionality, emotional geographies, and experiential inequality.
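For reference, the two image-derived indices named above are conventionally computed from band reflectances as shown below. The symbols $\rho_{\mathrm{NIR}}$, $\rho_{\mathrm{Red}}$, and $\rho_{\mathrm{SWIR}}$ (near-infrared, red, and shortwave-infrared reflectance) are notation assumed here for illustration and do not appear elsewhere in this review.

```latex
\[
\mathrm{NDVI} = \frac{\rho_{\mathrm{NIR}} - \rho_{\mathrm{Red}}}{\rho_{\mathrm{NIR}} + \rho_{\mathrm{Red}}},
\qquad
\mathrm{NDBI} = \frac{\rho_{\mathrm{SWIR}} - \rho_{\mathrm{NIR}}}{\rho_{\mathrm{SWIR}} + \rho_{\mathrm{NIR}}}
\]
```

Both indices range from $-1$ to $1$ and describe only the physical surface, which is why they cannot by themselves express how a space is used or experienced.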

The theoretical transition toward integrated sensing paradigms directly informs the design and application of these novel urban metrics. The methodologies introduced earlier, including Multisource Dynamic Fusion Networks (MDFNs) and uncertainty quantification (as detailed in

Section 5.2 and

Section 5.3), exemplify how theoretical integration translates into practical metrics. For example, MDFN’s fusion of spatial and social data provides a foundation for new hybrid metrics that measure urban functionality, integrating human behaviors with physical structures. Furthermore, semantic models [

91] offer insight into how sentiment and human activity can reclassify urban zones, not by zoning code or building form but by how people use and interpret space during flooding events. In addition, POI data is frequently integrated with remote imagery [

32,

56,

67,

92], which can shift land classification from rigid categories to probabilistic functions based on real-world activity. Integrating these new data sources into the existing sensing framework would be a clear starting point for creating new metrics for urban sensing technology.
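As a minimal sketch of what a "probabilistic function" for a zone might look like, the example below converts the POI categories observed inside one zone into a probability distribution over urban functions. The function name `function_probabilities`, the category labels, and the use of additive smoothing are illustrative assumptions, not a method taken from the cited studies.

```python
from collections import Counter


def function_probabilities(poi_categories, categories=None, smoothing=1.0):
    """Turn POI category labels in one zone into function probabilities.

    poi_categories: list of category labels, one per POI in the zone.
    categories: optional full list of categories to report (hypothetical
        taxonomy); defaults to the categories actually observed.
    smoothing: additive (Laplace) smoothing, so that a category with no
        POIs still receives a small non-zero probability.
    """
    counts = Counter(poi_categories)
    cats = sorted(categories if categories is not None else counts)
    total = sum(counts[c] for c in cats) + smoothing * len(cats)
    if total == 0:
        return {}
    return {c: (counts[c] + smoothing) / total for c in cats}


# Hypothetical zone containing four POIs.
zone_pois = ["retail", "retail", "residential", "office"]
probs = function_probabilities(
    zone_pois, categories=["office", "residential", "retail"], smoothing=0.0
)
print(probs)
```

In practice, such probabilities would be combined with image-derived features rather than used alone; the sketch only illustrates the move from a single hard label to a distribution.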

The next step is to formalize such metrics into integrated frameworks. One possible direction is the creation of an activity density index, which would synthesize foot traffic data, GPS trajectories, and high-resolution optical or LiDAR remote sensing imagery (defining the physical layout of streets, buildings, and public spaces like parks). The synthesis would involve spatially overlaying activity data onto the RS-defined physical context, calculating activity densities (e.g., persons per hour per square meter) for specific zones, and analyzing temporal patterns to provide a more behaviorally grounded view of urban space utilization. Similarly, coupling sentiment analysis from social media with environmental indicators such as surface temperature or vegetation loss could yield a sentiment–environmental coupling score, a metric that captures not only where risks occur but how they are perceived and experienced by the public [

88]. For instance, a sentiment–environmental coupling score could identify areas where high land surface temperature (LST) values correlate with a high density of negative-sentiment posts related to heat, or where other physical conditions and expressed sentiments coincide in similar ways.
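The two metrics described above can be sketched in a few lines. The example assumes that activity counts have already been aggregated per zone and per hour, and that LST and negative-sentiment post densities are available for the same set of zones. The function names and the choice of a Pearson correlation as the coupling score are illustrative assumptions, one of several plausible formalizations.

```python
from math import sqrt


def activity_density(hourly_person_counts, zone_area_m2):
    """Mean activity density in persons per hour per square meter."""
    if not hourly_person_counts or zone_area_m2 <= 0:
        raise ValueError("need at least one hourly count and a positive area")
    mean_per_hour = sum(hourly_person_counts) / len(hourly_person_counts)
    return mean_per_hour / zone_area_m2


def sentiment_environment_coupling(lst_values, negative_post_density):
    """Pearson correlation across zones between LST and negative-post density.

    Returns a value in [-1, 1]; 0.0 is returned if either input is constant.
    """
    n = len(lst_values)
    if n != len(negative_post_density) or n < 2:
        raise ValueError("inputs must have equal length of at least 2")
    mean_x = sum(lst_values) / n
    mean_y = sum(negative_post_density) / n
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(lst_values, negative_post_density))
    sxx = sum((x - mean_x) ** 2 for x in lst_values)
    syy = sum((y - mean_y) ** 2 for y in negative_post_density)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / sqrt(sxx * syy)


# Hypothetical inputs: three hourly counts for a 600 m^2 plaza,
# and LST (deg C) with negative-post density for four zones.
print(activity_density([120, 180, 300], 600.0))
print(sentiment_environment_coupling([30, 32, 34, 36], [1, 2, 3, 4]))
```

A correlation of this kind indicates co-occurrence only; it does not establish that heat causes the negative sentiment, and it inherits the representational biases of the underlying social data.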

Another forward-looking metric could be a hybrid livability index [

93]. Unlike conventional indices that rely solely on objective measures, this approach would reflect the experiential dimensions of urban well-being. It would synthesize objective environmental quality measures derived from remote sensing (such as air quality parameters, green space provision mapped via optical/hyperspectral data, urban heat island intensity from thermal data) with subjective public perception data sourced from social sensing (such as geolocated posts on safety, satisfaction with amenities, or community well-being expressed on social platforms). This index would offer a more holistic view of urban well-being by identifying potential disparities between physically measured environmental conditions and residents’ lived experiences of those conditions [

94]. Together, these examples illustrate how hybrid metrics can shift urban science from static descriptors toward dynamic indicators of how cities function and how they feel to inhabitants. By formally integrating such hybrid metrics into urban monitoring systems, cities can move closer to an integrated observatory model, one that continuously gauges both physical conditions (traditional remote sensing) and human experiences (social sensing) for decision support.
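A hybrid livability index of this kind could be prototyped as follows. The sketch assumes that each zone already has one aggregated objective score (from remote sensing) and one aggregated perception score (from social sensing), where higher values mean better conditions. The min-max scaling, the equal default weighting, and the names `min_max_scale` and `hybrid_livability` are illustrative assumptions rather than an established index definition.

```python
def min_max_scale(values):
    """Rescale values to [0, 1]; constant inputs map to 0.5."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.5 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def hybrid_livability(objective_scores, perception_scores, w_objective=0.5):
    """Combine objective and perceived conditions for each zone.

    Returns one dict per zone with:
      index: weighted mean of the two scaled scores
      gap:   scaled objective score minus scaled perception score;
             a large positive gap flags zones that measure well
             but are experienced poorly.
    """
    if len(objective_scores) != len(perception_scores):
        raise ValueError("score lists must have the same length")
    if not objective_scores:
        raise ValueError("score lists must not be empty")
    if not 0.0 <= w_objective <= 1.0:
        raise ValueError("w_objective must lie in [0, 1]")
    obj = min_max_scale(objective_scores)
    per = min_max_scale(perception_scores)
    return [
        {"index": w_objective * o + (1.0 - w_objective) * p, "gap": o - p}
        for o, p in zip(obj, per)
    ]


# Hypothetical scores for three zones.
for zone in hybrid_livability([10, 20, 30], [3, 2, 1]):
    print(zone)
```

Reporting the gap alongside the index keeps the disparity between measured and experienced conditions visible instead of averaging it away.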

These metrics can support not just research but also decision-making. They can guide zoning revisions, resource allocation, and crisis response in more responsive, participatory, and evidence-based ways. However, generating and interpreting these dynamic metrics requires an analytical engine capable of understanding feedback loops and emergent behaviors—a shift toward systems-based sensing.

6.3. Toward Systems-Based Urban Sensing

Addressing methodological challenges highlighted previously (

Section 5.4)—such as data heterogeneity and scalability—necessitates a shift toward systems-based observational frameworks. Integrated sensing facilitates viewing urban phenomena not as isolated spatial or social variables but as interacting components within dynamic urban systems. This systems-based approach explicitly incorporates feedback loops, temporal dynamics, and emergent behaviors that traditional remote sensing or isolated social sensing methods alone cannot adequately capture. Yet most current sensing models—remote or social—are optimized for classification, not for system behavior. The fusion of sensing modalities opens the door to systemic modeling approaches that can move beyond snapshot diagnostics toward dynamic understanding.

Recent efforts point in this direction. Yan, et al. [

47] addressed uncertainty in fused urban land-use maps, acknowledging that different sensing modes may tell different “truths” about the same space. Such recognition of ambiguity and multiplicity is foundational to systems thinking. Similarly, Du, et al. [

24] called for remote sensing systems that can not only detect change but also anticipate it by embedding sensing in temporal models of urban transformation.

What is needed now is a sensing model that reflects cities as evolving, co-produced systems. One promising direction involves the creation of coupled human–environment observatories that integrate platforms capable of continuously monitoring both environmental processes and human behavioral responses. These observatories could track, for example, the co-evolution of urban heat exposure and adaptive behaviors such as shade-seeking or reduced outdoor activity.

In parallel, predictive feedback models are needed to anticipate the consequences of interventions. These would simulate how changes in urban design, such as adding green infrastructure, might ripple through human behavior, health outcomes, and ecological systems. At a larger scale, networked urban twin systems could emerge: dynamic, interactive models that fuse physical data (such as land cover or flood risk) with social data (such as mobility, sentiment, or service demand), enabling planners to explore cascading effects and policy trade-offs in real time.

By weaving these elements into a systems framework, urban remote sensing moves beyond monitoring and classification toward explanation, simulation, and anticipatory governance, making it possible to understand not only what cities are but also how they behave and might change. Such approaches will enable urban science not only to map what has happened but also to anticipate what could happen, thus helping cities adapt to climate pressures, social change, and technological disruption. For such anticipatory power to be translated into action, the framework requires a robust and ethically grounded interface with urban policy.

6.4. Policy Implications