Cross-Year Rapeseed Yield Prediction for Harvesting Management Using UAV-Based Imagery

Abstract

1. Introduction

2. Materials and Methods

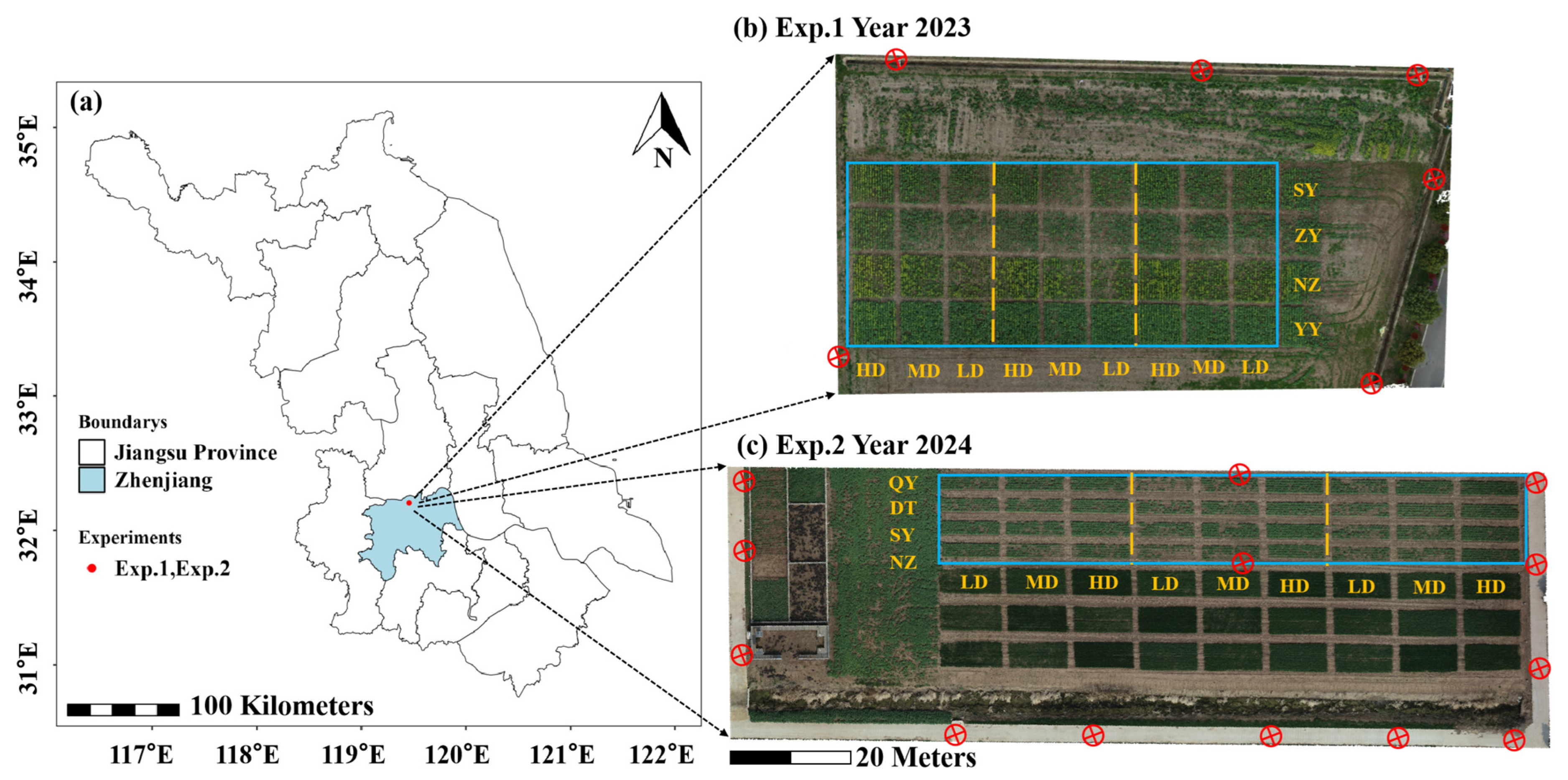

2.1. Test Site and Field Designs

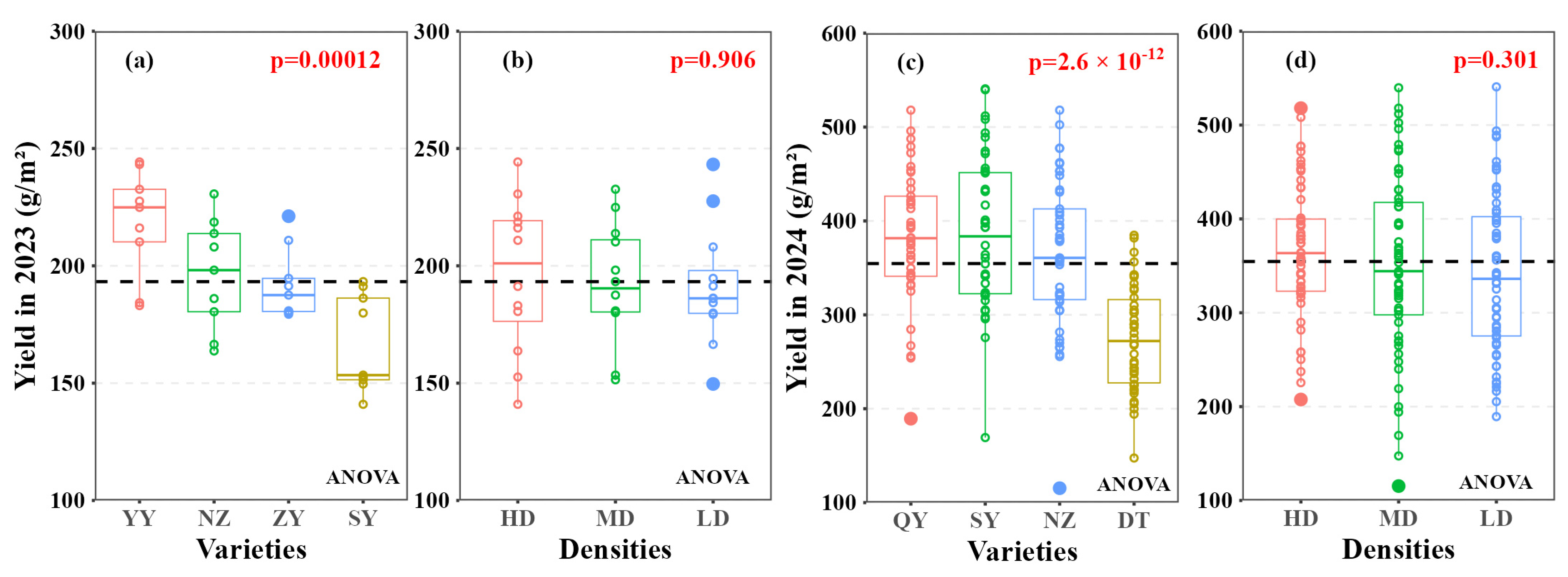

2.2. Field Trials over Two Years

2.2.1. Field Yield Data Acquisition

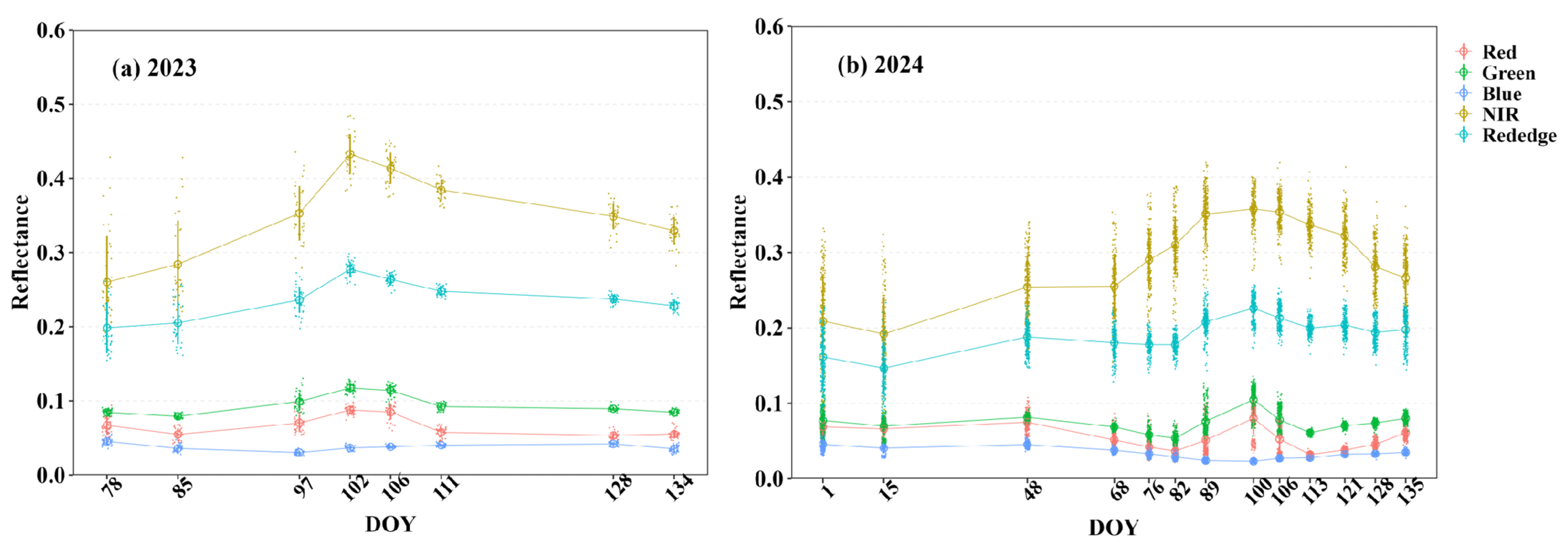

2.2.2. Multispectral Image Collection

2.3. Calculation and Selection of Features

2.3.1. Vegetation Index

2.3.2. Texture Features

2.4. Regression Models

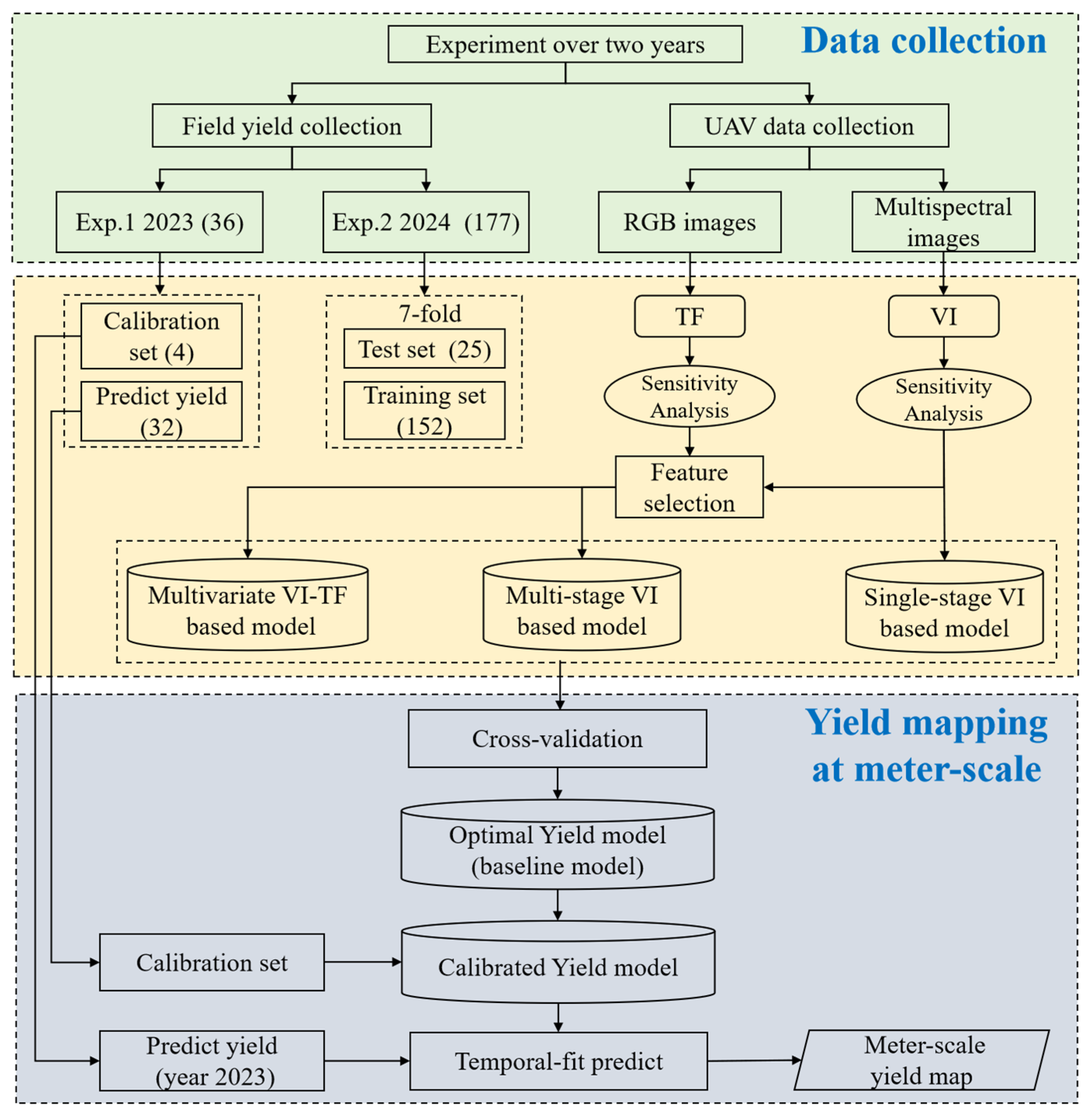

2.5. Yield Prediction Framework

2.6. Software

3. Results

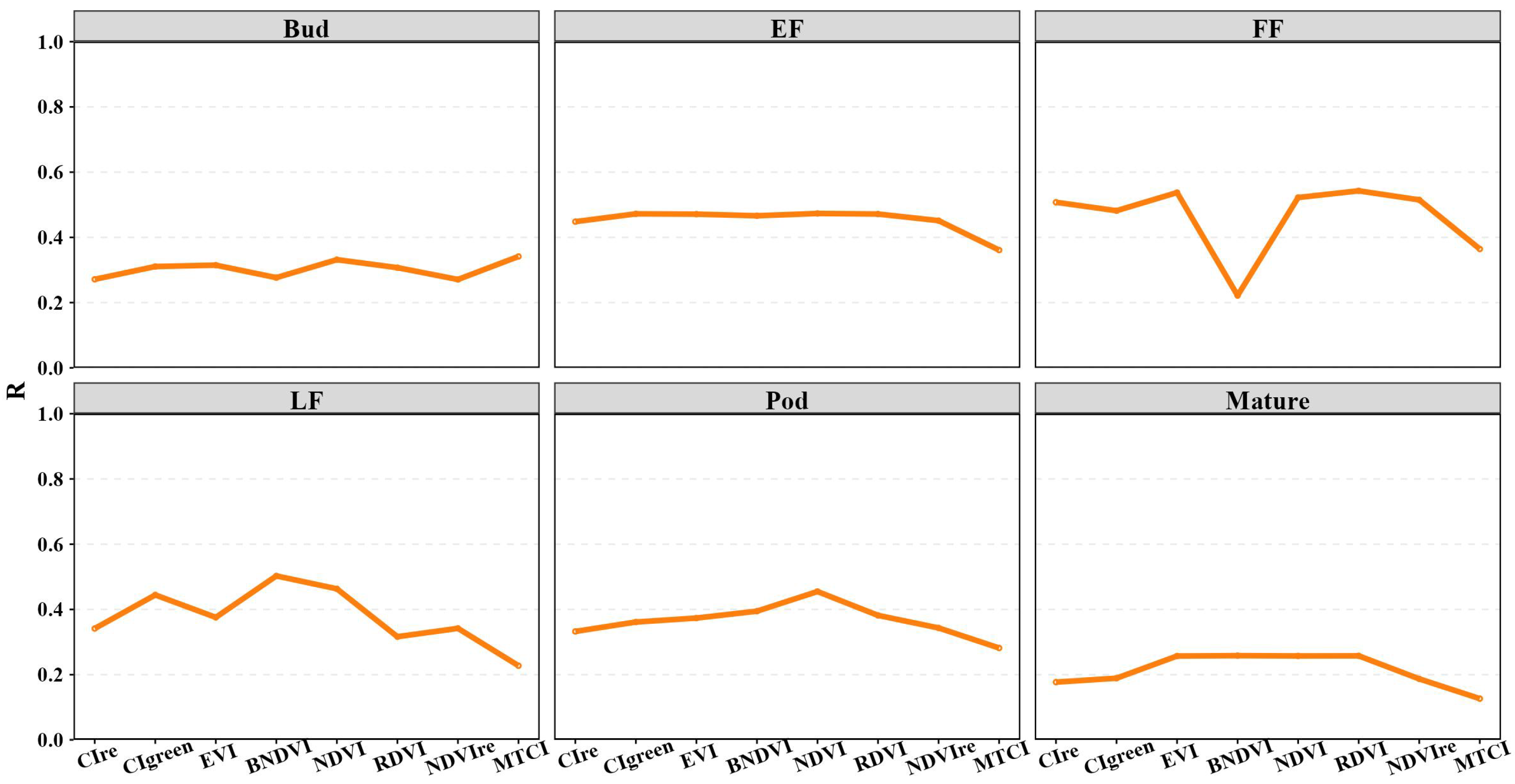

3.1. Sensitivity Analysis of Model Features

3.1.1. Relationship Between Single-Stage VIs and Rapeseed Yield

3.1.2. Relationship Between Single-Stage TFs and Rapeseed Yield

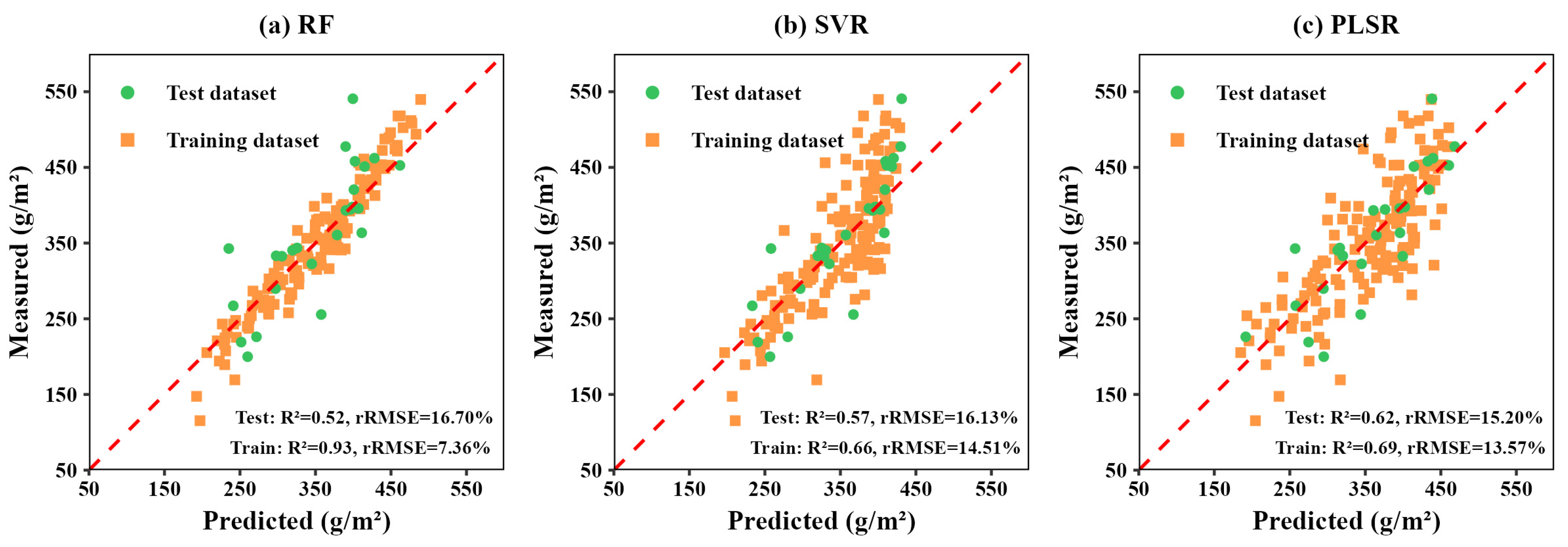

3.2. Results of Yield Prediction in 2024

3.2.1. Single-Stage Prediction

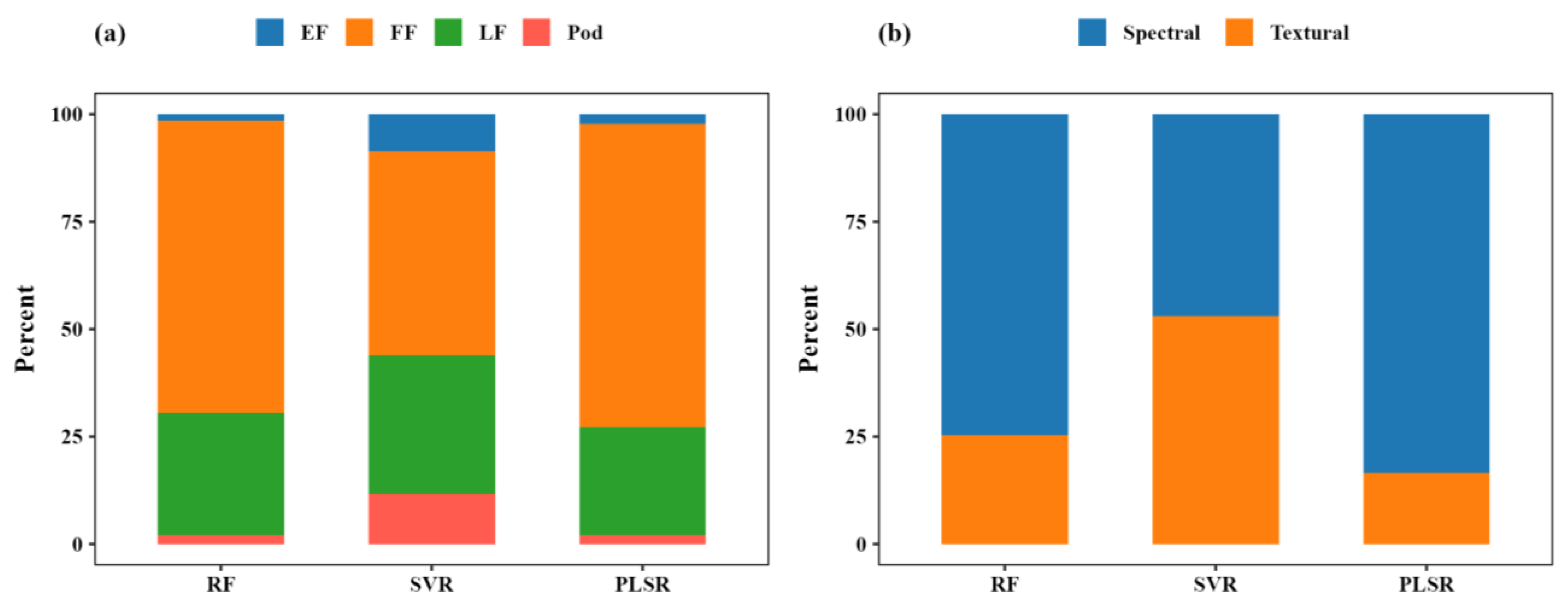

3.2.2. Multi-Stage Prediction

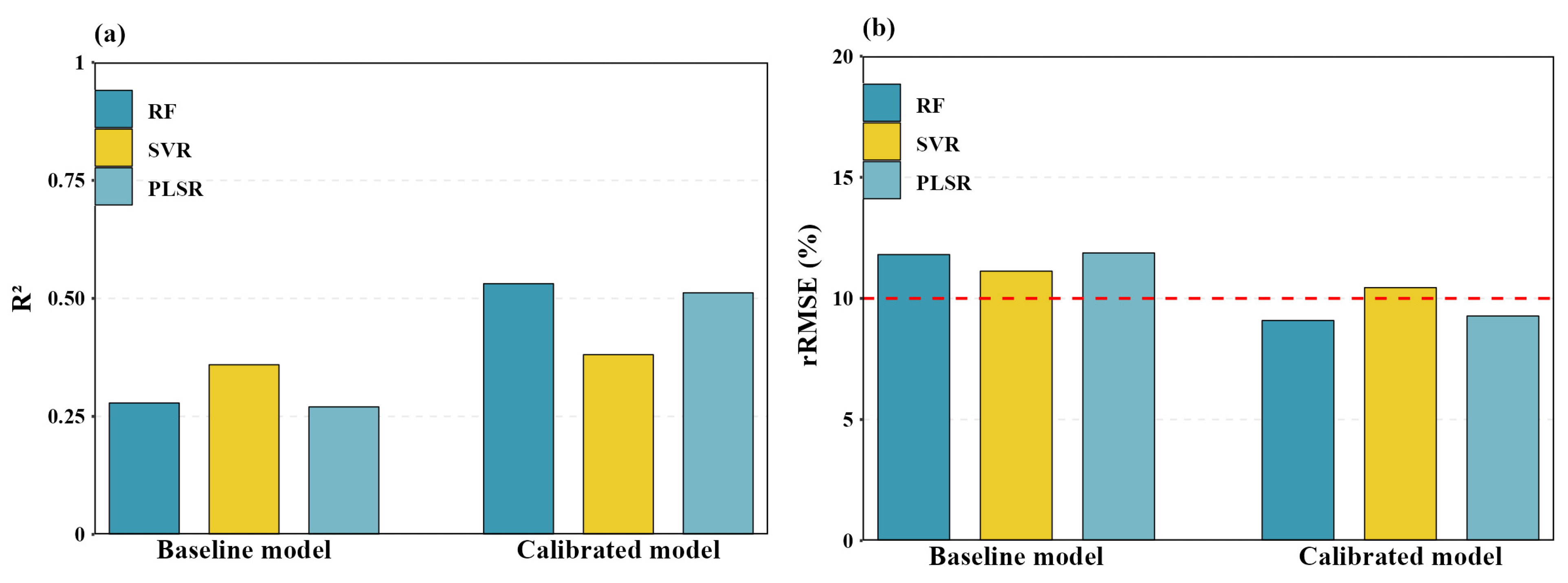

3.3. Validation of Yield Prediction in the Temporal-Fit Scenario

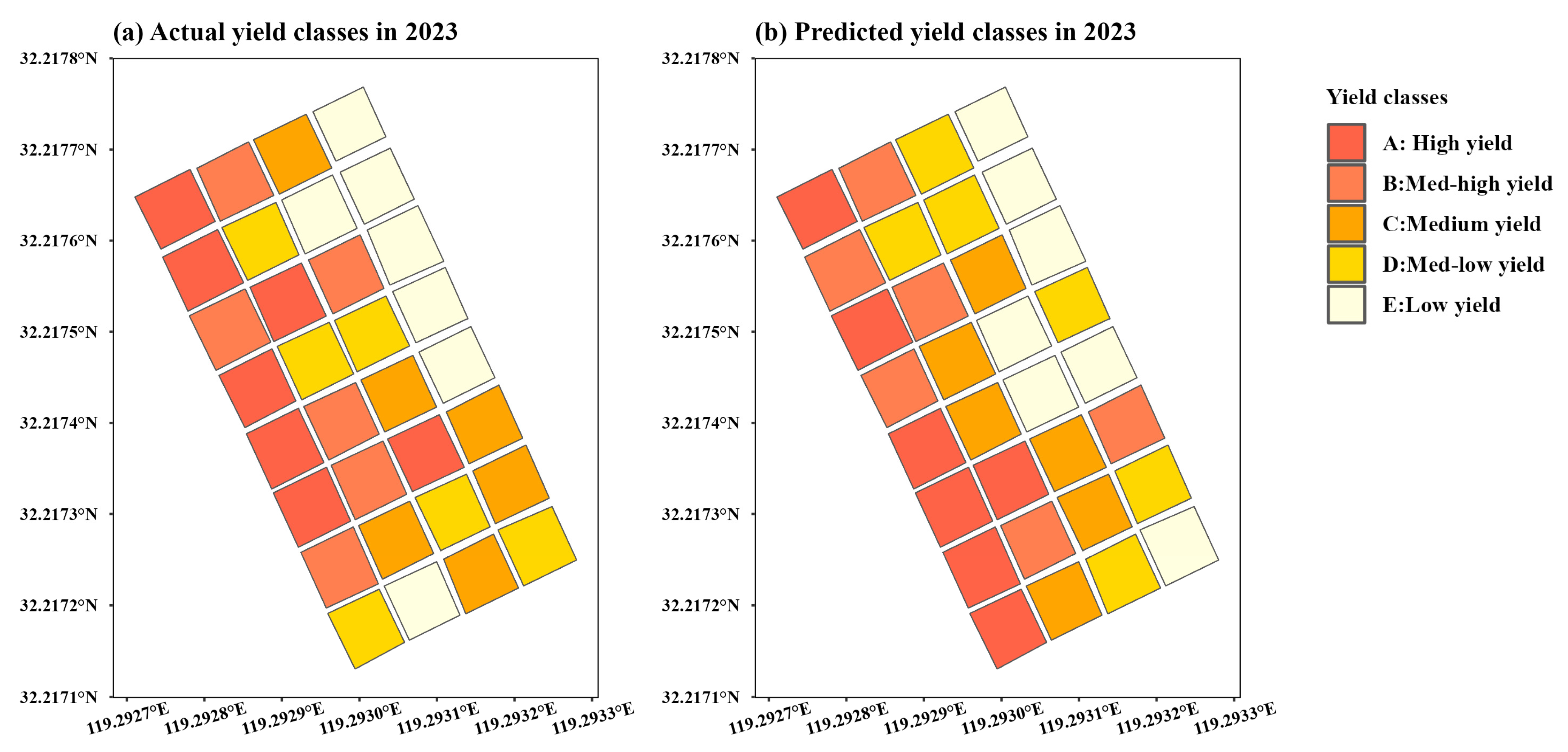

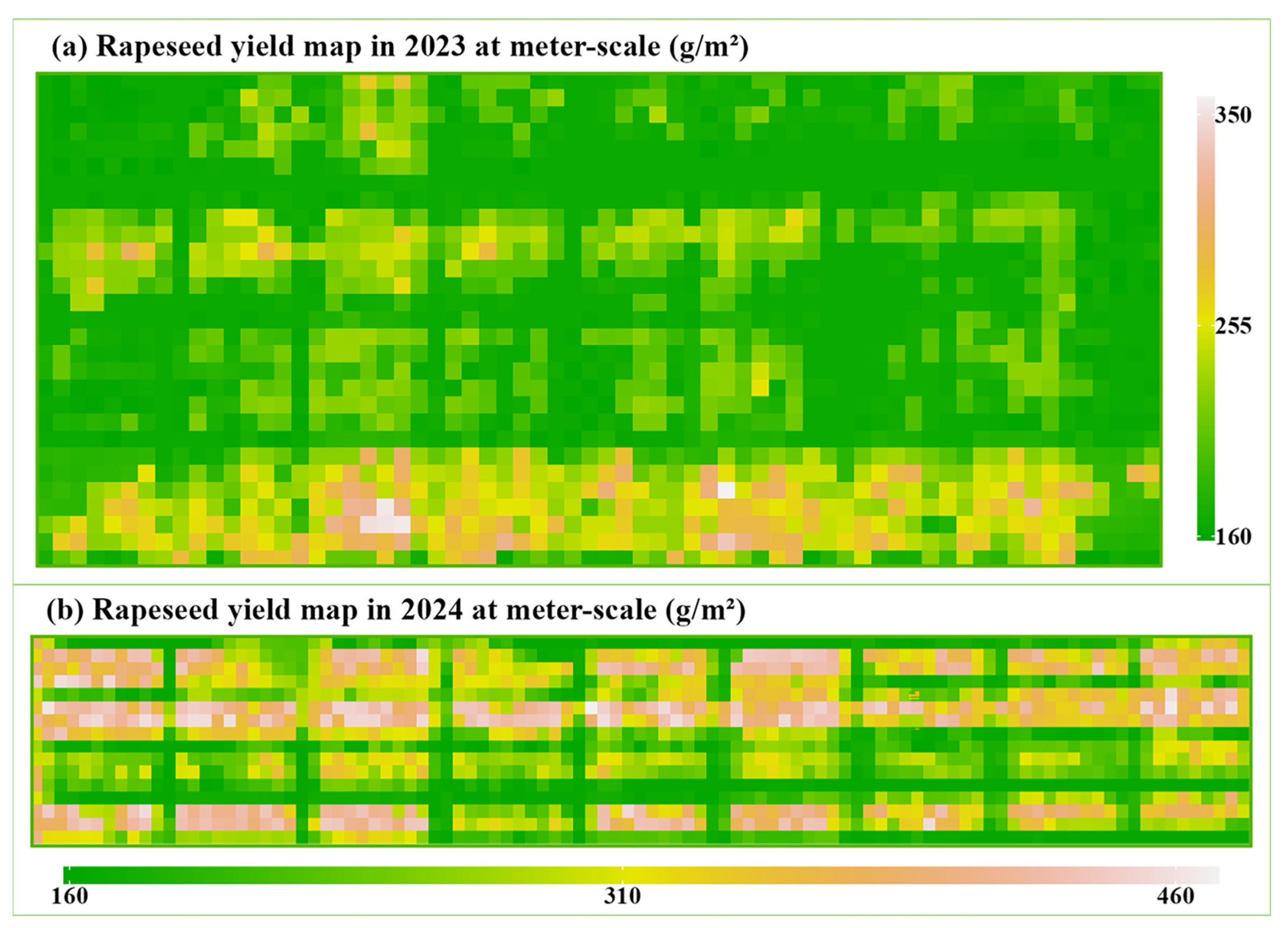

3.4. Yield Distribution Map at Meter-Scale

4. Discussion

4.1. Contrasting Multi-Stage and Multivariate Models

4.2. Yield Prediction Performance in the Temporal-Fit Scenario

4.3. Application Potential and Limitations

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Velde, M.; Bouraoui, F.; Aloe, A. Pan-European regional-scale modelling of water and N efficiencies of rapeseed cultivation for biodiesel production. Glob. Change Biol. 2008, 15, 24–37.
2. Nowosad, K.; Liersch, A.; Popławska, W.; Bocianowski, J. Genotype by environment interaction for seed yield in rapeseed (Brassica napus L.) using additive main effects and multiplicative interaction model. Euphytica 2015, 208, 187–194.
3. Kirkegaard, J.A.; Lilley, J.M.; Brill, R.D.; Ware, A.H.; Walela, C.K. The critical period for yield and quality determination in canola (Brassica napus L.). Field Crops Res. 2018, 222, 180–188.
4. Wan, L.; Liu, Y.; He, Y.; Cen, H. Prior knowledge and active learning enable hybrid method for estimating leaf chlorophyll content from multi-scale canopy reflectance. Comput. Electron. Agric. 2023, 214, 106485.
5. Zhao, Y.; Meng, Y.; Han, S.; Feng, H.; Yang, G.; Li, Z. Should phenological information be applied to predict agronomic traits across growth stages of winter wheat? Crop J. 2022, 10, 1346–1352.
6. Lu, X.; Shen, Y.; Xie, J.; Yang, X.; Shu, Q.; Chen, S.; Shen, Z.; Cen, H. Phenotyping of Panicle Number and Shape in Rice Breeding Materials Based on Unmanned Aerial Vehicle Imagery. Plant Phenomics 2024, 6, 0265.
7. Malambo, L.; Popescu, S.C.; Murray, S.C.; Putman, E.; Pugh, N.A.; Horne, D.W.; Richardson, G.; Sheridan, R.; Rooney, W.L.; Avant, R.; et al. Multitemporal field-based plant height estimation using 3D point clouds generated from small unmanned aerial systems high-resolution imagery. Int. J. Appl. Earth Obs. Geoinf. 2018, 64, 31–42.
8. Lin, Y.C. Ayman. Quality control and crop characterization framework for multi-temporal UAV LiDAR data over mechanized agricultural fields. Remote Sens. Environ. 2021, 256, 112307.
9. Teshome, F.T.; Bayabil, H.K.; Hoogenboom, G.; Schaffer, B.; Singh, A.; Ampatzidis, Y. Unmanned aerial vehicle (UAV) imaging and machine learning applications for plant phenotyping. Comput. Electron. Agric. 2023, 212, 108064.
10. Gu, Q.; Huang, F.; Lou, W.; Zhu, Y.; Hu, H.; Zhao, Y.; Zhou, H.; Zhang, X. Unmanned aerial vehicle-based assessment of rice leaf chlorophyll content dynamics across genotypes. Comput. Electron. Agric. 2024, 221, 108821.
11. Zhang, M.; Gao, Y.; Zhang, Y.; Fischer, T.; Zhao, Z.; Zhou, X.; Wang, Z.; Wang, E. The contribution of spike photosynthesis to wheat yield needs to be considered in process-based crop models. Field Crops Res. 2020, 257, 107931.
12. Li, H.; Zhao, C.; Yang, G.; Feng, H. Variations in crop variables within wheat canopies and responses of canopy spectral characteristics and derived vegetation indices to different vertical leaf layers and spikes. Remote Sens. Environ. 2015, 169, 358–374.
13. Sulik, J.J.; Long, D.S. Spectral indices for yellow canola flowers. Int. J. Remote Sens. 2015, 36, 2751–2765.
14. Dong, T.; Shang, J.; Qian, J.; Ma, B.; Kovacs, B.; Walters, J.M.; Jiao, D.; Geng, X.; Shi, X.; Cheng, Y. Assessment of red-edge vegetation indices for crop leaf area index estimation. Remote Sens. Environ. 2019, 222, 90–102.
15. Sulik, J.J.; Long, D.S. Spectral considerations for modeling yield of canola. Remote Sens. Environ. 2016, 184, 161–174.
16. Peng, Y.; Zhu, T.E.; Li, Y.; Dai, C.; Fang, S.; Gong, Y.; Wu, X.; Zhu, R.; Liu, K. Remote prediction of yield based on LAI estimation in oilseed rape under different planting methods and nitrogen fertilizer applications. Agric. For. Meteorol. 2019, 271, 116–125.
17. Fan, H.; Liu, S.; Li, J.; Li, L.; Dang, L.; Ren, T.; Lu, J. Early prediction of the seed yield in winter oilseed rape based on the near-infrared reflectance of vegetation (NIRv). Comput. Electron. Agric. 2021, 186, 106314.
18. Duan, B.; Fang, S.; Gong, Y.; Peng, Y.; Wu, X.; Zhu, R. Remote estimation of grain yield based on UAV data in different rice cultivars under contrasting climatic zones. Field Crops Res. 2021, 267, 108148.
19. Lu, L.; Luo, J.; Xin, Y.; Duan, H.; Sun, Z.; Qiu, Y.; Xiao, Q. How can UAV contribute in satellite-based Phragmites australis aboveground biomass estimating? Int. J. Appl. Earth Obs. Geoinf. 2022, 114, 102712.
20. Zhou, X.; Zheng, H.B.; Xu, X.Q.; He, J.Y.; Ge, X.K.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.; Tian, Y. Predicting grain yield in rice using multi-temporal vegetation indices from UAV-based multispectral and digital imagery. ISPRS J. Photogramm. Remote Sens. 2017, 130, 246–255.
21. Navarro, A.; Young, M.; Allan, B.; Carnell, P.; Macreadie, P.; Ierodiaconou, D. The application of unmanned aerial vehicles (UAVs) to estimate above-ground biomass of mangrove ecosystems. Remote Sens. Environ. 2020, 242, 111741.
22. Du, R.; Lu, J.; Xiang, Y.; Zhang, F.; Chen, J.; Tang, Z.; Shi, H.; Wang, X.; Li, W. Estimation of winter canola growth parameter from UAV multi-angular spectral-texture information using stacking-based ensemble learning model. Comput. Electron. Agric. 2024, 222, 109074.
23. Ji, Y.; Liu, Z.; Liu, R.; Wang, Z.; Zong, X.; Yang, T. High-throughput phenotypic traits estimation of faba bean based on machine learning and drone-based multimodal data. Comput. Electron. Agric. 2024, 227, 109584.
24. Mateo-Sanchis, A.; Piles, M.; Muñoz-Marí, J.; Adsuara, J.E.; Pérez-Suay, A.; Camps-Valls, G. Synergistic integration of optical and microwave satellite data for crop yield estimation. Remote Sens. Environ. 2019, 234, 111391.
25. van Klompenburg, T.; Kassahun, A.; Catal, C. Crop yield prediction using machine learning: A systematic literature review. Comput. Electron. Agric. 2020, 177, 105735.
26. Li, Z.; Zhao, Y.; Taylor, J.; Gaulton, R.; Jin, X.; Song, X.; Li, Z.; Meng, Y.; Chen, P.; Feng, H.; et al. Comparison and transferability of thermal, temporal and phenological-based in-season predictions of above-ground biomass in wheat crops from proximal crop reflectance data. Remote Sens. Environ. 2022, 273, 112133.
27. Louzada Pereira, L.; Carvalho Guarçoni, R.; Soares Cardoso, W.; Côrrea Taques, R.; Rizzo Moreira, T.; da Silva, S.F.; Schwengber ten Caten, C. Influence of Solar Radiation and Wet Processing on the Final Quality of Arabica Coffee. J. Food Qual. 2018, 2018, 6408571.
28. Michau, G.; Fink, O. Unsupervised transfer learning for anomaly detection: Application to complementary operating condition transfer. Knowl.-Based Syst. 2021, 216, 106895.
29. Meiyan, S.; Mengyuan, S.; Qizhou, D.; Xiaohong, Y.; Baoguo, L.; Yuntao, M. Estimating the maize above-ground biomass by constructing the tridimensional concept model based on UAV-based digital and multi-spectral images. Field Crops Res. 2022, 282, 107472.
30. Ye, Y.; Jin, L.; Bian, C.; Xian, G.; Lin, Y.; Liu, J.; Guo, H. Estimating potato aboveground biomass using unmanned aerial vehicle RGB imagery and analyzing its relationship with tuber biomass. Field Crops Res. 2024, 319, 109657.
31. Stamm, M.J.; Ciampitti, I.A. Canola Growth Stages and Development; Kansas State University Agricultural Experiment Station and Cooperative Extension Service: Manhattan, KS, USA, 2017; p. MF3236.
32. Roujean, J.-L.; Breon, F.-M. Estimating PAR absorbed by vegetation from bidirectional reflectance measurements. Remote Sens. Environ. 1995, 51, 375–384.
33. Rouse, J.W.; Haas, R.H.; Deering, D.W. Monitoring the Vernal Advancement and Retrogradation (Green Wave Effect) of Natural Vegetation; Goddard Space Flight Center: Greenbelt, MD, USA, 1973.
34. Gitelson, A.A.; Gritz, Y.; Merzlyak, M.N. Relationships between leaf chlorophyll content and spectral reflectance and algorithms for non-destructive chlorophyll assessment in higher plant leaves. J. Plant Physiol. 2003, 160, 271–282.
35. Liu, H.Q.; Huete, A. A feedback based modification of the NDVI to minimize canopy background and atmospheric noise. IEEE Trans. Geosci. Remote Sens. 1995, 33, 457–465.
36. Gitelson, A.A.; Viña, A.; Ciganda, V.; Rundquist, D.C.; Arkebauer, T.J. Remote estimation of canopy chlorophyll content in crops. Geophys. Res. Lett. 2005, 32, L08403.
37. Gitelson, A.; Merzlyak, M.N. Spectral reflectance changes associated with autumn senescence of Aesculus hippocastanum L. and Acer platanoides L. leaves. Spectral features and relation to chlorophyll estimation. J. Plant Physiol. 1994, 143, 286–292.
38. Chen, J.M. Evaluation of vegetation indices and a modified simple ratio for boreal applications. Can. J. Remote Sens. 1996, 22, 229–242.
39. Zhou, L.; Nie, C.; Su, T.; Xu, X.; Song, Y.; Yin, D.; Liu, S.; Liu, Y.; Bai, Y.; Jia, X.; et al. Evaluating the Canopy Chlorophyll Density of Maize at the Whole Growth Stage Based on Multi-Scale UAV Image Feature Fusion and Machine Learning Methods. Agriculture 2023, 13, 895.
40. Nogueira Martins, R.; de Assis de Carvalho Pinto, F.; Marçal de Queiroz, D.; Sárvio Magalhães Valente, D.; Tadeu Fim Rosas, J.; Fagundes Portes, M.; Sânzio Aguiar Cerqueira, E. Digital mapping of coffee ripeness using UAV-based multispectral imagery. Comput. Electron. Agric. 2023, 204, 107499.
41. Su, X.; Wang, J.; Ding, L.; Lu, J.; Zhang, J.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.; Tian, Y. Grain yield prediction using multi-temporal UAV-based multispectral vegetation indices and endmember abundance in rice. Field Crops Res. 2023, 299, 108992.
42. Sunoj, S.; Polson, B.; Vaish, I.; Marcaida, M.; Longchamps, L.; Aardt, J.V.; Ketterings, Q.M.; Hansen, J.W.; Thornton, P.K.; Berentsen, P.B.M. Corn grain and silage yield class prediction for zone delineation using high-resolution satellite imagery. Agric. Syst. 2024, 218, 104009.

| Parameters | DJI P4M | DJI M300 (L1 Camera) |
|---|---|---|
| Lens | FOV 62.7°, 5.74 mm (35 mm format equivalent), f/2.2, focus at infinity | 8.8 mm (24 mm format equivalent), f/2.8–f/11 |
| Sensors | 6/1.2-inch CMOS | 1/1.7-inch CMOS |
| Effective pixels | 2.08 million | 20 million |
| Maximum resolution | 1600 × 1300 | 4864 × 3648 (4:3) |

| Growth Stage | Sampling Date Exp.1 | Sampling Date Exp.2 | Data Collection |
|---|---|---|---|
| Overwintering | 12/31, 1/15, 1/27 | | RGB images, multispectral images |
| Budding (Bud) | 3/19 | 2/17, 3/8 | RGB images, multispectral images |
| Early flowering (EF) | 3/26 | 3/16 | RGB images, multispectral images |
| Full flowering (FF) | 4/7, 4/12 | 3/22, 3/29 | RGB images, multispectral images |
| Late flowering (LF) | 4/16 | 4/9, 4/15 | RGB images, multispectral images |
| Pod filling (Pod) | 4/21 | 4/22, 5/1 | RGB images, multispectral images |
| Maturing (M) | 5/8, 5/14 | 5/8, 5/15 | RGB images, multispectral images |
| Harvesting | 6/2 | 5/18, 5/24 | Yield |

| Vegetation Index | Formula | References |
|---|---|---|
| Re-normalized difference vegetation index (RDVI) | (NIR − Red)/sqrt(NIR + Red) | [32] |
| Blue normalized difference vegetation index (BNDVI) | (NIR − Blue)/(NIR + Blue) | [15] |
| Normalized difference vegetation index (NDVI) | (NIR − Red)/(NIR + Red) | [33] |
| Red edge chlorophyll index (CIre) | NIR/Rededge − 1 | [34] |
| Enhanced vegetation index (EVI) | 2.5 × (NIR − Red)/(NIR + 6 × Red − 7.5 × Blue + 1) | [35] |
| Green chlorophyll index (CIgreen) | NIR/Green − 1 | [36] |
| Red-edge normalized difference vegetation index (NDVIre) | (NIR − Rededge)/(NIR + Rededge) | [37] |
| MERIS terrestrial chlorophyll index (MTCI) | (NIR − Rededge)/(Rededge − Red) | [38] |
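
The index formulas in the table above translate directly into array arithmetic. The following is a minimal sketch, not the authors' processing code: the function name `vegetation_indices`, the helper `safe_div`, and the sample reflectance values are hypothetical, and the inputs are assumed to be band reflectances already calibrated to the 0–1 range. NDVIre and MTCI are written in their commonly published forms, which use the NIR − Rededge difference in the numerator.

```python
import numpy as np


def safe_div(num, den):
    """Divide element-wise, returning NaN wherever the denominator is zero."""
    den = np.where(den == 0, np.nan, den)
    return num / den


def vegetation_indices(blue, green, red, rededge, nir):
    """Return the eight indices as a dict of arrays (hypothetical helper).

    Each argument is a reflectance array (or scalar) for one band;
    all bands must share the same shape.
    """
    blue, green, red, rededge, nir = (
        np.asarray(band, dtype=float)
        for band in (blue, green, red, rededge, nir)
    )
    return {
        "RDVI": safe_div(nir - red, np.sqrt(nir + red)),
        "BNDVI": safe_div(nir - blue, nir + blue),
        "NDVI": safe_div(nir - red, nir + red),
        "CIre": safe_div(nir, rededge) - 1.0,
        "EVI": safe_div(2.5 * (nir - red), nir + 6.0 * red - 7.5 * blue + 1.0),
        "CIgreen": safe_div(nir, green) - 1.0,
        "NDVIre": safe_div(nir - rededge, nir + rededge),
        "MTCI": safe_div(nir - rededge, rededge - red),
    }


# Illustrative reflectances for two pixels (made-up values, not study data).
example = vegetation_indices(
    blue=[0.04, 0.05],
    green=[0.08, 0.09],
    red=[0.05, 0.07],
    rededge=[0.20, 0.22],
    nir=[0.45, 0.40],
)
for name, values in example.items():
    print(name, np.round(values, 3))
```

Returning NaN for a zero denominator, rather than adding a small constant, keeps invalid pixels (for example, masked background) visible so they can be excluded before plot-level averaging.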

| Metric | Model (single-stage VIs) | EF, Training Set | EF, Test Set | FF, Training Set | FF, Test Set | LF, Training Set | LF, Test Set | Pod, Training Set | Pod, Test Set |
|---|---|---|---|---|---|---|---|---|---|
| rRMSE (%) | RF | 10.22 | 23.26 | 8.43 | 19.70 | 8.47 | 19.06 | 8.49 | 19.20 |
| rRMSE (%) | SVR | 27.20 | 22.58 | 18.37 | 19.70 | 17.82 | 18.99 | 18.28 | 19.79 |
| rRMSE (%) | PLSR | 19.01 | 19.62 | 19.22 | 19.97 | 19.82 | 20.83 | 20.06 | 21.26 |
| R² | RF | 0.88 | 0.14 | 0.90 | 0.38 | 0.91 | 0.40 | 0.91 | 0.38 |
| R² | SVR | 0.27 | 0.23 | 0.43 | 0.38 | 0.46 | 0.40 | 0.45 | 0.35 |
| R² | PLSR | 0.39 | 0.35 | 0.37 | 0.36 | 0.33 | 0.29 | 0.32 | 0.24 |

| Metric | Model | Multi-Stage VIs, Training Set | Multi-Stage VIs, Test Set | Multi-Stage VI-TFs, Training Set | Multi-Stage VI-TFs, Test Set |
|---|---|---|---|---|---|
| rRMSE (%) | RF | 7.36 | 16.70 | 7.89 | 18.55 |
| rRMSE (%) | SVR | 14.51 | 16.13 | 16.33 | 18.49 |
| rRMSE (%) | PLSR | 13.57 | 15.20 | 18.31 | 19.97 |
| R² | RF | 0.93 | 0.52 | 0.92 | 0.44 |
| R² | SVR | 0.66 | 0.57 | 0.56 | 0.44 |
| R² | PLSR | 0.69 | 0.62 | 0.43 | 0.35 |
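
The tables above report rRMSE (%) and R² for the training and test sets. The definitions are not restated alongside the tables, so the sketch below assumes the common ones: rRMSE is the RMSE divided by the mean observed yield, times 100, and R² is 1 − SS_res/SS_tot. The function names and the three sample values are illustrative only and are not data from the study.

```python
import numpy as np


def r_squared(y_obs, y_pred):
    """Coefficient of determination, 1 - SS_res / SS_tot (assumed definition)."""
    y_obs = np.asarray(y_obs, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_obs - y_pred) ** 2)
    ss_tot = np.sum((y_obs - y_obs.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)


def rrmse_percent(y_obs, y_pred):
    """Relative RMSE in percent: RMSE / mean(observed) * 100 (assumed definition)."""
    y_obs = np.asarray(y_obs, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    rmse = np.sqrt(np.mean((y_obs - y_pred) ** 2))
    return float(100.0 * rmse / y_obs.mean())


# Made-up observed and predicted yields, for illustration only.
observed = [2.0, 3.0, 4.0]
predicted = [2.5, 3.0, 3.5]
print("R2:", round(r_squared(observed, predicted), 2))
print("rRMSE (%):", round(rrmse_percent(observed, predicted), 2))
```

Some studies instead report R² as the squared Pearson correlation between observed and predicted values; the two agree only when predictions are unbiased, so which definition a table uses matters when comparing training-set and test-set rows.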

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, Y.; Niu, Y.; Cui, Z.; Chai, X.; Xu, L. Cross-Year Rapeseed Yield Prediction for Harvesting Management Using UAV-Based Imagery. Remote Sens. 2025, 17, 2010. https://doi.org/10.3390/rs17122010
