Abstract

Semantic Change Detection (SCD) in remote sensing imagery is a common technique for monitoring surface dynamics. However, geospatial data acquisition increasingly involves both visible and infrared images. SCD on visible and infrared image pairs must distinguish genuine semantic change from spectral discrepancies caused by heterogeneous imaging mechanisms. To address this issue, we propose a Modal Feature Analysis Semantic Change Detection Network (MFA-SCDNet), a novel framework that analyzes cross-modal features for change identification. The proposed architecture has three principal components: an infrared feature enhancement module that transforms infrared inputs into three-channel representations through spectral domain adaptation, enhancing the network’s perception of both high-frequency and low-frequency information in images; an encoder–decoder structure that simultaneously extracts modality-specific features and common features through adversarial learning; and an information fusion mechanism that integrates semantic recognition with change detection through multi-task optimization. Modality-specific features are employed for semantic recognition, while common features are utilized for change detection, ultimately resulting in a comprehensive understanding of semantic changes. Experiments on public datasets show that MFA-SCDNet achieves an average improvement of 9.4% in $mIoU_{bc}$ and 12.9% in $mIoU_{sc}$ over the compared methods, indicating better performance for SCD on heterogeneous images.

1. Introduction

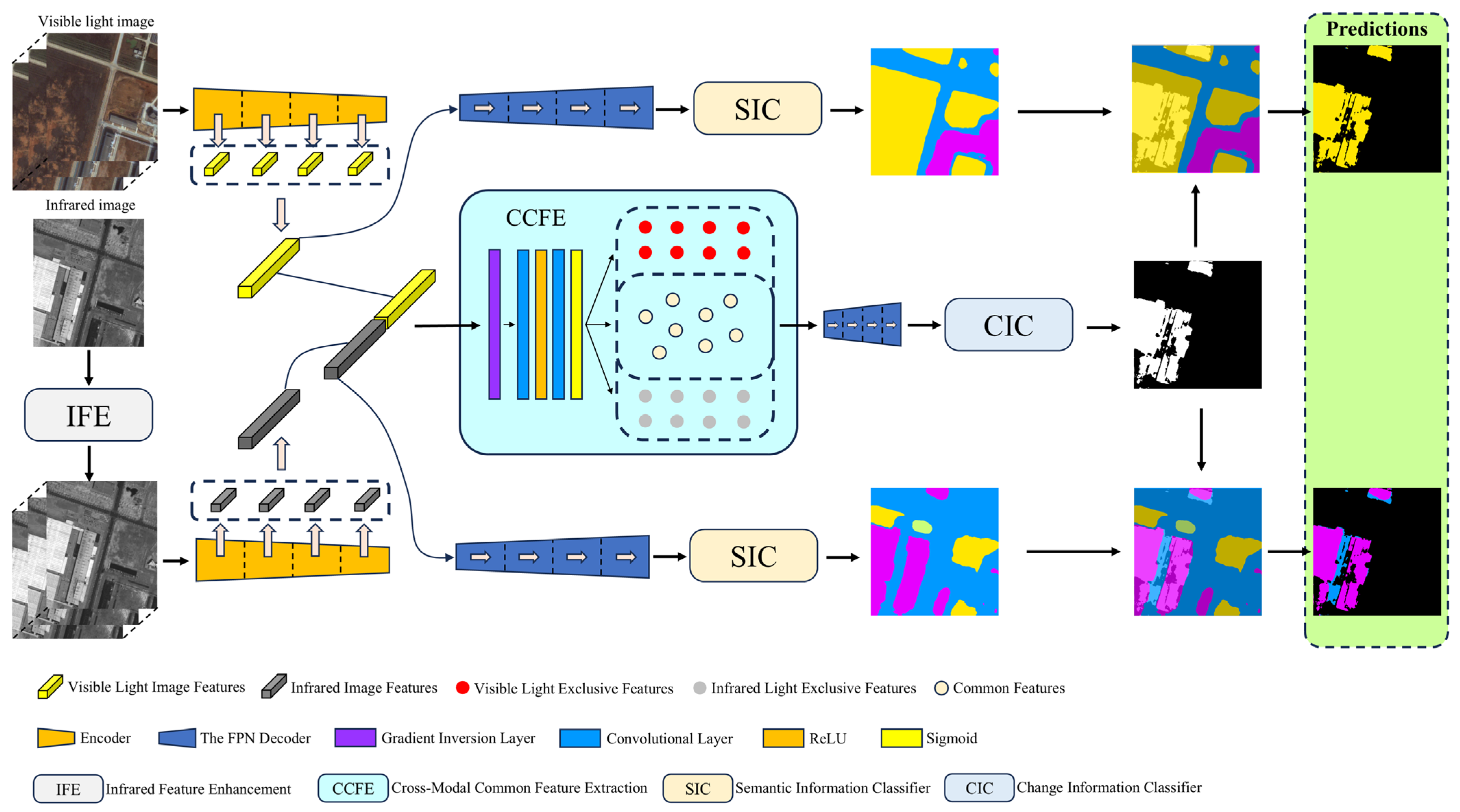

Semantic Change Detection (SCD) in cross-modal remote sensing imagery aims to identify semantically meaningful alterations between visible and infrared image pairs—a critical capability for all-weather environmental monitoring [1,2]. Unlike homogeneous SCD scenarios, this task confronts two inherent challenges: (1) Spectral discrepancy: Visible sensors capture surface reflectance in RGB channels, while infrared detectors record thermal radiation profiles, creating divergent feature representations even for identical targets. (2) Task interdependence: Effective SCD requires simultaneous optimization of pixel-level change detection and object-aware semantic segmentation, as isolated processing of either task propagates errors through cascading mismatches. For instance, neglecting change detection leads to redundant computations in static regions, while ignoring semantic segmentation fails to categorize detected changes into meaningful classes. Only by jointly modeling their bidirectional constraints can the system ensure precision in both tasks [3,4].

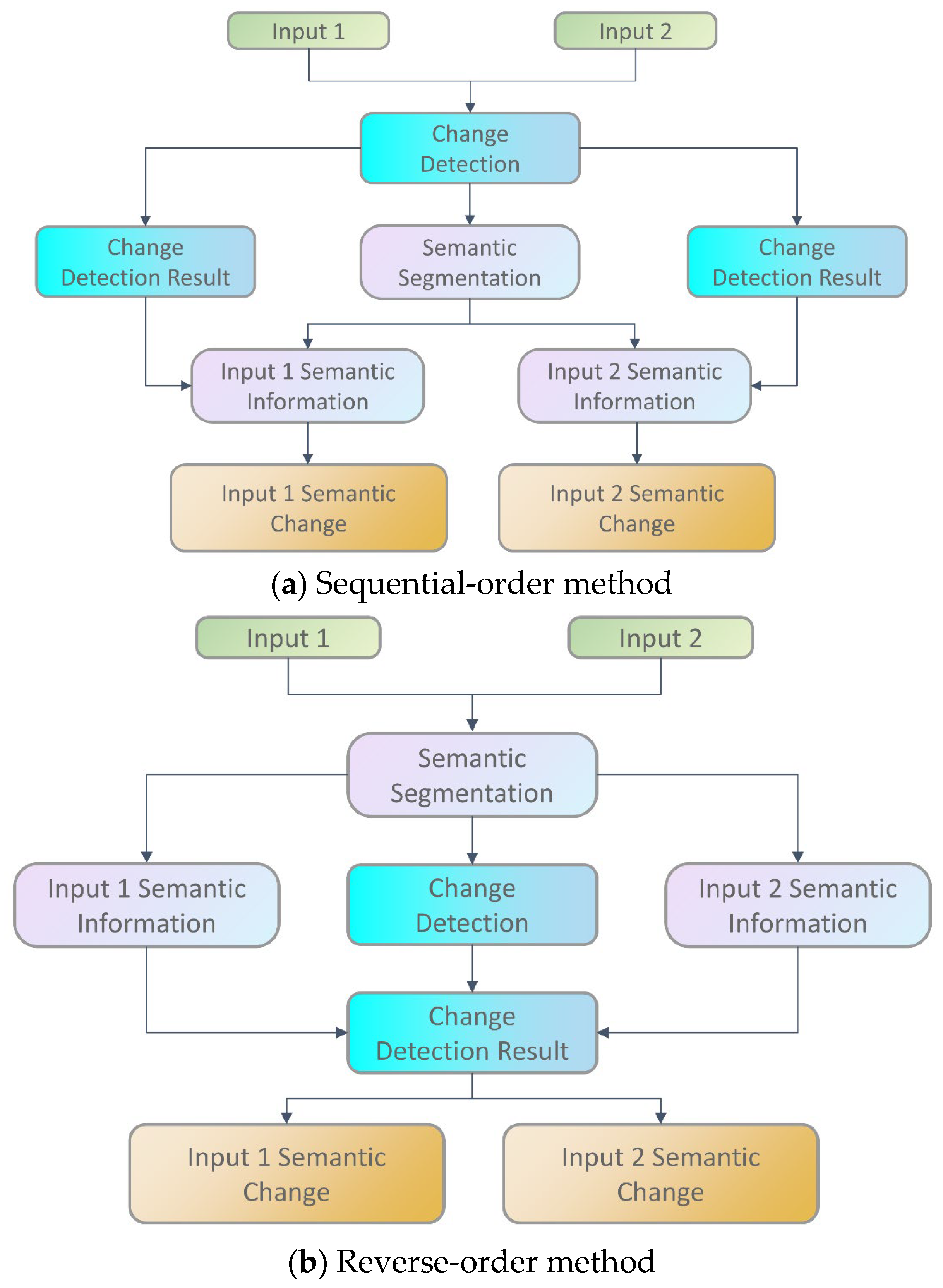

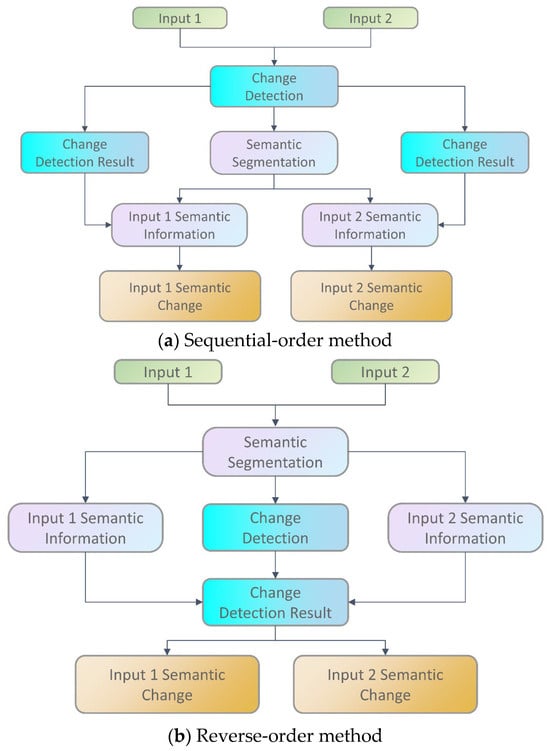

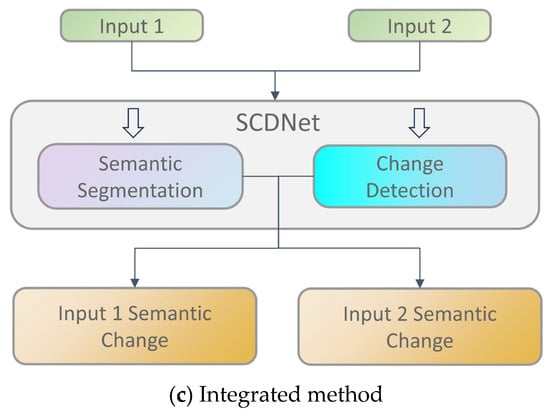

SCD in dual-temporal imagery involves two interdependent tasks: pixel-level change detection and region-based semantic segmentation [5]. The sequential-order method (Figure 1a), defined by its two-stage workflow, first quantifies radiometric differences between the two acquisition times to generate a change magnitude map, then classifies semantic labels exclusively within the detected change regions. In contrast, the reverse-order method (Figure 1b) conducts independent semantic segmentation on both temporal images before comparing their classification maps to derive semantic changes. Recent work has moved beyond the sequential-order and reverse-order methods by employing end-to-end frameworks that map input bitemporal images directly to semantic change maps. As illustrated in Figure 1c, these integrated architectures use dual-stream convolutional networks to extract cross-temporal features, generating both semantic segmentation maps and probabilistic change masks through unified encoding–decoding mechanisms. The final semantic change results are derived by thresholding the change masks and using them to remove unchanged objects, so that semantic labels are retained only in the changed regions.

Figure 1. Schematic diagram of the main methods of SCD.
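The last step of the integrated pipeline described above, masking the two semantic maps with a thresholded change mask, can be sketched as follows. This is a minimal illustration rather than the implementation of any cited method: the function name, the 0.5 threshold, and the convention that label 0 means "no change" are assumptions made for the example.

```python
import numpy as np

def semantic_change_maps(sem_t1, sem_t2, change_prob, threshold=0.5):
    """Mask two per-pixel class maps with a thresholded change probability map.

    sem_t1, sem_t2: integer class maps of shape (H, W), classes numbered from 1.
    change_prob:    float map of shape (H, W) with values in [0, 1].
    Returns two maps in which unchanged pixels are set to 0 (assumed "no change" label).
    """
    changed = change_prob > threshold        # boolean change mask
    scd_t1 = np.where(changed, sem_t1, 0)    # keep labels only where a change is detected
    scd_t2 = np.where(changed, sem_t2, 0)
    return scd_t1, scd_t2

# Toy 2 x 2 example: only the right-hand column is detected as changed.
sem_t1 = np.array([[1, 1], [2, 3]])
sem_t2 = np.array([[1, 2], [2, 1]])
change_prob = np.array([[0.1, 0.9], [0.2, 0.8]])
scd_t1, scd_t2 = semantic_change_maps(sem_t1, sem_t2, change_prob)
```

In this toy case the left-hand column is suppressed in both outputs, while the right-hand column keeps its "from" and "to" labels.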

Infrared and visible modalities have distinct radiometric characteristics: thermal imaging captures heat signatures, while optical sensors record surface reflectance patterns [6,7]. Although both share structural features related to scene geometry and object contours, they provide significant modality-specific information—color and texture in visible images versus temperature distribution in infrared images. Notably, infrared images are typically single-channel grayscale representations with fewer features (e.g., reduced texture detail) compared to visible imagery. This fundamental difference makes cross-modal SCD using both infrared and visible imagery more challenging than conventional optical-only approaches. Therefore, our research focuses on the underexplored area of cross-modal SCD rather than improving traditional optical-based methods. By integrating complementary common and modality-specific features, we aim for a more comprehensive scene representation that enhances change detection robustness while reducing false positives and missed detections [8].

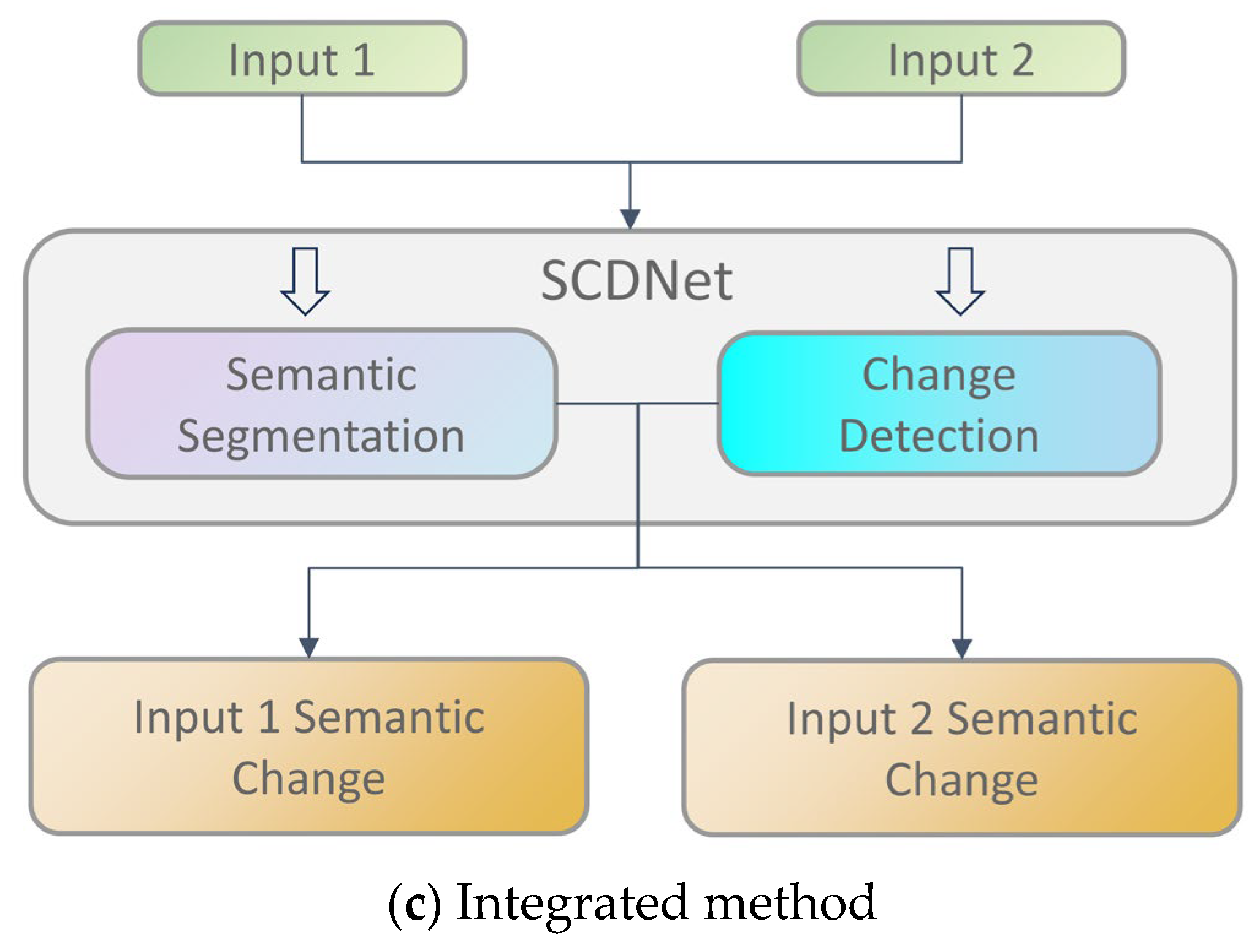

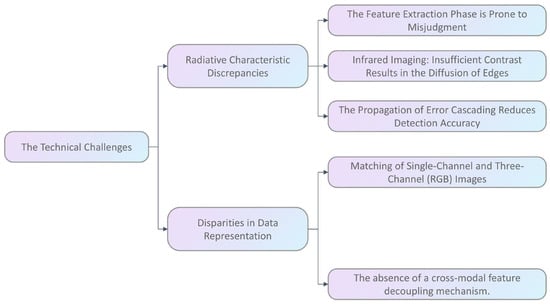

Figure 2 illustrates the technical challenges that SCD faces between visible light and infrared images.

Figure 2. Illustration of technical challenges.

The main points can be categorized into two aspects:

- The inherent divergence in radiometric characteristics between infrared and visible spectral domains introduces significant challenges for cross-modal SCD. Even when imaging identical targets within co-registered scenes, sensor-specific feature discrepancies emerge: visible sensors capture high-resolution texture patterns under adequate illumination, whereas infrared detectors record thermal emission profiles with inherent low contrast and edge diffusion effects. This modality gap frequently induces neural networks to misinterpret sensor-specific artifacts as genuine semantic changes during feature extraction, ultimately compromising change detection accuracy through cascading error propagation.

- The distinct sensing mechanisms between optical and infrared modalities produce fundamentally divergent data representations: visible sensors encode tricolor spectral signatures (RGB channels) through photon reflection, while infrared detectors capture monochromatic thermal intensity profiles corresponding to target emissivity. This representational dichotomy creates asymmetric feature spaces, with visible imagery containing multispectral signatures versus infrared’s thermodynamic characteristics. Current SCD architectures exhibit insufficient cross-modal feature decoupling mechanisms, leading to asymmetric feature learning that preferentially weights photometric patterns while attenuating critical thermal discriminators during multimodal fusion processes.

To address these cross-modal issues, we propose a Modal Feature Analysis Semantic Change Detection Network (MFA-SCDNet), a novel architecture optimized for visible and infrared bitemporal analysis. The principal innovations of this study are as follows:

- We propose a cross-modal SCD network, MFA-SCDNet, which utilizes adversarial training tasks to filter features from heterogeneous images. This approach extracts common features that are minimally affected by modal changes in both visible light and infrared images for effective change information detection. Furthermore, we combine the change information with semantic information to obtain semantic change results.

- To address the distinct frequency requirements of change detection (high-frequency edge variations) and semantic recognition (low-frequency semantic information), we designed an infrared feature enhancement module. This component extracts and integrates both high-frequency and low-frequency components from infrared imagery, generating an enhanced representation optimized for downstream semantic feature extraction and change detection.

- We propose a multi-task loss function that integrates cross-entropy loss for semantic segmentation, weighted focal loss for change detection, and a shared feature loss from adversarial training. This approach aims to avoid error accumulation caused by independent task processing in traditional methods, enabling end-to-end optimization.

The remaining content of this paper is organized as follows: Section 2 presents a review of the current research methods and findings related to cross-modal SCD. Section 3 provides a detailed description of the structure and design principles of MFA-SCDNet. In Section 4, we report on experiments conducted using datasets for SCD and analyze the results obtained. Finally, Section 5 concludes this paper with key insights.

2. Related Work

The SCD of infrared-visible images aims to extract and compare semantic information from images of different modalities, identifying the differences between them and outputting the semantic changes of dual-temporal images [9,10,11]. In recent years, SCD methods for visible and infrared image pairs have been categorized into three types: sequential-order methods, reverse-order methods, and integrated methods. This section systematically analyzes the technical features and research advancements of these three categories within multimodal scenarios.

2.1. Two-Stage Framework: Change Detection Followed by Semantic Classification

The heterogeneity between visible light and infrared images presents a dual challenge of modal differences and feature alignment for traditional sequential-order methods. It is essential to first identify the differing regions through cross-modal change detection, followed by multimodal semantic classification of these changed areas.

Early approaches primarily employed pixel-based techniques, such as image differencing [12] and threshold segmentation [13]. The fundamental idea behind these methods is to compare the pixel differences between two images in order to detect regions that have undergone changes. However, due to the distinct imaging features of infrared and visible light images, these pixel difference-based detection methods often fail to effectively capture changes at the semantic level. To address this issue, researchers have modeled the SCD process using various feature learning methodologies. For instance, Wu et al. [14] proposed a framework for detecting scene changes in very high-resolution (VHR) imagery based on a Bag-of-Visual-Words (BOVW) model and classification method in 2016, followed by another approach utilizing kernel slow feature analysis combined with post-classification fusion for scene change detection in 2017 [15]. Additionally, other techniques such as binary particle swarm optimization [16,17], sparse coding [18], and topic modeling [19] have also been introduced for application in SCD.

In recent years, the rapid development of deep learning has led to an increasing number of studies applying neural networks to SCD [20,21,22]. Various methods have been proposed to obtain change detection results for heterogeneous remote sensing images. For instance, Ji et al. [23] combined superpixels with square receptive fields and introduced an unsupervised Siamese network based on superpixels. Li et al. [24] used copula theory to model the dependencies between random variables and designed a neural network specifically for change detection in heterogeneous images. The sequential-order method, which first obtains a binarized change map for SCD, is simple yet effective [25,26,27]. However, unlike SCD using homogeneous images, cross-modal images such as visible light (three RGB channels) and infrared (single-channel grayscale) exhibit significant asymmetry in information representation [28]. Due to differences in spectral information, imaging quality, and scene perception across modalities [29,30], binarized change maps obtained by direct comparison often contain considerable noise, making it difficult to combine these maps with semantic segmentation results. This complicates semantic matching and change detection.

2.2. Cross-Modal Comparison via Bitemporal Semantic Segmentation

The reverse-order method first performs semantic segmentation of the visible light and infrared images independently, and then compares the resulting semantic maps across modalities to identify changes. This approach is particularly suitable for scenarios with significant modality specificity. Its emphasis lies in obtaining high-precision semantic segmentation results for both visible light and infrared images [31,32]. The post-classification comparison (PCC) method is the most representative reverse-order SCD technique [33], as illustrated in Figure 2. Its principle is to classify remote sensing images captured at different time points, assigning the pixels within these images to distinct categories, and then to compare the classification results to locate the regions of change. As an example, with data from [14], each 512 × 512 pixel square region is assigned a single type, and SCD then considers only whether the type of that region has changed.

Apart from the PCC method, Khoshboresh Masouleh et al. [34] incorporated a Gaussian–Bernoulli Restricted Boltzmann Machine (GB-RBM) into the semantic segmentation network. The model they designed achieved favorable semantic segmentation results on infrared images. Zhou et al. [35] investigated the differences in features between visible light and infrared images, proposing an adaptive gated fusion network for semantic feature analysis by combining multimodal features. The reverse-order method relies on high-precision semantic segmentation results; however, infrared images often exhibit factors that interfere with semantic segmentation, such as blurred boundaries and noise, which significantly impact output accuracy.

2.3. End-to-End Multi-Task Learning for Joint Semantic and Change Detection

In recent years, various integrated approaches have been applied in the field of SCD [36]. These methods jointly accomplish two primary tasks of detection: generating binary change maps and dual-temporal semantic maps [37,38]. Kai Tang et al. [5] proposed a multi-task learning model named ClearSCD, which leverages the mutual gain relationship between semantics and changes through three innovative modules. This model employs a semantic enhancement algorithm to improve the performance of extracting change and semantic information. Rodrigo Caye Daudt et al. [39] introduced a network architecture that combines pixel-level change information, enabling simultaneous execution of change detection and land cover mapping while utilizing predicted land cover information to assist in predicting changes. Lei He et al. [40] presented a change-guided similarity pyramid network that constructs a three-branch, multi-task SCD architecture; for a pair of dual-temporal input images, this approach utilizes three decoders to produce two semantic maps and one binary change map, ultimately combining these outputs to generate a semantic change map.

In order to bridge the modal differences between visible light and infrared images, numerous studies have attempted to achieve a joint representation of image information from both modalities through feature fusion [41,42]. Common techniques include multimodal convolutional neural networks [43,44], cross-modal alignment methods in deep learning [45,46], and autoencoder architectures [47,48]. These approaches learn the mapping relationships between visible light and infrared images within a shared feature space, thereby extracting more consistent high-level features that enhance the accuracy of change detection. To overcome the challenges associated with directly combining binarized change maps with semantic segmentation results, Li et al. [49] proposed a multimodal semantic consistency fusion framework. This framework employs multi-level feature matching and spatial consistency constraints to optimize the search for an optimal multimodal dense fusion architecture. Although this line of research has not yet been applied to SCD in visible and infrared image pairs, the methodologies involving multi-level feature matching and spatial consistency constraints hold promise for adaptation in cross-modal image SCD [50].

3. Methodology

3.1. Overall Structure of the Network

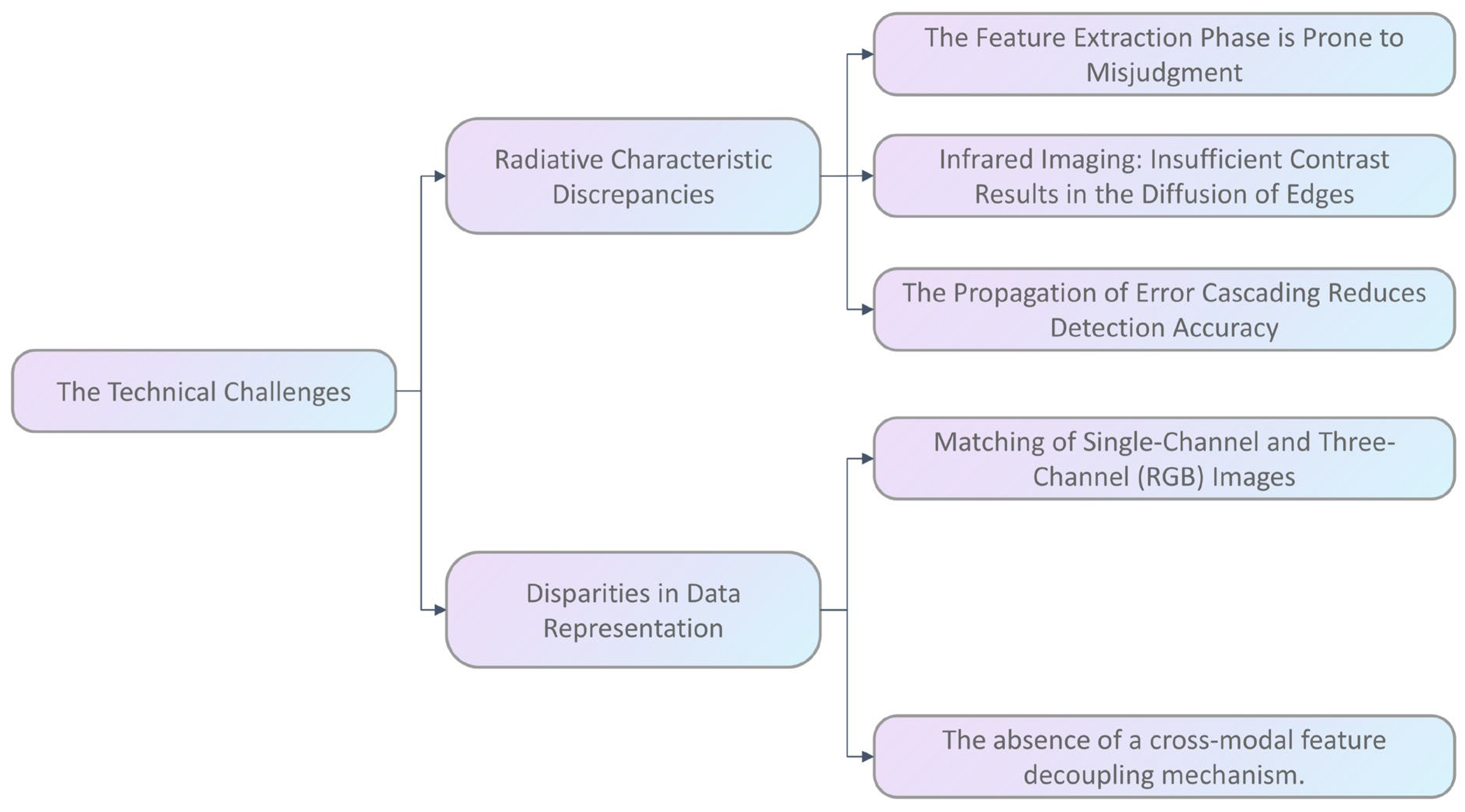

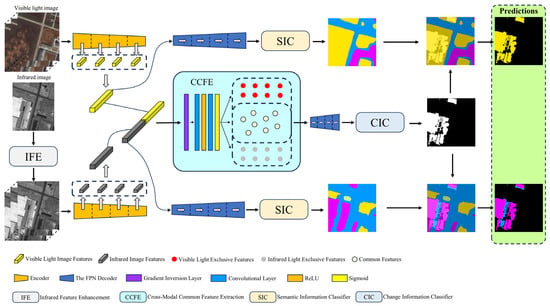

The proposed MFA-SCDNet is designed for SCD in visible and infrared image pairs, with its structural composition and design details illustrated in Figure 3. A pair of dual-temporal images, consisting of an RGB three-channel visible light image and a single-channel grayscale infrared image, is fed into the network. The infrared image is first processed by the infrared feature enhancement (IFE) module, which enhances the feature information of the infrared image and extracts both high-frequency and low-frequency components to facilitate subsequent feature extraction.

Figure 3. Overall structure of MFA-SCDNet, shown for a dual-temporal visible and infrared image pair, with data flowing from left to right. The network takes a three-channel visible light image and a single-channel infrared image as inputs and produces semantic change detection result maps.

The features extracted from the input images are processed by an FPN decoder, followed by a semantic information classifier, to obtain semantic recognition results. Before change detection, a common feature extractor uses an adversarial training task to filter the features from the heterogeneous images, retaining as modal common features those that the modality classifier cannot reliably distinguish. These common features are then fed into the FPN decoder and processed by the change information classifier to yield change detection results. Combining the change detection results with the semantic recognition results produces the dual-temporal semantic change result.

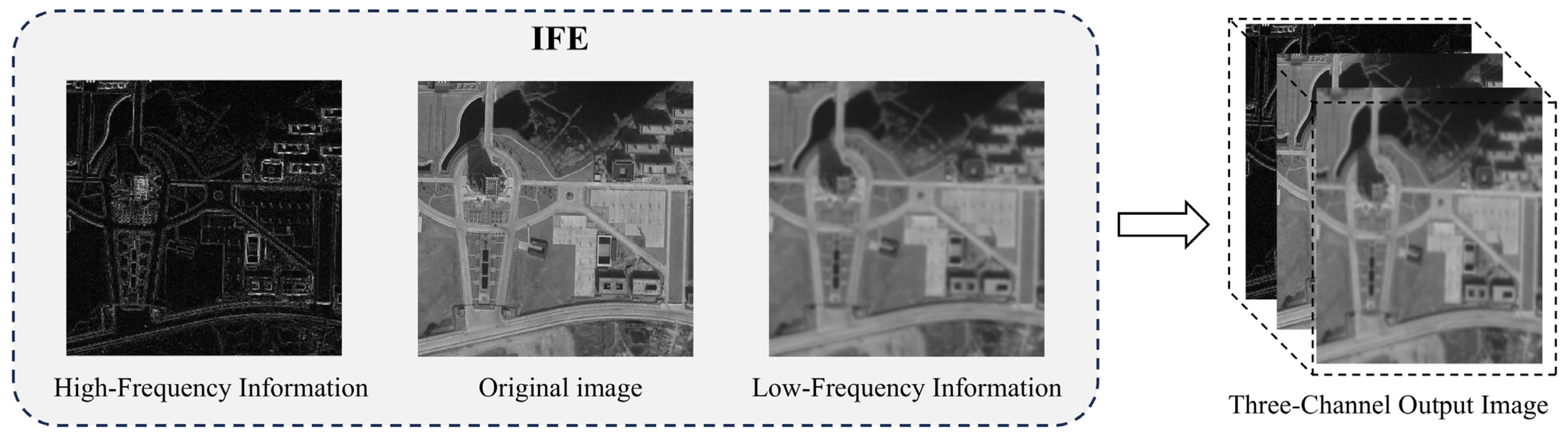

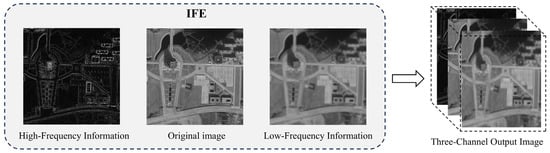

3.2. Infrared Feature Enhancement (IFE)

The task of SCD in images can be categorized into two distinct objectives: the extraction of change information and semantic information. The change information within an image is typically concentrated in regions characterized by abrupt transitions, particularly evident in edge details. From a frequency domain perspective, such edge information corresponds to the high-frequency components of the image. This phenomenon occurs because edge areas exhibit sharp variations in grayscale values or other feature dimensions; these rapid changes manifest as higher-frequency components within the frequency domain. In contrast, the process of semantic recognition primarily emphasizes accurately detecting smooth target information. In the frequency domain, this type of information is represented by low-frequency components that reflect extensive areas with gradual changes. Such features are crucial for understanding the semantic content of images. Therefore, separating high-frequency and low-frequency information enhances the network’s capability to effectively perceive these features [51].

We have designed an infrared feature enhancement module that extracts both the high-frequency and low-frequency components of infrared images. These components, along with the original image, are combined to form a three-channel image. This approach not only meets the encoder’s requirement for a three-channel input but also enhances the features of single-channel infrared images, thereby improving model performance [52]. The process of the infrared feature enhancement module is illustrated in Figure 4.

Figure 4. The high-frequency components of the infrared image, the original image, and the low-frequency components are assigned to their respective RGB channels to synthesize a new image.

Suppose a single-channel infrared image with a size of n × n:

We employed Gaussian filtering to extract the low-frequency components of the image, while Laplacian filtering was utilized to obtain the high-frequency components. This process involves the construction of a Gaussian kernel and a Laplacian operator:

In Equation (2), $G(x, y)$ denotes the value of the Gaussian function at the point $(x, y)$, while $\sigma$ represents the standard deviation that controls the width of the Gaussian kernel, thereby determining the degree of smoothing. In Equation (3), $\nabla^2$ signifies the Laplacian operator, which is implemented as a 3 × 3 convolution kernel. The values within this convolution kernel are defined based on practical considerations and serve to emphasize edges in an image by computing its second-order derivatives. Given the infrared image in Equation (1), Gaussian filtering yields the low-frequency image, and Laplacian filtering then yields the high-frequency image.

In the above equation, and represent the radii of the Gaussian kernel and the convolutional kernel, respectively. We set and . Finally, we combine the three single-channel images to form a single RGB three-channel image, denoted as .
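The IFE steps above can be sketched in NumPy as follows. This is an illustrative sketch under stated assumptions, not the authors' code: the Gaussian kernel uses the standard form $G(x, y) \propto \exp(-(x^2 + y^2) / (2\sigma^2))$, normalized to sum to one; the 4-neighbour Laplacian kernel, the radius of 2, and $\sigma = 1.0$ are placeholder choices, because the kernel values and radii used in the paper are not recoverable from the text; and the Laplacian is applied to the original image, which is one reading of the description. The function names are hypothetical.

```python
import numpy as np

def gaussian_kernel(radius, sigma):
    """Normalized (2*radius+1) x (2*radius+1) Gaussian kernel."""
    ax = np.arange(-radius, radius + 1)
    xx, yy = np.meshgrid(ax, ax)
    kernel = np.exp(-(xx ** 2 + yy ** 2) / (2.0 * sigma ** 2))
    return kernel / kernel.sum()

# Assumed 3 x 3 Laplacian (4-neighbour form); the paper's exact values are not given here.
LAPLACIAN = np.array([[0.0, 1.0, 0.0],
                      [1.0, -4.0, 1.0],
                      [0.0, 1.0, 0.0]])

def filter2d(img, kernel):
    """Same-size 2D filtering with reflect padding (both kernels are symmetric)."""
    r = kernel.shape[0] // 2
    padded = np.pad(img, r, mode="reflect")
    out = np.zeros(img.shape, dtype=float)
    for dy in range(kernel.shape[0]):
        for dx in range(kernel.shape[1]):
            out += kernel[dy, dx] * padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out

def infrared_feature_enhancement(ir, radius=2, sigma=1.0):
    """Stack [high-frequency, original, low-frequency] into a (3, n, n) array."""
    ir = ir.astype(float)
    low = filter2d(ir, gaussian_kernel(radius, sigma))   # smooth regions
    high = filter2d(ir, LAPLACIAN)                       # edges
    return np.stack([high, ir, low], axis=0)

# Toy 8 x 8 infrared image with a vertical step edge between columns 3 and 4.
ir = np.zeros((8, 8))
ir[:, 4:] = 1.0
enhanced = infrared_feature_enhancement(ir)
```

On this step-edge example the high-frequency channel is non-zero only in the two columns adjacent to the edge, while the low-frequency channel is a blurred copy of the input.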

3.3. Encoder and Decoder

We designed two structurally identical encoders to extract feature information from dual-temporal multimodal images, with each encoder accepting three input channels. To accommodate varying task requirements and dataset characteristics, the encoders can be configured using either ResNet or EfficientNet architectures. During the feature extraction phase, the encoders process the input images through a series of convolutional and pooling layers. Throughout this process, the image resolution progressively decreases while the number of channels in the feature maps correspondingly increases. The output feature maps at each layer capture information at a different scale, thereby providing a rich foundation for subsequent processing.

The decoder employs a Feature Pyramid Network (FPN) architecture that integrates multi-scale features derived from the encoder’s output to achieve enhanced accuracy in semantic segmentation and change detection [53]. In the top-down pathway, high-level feature maps undergo upsampling operations and are fused with corresponding low-level feature maps across various scales. The semantic information contained within high-level feature maps guides low-level feature maps towards more targeted feature extraction, while low-level features supplement their high-level counterparts by providing essential details that may be missing. This collaborative interaction ensures that the final fused feature map encompasses richer image information.
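The top-down fusion described above can be illustrated with a simplified NumPy sketch. It assumes that all pyramid levels already share the same channel count and that each level is half the spatial size of the previous one; the lateral 1 × 1 convolutions and output smoothing convolutions of a full FPN are omitted, and the function names are hypothetical.

```python
import numpy as np

def upsample2x(x):
    """Nearest-neighbour 2x upsampling of a (C, H, W) feature map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def fpn_top_down(features):
    """Fuse feature maps ordered from highest to lowest resolution.

    Each fused level is the sum of its own features and the upsampled
    fused features of the next coarser level.
    """
    fused = [features[-1]]                        # start from the coarsest level
    for lateral in reversed(features[:-1]):
        fused.append(lateral + upsample2x(fused[-1]))
    return fused[::-1]                            # back to high-to-low resolution order

# Toy pyramid with constant values so the fusion is easy to follow.
c2 = np.full((4, 8, 8), 1.0)
c3 = np.full((4, 4, 4), 2.0)
c4 = np.full((4, 2, 2), 3.0)
p2, p3, p4 = fpn_top_down([c2, c3, c4])
```

Each output keeps the resolution of its input level, and the finest map accumulates contributions from every coarser level.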

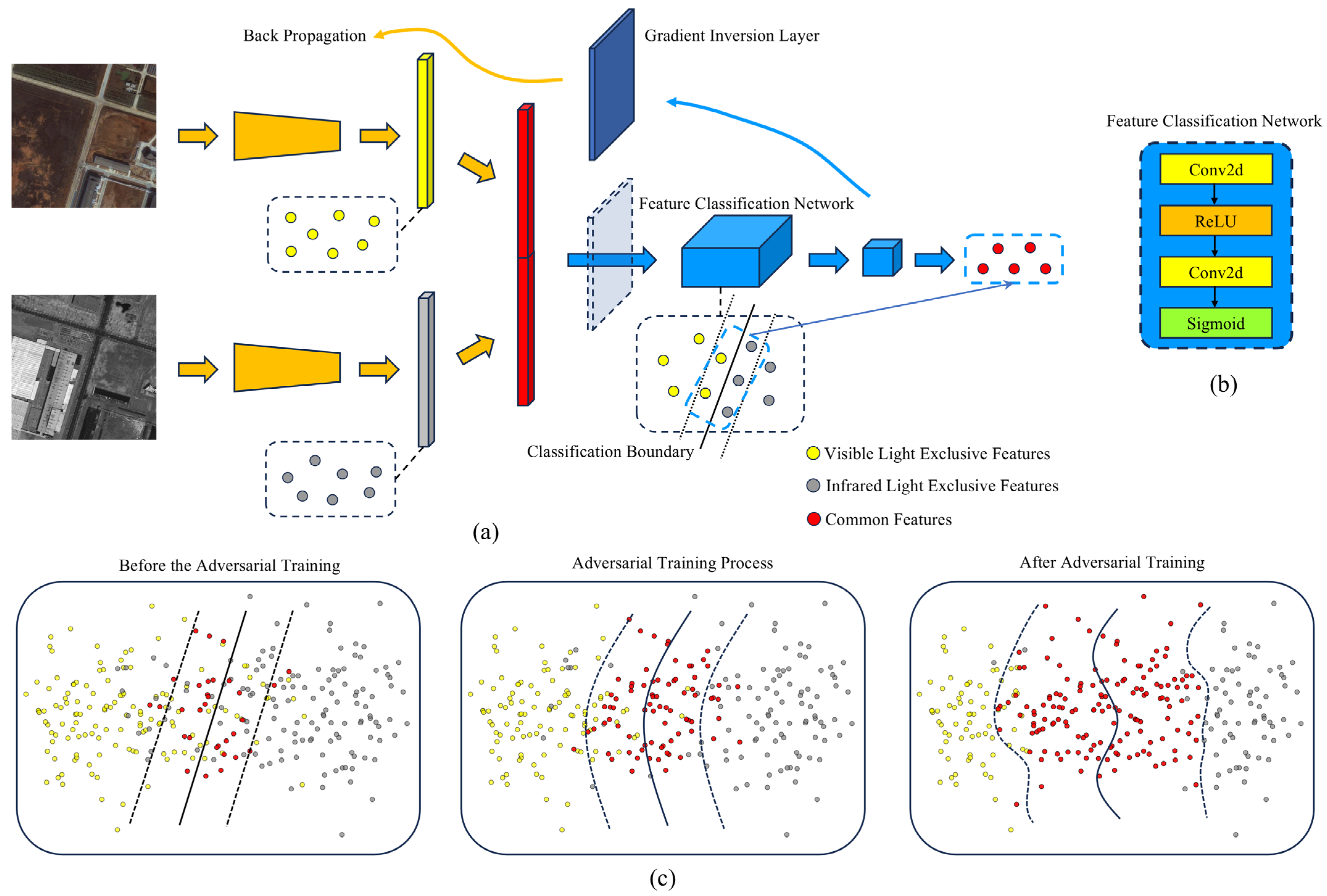

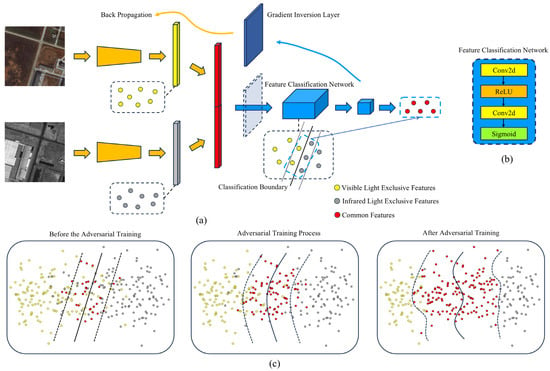

3.4. Cross-Modal Common Feature Extraction (CCFE)

The imaging principles of visible light and infrared images differ significantly; however, when a multispectral sensor captures images of the same ground area, a series of relatively stable common features exist that do not vary with modality. These features include target contours, structural characteristics, regional statistical attributes, and contextual semantic information. Nevertheless, due to the pronounced differences between the two modalities, effectively extracting these common features is a key challenge in this field of research. This paper employs adversarial learning to design a common feature extractor that selects the shared features from those extracted by the encoders. It then compares variations in these common features to output change information for the dual-temporal images. The structure of the common feature extractor is illustrated in Figure 5a.

Figure 5. Illustration of the CCFE module. (a) Schematic diagram of the structure of the common feature extractor. After the features from the visible light and infrared images are concatenated, they are input into a feature classifier to extract relatively stable common features. (b) Schematic representation of the feature classification network. (c) Schematic representation of adversarial training.

The extractor is built on a spectral classification network whose primary objective is to learn from the input features to distinguish visible light features from infrared features. The spectral classification network comprises two 1 × 1 convolutional layers, one ReLU activation function, and one Sigmoid activation function. Its structure is illustrated in Figure 5b.

The 1 × 1 convolutional layers adjust the channel dimension while preserving the spatial size of the feature maps. They apply linear combinations across channels, which allows the network to capture spectral features whose distributions differ significantly between modalities and to produce preliminary spectral classification results. The output of the classification network is mapped to the (0, 1) interval by the Sigmoid activation function; this value represents the probability that the input features come from a visible light image rather than an infrared image, which allows the features of the two modalities to be differentiated.
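A forward pass through such a classifier can be sketched in NumPy as follows. The layer order (convolution, ReLU, convolution, Sigmoid), the channel sizes, and the random weights are assumptions for illustration; only the layer types come from the description above.

```python
import numpy as np

def conv1x1(x, weight, bias):
    """1 x 1 convolution: x is (C_in, H, W), weight is (C_out, C_in), bias is (C_out,)."""
    return np.einsum("oc,chw->ohw", weight, x) + bias[:, None, None]

def modality_classifier(x, w1, b1, w2, b2):
    """Per-pixel probability that the features come from the visible modality."""
    hidden = np.maximum(conv1x1(x, w1, b1), 0.0)   # ReLU
    logits = conv1x1(hidden, w2, b2)
    return 1.0 / (1.0 + np.exp(-logits))           # Sigmoid maps to (0, 1)

# Hypothetical sizes: 16 input channels reduced to 8, then to a single output channel.
rng = np.random.default_rng(0)
x = rng.normal(size=(16, 8, 8))
w1, b1 = rng.normal(size=(8, 16)) * 0.1, np.zeros(8)
w2, b2 = rng.normal(size=(1, 8)) * 0.1, np.zeros(1)
prob_visible = modality_classifier(x, w1, b1, w2, b2)
```

The spatial size of the input is preserved; only the channel dimension changes, which is the property of 1 × 1 convolutions that the text relies on.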

Based on this, we further introduce an adversarial training task by incorporating a gradient reversal layer into the spectral classification network. During forward propagation, the gradient reversal layer leaves the input features unchanged, ensuring normal data flow; during backpropagation, it reverses the sign of the gradients. As a result, the classification network after the layer is optimized to minimize the classification loss, while the feature extraction network before the layer is driven to maximize it. This adversarial mechanism creates a competitive relationship between the feature extraction network and the classification network. As illustrated in Figure 5c, during the early training stages, the spectral classification network can accurately distinguish between the features of different modalities. However, as adversarial training progresses, the feature extraction network continuously adjusts its parameters to produce features that the spectral classification network finds increasingly difficult to discriminate. When the classification network can no longer classify the features accurately, the features produced by the feature extraction network are common features minimally affected by modal variations. This adversarial training yields a common feature extractor that selects key shared features from complex cross-modal image features, which improves MFA-SCDNet’s performance in SCD on visible and infrared image pairs.
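The behaviour of a gradient reversal layer can be shown with a framework-free sketch. The class below is hypothetical and only mirrors the two rules stated above (identity in the forward pass, sign flip in the backward pass); the `scale` factor is an assumed, commonly used extension, and the gradient values are made up for the example. In PyTorch, the same idea is usually written as a custom `torch.autograd.Function`.

```python
import numpy as np

class GradientReversal:
    """Identity in the forward pass; negates (and optionally scales) gradients in the backward pass."""

    def __init__(self, scale=1.0):
        self.scale = scale

    def forward(self, x):
        return x                                   # features pass through unchanged

    def backward(self, grad_output):
        return -self.scale * grad_output           # sign flip seen by the feature extractor

grl = GradientReversal(scale=1.0)
features = np.array([0.5, -1.0, 2.0])
grad_from_classifier = np.array([0.2, -0.1, 0.4])  # illustrative gradient of the classification loss

out = grl.forward(features)
grad_to_extractor = grl.backward(grad_from_classifier)
```

Because the extractor receives the negated gradient, a gradient-descent step on its parameters increases the classification loss, while the classifier itself still descends on the same loss.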

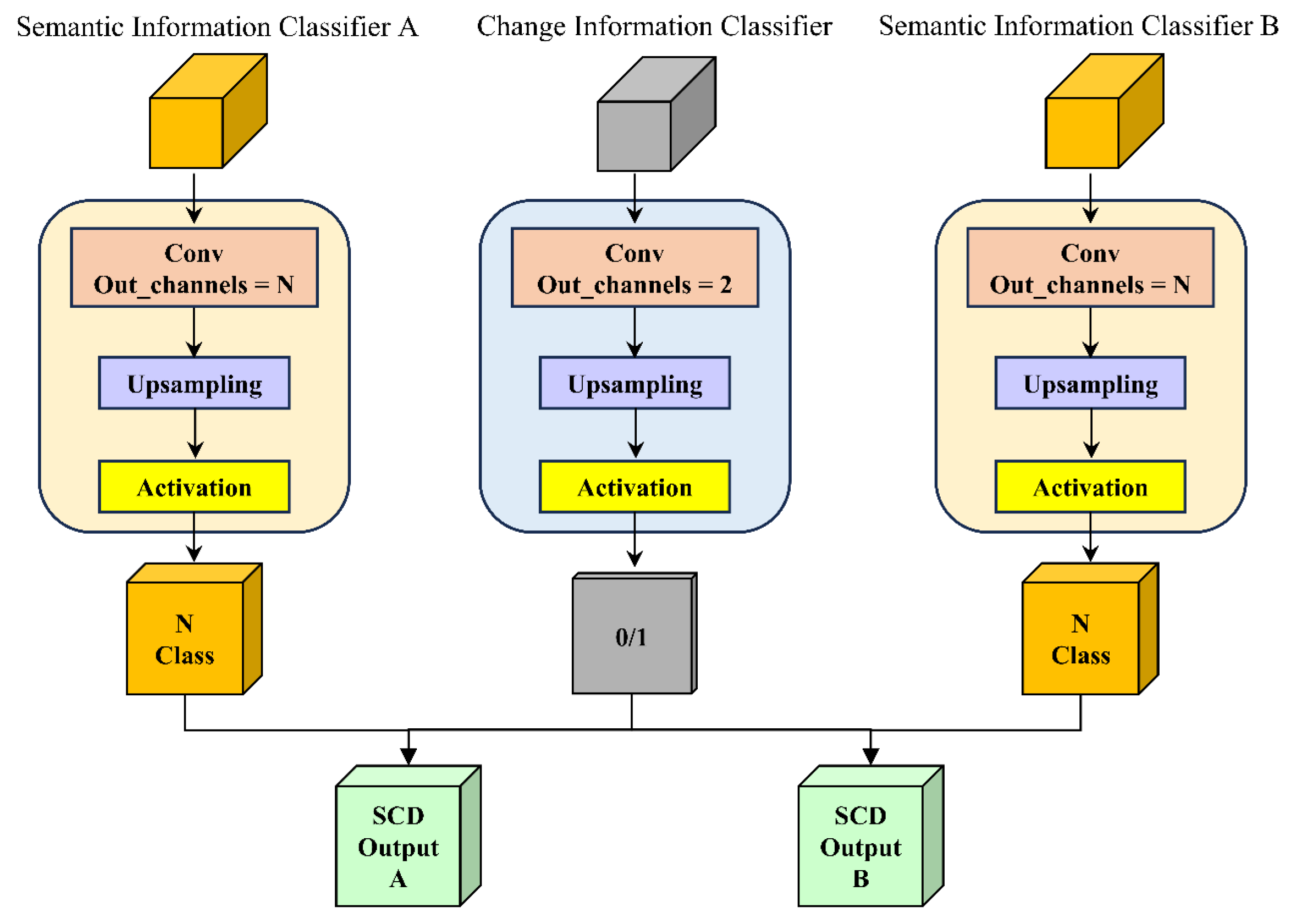

3.5. Information Classifier

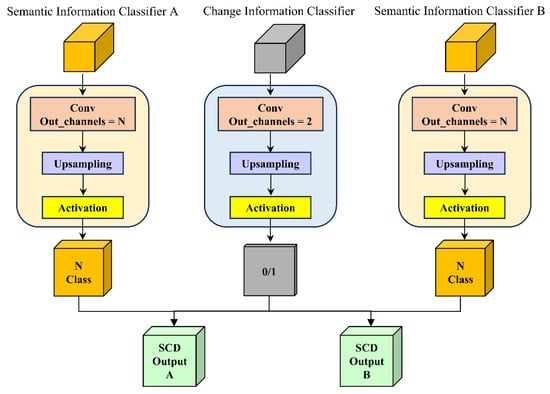

In the task of SCD, semantic recognition can be regarded as an N-class classification problem, where N represents the number of semantic categories. The objective is to classify the output features into one of these N categories. Conversely, change detection can be viewed as a binary (0–1) decision problem that determines whether changes have occurred in the output features. The decoder outputs a feature map in the form of a probability map; we propose a semantic information classifier and a change information classifier to categorize each pixel, mapping the output feature map into either N-class or binary classifications. The specific structure is illustrated in Figure 6.

Figure 6. Schematic representation of the information classifier.

The output of the decoder contains both change information and semantic feature information, which possess the same number of channels. These outputs are fed into separate classifiers for semantic information and change information recognition. Both classifiers utilize an identical architecture, consisting of a convolutional layer, an upsampling step, and an activation layer. The convolutional layer of the semantic information classifier produces N output channels corresponding to the number of semantic classes, while the change information classifier generates two output channels that indicate changes with binary values (0/1). After combining the resulting semantic and change feature maps, a dual-temporal semantic change map is produced as output.

3.6. Loss Functions

The training objective of MFA-SCDNet involves optimizing three critical components: semantic segmentation, change detection, and cross-modal common feature extraction via adversarial learning. To ensure end-to-end optimization, we formulate a composite loss function comprising three terms:

For the N-class semantic segmentation task, we employ the standard pixel-wise cross-entropy loss to measure the discrepancy between predicted semantic probabilities and ground truth labels as follows:

where $H$ and $W$ denote the spatial dimensions of the output feature map.

The binary change detection task utilizes a weighted focal loss to address class imbalance between changed and unchanged regions:

where $p$ denotes the predicted change probability, $y$ denotes the ground truth labels, $\alpha$ balances positive/negative samples, and $\gamma$ mitigates hard example suppression.

The calculation formula of the adversarial common feature loss is as follows:

where denotes the expected value, and denote features from visible and infrared modalities, denotes a binary classification network with an output range of [0, 1], which represents the probability that the input feature belongs to the visible mode.
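For reference, the three loss terms described in this subsection can be written in their standard textbook forms as below. This is a hedged reconstruction, not the paper's exact equations: the unweighted sum of the three terms, the averaging over pixels, and every symbol name are assumptions. Here $q_{ijc}$ and $\hat{q}_{ijc}$ are the one-hot ground truth and the predicted probability of class $c$ at pixel $(i, j)$, $p_{ij}$ and $y_{ij}$ are the predicted change probability and the binary change label, $f_{v}$ and $f_{ir}$ are visible and infrared features, and $D$ is the modality classifier that outputs the probability of the visible modality.

```latex
\begin{aligned}
\mathcal{L} &= \mathcal{L}_{sem} + \mathcal{L}_{cd} + \mathcal{L}_{adv} \\
\mathcal{L}_{sem} &= -\frac{1}{HW} \sum_{i=1}^{H} \sum_{j=1}^{W} \sum_{c=1}^{N} q_{ijc} \log \hat{q}_{ijc} \\
\mathcal{L}_{cd} &= -\frac{1}{HW} \sum_{i=1}^{H} \sum_{j=1}^{W}
  \Big[ \alpha \, y_{ij} (1 - p_{ij})^{\gamma} \log p_{ij}
  + (1 - \alpha)(1 - y_{ij}) \, p_{ij}^{\gamma} \log (1 - p_{ij}) \Big] \\
\mathcal{L}_{adv} &= -\mathbb{E}_{f_{v}} \big[ \log D(f_{v}) \big]
  - \mathbb{E}_{f_{ir}} \big[ \log \big( 1 - D(f_{ir}) \big) \big]
\end{aligned}
```

With a gradient reversal layer in front of $D$, minimizing $\mathcal{L}_{adv}$ trains the classifier to separate the modalities while pushing the feature extractor toward features that $D$ cannot separate.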

4. Results

4.1. Experiments Settings

4.1.1. Dataset

We conducted experiments on the HanYang dataset, which includes four spectral bands: red, green, blue, and near-infrared. This dataset encompasses multi-temporal imagery primarily covering the urban area of Hanyang in Wuhan, China [14]. The images were acquired by the IKONOS sensor (manufactured by Space Imaging, Thornton, CO, USA) on 11 February 2002 and 24 June 2009, with a spatial resolution of 1 m. Each image has dimensions of 7200 × 6000 pixels and has been georeferenced and precisely co-registered. To meet the network input requirements, we cropped the images with a 512 × 512 sliding window, resulting in a total of 1176 sub-images for training, validation, and testing. We annotated the semantic information within the images and extracted the RGB channels and the infrared channel to represent visible light and infrared imagery, respectively.

4.1.2. Evaluation Metrics

We implemented the proposed MFA-SCDNet using PyTorch 2.0.0 with CUDA 11.8 and trained it on an NVIDIA GeForce RTX 4090 (designed by NVIDIA Corporation, Santa Clara, CA, USA). The encoders were initialized with the pre-trained ResNet weights provided by PyTorch, and the network was trained with a stochastic gradient descent optimizer. The initial learning rate was set to 0.00025, and we adopted an exponential decay scheme to gradually reduce the learning rate throughout the training process. The training data were randomly shuffled before each epoch. The dataset was divided into training, validation, and testing sets in a ratio of 6:2:2, and the network was trained until convergence, at which point no further reduction in loss was observed.
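The data split and learning-rate schedule described above can be sketched in plain Python. The initial learning rate of 0.00025, the 6:2:2 ratio, and the 1176 sub-images come from the text; the decay factor of 0.95 per epoch, the random seed, and the function names are hypothetical, since the paper's decay rate is not stated here. In PyTorch, an equivalent schedule is available as `torch.optim.lr_scheduler.ExponentialLR`.

```python
import random

def exponential_lr(initial_lr, gamma, epoch):
    """Learning rate after `epoch` epochs of exponential decay."""
    return initial_lr * gamma ** epoch

def split_indices(n_samples, ratios=(6, 2, 2), seed=0):
    """Shuffle sample indices and split them into train/validation/test subsets."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    total = sum(ratios)
    n_train = n_samples * ratios[0] // total
    n_val = n_samples * ratios[1] // total
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]              # remainder goes to the test set
    return train, val, test

train_idx, val_idx, test_idx = split_indices(1176)
learning_rates = [exponential_lr(0.00025, 0.95, epoch) for epoch in range(3)]
```

With integer rounding, 1176 sub-images split into 705 training, 235 validation, and 236 test samples under this scheme; the paper's exact counts may differ.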

For change detection, the Intersection over Union (IoU) and F1-score of change classes are utilized as evaluation metrics to assess the overall performance of the results. The specific calculations are as follows:

In this context, TP, FP, and FN denote the numbers of true-positive, false-positive, and false-negative pixels, respectively.
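The two change detection metrics follow their standard definitions, $IoU = TP / (TP + FP + FN)$ and $F1 = 2TP / (2TP + FP + FN)$. The short sketch below computes both from pixel counts; the function names and the example counts are made up for illustration, and returning 0 for an empty denominator is an assumed convention.

```python
def iou(tp, fp, fn):
    """Intersection over Union of the change class from pixel counts."""
    denom = tp + fp + fn
    return tp / denom if denom else 0.0

def f1_score(tp, fp, fn):
    """F1-score of the change class from pixel counts."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0

# Illustrative counts: 80 true positives, 10 false positives, 30 false negatives.
tp, fp, fn = 80, 10, 30
change_iou = iou(tp, fp, fn)       # 80 / 120
change_f1 = f1_score(tp, fp, fn)   # 160 / 200
```

For the same counts the F1-score is never lower than the IoU, which is why both are usually reported together.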

The performance of SCD is evaluated using three metrics [54]: the binary consistency $mIoU_{bc}$, the change region consistency $mIoU_{sc}$, and the no-change area consistency $mIoU_{nc}$. The $mIoU_{bc}$ is calculated based on the results of change detection. Meanwhile, $mIoU_{sc}$ and $mIoU_{nc}$ measure the consistency between semantic change results and ground truth in changed areas and unchanged areas, respectively. The calculation formulas are as follows:

The terms and refer to the number of categories for change regions and non-change regions, respectively. In and , denotes the Intersection over Union (IoU) for the i-th change class and non-change class. Furthermore, and represent the for change regions and non-change regions, respectively.
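The per-class averaging behind the mIoU metrics can be sketched from a confusion matrix as follows. The convention that rows are ground-truth classes and columns are predicted classes, and the way class indices are grouped into a subset to be averaged, are assumptions made for illustration only.

```python
def per_class_iou(conf):
    """IoU of every class from a square confusion matrix.

    Assumed convention: conf[i][j] is the number of pixels whose
    ground-truth class is i and whose predicted class is j.
    """
    n = len(conf)
    ious = []
    for i in range(n):
        tp = conf[i][i]
        fn = sum(conf[i]) - tp
        fp = sum(conf[r][i] for r in range(n)) - tp
        den = tp + fp + fn
        ious.append(tp / den if den else 0.0)
    return ious


def mean_iou(conf, classes):
    """Average the IoU over a chosen subset of class indices,
    for example the change classes or the non-change classes."""
    ious = per_class_iou(conf)
    return sum(ious[i] for i in classes) / len(classes)
```

Calling `mean_iou` once with the indices of the change classes and once with those of the non-change classes gives the two class-averaged quantities described above.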

4.1.3. Benchmark Methods

- ClearSCD: This network models the dependency between semantics and change, incorporating multiple innovative modules to enhance model performance [5].

- MTL: By merging the outputs of multiple encoders, this approach addresses the issue of how multi-task networks manage feature interactions among sub-tasks in SCD [8].

- SCanNet: The network jointly considers spatiotemporal dependencies to enhance the accuracy of SCD and introduces a semantic learning scheme to guide the learning of semantic variations [55].

- SCD-SAM: This network introduces the Segment Anything Model (SAM) to enhance SCD in high-resolution remote sensing images [56].

- CdSC: This model employs a twin vision transformer network to reduce the strong reliance on consistency between bi-temporal feature spaces in traditional SCD architectures, a dependency that often leads to false positives or missed detections in the identification of change regions [57].

- HRSCD.str4: This model uses separate encoder–decoder architectures for BCD and semantic segmentation and transfers semantic features directly to the change detection branch through skip connections, thereby enabling information sharing [39].

4.2. Experiments on the HanYang Series Dataset

We conducted experiments using various advanced open-source methods on the proposed dataset, visualized the results, and ultimately performed a comparative analysis of the experimental outcomes.

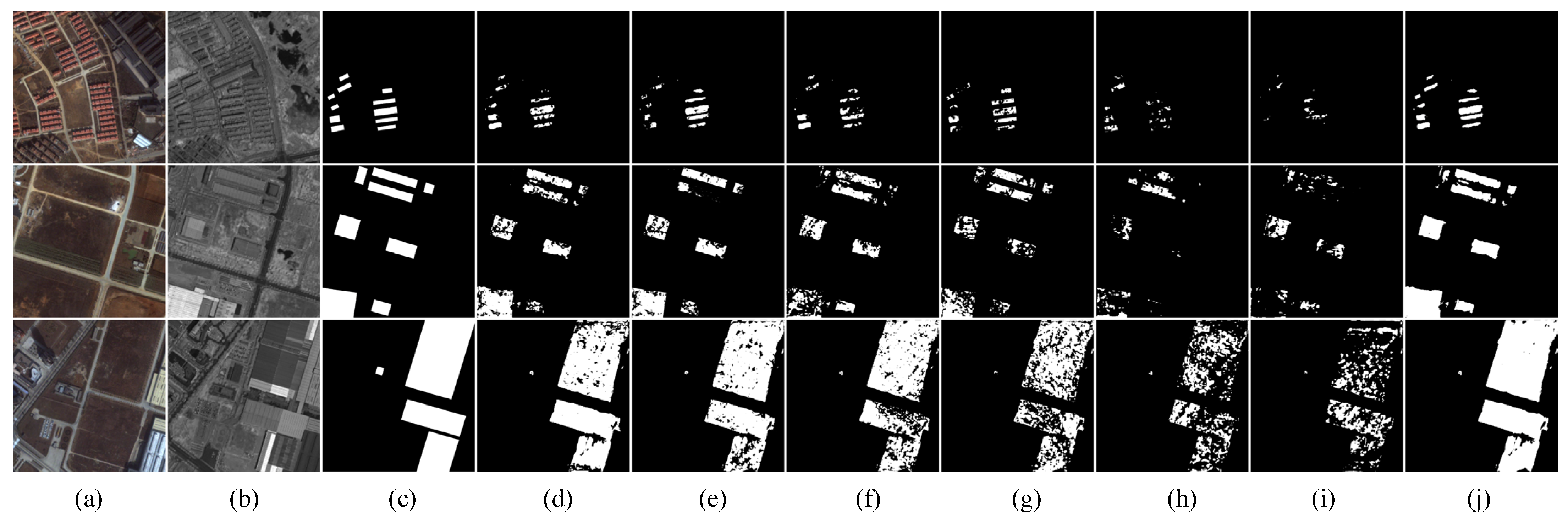

4.2.1. Results and Analysis of Binary Change Detection

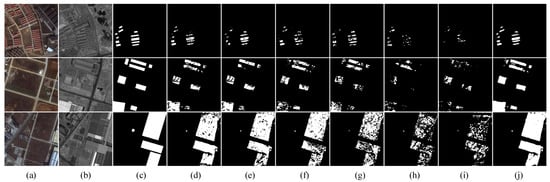

In the binary change detection experiments, we conducted a comprehensive evaluation of MFA-SCDNet in comparison to six widely utilized SCD networks: ClearSCD, SCanNet, CdSC, SCD-SAM, MTL, and HRSCD.str4. The tests were performed on the HanYang dataset, with the results presented in Table 1. The visualization results are illustrated in Figure 7.

Table 1. Binary change detection test results.

Figure 7. Visualization results of BCD in the HanYang dataset. From left to right: (a) RGB image, (b) infrared image, (c) label, (d) SCanNet, (e) ClearSCD, (f) CdSC, (g) SCD-SAM, (h) MTL, (i) HRSCD.str4, (j) MFA-SCDNet.

The experimental data show that MFA-SCDNet achieves an IoU of 62.1%, surpassing that of the other algorithms. IoU measures the overlap between the predicted and ground-truth change regions, so this value indicates that the change maps produced by MFA-SCDNet align more closely with the reference labels. For instance, in the urban areas of the HanYang dataset, MFA-SCDNet correctly recognizes buildings and roads that remained unchanged between the two temporal images. This reduces the misclassification of unchanged areas as changed and thereby lowers the false alarm rate.

The F1-score of MFA-SCDNet also ranks among the highest of the compared algorithms. The F1-score combines precision and recall, so a high value indicates a favorable balance between the two. For the change class evaluated here, precision is the proportion of pixels predicted as changed that are actually changed, while recall is the proportion of actually changed pixels that are correctly detected. The strong performance of MFA-SCDNet in both respects means that its detections are accurate while still covering most of the real change regions, which provides a solid foundation for the subsequent semantic change analysis.

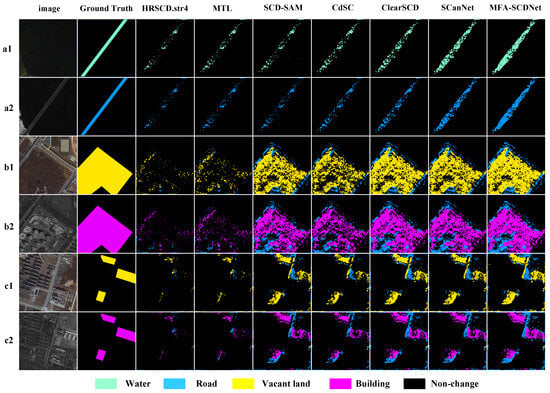

4.2.2. Results and Analysis of SCD

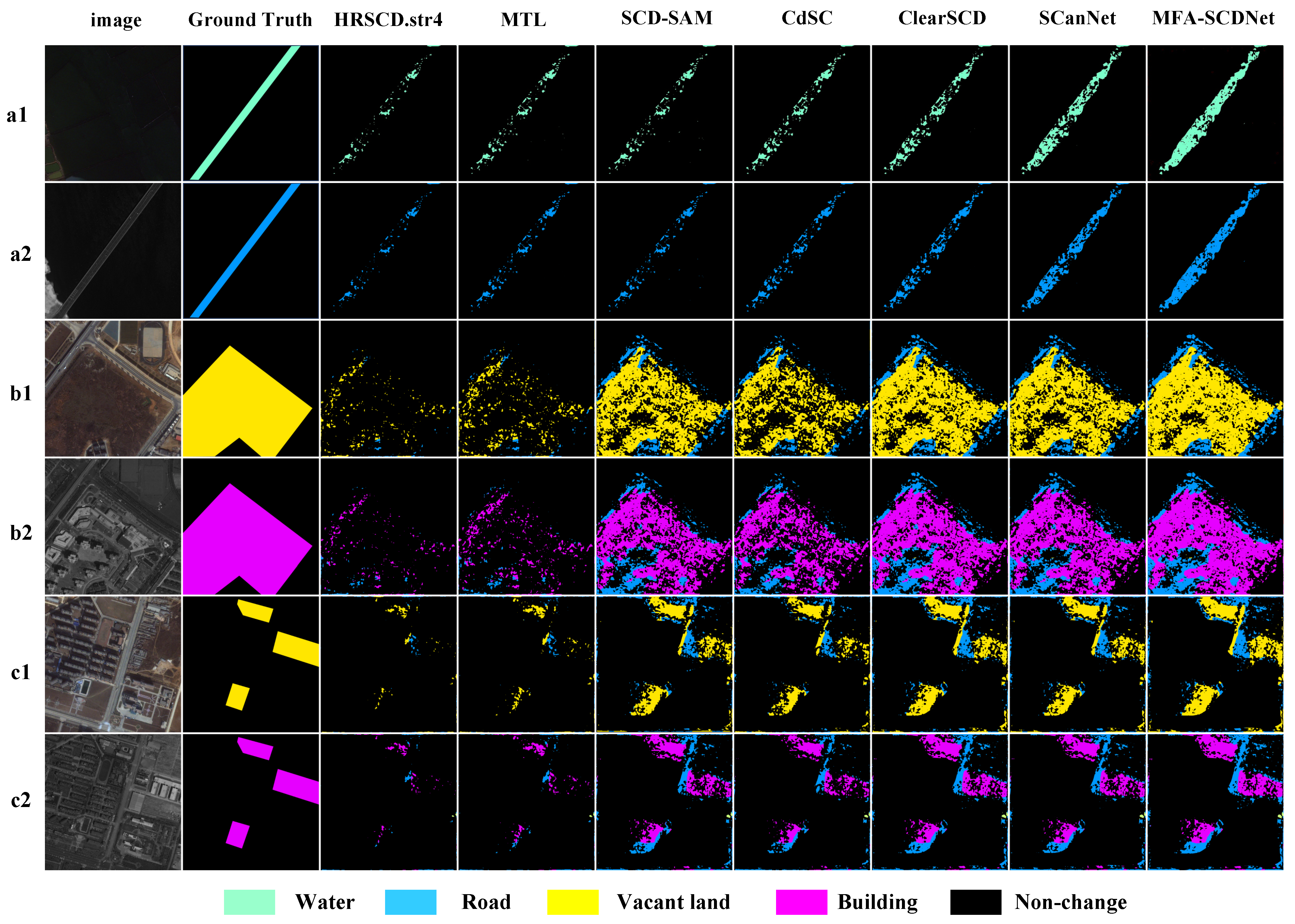

In this study, we conducted experiments on SCD using the HanYang dataset. The experimental results are presented in a visual format in Figure 8, where label 1 corresponds to the visible light image and label 2 corresponds to the infrared image. Detailed quantitative data can be found in Table 2.

Figure 8. Visualization results of SCD in the HanYang dataset. In the figure, (a1,b1,c1) are visible images, while (a2,b2,c2) are infrared images.

Table 2. Results of SCD testing.

In the tests on the HanYang dataset, MFA-SCDNet showed advantages across all three mIoU metrics. Its mIoUbc reached 41.2%, surpassing that of the other methods. This indicates that MFA-SCDNet detects change regions more accurately and is more sensitive to the portions of the scene that have changed.

Regarding mIoUsc, MFA-SCDNet achieved a value of 17.5%, which also outperformed the other networks. This suggests a superior ability to maintain semantic category coherence when processing cross-modal images, yielding more accurate SCD results. When analyzing visible and infrared images from different time phases, MFA-SCDNet makes consistent judgments about areas belonging to the same semantic category, which mitigates the semantic confusion caused by modal differences.

The mIoUnc of MFA-SCDNet is 39.2%, which is also higher than that of the other algorithms. This further confirms the accuracy of the network in unchanged areas and its ability to separate unchanged from changed regions, which provides a reliable foundation for SCD. When processing images that contain large unchanged areas, MFA-SCDNet identifies these regions accurately, reducing erroneous judgments and improving the overall reliability of the change detection system.

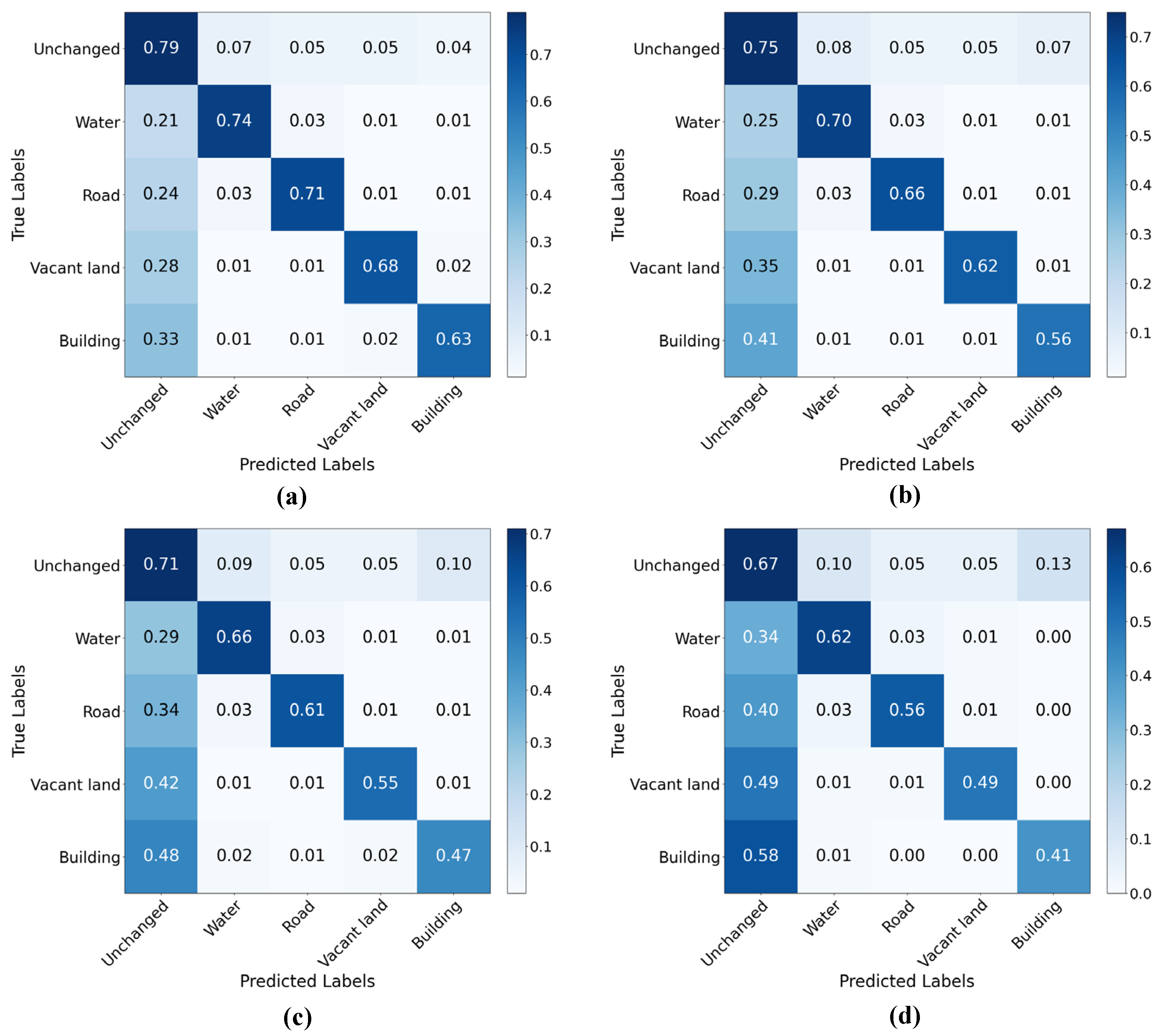

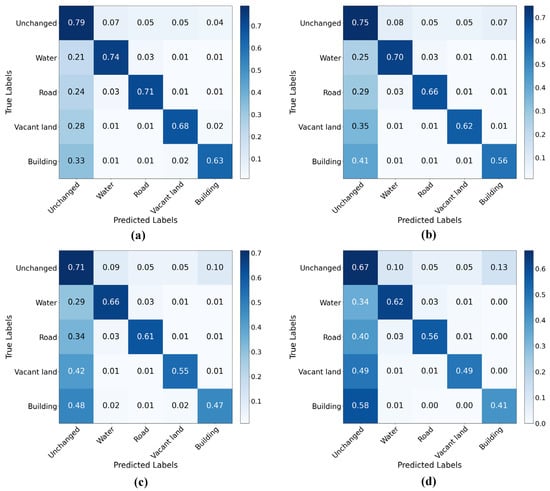

Figure 9 presents the confusion matrix results for MFA-SCDNet alongside three other high-performing algorithms.

Figure 9. Results of the confusion matrix visualization. The figure presents the following models: (a) MFA-SCDNet, (b) SCanNet, (c) ClearSCD, (d) CdSC.

The diagonal values of each matrix represent the accuracy for each detection category pair. With the exception of “Unchange to Unchange”, entries whose predicted label is Unchanged give the missed-detection rates in change areas. The results show that MFA-SCDNet achieves higher accuracy and lower missed-detection rates than the compared algorithms. Notably, for the Vacant Land and Building categories, the accuracies reached 0.68 and 0.63, respectively, while the missed-detection rates were only 0.28 and 0.33. This indicates that MFA-SCDNet is effective at detecting changes involving buildings.
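The way these two quantities are read from a confusion matrix can be sketched as follows. The sketch assumes that each row (ground-truth category) is normalized to sum to one and that the unchanged category sits at index 0; the counts in the usage note are toy values, not results from this work.

```python
def row_normalize(conf):
    """Divide every row of a confusion matrix by its row sum."""
    normalized = []
    for row in conf:
        total = sum(row)
        normalized.append([v / total if total else 0.0 for v in row])
    return normalized


def accuracy_and_miss(conf, unchanged=0):
    """For every change category, return (accuracy, missed-detection rate).

    Accuracy is the diagonal entry of the row-normalized matrix; the
    missed-detection rate is the share of that category predicted as
    unchanged. `unchanged` is the assumed index of the unchanged class.
    """
    norm = row_normalize(conf)
    return {
        i: (norm[i][i], norm[i][unchanged])
        for i in range(len(conf))
        if i != unchanged
    }
```

With the toy matrix `[[80, 10, 10], [20, 70, 10], [30, 10, 60]]`, category 1 has an accuracy of 0.7 and a missed-detection rate of 0.2, and category 2 has 0.6 and 0.3.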

The experimental results demonstrate that MFA-SCDNet has clear performance advantages in the SCD task. In overall pixel classification accuracy, precision in detecting changed regions, consistency of semantic categories, and accuracy in identifying unchanged areas, it outperforms the compared algorithms. This supports its effectiveness and reliability for SCD on visible and infrared image pairs.

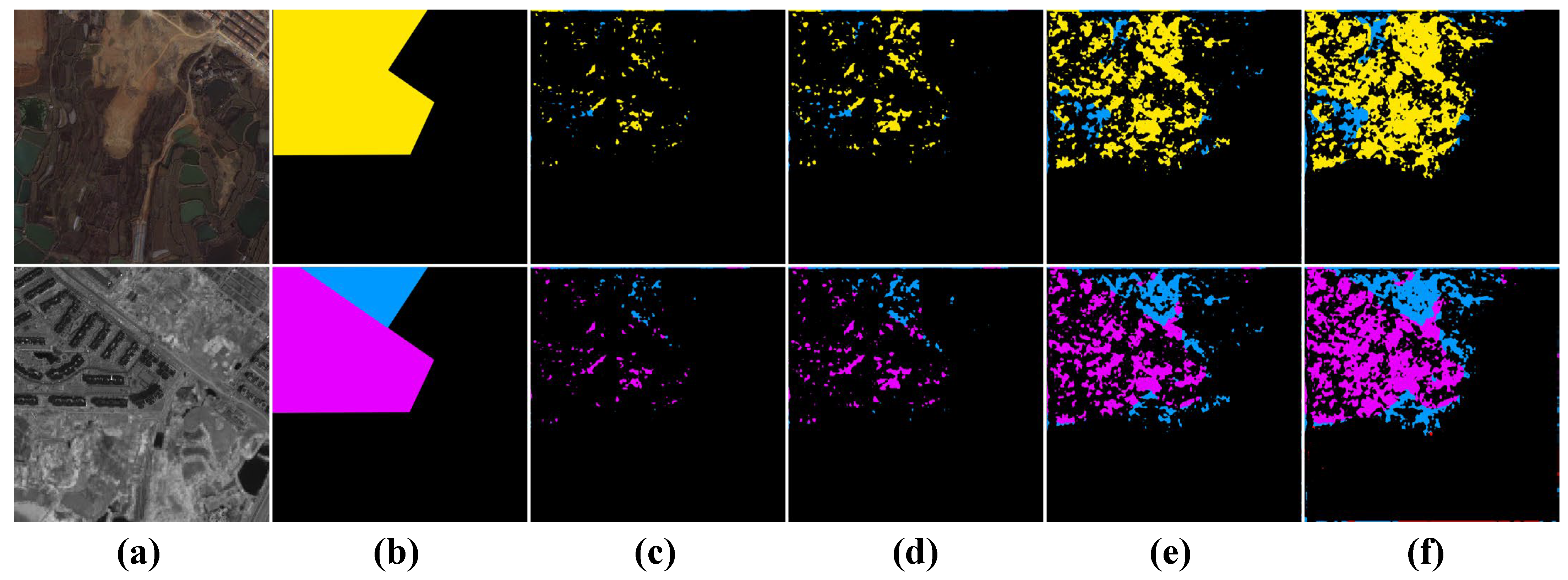

4.2.3. Ablation Studies

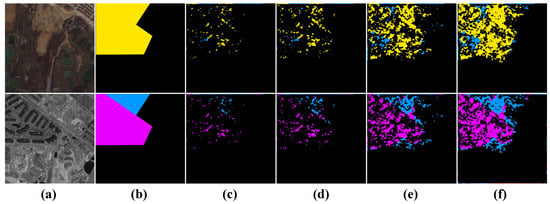

To thoroughly investigate the specific contributions of each key module in MFA-SCDNet to model performance, we conducted ablation experiments. In these experiments, we systematically removed either the infrared feature enhancement module or the adversarial training-based common feature extraction module, both individually and in combination. The visualization results of the ablation experiments are presented in Figure 10. The setup for the ablation experiments is presented in Table 3. We retrained and tested on the HanYang dataset, ensuring that the evaluation metrics remained consistent with those used in previous comparative experiments.

Figure 10. Visualization results of ablation studies. The figure presents the following models: (a) image, (b) label, (c) without IFE and CCFE, (d) without CCFE, (e) without IFE, (f) full model.

Table 3. Design of ablation experiments. The annotation indicates that the corresponding module is used in that experiment.

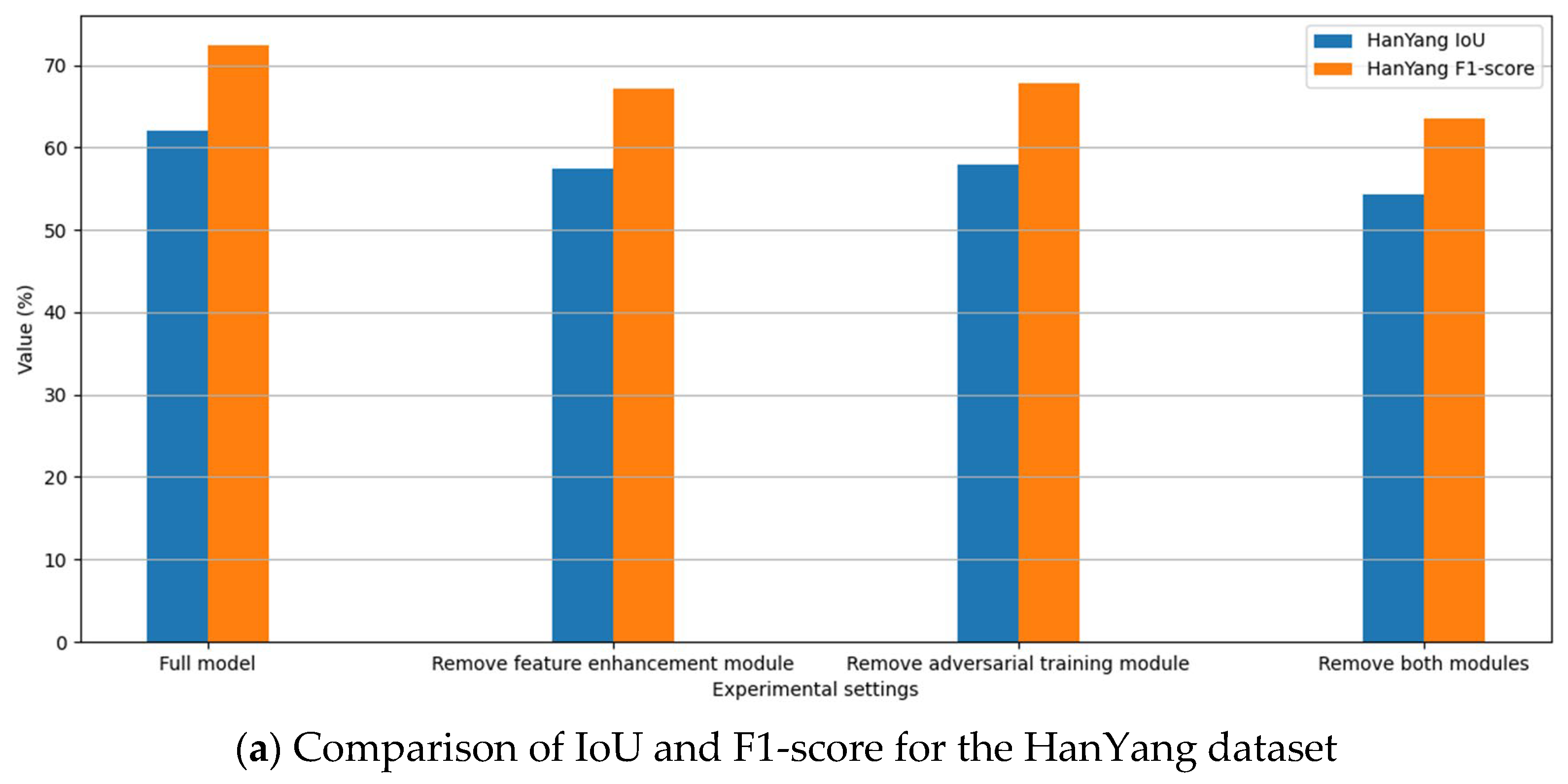

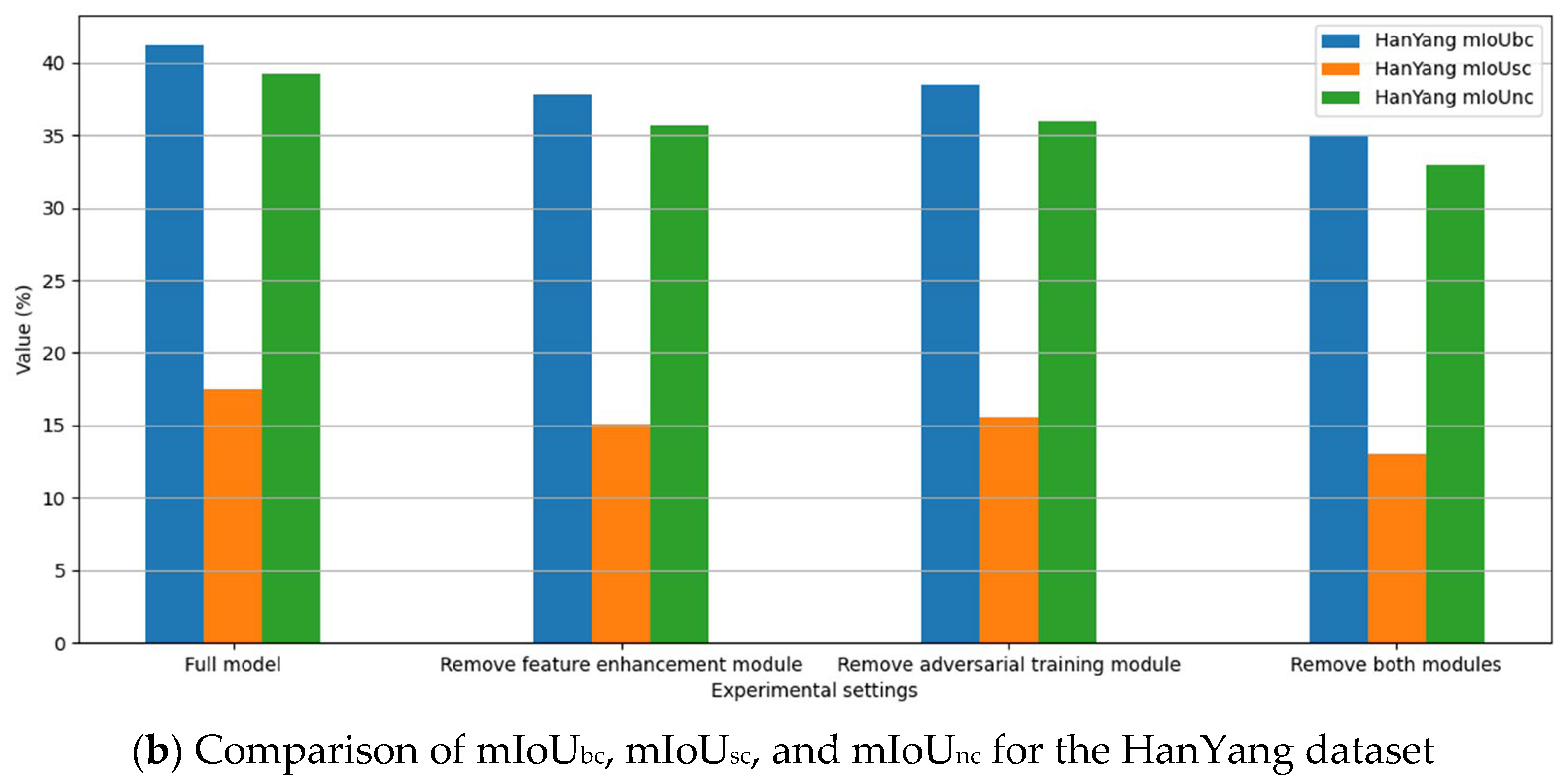

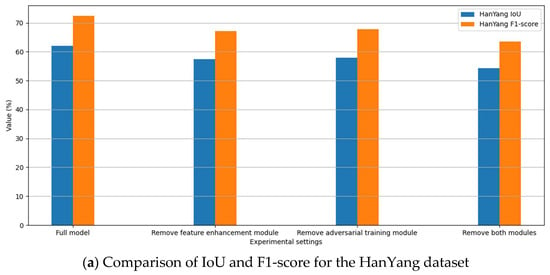

The ablation study consisted of four experimental groups. Experiment 1 utilized the complete model, while Experiments 2 and 3 involved the removal of the feature enhancement module and adversarial training module, respectively. Experiment 4 excluded both modules. The results of the ablation experiments are presented in Figure 11.

Figure 11. The results of the ablation experiments were analyzed to observe how changes in network modules affect model performance.

When the infrared feature enhancement module is removed, the model’s binary change detection IoU decreases to 57.5%, and the F1-score drops to 67.2%. In terms of SCD, the mIoUbc on the HanYang dataset declines to 37.8%, while mIoUsc falls to 15.1% and mIoUnc decreases to 35.7%. This indicates that the infrared feature enhancement module is crucial for improving the feature representation capability of infrared images. The absence of this module leads to insufficient utilization of information from infrared images, which in turn affects accurate judgment regarding changed and unchanged areas, ultimately diminishing the model’s performance in both binary change detection and SCD tasks.

If the common feature extraction module based on adversarial training is removed, the model’s performance is significantly impacted. The IoU for binary change detection of the model drops to 58.0%, and the F1-score decreases to 67.8%. In SCD, the mIoUbc for the HanYang dataset is recorded at 38.5%, while mIoUsc stands at 15.5% and mIoUnc at 36.0%. This indicates that this module plays a crucial role in filtering common features from visible light and infrared images, thereby reducing modal disparity effects. Without it, the model struggles to effectively extract stable common features, resulting in decreased accuracy in both change detection and semantic segmentation tasks.

When both modules are simultaneously removed, a more pronounced decline in model performance is observed. The IoU falls to just 54.3%, with an F1-score of only 63.5%; additionally, for SCD, mIoUbc reaches merely 35.0%, mIoUsc drops to 13.0%, and mIoUnc declines to 33.0%.
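To make the size of each degradation explicit, the short script below tabulates the drops, in percentage points, relative to the full model. All values are those reported in the text; the F1-score is omitted because the full-model F1 value is given only in Table 1. The dictionary layout and the function name are illustrative.

```python
# Metrics of the full model and of the three ablated variants (in %),
# as reported in the text.
full = {"IoU": 62.1, "mIoUbc": 41.2, "mIoUsc": 17.5, "mIoUnc": 39.2}
ablations = {
    "without IFE": {"IoU": 57.5, "mIoUbc": 37.8, "mIoUsc": 15.1, "mIoUnc": 35.7},
    "without CCFE": {"IoU": 58.0, "mIoUbc": 38.5, "mIoUsc": 15.5, "mIoUnc": 36.0},
    "without IFE and CCFE": {"IoU": 54.3, "mIoUbc": 35.0, "mIoUsc": 13.0, "mIoUnc": 33.0},
}


def drops(reference, variant):
    """Percentage-point decrease of every metric relative to the reference."""
    return {name: round(reference[name] - variant[name], 1) for name in reference}


for setting, metrics in ablations.items():
    print(setting, drops(full, metrics))
```

For example, removing the infrared feature enhancement module lowers the binary IoU by 4.6 points, removing the common feature extraction module lowers it by 4.1 points, and removing both lowers it by 7.8 points.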

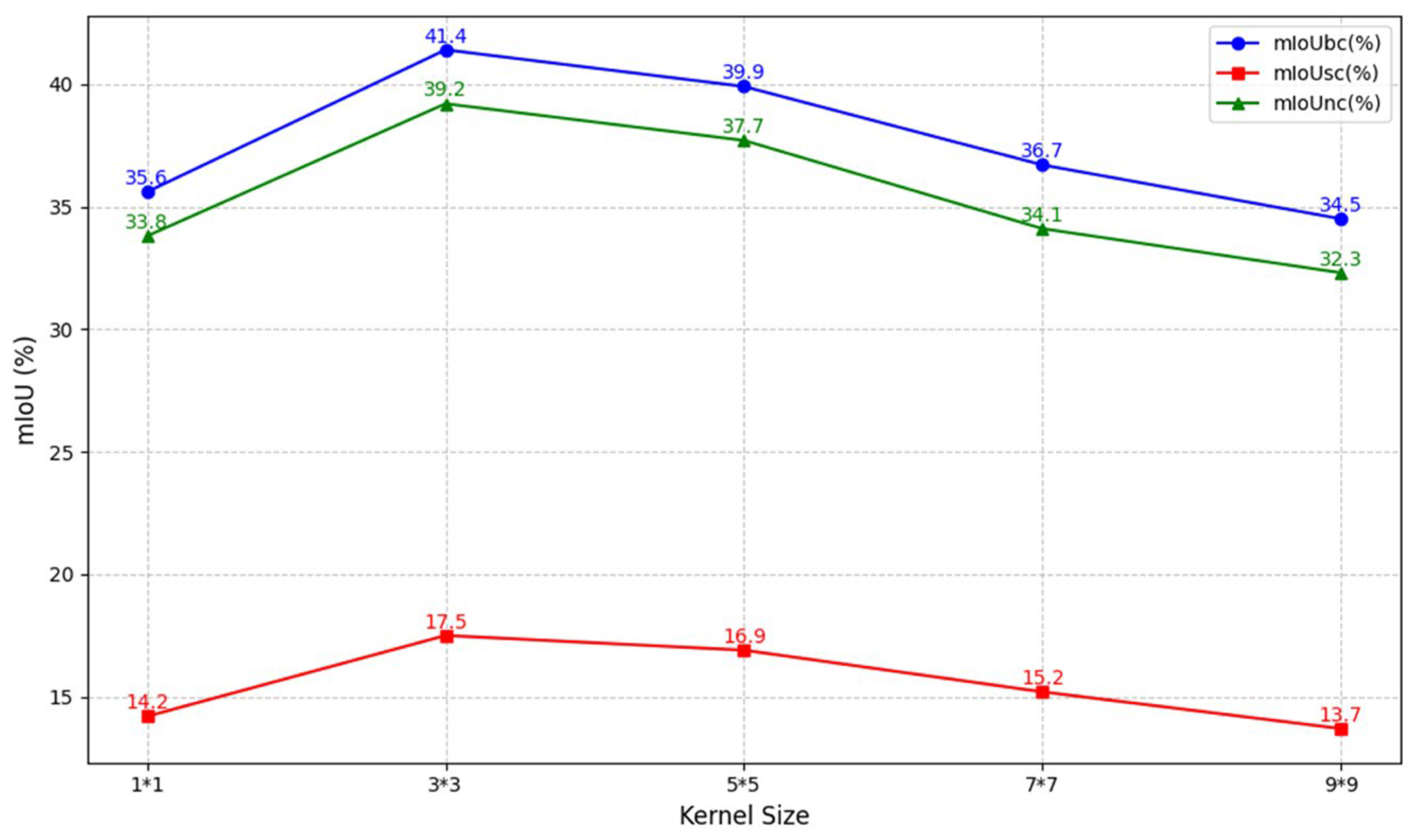

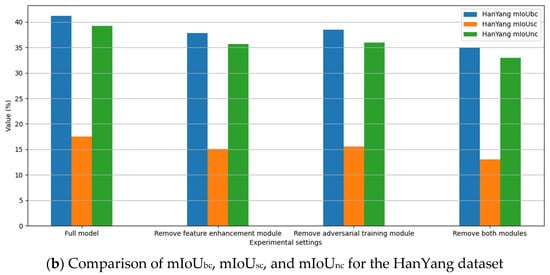

To investigate the influence of the convolution kernel size of the Laplacian operator on the performance of the IFE, we conducted ablation experiments by varying the kernel size while keeping other network configurations constant. The results and parameter settings are illustrated in Figure 12.

Figure 12. Illustration of the impact of Laplacian kernel size on the performance of MFA-SCDNet. The term “n*n” refers to a kernel of size n × n.

The results in Figure 12 indicate that, under the specified experimental conditions, mIoUbc, mIoUsc, and mIoUnc all peak at a kernel size of 3 × 3. Kernels that are too small or too large degrade performance, owing to inadequate extraction of high-frequency details or excessive smoothing of features. This finding underscores the importance of selecting a kernel size that balances feature discrimination and noise suppression.
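For readers unfamiliar with the operator, the sketch below applies a generic 3 × 3 Laplacian kernel to a single-channel image to extract high-frequency detail. It is not the IFE implementation: the 4-neighbor kernel coefficients, the "valid" border handling, and the function name are assumptions chosen for illustration.

```python
# Common 4-neighbor discrete Laplacian (an assumed choice of coefficients).
LAPLACIAN_3X3 = [
    [0, 1, 0],
    [1, -4, 1],
    [0, 1, 0],
]


def filter2d_valid(image, kernel):
    """Slide a square kernel over a single-channel image without padding.

    The kernel is applied without flipping; for a symmetric kernel such
    as the Laplacian this is identical to convolution. The output is
    smaller than the input by (kernel size - 1) in each dimension.
    """
    k = len(kernel)
    height, width = len(image), len(image[0])
    output = []
    for r in range(height - k + 1):
        row = []
        for c in range(width - k + 1):
            acc = 0
            for i in range(k):
                for j in range(k):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        output.append(row)
    return output
```

The response is zero on flat regions and on linear intensity ramps and is large at edges and isolated points, which is why the operator acts as a high-frequency extractor.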

The ablation results show that both the infrared feature enhancement module and the adversarial training-based common feature extraction module are indispensable components of MFA-SCDNet. The two modules improve model performance from different perspectives: the former enhances the features of infrared images, enabling better integration of bimodal information, whereas the latter extracts common features, mitigating the interference caused by modal differences. Their synergy significantly improves the performance of MFA-SCDNet in SCD on visible and infrared image pairs, supporting the model’s accuracy and stability.

5. Discussion

From the experimental results, it is evident that MFA-SCDNet outperforms comparative algorithms in both binary change detection and SCD tasks. This superiority can be attributed to its unique network design. The feature classification module effectively extracts common features minimally affected by modal changes through adversarial training, thereby reducing interference caused by modal differences and enabling the network to more accurately identify change information. Furthermore, the infrared feature enhancement module transforms single-channel infrared grayscale images into three-channel images, thereby augmenting the feature information of infrared images. This transformation compensates for the inherent disadvantage of insufficient information compared to visible light images, ultimately enhancing the model’s ability to understand and process infrared imagery.

However, there remains considerable room for optimization in MFA-SCDNet. In complex scenarios, although the network is capable of detecting most changes, its detection accuracy for subtle and concealed variations still requires improvement. This limitation may stem from the significant background interference present in intricate environments, making it challenging for existing feature extraction and classification methods to accurately distinguish minor changes from noise. Furthermore, the network exhibits high computational complexity when processing large-sized images, which results in a decline in detection efficiency. This issue could become a limiting factor in practical applications, particularly in scenarios where real-time performance is critical.

6. Conclusions

The present study introduces MFA-SCDNet, a network designed for SCD in visible and infrared image pairs. This approach addresses the challenges that modality differences pose for heterogeneous image feature extraction. The core of MFA-SCDNet lies in its infrared feature enhancement and cross-modal common feature extraction modules. The former extracts both high-frequency and low-frequency components from infrared images to enhance feature information. The latter employs adversarial training to filter out common features that are minimally affected by modal variations for change detection, while modality-specific features are used for semantic recognition. Finally, the integration of change and semantic information yields the SCD results. Experiments on an open-source dataset demonstrate the effectiveness of the proposed network.

Author Contributions

Conceptualization, X.L.; methodology, X.L.; software, X.L.; validation, X.L.; investigation, X.L.; resources, X.L. and J.W.; writing—review and editing, X.L. and J.G.; supervision, Z.W. and J.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

Author Jianxiong Wen was employed by the company Beijing Hangyu Tianqiong Technology Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| SCD | Semantic Change Detection |
| BCD | Binary Change Detection |
| IFE | Infrared Feature Enhancement |
| CCFE | Cross-Modal Common Feature Extraction |

References

1. He, J.; Jiang, X.; Hao, Z.; Zhu, M.; Gao, W.; Liu, S. LPHOG: A Line Feature and Point Feature Combined Rotation Invariant Method for Heterologous Image Registration. Remote Sens. 2023, 15, 4548.

2. Zhu, X.; Yang, Z.; Zoubir, T. Research on the Matching Algorithm for Heterologous Image After Deformation in the Same Scene. Discret. Contin. Dyn. Syst. 2019, 12, 1281–1296.

3. Jiang, C.; Ren, H.; Yang, H.; Huo, H.; Zhu, P.; Yao, Z.; Li, J.; Sun, M.; Yang, S. M2FNet: Multi-Modal Fusion Network for Object Detection from Visible and Thermal Infrared Images. Int. J. Appl. Earth Obs. Geoinf. 2024, 130, 103918.

4. Hou, Z.; Li, X.; Yang, C.; Ma, S.; Yu, W.; Wang, Y. Dual-Branch Network Object Detection Algorithm Based on Dual-Modality Fusion of Visible and Infrared Images. Multimed. Syst. 2024, 30, 333.

5. Tang, K.; Xu, F.; Chen, X.; Dong, Q.; Yuan, Y.; Chen, J. The ClearSCD Model: Comprehensively Leveraging Semantics and Change Relationships for Semantic Change Detection in High Spatial Resolution Remote Sensing Imagery. ISPRS J. Photogramm. Remote Sens. 2024, 211, 299–317.

6. Liu, T.; Zhang, M.; Gong, M.; Zhang, Q.; Jiang, F.; Zheng, H.; Lu, D. Commonality Feature Representation Learning for Unsupervised Multimodal Change Detection. IEEE Trans. Image Process. 2025, 34, 1219–1233.

7. Wu, Y.; Li, J.H.; Yuan, Y.Z.; Qin, A.K.; Miao, Q.G.; Gong, M.G. Commonality Autoencoder: Learning Common Features for Change Detection from Heterogeneous Images. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 4257–4270.

8. Li, Z.; Wang, X.; Fang, S.; Zhao, J.; Yang, S.; Li, W. A Decoder-Focused Multitask Network for Semantic Change Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5609115.

9. Han, Y.; Zheng, C.; Liu, X.; Tian, Y.; Dong, Z. Burned Area and Burn Severity Mapping with a Transformer-Based Change Detection Model. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 13866–13880.

10. Cao, Y.; Huang, X.; Weng, Q. A Multi-Scale Weakly Supervised Learning Method with Adaptive Online Noise Correction for High-Resolution Change Detection of Built-Up Areas. Remote Sens. Environ. 2023, 297, 113779.

11. Chantharaj, S.; Pornratthanapong, K.; Chitsinpchayakun, P.; Panboonyuen, T.; Vateekul, P.; Lawavirojwong, S.; Srestasathiern, P.; Jitkajornwanich, K. Semantic Segmentation on Medium-Resolution Satellite Images Using Deep Convolutional Networks with Remote Sensing Derived Indices. In Proceedings of the 15th International Joint Conference on Computer Science and Software Engineering (JCSSE), Nakhonpathom, Thailand, 11–13 July 2018.

12. Cao, Y.; Feng, W.; Quan, Y.; Bao, W.; Dauphin, G.; Ren, A.; Yuan, X.; Xing, M. Forest Disaster Detection Method Based on Ensemble Spatial-Spectral Genetic Algorithm. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 7375–7390.

13. Han, T.; Tang, Y.; Chen, Y.; Zou, B.; Feng, H. Global Structure Graph Mapping for Multimodal Change Detection. Int. J. Digit. Earth 2024, 17, 2347457.

14. Wu, C.; Zhang, L.; Zhang, L. A Scene Change Detection Framework for Multi-Temporal Very High Resolution Remote Sensing Images. Signal Process. 2016, 124, 184–197.

15. Wu, C.; Zhang, L.; Du, B. Kernel Slow Feature Analysis for Scene Change Detection. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2367–2384.

16. Lal, A.; Anouncia, S. Modernizing the Multi-Temporal Multispectral Remotely Sensed Image Change Detection for Global Maxima Through Binary Particle Swarm Optimization. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 95–103.

17. Kusetogullari, H.; Yavariabdi, A.; Celik, T. Unsupervised Change Detection in Multitemporal Multispectral Satellite Images Using Parallel Particle Swarm Optimization. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 2151–2164.

18. Qu, J.; Yang, P.; Dong, W.; Zhang, X.; Li, Y. A Semi-Supervised Multiscale Convolutional Sparse Coding-Guided Deep Interpretable Network for Hyperspectral Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5531314.

19. Yao, F.; Wang, Y. Tracking Urban Geo-Topics Based on Dynamic Topic Model. Comput. Environ. Urban Syst. 2020, 79, 101419.

20. Lv, P.; Cheng, P.; Ma, C.; Zhong, Y. A Semi-Supervised Semantic and Spatial Change Detail Retention Network for Semantic Change Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4416516.

21. Niu, Y.; Guo, H.; Lu, J.; Ding, L.; Yu, D. SMNet: Symmetric Multi-Task Network for Semantic Change Detection in Remote Sensing Images Based on CNN and Transformer. Remote Sens. 2023, 15, 949.

22. Wang, J.; Zhong, Y.; Zhang, L. Semantic Change Detection Based on Supervised Contrastive Learning for High-Resolution Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5649720.

23. Ji, Z.; Wang, X.; Wang, Z.; Li, G. An Enhanced and Unsupervised Siamese Network with Superpixel-Guided Learning for Change Detection in Heterogeneous Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 19451–19466.

24. Li, W.; Wang, X.; Li, G.; Geng, B.C.; Varshney, P.K. A Copula-Guided In-Model Interpretable Neural Network for Change Detection in Heterogeneous Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2025, 63, 4700817.

25. Han, P.; Ma, C.; Li, Q.; Leng, P.; Bu, S.; Li, K. Aerial Image Change Detection Using Dual Regions of Interest Networks. Neurocomputing 2019, 349, 190–201.

26. Kwan, C.; Larkin, J. Detection of Small Moving Objects in Long Range Infrared Videos from a Change Detection Perspective. Photonics 2021, 8, 394.

27. Mirka, B.; Stow, D.; Paulus, G.; Loerch, A.C.; Coulter, L.L.; An, L.; Lewison, R.L.; Pflüger, L.S. Evaluation of Thermal Infrared Imaging from Uninhabited Aerial Vehicles for Arboreal Wildlife Surveillance. Environ. Monit. Assess. 2022, 194, 512.

28. Bian, L.; Cao, F.; Zhao, H.; Xiang, F.; Sun, H.; Wang, M.; Li, L. Self-Powered Perovskite/Si Bipolar Response Photodetector for Visible and Near-Infrared Dual-Band Imaging and Secure Optical Communication. Laser Photon. Rev. 2024, 19, 1.

29. Kurban, T. Fusion of Remotely Sensed Infrared and Visible Images Using Shearlet Transform and Backtracking Search Algorithm. Int. J. Remote Sens. 2021, 42, 5087–5104.

30. Fang, H.; Wei, Y.; Luo, H.; Hu, Q. Detection of Building Shadow in Remote Sensing Imagery of Urban Areas with Fine Spatial Resolution Based on Saturation and Near-Infrared Information. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 2695–2706.

31. Wang, R.; Wu, H.; Qiu, H.; Wang, F.; Liu, X.; Cheng, X. A Difference Enhanced Neural Network for Semantic Change Detection of Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2023, 20, 5509205.

32. Xiang, S.; Wang, M.; Jiang, X.; Xie, G.; Zhang, Z.; Tang, P. Dual-Task Semantic Change Detection for Remote Sensing Images Using the Generative Change Field Module. Remote Sens. 2021, 13, 3336.

33. Feng, H.; Dai, X.; Chai, L.; Su, B.; Zhang, T.; Xiao, C. Landsat Data-Driven Identification of Surface Soil Freeze and Thaw States: A Comparison of Various Target Detection and Semantic Segmentation Models. Int. J. Digit. Earth 2024, 17, 2413889.

34. Khoshboresh-Masouleh, M.; Shah-Hosseini, R. Development and Evaluation of a Deep Learning Model for Real-Time Ground Vehicle Semantic Segmentation from UAV-Based Thermal Infrared Imagery. ISPRS J. Photogramm. Remote Sens. 2019, 155, 172–186.

35. Zhou, X.; Wu, X.; Bao, L.; Yin, H.; Jiang, Q.; Zhang, J. AGFNet: Adaptive Gated Fusion Network for RGB-T Semantic Segmentation. IEEE Trans. Intell. Transp. Syst. 2025, 26, 6477–6492.

36. Zheng, Z.; Zhong, Y.; Tian, S.; Ma, A.; Zhang, L. ChangeMask: Deep Multi-Task Encoder-Transformer-Decoder Architecture for Semantic Change Detection. ISPRS J. Photogramm. Remote Sens. 2022, 183, 228–239.

37. Zheng, D.; Wu, Z.; Liu, J.; Xu, Y.; Hung, C.; Wei, Z. Explicit Change-Relation Learning for Change Detection in VHR Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2024, 21, 6005005.

38. Prasad, J.V.D.; Sreelatha, M.; SuvarnaVani, K. Semantic Land Cover Change Detection Using HarDNet and Dual Path Coronet. Int. J. Remote Sens. 2023, 44, 7857–7875.

39. Daudt, R.; Le Saux, B.; Boulch, A.; Gousseau, Y. Multitask Learning for Large-Scale Semantic Change Detection. Comput. Vis. Image Underst. 2019, 187, 102783.

40. He, L.; Zhang, M.; Li, Y.; Zhang, J.; Luo, S.; Li, S.; Zhang, X. Change-Guided Similarity Pyramid Network for Semantic Change Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5637917.

41. Jiang, X.; Huang, B.; Zhao, Y. Spatiotemporal Image Fusion with Spectrally Preserved Pre-Prediction: Tackling Complex Land-Cover Changes. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5406114.

42. Singh, S.; Tiwari, R.; Sood, V.; Gusain, H.; Prashar, S. Image Fusion of Ku-Band-Based SCATSAT-1 and MODIS Data for Cloud-Free Change Detection Over Western Himalayas. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4302514.

43. Benson, I.M.; Helser, T.E.; Barnett, B.K. Fourier Transform Near Infrared Spectroscopy of Otoliths Coupled with Deep Learning Improves Age Prediction for Long-Lived Northern Rockfish. Fish. Res. 2024, 278, 107116.

44. Zhang, L.; Ding, X.; Hou, R. Classification Modeling Method for Near-Infrared Spectroscopy of Tobacco Based on Multimodal Convolution Neural Networks. J. Anal. Methods Chem. 2020, 2020, 9652470.

45. Li, Z.; Wang, Q.; Chen, L.; Zhang, X.; Yin, Y. Cascaded Cross-Modal Alignment for Visible-Infrared Person Re-Identification. Knowledge-Based Syst. 2024, 305, 112585.

46. Yuan, M.; Shi, X.; Wang, N.; Wang, Y.; Wei, X. Improving RGB-Infrared Object Detection with Cascade Alignment-Guided Transformer. Inf. Fusion 2024, 105, 102246.

47. Gonzalez, C.; Horrocks, T.; Wedge, D.; Holden, E.; Hackman, N.; Green, T. Anomaly Detection in Fourier Transform Infrared Spectroscopy of Geological Specimens Using Variational Autoencoders. Ore Geol. Rev. 2023, 158, 105478.

48. Ma, N.; Peng, Y.; Wang, S. On-Line Hyperspectral Anomaly Detection with Hypothesis Test Based Model Learning. Infrared Phys. Technol. 2019, 97, 15–24.

49. Li, X.; Lei, L.; Zhang, C.; Kuang, G. Multimodal Semantic Consistency-Based Fusion Architecture Search for Land Cover Classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4412414.

50. Liao, M.; Tian, S.; Wei, B.; Zhang, Y.; Zou, W.; Li, X. Class-Balanced Sampling and Discriminative Stylization for Domain Generalization Semantic Segmentation. IEEE Trans. Intell. Transp. Syst. 2024, 26, 2596–2608.

51. Zhang, C.; Li, H.; Feng, Z.; He, S. Joint Coupled Dictionaries-Based Visible-Infrared Image Fusion Method via Texture Preservation Structure in Sparse Domain. Comput. Vis. Image Underst. 2023, 235, 103781.

52. Wan, D.; Lu, R.; Hu, B.; Yin, J.; Shen, S.; Xu, T.; Lang, X. YOLO-MIF: Improved YOLOv8 with Multi-Information Fusion for Object Detection in Gray-Scale Images. Adv. Eng. Inform. 2024, 62, 102709.

53. Yang, G.; Lei, J.; Tian, H.; Feng, Z.; Liang, R. Asymptotic Feature Pyramid Network for Labeling Pixels and Regions. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 7820–7829.

54. Tian, S.; Zhong, Y.; Zheng, Z.; Ma, A.; Tan, X.; Zhang, L. Large-Scale Deep Learning Based Binary and Semantic Change Detection in Ultra High Resolution Remote Sensing Imagery: From Benchmark Datasets to Urban Application. ISPRS J. Photogramm. Remote Sens. 2022, 193, 164–186.

55. Ding, L.; Zhang, J.; Guo, H.; Zhang, K.; Liu, B.; Bruzzone, L. Joint Spatio-Temporal Modeling for Semantic Change Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5610814.

56. Cheng, Y.; Yang, W.; Li, Y.; Xu, W.; Chang, L.; Li, R. Remote Sensing Semantic Change Detection Based on the Visual Foundation Model. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Athens, Greece, 7–12 July 2024.

57. Wang, Q.; Jing, W.; Chi, K.; Yuan, Y. Cross-Difference Semantic Consistency Network for Semantic Change Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4406312.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).