Spectral-Spatial-Sensorial Attention Network with Controllable Factors for Hyperspectral Image Classification

Abstract

1. Introduction

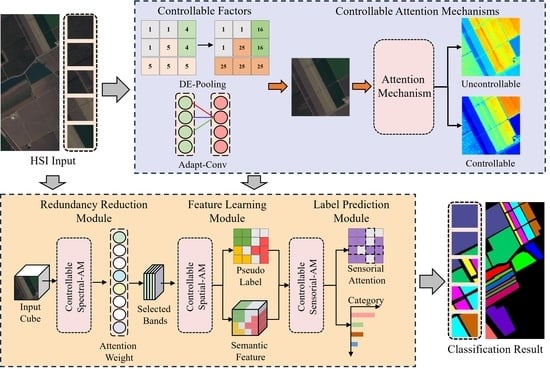

- An S3AN with controllable factors is proposed for HSI classification. The S3AN integrates the redundancy reduction, feature learning, and label prediction processes on the basis of the spectral-spatial-sensorial attention mechanism, which refines the transformation of features and improves the adaptability of attention mechanisms in HSI classification;

- Two controllable factors, DE-Pooling and Adapt-Conv, are developed to balance the differences in spectral–spatial features. The controllable factors are dynamically adjusted through backpropagation to accurately distinguish continuous and approximate features and to improve the sensitivity of attention mechanisms;

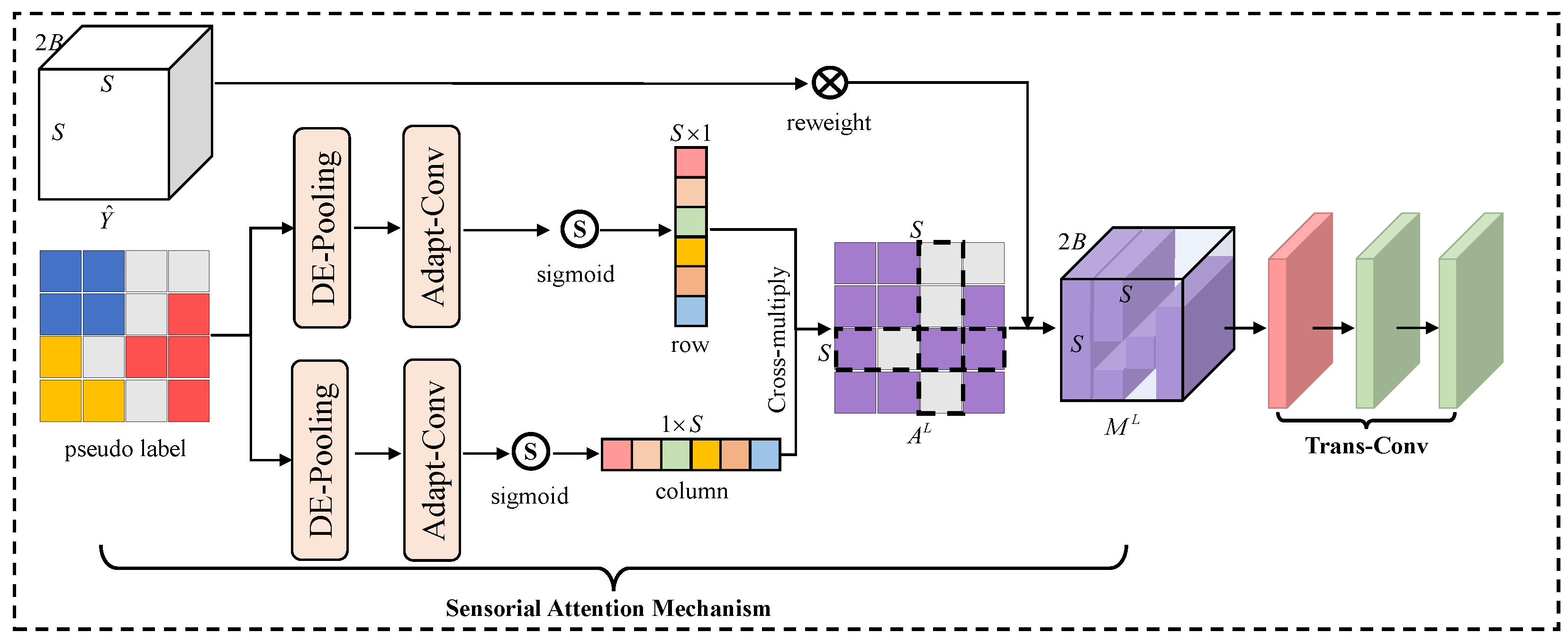

- A new sensorial attention mechanism is designed to enhance the representation of detailed features. The category information in the pseudo label is transformed into the sensorial attention map to highlight important objects and positional details and to improve the reliability of label prediction.

2. Related Work and Motivations

2.1. HSI Classification Based on CNN

2.2. HSI Classification Based on Attention Mechanism

2.3. Motivations

3. Materials and Methods

3.1. Controllable Factors

3.1.1. DE-Pooling

3.1.2. Adapt-Conv

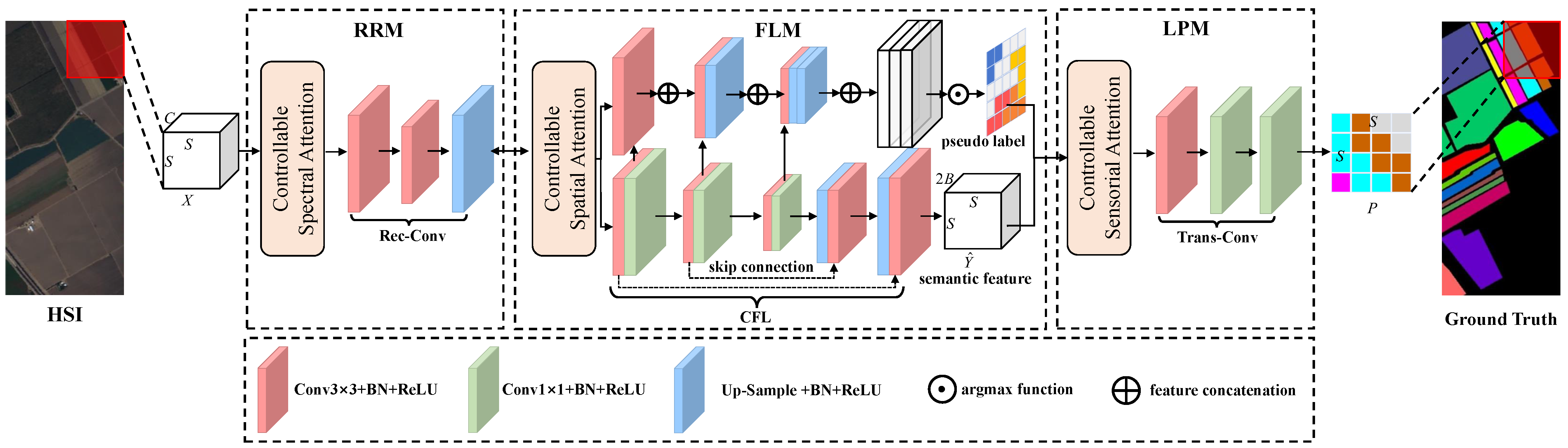

3.2. Redundancy Reduction Module (RRM)

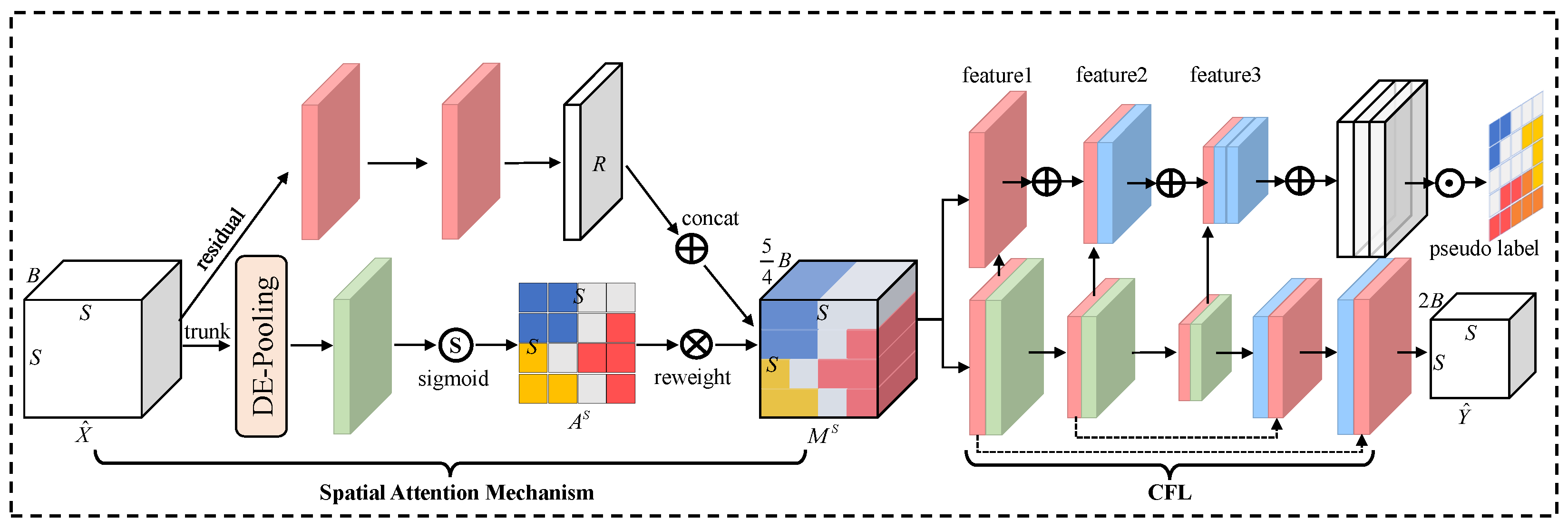

3.3. Feature Learning Module (FLM)

3.4. Label Prediction Module (LPM)

3.5. S3AN for HSI Classification

4. Experimental Results

4.1. Datasets Description

- Indian Pines dataset: The Indian Pines dataset was collected by the AVIRIS imaging spectrometer over the Indian Pines test site in Indiana, USA, with a spatial resolution of 20 m. The image has 200 bands and contains 16 land-cover categories;

- Salinas dataset: The Salinas dataset was collected by the AVIRIS imaging spectrometer over the Salinas Valley, California, USA, with a spatial resolution of 3.7 m. The image has 204 bands and contains 16 land-cover categories;

- WHU-Hi-HanChuan dataset: The WHU-Hi-HanChuan dataset was collected by the Headwall Nano-Hyperspec imaging spectrometer aboard a drone platform in Hanchuan, Hubei Province, China, with a spatial resolution of about 0.0109 m. The image has 274 bands and contains 16 land-cover categories.

4.2. Experimental Setup

- Operating environment: All experiments are implemented with the PyTorch library and run on Tesla M40 GPUs. The reported results are the average of 10 independent runs;

- Evaluation metrics: Five metrics are used to evaluate HSI classification in terms of classification accuracy and computational efficiency, namely the per-class accuracy, the overall accuracy (OA), the average accuracy (AA), the Kappa coefficient (Kappa), and the inference time. The accuracy metrics are computed from the counts of True Positives (TP), False Positives (FP), True Negatives (TN), and False Negatives (FN). Note that the expected chance agreement is an intermediate variable in the calculation of Kappa, and AA is the mean of the per-class accuracies. In addition, the inference time denotes the time required by the model to traverse the entire HSI. A shorter inference time indicates that the model is closer to the requirements of practical applications;

- Parameter settings: For the controllable factors, the dynamic exponent is initially set to 2 and the adaptive convolution kernel size is initially set to 3; the number of selected bands is set to 32. The model is trained for 200 epochs with the Adam optimizer at a learning rate of 0.001, using the cross-entropy loss function.
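The accuracy metrics named in the setup above can be sketched as follows. This is a minimal illustration that assumes the standard multi-class definitions computed from a confusion matrix (OA as the fraction of correctly classified samples, per-class accuracy as the per-class recall, AA as their mean, and Kappa as `(OA - PE) / (1 - PE)` with `PE` the chance agreement); it is not taken from the authors' code, and the function names `confusion_matrix` and `classification_metrics` are hypothetical.

```python
from typing import List, Sequence, Tuple


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int],
                     n_classes: int) -> List[List[int]]:
    """Count samples with true class i (row) predicted as class j (column)."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def classification_metrics(
        cm: List[List[int]]) -> Tuple[List[float], float, float, float]:
    """Return (per-class accuracy, OA, AA, Kappa) under the assumed definitions."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    row_tot = [sum(cm[i]) for i in range(n)]                       # true counts
    col_tot = [sum(cm[i][j] for i in range(n)) for j in range(n)]  # predicted counts

    # Overall accuracy: correctly classified samples over all samples.
    oa = sum(cm[i][i] for i in range(n)) / total
    # Per-class accuracy: correct samples of a class over its true count.
    per_class = [cm[i][i] / row_tot[i] if row_tot[i] else 0.0 for i in range(n)]
    # Average accuracy: mean of the per-class accuracies.
    aa = sum(per_class) / n
    # Chance agreement PE, the intermediate variable for Kappa.
    pe = sum(r * c for r, c in zip(row_tot, col_tot)) / (total ** 2)
    kappa = (oa - pe) / (1 - pe) if pe < 1 else 1.0
    return per_class, oa, aa, kappa


# Toy labels for illustration only; not data from the experiments.
cm = confusion_matrix([0, 0, 1, 1], [0, 1, 1, 1], n_classes=2)
per_class, oa, aa, kappa = classification_metrics(cm)
print(per_class, oa, aa, kappa)
```

Working from the confusion matrix keeps the four accuracy metrics consistent with one another, since they all derive from the same counts.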

4.3. Classification Results

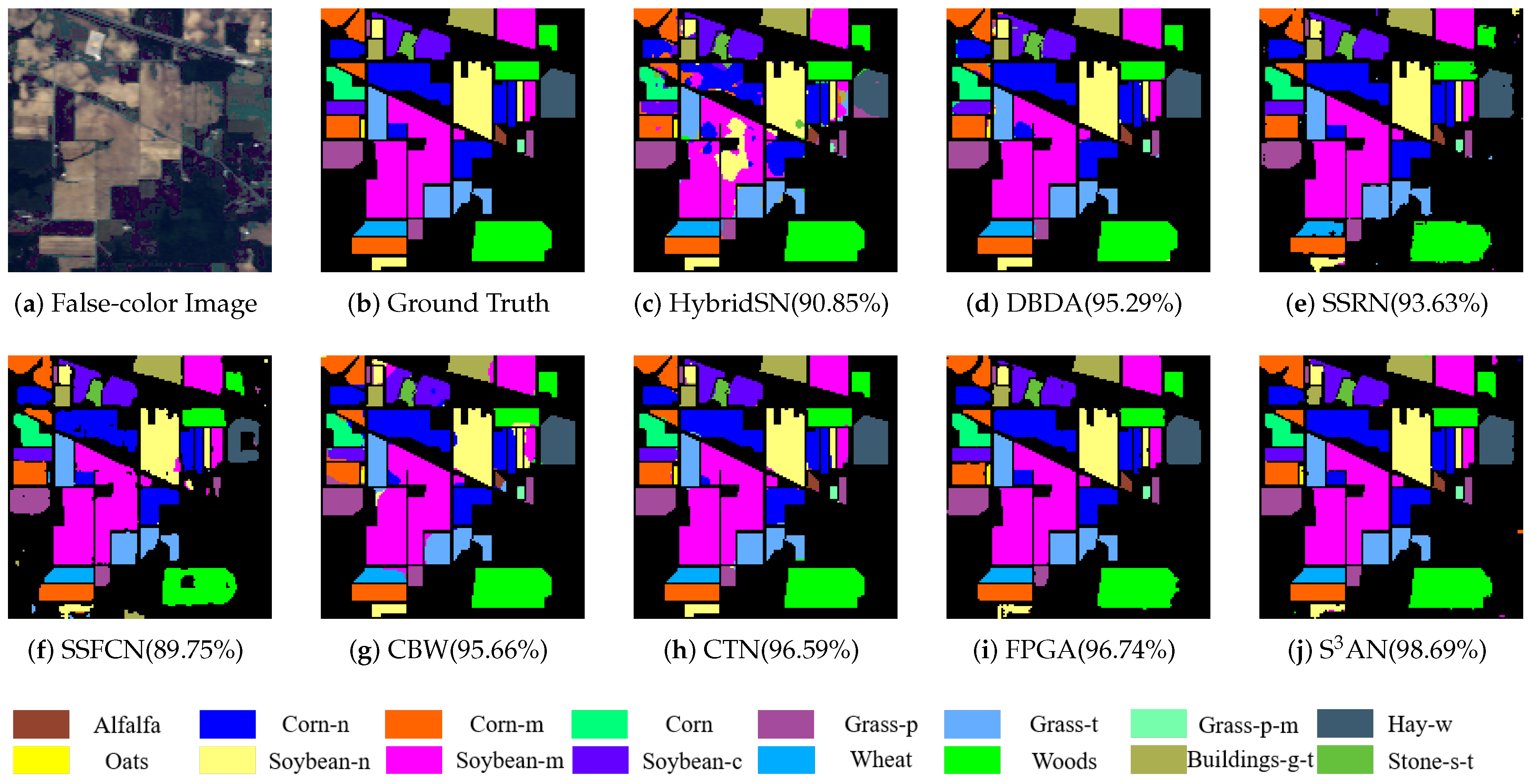

4.3.1. Classification Results on Indian Pines Dataset

4.3.2. Classification Results on Salinas Dataset

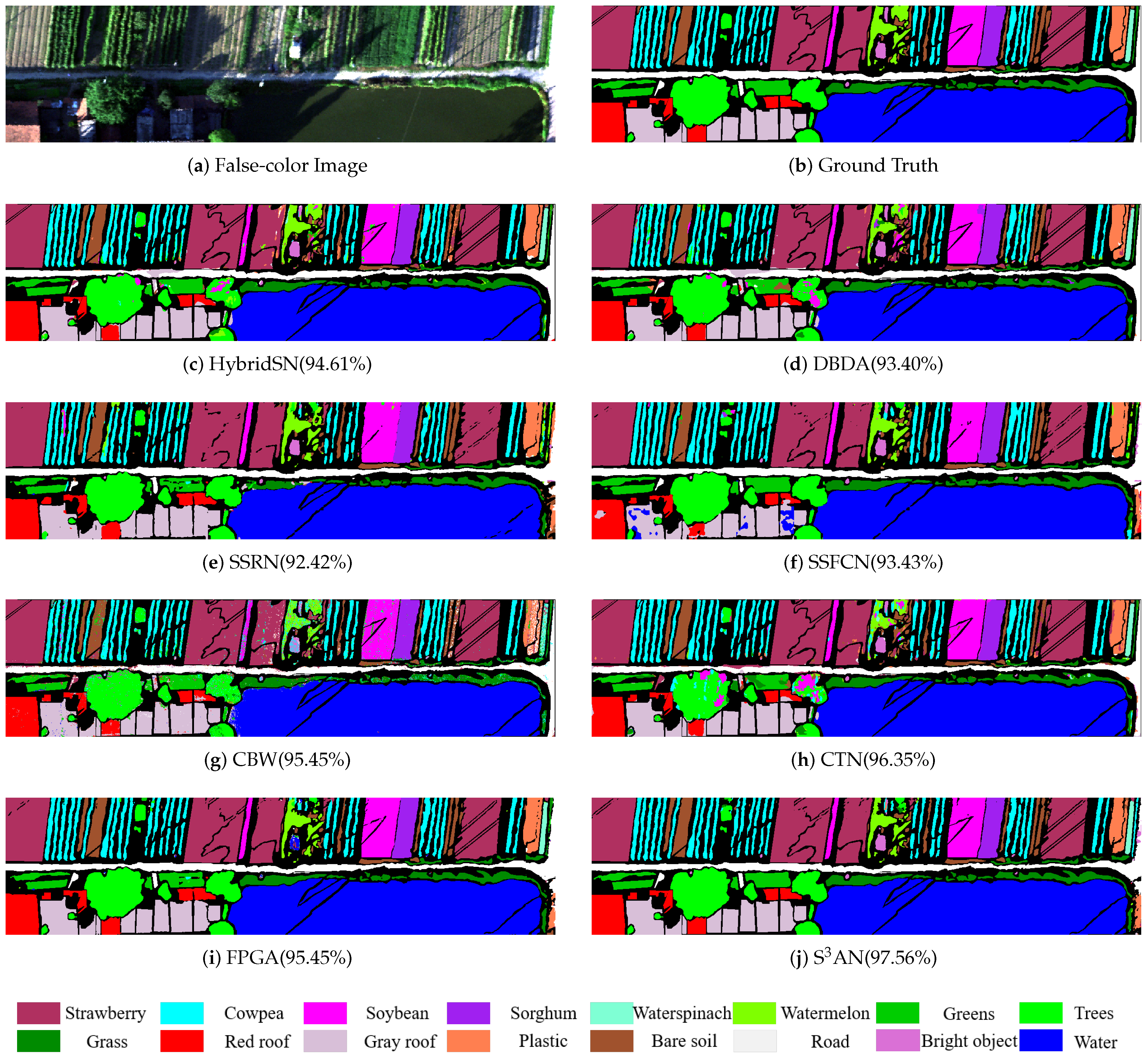

4.3.3. Classification Results on HanChuan Dataset

4.3.4. Confusion Matrix

5. Discussion

5.1. Discussion of Controllable Factors

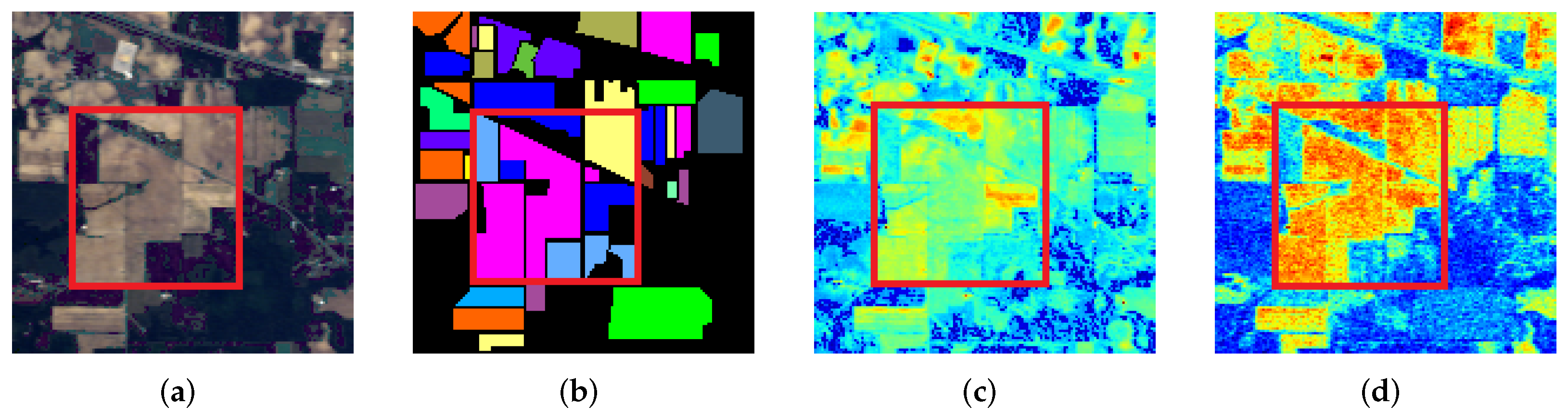

5.2. Discussion of Sensorial Attention Mechanism

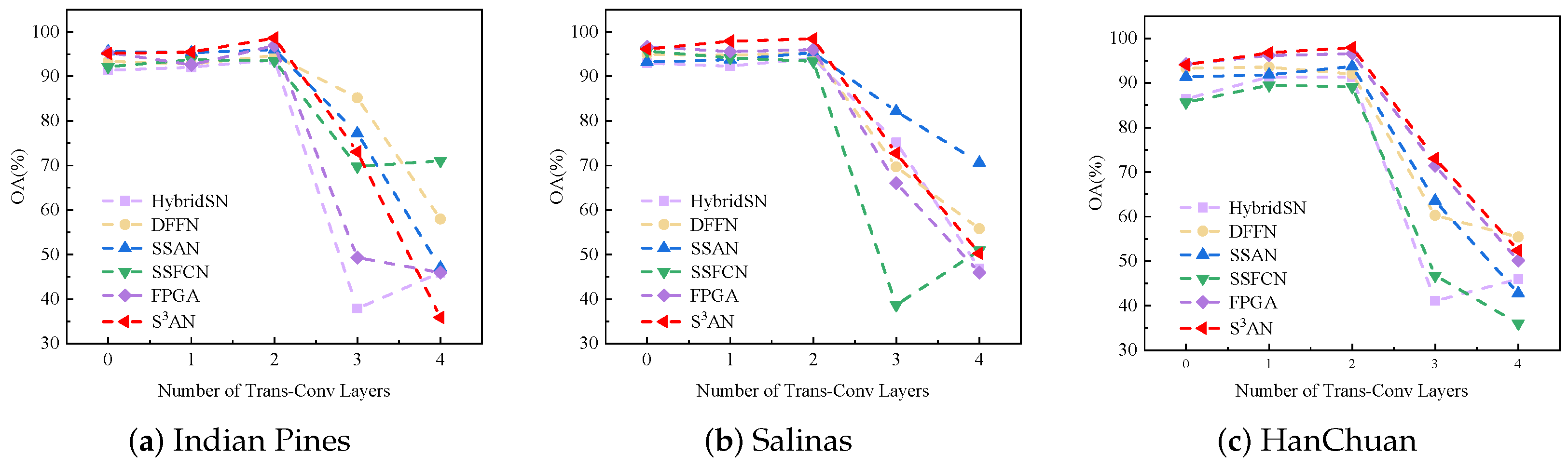

5.3. Discussion of Trans-Conv Layers

5.4. Discussion of Selected Bands

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Class | Indian Pines class | Train | Test | Salinas class | Train | Test | HanChuan class | Train | Test |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Alfalfa | 5 | 41 | Brocoli-1 | 201 | 1808 | Strawberry | 4473 | 40,262 |
| 2 | Corn-n | 143 | 1285 | Brocoli-2 | 373 | 3353 | Cowpea | 2275 | 20,478 |
| 3 | Corn-m | 83 | 747 | Fallow | 198 | 1778 | Soybean | 1029 | 9258 |
| 4 | Corn | 24 | 213 | Fallow-r | 139 | 1255 | Sorghum | 535 | 4818 |
| 5 | Grass-p | 48 | 435 | Fallow-s | 268 | 2410 | Water-s | 120 | 1080 |
| 6 | Grass-t | 73 | 657 | Stubble | 396 | 3563 | Watermelon | 453 | 4080 |
| 7 | Grass-m | 3 | 25 | Celery | 358 | 3221 | Greens | 590 | 5313 |
| 8 | Hay-w | 48 | 430 | Grapes-u | 1127 | 10,144 | Trees | 1798 | 16,180 |
| 9 | Oats | 2 | 18 | Soil-v-d | 620 | 5583 | Grass | 947 | 8522 |
| 10 | Soy-n | 97 | 875 | Corn-w | 328 | 2950 | Red roof | 1052 | 9464 |
| 11 | Soy-m | 245 | 2210 | Lettuce-4 | 107 | 961 | Gray roof | 1691 | 15,220 |
| 12 | Soy-c | 59 | 534 | Lettuce-5 | 193 | 1734 | Plastic | 368 | 3311 |
| 13 | Wheat | 20 | 185 | Lettuce-6 | 92 | 824 | Bare soil | 912 | 8204 |
| 14 | Woods | 126 | 1139 | Lettuce-7 | 107 | 963 | Road | 1856 | 16,704 |
| 15 | Buildings | 39 | 347 | Vinyard-u | 727 | 6541 | Bright-o | 114 | 1022 |
| 16 | Stone-s | 9 | 84 | Vinyard-v | 181 | 1626 | Water | 7540 | 67,861 |
| Total | | 1024 | 9225 | | 5415 | 48,714 | | 25,753 | 231,777 |
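The per-class counts in the table above are consistent with roughly 10% of each class being used for training; that ratio is inferred from the table rather than stated in the text. Under that assumption, a per-class (stratified) random split could be sketched as below. The function name `stratified_split`, the rounding rule, and the seed are illustrative choices, not the authors' sampling procedure.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def stratified_split(labels: Sequence[int], train_ratio: float = 0.1,
                     seed: int = 0) -> Tuple[List[int], List[int]]:
    """Split sample indices into train/test sets, class by class.

    labels holds one class id per labeled pixel. At least one sample per
    class goes to the training set (an assumption for very small classes).
    """
    rng = random.Random(seed)
    by_class: Dict[int, List[int]] = defaultdict(list)
    for idx, lab in enumerate(labels):
        by_class[lab].append(idx)

    train: List[int] = []
    test: List[int] = []
    for lab in sorted(by_class):
        idxs = by_class[lab]
        rng.shuffle(idxs)  # random draw within the class
        n_train = max(1, round(len(idxs) * train_ratio))
        train.extend(idxs[:n_train])
        test.extend(idxs[n_train:])
    return train, test


# Three Indian Pines class sizes (train + test counts from the table).
labels = [1] * 46 + [2] * 1428 + [9] * 20
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))
```

Splitting class by class keeps small classes such as Oats represented in the training set, which a purely global random draw would not guarantee.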

| Class | HybridSN | DBDA | SSRN | SSFCN | CBW | CTN | FPGA | S3AN |
|---|---|---|---|---|---|---|---|---|
| 1 | 90.25 ± 0.86 | 95.15 ± 0.20 | 89.07 ± 0.95 | 56.24 ± 2.60 | 93.33 ± 1.64 | 95.23 ± 0.53 | 92.37 ± 0.15 | 99.16 ± 0.42 |
| 2 | 87.74 ± 0.29 | 93.76 ± 1.15 | 93.59 ± 0.63 | 89.65 ± 0.75 | 94.71 ± 0.85 | 94.28 ± 0.20 | 96.55 ± 0.12 | 91.55 ± 0.15 |
| 3 | 89.19 ± 0.92 | 89.61 ± 0.17 | 92.25 ± 0.99 | 95.45 ± 0.44 | 97.24 ± 0.23 | 94.61 ± 1.41 | 92.38 ± 0.37 | 96.33 ± 0.33 |
| 4 | 85.15 ± 1.94 | 92.89 ± 0.42 | 90.53 ± 1.36 | 92.62 ± 0.68 | 99.03 ± 0.07 | 98.14 ± 0.16 | 96.85 ± 0.25 | 98.18 ± 0.48 |
| 5 | 91.18 ± 0.08 | 94.66 ± 1.94 | 94.31 ± 2.56 | 95.44 ± 1.25 | 89.61 ± 1.21 | 98.62 ± 0.27 | 94.26 ± 0.31 | 97.75 ± 0.07 |
| 6 | 93.53 ± 0.25 | 96.45 ± 0.76 | 89.75 ± 0.72 | 74.96 ± 1.92 | 95.75 ± 2.91 | 97.60 ± 0.32 | 95.08 ± 1.25 | 99.60 ± 0.36 |
| 7 | 89.29 ± 0.88 | 95.33 ± 1.13 | 93.09 ± 1.02 | 85.66 ± 0.96 | 99.62 ± 0.15 | 96.15 ± 0.21 | 98.33 ± 0.88 | 95.89 ± 0.20 |
| 8 | 84.41 ± 0.64 | 97.01 ± 0.46 | 95.66 ± 1.79 | 93.50 ± 0.45 | 99.89 ± 0.04 | 99.30 ± 0.06 | 98.74 ± 0.65 | 97.03 ± 0.15 |
| 9 | 90.96 ± 1.12 | 95.62 ± 0.21 | 91.15 ± 0.56 | 91.75 ± 2.21 | 99.33 ± 0.21 | 89.99 ± 2.49 | 99.51 ± 0.42 | 96.96 ± 0.26 |
| 10 | 95.54 ± 1.28 | 92.74 ± 0.85 | 90.69 ± 0.21 | 84.85 ± 0.95 | 91.69 ± 1.35 | 99.18 ± 0.25 | 90.52 ± 1.13 | 99.51 ± 0.12 |
| 11 | 91.78 ± 2.21 | 94.11 ± 1.15 | 88.26 ± 2.24 | 89.15 ± 0.41 | 94.28 ± 0.84 | 96.83 ± 0.30 | 93.66 ± 0.16 | 95.45 ± 1.65 |
| 12 | 92.76 ± 0.86 | 96.35 ± 0.06 | 97.75 ± 1.09 | 93.96 ± 0.50 | 97.95 ± 0.39 | 98.50 ± 0.33 | 91.77 ± 3.71 | 96.33 ± 3.74 |
| 13 | 95.10 ± 0.42 | 94.18 ± 0.51 | 90.33 ± 0.57 | 89.31 ± 0.61 | 99.49 ± 0.28 | 99.45 ± 0.15 | 96.05 ± 0.85 | 98.45 ± 0.58 |
| 14 | 69.89 ± 2.98 | 89.72 ± 0.29 | 91.51 ± 0.66 | 91.99 ± 1.15 | 99.57 ± 0.04 | 99.21 ± 0.38 | 93.33 ± 2.59 | 99.76 ± 0.23 |
| 15 | 95.41 ± 0.68 | 88.96 ± 1.86 | 89.36 ± 0.30 | 92.25 ± 1.18 | 98.55 ± 0.16 | 98.86 ± 0.04 | 95.00 ± 0.23 | 98.50 ± 0.95 |
| 16 | 90.17 ± 0.11 | 95.99 ± 0.58 | 96.95 ± 0.29 | 90.96 ± 0.86 | 96.34 ± 0.31 | 94.99 ± 2.75 | 96.15 ± 0.58 | 96.88 ± 2.21 |
| OA (%) | 90.85 ± 0.15 | 95.29 ± 0.27 | 93.63 ± 0.05 | 89.75 ± 0.54 | 95.66 ± 0.24 | 96.59 ± 0.34 | 96.74 ± 0.17 | 98.69 ± 0.13 |
| AA (%) | 89.52 ± 0.42 | 93.91 ± 0.21 | 92.14 ± 0.73 | 87.98 ± 0.42 | 96.64 ± 0.50 | 96.93 ± 0.20 | 95.03 ± 0.20 | 97.33 ± 0.45 |
| Kappa | 0.9095 ± 0.004 | 0.9431 ± 0.002 | 0.9308 ± 0.003 | 0.8585 ± 0.002 | 0.9505 ± 0.002 | 0.9559 ± 0.004 | 0.9524 ± 0.004 | 0.9841 ± 0.002 |
| Time (s) | 8.78 ± 2.04 | 6.51 ± 1.03 | 7.62 ± 1.85 | 19.35 ± 2.55 | 3.58 ± 0.87 | 12.26 ± 1.46 | 5.40 ± 1.27 | 2.36 ± 0.99 |

| Class | HybridSN | DBDA | SSRN | SSFCN | CBW | CTN | FPGA | S3AN |
|---|---|---|---|---|---|---|---|---|
| 1 | 99.70 ± 0.04 | 94.89 ± 0.59 | 99.01 ± 0.16 | 63.82 ± 2.30 | 98.66 ± 0.05 | 99.50 ± 0.21 | 95.99 ± 0.54 | 99.32 ± 0.40 |
| 2 | 95.85 ± 0.12 | 98.22 ± 0.72 | 71.74 ± 3.56 | 99.55 ± 0.42 | 99.85 ± 0.11 | 94.96 ± 0.85 | 99.49 ± 0.36 | 98.75 ± 0.38 |
| 3 | 93.34 ± 0.37 | 92.85 ± 1.31 | 99.48 ± 0.12 | 96.74 ± 0.56 | 98.80 ± 0.25 | 99.39 ± 0.14 | 99.16 ± 0.60 | 99.93 ± 0.06 |
| 4 | 75.66 ± 2.64 | 95.89 ± 0.32 | 99.13 ± 0.14 | 87.99 ± 1.12 | 98.58 ± 0.60 | 97.71 ± 0.60 | 86.43 ± 1.59 | 99.51 ± 0.18 |
| 5 | 86.84 ± 1.95 | 87.26 ± 2.27 | 85.16 ± 1.57 | 86.56 ± 2.37 | 96.89 ± 0.32 | 97.47 ± 0.59 | 95.62 ± 0.55 | 96.65 ± 1.65 |
| 6 | 85.29 ± 0.62 | 83.79 ± 0.30 | 84.10 ± 2.92 | 90.55 ± 0.85 | 87.28 ± 1.74 | 99.27 ± 0.33 | 94.97 ± 0.20 | 95.74 ± 0.33 |
| 7 | 90.34 ± 0.58 | 85.75 ± 0.49 | 98.92 ± 0.53 | 90.24 ± 0.95 | 99.64 ± 0.03 | 87.38 ± 0.89 | 95.79 ± 0.56 | 98.41 ± 0.40 |
| 8 | 90.17 ± 0.62 | 86.11 ± 1.65 | 89.85 ± 0.36 | 93.61 ± 0.44 | 98.80 ± 0.16 | 99.61 ± 0.17 | 90.61 ± 1.65 | 95.22 ± 2.85 |
| 9 | 89.59 ± 0.66 | 91.18 ± 0.38 | 99.15 ± 0.39 | 95.35 ± 0.07 | 96.23 ± 0.85 | 98.77 ± 0.05 | 97.55 ± 0.97 | 98.79 ± 0.78 |
| 10 | 87.68 ± 3.31 | 89.99 ± 1.78 | 91.59 ± 0.51 | 85.49 ± 0.38 | 95.35 ± 0.64 | 90.61 ± 0.61 | 98.43 ± 0.43 | 95.46 ± 0.25 |
| 11 | 82.55 ± 0.47 | 92.35 ± 0.28 | 87.99 ± 0.83 | 89.71 ± 0.23 | 91.32 ± 0.79 | 96.89 ± 0.55 | 94.24 ± 0.45 | 98.22 ± 1.12 |
| 12 | 87.60 ± 2.80 | 98.96 ± 0.22 | 94.15 ± 0.95 | 79.75 ± 0.39 | 93.38 ± 0.85 | 94.37 ± 0.34 | 95.33 ± 1.92 | 97.75 ± 0.51 |
| 13 | 95.16 ± 1.15 | 95.19 ± 0.65 | 97.82 ± 0.55 | 95.07 ± 1.15 | 85.92 ± 2.38 | 99.43 ± 0.19 | 96.87 ± 0.45 | 96.36 ± 0.33 |
| 14 | 86.33 ± 0.22 | 90.55 ± 0.44 | 97.71 ± 0.60 | 99.51 ± 0.17 | 93.91 ± 0.33 | 98.06 ± 0.22 | 95.61 ± 0.16 | 99.73 ± 0.09 |
| 15 | 83.46 ± 0.58 | 91.76 ± 0.19 | 92.87 ± 0.71 | 90.96 ± 1.68 | 95.57 ± 0.45 | 85.39 ± 2.38 | 97.79 ± 0.66 | 97.55 ± 0.38 |
| 16 | 92.32 ± 0.14 | 94.33 ± 0.61 | 96.85 ± 0.28 | 89.65 ± 0.20 | 93.55 ± 0.20 | 97.77 ± 0.25 | 99.03 ± 0.22 | 99.85 ± 0.25 |
| OA (%) | 90.49 ± 0.55 | 93.55 ± 0.32 | 92.68 ± 0.39 | 90.92 ± 0.14 | 95.08 ± 0.25 | 96.08 ± 0.23 | 97.96 ± 0.65 | 98.59 ± 0.18 |
| AA (%) | 88.87 ± 0.86 | 91.82 ± 0.35 | 92.85 ± 0.25 | 89.66 ± 0.30 | 95.23 ± 0.34 | 96.03 ± 0.18 | 95.84 ± 0.35 | 97.95 ± 0.32 |
| Kappa | 0.9205 ± 0.004 | 0.9330 ± 0.005 | 0.9033 ± 0.002 | 0.8993 ± 0.004 | 0.9413 ± 0.003 | 0.9468 ± 0.005 | 0.9774 ± 0.004 | 0.9792 ± 0.004 |
| Time (s) | 40.48 ± 5.45 | 40.33 ± 7.53 | 53.88 ± 5.95 | 102.99 ± 9.88 | 32.69 ± 4.50 | 42.68 ± 9.06 | 37.99 ± 3.37 | 25.09 ± 2.70 |

| Class | HybridSN | DBDA | SSRN | SSFCN | CBW | CTN | FPGA | S3AN |
|---|---|---|---|---|---|---|---|---|
| 1 | 97.14 ± 0.74 | 95.41 ± 0.51 | 99.33 ± 0.32 | 99.50 ± 0.32 | 99.04 ± 0.37 | 99.32 ± 0.07 | 99.18 ± 0.27 | 99.41 ± 0.25 |
| 2 | 98.26 ± 0.36 | 99.71 ± 0.20 | 97.43 ± 0.62 | 98.17 ± 0.45 | 93.90 ± 0.88 | 97.43 ± 0.82 | 98.60 ± 0.55 | 96.04 ± 0.77 |
| 3 | 90.58 ± 1.18 | 84.97 ± 0.95 | 97.28 ± 0.95 | 99.75 ± 0.22 | 96.58 ± 1.78 | 97.27 ± 1.12 | 99.39 ± 0.64 | 99.69 ± 0.12 |
| 4 | 99.90 ± 0.06 | 86.97 ± 0.48 | 99.83 ± 0.31 | 98.39 ± 0.17 | 96.12 ± 1.10 | 99.83 ± 0.09 | 99.15 ± 0.41 | 99.75 ± 0.17 |
| 5 | 89.29 ± 0.95 | 98.02 ± 0.26 | 66.65 ± 3.75 | 12.03 ± 5.89 | 86.44 ± 2.40 | 86.65 ± 3.85 | 99.62 ± 0.23 | 99.96 ± 0.02 |
| 6 | 70.72 ± 2.25 | 81.72 ± 2.27 | 99.79 ± 0.15 | 78.83 ± 1.33 | 93.89 ± 0.56 | 97.99 ± 0.25 | 98.41 ± 0.51 | 98.40 ± 0.28 |
| 7 | 89.65 ± 0.60 | 99.82 ± 0.03 | 95.59 ± 0.29 | 99.06 ± 0.15 | 96.75 ± 0.49 | 95.59 ± 0.55 | 99.45 ± 0.13 | 99.92 ± 0.05 |
| 8 | 96.87 ± 0.49 | 93.40 ± 0.65 | 96.59 ± 0.66 | 97.16 ± 0.26 | 99.97 ± 0.02 | 96.59 ± 0.38 | 98.13 ± 0.19 | 97.99 ± 1.12 |
| 9 | 97.12 ± 0.85 | 94.37 ± 1.21 | 99.22 ± 0.54 | 99.68 ± 0.14 | 94.99 ± 0.38 | 99.22 ± 0.32 | 99.58 ± 0.26 | 98.70 ± 0.55 |
| 10 | 99.57 ± 0.31 | 98.68 ± 0.33 | 97.94 ± 0.36 | 96.51 ± 0.25 | 96.67 ± 0.65 | 97.94 ± 0.39 | 99.33 ± 0.75 | 99.59 ± 0.13 |
| 11 | 91.34 ± 0.25 | 89.54 ± 0.50 | 82.97 ± 0.89 | 95.26 ± 0.27 | 71.84 ± 2.78 | 82.97 ± 0.77 | 99.06 ± 0.50 | 99.78 ± 0.18 |
| 12 | 73.88 ± 4.62 | 86.46 ± 0.95 | 90.09 ± 1.44 | 85.57 ± 1.58 | 93.70 ± 0.49 | 90.09 ± 0.60 | 88.03 ± 2.42 | 83.70 ± 1.42 |
| 13 | 90.31 ± 0.37 | 88.17 ± 1.69 | 42.76 ± 5.12 | 97.92 ± 1.40 | 96.83 ± 0.71 | 96.49 ± 0.59 | 98.09 ± 1.37 | 97.71 ± 0.65 |
| 14 | 97.58 ± 0.15 | 98.35 ± 0.78 | 99.47 ± 0.31 | 99.77 ± 0.21 | 95.99 ± 0.67 | 99.46 ± 0.33 | 99.57 ± 0.25 | 99.65 ± 0.16 |
| 15 | 98.68 ± 0.63 | 99.13 ± 0.51 | 84.05 ± 0.55 | 65.87 ± 3.35 | 75.41 ± 3.81 | 84.04 ± 1.55 | 7.96 ± 3.89 | 68.11 ± 2.85 |
| 16 | 99.85 ± 0.05 | 99.73 ± 0.07 | 99.39 ± 0.16 | 97.56 ± 0.57 | 95.53 ± 0.55 | 99.93 ± 0.04 | 99.32 ± 0.25 | 99.58 ± 0.07 |
| OA (%) | 94.61 ± 0.31 | 93.40 ± 0.65 | 92.42 ± 0.75 | 93.43 ± 0.46 | 95.45 ± 0.46 | 96.35 ± 0.29 | 96.62 ± 0.56 | 97.56 ± 0.52 |
| AA (%) | 92.55 ± 0.28 | 92.32 ± 0.44 | 90.52 ± 0.66 | 88.81 ± 0.60 | 92.78 ± 0.35 | 95.05 ± 0.46 | 92.68 ± 0.60 | 96.23 ± 0.31 |
| Kappa | 0.9447 ± 0.004 | 0.9204 ± 0.004 | 0.9191 ± 0.006 | 0.9288 ± 0.007 | 0.9429 ± 0.006 | 0.9668 ± 0.004 | 0.9505 ± 0.006 | 0.9769 ± 0.004 |
| Time (s) | 209.37 ± 9.96 | 404.83 ± 20.38 | 371.49 ± 10.44 | 508.42 ± 20.51 | 246.42 ± 16.40 | 313.26 ± 17.65 | 169.79 ± 3.15 | 121.09 ± 6.98 |
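The entries in the result tables above have the form "value ± deviation", and the experimental setup states that results are averaged over 10 independent runs. A minimal sketch of that aggregation follows, assuming the ± term is the sample standard deviation (the text does not specify which deviation is used). The function name `summarize_runs` and the example values are hypothetical.

```python
import statistics
from typing import Sequence


def summarize_runs(values: Sequence[float], decimals: int = 2) -> str:
    """Format scores from repeated runs as 'mean ± std'."""
    mean = statistics.mean(values)
    # Assumption: spread is the sample standard deviation (n - 1 denominator).
    std = statistics.stdev(values) if len(values) > 1 else 0.0
    return f"{mean:.{decimals}f} ± {std:.{decimals}f}"


# Hypothetical OA values (%) from 10 runs; not taken from the experiments.
oa_runs = [98.5, 98.7, 98.9, 98.6, 98.8, 98.7, 98.6, 98.8, 98.7, 98.7]
print(summarize_runs(oa_runs))
```

Passing `decimals=4` gives the precision used for the mean in the Kappa rows.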

| Dataset | Method | DE-Pooling | Adapt-Conv | OA (%) | AA (%) | Kappa |
|---|---|---|---|---|---|---|
| Indian Pines | Baseline | - | - | 83.19 ± 1.14 | 81.32 ± 1.68 | 0.7816 ± 0.075 |
| Indian Pines | DE-Pooling | ✓ | - | 96.57 ± 0.65 | 96.85 ± 1.60 | 0.9379 ± 0.097 |
| Indian Pines | DE-Pooling + Adapt-Conv | ✓ | ✓ | 98.41 ± 0.44 | 97.55 ± 0.60 | 0.9591 ± 0.059 |
| Salinas | Baseline | - | - | 65.03 ± 3.35 | 63.77 ± 2.70 | 0.5952 ± 0.031 |
| Salinas | DE-Pooling | ✓ | - | 95.18 ± 0.65 | 94.35 ± 0.89 | 0.9331 ± 0.016 |
| Salinas | DE-Pooling + Adapt-Conv | ✓ | ✓ | 98.09 ± 0.38 | 97.42 ± 0.55 | 0.9799 ± 0.012 |
| HanChuan | Baseline | - | - | 71.83 ± 4.78 | 69.52 ± 3.96 | 0.6607 ± 0.036 |
| HanChuan | DE-Pooling | ✓ | - | 95.61 ± 0.55 | 95.22 ± 0.27 | 0.9494 ± 0.031 |
| HanChuan | DE-Pooling + Adapt-Conv | ✓ | ✓ | 97.51 ± 0.11 | 96.89 ± 0.28 | 0.9673 ± 0.003 |

| Dataset | Number of bands | Selected bands | OA (%) | AA (%) | Kappa | Time (s) |
|---|---|---|---|---|---|---|
| Indian Pines | 8 | [102,104,…,198] | 61.33 ± 5.56 | 60.51 ± 4.70 | 0.5933 ± 0.093 | 1.66 ± 0.30 |
| Indian Pines | 16 | [56,102,…,199] | 82.60 ± 2.33 | 83.95 ± 1.16 | 0.7827 ± 0.062 | 1.85 ± 0.31 |
| Indian Pines | 24 | [18,56,…,199] | 97.45 ± 0.18 | 96.37 ± 0.25 | 0.9615 ± 0.005 | 2.07 ± 0.29 |
| Indian Pines | 32 | [12,18,…,199] | 97.63 ± 0.21 | 96.59 ± 0.09 | 0.9689 ± 0.003 | 2.41 ± 0.29 |
| Indian Pines | 36 | [12,17,…,199] | 97.25 ± 0.30 | 94.35 ± 0.27 | 0.9500 ± 0.003 | 2.95 ± 0.30 |
| Salinas | 8 | [37,38,…,197] | 53.77 ± 5.53 | 59.73 ± 3.95 | 0.5630 ± 0.052 | 12.32 ± 1.56 |
| Salinas | 16 | [12,19,…,200] | 79.09 ± 2.61 | 82.14 ± 3.35 | 0.7949 ± 0.079 | 15.61 ± 2.05 |
| Salinas | 24 | [8,9,12,…,200] | 97.51 ± 0.22 | 96.75 ± 0.37 | 0.9665 ± 0.012 | 18.44 ± 3.32 |
| Salinas | 32 | [4,5,6,…,200] | 98.05 ± 0.16 | 98.00 ± 0.11 | 0.9811 ± 0.007 | 25.89 ± 2.98 |
| Salinas | 36 | [4,5,6,…,200] | 98.16 ± 0.29 | 95.58 ± 0.36 | 0.9503 ± 0.015 | 29.59 ± 3.75 |
| HanChuan | 8 | [0,3,10,…,254] | 65.30 ± 2.49 | 67.72 ± 3.60 | 0.6447 ± 0.071 | 64.35 ± 8.83 |
| HanChuan | 16 | [0,3,10,…,272] | 76.11 ± 3.55 | 79.55 ± 3.70 | 0.7605 ± 0.063 | 89.05 ± 7.99 |
| HanChuan | 24 | [1,3,10,…,272] | 96.89 ± 0.19 | 95.80 ± 0.23 | 0.9578 ± 0.015 | 96.16 ± 10.80 |
| HanChuan | 32 | [1,3,10,…,273] | 97.32 ± 0.25 | 98.11 ± 0.19 | 0.9790 ± 0.010 | 120.55 ± 9.55 |
| HanChuan | 36 | [1,2,3,…,273] | 96.09 ± 0.51 | 94.65 ± 1.01 | 0.9532 ± 0.026 | 164.89 ± 10.66 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, S.; Wang, M.; Cheng, C.; Gao, X.; Ye, Z.; Liu, W. Spectral-Spatial-Sensorial Attention Network with Controllable Factors for Hyperspectral Image Classification. Remote Sens. 2024, 16, 1253. https://doi.org/10.3390/rs16071253
