Single-Temporal Sentinel-2 for Analyzing Burned Area Detection Methods: A Study of 14 Cases in Republic of Korea Considering Land Cover

Abstract

1. Introduction

2. Study Area and Data

2.1. Study Area

2.2. Sentinel-2 Multispectral Instrument

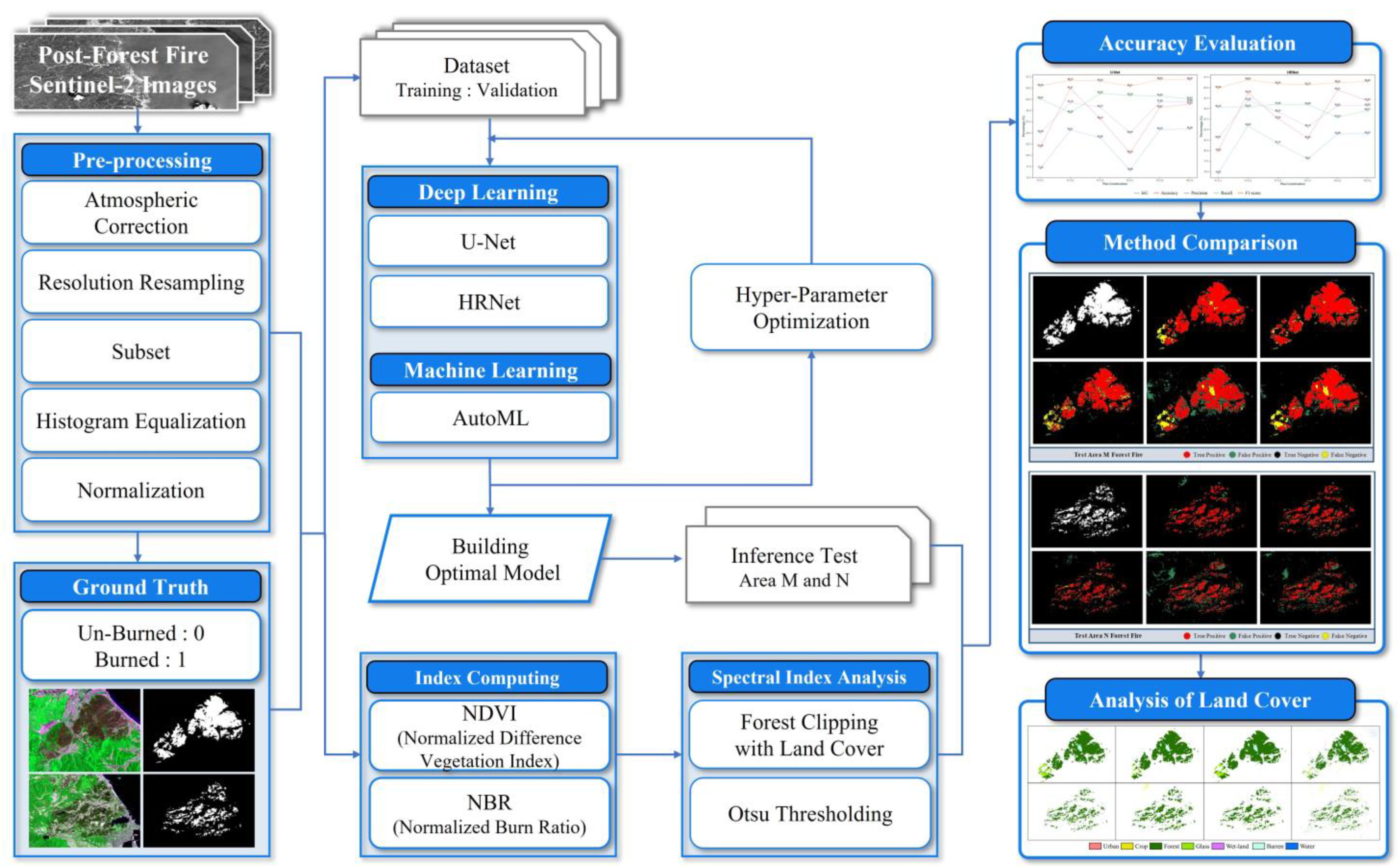

3. Methodology

- (1) Satellite image preprocessing: correct atmospheric effects in the Sentinel-2 images and enhance image contrast.

- (2) Generation of ground truth (GT) data: manually label forest fire-affected areas in the imagery for training and validation.

- (3) Creation of method-specific datasets: prepare a dataset for each family of detection methods, namely deep learning (DL), machine learning (ML), and spectral indices (SI), by extracting the relevant input features and pairing them with the GT data.

- (4) Environment configuration and hyperparameter optimization: set up the computational environment and tune each algorithm's parameters for the best performance.

- (5) Evaluation of detection results: compare each method's detection results with the GT data using accuracy metrics.

- (6) Analysis of forest fire damage by LC type: analyze the detected burned areas with respect to land cover (LC) types, such as forest and grass.

3.1. Data Preparation

3.1.1. Sentinel-2 Image Preprocessing

- Level-1C images containing top-of-atmosphere (TOA) reflectance were atmospherically corrected with Sen2Cor to produce Level-2A images containing bottom-of-atmosphere (BOA) surface reflectance [42].

- To match the 10 m spatial resolution of the visible and NIR bands (B2, B3, B4, and B8), the SWIR bands (B11 and B12) were up-sampled from 20 m to 10 m using nearest neighbor interpolation.

- Equal-sized image subsets were extracted from the area of intersection, using the geographic coordinates of the areas where the forest fires occurred.

- The images were enhanced with histogram equalization to increase contrast, making burned woodland easier to distinguish from intact forest. In addition, a linear stretch was applied to expand the histogram, clipping values below the 2nd and above the 98th percentile.

- Because the distribution of reflectance values differs among bands, min–max normalization was applied, rescaling each band to the range 0–255 using that band's minimum and maximum values.
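The up-sampling, percentile stretch, and min–max steps above can be sketched with NumPy as follows. This is a minimal illustration, not the authors' code: it assumes each band is already a 2-D array, that the 20 m SWIR grid aligns exactly with the 10 m grid (so nearest neighbor up-sampling reduces to repeating each pixel in a 2 × 2 block), and it omits the Sen2Cor correction, subsetting, and histogram equalization. The function names are hypothetical.

```python
import numpy as np

def upsample_nearest(band: np.ndarray, factor: int = 2) -> np.ndarray:
    """Nearest-neighbour up-sampling: each coarse pixel becomes a factor x factor block."""
    return np.repeat(np.repeat(band, factor, axis=0), factor, axis=1)

def percentile_stretch(band: np.ndarray, low: float = 2.0, high: float = 98.0) -> np.ndarray:
    """Linear stretch between two percentiles; values outside are clipped, result is in [0, 1]."""
    lo, hi = np.percentile(band, [low, high])
    if hi <= lo:  # (near-)constant band: nothing to stretch
        return np.zeros(band.shape, dtype=np.float64)
    return np.clip((band - lo) / (hi - lo), 0.0, 1.0)

def minmax_to_uint8(band: np.ndarray) -> np.ndarray:
    """Per-band min-max normalization to the 0-255 range, stored as 8-bit integers."""
    bmin, bmax = float(band.min()), float(band.max())
    if bmax == bmin:
        return np.zeros(band.shape, dtype=np.uint8)
    scaled = (band - bmin) / (bmax - bmin) * 255.0
    return np.round(scaled).astype(np.uint8)

# Example: a 2 x 2 SWIR1 patch at 20 m brought onto the 10 m grid (4 x 4) and scaled to 8 bits.
b11_20m = np.array([[0.12, 0.30], [0.25, 0.08]])
b11_10m = upsample_nearest(b11_20m)
b11_u8 = minmax_to_uint8(percentile_stretch(b11_10m))
```

Because the stretch runs before the min–max step, the 2nd and 98th percentiles end up mapped to 0 and 255, respectively.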

3.1.2. Generation of Ground Truth

3.2. Deep Learning Approaches

3.2.1. U-Net Model

3.2.2. HRNet Model

3.2.3. Deep Learning Environment

3.3. Machine Learning Approaches

3.3.1. AutoML Model

3.3.2. Machine Learning Environment

3.4. Spectral Indices-Based Approaches

3.4.1. Normalized Difference Vegetation Index (NDVI)
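For reference, the conventional NDVI definition is given below, with ρ denoting surface reflectance and the Sentinel-2 band numbers taken from the band table (B4 red, B8 NIR). That this study used exactly this band pair is an assumption here; the formula itself is standard.

$$
\mathrm{NDVI} = \frac{\rho_{\mathrm{NIR}} - \rho_{\mathrm{Red}}}{\rho_{\mathrm{NIR}} + \rho_{\mathrm{Red}}} = \frac{\rho_{B8} - \rho_{B4}}{\rho_{B8} + \rho_{B4}}, \qquad -1 \le \mathrm{NDVI} \le 1
$$

Healthy vegetation reflects strongly in the NIR and absorbs red light, so it has high NDVI; burning removes green foliage and lowers NDVI.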

3.4.2. Normalized Burn Ratio
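The conventional NBR replaces the red band with a shortwave infrared band. The form below assumes B8 as NIR and B12 (SWIR2) from the band table; some Sentinel-2 studies use the narrow NIR band B8A instead, which is not among the bands listed here.

$$
\mathrm{NBR} = \frac{\rho_{\mathrm{NIR}} - \rho_{\mathrm{SWIR2}}}{\rho_{\mathrm{NIR}} + \rho_{\mathrm{SWIR2}}} = \frac{\rho_{B8} - \rho_{B12}}{\rho_{B8} + \rho_{B12}}
$$

Fire lowers NIR reflectance and raises SWIR reflectance, so burned pixels take markedly lower NBR values than intact forest.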

3.4.3. Spectral Indices Analysis
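Turning a continuous NDVI or NBR map into a burned/unburned mask requires a threshold. This section does not state the rule used in the study; one common data-driven choice is Otsu's method, which picks the histogram cut that maximizes the between-class variance. The NumPy sketch below implements it under that assumption; `otsu_threshold` is a hypothetical helper, and scikit-image provides an equivalent `skimage.filters.threshold_otsu`.

```python
import numpy as np

def otsu_threshold(values: np.ndarray, bins: int = 256) -> float:
    """Otsu's method: return the cut that maximizes between-class variance."""
    v = values[np.isfinite(values)]            # ignore NaN/inf (e.g., masked pixels)
    hist, edges = np.histogram(v, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2.0
    p = hist / hist.sum()                      # normalized histogram
    # Candidate cut after bin k, for k = 0 .. bins-2
    w0 = np.cumsum(p)[:-1]                     # weight of the lower (low-index) class
    m0 = np.cumsum(p * centers)[:-1]           # first moment of the lower class
    mu_t = np.sum(p * centers)                 # global mean
    w1 = 1.0 - w0
    with np.errstate(divide="ignore", invalid="ignore"):
        # sigma_B^2(k) = (mu_t * w0 - m0)^2 / (w0 * w1)
        between = (mu_t * w0 - m0) ** 2 / (w0 * w1)
    between = np.where((w0 > 0) & (w1 > 0), between, 0.0)
    k = int(np.argmax(between))
    return float(edges[k + 1])                 # upper edge of the last lower-class bin
```

Since burning lowers both indices, a mask would then be obtained as `burned = nbr < otsu_threshold(nbr)`, labeling pixels below the threshold as burned.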

3.5. Accuracy Evaluation
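The results tables report IoU, accuracy, precision, recall, and F1-score in percent. Treating burned pixels as the positive class, the standard pixel-wise definitions can be computed from a predicted mask and the GT mask as sketched below. Whether the study pools pixels over a whole test area or averages per image tile is not stated in this section, and the two can yield slightly different numbers; `binary_metrics` is a hypothetical helper.

```python
import numpy as np

def binary_metrics(pred: np.ndarray, gt: np.ndarray) -> dict:
    """Pixel-wise segmentation metrics (in %), with burned = True as the positive class."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    tp = int(np.sum(pred & gt))      # burned, detected as burned
    fp = int(np.sum(pred & ~gt))     # unburned, detected as burned
    fn = int(np.sum(~pred & gt))     # burned, missed
    tn = int(np.sum(~pred & ~gt))    # unburned, correctly rejected

    def pct(num: int, den: int) -> float:
        return 100.0 * num / den if den > 0 else 0.0

    return {
        "IoU": pct(tp, tp + fp + fn),
        "Accuracy": pct(tp + tn, tp + tn + fp + fn),
        "Precision": pct(tp, tp + fp),
        "Recall": pct(tp, tp + fn),
        # F1 = 2TP / (2TP + FP + FN), algebraically equal to 2PR / (P + R)
        "F1": pct(2 * tp, 2 * tp + fp + fn),
    }
```

Because F1 is the harmonic mean of precision and recall, it always lies between the two, which makes it a quick consistency check on any reported row.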

4. Results

4.1. Input Image Combination for Deep Learning Models

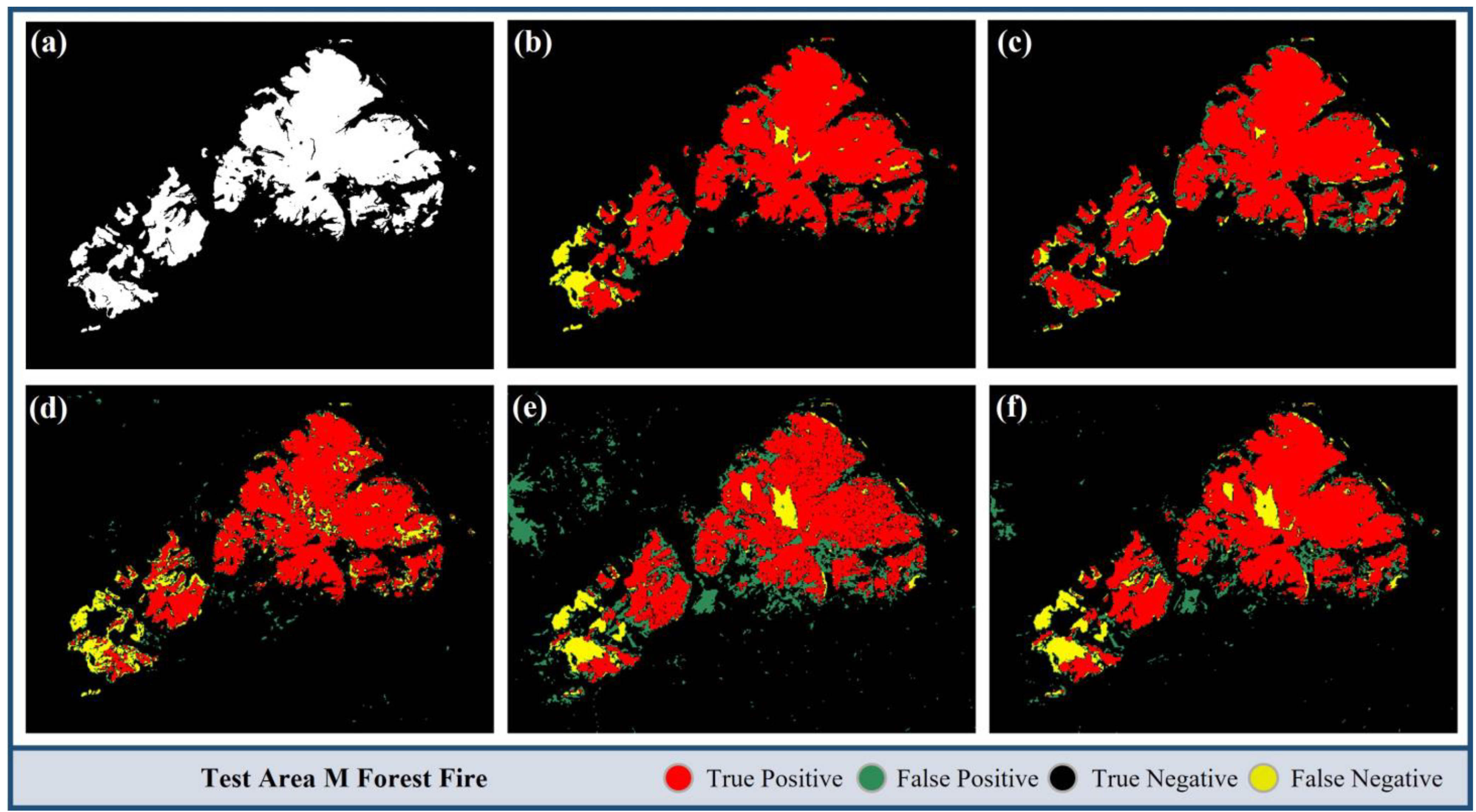

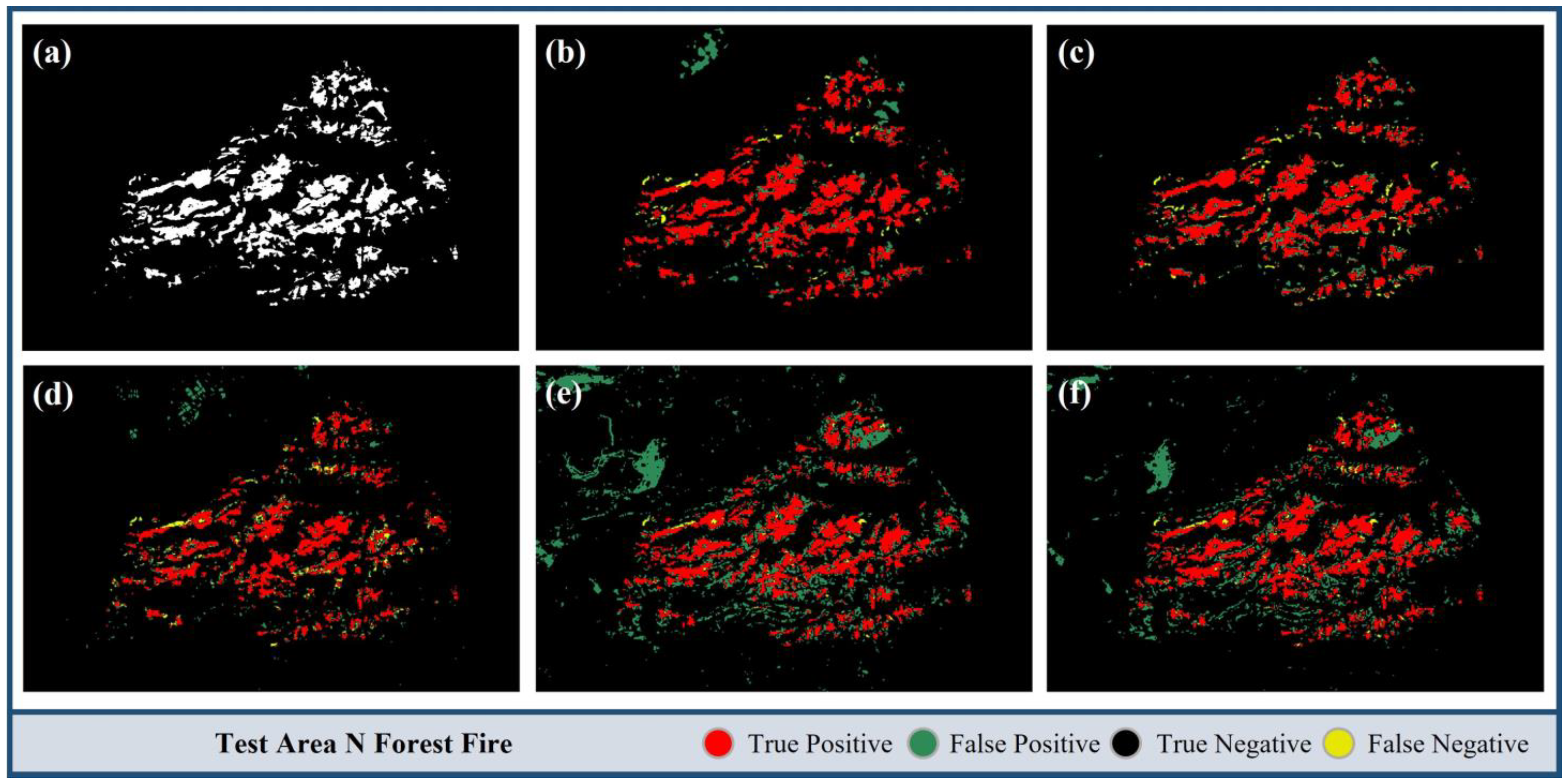

4.2. Comparison of the Model Results in Test Study Areas

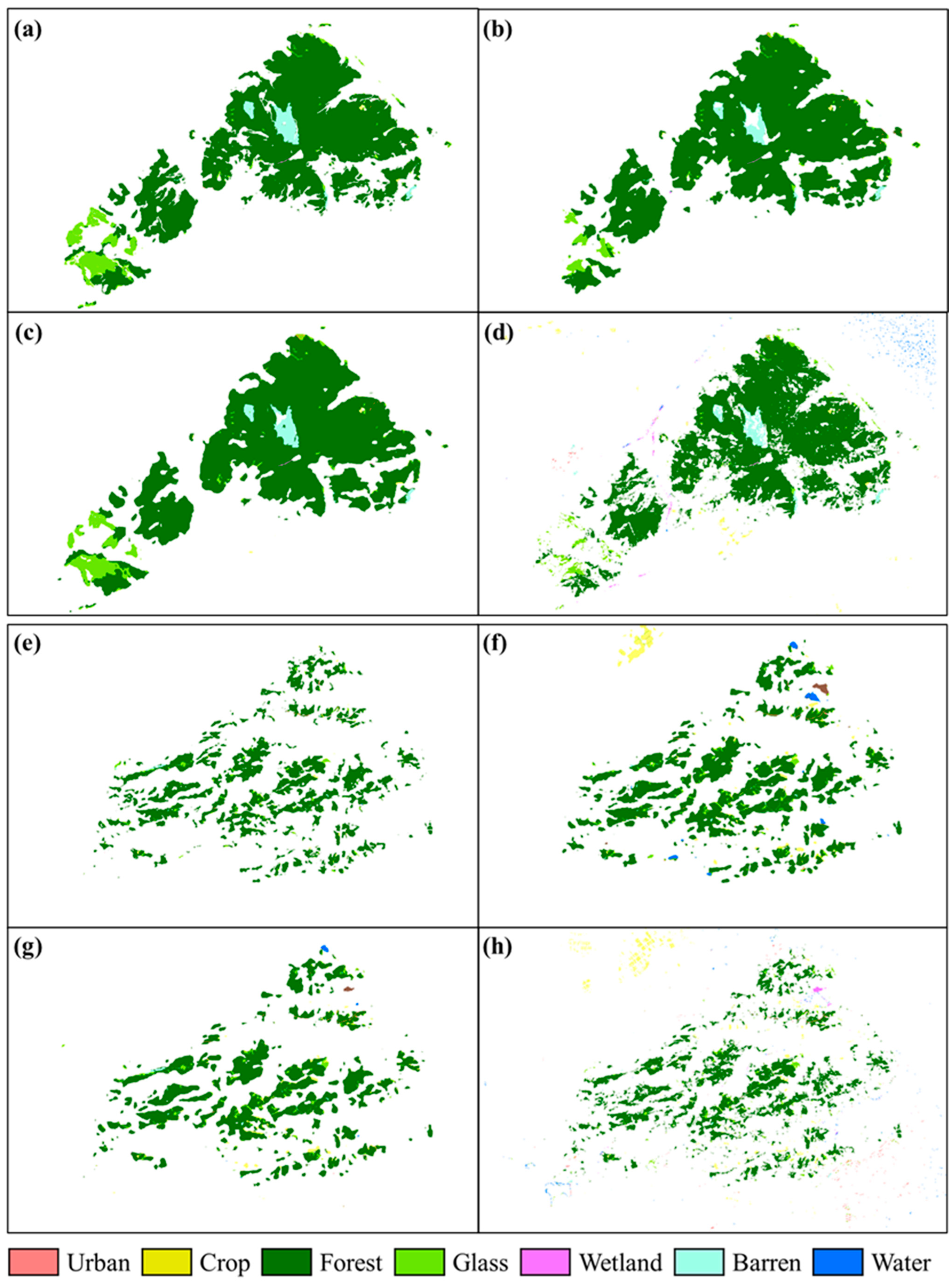

4.3. Analysis Considering Land Cover

5. Discussion

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Correction Statement

References

1. Földi, L.; Kuti, R. Characteristics of forest fires and their impact on the environment. Acad. Appl. Res. Mil. Public Manag. Sci. 2016, 15, 5–17.

2. Korea Forest Service. Forest Fire Statistical Yearbook; Korea Forest Service: Daejeon, Republic of Korea, 2022.

3. Jiao, Q.; Fan, M.; Tao, J.; Wang, W.; Liu, D.; Wang, P. Forest fire patterns and lightning-caused forest fire detection in Heilongjiang Province of China using satellite data. Fire 2023, 6, 166.

4. Jung, H.G.; An, H.J.; Lee, S.M. Agricultural Policy Focus: Improvement Tasks for Effective Forest Fire Management; Korea Rural Economic Institute: Naju, Republic of Korea, 2017; pp. 1–20. Available online: https://www.dbpia.co.kr/pdf/pdfView.do?nodeId=NODE07220754 (accessed on 1 January 2024).

5. Filipponi, F. Exploitation of sentinel-2 time series to map burned areas at the national level: A case study on the 2017 Italy wildfires. Remote Sens. 2019, 11, 622.

6. Sertel, E.; Alganci, U. Comparison of pixel and object-based classification for burned area mapping using SPOT-6 images. Geomat. Nat. Hazards Risk 2016, 7, 1198–1206.

7. Hawbaker, T.J.; Vanderhoof, M.K.; Beal, Y.-J.; Takacs, J.D.; Schmidt, G.L.; Falgout, J.T.; Williams, B.; Fairaux, N.M.; Caldwell, M.K.; Picotte, J.J.; et al. Mapping burned areas using dense time-series of Landsat data. Remote Sens. Environ. 2017, 198, 504–522.

8. Lasaponara, R.; Tucci, B.; Ghermandi, L. On the use of satellite Sentinel 2 data for automatic mapping of burnt areas and burn severity. Sustainability 2018, 10, 3889.

9. Liu, J.; Heiskanen, J.; Maeda, E.E.; Pellikka, P.K. Burned area detection based on Landsat time series in savannas of southern Burkina Faso. Int. J. Appl. Earth Obs. Geoinf. 2018, 64, 210–220.

10. Belenguer-Plomer, M.A.; Tanase, M.A.; Fernandez-Carrillo, A.; Chuvieco, E. Burned area detection and mapping using Sentinel-1 backscatter coefficient and thermal anomalies. Remote Sens. Environ. 2019, 233, 111345.

11. Ghali, R.; Akhloufi, M.A. Deep learning approaches for wildland fires using satellite remote sensing data: Detection, mapping, and prediction. Fire 2023, 6, 192.

12. Chu, T.; Guo, X. Remote sensing techniques in monitoring post-fire effects and patterns of forest recovery in boreal forest regions: A review. Remote Sens. 2013, 6, 470–520.

13. Gaveau, D.L.; Descals, A.; Salim, M.A.; Sheil, D.; Sloan, S. Refined burned-area mapping protocol using Sentinel-2 data increases estimate of 2019 Indonesian burning. Earth Syst. Sci. Data 2021, 13, 5353–5368.

14. Abid, N.; Malik, M.I.; Shahzad, M.; Shafait, F.; Ali, H.; Ghaffar, M.M.; Weis, C.; Wehn, N.; Liwicki, M. Burnt Forest Estimation from Sentinel-2 Imagery of Australia using Unsupervised Deep Learning. In Proceedings of the Digital Image Computing: Techniques and Applications (DICTA), Gold Coast, Australia, 29 November–1 December 2021.

15. Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

16. Chuvieco, E.; Martin, M.P.; Palacios, A. Assessment of different spectral indices in the red-near-infrared spectral domain for burned land discrimination. Int. J. Remote Sens. 2002, 23, 5103–5110.

17. Smith, A.M.; Wooster, M.J.; Drake, N.A.; Dipotso, F.M.; Falkowski, M.J.; Hudak, A.T. Testing the potential of multi-spectral remote sensing for retrospectively estimating fire severity in African Savannahs. Remote Sens. Environ. 2005, 97, 92–115.

18. García, M.L.; Caselles, V. Mapping burns and natural reforestation using Thematic Mapper data. Geocarto Int. 1991, 6, 31–37.

19. Veraverbeke, S.; Lhermitte, S.; Verstraeten, W.W.; Goossens, R. Evaluation of pre/post-fire differenced spectral indices for assessing burn severity in a Mediterranean environment with Landsat Thematic Mapper. Int. J. Remote Sens. 2011, 32, 3521–3537.

20. Veraverbeke, S.; Hook, S.; Hulley, G. An alternative spectral index for rapid fire severity assessments. Remote Sens. Environ. 2012, 123, 72–80.

21. Navarro, G.; Caballero, I.; Silva, G.; Parra, P.C.; Vázquez, Á.; Caldeira, R. Evaluation of forest fire on Madeira Island using Sentinel-2A MSI imagery. Int. J. Appl. Earth Obs. Geoinf. 2017, 58, 97–106.

22. Ponomarev, E.; Zabrodin, A.; Ponomareva, T. Classification of fire damage to boreal forests of Siberia in 2021 based on the dNBR index. Fire 2022, 5, 19.

23. Escuin, S.; Navarro, R.; Fernández, P. Fire severity assessment by using NBR (Normalized Burn Ratio) and NDVI (Normalized Difference Vegetation Index) derived from LANDSAT TM/ETM images. Int. J. Remote Sens. 2008, 29, 1053–1073.

24. Smiraglia, D.; Filipponi, F.; Mandrone, S.; Tornato, A.; Taramelli, A. Agreement index for burned area mapping: Integration of multiple spectral indices using Sentinel-2 satellite images. Remote Sens. 2020, 12, 1862.

25. Mpakairi, K.S.; Ndaimani, H.; Kavhu, B. Exploring the utility of Sentinel-2 MSI derived spectral indices in mapping burned areas in different land-cover types. Sci. Afr. 2020, 10, e00565.

26. Pinto, M.M.; Libonati, R.; Trigo, R.M.; Trigo, I.F.; DaCamara, C.C. A deep learning approach for mapping and dating burned areas using temporal sequences of satellite images. ISPRS J. Photogramm. Remote Sens. 2020, 160, 260–274.

27. Khryashchev, V.; Larionov, R. Wildfire segmentation on satellite images using deep learning. In Proceedings of the Moscow Workshop on Electronic and Networking Technologies (MWENT), Moscow, Russia, 11–13 March 2020.

28. Huot, F.; Hu, R.L.; Goyal, N.; Sankar, T.; Ihme, M.; Chen, Y.F. Next day wildfire spread: A machine learning dataset to predict wildfire spreading from remote-sensing data. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–13.

29. Seydi, S.T.; Sadegh, M. Improved burned area mapping using monotemporal Landsat-9 imagery and convolutional shift-transformer. Measurement 2023, 216, 112961.

30. Hu, X.; Ban, Y.; Nascetti, A. Uni-temporal multispectral imagery for burned area mapping with deep learning. Remote Sens. 2021, 13, 1509.

31. Cho, A.Y.; Park, S.E.; Kim, D.J.; Kim, J.; Li, C.; Song, J. Burned area mapping using Unitemporal Planetscope imagery with a deep learning based approach. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 16, 242–253.

32. Gibson, R.; Danaher, T.; Hehir, W.; Collins, L. A remote sensing approach to mapping fire severity in south-eastern Australia using sentinel 2 and random forest. Remote Sens. Environ. 2020, 240, 111702.

33. Bar, S.; Parida, B.R.; Pandey, A.C. Landsat-8 and Sentinel-2 based Forest fire burn area mapping using machine learning algorithms on GEE cloud platform over Uttarakhand, Western Himalaya. Remote Sens. Appl. Soc. Environ. 2020, 18, 100324.

34. Prabowo, Y.; Sakti, A.D.; Pradono, K.A.; Amriyah, Q.; Rasyidy, F.H.; Bengkulah, I.; Ulfa, K.; Candra, D.S.; Imdad, M.T.; Ali, S. Deep learning dataset for estimating burned areas: Case study, Indonesia. Data 2022, 7, 78.

35. Alkan, D.; Karasaka, L. Segmentation of LANDSAT-8 images for burned area detection with deep learning. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2023, 48, 455–461.

36. Knopp, L.; Wieland, M.; Rättich, M.; Martinis, S. A deep learning approach for burned area segmentation with Sentinel-2 data. Remote Sens. 2020, 12, 2422.

37. Lee, C.; Park, S.; Kim, T.; Liu, S.; Md Reba, M.N.; Oh, J.; Han, Y. Machine learning-based forest burned area detection with various input variables: A case study of South Korea. Appl. Sci. 2022, 12, 10077.

38. Tonbul, H.; Yilmaz, E.O.; Kavzoglu, T. Comparative analysis of deep learning and machine learning models for burned area estimation using Sentinel-2 image: A case study in Muğla-Bodrum, Turkey. In Proceedings of the International Conference on Recent Advances in Air and Space Technologies (RAST), Istanbul, Turkey, 7–9 June 2023.

39. Korea Meteorological Institute. Meteorological Technology & Policy; Korea Meteorological Institute: Seoul, Republic of Korea, 2019; Volume 12. Available online: https://www.kma.go.kr/down/t_policy/t_policy_20200317.pdf (accessed on 1 January 2024).

40. Bae, M.; Chae, H. Regional characteristics of forest fire occurrences in Korea from 1990 to 2018. J. Korean Soc. Hazard Mitig. 2019, 19, 305–313.

41. Drusch, M.; Del Bello, U.; Carlier, S.; Colin, O.; Fernandez, V.; Gascon, F.; Hoersch, B.; Isola, C.; Laberinti, P.; Martimort, P.; et al. Sentinel-2: ESA’s optical high-resolution mission for GMES operational services. Remote Sens. Environ. 2012, 120, 25–36.

42. Louis, J.; Debaecker, V.; Pflug, B.; Main-Knorn, M.; Bieniarz, J.; Mueller-Wilm, U.; Cadau, E.; Gascon, F. Sentinel-2 Sen2Cor: L2A processor for users. In Proceedings of the Living Planet Symposium, Prague, Czech Republic, 9–13 May 2016; Available online: http://esamultimedia.esa.int/multimedia/publications/SP-740/SP-740_toc.pdf (accessed on 6 June 2023).

43. Arzt, M.; Deschamps, J.; Schmied, C.; Pietzsch, T.; Schmidt, D.; Tomancak, P.; Haase, R.; Jug, F. LABKIT: Labeling and segmentation toolkit for big image data. Front. Comput. Sci. 2022, 4, 10.

44. Rashkovetsky, D.; Mauracher, F.; Langer, M.; Schmitt, M. Wildfire detection from multisensor satellite imagery using deep semantic segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 7001–7016.

45. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Munich, Germany, 5–9 October 2015; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241.

46. Sun, K.; Zhao, Y.; Jiang, B.; Cheng, T.; Xiao, B.; Liu, D.; Mu, Y.; Wang, X.; Liu, W.; Wang, J. High-resolution representations for labeling pixels and regions. arXiv 2019, arXiv:1904.04514.

47. Wang, J.; Sun, K.; Cheng, T.; Jiang, B.; Deng, C.; Zhao, Y.; Liu, D.; Mu, Y.; Tan, M.; Wang, X.; et al. Deep high-resolution representation learning for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 3349–3364.

48. Yuan, Y.; Chen, X.; Wang, J. Object-contextual representations for semantic segmentation. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M., Eds.; Springer International Publishing: Cham, Switzerland, 2020; Volume 12351.

49. He, X.; Zhao, K.; Chu, X. AutoML: A survey of the state-of-the-art. Knowl.-Based Syst. 2021, 212, 106622.

50. Salehin, I.; Islam, M.S.; Saha, P.; Noman, S.M.; Tuni, A.; Hasan, M.M.; Baten, A. AutoML: A systematic review on automated machine learning with neural architecture search. J. Inf. Intell. 2023, 2, 52–81.

51. Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

52. Amos, C.; Petropoulos, G.P.; Ferentinos, K.P. Determining the use of Sentinel-2A MSI for wildfire burning & severity detection. Int. J. Remote Sens. 2019, 40, 905–930.

53. GDAL Documentation. Available online: https://gdal.org/index.html (accessed on 22 April 2022).

54. Jo, W.; Lim, Y.; Park, K.H. Deep learning based land cover classification using convolutional neural network: A case study of Korea. J. Korean Geogr. Soc. 2019, 54, 1–16.

55. Son, S.; Lee, S.H.; Bae, J.; Ryu, M.; Lee, D.; Park, S.R.; Seo, D.; Kim, J. Land-cover-change detection with aerial orthoimagery using segnet-based semantic segmentation in Namyangju city, South Korea. Sustainability 2022, 14, 12321.

56. Martins, V.S.; Roy, D.P.; Huang, H.; Boschetti, L.; Zhang, H.K.; Yan, L. Deep learning high resolution burned area mapping by transfer learning from Landsat-8 to PlanetScope. Remote Sens. Environ. 2022, 280, 113203.

57. Hu, X.; Zhang, P.; Ban, Y. Large-scale burn severity mapping in multispectral imagery using deep semantic segmentation models. ISPRS J. Photogramm. Remote Sens. 2023, 196, 228–240.

| Year | Area | Location | Event Date | End Date | Burned Area (ha) | Image Date | Image Size (Pixels) | Dataset |
|---|---|---|---|---|---|---|---|---|
| 2023 | A | Nangok-dong, Gangneung-si, Gangwon-do | 11 April | 11 April | 379 | 12 April, 19 April, and 27 April | 403 × 327 | Training/Validation |
| | B | Daedong-myeon, Hampyeong-gun, Jeollanam-do | 3 April | 4 April | 475 | 22 April, 27 April, and 2 May | 357 × 426 | |
| | C | Boksang-myeon, Geumsan-gun, Chungcheongnam-do | 2 April | 4 April | 889.36 | 9 April, 12 April, and 22 April | 548 × 392 | |
| | D | Seobu-myeon, Hongseong-gun, Chungcheongnam-do | 2 April | 4 April | 1454 | 12 April, 22 April, and 27 April | 788 × 695 | |
| | E | Pyeongeun-myeon, Yeongju-si, Gyeongsangbuk-do | 3 April | 3 April | 210 | 7 April, 17 April, and 19 April | 322 × 272 | |
| 2022 | F | Bubuk-myeon, Miryang-si, Gyeongsangnam-do | 31 May | 3 June | 763 | 3 June and 18 June | 331 × 694 | |
| | G | Uiheung-myeon, Gunwi-gun, Daegu Metropolitan City | 10 April | 12 April | 347 | 19 April, 24 April, and 4 May | 605 × 475 | |
| | H | Yulgok-myeon, Hapcheon-gun, Gyeongsangnam-do | 28 February | 1 March | 675 | 3 March, 15 March, and 4 April | 602 × 430 | |
| | I | Buk-myeon, Uljin-gun, Gyeongsangbuk-do | 4 March | 13 March | 20,923 | 15 March, 4 April, and 9 April | 1814 × 1968 | |
| | J | Seongsan-myeon, Gangneung-si, Gangwon-do | 4 March | 5 March | 4000 | 4 April, 9 April, and 24 April | 1214 × 909 | |
| | K | Yanggu-eup, Yanggu-gun, Gangwon-do | 10 April | 12 April | 759 | 17 April and 17 May | 485 × 573 | |
| 2020 | L | Pungcheon-myeon, Andong-si, Gyeongsangbuk-do | 24 April | 26 April | 1944 | 29 April, 12 May, 29 May, 1 June, 6 June, and 8 June | 1204 × 798 | |
| 2019 | M | Okgye-myeon, Gangneung-si, Gangwon-do | 4 April | 5 April | 1260 | 20 April | 771 × 573 | Test |
| | N | Toseong-myeon, Goseong-gun, Gangwon-do | 4 April | 5 April | 1227 | 20 April | 1056 × 693 | |
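At the 10 m resolution of the up-sampled images, one pixel covers 10 m × 10 m = 100 m², i.e., 0.01 ha, so each hectare corresponds to 100 pixels. The hypothetical helpers below use this to relate the Burned Area and Image Size columns, assuming the listed burned area lies entirely inside the image subset; official burned-area statistics need not match a satellite-derived extent exactly.

```python
PIXEL_SIZE_M = 10                               # up-sampled Sentinel-2 grid spacing
PIXELS_PER_HA = 10_000 // (PIXEL_SIZE_M ** 2)   # 1 ha = 10,000 m^2 -> 100 pixels

def burned_pixels(burned_area_ha: float) -> float:
    """Number of 10 m pixels equivalent to a burned area given in hectares."""
    return burned_area_ha * PIXELS_PER_HA

def burned_fraction(burned_area_ha: float, width: int, height: int) -> float:
    """Share of an image subset (width x height pixels) covered by the burned area."""
    return burned_pixels(burned_area_ha) / (width * height)
```

For Area D, 1454 ha corresponds to 145,400 pixels out of 788 × 695 = 547,660, or about 27% of the subset, which gives a rough sense of the burned/unburned class balance in each scene.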

| Band | Central Wavelength | Resolution |
|---|---|---|
| Band2–Blue | 0.490 μm | 10 m |
| Band3–Green | 0.560 μm | 10 m |
| Band4–Red | 0.665 μm | 10 m |
| Band8–NIR | 0.842 μm | 10 m |
| Band11–SWIR1 | 1.610 μm | 20 m |
| Band12–SWIR2 | 2.190 μm | 20 m |

| Plan | Combination | Input Channel Composition |
|---|---|---|
| P1 | C1 | B4, B3, and B2 |
| | C2 | B4, B8, and B3 |
| | C3 | B2, B3, B4, B8, B11, and B12 |
| P2 (NDVI and NBR) | C1 | B4, B3, B2 + NDVI and NBR |
| | C2 | B4, B8, B3 + NDVI and NBR |
| | C3 | B2, B3, B4, B8, B11, B12 + NDVI and NBR |
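The channel combinations in the table can be assembled into model inputs as sketched below. The sketch assumes the bands are co-registered 10 m float reflectance arrays held in a dictionary keyed by band name, computes NDVI as (B8 − B4)/(B8 + B4) and NBR as (B8 − B12)/(B8 + B12), and appends them as two extra channels for plan P2. The preprocessing stage at which the study computed the indices is not specified here, and `build_input` and `COMBINATIONS` are hypothetical names.

```python
import numpy as np

# Channel order per combination, as listed in the table
COMBINATIONS = {
    "C1": ["B4", "B3", "B2"],
    "C2": ["B4", "B8", "B3"],
    "C3": ["B2", "B3", "B4", "B8", "B11", "B12"],
}

def normalized_difference(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """(a - b) / (a + b), returning 0 where the denominator is 0."""
    num = a - b
    den = a + b
    out = np.zeros_like(num, dtype=np.float64)
    np.divide(num, den, out=out, where=den != 0)
    return out

def build_input(bands: dict, combination: str, add_indices: bool = False) -> np.ndarray:
    """Stack the selected bands (plan P1) and optionally NDVI and NBR (plan P2) as (H, W, C)."""
    channels = [bands[name] for name in COMBINATIONS[combination]]
    if add_indices:
        channels.append(normalized_difference(bands["B8"], bands["B4"]))   # NDVI
        channels.append(normalized_difference(bands["B8"], bands["B12"]))  # NBR
    return np.stack(channels, axis=-1)
```

With this layout, P2-C3 yields eight channels (six bands plus two indices), while P1-C1 and P1-C2 yield three each.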

| Setting | U-Net | HRNet |
|---|---|---|
| Backbone Network | S5-D16 | HRNetV2-W48 |
| Input Image Size | 256 × 256 pixels | 256 × 256 pixels |
| Loss Function | Binary Cross Entropy | Binary Cross Entropy |
| Optimizer | AdamW | AdamW |
| Batch Size | 8 | 4 |
| Learning Rate | 5 × 10⁻⁶ | 5 × 10⁻³ |
| Output | Probability Map | Probability Map |
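Because both models take 256 × 256 pixel inputs while the study-area subsets are larger and not multiples of 256, each scene has to be split into patches and the per-patch probability maps stitched back together. The sketch below assumes non-overlapping tiles with zero padding on the bottom and right edges; the study's actual stride, padding, and probability cut-off are not given in this section, and `tile_image` and `untile` are hypothetical helpers.

```python
import numpy as np

def tile_image(img: np.ndarray, tile: int = 256):
    """Split an (H, W[, C]) array into non-overlapping tile x tile patches, zero-padding bottom/right."""
    h, w = img.shape[:2]
    pad = [(0, (-h) % tile), (0, (-w) % tile)] + [(0, 0)] * (img.ndim - 2)
    padded = np.pad(img, pad, mode="constant")
    tiles, origins = [], []
    for y in range(0, padded.shape[0], tile):
        for x in range(0, padded.shape[1], tile):
            tiles.append(padded[y:y + tile, x:x + tile])
            origins.append((y, x))
    return np.stack(tiles), origins, (h, w)

def untile(prob_tiles: np.ndarray, origins, shape, tile: int = 256) -> np.ndarray:
    """Reassemble per-tile probability maps and crop away the padding."""
    h, w = shape
    canvas = np.zeros((h + (-h) % tile, w + (-w) % tile), dtype=np.float64)
    for p, (y, x) in zip(prob_tiles, origins):
        canvas[y:y + tile, x:x + tile] = p
    return canvas[:h, :w]
```

For example, a 403 × 327 pixel subset is padded to 512 × 512 and yields four tiles; a binary burned-area mask could then be taken as `untile(...) >= 0.5`, where 0.5 is likewise an assumed cut-off.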

| Model | Plan | Combination | IoU (%) | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) | Inference Time (s) |
|---|---|---|---|---|---|---|---|---|
| U-Net | P1 | C1 | 77.04 | 95.48 | 92.63 | 81.90 | 85.08 | 9.31 |
| | | C2 | 85.61 | 96.75 | 89.50 | 94.91 | 91.78 | 9.42 |
| | | C3 | 83.96 | 96.58 | 93.82 | 88.18 | 90.73 | 10.02 |
| | P2 | C1 | 76.66 | 95.41 | 93.38 | 80.53 | 84.94 | 9.39 |
| | | C2 | 85.76 | 96.95 | 93.25 | 90.67 | 91.90 | 9.30 |
| | | C3 | 85.88 | 96.92 | 92.55 | 91.45 | 91.98 | 9.73 |
| HRNet | P1 | C1 | 74.63 | 94.89 | 90.35 | 79.93 | 83.01 | 6.50 |
| | | C2 | 85.95 | 96.92 | 90.42 | 93.78 | 91.99 | 5.40 |
| | | C3 | 81.74 | 95.93 | 91.06 | 87.64 | 89.24 | 6.11 |
| | P2 | C1 | 78.01 | 95.70 | 90.98 | 82.94 | 85.73 | 5.96 |
| | | C2 | 83.96 | 96.22 | 87.93 | 94.51 | 90.65 | 6.04 |
| | | C3 | 84.05 | 96.46 | 89.57 | 91.93 | 90.69 | 6.11 |

| Test Area | Metric (%) | U-Net | HRNet | AutoML | NDVI | NBR |
|---|---|---|---|---|---|---|
| M | IoU | 89.11 | 89.40 | 75.58 | 61.50 | 72.98 |
| | Accuracy | 96.58 | 96.62 | 95.46 | 90.65 | 94.44 |
| | Precision | 93.94 | 93.37 | 92.80 | 85.31 | 83.04 |
| | Recall | 94.26 | 95.25 | 80.29 | 85.31 | 85.76 |
| | F1-score | 94.10 | 94.27 | 86.09 | 76.16 | 84.38 |
| N | IoU | 82.10 | 82.49 | 63.24 | 42.03 | 49.76 |
| | Accuracy | 96.91 | 97.21 | 96.97 | 91.18 | 93.80 |
| | Precision | 89.46 | 87.46 | 77.48 | 43.27 | 52.72 |
| | Recall | 85.05 | 92.30 | 76.20 | 93.62 | 89.86 |
| | F1-score | 95.55 | 89.70 | 76.20 | 59.18 | 66.45 |

| LC Type | Area M: GT (%) | Area M: U-Net (%) | Area M: HRNet (%) | Area M: AutoML (%) | Area N: GT (%) | Area N: U-Net (%) | Area N: HRNet (%) | Area N: AutoML (%) |
|---|---|---|---|---|---|---|---|---|
| Urban | 0.05 | 0.10 | 0.07 | 0.15 | 0.22 | 0.46 | 0.42 | 1.43 |
| Crop | 0.11 | 0.16 | 0.16 | 1.14 | 0.72 | 5.81 | 2.75 | 8.12 |
| Forest | 89.12 | 93.93 | 90.40 | 91.66 | 94.12 | 85.77 | 88.29 | 83.35 |
| Grass | 7.32 | 3.40 | 6.48 | 3.11 | 3.88 | 5.12 | 5.10 | 4.96 |
| Wetland | 0.03 | 0.04 | 0.03 | 0.39 | 0.01 | 0.79 | 0.33 | 0.47 |
| Barren | 3.37 | 2.38 | 2.87 | 2.87 | 1.04 | 0.76 | 2.75 | 0.90 |
| Water | 0.00 | 0.00 | 0.00 | 0.70 | 0.00 | 1.29 | 0.34 | 0.78 |
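Each column of the land cover table sums to about 100%, which is consistent with reporting the share of burned pixels (GT or detected) that fall in each LC class. A minimal sketch of that computation, assuming a land cover map co-registered with the 10 m burned-area mask and using hypothetical integer class codes (the coding scheme of the actual land cover product is not given in this section):

```python
import numpy as np

# Hypothetical codes for the seven LC classes in the table
LC_CLASSES = {1: "Urban", 2: "Crop", 3: "Forest", 4: "Grass", 5: "Wetland", 6: "Barren", 7: "Water"}

def lc_composition(burned_mask: np.ndarray, lc_map: np.ndarray) -> dict:
    """Percentage of burned pixels falling in each LC class."""
    burned = burned_mask.astype(bool)
    total = int(burned.sum())
    if total == 0:
        return {name: 0.0 for name in LC_CLASSES.values()}
    return {
        name: 100.0 * int(np.sum(burned & (lc_map == code))) / total
        for code, name in LC_CLASSES.items()
    }
```

Pixels whose code is not in `LC_CLASSES` are not counted, so a column would sum to less than 100% if the land cover map contained other classes or no-data values.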

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lee, D.; Son, S.; Bae, J.; Park, S.; Seo, J.; Seo, D.; Lee, Y.; Kim, J. Single-Temporal Sentinel-2 for Analyzing Burned Area Detection Methods: A Study of 14 Cases in Republic of Korea Considering Land Cover. Remote Sens. 2024, 16, 884. https://doi.org/10.3390/rs16050884

Lee D, Son S, Bae J, Park S, Seo J, Seo D, Lee Y, Kim J. Single-Temporal Sentinel-2 for Analyzing Burned Area Detection Methods: A Study of 14 Cases in Republic of Korea Considering Land Cover. Remote Sensing. 2024; 16(5):884. https://doi.org/10.3390/rs16050884

Chicago/Turabian Style: Lee, Doi, Sanghun Son, Jaegu Bae, Soryeon Park, Jeongmin Seo, Dongju Seo, Yangwon Lee, and Jinsoo Kim. 2024. "Single-Temporal Sentinel-2 for Analyzing Burned Area Detection Methods: A Study of 14 Cases in Republic of Korea Considering Land Cover" Remote Sensing 16, no. 5: 884. https://doi.org/10.3390/rs16050884

APA Style: Lee, D., Son, S., Bae, J., Park, S., Seo, J., Seo, D., Lee, Y., & Kim, J. (2024). Single-Temporal Sentinel-2 for Analyzing Burned Area Detection Methods: A Study of 14 Cases in Republic of Korea Considering Land Cover. Remote Sensing, 16(5), 884. https://doi.org/10.3390/rs16050884