Pretrained Deep Learning Networks and Multispectral Imagery Enhance Maize LCC, FVC, and Maturity Estimation

Abstract

1. Introduction

2. Datasets

2.1. Study Area

2.2. Field Experiments

2.2.1. LCC and FVC Acquisition

2.2.2. UAV Imagery

3. Methods

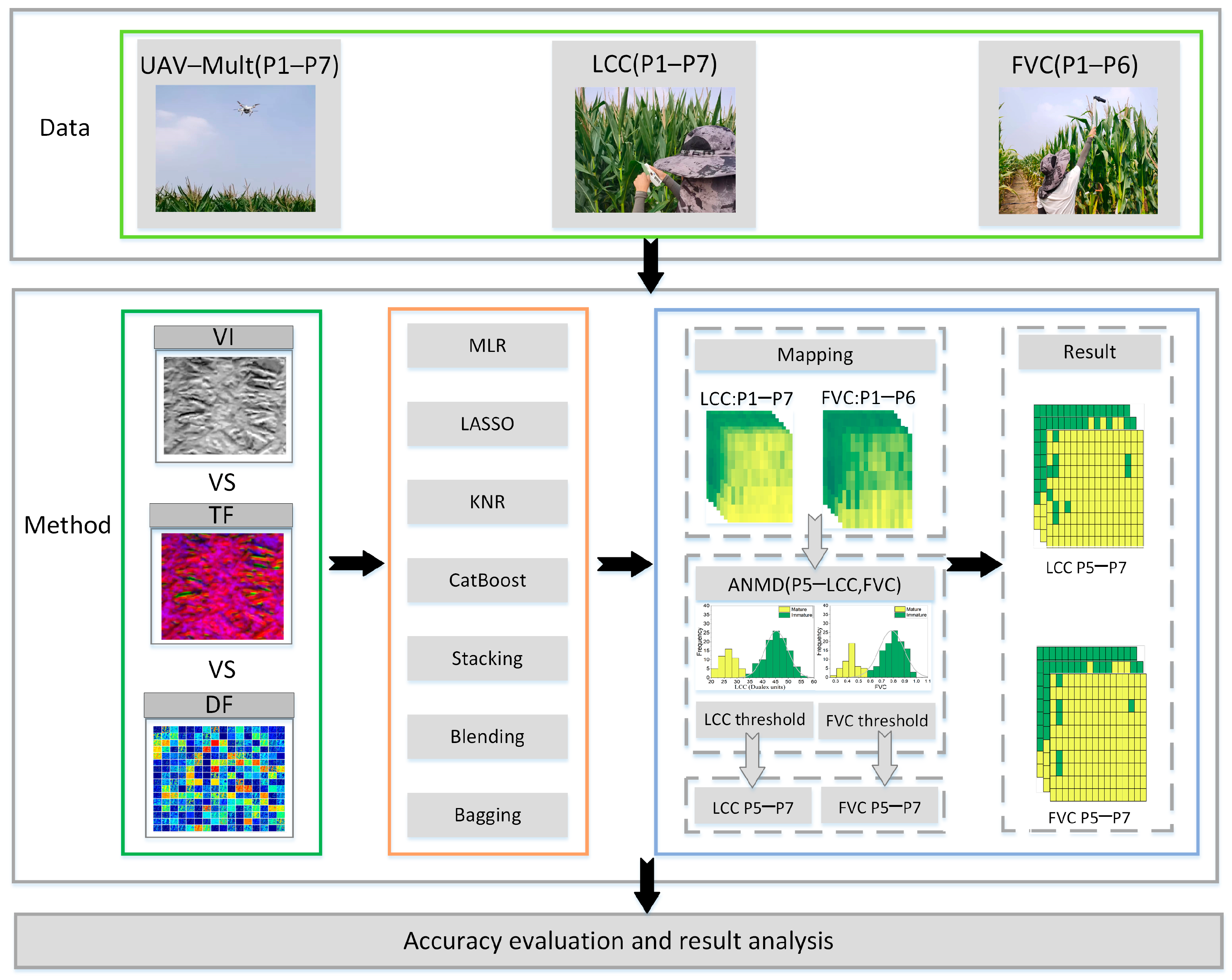

- Data Collection: Field and UAV data were collected, comprising seven phases of maize LCC measurements, six phases of maize FVC measurements, and seven phases of UAV-based maize multispectral digital orthophoto maps (DOMs).

- Feature Extraction: Three types of features were extracted from the vegetation index maps: (a) vegetation indices (VIs), (b) texture features (TFs) based on the gray-level co-occurrence matrix (GLCM), and (c) deep features (DFs).

- Regression Model Construction: The three types of extracted features were input into the preselected single regression models and ensemble models to estimate LCC and FVC.

- Maize Maturity Monitoring: Using the adaptive normal maturity detection (ANMD) algorithm, the LCC and FVC thresholds that correspond to mature maize were determined at P5. These thresholds were then applied across P5–P7 to monitor maize maturity.
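
The regression step in the workflow above can be illustrated with a very small stacking example. This is only a sketch under stated assumptions, not the authors' implementation: it uses a single synthetic feature, two toy base learners (a least-squares line and a k-nearest-neighbour regressor) in place of the models named in the paper, and a convex-weight meta-learner fitted on out-of-fold predictions. All function names and the synthetic data are hypothetical.

```python
import random
from statistics import mean


def fit_line(xs, ys):
    """Least-squares line y = a + b*x on a single feature."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    a = my - b * mx
    return lambda x: a + b * x


def fit_knn(xs, ys, k=3):
    """k-nearest-neighbour regressor on a single feature."""
    pairs = list(zip(xs, ys))

    def predict(x):
        nearest = sorted(pairs, key=lambda p: abs(p[0] - x))[:k]
        return mean(y for _, y in nearest)

    return predict


def out_of_fold(fit, xs, ys, n_folds=5):
    """Predict every sample with a model trained on the other folds."""
    preds = [0.0] * len(xs)
    for fold in range(n_folds):
        train = [i for i in range(len(xs)) if i % n_folds != fold]
        test = [i for i in range(len(xs)) if i % n_folds == fold]
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        for i in test:
            preds[i] = model(xs[i])
    return preds


def fit_stack(xs, ys):
    """Stack the two base learners; returns (predict function, weight)."""
    p_line = out_of_fold(fit_line, xs, ys)
    p_knn = out_of_fold(fit_knn, xs, ys)
    # Meta-learner: least-squares weight w for w*line + (1 - w)*knn,
    # fitted on out-of-fold predictions and clipped to [0, 1].
    den = sum((a - b) ** 2 for a, b in zip(p_line, p_knn))
    num = sum((y - b) * (a - b) for y, a, b in zip(ys, p_line, p_knn))
    w = min(1.0, max(0.0, num / den)) if den > 0 else 0.5
    # Refit both base learners on all samples for the final model.
    line, knn = fit_line(xs, ys), fit_knn(xs, ys)
    return (lambda x: w * line(x) + (1 - w) * knn(x)), w


# Synthetic stand-in for one image feature (xs) and an LCC-like target (ys).
rng = random.Random(0)
xs = [rng.uniform(0.2, 0.9) for _ in range(60)]
ys = [20 + 50 * x ** 2 + rng.gauss(0, 2) for x in xs]

model, weight = fit_stack(xs, ys)
print(f"meta-learner weight on the linear model: {weight:.2f}")
print(f"stacked prediction at x = 0.5: {model(0.5):.1f}")
```

Fitting the meta-learner on out-of-fold predictions rather than in-sample predictions is what keeps it from simply favouring whichever base learner overfits the training data most.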

3.1. Regression Techniques

3.2. Adaptive Normal Maturity Detection Algorithm

3.3. Feature Extraction

3.3.1. Vegetation Indices

3.3.2. GLCM Texture Features

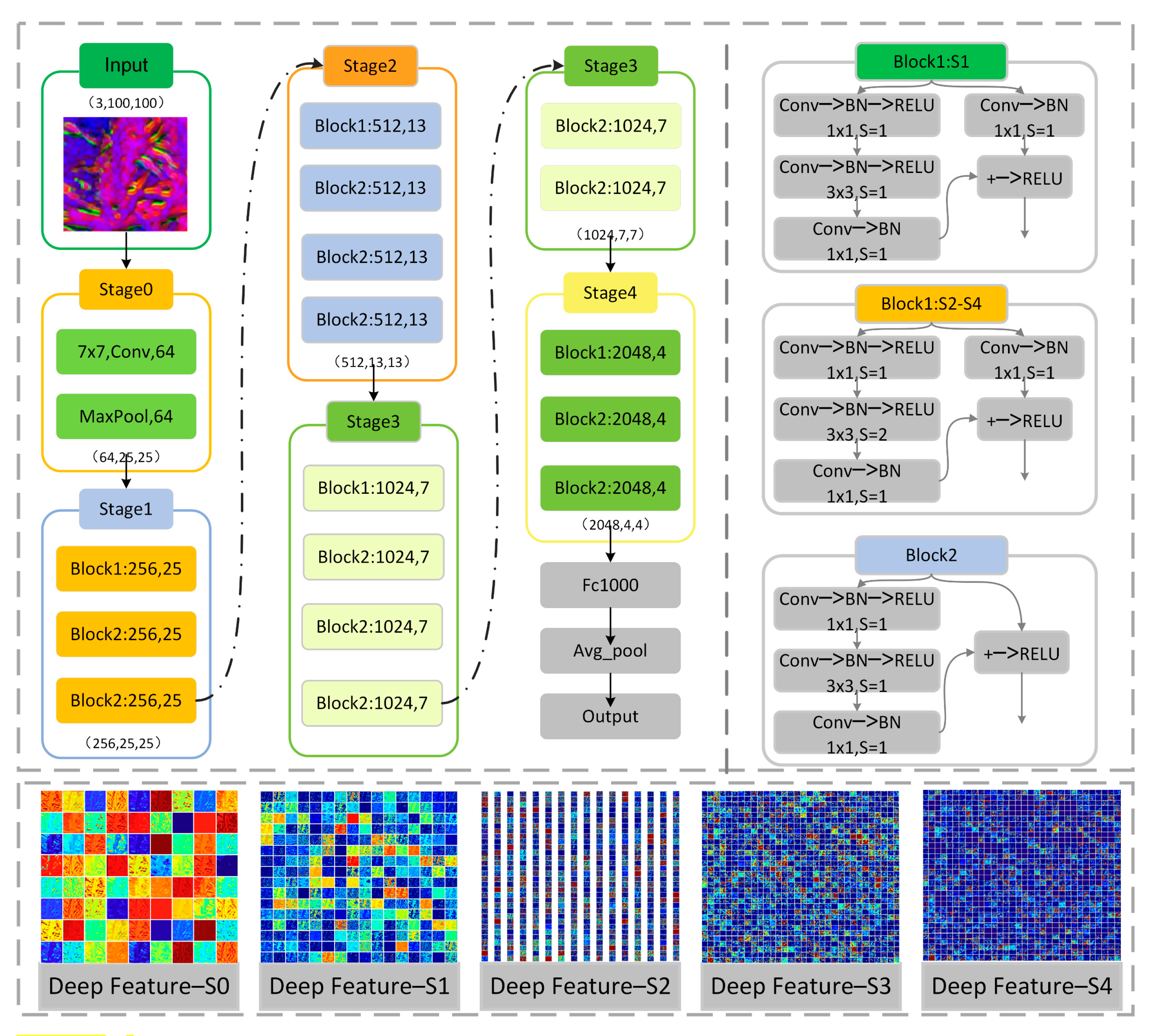

3.3.3. Deep Features

3.4. Performance Evaluation

4. Results

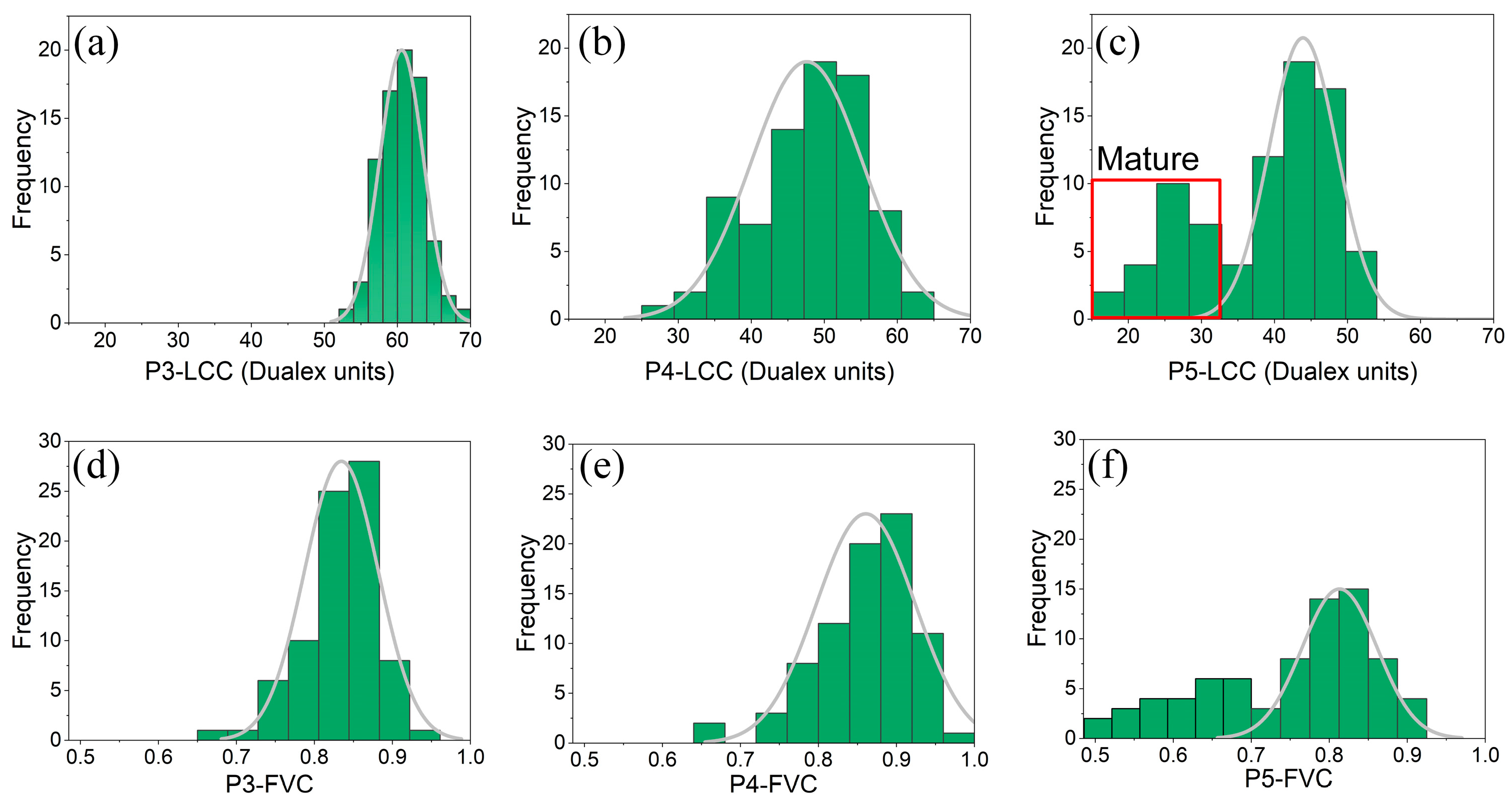

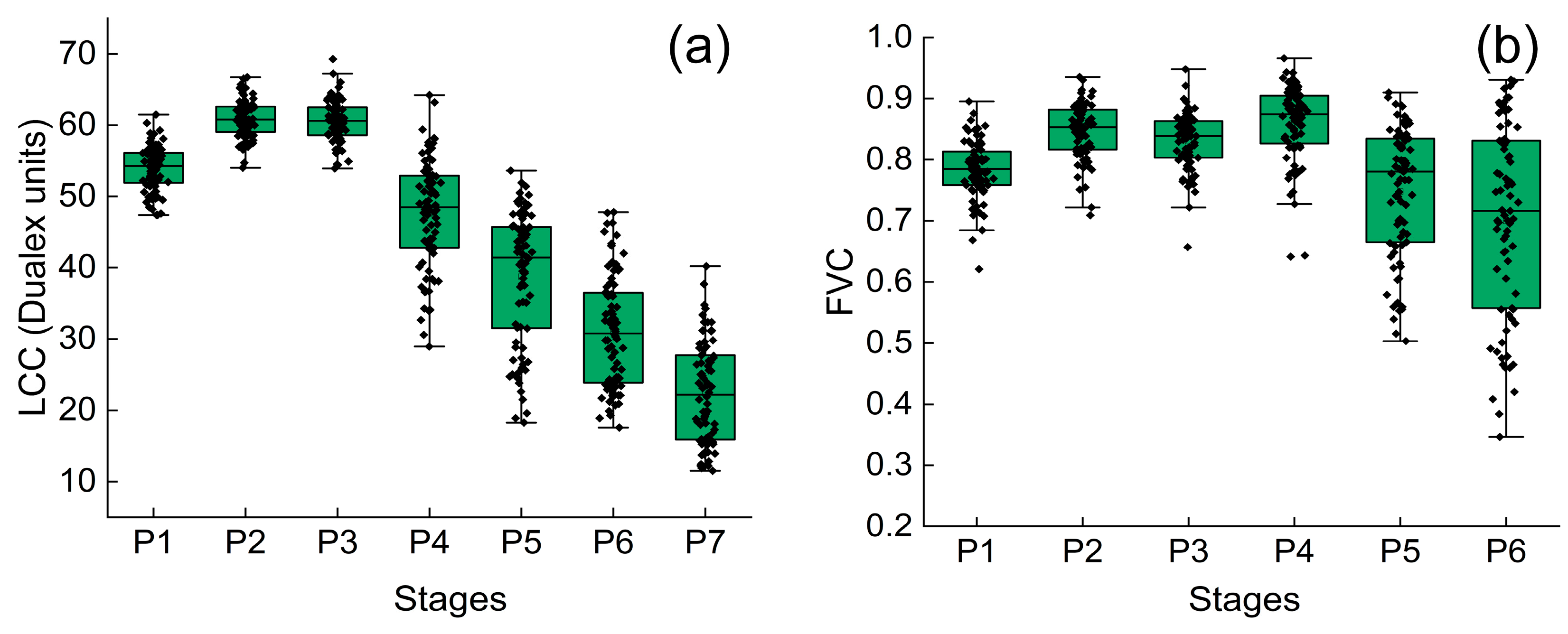

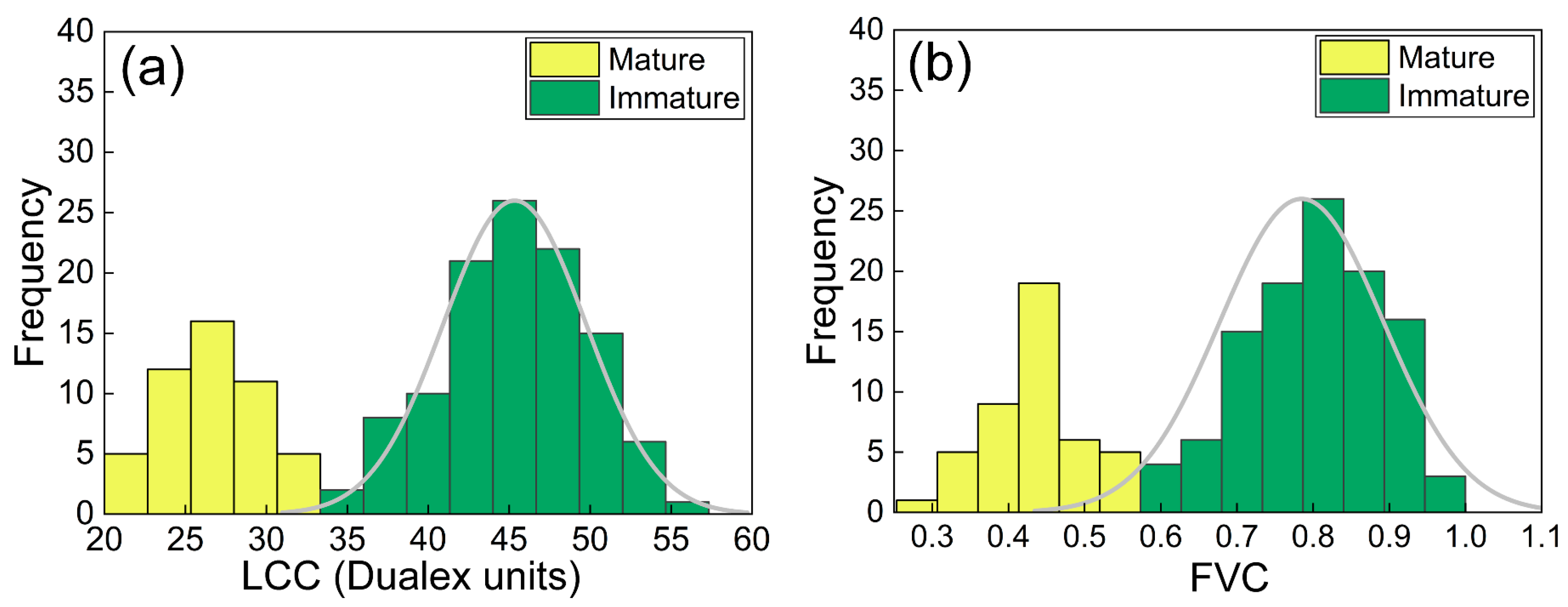

4.1. Statistical Analysis of LCC and FVC

4.2. Feature Correlation Analysis

4.2.1. Correlation Analysis of the Vegetation Indices

4.2.2. Correlation Analysis of GLCM Texture Features

4.2.3. Correlation Analysis of Deep Features

4.3. LCC and FVC Estimation and Mapping

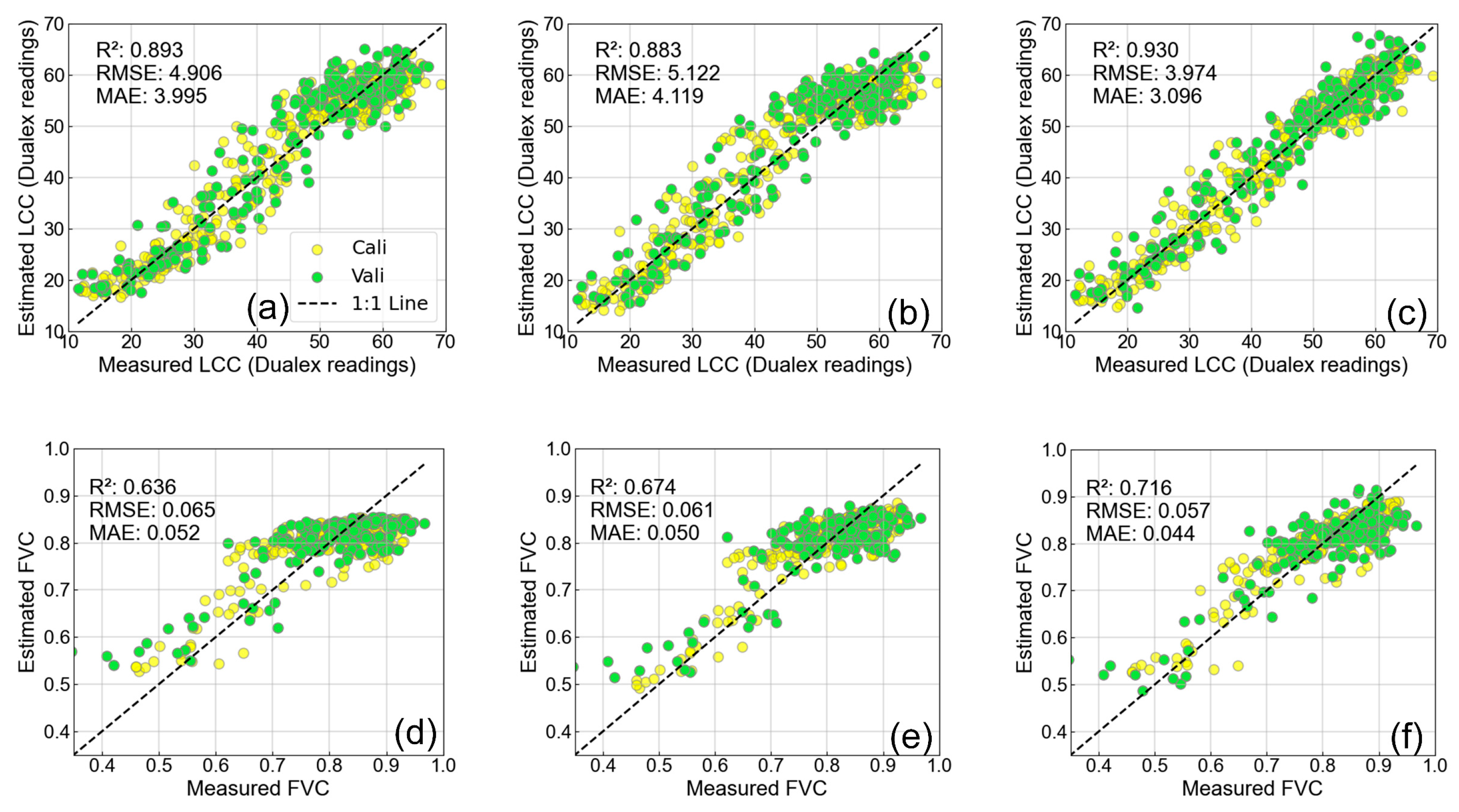

4.3.1. LCC and FVC Estimation

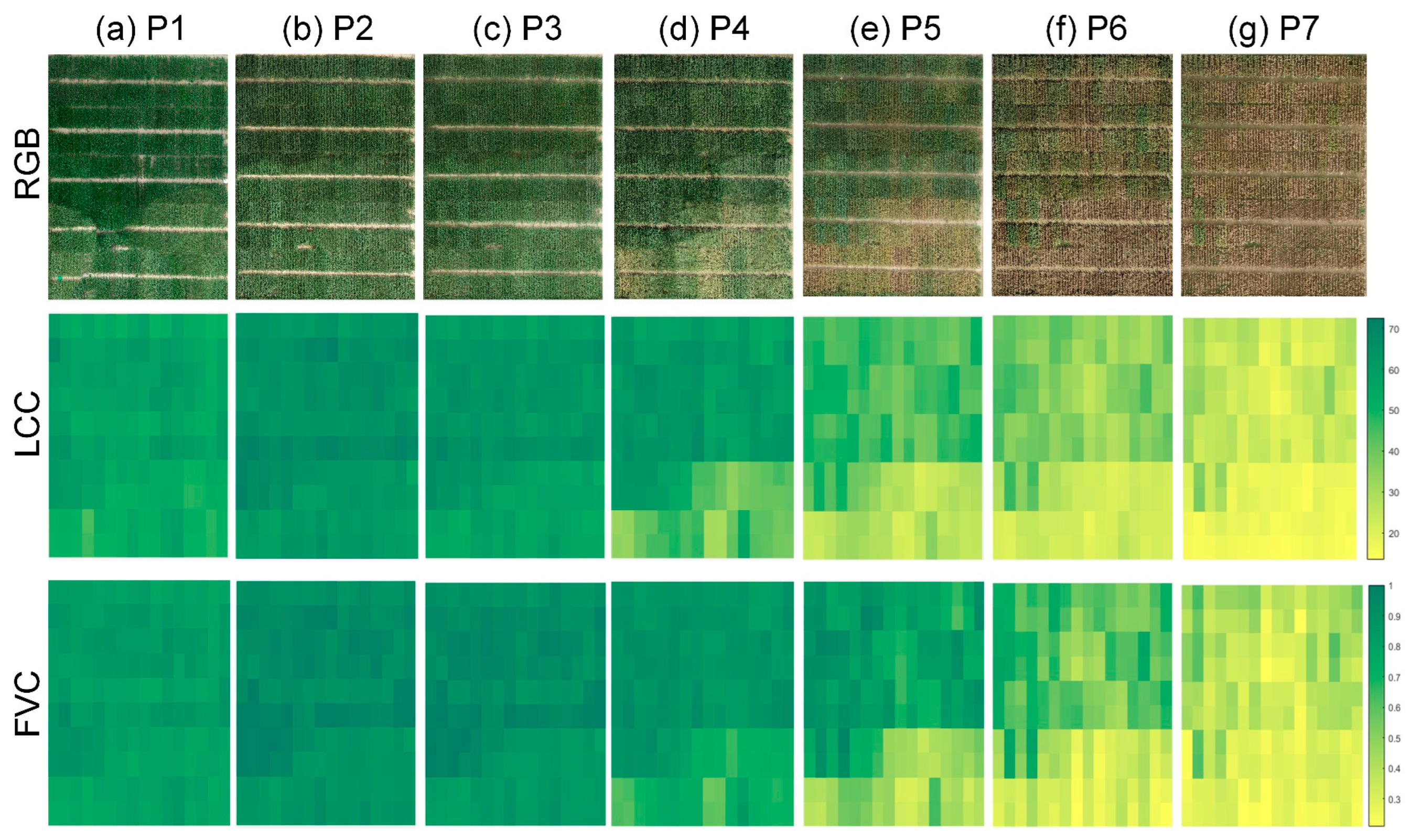

4.3.2. LCC and FVC Mapping

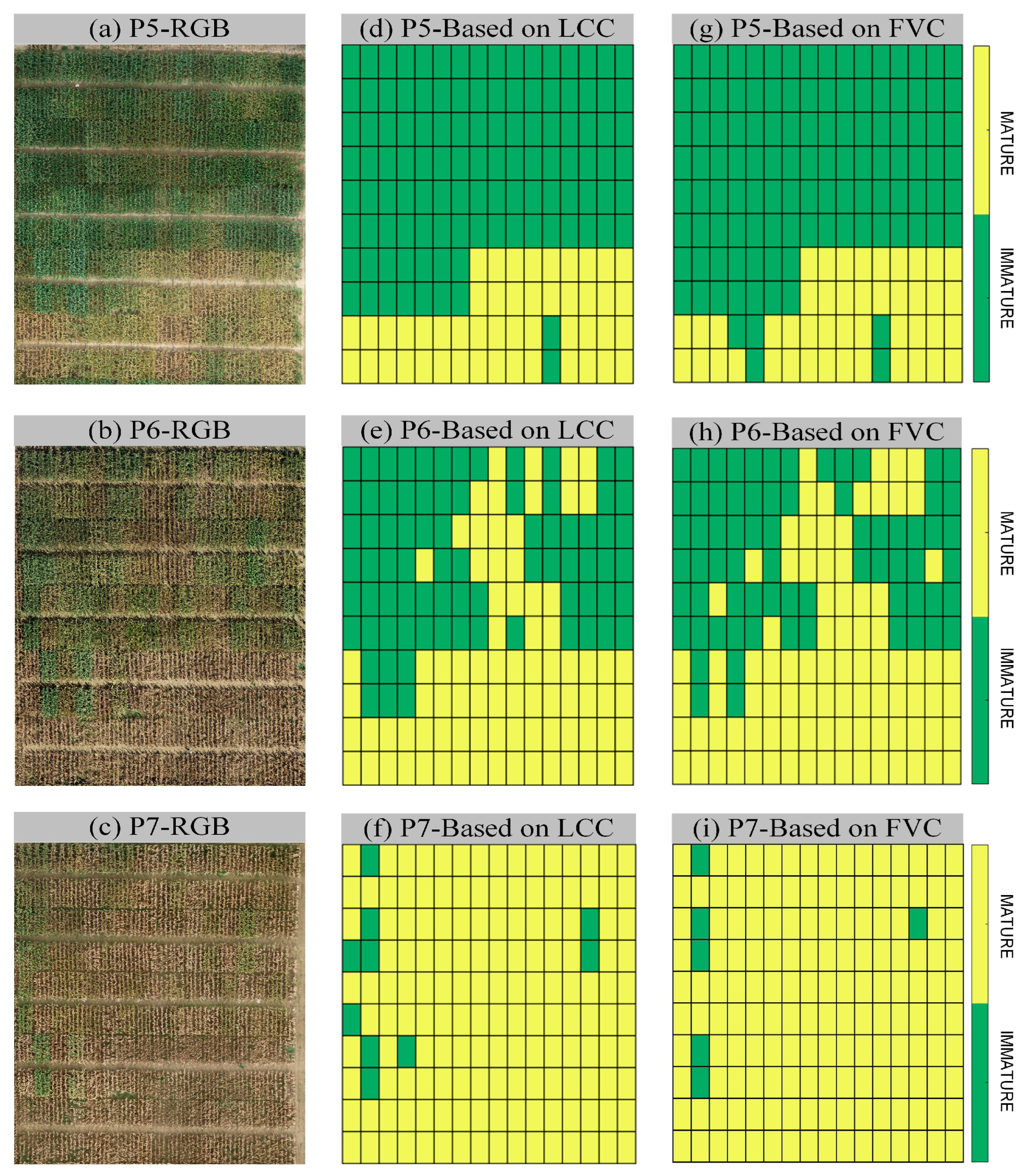

4.4. Maize Maturity Monitoring

5. Discussion

5.1. Impact of Different Features and Models on LCC and FVC Estimation

5.2. Monitoring and Analysis of Maize Maturity

5.3. Experimental Uncertainty and Limitations

6. Conclusions

- (1) Image features derived from pretrained deep learning networks describe crop canopy structure more effectively, thereby mitigating saturation effects and improving the accuracy of LCC and FVC estimation (as depicted in Figure 10). For LCC estimation, DFs increased R² by 0.037–0.047 and decreased RMSE by 0.932–1.175 and MAE by 0.899–1.023 compared with VIs and TFs. For FVC estimation, DFs increased R² by 0.042–0.080 and reduced RMSE by 0.006–0.008 and MAE by 0.004–0.008.

- (2) Ensemble models outperform individual machine learning models in estimating LCC and FVC. Stacking with DFs yields the best LCC estimates (R²: 0.930; RMSE: 3.974; MAE: 3.096) and also the best FVC estimates (R²: 0.716; RMSE: 0.057; MAE: 0.044).

- (3) The proposed ANMD algorithm, combined with LCC and FVC maps, is effective for monitoring maize maturity. The LCC maturity threshold established at the wax ripening period (P5) and applied across the wax ripening to mature period (P5–P7) achieved high monitoring accuracy (overall accuracy (OA): 0.9625–0.9875; user’s accuracy (UA): 0.9583–0.9933; producer’s accuracy (PA): 0.9634–1.0000). Applying the ANMD algorithm to FVC also achieved high monitoring accuracy during P5–P7 (OA: 0.9125–0.9750; UA: 0.8778–0.9778; PA: 0.9362–0.9934). This approach provides a rapid and effective maturity monitoring technique for maize breeding fields.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Diao, Z.; Guo, P.; Zhang, B.; Zhang, D.; Yan, J.; He, Z.; Zhao, S.; Zhao, C.; Zhang, J. Navigation line extraction algorithm for corn spraying robot based on improved YOLOv8s network. Comput. Electron. Agric. 2023, 212, 108049.

2. Khan, A.; Hassan, T.; Shafay, M.; Fahmy, I.; Werghi, N.; Mudigansalage, S.; Hussain, I. Tomato maturity recognition with convolutional transformers. Sci. Rep. 2023, 13, 22885.

3. Kumar Yadav, P.; Alex Thomasson, J.; Hardin, R.; Searcy, S.W.; Braga-Neto, U.; Popescu, S.C.; Martin, D.E.; Rodriguez, R.; Meza, K.; Enciso, J.; et al. Detecting volunteer cotton plants in a corn field with deep learning on UAV remote-sensing imagery. Comput. Electron. Agric. 2023, 204, 107551.

4. Li, S.; Sun, Z.; Sang, Q.; Qin, C.; Kong, L.; Huang, X.; Liu, H.; Su, T.; Li, H.; He, M.; et al. Soybean reduced internode 1 determines internode length and improves grain yield at dense planting. Nat. Commun. 2023, 14, 7939.

5. Ma, Y.; Zhang, Z.; Kang, Y.; Özdoğan, M. Corn yield prediction and uncertainty analysis based on remotely sensed variables using a Bayesian neural network approach. Remote Sens. Environ. 2021, 259, 112408.

6. Yue, J.; Yang, H.; Feng, H.; Han, S.; Zhou, C.; Fu, Y.; Guo, W.; Ma, X.; Qiao, H.; Yang, G. Hyperspectral-to-image transform and CNN transfer learning enhancing soybean LCC estimation. Comput. Electron. Agric. 2023, 211, 108011.

7. Zhao, T.; Mu, X.; Song, W.; Liu, Y.; Xie, Y.; Zhong, B.; Xie, D.; Jiang, L.; Yan, G. Mapping Spatially Seamless Fractional Vegetation Cover over China at a 30-m Resolution and Semimonthly Intervals in 2010–2020 Based on Google Earth Engine. J. Remote Sens. 2023, 3, 0101.

8. Pan, W.; Wang, X.; Sun, Y.; Wang, J.; Li, Y.; Li, S. Karst vegetation coverage detection using UAV multispectral vegetation indices and machine learning algorithm. Plant Methods 2023, 19, 7.

9. Yue, J.; Guo, W.; Yang, G.; Zhou, C.; Feng, H.; Qiao, H. Method for accurate multi-growth-stage estimation of fractional vegetation cover using unmanned aerial vehicle remote sensing. Plant Methods 2021, 17, 51.

10. Yue, J.; Tian, Q.; Liu, Y.; Fu, Y.; Tian, J.; Zhou, C.; Feng, H.; Yang, G. Mapping cropland rice residue cover using a radiative transfer model and deep learning. Comput. Electron. Agric. 2023, 215, 108421.

11. Vahidi, M.; Shafian, S.; Thomas, S.; Maguire, R. Pasture Biomass Estimation Using Ultra-High-Resolution RGB UAVs Images and Deep Learning. Remote Sens. 2023, 15, 5714.

12. Liu, Y.; Feng, H.; Yue, J.; Jin, X.; Fan, Y.; Chen, R.; Bian, M.; Ma, Y.; Song, X.; Yang, G. Improved potato AGB estimates based on UAV RGB and hyperspectral images. Comput. Electron. Agric. 2023, 214, 108260.

13. Pan, D.; Li, C.; Yang, G.; Ren, P.; Ma, Y.; Chen, W.; Feng, H.; Chen, R.; Chen, X.; Li, H. Identification of the Initial Anthesis of Soybean Varieties Based on UAV Multispectral Time-Series Images. Remote Sens. 2023, 15, 5413.

14. Sun, Y.; Hao, Z.; Guo, Z.; Liu, Z.; Huang, J. Detection and Mapping of Chestnut Using Deep Learning from High-Resolution UAV-Based RGB Imagery. Remote Sens. 2023, 15, 4923.

15. Che, Y.; Wang, Q.; Xie, Z.; Li, S.; Zhu, J.; Li, B.; Ma, Y. High-quality images and data augmentation based on inverse projection transformation significantly improve the estimation accuracy of biomass and leaf area index. Comput. Electron. Agric. 2023, 212, 108144.

16. Liu, Y.; Feng, H.; Yue, J.; Jin, X.; Li, Z.; Yang, G. Estimation of potato above-ground biomass based on unmanned aerial vehicle red-green-blue images with different texture features and crop height. Front. Plant Sci. 2022, 13, 938216.

17. Shu, M.; Fei, S.; Zhang, B.; Yang, X.; Guo, Y.; Li, B.; Ma, Y. Application of UAV multisensor data and ensemble approach for high-throughput estimation of maize phenotyping traits. Plant Phenomics 2022, 2022, 9802585.

18. Yadav, S.P.; Ibaraki, Y.; Dutta Gupta, S. Estimation of the chlorophyll content of micropropagated potato plants using RGB based image analysis. Plant Cell Tissue Organ Cult. (PCTOC) 2010, 100, 183–188.

19. Yue, J.; Yang, H.; Yang, G.; Fu, Y.; Wang, H.; Zhou, C. Estimating vertically growing crop above-ground biomass based on UAV remote sensing. Comput. Electron. Agric. 2023, 205, 107627.

20. Zhou, C.; Hu, J.; Xu, Z.; Yue, J.; Ye, H.; Yang, G. A monitoring system for the segmentation and grading of broccoli head based on deep learning and neural networks. Front. Plant Sci. 2020, 11, 402.

21. Albert, L.P.; Cushman, K.C.; Zong, Y.; Allen, D.W.; Alonso, L.; Kellner, J.R. Sensitivity of solar-induced fluorescence to spectral stray light in high resolution imaging spectroscopy. Remote Sens. Environ. 2023, 285, 113313.

22. Cong, N.; Piao, S.; Chen, A.; Wang, X.; Lin, X.; Chen, S.; Han, S.; Zhou, G.; Zhang, X. Spring vegetation green-up date in China inferred from SPOT NDVI data: A multiple model analysis. Agric. For. Meteorol. 2012, 165, 104–113.

23. Jin, X.; Li, Z.; Yang, G.; Yang, H.; Feng, H.; Xu, X.; Wang, J.; Li, X.; Luo, J. Winter wheat yield estimation based on multi-source medium resolution optical and radar imaging data and the AquaCrop model using the particle swarm optimization algorithm. ISPRS J. Photogramm. Remote Sens. 2017, 126, 24–37.

24. Xie, J.; Wang, J.; Chen, Y.; Gao, P.; Yin, H.; Chen, S.; Sun, D.; Wang, W.; Mo, H.; Shen, J. Estimating the SPAD of Litchi in the Growth Period and Autumn Shoot Period Based on UAV Multi-Spectrum. Remote Sens. 2023, 15, 5767.

25. De Souza, R.; Peña-Fleitas, M.T.; Thompson, R.B.; Gallardo, M.; Padilla, F.M. Assessing performance of vegetation indices to estimate nitrogen nutrition index in pepper. Remote Sens. 2020, 12, 763.

26. Fan, Y.; Feng, H.; Jin, X.; Yue, J.; Liu, Y.; Li, Z.; Feng, Z.; Song, X.; Yang, G. Estimation of the nitrogen content of potato plants based on morphological parameters and visible light vegetation indices. Front. Plant Sci. 2022, 13, 1012070.

27. Li, X.; Wang, X.; Wu, J.; Luo, W.; Tian, L.; Wang, Y.; Liu, Y.; Zhang, L.; Zhao, C.; Zhang, W. Soil Moisture Monitoring and Evaluation in Agricultural Fields Based on NDVI Long Time Series and CEEMDAN. Remote Sens. 2023, 15, 5008.

28. Liu, Y.; An, L.; Wang, N.; Tang, W.; Liu, M.; Liu, G.; Sun, H.; Li, M.; Ma, Y. Leaf area index estimation under wheat powdery mildew stress by integrating UAV-based spectral, textural and structural features. Comput. Electron. Agric. 2023, 213, 108169.

29. Fan, Y.; Feng, H.; Yue, J.; Jin, X.; Liu, Y.; Chen, R.; Bian, M.; Ma, Y.; Song, X.; Yang, G. Using an optimized texture index to monitor the nitrogen content of potato plants over multiple growth stages. Comput. Electron. Agric. 2023, 212, 108147.

30. Li, W.; Wang, J.; Zhang, Y.; Yin, Q.; Wang, W.; Zhou, G.; Huo, Z. Combining Texture, Color, and Vegetation Index from Unmanned Aerial Vehicle Multispectral Images to Estimate Winter Wheat Leaf Area Index during the Vegetative Growth Stage. Remote Sens. 2023, 15, 5715.

31. Liu, Y.; Feng, H.; Yue, J.; Fan, Y.; Bian, M.; Ma, Y.; Jin, X.; Song, X.; Yang, G. Estimating potato above-ground biomass by using integrated unmanned aerial system-based optical, structural, and textural canopy measurements. Comput. Electron. Agric. 2023, 213, 108229.

32. Sun, Q.; Sun, L.; Shu, M.; Gu, X.; Yang, G.; Zhou, L. Monitoring Maize Lodging Grades via Unmanned Aerial Vehicle Multispectral Image. Plant Phenomics 2019, 2019, 5704154.

33. Yang, Y.; Nie, J.; Kan, Z.; Yang, S.; Zhao, H.; Li, J. Cotton stubble detection based on wavelet decomposition and texture features. Plant Methods 2021, 17, 113.

34. Chen, L.; Wu, J.; Xie, Y.; Chen, E.; Zhang, X. Discriminative feature constraints via supervised contrastive learning for few-shot forest tree species classification using airborne hyperspectral images. Remote Sens. Environ. 2023, 295, 113710.

35. Jjagwe, P.; Chandel, A.K.; Langston, D. Pre-Harvest Corn Grain Moisture Estimation Using Aerial Multispectral Imagery and Machine Learning Techniques. Land 2023, 12, 2188.

36. Fu, B.; He, X.; Yao, H.; Liang, Y.; Deng, T.; He, H.; Fan, D.; Lan, G.; He, W. Comparison of RFE-DL and stacking ensemble learning algorithms for classifying mangrove species on UAV multispectral images. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102890.

37. Hernandez, L.; Larsen, A. Visual definition of physiological maturity in sunflower (Helianthus annuus L.) is associated with receptacle quantitative color parameters. Span. J. Agric. Res. 2013, 11, 447–454.

38. Tremblay, G.; Filion, P.; Tremblay, M.; Berard, M.; Durand, J.; Goulet, J.; Montpetit, J. Evolution of kernels moisture content and physiological maturity determination of corn (Zea mays L.). Can. J. Plant Sci. 2008, 88, 679–685.

39. Gwathmey, C.O.; Bange, M.P.; Brodrick, R. Cotton crop maturity: A compendium of measures and predictors. Field Crops Res. 2016, 191, 41–53.

40. Hu, J.; Yue, J.; Xu, X.; Han, S.; Sun, T.; Liu, Y.; Feng, H.; Qiao, H. UAV-Based Remote Sensing for Soybean FVC, LCC, and Maturity Monitoring. Agriculture 2023, 13, 692.

41. Liu, Z.; Li, H.; Ding, X.; Cao, X.; Chen, H.; Zhang, S. Estimating Maize Maturity by Using UAV Multi-Spectral Images Combined with a CCC-Based Model. Drones 2023, 7, 586.

42. Liu, X.; Zhou, P.; Lin, Y.; Sun, S.; Zhang, H.; Xu, W.; Yang, S. Influencing Factors and Risk Assessment of Precipitation-Induced Flooding in Zhengzhou, China, Based on Random Forest and XGBoost Algorithms. Int. J. Environ. Res. Public Health 2022, 19, 16544.

43. Buchaillot, M.L.; Soba, D.; Shu, T.; Liu, J.; Aranjuelo, I.; Araus, J.L.; Runion, G.B.; Prior, S.A.; Kefauver, S.C.; Sanz-Saez, A. Estimating peanut and soybean photosynthetic traits using leaf spectral reflectance and advance regression models. Planta 2022, 255, 93.

44. Feng, C.; Zhang, W.; Deng, H.; Dong, L.; Zhang, H.; Tang, L.; Zheng, Y.; Zhao, Z. A Combination of OBIA and Random Forest Based on Visible UAV Remote Sensing for Accurately Extracted Information about Weeds in Areas with Different Weed Densities in Farmland. Remote Sens. 2023, 15, 4696.

45. Mao, Y.; Van Niel, T.G.; McVicar, T.R. Reconstructing cloud-contaminated NDVI images with SAR-Optical fusion using spatio-temporal partitioning and multiple linear regression. ISPRS J. Photogramm. Remote Sens. 2023, 198, 115–139.

46. Uribeetxebarria, A.; Castellón, A.; Aizpurua, A. Optimizing Wheat Yield Prediction Integrating Data from Sentinel-1 and Sentinel-2 with CatBoost Algorithm. Remote Sens. 2023, 15, 1640.

47. Tao, S.; Zhang, X.; Feng, R.; Qi, W.; Wang, Y.; Shrestha, B. Retrieving soil moisture from grape growing areas using multi-feature and stacking-based ensemble learning modeling. Comput. Electron. Agric. 2023, 204, 107537.

48. Derraz, R.; Melissa Muharam, F.; Nurulhuda, K.; Ahmad Jaafar, N.; Keng Yap, N. Ensemble and single algorithm models to handle multicollinearity of UAV vegetation indices for predicting rice biomass. Comput. Electron. Agric. 2023, 205, 107621.

49. Freedman, D.; Diaconis, P. On the maximum deviation between the histogram and the underlying density. Z. Für Wahrscheinlichkeitstheorie Und Verwandte Geb. 1981, 58, 139–167.

50. Lu, S.; Lu, F.; You, W.; Wang, Z.; Liu, Y.; Omasa, K. A robust vegetation index for remotely assessing chlorophyll content of dorsiventral leaves across several species in different seasons. Plant Methods 2018, 14, 15.

51. Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring vegetation systems in the Great Plains with ERTS. NASA Spec. Publ. 1974, 351, 309.

52. Barnes, E.; Clarke, T.; Richards, S.; Colaizzi, P.; Haberland, J.; Kostrzewski, M.; Waller, P.; Choi, C.; Riley, E.; Thompson, T. Coincident detection of crop water stress, nitrogen status and canopy density using ground based multispectral data. In Proceedings of the Fifth International Conference on Precision Agriculture, Bloomington, MN, USA, 16–19 July 2000; p. 6.

53. Dash, J.; Jeganathan, C.; Atkinson, P.M. The use of MERIS Terrestrial Chlorophyll Index to study spatio-temporal variation in vegetation phenology over India. Remote Sens. Environ. 2010, 114, 1388–1402.

54. Peñuelas, J.; Gamon, J.A.; Fredeen, A.L.; Merino, J.; Field, C.B. Reflectance indices associated with physiological changes in nitrogen- and water-limited sunflower leaves. Remote Sens. Environ. 1994, 48, 135–146.

55. Rondeaux, G.; Steven, M.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107.

56. Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298.

57. Schneider, P.; Roberts, D.A.; Kyriakidis, P.C. A VARI-based relative greenness from MODIS data for computing the Fire Potential Index. Remote Sens. Environ. 2008, 112, 1151–1167.

58. Huang, Y.; Wen, X.; Gao, Y.; Zhang, Y.; Lin, G. Tree Species Classification in UAV Remote Sensing Images Based on Super-Resolution Reconstruction and Deep Learning. Remote Sens. 2023, 15, 2942.

59. Qiao, L.; Gao, D.; Zhao, R.; Tang, W.; An, L.; Li, M.; Sun, H. Improving estimation of LAI dynamic by fusion of morphological and vegetation indices based on UAV imagery. Comput. Electron. Agric. 2022, 192, 106603.

60. Yue, J.; Yang, G.; Tian, Q.; Feng, H.; Xu, K.; Zhou, C. Estimate of winter-wheat above-ground biomass based on UAV ultrahigh-ground-resolution image textures and vegetation indices. ISPRS J. Photogramm. Remote Sens. 2019, 150, 226–244.

61. Dericquebourg, E.; Hafiane, A.; Canals, R. Generative-Model-Based Data Labeling for Deep Network Regression: Application to Seed Maturity Estimation from UAV Multispectral Images. Remote Sens. 2022, 14, 5238.

62. Moeinizade, S.; Pham, H.; Han, Y.; Dobbels, A.; Hu, G. An applied deep learning approach for estimating soybean relative maturity from UAV imagery to aid plant breeding decisions. Mach. Learn. Appl. 2022, 7, 100233.

63. Ilniyaz, O.; Du, Q.; Shen, H.; He, W.; Feng, L.; Azadi, H.; Kurban, A.; Chen, X. Leaf area index estimation of pergola-trained vineyards in arid regions using classical and deep learning methods based on UAV-based RGB images. Comput. Electron. Agric. 2023, 207, 107723.

64. Li, X.; Dong, Y.; Zhu, Y.; Huang, W. Enhanced Leaf Area Index Estimation with CROP-DualGAN Network. IEEE Trans. Geosci. Remote Sens. 2022, 61, 5514610.

65. Yamaguchi, T.; Tanaka, Y.; Imachi, Y.; Yamashita, M.; Katsura, K. Feasibility of combining deep learning and RGB images obtained by unmanned aerial vehicle for leaf area index estimation in rice. Remote Sens. 2020, 13, 84.

66. Yu, D.; Zha, Y.; Sun, Z.; Li, J.; Jin, X.; Zhu, W.; Bian, J.; Ma, L.; Zeng, Y.; Su, Z. Deep convolutional neural networks for estimating maize above-ground biomass using multi-source UAV images: A comparison with traditional machine learning algorithms. Precis. Agric. 2023, 24, 92–113.

67. Wu, M.; Dou, S.; Lin, N.; Jiang, R.; Zhu, B. Estimation and Mapping of Soil Organic Matter Content Using a Stacking Ensemble Learning Model Based on Hyperspectral Images. Remote Sens. 2023, 15, 4713.

68. Zhang, Y.; Fu, B.; Sun, X.; Yao, H.; Zhang, S.; Wu, Y.; Kuang, H.; Deng, T. Effects of Multi-Growth Periods UAV Images on Classifying Karst Wetland Vegetation Communities Using Object-Based Optimization Stacking Algorithm. Remote Sens. 2023, 15, 4003.

69. Wang, L.; Gao, R.; Li, C.; Wang, J.; Liu, Y.; Hu, J.; Li, B.; Qiao, H.; Feng, H.; Yue, J. Mapping Soybean Maturity and Biochemical Traits Using UAV-Based Hyperspectral Images. Remote Sens. 2023, 15, 4807.

| Stage (month.day) | LCC Num | LCC Max | LCC Min | LCC Mean | FVC Num | FVC Max | FVC Min | FVC Mean |
|---|---|---|---|---|---|---|---|---|
| P1 (7.27) | 80 | 61.5 | 47.4 | 53.98 | 80 | 0.895 | 0.620 | 0.784 |
| P2 (8.11) | 80 | 66.7 | 54.0 | 60.87 | 80 | 0.935 | 0.709 | 0.846 |
| P3 (8.18) | 80 | 69.3 | 53.9 | 60.57 | 80 | 0.948 | 0.656 | 0.830 |
| P4 (9.1) | 80 | 64.2 | 28.9 | 47.60 | 80 | 0.966 | 0.641 | 0.860 |
| P5 (9.7) | 80 | 53.6 | 18.3 | 38.75 | 80 | 0.909 | 0.515 | 0.743 |
| P6 (9.14) | 80 | 47.8 | 17.6 | 31.01 | 80 | 0.930 | 0.346 | 0.702 |
| P7 (9.21) | 80 | 40.2 | 11.5 | 22.43 | - | - | - | - |
| Total | 560 | 69.3 | 11.5 | 45.03 | 480 | 0.966 | 0.346 | 0.797 |

| Name | Calculation | Reference |
|---|---|---|
| NDVI | (NIR − R)/(NIR + R) | [51] |
| NDRE | (NIR − RE)/(NIR + RE) | [52] |
| LCI | (NIR − RE)/(NIR + R) | [53] |
| EXR | 1.4R − G | [54] |
| OSAVI | 1.16 (NIR − R)/(NIR + R + 0.16) | [55] |
| GNDVI | (NIR − G)/(NIR + G) | [56] |
| VARI | (G − R)/(G + R − B) | [57] |
| MTCI | (NIR − RE)/(RE − R) | [53] |
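
The formulas in the table above translate directly into code. The sketch below evaluates them for one pixel; the argument names `b`, `g`, `r`, `re`, and `nir` are placeholders for blue, green, red, red-edge, and near-infrared reflectance, and the sample reflectance values are hypothetical, chosen only to exercise the formulas.

```python
def vegetation_indices(b, g, r, re, nir):
    """Evaluate the eight tabulated vegetation indices for one pixel.

    Arguments are band reflectances: blue, green, red, red edge, near infrared.
    """
    return {
        "NDVI": (nir - r) / (nir + r),
        "NDRE": (nir - re) / (nir + re),
        "LCI": (nir - re) / (nir + r),
        "EXR": 1.4 * r - g,
        "OSAVI": 1.16 * (nir - r) / (nir + r + 0.16),
        "GNDVI": (nir - g) / (nir + g),
        "VARI": (g - r) / (g + r - b),
        "MTCI": (nir - re) / (re - r),
    }


# Hypothetical reflectances for a green canopy pixel (not measured data).
vi = vegetation_indices(b=0.04, g=0.08, r=0.05, re=0.20, nir=0.45)
for name, value in vi.items():
    print(f"{name}: {value:.3f}")
```

The ratio indices are undefined when a denominator is zero (for example, VARI when G + R = B), so a production version applied to whole orthophoto maps would need to mask such pixels.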

| Name | Calculation |
|---|---|
| Mean | |
| Variance | |
| Homogeneity | |
| Contrast | |
| Dissimilarity | |
| Entropy | |
| Second Moment | |
| Correlation | |
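
For reference, the eight GLCM features listed above are commonly defined as follows. These are the widely used textbook forms, given here as an assumption; the exact expressions used in the study may differ in detail. Here $p(i,j)$ is the normalized, symmetric co-occurrence matrix over $N$ gray levels, and $\mu$ and $\sigma^2$ are its mean and variance (the symbols are introduced for this sketch).

```latex
\begin{align}
\text{Mean:} \quad & \mu = \sum_{i=0}^{N-1}\sum_{j=0}^{N-1} i \, p(i,j) \\
\text{Variance:} \quad & \sigma^2 = \sum_{i=0}^{N-1}\sum_{j=0}^{N-1} (i-\mu)^2 \, p(i,j) \\
\text{Homogeneity:} \quad & \sum_{i=0}^{N-1}\sum_{j=0}^{N-1} \frac{p(i,j)}{1+(i-j)^2} \\
\text{Contrast:} \quad & \sum_{i=0}^{N-1}\sum_{j=0}^{N-1} (i-j)^2 \, p(i,j) \\
\text{Dissimilarity:} \quad & \sum_{i=0}^{N-1}\sum_{j=0}^{N-1} |i-j| \, p(i,j) \\
\text{Entropy:} \quad & -\sum_{i=0}^{N-1}\sum_{j=0}^{N-1} p(i,j) \ln p(i,j) \\
\text{Second moment:} \quad & \sum_{i=0}^{N-1}\sum_{j=0}^{N-1} p(i,j)^2 \\
\text{Correlation:} \quad & \sum_{i=0}^{N-1}\sum_{j=0}^{N-1} \frac{(i-\mu)(j-\mu) \, p(i,j)}{\sigma^2}
\end{align}
```

In the entropy sum, terms with $p(i,j) = 0$ are taken as zero.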

| Confusion Matrix | Predicted: Matured | Predicted: Immature |
|---|---|---|
| Actual: Matured | TP | FN |
| Actual: Immature | FP | TN |
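
The three accuracy measures reported for maturity monitoring can be derived from the four cells of this confusion matrix. The sketch below assumes the standard definitions for the "matured" class: OA = (TP + TN) / total, UA = TP / (TP + FP), and PA = TP / (TP + FN). The function name and the example counts are hypothetical; the counts were picked only because they reproduce an OA of 0.9875, a UA of 0.9583, and a PA of 1.0000 for 80 plots.

```python
def maturity_accuracy(tp, fn, fp, tn):
    """Overall, user's, and producer's accuracy for the 'matured' class."""
    total = tp + fn + fp + tn
    return {
        "OA": (tp + tn) / total,  # share of all plots classified correctly
        "UA": tp / (tp + fp),     # share of predicted-matured plots that are matured
        "PA": tp / (tp + fn),     # share of actually matured plots that were detected
    }


# Hypothetical counts for 80 plots (not taken from the paper's raw data).
acc = maturity_accuracy(tp=23, fn=0, fp=1, tn=56)
for name, value in acc.items():
    print(f"{name}: {value:.4f}")
```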

| Feature | Model | LCC-Calibration R² | LCC-Calibration RMSE | LCC-Calibration MAE | LCC-Validation R² | LCC-Validation RMSE | LCC-Validation MAE |
|---|---|---|---|---|---|---|---|
| VI | MLR | 0.842 | 6.077 | 4.863 | 0.828 | 6.236 | 5.107 |
| VI | LASSO | 0.842 | 6.077 | 4.863 | 0.828 | 6.236 | 5.107 |
| VI | KNR | 0.850 | 5.921 | 4.823 | 0.845 | 5.989 | 4.912 |
| VI | CatBoost | 0.907 | 4.647 | 3.759 | 0.874 | 5.418 | 4.279 |
| VI | Bagging | 0.874 | 5.415 | 4.235 | 0.856 | 5.674 | 4.602 |
| VI | Blending | 0.923 | 4.237 | 3.423 | 0.890 | 4.969 | 4.011 |
| VI | Stacking | 0.920 | 4.314 | 3.470 | 0.893 | 4.906 | 3.995 |
| TF | MLR | 0.822 | 6.441 | 5.394 | 0.814 | 6.513 | 5.454 |
| TF | LASSO | 0.878 | 5.332 | 4.091 | 0.819 | 6.376 | 5.069 |
| TF | KNR | 0.858 | 5.672 | 4.579 | 0.816 | 6.432 | 5.259 |
| TF | CatBoost | 0.875 | 5.412 | 4.458 | 0.855 | 5.788 | 4.833 |
| TF | Bagging | 0.904 | 4.733 | 3.831 | 0.869 | 5.413 | 4.310 |
| TF | Blending | 0.905 | 4.715 | 3.837 | 0.883 | 5.122 | 4.119 |
| TF | Stacking | 0.892 | 5.012 | 3.970 | 0.871 | 5.377 | 4.229 |
| DF | MLR | 0.822 | 6.447 | 5.331 | 0.790 | 6.861 | 5.634 |
| DF | LASSO | 0.922 | 4.259 | 3.407 | 0.900 | 4.735 | 3.879 |
| DF | KNR | 0.850 | 5.917 | 4.812 | 0.839 | 6.000 | 4.917 |
| DF | CatBoost | 0.888 | 5.124 | 4.062 | 0.879 | 5.233 | 4.216 |
| DF | Bagging | 0.892 | 5.012 | 3.970 | 0.871 | 5.377 | 4.229 |
| DF | Blending | 0.941 | 3.700 | 2.915 | 0.924 | 4.221 | 3.293 |
| DF | Stacking | 0.945 | 3.586 | 2.792 | 0.930 | 3.974 | 3.096 |
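
The three metrics tabulated here can be computed from paired measured and estimated values as in the sketch below. It assumes the usual definitions (R² as one minus the ratio of residual to total sum of squares, RMSE as the root of the mean squared residual, MAE as the mean absolute residual); the function name and the four sample values are hypothetical.

```python
from math import sqrt


def regression_metrics(y_true, y_pred):
    """Return (R2, RMSE, MAE) for paired measured and estimated values."""
    n = len(y_true)
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    ss_res = sum(e ** 2 for e in residuals)       # residual sum of squares
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)  # total sum of squares
    r2 = 1.0 - ss_res / ss_tot
    rmse = sqrt(ss_res / n)
    mae = sum(abs(e) for e in residuals) / n
    return r2, rmse, mae


# Hypothetical measured and estimated values (not data from the study).
measured = [1.0, 2.0, 3.0, 4.0]
estimated = [1.1, 1.9, 3.2, 3.8]
r2, rmse, mae = regression_metrics(measured, estimated)
print(f"R2 = {r2:.3f}, RMSE = {rmse:.3f}, MAE = {mae:.3f}")
```

RMSE and MAE carry the units of the estimated variable, which is why the LCC errors are on the order of units while the FVC errors are on the order of hundredths.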

| Feature | Model | FVC-Calibration R² | FVC-Calibration RMSE | FVC-Calibration MAE | FVC-Validation R² | FVC-Validation RMSE | FVC-Validation MAE |
|---|---|---|---|---|---|---|---|
| VI | MLR | 0.543 | 0.064 | 0.050 | 0.540 | 0.073 | 0.057 |
| VI | LASSO | 0.543 | 0.064 | 0.050 | 0.540 | 0.073 | 0.057 |
| VI | KNR | 0.586 | 0.062 | 0.047 | 0.566 | 0.071 | 0.055 |
| VI | CatBoost | 0.632 | 0.058 | 0.045 | 0.599 | 0.068 | 0.054 |
| VI | Bagging | 0.619 | 0.060 | 0.046 | 0.578 | 0.070 | 0.055 |
| VI | Blending | 0.654 | 0.056 | 0.044 | 0.636 | 0.065 | 0.052 |
| VI | Stacking | 0.651 | 0.056 | 0.044 | 0.631 | 0.065 | 0.052 |
| TF | MLR | 0.519 | 0.066 | 0.051 | 0.503 | 0.076 | 0.059 |
| TF | LASSO | 0.545 | 0.064 | 0.050 | 0.539 | 0.073 | 0.057 |
| TF | KNR | 0.580 | 0.062 | 0.048 | 0.570 | 0.071 | 0.055 |
| TF | CatBoost | 0.615 | 0.060 | 0.046 | 0.601 | 0.068 | 0.054 |
| TF | Bagging | 0.543 | 0.064 | 0.050 | 0.542 | 0.073 | 0.057 |
| TF | Blending | 0.762 | 0.046 | 0.036 | 0.674 | 0.061 | 0.050 |
| TF | Stacking | 0.698 | 0.052 | 0.041 | 0.615 | 0.067 | 0.053 |
| DF | MLR | 0.545 | 0.064 | 0.050 | 0.526 | 0.074 | 0.058 |
| DF | LASSO | 0.698 | 0.052 | 0.041 | 0.615 | 0.067 | 0.053 |
| DF | KNR | 0.720 | 0.050 | 0.038 | 0.577 | 0.070 | 0.055 |
| DF | CatBoost | 0.659 | 0.055 | 0.044 | 0.593 | 0.069 | 0.054 |
| DF | Bagging | 0.586 | 0.062 | 0.047 | 0.566 | 0.071 | 0.055 |
| DF | Blending | 0.874 | 0.035 | 0.026 | 0.697 | 0.060 | 0.048 |
| DF | Stacking | 0.801 | 0.042 | 0.032 | 0.716 | 0.057 | 0.044 |

| Parameter | Stage | OA | UA | PA |
|---|---|---|---|---|
| LCC | P5 | 0.9875 | 0.9583 | 1.0000 |
| LCC | P6 | 0.9625 | 0.9634 | 0.9634 |
| LCC | P7 | 0.9812 | 0.9933 | 0.9868 |
| FVC | P5 | 0.9750 | 0.9778 | 0.9362 |
| FVC | P6 | 0.9125 | 0.8778 | 0.9634 |
| FVC | P7 | 0.9688 | 0.9740 | 0.9934 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hu, J.; Feng, H.; Wang, Q.; Shen, J.; Wang, J.; Liu, Y.; Feng, H.; Yang, H.; Guo, W.; Qiao, H.; Niu, Q.; Yue, J. Pretrained Deep Learning Networks and Multispectral Imagery Enhance Maize LCC, FVC, and Maturity Estimation. Remote Sens. 2024, 16, 784. https://doi.org/10.3390/rs16050784