Flood Detection with SAR: A Review of Techniques and Datasets

Abstract

1. Introduction

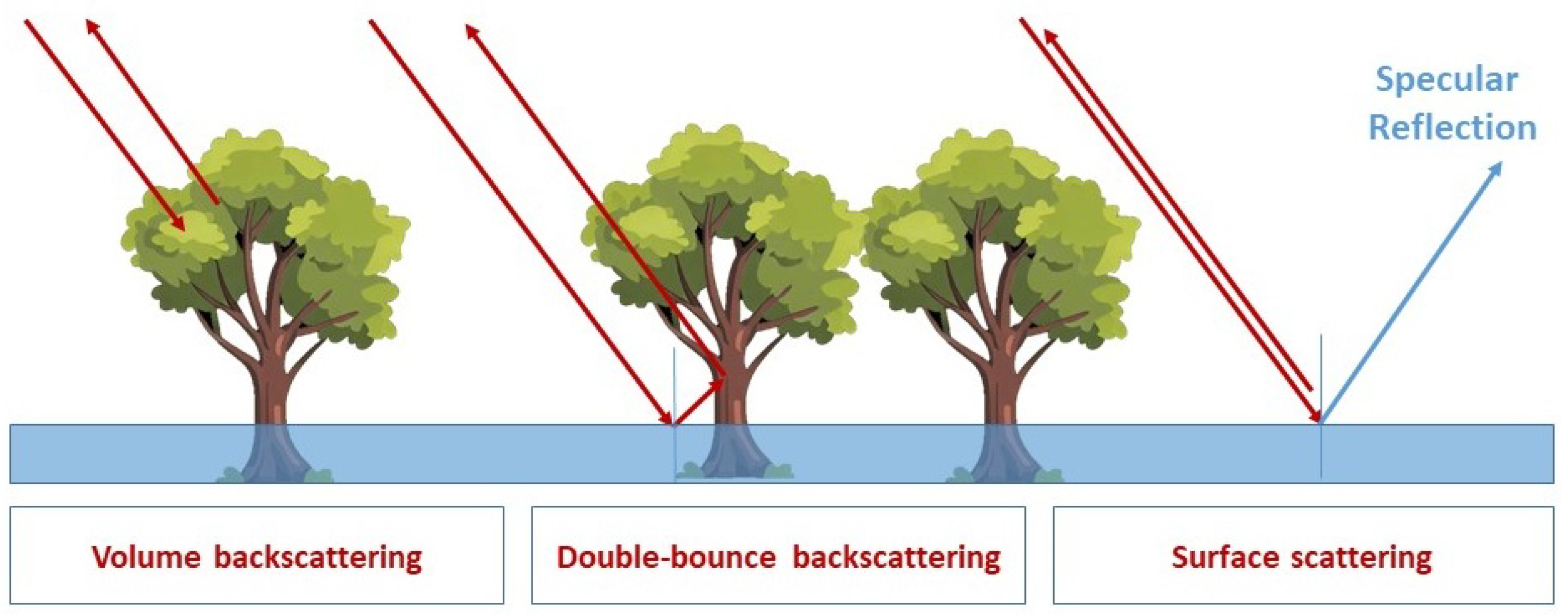

2. Phenomenological Aspects of Floods in SAR Images

2.1. Backscattering Coefficient

2.2. Interferometric Coherence

- environmental change over time (temporal decorrelation);

- imaging from different viewing directions (geometric decorrelation);

- imaging volumetric backscattering (volume decorrelation).
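As a minimal sketch, assuming two co-registered single-look complex (SLC) images held as NumPy arrays `s1` and `s2`, the coherence magnitude can be estimated with the usual sample estimator over a local boxcar window, |γ| = |Σ s1·s2*| / √(Σ|s1|² · Σ|s2|²). Each of the decorrelation sources listed above [32] pushes this value toward zero, and a loss of coherence between pre- and post-event pairs is one of the cues exploited for flood detection. The function name `coherence` and the default 5 × 5 window are illustrative assumptions, not the processing chain of any specific work cited here.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def coherence(s1: np.ndarray, s2: np.ndarray, win: int = 5) -> np.ndarray:
    """Sample coherence magnitude of two co-registered SLC images.

    Sums are taken over a win x win boxcar window; only fully covered
    ("valid") window positions are returned, so the output is smaller
    than the input by win - 1 pixels along each axis.
    """
    if s1.shape != s2.shape:
        raise ValueError("s1 and s2 must have the same shape")

    def box_sum(a: np.ndarray) -> np.ndarray:
        # Sum over every win x win window of a 2-D array.
        return sliding_window_view(a, (win, win)).sum(axis=(-2, -1))

    cross = box_sum(s1 * np.conj(s2))   # sum of s1 * conj(s2)
    p1 = box_sum(np.abs(s1) ** 2)       # sum of |s1|^2
    p2 = box_sum(np.abs(s2) ** 2)       # sum of |s2|^2
    den = np.sqrt(p1 * p2)
    # Guard against division by zero in areas with no signal.
    return np.abs(cross) / np.maximum(den, np.finfo(float).tiny)
```

With a small window the estimator is biased upward for truly incoherent pixels (only 25 samples per estimate here), so any low-coherence threshold should take the window size into account.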

3. Flood Detection Processing Techniques

3.1. General Pre-Processing Operations

3.2. Thresholding

3.3. Fuzzy Classifiers

3.4. Machine Learning

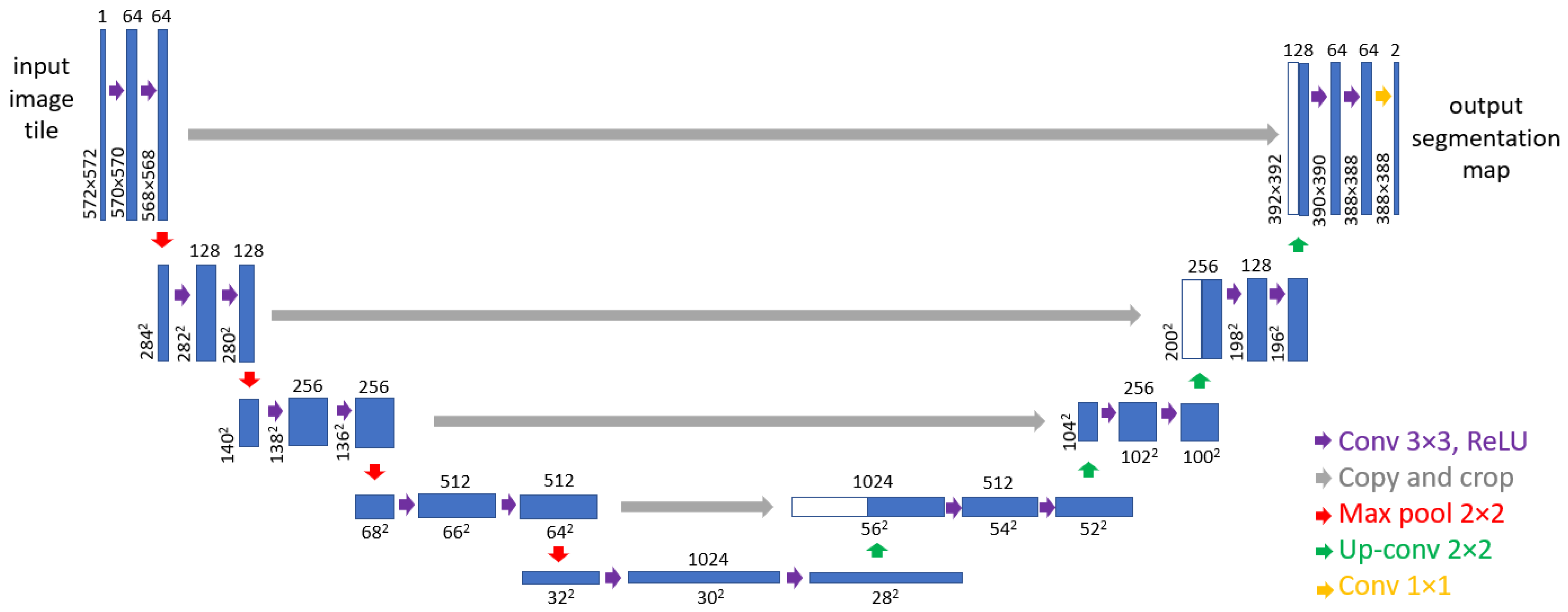

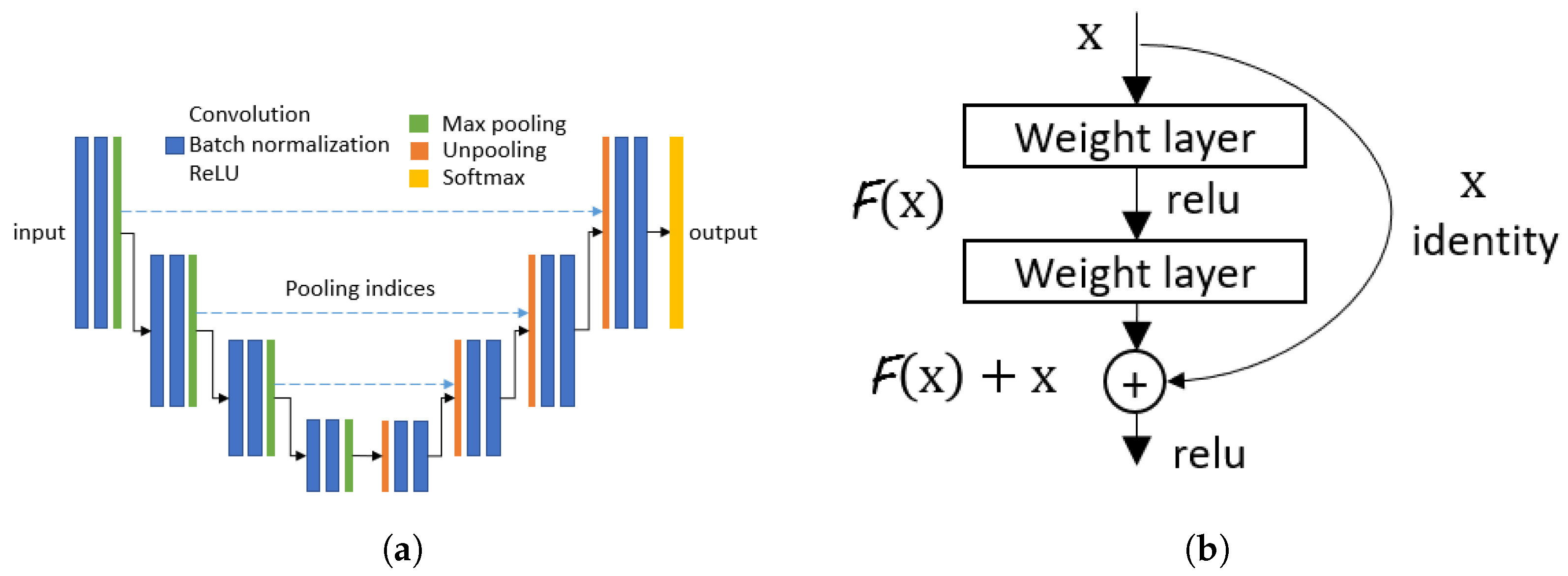

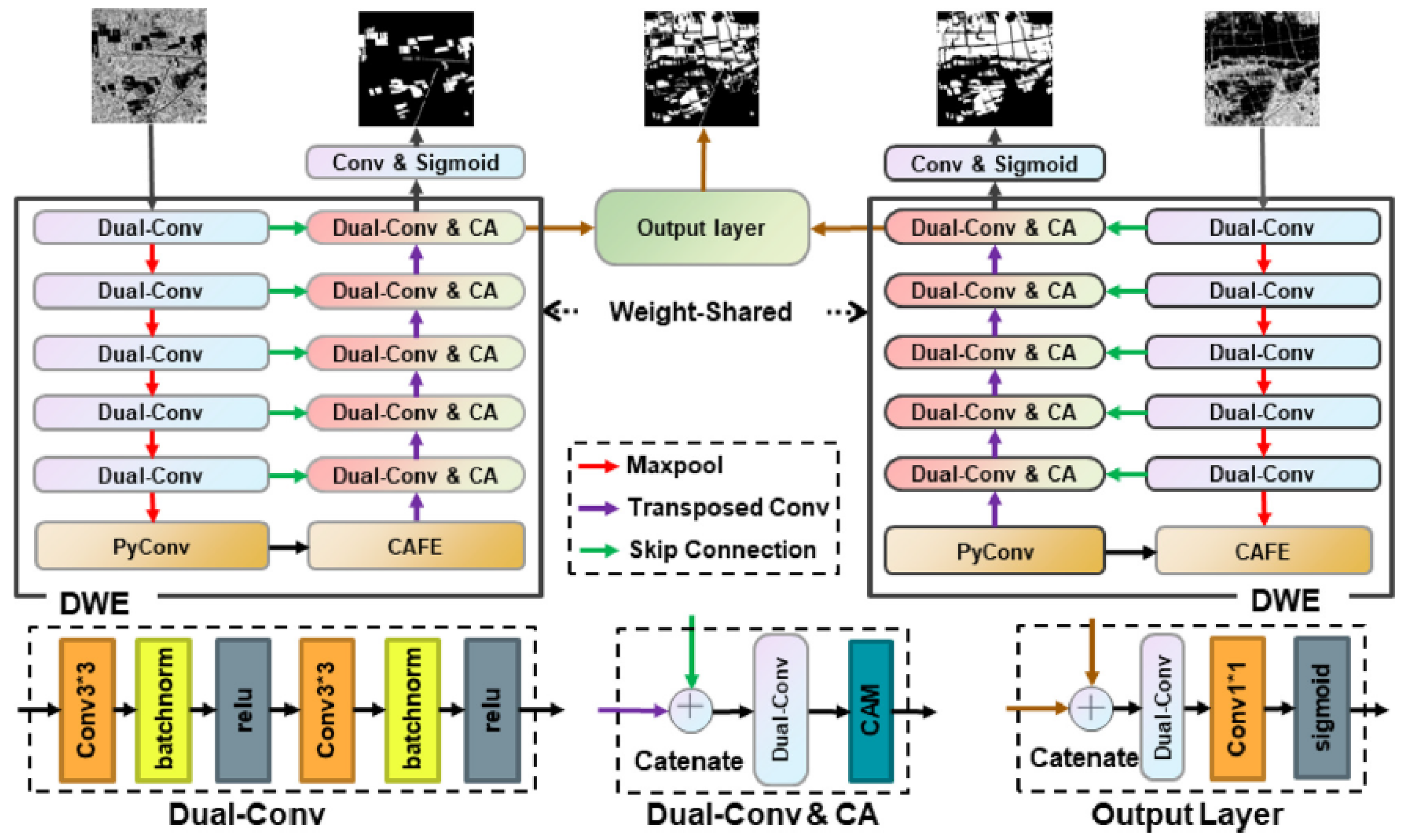

3.5. Other Methodologies

3.5.1. Multi-Sensor Data Fusion

3.5.2. Active Contour Models

3.5.3. Data Assimilation

3.6. Post-Processing Operations

3.7. Challenging Situations

3.7.1. Vegetation Cover

3.7.2. Urban Areas

3.7.3. Mountainous Regions

4. Validation Strategies and Map Quality Indicators

- True Positive (TP): number of flooded image samples classified as flooded.

- False Positive (FP): number of non-flooded image samples classified as flooded.

- True Negative (TN): number of non-flooded image samples classified as non-flooded.

- False Negative (FN): number of flooded image samples classified as non-flooded.
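These counts can be computed directly from a binary flood map and a co-registered binary reference map. The sketch below follows the standard convention (a false positive is a non-flooded sample labeled as flooded, a false negative a flooded sample labeled as non-flooded) and derives a few widely used quality indicators. The choice of indicators, the optional `valid` mask for no-data pixels, and the function names are illustrative assumptions rather than the exact set adopted in this review.

```python
import numpy as np


def confusion_counts(pred, ref, valid=None):
    """Return (TP, FP, TN, FN) for boolean flood maps (True = flooded)."""
    pred = np.asarray(pred, dtype=bool)
    ref = np.asarray(ref, dtype=bool)
    if valid is None:
        valid = np.ones_like(pred, dtype=bool)
    valid = np.asarray(valid, dtype=bool)
    tp = int(np.sum(pred & ref & valid))     # flooded, labeled flooded
    fp = int(np.sum(pred & ~ref & valid))    # non-flooded, labeled flooded
    tn = int(np.sum(~pred & ~ref & valid))   # non-flooded, labeled non-flooded
    fn = int(np.sum(~pred & ref & valid))    # flooded, labeled non-flooded
    return tp, fp, tn, fn


def quality_indicators(tp, fp, tn, fn):
    """Common map quality indicators; NaN when a denominator is zero."""
    def ratio(num, den):
        return num / den if den else float("nan")

    return {
        "overall_accuracy": ratio(tp + tn, tp + fp + tn + fn),
        "precision": ratio(tp, tp + fp),      # 1 - commission error
        "recall": ratio(tp, tp + fn),         # 1 - omission error
        "f1": ratio(2 * tp, 2 * tp + fp + fn),
        "iou": ratio(tp, tp + fp + fn),       # a.k.a. critical success index
    }
```

Because floods usually cover a small fraction of a scene, overall accuracy is dominated by TN; indicators that ignore TN, such as F1 or IoU, are often more informative for the flooded class.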

- SAR imagery: Reference maps are obtained by applying well-established and widely recognized SAR-based methods. One main example is the CEMS service, which is described in Section 5. This is the methodology followed in, e.g., [18]. Using maps derived from the same sensor has the advantage that temporal and spatial misalignments between the reference map and the derived one are unlikely, and the co-registration step is also simplified. Nevertheless, the reference algorithm should be manifestly superior in terms of classification accuracy, which is not guaranteed when new methods are being developed.

- Optical/MS imagery: Reference maps are obtained either by visual interpretation and labeling by experts or by automatic processing of aerial photography or optical/MS satellite imagery. Both approaches can be combined in a semi-automatic procedure, in which an initial delineation map is derived by means of classification algorithms and then manually refined. Common automatic strategies include thresholding of the NDWI, for which Otsu’s method [53] is a common approach to finding the optimal threshold. A manual quality check and classification refinement are optionally performed to reduce misclassifications and to partially compensate for the temporal gap between the SAR and optical/MS acquisitions, possibly with the support of hydrodynamic models. In the case of a purely manual approach, optical/MS images are commonly digitized by remote sensing experts into two (flood/non-flood) or more (as in fuzzy approaches) classes, possibly with the support of permanent water information derived from existing external databases, such as the JRC surface water dataset, or from pre-event images. When deriving flood extent maps from optical/MS imagery, it should be taken into account that these data are not affected by the geometric distortions typical of SAR (e.g., foreshortening and layover), which can lead to misregistration between the test and validation datasets. Additionally, optical/MS satellite sensors depend on sunlight and are affected by cloud cover, which might impair data availability and quality.

- Ground-truth campaigns: Reference maps are obtained through ground surveys of the flooded area. Notably, lumped field data might provide highly accurate reference information but, at the same time, might not allow for a comprehensive validation of the model behavior at large spatial scales and/or in different scenarios.
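As an illustration of the automatic strategy mentioned for optical/MS reference maps, the sketch below computes the NDWI in McFeeters' green/NIR formulation [114] and selects a threshold with Otsu's method [53], i.e., the histogram split that maximizes the between-class variance. It assumes reflectance bands already loaded as NumPy arrays (for Sentinel-2, typically B3 and B8); the function names, the 256-bin histogram, and the convention that water lies above the threshold are assumptions of this example. scikit-image also provides a ready-made `skimage.filters.threshold_otsu`.

```python
import numpy as np


def ndwi(green, nir, eps=1e-6):
    """McFeeters NDWI = (Green - NIR) / (Green + NIR)."""
    green = np.asarray(green, dtype=float)
    nir = np.asarray(nir, dtype=float)
    return (green - nir) / (green + nir + eps)  # eps avoids 0/0 on no-data


def otsu_threshold(values, bins=256):
    """Otsu's threshold: maximize the between-class variance of the histogram."""
    values = np.asarray(values, dtype=float).ravel()
    values = values[np.isfinite(values)]
    hist, edges = np.histogram(values, bins=bins)
    centers = 0.5 * (edges[:-1] + edges[1:])
    p = hist / hist.sum()                    # bin probabilities
    mu_total = np.sum(p * centers)           # global mean
    # Candidate split after bin k, for k = 0 .. bins-2.
    w0 = np.cumsum(p)[:-1]                   # class-0 weight
    mu_k = np.cumsum(p * centers)[:-1]       # cumulative first-order moment
    w1 = 1.0 - w0
    sigma_b2 = np.zeros_like(w0)
    ok = (w0 > 0) & (w1 > 0)                 # skip splits with an empty class
    sigma_b2[ok] = (mu_total * w0[ok] - mu_k[ok]) ** 2 / (w0[ok] * w1[ok])
    k = int(np.argmax(sigma_b2))
    return edges[k + 1]                      # upper edge of the last class-0 bin


def water_mask(green, nir):
    """Label as water the pixels whose NDWI exceeds the Otsu threshold."""
    index = ndwi(green, nir)
    return index > otsu_threshold(index)
```

A single global threshold works best when the NDWI histogram is clearly bimodal; in scenes where water covers only a small fraction of the image, the split-based thresholding strategies cited in Section 3.2 are a common refinement.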

5. Publicly Available Datasets

5.1. Sen1Floods11

5.2. S1S2-Water/Flood

5.3. Sen12-Flood

5.4. UNOSAT

5.5. CEMS

5.6. Other Datasets

- SEN12MS [203] consists of over 180,000 labeled 256 × 256 patch triplets (Sentinel-1 dual-pol SAR, Sentinel-2 MS, MODIS land cover) covering all continents and seasons. However, SEN12MS suffers from some issues when applied to flood detection. First, the variability of the water class is not specifically accounted for: the dataset is primarily designed for image fusion and land cover mapping applications and, although it includes some permanent water data, these represent a negligible share, and no flood events are present. Second, the annotation maps are derived from a source independent of the remote sensing data and at a much coarser spatial resolution, up to 500 m, which prevents an adequate exploitation of the dataset for high-resolution mapping. A picture of the global and seasonal distribution of the dataset can be found in ([203], Figure 3). The patches are stored as multi-channel GeoTIFF images and require about 420 GB of storage. The SEN12MS dataset is shared under the open access license CC-BY and is available for download at https://mediatum.ub.tum.de/1474000 (accessed on 21 January 2024).

- The WorldFloods dataset comprises bundles of S2 images with corresponding pixel-level water extent maps covering 119 flood events distributed over the entire globe and occurring between November 2015 and March 2019 (https://gitlab.com/frontierdevelopmentlab/disaster-prevention/cubesatfloods (accessed on 21 January 2024)) [204]. It contains over 400 flood delineation maps at 10 m resolution gathered from three independent sources, namely CEMS, UNOSAT, and the Global Flood Inundation Map Repository (https://sdml.ua.edu/glofimr/ (accessed on 21 January 2024)). These maps were generated from either active (mostly) or passive satellite sensors, either by a semi-automatic procedure or manually by photo-interpretation. Even though this dataset cannot be directly used to train neural networks on SAR imagery, its large number of water masks could be used for cal/val operations. However, it should be emphasized that the maps have not been validated through ground measurements, and that both the manual intervention and potential temporal misalignments between the acquisition of the original remote sensing image and the S2 one might lead to inaccuracies.

- The European Commission Joint Research Centre (JRC) surface water dataset provides monthly observations of surface water at 30 m resolution derived from MS Landsat imagery [205]. It offers highly accurate labeled data for permanent water, with commission and omission error rates below 1%, while the accuracy for all other water classes, including flooding events, is much lower [205] owing to its sensitivity to cloud cover and the limited temporal extent of floods. Here, permanent water refers to samples classified as water over the whole time period of the dataset (1984–2018). Even though this dataset is not specifically designed for flood mapping, its permanent water maps can be exploited to discriminate between permanent water and flooded areas.
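Following the definition of permanent water given above, a minimal sketch of the permanent water/flood discrimination could derive a permanent water mask from a stack of monthly water observations (water in every valid month) and then remove it from a post-event water map, so that only newly inundated pixels are labeled as flood. It assumes the monthly maps are already co-registered to the event map and stored as boolean NumPy arrays of shape (months, rows, cols), with an optional validity mask for cloudy or missing months; the function names are hypothetical, and this is not the JRC production algorithm.

```python
import numpy as np


def permanent_water(monthly_water, valid=None):
    """True where a pixel is water in every valid month (and has >= 1 valid month)."""
    monthly_water = np.asarray(monthly_water, dtype=bool)
    if valid is None:
        valid = np.ones_like(monthly_water, dtype=bool)
    valid = np.asarray(valid, dtype=bool)
    n_valid = valid.sum(axis=0)                    # valid observations per pixel
    n_water = (monthly_water & valid).sum(axis=0)  # valid water observations
    return (n_valid > 0) & (n_water == n_valid)


def flood_from_water(event_water, permanent):
    """Flood = water detected in the event map that is not permanent water."""
    return np.asarray(event_water, dtype=bool) & ~np.asarray(permanent, dtype=bool)
```

Requiring water in every valid month is the strictest reading of "permanent"; an occurrence-frequency threshold would be a less conservative alternative.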

6. Discussion

7. Conclusions

- Make developed code and data freely available to the scientific community through personal websites or sharing platforms, e.g., IEEE DataPort, the Remote Sensing Code Library of the IEEE Geoscience and Remote Sensing Society, Papers With Code, GitHub. Code should be documented as thoroughly as possible to ease its use by other scientists.

- Fix and apply a random seed to all workflow components involving randomness, e.g., data shuffling, data augmentation, model weight initialization.

- Enforce deterministic GPU floating-point calculations, as done, e.g., in [186].
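A minimal sketch of the last two recommendations for a Python workflow, assuming PyTorch as the deep learning framework (the function name `set_global_seed` is hypothetical, and this is not the setup used in [186]). It seeds Python's and NumPy's global generators and, if PyTorch is installed, its CPU and CUDA generators, then requests deterministic kernels. Note that `torch.use_deterministic_algorithms(True)` makes operations lacking a deterministic implementation raise an error rather than silently vary, and that cuBLAS additionally requires the `CUBLAS_WORKSPACE_CONFIG` environment variable to be set before the first CUDA call.

```python
import os
import random

import numpy as np


def set_global_seed(seed: int, deterministic_gpu: bool = True) -> None:
    """Seed the common sources of randomness in a Python training workflow."""
    random.seed(seed)        # Python's built-in RNG (e.g., random.shuffle)
    np.random.seed(seed)     # NumPy's legacy global RNG (e.g., augmentation code)
    try:
        import torch
    except ImportError:      # PyTorch not installed: nothing more to seed
        return
    torch.manual_seed(seed)  # seeds the CPU and all CUDA devices
    if deterministic_gpu:
        # Required by cuBLAS for reproducible results; must precede CUDA init.
        os.environ.setdefault("CUBLAS_WORKSPACE_CONFIG", ":4096:8")
        torch.backends.cudnn.benchmark = False    # no autotuned kernel selection
        torch.use_deterministic_algorithms(True)  # error on nondeterministic ops
```

For data shuffling inside a `torch.utils.data.DataLoader`, passing a seeded `torch.Generator` through its `generator` argument keeps the sample order reproducible across runs.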

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Economic Losses, Poverty & Disasters 1998–2017; United Nations Office for Disaster Risk Reduction: Geneva, Switzerland, 2017.

- Rentschler, J.; Salhab, M.; Jafino, B.A. Flood exposure and poverty in 188 countries. Nat. Commun. 2022, 13, 3527.

- Bailey, R.; Saffioti, C.; Drall, S. Sunk Costs: The Socioeconomic Impacts of Flooding; Marsh & McLennan Companies Ltd.: New York, NY, USA, 2021.

- Lehmkuhl, F.; Schüttrumpf, H.; Schwarzbauer, J.; Brüll, C.; Dietze, M.; Letmathe, C.V.; Hollert, H. Assessment of the 2021 summer flood in Central Europe. Environ. Sci. Eur. 2022, 34, 107.

- Lin, S.S.; Zhang, N.; Xu, Y.S.; Hino, T. Lesson Learned from Catastrophic Floods in Western Japan in 2018: Sustainable Perspective Analysis. Water 2020, 12, 2489.

- Kelly, M.; Schwarz, I.; Ziegelaar, M.; Watkins, A.B.; Kuleshov, Y. Flood Risk Assessment and Mapping: A Case Study from Australia’s Hawkesbury-Nepean Catchment. Hydrology 2023, 10, 26.

- Winsemius, H.C.; Aerts, J.C.J.H.; van Beek, L.P.H.; Bierkens, M.F.P.; Bouwman, A.; Jongman, B.; Kwadijk, J.C.J.; Ligtvoet, W.; Lucas, P.L.; van Vuuren, D.P.; et al. Global drivers of future river flood risk. Nat. Clim. Chang. 2016, 6, 381–385.

- Pilon, P.J. (Ed.) Guidelines for Reducing Flood Losses; United Nations: Rome, Italy, 2004.

- Hirabayashi, Y.; Mahendran, R.; Koirala, S.; Konoshima, L.; Yamazaki, D.; Watanabe, S.; Kim, H.; Kanae, S. Global flood risk under climate change. Nat. Clim. Chang. 2013, 3, 816–821.

- Nicholls, R.J.; Lincke, D.; Hinkel, J.; Brown, S.; Vafeidis, A.T.; Meyssignac, B.; Hanson, S.E.; Merkens, J.L.; Fang, J. A global analysis of subsidence, relative sea-level change and coastal flood exposure. Nat. Clim. Chang. 2021, 11, 338–342.

- Bagheri-Gavkosh, M.; Hosseini, S.M.; Ataie-Ashtiani, B.; Sohani, Y.; Ebrahimian, H.; Morovat, F.; Ashrafi, S. Land subsidence: A global challenge. Sci. Total Environ. 2021, 778, 146193.

- Parsons, T.; Wu, P.; Wei, M.M.; D’Hondt, S. The Weight of New York City: Possible Contributions to Subsidence From Anthropogenic Sources. Earth’s Future 2023, 11, e2022EF003465.

- Amitrano, D.; Di Martino, G.; Guida, R.; Iervolino, P.; Iodice, A.; Papa, M.; Riccio, D.; Ruello, G. Earth Environmental Monitoring Using Multi-Temporal Synthetic Aperture Radar: A Critical Review of Selected Applications. Remote Sens. 2021, 13, 604.

- de Leeuw, J.; Vrieling, A.; Shee, A.; Atzberger, C.; Hadgu, K.M.; Biradar, C.M.; Keah, H.; Turvey, C. The potential and uptake of remote sensing in insurance: A review. Remote Sens. 2014, 6, 10888–10912.

- Garcia-Pintado, J.; Mason, D.C.; Dance, S.L.; Cloke, H.L.; Neal, J.C.; Freer, J.; Bates, P.D. Satellite-supported flood forecasting in river networks: A real case study. J. Hydrol. 2015, 523, 706–724.

- Mitidieri, F.; Papa, M.N.; Amitrano, D.; Ruello, G. River morphology monitoring using multitemporal sar data: Preliminary results. Eur. J. Remote Sens. 2016, 49, 889–898.

- Mobilia, M.; Longobardi, A.; Amitrano, D.; Ruello, G. Land use and damaging hydrological events temporal changes in the Sarno River basin: Potential for green technologies mitigation by remote sensing analysis. Hydrol. Res. 2023, 54, 277–302.

- Amitrano, D.; Di Martino, G.; Iodice, A.; Riccio, D.; Ruello, G. Unsupervised Rapid Flood Mapping Using Sentinel-1 GRD SAR Images. IEEE Trans. Geosci. Remote Sens. 2018, 56, 3290–3299.

- Martinis, S.; Kersten, J.; Twele, A. A fully automated TerraSAR-X based flood service. ISPRS J. Photogramm. Remote Sens. 2015, 104, 203–212.

- Giustarini, L.; Hostache, R.; Kavetski, D.; Chini, M.; Corato, G.; Schlaffer, S.; Matgen, P. Probabilistic flood mapping using synthetic aperture radar data. IEEE Trans. Geosci. Remote Sens. 2016, 54, 6958–6969.

- Moreira, A.; Prats-Iraola, P.; Younis, M.; Krieger, G.; Hajnsek, I.; Papathanassiou, K.P. A tutorial on synthetic aperture radar. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–43.

- Di Martino, G.; Iodice, A.; Riccio, D.; Ruello, G. A Novel Approach for Disaster Monitoring: Fractal Models and Tools. IEEE Trans. Geosci. Remote Sens. 2007, 45, 1559–1570.

- Clement, M.; Kilsby, C.; Moore, P. Multi-temporal synthetic aperture radar flood mapping using change detection. J. Flood Risk Manag. 2018, 11, 152–168.

- Grimaldi, S.; Xu, J.; Li, Y.; Pauwels, V.R.; Walker, J.P. Flood mapping under vegetation using single SAR acquisitions. Remote Sens. Environ. 2020, 237, 111582.

- Pierdicca, N.; Chini, M.; Pulvirenti, L.; Macina, F. Integrating physical and topographic information into a fuzzy scheme to map flooded area by SAR. Sensors 2008, 8, 4151–4164.

- Richards, J.A.; Woodgate, P.W.; Skidmore, A.K. An explanation of enhanced radar backscattering from flooded forests. Int. J. Remote Sens. 1987, 8, 1093–1100.

- Tsyganskaya, V.; Martinis, S.; Marzahn, P.; Ludwig, R. SAR-based detection of flooded vegetation–a review of characteristics and approaches. Int. J. Remote Sens. 2018, 39, 2255–2293.

- Moser, L.; Schmitt, A.; Wendleder, A.; Roth, A. Monitoring of the Lac Bam Wetland Extent Using Dual-Polarized X-Band SAR Data. Remote Sens. 2016, 8, 302.

- Alsdorf, D.E.; Melack, J.M.; Dunne, T.; Mertes, L.A.; Hess, L.L.; Smith, L.C. Interferometric radar measurements of water level changes on the Amazon flood plain. Nature 2000, 404, 174–177.

- Pulvirenti, L.; Chini, M.; Pierdicca, N.; Boni, G. Use of SAR Data for Detecting Floodwater in Urban and Agricultural Areas: The Role of the Interferometric Coherence. IEEE Trans. Geosci. Remote Sens. 2016, 54, 1532–1544.

- Refice, A.; Capolongo, D.; Pasquariello, G.; D’Addabbo, A.; Bovenga, F.; Nutricato, R.; Lovergine, F.P.; Pietranera, L. SAR and InSAR for Flood Monitoring: Examples With COSMO-SkyMed Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2711–2722.

- Zebker, H.A.; Villasenor, J. Decorrelation in interferometric radar echoes. IEEE Trans. Geosci. Remote Sens. 1992, 30, 950–959.

- Schepanski, K.; Wright, T.J.; Knippertz, P. Evidence for flash floods over deserts from loss of coherence in InSAR imagery. J. Geophys. Res. Atmos. 2012, 117, D20101.

- Kim, J.W.; Lu, Z.; Lee, H.; Shum, C.; Swarzenski, C.M.; Doyle, T.W.; Baek, S.H. Integrated analysis of PALSAR/Radarsat-1 InSAR and ENVISAT altimeter data for mapping of absolute water level changes in Louisiana wetlands. Remote Sens. Environ. 2009, 113, 2356–2365.

- Mao, W.; Wang, X.; Liu, G.; Zhang, R.; Shi, Y.; Pirasteh, S. Estimation and Compensation of Ionospheric Phase Delay for Multi-Aperture InSAR: An Azimuth Split-Spectrum Interferometry Approach. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5209414.

- Li, Y.; Martinis, S.; Wieland, M.; Schlaffer, S.; Natsuaki, R. Urban flood mapping using SAR intensity and interferometric coherence via Bayesian network fusion. Remote Sens. 2019, 11, 2231.

- Alsdorf, D.; Smith, L.; Melack, J. Amazon floodplain water level changes measured with interferometric SIR-C radar. IEEE Trans. Geosci. Remote Sens. 2001, 39, 423–431.

- Chini, M.; Pulvirenti, L.; Pierdicca, N. Analysis and Interpretation of the COSMO-SkyMed Observations of the 2011 Japan Tsunami. IEEE Geosci. Remote Sens. Lett. 2012, 9, 467–471.

- Deijns, A.A.J.; Dewitte, O.; Thiery, W.; d’Oreye, N.; Malet, J.P.; Kervyn, F. Timing landslide and flash flood events from SAR satellite: A regionally applicable methodology illustrated in African cloud-covered tropical environments. Nat. Hazards Earth Syst. Sci. 2022, 22, 3679–3700.

- Freeman, A. SAR Calibration: An Overview. IEEE Trans. Geosci. Remote Sens. 1992, 30, 1107–1121.

- Ulander, L.M. Radiometric slope correction of synthetic-aperture radar images. IEEE Trans. Geosci. Remote Sens. 1996, 34, 1115–1122.

- Imperatore, P. SAR Imaging Distortions Induced by Topography: A Compact Analytical Formulation for Radiometric Calibration. Remote Sens. 2021, 13, 3318.

- Imperatore, P.; Di Martino, G. SAR Radiometric Calibration Based on Differential Geometry: From Theory to Experimentation on SAOCOM Imagery. Remote Sens. 2023, 15, 1286.

- Amitrano, D.; Di Martino, G.; Iodice, A.; Mitidieri, F.; Papa, M.N.; Riccio, D.; Ruello, G. Sentinel-1 for Monitoring Reservoirs: A Performance Analysis. Remote Sens. 2014, 6, 10676–10693.

- Kropatsch, W.; Strobl, D. The generation of SAR layover and shadow maps from digital elevation models. IEEE Trans. Geosci. Remote Sens. 1990, 28, 98–107.

- Imperatore, P.; Sansosti, E. Multithreading based parallel processing for image geometric coregistration in sar interferometry. Remote Sens. 2021, 13, 1963.

- Di Martino, G.; Poderico, M.; Poggi, G.; Riccio, D.; Verdoliva, L. Benchmarking Framework for SAR Despeckling. IEEE Trans. Geosci. Remote Sens. 2014, 52, 1596–1615.

- Lee, J.S. Refined filtering of image noise using local statistics. Comput. Graph. Image Process. 1981, 15, 380–389.

- Di Martino, G.; Di Simone, A.; Iodice, A.; Riccio, D. Benchmarking Framework for Multitemporal SAR Despeckling. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5207826.

- Sezgin, M.; Sankur, B. Survey over image thresholding techniques and quantitative performance evaluation. J. Electron. Imaging 2004, 13, 146–165.

- Lee, J.; Jurkevich, I. Segmentation of SAR images. IEEE Trans. Geosci. Remote Sens. 1989, 27, 674–680.

- Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A.; Zoppetti, C. Nonparametric Change Detection in Multitemporal SAR Images Based on Mean-Shift Clustering. IEEE Trans. Geosci. Remote Sens. 2013, 51, 2022–2031.

- Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Landuyt, L.; Van Wesemael, A.; Schumann, G.J.P.; Hostache, R.; Verhoest, N.E.C.; Van Coillie, F.M.B. Flood Mapping Based on Synthetic Aperture Radar: An Assessment of Established Approaches. IEEE Trans. Geosci. Remote Sens. 2019, 57, 722–739.

- Pulvirenti, L.; Marzano, F.S.; Pierdicca, N.; Mori, S.; Chini, M. Discrimination of Water Surfaces, Heavy Rainfall, and Wet Snow Using COSMO-SkyMed Observations of Severe Weather Events. IEEE Trans. Geosci. Remote Sens. 2014, 52, 858–869.

- Schumann, G.; Baldassarre, G.D.; Alsdorf, D.; Bates, P.D. Near real-time flood wave approximation on large rivers from space: Application to the River Po, Italy. Water Resour. Res. 2010, 46, W05601.

- Kittler, J.; Illingworth, J. Minimum error thresholding. Pattern Recognit. 1986, 19, 41–47.

- Martinis, S.; Twele, A.; Voigt, S. Towards operational near real-time flood detection using a split-based automatic thresholding procedure on high resolution TerraSAR-X data. Nat. Hazards Earth Syst. Sci. 2009, 9, 303–314.

- Moser, G.; Serpico, S.B. Generalized minimum-error thresholding for unsupervised change detection from SAR amplitude imagery. IEEE Trans. Geosci. Remote Sens. 2006, 44, 2972–2982.

- Bovolo, F.; Bruzzone, L. A Split-Based Approach to Unsupervised Change Detection in Large-Size Multitemporal Images: Application to Tsunami-Damage Assessment. IEEE Trans. Geosci. Remote Sens. 2007, 45, 1658–1670.

- Chini, M.; Hostache, R.; Giustarini, L.; Matgen, P. A hierarchical split-based approach for parametric thresholding of SAR images: Flood inundation as a test case. IEEE Trans. Geosci. Remote Sens. 2017, 55, 6975–6988.

- Ashman, K.A.; Bird, C.M.; Zepf, S.E. Detecting bimodality in astronomical datasets. Astron. J. 1994, 108, 2348.

- Long, S.; Fatoyimbo, T.E.; Policelli, F. Flood extent mapping for Namibia using change detection and thresholding with SAR. Environ. Res. Lett. 2014, 9, 035002.

- Bovolo, F.; Bruzzone, L. The Time Variable in Data Fusion: A Change Detection Perspective. IEEE Geosci. Remote Sens. Mag. 2015, 3, 8–26.

- Rignot, E.J.; Zyl, J.V. Change Detection Techniques for ERS-1 SAR Data. IEEE Trans. Geosci. Remote Sens. 1993, 31, 896–906.

- Bazi, Y.; Bruzzone, L.; Melgani, F. An Unsupervised Approach Based on the Generalized Gaussian Model to Automatic Change Detection in Multitemporal SAR Images. IEEE Trans. Geosci. Remote Sens. 2005, 43, 874–887.

- Xiong, B.; Chen, J.M.; Kuang, G. A change detection measure based on a likelihood ratio and statistical properties of SAR intensity images. Remote Sens. Lett. 2012, 3, 267–275.

- Amitrano, D.; Di Martino, G.; Iodice, A.; Riccio, D.; Ruello, G. Small Reservoirs Extraction in Semiarid Regions Using Multitemporal Synthetic Aperture Radar Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 3482–3492.

- Martinez, J.; Toan, T.L. Mapping of flood dynamics and spatial distribution of vegetation in the Amazon floodplain using multitemporal SAR data. Remote Sens. Environ. 2007, 108, 209–223.

- Kosko, B. Neural Networks and Fuzzy Systems: A Dynamical Systems Approach to Machine Intelligence; Prentice Hall: Englewood Cliffs, NJ, USA, 1992.

- Mendel, J.M. Fuzzy Logic Systems for Engineering: A Tutorial. Proc. IEEE 1995, 83, 345–377.

- Pulvirenti, L.; Pierdicca, N.; Chini, M.; Guerriero, L. An algorithm for operational flood mapping from Synthetic Aperture Radar (SAR) data using fuzzy logic. Nat. Hazards Earth Syst. Sci. 2011, 11, 529–540.

- Franceschetti, G.; Iodice, A.; Riccio, D. A Canonical Problem in Electromagnetic Backscattering From Buildings. IEEE Trans. Geosci. Remote Sens. 2002, 40, 1787–1801.

- Ferrazzoli, P.; Guerriero, L. Radar sensitivity to tree geometry and woody volume: A model analysis. IEEE Trans. Geosci. Remote Sens. 1995, 33, 360–371.

- Dasgupta, A.; Grimaldi, S.; Ramsankaran, R.A.; Pauwels, V.R.; Walker, J.P. Towards operational SAR-based flood mapping using neuro-fuzzy texture-based approaches. Remote Sens. Environ. 2018, 215, 313–329.

- Karmakar, G.C.; Dooley, L.S. A generic fuzzy rule based image segmentation algorithm. Pattern Recognit. Lett. 2002, 23, 1215–1227.

- Zhang, M.; Chen, F.; Liang, D.; Tian, B.; Yang, A. Use of Sentinel-1 GRD SAR Images to Delineate Flood Extent in Pakistan. Sustainability 2020, 12, 5784.

- Amitrano, D.; Di Martino, G.; Iodice, A.; Riccio, D.; Ruello, G. A New Framework for SAR Multitemporal Data RGB Representation: Rationale and Products. IEEE Trans. Geosci. Remote Sens. 2015, 53, 117–133.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I.H. Textural Features for Image Classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621.

- Amitrano, D.; Belfiore, V.; Cecinati, F.; Di Martino, G.; Iodice, A.; Mathieu, P.P.; Medagli, S.; Poreh, D.; Riccio, D.; Ruello, G. Urban Areas Enhancement in Multitemporal SAR RGB Images Using Adaptive Coherence Window and Texture Information. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 3740–3752.

- Parikh, H.; Patel, S.; Patel, V. Classification of SAR and PolSAR images using deep learning: A review. Int. J. Image Data Fusion 2020, 11, 1–32.

- Bentivoglio, R.; Isufi, E.; Jonkman, S.N.; Taormina, R. Deep learning methods for flood mapping: A review of existing applications and future research directions. Hydrol. Earth Syst. Sci. 2022, 26, 4345–4378.

- Ho, T.K. The random subspace method for constructing decision forests. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 832–844.

- Prokhorenkova, L.; Gusev, G.; Vorobev, A.; Dorogush, A.V.; Gulin, A. CatBoost: Unbiased Boosting with Categorical Features. In Proceedings of the 32nd International Conference on Neural Information Processing Systems, Red Hook, NY, USA, 3–8 December 2018; pp. 6639–6649.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Ganjirad, M.; Delavar, M.R. Flood risk mapping using random forest and support vector machine. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2023, 10, 201–208.

- Chu, Y.; Lee, H. Performance of Random Forest Classifier for Flood Mapping Using Sentinel-1 SAR Images. Korean J. Remote Sens. 2022, 38, 375–386.

- Mastro, P.; Masiello, G.; Serio, C.; Pepe, A. Change Detection Techniques with Synthetic Aperture Radar Images: Experiments with Random Forests and Sentinel-1 Observations. Remote Sens. 2022, 14, 3323.

- Tanim, A.H.; McRae, C.B.; Tavakol-davani, H.; Goharian, E. Flood Detection in Urban Areas Using Satellite Imagery and Machine Learning. Water 2022, 14, 1140.

- Panahi, M.; Rahmati, O.; Kalantari, Z.; Darabi, H.; Rezaie, F.; Moghaddam, D.D.; Ferreira, C.S.S.; Foody, G.; Aliramaee, R.; Bateni, S.M.; et al. Large-scale dynamic flood monitoring in an arid-zone floodplain using SAR data and hybrid machine-learning models. J. Hydrol. 2022, 611, 128001.

- Tipping, M.E. Sparse Bayesian Learning and the Relevance Vector Machine. J. Mach. Learn. Res. 2001, 1, 211–244.

- Sharifi, A. Flood Mapping Using Relevance Vector Machine and SAR Data: A Case Study from Aqqala, Iran. J. Indian Soc. Remote Sens. 2020, 48, 1289–1296.

- Elkhrachy, I. Flash Flood Water Depth Estimation Using SAR Images, Digital Elevation Models, and Machine Learning Algorithms. Remote Sens. 2022, 14, 440.

- Saravanan, S.; Abijith, D.; Reddy, N.M.; KSS, P.; Janardhanam, N.; Sathiyamurthi, S.; Sivakumar, V. Flood susceptibility mapping using machine learning boosting algorithms techniques in Idukki district of Kerala India. Urban Clim. 2023, 49, 101503.

- Awad, M.; Khanna, R. Support Vector Regression. In Efficient Learning Machines: Theories, Concepts, and Applications for Engineers and System Designers; Springer: Berlin/Heidelberg, Germany, 2015; pp. 67–80.

- Mehravar, S.; Razavi-Termeh, S.V.; Moghimi, A.; Ranjgar, B.; Foroughnia, F.; Amani, M. Flood susceptibility mapping using multi-temporal SAR imagery and novel integration of nature-inspired algorithms into support vector regression. J. Hydrol. 2023, 617, 129100.

- Hao, C.; Yunus, A.P.; Subramanian, S.S.; Avtar, R. Basin-wide flood depth and exposure mapping from SAR images and machine learning models. J. Environ. Manag. 2021, 297, 113367. [Google Scholar] [CrossRef]

- Shahabi, H.; Shirzadi, A.; Ghaderi, K.; Omidvar, E.; Al-Ansari, N.; Clague, J.J.; Geertsema, M.; Khosravi, K.; Amini, A.; Bahrami, S.; et al. Flood detection and susceptibility mapping using Sentinel-1 remote sensing data and a machine learning approach: Hybrid intelligence of bagging ensemble based on K-Nearest Neighbor classifier. Remote Sens. 2020, 12, 266. [Google Scholar] [CrossRef]

- Cover, T.; Hart, P. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 1967, 13, 21–27. [Google Scholar] [CrossRef]

- Wu, X.; Zhang, Z.; Xiong, S.; Zhang, W.; Tang, J.; Li, Z.; An, B.; Li, R. A Near-Real-Time Flood Detection Method Based on Deep Learning and SAR Images. Remote Sens. 2023, 15, 2046. [Google Scholar] [CrossRef]

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III 18. Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241. [Google Scholar]

- Li, Z.; Demir, I. U-net-based semantic classification for flood extent extraction using SAR imagery and GEE platform: A case study for 2019 central US flooding. Sci. Total Environ. 2023, 869, 161757. [Google Scholar] [CrossRef]

- Liu, B.; Li, X.; Zheng, G. Coastal Inundation Mapping From Bitemporal and Dual-Polarization SAR Imagery Based on Deep Convolutional Neural Networks. J. Geophys. Res. Ocean. 2019, 124, 9101–9113. [Google Scholar] [CrossRef]

- Nemni, E.; Bullock, J.; Belabbes, S.; Bromley, L. Fully Convolutional Neural Network for Rapid Flood Segmentation in Synthetic Aperture Radar Imagery. Remote Sens. 2020, 12, 2532. [Google Scholar] [CrossRef]

- Katiyar, V.; Tamkuan, N.; Nagai, M. Near-real-time flood mapping using off-the-shelf models with sar imagery and deep learning. Remote Sens. 2021, 13, 2334. [Google Scholar] [CrossRef]

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Wang, J.; Wang, S.; Wang, F.; Zhou, Y.; Wang, Z.; Ji, J.; Xiong, Y.; Zhao, Q. FWENet: A deep convolutional neural network for flood water body extraction based on SAR images. Int. J. Digit. Earth 2022, 15, 345–361.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Zagoruyko, S.; Komodakis, N. Learning to compare image patches via convolutional neural networks. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 4353–4361.

- Zhao, B.; Sui, H.; Liu, J. Siam-DWENet: Flood inundation detection for SAR imagery using a cross-task transfer siamese network. Int. J. Appl. Earth Obs. Geoinf. 2023, 116, 103132.

- Shi, H.; Cao, G.; Ge, Z.; Zhang, Y.; Fu, P. Double-branch network with pyramidal convolution and iterative attention for hyperspectral image classification. Remote Sens. 2021, 13, 1403.

- Roy, A.G.; Navab, N.; Wachinger, C. Concurrent spatial and channel ‘squeeze & excitation’ in fully convolutional networks. In Proceedings of the Medical Image Computing and Computer Assisted Intervention–MICCAI 2018: 21st International Conference, Granada, Spain, 16–20 September 2018; Proceedings, Part I. Springer: Berlin/Heidelberg, Germany, 2018; pp. 421–429.

- McFeeters, S.K. The use of the Normalized Difference Water Index (NDWI) in the delineation of open water features. Int. J. Remote Sens. 1996, 17, 1425–1432.

- Pohl, C.; Genderen, J.L.V. Multisensor image fusion in remote sensing: Concepts, methods and applications. Int. J. Remote Sens. 1998, 19, 823–854.

- Irwin, K.; Beaulne, D.; Braun, A.; Fotopoulos, G. Fusion of SAR, optical imagery and airborne LiDAR for surface water detection. Remote Sens. 2017, 9, 890.

- Quang, N.H.; Tuan, V.A.; Hao, N.T.P.; Hang, L.T.T.; Hung, N.M.; Anh, V.L.; Phuong, L.T.M.; Carrie, R. Synthetic aperture radar and optical remote sensing image fusion for flood monitoring in the Vietnam lower Mekong basin: A prototype application for the Vietnam Open Data Cube. Eur. J. Remote Sens. 2019, 52, 599–612.

- Muñoz, D.F.; Muñoz, P.; Moftakhari, H.; Moradkhani, H. From local to regional compound flood mapping with deep learning and data fusion techniques. Sci. Total Environ. 2021, 782, 146927.

- Seydi, S.T.; Saeidi, V.; Kalantar, B.; Ueda, N.; Genderen, J.L.V.; Maskouni, F.H.; Aria, F.A. Fusion of the Multisource Datasets for Flood Extent Mapping Based on Ensemble Convolutional Neural Network (CNN) Model. J. Sens. 2022, 2022, 2887502.

- He, X.; Zhang, S.; Xue, B.; Zhao, T.; Wu, T. Cross-modal change detection flood extraction based on convolutional neural network. Int. J. Appl. Earth Obs. Geoinf. 2023, 117, 103197.

- Rambour, C.; Audebert, N.; Koeniguer, E.; Le Saux, B.; Crucianu, M.; Datcu, M. Flood detection in time series of optical and SAR images. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 43, 1343–1346.

- Benoudjit, A.; Guida, R. A Novel Fully Automated Mapping of the Flood Extent on SAR Images Using a Supervised Classifier. Remote Sens. 2019, 11, 779.

- Islam, K.A.; Uddin, M.S.; Kwan, C.; Li, J. Flood detection using multi-modal and multi-temporal images: A comparative study. Remote Sens. 2020, 12, 2455.

- Horritt, M.S.; Mason, D.C.; Luckman, A.J. Flood boundary delineation from synthetic aperture radar imagery using a statistical active contour model. Int. J. Remote Sens. 2001, 22, 2489–2507.

- Kass, M.; Witkin, A.; Terzopoulos, D. Snakes: Active contour models. Int. J. Comput. Vis. 1988, 1, 321–331.

- Horritt, M. A statistical active contour model for SAR image segmentation. Image Vis. Comput. 1999, 17, 213–224.

- Mason, D.; Speck, R.; Devereux, B.; Schumann, G.P.; Neal, J.; Bates, P. Flood Detection in Urban Areas Using TerraSAR-X. IEEE Trans. Geosci. Remote Sens. 2010, 48, 882–894.

- Tong, X.; Luo, X.; Liu, S.; Xie, H.; Chao, W.; Liu, S.; Liu, S.; Makhinov, A.N.; Makhinova, A.F.; Jiang, Y. An approach for flood monitoring by the combined use of Landsat 8 optical imagery and COSMO-SkyMed radar imagery. ISPRS J. Photogramm. Remote Sens. 2018, 136, 144–153.

- Ahmadi, S.; Homayouni, S. A Novel Active Contours Model for Environmental Change Detection from Multitemporal Synthetic Aperture Radar Images. Remote Sens. 2020, 12, 1746.

- Foroughnia, F.; Alfieri, S.M.; Menenti, M.; Lindenbergh, R. Evaluation of SAR and Optical Data for Flood Delineation Using Supervised and Unsupervised Classification. Remote Sens. 2022, 14, 3718.

- Moradkhani, H.; Hsu, K.L.; Gupta, H.; Sorooshian, S. Uncertainty assessment of hydrologic model states and parameters: Sequential data assimilation using the particle filter. Water Resour. Res. 2005, 41, W05012.

- Di Mauro, C.; Hostache, R.; Matgen, P.; Pelich, R.; Chini, M.; van Leeuwen, P.J.; Nichols, N.K.; Blöschl, G. Assimilation of probabilistic flood maps from SAR data into a coupled hydrologic–hydraulic forecasting model: A proof of concept. Hydrol. Earth Syst. Sci. 2021, 25, 4081–4097.

- Hostache, R.; Chini, M.; Giustarini, L.; Neal, J.; Kavetski, D.; Wood, M.; Corato, G.; Pelich, R.; Matgen, P. Near-Real-Time Assimilation of SAR-Derived Flood Maps for Improving Flood Forecasts. Water Resour. Res. 2018, 54, 5516–5535.

- Annis, A.; Nardi, F.; Castelli, F. Simultaneous assimilation of water levels from river gauges and satellite flood maps for near-real-time flood mapping. Hydrol. Earth Syst. Sci. 2022, 26, 1019–1041.

- Wongchuig-Correa, S.; de Paiva, R.C.D.; Biancamaria, S.; Collischonn, W. Assimilation of future SWOT-based river elevations, surface extent observations and discharge estimations into uncertain global hydrological models. J. Hydrol. 2020, 590, 125473.

- Lai, X.; Liang, Q.; Yesou, H.; Daillet, S. Variational assimilation of remotely sensed flood extents using a 2-D flood model. Hydrol. Earth Syst. Sci. 2014, 18, 4325–4339.

- van Leeuwen, P.J.; Künsch, H.R.; Nerger, L.; Potthast, R.; Reich, S. Particle filters for high-dimensional geoscience applications: A review. Q. J. R. Meteorol. Soc. 2019, 145, 2335–2365.

- Di Mauro, C.; Hostache, R.; Matgen, P.; Pelich, R.; Chini, M.; van Leeuwen, P.J.; Nichols, N.; Blöschl, G. A Tempered Particle Filter to Enhance the Assimilation of SAR-Derived Flood Extent Maps Into Flood Forecasting Models. Water Resour. Res. 2022, 58, e2022WR031940.

- Amitrano, D.; Ciervo, F.; Di Martino, G.; Papa, M.N.; Iodice, A.; Koussoube, Y.; Mitidieri, F.; Riccio, D.; Ruello, G. Modeling Watershed Response in Semiarid Regions With High-Resolution Synthetic Aperture Radars. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2732–2745.

- Schreier, G. Geometrical properties of SAR images. In SAR Geocoding: Data and Systems; Herbert Wichmann: Karlsruhe, Germany, 1993; pp. 103–134.

- Gonzalez, R.C.; Woods, R.E. Digital Image Processing; Prentice Hall: Englewood Cliffs, NJ, USA, 2007.

- Amitrano, D.; Di Martino, G.; Iodice, A.; Riccio, D.; Ruello, G. An end-user oriented framework for the classification of multitemporal SAR images. Int. J. Remote Sens. 2016, 37, 248–261.

- Amitrano, D.; Guida, R.; Iervolino, P. Semantic Unsupervised Change Detection of Natural Land Cover With Multitemporal Object-Based Analysis on SAR Images. IEEE Trans. Geosci. Remote Sens. 2020, 59, 5494–5514.

- Matsuyama, T.; Hwang, V.S.S. SIGMA—A Knowledge-Based Aerial Image Understanding System; Plenum Press: New York, NY, USA, 1990.

- Wegmuller, U. Automated terrain corrected SAR geocoding. In Proceedings of the IEEE 1999 International Geoscience and Remote Sensing Symposium. IGARSS’99, Hamburg, Germany, 28 June–2 July 1999; Volume 3, pp. 1712–1714.

- Hess, L.L.; Melack, J.M.; Simonett, D.S. Radar detection of flooding beneath the forest canopy: A review. Int. J. Remote Sens. 1990, 11, 1313–1325.

- Hess, L.L.; Melack, J.M.; Novo, E.M.; Barbosa, C.C.; Gastil, M. Dual-season mapping of wetland inundation and vegetation for the central Amazon basin. Remote Sens. Environ. 2003, 87, 404–428.

- Horritt, M.; Mason, D.; Cobby, D.; Davenport, I.; Bates, P. Waterline mapping in flooded vegetation from airborne SAR imagery. Remote Sens. Environ. 2003, 85, 271–281.

- Arnesen, A.S.; Silva, T.S.; Hess, L.L.; Novo, E.M.; Rudorff, C.M.; Chapman, B.D.; McDonald, K.C. Monitoring flood extent in the lower Amazon River floodplain using ALOS/PALSAR ScanSAR images. Remote Sens. Environ. 2013, 130, 51–61.

- Chapman, B.; McDonald, K.; Shimada, M.; Rosenqvist, A.; Schroeder, R.; Hess, L. Mapping Regional Inundation with Spaceborne L-Band SAR. Remote Sens. 2015, 7, 5440–5470.

- Grings, F.; Ferrazzoli, P.; Jacobo-Berlles, J.; Karszenbaum, H.; Tiffenberg, J.; Pratolongo, P.; Kandus, P. Monitoring flood condition in marshes using EM models and Envisat ASAR observations. IEEE Trans. Geosci. Remote Sens. 2006, 44, 936–942.

- Lang, M.W.; Townsend, P.A.; Kasischke, E.S. Influence of incidence angle on detecting flooded forests using C-HH synthetic aperture radar data. Remote Sens. Environ. 2008, 112, 3898–3907.

- Marti-Cardona, B.; Dolz-Ripolles, J.; Lopez-Martinez, C. Wetland inundation monitoring by the synergistic use of ENVISAT/ASAR imagery and ancilliary spatial data. Remote Sens. Environ. 2013, 139, 171–184.

- Cazals, C.; Rapinel, S.; Frison, P.L.; Bonis, A.; Mercier, G.; Mallet, C.; Corgne, S.; Rudant, J.P. Mapping and Characterization of Hydrological Dynamics in a Coastal Marsh Using High Temporal Resolution Sentinel-1A Images. Remote Sens. 2016, 8, 570.

- Plank, S.; Jüssi, M.; Martinis, S.; Twele, A. Mapping of flooded vegetation by means of polarimetric Sentinel-1 and ALOS-2/PALSAR-2 imagery. Int. J. Remote Sens. 2017, 38, 3831–3850.

- Tsyganskaya, V.; Martinis, S.; Marzahn, P.; Ludwig, R. Detection of Temporary Flooded Vegetation Using Sentinel-1 Time Series Data. Remote Sens. 2018, 10, 1286.

- Landuyt, L.; Verhoest, N.E.; Van Coillie, F.M. Flood mapping in vegetated areas using an unsupervised clustering approach on Sentinel-1 and -2 imagery. Remote Sens. 2020, 12, 3611.

- Pulvirenti, L.; Pierdicca, N.; Chini, M.; Guerriero, L. Monitoring Flood Evolution in Vegetated Areas Using COSMO-SkyMed Data: The Tuscany 2009 Case Study. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 1807–1816.

- Voormansik, K.; Praks, J.; Antropov, O.; Jagomägi, J.; Zalite, K. Flood mapping with TerraSAR-X in forested regions in Estonia. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 7, 562–577.

- Cohen, J.; Riihimäki, H.; Pulliainen, J.; Lemmetyinen, J.; Heilimo, J. Implications of boreal forest stand characteristics for X-band SAR flood mapping accuracy. Remote Sens. Environ. 2016, 186, 47–63.

- Pierdicca, N.; Pulvirenti, L.; Boni, G.; Squicciarino, G.; Chini, M. Mapping flooded vegetation using COSMO-SkyMed: Comparison with polarimetric and optical data over rice fields. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 2650–2662.

- Tsyganskaya, V.; Martinis, S.; Marzahn, P. Flood Monitoring in Vegetated Areas Using Multitemporal Sentinel-1 Data: Impact of Time Series Features. Water 2019, 11, 1938.

- Evans, T.L.; Costa, M.; Telmer, K.; Silva, T.S.F. Using ALOS/PALSAR and RADARSAT-2 to Map Land Cover and Seasonal Inundation in the Brazilian Pantanal. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2010, 3, 560–575.

- Brisco, B.; Schmitt, A.; Murnaghan, K.; Kaya, S.; Roth, A. SAR polarimetric change detection for flooded vegetation. Int. J. Digit. Earth 2013, 6, 103–114.

- Zhao, L.; Yang, J.; Li, P.; Zhang, L. Seasonal inundation monitoring and vegetation pattern mapping of the Erguna floodplain by means of a RADARSAT-2 fully polarimetric time series. Remote Sens. Environ. 2014, 152, 426–440.

- Betbeder, J.; Rapinel, S.; Corgne, S.; Pottier, E.; Hubert-Moy, L. TerraSAR-X dual-pol time-series for mapping of wetland vegetation. ISPRS J. Photogramm. Remote Sens. 2015, 107, 90–98.

- Olthof, I.; Rainville, T. Evaluating Simulated RADARSAT Constellation Mission (RCM) Compact Polarimetry for Open-Water and Flooded-Vegetation Wetland Mapping. Remote Sens. 2020, 12, 1476.

- Canisius, F.; Brisco, B.; Murnaghan, K.; Van Der Kooij, M.; Keizer, E. SAR Backscatter and InSAR Coherence for Monitoring Wetland Extent, Flood Pulse and Vegetation: A Study of the Amazon Lowland. Remote Sens. 2019, 11, 720.

- Giustarini, L.; Hostache, R.; Matgen, P.; Schumann, G.J.P.; Bates, P.D.; Mason, D.C. A Change Detection Approach to Flood Mapping in Urban Areas Using TerraSAR-X. IEEE Trans. Geosci. Remote Sens. 2013, 51, 2417–2430.

- Mason, D.; Giustarini, L.; Garcia-Pintado, J.; Cloke, H. Detection of flooded urban areas in high resolution Synthetic Aperture Radar images using double scattering. Int. J. Appl. Earth Obs. Geoinf. 2014, 28, 150–159.

- Iervolino, P.; Guida, R.; Iodice, A.; Riccio, D. Flooding water depth estimation with high-resolution SAR. IEEE Trans. Geosci. Remote Sens. 2014, 53, 2295–2307.

- Chini, M.; Pelich, R.; Pulvirenti, L.; Pierdicca, N.; Hostache, R.; Matgen, P. Sentinel-1 InSAR Coherence to Detect Floodwater in Urban Areas: Houston and Hurricane Harvey as A Test Case. Remote Sens. 2019, 11, 107.

- Chaabani, C.; Chini, M.; Abdelfattah, R.; Hostache, R.; Chokmani, K. Flood mapping in a complex environment using bistatic TanDEM-X/TerraSAR-X InSAR coherence. Remote Sens. 2018, 10, 1873.

- Li, Y.; Martinis, S.; Wieland, M. Urban flood mapping with an active self-learning convolutional neural network based on TerraSAR-X intensity and interferometric coherence. ISPRS J. Photogramm. Remote Sens. 2019, 152, 178–191.

- Zhao, J.; Li, Y.; Matgen, P.; Pelich, R.; Hostache, R.; Wagner, W.; Chini, M. Urban-aware U-Net for large-scale urban flood mapping using multitemporal Sentinel-1 intensity and interferometric coherence. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4209121.

- Pelich, R.; Chini, M.; Hostache, R.; Matgen, P.; Pulvirenti, L.; Pierdicca, N. Mapping floods in urban areas from dual-polarization InSAR coherence data. IEEE Geosci. Remote Sens. Lett. 2022, 19, 4018405.

- Ohki, M.; Tadono, T.; Itoh, T.; Ishii, K.; Yamanokuchi, T.; Shimada, M. Flood Detection in Built-Up Areas Using Interferometric Phase Statistics of PALSAR-2 Data. IEEE Geosci. Remote Sens. Lett. 2020, 17, 1904–1908.

- Manavalan, R. SAR image analysis techniques for flood area mapping—Literature survey. Earth Sci. Inform. 2017, 10, 1–14.

- Manavalan; Rao, Y.S.; Mohan, B.K.; Sharma, S. Mapping the layover-shadow pixels of elevated flooded regions of RADARSAT-2 SLC data. In Proceedings of the 2014 IEEE Geoscience and Remote Sensing Symposium, Quebec City, QC, Canada, 13–18 July 2014; pp. 1821–1824.

- Gelautz, M.; Frick, H.; Raggam, J.; Burgstaller, J.; Leberl, F. SAR image simulation and analysis of alpine terrain. ISPRS J. Photogramm. Remote Sens. 1998, 53, 17–38.

- Zhao, J.; Pelich, R.; Hostache, R.; Matgen, P.; Cao, S.; Wagner, W.; Chini, M. Deriving exclusion maps from C-band SAR time-series in support of floodwater mapping. Remote Sens. Environ. 2021, 265, 112668.

- Song, Y.S.; Sohn, H.G.; Park, C.H. Efficient water area classification using Radarsat-1 SAR imagery in a high relief mountainous environment. Photogramm. Eng. Remote Sens. 2007, 73, 285–296.

- Strozzi, T.; Wiesmann, A.; Kääb, A.; Joshi, S.; Mool, P. Glacial lake mapping with very high resolution satellite SAR data. Nat. Hazards Earth Syst. Sci. 2012, 12, 2487–2498.

- Li, N.; Wang, R.; Liu, Y.; Du, K.; Chen, J.; Deng, Y. Robust river boundaries extraction of dammed lakes in mountain areas after Wenchuan Earthquake from high resolution SAR images combining local connectivity and ACM. ISPRS J. Photogramm. Remote Sens. 2014, 94, 91–101.

- Metternicht, G.; Hurni, L.; Gogu, R. Remote sensing of landslides: An analysis of the potential contribution to geo-spatial systems for hazard assessment in mountainous environments. Remote Sens. Environ. 2005, 98, 284–303.

- Wieland, M.; Fichtner, F.; Martinis, S.; Groth, S.; Krullikowski, C.; Plank, S.; Motagh, M. S1S2-Water: A global dataset for semantic segmentation of water bodies from Sentinel-1 and Sentinel-2 satellite images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 17, 1084–1099.

- Horritt, M. A methodology for the validation of uncertain flood inundation models. J. Hydrol. 2006, 326, 153–165.

- Hagen, A. Fuzzy set approach to assessing similarity of categorical maps. Int. J. Geogr. Inf. Sci. 2003, 17, 235–249.

- Pappenberger, F.; Frodsham, K.; Beven, K.; Romanowicz, R.; Matgen, P. Fuzzy set approach to calibrating distributed flood inundation models using remote sensing observations. Hydrol. Earth Syst. Sci. 2007, 11, 739–752.

- Wealands, S.R.; Grayson, R.B.; Walker, J.P. Quantitative comparison of spatial fields for hydrological model assessment—Some promising approaches. Adv. Water Resour. 2005, 28, 15–32.

- Baumgardner, M.F.; Biehl, L.L.; Landgrebe, D.A. 220 Band AVIRIS Hyperspectral Image Data Set: June 12, 1992 Indian Pine Test Site 3. Purdue Univ. Res. Repos. 2015, 10, 991.

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep Learning in Remote Sensing: A Comprehensive Review and List of Resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

- Bonafilia, D.; Tellman, B.; Anderson, T.; Issenberg, E. Sen1Floods11: A georeferenced dataset to train and test deep learning flood algorithms for Sentinel-1. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; pp. 835–845.

- Salamon, P.; McCormick, N.; Reimer, C.; Clarke, T.; Bauer-Marschallinger, B.; Wagner, W.; Martinis, S.; Chow, C.; Böhnke, C.; Matgen, P.; et al. The New, Systematic Global Flood Monitoring Product of the Copernicus Emergency Management Service. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 1053–1056.

- Brakenridge, G.R. Global Active Archive of Large Flood Events. Available online: https://floodobservatory.colorado.edu/Archives/index.html (accessed on 7 December 2023).

- Bai, Y.; Wu, W.; Yang, Z.; Yu, J.; Zhao, B.; Liu, X.; Yang, H.; Mas, E.; Koshimura, S. Enhancement of Detecting Permanent Water and Temporary Water in Flood Disasters by Fusing Sentinel-1 and Sentinel-2 Imagery Using Deep Learning Algorithms: Demonstration of Sen1Floods11 Benchmark Datasets. Remote Sens. 2021, 13, 2220.

- Bereczky, M.; Wieland, M.; Krullikowski, C.; Martinis, S.; Plank, S. Sentinel-1-Based Water and Flood Mapping: Benchmarking Convolutional Neural Networks Against an Operational Rule-Based Processing Chain. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 2023–2036.

- Long, Y.; Xia, G.S.; Li, S.; Yang, W.; Yang, M.Y.; Zhu, X.X.; Zhang, L.; Li, D. On Creating Benchmark Dataset for Aerial Image Interpretation: Reviews, Guidances, and Million-AID. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 4205–4230.

- Rambour, C.; Audebert, N.; Koeniguer, E.; Le Saux, B.; Crucianu, M.; Datcu, M. SEN12-FLOOD: A SAR and Multispectral Dataset for Flood Detection; IEEE: Piscataway, NJ, USA, 2020.

- Bischke, B.; Helber, P.; Schulze, C.; Srinivasan, V.; Dengel, A.R.; Borth, D. The Multimedia Satellite Task at MediaEval 2019. In Proceedings of the MediaEval Benchmarking Initiative for Multimedia Evaluation, Sophia Antipolis, France, 27–29 October 2019.

- Bauer-Marschallinger, B.; Cao, S.; Tupas, M.E.; Roth, F.; Navacchi, C.; Melzer, T.; Freeman, V.; Wagner, W. Satellite-Based Flood Mapping through Bayesian Inference from a Sentinel-1 SAR Datacube. Remote Sens. 2022, 14, 3673.

- Krullikowski, C.; Chow, C.; Wieland, M.; Martinis, S.; Bauer-Marschallinger, B.; Roth, F.; Matgen, P.; Chini, M.; Hostache, R.; Li, Y.; et al. Estimating Ensemble Likelihoods for the Sentinel-1-Based Global Flood Monitoring Product of the Copernicus Emergency Management Service. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 6917–6930.

- Schmitt, M.; Hughes, L.H.; Qiu, C.; Zhu, X.X. SEN12MS—A Curated Dataset of Georeferenced Multi-Spectral Sentinel-1/2 Imagery for Deep Learning and Data Fusion. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, IV-2/W7, 153–160.

- Mateo-Garcia, G.; Veitch-Michaelis, J.; Smith, L.; Oprea, S.V.; Schumann, G.; Gal, Y.; Baydin, A.G.; Backes, D. Towards global flood mapping onboard low cost satellites with machine learning. Sci. Rep. 2021, 11, 7249.

- Pekel, J.F.; Cottam, A.; Gorelick, N.; Belward, A.S. High-resolution mapping of global surface water and its long-term changes. Nature 2016, 540, 418–422.

- Chandran, R.V.; Ramakrishnan, D.; Chowdary, V.M.; Jeyaram, A.; Jha, A.M. Flood mapping and analysis using air-borne synthetic aperture radar: A case study of July 2004 flood in Baghmati river basin, Bihar. Curr. Sci. 2006, 90, 249–256.

- Pultz, T.J.; Leconte, R.; St-Laurent, L.; Peters, L. Flood Mapping with Airborne Sar Imagery: Case of the 1987 Saint-John River Flood. Can. Water Resour. J. 1991, 16, 173–189.

- Wang, C.; Pavelsky, T.M.; Yao, F.; Yang, X.; Zhang, S.; Chapman, B.; Song, C.; Sebastian, A.; Frizzelle, B.; Frankenberg, E.; et al. Flood Extent Mapping During Hurricane Florence With Repeat-Pass L-Band UAVSAR Images. Water Resour. Res. 2022, 58, e2021WR030606.

- Denbina, M.; Towfic, Z.J.; Thill, M.; Bue, B.; Kasraee, N.; Peacock, A.; Lou, Y. Flood Mapping Using UAVSAR and Convolutional Neural Networks. In Proceedings of the IGARSS 2020—2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; pp. 3247–3250.

- Kundu, S.; Lakshmi, V.; Torres, R. Flood Depth Estimation during Hurricane Harvey Using Sentinel-1 and UAVSAR Data. Remote Sens. 2022, 14, 1450.

- Salem, A.; Hashemi-Beni, L. Inundated Vegetation Mapping Using SAR Data: A Comparison of Polarization Configurations of UAVSAR L-Band and Sentinel C-Band. Remote Sens. 2022, 14, 6374.

- Schumann, G.; Giustarini, L.; Tarpanelli, A.; Jarihani, B.; Martinis, S. Flood Modeling and Prediction Using Earth Observation Data. Surv. Geophys. 2022, 44, 1553–1578.

- Ardila, J.; Laurila, P.; Kourkouli, P.; Strong, S. Persistent Monitoring and Mapping of Floods Globally Based on the Iceye Sar Imaging Constellation. In Proceedings of the IGARSS 2022—2022 IEEE International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022; pp. 6296–6299.

- Schumann, G.J. The need for scientific rigour and accountability in flood mapping to better support disaster response. Hydrol. Process. 2019, 33, 3138–3142.

- Pappenberger, F.; Beven, K.J. Ignorance is bliss: Or seven reasons not to use uncertainty analysis. Water Resour. Res. 2006, 42, W05302.

- Justice, C.; Belward, A.; Morisette, J.; Lewis, P.; Privette, J.; Baret, F. Developments in the ‘validation’ of satellite sensor products for the study of the land surface. Int. J. Remote Sens. 2000, 21, 3383–3390.

- Amitrano, D.; Guida, R.; Ruello, G. Multitemporal SAR RGB Processing for Sentinel-1 GRD Products: Methodology and Applications. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 1497–1507.

- Martinis, S.; Rieke, C. Backscatter analysis using multi-temporal and multi-frequency SAR data in the context of flood mapping at River Saale, Germany. Remote Sens. 2015, 7, 7732–7752.

- Zhang, M.; Li, Z.; Tian, B.; Zhou, J.; Tang, P. The backscattering characteristics of wetland vegetation and water-level changes detection using multi-mode SAR: A case study. Int. J. Appl. Earth Obs. Geoinf. 2016, 45, 1–13.

- Ulaby, F.T.; Long, D.G. Microwave Radar and Radiometric Remote Sensing; The University of Michigan Press: Ann Arbor, MI, USA, 2014.

- Baghdadi, N.; Zribi, M.; Loumagne, C.; Ansart, P.; Anguela, T.P. Analysis of TerraSAR-X data and their sensitivity to soil surface parameters over bare agricultural fields. Remote Sens. Environ. 2008, 112, 4370–4379.

- Franceschetti, G.; Iodice, A.; Migliaccio, M.; Riccio, D. Scattering from natural rough surfaces modeled by fractional Brownian motion two-dimensional processes. IEEE Trans. Antennas Propag. 1999, 47, 1405–1415.

- Di Martino, G.; Iodice, A.; Natale, A.; Riccio, D. Polarimetric Two-Scale Two-Component Model for the Retrieval of Soil Moisture Under Moderate Vegetation via L-Band SAR Data. IEEE Trans. Geosci. Remote Sens. 2016, 54, 2470–2491.

- Lv, S.; Meng, L.; Edwing, D.; Xue, S.; Geng, X.; Yan, X.H. High-Performance Segmentation for Flood Mapping of HISEA-1 SAR Remote Sensing Images. Remote Sens. 2022, 14, 5504.

- Kourkouli, P. Natural Disaster Monitoring Using ICEYE SAR Data; Elsevier: Amsterdam, The Netherlands, 2023; pp. 163–170.

- Yague-Martinez, N.; Leach, N.R.; Dasgupta, A.; Tellman, E.; Brown, J.S. Towards Frequent Flood Mapping with the Capella Sar System. The 2021 Eastern Australia Floods Case. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 6174–6177.

- Notti, D.; Giordan, D.; Caló, F.; Pepe, A.; Zucca, F.; Galve, J.P. Potential and limitations of open satellite data for flood mapping. Remote Sens. 2018, 10, 1673.

- Zavorotny, V.U.; Gleason, S.; Cardellach, E.; Camps, A. Tutorial on Remote Sensing Using GNSS Bistatic Radar of Opportunity. IEEE Geosci. Remote Sens. Mag. 2014, 2, 8–45.

- Chapman, B.; Russo, I.M.; Galdi, C.; Morris, M.; di Bisceglie, M.; Zuffada, C.; Lavalle, M. Comparison of Sar and CYGNSS Surface Water Extent Metrics Over the Yucatan Lake Wetland Site. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 966–969.

| | | Reference | |
|---|---|---|---|
| | | Flooded | Non-Flooded |
| SAR | Flooded | TP | FN |
| | Non-flooded | FP | TN |
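The confusion matrix above is the starting point for scalar map quality indicators. As a minimal Python sketch, assuming the SAR-derived map and the reference map are already co-registered binary rasters of the same size (nested lists here, 1 = flooded, 0 = non-flooded), the cells can be counted and combined as follows. The helper names `confusion_counts` and `quality_indicators`, and the selection of overall accuracy, critical success index (CSI), F1 score and Cohen's kappa, are hypothetical choices for illustration, not a set of indicators prescribed by this review. Cells are labeled as in the table (SAR flooded and reference non-flooded counts as FN); since all four indicators are symmetric in FP and FN, their values do not depend on that labeling convention.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    tp: int  # flooded in both the SAR map and the reference
    fn: int  # flooded in the SAR map, non-flooded in the reference (table cell FN)
    fp: int  # non-flooded in the SAR map, flooded in the reference (table cell FP)
    tn: int  # non-flooded in both maps


def confusion_counts(sar_map, ref_map):
    """Count the confusion-matrix cells of two equally sized binary maps.

    Hypothetical helper: maps are nested lists with 1 = flooded, 0 = non-flooded.
    """
    if len(sar_map) != len(ref_map):
        raise ValueError("maps must have the same number of rows")
    tp = fn = fp = tn = 0
    for sar_row, ref_row in zip(sar_map, ref_map):
        if len(sar_row) != len(ref_row):
            raise ValueError("maps must have the same number of columns")
        for s, r in zip(sar_row, ref_row):
            if s and r:
                tp += 1
            elif s:
                fn += 1  # SAR says flooded, reference says non-flooded
            elif r:
                fp += 1  # SAR says non-flooded, reference says flooded
            else:
                tn += 1
    return ConfusionCounts(tp=tp, fn=fn, fp=fp, tn=tn)


def _ratio(num, den):
    # Return NaN instead of raising when an indicator is undefined.
    return num / den if den else float("nan")


def quality_indicators(c):
    """Compute a few indicators that are symmetric in FP and FN."""
    n = c.tp + c.fn + c.fp + c.tn
    oa = _ratio(c.tp + c.tn, n)                    # overall accuracy
    csi = _ratio(c.tp, c.tp + c.fp + c.fn)         # critical success index (IoU of the flooded class)
    f1 = _ratio(2 * c.tp, 2 * c.tp + c.fp + c.fn)  # F1 score
    # Chance agreement from the row (SAR) and column (reference) marginals.
    p_e = _ratio((c.tp + c.fn) * (c.tp + c.fp) + (c.fp + c.tn) * (c.fn + c.tn), n * n)
    kappa = _ratio(oa - p_e, 1 - p_e)              # Cohen's kappa
    return {"oa": oa, "csi": csi, "f1": f1, "kappa": kappa}


sar = [[1, 1, 0],
       [0, 1, 0]]
ref = [[1, 0, 0],
       [0, 1, 1]]
counts = confusion_counts(sar, ref)
print(counts)  # ConfusionCounts(tp=2, fn=1, fp=1, tn=2)
print(quality_indicators(counts))
```

Undefined indicators (for example, CSI on a tile with no flooded pixels in either map) are returned as NaN rather than raising an error, which is one possible design choice when aggregating results over many tiles.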

| Dataset | Website (Accessed on 21 January 2024) | Ref. | Data | Patches | Coverage | Classification Method |
|---|---|---|---|---|---|---|
| Sen1Floods11 | https://github.com/cloudtostreet/Sen1Floods11 | [193] | dual-pol S1, 13-band S2 | 4800+ | global | Automatic labeling of most patches; hand labeling of the remaining ones. |
| S1S2-Water | https://zenodo.org/records/8314175 | [186] | dual-pol S1 GRD IW, 6-band S2 L1C, DEM | 75,000+ | global | Semi-automated labeling based on NDWI thresholding and manual refinement. |
| S1S2-Flood | https://zenodo.org/records/8314175 | [186] | dual-pol S1 GRD IW, 6-band S2 L1C, DEM | 38,000+ | global | Semi-automated labeling based on NDWI thresholding and manual refinement. |
| Sen12-Flood | https://clmrmb.github.io/SEN12-FLOOD | [121] | dual-pol S1 GRD IW, 12-band S2 L2A | 5600 S1; 3600 S2 | Africa, Iran, Australia | Binary flood/no-flood information derived from CEMS; no pixel-level annotation maps. |
| UNOSAT | https://unosat.org/products | [105] | VV S1 GRD IW | 58,000+ | Eastern Africa, South-East Asia | Automatic thresholding with a manually selected threshold and hybrid manual–automatic refinement. |
| CEMS | https://emergency.copernicus.eu/ | [194] | S1 GRD IW | Continuous update | global | Ensemble processing with three independent automatic detection algorithms. |
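The semi-automated labeling reported for S1S2-Water and S1S2-Flood starts from an NDWI threshold. A minimal Python sketch of such an automatic step, assuming co-registered green and near-infrared reflectances given as flat lists, computes the NDWI of McFeeters, `(green - nir) / (green + nir)`, and selects the water threshold with Otsu's method. The function names, the 256-bin histogram and the rule "water where NDWI exceeds the threshold" are assumptions made for illustration; the table does not specify the exact thresholding rule used by each dataset, and the manual refinement step is not modeled.

```python
def ndwi(green, nir):
    """McFeeters NDWI per pixel: (green - nir) / (green + nir).

    Pixels where green + nir == 0 are set to 0.0 (an arbitrary choice here).
    """
    out = []
    for g, n in zip(green, nir):
        total = g + n
        out.append((g - n) / total if total != 0 else 0.0)
    return out


def otsu_threshold(values, bins=256):
    """Otsu's method on a histogram of `values`.

    Returns the upper edge of the last bin of the lower class, chosen to
    maximize the between-class variance w0 * w1 * (m0 - m1) ** 2, with the
    class weights expressed as pixel counts.
    """
    lo, hi = min(values), max(values)
    if hi == lo:
        return lo  # constant input: no meaningful split
    width = (hi - lo) / bins
    hist = [0] * bins
    for v in values:
        hist[min(int((v - lo) / width), bins - 1)] += 1
    centers = [lo + (k + 0.5) * width for k in range(bins)]
    total = len(values)
    total_sum = sum(h * c for h, c in zip(hist, centers))

    best_t, best_var = lo, -1.0
    w0, sum0 = 0, 0.0
    for k in range(bins - 1):
        w0 += hist[k]
        sum0 += hist[k] * centers[k]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class empty: split undefined
        m0, m1 = sum0 / w0, (total_sum - sum0) / w1
        var_between = w0 * w1 * (m0 - m1) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, lo + (k + 1) * width
    return best_t


# Toy example: three water-like pixels (high green, low NIR) and three land-like pixels.
green = [0.10, 0.12, 0.11, 0.05, 0.04, 0.06]
nir = [0.02, 0.03, 0.02, 0.30, 0.25, 0.28]
index = ndwi(green, nir)
t = otsu_threshold(index)
water_mask = [v > t for v in index]
print(water_mask)  # [True, True, True, False, False, False]
```

In practice the threshold would be estimated on a whole scene or tile rather than on a handful of pixels, and permanent-water or cloud masks could be applied before the labels are refined manually.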

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Amitrano, D.; Di Martino, G.; Di Simone, A.; Imperatore, P. Flood Detection with SAR: A Review of Techniques and Datasets. Remote Sens. 2024, 16, 656. https://doi.org/10.3390/rs16040656
