Abstract

Model-based hyperspectral image (HSI) denoising methods have attracted continuous attention in the past decades, due to their effectiveness and interpretability. In this work, we aim at advancing model-based HSI denoising through a careful investigation of both the fidelity and regularization terms, or correspondingly the noise and the prior, by virtue of several recently developed techniques. Specifically, we formulate a novel unified probabilistic model for the HSI denoising task, within which the noise is assumed to be pixel-wise non-independent and identically distributed (non-i.i.d.) Gaussian, with parameters predicted by a pre-trained neural network, and the prior for the HSI is designed by incorporating the deep image prior (DIP) with total variation (TV) and spatio-spectral TV. To solve the resulting maximum a posteriori (MAP) estimation problem, we design a Monte Carlo Expectation–Maximization (MCEM) algorithm, in which the stochastic gradient Langevin dynamics (SGLD) method is used for computing the E-step, and the alternating direction method of multipliers (ADMM) is adopted for solving the optimization in the M-step. Experiments on both synthetic and real noisy HSI datasets have been conducted to verify the effectiveness of the proposed method.

1. Introduction

Hyperspectral images (HSIs) are acquired by high spectral resolution sensors, which generally have hundreds of bands ranging from ultraviolet to infrared wavelengths. Due to the richness of their spatial and spectral information, HSIs have been widely used in many applications [1,2,3,4,5], such as environmental monitoring, military surveillance and mineral exploration. However, due to various factors, including thermal electronics, dark current and stochastic error of photocounting in the imaging process, HSIs inevitably suffer from severe noise during acquisition, which hampers subsequent tasks. Therefore, as an important pre-processing step, HSI denoising has attracted much attention in the past decades.

Current HSI denoising methods can be roughly divided into two categories, i.e., model-driven methods and data-driven ones. Recently, due to the rapid advances in deep learning studies, data-driven methods, which try to directly learn a denoiser from paired noisy-clean HSIs in an end-to-end way, have attracted more attention [6,7,8,9,10]. Though they can achieve promising denoising results, the performance of these kinds of methods highly depends on the quality of training data and may not generalize well to realistic HSIs since the real noise is much more complex than that contained in the training data. Therefore, in this work, we are more interested in the model-driven HSI denoising methods, while also trying to borrow the merits of the data-driven ones.

Model-based HSI denoising methods are generally constructed according to the following observation model:

$$\mathcal{Y} = \mathcal{X} + \mathcal{N}, \qquad (1)$$

where $\mathcal{X}$ denotes the clean HSI, $\mathcal{N}$ the noise, and $\mathcal{Y}$ the corresponding noisy observation. Based on (1), one can formulate the HSI denoising problem as the following optimization, in general:

$$\hat{\mathcal{X}} = \arg\min_{\mathcal{X}} \; L(\mathcal{Y}, \mathcal{X}) + R(\mathcal{X}), \qquad (2)$$

where $L(\mathcal{Y}, \mathcal{X})$ is often referred to as the fidelity term, which ensures that the estimated clean HSI is not too far from the noisy observation and is related to the assumption on the noise $\mathcal{N}$; $R(\mathcal{X})$ is the regularization term, which characterizes the prior knowledge about the intrinsic structures underlying the clean HSI. As can be seen from (2), both the fidelity and the regularization terms contribute to the final estimation $\hat{\mathcal{X}}$, and therefore, how to properly design them to better characterize the intrinsic structures of the noise and the clean HSI is the main focus in this line of research. It should be mentioned that although earlier studies, for simplicity, treat the HSI as a matrix by reshaping it [11,12,13], a tensor is a more natural and powerful tool for representing it [14,15,16], and thus, we directly work on such a tensor representation in this paper.

For the fidelity term in (2), the most convenient choice is the squared $\ell_2$-norm due to its smoothness, which does not perform well under the sparse noise scenarios, such as stripe noise or deadline, that are often encountered in HSIs. Therefore, the $\ell_1$-norm is adopted in many previous studies [17,18,19,20], often incorporated with the squared $\ell_2$-norm. However, both the $\ell_2$-norm and $\ell_1$-norm, which correspond to certain noise assumptions, i.e., Gaussian noise and Laplace noise, respectively, may largely deviate from the real complex HSI noise. To alleviate this issue, the noise modeling strategy has been considered [21,22,23,24]. The main idea is to not pre-specify the fidelity term while modeling the noise distribution with certain parametric forms, such as mixture models, and to let the fidelity term be automatically tuned according to the adaptively learned parametric noise model. Recently, Rui et al. [25] proposed to pre-train a weight predictor for the weighted $\ell_2$-norm, which to some extent can be seen as a unification and generalization of previous noise modeling techniques.
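To make the difference between these fidelity terms concrete, the following NumPy sketch evaluates the squared $\ell_2$, $\ell_1$ and weighted $\ell_2$ fidelities on a toy cube containing a single sparse outlier. The function names, the toy data and the hand-set weights are illustrative assumptions and are not taken from the methods cited above.

```python
import numpy as np

def l2_fidelity(y, x):
    """Squared l2 fidelity (Gaussian noise assumption, up to constants)."""
    return 0.5 * np.sum((y - x) ** 2)

def l1_fidelity(y, x):
    """l1 fidelity (Laplace noise assumption, up to constants)."""
    return np.sum(np.abs(y - x))

def weighted_l2_fidelity(y, x, w):
    """Weighted squared l2 fidelity with one non-negative weight per element."""
    return 0.5 * np.sum(w * (y - x) ** 2)

rng = np.random.default_rng(0)
x = rng.random((4, 4, 3))      # toy "clean" cube: height x width x bands
y = x.copy()
y[0, 0, 0] += 10.0             # a single sparse outlier

# Hand-set weights (an assumption for illustration): distrust the outlier.
w = np.ones_like(y)
w[0, 0, 0] = 0.0

print(l2_fidelity(y, x), l1_fidelity(y, x), weighted_l2_fidelity(y, x, w))
```

The outlier dominates the squared $\ell_2$ term, contributes only linearly to the $\ell_1$ term, and is ignored entirely once its weight is set to zero, which is the behavior a learned weight predictor is meant to approximate.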

For the regularization term, various types of regularizers have been introduced to describe different priors of the clean HSI images. For example, total variation (TV) has been adopted to characterize the local smoothness of HSIs in the spatial domain [13,14,26] and has been generalized to the 3D version to also include the spectral information into consideration [27]. Similarly, the nuclear norm has been used to model the low-rankness of the HSIs [28]. Recently, motivated by the effectiveness for RGB images, the deep image prior (DIP) [29] has been considered for dealing with the HSI denoising problem [30] and further been incorporated with traditional regularizers [10,31]. Due to their flexibility in representing the HSIs, the DIP-based methods have achieved a promising performance for the HSI denoising task.

In this work, we aim at advancing model-driven HSI denoising through a careful investigation of both the fidelity and regularization terms, or correspondingly the noise and the prior, by virtue of several recently developed techniques. Specifically, we first formulate a unified probabilistic model for the HSI denoising task. In the model, the noise is assumed to be pixel-wise non-independent and identically distributed (non-i.i.d.) Gaussian, whose precision parameters are predicted by a pre-trained neural network [25]. The prior for the clean image is designed by incorporating the DIP with total variation (TV) and spatio-spectral TV (SSTV). The proposed noise model is expected to be flexible enough to describe the complex noise in real HSIs, while the prior is expected to characterize well the intrinsic structures of the underlying clean HSIs. To solve the resulting maximum a posteriori (MAP) estimation problem, we design a Monte Carlo Expectation–Maximization (MCEM) algorithm, in which the stochastic gradient Langevin dynamics (SGLD) method [32] is used for computing the E-step, and the alternating direction method of multipliers (ADMM) [33] is adopted for solving the optimization in the M-step.

Our contributions can be summarized as follows:

- We propose a new HSI denoising model within the probabilistic framework. The proposed model integrates a flexible noise model and a sophisticated HSI prior, which can faithfully characterize the intrinsic structures of both the noise and the clean HSI.

- We design an algorithm using the MCEM framework for solving the MAP estimation problem corresponding to the proposed model. The designed algorithm integrates the SGLD for the E-step and the ADMM for the M-step, both of which can be efficiently implemented.

- We demonstrate the applications of the proposed method on various HSI denoising examples, both synthetic and real, under different types of complex noise.

The rest of the paper is organized as follows. Section 2 briefly reviews related work. Section 3 discusses the details of the proposed method, including the probabilistic model and the solving algorithm. Section 4 introduces the experimental results on both synthetic and real noisy HSIs to demonstrate the effectiveness of the proposed method. Then, the paper is concluded in Section 5.

2. Related Work

In this section, we briefly review related studies on the HSI denoising task, which can be roughly categorized into model-driven methods and data-driven ones.

2.1. Model-Driven Methods

Traditionally, HSI denoising methods were mainly developed in a model-driven way, and the key is how to properly specify the fidelity and regularization terms in (2), which correspond to noise assumption and prior knowledge of HSIs, respectively. There are plenty of studies concerning designing the regularization term to impose certain prior knowledge to the clean HSIs. For example, considering the intrinsic correlation between the spectral bands, low-rank models [11,34] have been adopted, and to characterize the correlations between HSI patches, non-local similarity-oriented models [35,36,37] have been developed. In recent studies, TV [38] and its variants have shown their importance in HSI denoising. For example, He et al. [13] introduced TV to characterize the local smoothness in the spatial domain, Wang et al. [14] further took the spectral domain into consideration and proposed the SSTV regularizer, and Peng et al. [27] enhanced the 3D version of TV by deeper investigations on the gradient domain of the HSIs. Recently, motivated by its successful applications in RGB image processing, the DIP [29] was generalized to the HSI version [30] and its potential demonstrated in HSI denoising. Luo et al. [10] went a step further by integrating the TV-type regularizations with the DIP and achieved a remarkable HSI denoising performance. These studies motivate us to consider both the TV and SSTV in our denoising model.

For the noise assumption, a straightforward and most widely used choice is the Gaussian noise, which results in the squared $\ell_2$-norm as the fidelity term. However, it does not perform well under the sparse noise scenario, such as stripe noise or deadline, that is often encountered in HSIs. Thus, the $\ell_1$-norm is adopted in many previous studies [17,18,19,20], which is often combined with the squared $\ell_2$-norm for complementarity. Beyond the simple $\ell_2$-norm and $\ell_1$-norm, which still do not perfectly fit the real scenarios of noisy HSIs, a noise modeling strategy has been considered. For example, with the simple matrix-based low-rank prior for the clean HSI, Chen et al. [21] modeled the complex noise within real HSIs as a band-wise mixture of Gaussians, and Yue et al. [22] further considered a hierarchical Dirichlet process mixture model for the HSI noise. Then, Ma et al. [23] and Jiang et al. [39] combined the noise modeling strategy with more sophisticated HSI priors, such as self-similarity, tensor-based low-rankness and neural-network-based implicit prior. Those noise modeling methods have demonstrated their ability in precisely characterizing the complex noise structure within real HSIs and thus motivate us to also consider such a methodology.

2.2. Data-Driven Methods

In the last few years, motivated by the success of deep learning (DL) in a wide range of applications, data-driven methods have attracted increasing attention in HSI denoising. The main idea behind this kind of method is to learn a direct mapping from the noisy HSI to its clean counterpart in a data-driven way. In this line of research, the 2D convolutional neural network (CNN) was first considered [6,7] and showed promising performance. Then, for fully exploring both the spatial and spectral information, 3D CNN models were developed [8,40] and have demonstrated their advantages over 2D CNNs. Later, more advanced neural network architectures were introduced to the HSI denoising task, including recurrent neural network [41], U-Net [42], attention mechanism [43] and transformer [44,45]. These data-driven methods generally have a good performance on the HSI denoising task if provided proper training. However, their performance could be limited by the restricted training data, especially when generalizing to real noisy HSIs with unseen types of noise.

In addition to learning a direct noisy-to-clean mapping, the data-driven strategy can also facilitate the HSI denoising in an indirect way. Recently, Rui et al. [25] made a successful attempt in this direction. Specifically, they proposed an approach to efficiently learn a CNN for predicting the weights in the fidelity term of (2). This approach indeed realizes noise modeling for noisy HSI in a data-driven way, which can be simpler and more flexible than the noise modeling techniques discussed before. Thus, we integrate this idea into our denoising model.

3. Proposed Method

In this section, we first propose our probabilistic model for HSI denoising and then present the solving algorithm for the resulting MAP estimation problem.

3.1. Probabilistic Model

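Following the observation model (1) for the noisy HSI $\mathcal{Y}$ and placing a prior on the clean HSI $\mathcal{X}$, we obtain the general probabilistic model:

$$\mathcal{Y} \sim p(\mathcal{Y} \mid \mathcal{X}), \quad \mathcal{X} \sim p(\mathcal{X}), \qquad (3)$$

and then realize HSI denoising by estimating the posterior according to Bayes' rule:

$$p(\mathcal{X} \mid \mathcal{Y}) \propto p(\mathcal{Y} \mid \mathcal{X})\, p(\mathcal{X}). \qquad (4)$$

Therefore, the key is how to specify the likelihood $p(\mathcal{Y} \mid \mathcal{X})$, or equivalently the noise distribution $p(\mathcal{N})$, and the prior $p(\mathcal{X})$, which intrinsically correspond to the fidelity term and the regularization term in (2), respectively. In the following, we will discuss our approach for modeling the two terms and the resulting MAP estimation problem.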

3.1.1. Noise Modeling

As mentioned in the Introduction and Related Work, several studies have specifically considered modeling noise for HSI denoising [21,22,23,24,46], often using complex distributions. In this work, we use a simpler yet sufficiently flexible model for the noise, i.e., pixel-wise non-i.i.d. Gaussian. Specifically, denoting $y_{ijk}$ as the $(i,j,k)$-th pixel in the noisy HSI cube $\mathcal{Y}$ and, similarly, $x_{ijk}$ for $\mathcal{X}$, we propose the following model for the noisy HSI:

$$y_{ijk} \sim \mathcal{N}\left(x_{ijk},\, w_{ijk}^{-1}\right), \qquad (5)$$

where $\mathcal{N}(\mu, \tau^{-1})$ denotes the Gaussian distribution with mean $\mu$ and precision $\tau$, and $w_{ijk}$ is the corresponding precision parameter of the $(i,j,k)$-th pixel. Though seemingly simple, this noise model is indeed very flexible, since we can specify different precision parameters for different pixels to approximate very complex noise.

The pixel-wise noise model (5) then leads to the following likelihood term:

$$p(\mathcal{Y} \mid \mathcal{X}) \propto \exp\left(-\frac{1}{2} \sum_{i,j,k} w_{ijk}\,(y_{ijk} - x_{ijk})^2\right), \qquad (6)$$

where the weights are collected in a weight tensor $\mathcal{W}$ whose $(i,j,k)$-th element is $w_{ijk}$. As will be seen later, this form of likelihood results in a weighted least squares term in the optimization procedure, which facilitates a simpler solving algorithm.

The final issue in completing the noise model is how to specify the weight tensor $\mathcal{W}$. Here, we follow the strategy of Rui et al. [25], i.e., using a pre-trained neural network to predict $\mathcal{W}$ from the noisy HSI $\mathcal{Y}$ in a data-driven manner, which lets the noise parameters automatically fit the observations.
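As a minimal sketch of the pixel-wise non-i.i.d. Gaussian noise model, the snippet below draws a noisy cube with one precision per element and evaluates the exact negative log-likelihood, whose data-dependent part is a weighted least squares term. The symbols `x`, `y`, `w` and the randomly drawn precisions are assumptions for illustration; in the method described here the weights come from a pre-trained network, which is not reproduced.

```python
import numpy as np

def sample_noisy_hsi(x, w, rng):
    """Draw y = x + n with n_ijk ~ N(0, 1 / w_ijk), independently per element."""
    return x + rng.standard_normal(x.shape) / np.sqrt(w)

def neg_log_likelihood(y, x, w):
    """Exact -log p(y | x, w) for element-wise Gaussians with precisions w."""
    quad = 0.5 * np.sum(w * (y - x) ** 2)                      # weighted least squares
    log_norm = 0.5 * np.sum(np.log(2.0 * np.pi) - np.log(w))   # does not depend on x
    return quad + log_norm

rng = np.random.default_rng(0)
x = rng.random((8, 8, 5))                    # toy clean cube
w = rng.uniform(0.5, 50.0, size=x.shape)     # stand-in precisions (assumed)
y = sample_noisy_hsi(x, w, rng)

# Residuals whitened by sqrt(w) should look like standard normal draws.
whitened = np.sqrt(w) * (y - x)
print(neg_log_likelihood(y, x, w), whitened.std())
```

Because the normalizing term does not depend on `x`, maximizing this likelihood over the clean image only involves the weighted quadratic term.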

3.1.2. Prior Modeling

Traditionally, TV [47] and SSTV [17,48] regularizers have shown their effectiveness in HSI denoising, while recently, DIP has also shown its potential for this task [30]. Therefore, following the idea by Luo et al. [10], we incorporate the TV and SSTV with DIP, but in a more concise probabilistic model. Specifically, we define the prior for the clean HSI in the following way:

where is a neural network parameterized by , denotes the multivariate Gaussian distribution parameterized by and , is the identity matrix, and and are the TV and SSTV, respectively, defined as

and

where denotes the matrix -norm, i.e., the sum of absolute values of all elements in a matrix, and the derivative tensors , and are defined as

with , and being the finite derivative operators on the vertical, horizontal and spectral directions, respectively.
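For intuition, TV and SSTV can be sketched with forward differences in NumPy. This assumes a common anisotropic form, in which TV sums the absolute vertical and horizontal differences and SSTV sums the absolute spatial differences of the spectral difference; the boundary handling and exact weighting used in this paper may differ, and the function names are hypothetical.

```python
import numpy as np

def tv(x):
    """Anisotropic spatial TV of a cube shaped (height, width, bands)."""
    dv = np.diff(x, axis=0)   # vertical forward differences
    dh = np.diff(x, axis=1)   # horizontal forward differences
    return np.abs(dv).sum() + np.abs(dh).sum()

def sstv(x):
    """Spatio-spectral TV: spatial differences of the spectral difference."""
    ds = np.diff(x, axis=2)   # spectral forward differences
    return np.abs(np.diff(ds, axis=0)).sum() + np.abs(np.diff(ds, axis=1)).sum()

# A vertical ramp repeated in every band: spatially varying, spectrally flat.
ramp = np.arange(4, dtype=float)[:, None, None] * np.ones((4, 3, 2))
print(tv(ramp), sstv(ramp))
```

The ramp has non-zero TV but zero SSTV, which illustrates that SSTV only penalizes spatial variation that changes from band to band.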

Before proceeding, we briefly explain the nature of the proposed prior model. The first two lines of (7) are indeed a probabilistic reformulation of the parameterization and regularizations adopted by Luo et al. [10]. Specifically, the first line parameterizes the clean HSI by a neural network (DIP), and the second line is the probabilistic formulation of the TV and SSTV regularization terms used in [10]. Different from Luo et al. [10], who treat as a random input, following the standard way of DIP, we consider it as a latent variable and introduce a Gaussian prior (the third line of (7)). As shown in [49], such a strategy can benefit the performance of the DIP-based algorithm. We will further discuss its advantage in the following part and empirically demonstrate it in experiments.

3.1.3. Overall Model and MAP Estimation

Combining (6) and (7), we can obtain the following probabilistic generative model for HSI denoising:

where is the indicator function that ensures the event within the curly brackets to be true. Given that is indeed represented by and , we can omit the explicit expression of in the above joint distribution and write

Then, the posterior of can be obtained by

where we integrate out the latent variable , and is the only observation we have. Then, we can perform MAP estimation to :

Once the optimal is obtained, we can recover the estimated clean HSI by

where denotes the expectation with respect to the latent variable . Compared with conventional DIP-based methods that only input a single , we can take the expectation to obtain a model-averaged result. This is the key advantage of our proposed probabilistic model, which will be demonstrated in experiments.
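The model-averaged estimate can be approximated by averaging network outputs over several latent samples. The sketch below uses a hypothetical toy map `toy_network` in place of the DIP network and draws the latent samples from a standard normal rather than from the posterior, purely to show the averaging step.

```python
import numpy as np

def model_average(f, theta, z_samples):
    """Monte Carlo estimate of E_z[f_theta(z)] from a list of latent samples."""
    outputs = np.stack([f(theta, z) for z in z_samples], axis=0)
    return outputs.mean(axis=0)

def toy_network(theta, z):
    """Hypothetical stand-in for the DIP network: a fixed nonlinear map of z."""
    return np.tanh(theta @ z)

rng = np.random.default_rng(0)
theta = rng.standard_normal((6, 4))
z_samples = [rng.standard_normal(4) for _ in range(32)]   # assumed sampling scheme

x_single = toy_network(theta, z_samples[-1])           # conventional single-input output
x_avg = model_average(toy_network, theta, z_samples)   # model-averaged output
```

With a single sample the average reduces to the usual single-input DIP output, so the averaged estimate is a strict generalization of it.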

3.2. Solving Algorithm for the MAP Estimation

Our goal now is to effectively solve the MAP estimation problem (14). Note that since the objective involves a complex integral, its analytic form cannot be easily obtained. Therefore, we resort to the MCEM [50] framework to deal with the issue.

3.2.1. Overall EM Process

Following the general EM framework, we iterate between the E-step and M-step to solve the MAP estimation. In the E-step, we construct the surrogate function by taking the expectation with respect to the latent variable :

where denotes the current value of . In the M-step, we obtain a new value of by the following optimization:

Note that the expectation in the E-step cannot be exactly computed due to the analytically intractable posterior and the complex form of , and thus, we use the Monte Carlo strategy to approximate it. Once the expectation is properly approximated, we apply the ADMM to solve the optimization problem in the M-step. The whole process is summarized in Algorithm 1, and the details of the two alternating steps are discussed in the following parts.

| Algorithm 1 HSI denoising by noise modeling and DIP |

3.2.2. Monte Carlo E-Step for Updating Latent Variable

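To apply the Monte Carlo approximation to the expectation in (16), we first need to (approximately) sample from the distribution $p(\mathcal{Z} \mid \mathcal{Y}, \theta^{\mathrm{old}})$. There are various ways of performing such sampling, and we adopt the Langevin dynamics [32] due to its simplicity. Specifically, the sampling process can be written as

$$\mathcal{Z}_{t+1} = \mathcal{Z}_t + \frac{\delta^2}{2} \nabla_{\mathcal{Z}} \log p(\mathcal{Z}_t \mid \mathcal{Y}, \theta^{\mathrm{old}}) + \delta\, \epsilon_t, \qquad (18)$$

where $t$ denotes the time step for Langevin dynamics, $\delta$ is the step size, and $\epsilon_t$ the Gaussian white noise. According to (11), $\log p(\mathcal{Z} \mid \mathcal{Y}, \theta^{\mathrm{old}})$ can be evaluated as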

where is a constant irrelevant to , and thus, its gradient with respect to can be readily calculated using automatic differentiation provided by modern deep learning packages, such as PyTorch [51].

With a set of sampled s, denoted as , we can then use the Monte Carlo method to approximate :

Practically, we can even let [52,53] and use the following simplified approximation:

where with T being the total number of Langevin steps.
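The Langevin sampling step can be sketched in NumPy on a target whose score is known in closed form. Here a one-dimensional Gaussian stands in for the intractable posterior of the latent variable, and the update uses the common scaling of step²/2 on the gradient and step on the injected noise; both choices are assumptions for illustration. In the actual algorithm the gradient would come from automatic differentiation through the network.

```python
import numpy as np

def langevin_sample(grad_log_p, z0, step, n_steps, rng):
    """Unadjusted Langevin dynamics: z <- z + step^2/2 * score(z) + step * noise."""
    z = np.array(z0, dtype=float)
    for _ in range(n_steps):
        noise = rng.standard_normal(z.shape)
        z = z + 0.5 * step**2 * grad_log_p(z) + step * noise
    return z

# Stand-in target (assumed): N(mu, sigma^2), with score -(z - mu) / sigma^2.
mu, sigma = 2.0, 0.5

def grad_log_p(z):
    return -(z - mu) / sigma**2

rng = np.random.default_rng(0)
# Each entry of the vector is an independent chain started at zero.
z = langevin_sample(grad_log_p, np.zeros(5000), step=0.1, n_steps=500, rng=rng)
print(z.mean(), z.std())
```

After enough steps the chains are distributed approximately as the target, so keeping only the final state of one chain corresponds to the single-sample simplification described above.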

3.2.3. M-Step for Updating DIP Parameter

With the approximated surrogate function obtained in the E-step, we then update the DIP parameter in the M-step by the following optimization:

where

with being a constant irrelevant to . This optimization is indeed equivalent to

Optimization (24) can be solved by the ADMM [33]. Specifically, we can first introduce four auxiliary variables to reformulate the optimization model (24) as

Then, the augmented Lagrangian function can be written as follows:

where is the penalty parameter, and are the Lagrangian multipliers. By ADMM, the problem is then decomposed into the following subproblems:

-subproblems: The subproblems for updating are

which can be directly solved by

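in which $\mathcal{S}_{\tau}(\cdot)$ is the soft-thresholding operator with threshold $\tau$, defined as:

$$\mathcal{S}_{\tau}(x) = \operatorname{sign}(x)\,\max\left(|x| - \tau,\, 0\right),$$

and operates element-wise.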
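A minimal NumPy version of the element-wise soft-thresholding operator is given below; the argument name `tau` for the threshold is an assumed notation.

```python
import numpy as np

def soft_threshold(x, tau):
    """Element-wise soft-thresholding: shrink magnitudes by tau, clip at zero."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)

values = np.array([-3.0, -0.5, 0.0, 0.5, 3.0])
shrunk = soft_threshold(values, 1.0)
```

Entries with magnitude below the threshold are set to zero, and the others are moved toward zero by exactly `tau`, which is what produces sparse gradient maps in the auxiliary-variable updates.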

-subproblem: The subproblem of can be written as follows:

Though it cannot be analytically solved, we can update with respect to this optimization by several gradient steps, using solvers like Adam [54], which can be easily implemented by virtue of deep learning packages, such as PyTorch [51].

updating: The Lagrangian multipliers can then be updated by
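The structure of these three ADMM updates can be illustrated on a much simpler problem: one-dimensional weighted TV denoising, where the signal itself is the optimization variable instead of the DIP parameters. This is a deliberately simplified stand-in, not the algorithm of this paper. The signal update is solved in closed form here, whereas the corresponding subproblem in the paper is handled by gradient steps, and the scaled multiplier `u`, the values of `lam` and `rho`, and the toy data are all assumptions.

```python
import numpy as np

def soft_threshold(x, tau):
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)

def admm_weighted_tv_1d(y, w, lam, rho=1.0, n_iter=200):
    """Minimize 0.5 * sum(w * (y - x)^2) + lam * ||D x||_1 by scaled-form ADMM."""
    n = y.size
    D = np.diff(np.eye(n), axis=0)        # (n-1, n) forward-difference operator
    A = np.diag(w) + rho * D.T @ D        # system matrix of the x-update
    v = np.zeros(n - 1)                   # auxiliary variable, v = D x
    u = np.zeros(n - 1)                   # scaled Lagrangian multiplier
    for _ in range(n_iter):
        x = np.linalg.solve(A, w * y + rho * D.T @ (v - u))   # signal update
        v = soft_threshold(D @ x + u, lam / rho)              # auxiliary update
        u = u + D @ x - v                                     # multiplier update
    return x

rng = np.random.default_rng(0)
truth = np.concatenate([np.zeros(20), np.ones(20)])
y = truth + 0.1 * rng.standard_normal(40)
y[5] += 5.0                               # sparse outlier
w = np.ones(40)
w[5] = 1e-3                               # low weight on the corrupted sample
x_hat = admm_weighted_tv_1d(y, w, lam=0.5)
```

The low weight lets the TV term fill in the corrupted sample from its neighbors, mirroring how pixel-wise weights and TV-type priors cooperate in the full model.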

4. Experiments

In this section, we conduct both simulation and real case experiments to verify the effectiveness of the proposed method.

4.1. Experimental Settings

4.1.1. Datasets

For a comprehensive comparison, we conduct experiments on clean HSI datasets with simulated noise and noisy HSI datasets from real scenarios.

For the synthetic data, we artificially add noise of different types to different HSI datasets to generate the noisy HSIs. Specifically, we consider two commonly adopted HSI datasets:

- CAVE: The Columbia Imaging and Vision Laboratory (CAVE) dataset [55] contains 31 HSIs with real-world objects in indoor scenarios. Each HSI in this dataset has 31 bands and a spatial size of 512 × 512.

- ICVL: The Ben-Gurion University Interdisciplinary Computational Vision Laboratory (ICVL) dataset [56] is composed of 201 HSIs with a spatial resolution of 1392 × 1300 over 519 spectral bands. The HSIs in this dataset are captured on natural outdoor scenes with complex background structures. In our experiments, we select 11 HSIs for comparison.

Following the previous study [21], we consider five different types of complex noise:

- Case 1 (Non-i.i.d Gaussian): The entire HSI is corrupted by the zero-mean Gaussian noise with variance 10–70 for all bands.

- Case 2 (Gaussian + Impulse): In addition to the Gaussian noise in Case 1, 10 bands of each HSI are randomly selected and corrupted by impulse noise, whose ratio is between and .

- Case 3 (Gaussian + Deadline): In addition to the Gaussian noise in Case 1, 10 bands of each HSI are randomly selected and corrupted by deadline noise, whose ratio is between and .

- Case 4 (Gaussian + Stripe): In addition to the Gaussian noise in Case 1, 10 bands of each HSI are randomly selected and corrupted by stripe noise, whose ratio is between and .

- Case 5 (Mixture): Each band of HSI is randomly corrupted with at least one type of noise as that in the aforementioned cases.
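The noise cases above can be simulated along the following lines. This is a sketch under stated assumptions: intensities are scaled to [0, 1], the Gaussian level is drawn per band from a hypothetical range, and the `ratio` and `amplitude` values are placeholders, since the exact settings are not reproduced here.

```python
import numpy as np

def add_gaussian(x, rng, sigma_range=(10 / 255, 70 / 255)):
    """Zero-mean Gaussian noise with a different (assumed) level per band."""
    sigmas = rng.uniform(*sigma_range, size=x.shape[2])
    return x + rng.standard_normal(x.shape) * sigmas   # broadcasts over bands

def add_impulse(x, rng, bands, ratio):
    """Salt-and-pepper noise on a fraction `ratio` of pixels in selected bands."""
    y = x.copy()
    for b in bands:
        mask = rng.random(x.shape[:2]) < ratio
        salt = rng.random(x.shape[:2]) < 0.5
        y[..., b][mask & salt] = 1.0
        y[..., b][mask & ~salt] = 0.0
    return y

def _pick_columns(x, rng, ratio):
    n_cols = max(1, int(round(ratio * x.shape[1])))
    return rng.choice(x.shape[1], size=n_cols, replace=False)

def add_deadline(x, rng, bands, ratio):
    """Zero out a fraction `ratio` of columns in selected bands."""
    y = x.copy()
    for b in bands:
        y[:, _pick_columns(x, rng, ratio), b] = 0.0
    return y

def add_stripe(x, rng, bands, ratio, amplitude=0.25):
    """Add a constant random offset to a fraction `ratio` of columns."""
    y = x.copy()
    for b in bands:
        cols = _pick_columns(x, rng, ratio)
        y[:, cols, b] += rng.uniform(-amplitude, amplitude, size=cols.size)
    return y

rng = np.random.default_rng(0)
clean = rng.random((16, 16, 8))
bands = rng.choice(clean.shape[2], size=3, replace=False)
noisy = add_gaussian(clean, rng)                          # Case 1 style
noisy_imp = add_impulse(noisy, rng, bands, ratio=0.3)     # Case 2 style
noisy_dead = add_deadline(noisy, rng, bands, ratio=0.2)   # Case 3 style
noisy_stripe = add_stripe(noisy, rng, bands, ratio=0.2)   # Case 4 style
```

A mixture case in the spirit of Case 5 can be obtained by composing these functions with independently drawn band subsets.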

For the real data, we consider two HSIs of real-earth observations, i.e., Indian Pines (https://purr.purdue.edu/publications/1947/1, accessed on 10 May 2024) with the size of and Urban with the size of . Due to the complex environments of the acquisition process, the noise contained in these two HSIs has complex structures, as shown in Section 4.3.

4.1.2. Compared Methods and Evaluation Metrics

In the experiments, we compare our proposed method with three representative methods, including LRTDTV [14], a traditional model-based method using low-rank and TV as regularization; HySuDeep [57], which uses a plug-in CNN prior in the subspace learning-based denoising procedure; and S2DIP [10], which combines the DIP with TV-based prior.

For quantitative evaluation, we consider four metrics: Peak Signal-to-Noise Ratio (PSNR), Feature Similarity Index (FSIM) [58], Erreur Relative Globale Adimensionnelle de Synthèse (ERGAS) [59] and Spectral Angle Mapper (SAM) [60]. Among the four, PSNR and FSIM are metrics for general image quality assessment, while ERGAS and SAM are metrics specifically designed for HSIs.
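Two of these metrics are simple enough to sketch directly. The snippet below computes a band-averaged PSNR and the mean spectral angle in degrees; whether the reported numbers use exactly these averaging conventions is an assumption, and FSIM and ERGAS are omitted.

```python
import numpy as np

def mpsnr(ref, est, peak=1.0):
    """PSNR computed per band and averaged; cubes are (height, width, bands)."""
    mse = np.mean((ref - est) ** 2, axis=(0, 1))
    return np.mean(10.0 * np.log10(peak**2 / mse))

def sam_degrees(ref, est, eps=1e-12):
    """Mean angle, in degrees, between reference and estimated pixel spectra."""
    dot = np.sum(ref * est, axis=2)
    norms = np.linalg.norm(ref, axis=2) * np.linalg.norm(est, axis=2)
    cos = np.clip(dot / (norms + eps), -1.0, 1.0)
    return np.degrees(np.mean(np.arccos(cos)))

rng = np.random.default_rng(0)
ref = rng.random((8, 8, 5)) + 0.1
print(mpsnr(ref, ref + 0.1), sam_degrees(ref, 2.0 * ref))
```

PSNR reacts to the constant offset, while SAM is unaffected by a pure rescaling of each spectrum, which is why the two metrics are complementary for HSIs.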

4.1.3. Implementation Details

There are several implementation details of the proposed algorithm that should be described. The first concerns the pre-trained network for predicting the weight tensor. In the experiments, following [25], we use a CNN pre-trained on 10,000 noisy HSI patches extracted and augmented from the CAVE dataset, under the matrix factorization (MF) model. Although relatively simple to train, this pre-trained CNN has been shown to generalize to HSI denoising models other than MF, and it also works well empirically in our model.

There are several hyper-parameters in our algorithm which should also be discussed. First, there are two balance parameters and in our probabilistic model (11), and we simply set both of them to . Then, for the hyper-parameters in Algorithm 1, i.e., T, and , we set them as 5, and , respectively. Note that though they work well in our experiments, those hyper-parameters are not optimized.

For other competing methods, we follow the recommendations by the authors of the corresponding papers.

4.2. Results on the Synthetic Data

The quantitative results on the two datasets with five types of noise are summarized in Table 1 and Table 2, respectively. It can be seen that the proposed method consistently ranks among the best two in all noise settings on both datasets with respect to all four metrics, which demonstrates its effectiveness. Regarding the metrics specifically designed for HSIs, i.e., ERGAS and SAM, the advantage of the proposed method is clearer. Specifically, it achieves the best performance with respect to SAM in all noise cases on CAVE and in four cases on ICVL.

Table 1.

Quantitative results of all competing methods on the CAVE dataset. The best performance in each noise case is highlighted in bold.

Table 2.

Quantitative results of all competing methods on the ICVL dataset. The best performance in each noise case is highlighted in bold.

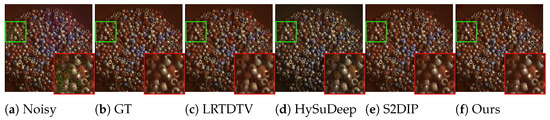

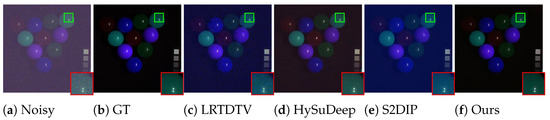

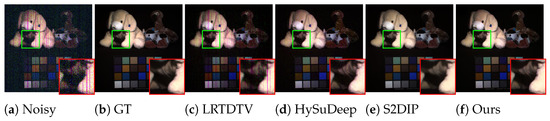

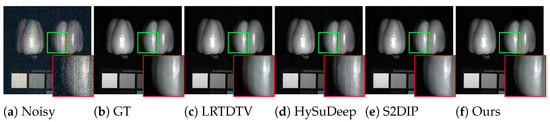

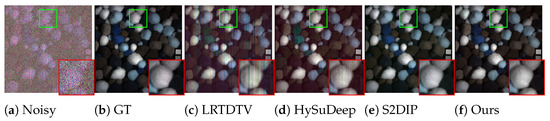

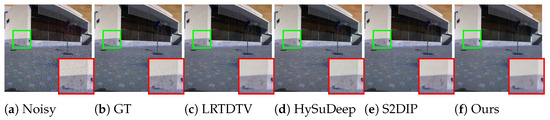

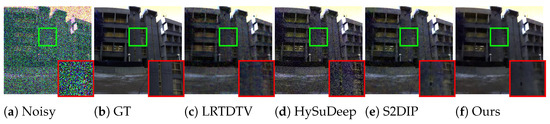

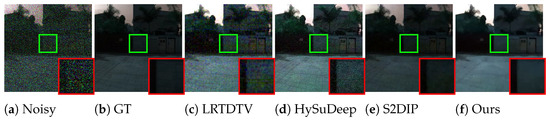

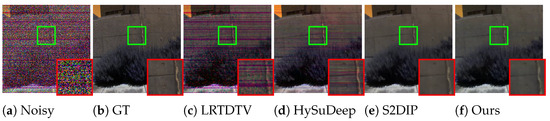

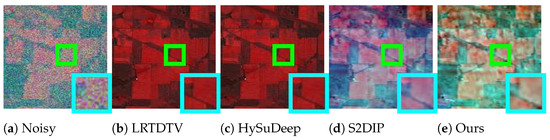

For a more intuitive comparison, we show example visual results on CAVE in Figures 1–5 and on ICVL in Figures 6–10, for all noise cases, where the pseudo color images are constructed from three randomly selected bands of the corresponding HSI. It can be seen from these figures that, in all noise cases, our proposed method consistently removes most of the noise while retaining more details of the HSIs than, or a comparable amount to, the other methods. In comparison, other methods either do not satisfactorily remove the noise or produce color distortion, especially on the CAVE dataset. For example, we can see from Figure 2 that although S2DIP can remove more noise than LRTDTV and HySuDeep, it produces a severe color distortion compared with the proposed method. It is also observed that the visual results of the proposed method on the ICVL dataset are not as good as on CAVE. This can be attributed to the training of the network for predicting the weight tensor. Specifically, this network is pre-trained on patches extracted from the CAVE dataset following [25], and thus, it is likely best suited to the CAVE dataset and may generalize less effectively to ICVL. We believe that this issue could be alleviated by increasing the diversity of the training data used to pre-train this network.

Figure 1.

Denoising results of competing methods on the Beads HSI in the CAVE dataset with noise of Case 1.

Figure 2.

Denoising results of competing methods on the Superball HSI in the CAVE dataset with noise of Case 2.

Figure 3.

Denoising results of competing methods on the Stuffed Toy HSI in the CAVE dataset with noise of Case 3.

Figure 4.

Denoising results of competing methods on the Peppers HSI in the CAVE dataset with noise of Case 4.

Figure 5.

Denoising results of competing methods on the Pompoms HSI in the CAVE dataset with noise of Case 5.

Figure 6.

Example denoising results of competing methods on the ICVL dataset with noise of Case 1.

Figure 7.

Example denoising results of competing methods on the ICVL dataset with noise of Case 2.

Figure 8.

Example denoising results of competing methods on the ICVL dataset with noise of Case 3.

Figure 9.

Example denoising results of competing methods on the ICVL dataset with noise of Case 4.

Figure 10.

Example denoising results of competing methods on the ICVL dataset with noise of Case 5.

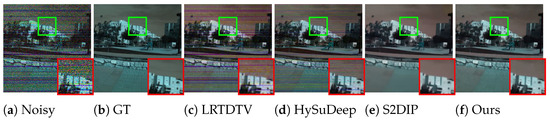

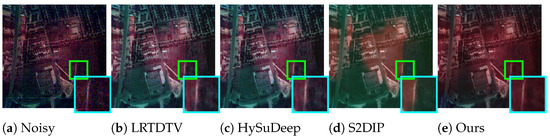

4.3. Experiments on the Real HSIs

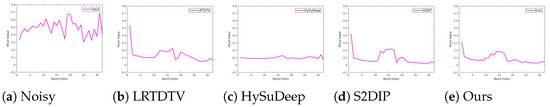

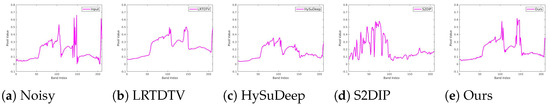

Since no ground truths are available for real noisy HSIs, the quantitative metrics cannot be computed. Therefore, we visualize the results in Figure 11 and Figure 12 for comparison, and the promising performance of the proposed method can be clearly observed. Specifically, for the IndianPines HSI shown in Figure 11, the results of LRTDTV and HySuDeep have severe color bias, indicating that the two methods fail to recover the spectral information; in comparison, both S2DIP and the proposed method can better recover the HSI. For the Urban HSI shown in Figure 12, we can see that both LRTDTV and HySuDeep fail to remove the stripe noise, while the proposed method not only removes the complex noise but also better preserves the spectral information. Compared with S2DIP, the proposed method obtains a result with less color bias. For a more comprehensive comparison, we also show the spectral signatures of pixels from the two HSIs in Figure 13 and Figure 14. We can see that LRTDTV and HySuDeep tend to oversmooth the spectral information, while S2DIP may generate unexpected fluctuations on the Urban HSI. In comparison, the spectral signatures produced by the proposed method are better on the Urban HSI and comparable to those of S2DIP on the IndianPines HSI.

Figure 11.

Denoising results of the IndianPines HSI.

Figure 12.

Denoising results of the Urban HSI.

Figure 13.

Spectral signatures of competing methods on the IndianPines HSI.

Figure 14.

Spectral signatures of competing methods on the Urban HSI.

4.4. More Analyses

In this part, we provide more analyses about the proposed method, including an ablation study on its components, computational complexity and empirical convergence.

4.4.1. Ablation Study

There are three key components in our HSI denoising method, i.e., the pixel-wise noise modeling, the TV-oriented DIP and the EM process, and we thus conduct ablation studies to show their effects. Specifically, we consider two simplified variants of our model, i.e., removing the TV and SSTV regularization, and treating the DIP input as random noise without the E-step update, respectively. Then, we apply these two variants, together with the full model and the S2DIP method, to the CAVE dataset with mixture noise. The results are shown in Table 3. It can be seen that all the components contribute to the final performance of the proposed method; in particular, removing either the TV regularization or the E-step results in a clear performance drop. In addition, considering that the only difference between the setting “w/o E-step” and S2DIP is the fidelity term, the effectiveness of the pixel-wise noise modeling in our method is also substantiated.

Table 3.

Results of the ablation study on the CAVE dataset with mixture noise.

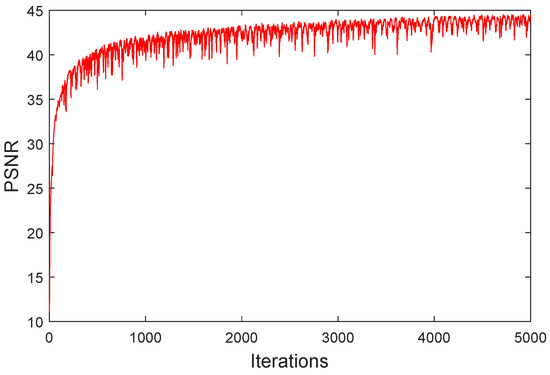

4.4.2. Computational Complexity and Convergence

Since the proposed algorithm adopts DIP and TV regularization terms similar to those of S2DIP, its computational complexity is similar to that of S2DIP, despite the additional SGLD updates in the E-step. Here, we report in Table 4 the empirical running times of the two methods on the CAVE dataset, within which the size of the HSIs is (since the other two methods were implemented without GPU acceleration, we do not compare with them).

Table 4.

Empirical computational complexity comparison between the proposed method and S2DIP. The evaluations were conducted using an NVIDIA RTX 2080 Ti GPU.

Due to the complex form of the optimization procedure of our method, a theoretical analysis of its convergence is difficult. Therefore, we instead show empirically in Figure 15 the convergence behavior of the proposed algorithm on the Balloon HSI from the CAVE dataset under the mixture noise case. It can be seen that the proposed method converges to a promising solution within an affordable number of iterations.

Figure 15.

Empirical convergence behavior, in terms of PSNR, of the proposed method.
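A convergence curve of this kind can be produced by evaluating the PSNR of the current estimate against the clean reference after each iteration. The sketch below uses the standard PSNR definition averaged over bands; the helper names (`psnr`, `mean_psnr`), the peak value of 1.0, and the toy data, in which the error is simply halved at each iteration, are assumptions for illustration and do not reproduce our experiments.

```python
import math


def psnr(clean, estimate, peak=1.0):
    """PSNR in dB between two equally sized bands given as flat lists."""
    mse = sum((c - e) ** 2 for c, e in zip(clean, estimate)) / len(clean)
    if mse == 0.0:
        return math.inf
    return 10.0 * math.log10(peak ** 2 / mse)


def mean_psnr(clean_bands, estimate_bands, peak=1.0):
    """Average the band-wise PSNR over all spectral bands."""
    values = [psnr(c, e, peak) for c, e in zip(clean_bands, estimate_bands)]
    return sum(values) / len(values)


# Toy two-band "HSI" and a fixed initial error pattern (both hypothetical).
clean = [[0.2, 0.4, 0.6, 0.8], [0.1, 0.3, 0.5, 0.7]]
error = [[0.10, -0.05, 0.08, -0.02], [-0.06, 0.04, -0.03, 0.09]]

history = []
for t in range(5):
    scale = 0.5 ** t  # stand-in for an algorithm whose error halves per iteration
    estimate = [
        [c + scale * d for c, d in zip(c_band, d_band)]
        for c_band, d_band in zip(clean, error)
    ]
    history.append(mean_psnr(clean, estimate))

for t, value in enumerate(history):
    print(f"iteration {t}: mean PSNR = {value:.2f} dB")
```

Plotting `history` against the iteration index gives the type of curve shown in Figure 15.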

5. Conclusions

In this paper, we have proposed a new model-driven HSI denoising method. Specifically, we have presented a unified probabilistic model that integrates both the pixel-wise non-i.i.d. Gaussian noise assumption and the TV-oriented DIP for better noise and prior modeling. Then, an MCEM-type algorithm has been designed for solving the resulting MAP estimation problem, in which the SGLD and the ADMM algorithms have been adopted for the E-step and the M-step, respectively. A series of experiments on both synthetic and real noisy HSI datasets have been conducted to verify the effectiveness of the proposed method. The results show that the proposed method achieves promising performance on various complex noise cases, especially on the real noisy HSIs. In the future, we will try to further improve the noise and prior modeling for HSIs by virtue of deep generative models, such as GAN [61] and DDPM [62].

Author Contributions

Conceptualization, L.Y. and Q.Z.; methodology, L.Y. and Q.Z.; software, L.Y.; writing—original draft preparation, L.Y.; writing—review and editing, Q.Z.; supervision, Z.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the China NSFC projects, grant numbers 12226004, 62076196 and 62272375.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Khan, M.J.; Khan, H.S.; Yousaf, A.; Khurshid, K.; Abbas, A. Modern trends in hyperspectral image analysis: A review. IEEE Access 2018, 6, 14118–14129.

- Stuart, M.B.; McGonigle, A.J.; Willmott, J.R. Hyperspectral imaging in environmental monitoring: A review of recent developments and technological advances in compact field deployable systems. Sensors 2019, 19, 3071.

- Shimoni, M.; Haelterman, R.; Perneel, C. Hyperspectral imaging for military and security applications: Combining myriad processing and sensing techniques. IEEE Geosci. Remote Sens. Mag. 2019, 7, 101–117.

- Okada, N.; Maekawa, Y.; Owada, N.; Haga, K.; Shibayama, A.; Kawamura, Y. Automated identification of mineral types and grain size using hyperspectral imaging and deep learning for mineral processing. Minerals 2020, 10, 809.

- Khan, U.; Paheding, S.; Elkin, C.P.; Devabhaktuni, V.K. Trends in deep learning for medical hyperspectral image analysis. IEEE Access 2021, 9, 79534–79548.

- Yuan, Q.; Zhang, Q.; Li, J.; Shen, H.; Zhang, L. Hyperspectral image denoising employing a spatial–spectral deep residual convolutional neural network. IEEE Trans. Geosci. Remote Sens. 2018, 57, 1205–1218.

- Chang, Y.; Yan, L.; Fang, H.; Zhong, S.; Liao, W. HSI-DeNet: Hyperspectral image restoration via convolutional neural network. IEEE Trans. Geosci. Remote Sens. 2018, 57, 667–682.

- Dong, W.; Wang, H.; Wu, F.; Shi, G.; Li, X. Deep spatial–spectral representation learning for hyperspectral image denoising. IEEE Trans. Comput. Imaging 2019, 5, 635–648.

- Zhuang, L.; Ng, M.K. FastHyMix: Fast and parameter-free hyperspectral image mixed noise removal. IEEE Trans. Neural Netw. Learn. Syst. 2021, 34, 4702–4716.

- Luo, Y.S.; Zhao, X.L.; Jiang, T.X.; Zheng, Y.B.; Chang, Y. Hyperspectral mixed noise removal via spatial-spectral constrained unsupervised deep image prior. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 9435–9449.

- Zhang, H.; He, W.; Zhang, L.; Shen, H.; Yuan, Q. Hyperspectral image restoration using low-rank matrix recovery. IEEE Trans. Geosci. Remote Sens. 2013, 52, 4729–4743.

- He, W.; Zhang, H.; Zhang, L.; Shen, H. Hyperspectral image denoising via noise-adjusted iterative low-rank matrix approximation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 3050–3061.

- He, W.; Zhang, H.; Zhang, L.; Shen, H. Total-variation-regularized low-rank matrix factorization for hyperspectral image restoration. IEEE Trans. Geosci. Remote Sens. 2015, 54, 178–188.

- Wang, Y.; Peng, J.; Zhao, Q.; Leung, Y.; Zhao, X.L.; Meng, D. Hyperspectral image restoration via total variation regularized low-rank tensor decomposition. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 11, 1227–1243.

- Xue, J.; Zhao, Y.; Huang, S.; Liao, W.; Chan, J.C.W.; Kong, S.G. Multilayer Sparsity-Based Tensor Decomposition for Low-Rank Tensor Completion. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 6916–6930.

- Xue, J.; Zhao, Y.; Bu, Y.; Chan, J.C.W.; Kong, S.G. When Laplacian Scale Mixture Meets Three-Layer Transform: A Parametric Tensor Sparsity for Tensor Completion. IEEE Trans. Cybern. 2022, 52, 13887–13901.

- Aggarwal, H.K.; Majumdar, A. Hyperspectral image denoising using spatio-spectral total variation. IEEE Geosci. Remote Sens. Lett. 2016, 13, 442–446.

- Du, B.; Huang, Z.; Wang, N.; Zhang, Y.; Jia, X. Joint weighted nuclear norm and total variation regularization for hyperspectral image denoising. Int. J. Remote Sens. 2018, 39, 334–355.

- Xie, T.; Li, S.; Sun, B. Hyperspectral images denoising via nonconvex regularized low-rank and sparse matrix decomposition. IEEE Trans. Image Process. 2019, 29, 44–56.

- Zhuang, L.; Ng, M.K. Hyperspectral mixed noise removal by ℓ1-norm-based subspace representation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 1143–1157.

- Chen, Y.; Cao, X.; Zhao, Q.; Meng, D.; Xu, Z. Denoising hyperspectral image with non-iid noise structure. IEEE Trans. Cybern. 2017, 48, 1054–1066.

- Yue, Z.; Meng, D.; Sun, Y.; Zhao, Q. Hyperspectral image restoration under complex multi-band noises. Remote Sens. 2018, 10, 1631.

- Ma, T.H.; Xu, Z.; Meng, D.; Zhao, X.L. Hyperspectral image restoration combining intrinsic image characterization with robust noise modeling. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 14, 1628–1644.

- Peng, J.; Wang, H.; Cao, X.; Liu, X.; Rui, X.; Meng, D. Fast noise removal in hyperspectral images via representative coefficient total variation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–17.

- Rui, X.; Cao, X.; Xie, Q.; Yue, Z.; Zhao, Q.; Meng, D. Learning an explicit weighting scheme for adapting complex HSI noise. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 6739–6748.

- He, S.; Zhou, H.; Wang, Y.; Cao, W.; Han, Z. Super-resolution reconstruction of hyperspectral images via low rank tensor modeling and total variation regularization. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 6962–6965.

- Peng, J.; Xie, Q.; Zhao, Q.; Wang, Y.; Yee, L.; Meng, D. Enhanced 3DTV Regularization and Its Applications on HSI Denoising and Compressed Sensing. IEEE Trans. Image Process. 2020, 29, 7889–7903.

- Huang, X.; Du, B.; Tao, D.; Zhang, L. Spatial-spectral weighted nuclear norm minimization for hyperspectral image denoising. Neurocomputing 2020, 399, 271–284.

- Ulyanov, D.; Vedaldi, A.; Lempitsky, V. Deep image prior. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 9446–9454.

- Sidorov, O.; Yngve Hardeberg, J. Deep hyperspectral prior: Single-image denoising, inpainting, super-resolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27 October–2 November 2019.

- Niresi, K.F.; Chi, C.Y. Unsupervised hyperspectral denoising based on deep image prior and least favorable distribution. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 5967–5983.

- Welling, M.; Teh, Y.W. Bayesian learning via stochastic gradient Langevin dynamics. In Proceedings of the 28th International Conference on Machine Learning (ICML-11), Bellevue, WA, USA, 28 June–2 July 2011; pp. 681–688.

- Boyd, S.; Parikh, N.; Chu, E.; Peleato, B.; Eckstein, J. Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn. 2011, 3, 1–122.

- Ye, H.; Li, H.; Yang, B.; Cao, F.; Tang, Y. A novel rank approximation method for mixture noise removal of hyperspectral images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 4457–4469.

- Maggioni, M.; Katkovnik, V.; Egiazarian, K.; Foi, A. Nonlocal transform-domain filter for volumetric data denoising and reconstruction. IEEE Trans. Image Process. 2012, 22, 119–133.

- Xie, Q.; Zhao, Q.; Meng, D.; Xu, Z.; Gu, S.; Zuo, W.; Zhang, L. Multispectral images denoising by intrinsic tensor sparsity regularization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1692–1700.

- Peng, Y.; Meng, D.; Xu, Z.; Gao, C.; Yang, Y.; Zhang, B. Decomposable nonlocal tensor dictionary learning for multispectral image denoising. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2949–2956.

- Zhang, H. Hyperspectral image denoising with cubic total variation model. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 1, 95–98.

- Jiang, T.X.; Zhuang, L.; Huang, T.Z.; Zhao, X.L.; Bioucas-Dias, J.M. Adaptive Hyperspectral Mixed Noise Removal. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5511413.

- Liu, W.; Lee, J. A 3-D atrous convolution neural network for hyperspectral image denoising. IEEE Trans. Geosci. Remote Sens. 2019, 57, 5701–5715.

- Wei, K.; Fu, Y.; Huang, H. 3-D quasi-recurrent neural network for hyperspectral image denoising. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 363–375.

- Cao, X.; Fu, X.; Xu, C.; Meng, D. Deep spatial-spectral global reasoning network for hyperspectral image denoising. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5504714.

- Shi, Q.; Tang, X.; Yang, T.; Liu, R.; Zhang, L. Hyperspectral image denoising using a 3-D attention denoising network. IEEE Trans. Geosci. Remote Sens. 2021, 59, 10348–10363.

- Chen, H.; Yang, G.; Zhang, H. Hider: A hyperspectral image denoising transformer with spatial–spectral constraints for hybrid noise removal. IEEE Trans. Neural Netw. Learn. Syst. 2022, 35, 8797–8811.

- Li, M.; Fu, Y.; Zhang, Y. Spatial-spectral transformer for hyperspectral image denoising. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; pp. 1368–1376.

- Cao, X.; Zhao, Q.; Meng, D.; Chen, Y.; Xu, Z. Robust low-rank matrix factorization under general mixture noise distributions. IEEE Trans. Image Process. 2016, 25, 4677–4690.

- Allard, W.K. Total variation regularization for image denoising, I. Geometric theory. SIAM J. Math. Anal. 2008, 39, 1150–1190.

- Chang, Y.; Yan, L.; Fang, H.; Luo, C. Anisotropic spectral-spatial total variation model for multispectral remote sensing image destriping. IEEE Trans. Image Process. 2015, 24, 1852–1866.

- Yue, Z.; Zhao, Q.; Xie, J.; Zhang, L.; Meng, D.; Wong, K.Y.K. Blind image super-resolution with elaborate degradation modeling on noise and kernel. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 2128–2138.

- Caffo, B.S.; Jank, W.; Jones, G.L. Ascent-based Monte Carlo expectation–maximization. J. R. Stat. Soc. Ser. B Stat. Methodol. 2005, 67, 235–251.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. Pytorch: An imperative style, high-performance deep learning library. In Proceedings of the Annual Conference on Neural Information Processing Systems 2019, NeurIPS 2019, Vancouver, BC, Canada, 8–14 December 2019; Volume 32.

- Wei, G.C.; Tanner, M.A. A Monte Carlo implementation of the EM algorithm and the poor man’s data augmentation algorithms. J. Am. Stat. Assoc. 1990, 85, 699–704.

- Celeux, G.; Chauveau, D.; Diebolt, J. Stochastic versions of the EM algorithm: An experimental study in the mixture case. J. Stat. Comput. Simul. 1996, 55, 287–314.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Yasuma, F.; Mitsunaga, T.; Iso, D.; Nayar, S.K. Generalized assorted pixel camera: Postcapture control of resolution, dynamic range, and spectrum. IEEE Trans. Image Process. 2010, 19, 2241–2253.

- Arad, B.; Ben-Shahar, O. Sparse recovery of hyperspectral signal from natural RGB images. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part VII 14. Springer: Cham, Switzerland, 2016; pp. 19–34.

- Zhuang, L.; Ng, M.K.; Fu, X. Hyperspectral image mixed noise removal using subspace representation and deep CNN image prior. Remote Sens. 2021, 13, 4098.

- Zhang, L.; Zhang, L.; Mou, X.; Zhang, D. FSIM: A Feature Similarity Index for Image Quality Assessment. IEEE Trans. Image Process. 2011, 20, 2378–2386.

- Wald, L. Data Fusion: Definitions and Architectures: Fusion of Images of Different Spatial Resolutions; Presses des Mines: Paris, France, 2002.

- Yuhas, R.H.; Goetz, A.F.; Boardman, J.W. Discrimination among semi-arid landscape endmembers using the spectral angle mapper (SAM) algorithm. In Proceedings of the JPL, Summaries of the Third Annual JPL Airborne Geoscience Workshop, Pasadena, CA, USA, 1–5 June 1992; Volume 1.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. In Proceedings of the Twenty-Eighth Annual Conference on Neural Information Processing Systems, Montréal, QC, Canada, 8–13 December 2014; Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N., Weinberger, K., Eds.; Volume 27.

- Ho, J.; Jain, A.; Abbeel, P. Denoising Diffusion Probabilistic Models. In Proceedings of the Thirty-Fourth Annual Conference on Neural Information Processing Systems, Virtual, 6–12 December 2020; Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H., Eds.; Volume 33, pp. 6840–6851.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).