A New Sparse Collaborative Low-Rank Prior Knowledge Representation for Thick Cloud Removal in Remote Sensing Images

Abstract

1. Introduction

- To exploit the structural sparsity of the cloud component, we propose the tri-fiber-wise sparse (TriSps) function for cloud removal. Unlike element-wise and tube-wise sparsity, the TriSps function characterizes the sparsity of clouds along all three directions of the data.

- Building on the TriSps function, we propose a cloud removal model that simultaneously estimates the image component and the cloud component.

- We develop an effective algorithm based on proximal alternating minimization (PAM) to solve the proposed model. Experiments on synthetic and real datasets show that the proposed method outperforms the compared state-of-the-art methods in most cases.
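
To make the distinction between element-wise, tube-wise, and fiber-wise sparsity concrete, the following is a minimal sketch on a toy cloud tensor. The exact definition of the TriSps function is not given in this excerpt, so `tri_fiber_sparsity` (a weighted sum of fiber-wise group norms along the three modes) and its `weights` parameter are illustrative assumptions, not the authors' formulation.

```python
import numpy as np

def elementwise_l1(c):
    """Element-wise sparsity: sum of absolute values of all entries."""
    return np.abs(c).sum()

def fiber_group_norm(c, mode):
    """Sum of the l2 norms of all fibers running along axis `mode`.

    For a height x width x band tensor, mode=2 gives the tube-wise
    (l2,1-type) group norm over spectral tubes.
    """
    return np.sqrt((c ** 2).sum(axis=mode)).sum()

def tri_fiber_sparsity(c, weights=(1.0, 1.0, 1.0)):
    """Hypothetical stand-in: weighted fiber-wise group norms along all
    three modes. The paper's TriSps function may differ."""
    return sum(w * fiber_group_norm(c, m) for m, w in enumerate(weights))

# Toy cloud component: a 3 x 3 spatial block covering all 4 bands.
cloud = np.zeros((8, 8, 4))
cloud[2:5, 3:6, :] = 1.0

print(elementwise_l1(cloud))        # element-wise measure
print(fiber_group_norm(cloud, 2))   # tube-wise measure
print(tri_fiber_sparsity(cloud))    # three-direction measure (assumed form)
```

The tube-wise measure only groups entries along the spectral direction, whereas the three-direction variant also groups entries along rows and columns, which is one way to reward spatially contiguous cloud regions.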

2. Notations and Preliminaries

3. The Proposed Method

3.1. The Tri-Fiber-Wise Sparse Function

3.2. Proposed Model

3.3. Optimization Algorithm

- Updating the -subproblem

- Updating the -subproblem

- Updating the -subproblem

- Updating the -subproblem

- Updating the -subproblem

- Updating the -subproblem

- Updating the -subproblem

| Algorithm 1 Tri-fiber-wise sparse collaborative low-rank prior knowledge algorithm. |
|---|
| Input: Multitemporal images contaminated by clouds and the parameters , , and . |
| Output: Image component . |
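
The subproblem updates of Algorithm 1 are not reproduced in this excerpt, so the following is only a generic sketch of how a PAM iteration is structured, applied to a toy two-block matrix problem (a low-rank part `x` plus a sparse part `s`). The toy objective, the function names, and the parameters `tau`, `lam`, and `rho` are assumptions chosen for illustration; they are not the proposed model or its parameters.

```python
import numpy as np

def soft_threshold(z, t):
    """Proximal operator of t * ||.||_1."""
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)

def svt(z, t):
    """Singular value thresholding: proximal operator of t * ||.||_*."""
    u, sv, vt = np.linalg.svd(z, full_matrices=False)
    return (u * np.maximum(sv - t, 0.0)) @ vt

def objective(d, x, s, tau, lam):
    """Toy objective: 0.5||d - x - s||_F^2 + tau||x||_* + lam||s||_1."""
    fit = 0.5 * np.linalg.norm(d - x - s) ** 2
    return fit + tau * np.linalg.norm(x, "nuc") + lam * np.abs(s).sum()

def pam(d, tau=1.0, lam=0.1, rho=0.1, iters=50):
    """PAM: each block minimizes the objective plus a proximal term
    (rho / 2) * ||block - previous block||_F^2, with the other block fixed."""
    x = np.zeros_like(d)
    s = np.zeros_like(d)
    history = [objective(d, x, s, tau, lam)]
    for _ in range(iters):
        # x-subproblem: merging the two quadratics gives a single SVT step.
        x = svt((d - s + rho * x) / (1.0 + rho), tau / (1.0 + rho))
        # s-subproblem: merging the two quadratics gives a soft-threshold step.
        s = soft_threshold((d - x + rho * s) / (1.0 + rho), lam / (1.0 + rho))
        history.append(objective(d, x, s, tau, lam))
    return x, s, history

# Toy data: rank-1 background plus a few large sparse entries.
rng = np.random.default_rng(0)
low_rank = np.outer(rng.standard_normal(20), rng.standard_normal(15))
sparse = np.zeros((20, 15))
sparse[rng.integers(0, 20, 10), rng.integers(0, 15, 10)] = 5.0
x_hat, s_hat, history = pam(low_rank + sparse)
print(history[0], history[-1])
```

Because each block update minimizes the objective plus a proximal term anchored at the previous iterate, the objective values in `history` do not increase from one iteration to the next, which is the basic descent property PAM relies on.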

4. Experiments

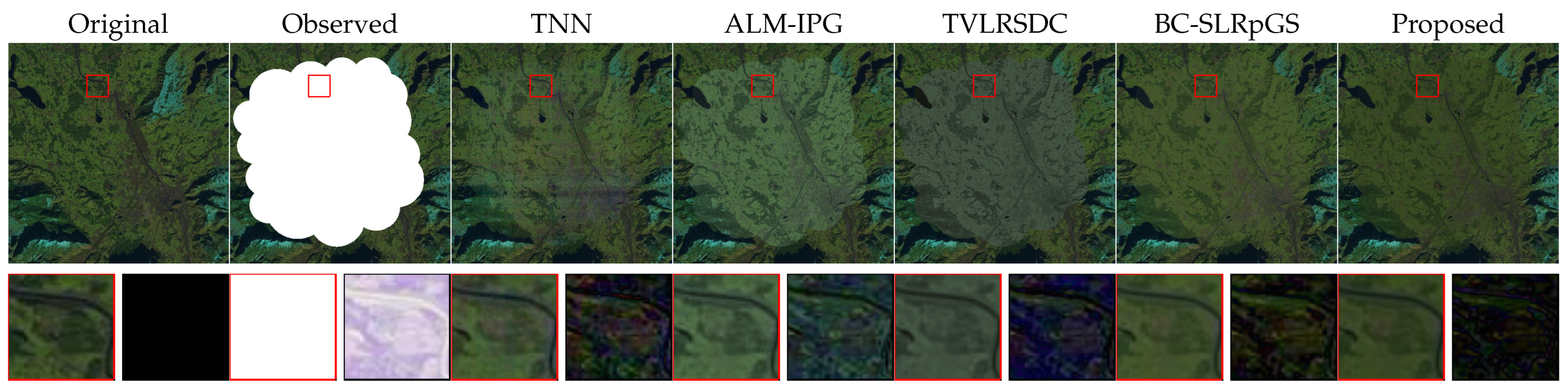

4.1. Synthetic Experiments

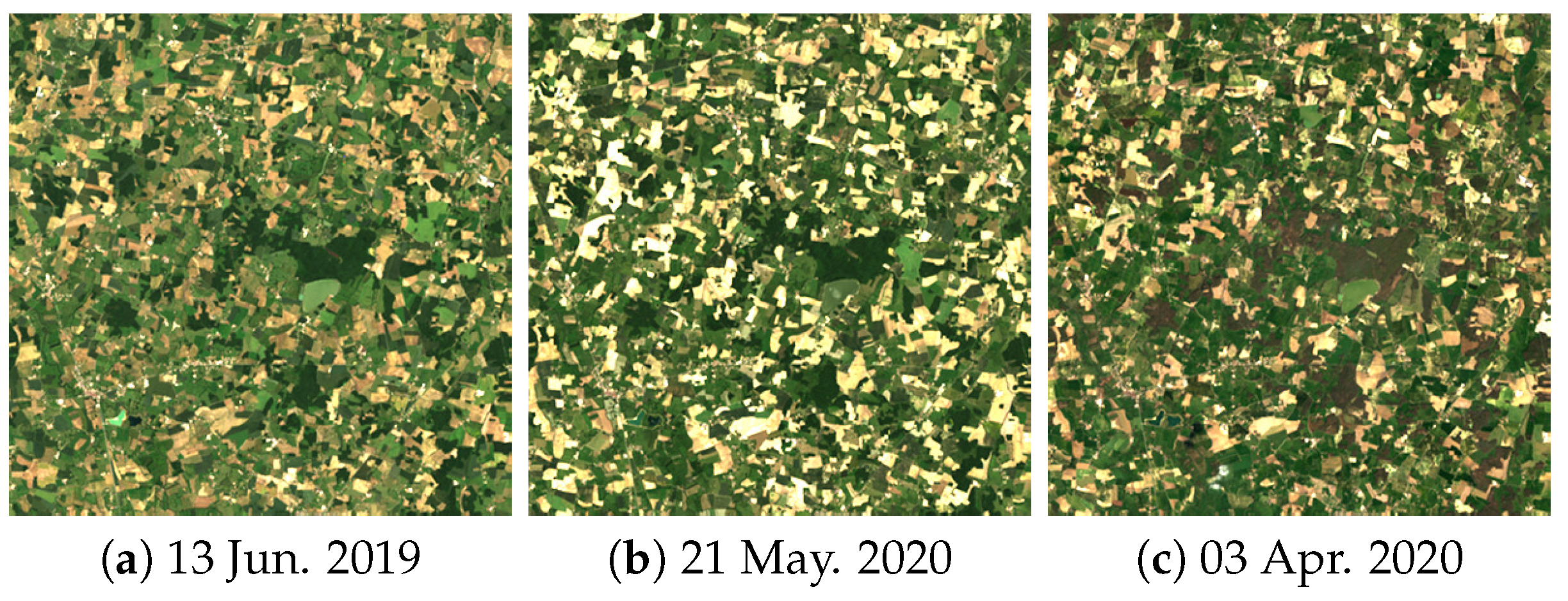

4.2. Real Experiments

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Notations | Interpretations |
|---|---|
| a, , , | scalar, vector, matrix, tensor |
|  | trace of , with |
|  | unfolding of in its kth mode |
|  | ith mode-k fiber of |
|  | rth frontal slice of |
|  | reshaping of fourth-order tensor into third-order tensor |
|  | difference operator in fourth mode |
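
The operations listed in the notation table can be illustrated with NumPy. This is a minimal sketch under stated assumptions: the helper names are hypothetical, indices are 0-based (the table uses ordinal indices), the column ordering of the unfolding follows NumPy's row-major layout and may differ from the paper's convention, and merging the last two modes is only one possible way to reshape a fourth-order tensor into a third-order one.

```python
import numpy as np

def unfold(x, k):
    """Mode-k unfolding: mode k becomes the rows, all other modes the columns."""
    return np.moveaxis(x, k, 0).reshape(x.shape[k], -1)

def fiber(x, k, i):
    """i-th mode-k fiber, taken as the i-th column of the mode-k unfolding."""
    return unfold(x, k)[:, i]

def frontal_slice(x, r):
    """r-th frontal slice of a third-order tensor."""
    return x[:, :, r]

def merge_last_two(x):
    """Reshape a fourth-order tensor (h, w, b, t) into third order (h, w, b*t).
    Which modes the paper merges is an assumption here."""
    h, w, b, t = x.shape
    return x.reshape(h, w, b * t)

def diff_mode4(x):
    """First-order difference along the fourth mode."""
    return np.diff(x, axis=3)

# Small fourth-order example: height x width x spectral x temporal.
x = np.arange(2 * 3 * 4 * 5, dtype=float).reshape(2, 3, 4, 5)
print(unfold(x, 2).shape)          # (4, 30)
print(fiber(x, 3, 0))              # fiber along the temporal mode
print(merge_last_two(x).shape)     # (2, 3, 20)
print(diff_mode4(x).shape)         # (2, 3, 4, 4)
```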

| Data | Image Size | Spectral Bands | Temporal Nodes | GSD | Source |
|---|---|---|---|---|---|
| Munich | 512 × 512 | 3 | 4 | 30 m | Landsat-8 |
| Picardie | 500 × 500 | 6 | 3 | 20 m | Sentinel-2 |
| Beijing | 256 × 256 | 6 | 4 | 20 m | Sentinel-2 |
| France | 400 × 400 | 7 | 3 | 30 m | Landsat-8 |

| Dataset | Index | Observed | TNN | ALM-IPG | TVLRSDC | BC-SLRpGS | Proposed |
|---|---|---|---|---|---|---|---|
| Munich | PSNR (dB) | 4.29 | 26.66 | 23.26 | 26.23 | 27.81 | 29.6 |
|  | SSIM | 0.4769 | 0.8344 | 0.8462 | 0.8385 | 0.8506 | 0.8496 |
|  | CC | 0.154 | 0.8897 | 0.841 | 0.8545 | 0.9013 | 0.9184 |
| Picardie | PSNR (dB) | 4.56 | 42.89 | 43.49 | 37.33 | 43.35 | 44.06 |
|  | SSIM | 0.543 | 0.987 | 0.9924 | 0.9493 | 0.9899 | 0.9918 |
|  | CC | 0.0904 | 0.9397 | 0.9509 | 0.7558 | 0.9421 | 0.9514 |
| Beijing | PSNR (dB) | 5.84 | 36.71 | 38.34 | 36.14 | 38.88 | 39.58 |
|  | SSIM | 0.6182 | 0.9379 | 0.9608 | 0.949 | 0.9622 | 0.9638 |
|  | CC | 0.0196 | 0.9384 | 0.9646 | 0.9287 | 0.9689 | 0.9729 |
| France | PSNR (dB) | 6.28 | 28.31 | 28.32 | 27.09 | 29.56 | 30.14 |
|  | SSIM | 0.6224 | 0.7947 | 0.8001 | 0.8049 | 0.8488 | 0.8536 |
|  | CC | 0.2398 | 0.9603 | 0.9582 | 0.9194 | 0.9661 | 0.9697 |
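
For readers who want to reproduce indices of the kind reported in the table above, the following is a minimal sketch of PSNR and the correlation coefficient (CC) for a pair of images. The peak value of 1.0 and the choice to compute each index over the whole array are assumptions; this excerpt does not state how the values are averaged over spectral bands or temporal nodes. SSIM is omitted because it needs a windowed implementation.

```python
import numpy as np

def psnr(ref, est, peak=1.0):
    """Peak signal-to-noise ratio in dB; `peak` is the assumed maximum value."""
    ref = np.asarray(ref, dtype=float)
    est = np.asarray(est, dtype=float)
    mse = np.mean((ref - est) ** 2)
    if mse == 0:
        return float("inf")
    return 10.0 * np.log10(peak ** 2 / mse)

def cc(ref, est):
    """Pearson correlation coefficient between two images, flattened."""
    ref = np.asarray(ref, dtype=float).ravel()
    est = np.asarray(est, dtype=float).ravel()
    return np.corrcoef(ref, est)[0, 1]

# A constant error of 0.1 on a [0, 1] image gives an MSE of 0.01.
ref = np.zeros((4, 4))
est = np.full((4, 4), 0.1)
print(psnr(ref, est))

# A linearly rescaled copy is perfectly correlated with the reference.
ramp = np.arange(16, dtype=float).reshape(4, 4) / 15.0
print(cc(ramp, 2.0 * ramp + 1.0))
```

Higher PSNR and CC values indicate a reconstruction closer to the cloud-free reference.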

| Data | Image Size | Spectral Bands | Temporal Nodes | GSD | Source |
|---|---|---|---|---|---|
| Eure | 400 × 400 | 4 | 4 | 10 m | Sentinel-2 |
| Morocco | 600 × 600 | 4 | 4 | 10 m | Sentinel-2 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sun, D.-L.; Ji, T.-Y.; Ding, M. A New Sparse Collaborative Low-Rank Prior Knowledge Representation for Thick Cloud Removal in Remote Sensing Images. Remote Sens. 2024, 16, 1518. https://doi.org/10.3390/rs16091518
