Abstract

The latest advances in super-resolution (SR) have been tested with general-purpose images such as faces, landscapes and objects, but remain largely unexplored for the task of super-resolving earth observation (EO) images. In this research paper, we benchmark state-of-the-art SR algorithms on distinct EO datasets using both full-reference and no-reference image quality assessment metrics. We also propose a novel Quality Metric Regression Network (QMRNet) that is able to predict quality (as a no-reference metric) by training on any property of the image (e.g., its resolution, its distortions, etc.) and that can also optimize SR algorithms for a specific metric objective. This work is part of the implementation of the IQUAFLOW framework, which has been developed for the evaluation of image quality, the detection and classification of objects, and image compression in EO use cases. We integrated our experimentation and tested our QMRNet algorithm on predicting features such as blur, sharpness, SNR, RER and ground sampling distance, obtaining validation medRs below 1.0 (out of N = 50) and recall rates above 95%. The overall benchmark shows promising results for LIIF, CAR and MSRN, as well as the potential use of QMRNet as a loss for optimizing SR predictions. Due to its simplicity, QMRNet could also be used for other use cases and image domains, as its architecture and data processing are fully scalable.

1. Introduction

One of the main issues in observing and analyzing earth observation (EO) images is estimating their quality. This issue is twofold. First, images are captured with distinct modifications and distortions, such as optical diffraction and aberrations, detector spacing and footprints, atmospheric turbulence, platform vibration, blurring, target motion and postprocessing. Second, EO image resolution is very limited by the sensor's optical resolution, by the capacity of the satellite and its downlink to send high-quality images to the ground, and by the captured ground sampling distance (GSD) [1]. These limitations make image quality assessment (IQA) particularly hard for EO, as there are no comparable fine-grained baselines across broad EO domains.

We will tackle these problems by defining a network that acts as a no-reference (blind) metric, assessing the quality and optimizing the super-resolution of EO images at any scale and modification.

Our main contributions are summarized below:

- We train and validate a novel network (QMRNet) for EO imagery that is able to predict the quality and distortion parameters of any image

- (Case 1) We benchmark distinct super-resolution models with QMRNet and compare the results with full-reference, no-reference and feature-based metrics

- (Case 2) We benchmark distinct EO datasets with QMRNet scores

- (Case 3) We propose to use QMRNet as a loss for optimizing the quality of super-resolution models

Super-resolution (SR) consists of estimating a high-resolution image ($I_{HR}$) given a low-resolution one ($I_{LR}$). In the deep learning era, deep networks have been used to classify images with high precision. For the specific SR task, one can design a network (autoencoder) whose convolutional layers (feature extractor) encode the patches of the image into a feature vector (encoder), followed by deconvolutional layers that reconstruct the original image (decoder). The predicted images are compared with the original ones, and the autoencoder is trained iteratively until it converges to the HR objective. The SRCNN and FSRCNN models [2,3] are based on a network of three blocks (patch extraction and representation, nonlinear mapping and reconstruction). The authors also use rotation, scaling and noise transformations as data augmentation prior to training the network. They downscale with a low-pass filter to obtain the $I_{LR}$ images and use bicubic interpolation for upscaling during reconstruction to obtain $I_{SR}$ (the model's prediction of $I_{HR}$). SRCNN has been used by MC-SRCNN [4] to super-resolve multispectral images by changing the architecture's input channels and adding pan-sharpening filters (modulating the smoothing/sharpening intensity). These autoencoder design principles, however, have a drawback: they respond differently to features of different spatial frequencies and sizes, i.e., to features at distinct resolutions. Multi-scale architectures have been proposed to address this. The Multi-Scale Residual Network (MSRN) [5] uses residual connections in multiple residual blocks at different scale bands, a design not exclusive to ResNets. It equalizes the information bottleneck in deeper layers (high-level features), where spatial information tends to diminish or even vanish.
Traditional convolutional filters in early layers have a fixed and narrow field of view, which makes learning long-range spatial dependencies reliant on deeper layers. Multi-scale blocks cope with this drawback by analyzing the image at different resolution scales and later merging the results in a high-dimensional multiband latent space. This allows better abstraction at deeper layers and, therefore, better reconstruction of spatial information. This is a remarkable advantage for EO images, which come with distinct resolutions and GSDs.

Novel state-of-the-art SR models are based on generative adversarial networks (GANs). These are composed of two networks: a generator that produces an image estimate (SR) and a discriminator that decides whether the generated image is real or fake under certain categorical or metric objectives with respect to a set of real images. Usually, the generator is a deconvolutional network fed with a latent vector that represents the distribution of each image. In the SR problem, the LR image ($I_{LR}$) is considered the input latent space, while the HR image is considered the real image for computing the adversarial loss. The popular SRGAN [6] combines an adversarial loss with a VGG-based perceptual loss and a ResNet generator with residual connections (SRResNet). ESRGAN [7] is an improved version of SRGAN that relaxes the adversarial loss, adds training upon a perceptual loss and includes further residual connections in its architecture. The main intrinsic difference between GANs and other architectures is that GANs learn the image probability distribution implicitly. This makes them prone to unknown artifacts and hallucinations. However, their SR estimates are usually sharper than those of autoencoder-type architectures. The generative SR techniques mentioned above (SRGAN/SRResNet, ESRGAN and EnlightenGAN [8]) and convolutional SR autoencoders (VDSR [9], SRCNN/FSRCNN and MSRN) do not adapt their feature generation to optimize a loss based on a specific quality standard that considers all quality properties of the image (both structural and pixel-to-pixel). Moreover, their predictions show typical distortions such as blurring (from downscaling the input) or GAN artifacts from the training domain objective. Most of these GAN-based models build the network inputs by downsampling the original $I_{HR}$.
Generating the inputs by downsampling restricts these models to learning the inverse of that specific modification. However, the distortions and variations in a given test image result from a combination of far more diverse modifications. The only way to mitigate this limitation, and only partially because of overfitting, is to augment the samples with distinct transformations simultaneously.

Some self-supervised techniques can learn to solve the ill-posed inverse problem from the observed measurements without any knowledge of the underlying distribution, by assuming its invariance to the transformations. The Content Adaptive Resampler (CAR) [10] was proposed, in which a jointly learnable downscaling pre-step block is trained together with an upscaling block (SRNet). It learns the downscaling step (through a ResamplerNet) by learning the statistics of kernels from $I_{HR}$. It then learns the upscaling blocks with another network (SRNet/EDSR) to obtain the SR images. CAR has improved the experimental results of SR by considering the intrinsic divergences between $I_{LR}$ and $I_{HR}$. The Local Implicit Image Function (LIIF) [11] generates super-resolved pixels taking as inputs a continuous pixel coordinate and the 2D deep features around that coordinate. In LIIF, an encoder is jointly trained in a self-supervised super-resolution task, maintaining high fidelity at higher resolutions. Since the coordinates are continuous, LIIF can be represented at any arbitrary resolution. Here, the main advantage is that the SR image is represented at the target resolution without resizing the LR input, making it invariant to the transformations performed on that input. This enables LIIF to extrapolate SR to upscaling factors of up to ×30.
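
The continuous-coordinate idea behind LIIF can be illustrated in one dimension. The sketch below is ours, not LIIF's implementation. Each output pixel centre is mapped to a normalized coordinate in [-1, 1]. The nearest latent cell is found, and a decoder is called with that latent and the relative offset. The `decoder` argument is a hypothetical stand-in for LIIF's learned MLP. The sketch also omits LIIF's feature unfolding, local ensemble and cell decoding.

```python
from typing import Callable, List

def make_coords(n: int) -> List[float]:
    """Pixel-centre coordinates of an n-pixel axis, normalized to [-1, 1]."""
    return [-1.0 + (2 * i + 1) / n for i in range(n)]

def nearest_latent(query: float, latent_coords: List[float]) -> int:
    """Index of the latent cell whose centre is closest to a continuous query."""
    return min(range(len(latent_coords)), key=lambda i: abs(latent_coords[i] - query))

def query_1d(latents: List[float], query: float,
             decoder: Callable[[float, float], float]) -> float:
    """Decode one continuous coordinate from its nearest latent and relative offset."""
    coords = make_coords(len(latents))
    i = nearest_latent(query, coords)
    return decoder(latents[i], query - coords[i])

def upsample_1d(latents: List[float], out_size: int,
                decoder: Callable[[float, float], float]) -> List[float]:
    """Query any output size: the LR latents are never resized."""
    return [query_1d(latents, q, decoder) for q in make_coords(out_size)]
```

Because `out_size` is arbitrary, the same latents can be queried at ×2, ×3.7 or ×30 without resizing the input. This is the property the paragraph above attributes to LIIF.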

There are distinct strategies for assessing the quality of an image. Full-reference metrics consider the difference between an estimated or modified image ($I_{SR}$) and the reference image ($I_{HR}$). In contrast, no-reference metrics assess specific statistical properties of the estimated image without any reference image. Other, more novel metrics calculate high-level characteristics of the estimated image by measuring its distribution distance to either a preprocessed dataset or the reference in a feature-based space.

The similarity between the predicted images and the reference high-resolution images is estimated from pixel-wise differences responsive to reflectance, sharpness, structure, noise, etc. Well-known examples of pixel-level (or full-reference) metrics are the root-mean-square error (RMSE) [12], Spearman's rank order correlation coefficient (SRCC or SROCC), Pearson's linear correlation coefficient (PLCC), Kendall's rank order correlation coefficient (KROCC), the peak signal-to-noise ratio (PSNR) [13], the structural similarity metric (SSIM/MSSIM) [14], the Haar perceptual similarity index (HAARPSI) [15], the gradient magnitude similarity deviation (GMSD) [16] and the mean deviation similarity index (MDSI) [17]. PSNR computes, in decibels, the ratio between the maximum possible signal power and the power of the error (noise) with respect to $I_{HR}$. Some metrics, such as SSIM, measure means and covariances locally for each region of a specific size (e.g., 8 × 8 patches; multi-scale patches for MSSIM), which affects the overall metric score. GMSD calculates the global variation of gradient similarity based on a local quality map combined with a pooling strategy. Most comparative studies use these metrics to measure quality, mostly relying on PSNR. However, there is no evidence that these measurements are the best for EO cases, as some of them are not sensitive to local perturbations (e.g., blurring, over-sharpening) or local changes (e.g., artifacts, hallucinations) in the image. HAARPSI calculates an index based on the difference (absolute or in mutual information) using the sum of a set of wavelet coefficients computed over the $I_{SR}$ and $I_{HR}$ images. Other metrics combine several of these properties simultaneously. For instance, MDSI jointly compares gradient similarity, chromaticity similarity and deviation pooling.
The latest metric design, LCSA [18], uses linear combinations of full-reference metrics, i.e., VSI, FSIM, IFC, MAD, MSSSIM, NQM, PSNR, SSIM and VIF.
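
The two simplest full-reference metrics above, RMSE and PSNR, can be sketched as follows. This is a minimal sketch that assumes single-band images given as lists of rows and an 8-bit peak value of 255. Multi-band EO images would be handled per band or with a different `max_value`.

```python
import math

def mse(estimate, reference):
    """Mean squared error between two equally sized 2D images (lists of rows)."""
    if len(estimate) != len(reference) or any(
        len(a) != len(b) for a, b in zip(estimate, reference)
    ):
        raise ValueError("images must have the same shape")
    sq = [(e - r) ** 2
          for row_e, row_r in zip(estimate, reference)
          for e, r in zip(row_e, row_r)]
    return sum(sq) / len(sq)

def rmse(estimate, reference):
    """Root-mean-square error: square root of the MSE."""
    return math.sqrt(mse(estimate, reference))

def psnr(estimate, reference, max_value=255.0):
    """PSNR in dB: 10 * log10(MAX^2 / MSE); infinite for identical images."""
    err = mse(estimate, reference)
    if err == 0:
        return float("inf")
    return 10.0 * math.log10(max_value ** 2 / err)
```

For example, a uniform error of 10 grey levels on an 8-bit image gives RMSE = 10 and PSNR = 10·log10(65025/100) ≈ 28.13 dB. The score is the same regardless of where the error lies, which illustrates why pixel-wise metrics are insensitive to the kind of local perturbation discussed above.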

Full-reference metrics have one main requirement: ground-truth HR images are needed to assess a specific quality standard. No-reference (or blind) metrics need no explicit reference. These rely on a parametric characterization of the enhanced signal based on statistical descriptors, usually linked to noise or sharpness and embedded in high-frequency bands. Some examples are the variance, the entropy (He) or the high-end spectrum (FFT). The most popular metric in EO is the modulation transfer function (MTF), which measures impulse responses in the spatial domain and transfer functions in the frequency domain. It depends on local pixel characteristics, mostly present on contours, corners and sharp features in general [19]. Hence, the MTF is very sensitive to local changes such as those mentioned above (e.g., optical diffraction and aberrations, blurring, motion, etc.). Other metrics combine statistics from image patches with multivariate filtering methods to produce score indexes for a given image according to its geo-referenced parameter standards. Such methods include NIQE [20], PIQE [21] and GIQE [22]. The latter is used for the official evaluation of NIIRS ratings (https://irp.fas.org/imint/niirs.htm, accessed on 10 October 2022), considering the ground sampling distance (GSD), the signal-to-noise ratio (SNR) and the relative edge response (RER) at distinct effective focal lengths of EO images [23,24,25]. Note that RER and the line spread function (LSF) are both derived from the edge response. The LSF corresponds to the absolute impulse response also used to compute the MTF. The relative edge response measures the slope of the edge response (transition): the lower the metric, the blurrier the image. Taking the derivative of the normalized edge response produces the line spread function (LSF). The LSF is a 1D representation of the system point spread function (PSF).
The width of the LSF at half the height is called the full width at half maximum (FWHM). The Fourier transform of the LSF produces the modulation transfer function (MTF). The MTF is determined across all spatial frequencies, but can be evaluated at a single spatial frequency, such as the Nyquist frequency. The value of the MTF at Nyquist provides a measure of the resolvable contrast at the highest ‘alias-free’ spatial frequency.
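
The chain edge response → LSF → FWHM → MTF can be sketched numerically on a synthetic edge. This is a minimal sketch under explicit assumptions:

- The edge is an ideal 1D step blurred by a Gaussian PSF of standard deviation `sigma` (in pixels), sampled on a fine grid.
- RER is taken as the rise of the normalized edge response between -0.5 and +0.5 pixels, the convention commonly used with GIQE.

Real measurements would instead fit a slanted edge in the image.

```python
import cmath
import math

def edge_response(x, sigma):
    """Normalized edge response of an ideal step blurred by a Gaussian PSF (x, sigma in px)."""
    return 0.5 * (1.0 + math.erf(x / (sigma * math.sqrt(2.0))))

def edge_metrics(sigma, dx=0.01, half_width=10.0):
    """Return (RER, FWHM of the LSF in px, MTF at Nyquist) for a finely sampled edge."""
    n = int(round(2 * half_width / dx)) + 1
    xs = [-half_width + i * dx for i in range(n)]
    er = [edge_response(x, sigma) for x in xs]

    def er_at(x):
        # assumes x lies on the sampling grid (true for +/-0.5 px with dx = 0.01)
        return er[int(round((x + half_width) / dx))]

    # RER: rise of the edge response between -0.5 px and +0.5 px around the edge
    rer = er_at(0.5) - er_at(-0.5)

    # LSF: derivative of the edge response (finite differences at sample midpoints)
    lsf = [(er[i + 1] - er[i]) / dx for i in range(n - 1)]
    mids = [(xs[i] + xs[i + 1]) / 2 for i in range(n - 1)]

    # FWHM: width of the region where the LSF is at least half of its peak
    peak = max(lsf)
    above = [x for x, v in zip(mids, lsf) if v >= peak / 2]
    fwhm = above[-1] - above[0]

    # MTF: magnitude of the Fourier transform of the LSF, normalized to 1 at f = 0
    area = sum(lsf) * dx

    def mtf(f):
        ft = sum(v * cmath.exp(-2j * math.pi * f * x) for v, x in zip(lsf, mids)) * dx
        return abs(ft) / area

    return rer, fwhm, mtf(0.5)  # Nyquist frequency = 0.5 cycles/px
```

For `sigma = 0.5` px the results should be close to the analytical values for a Gaussian PSF:

- RER = erf(1/√2) ≈ 0.683
- FWHM = 2√(2 ln 2)·σ ≈ 1.18 px
- MTF at Nyquist = exp(−π²σ²/2) ≈ 0.29

Increasing `sigma` (more blur) lowers both RER and the MTF at Nyquist.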

In [26], the authors argued that conventional IQA evaluation methods are not valid for EO, as their assumed degradation functions and hardware conditions do not match real operational conditions. With advances in DL, deeper network representations have been shown to improve the perceptual quality of images, although with higher computational requirements. Feature-based metrics (i.e., perceptual similarity) score an image on the features learned by a trained network (e.g., the generator or reconstruction network). These metrics compare the distances between latent features of the predicted image and those of the reference image. State-of-the-art methods of perceptual similarity include the VGGLoss [27] and the Learned Perceptual Image Patch Similarity (LPIPS) [28]. These extract the feature maps obtained by the n-th convolution after activation (image-reference layer n) and then calculate the similarity as the Euclidean distance between the predicted and reference image features. Other metrics, such as the sliced Wasserstein distance (SWD) [29] and the Fréchet inception distance (FID) [30], model the feature representations as distributions in a non-linear space. They can therefore adapt better to larger variability or a lack of samples in the training image domains.
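
For reference, FID [30] fits a Gaussian to the Inception features of each image set and compares the two Gaussians. With feature means $\mu_r, \mu_g$ and covariances $\Sigma_r, \Sigma_g$ for the reference and generated sets, the standard definition is shown below. SWD instead averages one-dimensional Wasserstein distances over random projections of the features.

$$
\mathrm{FID} = \lVert \mu_{r} - \mu_{g} \rVert_2^2 + \operatorname{Tr}\left(\Sigma_{r} + \Sigma_{g} - 2\left(\Sigma_{r}\Sigma_{g}\right)^{1/2}\right)
$$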

2. Datasets and Related Work

Most non-feature-based metrics are fully unsupervised. That is, no current models can assess image quality specifically and invariantly to the modifications applied to images of a certain domain. Blind quality ranking and assessment of images has been useful for applications such as avoiding forgetting in continual learning adaptation tasks [31], as well as compression evaluation and mean opinion scores (MOS/CMOS). One novel strategy, ProxIQA [32], evaluates the quality of an image by adapting the underlying distribution of a GAN given a compressed input. This method has been shown to improve quality when tested on images from the Kodak, Tecnick and NFLX compression datasets. However, the results may vary among the trained image distributions, as shown by the JPEG2000, VMAFp and HEVC metrics. Traditional blind IQA methods (i.e., BLIINDS-II [33], BRISQUE [34], CORNIA [35], HOSA [36] and RankIQA [37]), as well as recent deep blind image-quality assessment models such as WaDIQaM (deepIQA) [38], IQA-MCNN [39], Meta-IQA [40] and GraphIQA [41], benchmark on distortion-aware datasets (e.g., LIVE, LIVEC, CSIQ, KonIQ10k, TID2013 and KADID-10k) containing already-distorted images and MOS/CMOS. These models train and assess on annotated distortion types such as Gaussian blur, lens blur, motion blur, color quantization, color saturation, etc. SRIF [42], RealSRQ [43] and DeepSRQ [44] explore deep learning strategies as no-reference metrics for SR quality estimation, although they have only been tested on generic datasets such as CVIU, SRID, QADS and Waterloo. Additionally, most of these models do not integrate their own modifiers that can customize ranking metrics (i.e., they are limited to the synthetic annotations available in the aforementioned datasets). Such modifiers could include geo-referenced annotations from actual EO missions, such as the GSD, Nadir angle, atmospheric data, etc.
Customizable modifiers allow fine-tuning on distortions in any existing domain, in our case, HR EO images. Moreover, the integration of IQA methods with super-resolution model benchmarking and re-training has not yet been demonstrated. Understanding and building the mechanics of distortions (geometrics and modifiers) is thus key to generating enough training samples to represent the whole domain.

Very few studies on SR use EO images obtained from current worldwide satellites such as DigitalGlobe WorldView-4 (https://earth.esa.int/eogateway/missions/worldview-4, accessed on 10 October 2022), SPOT (https://earth.esa.int/eogateway/missions/spot, accessed on 10 October 2022), Sentinel-2 (https://sentinels.copernicus.eu/web/sentinel/missions/sentinel-2, accessed on 10 October 2022), Landsat-8 (https://www.usgs.gov/landsat-missions/landsat-8, accessed on 10 October 2022), Hyperion/EO-1 (https://www.usgs.gov/centers/eros/science/usgs-eros-archive-earth-observing-one-eo-1-hyperion, accessed on 10 October 2022), SkySat (https://earth.esa.int/eogateway/missions/skysat, accessed on 10 October 2022), Planetscope (https://earth.esa.int/eogateway/missions/planetscope, accessed on 10 October 2022), RedEye (https://space.skyrocket.de/docs_dat/red-eye.htm, accessed on 10 October 2022), QuickBird (https://earth.esa.int/eogateway/missions/quickbird-2, accessed on 10 October 2022), CBERS (https://www.satimagingcorp.com/satellite-sensors/other-satellite-sensors/cbers-2/, accessed on 10 October 2022), Himawari-8 (https://www.data.jma.go.jp/mscweb/data/himawari/, accessed on 10 October 2022), DSCOVR EPIC (https://epic.gsfc.nasa.gov/, accessed on 10 October 2022) or PRISMA (https://www.asi.it/en/earth-science/prisma/, accessed on 10 October 2022). In our study, we selected a variety of subsets (see Table 1) from distinct publicly available high-resolution satellite imagery datasets (around 30 cm/px). Most of these are used for land use classification tasks, with land cover category annotations and, in some cases, object segmentation. The Inria Aerial Image Labeling Dataset [45] (Inria-AILD) (https://project.inria.fr/aerialimagelabeling/, accessed on 10 October 2022) contains 180 training and 180 test images covering 405 + 405 km² of US (Austin, Chicago, Kitsap County, Bellingham, Bloomington, San Francisco) and Austrian (Innsbruck Eastern/Western Tyrol, Vienna) regions.
Inria-AILD was used for a building semantic segmentation contest. Land cover categories are considered for aerial scene classification in DeepGlobe (http://deepglobe.org/, accessed on 10 October 2022) (Urban, Agriculture, Rangeland, Forest, Water or Barren), USGS (https://data.usgs.gov/datacatalog/, accessed on 10 October 2022) and UCMerced (http://weegee.vision.ucmerced.edu/datasets/landuse.html, accessed on 10 October 2022). UCMerced has 21 classes (i.e., agricultural, airplane, baseball diamond, beach, buildings, chaparral, dense residential, forest, freeway, golf course, harbor, intersection, medium residential, mobile home park, overpass, parking lot, river, runway, sparse residential, storage tanks and tennis court) and has been captured over many US regions, i.e., Birmingham, Boston, Buffalo, Columbus, Dallas, Harrisburg, Houston, Jacksonville, Las Vegas, Los Angeles, Miami, Napa, New York, Reno, San Diego, Santa Barbara, Seattle, Tampa, Tucson and Ventura. XView (http://xviewdataset.org/, accessed on 10 October 2022) contains 1400 km² of RGB pan-sharpened images from DigitalGlobe WorldView-3, with 1 million labeled objects and 60 classes (e.g., Building, Hangar, Train, Airplane, Vehicle, Parking Lot) annotated with both bounding boxes and segmentation. Kaggle Shipsnet (https://www.kaggle.com/datasets/rhammell/ships-in-satellite-imagery, accessed on 10 October 2022) contains seven San Francisco Bay harbor images and 4000 individual crops of the ships captured in them. The ECODSE competition dataset (https://zenodo.org/record/1206101, accessed on 10 October 2022), (https://www.ecodse.org/task3_classification.html, accessed on 10 October 2022) has been considered for EO hyperspectral image classification [46], delineation (segmentation) and alignment of trees. ECODSE provides NEON photographs, LiDAR data for assessing canopy height and hyperspectral images with 426 bands. The terrain is photographed at a mean altitude of 45 m.a.s.l., and the mean canopy height is approximately 23 m.

Table 1.

List of datasets used in our experimentation. We show 12 subsets collected from 8 datasets provided by 5 satellites and EO stations.

3. Proposed Method

3.1. Iquaflow Modifiers and Metrics

We have developed a novel framework, IQUAFLOW [47,48] (code available at https://github.com/satellogic/iquaflow, accessed on 10 October 2022). It includes a set of modifiers (https://github.com/satellogic/iquaflow/tree/main/iquaflow/datasets, accessed on 10 October 2022) that apply a specific type of distortion to EO images. In the modifiers list (see Table 2), we describe the 5 modifiers used in our experimentation. Three of them are integrated from common libraries (PyTorch (https://pytorch.org/vision/stable/transforms.html, accessed on 10 October 2022) and PIL (https://pillow.readthedocs.io/en/stable/reference/Image.html, accessed on 10 October 2022)), namely the Gaussian blur (σ), the sharpness factor (F) and the ground sampling distance (GSD). The other two (RER and SNR) we developed to represent the RER and SNR metric modifications. For the blur modifier, we build a 7 × 7 Gaussian filter parameterized by its standard deviation σ. For F, similarly, we build a function that is modulated by a Gaussian factor (similar to σ). If the factor is higher than 1.0 (i.e., from 1.0 to 10.0), the image is sharpened (high-pass filter, with negative values on the sides of the kernel). If the factor is lower than 1.0 (i.e., from 0.0 to 1.0), the image is blurred through a Gaussian function (low-pass filter with a Gaussian shape). For GSD, we apply bilinear interpolation to the original image at a specific scaling (e.g., ×1.5, ×2), which increases the scale of objects. For instance, an interpolated version of a 5000 × 5000 image with a GSD of 30 cm/px becomes 10,000 × 10,000 and is annotated with a GSD of 60 cm/px: its pixel count has changed, but its (oversampled/fake) sampling distance is doubled (worse). For RER, we obtain the real RER value of the ground truth and calculate the LSF and the maximum value of the edge response. From that, we build a Gaussian function adapted to the expected RER coefficients and then filter the image.
For SNR, similarly to RER, we require an annotation of the base SNR of the original dataset. From that, we build a Gaussian-shaped random noise pattern that is added to the original image (adding randomness with a specific slope probability).
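
The modifiers described above can be approximated with a few small functions. This is a simplified sketch, not the IQUAFLOW code. The following are our assumptions:

- Sharpness is implemented as a blend between a Gaussian-blurred copy and the original, as in unsharp masking (factor 0 gives the blur, 1 the original, and values above 1 sharpen).
- SNR is defined as mean signal over noise standard deviation.
- Added noise is independent of the noise already present.
- The GSD helper only encodes the labeling convention stated in the text.

Images are single-band lists of rows.

```python
import math
import random

def gaussian_kernel(sigma, size=7):
    """Normalized size x size Gaussian kernel (7 x 7, as in the blur modifier)."""
    half = size // 2
    k = [[math.exp(-(x * x + y * y) / (2.0 * sigma * sigma))
          for x in range(-half, half + 1)]
         for y in range(-half, half + 1)]
    total = sum(sum(row) for row in k)
    return [[v / total for v in row] for row in k]

def convolve(img, kernel):
    """Filter a 2D image with a square kernel, clamping coordinates at the borders."""
    h, w, half = len(img), len(img[0]), len(kernel) // 2
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            acc = 0.0
            for ki, krow in enumerate(kernel):
                y = min(max(i + ki - half, 0), h - 1)
                for kj, kv in enumerate(krow):
                    x = min(max(j + kj - half, 0), w - 1)
                    acc += kv * img[y][x]
            row.append(acc)
        out.append(row)
    return out

def blur(img, sigma):
    """Gaussian blur modifier parameterized by sigma."""
    return convolve(img, gaussian_kernel(sigma))

def sharpness(img, factor, sigma=1.0):
    """Assumed sharpness modifier: factor < 1 blends toward the blurred copy,
    factor > 1 extrapolates away from it (unsharp masking)."""
    smooth = blur(img, sigma)
    return [[s + factor * (o - s) for o, s in zip(row_o, row_s)]
            for row_o, row_s in zip(img, smooth)]

def added_noise_std(mean_signal, base_snr, target_snr):
    """Std of extra Gaussian noise that lowers SNR = mean/std from base_snr to
    target_snr, assuming independent noise (variances add)."""
    if target_snr >= base_snr:
        raise ValueError("adding noise can only lower the SNR")
    return mean_signal * math.sqrt(1.0 / target_snr ** 2 - 1.0 / base_snr ** 2)

def add_noise(img, std, seed=0):
    """Add zero-mean Gaussian noise with the given standard deviation."""
    rng = random.Random(seed)
    return [[v + rng.gauss(0.0, std) for v in row] for row in img]

def rescaled_gsd(gsd_cm, size_px, scale):
    """Labeling convention from the text: upscaling by `scale` multiplies both
    the image size and the annotated GSD (30 cm/px, 5000 px, x2 -> 60, 10000)."""
    return gsd_cm * scale, round(size_px * scale)
```

For example, halving the SNR of an image with mean 100 and base SNR 50 requires adding noise with a standard deviation of about 3.46. This follows from the variance relation (100/25)² − (100/50)² = 12.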

Table 2.

List of modifier parameters used in QMRNet. These modify the input images and annotate them to provide training and test data for the QMRNet. Distinct intervals have been selected according to the precision and variability of the modification. represents closest values to .

3.2. QMRNet: Classifier of Modifier Metric Parameters

We have designed the Quality Metric Regression Network (QMRNet) to regress the quality parameters of the modification or distortion (see Table 2 and Table 3) applied to single images (code for QMRNet at https://github.com/satellogic/iquaflow/tree/main/iquaflow/quality_metrics, accessed on 10 October 2022). The images may be modified through a Gaussian blur (σ), sharpness (Gaussian factor F), rescaling to a distinct GSD, noise (SNR) or any other kind of distortion. Each modified image is annotated with the corresponding parameter. These annotations are then used to train and validate the network as a classifier over the intervals of the annotated parameters.
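
Annotating a modified image amounts to mapping its parameter value to a class interval. The sketch below assumes evenly spaced intervals and assigns each value to the closest interval value. The actual intervals for each modifier are those listed in Table 2.

```python
def make_intervals(low, high, n):
    """n evenly spaced interval values from low to high (inclusive); spacing is assumed."""
    if n < 2:
        raise ValueError("need at least two intervals")
    step = (high - low) / (n - 1)
    return [low + k * step for k in range(n)]

def annotate(value, intervals):
    """Class index of the closest interval value and its one-hot target vector."""
    idx = min(range(len(intervals)), key=lambda k: abs(intervals[k] - value))
    return idx, [1.0 if k == idx else 0.0 for k in range(len(intervals))]
```

For instance, with 10 GSD intervals between 30 and 60 cm/px, an image rescaled to 34 cm/px would be labeled with class 1 (≈33.3 cm/px).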

Table 3.

Examples of Inria-AILD crops from modified images for each modifier (see Table 2).

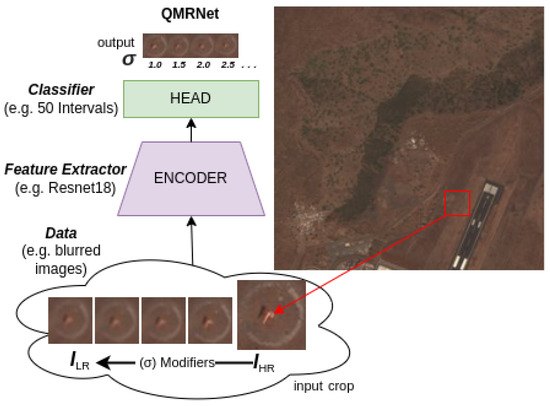

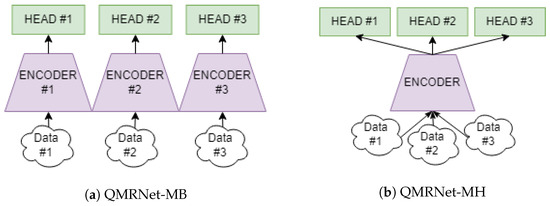

QMRNet is a feed-forward neural network composed of an encoder and a parameterizable classifier (see Figure 1) over numerical class intervals, which can be set as binary, categorical or continuous according to the N intervals. It is trained on the differences between the predicted intervals and the annotated ground-truth (GT) parameters, and it requires one HEAD for each parameter to predict. In Figure 2, we show 2 mechanisms for assessing quality from several parameters simultaneously (multiparameter prediction): multibranch (MB) and multihead (MH). MB requires an encoder and a head for each parameter to predict, while MH requires a head for each parameter but only one encoder. Therefore, QMRNet-MH has one shared encoder plus one classifier per EO parameter, while QMRNet-MB has one encoder and one classifier per EO parameter (a whole QMRNet per parameter). QMRNet-MH predicts all parameters simultaneously (faster). However, its shared encoder has lower capacity per parameter, which can lead to lower accuracy.
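
The structural difference between the two multiparameter designs can be shown with plain callables. In this sketch, the `encoder` and head functions are hypothetical stand-ins for the ResNet18 encoder and the classifier heads. The only point illustrated is how many encoder passes each design needs per image.

```python
from typing import Callable, Dict, List, Tuple

Image = List[List[float]]
Features = List[float]
Encoder = Callable[[Image], Features]
Head = Callable[[Features], List[float]]

class MultiHeadQMR:
    """QMRNet-MH style: one shared encoder pass, then one head per parameter."""

    def __init__(self, encoder: Encoder, heads: Dict[str, Head]):
        self.encoder = encoder
        self.heads = heads

    def __call__(self, image: Image) -> Dict[str, List[float]]:
        features = self.encoder(image)  # computed once, shared by every head
        return {name: head(features) for name, head in self.heads.items()}

class MultiBranchQMR:
    """QMRNet-MB style: an independent (encoder, head) pair per parameter."""

    def __init__(self, branches: Dict[str, Tuple[Encoder, Head]]):
        self.branches = branches

    def __call__(self, image: Image) -> Dict[str, List[float]]:
        # one encoder pass per parameter; branches could also run in parallel threads
        return {name: head(encoder(image))
                for name, (encoder, head) in self.branches.items()}
```

With k parameters, MH runs the encoder once while MB runs k encoders. This is the speed versus capacity trade-off described above.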

Figure 1.

Architecture of QMRNet. Single-parameter QMRNet for using modified/annotated data from a unique parameter/modifier (in this case, from blur-modified images).

Figure 2.

Multiparameter architectures to simultaneously predict several distortions in one run. (a) Multibranch QMRNet (QMRNet-MB), example with 3 stacked QMRNets (3 encoders with 1 head each). (b) Multihead QMRNet (QMRNet-MH), example with 3 heads.

For our experiments with QMRNet, we used an encoder based on ResNet18 (backbone), composed of a convolutional layer (3 × 3) and 4 residual blocks (each composed of 4 convolutional layers) with 64, 128, 256 and 512 channels, respectively. Our network is scalable to distinct crop resolutions as well as regression parameters (N intervals), adapting the HEAD to the number of classes to predict. The output of the HEAD after pooling is a continuous probability value for each class interval. Through softmax and thresholding, we can filter (one-hot) which class or classes have been predicted (1) or not (0) for each image sample crop. By default, we use the binary cross-entropy loss (BCELoss) as the classification error and stochastic gradient descent as the optimizer. For multiparameter regression, we designed the multibranch QMRNet (QMRNet-MB), in which we train each network individually with its own set of parameterized modification intervals for each sample. Although trained individually, QMRNet-MB can be run once per sample (parallel threads per branch), especially to obtain fast multiparameter metric calculations (see Section 4.1).
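
The post-processing of one HEAD output and the default classification error can be sketched without a deep learning framework. In this sketch, the threshold value 0.5 and the argmax fallback are our assumptions. In training, the same quantities would be computed with a framework's autodiff-enabled BCE loss.

```python
import math

def softmax(logits):
    """Numerically stable softmax over the N interval logits of one head."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def threshold(probs, t=0.5):
    """Mark intervals whose probability reaches t as predicted (1), others as 0."""
    return [1 if p >= t else 0 for p in probs]

def argmax_one_hot(probs):
    """Fallback one-hot that always selects the single most likely interval."""
    best = max(range(len(probs)), key=probs.__getitem__)
    return [1 if i == best else 0 for i in range(len(probs))]

def bce(probs, targets, eps=1e-7):
    """Mean binary cross-entropy between predicted probabilities and 0/1 targets."""
    total = 0.0
    for p, y in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(probs)
```

Thresholding can mark zero or several intervals. The argmax variant always yields exactly one, which is convenient when each crop carries a single annotated interval.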

Note that our network input design also handles crops that do not match the input size. When the encoder input resolution R is lower than the image size (e.g., 5000 × 5000 for the GT and 256 × 256 for the network input), we extract C crops of size R × R from each image. Here, C is the number of crops generated per sample (e.g., 10, 20, 50, 100, 200). When the crops are smaller than the encoder backbone input (e.g., 232 × 232 for the GT and 256 × 256 for the network input), we apply circular padding on each border (width and/or height). This yields a real image that preserves the scaling and domain. A specific QMRNet architecture is defined by the hyperparameters N and R, and it can be trained with distinct combinations of N, C and R. To train the QMRNet regressor, we select a training set and generate a set of distorted cases, which are parameterizable through our modifiers. The total number of training samples (dataset size) is the product of the number of dataset images (I) and N × C (the number of parameter intervals and crops per sample), i.e., I × N × C. Other hyperparameters can be set specifically for training and validation, such as the number of epochs (e), batch size (bs), learning rate (lr), weight decay (wd), momentum, soft thresholding, etc.
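
The input handling above can be sketched as three small helpers. The sketch makes these assumptions:

- Circular padding is split as evenly as possible between opposite borders, matching NumPy's `wrap` padding mode.
- Crop origins are drawn uniformly at random.

The helper names are hypothetical.

```python
import random

def circular_pad(img, target_h, target_w):
    """Wrap-around (circular) padding of a crop up to target_h x target_w,
    split as evenly as possible between opposite borders."""
    h, w = len(img), len(img[0])
    if target_h < h or target_w < w:
        raise ValueError("target size must not be smaller than the crop")
    top, left = (target_h - h) // 2, (target_w - w) // 2
    return [[img[(i - top) % h][(j - left) % w] for j in range(target_w)]
            for i in range(target_h)]

def random_crop_origins(height, width, r, c, seed=0):
    """Top-left corners of c random r x r crops inside a height x width image."""
    rng = random.Random(seed)
    return [(rng.randint(0, height - r), rng.randint(0, width - r)) for _ in range(c)]

def training_set_size(num_images, num_intervals, crops_per_sample):
    """I x N x C samples: every image, at every interval, cropped C times."""
    return num_images * num_intervals * crops_per_sample
```

As an illustration of the arithmetic only, 180 images with N = 10 intervals and C = 20 crops would yield 180 × 10 × 20 = 36,000 training samples. A 232 × 232 crop is padded by 12 pixels on each side to reach 256 × 256.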

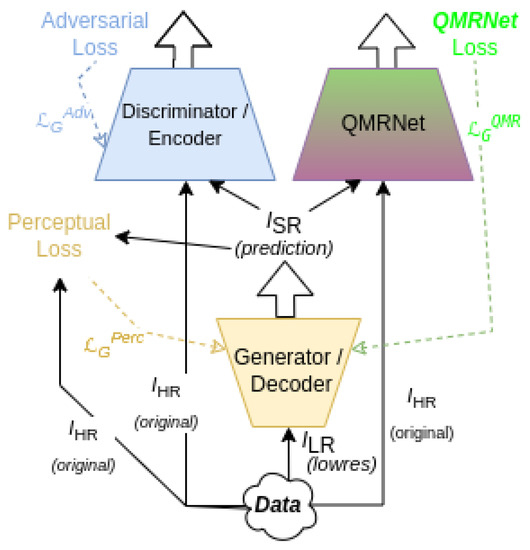

3.3. QMRLoss: Learning Quality Metric Regression as Loss in SR

We designed a novel objective function that is able to optimize super-resolution algorithms for a specific quality objective using QMRNet (see Figure 3). Given a GAN or autoencoder network, we can add an ad hoc module based on one (or several) QMRNet parameters. The QMRLoss is obtained by computing the classification error between the QMRNet prediction for the super-resolved image $I_{SR}$ and that for the original $I_{HR}$. This classification error determines whether the SR image differs from $I_{HR}$ in terms of a quality parameter objective (i.e., σ, F, GSD, RER or SNR). The QMRLoss has been designed to use any classification error (e.g., BCE, L1 or L2). It can be added to the perceptual or content loss of the generator (the decoder for autoencoders) in order to tune the SR to the quality objective.

Figure 3.

Super-resolution model pipeline (encoder–decoder for autoencoders and generator–discriminator for GANs) with ad hoc QMRNet loss optimization. Note that all losses are considered for the case of MSRN optimization with QMRNet; see Section 4.4.

The objective function of image generation algorithms is based on minimizing the generator (G) error (which compares $I_{SR}$ and $I_{HR}$) while maximizing the discriminator (D) error (which tests whether the SR image is real or fake).

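During training, G is optimized on its total loss, which combines a content (or perceptual) loss and an adversarial loss. We added a new term, the QMRLoss, which is our loss function based on the quality objectives (QMRNet). Note that here we treat the generator output $G(I_{LR})$ as the prediction image $I_{SR}$.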
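
As a reference point, the adversarial game behind this description can be written with the standard GAN minimax objective. The extended generator loss can then be written as a sum of terms. The symbols $\mathcal{L}_{content}$, $\mathcal{L}_{adv}$ and $\mathcal{L}_{QMR}$ and the unweighted sum are our notation and an assumption. The actual models may weight or replace these terms, for example with a perceptual instead of a pixel-wise content loss, or with no adversarial term for autoencoders.

$$
\min_{G}\max_{D}\; \mathbb{E}_{I_{HR}}\left[\log D(I_{HR})\right] + \mathbb{E}_{I_{LR}}\left[\log\left(1 - D(G(I_{LR}))\right)\right]
$$

$$
\mathcal{L}_{G} = \mathcal{L}_{content}(I_{SR}, I_{HR}) + \mathcal{L}_{adv}(I_{SR}) + \mathcal{L}_{QMR}(I_{SR}, I_{HR}), \qquad I_{SR} = G(I_{LR})
$$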

Below, we define the QMRLoss term, which calculates the parameter difference between the $I_{SR}$ and $I_{HR}$ images, regularized by a constant λ. It is computed as the classification error (L1, L2 or BCE) between the outputs of the QMRNet heads for each case.
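
One formulation consistent with this description is $\mathcal{L}_{QMR} = \lambda \, E\big(h(I_{SR}), h(I_{HR})\big)$. Here, $h$ denotes the per-interval output of a QMRNet head and $E$ is L1, L2 or BCE. The sketch below only shows the arithmetic of that formula on head outputs. In practice it would be computed inside an autodiff framework so that gradients flow back to the generator. Using the HR head output as a soft BCE target, keeping QMRNet frozen, and summing over parameters are all our assumptions.

```python
import math

def l1(a, b):
    """Mean absolute difference between two head outputs."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def l2(a, b):
    """Mean squared difference between two head outputs."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def soft_bce(pred, target, eps=1e-7):
    """BCE with the HR head output used as a soft target (assumed choice)."""
    total = 0.0
    for p, t in zip(pred, target):
        p = min(max(p, eps), 1.0 - eps)
        total -= t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    return total / len(pred)

ERRORS = {"l1": l1, "l2": l2, "bce": soft_bce}

def qmr_loss(head_sr, head_hr, lam=1.0, kind="l1"):
    """lam * E(h(I_SR), h(I_HR)) for one quality parameter; both inputs are
    per-interval probabilities from the same (assumed frozen) QMRNet head."""
    return lam * ERRORS[kind](head_sr, head_hr)

def total_qmr_loss(heads_sr, heads_hr, lam=1.0, kind="l1"):
    """Sum of per-parameter QMRLoss terms, e.g. one per multihead output."""
    return sum(qmr_loss(heads_sr[k], heads_hr[k], lam, kind) for k in heads_hr)
```

With L1 or L2, the term vanishes when QMRNet rates $I_{SR}$ exactly like $I_{HR}$. With soft BCE, the minimum is reached at the same point but equals the entropy of the HR output rather than zero.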

4. Experiments

4.1. Experimental Setup

For training QMRNet, we collected 30 cm/px data from the Inria Aerial Image Labeling Dataset, using the Inria-AILD sets for both training and validation. For testing our network, we selected all 11 subsets from the distinct EO datasets USGS, UCMerced, Inria, DeepGlobe, Shipsnet, ECODSE and XView (see Table 1).

4.1.1. Evaluation Metrics

To validate the training regime, we set several evaluation metrics (Table 4, Table 5 and Table 6) that account for interval dependencies in each prediction. Namely, intervals closer to the target interval are considered better predictions than those further away. For example, given an unblurred image, predicting a strongly blurred interval is worse than predicting an interval closer to the GT. For this, we considered retrieval metrics (N-rank order classification) such as medR and recall rate at K (R@K) [49,50]. We also considered performance statistics (precision, recall, accuracy, F-score) at different intervals close to the target (Precision@K, Recall@K, Accuracy@K, F-Score@K) and the overall area under the ROC curve (AUC). The retrieval metric medR measures the median absolute interval difference between predicted and target classes. For instance, with 10 classes for the GSD modifier (30, …, 60), predictions one interval away from the targets give a medR of 1.0, while targets of 30 predicted as 60 give a medR of 9.0. R@K measures the recall of predictions whose interval distance from the target is lower than K, computed over a window around the target (i.e., if there are 40 classes and K is 10, only the 10 classes around the target label are considered for evaluation).
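
Both retrieval metrics can be computed directly from predicted and target class indices. The sketch below follows the reading given in the text: medR is the median absolute interval difference, and R@K is the share of samples whose prediction lies within an interval distance lower than K. It does not model the evaluation window, and the exact window convention used for the reported results may differ.

```python
import statistics

def interval_distances(pred_idx, target_idx):
    """Absolute difference between predicted and target interval indices."""
    return [abs(p - t) for p, t in zip(pred_idx, target_idx)]

def med_r(pred_idx, target_idx):
    """medR: median absolute interval difference between predictions and targets."""
    return statistics.median(interval_distances(pred_idx, target_idx))

def recall_at_k(pred_idx, target_idx, k):
    """R@K as read here: share of samples predicted within an interval distance < K."""
    d = interval_distances(pred_idx, target_idx)
    return sum(1 for x in d if x < k) / len(d)
```

Using the example from the text (10 GSD classes), targets at class 0 (30 cm/px) predicted at class 9 (60 cm/px) give `med_r = 9`. Predictions one interval away give `med_r = 1`.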

Table 4.

Validation metrics for QMRNet (ResNet18) with all modifiers in Inria-AILD-180-test. Note that R (height × width) defines the input resolution of the network: 1024 × 1024, 512 × 512, 256 × 256, 128 × 128 and 64 × 64. Underline represents top-1 best performance. Italics represent the same value for most cases.

Table 5.

Validation metrics for multiparameter prediction with QMRNet-MH (multihead) and QMRNet-MB (multibranch) in Inria-AILD-180-test. Note: QMRNet-MB (one QMRNet branch per parameter) validation is equivalent to running several parameters from Figure 4 jointly. Underline represents top-1 best performance. Italics represent the same value for most cases.

Table 6.

Validation metrics in the ECODSE Competition hyperspectral image dataset with crops of 80 × 80 and 426 bands ranging from 383 to 2512 nm with a spectral resolution of 5 nm.

In Table 7, Table 8 and Table 11 we add another quality metric in addition to the modifier-based ones: an overall quality score. It describes the overall quality ranking (from 0.0 to 1.0) of an image or dataset and is calculated as the weighted mean of the metrics, each metric with its own objective target (min↓ or max↑) as described in Table 2.

Table 7.

Mean IQA results of datasets given QMRNet (ResNet18) trained over 180 images (Inria-AILD-180-train) and 5 modifiers. Underline represents top-1 best performance. Italics represent the same value for most cases.

Table 8.

Mean no-reference Quality Metric Regression (QMRNet trained on Inria-AILD-180-train) metrics on super-resolution of downsampled inputs in UCMerced-380. Bold indicates lower distortion than HR. Underline represents top-1 best performance. Italics represent the same value for most cases.

For each quality metric M, we define the total of the metric (its full span of interval values), an objective value (e.g., for blur it is the minimum, 1.0, since the best quality corresponds to minimizing blur) and a weight for the total weighted sum (by default, if every metric keeps the same importance, the weight is 1/m, where m is the total number of modifiers; in our case, m = 5).
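A minimal sketch of such a weighted score is shown below. The text above only specifies a weighted mean of the metrics with per-metric objectives, spans and default weights 1/m; the normalization used here (one minus the distance to the objective divided by the metric's span, clipped to [0, 1]) is an assumption for illustration, as are the function name and the example values, which are not results from this study.

```python
def overall_score(values, objectives, ranges, weights=None):
    """Hypothetical overall quality score in [0, 1].

    values:     predicted value of each modifier metric
    objectives: best (target) value of each metric, e.g. the minimum for blur
    ranges:     total span (max - min) of each metric's intervals
    weights:    importance of each metric; defaults to 1/m for m metrics
    """
    m = len(values)
    if weights is None:
        weights = [1.0 / m] * m
    total = 0.0
    for value, objective, span, w in zip(values, objectives, ranges, weights):
        # 1.0 at the objective, decreasing linearly to 0.0 one full span away
        closeness = 1.0 - abs(value - objective) / span
        total += w * min(max(closeness, 0.0), 1.0)
    # Weighted mean (dividing by the weight sum keeps non-normalized weights valid)
    return total / sum(weights)


# Illustrative values only: a blur at its objective (1.0) and a second metric
# halfway between its objective and the far end of its span
print(overall_score([1.0, 0.5], [1.0, 1.0], [2.0, 1.0]))  # 0.75
```

Changing the weights shifts the ranking toward the metrics that matter most for a given use case, which is why equal weights are only the default.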

4.1.2. Training and Validation

We trained our network with Inria-AILD sets of 180 images each for the training, validation and test subsets (Inria-AILD-180-train, Inria-AILD-180-val and Inria-AILD-180-test, respectively), selecting 100 images for training and 20 for validation (proportional to 45% and 12% of the total, respectively). We processed all samples of the dataset with distinct intervals for each modifier (thus annotating each sample with its modification interval) and built our network with distinct heads for blur, sharpness, GSD, SNR and RER. We selected a distinct number of crops C for each resolution R: 10 crops of 1024 × 1024, 20 crops of 512 × 512, 50 crops of 256 × 256, 100 crops of 128 × 128 and 200 crops of 64 × 64. Thus, we generated datasets with different input resolutions while adapting the number of crops to each resolution. The total number of training samples becomes 180 × C × N, where N is the number of intervals of the modifier (e.g., a 64 × 64 image set contains 1.8M crop samples).
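As an arithmetic check of the dataset sizes above, the sketch below assumes that every crop of every image is rendered once per modifier interval N. Under that assumption, the reported 1.8M samples for 64 × 64 crops correspond to 180 images × 200 crops × 50 intervals, where 50 is the number of blur intervals given in the Figure 4 caption. The names are illustrative.

```python
# Crops per image (C) used for each input resolution R (in pixels), as listed above
CROPS_PER_RESOLUTION = {1024: 10, 512: 20, 256: 50, 128: 100, 64: 200}


def total_samples(n_images, crops_per_image, n_intervals):
    """Number of modified crops when every crop of every image is rendered
    once per modifier interval (an assumption made for this sketch)."""
    return n_images * crops_per_image * n_intervals


# 180 images and 50 blur intervals
for res, crops in CROPS_PER_RESOLUTION.items():
    print(f"{res} x {res}: {total_samples(180, crops, 50):,} samples")
```

Since C grows as R shrinks, the smaller-crop sets contain many more samples even though each covers less image area.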

We ran our training and validation experiments for 200 epochs with distinct hyperparameter settings, including momentum, and a soft threshold of 0.3 (to convert soft labels into hard/one-hot labels). Due to computational constraints, the training batch size was selected according to the input resolution of each set.

In Table 4 we show the validation results (Inria-AILD-180-test) of QMRNet with a ResNet18 backbone trained on Inria-AILD-180-train. The overall medRs are around 1.0 (predictions are about one interval away from the targets), and the recall rates are around 70% for top-1 (R@1, exact match) and around 100% for R@5 and R@10 (interval distance to the target below 5 and 10, respectively). This means our network is able to predict the parameter values (blur, sharpness F, GSD, SNR and RER) with very high retrieval precision, even when the parameters are fine-grained (e.g., 40 or 50 class intervals). The best results appear for parameters with low N (smaller classification tasks), such as F and GSD. Here, GSD is mostly an easy task, as the scaling of objects is constant whether the images are distorted or not. In terms of crop size, the best results are mostly obtained with higher input resolutions; this may vary depending on the selected encoder backbone (here, ResNet18) and the input resolution it is usually trained with.

Table 5 shows the validation results for multiparameter prediction, in which we tested multibranch QMRNet (QMRNet-MB) and multihead QMRNet (QMRNet-MH). The performance of both is similar to that of single-parameter prediction (Table 4), with medR around 1.0 and recall rates around 70% for R@1 and above 90% for R@5 and R@10. We tested predictions for three pairs of simultaneous parameters; overall, QMRNet-MB obtains better results than QMRNet-MH for two of the pairs but slightly worse results for the pair involving sharpness F (see Table 5).

Table 6 shows the validation results for QMRNet's prediction of two quality parameters in hyperspectral images (ECODSE RSData with 426 bands per pixel). By replacing the first convolutional layer of QMRNet's encoder backbone with one that accepts multiband input channels, we can classify the quality metric for multichannel and hyperspectral images. Overall, medR is around 5.0 and the recall rates are around 20% for R@1 (exact match), 56% for R@5 (five closest categories) and 80% for R@10 (ten closest categories). Here, the precision is lower due to the difficulty of the task, given the hyperspectral resolution (i.e., 80 × 80 crops at 60 cm/px) and the very few examples (43). Despite this difficulty, QMRNet with ResNet18 is able to measure whether each parameter lies in a specific range in hyperspectral images.

In Figure 4 we can see that most of the worst predictions for blur, sharpness, RER and SNR appear when predicting over crops with sparse or homogeneous features, namely, when most of the image has little pixel information (i.e., similar pixel values), such as the sea or flat terrain surfaces. This is because, in such regions, the modified samples show few or no differences across the intervals of each modifier parameter. This affects the evaluation of datasets: the sparser the surfaces, the harder the predictions.

Figure 4.

Correct and incorrect prediction examples of QMRNet on Inria-AILD-180 validation (crop resolution, i.e., R = 128 × 128) given interval rank error (classification label distance between GT and prediction, maximum is N for each net, i.e., 50 for blur, 10 for sharpness and 40 for snr and rer). medR is the overall median rank (err).

4.2. Results on QMRNet for IQA: Benchmarking Image Datasets

We ran our QMRNet with a ResNet18 backbone over the sets described in Table 1 (see the EO dataset evaluation use case at https://github.com/dberga/iquaflow-qmr-eo, accessed on 10 October 2022). Although our network was trained only on Inria-AILD-180-train, it is able to adapt and predict feasible quality metrics (blur, GSD, sharpness F, SNR and RER) over each of the distinct datasets. With QMRNet fine-tuned over Inria-AILD-180-train, the overall predicted blur for most of the datasets corresponds to the unblurred level of the ground truth, except for USGS279 and Inria-AILD-180-test, which show a higher blur level. For the sharpness factor F, the overall value for most datasets indicates no oversharpening, but some datasets, such as UCMerced380 and Shipsnet, appear to be oversharpened (see Table 7). Most datasets present the same overall predicted values for the two remaining modifiers (one of them equal to 28.67; see Table 7). The datasets with the highest overall score are Inria-AILD-180-train, UCMerced2100 and USGS279, considering the same weight for each modifier metric M.

4.3. Results on QMRNet for IQA: Benchmarking Image Super-Resolution

Here we selected a set of super-resolution algorithms that have previously been tested on high-quality real-image SR benchmarks such as BSD100, Urban100 or Set5 ×2 (https://paperswithcode.com/task/image-super-resolution, accessed on 10 October 2022), and we apply them to EO data and metrics. For this, we benchmark their performance considering full-reference, no-reference and our QMRNet-based metrics (see the super-resolution benchmark use case at https://github.com/dberga/iquaflow-qmr-sisr, accessed on 10 October 2022). QMRNet allows us to check the amount of each distortion for every transformation (LR) applied to the original image (HR), whether it is the usual x2, x3 or x4 downsampling or a specific distortion such as blurring.

Concretely, we tested our UCMerced subset of 380 images with crops of 256 × 256 using autoencoder algorithms (FSRCNN and MSRN) as well as GAN-based and self-supervised architectures such as SRGAN, ESRGAN, CAR and LIIF. All model checkpoints are vanilla (default hyperparameter settings) except for the input scaling (x2, x3, x4) and for MSRN, for which we computed three versions of the vanilla MSRN (architecture with four scales): one without fine-tuning, one with fine-tuning and added noise, and one with fine-tuning (over Inria-AILD-180-train).

In Table 8 we evaluate each type of modifier parameter for every super-resolution algorithm, as well as the overall score over all quality metric regressions. Here, we tested the algorithms with x2, x3 and x4 downsampled inputs (LR), as well as with an added blur filter of scaled strength. QMRNet is able to predict which configuration gives the worst ranking for most metrics (see Table 8). FSRCNN and SRGAN give similar results in most metrics, with SRGAN being slightly better in two of them. MSRN shows the best results in two metrics, including sharpness F, mainly when the inputs have a higher resolution (i.e., x2, x3). For the overall scores, CAR presents the best results in two of the modifier metrics, with the highest score ranking in most downsampling cases. However, CAR has the worst ranking in the noise and sharpness metrics (SNR and F). As mentioned earlier, CAR presents oversharpening and hallucinations, which can trick some metrics that measure blur but worsen those that predict unusual signal-to-noise ratios and illusory edges. In contrast, LIIF performs poorly in two of the metrics (meaning that LIIF's images appear slightly blurred), although it achieves an overall good performance for the rest of the modifier metrics. We want to point out that in some metrics (including F), some of the tested algorithms (Table 8, Table 9 and Table 10) show lower distortion values than the original HR. This means that our metrics can reveal whether an image presents a distortion effect (whether oversharpening, blur or noise) beyond its image quality, unlike full-reference metrics, which are bounded by the quality of the reference samples.

Table 9.

Mean full-reference metrics on super-resolution of downsampled inputs in UCMerced-380. Underline represents top-1 best performance.

Table 10.

Mean no-reference noise (SNR) and contour sharpness (RER, MTF, FWHM) metrics on super-resolution of downsampled inputs in UCMerced-380. Underline represents top-1 best performance.

In Table 9 we show a benchmark of well-known full-reference metrics. When super-resolving x2, two of the MSRN versions have the best results for full-reference metrics, including SSIM, PSNR, SWD, FID, MSSIM, HAARPSI and MDSI. In x3 and x4, LIIF and CAR have the best results for most of these metrics, including PSNR, FID, GMSD and MDSI, and rank in the top 3 for most metric evaluations. Here we have to point out that LIIF does not perform as well when the input (LR) has been blurred, whereas CAR is able to deblur the input better than other algorithms, as it oversharpens the originally downscaled and/or blurred LR. In Table 10 we show the no-reference metric results, here for SNR, RER, MTF and FWHM. SRGAN, MSRN and LIIF present significantly better SNR results than the other algorithms. This means that, in general, these algorithms do not add noise to the input, namely, the generated images do not contain artifacts that were not present in the original image. In this case, CAR outperforms the rest in RER, MTF and FWHM.

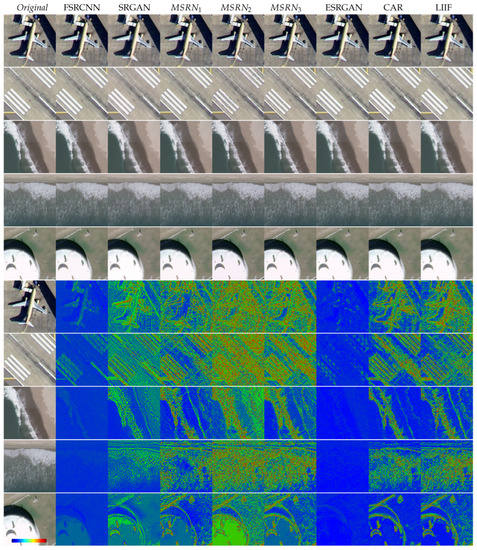

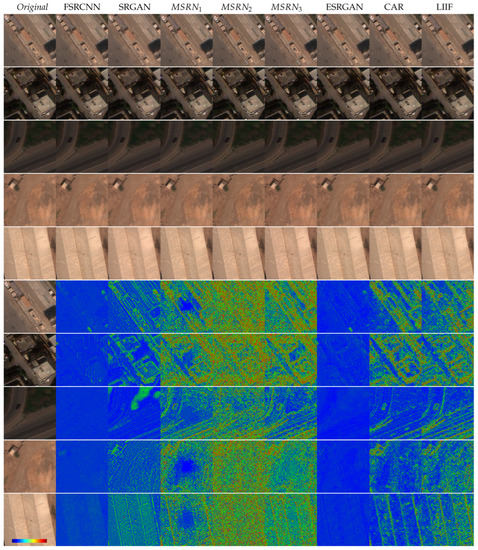

In Figure 5 and Figure 6 we super-resolve the original UCMerced (x3) and XView (x4) images, and we can observe that some algorithms, such as FSRCNN, SRGAN, ESRGAN and LIIF, present a similar (blurred) output, while others, such as CAR, present higher noise and oversharpening of borders, trying to enhance the features of the image (here, attempting to generate features beyond the content). The noise and oversharpening are distinguishable in the colormaps of buildings (e.g., Figure 5, row 10 and Figure 7, row 6).

Figure 5.

Examples of super-resolving original UCMerced images (with a crop zoom, i.e., 128 × 128) and each SR algorithm output. Inputs (original HR) without downsampling (i.e., super-resolving x3). Rows 6–10 show colormaps of the sum of differences (R + G + B) with respect to the original HR.

Figure 6.

Examples of super-resolving original XView images (with a crop zoom, i.e., 128 × 128) and each SR algorithm output. Inputs (original HR) without downsampling (i.e., super-resolving x4). Rows 6–10 show colormaps of the sum of differences (R + G + B) with respect to the original HR.

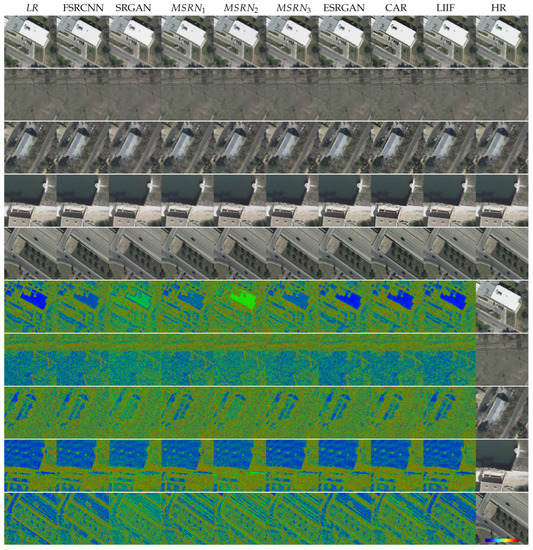

Figure 7.

Low-resolution examples of Inria-AILD-180-val images (crops of 256 × 256) and each SR algorithm output. LR (the input to the algorithms) is the x3 downsampling of the original HR. Rows 6–10 show colormaps of the sum of differences (R + G + B) with respect to the original HR.

For low-resolution (LR) inputs we can qualitatively see (Figure 7) that FSRCNN, SRGAN and LIIF present blurred outputs. ESRGAN does not change the appearance much with respect to the original image (see the differences in the colormaps) but simply adds some residual noise at the edges. CAR, however, seems to obtain better results, although in some cases it appears to be oversharpened (similar to MSRN). We can observe that the MSRN algorithms do not perform well when super-resolving very-low-resolution images (i.e., the downsampled LR inputs). Their original training set might not have included very-low-resolution image samples. See Section 4.4 for MSRN optimization using QMRNet.

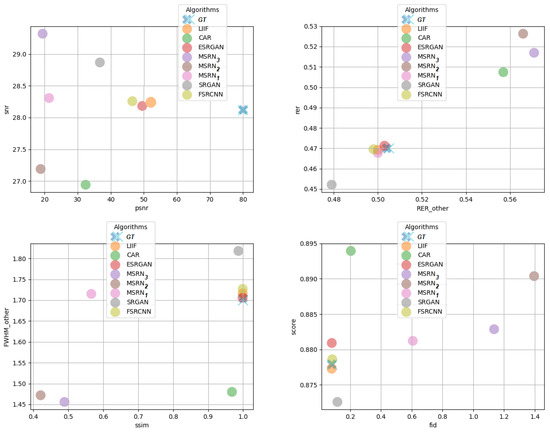

Above (Figure 8) we demonstrate the validity of some of our metric results by comparing them with each homologous measurement, namely, the ones measuring similar or the same properties. Here, we compared QMRNet’s and PSNR↑. These measure the quantity of noise over information of the image. The first subplot shows an anticorrelation (↙) on the algorithm values in these two metrics, with LIIF being closest to the (GT) and CAR, and having both the lowest (best) and PSNR (worst). For the case of QMRNet’s and measured (which corresponds to the RER that measures diagonal contours), there is a positive correlation (↗), with CAR, and outperforming the rest of the algorithms. We also compared and SSIM↑ to see how well each algorithm performs when evaluating the diagonal contour width as well as the structural similarity, and it appears that , and CAR have the lowest (best) and most algorithms have the same values of SSIM as the original GT images (unchanged). In the last subplot we compared the QMRNet’s (composed of the weighted mean of QMRNet’s , , , and F) and FID↓, which measures the Fréchet distribution distance between images. Here , and CAR show the highest with higher (worse) FID, while most algorithms are close to the original image (almost unchanged). Note that in these plots we super-resolve x4 the original image so that full-reference metrics can only compare with the original image (thus, there is no downsampling of inputs so that the would be equivalent to the input). Here, we need to consider how the algorithms actually perform in metrics that can evaluate better than the original image.

Figure 8.

Scatter plots of metric comparison on super-resolving (x4) UCMerced dataset.

4.4. Results on QMRLoss: Optimizing Image Super-Resolution

In this section, we integrated the aforementioned QMRLoss as an ad hoc regularization strategy for optimizing SR algorithms (see the QMRLoss optimization use case at https://github.com/dberga/iquaflow-qmr-loss, accessed on 10 October 2022). For this case, we integrated different loss methods (L1, L2 and BCE) as QMRLoss with different modifiers in MSRN training. We regularized the MSRN architecture by adding the QMRLoss to the total loss, namely, summing it with the adversarial loss and the perceptual loss (in this case, VGGLoss). This QMRLoss regularization mechanism is intended to allow MSRN, and potentially any other algorithm, to avoid quality mismatches, considering several metrics that measure distortions simultaneously (see results in Table 11).

Table 11.

Test metrics on super-resolution (super-resolving original input x3 or using downsampled inputs x3) using an MSRN backbone + QMRLoss in UCMerced-380. QMRLoss is computed with distinct QMRNets (for blur, SNR and RER) trained over Inria-AILD-180-train using crops of R = 256 × 256. Note that here we are testing MSRN + QMRLoss over UCMerced samples, while QMRNet's training is on Inria-AILD. Bold indicates lower distortion than HR. Underline represents top-1 best performance. Italics represent the same value for most cases.

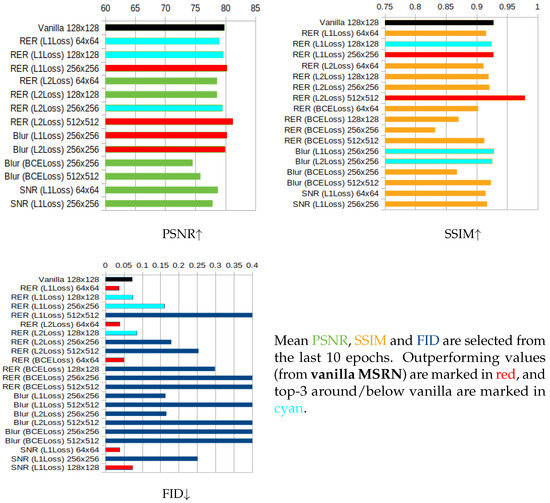

In Figure 9 we show that several QMRLoss strategies obtain better results than vanilla MSRN in the PSNR, SSIM and FID metrics. The PSNR improves with QMRNet using L1 loss and crops of 256 × 256, as well as with L2 loss and crops of 512 × 512. It also improves with another QMRNet metric with both L1 and L2 loss on 256 × 256 crops. The SSIM improves with L1 loss in the QMRNet that uses RER and significantly (almost 1.0) with L2 loss and crops of 512 × 512. For FID, QMRLoss improves MSRN with all types of losses (L1, L2 and BCE) using crops of 64 × 64 for one of the metrics, as well as with L1 loss and crops of 64 × 64 and 128 × 128 for another (see Figure 9).

Figure 9.

Validation of QMRLoss optimizing MSRN in super-resolution of Inria-AILD-180. Note that the training/validation regime was conducted over Inria-AILD-180 with 100-20 image splits and crops set to 64 × 64, 128 × 128, 256 × 256 and 512 × 512.

We also evaluated the images generated by MSRN + QMRLoss (adding QMRNet's metric evaluation) with most of our full-reference and no-reference metrics on the UCMerced-380 dataset (outside Inria-AILD's training and validation distribution) with crops of 256 × 256. Here, vanilla MSRN yields worse results than the QMRLoss-optimized versions (using the blur, SNR and RER QMRNets) for several QMRNet metrics, as well as for SNR, RER (mean of X and Y ↑), MTF (mean of X and Y ↑) and FWHM (mean of X and Y ↓). QMRLoss has been able to adapt better when generating contours and predicting blurred objects on shapes that differ from those of the original training. In the full-reference metrics, one of the configurations is more similar to the original (although seemingly blurred), as it makes fewer changes to the image. In the no-reference metrics, the QMRLoss-optimized MSRN significantly improves with respect to vanilla MSRN (see Table 11).

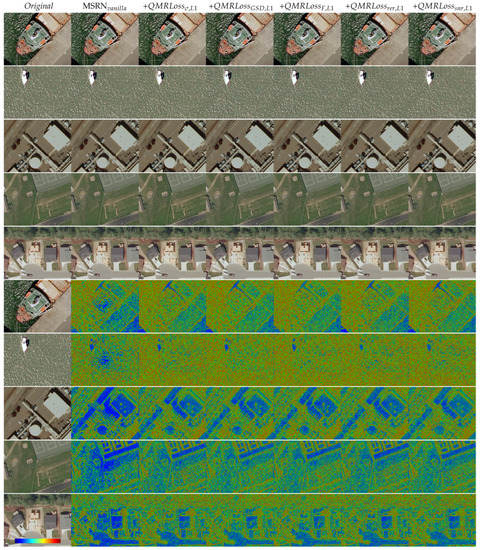

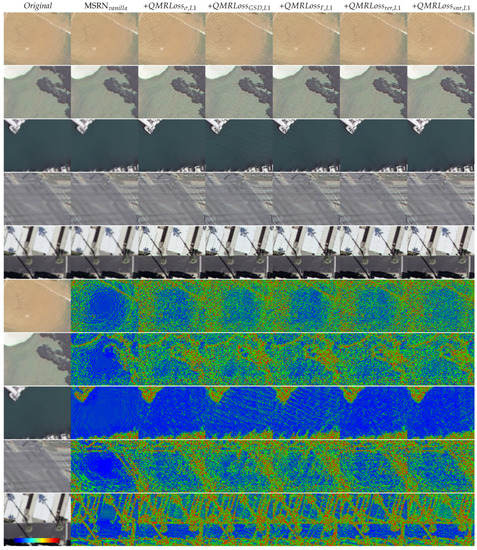

Figure 10, Figure 11 and Figure 12 show the changes produced when super-resolving UCMerced and Inria-AILD images with each QMRLoss optimization. In most cases of MSRN (column 2 colormaps) there is a center bias, especially in sparse/homogeneous regions. In Figure 11 (row 3) and Figure 12 (row 1), it can be observed that two of the QMRLoss variants significantly enhance the noise present in the homogeneous areas (sea/beach), while the other three present a smoother solution, albeit with higher oversharpening than MSRN.

Figure 10.

Examples of Inria-AILD-180-test images (with a crop zoom of 256 × 256) and each QMRNet algorithm output (QMRLoss). Inputs (original HR) without downsampling (i.e., super-resolving x3). Rows 6–10 show colormaps of the sum of differences (R + G + B) with respect to the original HR.

Figure 11.

Examples of UCMerced images (with a crop zoom of 96 × 96) and each QMRNet algorithm output (QMRLoss). Inputs (original HR) without downsampling (i.e., super-resolving x4). Rows 6–10 show colormaps of the sum of differences (R + G + B) with respect to the original HR.

Figure 12.

Examples of UCMerced images (with a crop zoom of 96 × 96) and each QMRNet algorithm output (QMRLoss). Inputs (original HR) without downsampling (i.e., super-resolving x4). Rows 6–10 show colormaps of the sum of differences (R + G + B) with respect to the original HR.

5. Conclusions

In this study, we implement an open-source tool (integrated in the IQUAFLOW framework) for assessing the quality of EO images and modifying them. We propose a network architecture (QMRNet) that predicts the amount of distortion for each parameter as a no-reference metric. We also benchmark distinct super-resolution algorithms and datasets with both full-reference and no-reference metrics, and propose a novel mechanism for optimizing super-resolution training regimes using QMRLoss, which integrates QMRNet metrics with SR algorithm objectives. We tested the performance in single-parameter prediction of blur, SNR, RER, sharpness F and GSD, as well as in multiparameter prediction. In addition to high-resolution color EO images, we adapted and tested the QMRNet architecture for the prediction of two quality parameters in hyperspectral EO images.

When assessing the image quality of datasets, we observe similar overall scores for most datasets, with differences in the scores of some modifiers (see Table 7). When assessing single-image super-resolution, we see significantly better results for CAR, LIIF and two of the MSRN versions. Optimizing MSRN with QMRLoss (SNR, RER and blur) improves the results on both full-reference and no-reference metrics with respect to the default vanilla MSRN.

We have to point out that our proposed method can be applied to any type of distortion or modification. QMRNet can be trained to predict any parameter of the image, as well as several parameters simultaneously. For instance, training QMRNet to assess compression parameters could be another use case of interest, including other datasets mentioned in Section 2. We also tested the use of QMRNet as a loss for optimizing SR results by regularizing the MSRN network, but it could be extended to distinct algorithm architectures and uses, as QMRLoss could in principle help reverse or denoise any modification of the original image. In addition, it is also possible to implement a variation of the QMRLoss objective by forcing the loss calculation to target a specific interval with maximum quality and minimal distortion for each parameter. In that way, the algorithm could optimize toward a specific metric or objective beyond matching the GT.

Author Contributions

Conceptualization, D.B., D.V. and J.M.; methodology, D.B.; software, D.B., P.G., L.R.-C., K.T., C.G.-M. and E.M.; validation, D.B.; formal analysis, D.B.; investigation, D.B. and J.M.; resources, D.B. and J.M.; data curation, D.B.; writing—original draft, D.B.; writing—review and editing, D.B., P.G. and J.M.; visualization, D.B.; supervision, D.B., J.M., P.G. and D.V.; project administration, D.B., J.M. and D.V.; funding acquisition, D.B., J.M. and D.V. All authors have read and agreed to the published version of the manuscript.

Funding

The project was financed by the Ministry of Science and Innovation (MICINN) and by the European Union within the framework of FEDER RETOS-Collaboration of the State Program of Research (RTC2019-007434-7), Development and Innovation Oriented to the Challenges of Society, within the State Research Plan Scientific and Technical and Innovation 2017–2020, with the main objective of promoting technological development, innovation and quality research.

Data Availability Statement

The research code and data are specified in the following repositories:

- IQUAFLOW https://github.com/satellogic/iquaflow (accessed on 2 April 2023);

- IQUAFLOW-QMRNet https://github.com/satellogic/iquaflow/tree/main/iquaflow/quality_metrics (accessed on 2 April 2023);

- IQUAFLOW-Modifiers https://github.com/satellogic/iquaflow/tree/main/iquaflow/datasets (accessed on 2 April 2023);

- Case 1: Benchmark Datasets https://github.com/dberga/iquaflow-qmr-eo (accessed on 2 April 2023);

- Case 2: Benchmark Super-Resolution https://github.com/dberga/iquaflow-qmr-sisr (accessed on 2 April 2023);

- Case 3: Benchmark QMRLoss https://github.com/dberga/iquaflow-qmr-loss (accessed on 2 April 2023).

Conflicts of Interest

The authors declare that they have no known competing financial interests nor personal relationships that could have appeared to influence the work reported in this paper.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| SR | Super-Resolution (SR image) |
| HR | High-Resolution (HR image) |
| LR | Low-Resolution (LR image) |
| EO | Earth Observation |
| IQA | Image Quality Assessment |
| GSD | Ground Sampling Distance |
| GAN | Generative Adversarial Networks |
| SNR | Signal-to-Noise Ratio |
| RER | Relative Edge Response |
| MTF | Modulation Transfer Function |
| LSF | Line Spread Function |
| PSF | Point Spread Function |
| FWHM | Full Width at Half Maximum |
| PSNR | Peak Signal-to-Noise Ratio |
| SSIM | Structural Similarity |
| MSSIM | Mean Structural Similarity |
| HAARPSI | Haar Wavelet Perceptual Similarity Index |
| GMSD | Gradient Magnitude Similarity Deviation |
| MDSI | Mean Deviation Similarity Index |
| SWD | Sliced Wasserstein Distance |
| FID | Fréchet Inception Distance |

References

- Leachtenauer, J.C.; Driggers, R.G. Surveillance and Reconnaissance Imaging Systems: Modeling and Performance Prediction; Artech House Optoelectronics Library: Norwood, MA, USA, 2001. [Google Scholar]

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Image Super-Resolution Using Deep Convolutional Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 295–307. [Google Scholar] [CrossRef]

- Yamanaka, J.; Kuwashima, S.; Kurita, T. Fast and Accurate Image Super Resolution by Deep CNN with Skip Connection and Network in Network. In Neural Information Processing; Springer International Publishing: Long Beach, CA, USA, 2017; pp. 217–225. [Google Scholar] [CrossRef]

- Müller, M.U.; Ekhtiari, N.; Almeida, R.M.; Rieke, C. Super-resolution of multispectral satellite images using convolutional neural networks. arXiv 2020, arXiv:2002.00580. [Google Scholar] [CrossRef]

- Li, J.; Fang, F.; Mei, K.; Zhang, G. Multi-scale Residual Network for Image Super-Resolution. In Proceedings of the European Conference on Computer Vision (ECCV); Springer International Publishing: Munich, Germany, 2018; pp. 527–542. [Google Scholar] [CrossRef]

- Ledig, C.; Theis, L.; Huszar, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017. [Google Scholar] [CrossRef]

- Wang, X.; Yu, K.; Wu, S.; Gu, J.; Liu, Y.; Dong, C.; Qiao, Y.; Loy, C.C. ESRGAN: Enhanced Super-Resolution Generative Adversarial Networks. In Workshop of the European Conference on Computer Vision (ECCV); Springer International Publishing: Munich, Germany, 2019; pp. 63–79. [Google Scholar] [CrossRef]

- Jiang, Y.; Gong, X.; Liu, D.; Cheng, Y.; Fang, C.; Shen, X.; Yang, J.; Zhou, P.; Wang, Z. EnlightenGAN: Deep Light Enhancement Without Paired Supervision. IEEE Trans. Image Process. 2021, 30, 2340–2349. [Google Scholar] [CrossRef] [PubMed]

- Kim, J.; Lee, J.K.; Lee, K.M. Accurate Image Super-Resolution Using Very Deep Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR Oral), Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar]

- Sun, W.; Chen, Z. Learned image downscaling for upscaling using content adaptive resampler. IEEE Trans. Image Process. 2020, 29, 4027–4040. [Google Scholar] [CrossRef] [PubMed]

- Chen, Y.; Liu, S.; Wang, X. Learning Continuous Image Representation with Local Implicit Image Function. arXiv 2020, arXiv:2012.09161. [Google Scholar]

- Pradham, P.; Younan, N.H.; King, R.L. Concepts of image fusion in remote sensing applications. In Image Fusion; Elsevier: Amsterdam, The Netherlands, 2008; pp. 393–428. [Google Scholar] [CrossRef]

- Huynh-Thu, Q.; Ghanbari, M. Scope of validity of PSNR in image/video quality assessment. Electron. Lett. 2008, 44, 800. [Google Scholar] [CrossRef]

- Wang, Z.; Bovik, A.; Sheikh, H.; Simoncelli, E. Image Quality Assessment: From Error Visibility to Structural Similarity. IEEE Trans. Image Process. 2004, 13, 600–612. [Google Scholar] [CrossRef] [PubMed]

- Reisenhofer, R.; Bosse, S.; Kutyniok, G.; Wiegand, T. A Haar wavelet-based perceptual similarity index for image quality assessment. Signal Process. Image Commun. 2018, 61, 33–43. [Google Scholar] [CrossRef]

- Xue, W.; Zhang, L.; Mou, X.; Bovik, A.C. Gradient Magnitude Similarity Deviation: A Highly Efficient Perceptual Image Quality Index. IEEE Trans. Image Process. 2014, 23, 684–695. [Google Scholar] [CrossRef]

- Nafchi, H.Z.; Shahkolaei, A.; Hedjam, R.; Cheriet, M. Mean Deviation Similarity Index: Efficient and Reliable Full-Reference Image Quality Evaluator. IEEE Access 2016, 4, 5579–5590. [Google Scholar] [CrossRef]

- Varga, D. Full-Reference Image Quality Assessment Based on an Optimal Linear Combination of Quality Measures Selected by Simulated Annealing. J. Imaging 2022, 8, 224. [Google Scholar] [CrossRef] [PubMed]

- Lim, P.C.; Kim, T.; Na, S.I.; Lee, K.D.; Ahn, H.Y.; Hong, J. Analysis of UAV image quality using edge analysis. Int. Arch. Photogramm. Remote. Sens. Spat. Inf. Sci. 2018, 42, 359–364. [Google Scholar] [CrossRef]

- Mittal, A.; Soundararajan, R.; Bovik, A.C. Making a “Completely Blind” Image Quality Analyzer. IEEE Signal Process. Lett. 2013, 20, 209–212. [Google Scholar] [CrossRef]

- Venkatanath, N.; Praneeth, D.; Bh, M.C.; Channappayya, S.S.; Medasani, S.S. Blind image quality evaluation using perception based features. In Proceedings of the 2015 Twenty First National Conference on Communications (NCC), Mumbai, India, 27 February–1 March 2015. [Google Scholar] [CrossRef]

- Leachtenauer, J.C.; Malila, W.; Irvine, J.; Colburn, L.; Salvaggio, N. General Image-Quality Equation: GIQE. Appl. Opt. 1997, 36, 8322. [Google Scholar] [CrossRef]

- Thurman, S.T.; Fienup, J.R. Analysis of the general image quality equation. In Visual Information Processing XVII; ur Rahman, Z., Reichenbach, S.E., Neifeld, M.A., Eds.; SPIE: Bellingham, WA, USA, 2008. [Google Scholar] [CrossRef]

- Kim, T.; Kim, H.; Kim, H.D. Image-based Estimation and Validation of Niirs for High-resolution Satellite Images. Int. Arch. Photogramm. Remote. Sens. Spat. Inf. Sci. 2008, 37, 1–4. [Google Scholar]

- Li, L.; Luo, H.; Zhu, H. Estimation of the Image Interpretability of ZY-3 Sensor Corrected Panchromatic Nadir Data. Remote Sens. 2014, 6, 4409–4429. [Google Scholar] [CrossRef]

- Benecki, P.; Kawulok, M.; Kostrzewa, D.; Skonieczny, L. Evaluating super-resolution reconstruction of satellite images. Acta Astronaut. 2018, 153, 15–25. [Google Scholar] [CrossRef]

- Johnson, J.; Alahi, A.; Fei-Fei, L. Perceptual Losses for Real-Time Style Transfer and Super-Resolution. In Computer Vision–ECCV 2016; Springer International Publishing: Amsterdam, The Netherlands, 2016; pp. 694–711. [Google Scholar] [CrossRef]

- Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The Unreasonable Effectiveness of Deep Features as a Perceptual Metric. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018. [Google Scholar] [CrossRef]

- Kolouri, S.; Nadjahi, K.; Simsekli, U.; Badeau, R.; Rohde, G. Generalized Sliced Wasserstein Distances. In Proceedings of the Advances in Neural Information Processing Systems; Wallach, H., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E., Garnett, R., Eds.; Curran Associates, Inc.: Knoxville, TN, USA, 2019; Volume 32. [Google Scholar]

- Salimans, T.; Goodfellow, I.; Zaremba, W.; Cheung, V.; Radford, A.; Chen, X. Improved Techniques for Training GANs. In Proceedings of the Advances in Neural Information Processing Systems; Lee, D., Sugiyama, M., Luxburg, U., Guyon, I., Garnett, R., Eds.; Curran Associates, Inc.: Knoxville, TN, USA, 2016; Volume 29.

- Liu, J.; Zhou, W.; Li, X.; Xu, J.; Chen, Z. LIQA: Lifelong Blind Image Quality Assessment. IEEE Trans. Multimed. 2022; Early Access.

- Chen, L.H.; Bampis, C.G.; Li, Z.; Norkin, A.; Bovik, A.C. ProxIQA: A Proxy Approach to Perceptual Optimization of Learned Image Compression. IEEE Trans. Image Process. 2021, 30, 360–373.

- Saad, M.A.; Bovik, A.C.; Charrier, C. Blind Image Quality Assessment: A Natural Scene Statistics Approach in the DCT Domain. IEEE Trans. Image Process. 2012, 21, 3339–3352.

- Mittal, A.; Moorthy, A.K.; Bovik, A.C. No-reference image quality assessment in the spatial domain. IEEE Trans. Image Process. 2012, 21, 4695–4708.

- Ye, P.; Kumar, J.; Kang, L.; Doermann, D. Unsupervised feature learning framework for no-reference image quality assessment. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 1098–1105.

- Xu, J.; Ye, P.; Li, Q.; Du, H.; Liu, Y.; Doermann, D. Blind Image Quality Assessment Based on High Order Statistics Aggregation. IEEE Trans. Image Process. 2016, 25, 4444–4457.

- Liu, X.; van de Weijer, J.; Bagdanov, A.D. RankIQA: Learning From Rankings for No-Reference Image Quality Assessment. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

- Bosse, S.; Maniry, D.; Müller, K.R.; Wiegand, T.; Samek, W. Deep Neural Networks for No-Reference and Full-Reference Image Quality Assessment. IEEE Trans. Image Process. 2018, 27, 206–219.

- Fan, C.; Zhang, Y.; Feng, L.; Jiang, Q. No Reference Image Quality Assessment based on Multi-Expert Convolutional Neural Networks. IEEE Access 2018, 6, 8934–8943.

- Zhu, H.; Li, L.; Wu, J.; Dong, W.; Shi, G. MetaIQA: Deep Meta-Learning for No-Reference Image Quality Assessment. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 14143–14152.

- Sun, S.; Yu, T.; Xu, J.; Zhou, W.; Chen, Z. GraphIQA: Learning distortion graph representations for blind image quality assessment. IEEE Trans. Multimed. 2022; Early Access.

- Zhou, W.; Wang, Z. Quality Assessment of Image Super-Resolution: Balancing Deterministic and Statistical Fidelity. arXiv 2022, arXiv:2207.08689.

- Jiang, Q.; Liu, Z.; Gu, K.; Shao, F.; Zhang, X.; Liu, H.; Lin, W. Single Image Super-Resolution Quality Assessment: A Real-World Dataset, Subjective Studies, and an Objective Metric. IEEE Trans. Image Process. 2022, 31, 2279–2294.

- Zhou, W.; Jiang, Q.; Wang, Y.; Chen, Z.; Li, W. Blind quality assessment for image superresolution using deep two-stream convolutional networks. Inf. Sci. 2020, 528, 205–218.

- Maggiori, E.; Tarabalka, Y.; Charpiat, G.; Alliez, P. Can semantic labeling methods generalize to any city? The Inria aerial image labeling benchmark. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 3226–3229.

- Dalponte, M.; Frizzera, L.; Gianelle, D. Individual tree crown delineation and tree species classification with hyperspectral and LiDAR data. PeerJ 2019, 6, e6227.

- Gallés, P.; Takáts, K.; Hernández-Cabronero, M.; Berga, D.; Pega, L.; Riordan-Chen, L.; Garcia-Moll, C.; Becker, G.; Garriga, A.; Bukva, A.; et al. iquaflow: A new framework to measure image quality. arXiv 2022, arXiv:2210.13269.

- Gallés, P.; Takáts, K.; Marín, J. Object Detection Performance Variation on Compressed Satellite Image Datasets with Iquaflow. arXiv 2023, arXiv:2301.05892.

- Carvalho, M.; Cadène, R.; Picard, D.; Soulier, L.; Thome, N.; Cord, M. Cross-Modal Retrieval in the Cooking Context. In Proceedings of the 41st International ACM SIGIR Conference on Research & Development in Information Retrieval, Ann Arbor, MI, USA, 8–12 July 2018.

- Salvador, A.; Hynes, N.; Aytar, Y.; Marin, J.; Ofli, F.; Weber, I.; Torralba, A. Learning Cross-Modal Embeddings for Cooking Recipes and Food Images. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).