1. Introduction

High-resolution remote-sensing images contain rich information on geometry, texture, and spatial distribution, and are widely used in agriculture, geological surveys, disaster detection, environmental protection, transportation, urban planning, and other fields [1,2,3,4,5,6]. With the development of remote-sensing technology, the automatic and accurate extraction of information on target buildings from high-resolution remote-sensing images has gradually become a key problem. Improvements in remote-sensing-image resolution also create new challenges. For example, when the texture and structure of a single building are inconsistent, the extracted building may be incomplete or contain internal voids. Researchers have therefore proposed a variety of algorithms for automatically extracting information on buildings from remote-sensing images, which can be mainly divided into traditional methods and deep-learning-based approaches.

Traditional methods extract buildings by using their structure and texture, together with prior information on buildings in remote-sensing images. Irvin R. B. et al. proposed a method to extract information on buildings from remote-sensing images by using building shadows [7]. Lee et al. extracted building-height information based on the building-volume shadow analysis (VSA) method [8]. However, this method is not suitable for high-density urban buildings, whose shadows might overlap. Other researchers extract information on buildings by analyzing their structure and texture. Levitt S. proposed a method based on image-texture detection [9]. This method is simple and efficient, but its actual effect depends on the choice of texture. Lin et al. adopted an edge-detection algorithm to extract information on buildings by detecting their roofs, walls, and shadows [10]. They extracted candidate building edges by analyzing line-segment features in remote-sensing images, and determined the candidate building boundaries by region segmentation, analysis, and region merging [11]. With the development of digital-image-processing technology, methods based on mathematical morphology have emerged. Xu et al. used two-dimensional Otsu segmentation for the rough segmentation of buildings, and used mathematical morphology and other means to detect and eliminate non-buildings in aerial images [12]. Gavankar et al. proposed an automatic building-extraction method for high-resolution remote-sensing images based on mathematical morphology, using the top-hat filter and the K-means algorithm [13]. In general, the traditional methods only use the shallow features of buildings. Their extraction accuracy is not high and their generalization ability is poor, so they are limited to specific cases in which the building features are prominent.

In recent years, the application of deep learning has emerged. Deep learning uses convolutional neural networks to extract and learn features in images, resulting in breakthroughs in classification, detection, and segmentation tasks. Currently, the mainstream convolutional neural networks include AlexNet [

14], VGGNet [

15], ResNet [

16], etc. Compared with traditional feature-extraction algorithms, convolutional neural networks can effectively extract the deep features of images with great levels of generalization. Scholars have designed a series of semantic segmentation networks using convolutional neural networks. Long et al. proposed the full convolutional neural network, FCN [

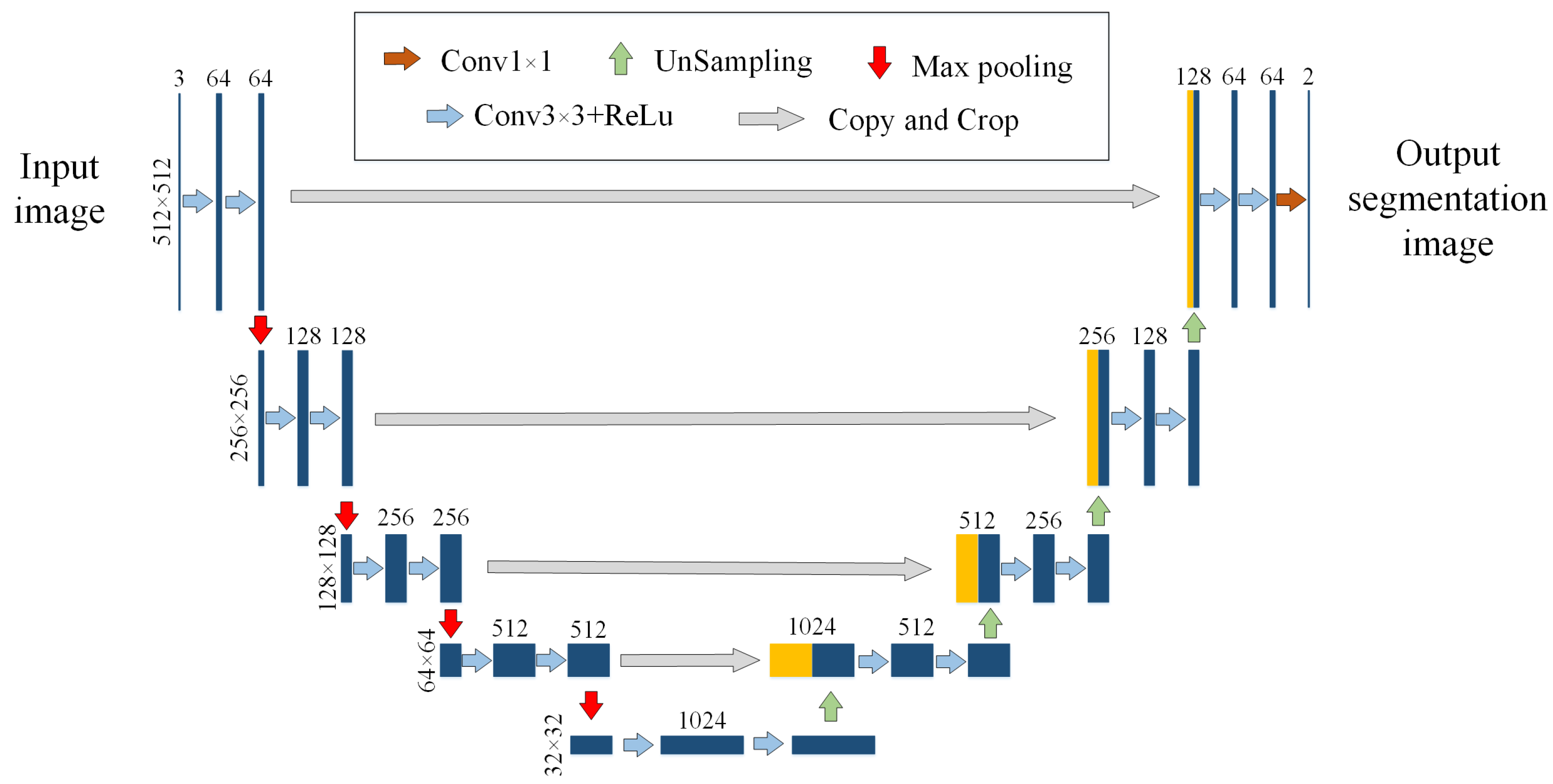

17], in 2015, and applied it in the semantic segmentation of images. The FCN restores the feature map to the image’s input size through deconvolution, so as to achieve pixel-by-pixel prediction. Subsequently, many new semantic segmentation networks were derived in order to improve the FCN, including Unet [

18], SegNet [

19], PSPNet [

20], DeepLab [

21], etc. In particular, the Unet network has a completely symmetric encoder–decoder structure, which preserves the simplicity of the structure while improving its performance. Many scholars have undertaken efforts based on the application of Unet to the automatic extraction of information on buildings with remote-sensing images. Oktay et al. introduced attention gates into the skip connections between the encoder and decoder in Unet. Attention gates can be used to suppress irrelevant regions and focus on useful salient features during network training [

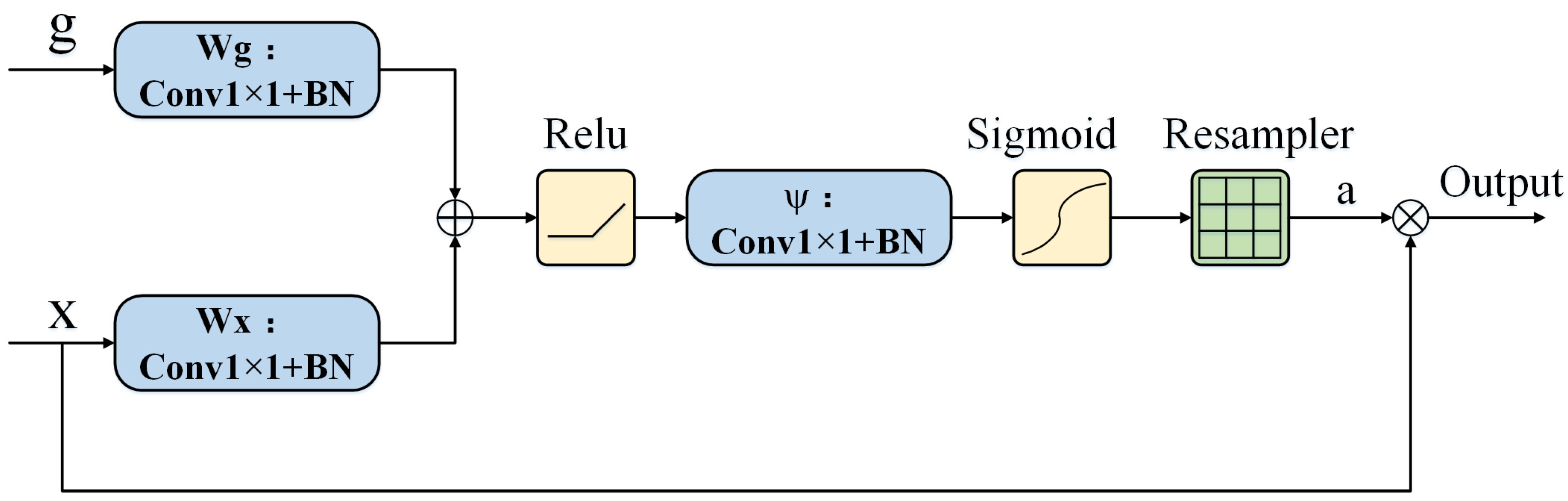

22]. He et al. proposed a first-order and second-order hybrid attention network [

23] and applied it to Unet to improve the correlation between the intermediate features. Shi et al. introduced a spatial-channel attention mechanism on the basis of Unet [

24] to improve the feature-extraction ability of the neural networks. The structure of Unet can also be improved by using other networks. Ji et al. improved Unet by adapting FPN, and proposed SuUnet [

25] to deal with objects of different sizes in remote-sensing images or multi-scale problems in the images of different resolutions. Delibasoglu et al. employed Inception blocks instead of traditional convolutional layers in the coding phase of Unet [

26]. This approach enabled the model to capture building features at multiple scales by utilizing parallel convolution kernels of different sizes. Abdollahi et al. employed dense connections, ConvLSTM [

27], and the SE mechanism [

28] to suppress irrelevant information [

29] while incorporating prior information on buildings. Although deep-learning-based methods are superior in the extraction of information on buildings from images, large numbers of building samples are required in the network-training processes. However, in real-world-application scenarios, the sample data sizes are often limited, leading to unsatisfactory learning results when using semantic segmentation networks.

Obtaining strong network performance with limited dataset capacity is a common problem. At present, an intuitive solution is to use transfer learning, which involves transferring pre-trained weights from a related field and fine-tuning the network. In medical imaging, label scarcity is a common problem. Therefore, transfer learning has been widely applied in the field of medical imaging, in the form of domain adaptation (DA). This is a transfer-learning method in which the source task is the same as the target task, but the data distribution of the source domain is different from that of the target domain. In recent years, scholars have proposed a series of DA methods applicable to the medical field. Khan et al. [30] pre-trained the VGG network on the ImageNet dataset and then fine-tuned it using small amounts of labeled magnetic resonance imaging (MRI) data for the classification of Alzheimer’s disease (AD). Gu et al. [31] proposed a two-stage adaptation approach based on an intermediate domain for use in situations in which there are insufficient target samples with which to fine-tune the model directly. All these examples are supervised domain-adaptation methods. Since dataset annotation is time-consuming, scholars have proposed a series of unsupervised domain-adaptation (UDA) methods. Wollmann et al. [32] proposed a UDA method based on style transfer. They first used CycleGAN to transform whole-slide images (WSIs) of lymph nodes from a source domain to the target domain. Next, DenseNet was used to classify breast cancers. However, due to the lack of class-level joint distribution in adversarial learning, the aligned distribution is not discriminative. In order to solve this problem, Liu et al. [33] proposed a novel margin-preserving self-paced contrastive learning (MPSCL) model, which facilitates category-aware feature alignment through cross-domain contrastive learning and effectively improved the segmentation performance of the network. Yao et al. [34] proposed an unsupervised domain-adaptation framework. The framework achieves full image alignment to alleviate the domain-shift problem, and introduces 3D segmentation in domain-adaptation tasks to maintain semantic consistency at deep levels. In some areas of confidential medical imaging, source datasets are often unavailable. To solve this problem, Liu et al. [35] proposed a source-free ("passive") adaptation framework for semantic segmentation, which uses knowledge transfer to recover knowledge of the source domain from existing source models, and then achieves domain adaptation. Stan et al. [36] developed a UDA algorithm that does not require access to source-domain data during target adaptation. This method encodes the source-domain information as an internal distribution, which is used to guide the adaptation in the absence of source samples. The successful application of transfer learning in the medical field also provides a new approach to the high-precision extraction of information on buildings with small sample sizes.

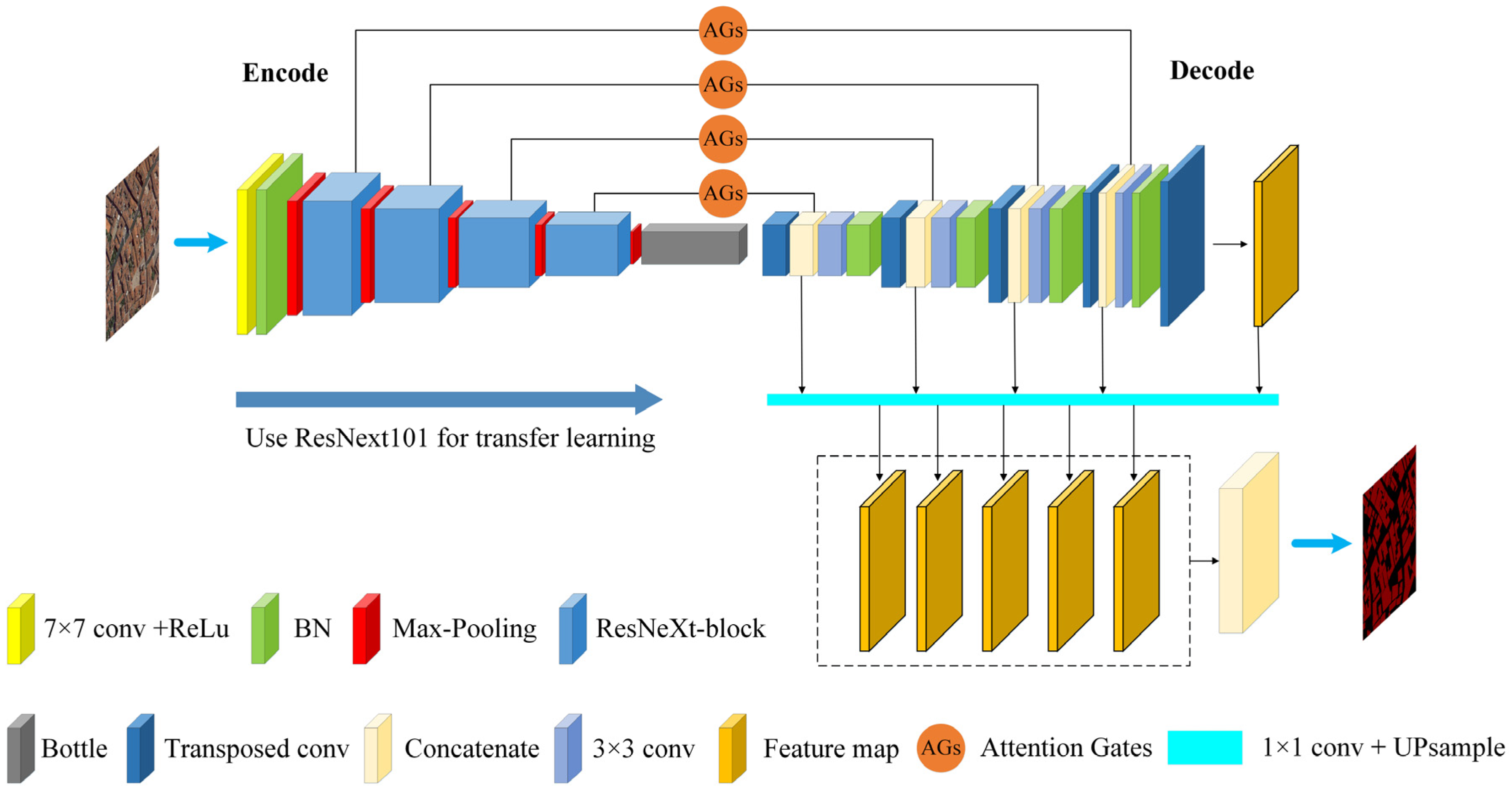

To address the challenge of achieving high-precision building segmentation with neural networks trained on small sample sizes, this paper proposes a segmentation network, ResFAUnet, based on transfer learning and multi-scale fusion. The contributions of this paper are as follows:

- (1)

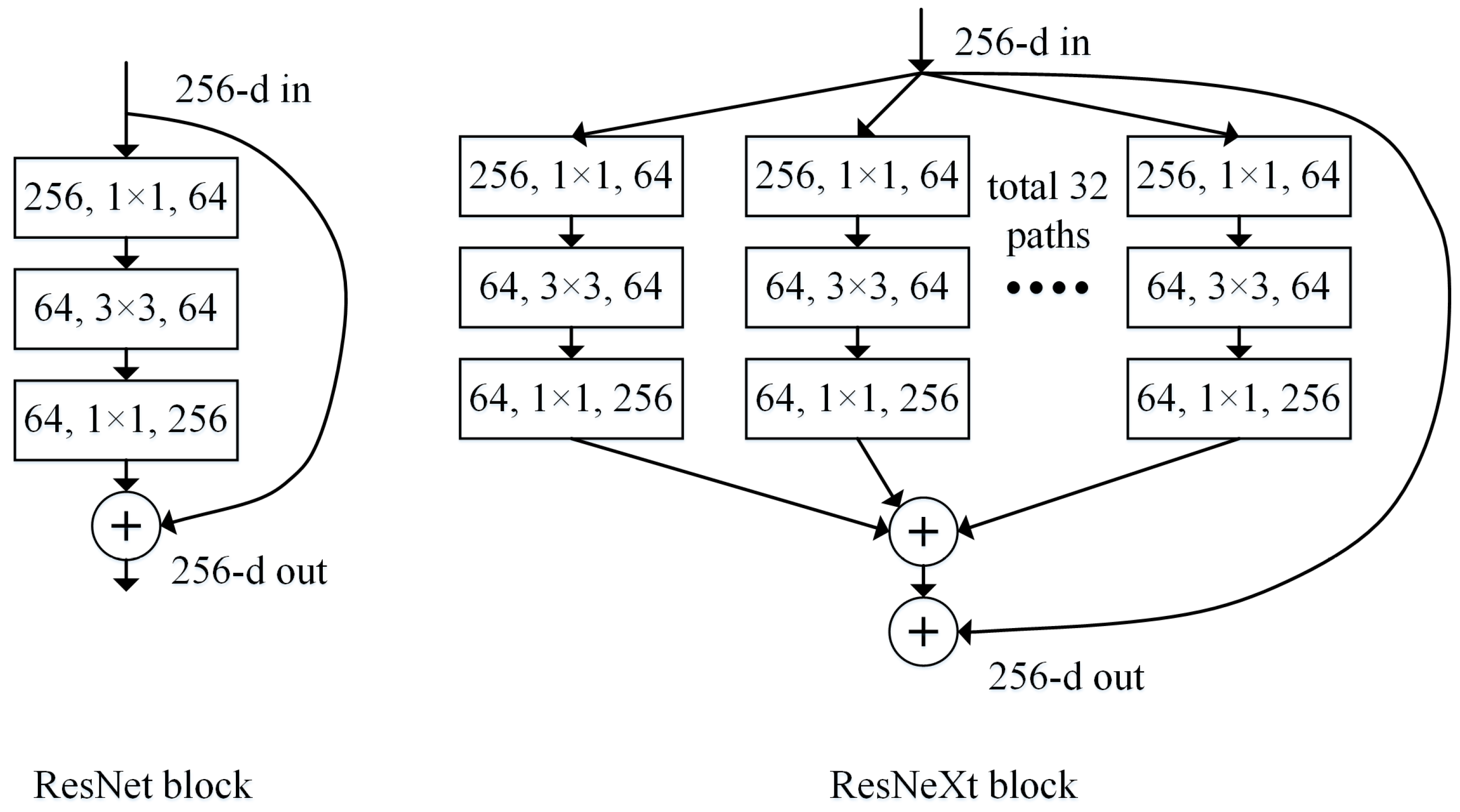

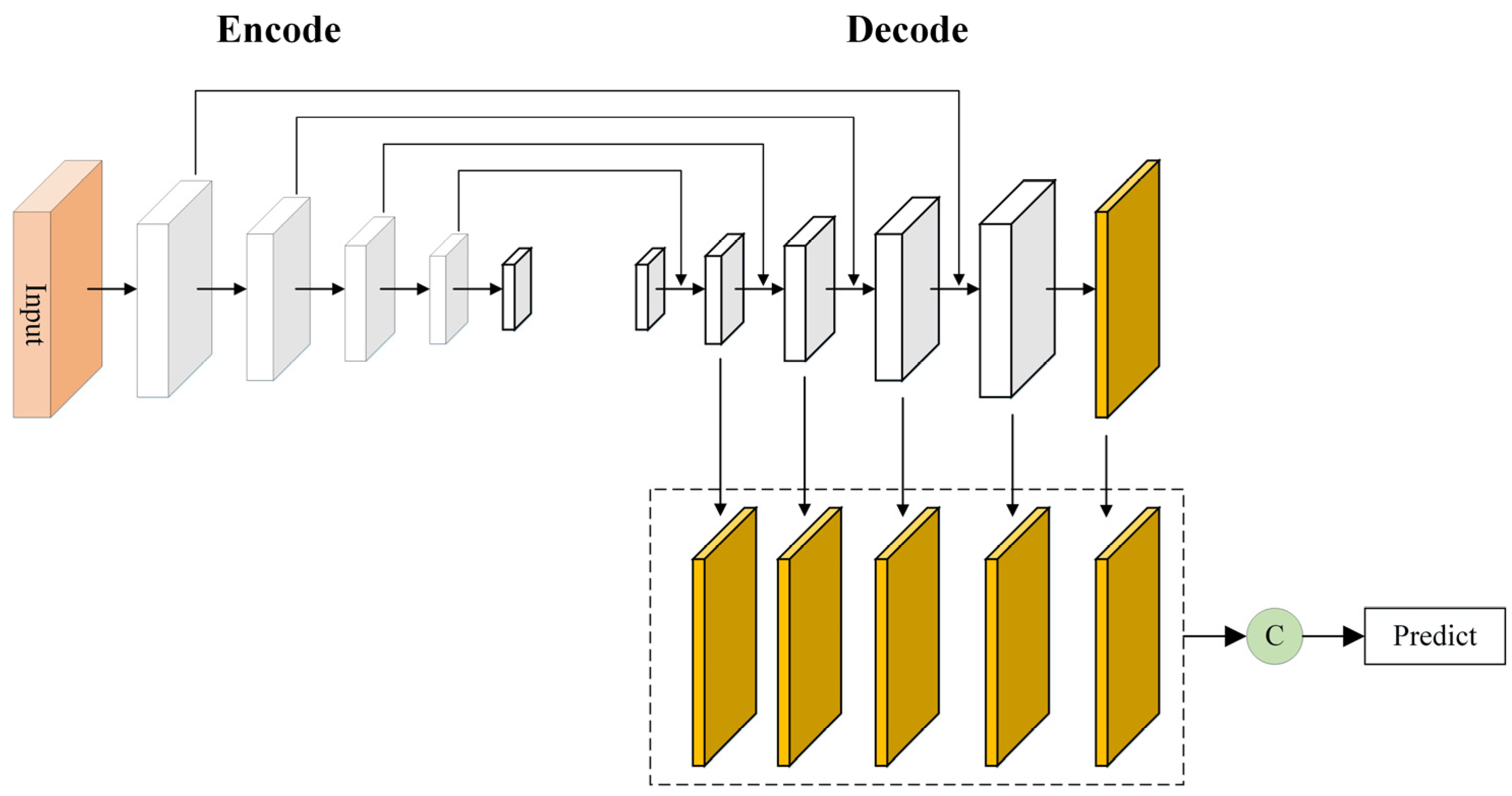

In the encoding part of the network, the ResNeXt101 network with group convolution is used for feature extraction, and pre-trained weights are introduced in the transfer learning, which improves the feature-learning performance of the network with limited samples.

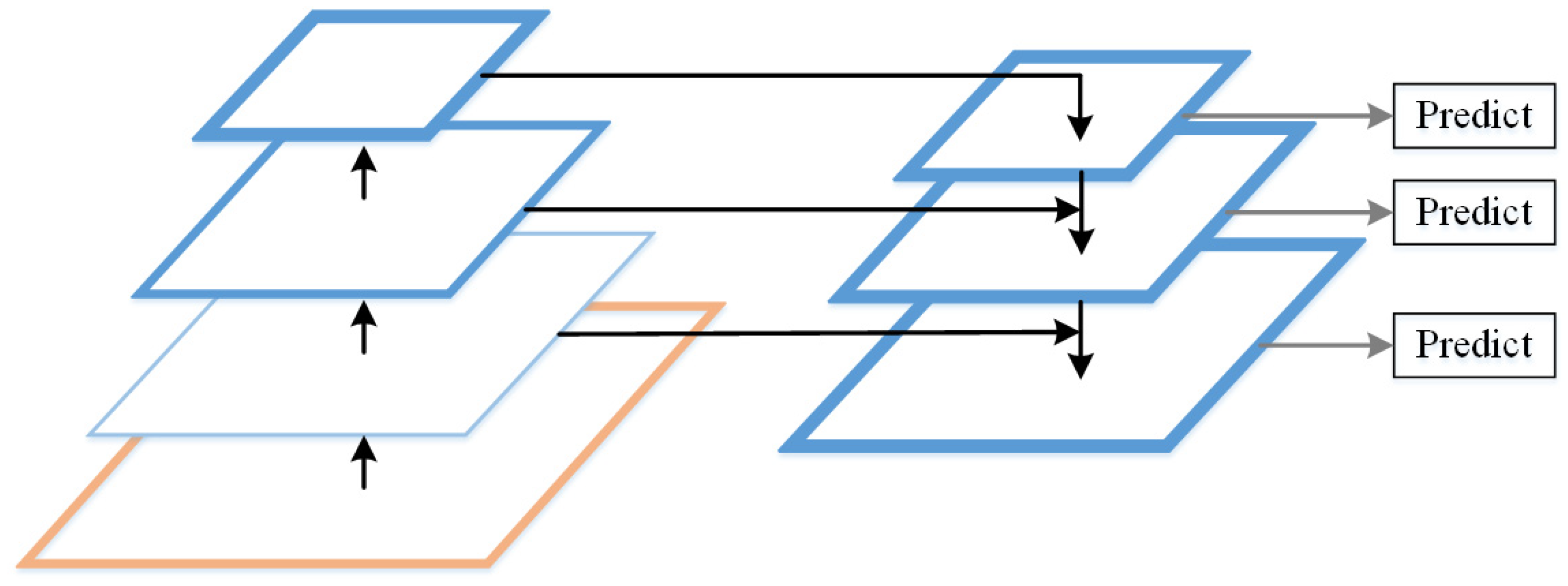

- (2)

In the decoding part of the network, the original single-chain up-sampling feature-fusion mode is replaced by the multi-chain, while in the decoding part, the features from different up-sampling scales are stacked to realize direct information fusion across scales.
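The idea of stacking features from different up-sampling scales, as described in contribution (2), can be illustrated with a minimal sketch. It is not the ResFAUnet decoder: the function names `upsample_nearest` and `fuse_scales`, the nested-list feature maps, and the use of nearest-neighbor up-sampling are all assumptions made for illustration only.

```python
def upsample_nearest(channel, factor):
    """Enlarge one 2D channel (a list of rows) by an integer factor."""
    out = []
    for row in channel:
        wide = [v for v in row for _ in range(factor)]
        out.extend([list(wide) for _ in range(factor)])
    return out


def fuse_scales(feature_maps):
    """Stack feature maps of different resolutions along the channel axis.

    feature_maps: list of maps, each a list of 2D channels, ordered from
    the highest to the lowest resolution. Every map is up-sampled to the
    size of the first one (nearest-neighbor, an illustrative assumption).
    """
    target_h = len(feature_maps[0][0])
    fused = []
    for fmap in feature_maps:
        factor = target_h // len(fmap[0])
        for channel in fmap:
            fused.append(upsample_nearest(channel, factor))
    return fused


fine = [[[1, 2], [3, 4]]]   # 1 channel, 2 x 2
coarse = [[[5]], [[6]]]     # 2 channels, 1 x 1
fused = fuse_scales([fine, coarse])
print(len(fused), fused[1])  # 3 [[5, 5], [5, 5]]
```

After fusion, every spatial position carries channels from all scales, so a later convolution can combine coarse context and fine detail directly.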

The subsequent sections of this paper are organized as follows.

Section 2 introduces the network structure of ResFAUnet.

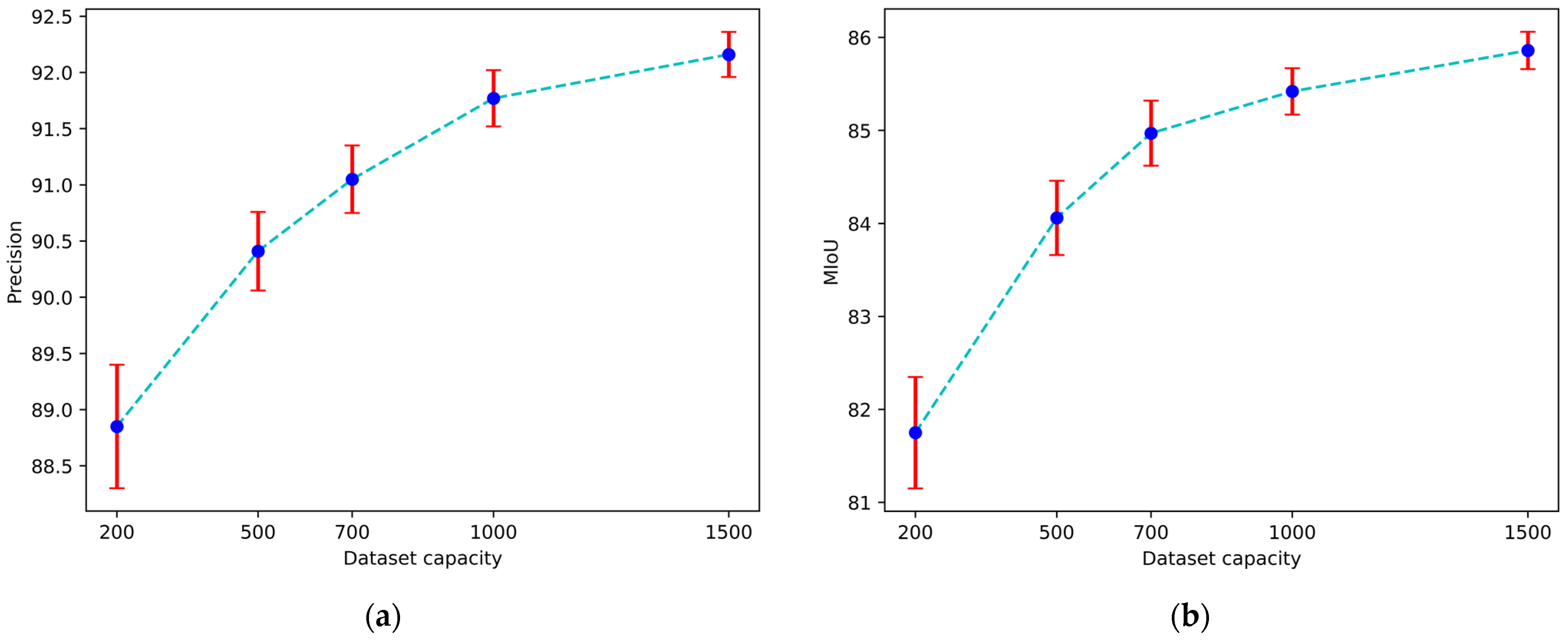

Section 3 introduces the datasets, parameter setting, and evaluation indexes used in the experiment, describes the ablation experiment, compares the performance of ResFAUnet with those of other networks, and analyzes the experimental results.

Section 4 discusses the influence of some of the training parameters (data-set capacity, input-image size) on the performance of the ResFAUnet network during the training process. Finally,

Section 5 summarizes the conclusions of this study.

3. Experiments and Analysis

In this section, the selection of the public datasets, the setting of the network parameters, and the evaluation indexes are introduced first. Next, we report the experiments that were conducted to verify the performance of ResFAUnet. Finally, the experimental results are analyzed and discussed.

3.1. Selection of Public Datasets

In this paper, three public datasets were selected to evaluate the performance of the ResFAUnet network. In order to test the performance of ResFAUnet in cases of insufficient data, at most 500 images were selected from each dataset as experimental samples and divided into a training set and a test set. The segmentation results were evaluated on the test set after training.

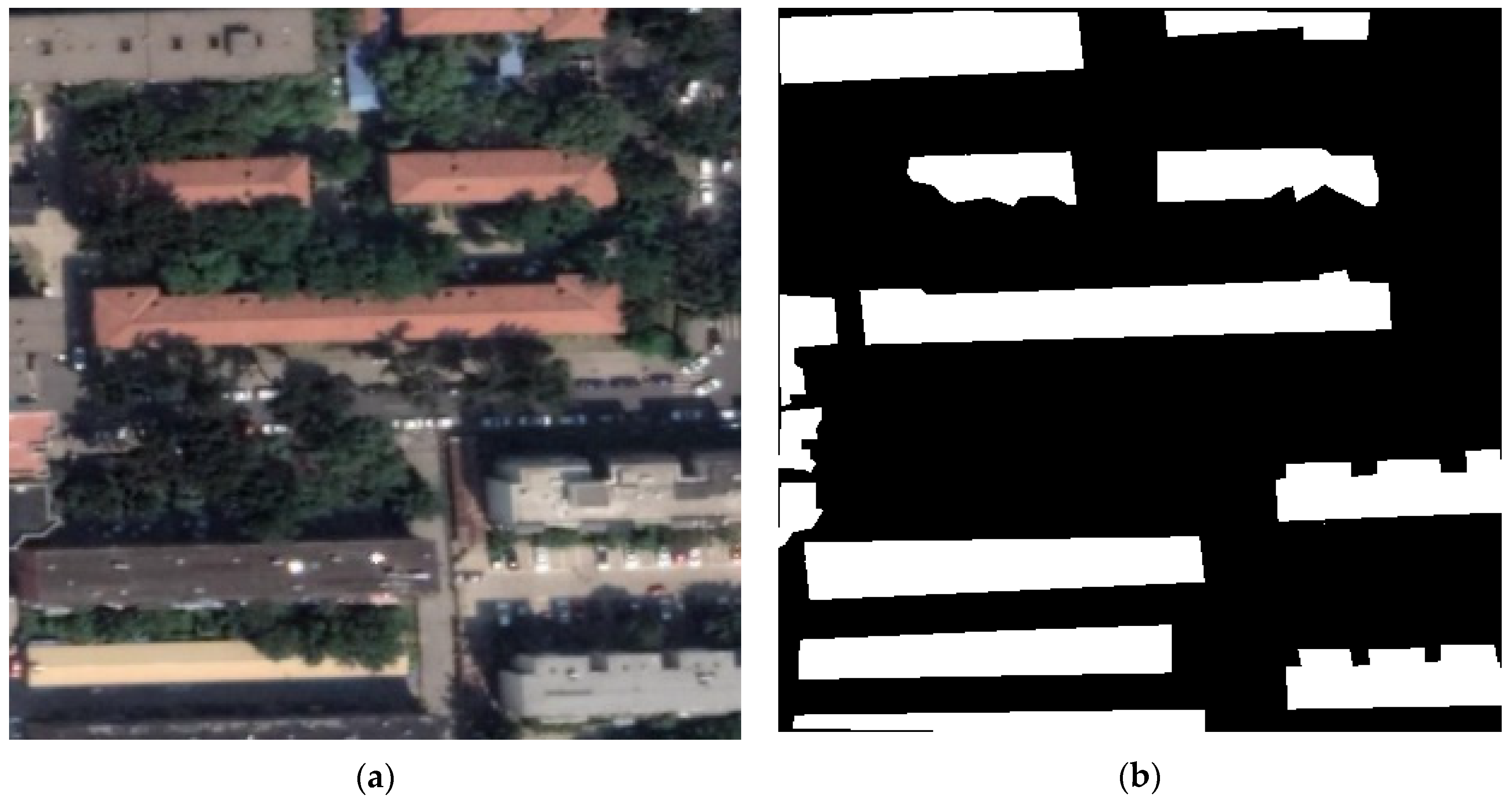

Inria Aerial Image Labeling Dataset: This dataset [37] includes 180 orthorectified aerial color images with public labels, covering an area of 810 square kilometers, with a spatial resolution of 0.3 m and an image size of 5000 × 5000 pixels. It covers five different urban settlements (Austin, Chicago, Kitsap County, Western Tyrol, and Vienna), ranging from densely populated areas to alpine towns. Each subset of the dataset contains different images of each city, and the dataset was used to evaluate the network’s generalization ability. Figure 7 shows some examples.

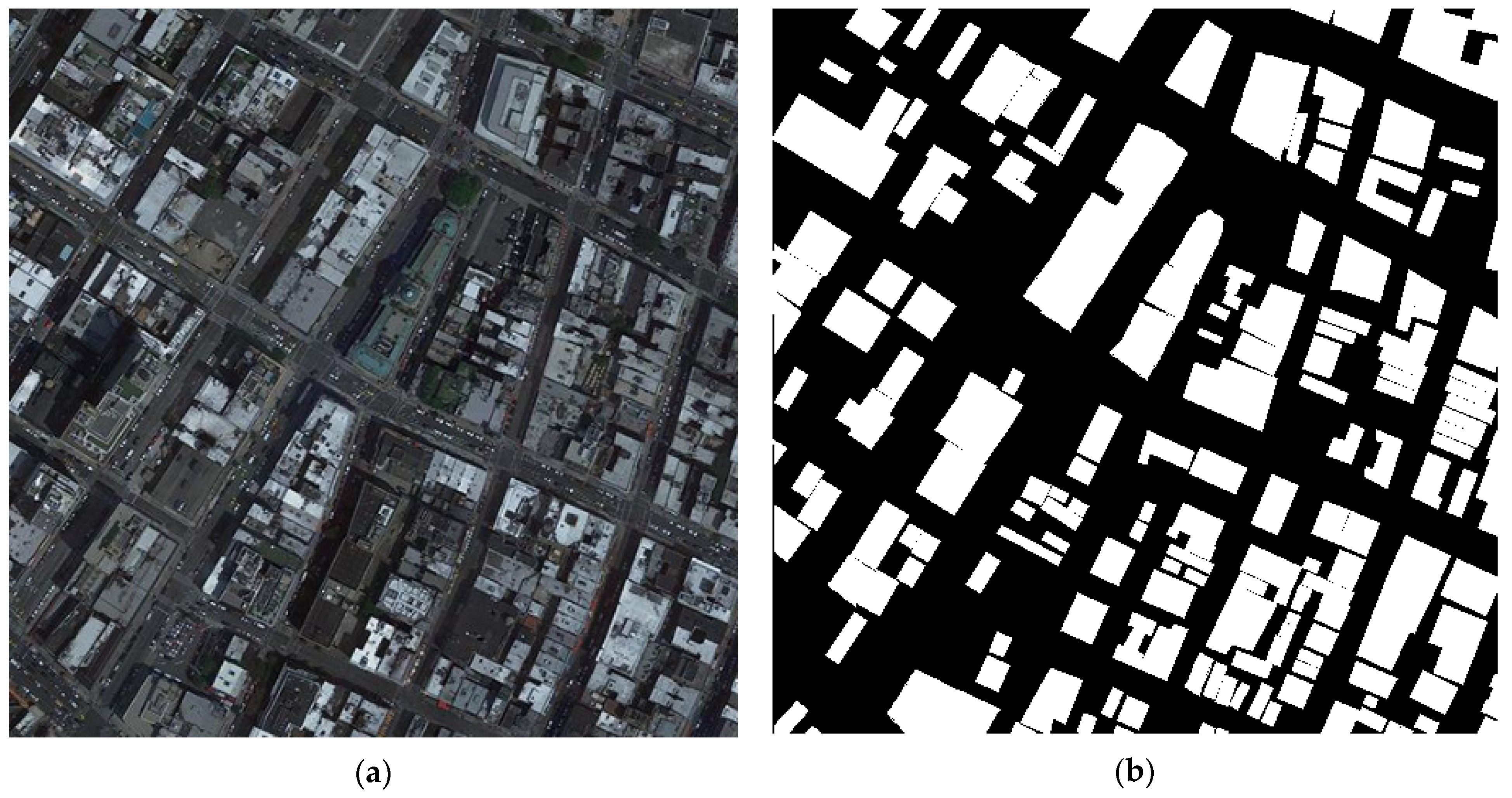

Building instances of typical cities in China: This dataset [38] is composed of 7260 images from four Chinese cities (Beijing, Shanghai, Shenzhen, and Wuhan) and 63,886 building instances. The size of each image is 500 × 500 pixels and the spatial resolution is 0.29 m. The dataset was used to test the segmentation performance of the network for localized buildings. Figure 8 shows some examples.

WHU Building Dataset: This dataset [39] is composed of an aerial dataset, a global urban-satellite dataset, and an East Asian urban-satellite dataset. The global urban-satellite dataset collects remote-sensing images of cities around the world from satellite resources such as QuickBird, the Worldview series, IKONOS, and ZY-3. It contains 204 images of 512 × 512 pixels, with spatial resolutions ranging from 0.3 m to 2.5 m. The use of this dataset is challenging due to the variations between different satellites, sensors, and atmospheric conditions. The dataset was used to test the robustness of the network. Figure 9 shows some examples.

3.2. Parameter Settings

3.2.1. Image Setting

Because the image sizes differ across the datasets, the images could not be used directly for training. To address this issue, the original images were preprocessed so that all three datasets provide 512 × 512-pixel images. For the Inria dataset, the images were enlarged from 5000 × 5000 pixels to 5120 × 5120 pixels using bilinear interpolation and then cropped into 512 × 512-pixel tiles. Similarly, the images in the China dataset were enlarged from 500 × 500 pixels to 512 × 512 pixels using bilinear interpolation. The WHU images were retained at their original size without any modification. In order to simulate a limited dataset with a small sample size, 500 images (450 in the training set and 50 in the test set) were selected from each of the Inria and China datasets. Since the WHU dataset contains only 204 images, all of them were used and divided into a training set and a test set at the same 9:1 ratio.
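The tiling and splitting arithmetic can be checked with a short sketch. It only computes crop boxes and index splits; the helper names `tile_boxes` and `split_train_test`, the random shuffling, the fixed seed, and rounding the training share down are assumptions, since the text does not state how the samples were drawn.

```python
import random


def tile_boxes(image_size, tile_size):
    """Return (left, top, right, bottom) boxes that cover a square image
    with non-overlapping square tiles."""
    if image_size % tile_size != 0:
        raise ValueError("image_size must be a multiple of tile_size")
    steps = range(0, image_size, tile_size)
    return [(x, y, x + tile_size, y + tile_size) for y in steps for x in steps]


def split_train_test(items, seed=0):
    """Shuffle the items and split them 9:1 into training and test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = len(shuffled) * 9 // 10  # rounding down is an assumption
    return shuffled[:n_train], shuffled[n_train:]


boxes = tile_boxes(5120, 512)               # one enlarged Inria image
train, test = split_train_test(range(500))  # 500 selected samples
print(len(boxes), len(train), len(test))  # 100 450 50
```

Enlarging to 5120 pixels makes the side length an exact multiple of 512, so each Inria image yields a 10 × 10 grid of tiles with no partial tiles at the borders.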

3.2.2. Training Setting

The PyTorch framework was used to train the ResFAUnet model. The same parameters were used for all three datasets: the learning rate was set to 0.005 and the batch size was set to 2, which is the maximum that the graphics card can support at an image size of 512 × 512 pixels. The SGD (stochastic gradient descent) optimizer with a momentum term of 0.9 was used to train the network. SGD is one of the most commonly used optimization algorithms in deep learning. In each iteration, it randomly selects a small batch of samples to calculate the gradient of the loss function and updates the parameters with this gradient. This randomness makes each update cheap to compute and can help the optimizer escape poor local minima. The training graphics card was an NVIDIA GeForce GTX 1080Ti (NVIDIA Corporation; sourced from Beijing, China), and the training was performed for 100 epochs. The CE (cross-entropy) loss was used as the loss function. It measures the difference between the output of the network and the label, and this difference is used to update the network parameters through back propagation. The formula for the CE loss is as follows:

$$L_{CE} = -\sum_{i=1}^{C} p_i \log(q_i)$$

where $C$ represents the number of categories, $p_i$ represents the true value, and $q_i$ represents the predicted value.
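As a minimal sketch of the two ingredients described in this subsection, the code below evaluates the cross-entropy for a single pixel and performs one SGD update with momentum. The helper names are hypothetical, and the update rule (velocity = momentum × velocity + gradient, then parameter minus learning rate × velocity) is assumed to follow the convention of PyTorch's SGD with zero dampening. Only the learning rate of 0.005 and the momentum of 0.9 are taken from the text.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(p, q, eps=1e-12):
    """CE = -sum_i p_i * log(q_i) for one pixel with C classes."""
    return -sum(pi * math.log(max(qi, eps)) for pi, qi in zip(p, q))


def sgd_momentum_step(param, grad, velocity, lr=0.005, momentum=0.9):
    """One update: v = momentum * v + grad, then w = w - lr * v."""
    velocity = momentum * velocity + grad
    return param - lr * velocity, velocity


q = softmax([0.0, 0.0])              # an undecided two-class prediction
loss = cross_entropy([1.0, 0.0], q)  # true class is the first one
w, v = sgd_momentum_step(1.0, 2.0, 0.0)
print(round(loss, 4), round(w, 4), v)  # 0.6931 0.99 2.0
```

An undecided prediction gives a loss of ln 2, and the loss falls toward zero as the predicted probability of the true class approaches 1.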

3.3. Evaluation Indexes

To evaluate the network performance, the Pre (precision), MIoU (mean intersection over union), Rec (recall), and F1 (F1 score) indexes were employed. The Pre index represents the proportion of the pixels predicted as a given category that are predicted correctly. The MIoU index is obtained by computing, for each category, the ratio of the intersection to the union of the predicted result and the true value, and then averaging these ratios over the categories. The Rec index represents the proportion of the pixels that truly belong to a given category that are predicted correctly. The F1 index is the harmonic mean of Pre and Rec, and it is used to evaluate the comprehensive performance of the network. For the two categories considered here (building and background), the four indicators are defined as follows:

$$Pre = \frac{TP}{TP + FP}$$

$$Rec = \frac{TP}{TP + FN}$$

$$F1 = \frac{2 \times Pre \times Rec}{Pre + Rec}$$

$$MIoU = \frac{1}{2}\left(\frac{TP}{TP + FP + FN} + \frac{TN}{TN + FN + FP}\right)$$

where TP (true positive) refers to the part of the input image that is actually positive and is predicted to be positive, FP (false positive) refers to the part that is actually negative and is predicted to be positive, TN (true negative) refers to the part that is actually negative and is predicted to be negative, and FN (false negative) refers to the part that is actually positive and is predicted to be negative.
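The four indexes can be computed directly from the pixel counts TP, FP, TN, and FN. The sketch below assumes the two-class case (building and background), in which MIoU is the mean of the building IoU and the background IoU. The function name `segmentation_metrics` and the example counts are hypothetical, and no guard against empty classes (zero denominators) is included.

```python
def segmentation_metrics(tp, fp, tn, fn):
    """Return Pre, Rec, F1, and two-class MIoU from pixel counts."""
    pre = tp / (tp + fp)                   # correct share of predicted positives
    rec = tp / (tp + fn)                   # recovered share of true positives
    f1 = 2 * pre * rec / (pre + rec)       # harmonic mean of Pre and Rec
    iou_building = tp / (tp + fp + fn)     # IoU of the positive class
    iou_background = tn / (tn + fn + fp)   # IoU of the negative class
    miou = (iou_building + iou_background) / 2
    return {"Pre": pre, "Rec": rec, "F1": f1, "MIoU": miou}


# Hypothetical counts for a 100-pixel image.
m = segmentation_metrics(tp=8, fp=2, tn=88, fn=2)
print({k: round(v, 4) for k, v in m.items()})
# {'Pre': 0.8, 'Rec': 0.8, 'F1': 0.8, 'MIoU': 0.8116}
```

In this example the background IoU (about 0.957) is much higher than the building IoU (about 0.667), which shows why MIoU can look favorable when buildings occupy only a small part of the image.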

3.4. Comparisons and Analysis

In this section, ResFAUnet is compared with Unet, SuUnet, FCN, and SegNet, which are mainstream deep-learning networks. All the networks were trained with the same parameters on the Inria, China, and WHU datasets. The performance of ResFAUnet is evaluated through qualitative and quantitative analyses of the results.

3.4.1. Inria Dataset

Table 1 presents the quantitative evaluation indexes of the different models evaluated on the Inria dataset. As shown in the table, the ResFAUnet network proposed in this paper outperformed the Unet, FCN, SuUnet, and SegNet networks in all four indexes. Due to the high resolution of the Inria dataset, all of the networks except Unet achieved precision and MIoU values above 90% and 85%, respectively. Compared to Unet, the precision index of the ResFAUnet network increased by 3.23%, while that of the SuUnet network with the multi-scale fusion structure improved by 0.97%, and those of the FCN and SegNet networks with transfer learning improved by 1.19% and 0.89%, respectively. Among all the comparison networks, ResFAUnet had the highest F1 score and the lowest standard deviation, which means that the model was the most stable. Taking the MIoU index into account as well, ResFAUnet achieved a better balance between recall and precision.

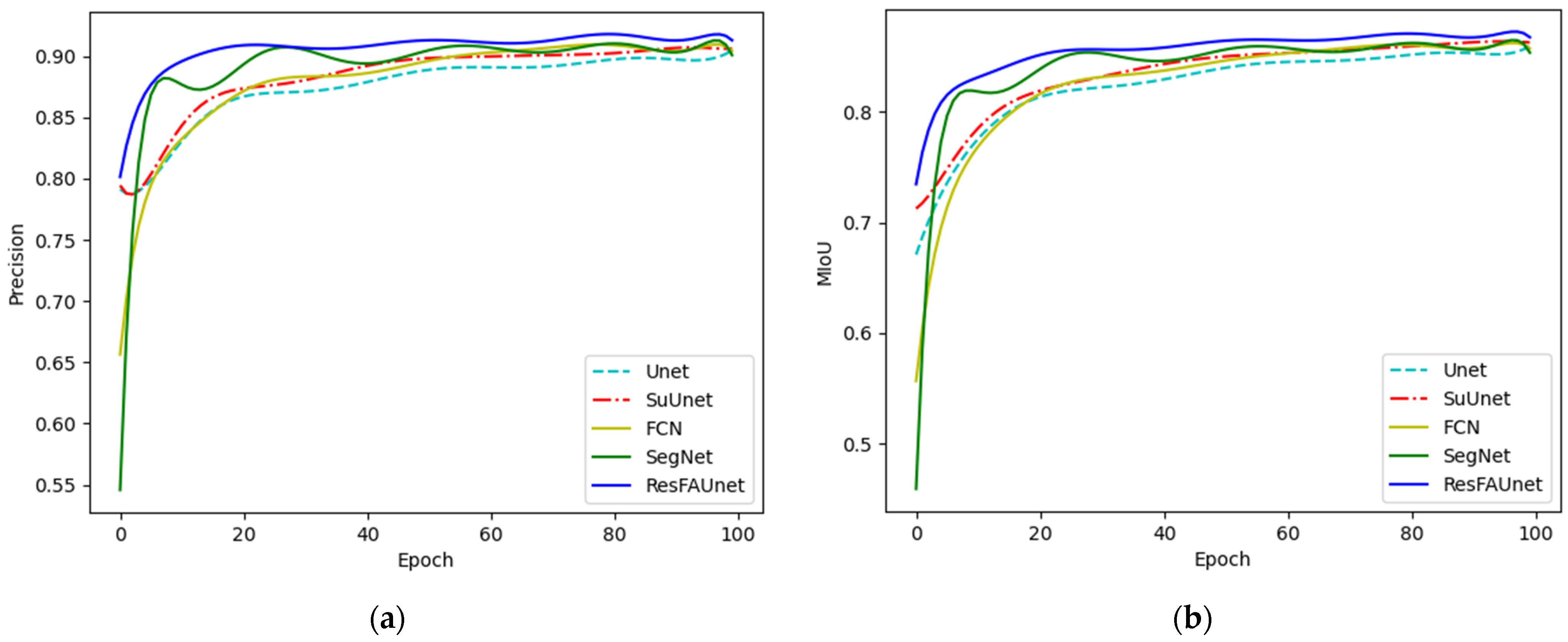

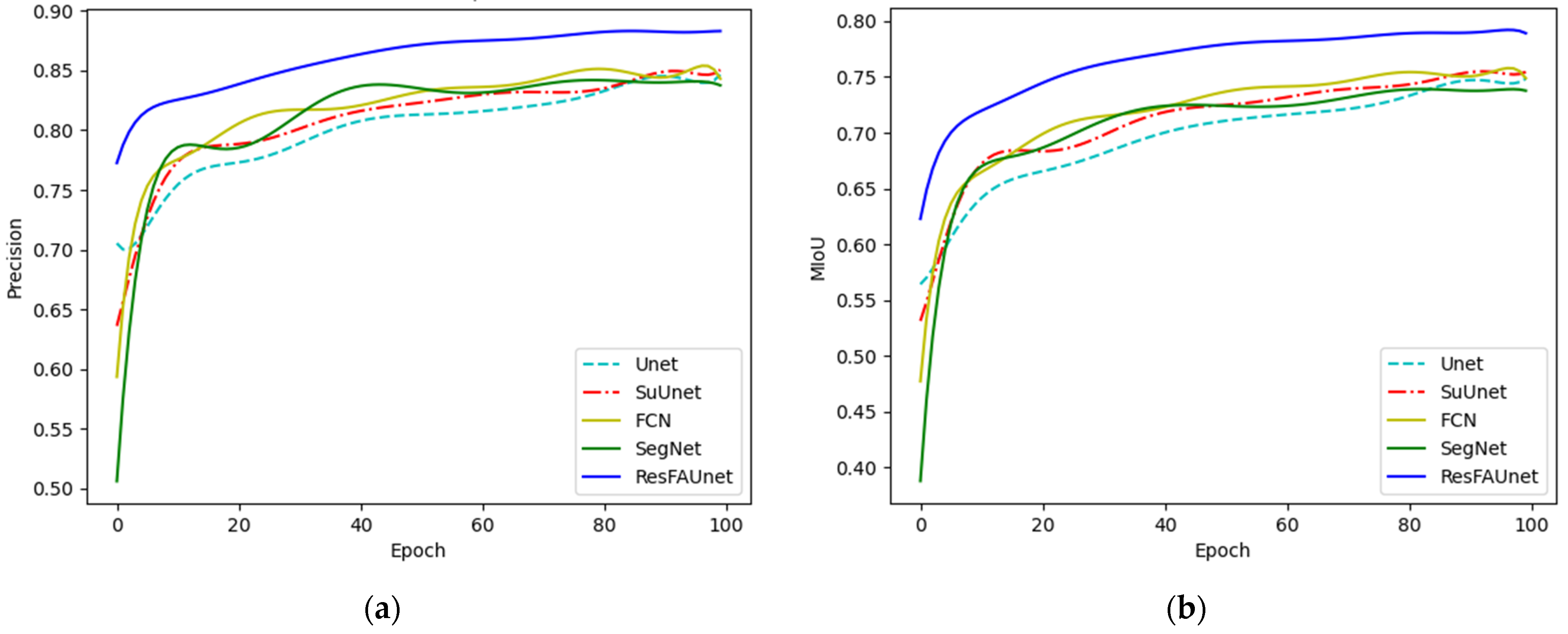

Figure 10 compares the precision and MIoU indexes of the five networks on the Inria dataset. From the graph, it can be seen that ResFAUnet achieved the highest precision and MIoU values in fewer epochs.

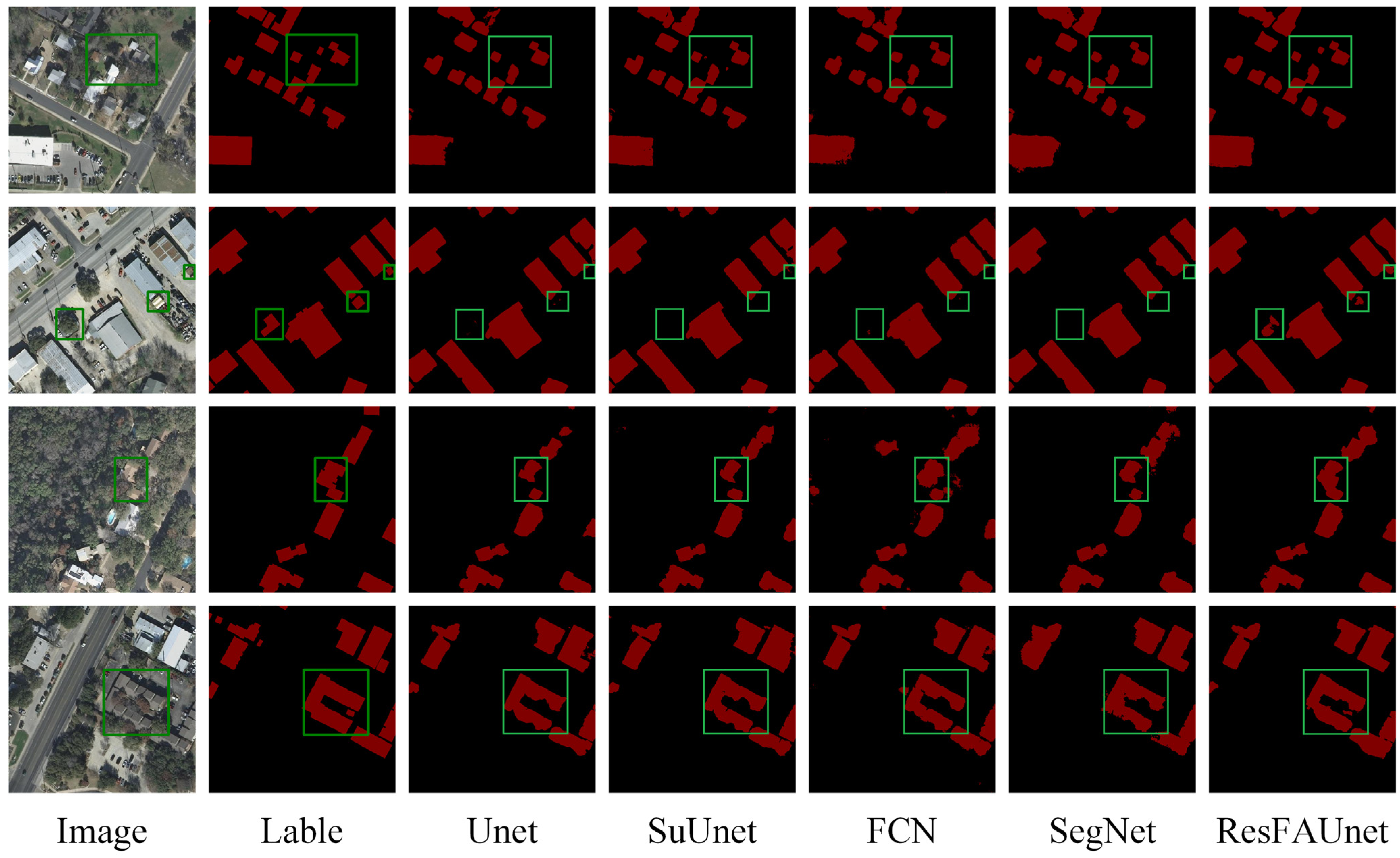

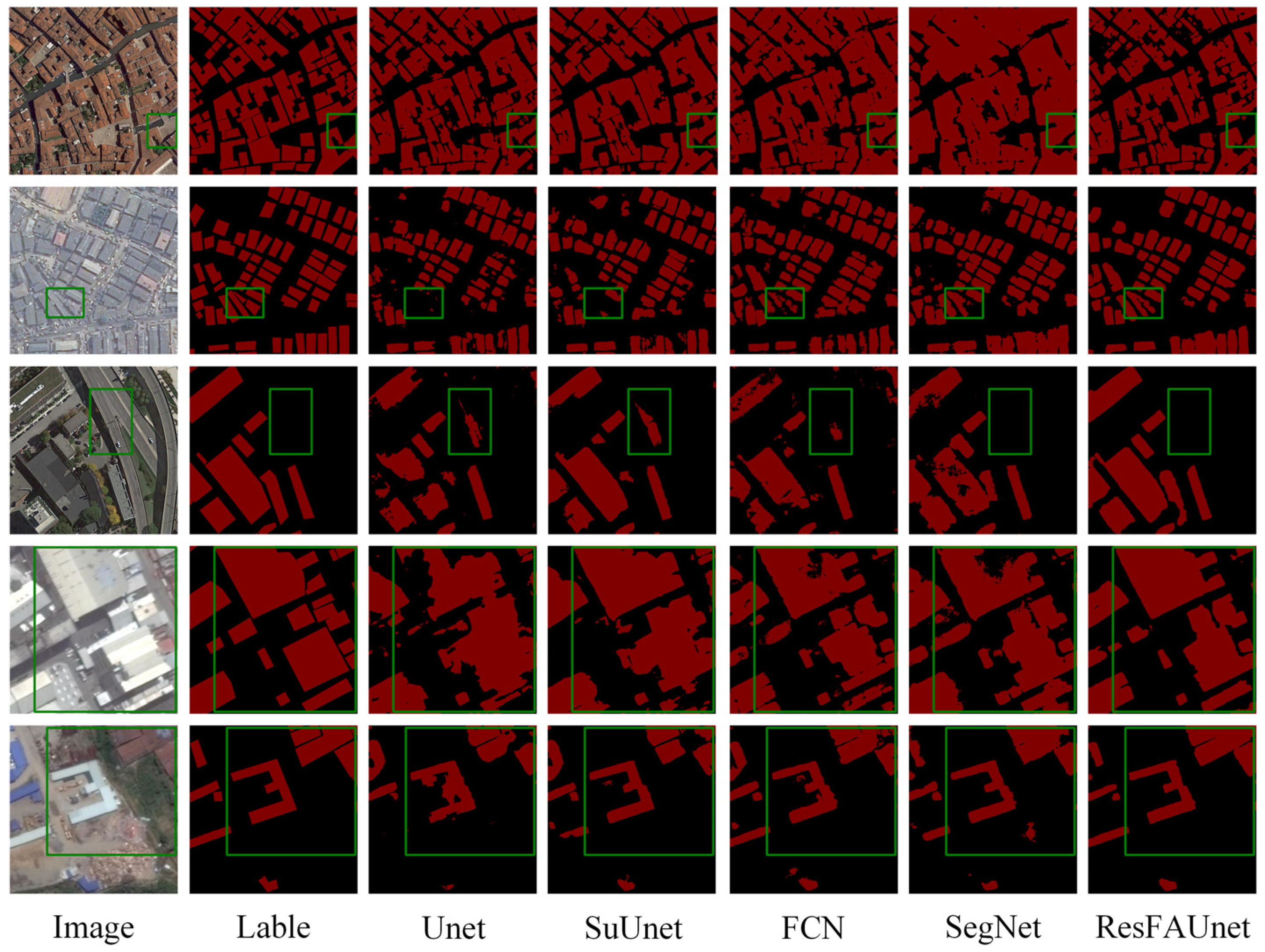

Figure 11 shows the test-image results under different scenarios. The first image contains a small target building. For the building in the green box in the figure, the Unet, SuUnet, FCN, and SegNet networks did not detect the smallest building, and only the ResFAUnet network completely segmented all the buildings. In the green box in the second image, only ResFAUnet successfully detected the outline of the occluded building. In the third image, the other four networks segmented only part of the outline of the building, while ResFAUnet segmented the complete outline. In the fourth scenario, the contrast between the building and the background was low. In the green box in the image, only ResFAUnet completely segmented all the buildings. Therefore, the ResFAUnet network proposed in this paper achieved good results in small-target-building segmentation and building-contour integrity.

3.4.2. China Dataset

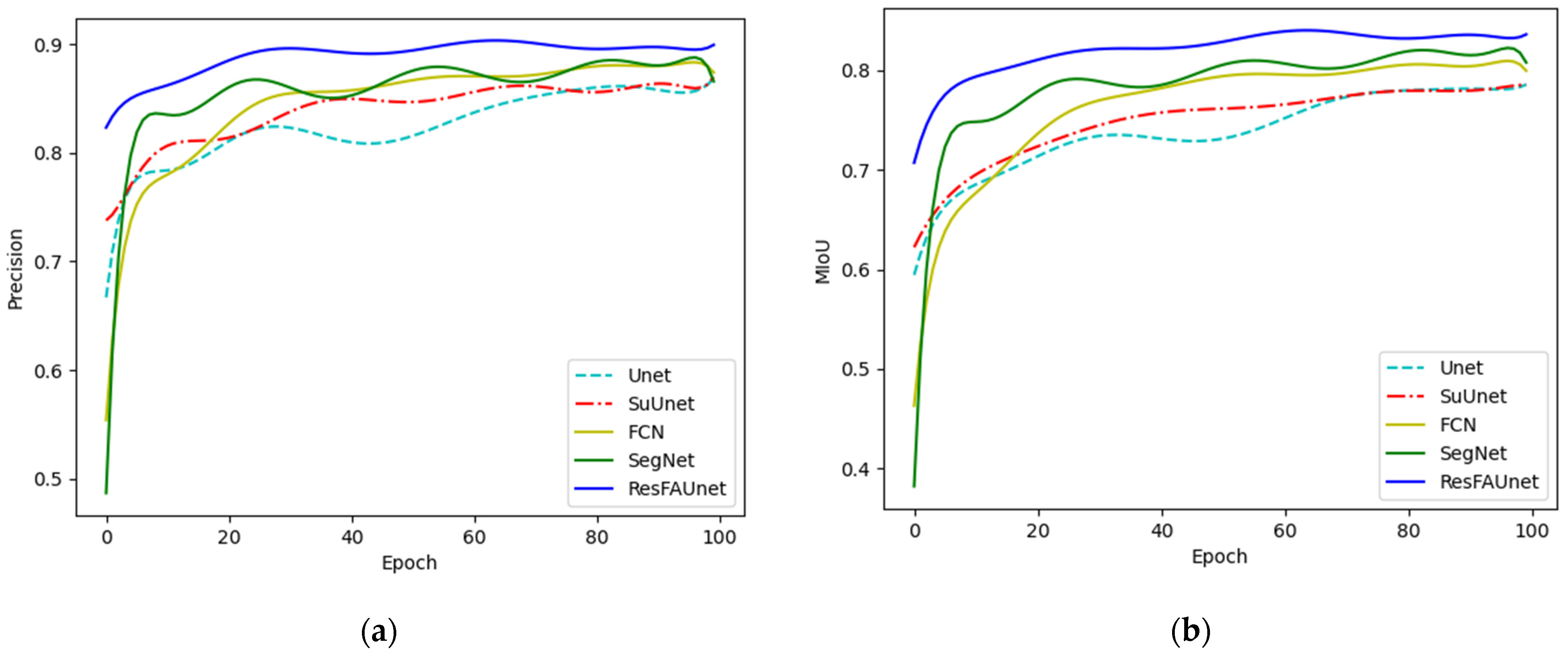

Table 2 shows the quantitative evaluation indexes of the various models tested on the China dataset. It can be seen that the test scores of the five models on the China dataset were all lower than those on the Inria dataset. This result was attributed to the fact that the China dataset contains image data from Beijing, Shanghai, Shenzhen, and Wuhan, where the building scenes are relatively complex. In particular, the shadows between high-rise city buildings create significant challenges for segmentation. Additionally, the similarity between city roads and buildings makes it difficult to distinguish between them. Despite these challenges, ResFAUnet still achieved good segmentation results, which were significantly higher than those of Unet, FCN, SuUnet, and SegNet in all four indexes. Compared to Unet and SuUnet, the precision improved by 5.36% and 3.07%, respectively. Compared to FCN and SegNet, which also adopt transfer learning, the improvements were 1.75% and 1.28%, respectively. Furthermore, the precision and MIoU indexes of the five models on the China dataset were compared, as shown in Figure 12. From the graph, it can be seen that the ResFAUnet curve was the most stable, requiring only a few epochs to achieve convergence, and its performance was superior to those of the four other comparison networks.

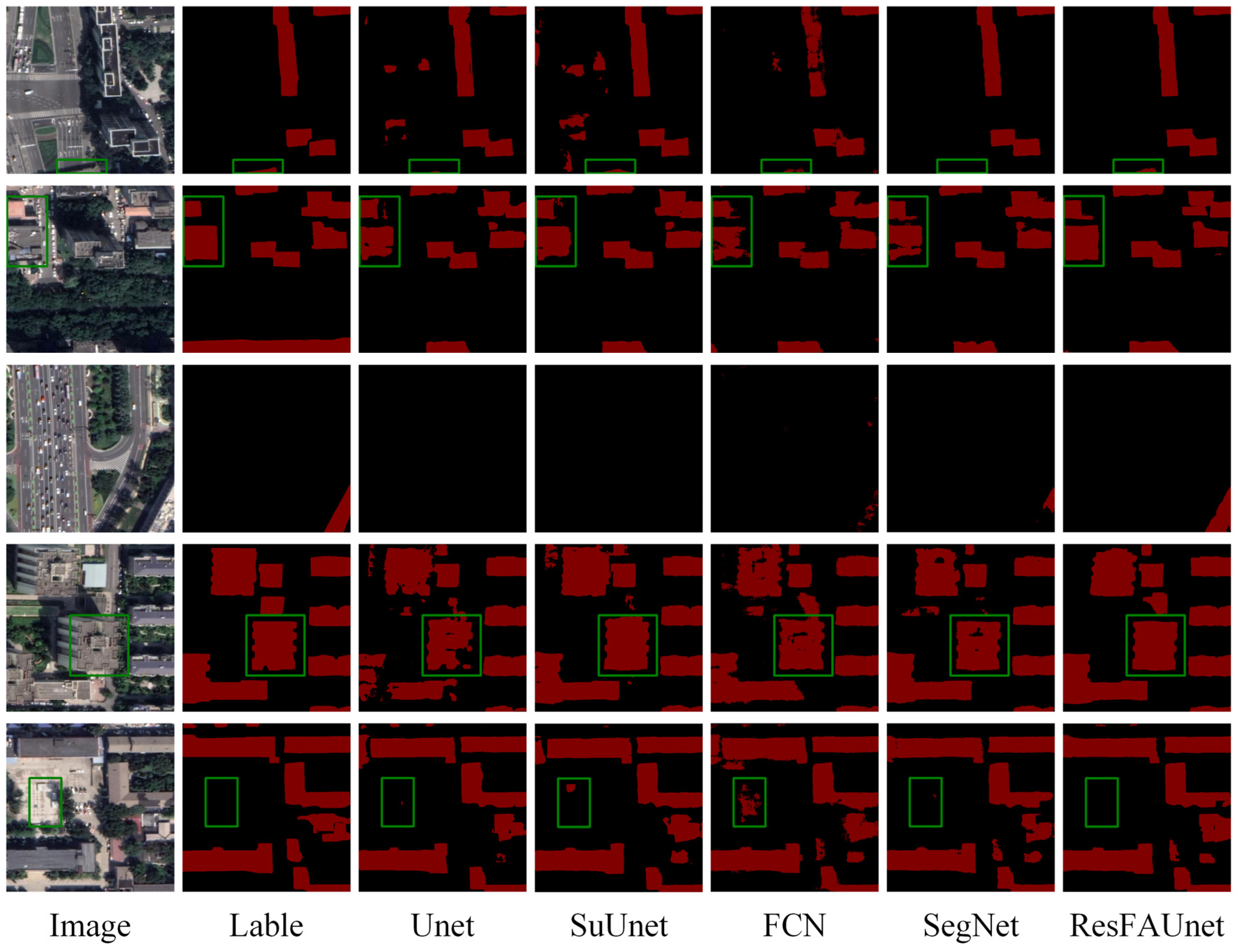

Figure 13 shows the segmentation results in various scenarios. In the first scenario, both buildings and roads are present. The Unet, SegNet, and FCN networks wrongly identified roads as buildings. Although SuUnet performed better in distinguishing between roads and buildings, it failed to identify any buildings in the green-box area; only ResFAUnet accurately segmented all the buildings. In the second and fourth scenarios, large buildings were tested. The Unet, SuUnet, FCN, and SegNet networks segmented the building outlines only partially and left cavities inside the large buildings, while the proposed ResFAUnet network completely segmented the outlines of the buildings. As with the first scenario, the third scenario was used to evaluate the network’s ability to distinguish between roads and buildings. Only ResFAUnet was able to segment the entire outlines of the buildings, while Unet and SuUnet did not even recognize the buildings. The fifth scenario featured a ground area that appeared similar to the roof of a building. Only ResFAUnet correctly identified it as ground, while all the other models falsely identified it as a building. It can be seen that the ResFAUnet network proposed in this paper can distinguish buildings from backgrounds in the complex scenarios of the China dataset and completely segment the outlines of buildings.

3.4.3. WHU Dataset

Table 3 shows the evaluation indexes of the different models tested on the WHU dataset. It can be seen from Table 3 that the precision of every model was below 90% and the MIoU was below 85%. This can be attributed to the fact that the number of images in the WHU dataset is small, the image resolution is low, and the imaging environments differ. However, the proposed ResFAUnet still demonstrated acceptable results and outperformed the other networks on all the evaluation indexes. Compared with Unet, SuUnet, FCN, and SegNet, its precision was improved by 5.71%, 2.86%, 3.4%, and 2.54%, respectively. Of the comparison networks, ResFAUnet had the highest F1 score and the lowest standard deviation, which means that this model was the most stable. Meanwhile, the comparison of the precision and MIoU indexes of the five networks on the WHU dataset is shown in Figure 14. It can be seen that the precision index and the MIoU index of ResFAUnet were significantly better, especially in the initial epochs.

Figure 15 displays the test-image results in various scenarios. The first and the second scenarios featured densely packed buildings. SegNet performed the worst in the first scenario and failed to distinguish the separate buildings accurately. As depicted in the green boxes, only ResFAUnet accurately distinguished the buildings from the ground, while the other four networks misidentified the ground as buildings. In the second scenario, the other four networks did not fully recognize the small buildings in the green boxes, and Unet did not recognize any buildings at all. Only ResFAUnet completely recognized the outlines of all the buildings. The third scenario was complex, with buildings and roads both present. In this scenario, the other four networks missed some of the buildings. The fourth and the fifth scenarios were used to compare the contour integrity achieved by the models on buildings with complex outlines, and only ResFAUnet completely and correctly recognized the contours of the buildings.

3.5. Ablation Experiment

This section reports the ablation experiments that were conducted to verify three aspects of the ResFAUnet network. The first aspect was the feature-extraction structure of the ResNeXt101 network in the encoding part (assessed by comparing the performance of AttentionUnet, ResNeXt101 + AttentionUnet, and ResFAUnet networks on different datasets). The second aspect was the multi-scale fusion structure of the decoding part (assessed by comparing the performance of AttentionUnet, FPN + AttentionUnet and ResFAUnet networks on different datasets). The last aspect was the transfer learning (assessed by comparing the performance of AttentionUnet, ResFAUnet_nopred, and ResFAUnet networks on different datasets). The experiments were conducted using the same dataset and parameter settings.

3.5.1. Verifying the Effectiveness of ResNeXt101 Network

In this section, the effectiveness of the ResNeXt101 network as the encoding part for feature extraction is verified by comparing AttentionUnet, ResNeXt101 + AttentionUnet, and ResFAUnet. Table 4 presents the test results of the three networks on the Inria, China, and WHU datasets. The results indicate that after replacing the encoding part of AttentionUnet with ResNeXt101, the performance was significantly improved. The precision indexes on the three datasets increased by 1.93%, 3.88%, and 3.25%, respectively, and the other three indexes also improved. This means that the ResNeXt101 network had a stronger feature-extraction ability.

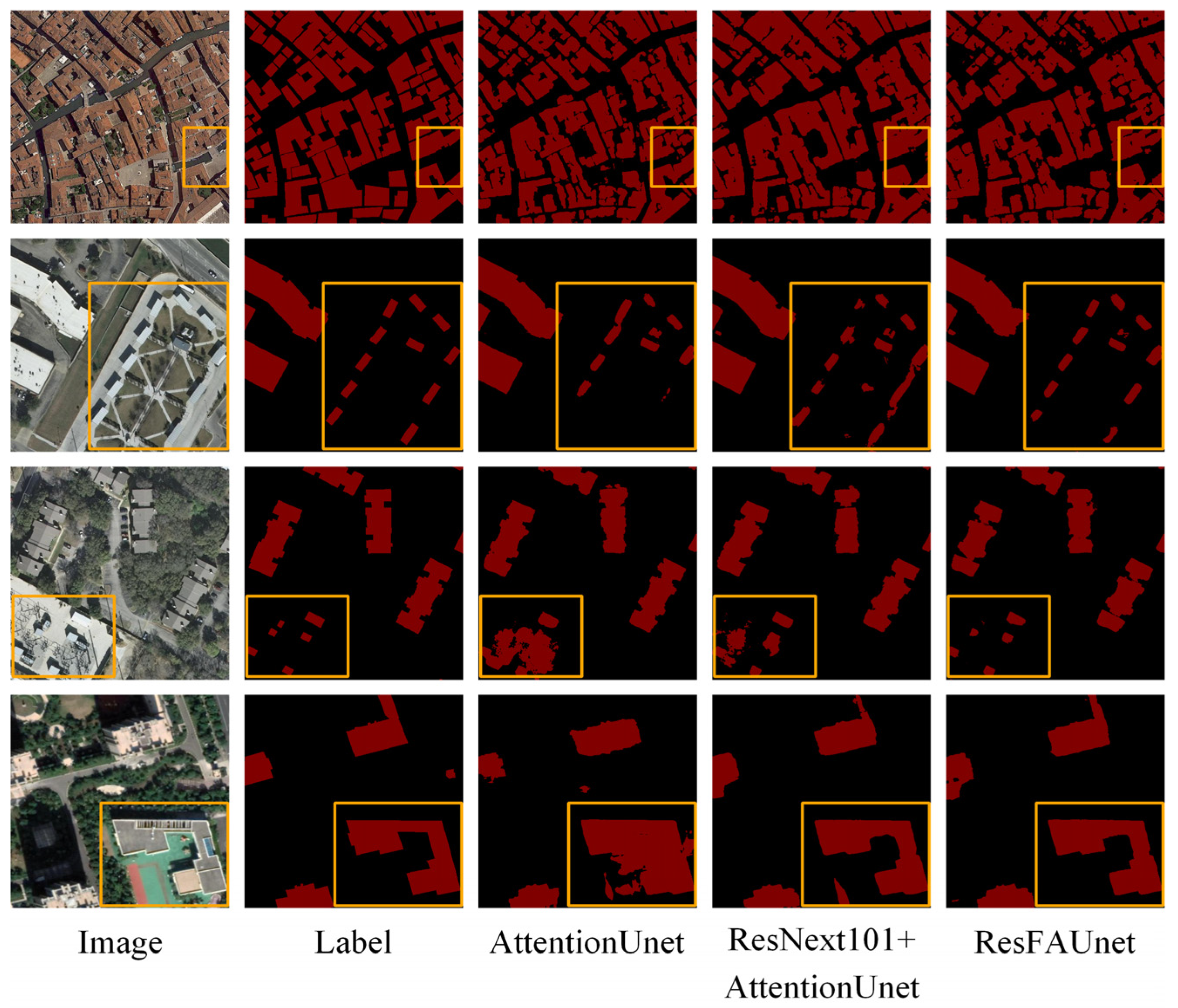

Replacing AttentionUnet’s feature-extraction part with the ResNeXt101 network can improve the feature-extraction ability of the network. For a clearer visualization of this observation, four images from the three datasets are presented in Figure 16. The first image is from the WHU dataset, and the results reveal that the segmentation by AttentionUnet left holes in the interior of the building, while the outline of the building was completely segmented by ResNeXt101 + AttentionUnet. The second and third images are from the Inria dataset. AttentionUnet missed buildings that appeared similar to the background, while ResNeXt101 + AttentionUnet accurately recognized them. The fourth image is from the China dataset, in which AttentionUnet did not accurately distinguish the void inside the building, but ResNeXt101 + AttentionUnet made the correct prediction. On the three datasets, ResFAUnet achieved the best segmentation effect, owing to the introduction of feature fusion in the decoding part on top of ResNeXt101 + AttentionUnet. This demonstrates that ResFAUnet not only inherits the outstanding feature-extraction ability of ResNeXt101, but also achieves a more precise segmentation of the building outline.

3.5.2. Verifying the Partial Feature Fusion in Decoding

In this section, the effectiveness of the partial feature fusion in the decoding part is verified by comparing AttentionUnet, feature fusion + AttentionUnet (FPN + AttentionUnet) and ResFAUnet.

Table 5 shows the test results for the three networks on the Inria dataset, China dataset, and WHU dataset. It can be seen that the network with feature fusion in decoding (FPN + AttentionUnet) had small improvements in all indexes compared with AttentionUnet network, and that the precision indexes of the three datasets improved by 0.71%, 1.91%, and 0.89%, respectively. This means that the multi-scale feature fusion can effectively improve the feature-extraction ability of the network.

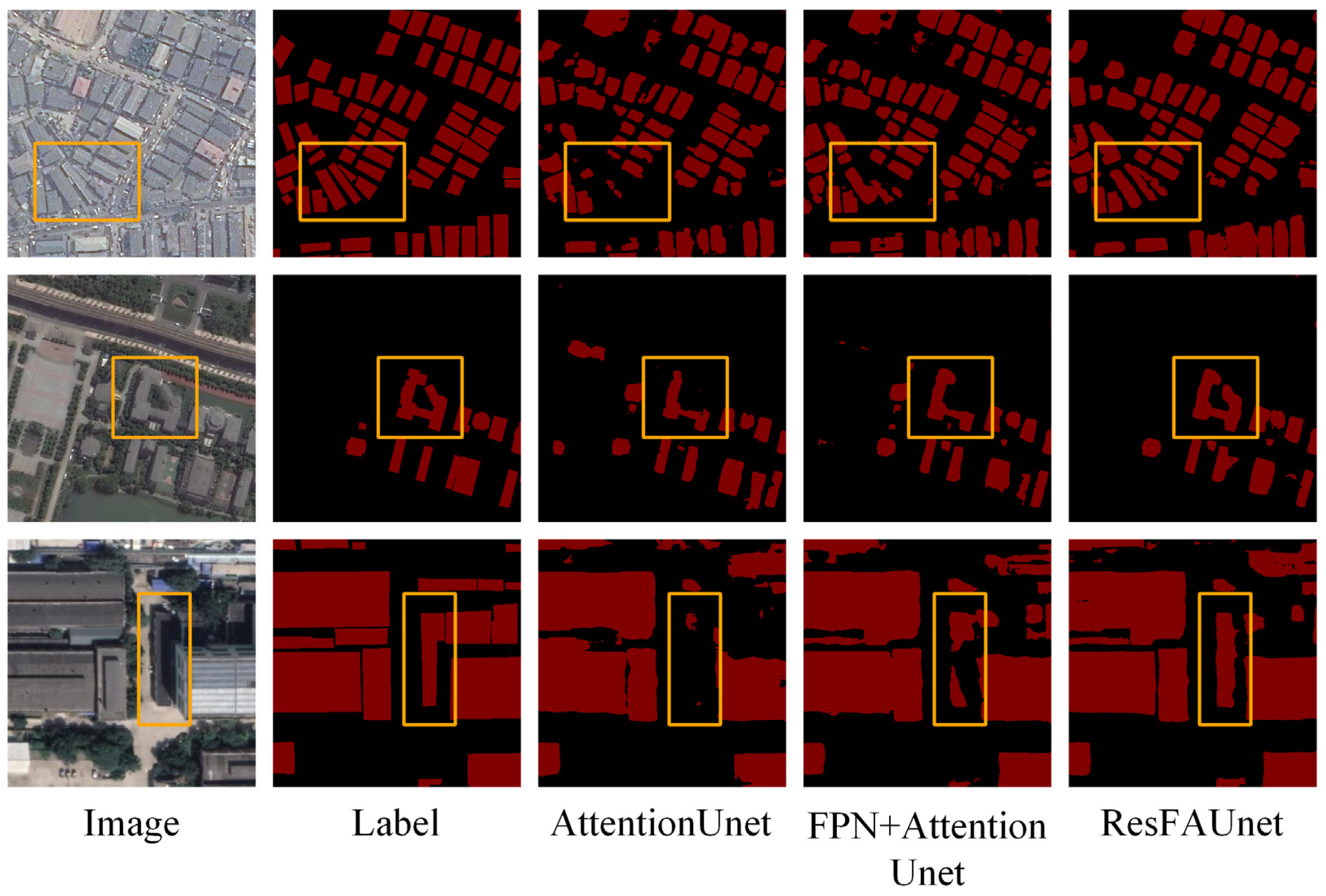

For a better visualization, one image was selected from each of the three datasets, as shown in Figure 17. In the WHU dataset and the China dataset, AttentionUnet missed some of the buildings, while FPN + AttentionUnet achieved better segmentation results. In the Inria dataset, AttentionUnet incorrectly identified land as buildings, while FPN + AttentionUnet effectively distinguished the ground from buildings. It can be seen that partial feature fusion in decoding can reduce building omissions and false identifications to some extent, and can improve the building-segmentation performance of the network.

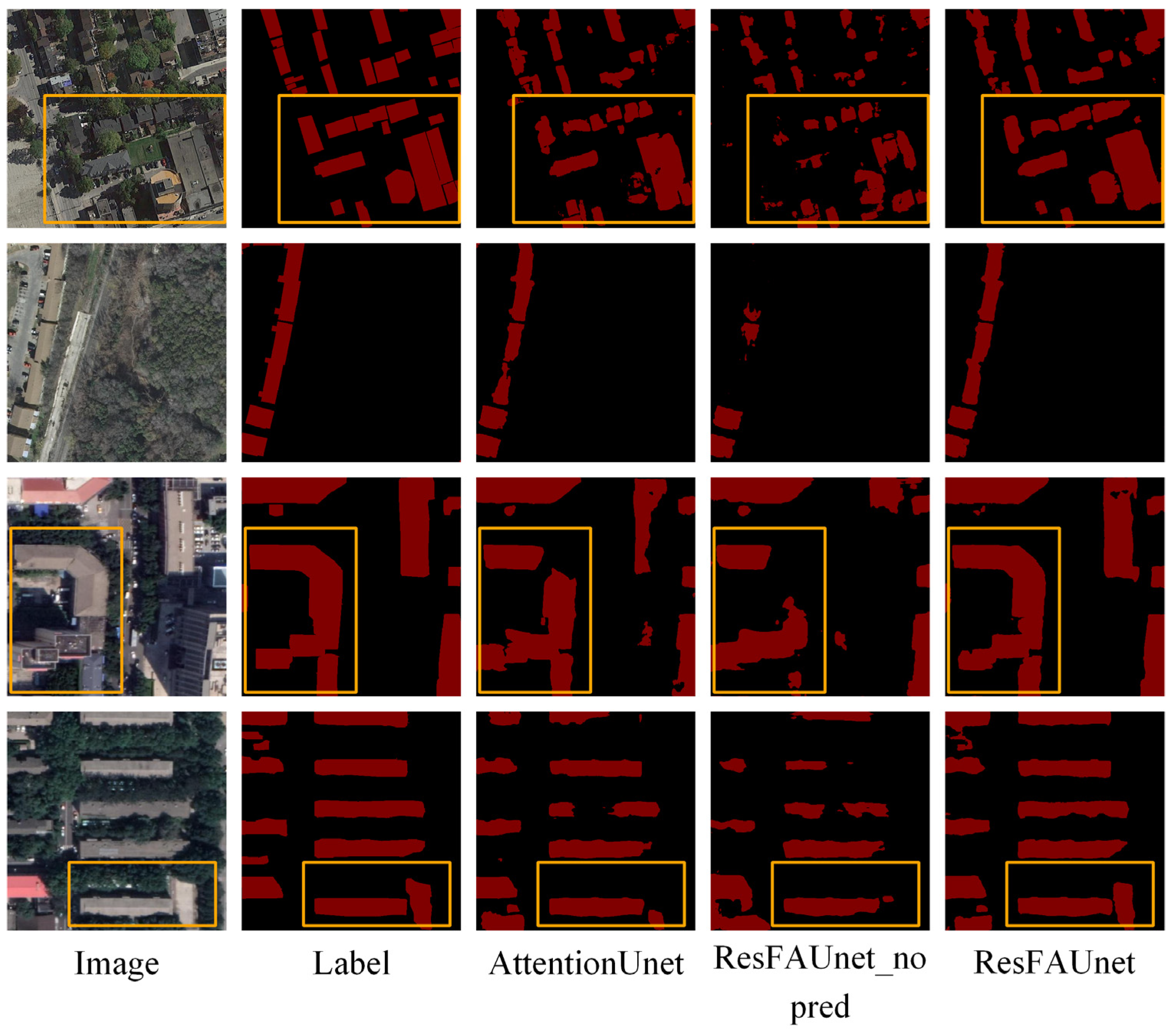

3.5.3. Verifying Transfer Learning

In this section, the effectiveness of transfer learning is verified by comparing AttentionUnet, ResFAUnet_nopred, and ResFAUnet. Specifically, AttentionUnet and ResFAUnet_nopred do not use transfer learning. Table 6 shows the test results for the three networks on the three datasets. The results of ResFAUnet_nopred were all lower than those of AttentionUnet, while all the indexes of ResFAUnet were higher than those of AttentionUnet. Compared to ResFAUnet_nopred, the precision index of ResFAUnet increased by 4.5%, 5.29%, and 8.16% on the three datasets, respectively. These results demonstrate that the introduction of transfer learning can effectively improve network performance in small-sample learning tasks.

For a clearer visualization, four images were selected from the Inria, China, and WHU datasets, as shown in Figure 18. The performance of ResFAUnet_nopred in these four scenarios was the worst, even lower than that of AttentionUnet, with a large number of incomplete building-contour segmentations. This was mainly because the dataset size was small, making it difficult for ResFAUnet_nopred to learn good weights. ResFAUnet achieved the best performance with the same dataset and parameter settings. Thus, transfer learning can help the network achieve better training results with small sample sizes.