Lidar Pose Tracking of a Tumbling Spacecraft Using the Smoothed Normal Distribution Transform

Abstract

1. Introduction

2. Related Work

2.1. Point Cloud Registration

2.2. Satellite Pose Tracking Using Range Data

3. Proposed Methods

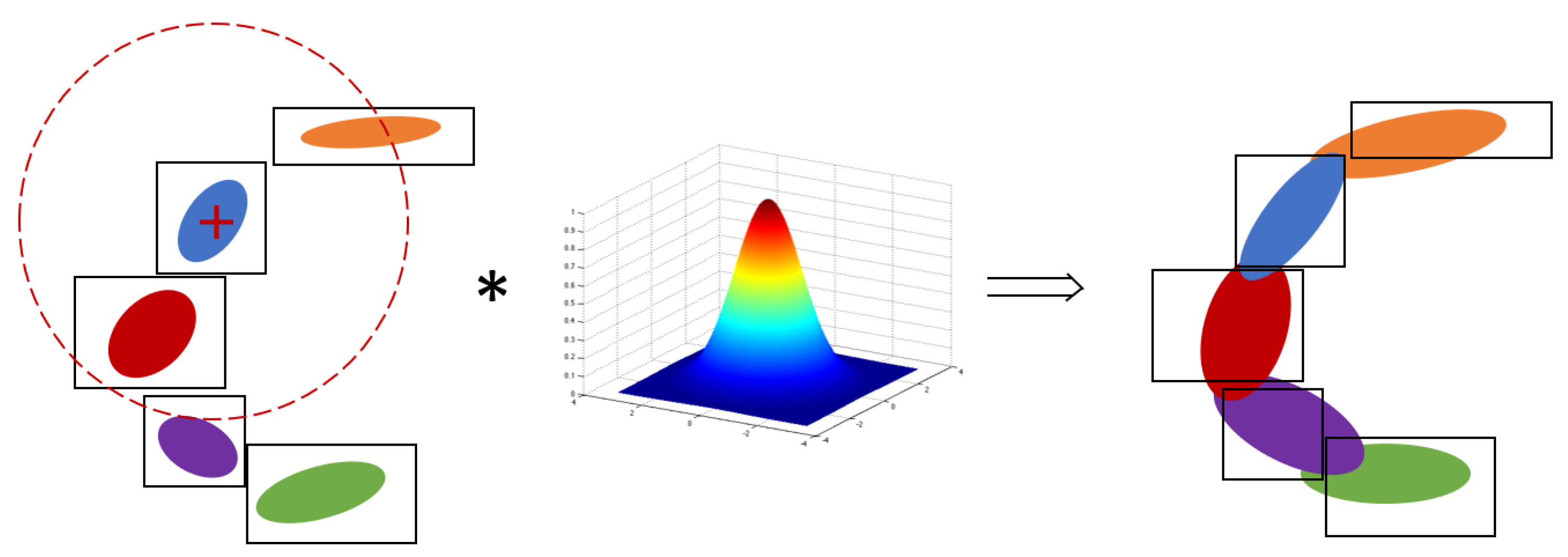

3.1. Smoothed Normal Distribution Transform

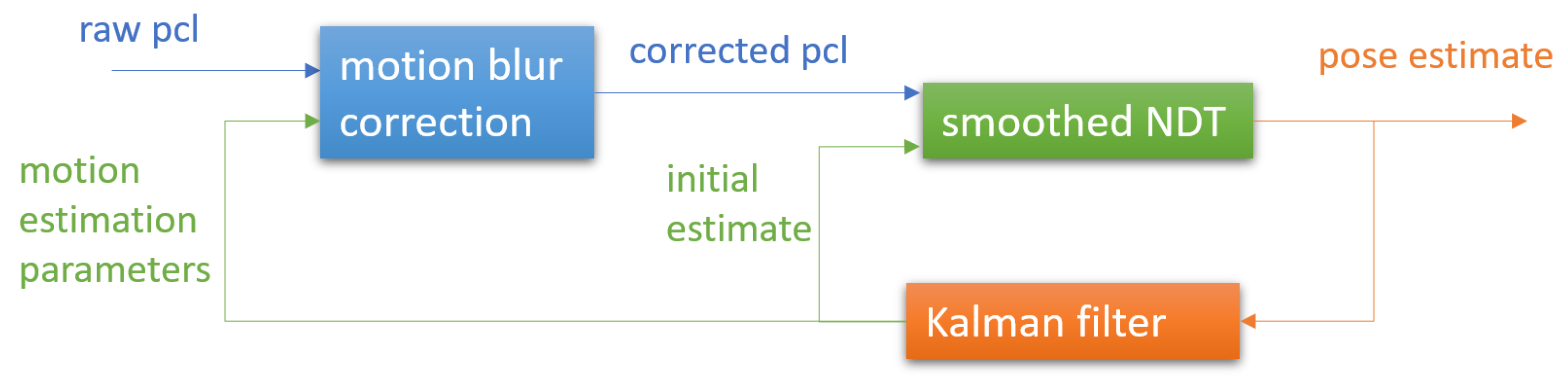

3.2. Conjunction with a Filter and Motion Compensation

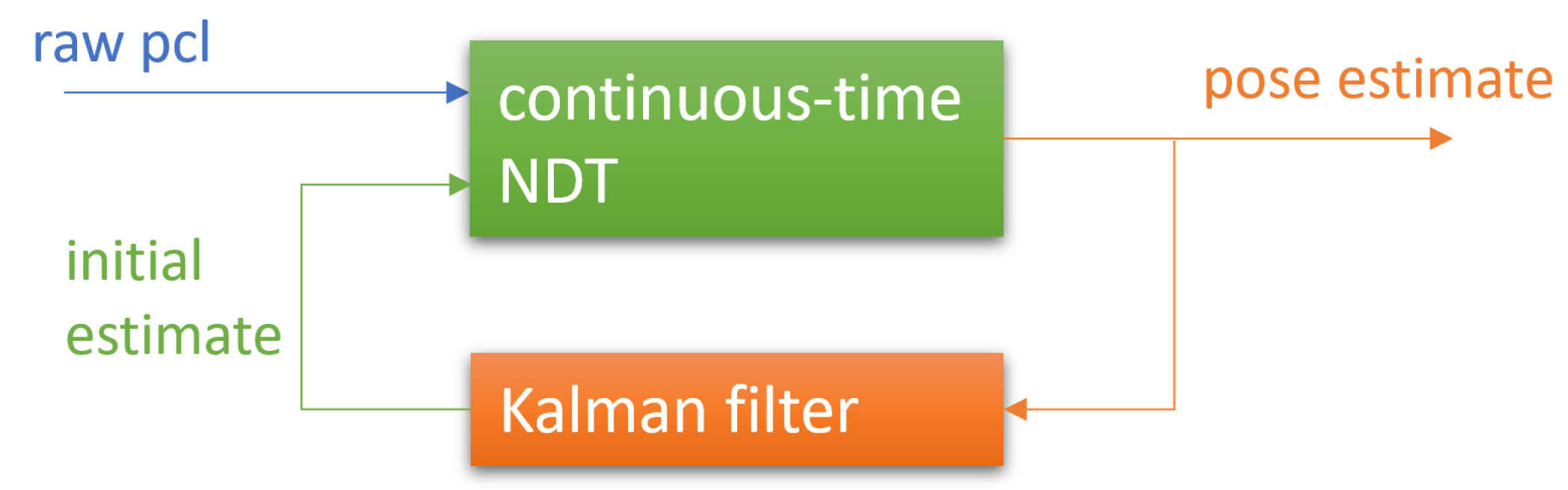

3.3. Continuous-Time NDT

4. Experimental Results

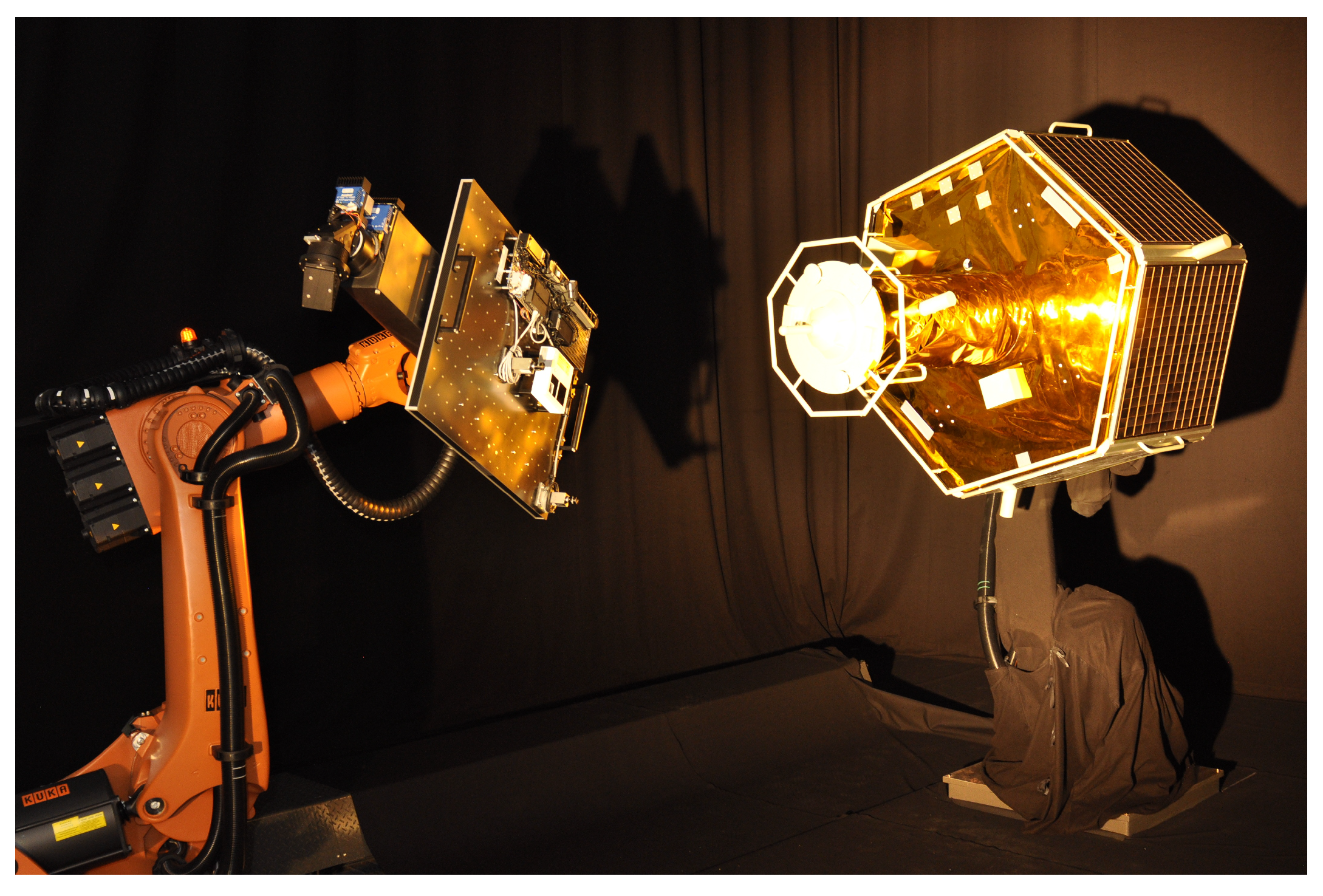

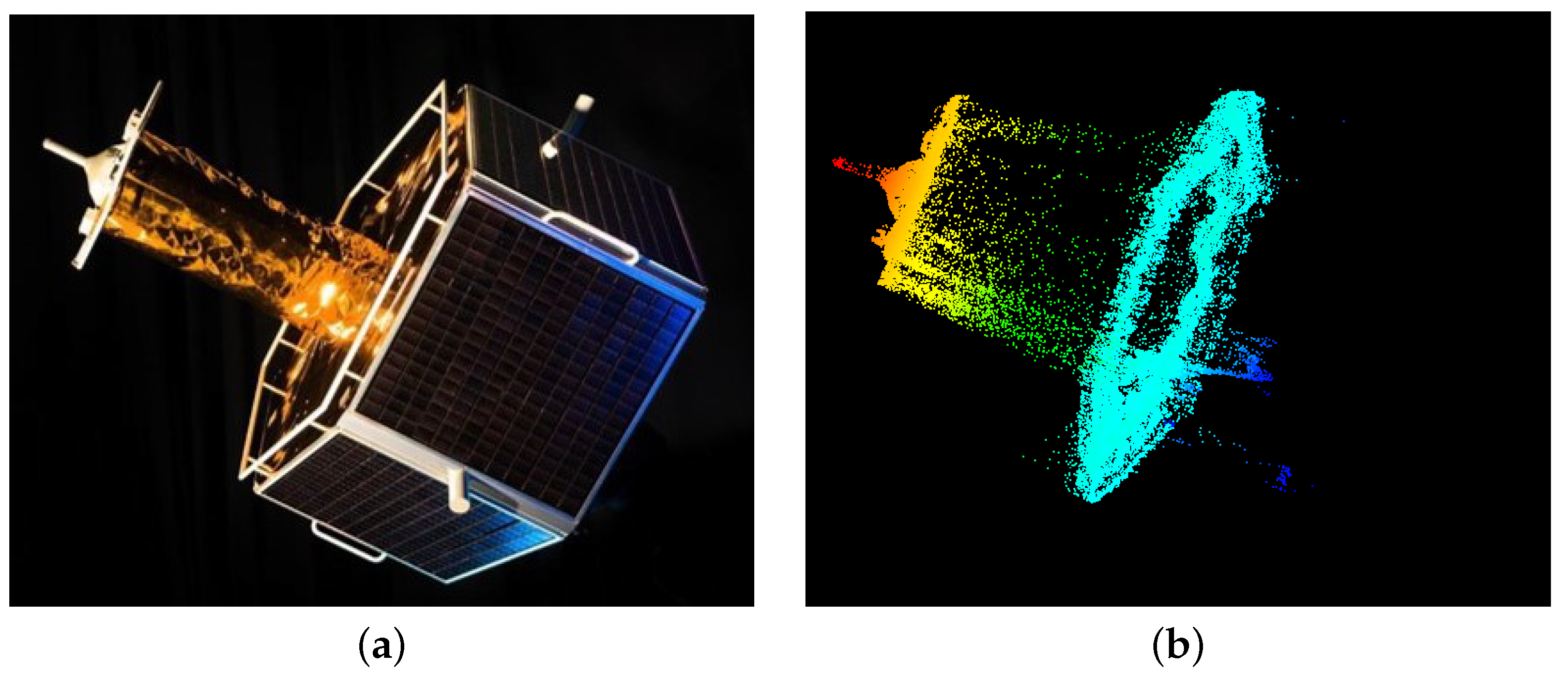

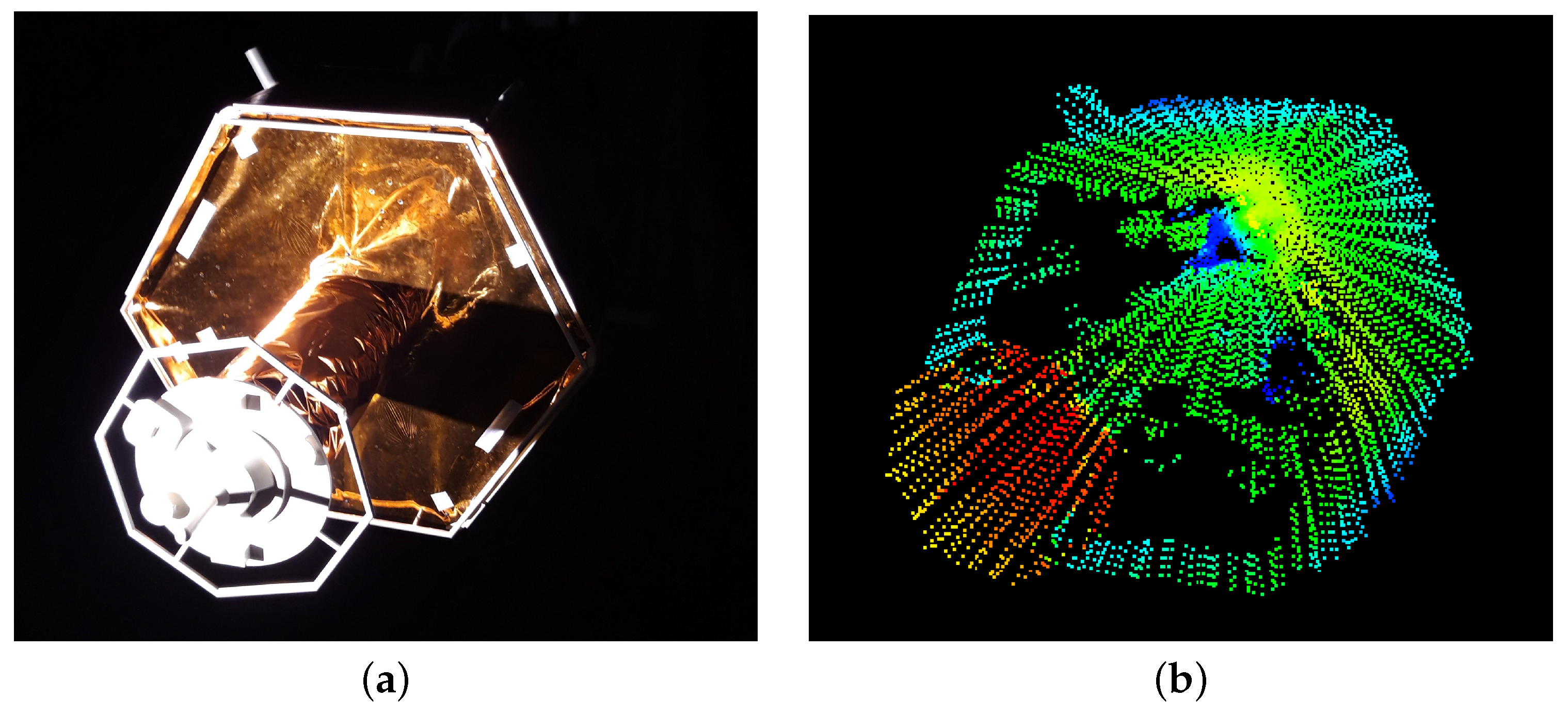

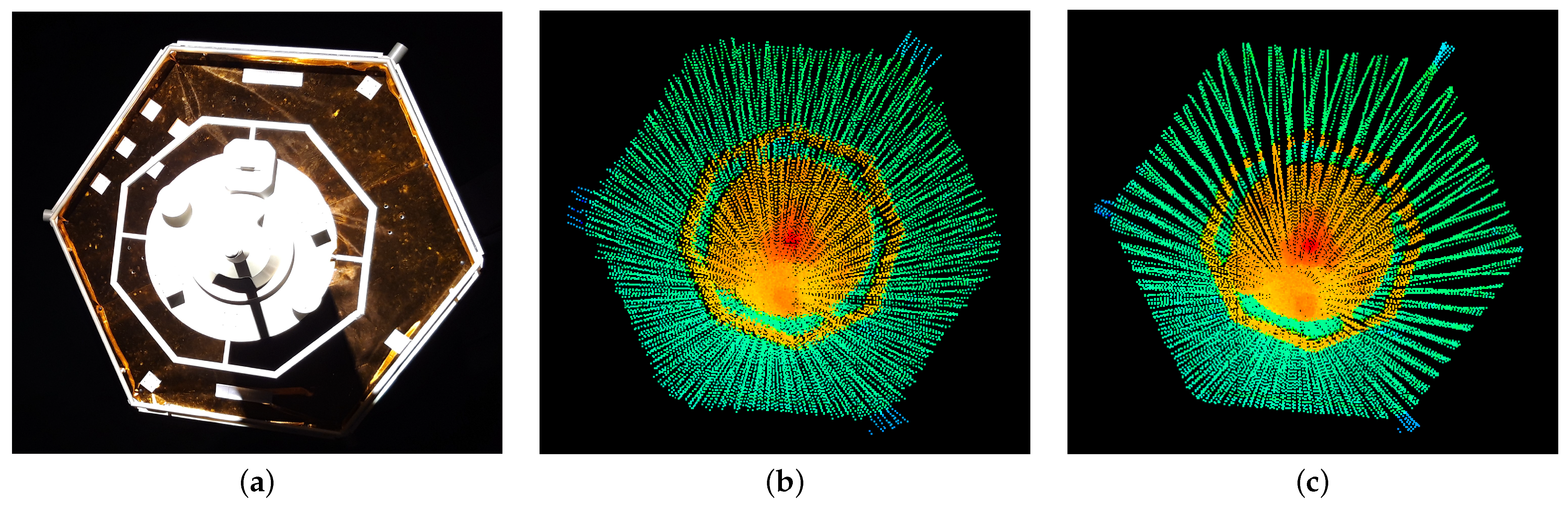

4.1. Hardware-in-the-Loop Experiments at the European Proximity Operations Simulator

4.2. Evaluated Methods

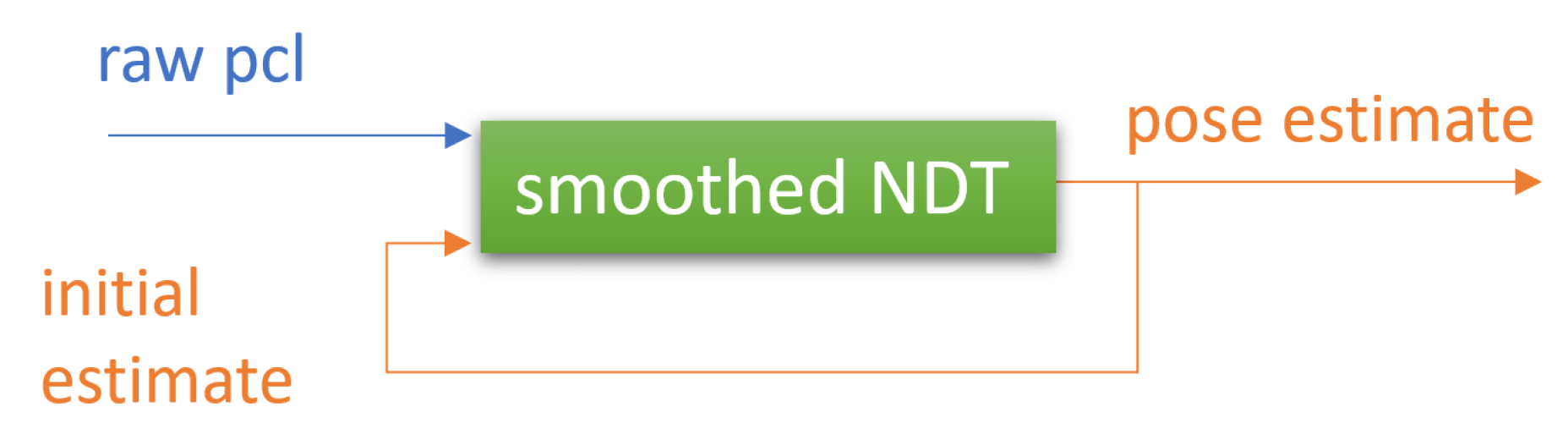

- NDT: Smoothed NDT as presented in Section 3.1, with simple feedback as in Figure 2;

- NDT-deblurring: Smoothed NDT with motion compensation as in Section 3.2, in conjunction with the Kalman filter as in Figure 3;

- CT-NDT: Continuous-time smoothed NDT presented in Section 3.3, in conjunction with the Kalman filter as in Figure 4;

- ICP: The ICP implementation from the 3D Toolkit (3DTK), with simple feedback as in Figure 2.
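The "simple feedback" used by the NDT and ICP variants above can be illustrated with a minimal sketch. It assumes that simple feedback means the pose estimated for one scan is reused as the initial guess for the next registration, with no filter in between. The function names (`best_rigid_transform`, `icp`, `track`) are hypothetical, the point-to-point ICP with brute-force nearest neighbours is a generic textbook variant and not the 3DTK implementation, and metres are assumed as the unit. The defaults of 40 iterations, a 10 cm maximum correspondence distance, and termination on the pose increment follow the ICP row of the parameter table.

```python
import numpy as np


def best_rigid_transform(src, dst):
    """Least-squares R, t such that R @ src_i + t ~ dst_i (Kabsch / SVD)."""
    src_mean, dst_mean = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_mean).T @ (dst - dst_mean)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection in the SVD solution.
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    t = dst_mean - R @ src_mean
    return R, t


def icp(scan, model, R0, t0, max_iterations=40, max_distance=0.10, tol=1e-8):
    """Point-to-point ICP aligning `scan` to `model`, started from (R0, t0)."""
    R, t = R0.copy(), t0.copy()
    for _ in range(max_iterations):
        moved = scan @ R.T + t
        # Brute-force nearest neighbours; acceptable for a small example cloud.
        d2 = ((moved[:, None, :] - model[None, :, :]) ** 2).sum(axis=2)
        nearest = d2.argmin(axis=1)
        # Reject correspondences farther apart than max_distance.
        keep = d2[np.arange(len(scan)), nearest] <= max_distance ** 2
        if keep.sum() < 3:
            break
        dR, dt = best_rigid_transform(moved[keep], model[nearest[keep]])
        R, t = dR @ R, dR @ t + dt
        # Terminate once the pose increment becomes negligible.
        if np.linalg.norm(dt) < tol and np.linalg.norm(dR - np.eye(3)) < tol:
            break
    return R, t


def track(scans, model, R_init, t_init):
    """Simple feedback (assumed): each result seeds the next registration."""
    R, t = R_init, t_init
    poses = []
    for scan in scans:
        R, t = icp(scan, model, R, t)
        poses.append((R, t))
    return poses
```

Calling `track(scans, model, np.eye(3), np.zeros(3))` returns one `(R, t)` pair per scan, each mapping that scan into the model frame. Because every registration starts from the previous result, the scheme only works while the inter-scan motion stays inside the convergence basin of the registration, which is presumably why the filter-based variants are evaluated for the faster target motion.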

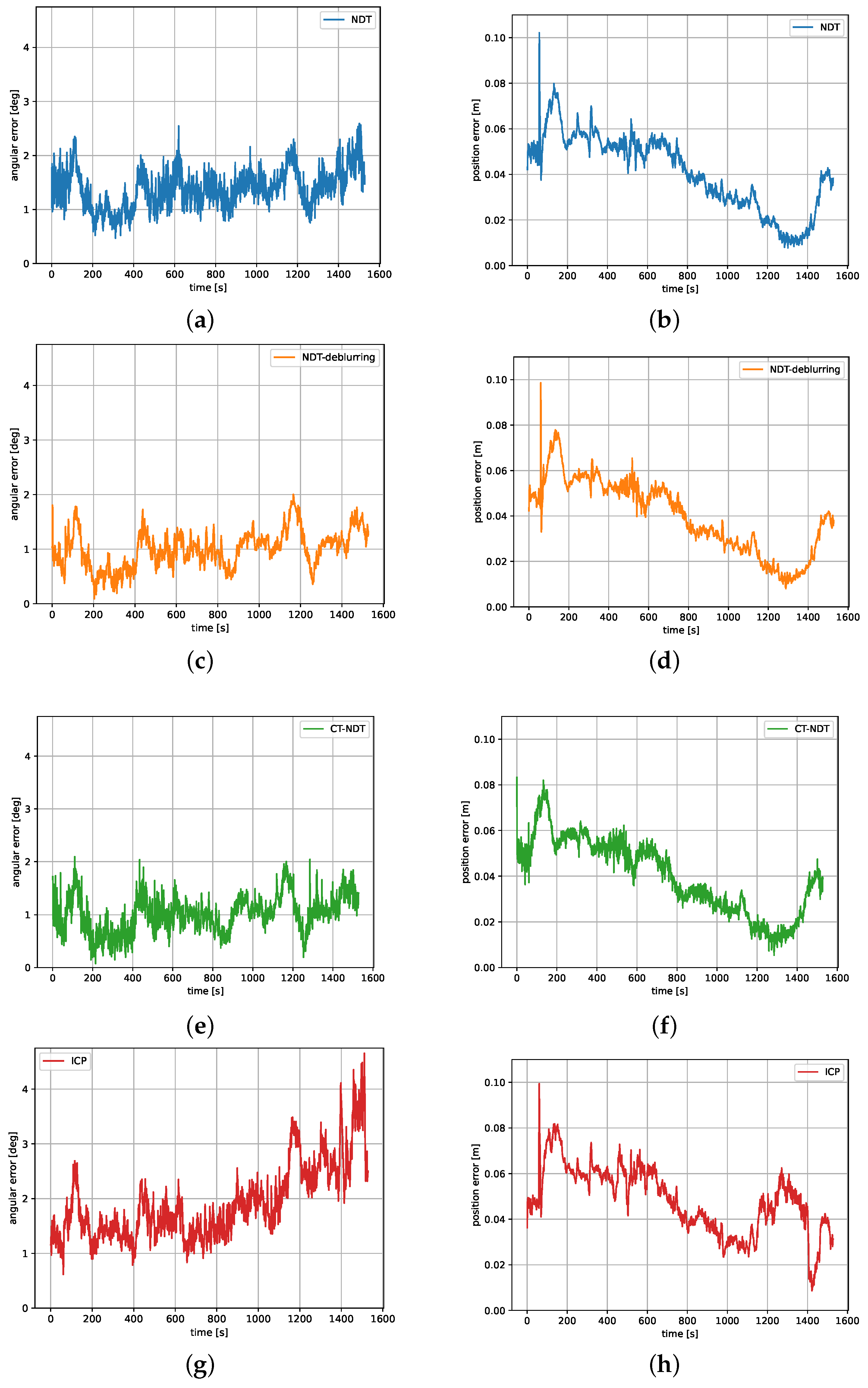

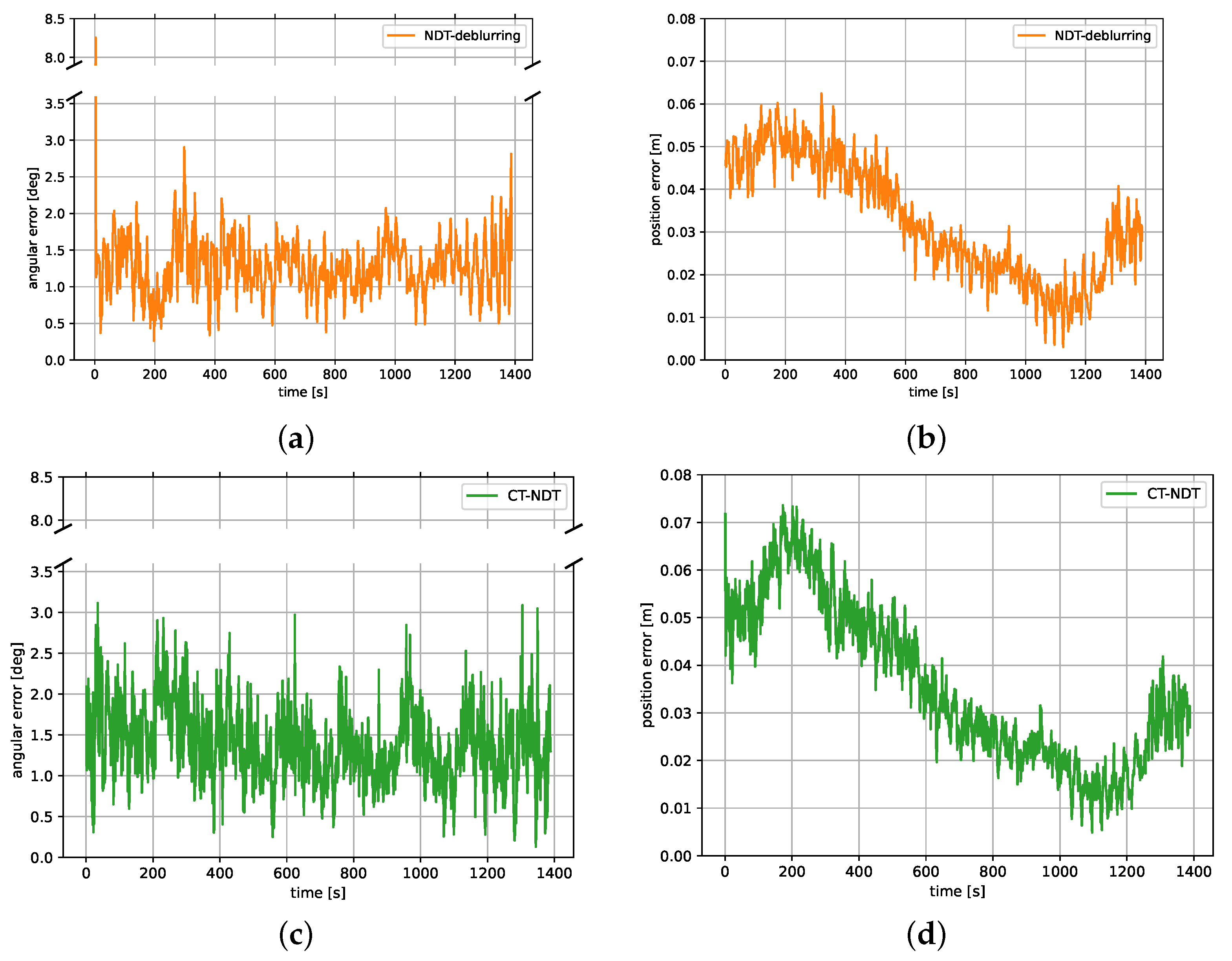

4.3. Hardware-in-the-Loop Results for a Slowly Spinning Target

4.4. Hardware-in-the-Loop Results for a Rapidly Tumbling Target

5. Discussion

5.1. Comparison of the Different Methods for Tracking a Slowly Spinning Target

5.2. Comparison of the Different Methods for Tracking a Rapidly Tumbling Target

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Jacobians of the NDT Cost Function

Appendix A.1. Jacobian for the Classical Formulation

Appendix A.2. Jacobian for the Continuous-Time Formulation

| Method | Cell Size | Max Distance 1 | Max Iterations | Termination Criteria | Voxel Filter Size |
|---|---|---|---|---|---|
| NDT | cm | cm | 20 | Increment and mm | 2 cm |
| ICP | - | 10 cm | 40 | Increment norm | 2 cm |

| Method | Ang. Error Mean [deg] | Ang. Error Max [deg] | Pos. Error Mean [cm] | Pos. Error Max [cm] | Exec. Time Mean [ms] | Exec. Time Max [ms] | Num. Iterations Mean | Num. Iterations Max |
|---|---|---|---|---|---|---|---|---|
| NDT | | | | | | | | 20 |
| NDT-deblurring | | | | | | | | 20 |
| CT-NDT | | | | | | | | 20 |
| ICP | | | | | | | | 40 |

| Method | Ang. Error Mean [deg] | Ang. Error Max [deg] | Pos. Error Mean [cm] | Pos. Error Max [cm] | Exec. Time Mean [ms] | Exec. Time Max [ms] | Num. Iterations Mean | Num. Iterations Max |
|---|---|---|---|---|---|---|---|---|
| NDT-deblurring | | | | | | | | 20 |
| CT-NDT | | | | | | | | 20 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Renaut, L.; Frei, H.; Nüchter, A. Lidar Pose Tracking of a Tumbling Spacecraft Using the Smoothed Normal Distribution Transform. Remote Sens. 2023, 15, 2286. https://doi.org/10.3390/rs15092286
