Can Plot-Level Photographs Accurately Estimate Tundra Vegetation Cover in Northern Alaska?

Abstract

1. Introduction

- Which machine learning model is optimal for the classification of plot-level photographs of Arctic tundra vegetation?

- How do estimates from plot-level photography compare with estimates from the point frame method?

- Can we predict vegetation cover across space and time using the vegetation cover estimates from plot-level photography?

2. Materials and Methods

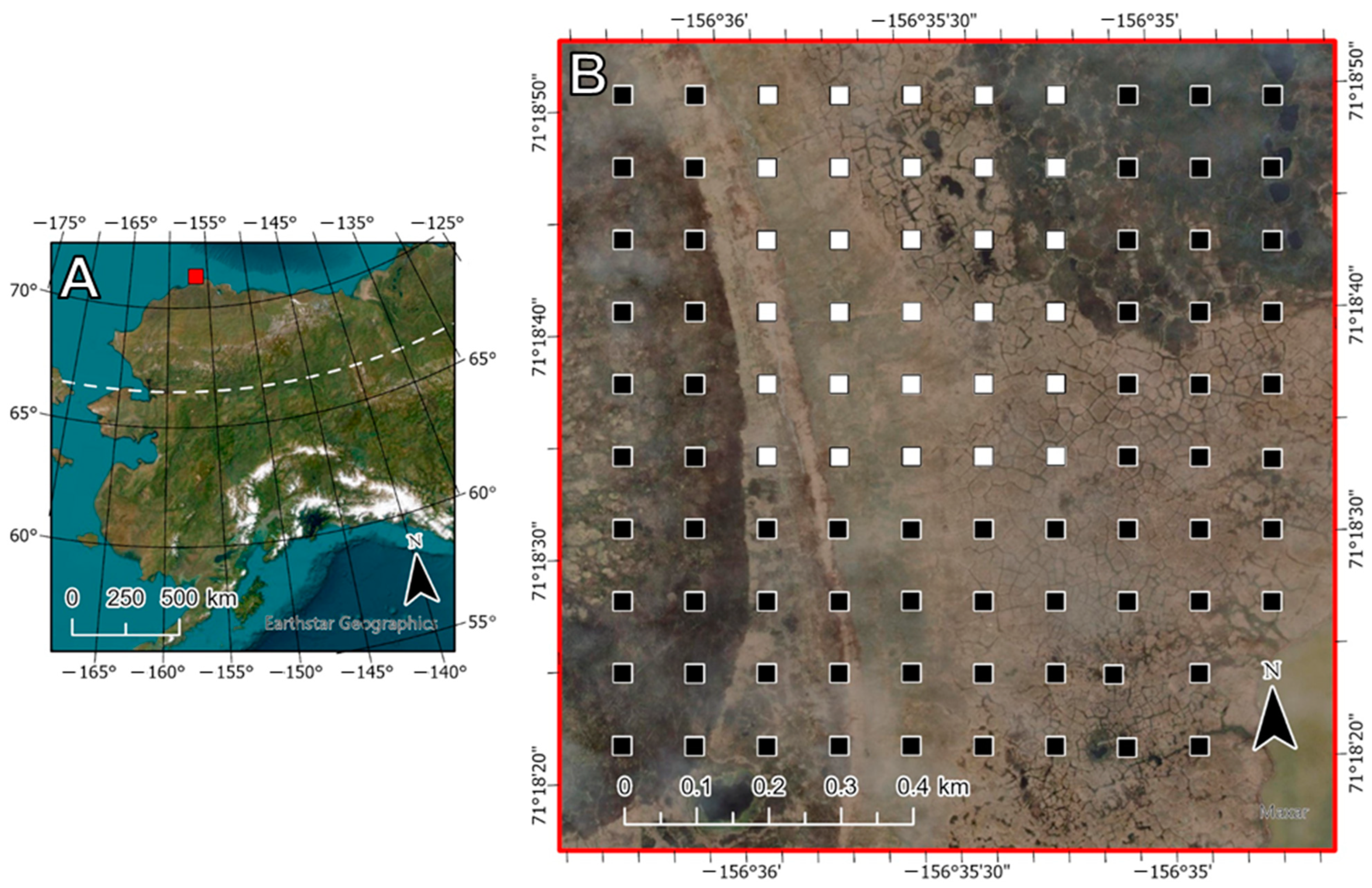

2.1. Study Site

2.2. Plot-Level Photography

2.3. Semi-Automated Image Analysis

2.3.1. Image Preprocessing

2.3.2. Segmentation and Preliminary Classification

2.3.3. Machine Learning Classification

2.4. Point Frame

2.5. Predicting Vegetation Cover

3. Results

3.1. Comparing Machine Learning Models

3.2. Comparing Estimates of Vegetation Cover from Plot-Level Photography and Point Frame Sampling

4. Discussion

4.1. Comparing Machine Learning Models

4.2. Reliability of Vegetation Classes

4.3. Comparing Estimates of Vegetation Cover from Plot-Level Photography and Point Frame Sampling

4.4. Using Plot-Level Photography to Predict Vegetation Cover across Space and Time

4.5. Additional Sources of Error

4.6. Recommendations for Future Image Analysis

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Model | Training OA (%) | Training Min | Training Max | Training Kappa | Run Time (min) | Test OA (%) | Test Lower CI | Test Upper CI | Test Kappa |
|---|---|---|---|---|---|---|---|---|---|
| RF | 59.8 | 56.8 | 63.1 | 51.9 | 25.4 | 60.5 | 58.4 | 62.5 | 52.5 |
| GBM | 60.0 | 57.4 | 63.2 | 52.0 | 36.3 | 59.8 | 57.7 | 61.8 | 51.7 |
| CART | 55.5 | 52.0 | 59.8 | 46.8 | 0.1 | 56.2 | 54.1 | 58.2 | 46.8 |
| SVM | 57.4 | 54.9 | 60.7 | 49.3 | 10.6 | 57.4 | 55.3 | 59.4 | 49.4 |
| KNN | 46.8 | 43.1 | 51.3 | 37.6 | 1.9 | 46.6 | 44.5 | 48.7 | 37.6 |
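
The test-set confidence bounds can be checked approximately. This is a minimal sketch that rests on two assumptions. First, the bounds are taken to be a 95% binomial interval on overall accuracy. Second, the test set is taken to be the 2247 objects tallied in the confusion matrix in this section, 1359 of which lie on the diagonal. That matrix's OA (60.5) and kappa (52.5) match the RF test-set row. The sketch uses the Wilson score interval as a dependency-free stand-in. The source does not say which interval was used, and an exact (Clopper-Pearson) interval would be marginally wider.

```python
import math


def wilson_interval(correct: int, total: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 gives roughly 95%)."""
    p = correct / total
    denom = 1 + z**2 / total
    centre = (p + z**2 / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z**2 / (4 * total**2)) / denom
    return centre - half, centre + half


# Diagonal sum and grand total of the confusion matrix (assumed to be the RF test set).
correct, total = 1359, 2247
lower, upper = wilson_interval(correct, total)
print(f"OA = {100 * correct / total:.1f}%, 95% CI = {100 * lower:.1f}-{100 * upper:.1f}%")
# prints: OA = 60.5%, 95% CI = 58.4-62.5%
```

Under these assumptions the interval reproduces the RF test-set bounds of 58.4 and 62.5.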

| Predicted (rows) vs. Observed (columns) | BRYO | DSHR | FORB | GRAM | LICH | LITT | SHAD | STAD |
|---|---|---|---|---|---|---|---|---|
| BRYO | 178 | 14 | 3 | 28 | 0 | 92 | 27 | 0 |
| DSHR | 50 | 34 | 7 | 73 | 2 | 47 | 5 | 0 |
| FORB | 2 | 9 | 41 | 22 | 1 | 3 | 0 | 0 |
| GRAM | 16 | 16 | 8 | 270 | 0 | 35 | 0 | 4 |
| LICH | 6 | 3 | 2 | 20 | 38 | 74 | 0 | 43 |
| LITT | 47 | 8 | 1 | 18 | 9 | 431 | 9 | 6 |
| SHAD | 55 | 1 | 0 | 3 | 0 | 35 | 217 | 0 |
| STAD | 0 | 0 | 0 | 23 | 18 | 43 | 0 | 150 |
| Observed Totals | 354 | 85 | 62 | 457 | 68 | 760 | 258 | 203 |
| UA (%) | 52.0 | 15.6 | 52.6 | 77.4 | 20.4 | 81.5 | 69.8 | 64.1 |
| PA (%) | 50.3 | 40.0 | 66.1 | 59.1 | 55.9 | 56.7 | 84.1 | 73.9 |
| OA (%) | 60.5 | | | | | | | |
| Kappa | 52.5 | | | | | | | |
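
The accuracy rows under the matrix follow directly from the counts. The sketch below assumes rows are predicted classes and columns are observed classes, which is the orientation that makes the totals row equal the column sums. On that basis it recomputes four quantities:

- user's accuracy (UA): diagonal over row total;
- producer's accuracy (PA): diagonal over column total;
- overall accuracy (OA);
- Cohen's kappa.

All four match the tabulated values to one decimal place. The names `CLASSES`, `MATRIX`, and `accuracy_metrics` are illustrative only.

```python
# Rows = predicted class, columns = observed (reference) class.
CLASSES = ["BRYO", "DSHR", "FORB", "GRAM", "LICH", "LITT", "SHAD", "STAD"]
MATRIX = [
    [178, 14, 3, 28, 0, 92, 27, 0],
    [50, 34, 7, 73, 2, 47, 5, 0],
    [2, 9, 41, 22, 1, 3, 0, 0],
    [16, 16, 8, 270, 0, 35, 0, 4],
    [6, 3, 2, 20, 38, 74, 0, 43],
    [47, 8, 1, 18, 9, 431, 9, 6],
    [55, 1, 0, 3, 0, 35, 217, 0],
    [0, 0, 0, 23, 18, 43, 0, 150],
]


def accuracy_metrics(m):
    """Return observed totals, UA (%), PA (%), OA (%) and Cohen's kappa (x100)."""
    k = len(m)
    n = sum(sum(row) for row in m)
    row_totals = [sum(row) for row in m]                              # predicted totals
    col_totals = [sum(m[i][j] for i in range(k)) for j in range(k)]  # observed totals
    diag = [m[i][i] for i in range(k)]
    ua = [100 * d / r for d, r in zip(diag, row_totals)]  # correct / all predicted as class
    pa = [100 * d / c for d, c in zip(diag, col_totals)]  # correct / all observed as class
    po = sum(diag) / n                                     # observed agreement
    pe = sum(r * c for r, c in zip(row_totals, col_totals)) / n**2  # chance agreement
    kappa = (po - pe) / (1 - pe)
    return col_totals, ua, pa, 100 * po, 100 * kappa


col_totals, ua, pa, oa, kappa = accuracy_metrics(MATRIX)
for name, u, p in zip(CLASSES, ua, pa):
    print(f"{name}: UA={u:.1f} PA={p:.1f}")
print(f"OA={oa:.1f} Kappa={kappa:.1f}")
```

Because UA is conditioned on the prediction and PA on the reference, the two can diverge sharply for the same class. For DSHR, only 15.6% of objects predicted as deciduous shrub were observed as such, while 40.0% of observed deciduous shrub was correctly labelled.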

| Predictor | Type | Raw Importance | Normalized Importance (0–100) |
|---|---|---|---|
| Intensity | Layer | 411.4 | 100.0 |
| Green Ratio | Spectral | 144.3 | 26.5 |
| Green-Red Vegetation Index | Spectral | 142.6 | 26.0 |
| Greenness Excess Index | Spectral | 116.4 | 18.8 |
| Hue | Layer | 112.5 | 17.7 |
| Density | Shape | 100.8 | 14.5 |
| Blue Ratio | Spectral | 98.6 | 13.9 |
| Red Ratio | Spectral | 95.7 | 13.1 |
| Homogeneity | Texture | 72.5 | 6.7 |
| Length-to-Width Ratio | Extent | 71.9 | 6.5 |
| Contrast | Texture | 71.1 | 6.3 |
| Length | Extent | 62.1 | 3.8 |
| Standard Deviation of the Green Layer | Layer | 61.3 | 3.6 |
| Radius of the Largest Enclosed Ellipse | Shape | 58.8 | 2.9 |
| Entropy | Texture | 58.7 | 2.9 |
| Standard Deviation of the Blue Layer | Layer | 56.2 | 2.2 |
| Compactness | Shape | 55.8 | 2.1 |
| Elliptic Fit | Shape | 55.7 | 2.0 |
| Width | Extent | 54.7 | 1.8 |
| Radius of the Smallest Enclosed Ellipse | Shape | 54.4 | 1.7 |
| Border Length | Extent | 53.2 | 1.3 |
| Area | Extent | 48.3 | 0.0 |

| Class | Representative | Variability: Temporal MAE | Variability: Temporal Bias | Variability: Spatial MAE | Variability: Spatial Bias | Model: Temporal MAE | Model: Temporal Bias | Model: Spatial MAE | Model: Spatial Bias |
|---|---|---|---|---|---|---|---|---|---|
| Bryophytes | 14 | 8 | 6 | 6 | 1 | 9 | 6 | 6 | 0 |
| Deciduous Shrubs | 4 | 1 | 1 | 6 | 0 | 3 | 1 | 4 | 0 |
| Forbs | 4 | 3 | 0 | 4 | −2 | 3 | 1 | 4 | 2 |
| Graminoids | 33 | 11 | −9 | 11 | −1 | 9 | −7 | 7 | 4 |
| Lichens | 7 | 2 | 2 | 8 | 1 | 4 | 1 | 4 | 2 |
| Litter | 20 | 13 | −11 | 7 | 2 | 12 | −11 | 9 | 0 |
| Standing Dead | 17 | 13 | 12 | 5 | 0 | 11 | 10 | 8 | 0 |
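
MAE and bias summarize different aspects of error. MAE is the average magnitude of the error regardless of direction. Bias is the average signed error, so over- and under-predictions can cancel, and MAE is always at least as large as the absolute bias. The sketch below illustrates both on hypothetical plot values, not data from this study. The sign convention (predicted minus observed) is an assumption, since the table does not state it.

```python
def mae(predicted, observed):
    """Mean absolute error: average size of the error, ignoring its sign."""
    if len(predicted) != len(observed) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - o) for p, o in zip(predicted, observed)) / len(predicted)


def bias(predicted, observed):
    """Mean signed error. ASSUMED convention: predicted minus observed,
    so a positive value means cover is over-predicted on average."""
    if len(predicted) != len(observed) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(p - o for p, o in zip(predicted, observed)) / len(predicted)


# Hypothetical percent-cover values for four plots.
predicted = [30, 35, 28, 40]
observed = [33, 30, 32, 41]
print(mae(predicted, observed))   # 3.25
print(bias(predicted, observed))  # -0.75
```

A class can therefore show a sizeable MAE with a bias near zero when errors in opposite directions offset each other across plots or years.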

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sellers, H.L.; Vargas Zesati, S.A.; Elmendorf, S.C.; Locher, A.; Oberbauer, S.F.; Tweedie, C.E.; Witharana, C.; Hollister, R.D. Can Plot-Level Photographs Accurately Estimate Tundra Vegetation Cover in Northern Alaska? Remote Sens. 2023, 15, 1972. https://doi.org/10.3390/rs15081972
