A Comparison of UAV-Derived Dense Point Clouds Using LiDAR and NIR Photogrammetry in an Australian Eucalypt Forest

Abstract

1. Introduction

2. Materials and Methods

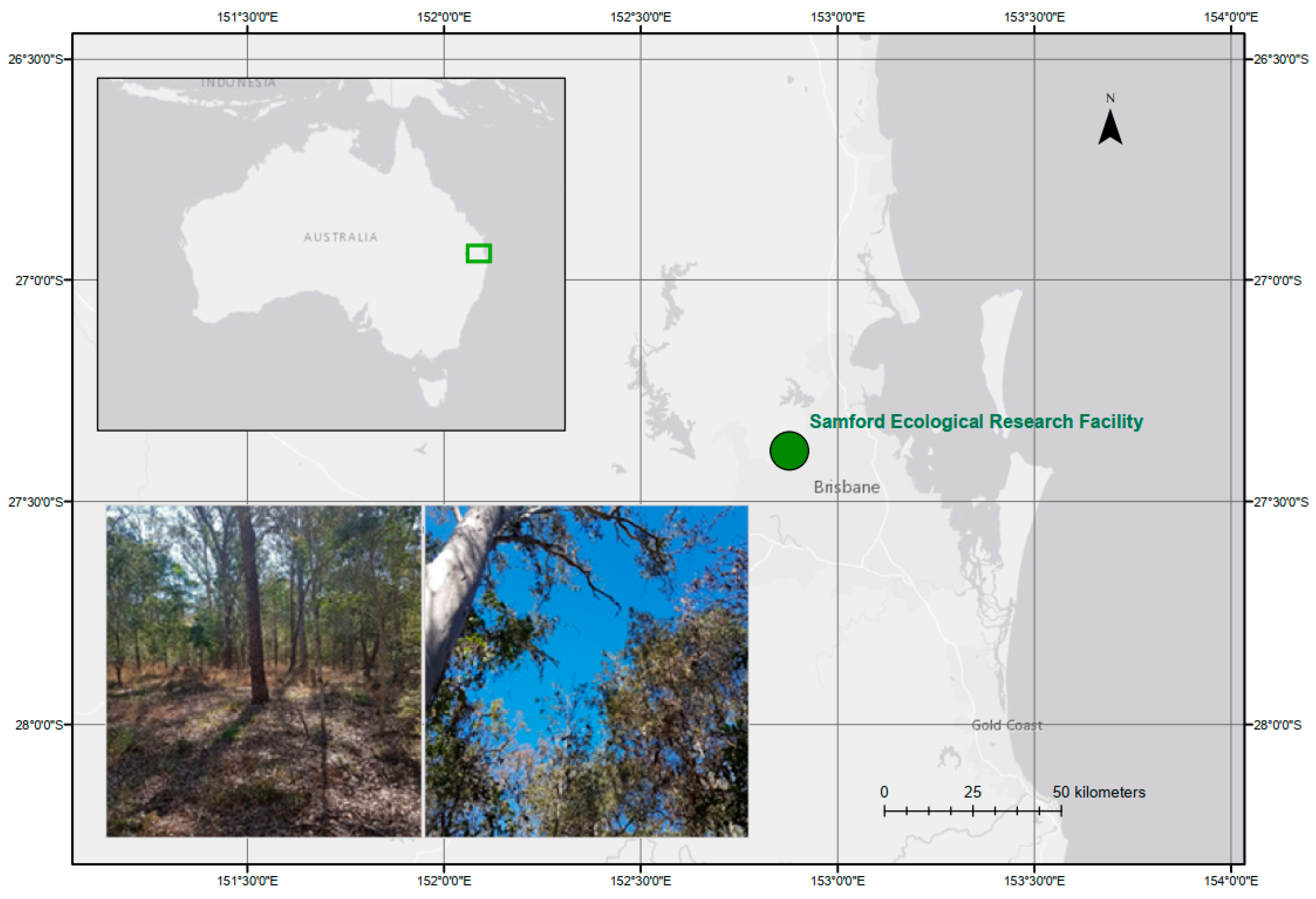

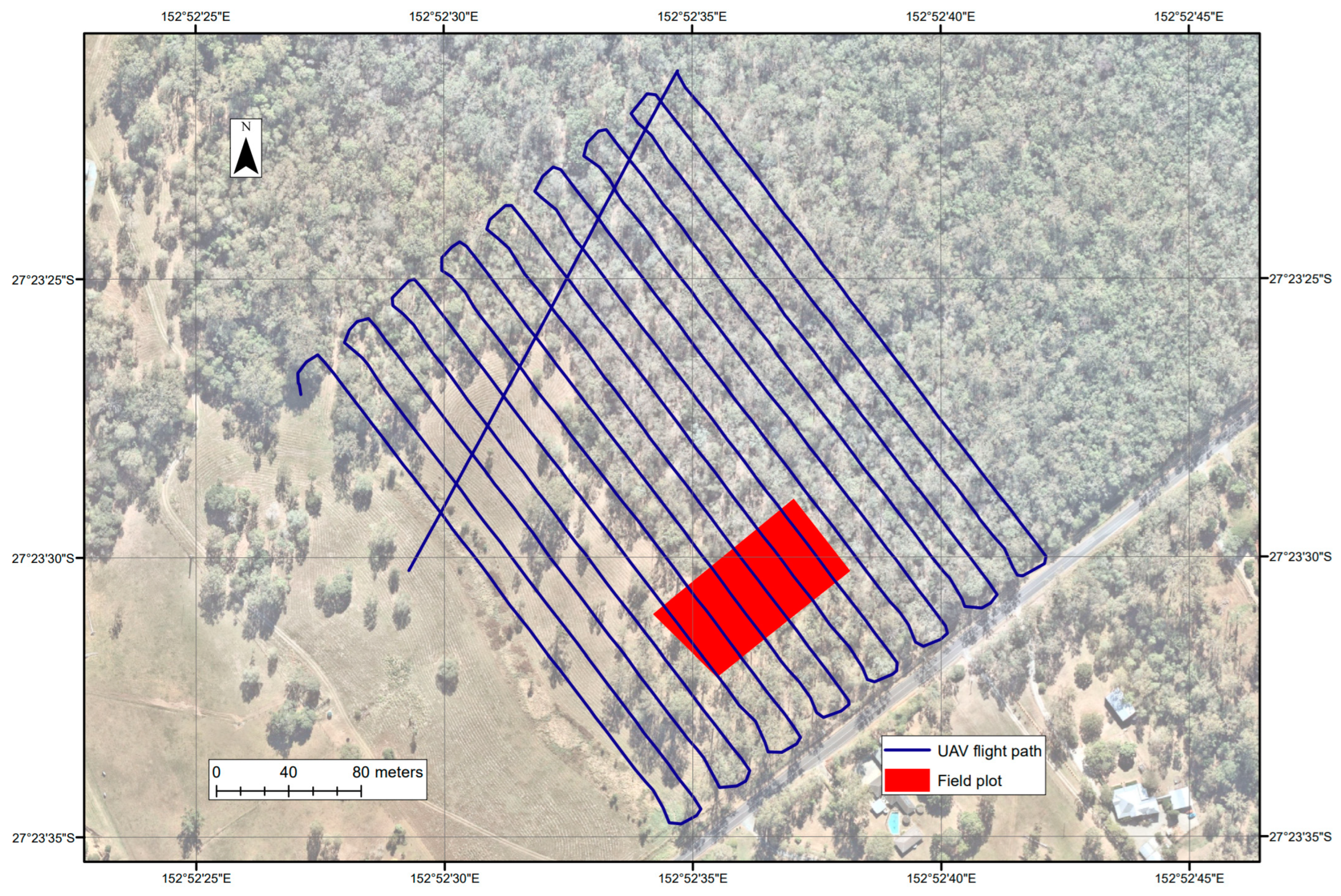

2.1. The Study Site

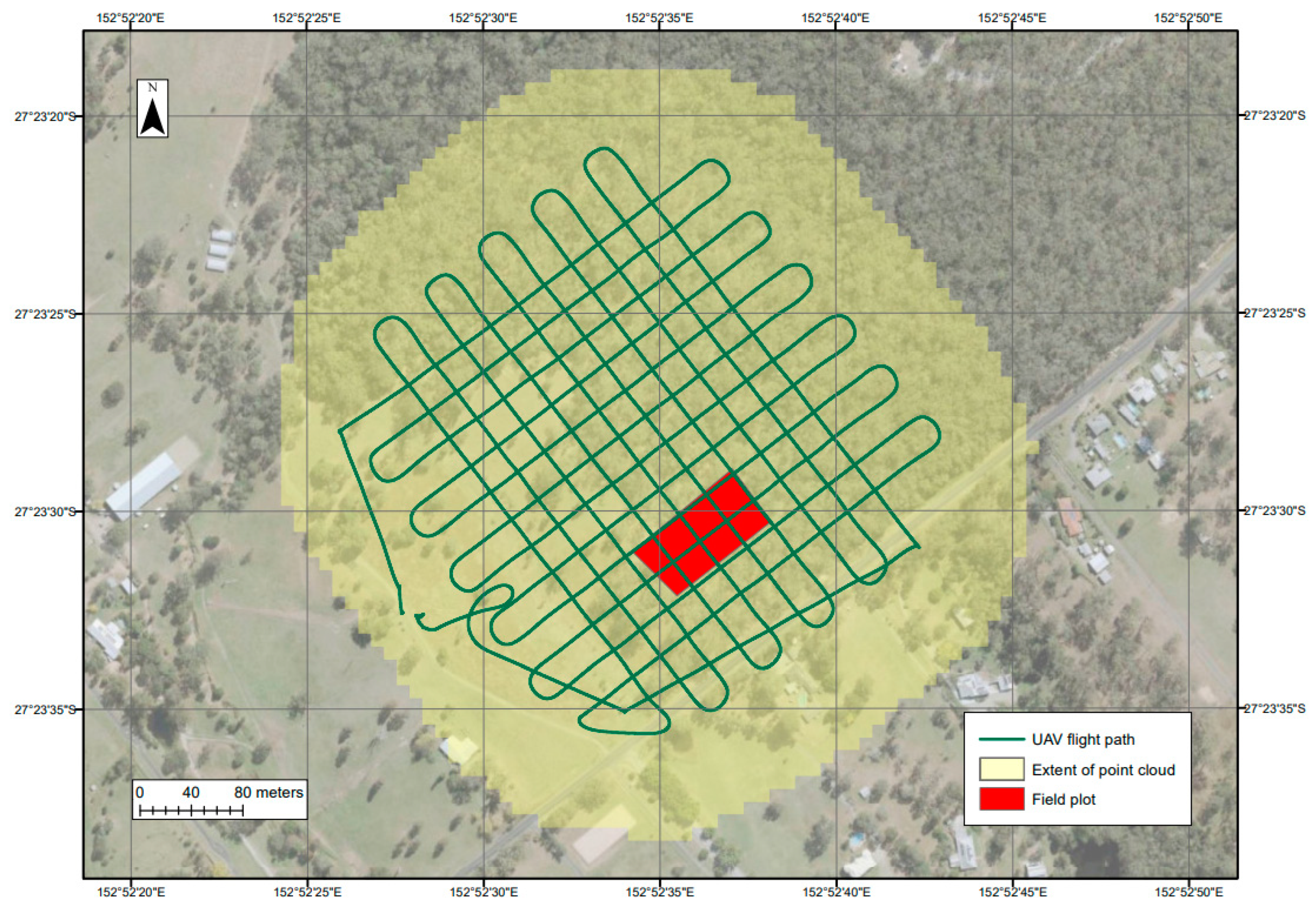

2.2. Data

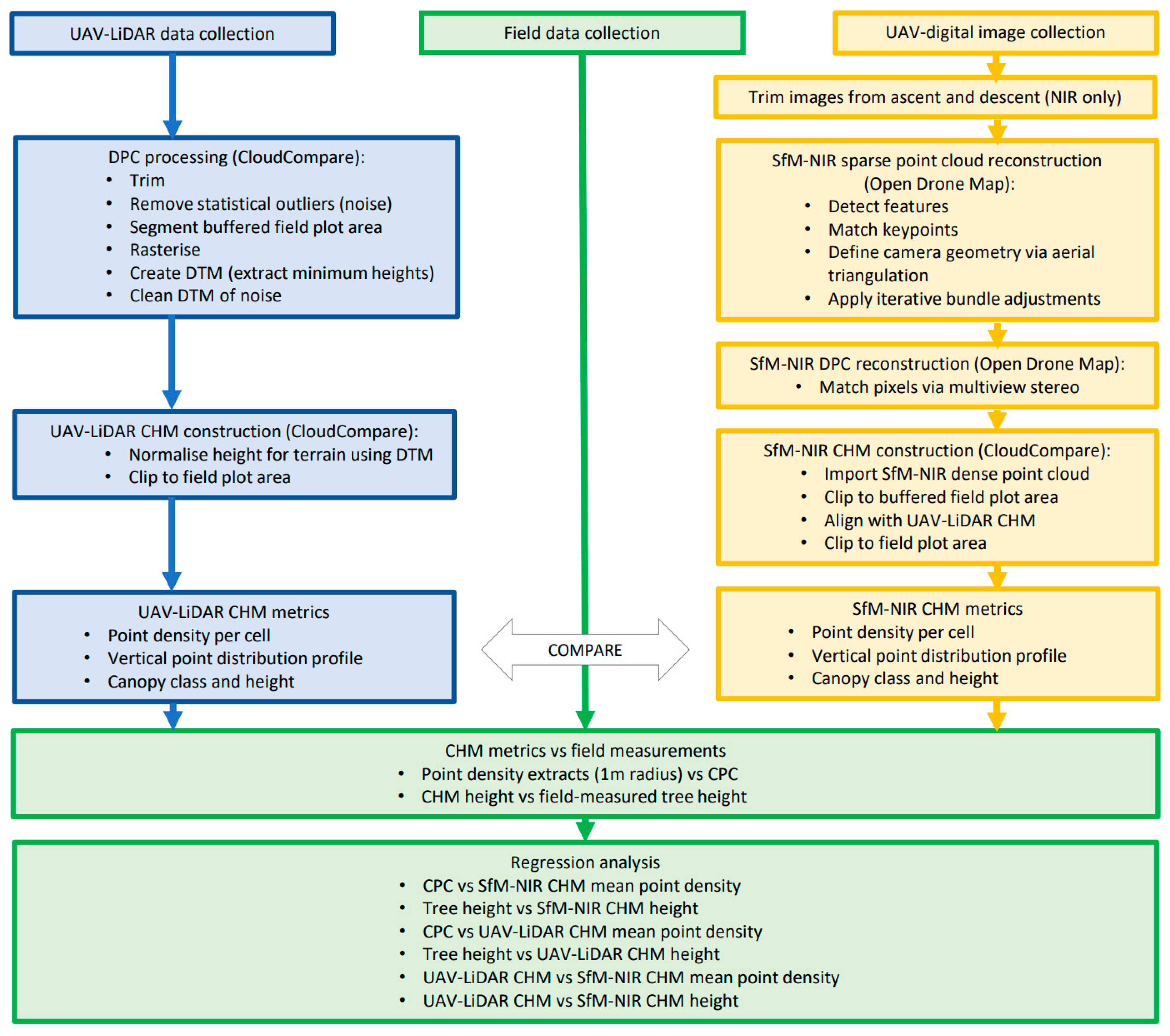

2.2.1. Remote Data

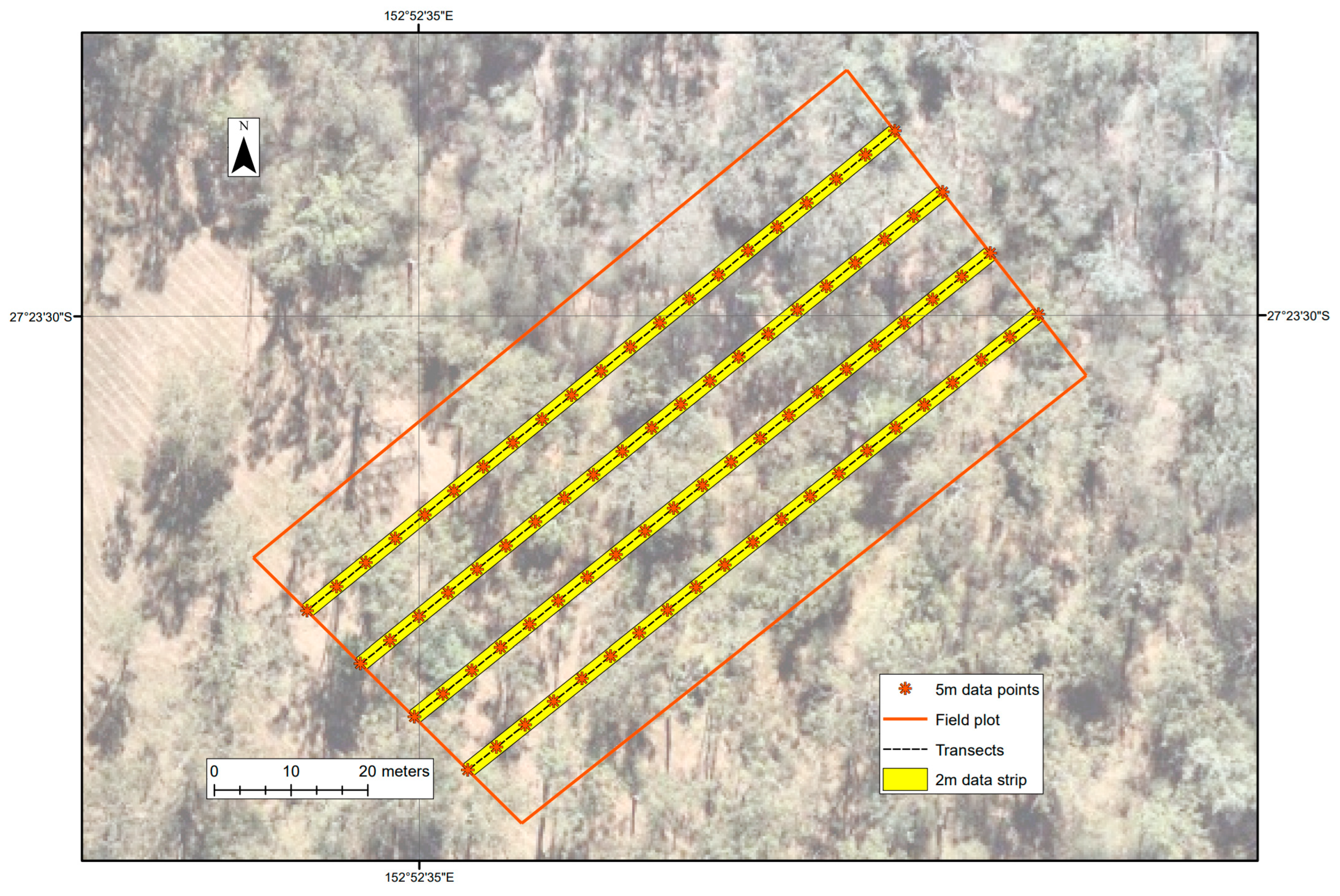

2.2.2. Field Data

2.3. Photogrammetric Pre-Processing of Digital Imagery

2.4. DPC Processing

2.5. Canopy Structure and Analysis

3. Results

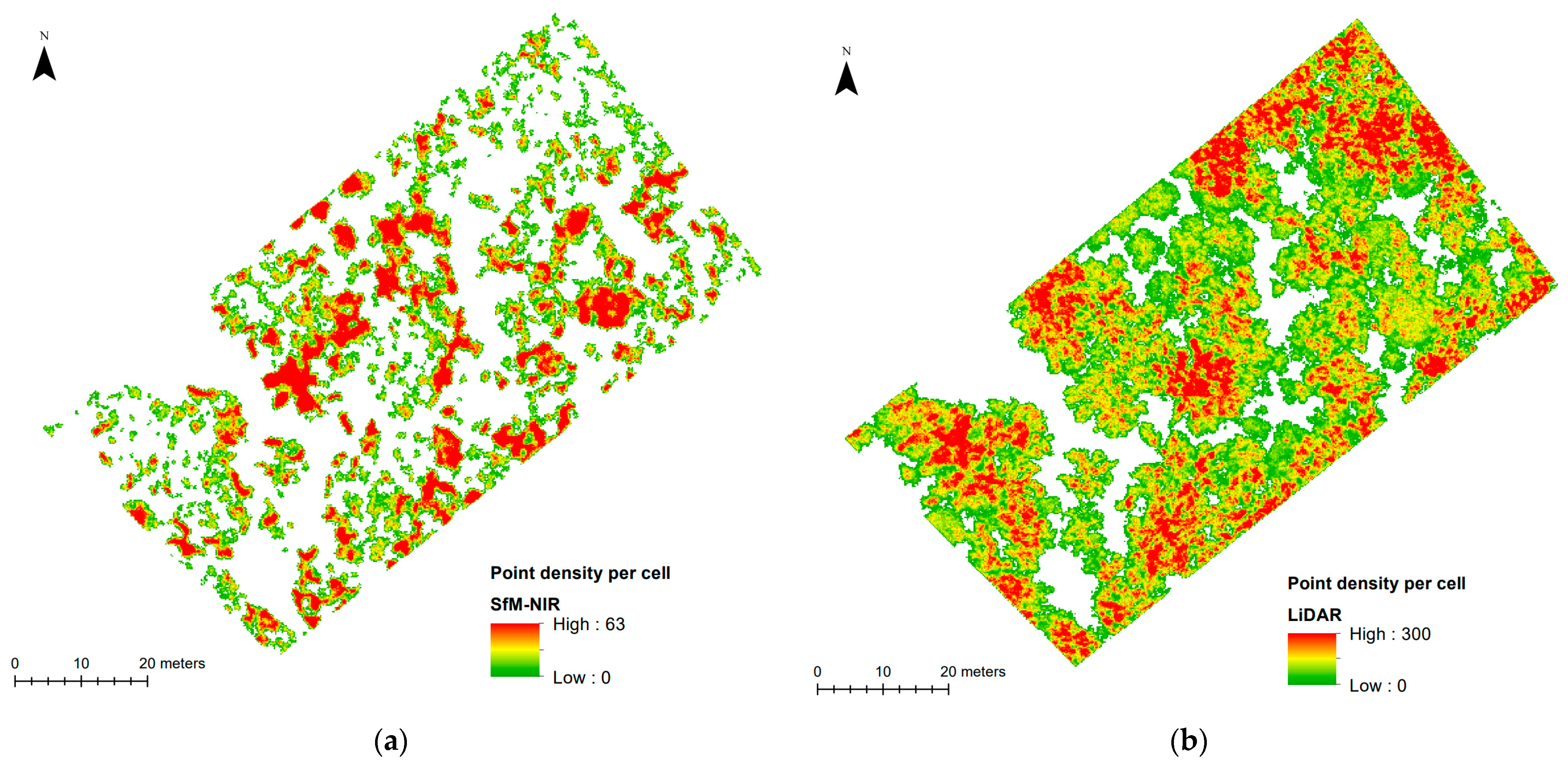

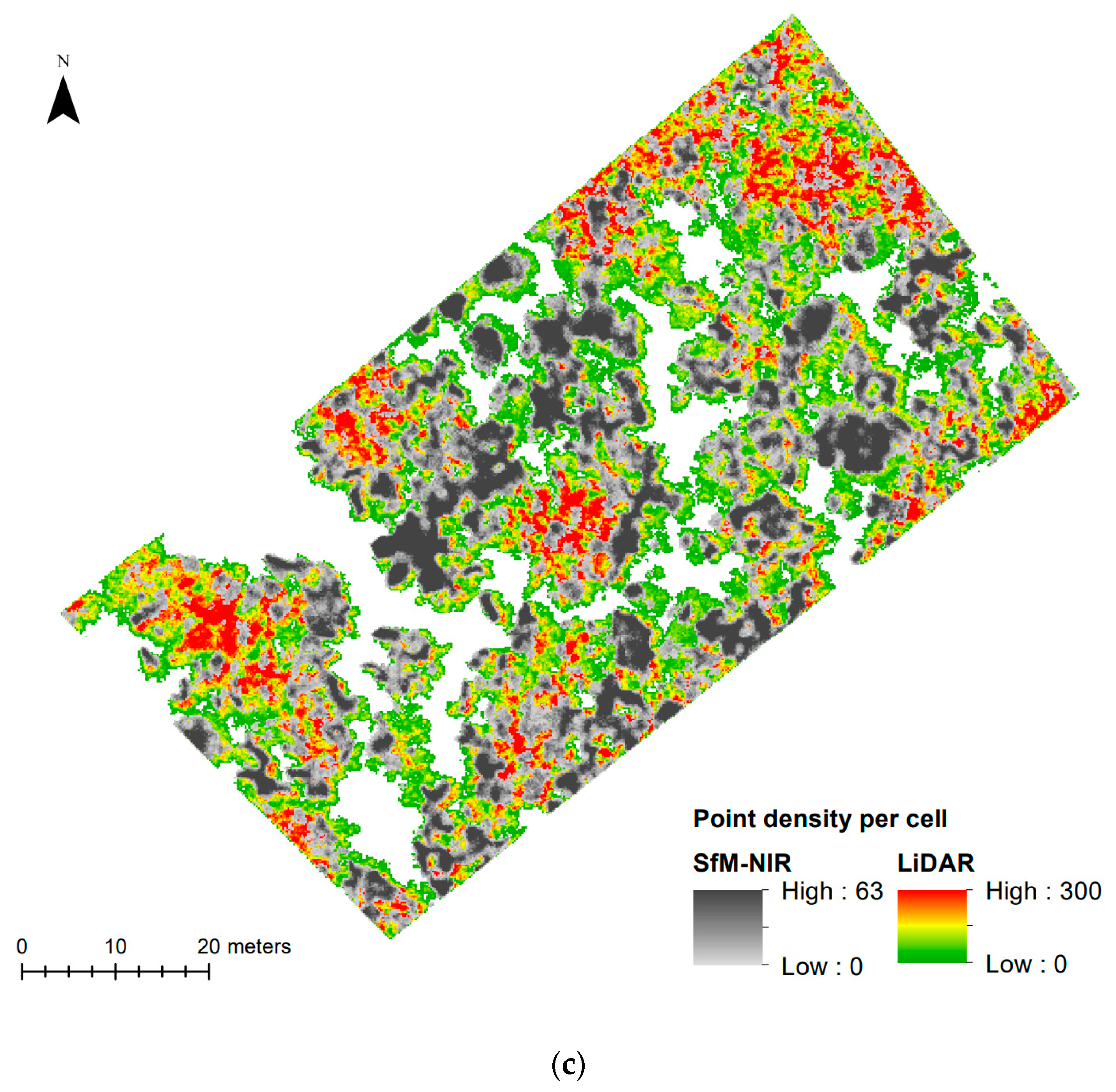

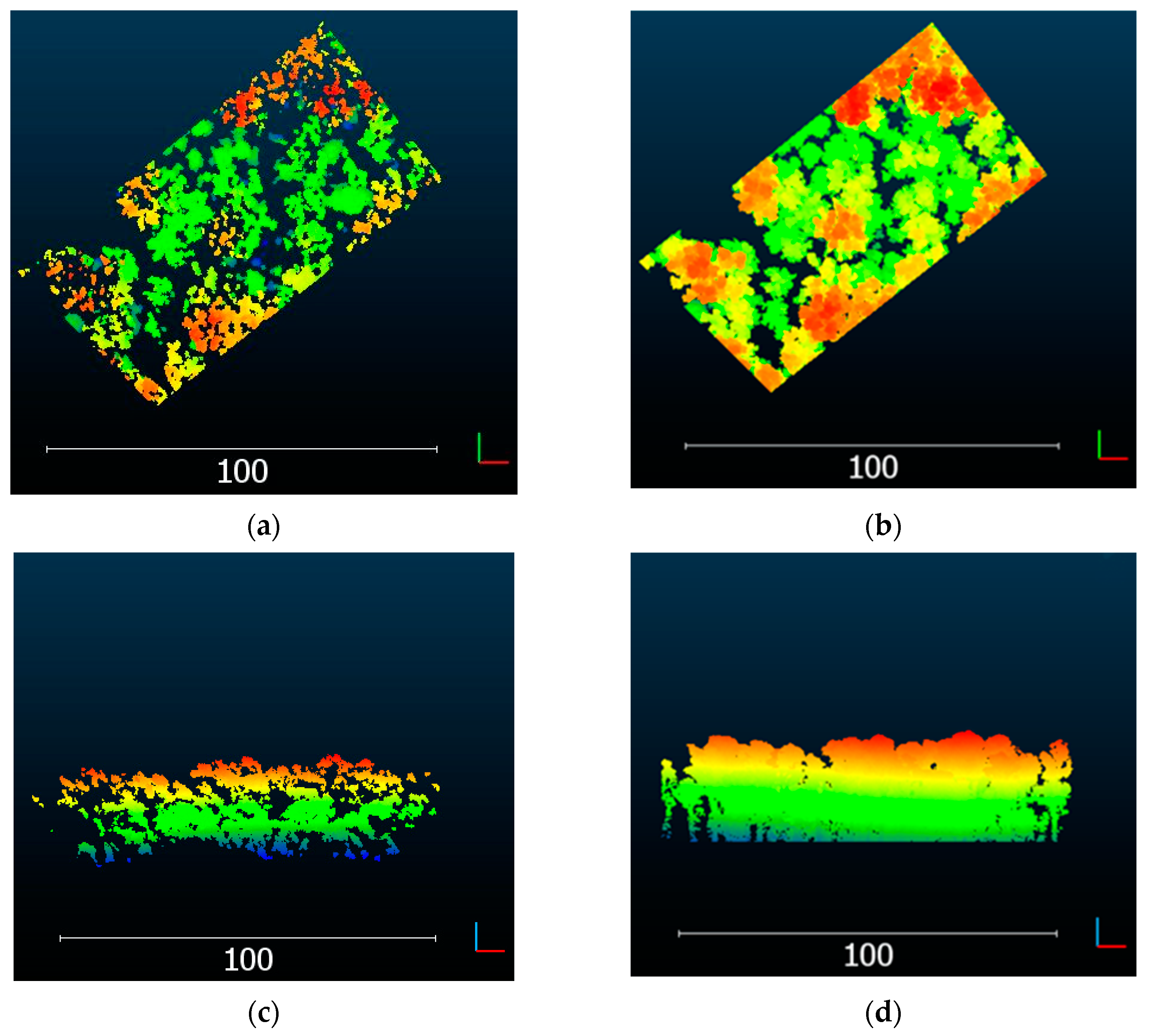

3.1. Comparison of DPC Properties

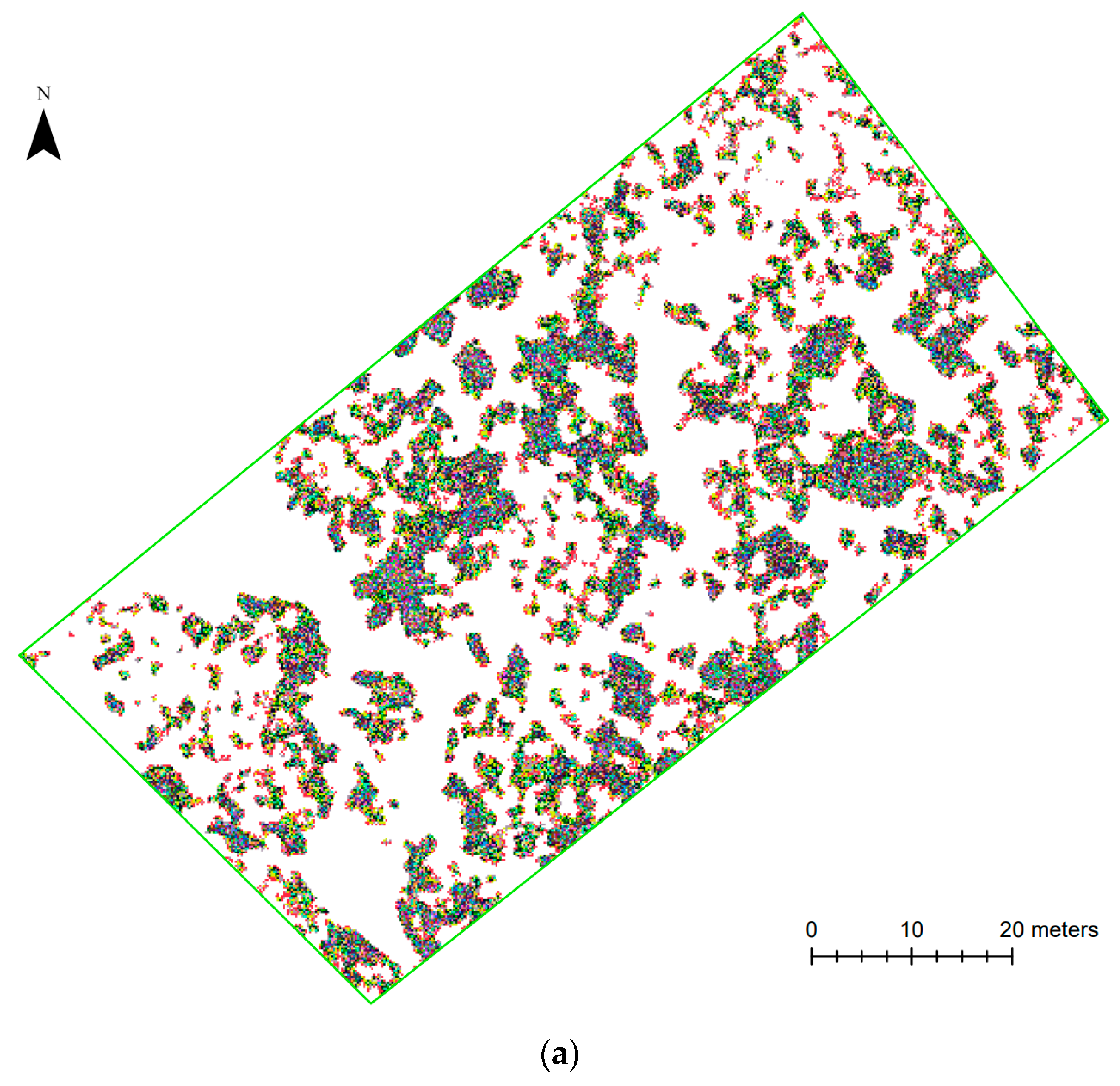

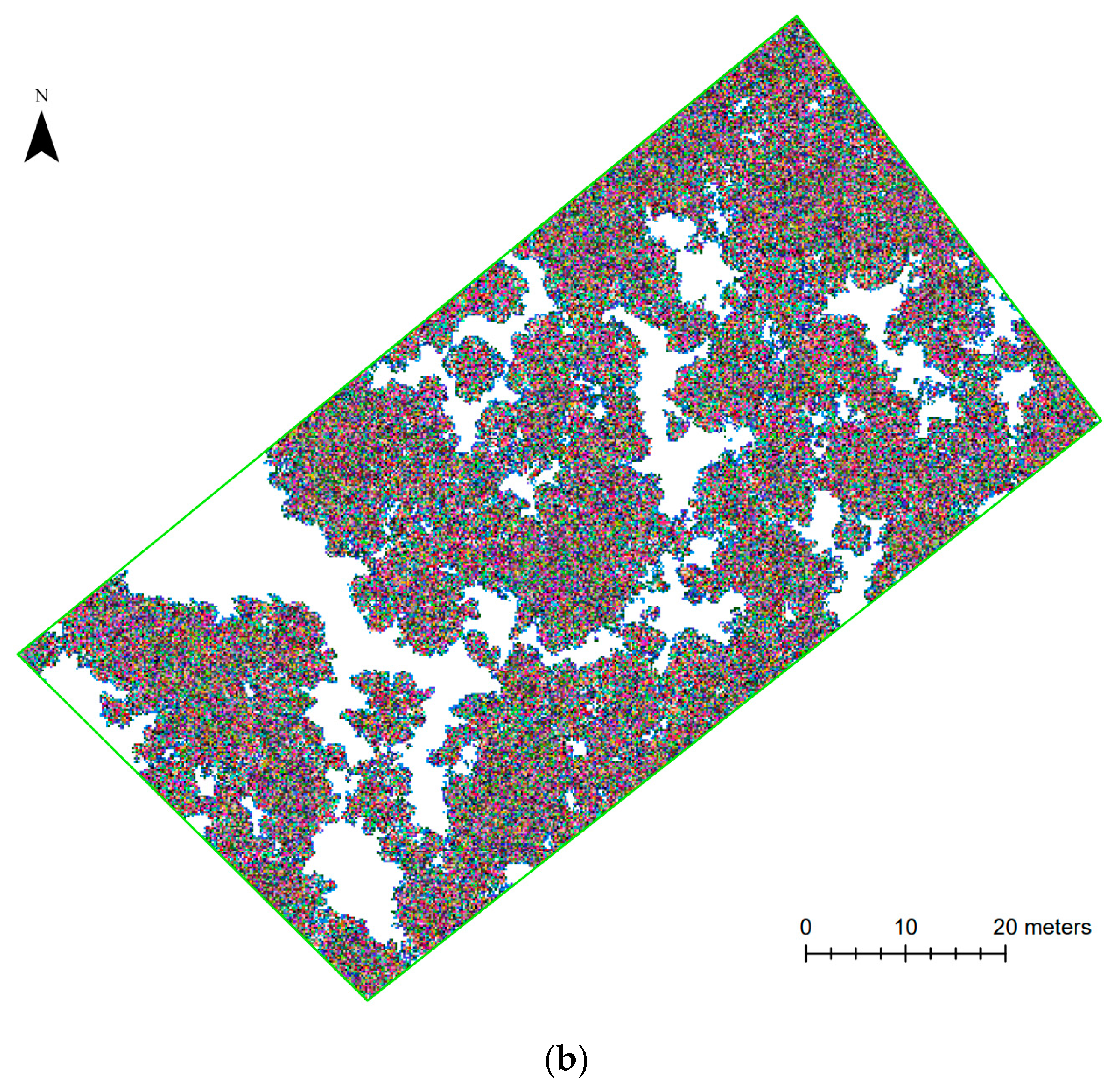

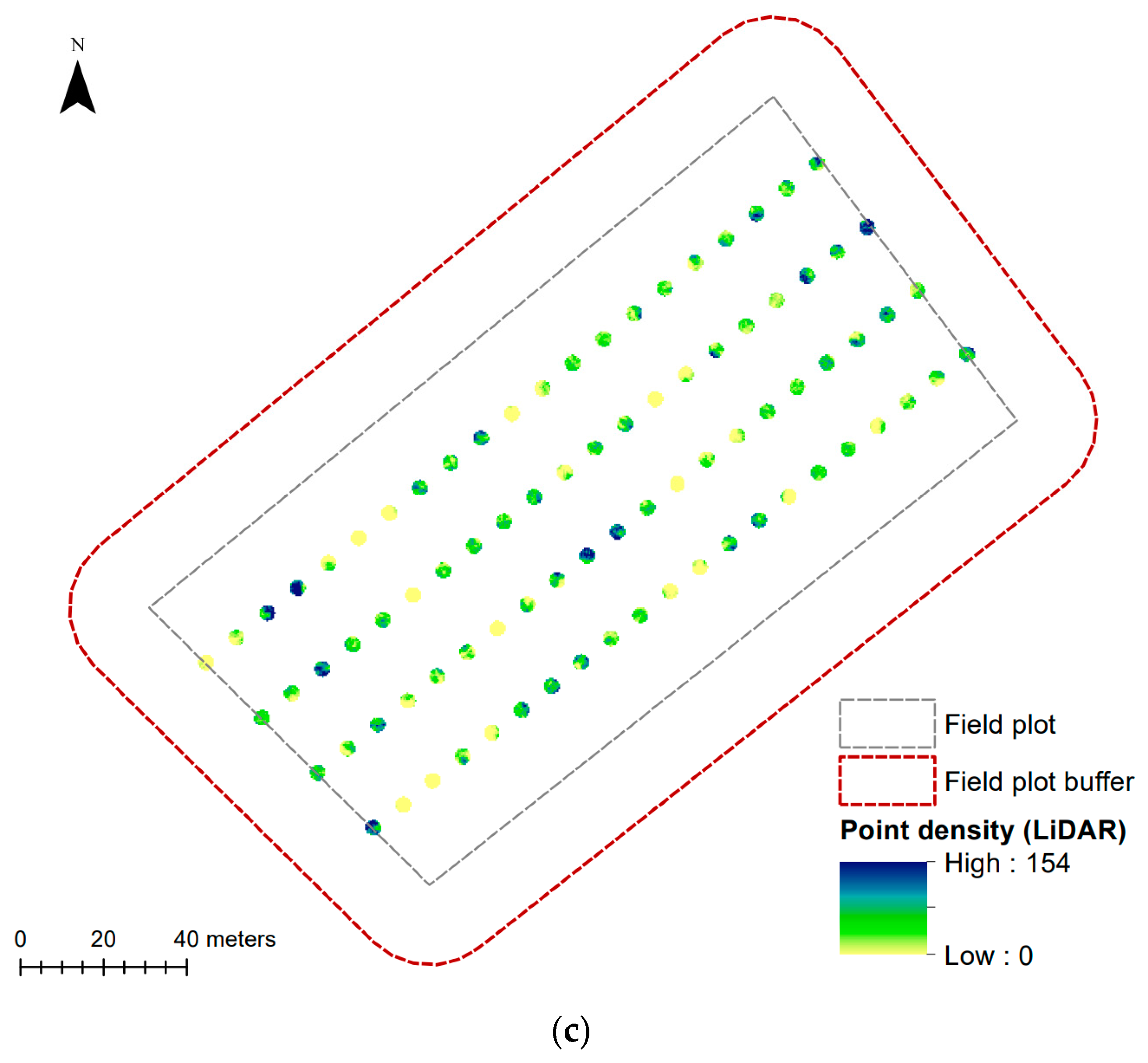

3.2. Comparison of CHM Point Densities

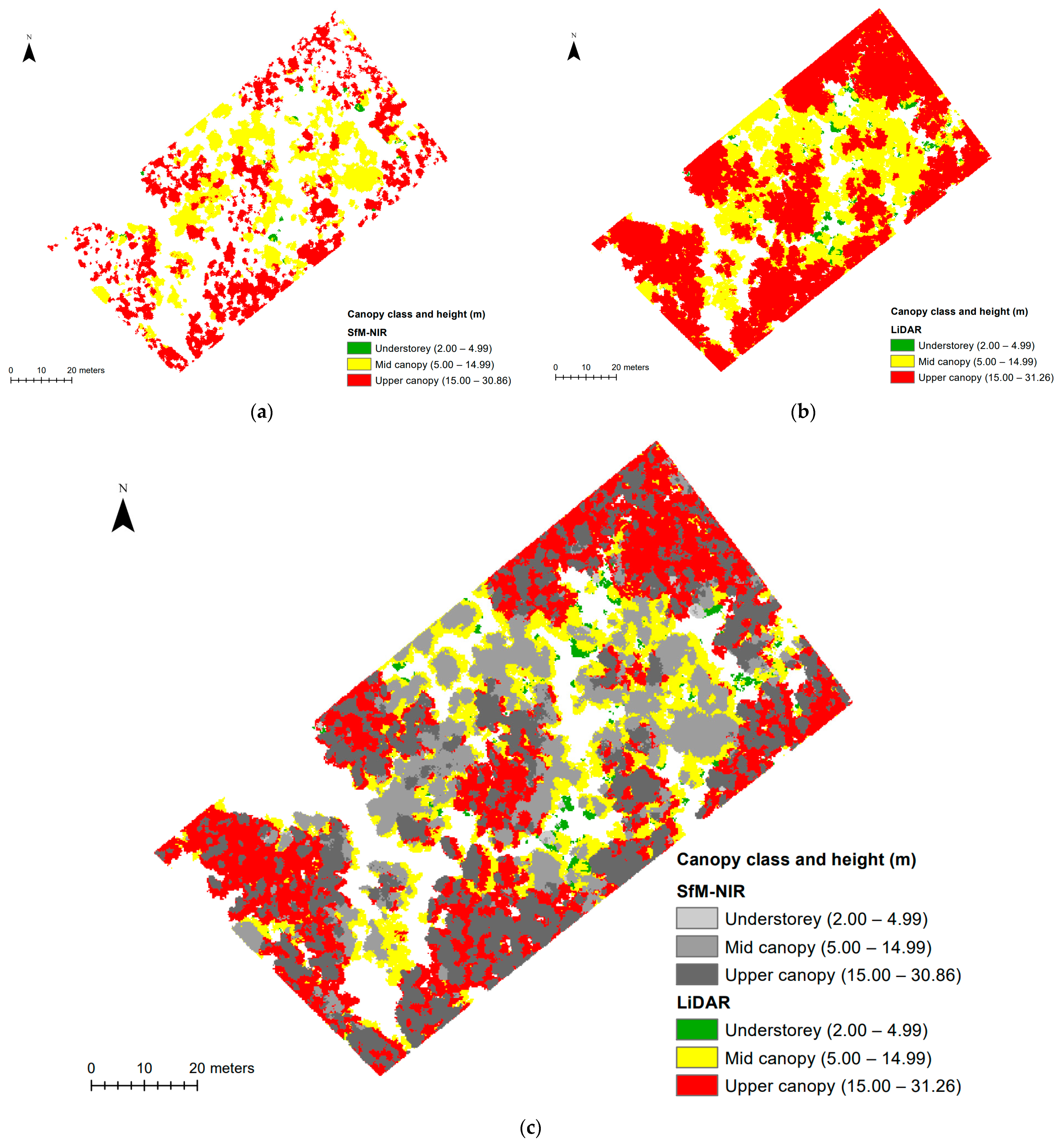

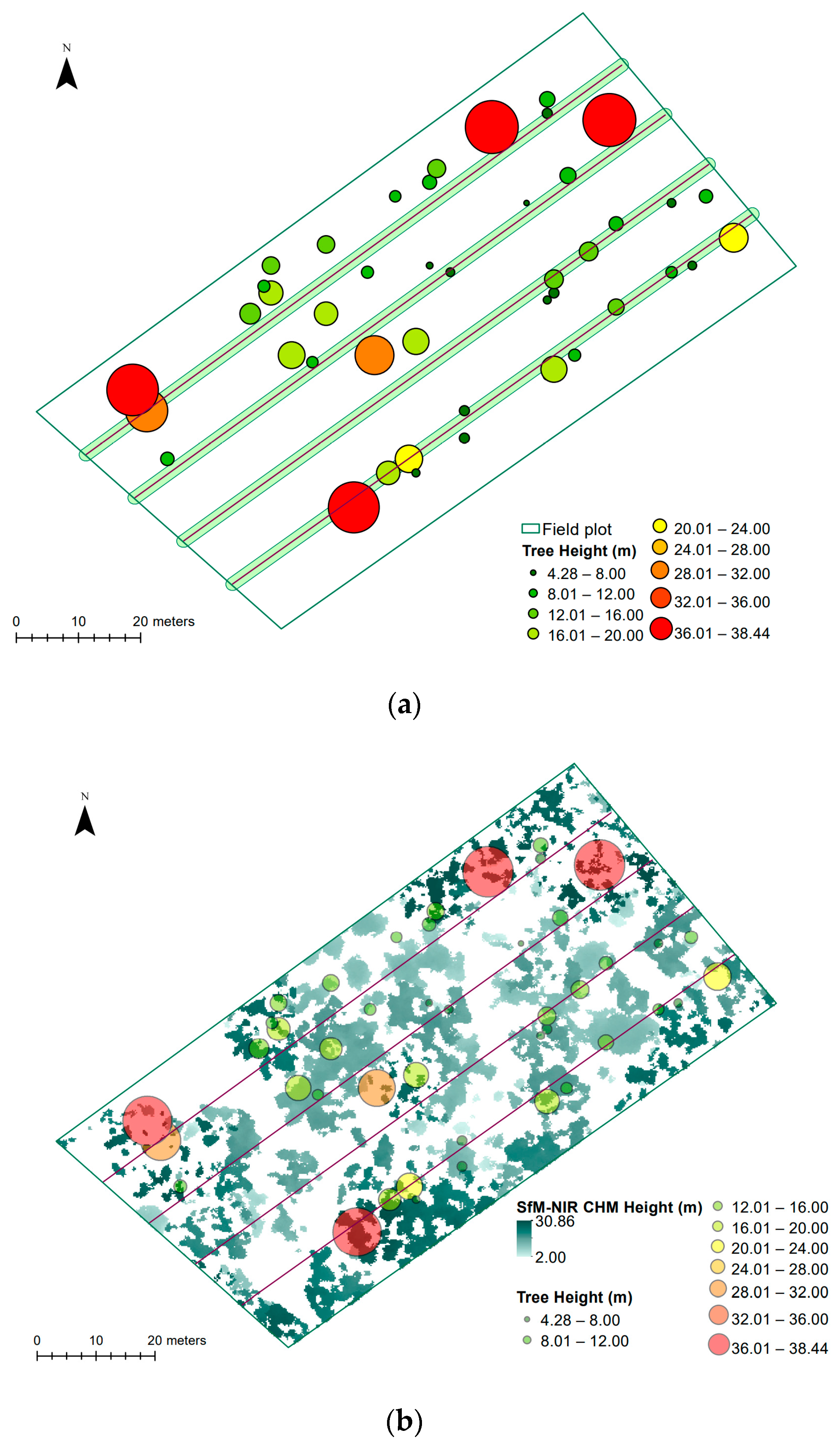

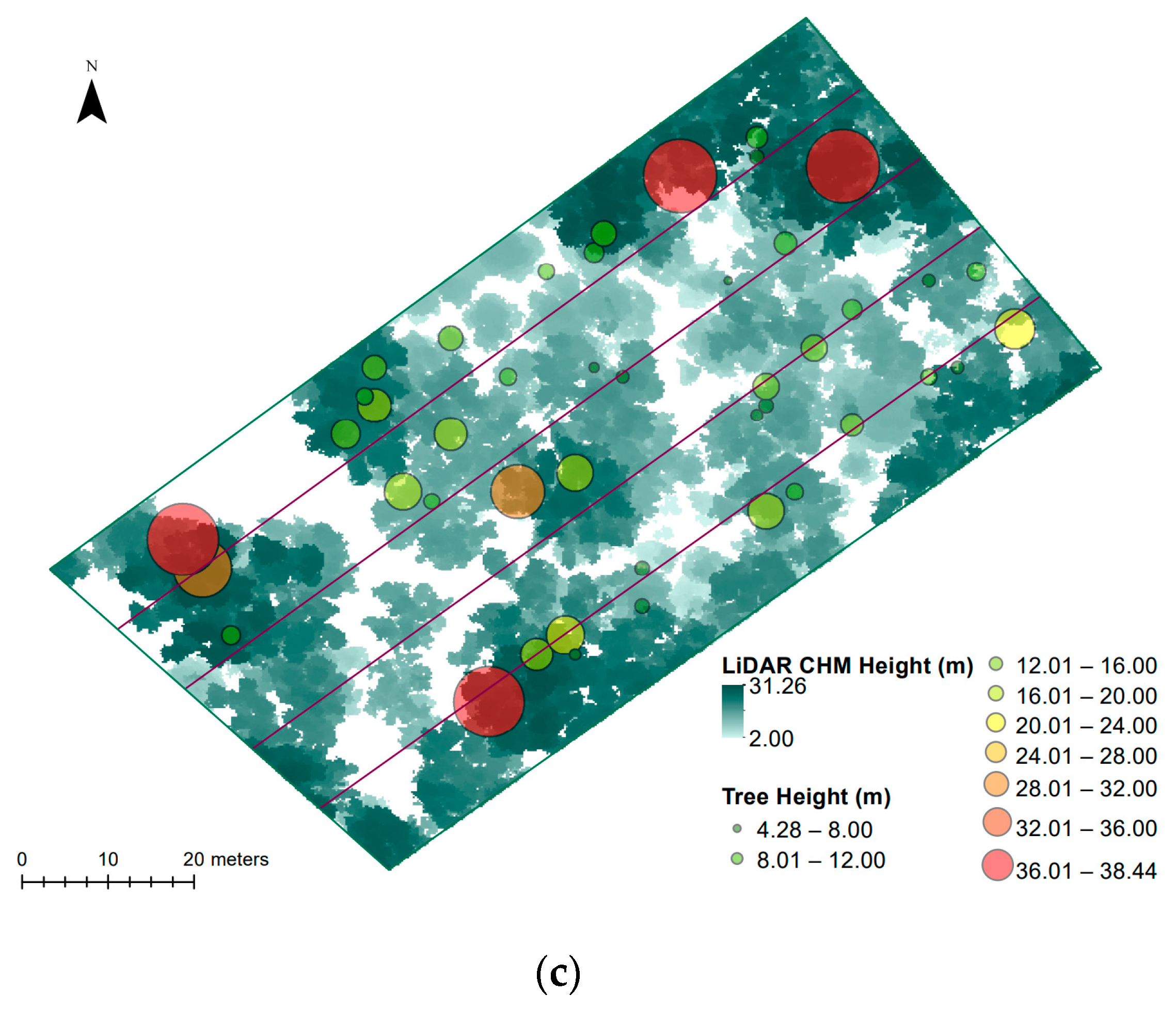

3.3. Comparison of CHM Heights

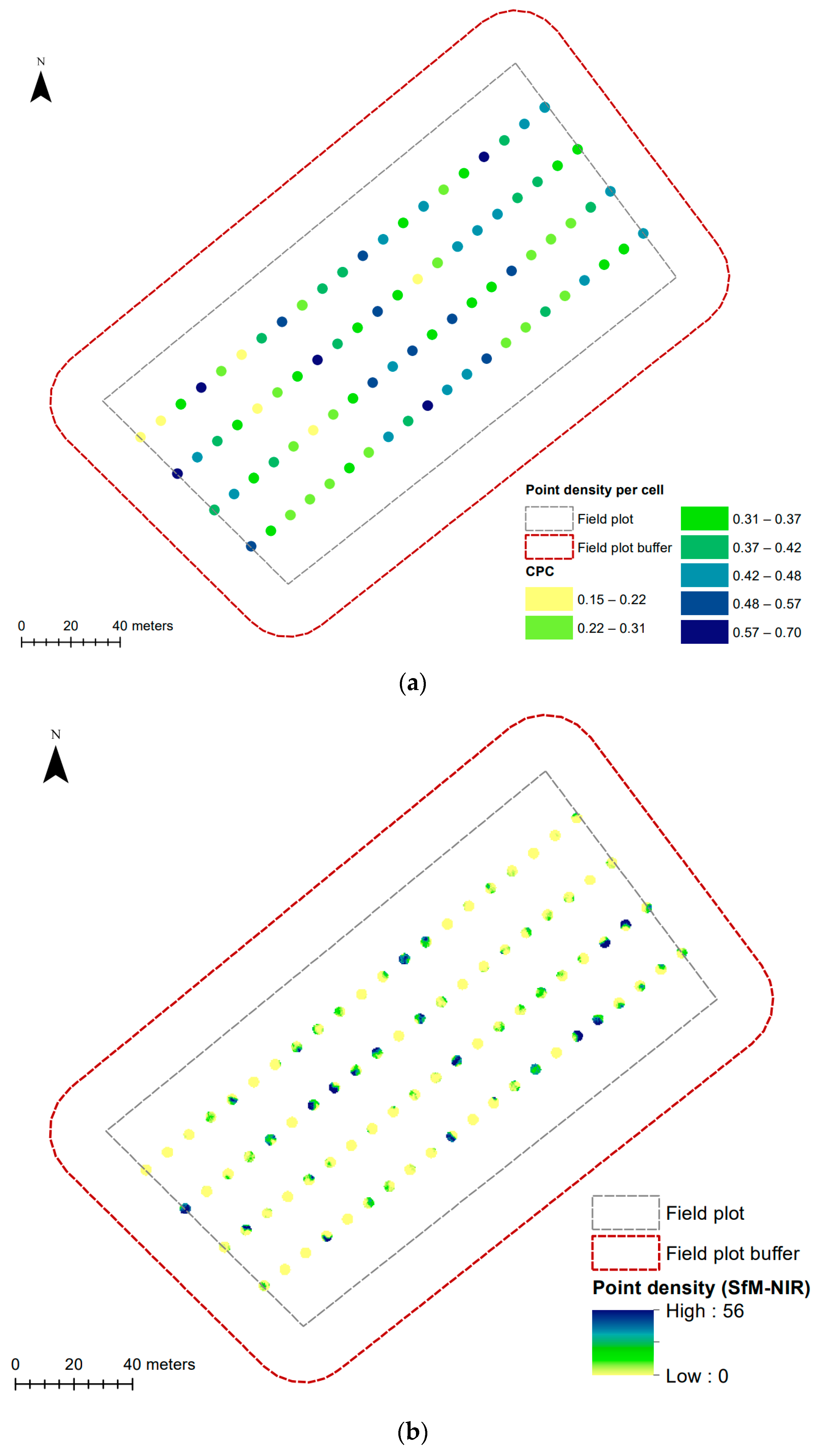

3.4. Comparison with Field Data—Canopy

3.5. Comparison with Field Data—Tree Height

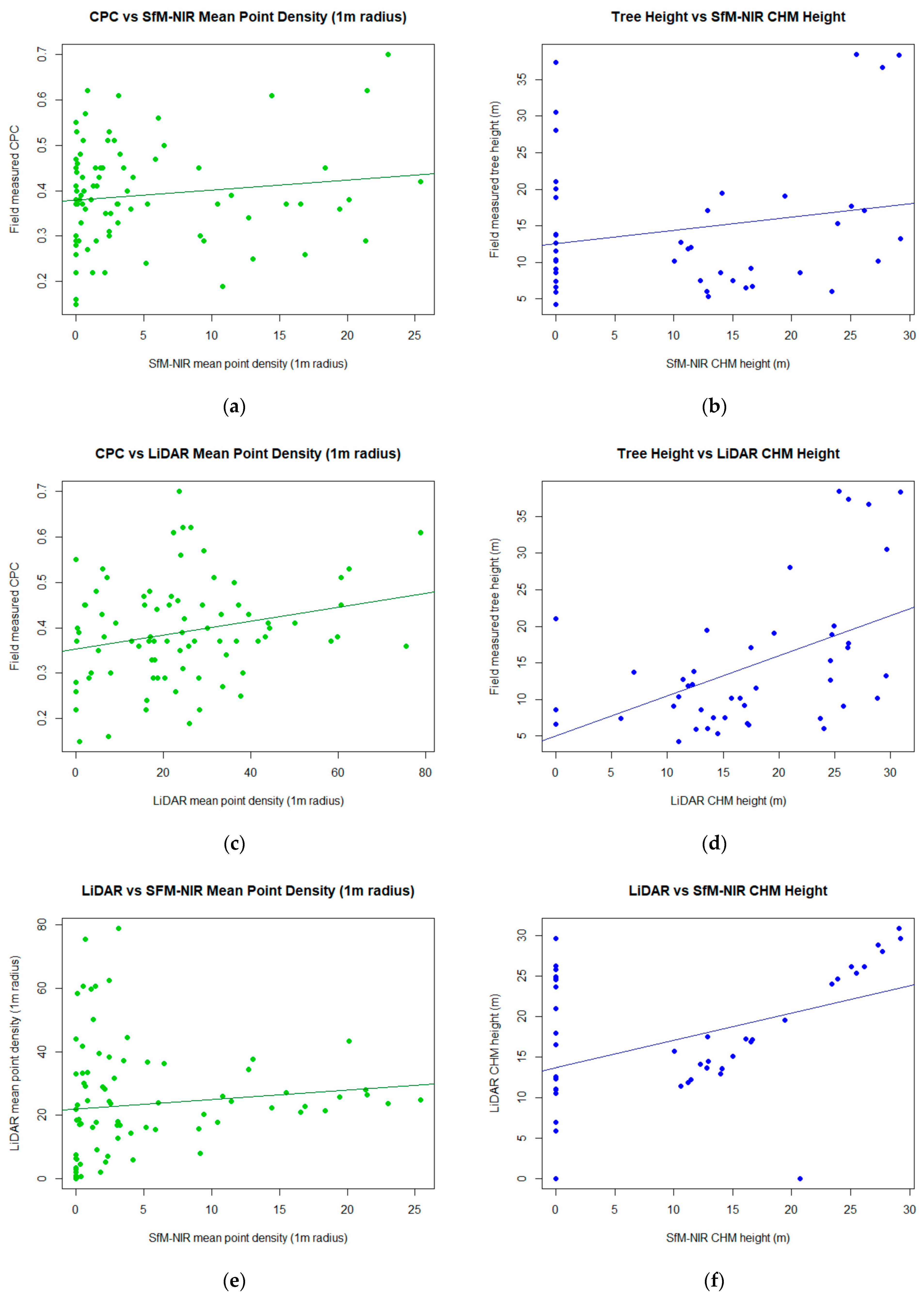

3.6. Regression Analysis

4. Discussion

4.1. Canopy Height Models

4.1.1. Effect of Eucalypt Forest Structure

4.1.2. Overlap and Spatial Resolution

4.1.3. Canopy Penetration

4.1.4. Solar Elevation and Shadow

4.2. CHM Comparison with Field Measurements

4.2.1. Canopy Cover

4.2.2. Canopy Height

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| | Number of Empty Cells | Number of Available Cells | % No Cover | % Cover |
|---|---|---|---|---|
| SfM-NIR CHM | 72,879 | 269,230 | 27.1 | 72.9 |
| LiDAR CHM | 25,274 | 269,775 | 9.4 | 90.6 |
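The cover columns follow directly from the cell counts: % no cover is the share of available CHM grid cells that are empty, and % cover is its complement. The sketch below reproduces the tabulated values. The helper names `count_empty_cells` and `cover_percentages` are hypothetical, and treating NaN as "outside the plot" and zero points as "no cover" is an assumed reading of the table, not the authors' documented procedure.

```python
import numpy as np


def count_empty_cells(cell_counts: np.ndarray) -> tuple[int, int]:
    """Return (empty, available) cells of a gridded CHM point count.

    Assumption: NaN marks cells outside the plot, and a cell holding zero
    points above the height threshold counts as empty (no cover).
    """
    available = ~np.isnan(cell_counts)
    empty = available & (cell_counts == 0)
    return int(empty.sum()), int(available.sum())


def cover_percentages(empty_cells: int, available_cells: int) -> tuple[float, float]:
    """Return (% no cover, % cover); % cover is the complement of % no cover."""
    if available_cells <= 0:
        raise ValueError("available_cells must be positive")
    no_cover = 100.0 * empty_cells / available_cells
    return no_cover, 100.0 - no_cover


# Cell counts copied from the Appendix A table.
for name, empty, available in [("SfM-NIR CHM", 72_879, 269_230),
                               ("LiDAR CHM", 25_274, 269_775)]:
    no_cover, cover = cover_percentages(empty, available)
    print(f"{name}: {no_cover:.1f}% no cover, {cover:.1f}% cover")
```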

Appendix B

| CPC | Sites | % |
|---|---|---|
| 0.15–0.22 | 6 | 7.1 |
| 0.22–0.31 | 17 | 20.2 |
| 0.31–0.37 | 18 | 21.4 |
| 0.37–0.42 | 13 | 15.5 |
| 0.42–0.48 | 16 | 19.0 |
| 0.48–0.57 | 8 | 9.5 |
| 0.57–0.70 | 6 | 7.1 |
| Total | 84 | 100 |
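Each percentage is the class count divided by the 84 field sites. A short check, with the counts copied from the table, also shows why the rounded class percentages add up to 99.8 rather than the 100 given in the Total row: the total refers to the unrounded shares.

```python
# Site counts per CPC class, copied from the Appendix B table.
site_counts = {
    "0.15–0.22": 6,
    "0.22–0.31": 17,
    "0.31–0.37": 18,
    "0.37–0.42": 13,
    "0.42–0.48": 16,
    "0.48–0.57": 8,
    "0.57–0.70": 6,
}

total_sites = sum(site_counts.values())
# Share of all sites falling in each class, in percent.
shares = {cls: 100.0 * n / total_sites for cls, n in site_counts.items()}

for cls, pct in shares.items():
    print(f"{cls}: {site_counts[cls]} sites, {pct:.1f}%")
print(f"Total: {total_sites} sites, {sum(shares.values()):.1f}%")
print(f"Sum of rounded shares: {sum(round(p, 1) for p in shares.values()):.1f}%")
```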

Appendix C

Field Measured CPC/CHM Mean Point Density per 0.2 m Grid Cell (within 1 m Radius)

| | Mean ± 1 SD | p0 | p05 | p10 | p25 | p50 | p75 | p90 | p95 | p100 |
|---|---|---|---|---|---|---|---|---|---|---|
| Field CPC | 0.39 ± 0.11 | 0.15 | 0.22 | 0.26 | 0.30 | 0.38 | 0.45 | 0.53 | 0.60 | 0.70 |
| SfM-NIR CHM mean point density | 4.74 ± 6.61 | 0.00 | 0.00 | 0.00 | 0.18 | 1.89 | 5.90 | 16.23 | 20.05 | 25.41 |
| LiDAR CHM mean point density | 23.37 ± 18.13 | 0.00 | 0.00 | 0.71 | 7.75 | 22.04 | 33.04 | 44.24 | 60.57 | 78.92 |
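The density rows summarise, for each field sample point, the mean number of CHM points per 0.2 m grid cell inside a 1 m radius. The sketch below shows one way to compute that quantity and the mean, SD and percentile summary used in the table. The function names are hypothetical, and the grid alignment, the cell-centre inclusion rule, counting empty cells as zero, the sample SD (`ddof=1`) and NumPy's default linear percentile interpolation are all assumptions rather than the authors' stated settings.

```python
import numpy as np

CELL_SIZE = 0.2  # grid cell size (m), from the table caption
RADIUS = 1.0     # neighbourhood radius (m), from the table caption


def mean_cell_density(xy: np.ndarray, centre: tuple[float, float],
                      cell: float = CELL_SIZE, radius: float = RADIUS) -> float:
    """Mean number of CHM points per grid cell around one field sample point.

    Assumptions (hypothetical): the grid is aligned to `centre`, a cell is
    included when its centre lies within `radius`, and empty cells count as 0.
    """
    cx, cy = centre
    n = int(np.ceil(radius / cell))              # cells from centre to edge
    ix = np.floor((xy[:, 0] - cx) / cell).astype(int)
    iy = np.floor((xy[:, 1] - cy) / cell).astype(int)
    keep = (ix >= -n) & (ix < n) & (iy >= -n) & (iy < n)

    counts = np.zeros((2 * n, 2 * n), dtype=int)
    np.add.at(counts, (ix[keep] + n, iy[keep] + n), 1)  # points per cell

    offsets = (np.arange(-n, n) + 0.5) * cell    # cell-centre offsets (m)
    gx, gy = np.meshgrid(offsets, offsets, indexing="ij")
    inside = np.hypot(gx, gy) <= radius
    return float(counts[inside].mean())


def summarise(values) -> dict[str, float]:
    """Mean, sample SD and the percentiles reported in the table."""
    v = np.asarray(values, dtype=float)
    out = {"mean": float(v.mean()), "sd": float(v.std(ddof=1))}
    for p in (0, 5, 10, 25, 50, 75, 90, 95, 100):
        key = "p0" if p == 0 else f"p{p:02d}"
        out[key] = float(np.percentile(v, p))  # linear interpolation (NumPy default)
    return out
```

With a 0.2 m grid aligned to the sample point, 80 cells have centres within 1 m, so eight points in a single cell give a mean density of 0.1. For small samples, the choice of percentile interpolation noticeably changes the low and high percentiles.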

Appendix D

Field Measured Tree Height (m)/CHM Height at Same Location (m)

| | Mean ± 1 SD | p0 | p05 | p10 | p25 | p50 | p75 | p90 | p95 | p100 |
|---|---|---|---|---|---|---|---|---|---|---|
| Field tree height | 14.41 ± 9.35 | 4.28 | 5.95 | 6.21 | 7.55 | 11.55 | 17.65 | 29.55 | 37.19 | 38.44 |
| SfM-NIR CHM height | 18.57 ± 6.57 | 10.04 | 10.71 | 11.31 | 12.88 | 16.56 | 25.08 | 27.55 | 28.84 | 29.24 |
| LiDAR CHM height | 18.86 ± 6.92 | 5.84 | 10.53 | 11.04 | 12.99 | 17.29 | 24.98 | 28.06 | 29.64 | 30.93 |
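Both CHMs report a higher mean height than the field measurements: the differences of the reported means are 4.16 m (SfM-NIR) and 4.45 m (LiDAR). Where the individual height pairs are available, a paired comparison such as bias, RMSE and Pearson r summarises agreement per tree. The function below is a hypothetical sketch of those common metrics, not the regression procedure used in the paper.

```python
import numpy as np


def paired_height_stats(field_h, chm_h) -> dict[str, float]:
    """Bias, RMSE and Pearson r of CHM height against field height.

    Each pair is assumed to refer to the same tree location; a positive
    bias means the CHM is taller than the field measurement.
    """
    f = np.asarray(field_h, dtype=float)
    c = np.asarray(chm_h, dtype=float)
    if f.shape != c.shape or f.size < 2:
        raise ValueError("need two equal-length arrays with at least 2 pairs")
    diff = c - f
    return {
        "bias": float(diff.mean()),
        "rmse": float(np.sqrt(np.mean(diff ** 2))),
        "r": float(np.corrcoef(f, c)[0, 1]),
    }


# Difference of the reported means in the Appendix D table (m).
field_mean = 14.41
for name, chm_mean in [("SfM-NIR", 18.57), ("LiDAR", 18.86)]:
    print(f"{name}: CHM mean minus field mean = {chm_mean - field_mean:+.2f} m")
```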


| | SfM-NIR Number of Points | SfM-NIR % Removed from Previous Cloud | LiDAR Number of Points | LiDAR % Removed from Previous Cloud |
|---|---|---|---|---|
| Raw data | 29,910,131 | - | 112,090,335 | - |
| Trimmed and cleaned | - | - | 85,110,874 | 24.07 |
| Clipped by buffered field plot | 1,158,749 | 96.13 | 7,340,689 | 91.38 |
| Points below 2 m AGL removed | 801,306 | 30.85 | 4,918,675 | 32.99 |
| Clipped by field plot (final CHM) | 468,491 | 41.53 | 2,963,676 | 39.75 |
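Each "% Removed from Previous Cloud" value is 100 × (previous count − current count) / previous count. Because the SfM-NIR cloud has no trimming step, its clipping percentage is relative to the raw cloud. The sketch below reproduces the tabulated percentages and illustrates the 2 m above-ground-level (AGL) height filter. The helper names are hypothetical, and whether the 2 m cut-off is inclusive is an assumption.

```python
import numpy as np


def percent_removed(before: int, after: int) -> float:
    """Share of points dropped between two successive clouds (%)."""
    return 100.0 * (before - after) / before


def drop_below_height(heights_agl: np.ndarray, threshold: float = 2.0) -> np.ndarray:
    """Keep points at or above `threshold` metres above ground level.

    Assumption: heights are already normalised to AGL, and the cut-off is
    treated as inclusive (not stated in the table).
    """
    return heights_agl[heights_agl >= threshold]


# Point counts copied from the table; SfM-NIR has no separate trimming step.
steps = {
    "SfM-NIR": [29_910_131, 1_158_749, 801_306, 468_491],
    "LiDAR": [112_090_335, 85_110_874, 7_340_689, 4_918_675, 2_963_676],
}
for sensor, counts in steps.items():
    # Compare each cloud with the one before it.
    removed = [percent_removed(a, b) for a, b in zip(counts, counts[1:])]
    print(sensor, [f"{r:.2f}" for r in removed])
```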

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Winsen, M.; Hamilton, G. A Comparison of UAV-Derived Dense Point Clouds Using LiDAR and NIR Photogrammetry in an Australian Eucalypt Forest. Remote Sens. 2023, 15, 1694. https://doi.org/10.3390/rs15061694
