A Fast Hyperspectral Tracking Method via Channel Selection

Abstract

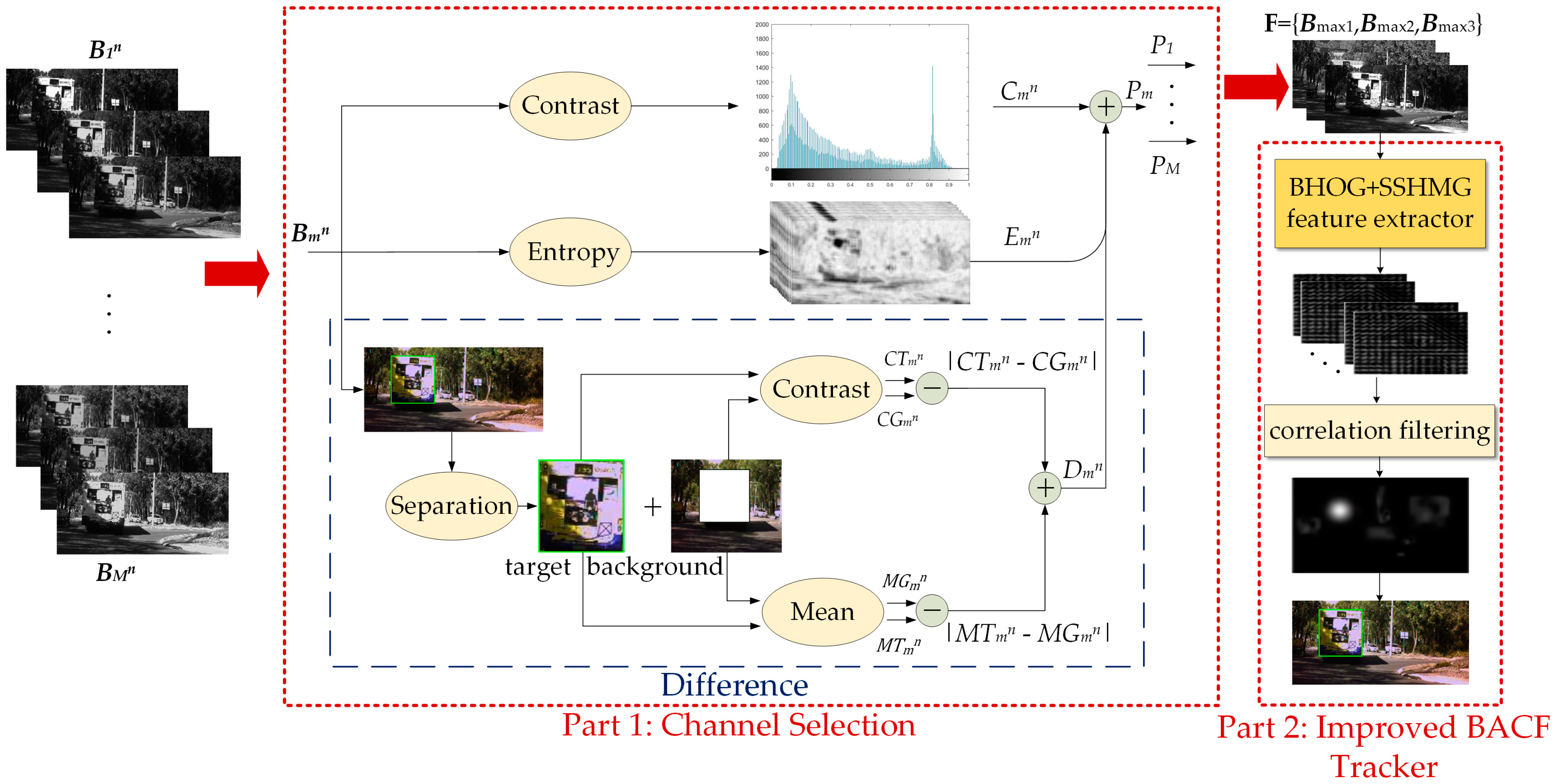

1. Introduction

- (1) We design a channel selection strategy for hyperspectral video and feed the selected channels into a BACF tracking framework, which reduces the volume of hyperspectral input the tracker must process.

- (2) We combine band-by-band HOG (BHOG) and SSHMG features within BACF to capture both local and global spectral information, which yields a higher-quality feature image and improves tracking accuracy.

- (3) Our method achieves the highest tracking accuracy among the compared trackers and the fastest tracking speed among the compared hyperspectral trackers on the only hyperspectral video object tracking benchmark dataset currently available [33].

2. Proposed Method

2.1. Channel Selection

2.1.1. Contrast Module

2.1.2. Entropy Module

2.1.3. Difference Module

2.1.4. The Candidate Channels Selection
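
The subsections above name three per-channel criteria (contrast, entropy, difference) that are combined to pick candidate channels. The following is a minimal sketch of that idea, not the authors' formulation. It assumes that contrast is the standard deviation of a band's pixel values, that entropy is the Shannon entropy of its intensity histogram, that difference is the gap between mean target and mean background intensity, and that the three scores are min-max normalized and summed. The function names, the score definitions, and the parameter `k` are all hypothetical.

```python
import math
from collections import Counter
from statistics import mean, pstdev


def contrast(band):
    """Assumed definition: population standard deviation of pixel values."""
    return pstdev(band)


def entropy(band):
    """Shannon entropy (in bits) of the band's intensity histogram."""
    counts = Counter(band)
    n = len(band)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def difference(band, target_idx):
    """Assumed definition: |mean(target pixels) - mean(background pixels)|."""
    target_set = set(target_idx)
    target = [v for i, v in enumerate(band) if i in target_set]
    background = [v for i, v in enumerate(band) if i not in target_set]
    return abs(mean(target) - mean(background))


def normalize(scores):
    """Min-max normalize to [0, 1]; a constant input maps to all zeros."""
    lo, hi = min(scores), max(scores)
    if hi == lo:
        return [0.0] * len(scores)
    return [(s - lo) / (hi - lo) for s in scores]


def select_channels(bands, target_idx, k):
    """Return the indices of the k bands with the highest combined score."""
    c = normalize([contrast(b) for b in bands])
    e = normalize([entropy(b) for b in bands])
    d = normalize([difference(b, target_idx) for b in bands])
    total = [ci + ei + di for ci, ei, di in zip(c, e, d)]
    ranked = sorted(range(len(bands)), key=lambda i: total[i], reverse=True)
    return sorted(ranked[:k])


# Toy input: 4 bands of 6 flattened pixels; pixels 0 and 1 are the target.
bands = [
    [10, 10, 10, 10, 10, 10],     # flat band: no contrast, no entropy
    [200, 190, 20, 30, 25, 15],   # target stands out from the background
    [50, 60, 55, 52, 58, 61],     # mild variation
    [0, 255, 0, 255, 0, 255],     # high contrast, target not separable
]
selected = select_channels(bands, target_idx=[0, 1], k=2)
print(selected)
```

On this toy input the sketch keeps bands 1 and 3. An unweighted sum lets a high-contrast band win even when the target is not separable in it, so a real implementation would likely weight or gate the three scores differently.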

2.2. BACF Tracker

2.2.1. Classical BACF Tracker

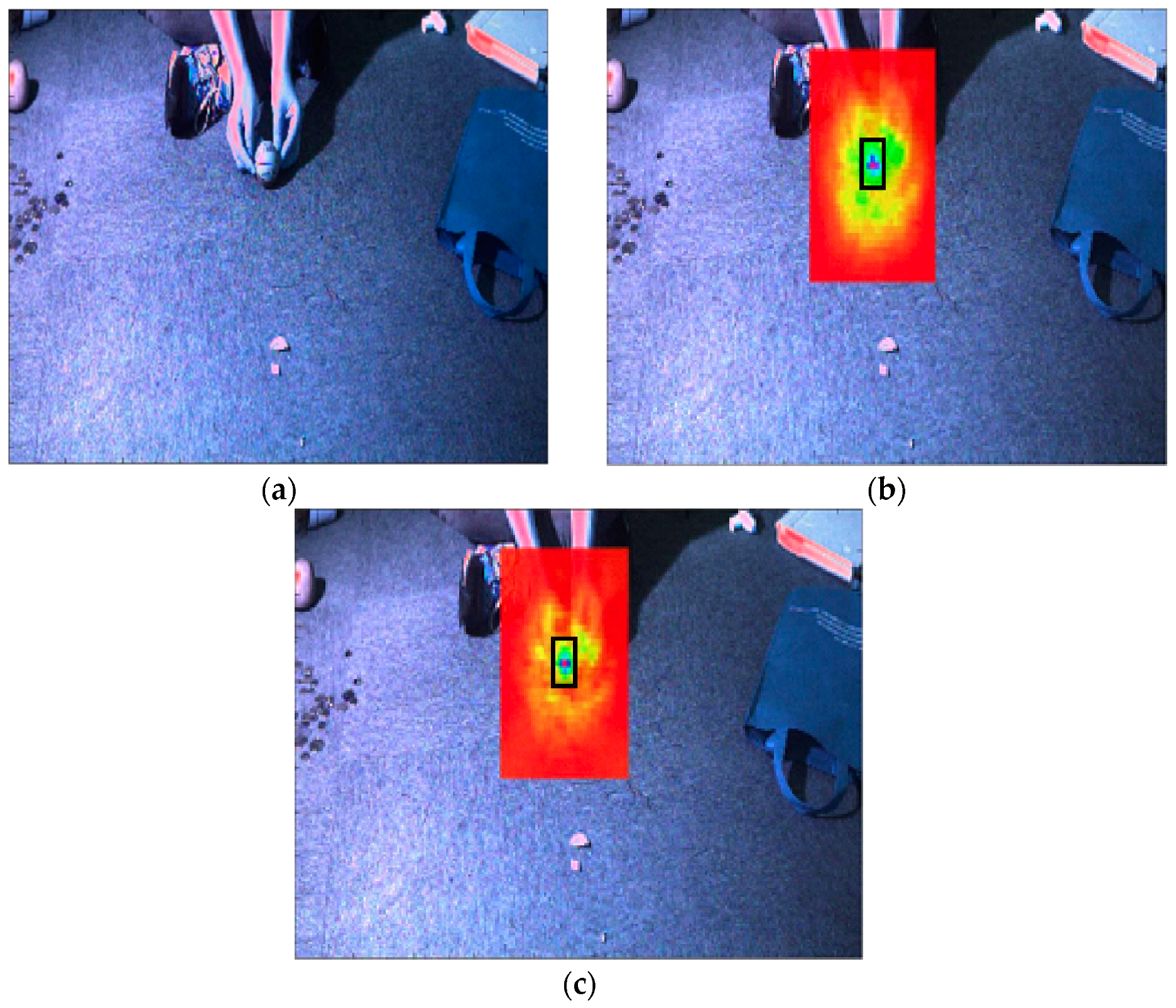

2.2.2. Improved BACF Tracker

3. Experiment and Results

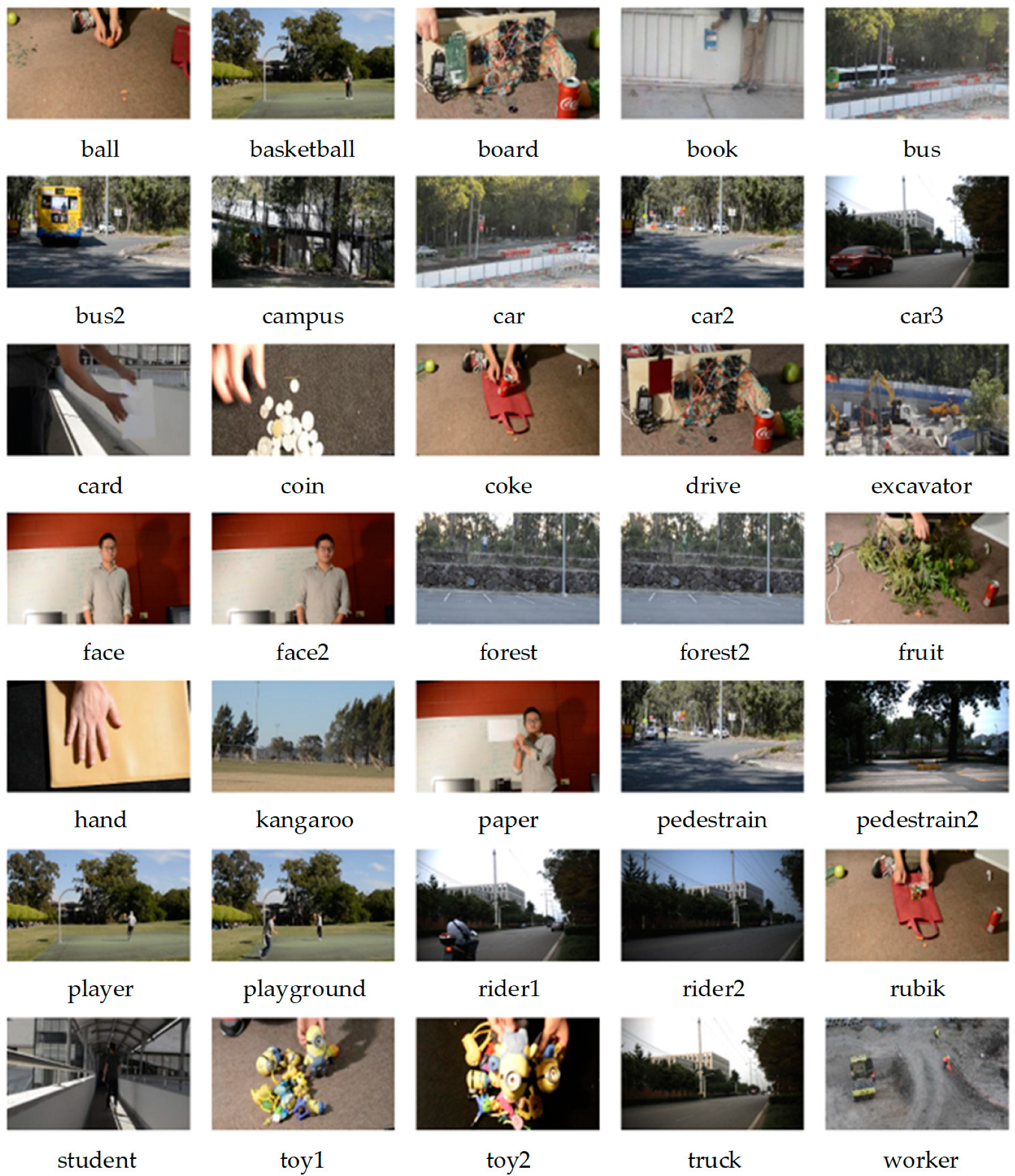

3.1. Dataset

3.2. Experiment Setting

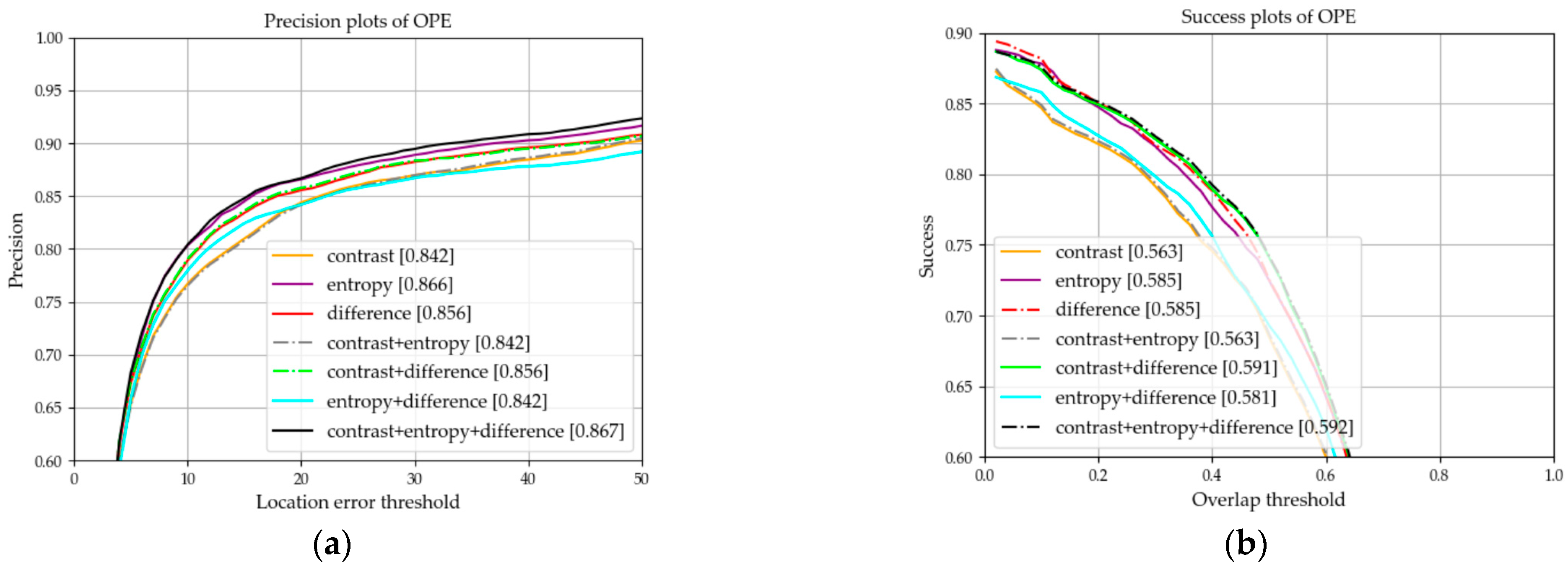

3.3. Comparison of Different Channel Selection Strategies

3.4. Comparison of Feature Extractors

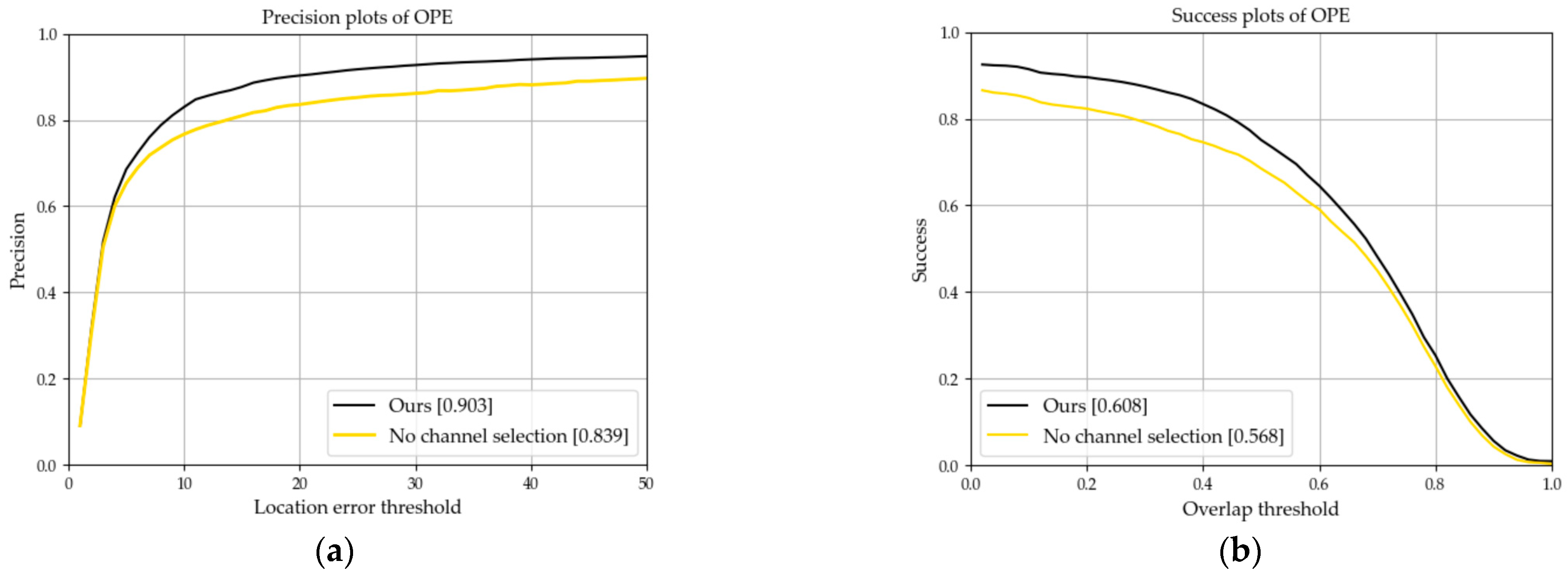

3.5. With and Without Channel Selection Strategy

3.6. Quantitative Comparison with RGB Object Trackers

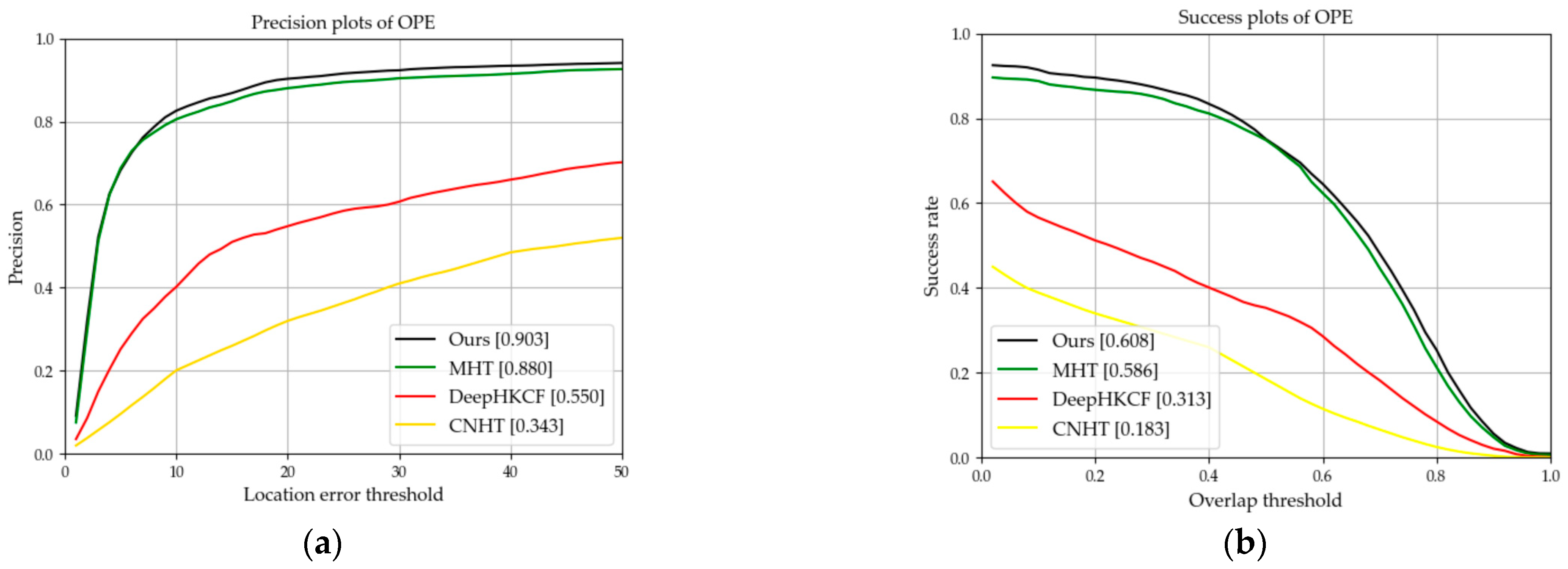

3.7. Hyperspectral Trackers Comparison

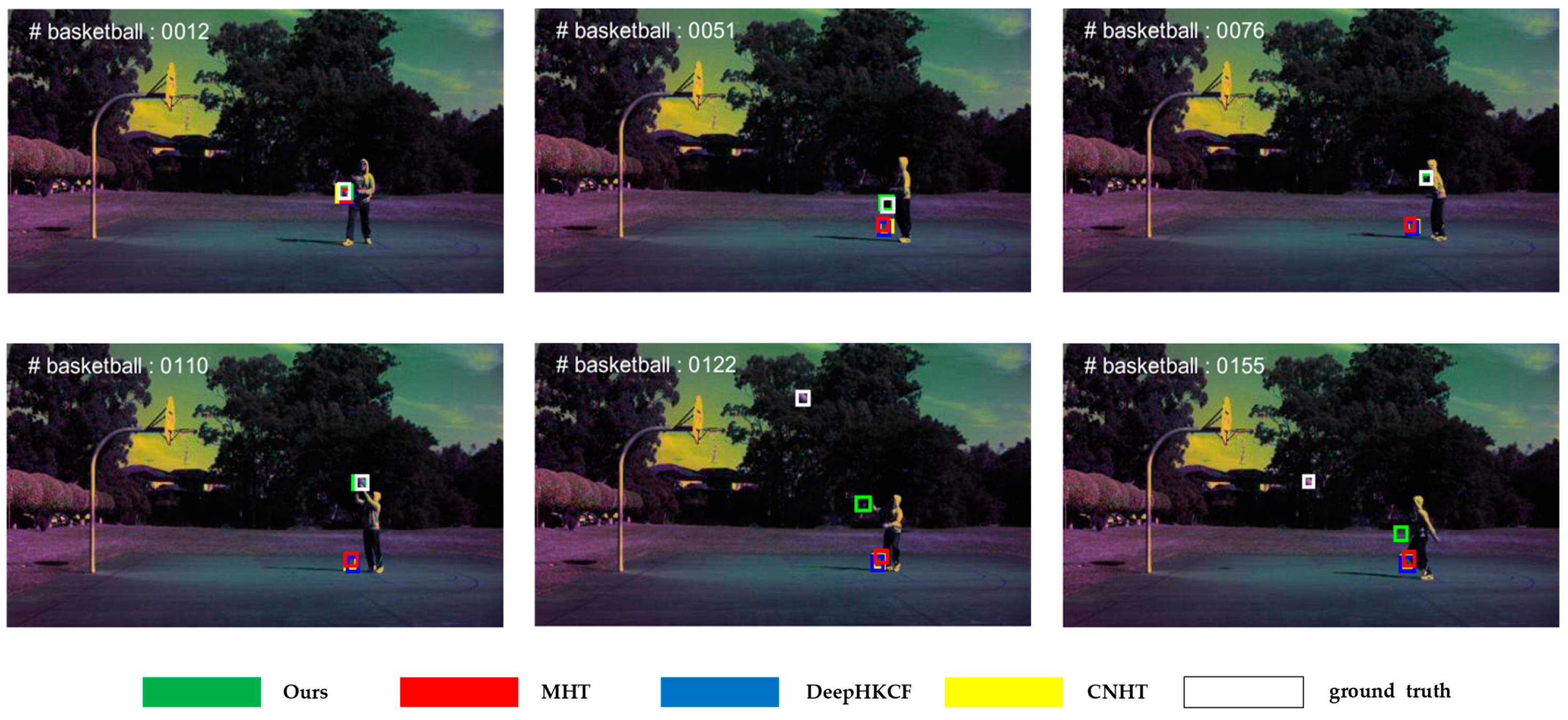

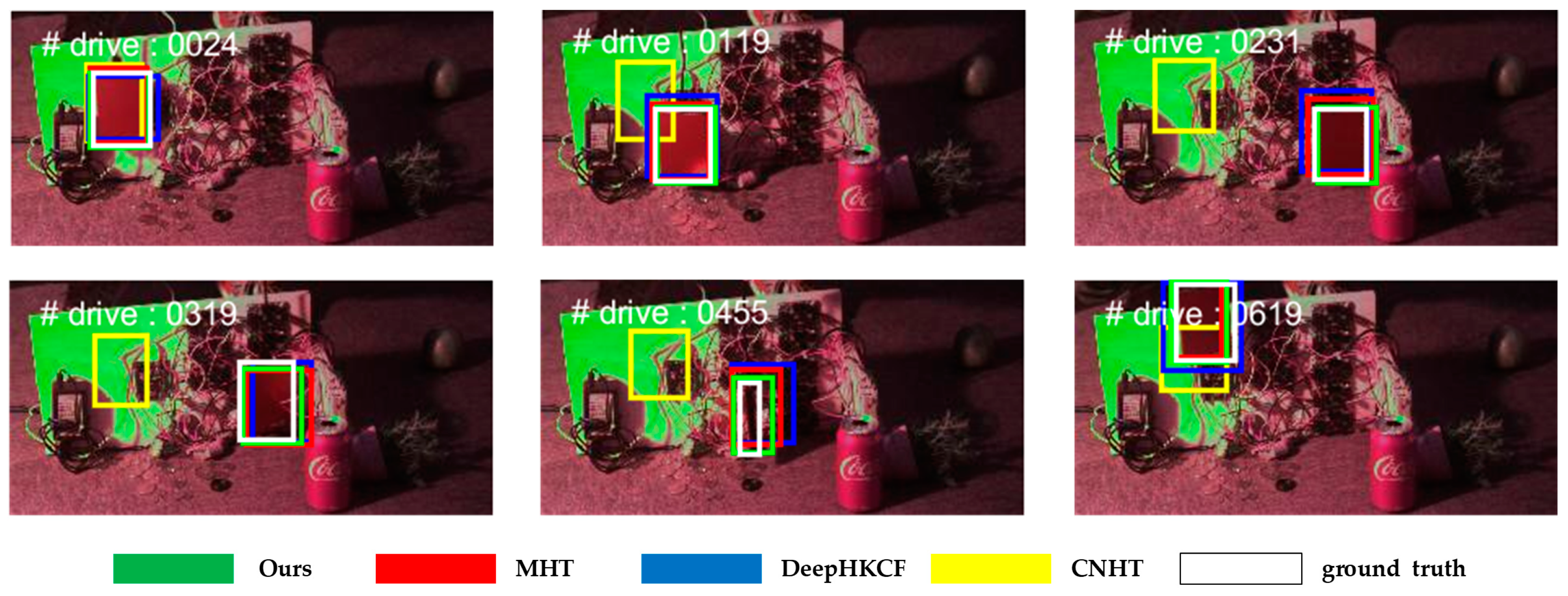

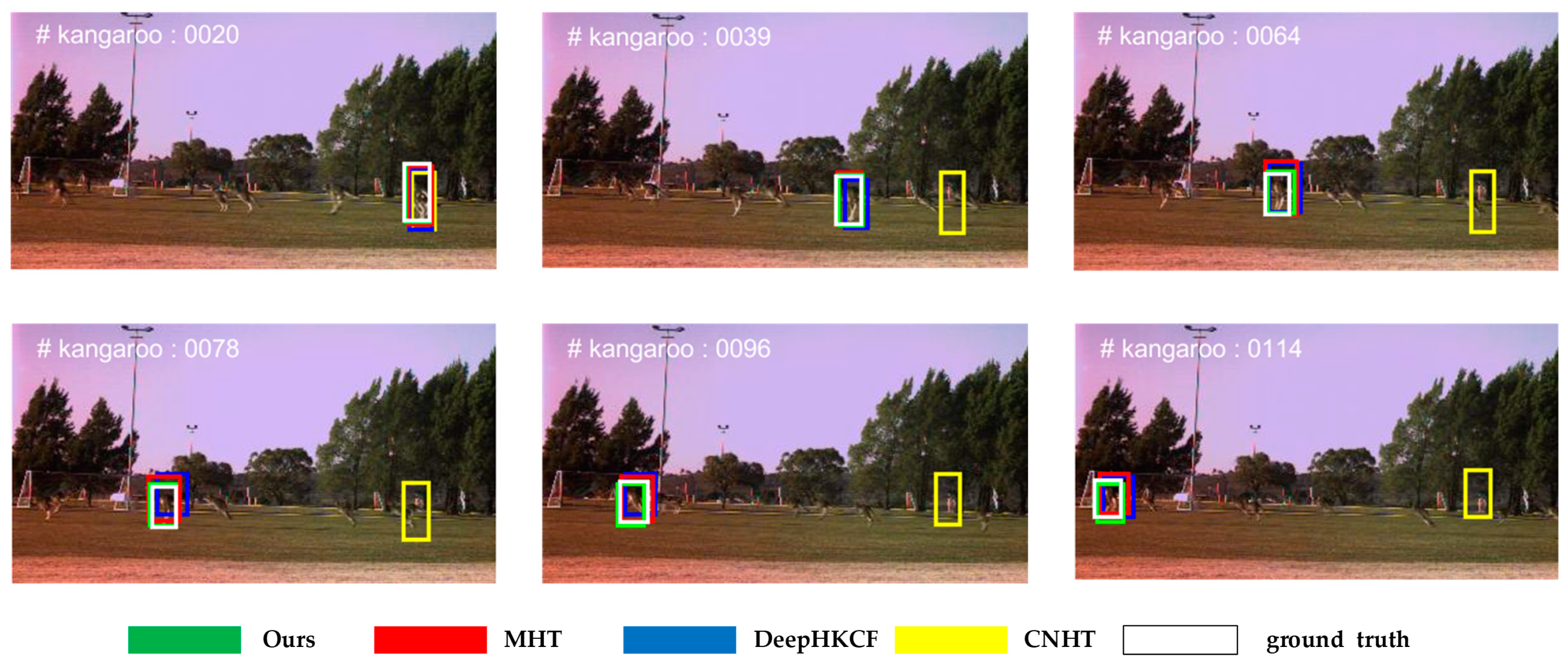

3.8. Visual Comparison with Hyperspectral Trackers

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Reddy, K.R.; Priya, K.H.; Neelima, N. Object Detection and Tracking—A Survey. In Proceedings of the International Conference on Computational Intelligence and Communication Networks (CICN), Jabalpur, India, 12–14 December 2015.

2. Chavda, H.K.; Dhamecha, M. Moving Object Tracking Using PTZ Camera in Video Surveillance System. In Proceedings of the International Conference on Energy, Communication, Data Analytics and Soft Computing (ICECDS), Chennai, India, 1–2 August 2017.

3. Wu, D.; Song, H.; Fan, C. Object Tracking in Satellite Videos Based on Improved Kernel Correlation Filter Assisted by Road Information. Remote Sens. 2022, 14, 4215.

4. Hu, W.; Gao, J.; Xing, J.; Zhang, C.; Maybank, S. Semi-Supervised Tensor-Based Graph Embedding Learning and Its Application to Visual Discriminant Tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 172–188.

5. Sui, Y.; Wang, G.; Zhang, L. Joint Correlation Filtering for Visual Tracking. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 167–178.

6. Zeng, H.; Peng, N.; Yu, Z.; Gu, Z.; Liu, H.; Zhang, K. Visual Tracking Using Multi-Channel Correlation Filters. In Proceedings of the IEEE International Conference on Digital Signal Processing (DSP), Singapore, 21–24 July 2015.

7. Lin, B.; Bai, Y.; Bai, B.; Li, Y. Robust Correlation Tracking for UAV with Feature Integration and Response Map Enhancement. Remote Sens. 2022, 14, 4073.

8. Xu, Y.; Zhou, X.; Chen, S.; Li, F. Deep Learning for Multiple Object Tracking: A Survey. IET Comput. Vis. 2019, 13, 355–368.

9. Liu, J.; Wang, Z.; Cheng, D.; Chen, W.; Chen, C. Marine Extended Target Tracking for Scanning Radar Data Using Correlation Filter and Bayes Filter Jointly. Remote Sens. 2022, 14, 5937.

10. Fan, J.; Song, H.; Zhang, K.; Liu, Q.; Yan, F. Real-Time Manifold Regularized Context-Aware Correlation Tracking. Front. Comput. Sci. 2019, 14, 334–348.

11. Wang, X.; Brien, M.; Xiang, C.; Xu, B.; Najjaran, H. Real-Time Visual Tracking via Robust Kernelized Correlation Filter. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017.

12. Lei, J.; Liu, P.; Xie, W.; Gao, L.; Li, Y.; Du, Q. Spatial–Spectral Cross-Correlation Embedded Dual-Transfer Network for Object Tracking Using Hyperspectral Videos. Remote Sens. 2022, 14, 3512.

13. Chen, L.; Zhao, Y.; Yao, J.; Chen, J.; Li, N.; Chan, J.C.; Kong, S.G. Object Tracking in Hyperspectral-Oriented Video with Fast Spatial-Spectral Features. Remote Sens. 2021, 13, 1922.

14. Erturk, A.; Iordache, M.D.; Plaza, A. Hyperspectral Change Detection by Sparse Unmixing with Dictionary Pruning. In Proceedings of the 7th Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Tokyo, Japan, 2–5 June 2015.

15. Yang, C.; Lee, W.S.; Gader, P.; Han, L. Hyperspectral Band Selection Using Kullback-Leibler Divergence for Blueberry Fruit Detection. In Proceedings of the 5th Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Gainesville, FL, USA, 26–28 June 2013.

16. Liu, Z.; Zhong, Y.; Wang, X.; Shu, M.; Zhang, L. Unsupervised Deep Hyperspectral Video Target Tracking and High Spectral-Spatial-Temporal Resolution (H3) Benchmark Dataset. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.

17. Duran, O.; Onasoglou, E.; Petrou, M. Fusion of Kalman Filter and Anomaly Detection for Multispectral and Hyperspectral Target Tracking. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Cape Town, South Africa, 12–17 July 2009.

18. Xiong, F.; Zhou, J.; Chanussot, J.; Qian, Y. Dynamic Material-Aware Object Tracking in Hyperspectral Videos. In Proceedings of the 10th Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing (WHISPERS), Amsterdam, The Netherlands, 24–26 September 2019.

19. Banerjee, A.; Burlina, P.; Broadwater, J. Hyperspectral Video for Illumination-Invariant Tracking. In Proceedings of the 1st Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing (WHISPERS), Grenoble, France, 26–28 August 2009.

20. Van Nguyen, H.; Banerjee, A.; Chellappa, R. Tracking via Object Reflectance Using a Hyperspectral Video Camera. In Proceedings of the IEEE Computer Vision and Pattern Recognition (CVPR), San Francisco, CA, USA, 13–18 June 2010.

21. Kandylakis, Z.; Karantzalos, K.; Doulamis, A.; Doulamis, N. Multiple Object Tracking with Background Estimation in Hyperspectral Video Sequences. In Proceedings of the 7th Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing (WHISPERS), Tokyo, Japan, 2–5 June 2015.

22. Qian, K.; Zhou, J.; Xiong, F.; Zhou, H.; Du, J. Object Tracking in Hyperspectral Videos with Convolutional Features and Kernelized Correlation Filter. In Proceedings of the International Conference on Software Maintenance (ICSM), Madrid, Spain, 25–27 September 2018.

23. Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-speed Tracking with Kernelized Correlation Filters. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 583–596.

24. Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–26 June 2005.

25. Uzkent, B.; Rangnekar, A.; Hoffman, M.J. Tracking in Aerial Hyperspectral Videos Using Deep Kernelized Correlation Filters. IEEE Trans. Geosci. Remote Sens. 2019, 57, 449–461.

26. Xiong, F.; Zhou, J.; Qian, Y. Material Based Object Tracking in Hyperspectral Videos. IEEE Trans. Image Process. 2020, 29, 3719–3733.

27. Galoogahi, H.K.; Fagg, A.; Lucey, S. Learning Background-Aware Correlation Filters for Visual Tracking. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

28. Li, Z.; Xiong, F.; Zhou, Z.; Wang, J.; Lu, J.; Qian, Y. BAE-Net: A Band Attention Aware Ensemble Network for Hyperspectral Object Tracking. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, 25–28 October 2020.

29. Liu, Z.; Wang, X.; Shu, M.; Li, G.; Sun, C.; Liu, Z.; Zhong, Y. An Anchor-Free Siamese Target Tracking Network for Hyperspectral Video. In Proceedings of the 11th Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing (WHISPERS), Amsterdam, The Netherlands, 24–26 March 2021.

30. Liu, Z.; Wang, X.; Zhong, Y.; Shu, M.; Sun, C. SiamHYPER: Learning A Hyperspectral Object Tracker from an RGB-Based Tracker. IEEE Trans. Image Process. 2022, 31, 7116–7129.

31. Li, Z.; Ye, X.; Xiong, F.; Lu, J.; Zhou, J.; Qian, Y. Spectral-Spatial-Temporal Attention Network for Hyperspectral Tracking. In Proceedings of the 11th Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing (WHISPERS), Amsterdam, The Netherlands, 24–26 March 2021.

32. Zhao, C.; Liu, H.; Su, N.; Yan, Y. TFTN: A Transformer-Based Fusion Tracking Framework of Hyperspectral and RGB. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.

33. Hyperspectral Object Tracking Challenge 2022. Available online: https://www.hsitracking.com (accessed on 10 September 2022).

34. Zhang, Y.; Li, X.; Wang, F.; Wei, B.; Li, L. A Fast Hyperspectral Object Tracking Method Based on Channel Selection Strategy. In Proceedings of the 12th Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing (WHISPERS), Rome, Italy, 13–16 September 2022.

35. Boyd, S.; Parikh, N.; Chu, E.; Peleato, B.; Eckstein, J. Distributed Optimization and Statistical Learning via the Alternating Direction Method of Multipliers. Found. Trends Mach. Learn. 2011, 3, 1–122.

36. Wu, Y.; Yang, M.H. Object Tracking Benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1834–1848.

37. Danelljan, M.; Hager, G.; Khan, F.S.; Felsberg, M. Accurate Scale Estimation for Robust Visual Tracking. In Proceedings of the British Machine Vision Conference (BMVC), Nottingham, UK, 1–5 September 2014.

38. Danelljan, M.; Robinson, A.; Khan, F.; Felsberg, M. Beyond Correlation Filters: Learning Continuous Convolution Operators for Visual Tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016.

39. Valmadre, J.; Bertinetto, L.; Henriques, J.F.; Vedaldi, A.; Torr, P.H.S. End-to-End Representation Learning for Correlation Filter Based Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

| Strategy | AUC | DP@20 pixels |
|---|---|---|
| contrast | 0.563 | 0.842 |
| entropy | 0.585 | 0.866 |
| difference | 0.585 | 0.856 |
| contrast + entropy | 0.563 | 0.842 |
| contrast + difference | 0.591 | 0.856 |
| entropy + difference | 0.581 | 0.842 |
| contrast + entropy + difference | 0.592 | 0.867 |
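
The AUC and DP@20 pixels columns appear to follow the usual object tracking benchmark conventions [36]: DP@20 is the fraction of frames whose predicted center lies within 20 pixels of the ground-truth center, and AUC is the area under the success curve taken over overlap (IoU) thresholds. A minimal sketch under those assumptions, with boxes in `(x, y, w, h)` form and 21 evenly spaced thresholds; the function names are illustrative and do not come from the paper's code.

```python
import math


def center_error(a, b):
    """Euclidean distance between the centers of two (x, y, w, h) boxes."""
    ax, ay = a[0] + a[2] / 2, a[1] + a[3] / 2
    bx, by = b[0] + b[2] / 2, b[1] + b[3] / 2
    return math.hypot(ax - bx, ay - by)


def iou(a, b):
    """Intersection over union of two (x, y, w, h) boxes."""
    iw = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def distance_precision(pred, truth, threshold=20.0):
    """Fraction of frames with center error <= threshold (in pixels)."""
    hits = sum(center_error(p, t) <= threshold for p, t in zip(pred, truth))
    return hits / len(truth)


def success_auc(pred, truth, steps=21):
    """Mean success rate over IoU thresholds 0, 0.05, ..., 1.

    A frame counts as a success when its IoU is strictly above the threshold.
    """
    overlaps = [iou(p, t) for p, t in zip(pred, truth)]
    rates = []
    for i in range(steps):
        thr = i / (steps - 1)
        rates.append(sum(o > thr for o in overlaps) / len(overlaps))
    return sum(rates) / steps


# Two frames: a perfect prediction and one that misses by 30 pixels.
truth = [(0, 0, 10, 10), (0, 0, 10, 10)]
pred = [(0, 0, 10, 10), (30, 0, 10, 10)]
dp20 = distance_precision(pred, truth)
auc = success_auc(pred, truth)
print(dp20, round(auc, 3))
```

Here one of the two frames falls inside the 20-pixel radius, so DP@20 is 0.5, and the success rate is 0.5 at every threshold below 1, giving an AUC of 10/21.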

| Feature Extractor | AUC | DP@20 pixels | FPS |
|---|---|---|---|
| HOG | 0.581 | 0.854 | 86.460 |
| BHOG [25] | 0.591 | 0.856 | 48.683 |
| SSHMG [26] | 0.603 | 0.904 | 39.106 |
| HOG + BHOG | 0.588 | 0.880 | 33.829 |
| HOG + SSHMG | 0.601 | 0.901 | 32.671 |
| BHOG + SSHMG | 0.608 | 0.903 | 21.928 |
| HOG + BHOG + SSHMG | 0.607 | 0.887 | 20.576 |

| Trackers | AUC | DP@20 pixels | FPS |
|---|---|---|---|
| Ours | 0.608 | 0.903 | 21.928 |
| No channel selection | 0.568 | 0.839 | 6.376 |

| Trackers | AUC | ∆AUC | DP@20 pixels | ∆DP |
|---|---|---|---|---|
| Ours | 0.608 | +6.4% | 0.903 | +8.7% |
| BACF [27] | 0.544 | - | 0.816 | - |
| KCF [23] | 0.408 | −13.6% | 0.583 | −23.3% |
| DSST [37] | 0.442 | −10.2% | 0.705 | −11.1% |
| C-COT [38] | 0.557 | +1.3% | 0.869 | +5.3% |
| CF-Net [39] | 0.543 | −0.1% | 0.872 | +5.6% |
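
The ∆ columns in the table above are absolute gaps from the BACF baseline expressed in percentage points, not relative changes. A short check that reproduces them from the AUC and DP values in the table; the variable and function names are illustrative.

```python
# AUC and DP@20 values copied from the RGB-tracker comparison table.
results = {
    "Ours": (0.608, 0.903),
    "BACF": (0.544, 0.816),
    "KCF": (0.408, 0.583),
    "DSST": (0.442, 0.705),
    "C-COT": (0.557, 0.869),
    "CF-Net": (0.543, 0.872),
}


def deltas(results, baseline="BACF"):
    """Percentage-point gaps in (AUC, DP) relative to the baseline tracker."""
    base_auc, base_dp = results[baseline]
    return {
        name: (round((auc - base_auc) * 100, 1), round((dp - base_dp) * 100, 1))
        for name, (auc, dp) in results.items()
        if name != baseline
    }


gaps = deltas(results)
for name, (d_auc, d_dp) in gaps.items():
    print(f"{name}: {d_auc:+.1f} / {d_dp:+.1f}")
```

For example, the proposed tracker's AUC gap is (0.608 − 0.544) × 100 = 6.4 points, matching the +6.4% entry.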

| Trackers | AUC | ∆AUC | DP@20 pixels | ∆DP | FPS | ∆FPS |
|---|---|---|---|---|---|---|
| Ours | 0.608 | +2.2% | 0.903 | +2.3% | 21.928 | +13.343 |
| MHT [26] | 0.586 | - | 0.880 | - | 8.585 | - |
| DeepHKCF [25] | 0.313 | −27.3% | 0.550 | −33.0% | 7.965 | −0.620 |
| CNHT [22] | 0.183 | −40.3% | 0.343 | −53.7% | 8.101 | −0.484 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, Y.; Li, X.; Wei, B.; Li, L.; Yue, S. A Fast Hyperspectral Tracking Method via Channel Selection. Remote Sens. 2023, 15, 1557. https://doi.org/10.3390/rs15061557
