An Adaptive Adversarial Patch-Generating Algorithm for Defending against the Intelligent Low, Slow, and Small Target

Abstract

1. Introduction

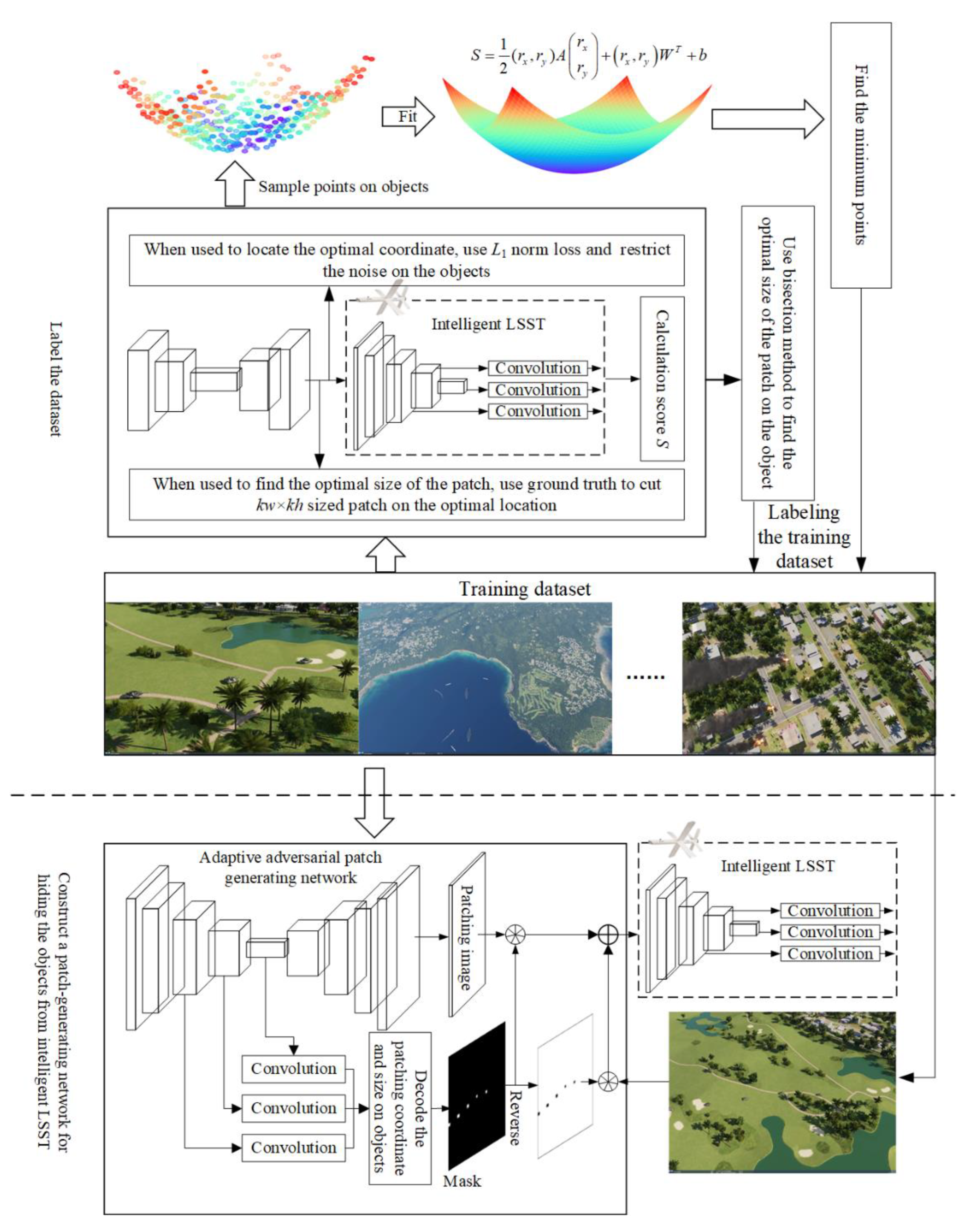

- We propose a novel approach to defending against the intelligent low, slow, and small target (ILSST): applying adversarial patches hides ground units from the ILSST's onboard object detection network.

- We design a patch-generating network that generates patches of optimal size at the optimal location on each object, without requiring the ground truth of the objects.

- We propose a novel patch-coordinate labeling method that fits a curve to an object using a set of sampling points and uses this curve to find the point that most affects the detection results.

- We use a bisection search to calculate the optimal patch size for each object. This lets our algorithm generate differently sized patches for different objects and increases the efficiency of the patch-generating algorithm.
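The coordinate-labeling idea in the contributions above can be illustrated with a one-dimensional sketch. It assumes that candidate patch centres are sampled along a single normalized axis of the object, that each sample is scored by how much the detector's confidence drops when a patch is placed there, and that a low-order polynomial is an adequate curve. These choices, and the helper name `best_patch_point`, are hypothetical and are not the authors' labeling procedure.

```python
import numpy as np


def best_patch_point(positions, score_drops, degree=2, grid_size=1001):
    """Hypothetical helper: fit a curve to sampled (position, score drop) pairs
    and return the position where the fitted drop is largest.

    positions   -- sampled patch centres along one normalized object axis (assumed)
    score_drops -- detector confidence drop measured at each sample (assumed)
    degree      -- polynomial degree; must be smaller than the number of samples
    """
    x = np.asarray(positions, dtype=float)
    y = np.asarray(score_drops, dtype=float)
    # Least-squares polynomial fit; coefficients are ordered highest power first.
    coeffs = np.polyfit(x, y, degree)
    # Evaluate the fitted curve densely inside the sampled range only.
    grid = np.linspace(x.min(), x.max(), grid_size)
    fitted = np.polyval(coeffs, grid)
    # The point that (according to the fit) affects detection most.
    return float(grid[np.argmax(fitted)])
```

Restricting the search to the sampled range avoids extrapolating the polynomial beyond the object, where the fit carries no information.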

2. Materials and Methods

2.1. Related Work

2.2. Method

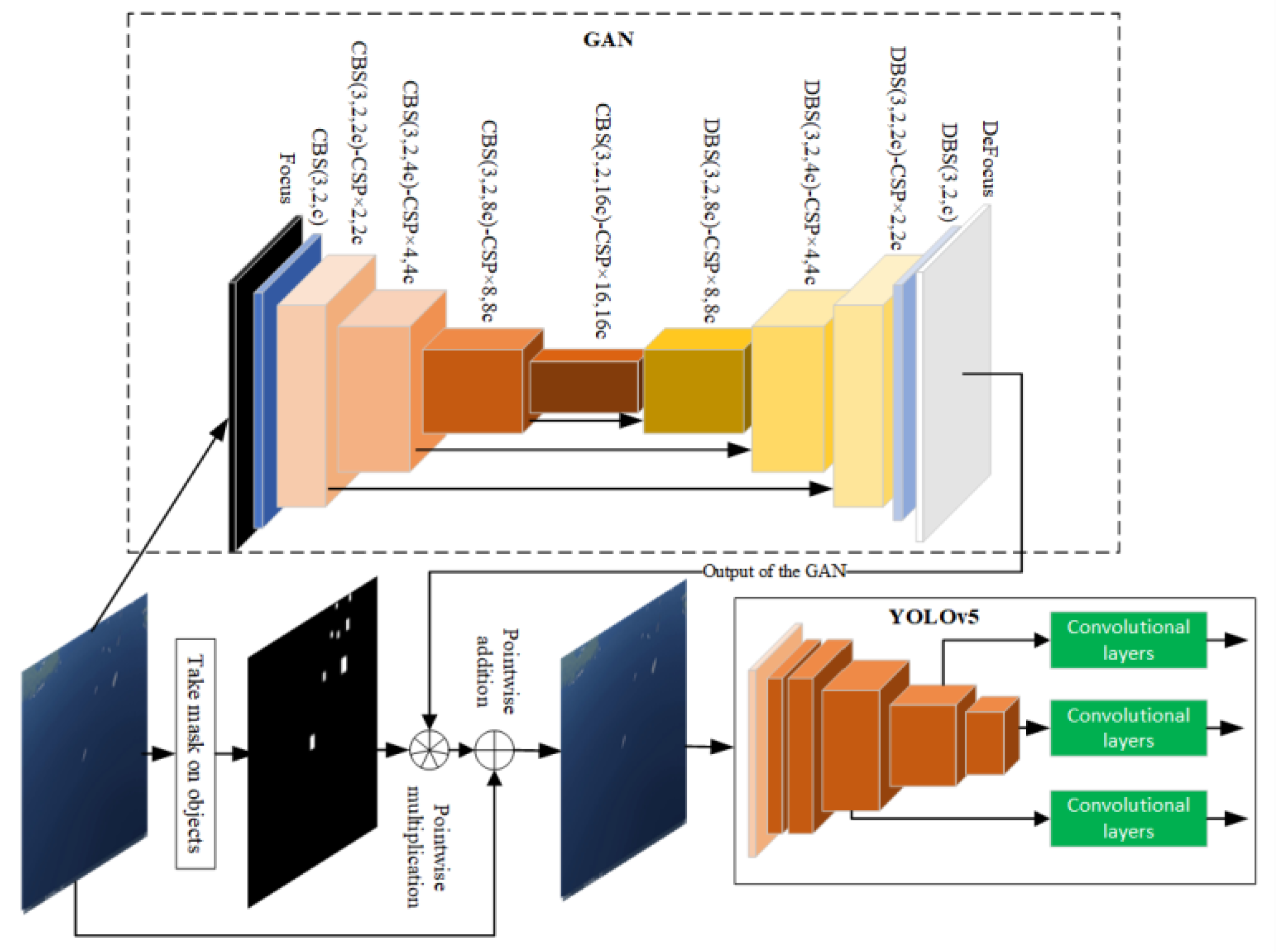

2.2.1. Adversarial Patch-Generating Network

- ALoF

- PILoF

2.2.2. Label the Objects in the Training Dataset with Optimal Patch Coordinates and Size

The Optimal Coordinate of Patches

The Optimal Size of the Patches

| Algorithm 1: Patch size calculation algorithm |
|---|
| Preparation |
| 1: set a threshold score ts, a relaxation value , , |
| 2: train a GAN with the sized patch on the object's optimal patching point. |
| 3: use the GAN to produce a sized patch for every object in |
| Start |
| 1: for every object in : |
| 2: while : |
| 3: let |
| 4: cut the area from the center of the as patch |
| 5: if : |
| 6: let |
| 7: else: |
| 8: let |
| 9: save k as the optimal patch size of the object |
| End |
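The bisection in Algorithm 1 can be sketched as a search over a normalized patch-size fraction k in [0, 1]. This sketch assumes that an object's detection score decreases monotonically as the centre crop grows. `score_with_patch` is a hypothetical callback that would paste the crop onto the object's optimal patching point and return the detector's score, and `eps` stands in for the relaxation value. The function names and the size range are illustrative, not taken from the paper.

```python
from typing import Callable

import numpy as np


def center_crop(patch: np.ndarray, k: float) -> np.ndarray:
    """Cut a region whose sides are fraction k of the patch's sides, from its centre."""
    h, w = patch.shape[:2]
    ch, cw = max(1, round(h * k)), max(1, round(w * k))
    top, left = (h - ch) // 2, (w - cw) // 2
    return patch[top:top + ch, left:left + cw]


def optimal_patch_size(score_with_patch: Callable[[np.ndarray], float],
                       full_patch: np.ndarray,
                       ts: float,
                       eps: float = 1e-2,
                       lo: float = 0.0,
                       hi: float = 1.0) -> float:
    """Bisection for the smallest size fraction whose centre crop drives the
    (hypothetical) detector score below the threshold ts.

    Assumes the score falls monotonically as k grows and that the full-size
    patch (k = hi) already succeeds.
    """
    while hi - lo > eps:
        k = (lo + hi) / 2
        if score_with_patch(center_crop(full_patch, k)) < ts:
            hi = k  # object already hidden: try a smaller patch
        else:
            lo = k  # object still detected: a larger patch is needed
    # hi is the smallest size known to push the score below ts.
    return hi


# Toy usage: the "score" falls as the crop covers more of a 100 x 100 patch.
full = np.ones((100, 100))
toy_score = lambda crop: 1.0 - crop.size / full.size
k_opt = optimal_patch_size(toy_score, full, ts=0.5)
```

Returning `hi` instead of the last midpoint reports a size that is known to succeed; the search needs about log2(1/eps) detector evaluations per object instead of a linear sweep over sizes.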

3. Results

3.1. Dataset

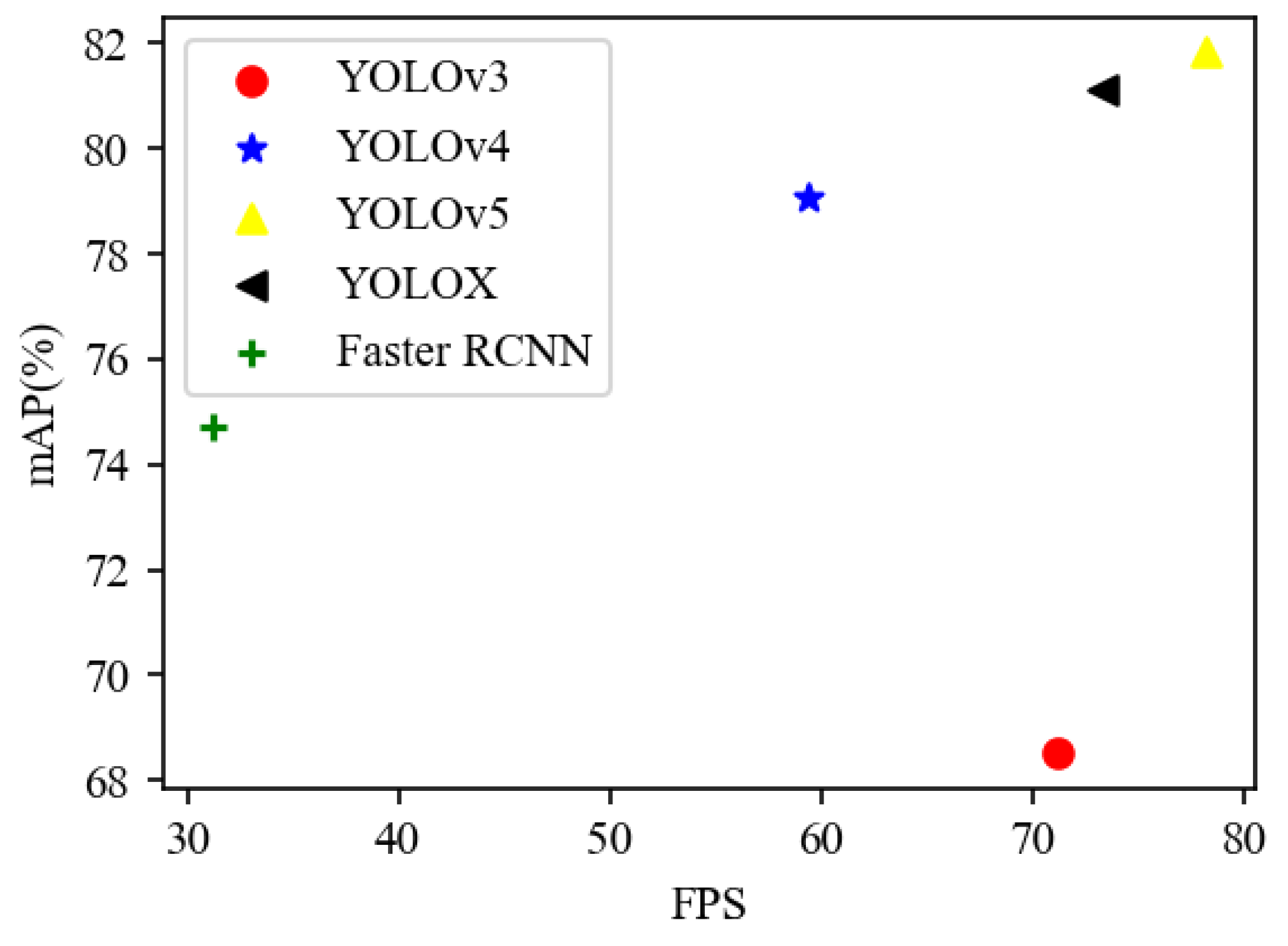

3.2. Implementation Details

3.3. Evaluation of the Scale Adaptiveness

3.4. Evaluation of the Optimal Coordinate

3.5. The Influence of Different Patch Shapes

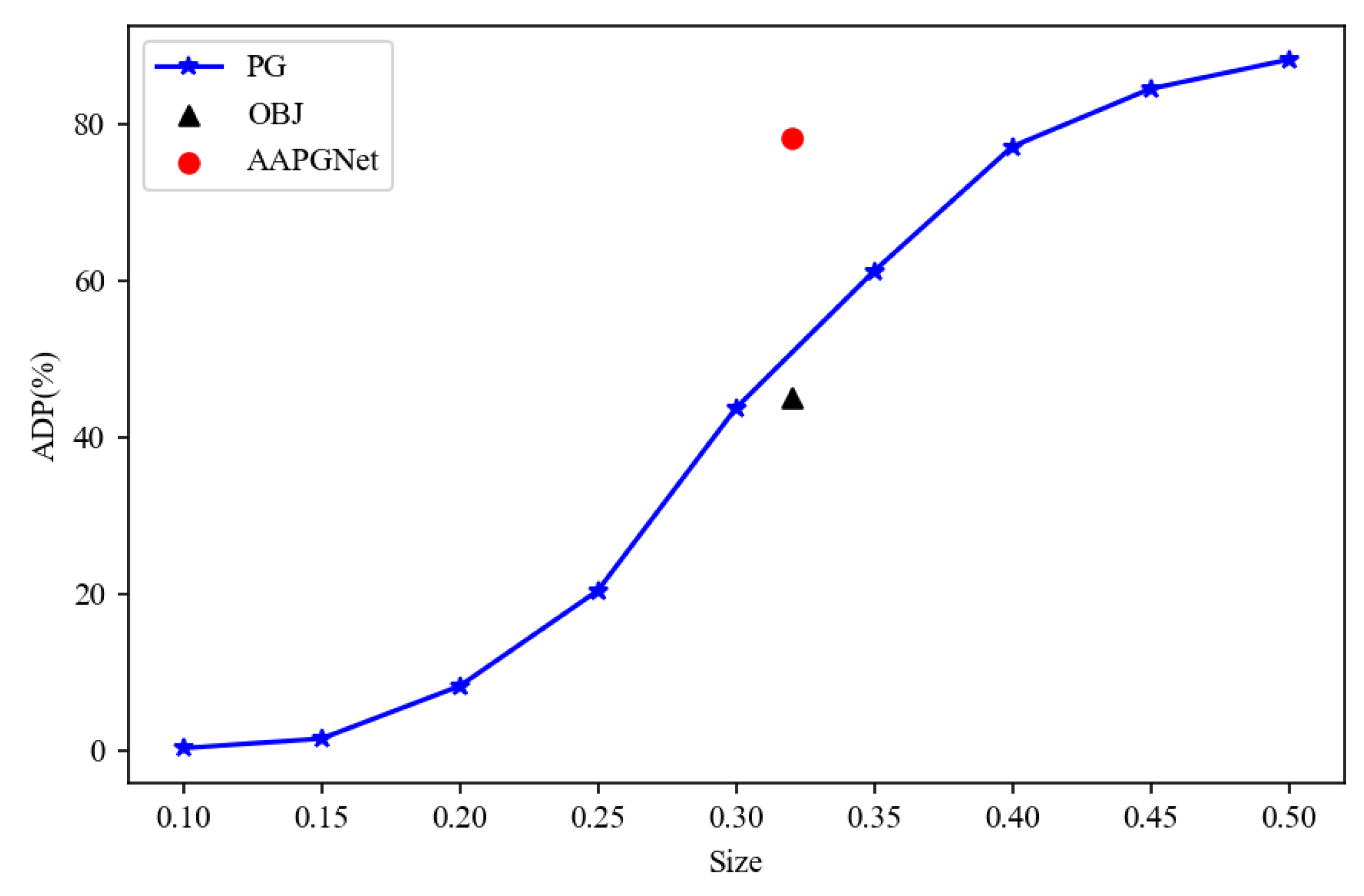

3.6. The Relationship between Patch Size and the Patch’s Adversarial Ability

3.7. Evaluation of the Transferability

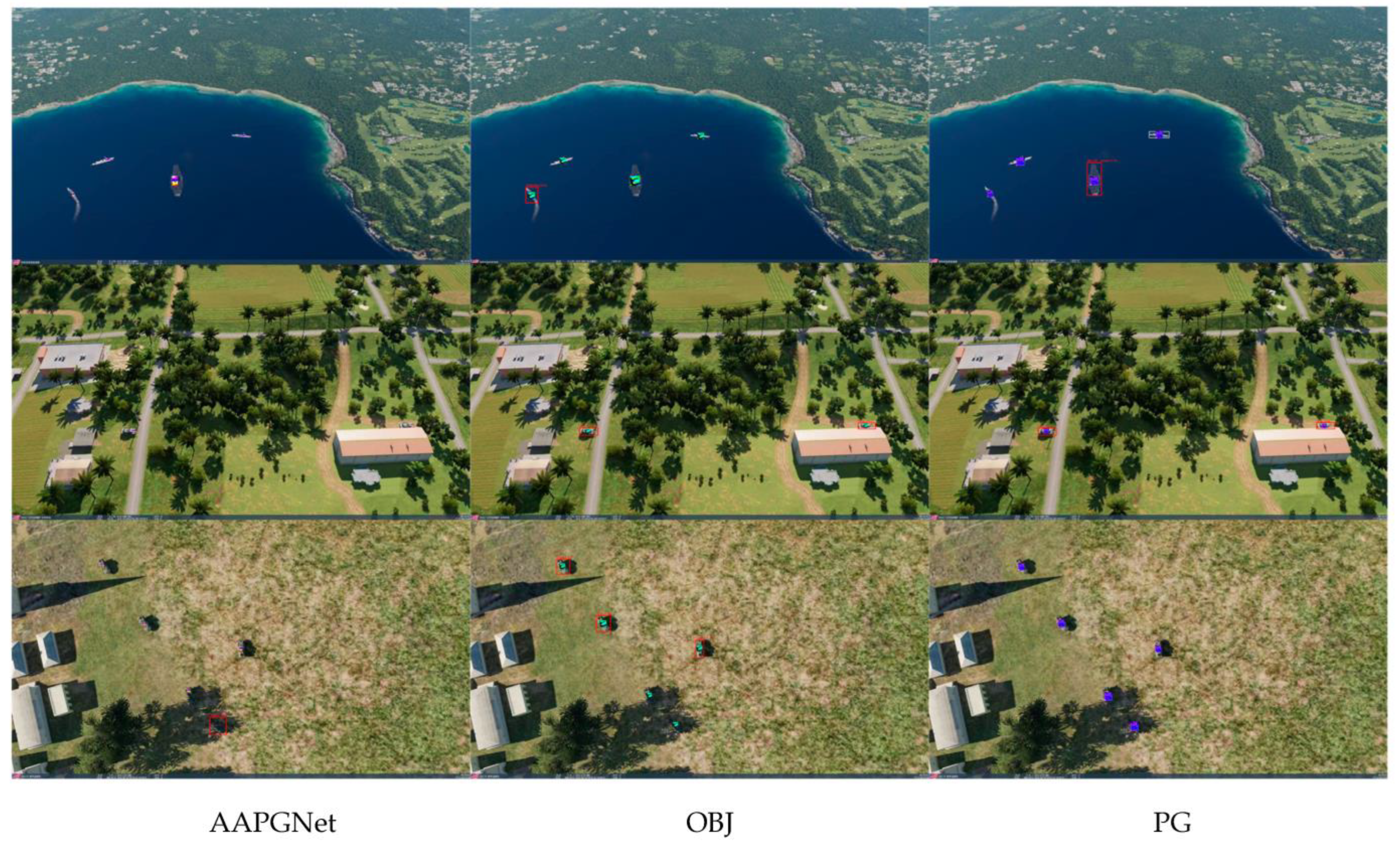

3.8. Comparing with Other Attacking Algorithms

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Zhang, X.; Lu, S.; Sun, J.; Shangguan, W. Low-Altitude and Slow-Speed Small Target Detection Based on Spectrum Zoom Processing. Math. Probl. Eng. 2018, 2018, 4146212.

- Valenti, F.; Giaquinto, D.; Musto, L.; Zinelli, A.; Bertozzi, M.; Broggi, A. Enabling computer vision-based autonomous navigation for Unmanned Aerial Vehicles in cluttered GPS-denied environments. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; IEEE: New York, NY, USA, 2018; pp. 3886–3891.

- Hameed, S.; Junejo, F.; Zai, M.A.Y.; Amin, I. Prediction of Civilians Killing in the Upcoming Drone Attack. In Proceedings of the 2018 IEEE 5th International Conference on Engineering Technologies and Applied Sciences (ICETAS), Bangkok, Thailand, 22–23 November 2018; pp. 1–5.

- Heubl, B. Consumer drones used in bomb attacks: Terrorist rebels are turning consumer drones into deadly weapons. E&T investigates why it goes on and what can be done about it. Eng. Technol. 2021, 16, 1–9.

- Björklund, S. Target detection and classification of small drones by boosting on radar micro-Doppler. In Proceedings of the 2018 15th European Radar Conference (EuRAD), Madrid, Spain, 26–28 September 2018; pp. 182–185.

- Semkin, V.; Yin, M.; Hu, Y.; Mezzavilla, M.; Rangan, S. Drone detection and classification based on radar cross section signatures. In Proceedings of the 2020 International Symposium on Antennas and Propagation (ISAP), Osaka, Japan, 25–28 January 2020; pp. 223–224.

- Al-Emadi, S.; Al-Ali, A.; Al-Ali, A. Audio-Based Drone Detection and Identification Using Deep Learning Techniques with Dataset Enhancement through Generative Adversarial Networks. Sensors 2021, 21, 4953.

- Wang, L.; Cavallaro, A. Acoustic sensing from a multi-rotor drone. IEEE Sens. J. 2018, 18, 4570–4582.

- Sie, N.J.; Srigrarom, S.; Huang, S. Field test validations of vision-based multi-camera multi-drone tracking and 3D localizing with concurrent camera pose estimation. In Proceedings of the 2021 6th International Conference on Control and Robotics Engineering (ICCRE), Beijing, China, 16–18 April 2021; pp. 139–144.

- Singha, S.; Aydin, B. Automated Drone Detection Using YOLOv4. Drones 2021, 5, 95.

- Ezuma, M.; Erden, F.; Anjinappa, C.K.; Ozdemir, O.; Guvenc, I. Micro-UAV detection and classification from RF fingerprints using machine learning techniques. In Proceedings of the 2019 IEEE Aerospace Conference, Big Sky, MT, USA, 2–9 March 2019; pp. 1–13.

- Nie, W.; Han, Z.-C.; Li, Y.; He, W.; Xie, L.-B.; Yang, X.-L.; Zhou, M. UAV Detection and Localization Based on Multi-dimensional Signal Features. IEEE Sens. J. 2021, 22, 5150–5162.

- Hoffmann, F.; Ritchie, M.; Fioranelli, F.; Charlish, A.; Griffiths, H. Micro-Doppler based detection and tracking of UAVs with multistatic radar. In Proceedings of the 2016 IEEE Radar Conference (RadarConf), Philadelphia, PA, USA, 2–6 May 2016; pp. 1–6.

- Kang, B.; Ahn, H.; Choo, H. A software platform for noise reduction in sound sensor equipped drones. IEEE Sens. J. 2019, 19, 10121–10130.

- Zhang, Z.; Cao, Y.; Ding, M.; Zhuang, L.; Yao, W. An intruder detection algorithm for vision based sense and avoid system. In Proceedings of the 2016 International Conference on Unmanned Aircraft Systems (ICUAS), Arlington, VA, USA, 7–10 June 2016; pp. 550–556.

- Ganti, S.R.; Kim, Y. Implementation of detection and tracking mechanism for small UAS. In Proceedings of the 2016 International Conference on Unmanned Aircraft Systems (ICUAS), Arlington, VA, USA, 7–10 June 2016; pp. 1254–1260.

- Flak, P. Drone Detection Sensor with Continuous 2.4 GHz ISM Band Coverage Based on Cost-Effective SDR Platform. IEEE Access 2021, 9, 114574–114586.

- Kim, M.; Lee, J. Impact of an interfering node on unmanned aerial vehicle communications. IEEE Trans. Veh. Technol. 2019, 68, 12150–12163.

- Shepard, D.P.; Bhatti, J.A.; Humphreys, T.E. Drone hack: Spoofing attack demonstration on a civilian unmanned aerial vehicle. 2012. Available online: https://www.gpsworld.com/drone-hack/ (accessed on 3 November 2022).

- Jocher, G. Ultralytics/YOLOv5: V6.0. 2021. Available online: https://github.com/ultralytics/yolov5 (accessed on 12 June 2022).

- Xu, Y.; Bai, T.; Yu, W.; Chang, S.; Atkinson, P.M.; Ghamisi, P. AI Security for Geoscience and Remote Sensing: Challenges and Future Trends. arXiv 2022, arXiv:2212.09360.

- Szegedy, C.; Zaremba, W.; Sutskever, I.; Bruna, J.; Erhan, D.; Goodfellow, I.; Fergus, R. Intriguing properties of neural networks. arXiv 2013, arXiv:1312.6199.

- Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and harnessing adversarial examples. arXiv 2014, arXiv:1412.6572.

- Kurakin, A.; Goodfellow, I.J.; Bengio, S. Adversarial examples in the physical world. In Artificial Intelligence Safety and Security; Chapman and Hall/CRC: Boca Raton, FL, USA, 2018; pp. 99–112.

- Dong, Y.; Liao, F.; Pang, T.; Su, H.; Zhu, J.; Hu, X.; Li, J. Boosting adversarial attacks with momentum. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

- Moosavi-Dezfooli, S.-M.; Fawzi, A.; Frossard, P. DeepFool: A simple and accurate method to fool deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2574–2582.

- Papernot, N.; McDaniel, P.; Jha, S.; Fredrikson, M.; Celik, Z.B.; Swami, A. The limitations of deep learning in adversarial settings. In Proceedings of the 2016 IEEE European Symposium on Security and Privacy (EuroS&P), Saarbrucken, Germany, 21–24 March 2016; pp. 372–387.

- Carlini, N.; Wagner, D. Towards evaluating the robustness of neural networks. In Proceedings of the 2017 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–24 May 2017; pp. 39–57.

- Rony, J.; Hafemann, L.G.; Oliveira, L.S.; Ayed, I.B.; Sabourin, R.; Granger, E. Decoupling direction and norm for efficient gradient-based L2 adversarial attacks and defenses. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

- Croce, F.; Hein, M. Reliable evaluation of adversarial robustness with an ensemble of diverse parameter-free attacks. In Proceedings of the International Conference on Machine Learning, Virtual, 13–18 July 2020; pp. 2206–2216.

- Xiao, C.; Li, B.; Zhu, J.-Y.; He, W.; Liu, M.; Song, D. Generating adversarial examples with adversarial networks. arXiv 2018, arXiv:1801.02610.

- Bai, T.; Zhao, J.; Zhu, J.; Han, S.; Chen, J.; Li, B.; Kot, A. AI-GAN: Attack-inspired generation of adversarial examples. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; pp. 2543–2547.

- Xie, C.; Wang, J.; Zhang, Z.; Zhou, Y.; Xie, L.; Yuille, A. Adversarial Examples for Semantic Segmentation and Object Detection. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 1378–1387.

- Li, Y.; Tian, D.; Chang, M.-C.; Bian, X.; Lyu, S. Robust adversarial perturbation on deep proposal-based models. arXiv 2018, arXiv:1809.05962.

- Wei, X.; Liang, S.; Chen, N.; Cao, X. Transferable adversarial attacks for image and video object detection. arXiv 2018, arXiv:1811.12641.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144.

- Athalye, A.; Engstrom, L.; Ilyas, A.; Kwok, K. Synthesizing Robust Adversarial Examples. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; Volume 80.

- Wang, D.; Li, C.; Wen, S.; Han, Q.L.; Nepal, S.; Zhang, X.; Xiang, Y. Daedalus: Breaking Nonmaximum Suppression in Object Detection via Adversarial Examples. IEEE Trans. Cybern. 2022, 52, 7427–7440.

- Brown, T.B.; Mané, D.; Roy, A.; Abadi, M.; Gilmer, J. Adversarial patch. arXiv 2017, arXiv:1712.09665.

- Karmon, D.; Zoran, D.; Goldberg, Y. LaVAN: Localized and visible adversarial noise. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018.

- Chindaudom, A.; Siritanawan, P.; Sumongkayothin, K.; Kotani, K. AdversarialQR: An adversarial patch in QR code format. In Proceedings of the 2020 Joint 9th International Conference on Informatics, Electronics & Vision (ICIEV) and 2020 4th International Conference on Imaging, Vision & Pattern Recognition (icIVPR), Kitakyushu, Japan, 26–29 August 2020; pp. 1–6.

- Chindaudom, A.; Siritanawan, P.; Sumongkayothin, K.; Kotani, K. Surreptitious Adversarial Examples through Functioning QR Code. J. Imaging 2022, 8, 122.

- Gittings, T.; Schneider, S.; Collomosse, J. Robust synthesis of adversarial visual examples using a deep image prior. arXiv 2019, arXiv:1907.01996.

- Zhou, X.; Pan, Z.; Duan, Y.; Zhang, J.; Wang, S. A data independent approach to generate adversarial patches. Mach. Vis. Appl. 2021, 32, 1–9.

- Bai, T.; Luo, J.; Zhao, J. Inconspicuous adversarial patches for fooling image-recognition systems on mobile devices. IEEE Internet Things J. 2021, 9, 9515–9524.

- Song, D.; Eykholt, K.; Evtimov, I.; Fernandes, E.; Li, B.; Rahmati, A.; Tramer, F.; Prakash, A.; Kohno, T. Physical adversarial examples for object detectors. In Proceedings of the 12th USENIX Workshop on Offensive Technologies (WOOT 18), Baltimore, MD, USA, 13–14 August 2018.

- Eykholt, K.; Evtimov, I.; Fernandes, E.; Li, B.; Rahmati, A.; Xiao, C.; Prakash, A.; Kohno, T.; Song, D. Robust physical-world attacks on deep learning visual classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1625–1634.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. arXiv 2015, arXiv:1506.01497.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Wu, S.; Dai, T.; Xia, S.-T. DPAttack: Diffused patch attacks against universal object detection. arXiv 2020, arXiv:2010.11679.

- Lee, M.; Kolter, Z. On physical adversarial patches for object detection. arXiv 2019, arXiv:1906.11897.

- Thys, S.; Van Ranst, W.; Goedemé, T. Fooling automated surveillance cameras: Adversarial patches to attack person detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

- Komkov, S.; Petiushko, A. AdvHat: Real-World Adversarial Attack on ArcFace Face ID System. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 819–826.

- Wang, Y.; Lv, H.; Kuang, X.; Zhao, G.; Tan, Y.-a.; Zhang, Q.; Hu, J. Towards a physical-world adversarial patch for blinding object detection models. Inf. Sci. 2021, 556, 459–471.

- Czaja, W.; Fendley, N.; Pekala, M.; Ratto, C.; Wang, I.-J. Adversarial examples in remote sensing. In Proceedings of the 26th ACM SIGSPATIAL International Conference on Advances in Geographic Information Systems, Seattle, WA, USA, 6–9 November 2018; pp. 408–411.

- Chen, L.; Zhu, G.; Li, Q.; Li, H. Adversarial example in remote sensing image recognition. arXiv 2019, arXiv:1910.13222.

- Xu, Y.; Ghamisi, P. Universal adversarial examples in remote sensing: Methodology and benchmark. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.

- Zhang, Y.; Zhang, Y.; Qi, J.; Bin, K.; Wen, H.; Tong, X.; Zhong, P. Adversarial Patch Attack on Multi-Scale Object Detection for UAV Remote Sensing Images. Remote Sens. 2022, 14, 5298.

- Bai, T.; Wang, H.; Wen, B. Targeted Universal Adversarial Examples for Remote Sensing. Remote Sens. 2022, 14, 5833.

- Shi, C.; Dang, Y.; Fang, L.; Lv, Z.; Zhao, M. Hyperspectral image classification with adversarial attack. IEEE Geosci. Remote Sens. Lett. 2021, 19, 1–5.

- Chen, L.; Xu, Z.; Li, Q.; Peng, J.; Wang, S.; Li, H. An empirical study of adversarial examples on remote sensing image scene classification. IEEE Trans. Geosci. Remote Sens. 2021, 59, 7419–7433.

- Li, H.; Huang, H.; Chen, L.; Peng, J.; Huang, H.; Cui, Z.; Mei, X.; Wu, G. Adversarial examples for CNN-based SAR image classification: An experience study. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 14, 1333–1347.

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU loss: Faster and better learning for bounding box regression. In Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO series in 2021. arXiv 2021, arXiv:2107.08430.

- Sharif, M.; Bhagavatula, S.; Bauer, L.; Reiter, M.K. Accessorize to a crime: Real and stealthy attacks on state-of-the-art face recognition. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security, Vienna, Austria, 24–28 October 2016; pp. 1528–1540.

| Dataset | mAPb | Attack Algorithm | mAPa | APD |
|---|---|---|---|---|
| Small objects | 74.11% | AAPGNet | 21.57% | 70.90% |
| Small objects | 74.11% | AAPGNet1 | 40.99% | 44.69% |
| Small objects | 74.11% | AAPGNet12 | 29.28% | 60.49% |
| Small objects | 74.11% | AAPGNet13 | 24.60% | 66.80% |
| Large objects | 89.57% | AAPGNet | 13.74% | 84.66% |
| Large objects | 89.57% | AAPGNet1 | 34.58% | 61.39% |
| Large objects | 89.57% | AAPGNet12 | 24.22% | 72.96% |
| Large objects | 89.57% | AAPGNet13 | 25.72% | 71.29% |
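The APD column is not defined in this extract. Recomputing it from the mAPb and mAPa columns suggests it is the relative mAP drop, APD = (mAPb − mAPa) / mAPb, which reproduces the reported values in these tables to within 0.01 percentage points. The check below is an inference from the tables, not the authors' stated definition; the function name `apd` is illustrative.

```python
def apd(map_before: float, map_after: float) -> float:
    """Relative mAP drop in percent: (mAPb - mAPa) / mAPb * 100 (inferred definition)."""
    return 100.0 * (map_before - map_after) / map_before


# (mAPb, mAPa, reported APD) rows copied from the tables in this article.
ROWS = [
    (74.11, 21.57, 70.90),  # small objects, AAPGNet
    (89.57, 13.74, 84.66),  # large objects, AAPGNet
    (81.84, 17.8, 78.25),   # rectangle patch / YOLOv5
    (74.59, 22.95, 69.23),  # Faster RCNN
    (68.52, 15.48, 77.41),  # YOLOv3
    (81.84, 10.04, 87.73),  # first control-group row
]

for before, after, reported in ROWS:
    print(f"mAPb={before}%  mAPa={after}%  APD={apd(before, after):.2f}%  (reported {reported}%)")
```

The small residual differences (for example 70.89% recomputed versus 70.90% reported) are consistent with the authors computing APD from unrounded mAP values.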

| Shape | mAPb | mAPa | APD | Average Size |
|---|---|---|---|---|
| Rectangle | 81.84% | 17.8% | 78.25% | 0.32 |
| Square | 81.84% | 15.86% | 80.62% | 0.41 |
| Elliptical | 81.84% | 18.49% | 77.4% | 0.27 |
| Circle | 81.84% | 16.43% | 79.92% | 0.35 |

| Control Group | mAPb | mAPa | APD |
|---|---|---|---|
| 0.6p-net with sized patch | 81.84% | 10.04% | 87.73% |
| 0.4p-net with sized patch | 81.84% | 30.62% | 62.59% |
| 0.6p-net with sized patch | 81.84% | 53.55% | 34.57% |
| 0.4p-net with sized patch | 81.84% | 28.36% | 65.35% |

| Algorithm | mAPb | mAPa | APD |
|---|---|---|---|
| YOLOv5 | 81.84% | 17.8% | 78.25% |
| Faster RCNN | 74.59% | 22.95% | 69.23% |
| YOLOv3 | 68.52% | 15.48% | 77.41% |
| YOLOv4 | 79.06% | 21.13% | 73.27% |
| YOLOX | 81.13% | 23.5% | 71.03% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jia, E.; Xu, Y.; Zhang, Z.; Zhang, F.; Feng, W.; Dong, L.; Hui, T.; Tao, C. An Adaptive Adversarial Patch-Generating Algorithm for Defending against the Intelligent Low, Slow, and Small Target. Remote Sens. 2023, 15, 1439. https://doi.org/10.3390/rs15051439
