SAR-to-Optical Image Translation and Cloud Removal Based on Conditional Generative Adversarial Networks: Literature Survey, Taxonomy, Evaluation Indicators, Limits and Future Directions

Abstract

1. Introduction

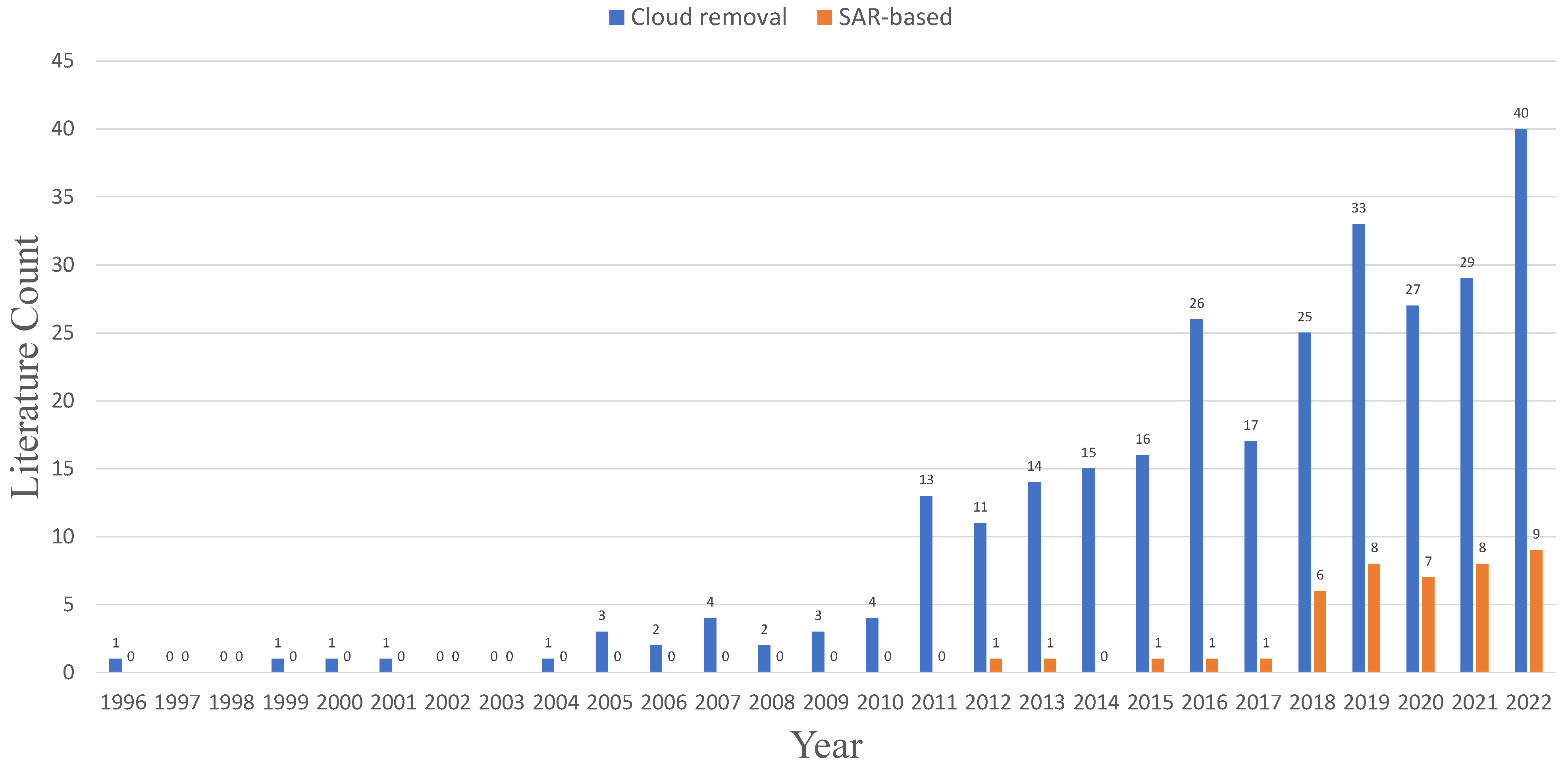

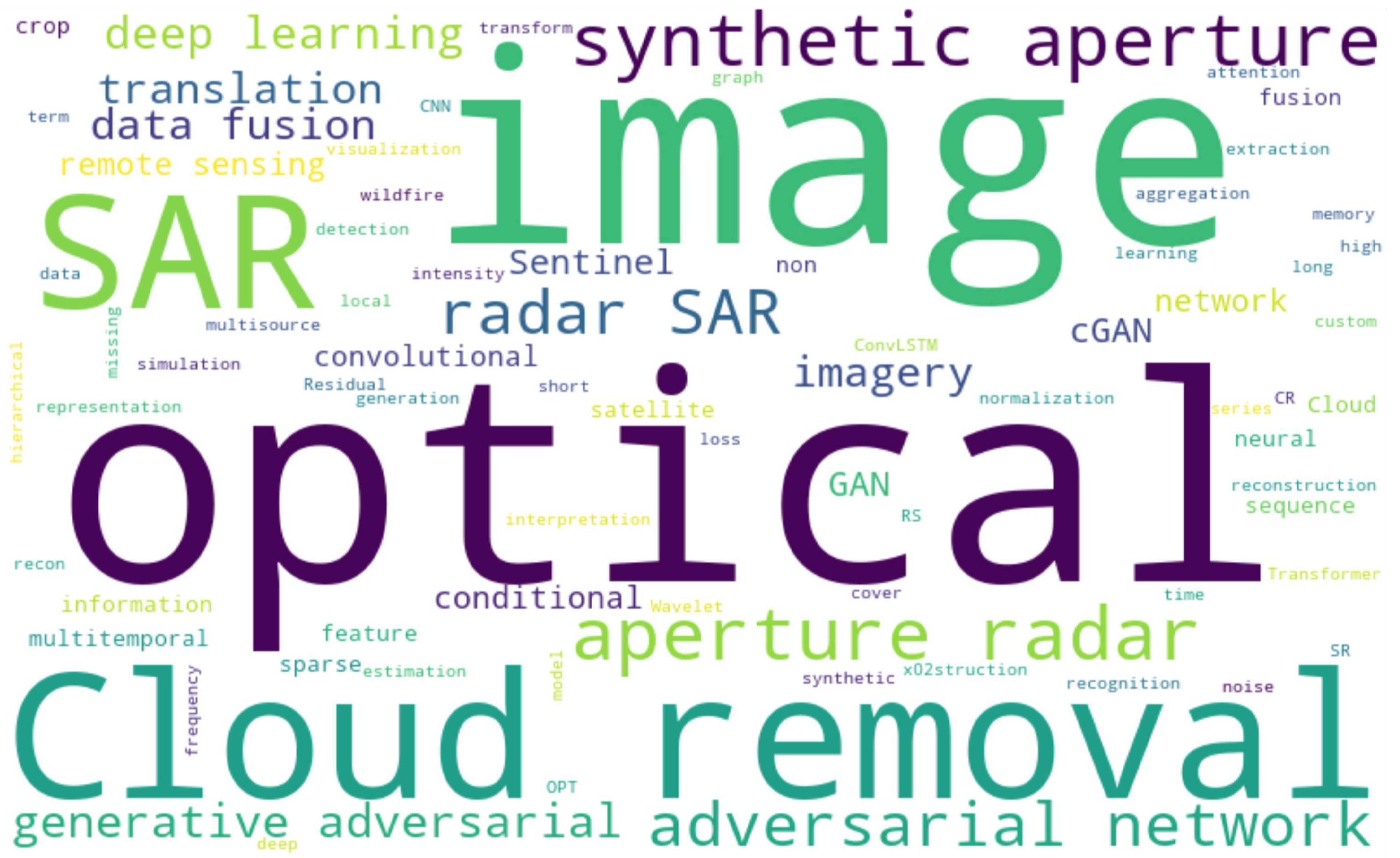

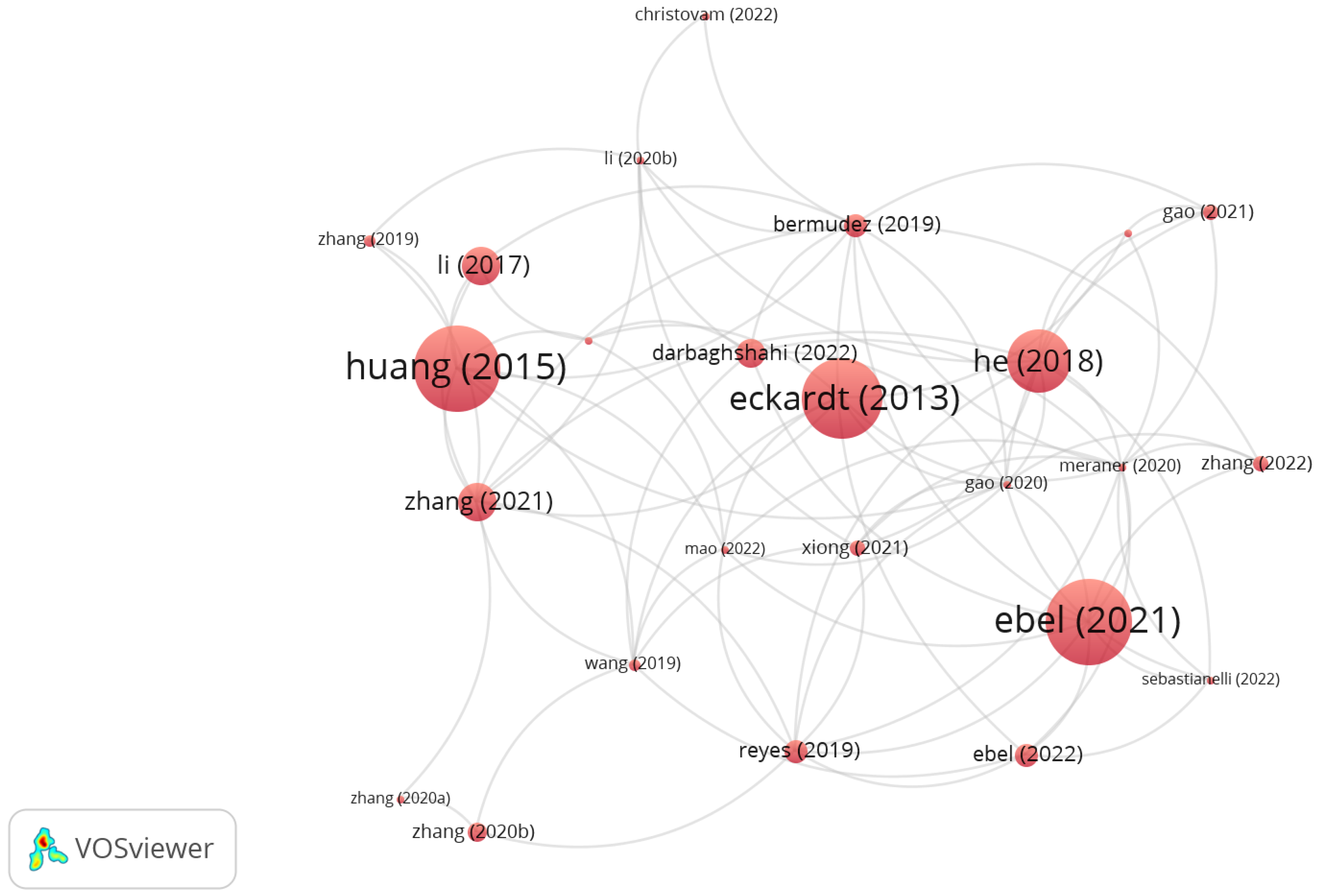

2. Literature Survey

3. Taxonomy

3.1. The Categories of the Methods

3.1.1. cGANs

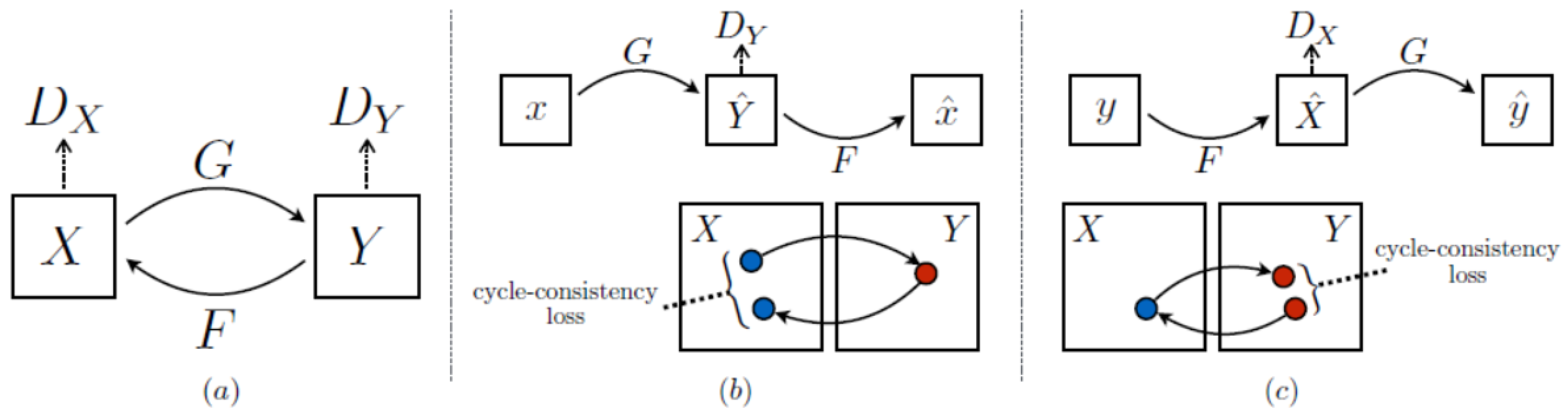

3.1.2. Unsupervised GANs

3.1.3. CNNs

3.1.4. Hybrid CNNs

3.1.5. Other Methods

3.2. The Categories of the Input

3.2.1. Monotemporal SAR

3.2.2. Monotemporal SAR and Corrupted Optical Images

3.2.3. Multitemporal SAR and Optical Images

4. Evaluation Indicators

5. Limits and Future Directions

5.1. Image Pixel Depth

5.2. Number of Image Bands

5.3. Global Training Datasets

- it involves multiple regions of interest around the world,

- it has paired SAR and optical data,

- it is a multi-temporal series,

- it is in GeoTIFF format, and

- it includes both the cloud-corrupted target image and its cloud-free counterpart.

5.4. Accuracy Verification for Cloud Regions

5.5. Auxiliary Data

5.6. Loss Functions

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SAR | Synthetic Aperture Radar |
| GAN | Generative Adversarial Network |
| cGAN | Conditional Generative Adversarial Network |

References

1. Desnos, Y.L.; Borgeaud, M.; Doherty, M.; Rast, M.; Liebig, V. The European Space Agency’s Earth Observation Program. IEEE Geosci. Remote Sens. Mag. 2014, 2, 37–46.

2. Xiong, Q.; Wang, Y.; Liu, D.; Ye, S.; Du, Z.; Liu, W.; Huang, J.; Su, W.; Zhu, D.; Yao, X.; et al. A Cloud Detection Approach Based on Hybrid Multispectral Features with Dynamic Thresholds for GF-1 Remote Sensing Images. Remote Sens. 2020, 12, 450.

3. Roy, D.P.; Wulder, M.A.; Loveland, T.R.; Woodcock, C.; Allen, R.G.; Anderson, M.C.; Helder, D.; Irons, J.R.; Johnson, D.M.; Kennedy, R.; et al. Landsat-8: Science and product vision for terrestrial global change research. Remote Sens. Environ. 2014, 145, 154–172.

4. Friedl, M.A.; McIver, D.K.; Hodges, J.C.; Zhang, X.Y.; Muchoney, D.; Strahler, A.H.; Woodcock, C.E.; Gopal, S.; Schneider, A.; Cooper, A.; et al. Global land cover mapping from MODIS: Algorithms and early results. Remote Sens. Environ. 2002, 83, 287–302.

5. Yang, N.; Liu, D.; Feng, Q.; Xiong, Q.; Zhang, L.; Ren, T.; Zhao, Y.; Zhu, D.; Huang, J. Large-scale crop mapping based on machine learning and parallel computation with grids. Remote Sens. 2019, 11, 1500.

6. Bontemps, S.; Arias, M.; Cara, C.; Dedieu, G.; Guzzonato, E.; Hagolle, O.; Inglada, J.; Matton, N.; Morin, D.; Popescu, R.; et al. Building a data set over 12 globally distributed sites to support the development of agriculture monitoring applications with Sentinel-2. Remote Sens. 2015, 7, 16062–16090.

7. Zhang, L.; Liu, Z.; Liu, D.; Xiong, Q.; Yang, N.; Ren, T.; Zhang, C.; Zhang, X.; Li, S. Crop Mapping Based on Historical Samples and New Training Samples Generation in Heilongjiang Province, China. Sustainability 2019, 11, 5052.

8. Ye, S.; Song, C.; Shen, S.; Gao, P.; Cheng, C.; Cheng, F.; Wan, C.; Zhu, D. Spatial pattern of arable land-use intensity in China. Land Use Policy 2020, 99, 104845.

9. Cao, R.; Chen, Y.; Chen, J.; Zhu, X.; Shen, M. Thick cloud removal in Landsat images based on autoregression of Landsat time-series data. Remote Sens. Environ. 2020, 249, 112001.

10. Zhang, Q.; Yuan, Q.; Shen, H.; Zhang, L. A Unified Spatial-Temporal-Spectral Learning Framework for Reconstructing Missing Data in Remote Sensing Images. In Proceedings of the IGARSS 2018-2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 4981–4984.

11. Lin, C.H.; Tsai, P.H.; Lai, K.H.; Chen, J.Y. Cloud removal from multitemporal satellite images using information cloning. IEEE Trans. Geosci. Remote Sens. 2012, 51, 232–241.

12. Enomoto, K.; Sakurada, K.; Wang, W.; Fukui, H.; Matsuoka, M.; Nakamura, R.; Kawaguchi, N. Filmy cloud removal on satellite imagery with multispectral conditional generative adversarial nets. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 48–56.

13. Das, S.; Das, P.; Roy, B.R. Cloud detection and cloud removal of satellite image—A case study. In Trends in Communication, Cloud, and Big Data; Springer: Singapore, 2020; pp. 53–63.

14. Li, J.; Wu, Z.; Hu, Z.; Zhang, J.; Li, M.; Mo, L.; Molinier, M. Thin cloud removal in optical remote sensing images based on generative adversarial networks and physical model of cloud distortion. ISPRS J. Photogramm. Remote Sens. 2020, 166, 373–389.

15. Feng, Q.; Yang, J.; Zhu, D.; Liu, J.; Guo, H.; Bayartungalag, B.; Li, B. Integrating multitemporal Sentinel-1/2 data for coastal land cover classification using a multibranch convolutional neural network: A case of the Yellow River Delta. Remote Sens. 2019, 11, 1006.

16. Wang, J.; Yang, X.; Yang, X.; Jia, L.; Fang, S. Unsupervised change detection between SAR images based on hypergraphs. ISPRS J. Photogramm. Remote Sens. 2020, 164, 61–72.

17. Wang, L.; Xu, X.; Yu, Y.; Yang, R.; Gui, R.; Xu, Z.; Pu, F. SAR-to-optical image translation using supervised cycle-consistent adversarial networks. IEEE Access 2019, 7, 129136–129149.

18. Singh, P.; Komodakis, N. Cloud-GAN: Cloud removal for Sentinel-2 imagery using a cyclic consistent generative adversarial networks. In Proceedings of the IGARSS 2018-2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 1772–1775.

19. Xu, M.; Jia, X.; Pickering, M.; Plaza, A.J. Cloud removal based on sparse representation via multitemporal dictionary learning. IEEE Trans. Geosci. Remote Sens. 2016, 54, 2998–3006.

20. Tobler, W.R. A computer movie simulating urban growth in the Detroit region. Econ. Geogr. 1970, 46, 234–240.

21. Zhang, Q.; Yuan, Q.; Li, J.; Li, Z.; Shen, H.; Zhang, L. Thick cloud and cloud shadow removal in multitemporal imagery using progressively spatio-temporal patch group deep learning. ISPRS J. Photogramm. Remote Sens. 2020, 162, 148–160.

22. Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

23. Radford, A.; Metz, L.; Chintala, S. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv 2015, arXiv:1511.06434.

24. Choi, Y.; Choi, M.; Kim, M.; Ha, J.W.; Kim, S.; Choo, J. StarGAN: Unified generative adversarial networks for multi-domain image-to-image translation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8789–8797.

25. Mostafa, Y. A review on various shadow detection and compensation techniques in remote sensing images. Can. J. Remote Sens. 2017, 43, 545–562.

26. Shen, H.; Li, X.; Cheng, Q.; Zeng, C.; Yang, G.; Li, H.; Zhang, L. Missing information reconstruction of remote sensing data: A technical review. IEEE Geosci. Remote Sens. Mag. 2015, 3, 61–85.

27. Shahtahmassebi, A.; Yang, N.; Wang, K.; Moore, N.; Shen, Z. Review of shadow detection and de-shadowing methods in remote sensing. Chin. Geogr. Sci. 2013, 23, 403–420.

28. Fuentes Reyes, M.; Auer, S.; Merkle, N.; Henry, C.; Schmitt, M. SAR-to-optical image translation based on conditional generative adversarial networks—Optimization, opportunities and limits. Remote Sens. 2019, 11, 2067.

29. Xia, Y.; Zhang, H.; Zhang, L.; Fan, Z. Cloud Removal of Optical Remote Sensing Imagery with Multitemporal SAR-Optical Data Using X-MTGAN. In Proceedings of the IGARSS 2019-2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 3396–3399.

30. Grohnfeldt, C.; Schmitt, M.; Zhu, X. A conditional generative adversarial network to fuse SAR and multispectral optical data for cloud removal from Sentinel-2 images. In Proceedings of the IGARSS 2018-2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 1726–1729.

31. Enomoto, K.; Sakurada, K.; Wang, W.; Kawaguchi, N.; Matsuoka, M.; Nakamura, R. Image translation between SAR and optical imagery with generative adversarial nets. In Proceedings of the IGARSS 2018-2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 1752–1755.

32. Ebel, P.; Schmitt, M.; Zhu, X.X. Cloud removal in unpaired Sentinel-2 imagery using cycle-consistent GAN and SAR-optical data fusion. In Proceedings of the IGARSS 2020-2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; pp. 2065–2068.

33. Bermudez, J.; Happ, P.; Oliveira, D.; Feitosa, R. SAR to optical image synthesis for cloud removal with generative adversarial networks. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 4, 5–11.

34. Fu, Z.; Zhang, W. Research on image translation between SAR and optical imagery. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 1, 273–278.

35. Gao, J.; Zhang, H.; Yuan, Q. Cloud removal with fusion of SAR and Optical Images by Deep Learning. In Proceedings of the 2019 10th International Workshop on the Analysis of Multitemporal Remote Sensing Images (MultiTemp), Shanghai, China, 5–7 August 2019; pp. 1–3.

36. Zhu, C.; Zhao, Z.; Zhu, X.; Nie, Z.; Liu, Q.H. Cloud removal for optical images using SAR structure data. In Proceedings of the 2016 IEEE 13th International Conference on Signal Processing (ICSP), Chengdu, China, 6–10 November 2016; pp. 1872–1875.

37. Wang, P.; Patel, V.M. Generating high quality visible images from SAR images using CNNs. In Proceedings of the 2018 IEEE Radar Conference (RadarConf18), Oklahoma City, OK, USA, 23–27 April 2018; pp. 0570–0575.

38. Xiao, X.; Lu, Y. Cloud Removal of Optical Remote Sensing Imageries using SAR Data and Deep Learning. In Proceedings of the 2021 7th Asia-Pacific Conference on Synthetic Aperture Radar (APSAR), Virtual, 1–3 November 2021; pp. 1–5.

39. Ley, A.; Dhondt, O.; Valade, S.; Haensch, R.; Hellwich, O. Exploiting GAN-based SAR to optical image transcoding for improved classification via deep learning. In Proceedings of the EUSAR 2018, 12th European Conference on Synthetic Aperture Radar, VDE, Aachen, Germany, 4–7 June 2018; pp. 1–6.

40. Martinez, J.; Adarme, M.; Turnes, J.; Costa, G.; De Almeida, C.; Feitosa, R. A Comparison of Cloud Removal Methods for Deforestation Monitoring in Amazon Rainforest. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, 43, 665–671.

41. Hwang, J.; Yu, C.; Shin, Y. SAR-to-optical image translation using SSIM and perceptual loss based cycle-consistent GAN. In Proceedings of the 2020 International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Republic of Korea, 21–23 October 2020; pp. 191–194.

42. Fu, S.; Xu, F.; Jin, Y.Q. Reciprocal translation between SAR and optical remote sensing images with cascaded-residual adversarial networks. arXiv 2019, arXiv:1901.08236.

43. Cresson, R.; Narçon, N.; Gaetano, R.; Dupuis, A.; Tanguy, Y.; May, S.; Commandre, B. Comparison of convolutional neural networks for cloudy optical images reconstruction from single or multitemporal joint SAR and optical images. arXiv 2022, arXiv:2204.00424.

44. Xu, F.; Shi, Y.; Ebel, P.; Yu, L.; Xia, G.S.; Yang, W.; Zhu, X.X. Exploring the Potential of SAR Data for Cloud Removal in Optical Satellite Imagery. arXiv 2022, arXiv:2206.02850.

45. Sebastianelli, A.; Nowakowski, A.; Puglisi, E.; Del Rosso, M.P.; Mifdal, J.; Pirri, F.; Mathieu, P.P.; Ullo, S.L. Spatio-Temporal SAR-Optical Data Fusion for Cloud Removal via a Deep Hierarchical Model. arXiv 2021, arXiv:2106.12226.

46. Gao, J.; Yuan, Q.; Li, J.; Zhang, H.; Su, X. Cloud Removal with Fusion of High Resolution Optical and SAR Images Using Generative Adversarial Networks. Remote Sens. 2020, 12, 191.

47. Eckardt, R.; Berger, C.; Thiel, C.; Schmullius, C. Removal of optically thick clouds from multi-spectral satellite images using multi-frequency SAR data. Remote Sens. 2013, 5, 2973–3006.

48. Chen, S.; Zhang, W.; Li, Z.; Wang, Y.; Zhang, B. Cloud Removal with SAR-Optical Data Fusion and Graph-Based Feature Aggregation Network. Remote Sens. 2022, 14, 3374.

49. Gao, J.; Yi, Y.; Wei, T.; Zhang, G. Sentinel-2 cloud removal considering ground changes by fusing multitemporal SAR and optical images. Remote Sens. 2021, 13, 3998.

50. Xiong, Q.; Di, L.; Feng, Q.; Liu, D.; Liu, W.; Zan, X.; Zhang, L.; Zhu, D.; Liu, Z.; Yao, X.; et al. Deriving non-cloud contaminated Sentinel-2 images with RGB and near-infrared bands from Sentinel-1 images based on a conditional generative adversarial network. Remote Sens. 2021, 13, 1512.

51. Zhang, J.; Zhou, J.; Li, M.; Zhou, H.; Yu, T. Quality assessment of SAR-to-optical image translation. Remote Sens. 2020, 12, 3472.

52. Zhang, Q.; Liu, X.; Liu, M.; Zou, X.; Zhu, L.; Ruan, X. Comparative analysis of edge information and polarization on SAR-to-optical translation based on conditional generative adversarial networks. Remote Sens. 2021, 13, 128.

53. Christovam, L.E.; Shimabukuro, M.H.; Galo, M.d.L.B.; Honkavaara, E. Pix2pix Conditional Generative Adversarial Network with MLP Loss Function for Cloud Removal in a Cropland Time Series. Remote Sens. 2021, 14, 144.

54. Li, W.; Li, Y.; Chan, J.C.W. Thick Cloud Removal With Optical and SAR Imagery via Convolutional-Mapping-Deconvolutional Network. IEEE Trans. Geosci. Remote Sens. 2019, 58, 2865–2879.

55. Ebel, P.; Xu, Y.; Schmitt, M.; Zhu, X.X. SEN12MS-CR-TS: A Remote-Sensing Data Set for Multimodal Multitemporal Cloud Removal. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.

56. Sebastianelli, A.; Puglisi, E.; Del Rosso, M.P.; Mifdal, J.; Nowakowski, A.; Mathieu, P.P.; Pirri, F.; Ullo, S.L. PLFM: Pixel-Level Merging of Intermediate Feature Maps by Disentangling and Fusing Spatial and Temporal Data for Cloud Removal. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16.

57. Ebel, P.; Meraner, A.; Schmitt, M.; Zhu, X.X. Multisensor data fusion for cloud removal in global and all-season Sentinel-2 imagery. IEEE Trans. Geosci. Remote Sens. 2021, 59, 5866–5878.

58. Darbaghshahi, F.N.; Mohammadi, M.R.; Soryani, M. Cloud removal in remote sensing images using generative adversarial networks and SAR-to-optical image translation. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–9.

59. Zhang, J.; Zhou, J.; Lu, X. Feature-Guided SAR-to-Optical Image Translation. IEEE Access 2020, 8, 70925–70937.

60. Li, Y.; Fu, R.; Meng, X.; Jin, W.; Shao, F. A SAR-to-Optical Image Translation Method Based on Conditional Generation Adversarial Network (cGAN). IEEE Access 2020, 8, 60338–60343.

61. Bermudez, J.D.; Happ, P.N.; Feitosa, R.Q.; Oliveira, D.A. Synthesis of multispectral optical images from SAR/optical multitemporal data using conditional generative adversarial networks. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1220–1224.

62. Huang, B.; Li, Y.; Han, X.; Cui, Y.; Li, W.; Li, R. Cloud removal from optical satellite imagery with SAR imagery using sparse representation. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1046–1050.

63. Li, Y.; Li, W.; Shen, C. Removal of optically thick clouds from high-resolution satellite imagery using dictionary group learning and interdictionary nonlocal joint sparse coding. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 1870–1882.

64. Mao, R.; Li, H.; Ren, G.; Yin, Z. Cloud Removal Based on SAR-Optical Remote Sensing Data Fusion via a Two-Flow Network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 7677–7686.

65. Meraner, A.; Ebel, P.; Zhu, X.X.; Schmitt, M. Cloud removal in Sentinel-2 imagery using a deep residual neural network and SAR-optical data fusion. ISPRS J. Photogramm. Remote Sens. 2020, 166, 333–346.

66. Xu, F.; Shi, Y.; Ebel, P.; Yu, L.; Xia, G.S.; Yang, W.; Zhu, X.X. GLF-CR: SAR-enhanced cloud removal with global–local fusion. ISPRS J. Photogramm. Remote Sens. 2022, 192, 268–278.

67. He, W.; Yokoya, N. Multi-temporal Sentinel-1 and -2 data fusion for optical image simulation. ISPRS Int. J. Geo-Inf. 2018, 7, 389.

68. Zhang, W.; Xu, M. Translate SAR data into optical image using IHS and wavelet transform integrated fusion. J. Indian Soc. Remote Sens. 2019, 47, 125–137.

69. Zhang, X.; Qiu, Z.; Peng, C.; Ye, P. Removing Cloud Cover Interference from Sentinel-2 imagery in Google Earth Engine by fusing Sentinel-1 SAR data with a CNN model. Int. J. Remote Sens. 2022, 43, 132–147.

70. Mirza, M.; Osindero, S. Conditional generative adversarial nets. arXiv 2014, arXiv:1411.1784.

71. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 2672–2680.

72. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

73. Johnson, J.; Alahi, A.; Fei-Fei, L. Perceptual losses for real-time style transfer and super-resolution. In Proceedings of the European Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 694–711.

74. Li, Z.; Shen, H.; Cheng, Q.; Liu, Y.; You, S.; He, Z. Deep learning based cloud detection for medium and high resolution remote sensing images of different sensors. ISPRS J. Photogramm. Remote Sens. 2019, 150, 197–212.

75. Meng, Q.; Borders, B.E.; Cieszewski, C.J.; Madden, M. Closest spectral fit for removing clouds and cloud shadows. Photogramm. Eng. Remote Sens. 2009, 75, 569–576.

76. Schmitt, M.; Hughes, L.H.; Qiu, C.; Zhu, X.X. SEN12MS–A Curated Dataset of Georeferenced Multi-Spectral Sentinel-1/2 Imagery for Deep Learning and Data Fusion. arXiv 2019, arXiv:1906.07789.

77. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

78. Hore, A.; Ziou, D. Image quality metrics: PSNR vs. SSIM. In Proceedings of the 2010 20th International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; pp. 2366–2369.

79. Kruse, F.A.; Lefkoff, A.; Boardman, J.; Heidebrecht, K.; Shapiro, A.; Barloon, P.; Goetz, A. The spectral image processing system (SIPS)—Interactive visualization and analysis of imaging spectrometer data. Remote Sens. Environ. 1993, 44, 145–163.

80. Chai, T.; Draxler, R.R. Root mean square error (RMSE) or mean absolute error (MAE). Geosci. Model Dev. Discuss. 2014, 7, 1525–1534.

81. Asuero, A.G.; Sayago, A.; Gonzalez, A. The correlation coefficient: An overview. Crit. Rev. Anal. Chem. 2006, 36, 41–59.

82. Zhang, L.; Zhang, L.; Mou, X.; Zhang, D. FSIM: A feature similarity index for image quality assessment. IEEE Trans. Image Process. 2011, 20, 2378–2386.

83. Wang, Z.; Bovik, A.C. A universal image quality index. IEEE Signal Process. Lett. 2002, 9, 81–84.

84. Allen, D.M. Mean square error of prediction as a criterion for selecting variables. Technometrics 1971, 13, 469–475.

| Source Titles | Records | Percentage | Literature |
|---|---|---|---|
| Remote Sensing | 9 | 34.61% | [28,46,47,48,49,50,51,52,53] |
| IEEE Transactions on Geoscience and Remote Sensing | 5 | 19.23% | [54,55,56,57,58] |
| IEEE Access | 3 | 11.54% | [17,59,60] |
| IEEE Geoscience and Remote Sensing Letters | 2 | 7.69% | [61,62] |
| IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing | 2 | 7.69% | [63,64] |
| ISPRS Journal of Photogrammetry and Remote Sensing | 2 | 7.69% | [65,66] |
| ISPRS International Journal of Geo-Information | 1 | 3.85% | [67] |
| Journal of the Indian Society of Remote Sensing | 1 | 3.85% | [68] |
| International Journal of Remote Sensing | 1 | 3.85% | [69] |
| Total | 26 | 100% | |

| Category | Methods and References |
|---|---|
| cGANs | modified cGAN-based SAR-to-optical translation method [60]; feature-guided method [59]; MTcGAN [67]; S-CycleGAN [17]; Synthesis of Multispectral Optical Images method [61] |
| Unsupervised GANs | CycleGAN [28] |
| CNNs (not GANs) | DSen2-CR [65]; CMD [54]; SEN12MS-CR-TS [55]; G-FAN [48] |
| Hybrid CNNs | SFGAN [46]; PLFM [56] |
| Other methods | Sparse Representation [62]; cloud removal based on multifrequency SAR [47]; IHSW [68]; DGL-INJSC [63] |

| Input | Methods and References |
|---|---|
| monotemporal SAR | modified cGAN-based SAR-to-optical translation method [60]; feature-guided method [59]; DGL-INJSC [63]; CycleGAN [28]; S-CycleGAN [17]; IHSW [68] |
| monotemporal SAR & corrupted Optical | Sparse Representation [62]; DSen2-CR [65]; SFGAN [46]; CMD [54]; PLFM [56]; cloud removal based on multifrequency SAR [47]; SEN12MS-CR-TS [55]; G-FAN [48] |
| multi-temporal SAR & Optical | MTcGAN [67]; Synthesis of Multispectral Optical Images method [61] |

| Indicators | References | Frequency |
|---|---|---|
| Structural Similarity Index Measurement (SSIM) | [17,46,48,54,55,56,59,60,62,65,67,68] | 12 |
| Peak Signal-to-Noise Ratio (PSNR) | [17,48,54,55,56,59,60,62,65,67] | 10 |
| Spectral Angle Mapper (SAM) | [46,47,48,55,56,65,68] | 7 |
| Root Mean Square Error (RMSE) | [46,55,56,62,65,68] | 6 |
| Correlation Coefficient (CC) | [46,48,56,68] | 4 |
| Feature Similarity Index Measurement (FSIM) | [17,59] | 2 |
| Universal Image Quality Index (UIQI) | [56,68] | 2 |
| Mean Absolute Error (MAE) | [48,65] | 2 |
| Mean Square Error (MSE) | [59] | 1 |
| Degree of Distortion (DD) | [56] | 1 |
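As a rough illustration of how several of the indicators in the table above are commonly computed, the following NumPy sketch implements RMSE, MAE, PSNR, SAM, and CC for a reference image and a generated image. It is a minimal sketch under stated assumptions, not the implementation used by any of the surveyed methods: the function names, the `(height, width, bands)` array layout, and the `data_range` parameter are illustrative choices. SSIM is omitted because it requires a windowed computation; a library routine such as `skimage.metrics.structural_similarity` could be used for it instead.

```python
import numpy as np


def rmse(ref, pred):
    """Root mean square error over all pixels and bands."""
    return float(np.sqrt(np.mean((ref - pred) ** 2)))


def mae(ref, pred):
    """Mean absolute error over all pixels and bands."""
    return float(np.mean(np.abs(ref - pred)))


def psnr(ref, pred, data_range=1.0):
    """Peak signal-to-noise ratio in dB.

    data_range is the maximum possible pixel value (assumed here to be
    1.0 for images scaled to [0, 1]; use 255 for 8-bit data).
    """
    mse = np.mean((ref - pred) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(data_range ** 2 / mse))


def sam(ref, pred, eps=1e-12):
    """Mean spectral angle (radians) between per-pixel band vectors.

    Assumes arrays shaped (height, width, bands); eps guards against
    division by zero for all-zero pixels.
    """
    dot = np.sum(ref * pred, axis=-1)
    norms = np.linalg.norm(ref, axis=-1) * np.linalg.norm(pred, axis=-1)
    cos = np.clip(dot / (norms + eps), -1.0, 1.0)
    return float(np.mean(np.arccos(cos)))


def cc(ref, pred):
    """Pearson correlation coefficient over flattened images."""
    return float(np.corrcoef(ref.ravel(), pred.ravel())[0, 1])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    reference = rng.uniform(0.1, 0.9, size=(8, 8, 4))  # synthetic 4-band image
    generated = reference + 0.1                        # constant offset error
    print("RMSE:", rmse(reference, generated))
    print("MAE: ", mae(reference, generated))
    print("PSNR:", psnr(reference, generated))
    print("SAM: ", sam(reference, generated))
    print("CC:  ", cc(reference, generated))
```

The `data_range` argument should match the pixel depth of the imagery (for example, 255 for 8-bit data or 1.0 for values scaled to [0, 1]); PSNR values computed with different ranges are not directly comparable.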

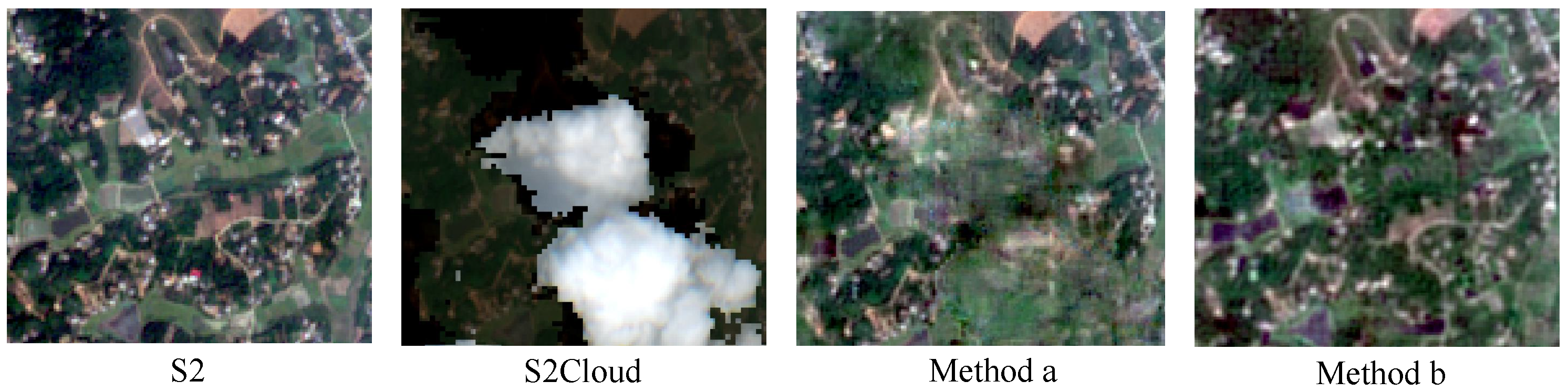

| Indicator | Method A | Method B |
|---|---|---|

| RMSE | 0.0067 | 0.0094 |

| R | 0.9596 | 0.9256 |

| KGE | 0.9224 | 0.9256 |

| SSIM | 0.9948 | 0.9943 |

| PSNR | 54.4722 | 54.1575 |

| SAM | 0.0869 | 0.0940 |
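The table above reports KGE alongside R and RMSE, but the abbreviation is not expanded in this section. Assuming it denotes the Kling-Gupta efficiency, one common formulation combines the correlation, the ratio of standard deviations, and the ratio of means, as sketched below. The function name and the flattening of images to one-dimensional arrays are illustrative assumptions, and this is not necessarily the variant used to produce the values in the table.

```python
import numpy as np


def kge(obs, sim):
    """Kling-Gupta efficiency (assumed meaning of "KGE").

    KGE = 1 - sqrt((r - 1)^2 + (alpha - 1)^2 + (beta - 1)^2), where
    r is the Pearson correlation, alpha = std(sim) / std(obs), and
    beta = mean(sim) / mean(obs). A perfect match gives 1.
    """
    obs = np.asarray(obs, dtype=float).ravel()
    sim = np.asarray(sim, dtype=float).ravel()
    r = np.corrcoef(obs, sim)[0, 1]
    alpha = np.std(sim) / np.std(obs)
    beta = np.mean(sim) / np.mean(obs)
    return float(1.0 - np.sqrt((r - 1) ** 2 + (alpha - 1) ** 2 + (beta - 1) ** 2))


if __name__ == "__main__":
    reference = np.array([1.0, 2.0, 3.0, 4.0])
    print(kge(reference, reference))        # identical inputs
    print(kge(reference, 2.0 * reference))  # perfectly correlated but scaled
```

Because KGE penalizes bias and variability as well as correlation, two results with the same R can still differ in KGE.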

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xiong, Q.; Li, G.; Yao, X.; Zhang, X. SAR-to-Optical Image Translation and Cloud Removal Based on Conditional Generative Adversarial Networks: Literature Survey, Taxonomy, Evaluation Indicators, Limits and Future Directions. Remote Sens. 2023, 15, 1137. https://doi.org/10.3390/rs15041137
