Exploiting Superpixel-Based Contextual Information on Active Learning for High Spatial Resolution Remote Sensing Image Classification

Abstract

1. Introduction

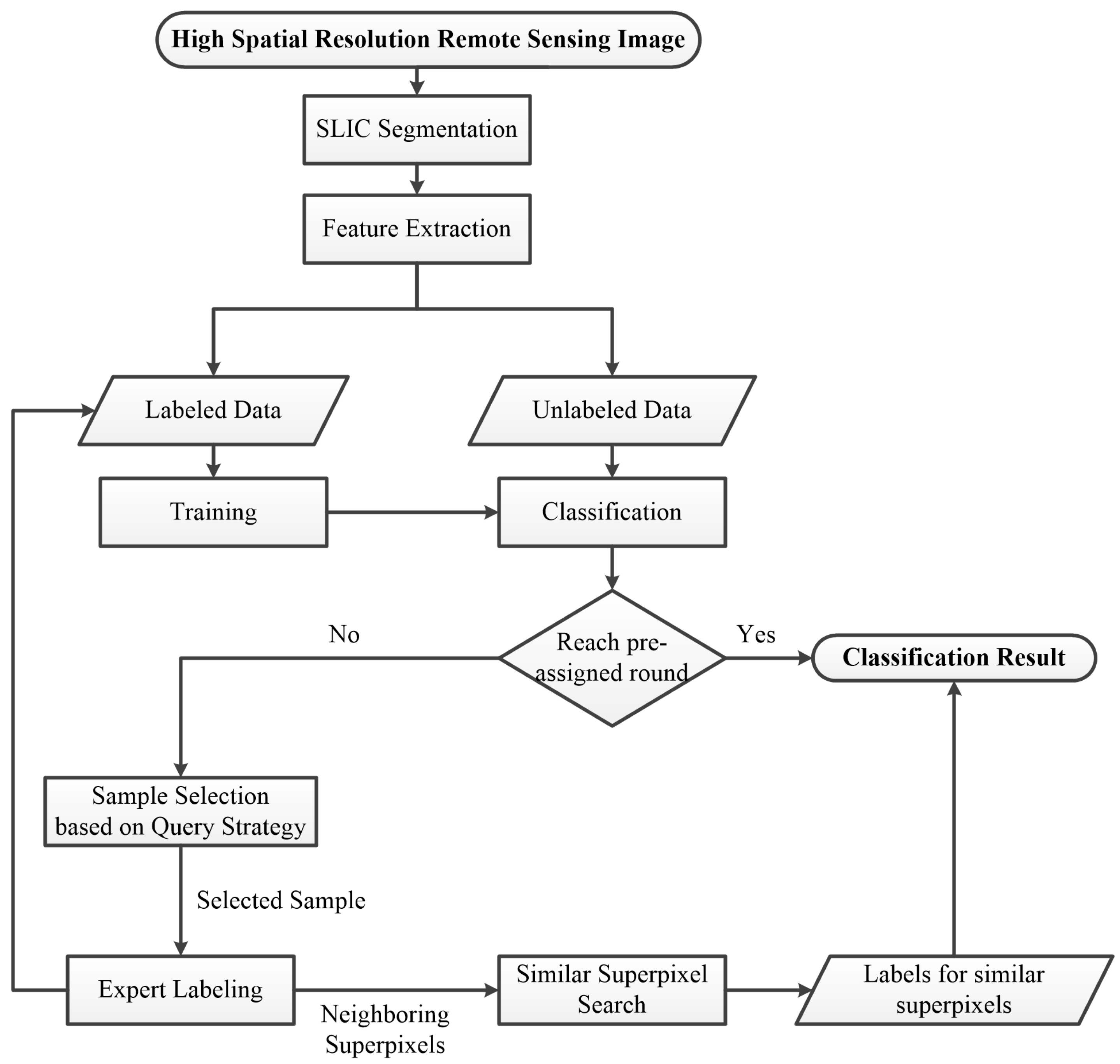

2. Materials and Methods

2.1. Superpixel-Based Feature Extraction

2.2. Active Learning Based on Similar Neighboring Superpixel Search and Labeling

2.2.1. Active Learning Query Strategies

2.2.2. Superpixel Community

2.2.3. Superpixel Similarity Calculation

2.2.4. Similar Neighboring Superpixels Search and Labeling under Spatial Constraint

3. Data Sets and Experimental Results

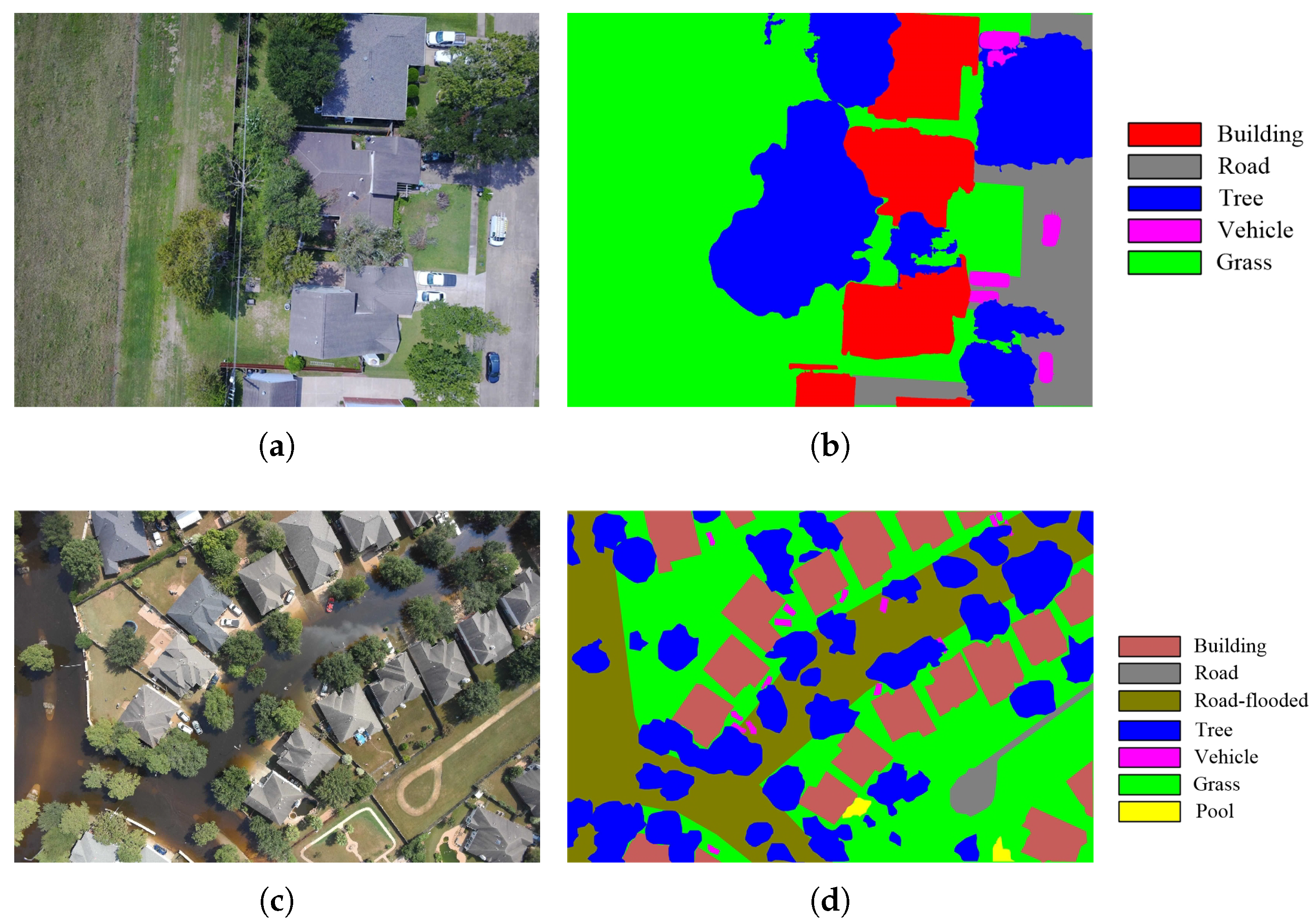

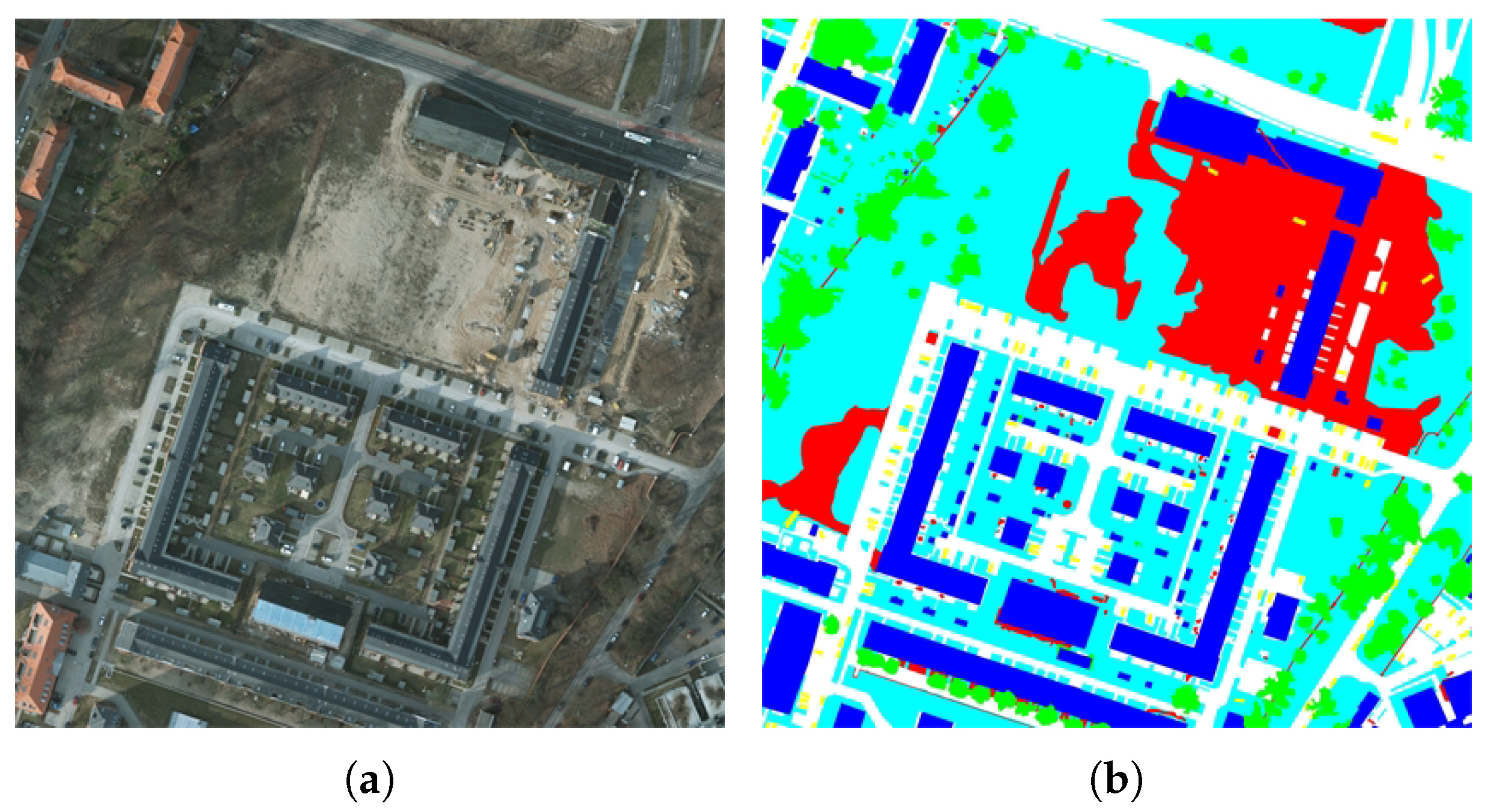

3.1. Data Sets

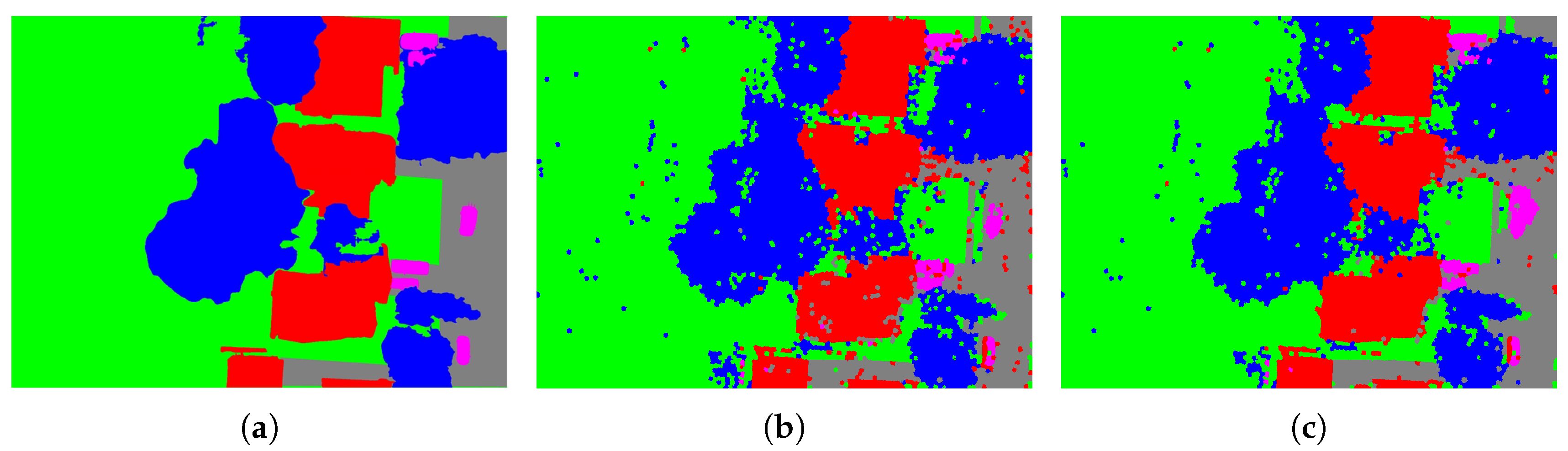

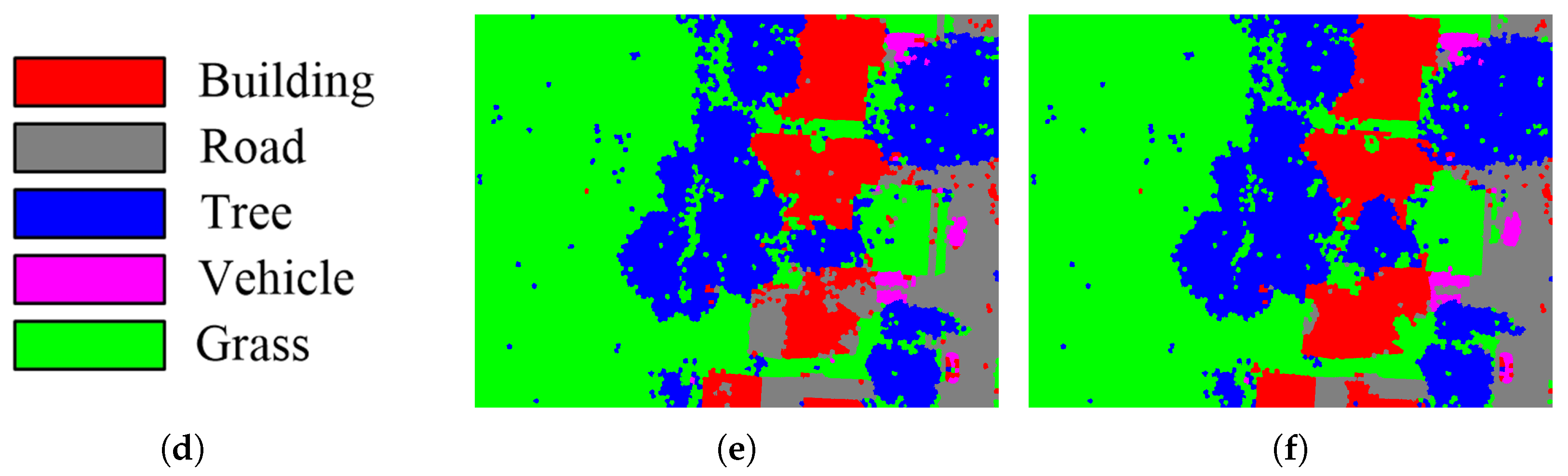

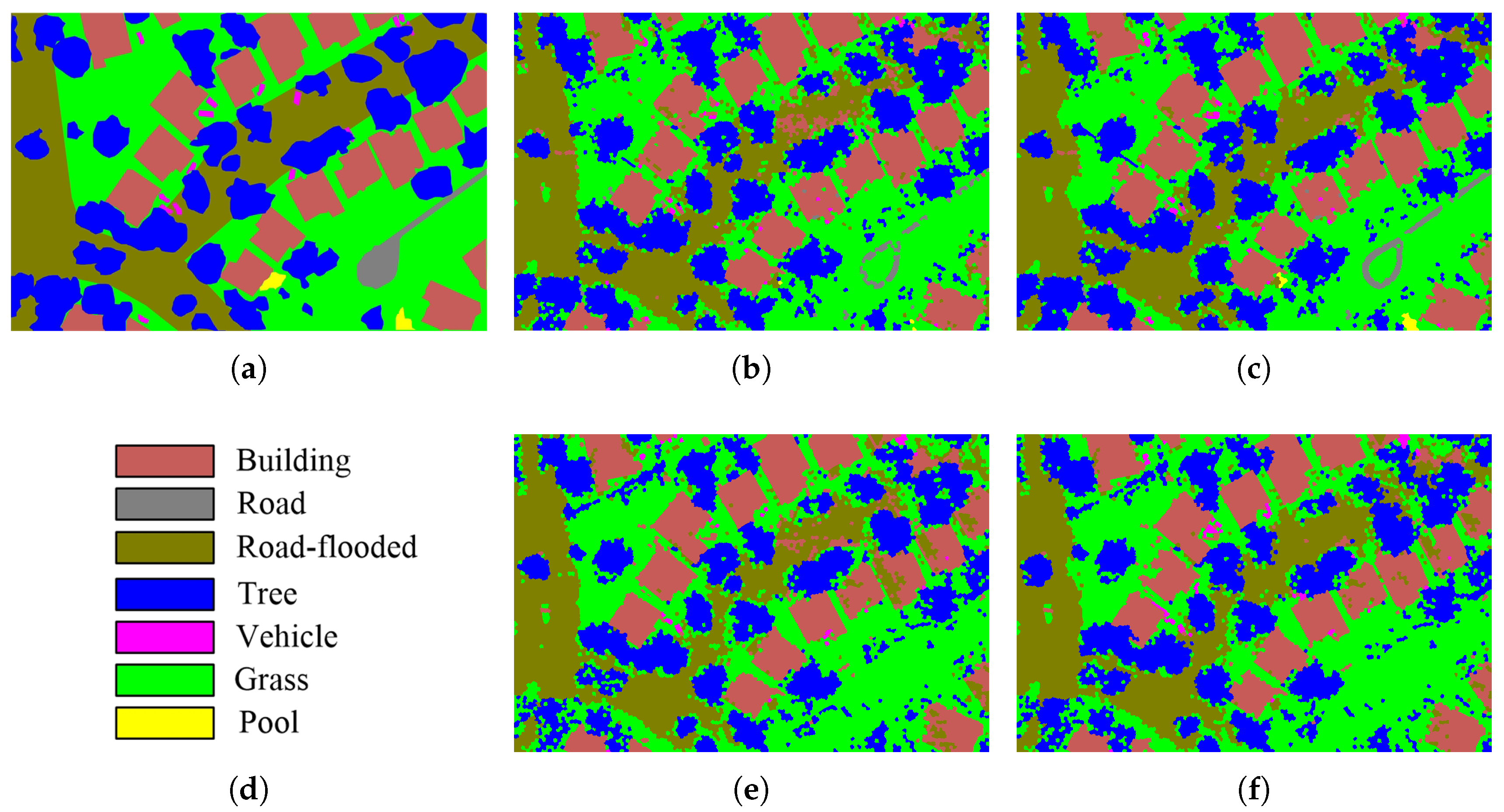

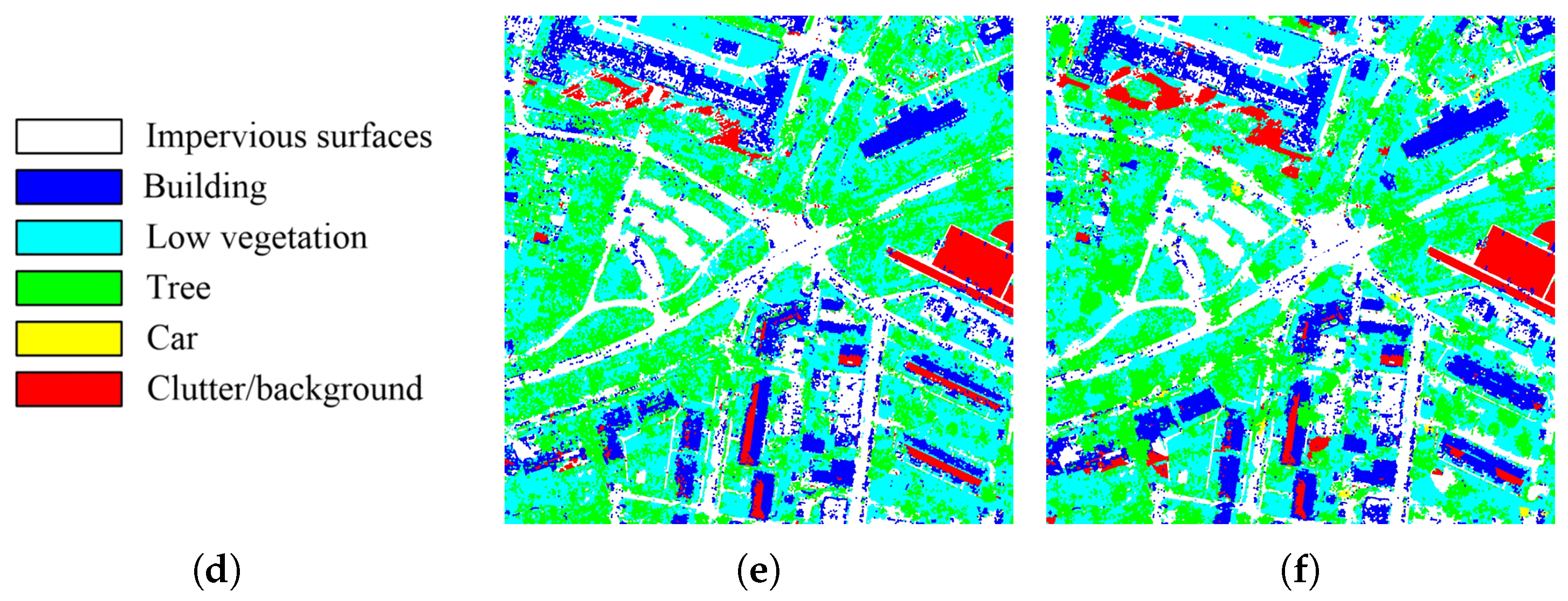

3.2. Experimental Results

4. Discussion

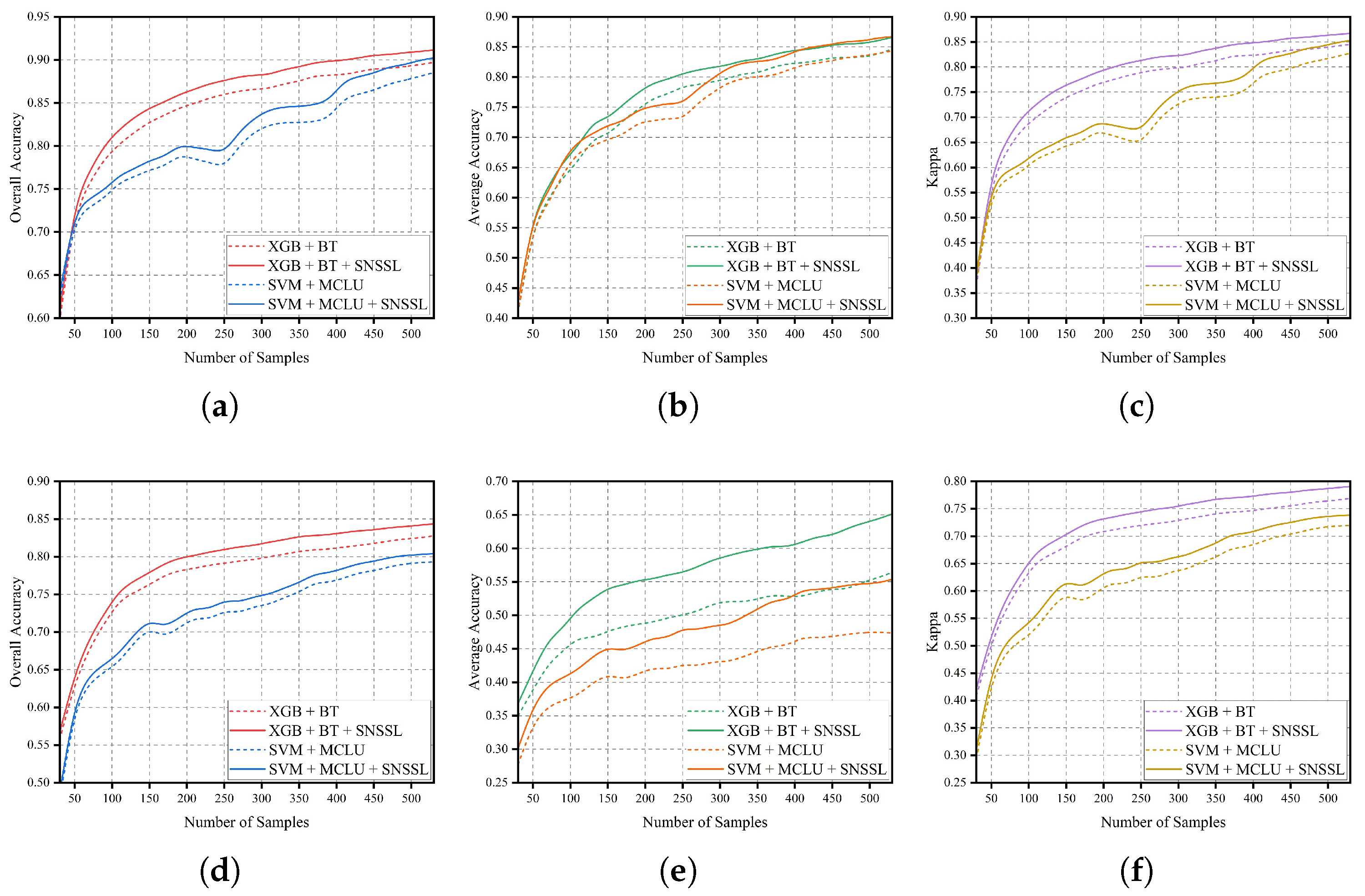

4.1. Effect of the Number of Samples on Classification Accuracy

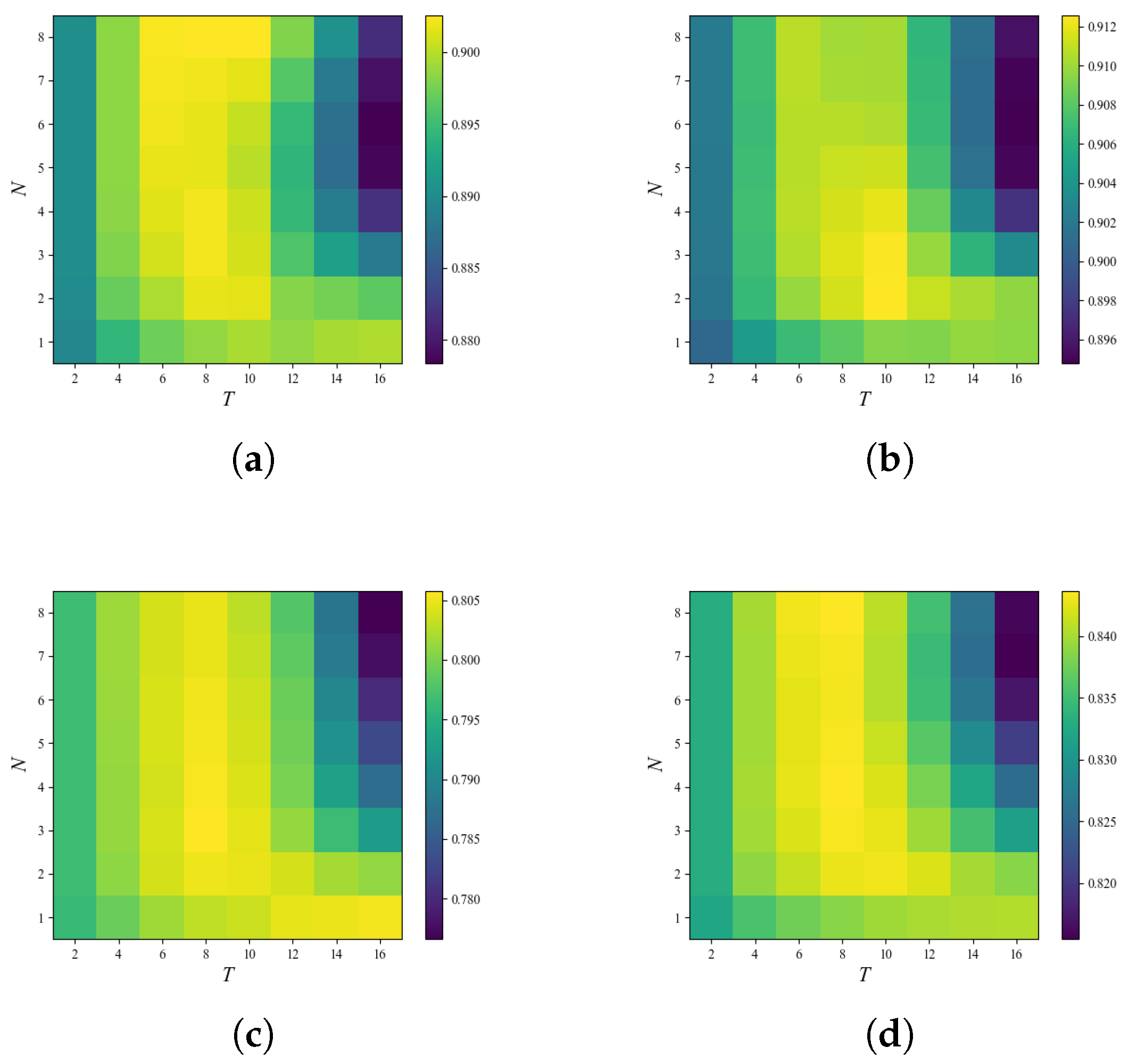

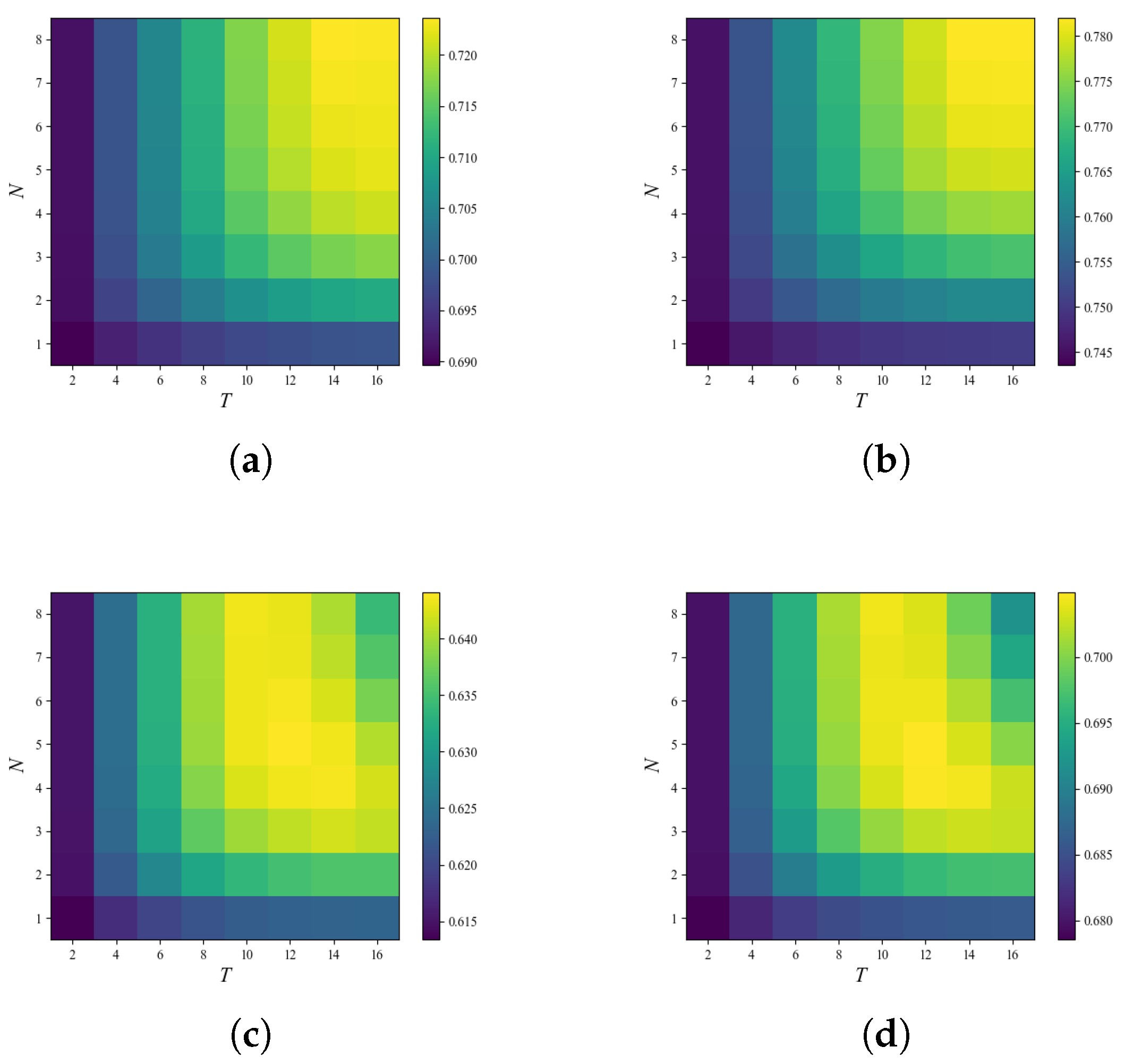

4.2. Effect of Parameter Settings on Classification Accuracy

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Class | FloodNet-6651: Pixel Number | FloodNet-6651: Percentage | FloodNet-7577: Pixel Number | FloodNet-7577: Percentage |
|---|---|---|---|---|
| Building | 1,731,044 | 14.4% | 2,668,245 | 18.9% |
| Road-flooded | - | - | 3,077,131 | 21.8% |
| Road | 1,201,785 | 10.0% | 203,765 | 1.4% |
| Tree | 3,048,555 | 25.4% | 3,421,016 | 24.3% |
| Vehicle | 156,470 | 1.3% | 80,009 | 0.6% |
| Grass | 5,862,146 | 48.9% | 4,606,927 | 32.7% |
| Pool | - | - | 49,531 | 0.4% |
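As a quick consistency check on the FloodNet-6651 columns, the percentages can be recomputed from the pixel counts. The sketch below is only illustrative: the variable names are not from the paper, and it assumes each percentage is taken relative to the sum of the listed classes.

```python
# Pixel counts copied from the FloodNet-6651 columns of the table above.
pixel_counts = {
    "Building": 1_731_044,
    "Road": 1_201_785,
    "Tree": 3_048_555,
    "Vehicle": 156_470,
    "Grass": 5_862_146,
}

# Assumption: percentages are relative to the sum of the listed classes.
total = sum(pixel_counts.values())

# Share of each class, rounded to one decimal place as in the table.
percentages = {
    name: round(100 * count / total, 1) for name, count in pixel_counts.items()
}

for name, pct in percentages.items():
    print(f"{name}: {pct}%")
```

Under that assumption the listed classes sum to 12,000,000 pixels and the recomputed shares agree with the tabulated percentages. The same check can be repeated for the other images by swapping in their pixel counts.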

| Class | Potsdam-2_10: Pixel Number | Potsdam-2_10: Percentage | Potsdam-3_10: Pixel Number | Potsdam-3_10: Percentage |
|---|---|---|---|---|
| Impervious surfaces | 4,944,599 | 13.7% | 8,338,198 | 23.2% |
| Building | 5,447,007 | 15.1% | 5,128,149 | 14.2% |
| Low vegetation | 15,182,061 | 42.2% | 11,428,326 | 31.7% |
| Tree | 2,679,388 | 7.4% | 8,780,245 | 24.4% |
| Car | 313,148 | 0.9% | 434,615 | 1.2% |
| Clutter/background | 7,433,797 | 20.6% | 1,890,467 | 5.3% |

| Class | XGB + BT | XGB + BT + SNSSL | SVM + MCLU | SVM + MCLU + SNSSL |
|---|---|---|---|---|
| Building | 90.38 | 91.45 | 87.03 | 88.95 |
| Road | 82.79 | 85.84 | 81.06 | 83.33 |
| Tree | 88.54 | 90.37 | 87.70 | 90.36 |
| Vehicle | 69.57 | 72.31 | 74.86 | 78.71 |
| Grass | 92.07 | 93.05 | 91.29 | 92.23 |
| OA (%) | 89.71 | 91.15 | 88.53 | 90.22 |
| AA (%) | 84.67 | 86.60 | 84.39 | 86.72 |
| Kappa × 100 | 84.54 | 86.70 | 82.77 | 85.28 |

| Class | XGB + BT | XGB + BT + SNSSL | SVM + MCLU | SVM + MCLU + SNSSL |
|---|---|---|---|---|
| Building | 90.82 | 92.48 | 91.80 | 92.08 |
| Road-flooded | 82.80 | 84.18 | 77.95 | 80.19 |
| Road | 11.58 | 33.10 | 0 | 6.51 |
| Tree | 85.94 | 85.93 | 76.62 | 77.31 |
| Vehicle | 19.78 | 25.83 | 5.14 | 24.27 |
| Grass | 80.63 | 82.26 | 80.20 | 81.35 |
| Pool | 23.40 | 52.20 | 0 | 25.86 |
| OA (%) | 82.74 | 84.37 | 79.49 | 80.55 |
| AA (%) | 56.42 | 65.14 | 47.39 | 55.37 |
| Kappa × 100 | 76.88 | 79.08 | 72.35 | 73.85 |

| Class | XGB + BT | XGB + BT + SNSSL | SVM + MCLU | SVM + MCLU + SNSSL |
|---|---|---|---|---|
| Impervious surfaces | 63.80 | 70.95 | 66.74 | 76.24 |
| Building | 73.37 | 80.28 | 77.33 | 78.96 |
| Low vegetation | 90.62 | 91.13 | 86.73 | 88.11 |
| Tree | 5.54 | 15.28 | 0 | 4.56 |
| Car | 15.68 | 19.78 | 2.10 | 8.18 |
| Clutter/background | 74.52 | 78.74 | 53.52 | 58.74 |
| OA (%) | 74.02 | 77.89 | 68.51 | 72.11 |
| AA (%) | 53.92 | 59.36 | 47.74 | 52.46 |
| Kappa × 100 | 62.92 | 68.70 | 55.47 | 60.77 |

| Class | XGB + BT | XGB + BT + SNSSL | SVM + MCLU | SVM + MCLU + SNSSL |
|---|---|---|---|---|
| Impervious surfaces | 56.72 | 67.52 | 45.17 | 66.57 |
| Building | 75.69 | 79.10 | 58.99 | 61.43 |
| Low vegetation | 69.83 | 71.44 | 74.70 | 77.74 |
| Tree | 53.72 | 59.75 | 35.55 | 39.19 |
| Car | 28.66 | 28.98 | 1.99 | 5.47 |
| Clutter/background | 78.33 | 77.56 | 76.23 | 76.76 |
| OA (%) | 68.20 | 69.70 | 61.60 | 63.57 |
| AA (%) | 60.49 | 64.06 | 50.01 | 53.29 |
| Kappa × 100 | 57.06 | 60.97 | 48.62 | 51.34 |
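The summary rows in the tables above (OA, AA, Kappa × 100) are standard confusion-matrix statistics. The following is a minimal sketch of how such values are usually computed. The confusion matrix is hypothetical and is not data from the paper, the function name `accuracy_metrics` is illustrative, and treating the per-class figure as row-wise accuracy (correct pixels divided by reference pixels of that class) is an assumption. The tables are consistent with AA being the mean of the per-class rows: for example, the first column of the first results table gives (90.38 + 82.79 + 88.54 + 69.57 + 92.07) / 5 = 84.67.

```python
def accuracy_metrics(cm):
    """Return (OA, AA, kappa) for a square confusion matrix.

    Assumption: rows are reference classes, columns are predicted classes.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    row_sums = [sum(row) for row in cm]
    col_sums = [sum(cm[i][j] for i in range(n)) for j in range(n)]
    diag = [cm[i][i] for i in range(n)]

    # Overall accuracy: fraction of all samples on the diagonal.
    oa = sum(diag) / total

    # Average accuracy: unweighted mean of the per-class accuracies.
    per_class = [diag[i] / row_sums[i] for i in range(n)]
    aa = sum(per_class) / n

    # Cohen's kappa: agreement corrected for chance agreement pe.
    pe = sum(row_sums[i] * col_sums[i] for i in range(n)) / total**2
    kappa = (oa - pe) / (1 - pe)
    return oa, aa, kappa


# Hypothetical 3-class confusion matrix, for illustration only.
cm = [
    [50, 2, 3],
    [4, 40, 6],
    [1, 5, 39],
]
oa, aa, kappa = accuracy_metrics(cm)
print(f"OA = {100 * oa:.2f}%, AA = {100 * aa:.2f}%, Kappa x 100 = {100 * kappa:.2f}")
```

Because AA weights every class equally while OA weights every pixel equally, rare classes with low accuracy (such as Road or Pool in the second results table) pull AA well below OA.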

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tang, J.; Tong, H.; Tong, F.; Zhang, Y.; Chen, W. Exploiting Superpixel-Based Contextual Information on Active Learning for High Spatial Resolution Remote Sensing Image Classification. Remote Sens. 2023, 15, 715. https://doi.org/10.3390/rs15030715
