Abstract

Three-dimensional (3D) object detection with an optical camera and light detection and ranging (LiDAR) is an essential task in mobile robotics and autonomous driving. Current 3D object detection methods are based on deep learning and are data-hungry. Recently, semi-supervised 3D object detection (SSOD-3D) has emerged as a technique to alleviate the shortage of labeled samples. However, learning 3D object detection from noisy pseudo labels remains a challenging problem for SSOD-3D. In this paper, to dynamically filter unreliable pseudo labels, we first introduce a self-paced SSOD-3D method, SPSL-3D. It exploits self-paced learning to automatically adjust the reliability weight of each pseudo label based on its 3D object detection loss. To evaluate the reliability of pseudo labels more accurately, we present a prior knowledge-based SPSL-3D (named PSPSL-3D) that enhances SPSL-3D with the semantic and structural information provided by a LiDAR-camera system. Extensive experimental results on the public KITTI dataset demonstrate the effectiveness of the proposed SPSL-3D and PSPSL-3D.

1. Introduction

Three-dimensional (3D) environment perception plays an important role in autonomous driving [1]. It analyzes real-time information about the surroundings to ensure traffic safety. Among 3D environment perception techniques, 3D object detection is an important means of avoiding vehicle collisions [2]. Its task is to identify the category and predict the 3D bounding box of each targeted object in the traffic scenario. In short, 3D object detection performance affects the traffic safety of intelligent driving [3]. As 3D object detection requires spatial information about the environment, light detection and ranging (LiDAR) is a suitable sensor because it can generate a 3D point cloud in real time [4]. Thanks to its ranging accuracy and stability, multi-beam mechanical LiDAR is the mainstream LiDAR sensor for environment perception [1,5]; for simplicity, it is referred to as LiDAR henceforth. Due to the limited rotation frequency and beam number, the vertical and horizontal angular resolutions are limited, which makes the LiDAR point cloud sparse and thus increases the difficulty of 3D object detection [1].

Current 3D object detection methods exploit deep learning and take LiDAR points as the main input to identify and localize 3D objects [6]. To reduce the negative impact of the sparse LiDAR point cloud, researchers have done much work on (i) detector architectures [7], (ii) supervised loss functions [4], and (iii) data augmentation [8], which has advanced fully supervised 3D object detection (FSOD-3D). When trained with sufficient labeled data, FSOD-3D can achieve 3D environment perception performance close to that of humans.

However, there is a conflict between the demand for 3D object detection performance and the cost of human annotation of LiDAR point clouds. Due to the sparsity of the LiDAR point cloud and the occlusion of 3D objects, annotating 3D objects is costly, so labeled data are insufficient. Therefore, it is essential to utilize unlabeled data to train the 3D object detector.

Semi-supervised 3D object detection (SSOD-3D) [9,10,11] has attracted much attention, because it improves the generalization ability of the 3D object detector with both labeled samples and large numbers of unlabeled samples recorded in various traffic scenarios. From the viewpoint of optimization, SSOD-3D can be regarded as a problem that alternately optimizes the weights of the 3D object detector and the pseudo labels of the unlabeled dataset. For one unlabeled sample (i.e., a LiDAR point cloud), its pseudo label consists of the 3D bounding boxes of the targeted objects (i.e., car, pedestrian, cyclist) predicted by the detector itself. This means that the capacity of the detector and the quality of its pseudo labels are coupled. To obtain the optimal detector weights, it is essential to reduce the false positives (FP) and false negatives (FN) in the pseudo labels. To improve pseudo-label quality, one common approach is to use a label filter to remove incorrect objects from the pseudo labels. Sohn et al. [12] employed a confidence-based filter to remove pseudo labels whose classification confidence score is below a threshold. Wang et al. [11] extended this filter [12] in their SSOD-3D architecture with both a confidence threshold and a 3D intersection-over-union (IoU) threshold. However, in practical applications, the optimal thresholds differ with the detector architecture, the training dataset, and even the object category. Searching for the optimal filter thresholds takes a lot of time, which is inefficient in actual applications. Thus, designing a more effective and convenient SSOD-3D method remains a challenging problem.
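The threshold-based label filters described above can be sketched as follows. This is a minimal illustration, not the code of [11] or [12]: the `PseudoObject` record, its `iou_score` field (assumed to come from some IoU-estimation head), and the threshold values in the usage example are all hypothetical. The sketch makes the practical drawback visible: every category needs its own hand-tuned thresholds.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class PseudoObject:
    """One predicted object in a pseudo label (hypothetical record)."""
    category: str      # "car", "pedestrian" or "cyclist"
    confidence: float  # classification confidence score in [0, 1]
    iou_score: float   # assumed estimate of the 3D IoU with the unknown GT box


def filter_pseudo_labels(objects: List[PseudoObject],
                         conf_thresh: Dict[str, float],
                         iou_thresh: Optional[Dict[str, float]] = None) -> List[PseudoObject]:
    """Keep the objects that pass per-category thresholds.

    With iou_thresh=None this is a confidence-only filter; passing iou_thresh
    adds a second, IoU-based criterion on top of the confidence test.
    """
    kept = []
    for obj in objects:
        if obj.confidence < conf_thresh[obj.category]:
            continue  # low classification confidence: likely a false positive
        if iou_thresh is not None and obj.iou_score < iou_thresh[obj.category]:
            continue  # poorly localized box
        kept.append(obj)
    return kept


if __name__ == "__main__":
    # Hypothetical thresholds; in practice they must be re-tuned per detector, dataset and category.
    conf = {"car": 0.9, "pedestrian": 0.8, "cyclist": 0.6}
    iou = {"car": 0.7, "pedestrian": 0.5, "cyclist": 0.5}
    preds = [PseudoObject("car", 0.95, 0.80),
             PseudoObject("car", 0.60, 0.75),
             PseudoObject("cyclist", 0.70, 0.40)]
    print(len(filter_pseudo_labels(preds, conf)))       # -> 2 (confidence only)
    print(len(filter_pseudo_labels(preds, conf, iou)))  # -> 1 (confidence + IoU)
```

Adding the IoU criterion removes the cyclist that passed the confidence test, which is exactly the kind of behavior that shifts whenever the thresholds are changed.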

In intelligent driving, most self-driving cars are equipped with LiDAR and an optical camera; such a sensor system is called a LiDAR-camera system. To remedy the sparsity of the LiDAR point cloud, researchers have studied 3D object detection on LiDAR-camera systems [13,14,15,16,17]: the RGB image provides dense texture features, improving the classification accuracy and confidence of the 3D detection results. Thus, it is reasonable for SSOD-3D to exploit the prior knowledge provided by LiDAR-camera systems.

Motivated by this, we present a novel SSOD-3D method for a LiDAR-camera system. First, to train a 3D object detector with reliable pseudo labels, we introduce a self-paced semi-supervised learning-based 3D object detection (SPSL-3D) framework. It exploits the theory of self-paced learning (SPL) [18] to adaptively estimate the reliability weight of each pseudo label from its 3D object detection loss. Then, noting that the prior knowledge in the LiDAR point cloud and RGB image helps evaluate the reliability of pseudo labels, we propose a prior knowledge-based SPSL-3D (named PSPSL-3D) framework. Experiments are conducted on the autonomous driving dataset KITTI [19]. With different numbers of labeled training samples, both comparison results and ablation studies demonstrate the effectiveness of the SPSL-3D and PSPSL-3D frameworks, showing that they benefit SSOD-3D on a LiDAR-camera system. The remainder of this paper is organized as follows. Section 2 reviews related work on FSOD-3D and SSOD-3D. Section 3 presents the proposed SPSL-3D and PSPSL-3D methods. Section 4 describes the experimental configuration and analyzes the results. Finally, Section 5 concludes this work.

2. Related Works

2.1. Fully Supervised 3D Object Detection

To achieve high environment perception performance in autonomous driving, LiDAR-based FSOD-3D has been widely studied in recent years. Its architecture commonly has three modules [6]: (i) data representation, (ii) backbone network, and (iii) detection head. LiDAR data mainly have three representations: point-based [4], pillar-based [20], and voxel-based [21]. The choice of backbone network depends on the data representation. Point-based features are extracted with PointNet [22], PointNet++ [23], or a graph neural network (GNN) [24]. As the pillar feature map can be regarded as a pseudo image, a 2D convolutional neural network (CNN) can be used. To deal with the sparsity of LiDAR voxels, a 3D sparse convolutional neural network (Spconv) [25] is exploited for feature extraction. Detection heads can be classified as anchor-based [26] or anchor-free [27]. An anchor-based 3D detector first generates 3D bounding boxes with pre-defined sizes for the different categories (called anchors) placed uniformly on the ground, then predicts the size, position shift, and confidence score of each anchor, and removes the anchors with low confidence scores. After that, to remove redundant 3D bounding boxes, the 3D detection results are obtained by applying non-maximum suppression (NMS) to the remaining shifted anchors. An anchor-free 3D detector usually first segments the foreground points from the raw point cloud [28], and then predicts a 3D bounding box from each foreground LiDAR point with a fully connected (FC) layer. Then, NMS is used to remove bounding boxes with high overlap.
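The post-processing step described above (drop low-confidence candidates, then suppress overlapping boxes with NMS) can be sketched as follows. For brevity, the sketch assumes axis-aligned bird's-eye-view boxes `(x_min, y_min, x_max, y_max)`; actual 3D detectors compute IoU between rotated boxes, and the default threshold values are illustrative only.

```python
from typing import List, Sequence, Tuple

BevBox = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), axis-aligned (assumption)


def iou_bev(a: BevBox, b: BevBox) -> float:
    """Intersection-over-union of two axis-aligned BEV boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[BevBox], scores: Sequence[float],
        score_thresh: float = 0.1, iou_thresh: float = 0.5) -> List[int]:
    """Return the indices of kept boxes, highest score first."""
    # 1) Remove candidates with low confidence scores, then sort by score.
    order = sorted((i for i in range(len(boxes)) if scores[i] >= score_thresh),
                   key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    # 2) Greedily keep the best box and drop remaining boxes that overlap it too much.
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou_bev(boxes[best], boxes[i]) < iou_thresh]
    return keep


if __name__ == "__main__":
    boxes = [(0.0, 0.0, 2.0, 2.0), (0.1, 0.0, 2.1, 2.0), (5.0, 5.0, 7.0, 7.0), (10.0, 10.0, 11.0, 11.0)]
    scores = [0.9, 0.8, 0.7, 0.05]
    print(nms(boxes, scores))  # -> [0, 2]
```

Box 1 is suppressed because it overlaps box 0 heavily, and box 3 is discarded by the confidence test before NMS runs.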

Many recent works have advanced FSOD-3D. Zheng et al. [29] trained a baseline 3D detector with knowledge distillation: the teacher detector generates pseudo labels and supervises the student detector with shape-aware data augmentation. Schinagl et al. [30] analyzed the importance of each LiDAR point for 3D object detection by means of Monte Carlo sampling. Man et al. [31] noticed that LiDAR can obtain multiple return signals from a single laser pulse and used this mechanism to encode meaningful features for the localization and classification of 3D proposals. Yin et al. [27] proposed a lightweight anchor-free 3D detector that regresses heat maps of 3D bounding box parameters; in the training stage, it does not need target object alignment, thus saving a lot of time. Based on 3D Spconv [25], Chen et al. [32] presented focal sparse convolution to dynamically select the receptive field of voxel features for convolution computation, which can be extended to multi-sensor feature fusion. As for FSOD-3D on a LiDAR-camera system, Wu et al. [33] used multi-modal depth completion to generate a dense colored point cloud of 3D proposals for accurate proposal refinement. Li et al. [34] presented a multi-sensor cross-attention module that uses a LiDAR point as the query and the coordinates and RGB values of its neighboring projected pixels as the keys and values for fusion feature computation. Piergiovanni et al. [35] studied a general 4D detection framework for time series of both RGB images and LiDAR point clouds. To deal with the sparsity of the LiDAR point cloud, Yin et al. [36] generated 3D virtual points of targeted objects with the guidance of instance segmentation results predicted from the RGB image.

2.2. Semi-Supervised 3D Object Detection

Semi-supervised learning (SSL) is a classical problem in machine learning and deep learning [37]. Compared with the booming development of FSOD-3D and the rapid development of SSOD-2D, relatively few works on SSOD-3D have been published. However, it is a challenging and meaningful problem for both industry and academia. First, unlike FSOD-3D, SSOD-3D needs to consider both how to generate reliable pseudo or weak labels from unlabeled point clouds and how to exploit pseudo labels of uncertain quality for 3D detector training. Second, classical SSL frameworks are difficult to use directly in SSOD-3D. For labeled and unlabeled data, traditional SSL theory [37] emphasizes the similarity between samples and constructs a manifold regularization term for SSL optimization. However, in SSOD-3D, labeled and unlabeled point clouds are collected in different places and have different data distributions, so their similarity is difficult to measure. Third, as the sparse and unstructured LiDAR point cloud contains fewer texture features than the dense and structured RGB image, it is more difficult for SSOD-3D than for SSOD-2D to extract salient prior knowledge of the targeted object.

Some insightful SSOD-3D works are discussed hereafter. Tang and Lee [9] exploited weak labels (i.e., 2D bounding boxes in the RGB image) of unlabeled 3D point clouds to train a 3D detector. The weak labels are generated by a 2D object detector. To compute the 3D detection loss with a weak label, the predicted 3D bounding box is projected onto the image plane, so that the 3D detection loss is converted into a 2D detection loss. This method requires both RGB images and point clouds, and it works for both RGB-D cameras and LiDAR-camera systems. Xu et al. [38] adaptively filtered incorrect 3D objects in the unlabeled data with a statistical, adaptive confidence threshold, and added the remaining predicted 3D objects into the 3D object database used for 3D object detector training in the next iteration.

Mean teacher [39] is a common SSL paradigm in SSOD-3D. It consists of a teacher detector and a student detector. For an unlabeled sample, its pseudo label is generated by the teacher detector and used to supervise the student detector. Zhao et al. [10] were the first to utilize the mean teacher framework [39] for SSOD-3D. For each unlabeled sample, they generated its pseudo label with the teacher detector and constructed a consistency loss to minimize the difference between the pseudo label and the result predicted by the student detector on an augmented version of the sample. After that, the parameters of the teacher detector were updated from the trained student detector via an exponential moving average (EMA). Several later works [11,40,41] have attempted to improve on this work [10]. Wang et al. [11] focused on removing incorrect annotations from the predicted labels with multiple thresholds on object confidence, class, and 3D IoU. Wang et al. [40] attempted to generate accurate predicted labels with temporal smoothing: the teacher 3D detector predicted multi-frame labels from multi-frame LiDAR data, and a temporal GNN then generated accurate labels for the current frame from these multi-frame labels. Park et al. [41] exploited a multi-task (i.e., 3D and 2D object detection) teacher detector to establish a multi-task guided consistency loss for supervision; it works on a LiDAR-camera system. Sautier et al. [42] presented a self-supervised distillation method that pre-trains the backbone network of a 3D object detector with the guidance of super-pixel segmentation results on the RGB image.
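The EMA update of the teacher detector can be written as θ_teacher ← m·θ_teacher + (1 − m)·θ_student. The sketch below applies it to parameters stored as plain Python lists so that it runs without a deep learning framework; the momentum value 0.999 is an assumed, commonly used default rather than a value given in [10]. In PyTorch, the same update would typically be performed in place on the teacher's tensors under `torch.no_grad()`.

```python
from typing import Dict, List

Params = Dict[str, List[float]]  # parameter name -> flattened values (simplified stand-in for tensors)


def ema_update(teacher: Params, student: Params, momentum: float = 0.999) -> Params:
    """Return new teacher parameters: m * teacher + (1 - m) * student, element-wise."""
    if teacher.keys() != student.keys():
        raise ValueError("teacher and student must have the same parameter names")
    return {
        name: [momentum * t + (1.0 - momentum) * s
               for t, s in zip(teacher[name], student[name])]
        for name in teacher
    }


if __name__ == "__main__":
    teacher = {"w": [0.0, 1.0]}
    student = {"w": [1.0, 1.0]}
    for _ in range(3):  # the teacher drifts slowly toward the (here frozen) student
        teacher = ema_update(teacher, student, momentum=0.9)
    print([round(v, 3) for v in teacher["w"]])  # -> [0.271, 1.0]
```

A momentum close to 1 keeps the teacher a smoothed, slowly changing copy of the student, which tends to make its pseudo labels more stable across iterations.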

Some researchers consider weak labels convenient and time-efficient to annotate, and study weakly supervised 3D object detection (WSOD-3D). Meng et al. [43] proposed a fast weak 3D annotation procedure that generates a 3D cylindrical bounding box by clicking the object center in an RGB image. With the cylindrical labels, they converted SSOD-3D into WSOD-3D and provided a two-stage training scheme for the 3D object detector. Qin et al. [44] designed an unsupervised 3D proposal generation method that obtains 3D bounding boxes with anchor sizes using the normalized point cloud density. Peng et al. [45] presented a WSOD-3D method for a monocular camera, which uses the alignment constraint between the predicted 3D proposal and LiDAR points for weak supervision. Xu et al. [46] addressed WSOD-3D under the condition that position-level annotations are known: a virtual scene with GT annotations is constructed from the known object centers, and samples from the real scene with weak labels and from the virtual scene with GT labels are both used for detector training.

2.3. Discussions

From the above analysis, most current SSOD-3D studies emphasize improving the quality of pseudo labels. Two common schemes are used: (i) label filters [10,11,38,41] and (ii) temporal smoothing [40]. However, both leave room for improvement. The label filter scheme is not time-efficient, because the optimal filter thresholds must be searched for. The temporal smoothing scheme needs multi-frame LiDAR point clouds with accurate sensor pose information, a requirement that is difficult to satisfy in some actual situations. Although weak labels (i.e., 2D bounding boxes [9,45], 3D cylindrical bounding boxes [43], and 3D center positions [46]) are easier to annotate than standard labels (i.e., 3D bounding boxes), annotating the large amount of unlabeled data in autonomous driving still costs considerable time and human effort. Therefore, an effective SSOD-3D method is still required.

3. Proposed Semi-Supervised 3D Object Detection

3.1. Problem Statement

SSOD-3D is a training framework that learns a baseline detector from a labeled dataset and an unlabeled dataset. The baseline detector can be an arbitrary LiDAR-based 3D object detector. Given the parameter set of the baseline detector, SSOD-3D aims to learn these parameters so that the detector has higher generalization ability.

Some notation is introduced here. Each training sample carries a binary flag indicating whether its label is ground truth (GT), i.e., whether the sample is labeled or not. The i-th sample contains a LiDAR point cloud with a given number of points, each holding its 3D position and reflected intensity. The 3D object label of the sample lists its objects, and the j-th object is represented by the parameter vector of its 3D bounding box [28]. This label is a GT label for a labeled sample and a pseudo label for an unlabeled sample. The output of the 3D object detector, given the point cloud as input and the current detector weights, serves as the pseudo label of an unlabeled sample.
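The notation above can be mirrored by a small data structure. This is an illustrative sketch: the field names, the `(x, y, z, l, w, h, yaw)` ordering of the 7-parameter box vector, and the `assign_pseudo_label` helper are assumptions made for readability, not definitions from the paper or from OpenPCDet.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Point = Tuple[float, float, float, float]  # (x, y, z, reflected intensity)
Box3D = Tuple[float, float, float, float, float, float, float]  # assumed (x, y, z, l, w, h, yaw)


@dataclass
class TrainingSample:
    points: List[Point]                                  # LiDAR point cloud
    boxes: List[Box3D] = field(default_factory=list)     # one 3D box per object
    categories: List[str] = field(default_factory=list)  # "car", "pedestrian" or "cyclist"
    is_gt: bool = False                                  # True: GT label (labeled); False: pseudo label

    @property
    def num_points(self) -> int:
        return len(self.points)

    @property
    def num_objects(self) -> int:
        return len(self.boxes)


def assign_pseudo_label(sample: TrainingSample, boxes: List[Box3D], categories: List[str]) -> None:
    """Store detector predictions as the pseudo label of an unlabeled sample (hypothetical helper)."""
    if sample.is_gt:
        raise ValueError("labeled samples keep their GT annotation")
    if len(boxes) != len(categories):
        raise ValueError("one category is required per box")
    sample.boxes = list(boxes)
    sample.categories = list(categories)
```

Keeping the GT flag on the sample makes it explicit that predictions never overwrite a GT annotation.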

3.2. Previous Semi-Supervised 3D Object Detection

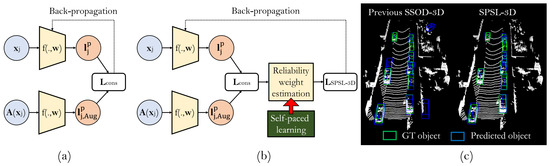

Before illustrating the proposed SPSL-3D, we briefly revisit the previous SSOD-3D approach [10], whose pipeline is presented in Figure 1a. For a 3D object detector with high generalization ability, the predictions on an unlabeled sample and on its augmented version are consistent and close to the GT labels. Based on this analysis, and because unlabeled samples have no annotation, a consistency loss was proposed to minimize the difference between the labels predicted from the unlabeled sample and from its augmented version. The augmentation is an affine transformation of the point cloud that contains scaling, X/Y-axis flipping, and Z-axis rotation. The consistency loss is the core of this scheme [10], because it updates the weights of the 3D object detector via back-propagation. Current SSOD-3D methods [10,11,38,41,47] optimize the detector weights by minimizing the following function:

where the detection term is the common 3D object detection loss of each detected object [20,28], represented as a vector whose elements are the detection losses of the individual objects. The inverse affine transformation maps the prediction on the augmented sample back to the same reference coordinate system as the pseudo label. The relation between previous SSOD-3D methods and traditional SSL theory is further discussed in Appendix A.1.
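The affine augmentation and its inverse, which maps the prediction on the augmented sample back to the coordinate system of the pseudo label, can be sketched for one box as follows. The sketch assumes an `(x, y, z, l, w, h, yaw)` box encoding with the yaw angle about the Z axis, applies flipping, then Z-axis rotation, then scaling, and uses a plain per-object L1 residual as a hypothetical stand-in for the detection loss of the consistency term.

```python
import math
from typing import Tuple

Box3D = Tuple[float, float, float, float, float, float, float]  # assumed (x, y, z, l, w, h, yaw)


def wrap_angle(a: float) -> float:
    """Wrap an angle to [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


def flip_x(b: Box3D) -> Box3D:
    """Mirror across the X axis (y -> -y)."""
    x, y, z, l, w, h, yaw = b
    return (x, -y, z, l, w, h, wrap_angle(-yaw))


def flip_y(b: Box3D) -> Box3D:
    """Mirror across the Y axis (x -> -x)."""
    x, y, z, l, w, h, yaw = b
    return (-x, y, z, l, w, h, wrap_angle(math.pi - yaw))


def rotate_z(b: Box3D, angle: float) -> Box3D:
    x, y, z, l, w, h, yaw = b
    c, s = math.cos(angle), math.sin(angle)
    return (c * x - s * y, s * x + c * y, z, l, w, h, wrap_angle(yaw + angle))


def scale(b: Box3D, factor: float) -> Box3D:
    x, y, z, l, w, h, yaw = b
    return (x * factor, y * factor, z * factor, l * factor, w * factor, h * factor, yaw)


def augment(b: Box3D, fx: bool, fy: bool, angle: float, factor: float) -> Box3D:
    if fx:
        b = flip_x(b)
    if fy:
        b = flip_y(b)
    return scale(rotate_z(b, angle), factor)


def inverse_augment(b: Box3D, fx: bool, fy: bool, angle: float, factor: float) -> Box3D:
    """Undo augment(): inverse steps in reverse order (each flip is its own inverse)."""
    b = rotate_z(scale(b, 1.0 / factor), -angle)
    if fy:
        b = flip_y(b)
    if fx:
        b = flip_x(b)
    return b


def l1_residual(pred_on_aug: Box3D, pseudo: Box3D,
                fx: bool, fy: bool, angle: float, factor: float) -> float:
    """Hypothetical per-object consistency term: L1 distance after mapping the prediction back."""
    back = inverse_augment(pred_on_aug, fx, fy, angle, factor)
    diff = sum(abs(p - q) for p, q in zip(back[:6], pseudo[:6]))
    return diff + abs(wrap_angle(back[6] - pseudo[6]))


if __name__ == "__main__":
    pseudo = (10.0, -3.0, -1.0, 4.0, 1.8, 1.5, 0.3)
    params = (True, True, 0.4, 1.05)
    # A perfectly consistent detector predicts exactly the augmented box on the augmented cloud.
    print(round(l1_residual(augment(pseudo, *params), pseudo, *params), 6))
```

Comparing in the pseudo label's frame, rather than in the augmented frame, means one residual definition works for every random augmentation.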

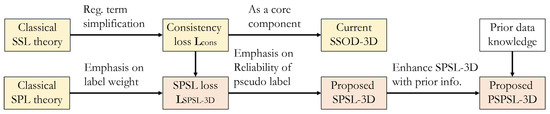

Figure 1.

Difference between the previous SSOD-3D [10] and the proposed SPSL-3D. (a) Consistency loss [10]. (b) Loss in SPSL-3D, which emphasizes the quality of the pseudo label by adjusting the reliability weight of each object in it, thus enhancing the generalization ability of the baseline detector. (c) Improvement of SPSL-3D in 3D object detection.

3.3. Self-Paced Semi-Supervised Learning-Based 3D Object Detection

The main challenge of the consistency loss in Equation (2) is that the quality of the pseudo label is uncertain. As the pseudo label is predicted by the detector itself, if it is noisy or even incorrect, the baseline detector trained with it tends to detect 3D objects with low localization accuracy. To deal with this problem, we need to evaluate the reliability weight of the pseudo label, a vector whose elements are the reliability scores of the objects in the pseudo label. In the training stage, unreliable pseudo labels are filtered out with this weight. However, how to determine it is a crucial problem.

A simple idea is to adjust the reliability weight under the guidance of the consistency loss of the pseudo label: the larger the consistency loss of an object, the less reliable its pseudo label. Based on this idea, we exploit the theory of SPL [18] to construct the mathematical relation between the reliability weight and the consistency loss, and propose a novel SSOD-3D framework, SPSL-3D. Its pipeline is presented in Figure 1b. SPSL-3D optimizes the detector weights and the reliability weights by minimizing the following function:

where the age parameter controls the learning pace [48]. Let the current epoch and the maximum training epoch be e and E, respectively; the age parameter is computed from them in Equation (4). Furthermore, the self-paced regularization term [48] for the unlabeled sample is defined as:

However, in deep learning, the detector contains a large number of parameters, so it is difficult to optimize Equation (3) directly. As modern deep neural networks (DNNs) are trained with mini-batches of data [49], the loss of SPSL-3D is simplified to the following per-batch form:

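An alternating optimization scheme is used to optimize the detector weights and the reliability weights. With the detector weights fixed, the reliability weights are optimized: setting the partial derivative of the loss with respect to them to zero gives the closed-form solution in Equation (7), where the subscript k denotes the k-th element of a vector L. With the reliability weights fixed, the detector weights are optimized in Equation (6) with a deep learning optimizer (e.g., Adam or SGD).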

An intuitive explanation of Equation (7) is given here. For the k-th object in a sample with a pseudo label, if its loss is larger than the age parameter, it is regarded as an unreliable label and is not used. If its loss is smaller than the age parameter, SPSL-3D evaluates its reliability score from its consistency loss. In this way, SPSL-3D emphasizes the most reliable pseudo labels in the training stage, enhancing the robustness of the baseline detector. As epoch e grows, the age parameter increases (see Equation (4)), so SPSL-3D gradually admits more unlabeled samples into training, thus improving the generalization ability of the baseline detector. This improvement is illustrated in Figure 1c.
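The self-paced weighting described above can be illustrated with a minimal sketch. It assumes the common linear self-paced regularizer λ(v²/2 − v) with v in [0, 1], for which the minimizer of v·L + λ(v²/2 − v) is v* = max(0, 1 − L/λ): objects whose loss reaches the age parameter λ get zero weight, and objects with smaller losses get a graded weight. It also assumes a linear age schedule. Both are illustrative choices and may differ from the forms in Equations (4), (5) and (7). In a real training loop, the weights would be recomputed with the detector frozen, and the weighted loss would then be minimized by Adam or SGD with the weights frozen.

```python
from typing import List


def age_schedule(epoch: int, max_epoch: int, age_min: float, age_max: float) -> float:
    """Hypothetical linear schedule: the age parameter grows from age_min to age_max."""
    return age_min + (age_max - age_min) * epoch / max_epoch


def spl_weights(losses: List[float], age: float) -> List[float]:
    """Closed-form reliability weights for the assumed linear regularizer.

    Minimizes v * L + age * (v**2 / 2 - v) over v in [0, 1] for each object:
    v* = max(0, 1 - L / age), i.e. zero once L >= age.
    """
    return [max(0.0, 1.0 - loss / age) for loss in losses]


def weighted_unlabeled_loss(losses: List[float], weights: List[float]) -> float:
    """Loss used to update the detector while the weights are held fixed."""
    return sum(v * loss for v, loss in zip(weights, losses))


if __name__ == "__main__":
    per_object_losses = [0.5, 1.0, 3.0]  # hypothetical consistency losses of three pseudo-labeled objects
    for epoch in (0, 10, 20):
        age = age_schedule(epoch, max_epoch=20, age_min=2.0, age_max=4.0)
        v = spl_weights(per_object_losses, age)
        print(epoch, [round(x, 3) for x in v], round(weighted_unlabeled_loss(per_object_losses, v), 3))
```

With these numbers, the object whose loss is 3.0 receives a nonzero weight only once the age parameter exceeds 3.0, which mirrors the gradual admission of harder pseudo labels as training proceeds.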

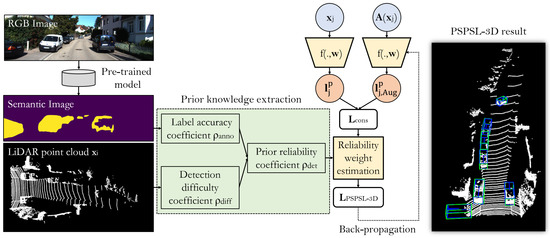

3.4. Improving SPSL-3D with Prior Knowledge

From Equation (7), SPSL-3D can adaptively adjust the reliability weight of a pseudo label using its 3D object detection loss. In fact, the reliability weight of a pseudo label depends not only on the consistency loss but also on the prior knowledge in the LiDAR point cloud and RGB image provided by the LiDAR-camera system: if the LiDAR point cloud or image feature of a predicted object is not salient, its pseudo label is not reliable. Based on this analysis, to further enhance the performance of SPSL-3D with information from the LiDAR point cloud and RGB image, we propose a prior knowledge-based SPSL-3D named PSPSL-3D, which is presented in Figure 2.

Figure 2.

Framework of the proposed PSPSL-3D. It can evaluate the reliability weight of a pseudo label from prior knowledge extracted from the LiDAR point cloud and RGB image.

In PSPSL-3D, we represent the reliability of the pseudo label with the LiDAR point cloud and RGB image. For the k-th object in the pseudo label, a prior reliability coefficient is modeled from two components: a detection difficulty coefficient and a label accuracy coefficient. This coefficient is designed to constrain the reliability weight with both the 3D detection loss and prior knowledge from the RGB image and LiDAR point cloud.

Due to the LiDAR sensing mechanism, the detection difficulty coefficient mainly depends on the occlusion and resolution of the object in the LiDAR point cloud. However, because 3D objects in real traffic are complex, it is difficult to model the relationship between the occlusion, resolution, and detection difficulty of an object. An approximate solution is provided here. Following [50], the number of LiDAR points inside the 3D bounding box of the object is used as a statistical variable to describe the detection difficulty coefficient:

where the threshold is the minimal LiDAR point number for the object's category (i.e., car, pedestrian, or cyclist). In the actual implementation, this threshold is a statistic computed from the labeled dataset, as discussed in Section 4.2. If the object has higher resolution and less occlusion, its point number is closer to, or even higher than, the threshold, so the detection difficulty coefficient is closer to 1, indicating that the object is easy to detect.
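The point-count statistic above can be sketched as follows. The sketch assumes a box encoded as `(x, y, z, l, w, h, yaw)` with `(x, y, z)` at the box center and the yaw angle about the Z axis, and it assumes the coefficient takes the form min(1, n / n_c), which reproduces the described behavior (it approaches 1 when the point number n reaches the category threshold n_c). The exact formula used in the paper and the threshold values below are not taken from the source.

```python
import math
from typing import Dict, Iterable, Tuple

Point = Tuple[float, float, float, float]  # (x, y, z, intensity)
Box3D = Tuple[float, float, float, float, float, float, float]  # assumed (x, y, z, l, w, h, yaw), center-based


def count_points_in_box(points: Iterable[Point], box: Box3D) -> int:
    """Count LiDAR points inside a yaw-rotated 3D box."""
    x, y, z, l, w, h, yaw = box
    c, s = math.cos(yaw), math.sin(yaw)
    n = 0
    for px, py, pz, _ in points:
        dx, dy = px - x, py - y
        # Rotate the offset by -yaw to express it in the box frame.
        local_x = c * dx + s * dy
        local_y = -s * dx + c * dy
        if abs(local_x) <= l / 2 and abs(local_y) <= w / 2 and abs(pz - z) <= h / 2:
            n += 1
    return n


def difficulty_coefficient(num_points: int, category: str, min_points: Dict[str, int]) -> float:
    """Assumed form min(1, n / n_c): close to 1 for dense, unoccluded objects."""
    return min(1.0, num_points / min_points[category])


if __name__ == "__main__":
    min_points = {"car": 50, "pedestrian": 20, "cyclist": 20}  # hypothetical per-category thresholds
    box = (0.0, 0.0, 0.0, 4.0, 2.0, 2.0, 0.0)
    pts = [(1.0, 0.5, 0.0, 0.3), (3.0, 0.0, 0.0, 0.1), (0.0, 0.0, 0.9, 0.2)]
    n = count_points_in_box(pts, box)
    print(n, difficulty_coefficient(n, "car", min_points))
```

Capping the ratio at 1 treats every object that reaches the category threshold as equally easy, so dense nearby cars do not dominate the weighting.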

Next, the label accuracy coefficient is discussed. As the GT is unknown, we evaluate annotation accuracy indirectly. On the one hand, in current 3D detection methods, the confidence score of a predicted object is supervised with the 3D IoU between the predicted and GT 3D bounding boxes [21,26], so the confidence score can be used to describe label accuracy. On the other hand, a semantic segmentation map predicted from the RGB image also contains annotation information. As the RGB image is denser than the LiDAR point cloud, semantic segmentation on the RGB image is more accurate than semantic segmentation on the LiDAR point cloud. Projecting the 3D bounding box of the object onto the image plane generates a 2D bounding box with a certain pixel area; the pixel area of the object's semantic map inside this 2D box is also measured. If the predicted 3D bounding box is accurate, both the confidence score and the ratio of these two pixel areas are close to 1. Due to object occlusion, a single cue is not accurate enough. Thus, the arithmetic mean of the confidence score and the pixel area ratio is used to describe the label accuracy coefficient:

Note that the prior knowledge is not extracted directly from the RGB image, because the RGB features of a targeted object are affected by shadows and blur in complex traffic scenarios. Compared with the RGB image, the semantic segmentation map reflects the location of a targeted object more stably. Thus, the semantic feature is used to describe the label accuracy coefficient.
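The label accuracy coefficient can be sketched as the arithmetic mean of the confidence score and the semantic pixel-area ratio inside the projected 2D box. The sketch assumes the eight box corners are already expressed in the camera frame (third coordinate = depth, all corners in front of the camera; in KITTI, LiDAR coordinates would first be transformed with the provided `Tr_velo_to_cam` and `R0_rect` calibration), uses a 3x4 projection matrix in the style of KITTI's `P2`, and represents the semantic map as a row-major grid of class names. These representations and the helper names are assumptions for illustration.

```python
import math
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def project_to_2d_box(corners_cam: Sequence[Point3], P: Sequence[Sequence[float]],
                      width: int, height: int) -> Tuple[int, int, int, int]:
    """Project 3D corners with a 3x4 matrix; return a clipped pixel box (u0, v0, u1, v1), end-exclusive."""
    us, vs = [], []
    for X, Y, Z in corners_cam:
        u = P[0][0] * X + P[0][1] * Y + P[0][2] * Z + P[0][3]
        v = P[1][0] * X + P[1][1] * Y + P[1][2] * Z + P[1][3]
        w = P[2][0] * X + P[2][1] * Y + P[2][2] * Z + P[2][3]
        us.append(u / w)  # assumes w > 0 (corner in front of the camera)
        vs.append(v / w)
    u0, v0 = max(0, math.floor(min(us))), max(0, math.floor(min(vs)))
    u1, v1 = min(width, math.ceil(max(us))), min(height, math.ceil(max(vs)))
    return u0, v0, u1, v1


def semantic_area_ratio(sem_map: List[List[str]], box2d: Tuple[int, int, int, int], category: str) -> float:
    """Fraction of pixels inside the 2D box that the segmentation assigns to the object's category."""
    u0, v0, u1, v1 = box2d
    area = max(0, u1 - u0) * max(0, v1 - v0)
    if area == 0:
        return 0.0
    hits = sum(1 for v in range(v0, v1) for u in range(u0, u1) if sem_map[v][u] == category)
    return hits / area


def label_accuracy(confidence: float, area_ratio: float) -> float:
    """Arithmetic mean of the two cues, as described in the text."""
    return 0.5 * (confidence + area_ratio)


if __name__ == "__main__":
    P = [[100.0, 0.0, 50.0, 0.0], [0.0, 100.0, 50.0, 0.0], [0.0, 0.0, 1.0, 0.0]]  # toy intrinsics
    corners = [(x, y, z) for x in (-0.5, 0.5) for y in (-0.5, 0.5) for z in (5.0, 10.0)]
    sem = [["background"] * 100 for _ in range(100)]
    for v in range(40, 50):  # only the upper half of the projected box is labeled "car"
        for u in range(40, 60):
            sem[v][u] = "car"
    box2d = project_to_2d_box(corners, P, 100, 100)
    ratio = semantic_area_ratio(sem, box2d, "car")
    print(box2d, ratio, round(label_accuracy(0.9, ratio), 3))
```

In this toy example, a confident detection whose projected box is only half covered by "car" pixels receives a label accuracy of 0.7, lower than its confidence alone would suggest.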

After obtaining the prior reliability coefficient, a scheme is required to constrain the reliability weight with it. Following the idea of self-paced curriculum learning [51], the interval of each reliability weight can be constrained from its original range to an interval that depends on the object's prior reliability coefficient. To achieve this, the corresponding term in Equation (6) is replaced with its prior-constrained counterpart, and the loss function of PSPSL-3D is:

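As in Section 3.3, the alternating optimization scheme is used to find the optimal detector weights and reliability weights. The closed-form solution for the reliability weights, obtained by setting the corresponding partial derivative to zero, is shown in Equation (11). The procedure of PSPSL-3D is summarized in Algorithm 1.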
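A minimal sketch of the prior-constrained weighting, assuming the linear self-paced regularizer λ(v²/2 − v) and an interval [0, r_k] for the k-th reliability weight, where r_k is the prior reliability coefficient. Under these assumptions, the closed form changes only by a clip: the per-object objective v·L + λ(v²/2 − v) is a convex quadratic in v, so its minimizer on [0, r_k] is the unconstrained minimizer 1 − L/λ clipped to that interval. The [0, r_k] interval and the product used to combine the detection difficulty and label accuracy coefficients are hypothetical choices, not the paper's stated formulas.

```python
from typing import List


def prior_coefficient(difficulty: float, accuracy: float) -> float:
    """Hypothetical combination of the two prior cues (the product stays in [0, 1])."""
    return difficulty * accuracy


def pspsl_weights(losses: List[float], priors: List[float], age: float) -> List[float]:
    """Minimize v * L + age * (v**2 / 2 - v) over v in [0, r] for each object.

    The objective is a convex quadratic in v, so the constrained minimizer is
    the unconstrained one, 1 - L / age, clipped to [0, r].
    """
    return [min(r, max(0.0, 1.0 - loss / age)) for loss, r in zip(losses, priors)]


if __name__ == "__main__":
    losses = [0.5, 0.5, 3.0]
    priors = [prior_coefficient(1.0, 1.0),  # dense, accurately labeled object
              prior_coefficient(0.5, 0.8),  # sparse object: weight capped at 0.4
              prior_coefficient(1.0, 0.9)]
    print(pspsl_weights(losses, priors, age=2.0))  # -> [0.75, 0.4, 0.0]
```

The first two objects have the same loss, but the sparse one is capped by its prior, which is how prior knowledge can lower the influence of a pseudo label that the loss alone would accept.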

Algorithm 1. Proposed SPSL-3D and PSPSL-3D framework for SSOD-3D.

Inputs: baseline detector, maximum epoch E, labeled and unlabeled datasets, labeled and unlabeled batch sizes.
Parameters: baseline detector weights, current epoch e, sample weights, age parameters.
Output: optimal 3D detector weights.

4. Experiments

4.1. Dataset and Configuration

The classical outdoor KITTI dataset [19] is used to evaluate 3D detection performance in outdoor traffic scenarios. It contains (i) a training set with 3712 samples, (ii) a validation set with 3769 samples, and (iii) a testing set with 7518 samples. The training and validation samples have GT annotations, whereas the GT labels of the testing samples are not publicly available. Each sample provides a LiDAR point cloud and an RGB image. As the KITTI dataset does not provide semantic segmentation maps for these samples, we generate semantic maps with four categories (i.e., car, pedestrian, bicycle, background) using a pre-trained DeepLabv3 [52]. Three categories of targeted objects (i.e., car, pedestrian, cyclist) are considered in the following experiments. To verify the performance of SSOD-3D methods, a semi-supervised condition is established: the training set is divided into a labeled subset and an unlabeled subset, and the GT labels of the unlabeled subset are disabled in the training stage. The labeled ratio is defined as the fraction of training samples that keep their GT labels. To evaluate SSOD-3D comprehensively, we set various training conditions with labeled ratios from 4% (hard SSL case) to 64% (easy SSL case); specifically, the labeled ratio is set to 4%, 8%, 16%, 32%, and 64%. We mainly focus on the comparison results in the hard SSL cases.
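The semi-supervised split can be sketched as a seeded random partition of the training indices. The section does not state how the labeled subset was sampled, so the uniform random sampling, the rounding rule, and the seed below are assumptions.

```python
import random
from typing import List, Tuple


def split_labeled_unlabeled(num_samples: int, labeled_ratio: float,
                            seed: int = 0) -> Tuple[List[int], List[int]]:
    """Randomly keep GT labels for a fraction of training samples; the rest become unlabeled."""
    if not 0.0 < labeled_ratio <= 1.0:
        raise ValueError("labeled_ratio must be in (0, 1]")
    rng = random.Random(seed)
    indices = list(range(num_samples))
    rng.shuffle(indices)
    num_labeled = round(num_samples * labeled_ratio)
    return sorted(indices[:num_labeled]), sorted(indices[num_labeled:])


if __name__ == "__main__":
    for ratio in (0.04, 0.08, 0.16, 0.32, 0.64):
        labeled, unlabeled = split_labeled_unlabeled(3712, ratio)
        print(f"{ratio:.0%}: {len(labeled)} labeled, {len(unlabeled)} unlabeled")
```

Fixing the seed keeps the labeled subset identical across the compared methods, which matters for a fair comparison at low labeled ratios.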

To evaluate SSOD-3D methods, they are trained on the training set and then evaluated on the validation set. The 3D average precision (AP) is the main comparison metric. To further evaluate the different SSOD-3D methods, the bird's eye view (BEV) AP and the 3D recall rate are also provided. The IoU thresholds for 3D and BEV objects are 0.7 for cars and 0.5 for pedestrians and cyclists. As KITTI object labels have three difficulty levels (i.e., easy, moderate, hard), these metrics are reported at all levels for a comprehensive comparison.
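The AP metric can be sketched as interpolated precision averaged over fixed recall positions. This is a simplified illustration, not the official KITTI evaluation code: it assumes detections have already been matched to GT boxes with the class-dependent IoU thresholds given above, ignores KITTI's difficulty-level filtering and "don't care" regions, and lets the caller choose between 40 recall positions (1/40, ..., 1, as used by the current KITTI benchmark) and the older 11-point variant. The section does not state which variant the tables use.

```python
from typing import List, Sequence, Tuple

# For reference: IoU thresholds used upstream to decide whether a detection is a true positive.
IOU_THRESHOLDS = {"Car": 0.7, "Pedestrian": 0.5, "Cyclist": 0.5}


def recall_positions(num_points: int = 40) -> List[float]:
    """40-point: 1/40, ..., 1; 11-point: 0, 0.1, ..., 1."""
    if num_points == 11:
        return [i / 10 for i in range(11)]
    return [i / num_points for i in range(1, num_points + 1)]


def interpolated_ap(detections: Sequence[Tuple[float, bool]], num_gt: int,
                    num_points: int = 40) -> float:
    """detections: (score, is_true_positive) pairs for one class and difficulty level."""
    if num_gt == 0:
        return 0.0
    tp = fp = 0
    curve = []  # (recall, precision) after each detection, by decreasing score
    for _, is_tp in sorted(detections, key=lambda d: d[0], reverse=True):
        tp += is_tp
        fp += not is_tp
        curve.append((tp / num_gt, tp / (tp + fp)))
    positions = recall_positions(num_points)
    total = 0.0
    for r in positions:
        # Interpolated precision: best precision reached at any recall >= r.
        total += max((p for rec, p in curve if rec >= r), default=0.0)
    return total / len(positions)


if __name__ == "__main__":
    dets = [(0.9, True), (0.8, False)]  # one hit and one false positive; two GT objects
    print(interpolated_ap(dets, num_gt=2), interpolated_ap(dets, num_gt=2, num_points=11))
```

The 11-point variant includes recall 0, which slightly inflates AP for detectors that find only a few objects; this is one reason KITTI moved to 40 recall positions.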

The proposed SPSL-3D and PSPSL-3D need a baseline detector. Voxel R-CNN [21] is selected as the baseline 3D detector because of its simple architecture and its fast and accurate inference. The optimizer, learning rate policy, and hyper-parameters of the detector follow the defaults in [21]. As the proposed method is implemented on a single NVIDIA RTX 3070, the total batch size is set to 2, divided between labeled and unlabeled samples. SPSL-3D and PSPSL-3D also need initial detector weights, which are obtained by pre-training Voxel R-CNN on the labeled subset for 80 epochs. The maximum epoch E depends on the labeled ratio; empirically, different values of E give better results for low and high labeled ratios. In the actual training stage, data augmentation includes not only 3D affine transformations of the LiDAR point cloud but also the cut-and-paste operation [4], which increases the number of objects in a sample by pasting extra 3D objects with GT annotations into its point cloud. These 3D objects with GT labels are stored in an object bank before training. To prevent data leakage, the object bank must be built only from the labeled subset instead of the entire training set.
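The leakage-safe cut-and-paste augmentation can be sketched as follows. The object bank is built only from samples whose ids belong to the labeled subset, and a sampled object is pasted only if its bird's-eye-view footprint does not collide with existing boxes. The sample layout (dicts with `id` and `objects`), the conservative circle-based collision test, and all helper names are hypothetical. Real implementations typically handle further details, for example points of the original scene that fall inside a pasted box, which this sketch omits.

```python
import math
import random
from typing import Dict, List, Sequence, Set, Tuple

Box3D = Tuple[float, float, float, float, float, float, float]  # assumed (x, y, z, l, w, h, yaw)
Point = Tuple[float, float, float, float]
Obj = Tuple[Box3D, List[Point]]  # a GT box and the LiDAR points inside it


def build_object_bank(samples: Sequence[Dict], labeled_ids: Set[int]) -> List[Obj]:
    """Collect GT objects from labeled samples only, so no unlabeled GT leaks into training."""
    return [obj for s in samples if s["id"] in labeled_ids for obj in s["objects"]]


def bev_collides(a: Box3D, b: Box3D) -> bool:
    """Conservative BEV test: the bounding circles of the two footprints overlap."""
    ra = math.hypot(a[3], a[4]) / 2
    rb = math.hypot(b[3], b[4]) / 2
    return math.hypot(a[0] - b[0], a[1] - b[1]) < ra + rb


def cut_and_paste(boxes: List[Box3D], points: List[Point], bank: List[Obj],
                  num_to_paste: int, rng: random.Random) -> Tuple[List[Box3D], List[Point]]:
    """Paste up to num_to_paste bank objects that do not collide with existing boxes."""
    out_boxes, out_points = list(boxes), list(points)
    for box, obj_points in rng.sample(bank, min(num_to_paste, len(bank))):
        if any(bev_collides(box, other) for other in out_boxes):
            continue  # skip objects that would overlap an existing one
        out_boxes.append(box)
        out_points.extend(obj_points)
    return out_boxes, out_points


if __name__ == "__main__":
    car = (4.0, 2.0, 1.5)
    samples = [{"id": i, "objects": [((x, 0.0, 0.0, *car, 0.0), [(x, 0.0, 0.0, 0.1)])]}
               for i, x in enumerate((1.0, 20.0, 40.0))]
    bank = build_object_bank(samples, labeled_ids={0, 1})  # sample 2 is unlabeled
    boxes, pts = cut_and_paste([(0.0, 0.0, 0.0, *car, 0.0)], [], bank, 2, random.Random(0))
    print(len(bank), len(boxes), len(pts))
```

Filtering by `labeled_ids` when the bank is built, rather than when objects are sampled, ensures that GT boxes from the unlabeled subset never enter training through augmentation.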

Current SSOD-3D methods are selected for comparison. SESS [10] is the first to use the mean teacher SSL framework [39] in SSOD-3D. SESS is enhanced with the multi-threshold label filter (LF) proposed in [11], and the improved method is named 3DIoUMatch. UDA [53] is a classical SSL framework that uses a consistency loss for supervision, so we consider that it can also work for SSOD-3D. As curriculum learning (CL) [54] is a fundamental part of SPL theory [18], CL can also be used in SSOD-3D, so a UDA+CL method is designed. As unlabeled data increase learning uncertainty, UDA+CL learns with a gradually increasing amount of unlabeled data; to this end, a term in Equation (3) is replaced with an epoch-dependent schedule. These methods also require a baseline 3D detector. For a fair comparison, Voxel R-CNN [21], marked as Baseline, is used as the baseline 3D detector for all SSOD-3D methods, and all methods are trained under the same conditions. Among the compared SSOD-3D methods for LiDAR point clouds, only 3DIoUMatch [11] provides open-source code (https://github.com/THU17cyz/3DIoUMatch-PVRCNN, accessed on 1 July 2022). The other methods are implemented by the authors on the open-source FSOD-3D framework OpenPCDet (https://github.com/open-mmlab/OpenPCDet, accessed on 1 March 2022).

4.2. Comparison with Semi-Supervised Methods

This experiment compares the proposed SPSL-3D and PSPSL-3D with current SSOD-3D methods. The results of all SSOD-3D methods at the different labeled ratios are presented in Table 1, Table 2, Table 3, Table 4 and Table 5, where "gain from baseline" denotes the improvement of the proposed PSPSL-3D over the baseline method. At low labeled ratios, the 3D mAPs of the baseline increase dramatically for all categories as more labels are added, suggesting large potential for improvement through SSOD-3D. At higher labeled ratios, the 3D mAPs of the baseline increase slowly, and the improvements of SSOD-3D methods are also relatively small. The 3D mAP of 3DIoUMatch [11] is higher than that of SESS [10], as the multi-threshold label filter in 3DIoUMatch removes some incorrectly predicted labels. The 3D mAP of UDA+CL [54] is higher than that of UDA [53], as the curriculum reduces overfitting to easily detected objects in the training stage. In most cases, the proposed SPSL-3D is superior to SESS [10] and UDA [53], because it exploits SPL theory [18] to adaptively filter out incorrect and overly difficult training samples. The proposed PSPSL-3D achieves higher 3D mAPs than the other current methods, because it adds prior knowledge from the RGB image and LiDAR point cloud as self-paced regularization terms in SPSL-3D to achieve robust and accurate learning results. Among the previous methods, 3DIoUMatch [11] performs best. Compared with 3DIoUMatch, the proposed PSPSL-3D significantly improves 3D cyclist detection and also improves 3D pedestrian detection to some extent, because the prior knowledge from the LiDAR point cloud and RGB image helps model the reliability of relatively small objects. Therefore, the proposed SPSL-3D and PSPSL-3D benefit SSOD-3D on a LiDAR-camera system.

Table 1.

3D AP of current SSOD-3D methods on the KITTI validation dataset.

Table 2.

3D AP of current SSOD-3D methods on the KITTI validation dataset.

Table 3.

3D AP of current SSOD-3D methods on the KITTI validation dataset.

Table 4.

3D AP of current SSOD-3D methods on the KITTI validation dataset.

Table 5.

3D AP of current SSOD-3D methods on the KITTI validation dataset.

4.3. Comparison with Fully Supervised Methods

This experiment compares the proposed PSPSL-3D with current FSOD-3D methods, all of which are trained on the entire training set. In an additional setting of PSPSL-3D, the unlabeled testing samples of the KITTI dataset are also used in the training procedure. Results on the KITTI validation dataset are provided in Table 6. For almost all categories, the 3D APs of SPSL-3D and PSPSL-3D are lower than those of fully supervised Voxel R-CNN [21], but the gaps for the car and pedestrian categories are small. Compared with other FSOD-3D methods, the pedestrian 3D APs of PSPSL-3D are higher than those of some classical methods [4,20,26], while its car 3D APs are lower than those of state-of-the-art FSOD-3D methods [14,29,55,56]. Additionally, the BEV AP results of SPSL-3D, PSPSL-3D, and fully supervised Voxel R-CNN [21] are presented in Table 7. As BEV object detection is easier than 3D object detection, most BEV APs of the proposed SSOD-3D methods and the fully supervised methods are fairly close. In conclusion, the proposed PSPSL-3D, which needs only 64% labeled data, achieves BEV and 3D object detection performance close to that of current FSOD-3D methods. By exploiting more unlabeled samples (the KITTI testing set), the proposed PSPSL-3D outperforms other FSOD-3D methods, which suggests that SSOD-3D has great research potential in autonomous driving.

Table 6.

3D AP of the proposed SSOD-3D methods at and current FSOD-3D methods on the KITTI validation dataset.

Table 7.

BEV AP of the proposed SSOD-3D methods at and baseline FSOD-3D methods on the KITTI validation dataset.

4.4. Visualizations

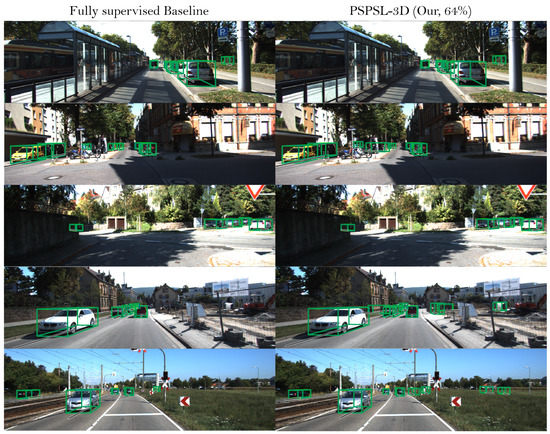

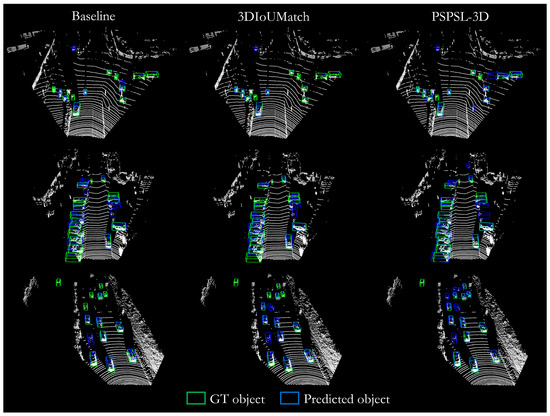

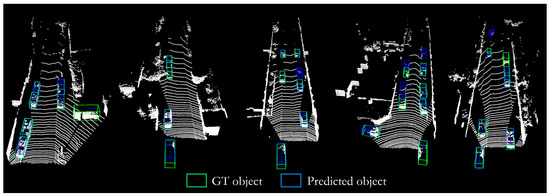

To better show the learning efficiency of PSPSL-3D, this experiment provides and discusses visualizations of 3D object detection. Figure 3 compares a fully supervised baseline 3D detector with PSPSL-3D trained on -labeled data. In the different outdoor scenarios, the proposed PSPSL-3D performs nearly as well as the fully supervised baseline detector; for objects far from the LiDAR, PSPSL-3D generates only a few false positives. To further compare 3D object detection results in the LiDAR point cloud, Figure 4 and Figure 5 present more visualizations of the proposed method under the condition . As the performance of 3DIoUMatch [11] is the closest to that of PSPSL-3D, it is used for the main comparison. In complex street scenarios with many cars and cyclists, PSPSL-3D has a higher 3D recall rate than 3DIoUMatch, because PSPSL-3D emphasizes the reliability of pseudo labels and thus achieves stable learning results. PSPSL-3D also produces accurate results in simple outdoor scenarios. We conclude that the proposed PSPSL-3D has stable and accurate 3D object detection performance.

Figure 3.

3D object detection results of the fully supervised (FS) baseline method and the proposed PSPSL-3D trained on -labeled data, on the KITTI validation dataset.

Figure 4.

3D object detection results in complex scenarios. Baseline, 3DIoUMatch, and PSPSL-3D are trained with -labeled data. The recall rate of PSPSL-3D is higher than that of the other methods.

Figure 5.

3D object detection results of PSPSL-3D trained with -labeled data in simple traffic scenarios. Few false positives and false negatives were obtained.

4.5. Ablation Study

This experiment evaluates the effectiveness of the proposed PSPSL-3D framework. Table 6, Table 7 and Table 8 show that the 3D AP, BEV AP, and 3D recall rates of PSPSL-3D are higher than those of SPSL-3D in most cases. Specifically, in the task of BEV object detection, PSPSL-3D significantly improves pedestrian and cyclist detection under the same annotation conditions. In the task of 3D object detection, under the condition , PSPSL-3D yields larger improvements than SPSL-3D for 3D objects of all categories. The reason is as follows: building on the SPSL-3D framework, PSPSL-3D exploits prior knowledge from the RGB image and LiDAR point cloud to establish extra regularization terms that suppress incorrectly predicted labels, thus achieving higher 3D object detection performance. Therefore, the ablation study demonstrates the effectiveness of the proposed PSPSL-3D.

Table 8.

3D recall rate with an IoU threshold of 0.7 in the KITTI validation dataset.
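To make the recall metric of Table 8 concrete, the following is a minimal sketch of recall at an IoU threshold of 0.7 with one-to-one greedy matching. It assumes axis-aligned boxes and ignores yaw, which is a simplification: the KITTI benchmark evaluates rotated boxes, so this sketch does not reproduce the official numbers. The names `iou_3d_axis_aligned` and `recall_at_iou` and the example boxes are hypothetical.

```python
from typing import List, Tuple

# Axis-aligned box (x_min, y_min, z_min, x_max, y_max, z_max).
# Simplification: yaw is ignored, whereas KITTI evaluation uses rotated boxes.
Box = Tuple[float, float, float, float, float, float]


def iou_3d_axis_aligned(a: Box, b: Box) -> float:
    """3D IoU of two axis-aligned boxes."""
    inter = 1.0
    for i in range(3):
        overlap = min(a[i + 3], b[i + 3]) - max(a[i], b[i])
        if overlap <= 0.0:
            return 0.0
        inter *= overlap
    vol_a = (a[3] - a[0]) * (a[4] - a[1]) * (a[5] - a[2])
    vol_b = (b[3] - b[0]) * (b[4] - b[1]) * (b[5] - b[2])
    return inter / (vol_a + vol_b - inter)


def recall_at_iou(gts: List[Box], preds: List[Box], thr: float = 0.7) -> float:
    """Fraction of ground-truth boxes matched one-to-one by a prediction with IoU >= thr.

    Matching is greedy: candidate pairs are taken in order of decreasing IoU,
    and each ground truth and each prediction is used at most once.
    """
    if not gts:
        raise ValueError("recall is undefined without ground-truth boxes")
    pairs = [(iou_3d_axis_aligned(g, p), gi, pi)
             for gi, g in enumerate(gts) for pi, p in enumerate(preds)]
    pairs = [t for t in pairs if t[0] >= thr]
    pairs.sort(reverse=True)
    used_gt, used_pred = set(), set()
    for _, gi, pi in pairs:
        if gi not in used_gt and pi not in used_pred:
            used_gt.add(gi)
            used_pred.add(pi)
    return len(used_gt) / len(gts)


gts = [(0.0, 0.0, 0.0, 4.0, 2.0, 2.0), (10.0, 0.0, 0.0, 14.0, 2.0, 2.0)]
preds = [(0.1, 0.0, 0.0, 4.1, 2.0, 2.0),    # IoU of about 0.95 with the first box
         (10.0, 0.0, 0.0, 12.0, 2.0, 2.0)]  # IoU of 0.5 with the second box
print(recall_at_iou(gts, preds))  # 0.5
```

The second prediction overlaps its target but falls below the 0.7 threshold, so only one of the two ground-truth boxes counts as recalled.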

5. Discussion

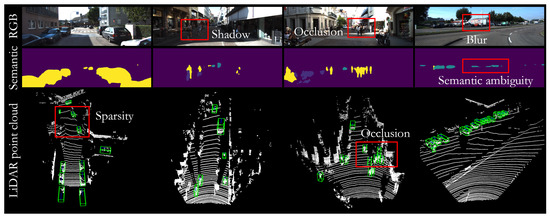

The proposed SSOD-3D frameworks, SPSL-3D and PSPSL-3D, have several advantages. First, we consider the reliability of pseudo labels in the SSOD-3D training stage. As the GT annotation of a pseudo label is unknown, we use the consistency loss to represent the weight of the pseudo label: if a pseudo label is incorrect, its consistency loss is larger than those of other pseudo labels. To reduce the negative effect of pseudo labels with large noise, we need to decrease their reliability weights in the training stage. To adjust the reliability weights of all pseudo labels adaptively and dynamically, we exploit the theory of SPL [18] and propose SPSL-3D as a novel and efficient framework. Second, we utilize multi-modal sensor data in the semi-supervised learning stage, further enhancing the capacity of the LiDAR-based baseline 3D object detector. We use multi-modal data because LiDAR-camera systems are widely equipped in autonomous driving systems, so both the RGB image and the LiDAR point cloud can be used in the training stage of SSOD-3D. An object generally has abundant structural and textural features in the RGB image and LiDAR point cloud. However, as shown in Figure 6, RGB images suffer from shadow, occlusion, and blur, so it is difficult to extract prior knowledge of an object from the RGB image. Compared with RGB images, semantic segmentation images directly reflect object category information. Based on this analysis, we use the area of the object's semantic segmentation to describe the annotation accuracy. However, the semantic segmentation image also has two main limitations: it cannot identify occluded objects, and it struggles with objects that are far from the sensor. Thus, the proposed PSPSL-3D exploits Equation (9) to approximately represent the annotation accuracy of the pseudo label.
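The reliability weighting described above can be illustrated with two standard SPL weighting schemes in a minimal NumPy sketch: the hard (binary) scheme of Kumar et al. [18], and a linear soft variant that is common in later SPL work. This is not the PSPSL-3D objective itself: Equation (9) and the prior-knowledge terms are not reproduced here, and the per-pseudo-label losses, the pace threshold `lam`, the growth factor `mu`, the weight-sum normalization, and all function names are assumptions for illustration.

```python
import numpy as np


def hard_spl_weights(losses: np.ndarray, lam: float) -> np.ndarray:
    """Binary SPL weights: minimizer of sum(v * L) - lam * sum(v) over v in {0, 1}.

    A pseudo label is kept (v = 1) only if its loss is below the pace threshold lam.
    """
    return (losses < lam).astype(np.float64)


def linear_spl_weights(losses: np.ndarray, lam: float) -> np.ndarray:
    """Soft SPL weights: minimizer of sum(v * L + lam * (v**2 / 2 - v)) over v in [0, 1].

    Setting the derivative L + lam * (v - 1) to zero gives v = 1 - L / lam, clipped to [0, 1].
    """
    return np.clip(1.0 - losses / lam, 0.0, 1.0)


def pace(epoch: int, lam0: float, mu: float) -> float:
    """Hypothetical schedule: the threshold grows geometrically, admitting harder pseudo labels later."""
    return lam0 * mu ** epoch


def weighted_loss(losses: np.ndarray, weights: np.ndarray) -> float:
    """Reliability-weighted average loss (assumed normalization); 0.0 if every weight is zero."""
    total = float(weights.sum())
    return float((weights * losses).sum()) / total if total > 0.0 else 0.0


# Hypothetical per-pseudo-label consistency losses for one batch.
losses = np.array([0.1, 0.4, 0.9, 2.0])
lam = pace(epoch=0, lam0=0.5, mu=1.3)
v_hard = hard_spl_weights(losses, lam)    # [1, 1, 0, 0]
v_soft = linear_spl_weights(losses, lam)  # approximately [0.8, 0.2, 0, 0]
```

With the soft scheme, a pseudo label with a large consistency loss is down-weighted gradually rather than dropped outright, and raising `lam` over training lets harder pseudo labels contribute later.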

Figure 6.

Multi-sensor data collected by the LiDAR-camera system in different outdoor scenarios. Occlusion, shadow, blur, and sparsity in the LiDAR point cloud and RGB image affect the detection difficulty and annotation accuracy (for pseudo labels only) of 3D objects, limiting the efficiency of SSOD-3D. Blur in RGB images causes ambiguity in the semantic image. The images and 3D point clouds differ significantly across scenes. 3D objects are detected using the proposed PSPSL-3D framework with .
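The semantic-area cue discussed above can be sketched as the fraction of pixels inside a pseudo label's projected 2D box that the segmentation network assigns to the pseudo label's class. This is only an illustration under stated assumptions, not Equation (9): the box corners are assumed to be already expressed in the camera frame, the 3x4 projection matrix is assumed to be KITTI-style, and the class id and the functions `project_to_box2d` and `semantic_area_ratio` are hypothetical. Consistent with the limitations noted above, an occluded or distant object would receive a low ratio even when its pseudo label is correct.

```python
from typing import Optional, Tuple

import numpy as np

Box2D = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def project_to_box2d(corners_cam: np.ndarray, proj: np.ndarray) -> Optional[Box2D]:
    """Project the 8 corners (8, 3) of a 3D box with a 3x4 projection matrix.

    Returns the enclosing 2D box, or None if any corner lies behind the camera.
    """
    homo = np.hstack([corners_cam, np.ones((corners_cam.shape[0], 1))])  # (8, 4)
    uvw = homo @ proj.T                                                # (8, 3)
    if np.any(uvw[:, 2] <= 0.0):
        return None
    uv = uvw[:, :2] / uvw[:, 2:3]
    return (float(uv[:, 0].min()), float(uv[:, 1].min()),
            float(uv[:, 0].max()), float(uv[:, 1].max()))


def semantic_area_ratio(seg: np.ndarray, box: Box2D, class_id: int) -> float:
    """Fraction of pixels inside the clipped 2D box whose semantic label equals class_id.

    Box coordinates are truncated to integer pixel indices; x2 and y2 are exclusive.
    """
    h, w = seg.shape
    x1, y1 = max(0, int(box[0])), max(0, int(box[1]))
    x2, y2 = min(w, int(box[2])), min(h, int(box[3]))
    if x2 <= x1 or y2 <= y1:
        return 0.0  # the box falls outside the image
    return float(np.mean(seg[y1:y2, x1:x2] == class_id))


CAR = 1  # hypothetical class id in the semantic map
seg = np.zeros((10, 10), dtype=np.int64)
seg[2:6, 2:6] = CAR
ratio = semantic_area_ratio(seg, (2, 2, 8, 6), CAR)  # 16 of 24 pixels are labeled CAR
```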

Extensive experiments in Section 4 demonstrate the effectiveness of the proposed SPSL-3D and PSPSL-3D. First, compared with the state-of-the-art SSOD-3D methods, the proposed SPSL-3D and PSPSL-3D frameworks achieve better results because they consider the reliability of the pseudo labels, thus decreasing the negative effect of incorrect pseudo labels in the training stage. Second, the proposed frameworks are also suitable for training the baseline 3D object detector in a fully supervised setting. Compared with the current FSOD-3D methods, the baseline 3D detector trained with PSPSL-3D outperforms other FSOD-3D methods. This suggests that a training scheme that emphasizes label weights benefits the training of baseline 3D object detectors.

In the future, we will study SSOD-3D in several directions. First, computer graphics (CG) techniques can be used to generate a huge number of labeled simulated samples, and exploiting the theory of SPL [57] in SSOD-3D with unlabeled samples and labeled simulated samples is an open problem. Second, in practical applications, the data distribution of the labeled LiDAR point cloud might differ from that of the unlabeled LiDAR point cloud, for example because the datasets are collected with different types of LiDAR sensors at different places. Utilizing domain adaptation in SSOD-3D is a challenging problem. We will address these problems in subsequent studies.

6. Conclusions

The main challenge of learning-based 3D object detection is the shortage of labeled samples. To make full use of unlabeled samples, SSOD-3D is an important technique. In this paper, we propose a novel and efficient SSOD-3D framework for 3D object detection on a LiDAR-camera system. Firstly, to avoid the negative effect of unreliable pseudo labels, we propose SPSL-3D to adaptively evaluate the reliability weight of pseudo labels. Secondly, to better evaluate the reliability weight of pseudo labels, we utilize prior knowledge from the LiDAR-camera system and present the PSPSL-3D framework. Extensive experiments show the effectiveness of the proposed SPSL-3D and PSPSL-3D on the public dataset. Hence, we believe that the proposed framework benefits 3D environmental perception in autonomous driving.

Author Contributions

Methodology and writing – original draft preparation, P.A.; software, J.L.; investigation, X.H.; formal analysis, T.M.; validation, S.Q.; supervision, Y.C. and L.W.; resources and funding acquisition, J.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (U1913602, 61991412, 62201536) and the Equipment Pre-Research Project (41415020202, 41415020404, 305050203).

Data Availability Statement

Publicly available datasets were analyzed in this study. These data can be found at: http://www.cvlibs.net/datasets/kitti/ (accessed on 1 March 2022).

Acknowledgments

The authors thank Siying Ke, Bin Fang, Junfeng Ding, Zaipeng Duan, and Zhenbiao Tan from Huazhong University of Science and Technology, and anonymous reviewers for providing many valuable suggestions.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| LiDAR | Light detection and ranging |
| 3D | Three dimensional |
| SSL | Semi-supervised learning |
| SSOD-3D | Semi-supervised 3D object detection |
| FSOD-3D | Fully supervised 3D object detection |
| WSOD-3D | Weakly supervised 3D object detection |
| SPL | Self-paced learning |
| SPSL-3D | Self-paced semi-supervised learning based 3D object detection |
| PSPSL-3D | Self-paced semi-supervised learning based 3D object detection with prior knowledge |
| AP | Average precision |
| BEV | Bird’s eye view |
| UDA | Unsupervised data augmentation |
| CL | Curriculum learning |
| IoU | Intersection over union |
| GT | Ground truth |
| EMA | Exponential moving average |
| NMS | Non-maximum suppression |
| FC | Fully connected |
| CNN | Convolutional neural network |
| GNN | Graph neural network |
| Spconv | Sparse convolutional neural network |

Appendix A

Figure A1.

Relation among classical SSL, current SSOD-3D methods, and the proposed SPSL-3D and PSPSL-3D. As the weight function is difficult to design in complex traffic scenarios, the regularization (reg.) term in traditional SSL theory is simplified to the consistency loss . In this paper, emphasizing the impact of the reliability of pseudo labels on SSOD-3D training, we present an SPL-based consistency loss and SPSL-3D. Prior information (info.) is exploited to enhance the effect of SPL on SPSL-3D.

Appendix A.1. Relation of Traditional SSL Theory and Previous SSOD-3D Method

We briefly revisit the previous SSOD-3D approach [10] and discuss the relation between classical SSL theory and the existing SSOD-3D method (see Figure A1). Classical SSL [37] aims to find by minimizing the following cost function:

where and are coefficients of regularization terms. Suppose that lies in a compact manifold [37]. is the marginal distribution of . reflects the intrinsic structure of on [37]:

In the above equation, the data weight describes the similarity of and . However, as presented in Figure 6, and collected in different scenarios have different data distributions, and their object numbers and categories also differ. Therefore, it is hard to design a suitable , which leaves a gap between traditional SSL theory and SSOD-3D.
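For reference, the manifold-regularization objective of Belkin et al. [37] is commonly written as below, with $l$ labeled samples, $u$ unlabeled samples, a loss function $V$, a reproducing-kernel Hilbert space $\mathcal{H}_K$, and regularization coefficients $\gamma_A$ and $\gamma_I$. This notation follows [37] and is an assumption here, since the symbols of Equations (A1) and (A2) are not reproduced in this text; the graph weight $W_{ij}$ plays the role of the data weight discussed above, and $L = D - W$ is the graph Laplacian with $D_{ii} = \sum_j W_{ij}$.

$$
f^{*} = \arg\min_{f \in \mathcal{H}_K} \frac{1}{l} \sum_{i=1}^{l} V\left(x_i, y_i, f\right) + \gamma_A \lVert f \rVert_K^2 + \gamma_I \lVert f \rVert_I^2
$$

$$
\lVert f \rVert_I^2 = \int_{\mathcal{M}} \lVert \nabla_{\mathcal{M}} f \rVert^2 \, d\mathcal{P}_X(x) \approx \frac{1}{(l+u)^2} \, \mathbf{f}^{\top} L \, \mathbf{f}, \qquad \mathbf{f}^{\top} L \, \mathbf{f} = \frac{1}{2} \sum_{i,j=1}^{l+u} W_{ij} \left( f(x_i) - f(x_j) \right)^2
$$

The second line shows why choosing $W_{ij}$ matters: the intrinsic term penalizes differences between $f(x_i)$ and $f(x_j)$ in proportion to how similar the two samples are judged to be.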

To solve this problem, researchers attempted to relax and present the consistency loss as an approximation in SSOD-3D. They exploit a data augmentation operator to create a datum similar to [10,39]. is used to replace in Equation (A2). is the affine transformation on of , which consists of scaling, X/Y-axis flipping, and Z-axis rotation [26]. As the affine transformation does not change the structure of the point cloud, it is safe to assume that . is one of the results in . With the inverse affine transformation , is obtained, which has the same reference coordinate system as . After that, in Equation (A1) is simplified and replaced by one consistency loss [10], as in Equation (2). It is the core loss function in pseudo-label-based SSOD-3D methods [10,11,38,41,47]. In other literature, is called unsupervised data augmentation (UDA) [53] because it does not utilize any GT information. The current SSOD-3D method [10] optimizes by minimizing the function in Equation (1).
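The augmentation and inverse-transformation step described above can be sketched in NumPy as follows. The sketch builds the affine map from a uniform scaling, optional X/Y-axis flips, and a rotation about the Z axis, inverts it in closed form, and compares back-transformed student predictions with teacher predictions. It assumes box predictions are reduced to their 3D centers and matches each back-transformed student center to its nearest teacher center, which is a simplification in the spirit of SESS [10] rather than its exact loss; box sizes and headings are ignored, the flip naming convention is an assumption, and `make_affine`, `inverse_affine`, `apply_affine`, and `center_consistency` are hypothetical names.

```python
import numpy as np


def _components(flip_x: bool, flip_y: bool, yaw: float):
    """Flip matrix F and Z-axis rotation R. flip_x negates y; flip_y negates x (assumed convention)."""
    flip = np.diag([-1.0 if flip_y else 1.0, -1.0 if flip_x else 1.0, 1.0])
    c, s = np.cos(yaw), np.sin(yaw)
    rot_z = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    return flip, rot_z


def make_affine(scale: float, flip_x: bool, flip_y: bool, yaw: float) -> np.ndarray:
    """Linear map s * R @ F: flip first, then rotate about Z, then scale uniformly."""
    flip, rot_z = _components(flip_x, flip_y, yaw)
    return scale * rot_z @ flip


def inverse_affine(scale: float, flip_x: bool, flip_y: bool, yaw: float) -> np.ndarray:
    """Closed-form inverse (s R F)^-1 = F R^T / s, since F^-1 = F and R^-1 = R^T."""
    flip, rot_z = _components(flip_x, flip_y, yaw)
    return flip @ rot_z.T / scale


def apply_affine(points: np.ndarray, a: np.ndarray) -> np.ndarray:
    """Transform (N, 3) row-vector points by the 3x3 linear map a."""
    return points @ a.T


def center_consistency(teacher: np.ndarray, student_aug: np.ndarray, a_inv: np.ndarray) -> float:
    """Mean squared distance from each back-transformed student center to its nearest teacher center."""
    back = apply_affine(student_aug, a_inv)
    d2 = ((back[:, None, :] - teacher[None, :, :]) ** 2).sum(axis=-1)  # (S, T)
    return float(d2.min(axis=1).mean())


params = dict(scale=1.05, flip_x=True, flip_y=False, yaw=0.3)
a, a_inv = make_affine(**params), inverse_affine(**params)
teacher = np.array([[10.0, 2.0, -1.0], [20.0, -5.0, 0.0]])  # hypothetical box centers (m)
# Student predictions on the augmented cloud, each offset by 0.5 m along x before augmentation.
student_aug = apply_affine(teacher + np.array([0.5, 0.0, 0.0]), a)
loss = center_consistency(teacher, student_aug, a_inv)  # approximately 0.25
```

Because every operation in the map is invertible, the student's predictions can be brought back into the teacher's coordinate system exactly, so the consistency term measures only disagreement between the two predictions.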

References

1. Li, X.; Zhou, Y.; Hua, B. Study of a Multi-Beam LiDAR Perception Assessment Model for Real-Time Autonomous Driving. IEEE Trans. Instrum. Meas. 2021, 70, 1–15.

2. Yuan, Z.; Song, X.; Bai, L.; Wang, Z.; Ouyang, W. Temporal-Channel Transformer for 3D Lidar-Based Video Object Detection for Autonomous Driving. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 2068–2078.

3. Zhang, J.; Lou, Y.; Wang, J.; Wu, K.; Lu, K.; Jia, X. Evaluating Adversarial Attacks on Driving Safety in Vision-Based Autonomous Vehicles. IEEE Internet Things J. 2022, 9, 3443–3456.

4. Shi, S.; Wang, X.; Li, H. PointRCNN: 3D Object Proposal Generation and Detection From Point Cloud. In Proceedings of the CVPR, Long Beach, CA, USA, 16–20 June 2019; pp. 770–779.

5. Zhu, H.; Yuen, K.; Mihaylova, L.; Leung, H. Overview of Environment Perception for Intelligent Vehicles. IEEE Trans. Intell. Transp. Syst. 2017, 18, 2584–2601.

6. Zamanakos, G.; Tsochatzidis, L.T.; Amanatiadis, A.; Pratikakis, I. A comprehensive survey of LIDAR-based 3D object detection methods with deep learning for autonomous driving. Comput. Graph. 2021, 99, 153–181.

7. He, C.; Zeng, H.; Huang, J.; Hua, X.; Zhang, L. Structure Aware Single-stage 3D Object Detection from Point Cloud. In Proceedings of the CVPR, Seattle, WA, USA, 14–19 June 2020; pp. 11870–11879.

8. Fang, J.; Zuo, X.; Zhou, D.; Jin, S.; Wang, S.; Zhang, L. LiDAR-Aug: A General Rendering-Based Augmentation Framework for 3D Object Detection. In Proceedings of the CVPR, Virtual, 19–25 June 2021; pp. 4710–4720.

9. Tang, Y.S.; Lee, G.H. Transferable Semi-Supervised 3D Object Detection From RGB-D Data. In Proceedings of the IEEE ICCV, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1931–1940.

10. Zhao, N.; Chua, T.; Lee, G.H. SESS: Self-Ensembling Semi-Supervised 3D Object Detection. In Proceedings of the CVPR, Seattle, WA, USA, 13–19 June 2020; pp. 11076–11084.

11. Wang, H.; Cong, Y.; Litany, O.; Gao, Y.; Guibas, L.J. 3DIoUMatch: Leveraging IoU Prediction for Semi-Supervised 3D Object Detection. In Proceedings of the CVPR, Virtual, 19–25 June 2021; pp. 14615–14624.

12. Sohn, K.; Berthelot, D.; Carlini, N.; Zhang, Z.; Zhang, H.; Raffel, C.; Cubuk, E.D.; Kurakin, A.; Li, C. FixMatch: Simplifying Semi-Supervised Learning with Consistency and Confidence. In Proceedings of the NeurIPS, Virtual, 6–12 December 2020; pp. 1–13.

13. Chen, X.; Ma, H.; Wan, J.; Li, B.; Xia, T. Multi-view 3D Object Detection Network for Autonomous Driving. In Proceedings of the CVPR, Honolulu, HI, USA, 21–26 July 2017; pp. 6526–6534.

14. Yoo, J.H.; Kim, Y.; Kim, J.S.; Choi, J.W. 3D-CVF: Generating Joint Camera and LiDAR Features Using Cross-View Spatial Feature Fusion for 3D Object Detection. In Proceedings of the ECCV, Glasgow, UK, 23–28 August 2020; pp. 1–16.

15. Qi, C.R.; Liu, W.; Wu, C.; Su, H.; Guibas, L.J. Frustum PointNets for 3D Object Detection from RGB-D Data. In Proceedings of the CVPR, Salt Lake City, UT, USA, 18–23 June 2018; pp. 918–927.

16. Ku, J.; Mozifian, M.; Lee, J.; Harakeh, A.; Waslander, S.L. Joint 3D Proposal Generation and Object Detection from View Aggregation. In Proceedings of the IROS, Madrid, Spain, 1–5 October 2018; pp. 1–8.

17. An, P.; Liang, J.; Yu, K.; Fang, B.; Ma, J. Deep structural information fusion for 3D object detection on LiDAR-camera system. Comput. Vis. Image Underst. 2022, 214, 103295.

18. Kumar, M.P.; Packer, B.; Koller, D. Self-Paced Learning for Latent Variable Models. In Proceedings of the NeurIPS, Vancouver, BC, Canada, 6–9 December 2010; pp. 1189–1197.

19. Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the CVPR, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

20. Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. PointPillars: Fast Encoders for Object Detection From Point Clouds. In Proceedings of the CVPR, Long Beach, CA, USA, 15–20 June 2019; pp. 12697–12705.

21. Deng, J.; Shi, S.; Li, P.; Zhou, W.; Zhang, Y.; Li, H. Voxel R-CNN: Towards High Performance Voxel-based 3D Object Detection. In Proceedings of the AAAI, Virtual, 2–9 February 2021; pp. 1201–1209.

22. Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the CVPR, Honolulu, HI, USA, 21–26 July 2017; pp. 77–85.

23. Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space. In Proceedings of the NeurIPS, Long Beach, CA, USA, 4–9 December 2017; pp. 5099–5108.

24. Shi, W.; Rajkumar, R. Point-GNN: Graph Neural Network for 3D Object Detection in a Point Cloud. In Proceedings of the CVPR, Seattle, WA, USA, 13–19 June 2020; pp. 1708–1716.

25. Graham, B.; Engelcke, M.; van der Maaten, L. 3D Semantic Segmentation with Submanifold Sparse Convolutional Networks. In Proceedings of the CVPR, Salt Lake City, UT, USA, 18–23 June 2018; pp. 9224–9232.

26. Shi, S.; Guo, C.; Jiang, L.; Wang, Z.; Shi, J.; Wang, X.; Li, H. PV-RCNN: Point-Voxel Feature Set Abstraction for 3D Object Detection. In Proceedings of the CVPR, Seattle, WA, USA, 13–19 June 2020; pp. 10526–10535.

27. Yin, T.; Zhou, X.; Krähenbühl, P. Center-Based 3D Object Detection and Tracking. In Proceedings of the CVPR, Virtual, 19–25 June 2021; pp. 11784–11793.

28. Shi, S.; Wang, Z.; Shi, J.; Wang, X.; Li, H. From Points to Parts: 3D Object Detection From Point Cloud With Part-Aware and Part-Aggregation Network. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 2647–2664.

29. Zheng, W.; Tang, W.; Jiang, L.; Fu, C. SE-SSD: Self-Ensembling Single-Stage Object Detector From Point Cloud. In Proceedings of the CVPR, Virtual, 19–25 June 2021; pp. 14494–14503.

30. Schinagl, D.; Krispel, G.; Possegger, H.; Roth, P.M.; Bischof, H. OccAM’s Laser: Occlusion-based Attribution Maps for 3D Object Detectors on LiDAR Data. In Proceedings of the CVPR, New Orleans, LA, USA, 18–24 June 2022; pp. 1131–1140.

31. Man, Y.; Weng, X.; Sivakumar, P.K.; O’Toole, M.; Kitani, K. Multi-Echo LiDAR for 3D Object Detection. In Proceedings of the ICCV, Seoul, Republic of Korea, 27 October–2 November 2021; pp. 3743–3752.

32. Chen, Y.; Li, Y.; Zhang, X.; Sun, J.; Jia, J. Focal Sparse Convolutional Networks for 3D Object Detection. In Proceedings of the CVPR, New Orleans, LA, USA, 18–24 June 2022; pp. 5418–5427.

33. Wu, X.; Peng, L.; Yang, H.; Xie, L.; Huang, C.; Deng, C.; Liu, H.; Cai, D. Sparse Fuse Dense: Towards High Quality 3D Detection with Depth Completion. In Proceedings of the CVPR, New Orleans, LA, USA, 18–24 June 2022; pp. 5408–5417.

34. Li, Y.; Yu, A.W.; Meng, T.; Caine, B.; Ngiam, J.; Peng, D.; Shen, J.; Wu, B.; Lu, Y.; Zhou, D.; et al. DeepFusion: Lidar-Camera Deep Fusion for Multi-Modal 3D Object Detection. In Proceedings of the CVPR, New Orleans, LA, USA, 18–24 June 2022; pp. 17161–17170.

35. Piergiovanni, A.J.; Casser, V.; Ryoo, M.S.; Angelova, A. 4D-Net for Learned Multi-Modal Alignment. In Proceedings of the ICCV, Seoul, Republic of Korea, 27 October–2 November 2021; pp. 15415–15425.

36. Yin, T.; Zhou, X.; Krähenbühl, P. Multimodal Virtual Point 3D Detection. In Proceedings of the NeurIPS, Virtual, 6–14 December 2021; pp. 16494–16507.

37. Belkin, M.; Niyogi, P.; Sindhwani, V. Manifold Regularization: A Geometric Framework for Learning from Labeled and Unlabeled Examples. J. Mach. Learn. Res. 2006, 7, 2399–2434.

38. Xu, H.; Liu, F.; Zhou, Q.; Hao, J.; Cao, Z.; Feng, Z.; Ma, L. Semi-Supervised 3d Object Detection Via Adaptive Pseudo-Labeling. In Proceedings of the ICIP, Anchorage, AK, USA, 19–22 September 2021; pp. 3183–3187.

39. Tarvainen, A.; Valpola, H. Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results. In Proceedings of the NeurIPS, Long Beach, CA, USA, 4–9 December 2017; pp. 1195–1204.

40. Wang, J.; Gang, H.; Ancha, S.; Chen, Y.; Held, D. Semi-supervised 3D Object Detection via Temporal Graph Neural Networks. In Proceedings of the 3DV, Virtual, 1–3 December 2021; pp. 413–422.

41. Park, J.; Xu, C.; Zhou, Y.; Tomizuka, M.; Zhan, W. DetMatch: Two Teachers are Better Than One for Joint 2D and 3D Semi-Supervised Object Detection. arXiv 2022, arXiv:2203.09510.

42. Sautier, C.; Puy, G.; Gidaris, S.; Boulch, A.; Bursuc, A.; Marlet, R. Image-to-Lidar Self-Supervised Distillation for Autonomous Driving Data. In Proceedings of the CVPR, New Orleans, LA, USA, 18–24 June 2022; pp. 9881–9891.

43. Meng, Q.; Wang, W.; Zhou, T.; Shen, J.; Gool, L.V.; Dai, D. Weakly Supervised 3D Object Detection from Lidar Point Cloud. In Proceedings of the ECCV, Glasgow, UK, 23–28 August 2020; Volume 12358, pp. 515–531.

44. Qin, Z.; Wang, J.; Lu, Y. Weakly Supervised 3D Object Detection from Point Clouds. In Proceedings of the ACM MM, Istanbul, Turkey, 8–11 June 2020; pp. 4144–4152.

45. Peng, L.; Yan, S.; Wu, B.; Yang, Z.; He, X.; Cai, D. WeakM3D: Towards Weakly Supervised Monocular 3D Object Detection. In Proceedings of the ICLR, Virtual, 25–29 April 2022; pp. 1–20.

46. Xu, X.; Wang, Y.; Zheng, Y.; Rao, Y.; Zhou, J.; Lu, J. Back to Reality: Weakly-supervised 3D Object Detection with Shape-guided Label Enhancement. In Proceedings of the CVPR, New Orleans, LA, USA, 18–24 June 2022; pp. 8428–8437.

47. Zhang, J.; Liu, H.; Lu, J. A semi-supervised 3D object detection method for autonomous driving. Displays 2022, 71, 102117.

48. Meng, D.; Zhao, Q.; Jiang, L. A theoretical understanding of self-paced learning. Inf. Sci. 2017, 414, 319–328.

49. Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the ICML, Lille, France, 7–9 July 2015; Volume 37, pp. 448–456.

50. Sun, P.; Kretzschmar, H.; Dotiwalla, X.; Chouard, A.; Patnaik, V.; Tsui, P.; Guo, J.; Zhou, Y.; Chai, Y.; Caine, B.; et al. Scalability in Perception for Autonomous Driving: Waymo Open Dataset. In Proceedings of the CVPR, Seattle, WA, USA, 13–19 June 2020; pp. 2443–2451.

51. Zhang, D.; Meng, D.; Zhao, L.; Han, J. Bridging Saliency Detection to Weakly Supervised Object Detection Based on Self-Paced Curriculum Learning. In Proceedings of the IJCAI, New York, NY, USA, 9–15 July 2016; pp. 3538–3544.

52. Chen, L.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking Atrous Convolution for Semantic Image Segmentation. arXiv 2017, arXiv:1706.05587.

53. Xie, Q.; Dai, Z.; Hovy, E.H.; Luong, T.; Le, Q. Unsupervised Data Augmentation for Consistency Training. In Proceedings of the NeurIPS, Virtual, 6–12 December 2020; pp. 1–21.

54. Bengio, Y.; Louradour, J.; Collobert, R.; Weston, J. Curriculum learning. In Proceedings of the ICML, Montreal, QC, Canada, 14–18 June 2009; pp. 41–48.

55. Huang, T.; Liu, Z.; Chen, X.; Bai, X. EPNet: Enhancing Point Features with Image Semantics for 3D Object Detection. In Proceedings of the ECCV, Glasgow, UK, 23–28 August 2020; pp. 1–16.

56. Liu, Z.; Zhao, X.; Huang, T.; Hu, R.; Zhou, Y.; Bai, X. TANet: Robust 3D Object Detection from Point Clouds with Triple Attention. In Proceedings of the AAAI, New York, NY, USA, 7–12 February 2020; pp. 1–9.

57. Jiang, L.; Meng, D.; Yu, S.; Lan, Z.; Shan, S.; Hauptmann, A.G. Self-Paced Learning with Diversity. In Proceedings of the NeurIPS, Montreal, QC, Canada, 8–13 December 2014; pp. 2078–2086.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).