Meta-Knowledge Guided Weakly Supervised Instance Segmentation for Optical and SAR Image Interpretation

Abstract

1. Introduction

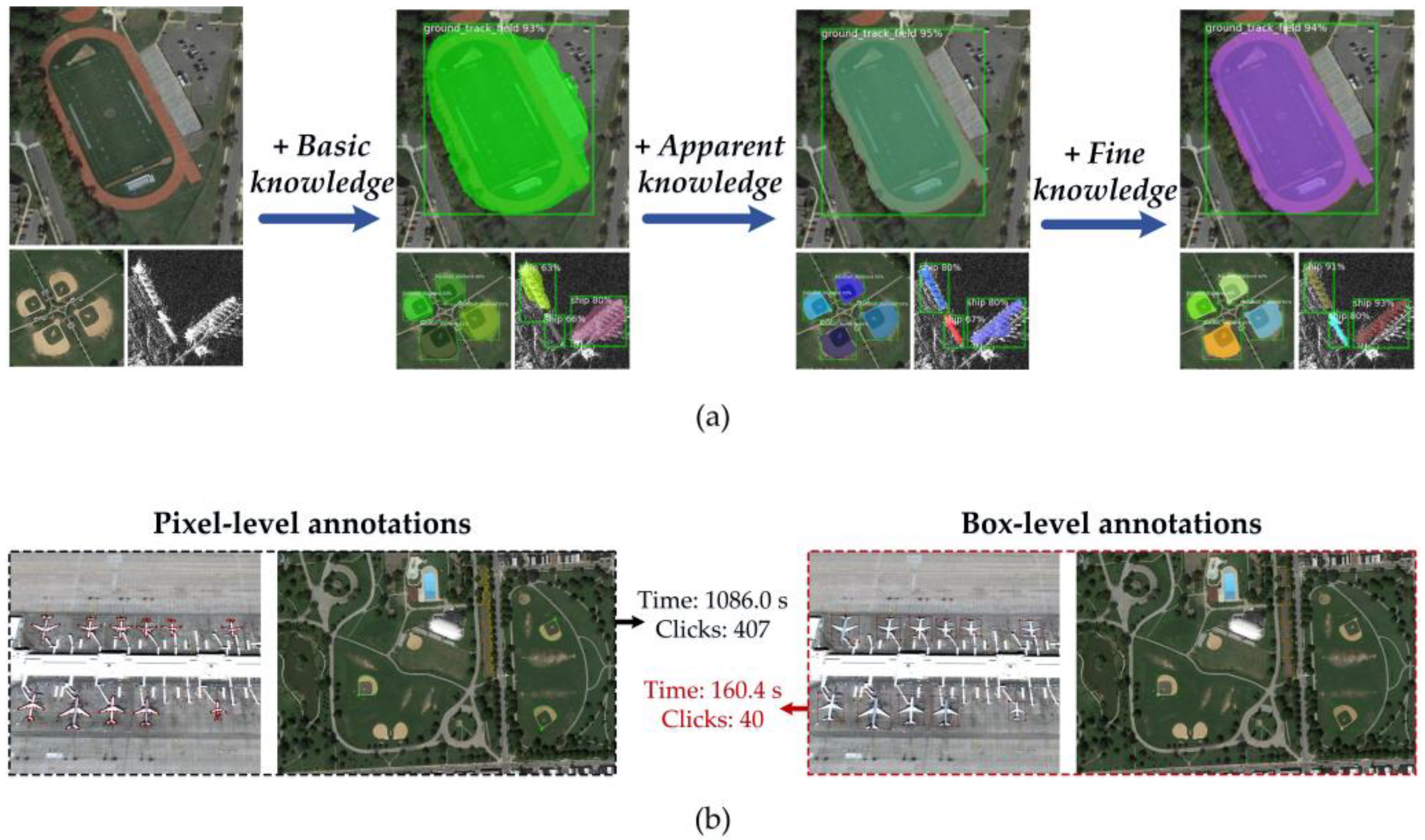

- We weigh visual processing requirements against annotation costs and introduce box-level supervised instance segmentation into the interpretation of optical and SAR remote sensing images;

- Drawing on meta-knowledge theory and human visual perception habits, we decompose the prior knowledge of the mask-aware task in weakly supervised instance segmentation (WSIS) into three meta-knowledge components (fundamental knowledge, apparent knowledge, and detailed knowledge), which together provide a unified representation of the mask-aware task;

- By instantiating these meta-knowledge components, we propose the MGWI-Net. Its weakly supervised mask (WSM) head instantiates both fundamental and apparent knowledge to perform mask awareness without any pixel-level annotations. Its mask information awareness assist (MIAA) head implicitly guides the network to learn detailed information through the boundary-sensitive behavior of the fully connected conditional random field (CRF), thereby instantiating detailed knowledge;

- Experiments on the NWPU VHR-10 instance segmentation dataset and the SSDD dataset show that the three proposed meta-knowledge components guide the MGWI-Net to segment instance masks accurately, approaching the results of fully supervised methods with about one-eighth of the annotation time.

2. Related Work

2.1. Instance Segmentation

2.2. Weakly Supervised Instance Segmentation

3. Methodology

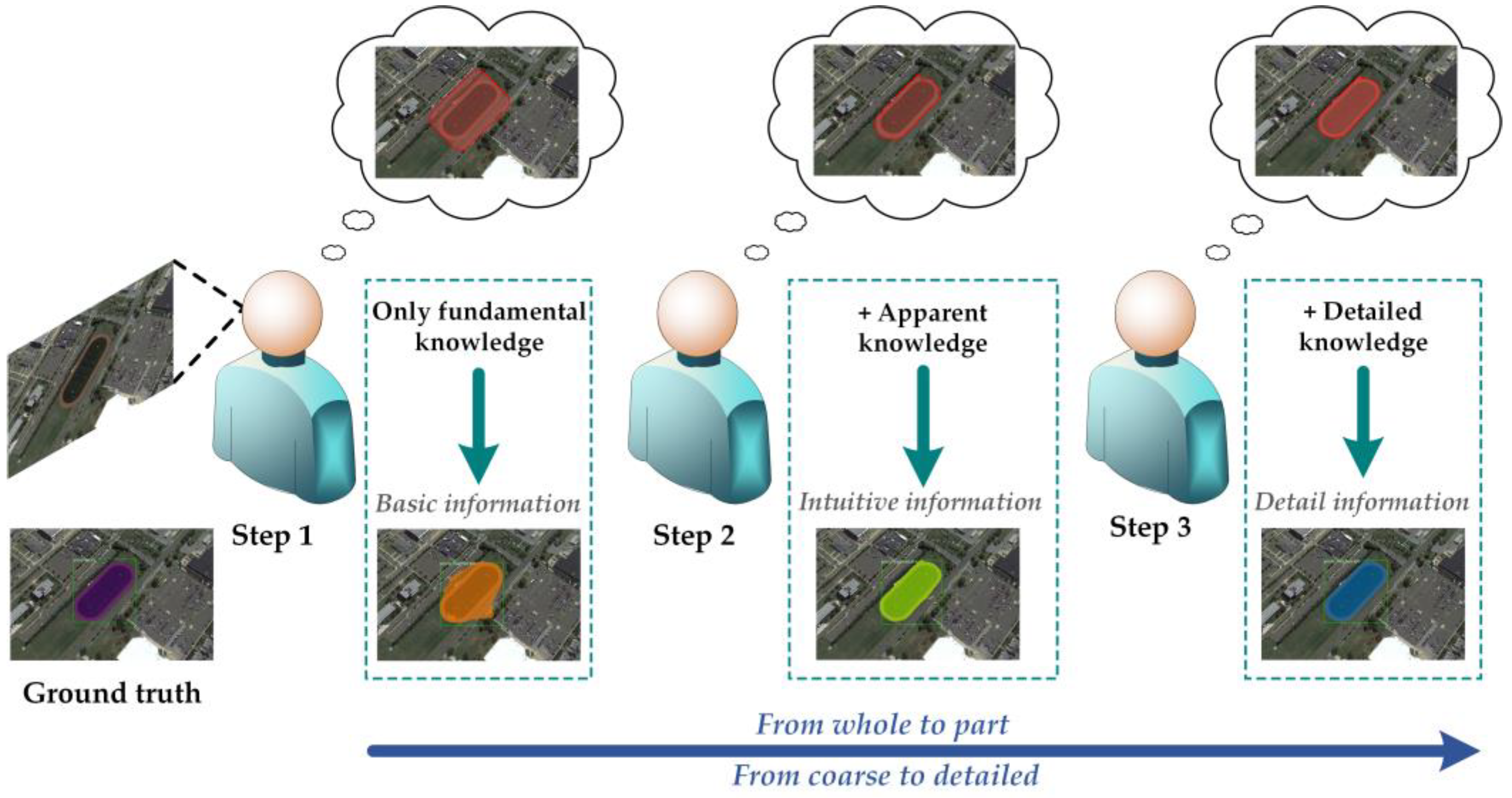

3.1. Meta-Knowledge

- Fundamental knowledge, Kf: In visual perception, people tend to acquire basic information first, such as the position and size of an object, which is indispensable for later refined observation. Therefore, we define the fundamental knowledge of the mask-aware task in WSIS as the position and size of the object. It helps the model locate and roughly perceive the object and lays the foundation for the subsequent refined mask awareness;

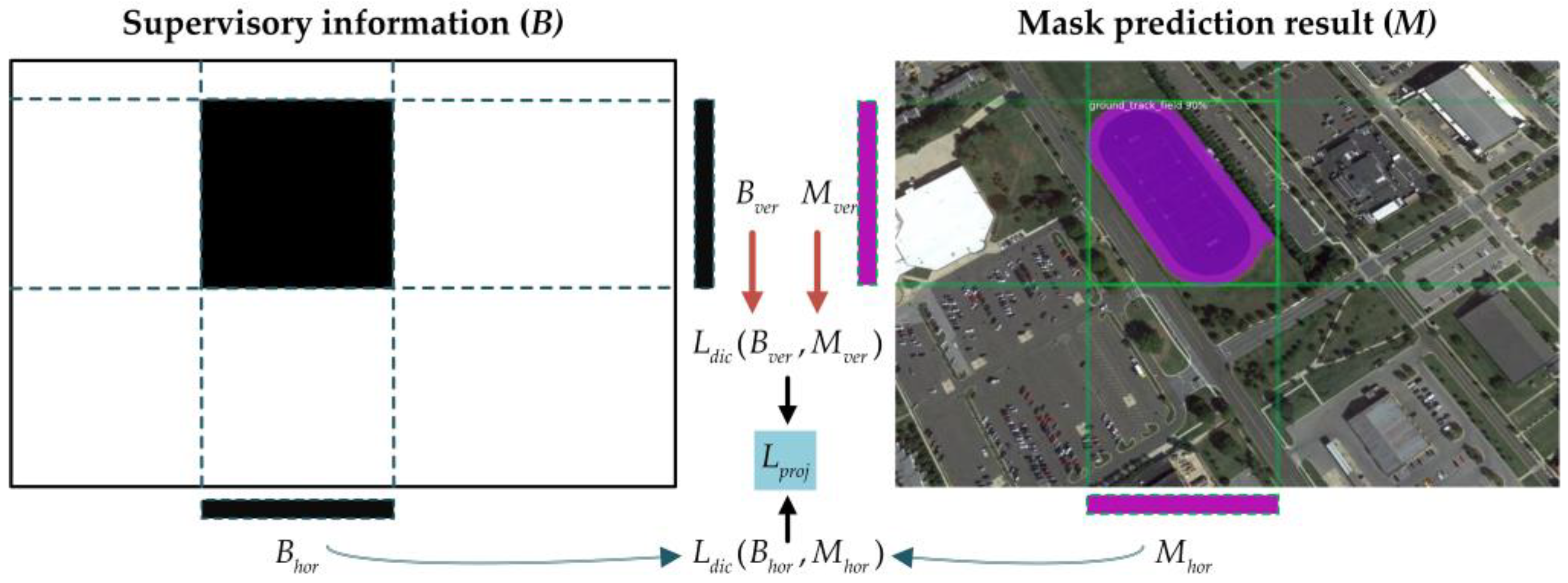

- Apparent knowledge, Ka: According to visual perception habits, after acquiring basic information people naturally focus on an object's intuitive information (such as color) and use it to distinguish the object from the background. Therefore, we define the apparent knowledge of the mask-aware task as color and instantiate this knowledge as a specific loss function in the MGWI-Net to perform the preliminary extraction of masks. See Section 3.2.2 for details;

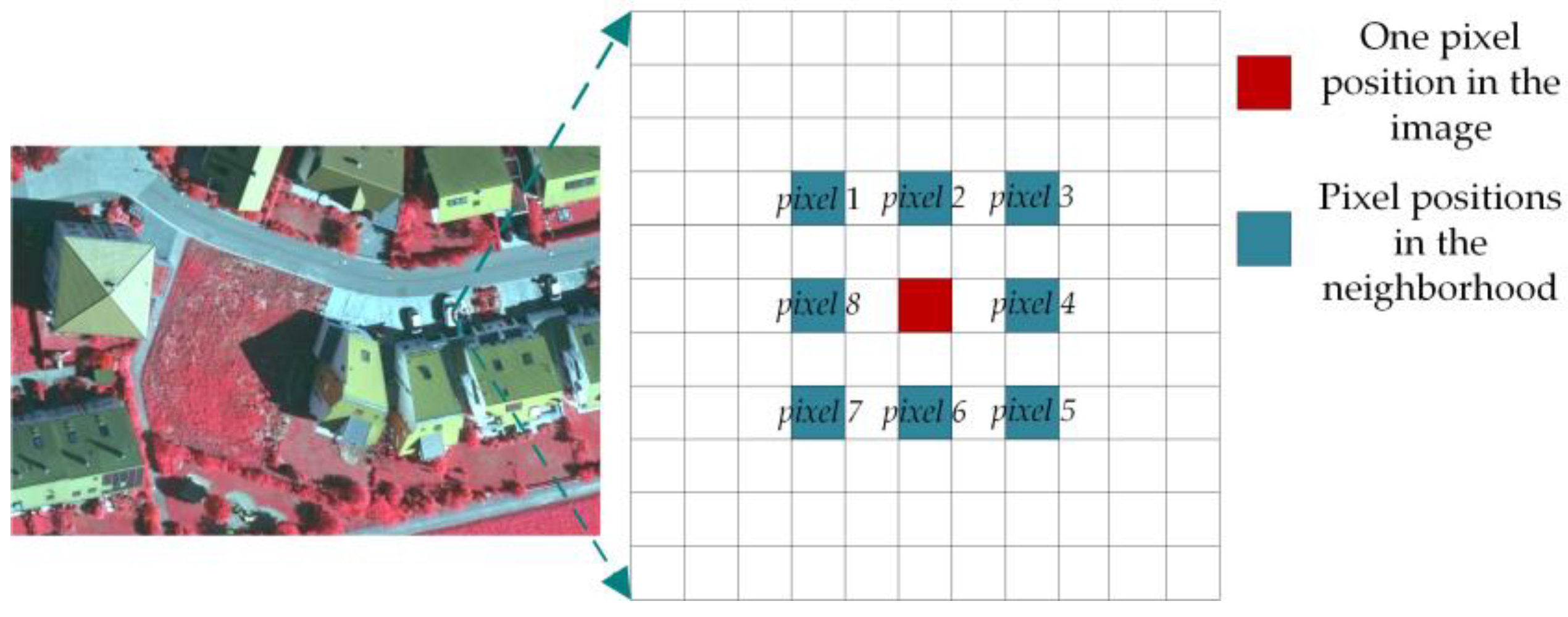

- Detailed knowledge, Kd: As shown in Step 3 of Figure 2, once the fundamental and intuitive information have been used, attention must turn to detailed information, such as the edge of the object, to achieve complete and detailed mask awareness. We therefore define the detailed knowledge as the edge and instantiate it with an additional task branch and a new loss function in the MGWI-Net, so that the model can perceive the mask accurately.
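As an illustration only, the first two components can be sketched in the style of box-supervised methods such as BoxInst [36]: position and size are enforced by comparing the axis projections of a predicted mask with those of its box, and color is used by marking neighboring pixels whose colors are similar enough that they should share a label. This is an assumption made for illustration and is not the MGWI-Net loss itself (see Section 3.2.2 for the actual definitions). The function names `projection_loss` and `color_similar_pairs`, the simplified kernel exp(-||c_i - c_j||), and the toy inputs are all hypothetical.

```python
import numpy as np


def dice_loss(pred, target, eps=1e-6):
    """Dice loss between two non-negative 1-D profiles."""
    inter = np.sum(pred * target)
    denom = np.sum(pred ** 2) + np.sum(target ** 2)
    return 1.0 - (2.0 * inter + eps) / (denom + eps)


def projection_loss(mask_prob, box):
    """Fundamental knowledge (assumed form): the mask's projections onto the
    x and y axes should match those of the box (x0, y0, x1, y1), where the
    upper bounds are exclusive."""
    height, width = mask_prob.shape
    x0, y0, x1, y1 = box
    target_x = np.zeros(width)
    target_x[x0:x1] = 1.0
    target_y = np.zeros(height)
    target_y[y0:y1] = 1.0
    pred_x = mask_prob.max(axis=0)  # max over rows gives one value per column
    pred_y = mask_prob.max(axis=1)  # max over columns gives one value per row
    return dice_loss(pred_x, target_x) + dice_loss(pred_y, target_y)


def color_similar_pairs(image, threshold):
    """Apparent knowledge (assumed form): flag horizontally adjacent pixels
    whose color similarity exp(-||c_i - c_j||) reaches the threshold."""
    image = image.astype(float)
    diff = image[:, 1:, :] - image[:, :-1, :]
    similarity = np.exp(-np.linalg.norm(diff, axis=-1))
    return similarity >= threshold


# Toy usage: a mask that exactly fills its box has a projection loss near zero.
mask = np.zeros((6, 8))
mask[1:4, 2:6] = 1.0
print(projection_loss(mask, (2, 1, 6, 4)))
```

In this sketch a threshold of 0 marks every neighboring pair as similar, so the color term no longer separates object from background; a positive threshold keeps only confident pairs.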

3.2. Meta-Knowledge-Guided Weakly Supervised Instance Segmentation Network

3.2.1. Overview

3.2.2. Weakly Supervised Mask Head

3.2.3. Mask Information Awareness Assist Head

3.2.4. Total Loss Function

4. Experiment and Analysis

4.1. Datasets

4.2. Implementation Details

4.3. Evaluation Metrics

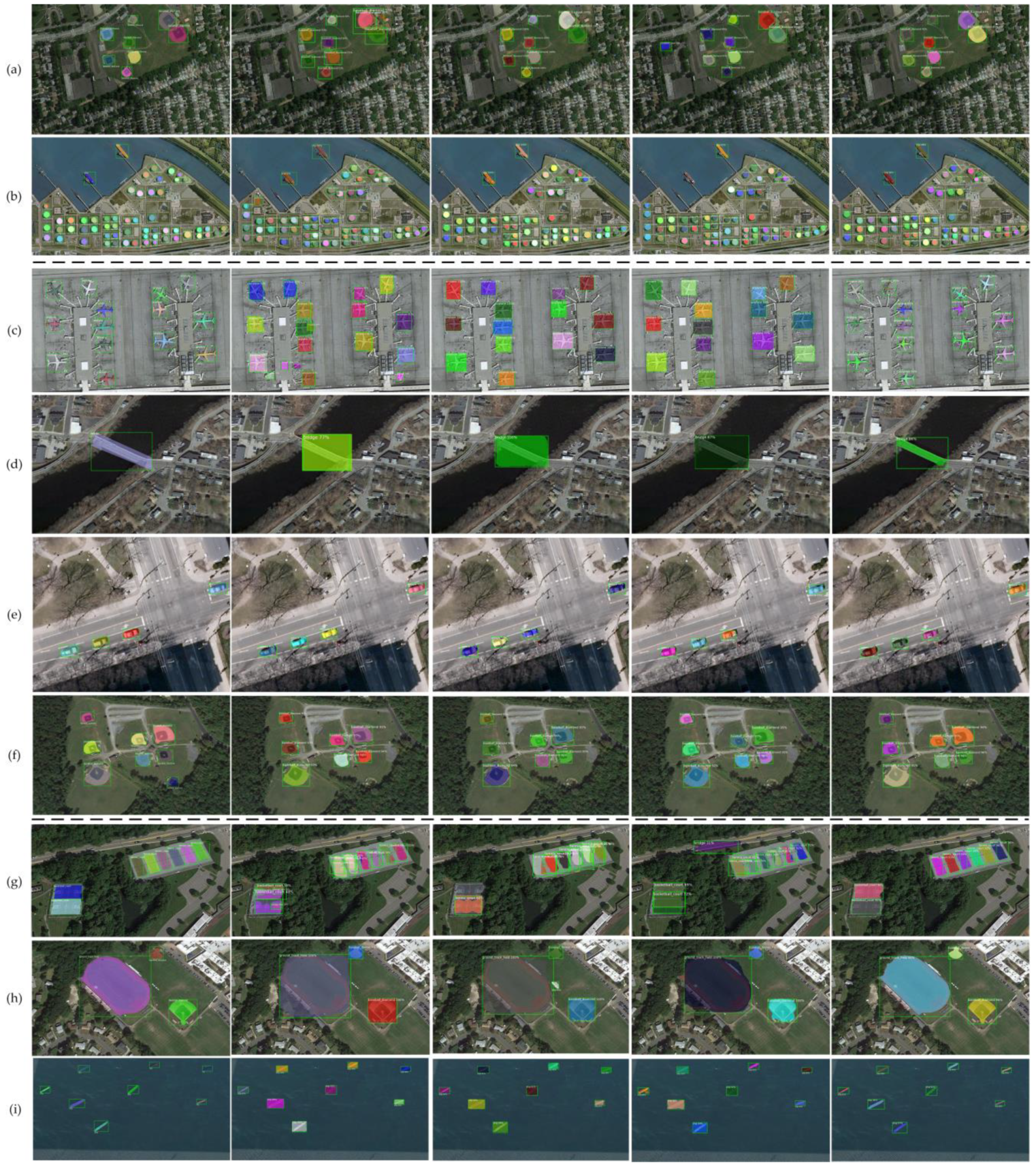

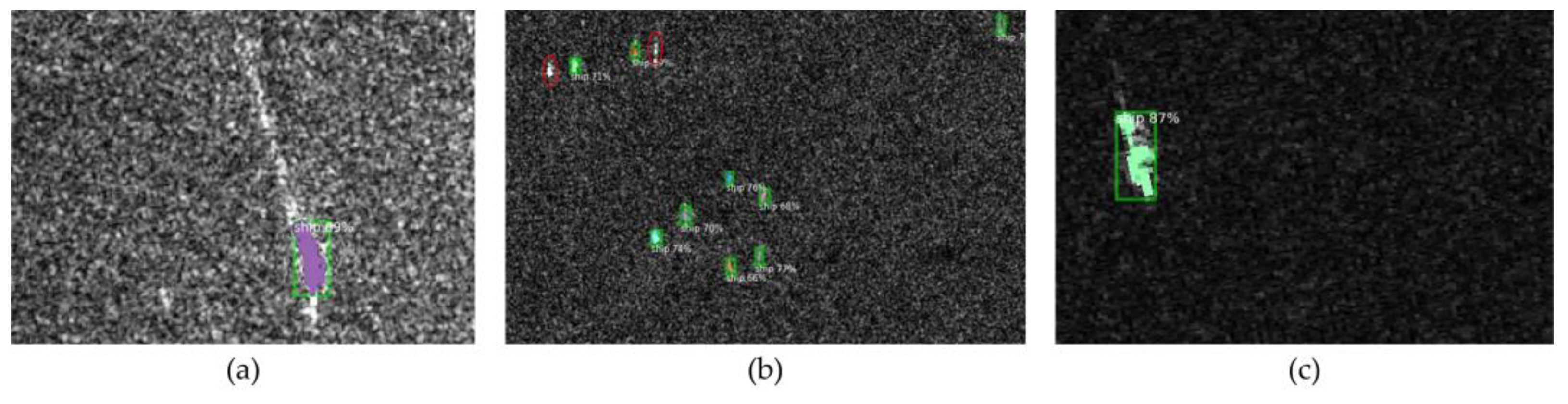

4.4. Impact of Meta-Knowledge in MGWI-Net

4.5. Comparison of Annotation Costs

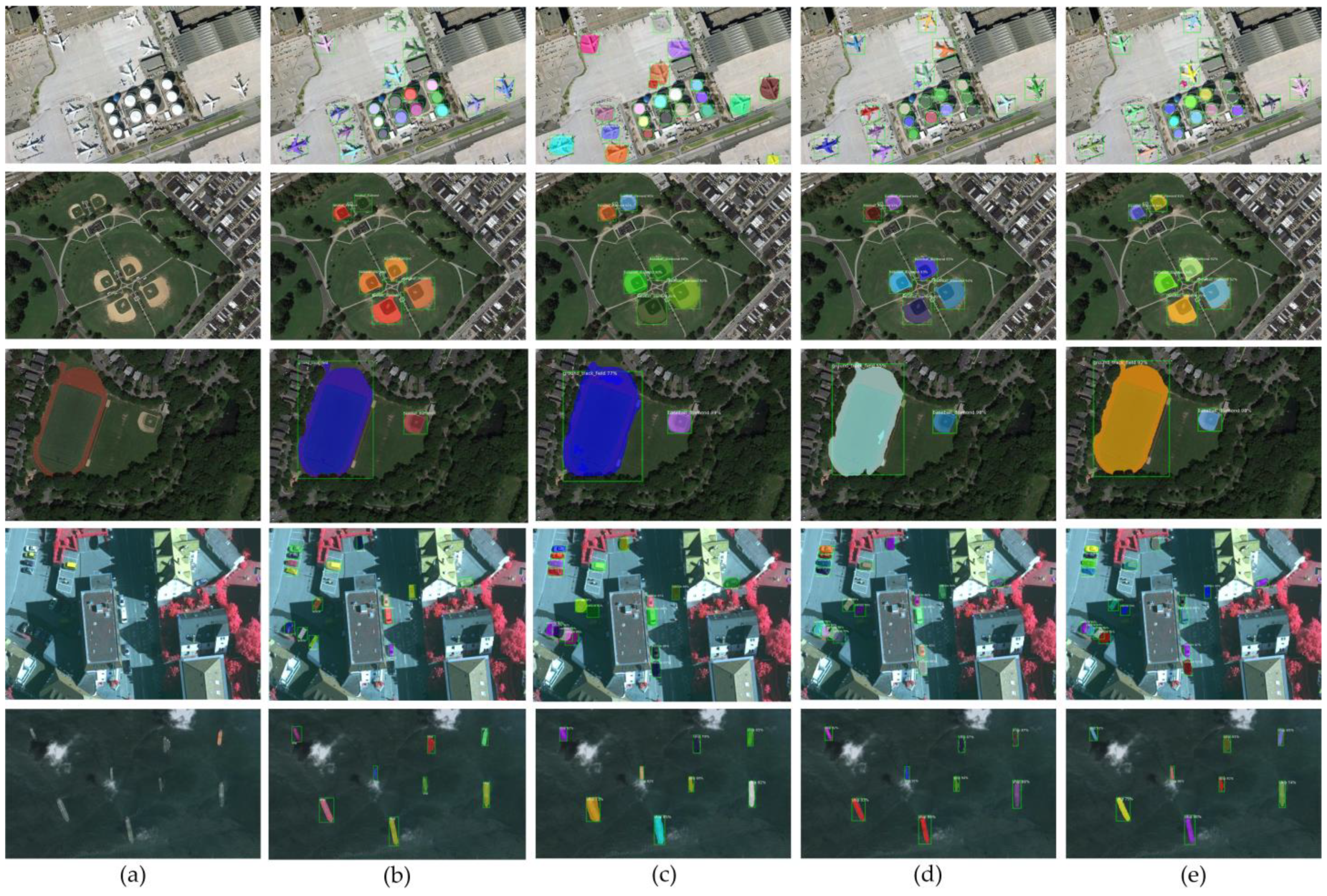

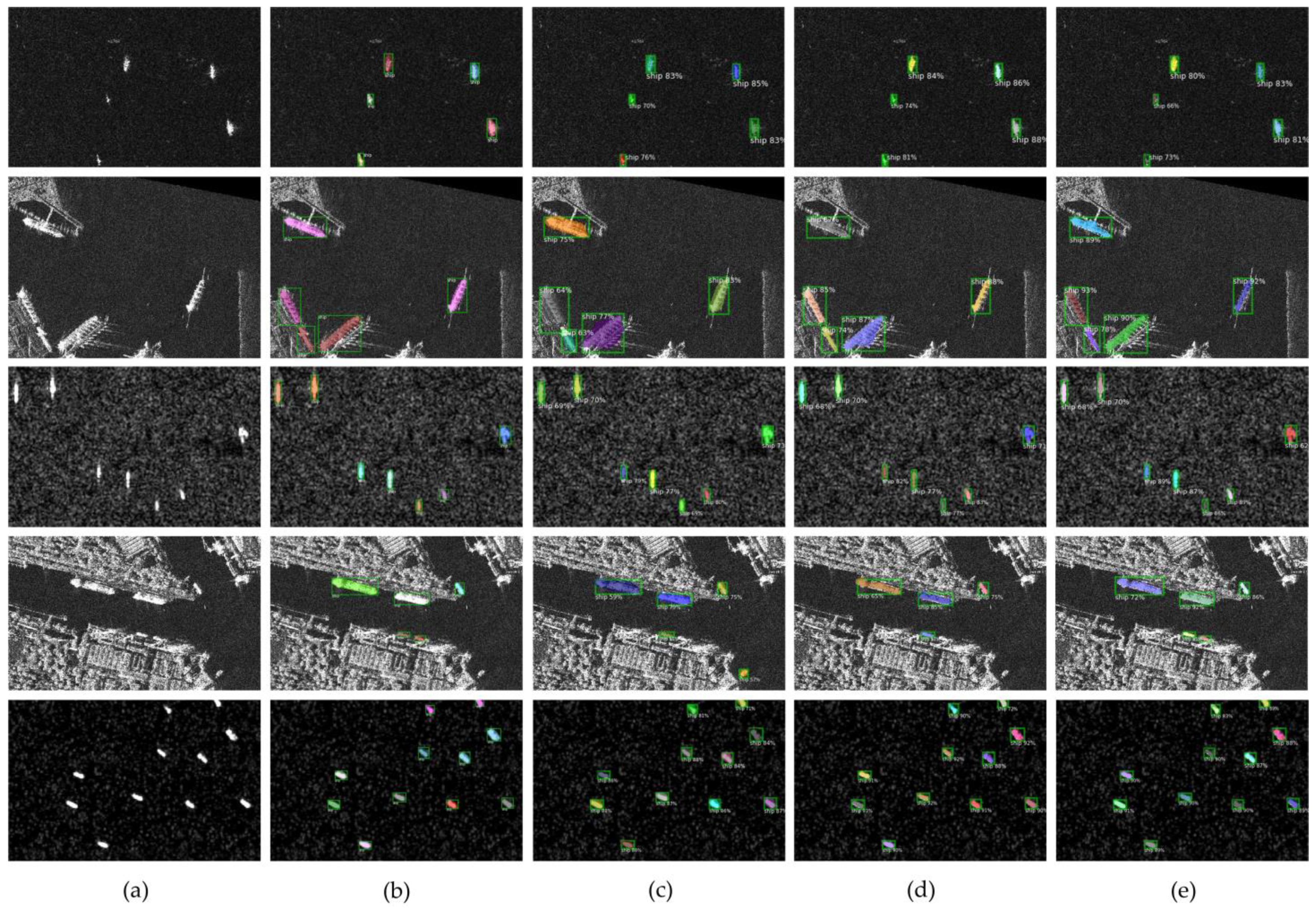

4.6. Comparison of Other Methods

- Weakly supervised mode: For conventional fully supervised instance segmentation methods (such as YOLACT [48], Mask R-CNN [43], and CondInst [50]), we treat the region enclosed by the bounding box as a pseudo pixel-level annotation to guide instance segmentation training and obtain predicted masks. BoxInst [36], DiscoBox [38], DBIN [40], and our proposed MGWI-Net are dedicated WSIS methods that do not require a pixel-level ground truth and can be trained directly with box-level annotations. Although the conventional fully supervised methods use pseudo pixel-level annotations during training, their ground truth is generated from box-level annotations, so their annotation costs match those of the dedicated WSIS methods. Additionally, because this study does not consider domain adaptation tasks, DBIN is used without its domain adaptation structure.

- Weakly supervised + fully supervised mode: The network is trained with a portion of pixel-level annotations together with box-level labels. Only the pixel-level annotations are used to train the mask branch, while the classification and regression branches are trained with both box-level and pixel-level annotations. Compared with the proposed method, this mode provides richer supervision but incurs a higher annotation cost.

- Fully supervised mode: The networks are trained with all pixel-level annotations. Compared with other modes, this mode requires constructing pixel-level annotations for each image, so its supervision information is the richest, and the annotation cost is the highest.
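The pseudo pixel-level annotation used in the weakly supervised mode can be sketched as follows: each box is rasterized into a binary mask whose foreground is the whole box interior. This is a minimal sketch under stated assumptions; the function name `boxes_to_pseudo_masks` and the box convention (x0, y0, x1, y1) with exclusive upper bounds are hypothetical and not taken from the original implementation.

```python
import numpy as np


def boxes_to_pseudo_masks(boxes, height, width):
    """Rasterize boxes (x0, y0, x1, y1) into binary masks of shape (N, H, W).

    Every pixel inside a box is labeled foreground, which is why these masks
    are only a coarse substitute for true pixel-level annotations.
    """
    masks = np.zeros((len(boxes), height, width), dtype=np.uint8)
    for i, (x0, y0, x1, y1) in enumerate(boxes):
        masks[i, y0:y1, x0:x1] = 1
    return masks


# Toy usage: one 2 x 3 box inside a 5 x 5 image.
pseudo = boxes_to_pseudo_masks([(1, 1, 3, 4)], height=5, width=5)
print(pseudo[0])
```

Because the mask is derived entirely from the box, no extra labeling effort is needed beyond the box-level annotation.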

4.7. Ablation Study

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Amitrano, D.; Di Martino, G.; Guida, R.; Iervolino, P.; Iodice, A.; Papa, M.N.; Riccio, D.; Ruello, G. Earth Environmental Monitoring Using Multi-Temporal Synthetic Aperture Radar: A Critical Review of Selected Applications. Remote Sens. 2021, 13, 604.

2. Liu, C.; Xing, C.; Hu, Q.; Wang, S.; Zhao, S.; Gao, M. Stereoscopic Hyperspectral Remote Sensing of the Atmospheric Environment: Innovation and Prospects. Earth Sci. Rev. 2022, 226, 103958.

3. Wu, Z.; Hou, B.; Ren, B.; Ren, Z.; Wang, S.; Jiao, L. A Deep Detection Network Based on Interaction of Instance Segmentation and Object Detection for SAR Images. Remote Sens. 2021, 13, 2582.

4. Zhu, M.; Hu, G.; Li, S.; Zhou, H.; Wang, S.; Feng, Z. A Novel Anchor-Free Method Based on FCOS + ATSS for Ship Detection in SAR Images. Remote Sens. 2022, 14, 2034.

5. Bühler, M.M.; Sebald, C.; Rechid, D.; Baier, E.; Michalski, A.; Rothstein, B.; Nübel, K.; Metzner, M.; Schwieger, V.; Harrs, J.-A.; et al. Application of Copernicus Data for Climate-Relevant Urban Planning Using the Example of Water, Heat, and Vegetation. Remote Sens. 2021, 13, 3634.

6. Yu, D.; Ji, S.; Li, X.; Yuan, Z.; Shen, C. Earthquake Crack Detection from Aerial Images Using a Deformable Convolutional Neural Network. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–12.

7. Zhang, T.; Zhang, X.; Ke, X.; Liu, C.; Xu, X.; Zhan, X.; Wang, C.; Ahmad, I.; Zhou, Y.; Pan, D.; et al. HOG-ShipCLSNet: A Novel Deep Learning Network with HOG Feature Fusion for SAR Ship Classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–22.

8. Liu, X.; Huang, Y.; Wang, C.; Pei, J.; Huo, W.; Zhang, Y.; Yang, J. Semi-Supervised SAR ATR via Conditional Generative Adversarial Network with Multi-Discriminator. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Brussels, Belgium, 11–16 July 2021; pp. 2361–2364.

9. Hao, S.; Wu, B.; Zhao, K.; Ye, Y.; Wang, W. Two-Stream Swin Transformer with Differentiable Sobel Operator for Remote Sensing Image Classification. Remote Sens. 2022, 14, 1507.

10. Miao, W.; Geng, J.; Jiang, W. Semi-Supervised Remote-Sensing Image Scene Classification Using Representation Consistency Siamese Network. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.

11. Chen, S.-B.; Wei, Q.-S.; Wang, W.-Z.; Tang, J.; Luo, B.; Wang, Z.-Y. Remote Sensing Scene Classification via Multi-Branch Local Attention Network. IEEE Trans. Image Process. 2022, 31, 99–109.

12. Shi, C.; Fang, L.; Lv, Z.; Shen, H. Improved Generative Adversarial Networks for VHR Remote Sensing Image Classification. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

13. Zhang, T.; Zhang, X.; Ke, X. Quad-FPN: A Novel Quad Feature Pyramid Network for SAR Ship Detection. Remote Sens. 2021, 13, 2771.

14. Chen, S.; Zhang, J.; Zhan, R.; Zhu, R.; Wang, W. Few Shot Object Detection for SAR Images via Feature Enhancement and Dynamic Relationship Modeling. Remote Sens. 2022, 14, 3669.

15. Zhang, R.; Zhang, X.; Zheng, Y.; Wang, D.; Hua, L. MSCNet: A Multilevel Stacked Context Network for Oriented Object Detection in Optical Remote Sensing Images. Remote Sens. 2022, 14, 5066.

16. Wang, J.; Gong, Z.; Liu, X.; Guo, H.; Yu, D.; Ding, L. Object Detection Based on Adaptive Feature-Aware Method in Optical Remote Sensing Images. Remote Sens. 2022, 14, 3616.

17. Liu, B.; Hu, J.; Bi, X.; Li, W.; Gao, X. PGNet: Positioning Guidance Network for Semantic Segmentation of Very-High-Resolution Remote Sensing Images. Remote Sens. 2022, 14, 4219.

18. Feng, M.; Sun, X.; Dong, J.; Zhao, H. Gaussian Dynamic Convolution for Semantic Segmentation in Remote Sensing Images. Remote Sens. 2022, 14, 5736.

19. Kong, Y.; Li, Q. Semantic Segmentation of Polarimetric SAR Image Based on Dual-Channel Multi-Size Fully Connected Convolutional Conditional Random Field. Remote Sens. 2022, 14, 1502.

20. Colin, A.; Fablet, R.; Tandeo, P.; Husson, R.; Peureux, C.; Longépé, N.; Mouche, A. Semantic Segmentation of Metoceanic Processes Using SAR Observations and Deep Learning. Remote Sens. 2022, 14, 851.

21. Su, H.; Wei, S.; Liu, S.; Liang, J.; Wang, C.; Shi, J.; Zhang, X. HQ-ISNet: High-Quality Instance Segmentation for Remote Sensing Imagery. Remote Sens. 2020, 12, 989.

22. Zeng, X.; Wei, S.; Wei, J.; Zhou, Z.; Shi, J.; Zhang, X.; Fan, F. CPISNet: Delving into Consistent Proposals of Instance Segmentation Network for High-Resolution Aerial Images. Remote Sens. 2021, 13, 2788.

23. Zhang, T.; Zhang, X.; Zhu, P.; Tang, X.; Li, C.; Jiao, L.; Zhou, H. Semantic Attention and Scale Complementary Network for Instance Segmentation in Remote Sensing Images. IEEE Trans. Cybern. 2022, 52, 10999–11013.

24. Zhao, D.; Zhu, C.; Qi, J.; Qi, X.; Su, Z.; Shi, Z. Synergistic Attention for Ship Instance Segmentation in SAR Images. Remote Sens. 2021, 13, 4384.

25. Shi, F.; Zhang, T. An Anchor-Free Network with Box Refinement and Saliency Supplement for Instance Segmentation in Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

26. Ke, X.; Zhang, X.; Zhang, T. GCBANet: A Global Context Boundary-Aware Network for SAR Ship Instance Segmentation. Remote Sens. 2022, 14, 2165.

27. Fan, F.; Zeng, X.; Wei, S.; Zhang, H.; Tang, D.; Shi, J.; Zhang, X. Efficient Instance Segmentation Paradigm for Interpreting SAR and Optical Images. Remote Sens. 2022, 14, 531.

28. Uijlings, J.R.R.; van de Sande, K.E.A.; Gevers, T.; Smeulders, A.W.M. Selective Search for Object Recognition. Int. J. Comput. Vis. 2013, 104, 154–171.

29. Pont-Tuset, J.; Arbelaez, P.; Barron, J.T.; Marques, F.; Malik, J. Multiscale Combinatorial Grouping for Image Segmentation and Object Proposal Generation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 128–140.

30. Zhou, Y.; Zhu, Y.; Ye, Q.; Qiu, Q.; Jiao, J. Weakly Supervised Instance Segmentation Using Class Peak Response. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 3791–3800.

31. Ahn, J.; Cho, S.; Kwak, S. Weakly Supervised Learning of Instance Segmentation with Inter-Pixel Relations. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 2204–2213.

32. Zhu, Y.; Zhou, Y.; Xu, H.; Ye, Q.; Doermann, D.; Jiao, J. Learning Instance Activation Maps for Weakly Supervised Instance Segmentation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 3111–3120.

33. Ge, W.; Guo, S.; Huang, W.; Scott, M.R. Label-PEnet: Sequential Label Propagation and Enhancement Networks for Weakly Supervised Instance Segmentation. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27–28 October 2019; pp. 3345–3354.

34. Arun, A.; Jawahar, C.V.; Kumar, M.P. Weakly Supervised Instance Segmentation by Learning Annotation Consistent Instances. In Proceedings of the European Conference on Computer Vision (ECCV), 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 254–270.

35. Hsu, C.-C.; Hsu, K.-J.; Tsai, C.-C.; Lin, Y.-Y.; Chuang, Y.-Y. Weakly Supervised Instance Segmentation Using the Bounding Box Tightness Prior. In Proceedings of the 2019 Advances in Neural Information Processing Systems (NeurIPS), Vancouver, BC, Canada, 8–14 December 2019; pp. 6586–6597.

36. Tian, Z.; Shen, C.; Wang, X.; Chen, H. BoxInst: High-Performance Instance Segmentation with Box Annotations. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 5443–5452.

37. Hao, S.; Wang, G.; Gu, R. Weakly Supervised Instance Segmentation Using Multi-Prior Fusion. Comput. Vis. Image Underst. 2021, 211, 103261.

38. Lan, S.; Yu, Z.; Choy, C.; Radhakrishnan, S.; Liu, G.; Zhu, Y.; Davis, L.S.; Anandkumar, A. DiscoBox: Weakly Supervised Instance Segmentation and Semantic Correspondence from Box Supervision. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 3386–3396.

39. Wang, X.; Feng, J.; Hu, B.; Ding, Q.; Ran, L.; Chen, X.; Liu, W. Weakly-Supervised Instance Segmentation via Class-Agnostic Learning with Salient Images. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 10225–10235.

40. Li, Y.; Xue, Y.; Li, L.; Zhang, X.; Qian, X. Domain Adaptive Box-Supervised Instance Segmentation Network for Mitosis Detection. IEEE Trans. Med. Imaging. 2022, 41, 2469–2485.

41. Bellver, M.; Salvador, A.; Torres, J.; Giro-i-Nieto, X. Budget-Aware Semi-Supervised Semantic and Instance Segmentation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Long Beach, CA, USA, 16–17 June 2019; pp. 93–102.

42. Krähenbühl, P.; Koltun, V. Efficient Inference in Fully Connected CRFs with Gaussian Edge Potentials. arXiv 2012, arXiv:1210.5644.

43. He, K.; Gkioxari, G.; Dollar, P.; Girshick, R. Mask R-CNN. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 386–397.

44. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 8759–8768.

45. Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving into High Quality Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6154–6162.

46. Chen, K.; Ouyang, W.; Loy, C.C.; Lin, D.; Pang, J.; Wang, J.; Xiong, Y.; Li, X.; Sun, S.; Feng, W.; et al. Hybrid Task Cascade for Instance Segmentation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 4969–4978.

47. Liu, J.-J.; Hou, Q.; Cheng, M.-M.; Wang, C.; Feng, J. Improving Convolutional Networks with Self-Calibrated Convolutions. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10093–10102.

48. Bolya, D.; Zhou, C.; Xiao, F.; Lee, Y.J. YOLACT: Real-Time Instance Segmentation. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27–28 October 2019; pp. 9156–9165.

49. Xie, E.; Sun, P.; Song, X.; Wang, W.; Liang, D.; Shen, C.; Luo, P. PolarMask: Single Shot Instance Segmentation with Polar Representation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 12193–12202.

50. Tian, Z.; Shen, C.; Chen, H. Conditional Convolutions for Instance Segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 254–270.

51. Wang, X.; Kong, T.; Shen, C.; Jiang, Y.; Li, L. SOLO: Segmenting Objects by Locations. In Proceedings of the European Conference on Computer Vision (ECCV), 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 649–665.

52. Wang, X.; Zhang, R.; Kong, T.; Li, L.; Shen, C. SOLOv2: Dynamic and Fast Instance Segmentation. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–12 December 2020; pp. 17721–17732.

53. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

54. Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

55. Finn, C.; Abbeel, P.; Levine, S. Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks. In Proceedings of the 34th International Conference on Machine Learning (ICML), Sydney, NSW, Australia, 6–11 August 2017; pp. 1126–1135.

56. Hospedales, T.M.; Antoniou, A.; Micaelli, P.; Storkey, A.J. Meta-Learning in Neural Networks: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 5149–5169.

57. Zhang, J.; Zhang, X.; Zhang, Y.; Duan, Y.; Li, Y.; Pan, Z. Meta-Knowledge Learning and Domain Adaptation for Unseen Background Subtraction. IEEE Trans. Image Process. 2021, 30, 9058–9068.

58. Tonioni, A.; Rahnama, O.; Joy, T.; Di Stefano, L.; Ajanthan, T.; Torr, P.H.S. Learning to Adapt for Stereo. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 9653–9662.

59. Huisman, M.; van Rijn, J.N.; Plaat, A. A Survey of Deep Meta-Learning. Artif. Intell. Rev. 2021, 54, 4483–4541.

60. Milletari, F.; Navab, N.; Ahmadi, S.-A. V-Net: Fully Convolutional Neural Networks for Volumetric Medical Image Segmentation. In Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; pp. 565–571.

61. Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollar, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327.

62. Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully Convolutional One-Stage Object Detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27–28 October 2019; p. 9626.

63. Su, H.; Wei, S.; Yan, M.; Wang, C.; Shi, J.; Zhang, X. Object Detection and Instance Segmentation in Remote Sensing Imagery Based on Precise Mask R-CNN. In Proceedings of the IGARSS 2019—2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 1454–1457.

64. Cheng, G.; Zhou, P.; Han, J. Learning Rotation-Invariant Convolutional Neural Networks for Object Detection in VHR Optical Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 7405–7415.

65. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

66. Lin, T.-Y.; Dollar, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

67. Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the European Conference on Computer Vision (ECCV), 13th European Conference, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

68. Wada, K. Labelme: Image Polygonal Annotation with Python. Available online: https://github.com/wkentaro/labelme (accessed on 20 July 2018).

69. Bearman, A.; Russakovsky, O.; Ferrari, V.; Fei-Fei, L. What’s the Point: Semantic Segmentation with Point Supervision. In Proceedings of the European Conference on Computer Vision (ECCV), 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; pp. 740–755.

| Head Network | Sub-Component | Structure |
|---|---|---|
| Classification head | - | (Conv3 × 3) × 4 |
| Center-ness head | - | (Conv3 × 3) × 4 |
| Box head | - | (Conv3 × 3) × 4 |
| WSM head | Mask branch | (Conv3 × 3) × 4, Conv1 × 1 |
| WSM head | Controller | Conv3 × 3 |
| WSM head | FCN head | (Conv1 × 1) × 3 |
| MIAA head | Semantic branch | (Conv3 × 3) × 2 |

| Fundamental Knowledge | Apparent Knowledge | Detailed Knowledge | AP | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|---|---|
| √ | | | 29.8 | 62.9 | 25.8 | 14.7 | 27.4 | 39.0 |
| √ | √ | | 49.8 | 80.7 | 51.0 | 35.2 | 46.1 | 57.4 |
| √ | √ | √ | 51.6 | 81.3 | 53.3 | 37.6 | 48.2 | 59.1 |

| Fundamental Knowledge | Apparent Knowledge | Detailed Knowledge | AI | BD | GTF | VC | SH | TC | HB | ST | BC | BR | AP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| √ | | | 0.2 | 47.8 | 66.4 | 16.5 | 8.1 | 35.3 | 11.4 | 59.4 | 45.5 | 7.9 | 29.8 |
| √ | √ | | 15.7 | 77.0 | 90.4 | 40.8 | 45.9 | 68.5 | 12.1 | 76.8 | 63.1 | 8.1 | 49.8 |
| √ | √ | √ | 17.0 | 77.3 | 91.9 | 41.0 | 50.8 | 71.2 | 15.7 | 76.5 | 64.6 | 10.9 | 51.6 |

| Fundamental Knowledge | Apparent Knowledge | Detailed Knowledge | AP | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|---|---|
| √ | | | 38.1 | 88.5 | 21.8 | 39.8 | 42.7 | - |
| √ | √ | | 51.8 | 91.9 | 54.0 | 52.7 | 53.2 | - |
| √ | √ | √ | 53.0 | 92.4 | 57.1 | 53.7 | 54.9 | - |

| Dataset | Type of Annotation | Tann | Ncli |
|---|---|---|---|
| NWPU VHR-10 instance segmentation dataset | Box-level | 51.5 | 12.1 |
| NWPU VHR-10 instance segmentation dataset | Pixel-level | 379.8 | 101.9 |
| NWPU VHR-10 instance segmentation dataset | Rate | 13.6% | 11.9% |
| SSDD dataset | Box-level | 10.6 | 4.5 |
| SSDD dataset | Pixel-level | 94.6 | 30.6 |
| SSDD dataset | Rate | 11.2% | 14.7% |
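The "Rate" rows in the table above are the box-level cost expressed as a percentage of the pixel-level cost. The short check below reproduces them from the tabulated Tann and Ncli values; the helper name `cost_rate` and the dictionary layout are illustrative assumptions.

```python
def cost_rate(box_level, pixel_level):
    """Box-level annotation cost as a percentage of the pixel-level cost."""
    return 100.0 * box_level / pixel_level


# (box-level, pixel-level) pairs copied from the annotation cost table.
costs = {
    "NWPU VHR-10": {"Tann": (51.5, 379.8), "Ncli": (12.1, 101.9)},
    "SSDD": {"Tann": (10.6, 94.6), "Ncli": (4.5, 30.6)},
}

for dataset, metrics in costs.items():
    for name, (box_level, pixel_level) in metrics.items():
        print(f"{dataset} {name}: {cost_rate(box_level, pixel_level):.1f}%")
```

The Tann rates of 13.6% and 11.2% are close to 12.5%, which is where the "about one-eighth of the annotation time" figure comes from.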

| Supervision Mode | Method | Rpix | AP | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|---|---|
| Weakly + fully supervised | YOLACT [48] | 25% | 15.2 | 41.2 | 7.8 | 7.7 | 16.8 | 12.6 |
| Weakly + fully supervised | YOLACT [48] | 50% | 22.5 | 49.7 | 17.0 | 9.6 | 19.9 | 31.5 |
| Weakly + fully supervised | YOLACT [48] | 75% | 27.5 | 54.4 | 27.4 | 12.1 | 25.9 | 34.2 |
| Weakly + fully supervised | Mask R-CNN [43] | 25% | 25.7 | 59.4 | 18.8 | 16.9 | 25.3 | 29.3 |
| Weakly + fully supervised | Mask R-CNN [43] | 50% | 35.5 | 70.8 | 31.3 | 24.6 | 34.2 | 39.9 |
| Weakly + fully supervised | Mask R-CNN [43] | 75% | 49.3 | 82.6 | 51.7 | 36.9 | 47.0 | 53.9 |
| Weakly + fully supervised | CondInst [50] | 25% | 23.9 | 59.8 | 14.8 | 19.8 | 23.7 | 25.3 |
| Weakly + fully supervised | CondInst [50] | 50% | 34.5 | 73.4 | 27.6 | 23.7 | 34.1 | 35.9 |
| Weakly + fully supervised | CondInst [50] | 75% | 49.5 | 85.1 | 50.3 | 35.9 | 48.6 | 53.7 |
| Fully supervised | YOLACT [48] | 100% | 35.6 | 68.4 | 36.4 | 14.8 | 33.3 | 56.0 |
| Fully supervised | Mask R-CNN [43] | 100% | 58.8 | 86.6 | 65.2 | 47.1 | 57.5 | 62.4 |
| Fully supervised | CondInst [50] | 100% | 58.5 | 90.1 | 62.9 | 29.4 | 56.8 | 71.3 |
| Weakly supervised | YOLACT [48] | 0 | 9.8 | 32.9 | 1.3 | 4.4 | 11.3 | 8.0 |
| Weakly supervised | Mask R-CNN [43] | 0 | 19.8 | 54.7 | 9.7 | 7.8 | 19.4 | 24.6 |
| Weakly supervised | CondInst [50] | 0 | 17.1 | 50.5 | 6.7 | 10.7 | 17.7 | 18.5 |
| Weakly supervised | BoxInst [36] | 0 | 47.6 | 78.9 | 49.0 | 33.8 | 43.9 | 55.5 |
| Weakly supervised | DiscoBox [38] | 0 | 46.2 | 79.7 | 47.4 | 29.4 | 42.9 | 57.1 |
| Weakly supervised | DBIN [40] | 0 | 48.3 | 80.2 | 50.5 | 34.5 | 46.1 | 57.0 |
| Weakly supervised | MGWI-Net | 0 | 51.6 | 81.3 | 53.3 | 37.6 | 48.2 | 59.1 |

| Supervision Mode | Method | Rpix | AI | BD | GTF | VC | SH | TC | HB | ST | BC | BR | AP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Weakly + fully supervised | YOLACT [48] | 25% | 0 | 39.1 | 14.2 | 12.2 | 1.5 | 7.6 | 12.2 | 49.8 | 10.3 | 1.4 | 15.2 |
| Weakly + fully supervised | YOLACT [48] | 50% | 0.1 | 55.0 | 49.9 | 11.7 | 4.6 | 17.0 | 19.3 | 52.5 | 13.6 | 1.2 | 22.5 |
| Weakly + fully supervised | YOLACT [48] | 75% | 0.7 | 64.0 | 62.9 | 19.4 | 5.9 | 19.8 | 17.4 | 60.7 | 16.9 | 7.2 | 27.5 |
| Weakly + fully supervised | Mask R-CNN [43] | 25% | 0.1 | 34.2 | 37.4 | 12.5 | 4.7 | 39.3 | 22.5 | 56.6 | 42.0 | 7.8 | 25.7 |
| Weakly + fully supervised | Mask R-CNN [43] | 50% | 8.8 | 49.7 | 50.8 | 28.1 | 14.9 | 44.1 | 30.6 | 59.4 | 52.1 | 16.7 | 35.5 |
| Weakly + fully supervised | Mask R-CNN [43] | 75% | 27.6 | 71.2 | 68.6 | 40.5 | 30.4 | 63.2 | 33.0 | 70.1 | 64.7 | 23.9 | 49.3 |
| Weakly + fully supervised | CondInst [50] | 25% | 0 | 37.5 | 35.7 | 18.2 | 3.0 | 36.6 | 18.2 | 53.8 | 32.5 | 3.5 | 23.9 |
| Weakly + fully supervised | CondInst [50] | 50% | 14.8 | 49.0 | 45.6 | 23.0 | 12.8 | 45.6 | 28.4 | 57.1 | 56.8 | 11.3 | 34.5 |
| Weakly + fully supervised | CondInst [50] | 75% | 30.9 | 68.4 | 64.5 | 41.5 | 31.8 | 68.4 | 30.3 | 65.0 | 66.0 | 27.8 | 49.5 |
| Fully supervised | YOLACT [48] | 100% | 8.2 | 70.5 | 70.8 | 22.7 | 21.5 | 24.3 | 34.8 | 63.4 | 26.5 | 13.5 | 35.6 |
| Fully supervised | Mask R-CNN [43] | 100% | 35.3 | 78.8 | 84.8 | 46.1 | 50.2 | 72.0 | 48.1 | 80.9 | 64.2 | 28.0 | 58.8 |
| Fully supervised | CondInst [50] | 100% | 26.7 | 77.7 | 89.1 | 46.2 | 46.1 | 69.7 | 46.8 | 73.4 | 74.0 | 35.4 | 58.5 |
| Weakly supervised | YOLACT [48] | 0 | 0 | 20.7 | 12.1 | 4.8 | 0.1 | 9.6 | 2.1 | 33.5 | 14.9 | 0.1 | 9.8 |
| Weakly supervised | Mask R-CNN [43] | 0 | 0 | 33.3 | 34.2 | 8.0 | 2.3 | 21.5 | 16.4 | 48.6 | 26.9 | 6.6 | 19.8 |
| Weakly supervised | CondInst [50] | 0 | 0 | 30.7 | 26.8 | 6.6 | 1.1 | 19.2 | 14.2 | 46.1 | 23.1 | 3.1 | 17.1 |
| Weakly supervised | BoxInst [36] | 0 | 12.5 | 76.6 | 89.7 | 38.0 | 47.9 | 65.5 | 11.3 | 75.4 | 58.9 | 6.8 | 47.6 |
| Weakly supervised | DiscoBox [38] | 0 | 12.0 | 77.7 | 91.5 | 33.7 | 42.8 | 64.3 | 10.6 | 74.6 | 57.9 | 6.0 | 46.2 |
| Weakly supervised | DBIN [40] | 0 | 14.0 | 77.1 | 91.2 | 37.8 | 48.6 | 67.8 | 13.0 | 75.2 | 61.9 | 5.4 | 48.3 |
| Weakly supervised | MGWI-Net | 0 | 17.0 | 77.3 | 91.9 | 41.0 | 50.8 | 71.2 | 15.7 | 76.5 | 64.6 | 10.9 | 51.6 |

| Supervision Mode | Method | Rpix | AP | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|---|---|
| Weakly + fully supervised | YOLACT [48] | 25% | 17.4 | 59.0 | 1.5 | 19.7 | 21.0 | - |
| Weakly + fully supervised | YOLACT [48] | 50% | 28.6 | 76.7 | 9.0 | 32.1 | 34.2 | - |
| Weakly + fully supervised | YOLACT [48] | 75% | 39.0 | 79.9 | 32.5 | 40.3 | 45.5 | - |
| Weakly + fully supervised | Mask R-CNN [43] | 25% | 22.8 | 72.4 | 6.3 | 27.2 | 28.5 | - |
| Weakly + fully supervised | Mask R-CNN [43] | 50% | 39.3 | 86.2 | 28.0 | 42.7 | 44.4 | - |
| Weakly + fully supervised | Mask R-CNN [43] | 75% | 54.6 | 90.2 | 63.0 | 56.6 | 57.1 | - |
| Weakly + fully supervised | CondInst [50] | 25% | 18.6 | 65.7 | 2.7 | 22.1 | 23.7 | - |
| Weakly + fully supervised | CondInst [50] | 50% | 38.4 | 87.4 | 28.6 | 41.3 | 43.8 | - |
| Weakly + fully supervised | CondInst [50] | 75% | 54.1 | 93.0 | 59.6 | 54.6 | 56.8 | - |
| Fully supervised | YOLACT [48] | 100% | 44.6 | 86.6 | 41.0 | 45.3 | 48.5 | - |
| Fully supervised | Mask R-CNN [43] | 100% | 64.2 | 94.9 | 80.1 | 62.0 | 64.7 | - |
| Fully supervised | CondInst [50] | 100% | 63.0 | 95.9 | 78.4 | 63.7 | 63.6 | - |
| Weakly supervised | YOLACT [48] | 0 | 12.4 | 49.4 | 0.6 | 15.9 | 17.3 | - |
| Weakly supervised | Mask R-CNN [43] | 0 | 15.5 | 61.0 | 1.6 | 20.2 | 21.1 | - |
| Weakly supervised | CondInst [50] | 0 | 14.8 | 59.1 | 1.4 | 17.7 | 19.6 | - |
| Weakly supervised | BoxInst [36] | 0 | 49.9 | 90.1 | 52.7 | 50.6 | 52.3 | - |
| Weakly supervised | DiscoBox [38] | 0 | 48.4 | 90.2 | 50.4 | 47.2 | 50.6 | - |
| Weakly supervised | DBIN [40] | 0 | 50.6 | 91.7 | 52.8 | 51.3 | 52.0 | - |
| Weakly supervised | MGWI-Net | 0 | 53.0 | 92.4 | 57.1 | 53.7 | 54.9 | - |

| Method | Parameters/M | FPS-NW10 | FPS-SSDD |
|---|---|---|---|
| YOLACT [48] | 34.8 | 16.4 | 22.8 |
| Mask R-CNN [43] | 63.3 | 13.5 | 19.7 |
| CondInst [50] | 53.5 | 10.6 | 15.7 |
| BoxInst [36] | 53.5 | 10.6 | 15.6 |
| DiscoBox [38] | 65.0 | 11.0 | 16.5 |
| DBIN [40] | 55.6 | 10.1 | 15.3 |
| MGWI-Net | 53.7 | 10.4 | 15.4 |

| Dataset | Similarity Threshold θ | AP | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|---|
| NWPU VHR-10 instance segmentation dataset | 0 | 9.9 | 34.4 | 1.4 | 9.9 | 11.3 | 9.7 |
| NWPU VHR-10 instance segmentation dataset | 0.1 | 50.7 | 81.0 | 51.7 | 36.8 | 47.6 | 59.2 |
| NWPU VHR-10 instance segmentation dataset | 0.2 | 51.4 | 80.8 | 53.4 | 37.1 | 48.1 | 58.7 |
| NWPU VHR-10 instance segmentation dataset | 0.3 | 51.6 | 81.3 | 53.3 | 37.6 | 48.2 | 59.1 |
| NWPU VHR-10 instance segmentation dataset | 0.4 | 50.9 | 81.2 | 52.2 | 37.5 | 47.4 | 58.8 |
| SSDD dataset | 0 | 11.0 | 47.6 | 2.1 | 16.6 | 18.2 | - |
| SSDD dataset | 0.1 | 52.6 | 91.4 | 56.2 | 52.8 | 55.0 | - |
| SSDD dataset | 0.2 | 53.3 | 91.9 | 57.5 | 53.5 | 55.6 | - |
| SSDD dataset | 0.3 | 53.0 | 92.4 | 57.1 | 53.7 | 54.9 | - |
| SSDD dataset | 0.4 | 52.2 | 92.0 | 56.8 | 53.2 | 54.1 | - |

| Dataset | λ3 = 0.1 | λ3 = 0.5 | λ3 = 1.0 | λ3 = 1.5 |
|---|---|---|---|---|
| NW10 | 51.6 | 51.4 | 50.6 | 49.9 |
| SSDD | 53.0 | 52.9 | 52.5 | 51.4 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, M.; Zhang, Y.; Chen, E.; Hu, Y.; Xie, Y.; Pan, Z. Meta-Knowledge Guided Weakly Supervised Instance Segmentation for Optical and SAR Image Interpretation. Remote Sens. 2023, 15, 2357. https://doi.org/10.3390/rs15092357
