Three-Dimensional Point Cloud Semantic Segmentation for Cultural Heritage: A Comprehensive Review

Abstract

1. Introduction

- (1) Point clouds in cultural heritage require a higher point density to capture the complex geometric details of object surfaces.

- (2) The basic geometric elements of cultural heritage include many non-planar and curved shapes, irregular forms, and complex structures [33].

- (3) The segmentation categories must be defined before the point clouds are processed, and they depend on the knowledge of cultural heritage experts.

- (4) For the same heritage object, the segmentation categories can differ according to the research objectives and practical applications [34].

- (5) The same segmentation category can show significant morphological differences across heritage sites. For example, different historical periods and architectural styles feature a variety of vaults supported by pillars of various patterns and shapes.

- (6) Applications such as structural analysis and damage detection require highly accurate semantic segmentation of point clouds [35].

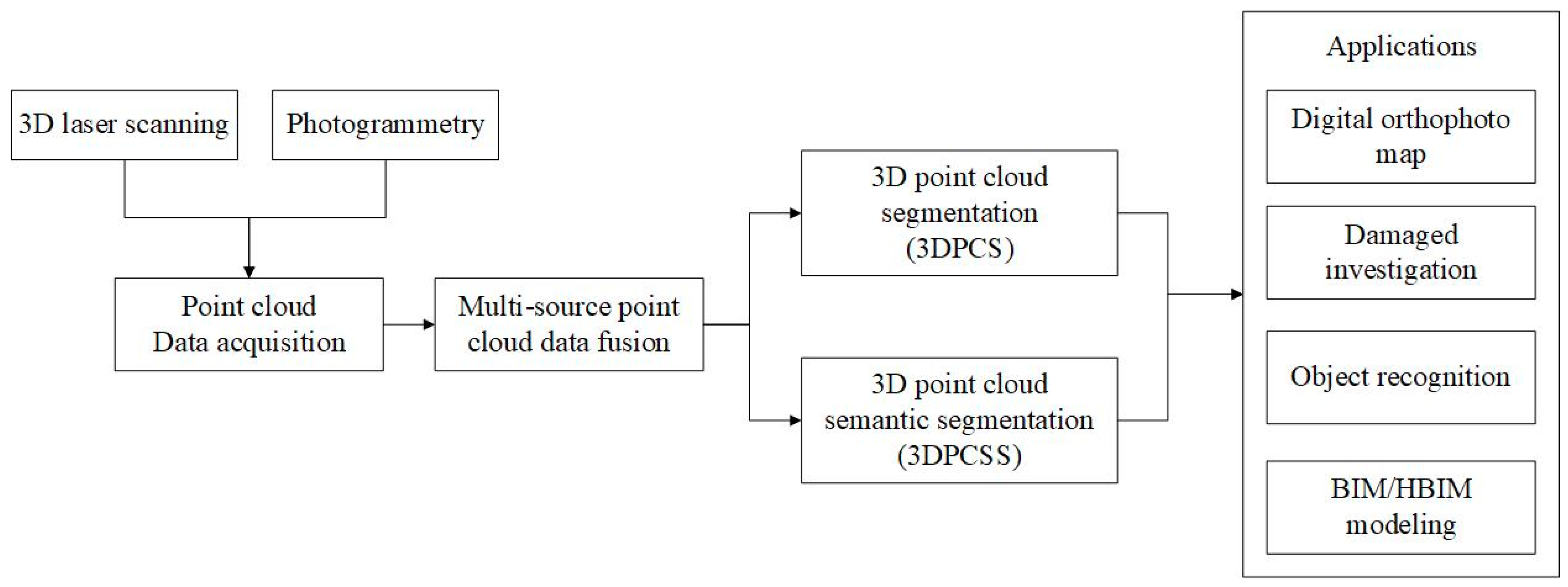

2. Three-Dimensional Point Cloud Data in Cultural Heritage

- (1) A single platform with multiple sensors: a point cloud acquisition platform equipped with several sensors can capture additional information in a single acquisition task. This additional information can improve the results of 3D point cloud segmentation and semantic segmentation.

- (2) Multi-platform data fusion: fusing data from different acquisition platforms combines their respective advantages and yields a more complete, multi-resolution point cloud.
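As a rough illustration of item (2), the sketch below fuses two clouds that are assumed to be already co-registered in the same coordinate frame. It keeps every point of the denser cloud and adds points of the sparser cloud only in voxels the denser cloud does not cover, so the result is both more complete and multi-resolution. The function name `fuse_clouds`, the `voxel` size, and the occupancy rule are illustrative assumptions, not a method taken from the review.

```python
import numpy as np


def fuse_clouds(dense, sparse, voxel=0.1):
    """Fuse two co-registered (N, 3) clouds.

    Every dense point is kept; a sparse point is added only if its voxel
    contains no dense point. Returns the fused cloud and a per-point
    source tag (0 = dense platform, 1 = sparse platform).
    """
    # Voxels already covered by the dense (high-resolution) cloud.
    occupied = {tuple(v) for v in np.floor(dense / voxel).astype(np.int64)}
    # Keep sparse points that fall in uncovered voxels only.
    sparse_keys = np.floor(sparse / voxel).astype(np.int64)
    keep = np.array([tuple(v) not in occupied for v in sparse_keys], dtype=bool)
    fused = np.vstack([dense, sparse[keep]])
    source = np.concatenate([
        np.zeros(len(dense), dtype=int),
        np.ones(int(keep.sum()), dtype=int),
    ])
    return fused, source


# Hypothetical toy data: a close-range scan and a coarser aerial cloud.
dense_cloud = np.array([[0.2, 0.2, 0.2], [0.4, 0.4, 0.4]])
sparse_cloud = np.array([[0.6, 0.6, 0.6], [5.5, 5.5, 5.5]])
fused_cloud, source_tag = fuse_clouds(dense_cloud, sparse_cloud, voxel=1.0)
print(fused_cloud.shape, source_tag)
```

Keeping the source tag lets later steps treat the two platforms differently, for example by weighting points according to their expected accuracy.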

2.1. Point Cloud Data Acquisition Technologies

2.2. A Single Platform with Multiple Sensors

2.3. Multi-Platform Data Fusion

3. 3D Point Cloud Segmentation

3.1. Region Growing

3.2. Model Fitting

3.2.1. Hough Transform (HT)

3.2.2. Random Sample Consensus (RANSAC)

3.3. Unsupervised Clustering Based

4. Three-Dimensional Point Cloud Semantic Segmentation

4.1. Supervised Machine Learning

- (1) Point cloud neighborhood selection.

- (2) Local feature extraction.

- (3) Salient feature selection.

- (4) Point cloud supervised classification.
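The four steps above can be sketched end to end on a toy scene. This is a minimal illustration under stated assumptions, not the pipeline of any cited work: neighborhoods are brute-force k-nearest neighbors, the local features are the common eigenvalue-based linearity, planarity, and sphericity, feature salience is ranked with a two-class Fisher score, and a 1-nearest-neighbor rule in feature space stands in for whatever supervised classifier a real study would use. All function names and the synthetic scene are hypothetical.

```python
import numpy as np


def make_scene(n, spacing):
    """Toy cloud: an n x n planar grid (label 0) plus an n-point line (label 1)."""
    g = np.arange(n) * spacing
    xx, yy = np.meshgrid(g, g)
    plane = np.column_stack([xx.ravel(), yy.ravel(), np.zeros(n * n)])
    line = np.column_stack([g, np.zeros(n), np.full(n, 100.0)])  # far from the plane
    labels = np.concatenate([np.zeros(n * n, dtype=int), np.ones(n, dtype=int)])
    return np.vstack([plane, line]), labels


def knn_indices(points, k):
    """Step 1: brute-force k nearest neighbors (each set includes the point itself)."""
    d2 = ((points[:, None, :] - points[None, :, :]) ** 2).sum(axis=2)
    return np.argsort(d2, axis=1, kind="stable")[:, :k]


def eigen_features(points, k=9):
    """Step 2: linearity, planarity, sphericity from local covariance eigenvalues."""
    feats = np.zeros((len(points), 3))
    for i, idx in enumerate(knn_indices(points, k)):
        cov = np.cov(points[idx].T)
        # eigvalsh returns ascending eigenvalues; clip tiny negative round-off.
        l3, l2, l1 = np.clip(np.linalg.eigvalsh(cov), 0.0, None)
        if l1 > 0:
            feats[i] = [(l1 - l2) / l1, (l2 - l3) / l1, l3 / l1]
    return feats


def select_features(X, y, n_keep):
    """Step 3: keep the n_keep columns with the highest two-class Fisher score."""
    a, b = X[y == 0], X[y == 1]
    scores = (a.mean(axis=0) - b.mean(axis=0)) ** 2 / (a.var(axis=0) + b.var(axis=0) + 1e-12)
    return np.sort(np.argsort(scores)[::-1][:n_keep])


def predict_1nn(X_train, y_train, X_test):
    """Step 4: label each test point with the label of its nearest training feature."""
    d2 = ((X_test[:, None, :] - X_train[None, :, :]) ** 2).sum(axis=2)
    return y_train[d2.argmin(axis=1)]


train_pts, train_y = make_scene(10, 1.0)
test_pts, test_y = make_scene(12, 0.5)
train_f, test_f = eigen_features(train_pts), eigen_features(test_pts)
keep = select_features(train_f, train_y, n_keep=2)
pred = predict_1nn(train_f[:, keep], train_y, test_f[:, keep])
print("selected feature columns:", keep, "accuracy:", (pred == test_y).mean())
```

The brute-force distance matrix is only practical for small clouds; a spatial index such as a k-d tree or octree would replace `knn_indices` on real heritage scans.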

4.2. Deep Learning

4.3. Public Benchmark Dataset

5. Discussion

5.1. Multi-Source Point Cloud Data

5.2. Over-Segmentation Results in Useless Classes

5.3. Supervised Machine Learning Versus Deep Learning

5.4. The Application of 3DPCSS in Cultural Heritage

5.5. Understanding and Cognition of Cultural Heritage 3D Scenes

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Bakirman, T.; Bayram, B.; Akpinar, B.; Karabulut, M.F.; Bayrak, O.C.; Yigitoglu, A.; Seker, D.Z. Implementation of ultra-light UAV systems for cultural heritage documentation. J. Cult. Herit. 2020, 44, 174–184.

- Pan, Y.; Dong, Y.; Wang, D.; Chen, A.; Ye, Z. Three-Dimensional Reconstruction of Structural Surface Model of Heritage Bridges Using UAV-Based Photogrammetric Point Clouds. Remote Sens. 2019, 11, 1204.

- Yastikli, N. Documentation of cultural heritage using digital photogrammetry and laser scanning. J. Cult. Herit. 2007, 8, 423–427.

- Pavlidis, G.; Koutsoudis, A.; Arnaoutoglou, F.; Tsioukas, V.; Chamzas, C. Methods for 3D digitization of Cultural Heritage. J. Cult. Herit. 2007, 8, 93–98.

- Pepe, M.; Costantino, D.; Alfio, V.S.; Restuccia, A.G.; Papalino, N.M. Scan to BIM for the digital management and representation in 3D GIS environment of cultural heritage site. J. Cult. Herit. 2021, 50, 115–125.

- Poux, F.; Neuville, R.; Van Wersch, L.; Nys, G.-A.; Billen, R. 3D Point Clouds in Archaeology: Advances in Acquisition, Processing and Knowledge Integration Applied to Quasi-Planar Objects. Geosciences 2017, 7, 96.

- Barrile, V.; Bernardo, E.; Fotia, A.; Bilotta, G. A Combined Study of Cultural Heritage in Archaeological Museums: 3D Survey and Mixed Reality. Heritage 2022, 5, 1330–1349.

- Bekele, M.K.; Pierdicca, R.; Frontoni, E.; Malinverni, E.S.; Gain, J. A Survey of Augmented, Virtual, and Mixed Reality for Cultural Heritage. J. Comput. Cult. Herit. 2018, 11, 1–36.

- Xie, Y.; Tian, J.; Zhu, X.X. Linking Points with Labels in 3D: A Review of Point Cloud Semantic Segmentation. IEEE Geosci. Remote Sens. Mag. 2020, 8, 38–59.

- Poux, F.; Billen, R. Voxel-based 3D Point Cloud Semantic Segmentation: Unsupervised Geometric and Relationship Featuring vs. Deep Learning Methods. ISPRS Int. J. Geo-Inf. 2019, 8, 213.

- Bosché, F.; Ahmed, M.; Turkan, Y.; Haas, C.T.; Haas, R. The value of integrating Scan-to-BIM and Scan-vs-BIM techniques for construction monitoring using laser scanning and BIM: The case of cylindrical MEP components. Autom. Constr. 2015, 49, 201–213.

- Rocha, G.; Mateus, L.; Fernández, J.; Ferreira, V. A Scan-to-BIM Methodology Applied to Heritage Buildings. Heritage 2020, 3, 47–67.

- Volk, R.; Stengel, J.; Schultmann, F. Building Information Modeling (BIM) for existing buildings—Literature review and future needs. Autom. Constr. 2014, 38, 109–127.

- López, F.; Lerones, P.; Llamas, J.; Gómez-García-Bermejo, J.; Zalama, E. A Review of Heritage Building Information Modeling (H-BIM). Multimodal Technol. Interact. 2018, 2, 21.

- Pocobelli, D.P.; Boehm, J.; Bryan, P.; Still, J.; Grau-Bové, J. BIM for heritage science: A review. Herit. Sci. 2018, 6, 30.

- Yang, S.; Hou, M.; Shaker, A.; Li, S. Modeling and Processing of Smart Point Clouds of Cultural Relics with Complex Geometries. ISPRS Int. J. Geo-Inf. 2021, 10, 617.

- Poux, F.; Billen, R. A Smart Point Cloud Infrastructure for intelligent environments. In Laser Scanning; CRC Press: London, UK, 2019; p. 23.

- Poux, F.; Neuville, R.; Hallot, P.; Billen, R. Model for Semantically Rich Point Cloud Data. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, IV-4/W5, 107–115.

- Alkadri, M.F.; Alam, S.; Santosa, H.; Yudono, A.; Beselly, S.M. Investigating Surface Fractures and Materials Behavior of Cultural Heritage Buildings Based on the Attribute Information of Point Clouds Stored in the TLS Dataset. Remote Sens. 2022, 14, 410.

- Arias, P.; González-Aguilera, D.; Riveiro, B.; Caparrini, N. Orthoimage-Based Documentation of Archaeological Structures: The Case of a Mediaeval Wall in Pontevedra, Spain. Archaeometry 2011, 53, 858–872.

- Chen, S.; Hu, Q.; Wang, S.; Yang, H. A Virtual Restoration Approach for Ancient Plank Road Using Mechanical Analysis with Precision 3D Data of Heritage Site. Remote Sens. 2016, 8, 828.

- Yang, S.; Xu, S.; Huang, W. 3D Point Cloud for Cultural Heritage: A Scientometric Survey. Remote Sens. 2022, 14, 5542.

- Ronchi, A.M. Cultural Content. In eCulture: Cultural Content in the Digital Age; Springer: Berlin/Heidelberg, Germany, 2009; pp. 15–20.

- Van Eetvelde, V.; Antrop, M. Indicators for assessing changing landscape character of cultural landscapes in Flanders (Belgium). Land Use Policy 2009, 26, 901–910.

- Soler, F.; Melero, F.J.; Luzón, M.V. A complete 3D information system for cultural heritage documentation. J. Cult. Herit. 2017, 23, 49–57.

- Sánchez, M.L.; Cabrera, A.T.; Del Pulgar, M.L.G. Guidelines from the heritage field for the integration of landscape and heritage planning: A systematic literature review. Landsc. Urban Plan. 2020, 204, 103931.

- Moyano, J.; Justo-Estebaranz, Á.; Nieto-Julián, J.E.; Barrera, A.O.; Fernández-Alconchel, M. Evaluation of records using terrestrial laser scanner in architectural heritage for information modeling in HBIM construction: The case study of the La Anunciación church (Seville). J. Build. Eng. 2022, 62, 105190.

- Barrile, V.; Fotia, A. A proposal of a 3D segmentation tool for HBIM management. Appl. Geomat. 2021, 14, 197–209.

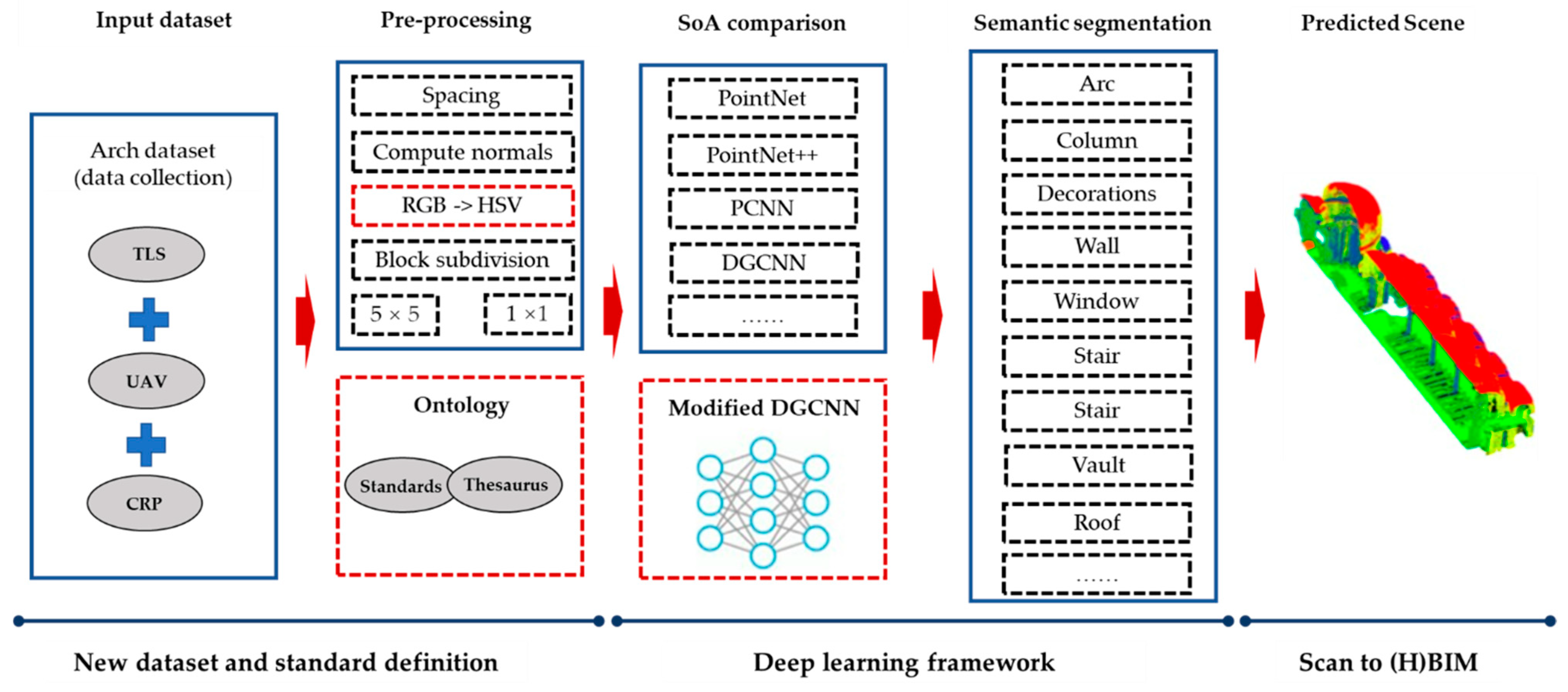

- Pierdicca, R.; Paolanti, M.; Matrone, F.; Martini, M.; Morbidoni, C.; Malinverni, E.S.; Frontoni, E.; Lingua, A.M. Point cloud semantic segmentation using a deep learning framework for cultural heritage. Remote Sens. 2020, 12, 1005.

- Chew, A.W.Z.; Ji, A.; Zhang, L. Large-scale 3D point-cloud semantic segmentation of urban and rural scenes using data volume decomposition coupled with pipeline parallelism. Autom. Constr. 2022, 133, 103995.

- Chen, Y.; Xiong, Y.; Zhang, B.; Zhou, J.; Zhang, Q. 3D point cloud semantic segmentation toward large-scale unstructured agricultural scene classification. Comput. Electron. Agric. 2021, 190, 106445.

- Grandio, J.; Riveiro, B.; Soilán, M.; Arias, P. Point cloud semantic segmentation of complex railway environments using deep learning. Autom. Constr. 2022, 141, 104425.

- Angjeliu, G.; Cardani, G.; Coronelli, D. A parametric model for ribbed masonry vaults. Autom. Constr. 2019, 105, 102785.

- Grilli, E.; Özdemir, E.; Remondino, F. Application of Machine and Deep Learning Strategies for The Classification of Heritage Point Clouds. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-4/W18, 447–454.

- Hamid-Lakzaeian, F. Point cloud segmentation and classification of structural elements in multi-planar masonry building facades. Autom. Constr. 2020, 118, 103232.

- Grilli, E.; Remondino, F. Classification of 3D Digital Heritage. Remote Sens. 2019, 11, 847.

- Li, Y.; Luo, Y.; Gu, X.; Chen, D.; Gao, F.; Shuang, F. Point Cloud Classification Algorithm Based on the Fusion of the Local Binary Pattern Features and Structural Features of Voxels. Remote Sens. 2021, 13, 3156.

- Hackel, T.; Wegner, J.D.; Savinov, N.; Ladicky, L.; Schindler, K.; Pollefeys, M. Large-Scale Supervised Learning For 3D Point Cloud Labeling: Semantic3d.Net. Photogramm. Eng. Remote Sens. 2018, 84, 297–308.

- Ramiya, A.M.; Nidamanuri, R.R.; Ramakrishnan, K. A supervoxel-based spectro-spatial approach for 3D urban point cloud labelling. Int. J. Remote Sens. 2016, 37, 4172–4200.

- Grilli, E.; Menna, F.; Remondino, F. A Review of Point Clouds Segmentation and Classification Algorithms. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 339–344.

- van Oosterom, P.; Martinez-Rubi, O.; Ivanova, M.; Horhammer, M.; Geringer, D.; Ravada, S.; Tijssen, T.; Kodde, M.; Gonçalves, R. Massive point cloud data management: Design, implementation and execution of a point cloud benchmark. Comput. Graph. 2015, 49, 92–125.

- Yang, J.; Huang, X. A Hybrid Spatial Index for Massive Point Cloud Data Management and Visualization. Trans. GIS 2014, 18, 97–108.

- Yang, X.; Grussenmeyer, P.; Koehl, M.; Macher, H.; Murtiyoso, A.; Landes, T. Review of built heritage modelling: Integration of HBIM and other information techniques. J. Cult. Herit. 2020, 46, 350–360.

- Bassier, M.; Vergauwen, M. Unsupervised reconstruction of Building Information Modeling wall objects from point cloud data. Autom. Constr. 2020, 120, 103338.

- Moyano, J.; León, J.; Nieto-Julián, J.E.; Bruno, S. Semantic interpretation of architectural and archaeological geometries: Point cloud segmentation for HBIM parameterisation. Autom. Constr. 2021, 130, 103856.

- Rashdi, R.; Martínez-Sánchez, J.; Arias, P.; Qiu, Z. Scanning Technologies to Building Information Modelling: A Review. Infrastructures 2022, 7, 49.

- Nguyen, A.; Le, B. 3D Point Cloud Segmentation: A survey. In Proceedings of the 6th IEEE International Conference on Robotics, Automation and Mechatronics (RAM), De La Salle Univ, Manila, Philippines, 12–15 November 2013; pp. 225–230.

- Salonia, P.; Scolastico, S.; Pozzi, A.; Marcolongo, A.; Messina, T.L. Multi-scale cultural heritage survey: Quick digital photogrammetric systems. J. Cult. Herit. 2009, 10, e59–e64.

- McCarthy, J. Multi-image photogrammetry as a practical tool for cultural heritage survey and community engagement. J. Archaeol. Sci. 2014, 43, 175–185.

- Nikolakopoulos, K.G.; Soura, K.; Koukouvelas, I.K.; Argyropoulos, N.G. UAV vs. classical aerial photogrammetry for archaeological studies. J. Archaeol. Sci. Rep. 2017, 14, 758–773.

- Vavulin, M.V.; Chugunov, K.V.; Zaitceva, O.V.; Vodyasov, E.V.; Pushkarev, A.A. UAV-based photogrammetry: Assessing the application potential and effectiveness for archaeological monitoring and surveying in the research on the ‘valley of the kings’ (Tuva, Russia). Digit. Appl. Archaeol. Cult. Herit. 2021, 20, e00172.

- Jeon, E.-I.; Yu, S.-J.; Seok, H.-W.; Kang, S.-J.; Lee, K.-Y.; Kwon, O.-S. Comparative evaluation of commercial softwares in UAV imagery for cultural heritage recording: Case study for traditional building in South Korea. Spat. Inf. Res. 2017, 25, 701–712.

- Kingsland, K. Comparative analysis of digital photogrammetry software for cultural heritage. Digit. Appl. Archaeol. Cult. Herit. 2020, 18, e00157.

- Szeliski, R. Computer Vision: Algorithms and Applications; Springer Nature: Berlin/Heidelberg, Germany, 2022.

- Aicardi, I.; Chiabrando, F.; Maria Lingua, A.; Noardo, F. Recent trends in cultural heritage 3D survey: The photogrammetric computer vision approach. J. Cult. Herit. 2018, 32, 257–266.

- Murtiyoso, A.; Grussenmeyer, P. Documentation of heritage buildings using close-range UAV images: Dense matching issues, comparison and case studies. Photogramm. Rec. 2017, 32, 206–229.

- Grussenmeyer, P.; Alby, E.; Landes, T.; Koehl, M.; Guillemin, S.; Hullo, J.-F.; Assali, P.; Smigiel, E. Recording approach of heritage sites based on merging point clouds from high resolution photogrammetry and terrestrial laser scanning. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 39, 553–558.

- Remondino, F.; Spera, M.G.; Nocerino, E.; Menna, F.; Nex, F. State of the art in high density image matching. Photogramm. Rec. 2014, 29, 144–166.

- Pepe, M.; Alfio, V.S.; Costantino, D. UAV Platforms and the SfM-MVS Approach in the 3D Surveys and Modelling: A Review in the Cultural Heritage Field. Appl. Sci. 2022, 12, 12886.

- Capolupo, A. Accuracy assessment of cultural heritage models extracting 3D point cloud geometric features with RPAS SfM-MVS and TLS techniques. Drones 2021, 5, 145.

- Koutsoudis, A.; Ioannakis, G.; Arnaoutoglou, F.; Kiourt, C.; Chamzas, C. 3D reconstruction challenges using structure-from-motion. In Applying Innovative Technologies in Heritage Science; IGI Global: Hershey, PA, USA, 2020; pp. 138–152.

- Adamopoulos, E.; Rinaudo, F. Enhancing image-based multiscale heritage recording with near-infrared data. ISPRS Int. J. Geo-Inf. 2020, 9, 269.

- Peppa, M.; Mills, J.; Fieber, K.; Haynes, I.; Turner, S.; Turner, A.; Douglas, M.; Bryan, P. Archaeological feature detection from archive aerial photography with a SfM-MVS and image enhancement pipeline. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, XLII-2, 869–875.

- Ju, Y.; Shi, B.; Jian, M.; Qi, L.; Dong, J.; Lam, K.-M. NormAttention-PSN: A High-frequency Region Enhanced Photometric Stereo Network with Normalized Attention. Int. J. Comput. Vis. 2022, 130, 3014–3034.

- Woodham, R.J. Photometric method for determining surface orientation from multiple images. Opt. Eng. 1980, 19, 139–144.

- Risbøl, O.; Briese, C.; Doneus, M.; Nesbakken, A. Monitoring cultural heritage by comparing DEMs derived from historical aerial photographs and airborne laser scanning. J. Cult. Herit. 2015, 16, 202–209.

- Damięcka-Suchocka, M.; Katzer, J.; Suchocki, C. Application of TLS Technology for Documentation of Brickwork Heritage Buildings and Structures. Coatings 2022, 12, 1963.

- di Filippo, A.; Sánchez-Aparicio, L.; Barba, S.; Martín-Jiménez, J.; Mora, R.; González Aguilera, D. Use of a Wearable Mobile Laser System in Seamless Indoor 3D Mapping of a Complex Historical Site. Remote Sens. 2018, 10, 1897.

- Lou, L.; Wei, C.; Wu, H.; Yang, C. Cave feature extraction and classification from rockery point clouds acquired with handheld laser scanners. Herit. Sci. 2022, 10, 177.

- Ramm, R.; Heinze, M.; Kühmstedt, P.; Christoph, A.; Heist, S.; Notni, G. Portable solution for high-resolution 3D and colour texture on-site digitization of cultural heritage objects. J. Cult. Herit. 2022, 53, 165–175.

- Gomes, L.; Regina Pereira Bellon, O.; Silva, L. 3D reconstruction methods for digital preservation of cultural heritage: A survey. Pattern Recognit. Lett. 2014, 50, 3–14.

- Maté-González, M.Á.; Di Pietra, V.; Piras, M. Evaluation of Different LiDAR Technologies for the Documentation of Forgotten Cultural Heritage under Forest Environments. Sensors 2022, 22, 6314.

- Ruiz, R.M.; Torres, M.T.M.; Allegue, P.S. Comparative Analysis Between the Main 3D Scanning Techniques: Photogrammetry, Terrestrial Laser Scanner, and Structured Light Scanner in Religious Imagery: The Case of The Holy Christ of the Blood. J. Comput. Cult. Herit. 2022, 15, 1–23.

- Nagai, M.; Tianen, C.; Shibasaki, R.; Kumagai, H.; Ahmed, A. UAV-Borne 3-D Mapping System by Multisensor Integration. IEEE Trans. Geosci. Remote Sens. 2009, 47, 701–708.

- Erenoglu, R.C.; Akcay, O.; Erenoglu, O. An UAS-assisted multi-sensor approach for 3D modeling and reconstruction of cultural heritage site. J. Cult. Herit. 2017, 26, 79–90.

- Rodríguez-Gonzálvez, P.; Jiménez Fernández-Palacios, B.; Muñoz-Nieto, Á.; Arias-Sanchez, P.; Gonzalez-Aguilera, D. Mobile LiDAR System: New Possibilities for the Documentation and Dissemination of Large Cultural Heritage Sites. Remote Sens. 2017, 9, 189.

- Milella, A.; Reina, G.; Nielsen, M. A multi-sensor robotic platform for ground mapping and estimation beyond the visible spectrum. Precis. Agric. 2018, 20, 423–444.

- Hakala, T.; Suomalainen, J.; Kaasalainen, S.; Chen, Y. Full waveform hyperspectral LiDAR for terrestrial laser scanning. Opt. Express 2012, 20, 7119–7127.

- Zlot, R.; Bosse, M.; Greenop, K.; Jarzab, Z.; Juckes, E.; Roberts, J. Efficiently capturing large, complex cultural heritage sites with a handheld mobile 3D laser mapping system. J. Cult. Herit. 2014, 15, 670–678.

- Alsadik, B. Practicing the geometric designation of sensor networks using the Crowdsource 3D models of cultural heritage objects. J. Cult. Herit. 2018, 31, 202–207.

- Ramos, M.M.; Remondino, F. Data fusion in Cultural Heritage—A Review. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, XL-5/W7, 359–363.

- Fassi, F.; Achille, C.; Fregonese, L. Surveying and modelling the main spire of Milan Cathedral using multiple data sources. Photogramm. Rec. 2011, 26, 462–487.

- Achille, C.; Adami, A.; Chiarini, S.; Cremonesi, S.; Fassi, F.; Fregonese, L.; Taffurelli, L. UAV-Based Photogrammetry and Integrated Technologies for Architectural Applications--Methodological Strategies for the After-Quake Survey of Vertical Structures in Mantua (Italy). Sensors 2015, 15, 15520–15539.

- Galeazzi, F. Towards the definition of best 3D practices in archaeology: Assessing 3D documentation techniques for intra-site data recording. J. Cult. Herit. 2016, 17, 159–169.

- Martínez-Espejo Zaragoza, I.; Caroti, G.; Piemonte, A.; Riedel, B.; Tengen, D.; Niemeier, W. Structure from motion (SfM) processing of UAV images and combination with terrestrial laser scanning, applied for a 3D-documentation in a hazardous situation. Geomat. Nat. Hazards Risk 2017, 8, 1492–1504.

- Herrero-Tejedor, T.R.; Arques Soler, F.; Lopez-Cuervo Medina, S.; de la O Cabrera, M.R.; Martin Romero, J.L. Documenting a cultural landscape using point-cloud 3d models obtained with geomatic integration techniques. The case of the El Encin atomic garden, Madrid (Spain). PLoS ONE 2020, 15, e0235169.

- Guidi, G.; Russo, M.; Ercoli, S.; Remondino, F.; Rizzi, A.; Menna, F. A multi-resolution methodology for the 3D modeling of large and complex archeological areas. Int. J. Archit. Comput. 2009, 7, 39–55.

- Abate, D.; Sturdy-Colls, C. A multi-level and multi-sensor documentation approach of the Treblinka extermination and labor camps. J. Cult. Herit. 2018, 34, 129–135.

- Jo, Y.; Hong, S. Three-Dimensional Digital Documentation of Cultural Heritage Site Based on the Convergence of Terrestrial Laser Scanning and Unmanned Aerial Vehicle Photogrammetry. ISPRS Int. J. Geo-Inf. 2019, 8, 53.

- Nurunnabi, A.; Belton, D.; West, G. Robust segmentation in laser scanning 3D point cloud data. In Proceedings of the 2012 International Conference on Digital Image Computing Techniques and Applications (DICTA), Fremantle, Australia, 3–5 December 2012; pp. 1–8.

- Su, Z.; Gao, Z.; Zhou, G.; Li, S.; Song, L.; Lu, X.; Kang, N. Building Plane Segmentation Based on Point Clouds. Remote Sens. 2021, 14, 95.

- Grussenmeyer, P.; Landes, T.; Voegtle, T.; Ringle, K. Comparison methods of terrestrial laser scanning, photogrammetry and tacheometry data for recording of cultural heritage buildings. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, 37, 213–218.

- Paiva, P.V.V.; Cogima, C.K.; Dezen-Kempter, E.; Carvalho, M.A.G. Historical building point cloud segmentation combining hierarchical watershed transform and curvature analysis. Pattern Recognit. Lett. 2020, 135, 114–121.

- Deschaud, J.-E.; Goulette, F. A fast and accurate plane detection algorithm for large noisy point clouds using filtered normals and voxel growing. In 3DPVT; Hal Archives-Ouvertes: Paris, France, 2010.

- Fan, Y.; Wang, M.; Geng, N.; He, D.; Chang, J.; Zhang, J.J. A self-adaptive segmentation method for a point cloud. Vis. Comput. 2017, 34, 659–673.

- Ning, X.; Zhang, X.; Wang, Y.; Jaeger, M. Segmentation of architecture shape information from 3D point cloud. In Proceedings of the 8th International Conference on Virtual Reality Continuum and its Applications in Industry, Yokohama, Japan, 14–15 December 2009; pp. 127–132.

- Saglam, A.; Makineci, H.B.; Baykan, N.A.; Baykan, Ö.K. Boundary constrained voxel segmentation for 3D point clouds using local geometric differences. Expert Syst. Appl. 2020, 157, 113439.

- Aijazi, A.; Checchin, P.; Trassoudaine, L. Segmentation Based Classification of 3D Urban Point Clouds: A Super-Voxel Based Approach with Evaluation. Remote Sens. 2013, 5, 1624–1650.

- Vo, A.-V.; Truong-Hong, L.; Laefer, D.F.; Bertolotto, M. Octree-based region growing for point cloud segmentation. ISPRS J. Photogramm. Remote Sens. 2015, 104, 88–100.

- Xiao, J.; Zhang, J.; Adler, B.; Zhang, H.; Zhang, J. Three-dimensional point cloud plane segmentation in both structured and unstructured environments. Robot. Auton. Syst. 2013, 61, 1641–1652.

- Dong, Z.; Yang, B.; Hu, P.; Scherer, S. An efficient global energy optimization approach for robust 3D plane segmentation of point clouds. ISPRS J. Photogramm. Remote Sens. 2018, 137, 112–133.

- Pérez-Sinticala, C.; Janvier, R.; Brunetaud, X.; Treuillet, S.; Aguilar, R.; Castañeda, B. Evaluation of Primitive Extraction Methods from Point Clouds of Cultural Heritage Buildings. In Structural Analysis of Historical Constructions; RILEM Bookseries; Springer: Cham, Switzerland, 2019; pp. 2332–2341.

- Poux, F.; Mattes, C.; Selman, Z.; Kobbelt, L. Automatic region-growing system for the segmentation of large point clouds. Autom. Constr. 2022, 138, 104250.

- Dalitz, C.; Schramke, T.; Jeltsch, M. Iterative Hough Transform for Line Detection in 3D Point Clouds. Image Process. On Line 2017, 7, 184–196.

- Tian, P.; Hua, X.; Yu, K.; Tao, W. Robust Segmentation of Building Planar Features From Unorganized Point Cloud. IEEE Access 2020, 8, 30873–30884.

- Rabbani, T.; Van Den Heuvel, F. Efficient hough transform for automatic detection of cylinders in point clouds. ISPRS WG III/3 III/4 2005, 3, 60–65.

- Camurri, M.; Vezzani, R.; Cucchiara, R. 3D Hough transform for sphere recognition on point clouds. Mach. Vis. Appl. 2014, 25, 1877–1891.

- Borrmann, D.; Elseberg, J.; Lingemann, K.; Nüchter, A. The 3d hough transform for plane detection in point clouds: A review and a new accumulator design. 3D Res. 2011, 2, 3.

- Hassanein, A.S.; Mohammad, S.; Sameer, M.; Ragab, M.E. A survey on Hough transform, theory, techniques and applications. arXiv 2015, arXiv:1502.02160.

- Kaiser, A.; Ybanez Zepeda, J.A.; Boubekeur, T. A survey of simple geometric primitives detection methods for captured 3D data. In Computer Graphics Forum; Wiley Online Library: Hoboken, NJ, USA, 2019; pp. 167–196.

- Lerma, J.; Biosca, J. Segmentation and filtering of laser scanner data for cultural heritage. In Proceedings of the CIPA 2005 XX International Symposium, Torino, Italy, 26 September–1 October 2005; p. 6.

- Pierrot-Deseilligny, M.; De Luca, L.; Remondino, F. Automated image-based procedures for accurate artifacts 3D Modeling and orthoimage. J. Geoinform. FCE CTU 2011, 6, 1–10.

- Markiewicz, J.; Podlasiak, P.; Zawieska, D. A New Approach to the Generation of Orthoimages of Cultural Heritage Objects—Integrating TLS and Image Data. Remote Sens. 2015, 7, 16963–16985.

- Maltezos, E.; Ioannidis, C. Plane detection of polyhedral cultural heritage monuments: The case of tower of winds in Athens. J. Archaeol. Sci. Rep. 2018, 19, 562–574.

- Alshawabkeh, Y. Linear feature extraction from point cloud using colour information. Herit. Sci. 2020, 8, 28.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395. [Google Scholar] [CrossRef]

- Li, L.; Yang, F.; Zhu, H.; Li, D.; Li, Y.; Tang, L. An improved RANSAC for 3D point cloud plane segmentation based on normal distribution transformation cells. Remote Sens. 2017, 9, 433. [Google Scholar] [CrossRef]

- Xu, B.; Jiang, W.; Shan, J.; Zhang, J.; Li, L. Investigation on the weighted ransac approaches for building roof plane segmentation from lidar point clouds. Remote Sens. 2015, 8, 5. [Google Scholar] [CrossRef]

- Yang, M.Y.; Förstner, W. Plane detection in point cloud data. In Proceedings of the 2nd International Conference on Machine Control Guidance, Bonn, Germany, 25 January 2010; pp. 95–104. [Google Scholar]

- Tittmann, P.; Shafii, S.; Hartsough, B.; Hamann, B. Tree detection and delineation from LiDAR point clouds using RANSAC. In Proceedings of the SilviLaser 2011, Hobart, AU, USA, 16–19 October 2011; pp. 1–23. [Google Scholar]

- Xu, B.; Chen, Z.; Zhu, Q.; Ge, X.; Huang, S.; Zhang, Y.; Liu, T.; Wu, D. Geometrical Segmentation of Multi-Shape Point Clouds Based on Adaptive Shape Prediction and Hybrid Voting RANSAC. Remote Sens. 2022, 14, 2024. [Google Scholar] [CrossRef]

- Aitelkadi, K.; Tahiri, D.; Simonetto, E.; Sebari, I.; Polidori, L. Segmentation of heritage building by means of geometric and radiometric components from terrestrial laser scanning. ISPRS Ann. Photogramm. Remote Sens Spat. Inf. Sci 2013, 1, 1–6. [Google Scholar] [CrossRef]

- Chan, T.O.; Xiao, H.; Liu, L.; Sun, Y.; Chen, T.; Lang, W.; Li, M.H. A Post-Scan Point Cloud Colourization Method for Cultural Heritage Documentation. ISPRS Int. J. Geo-Inf. 2021, 10, 737. [Google Scholar] [CrossRef]

- Kivilcim, C.Ö.; Duran, Z. Parametric Architectural Elements from Point Clouds for HBIM Applications. Int. J. Environ. Geoinform. 2021, 8, 144–149. [Google Scholar] [CrossRef]

- Macher, H.; Landes, T.; Grussenmeyer, P.; Alby, E. Semi-automatic segmentation and modelling from point clouds towards historical building information modelling. In Proceedings of the Euro-Mediterranean Conference, Limassol, Cyprus, 3– November 2014; pp. 111–120. [Google Scholar]

- Andrés, A.N.; Pozuelo, F.B.; Marimón, J.R.; de Mesa Gisbert, A. Generation of virtual models of cultural heritage. J. Cult. Herit. 2012, 13, 103–106. [Google Scholar] [CrossRef]

- Nespeca, R.; De Luca, L. Analysis, thematic maps and data mining from point cloud to ontology for software development. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci 2016, XLI-B5, 347–354. [Google Scholar] [CrossRef]

- Poux, F.; Neuville, R.; Hallot, P.; Billen, R. Point cloud classification of tesserae from terrestrial laser data combined with dense image matching for archaeological information extraction. Int. J. Adv. Life Sci. 2017, 4, 203–211. [Google Scholar] [CrossRef]

- Li, Z.; Shan, J. RANSAC-based multi primitive building reconstruction from 3D point clouds. ISPRS J. Photogramm. Remote Sens. 2022, 185, 247–260. [Google Scholar] [CrossRef]

- Roth, G.; Levine, M.D. Extracting geometric primitives. CVGIP Image Underst. 1993, 58, 1–22. [Google Scholar] [CrossRef]

- Shi, B.-Q.; Liang, J.; Liu, Q. Adaptive simplification of point cloud using k-means clustering. Comput. -Aided Des. 2011, 43, 910–922. [Google Scholar] [CrossRef]

- Melzer, T. Non-parametric segmentation of ALS point clouds using mean shift. J. Appl. Geod. 2007, 1, 159–170. [Google Scholar] [CrossRef]

- Biosca, J.M.; Lerma, J.L. Unsupervised robust planar segmentation of terrestrial laser scanner point clouds based on fuzzy clustering methods. ISPRS J. Photogramm. Remote Sens. 2008, 63, 84–98. [Google Scholar] [CrossRef]

- Quagliarini, E.; Clini, P.; Ripanti, M. Fast, low cost and safe methodology for the assessment of the state of conservation of historical buildings from 3D laser scanning: The case study of Santa Maria in Portonovo (Italy). J. Cult. Herit. 2017, 24, 175–183. [Google Scholar] [CrossRef]

- Galantucci, R.A.; Fatiguso, F. Advanced damage detection techniques in historical buildings using digital photogrammetry and 3D surface analysis. J. Cult. Herit. 2019, 36, 51–62. [Google Scholar] [CrossRef]

- Armesto-González, J.; Riveiro-Rodríguez, B.; González-Aguilera, D.; Rivas-Brea, M.T. Terrestrial laser scanning intensity data applied to damage detection for historical buildings. J. Archaeol. Sci. 2010, 37, 3037–3047. [Google Scholar] [CrossRef]

- Sánchez-Aparicio, L.J.; Del Pozo, S.; Ramos, L.F.; Arce, A.; Fernandes, F.M. Heritage site preservation with combined radiometric and geometric analysis of TLS data. Autom. Constr. 2018, 85, 24–39. [Google Scholar] [CrossRef]

- Wood, R.L.; Mohammadi, M.E. Feature-Based Point Cloud-Based Assessment of Heritage Structures for Nondestructive and Noncontact Surface Damage Detection. Heritage 2021, 4, 775–793. [Google Scholar] [CrossRef]

- Ankerst, M.; Breunig, M.M.; Kriegel, H.-P.; Sander, J. OPTICS: Ordering points to identify the clustering structure. ACM Sigmod Rec. 1999, 28, 49–60. [Google Scholar] [CrossRef]

- Hassan, M.; Akçamete Güngör, A.; Meral, Ç. Investigation of terrestrial laser scanning reflectance intensity and RGB distributions to assist construction material identification. In Proceedings of the Joint Conference on Computing in Construction, Heraklion, Greece, 4–7 July 2017; pp. 507–515. [Google Scholar]

- Valero, E.; Bosché, F.; Forster, A. Automatic segmentation of 3D point clouds of rubble masonry walls, and its application to building surveying, repair and maintenance. Autom. Constr. 2018, 96, 29–39. [Google Scholar] [CrossRef]

- Hou, T.-C.; Liu, J.-W.; Liu, Y.-W. Algorithmic clustering of LiDAR point cloud data for textural damage identifications of structural elements. Measurement 2017, 108, 77–90. [Google Scholar] [CrossRef]

- Niemeyer, J.; Rottensteiner, F.; Soergel, U. Contextual classification of lidar data and building object detection in urban areas. ISPRS J. Photogramm. Remote Sens. 2014, 87, 152–165. [Google Scholar] [CrossRef]

- Vosselman, G.; Coenen, M.; Rottensteiner, F. Contextual segment-based classification of airborne laser scanner data. ISPRS J. Photogramm. Remote Sens. 2017, 128, 354–371. [Google Scholar] [CrossRef]

- Fiorucci, M.; Khoroshiltseva, M.; Pontil, M.; Traviglia, A.; Del Bue, A.; James, S. Machine Learning for Cultural Heritage: A Survey. Pattern Recognit. Lett. 2020, 133, 102–108. [Google Scholar] [CrossRef]

- Mesanza-Moraza, A.; García-Gómez, I.; Azkarate, A. Machine Learning for the Built Heritage Archaeological Study. J. Comput. Cult. Herit. 2021, 14, 1–21. [Google Scholar] [CrossRef]

- Weinmann, M.; Jutzi, B.; Hinz, S.; Mallet, C. Semantic point cloud interpretation based on optimal neighborhoods, relevant features and efficient classifiers. ISPRS J. Photogramm. Remote Sens. 2015, 105, 286–304. [Google Scholar] [CrossRef]

- Hackel, T.; Wegner, J.D.; Schindler, K. Fast semantic segmentation of 3D point clouds with strongly varying density. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 3, 177–184. [Google Scholar] [CrossRef]

- Grilli, E.; Dininno, D.; Marsicano, L.; Petrucci, G.; Remondino, F. Supervised segmentation of 3D cultural heritage. In Proceedings of the 2018 3rd Digital Heritage International Congress (DigitalHERITAGE) held jointly with 2018 24th International Conference on Virtual Systems & Multimedia (VSMM 2018), San Francisco, CA, USA, 26–30 October 2018; pp. 1–8. [Google Scholar]

- Valero, E.; Forster, A.; Bosché, F.; Hyslop, E.; Wilson, L.; Turmel, A. Automated defect detection and classification in ashlar masonry walls using machine learning. Autom. Constr. 2019, 106, 102846. [Google Scholar] [CrossRef]

- Grilli, E.; Remondino, F. Machine learning generalisation across different 3D architectural heritage. ISPRS Int. J. Geo-Inf. 2020, 9, 379. [Google Scholar] [CrossRef]

- Croce, V.; Caroti, G.; De Luca, L.; Jacquot, K.; Piemonte, A.; Véron, P. From the semantic point cloud to heritage-building information modeling: A semiautomatic approach exploiting machine learning. Remote Sens. 2021, 13, 461. [Google Scholar] [CrossRef]

- Teruggi, S.; Grilli, E.; Russo, M.; Fassi, F.; Remondino, F. A hierarchical machine learning approach for multi-level and multi-resolution 3D point cloud classification. Remote Sens. 2020, 12, 2598. [Google Scholar] [CrossRef]

- Guo, Y.; Wang, H.; Hu, Q.; Liu, H.; Liu, L.; Bennamoun, M. Deep learning for 3d point clouds: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 4338–4364. [Google Scholar] [CrossRef]

- Bello, S.A.; Yu, S.; Wang, C.; Adam, J.M.; Li, J. Deep learning on 3D point clouds. Remote Sens. 2020, 12, 1729. [Google Scholar] [CrossRef]

- Liu, W.; Sun, J.; Li, W.; Hu, T.; Wang, P. Deep learning on point clouds and its application: A survey. Sensors 2019, 19, 4188. [Google Scholar] [CrossRef]

- Zhang, J.; Zhao, X.; Chen, Z.; Lu, Z. A review of deep learning-based semantic segmentation for point cloud. IEEE Access 2019, 7, 179118–179133. [Google Scholar] [CrossRef]

- Pellis, E.; Murtiyoso, A.; Masiero, A.; Tucci, G.; Betti, M.; Grussenmeyer, P. 2D to 3D Label Propagation for the Semantic Segmentation of Heritage Building Point Clouds. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, 43, 861–867. [Google Scholar] [CrossRef]

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660. [Google Scholar]

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. Pointnet++: Deep hierarchical feature learning on point sets in a metric space. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Volume 30.

- Malinverni, E.S.; Pierdicca, R.; Paolanti, M.; Martini, M.; Morbidoni, C.; Matrone, F.; Lingua, A. Deep learning for semantic segmentation of 3D point cloud. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W15, 735–742. [Google Scholar] [CrossRef]

- Wang, Y.; Sun, Y.; Liu, Z.; Sarma, S.E.; Bronstein, M.M.; Solomon, J.M. Dynamic graph cnn for learning on point clouds. ACM Trans. Graph. 2019, 38, 1–12. [Google Scholar] [CrossRef]

- Morbidoni, C.; Pierdicca, R.; Paolanti, M.; Quattrini, R.; Mammoli, R. Learning from synthetic point cloud data for historical buildings semantic segmentation. J. Comput. Cult. Herit. 2020, 13, 1–16. [Google Scholar] [CrossRef]

- Matrone, F.; Grilli, E.; Martini, M.; Paolanti, M.; Pierdicca, R.; Remondino, F. Comparing machine and deep learning methods for large 3D heritage semantic segmentation. ISPRS Int. J. Geo-Inf. 2020, 9, 535. [Google Scholar] [CrossRef]

- Chen, X.-T.; Li, Y.; Fan, J.-H.; Wang, R. RGAM: A novel network architecture for 3D point cloud semantic segmentation in indoor scenes. Inf. Sci. 2021, 571, 87–103. [Google Scholar] [CrossRef]

- Lee, J.S.; Park, J.; Ryu, Y.-M. Semantic segmentation of bridge components based on hierarchical point cloud model. Autom. Constr. 2021, 130, 103847. [Google Scholar] [CrossRef]

- Yin, C.; Wang, B.; Gan, V.J.; Wang, M.; Cheng, J.C. Automated semantic segmentation of industrial point clouds using ResPointNet++. Autom. Constr. 2021, 130, 103874. [Google Scholar] [CrossRef]

- Matrone, F.; Lingua, A.; Pierdicca, R.; Malinverni, E.; Paolanti, M.; Grilli, E.; Remondino, F.; Murtiyoso, A.; Landes, T. A benchmark for large-scale heritage point cloud semantic segmentation. In Proceedings of the XXIV ISPRS Congress, Nice, France, 31 August–2 September 2020; pp. 1419–1426. [Google Scholar]

- Dong, Z.; Liang, F.; Yang, B.; Xu, Y.; Zang, Y.; Li, J.; Wang, Y.; Dai, W.; Fan, H.; Hyyppä, J. Registration of large-scale terrestrial laser scanner point clouds: A review and benchmark. ISPRS J. Photogramm. Remote Sens. 2020, 163, 327–342. [Google Scholar] [CrossRef]

- Dong, Z.; Yang, B.; Liang, F.; Huang, R.; Scherer, S. Hierarchical registration of unordered TLS point clouds based on binary shape context descriptor. ISPRS J. Photogramm. Remote Sens. 2018, 144, 61–79. [Google Scholar] [CrossRef]

- Dong, Z.; Yang, B.; Liu, Y.; Liang, F.; Li, B.; Zang, Y. A novel binary shape context for 3D local surface description. ISPRS J. Photogramm. Remote Sens. 2017, 130, 431–452. [Google Scholar] [CrossRef]

- Hackel, T.; Savinov, N.; Ladicky, L.; Wegner, J.D.; Schindler, K.; Pollefeys, M. Semantic3D.net: A new large-scale point cloud classification benchmark. arXiv 2017, arXiv:1704.03847. [Google Scholar]

- Pepe, M.; Alfio, V.S.; Costantino, D.; Scaringi, D. Data for 3D reconstruction and point cloud classification using machine learning in cultural heritage environment. Data Brief 2022, 42, 6. [Google Scholar] [CrossRef] [PubMed]

- Lengauer, S.; Sipiran, I.; Preiner, R.; Schreck, T.; Bustos, B. A Benchmark Dataset for Repetitive Pattern Recognition on Textured 3D Surfaces. In Computer Graphics Forum; Wiley Online Library: Hoboken, NJ, USA, 2021; pp. 1–8. [Google Scholar]

- Hao, F.; Li, J.; Song, R.; Li, Y.; Cao, K. Mixed Feature Prediction on Boundary Learning for Point Cloud Semantic Segmentation. Remote Sens. 2022, 14, 4757. [Google Scholar] [CrossRef]

- Yang, F.; Davoine, F.; Wang, H.; Jin, Z. Continuous conditional random field convolution for point cloud segmentation. Pattern Recognit. 2022, 122, 108357. [Google Scholar] [CrossRef]

- Ponciano, J.-J.; Roetner, M.; Reiterer, A.; Boochs, F. Object Semantic Segmentation in Point Clouds—Comparison of a Deep Learning and a Knowledge-Based Method. ISPRS Int. J. Geo-Inf. 2021, 10, 256. [Google Scholar] [CrossRef]

- Colucci, E.; Xing, X.; Kokla, M.; Mostafavi, M.A.; Noardo, F.; Spanò, A. Ontology-based semantic conceptualisation of historical built heritage to generate parametric structured models from point clouds. Appl. Sci. 2021, 11, 2813. [Google Scholar] [CrossRef]

- Wang, P.; Yao, W. A new weakly supervised approach for ALS point cloud semantic segmentation. ISPRS J. Photogramm. Remote Sens. 2022, 188, 237–254. [Google Scholar] [CrossRef]

- Zhang, Y.; Qu, Y.; Xie, Y.; Li, Z.; Zheng, S.; Li, C. Perturbed self-distillation: Weakly supervised large-scale point cloud semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–18 October 2021; pp. 15520–15528. [Google Scholar]

- Dimitrov, A.; Golparvar-Fard, M. Segmentation of building point cloud models including detailed architectural/structural features and MEP systems. Autom. Constr. 2015, 51, 32–45. [Google Scholar] [CrossRef]

- Cabaleiro, M.; Hermida, J.; Riveiro, B.; Caamaño, J. Automated processing of dense points clouds to automatically determine deformations in highly irregular timber structures. Constr. Build. Mater. 2017, 146, 393–402. [Google Scholar] [CrossRef]

- Moyano, J.; Gil-Arizón, I.; Nieto-Julián, J.E.; Marín-García, D. Analysis and management of structural deformations through parametric models and HBIM workflow in architectural heritage. J. Build. Eng. 2022, 45, 103274. [Google Scholar] [CrossRef]

- Cardani, G.; Angjeliu, G. Integrated Use of Measurements for the Structural Diagnosis in Historical Vaulted Buildings. Sensors 2020, 20, 4290. [Google Scholar] [CrossRef]

- Barrile, V.; Bernardo, E.; Bilotta, G. An Experimental HBIM Processing: Innovative Tool for 3D Model Reconstruction of Morpho-Typological Phases for the Cultural Heritage. Remote Sens. 2022, 14, 1288. [Google Scholar] [CrossRef]

- Poux, F.; Hallot, P.; Neuville, R.; Billen, R. Smart Point Cloud: Definition and Remaining Challenges. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, IV-2/W1, 119–127. [Google Scholar] [CrossRef]

- Marra, A.; Gerbino, S.; Greco, A.; Fabbrocino, G. Combining integrated informative system and historical digital twin for maintenance and preservation of artistic assets. Sensors 2021, 21, 5956. [Google Scholar] [CrossRef]

- Jouan, P.; Hallot, P. Digital twin: Research framework to support preventive conservation policies. ISPRS Int. J. Geo-Inf. 2020, 9, 228. [Google Scholar] [CrossRef]

- Funari, M.F.; Hajjat, A.E.; Masciotta, M.G.; Oliveira, D.V.; Lourenço, P.B. A parametric scan-to-FEM framework for the digital twin generation of historic masonry structures. Sustainability 2021, 13, 11088. [Google Scholar] [CrossRef]

- De Luca, L. Towards the Semantic-aware 3D Digitisation of Architectural Heritage: The "Notre-Dame de Paris" Digital Twin Project. In Proceedings of the 2nd Workshop on Structuring and Understanding of Multimedia heritAge Contents, Seattle, WA, USA, 12 October 2020; pp. 3–4. [Google Scholar]

| Technology | Point Density | Advantages | Disadvantages | Spatial Scales |
|---|---|---|---|---|
| Photogrammetry | Depends on the resolution of the camera sensors | Includes colour and spectral information; can be installed on different platforms | Influenced by light and shadows | Landscape, immovable heritage, and movable heritage |
| 3D laser scanning—ALS | Low density | Rapid acquisition over a wide range (meter-to-centimeter resolution); able to penetrate occlusion | 2.5D point clouds | Landscape scale (heritage landscape, large site) |
| 3D laser scanning—TLS | High density | High accuracy (centimeter-to-millimeter resolution); access to geometric surface and structural details | Expensive and time-consuming, object occlusion | Immovable heritage scale (archaeological site, small landscape, and historical building) |
| 3D laser scanning—MLS | Medium density | High accuracy (centimeter resolution), larger measurement range, and higher efficiency than TLS | Expensive and time-consuming, object occlusion | Landscape and immovable heritage scale |
| 3D laser scanning—Handheld | High to very high density | Very high accuracy (centimeter to submillimeter) | Expensive and time-consuming | Immovable and movable heritage scale (artefacts, objects, a part that is immovable) |

| Paper | Platform | Sensors | Data Characteristics |
|---|---|---|---|
| Nagai et al. [74] | UAV | | Terrain shapes, detailed textures, and global geospatial references |
| Erenogl et al. [75] | UAV | | Geometric features and material classification information |
| Rodríguez-Gonzálvez et al. [76] | Mobile vehicle | | Colour information and spatial geographic reference |
| Milell et al. [77] | All-terrain vehicle | | Colour, geometry, spectral, and mechanical properties of soil |
| Hakala et al. [78] | Ground station | | Hyperspectral point clouds |
| Zlot et al. [79] | Handheld | | Both site context and building detail comparable in accuracy |

| Case | Platform and Main Sensors | Application |
|---|---|---|
| Fassi et al. [82] | | Integrating different instrumentation and modeling methods to survey and model very complex architecture (main spire of Milan Cathedral) |
| Achille et al. [83] | | Integration of the building's interior and exterior 3D model with a tall and complex façade |
| Galeazzi [84] | | 3D documentation of archaeological stratigraphy in extreme environments characterized by extreme humidity, access difficulty, and challenging light conditions |
| Zaragoza et al. [85] | | Integrated survey of roofs, gardens, and inner courts |
| Herrero-Tejedor et al. [86] | | 3D documentation for the management and conservation of cultural landscapes with unique biogeographical features |
| Guidi et al. [87] | | Multi-resolution 3D modeling of the complex area of Roman Pompeii (150 m × 80 m), DSM (25 mm), medium resolution 3D model (5–20 mm), ground photogrammetry (0.5–10 mm) |
| Abate et al. [88] | | Centimeter to millimeter multi-resolution 3D model of Treblinka concentration camp (3.75 square kilometers) |
| Young et al. [89] | | Establishing a 3D model and the associated digital documentation of the Magoksa Temple, Republic of Korea |

| Case | Object | Classification | Neighborhood Selection | Feature Extraction | Geometry Feature Selection | Classifier |
|---|---|---|---|---|---|---|
| Grilli et al. [149] | European historical buildings, ancient ruins, and stone cultural relics | Damaged areas | - | - | Orthophoto or UV map 2D supervised machine learning projection onto 3D data | J48, random tree, REPTree, LogitBoost, random forest, fast random forest (16), and fast random forest (40) |
| Valero et al. [150] | Ancient ruins wall | Wall structure and damage information (erosion, delamination, mechanical damage, and non-defective) | - | 17 colour-related features, 16 geometric features | Ten geometric features | Logistic regression multi-class classifier, and binary classifier |
| Grilli et al. [151] | European historical buildings | Nine structures | 0.1–0.8 m | Radiometric features and 77 multi-scale geometric features | Seven geometric features | Random forest classifier |
| Croce et al. [152] | European historical buildings | 19 structures | 0.2 m, 0.4 m, and 0.6 m | 27 geometric features, RGB values, laser scanner intensity, and point cloud Z coordinate | Nine geometric features | Random forest classifier |
| Grilli et al. [34] | European historical buildings and Temple | Building: 15 structures; Temple: 15 structures | - | Decentralized coordinates, radiometric values, and geometric features | Seven geometric features | Random forest one-versus-one (OvO) classifier |
| Teruggi et al. [153] | European historical buildings | Building structures, subdivision structures, and detailed structures | 0.2 cm–3 m | Geometric features | Six geometric features | Random forest |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, S.; Hou, M.; Li, S. Three-Dimensional Point Cloud Semantic Segmentation for Cultural Heritage: A Comprehensive Review. Remote Sens. 2023, 15, 548. https://doi.org/10.3390/rs15030548
