An Automatic Deep Learning Bowhead Whale Whistle Recognizing Method Based on Adaptive SWT: Applying to the Beaufort Sea

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset

- Watkins: This dataset consists of 60 recordings of bowhead whale voices from the Bering Strait, Barrow, Alaska. Table 1 summarizes the recordings: column 2 gives the sampling frequency of each recording, and column 3 gives the frequency band of the bowhead whale voices.

- DRYAD: This dataset is derived from 184 sound clips recorded over three consecutive winters in the Fram Strait by Stafford et al. [40]. Of these, 38 singing segments were identified in 2010–2011, 69 in 2012–2013, and 76 in 2013–2014.

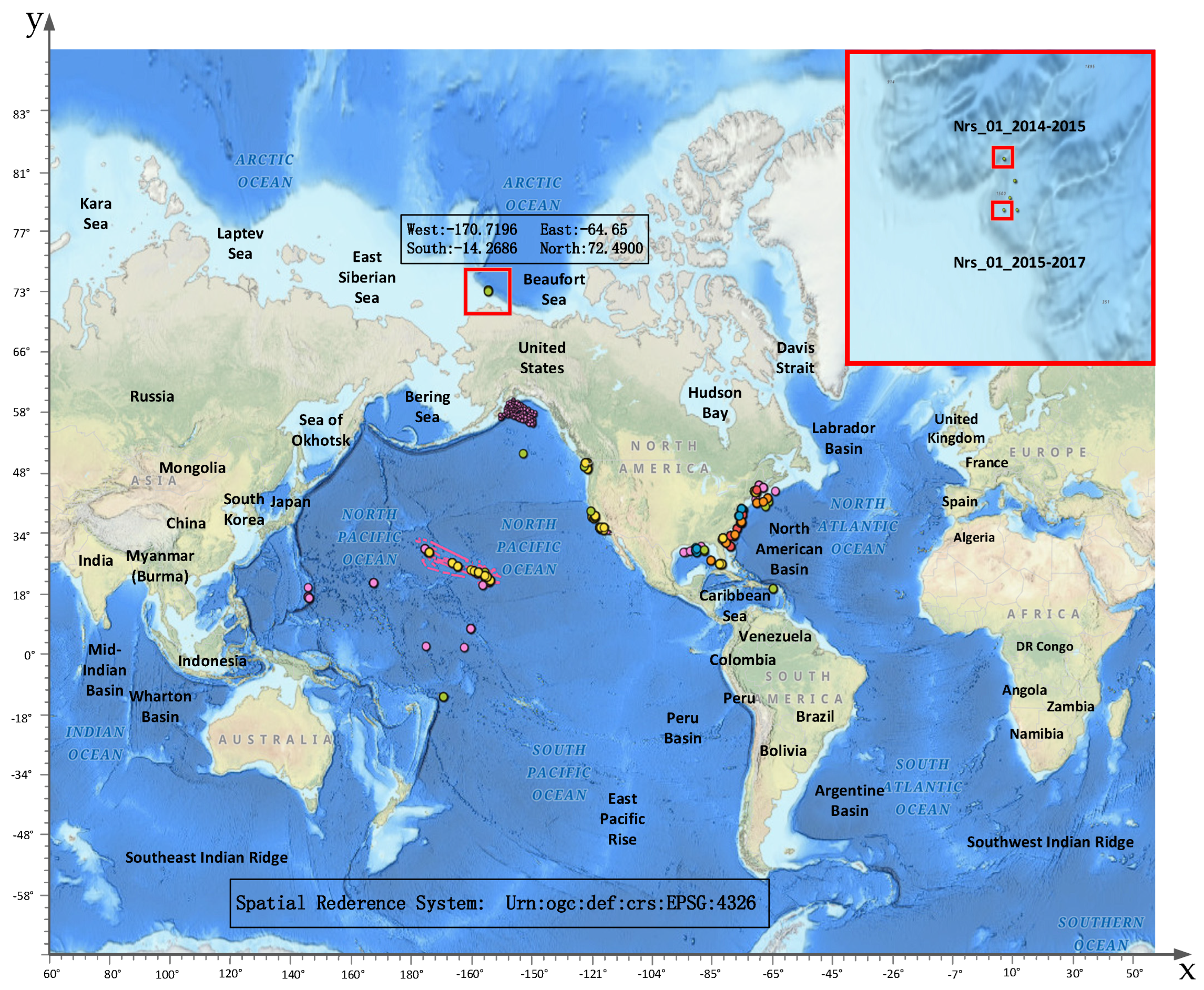

- NOAA PAM: This dataset comes from the Arctic Beaufort Sea passive acoustic monitoring (PAM) station of the National Oceanic and Atmospheric Administration’s (NOAA) National Centers for Environmental Information, and is used to validate the methodology in this paper. The PAM sites in the Beaufort Sea and related information are depicted in Figure 1. The measured recording size is 23.3 M.

2.2. Data Analysis

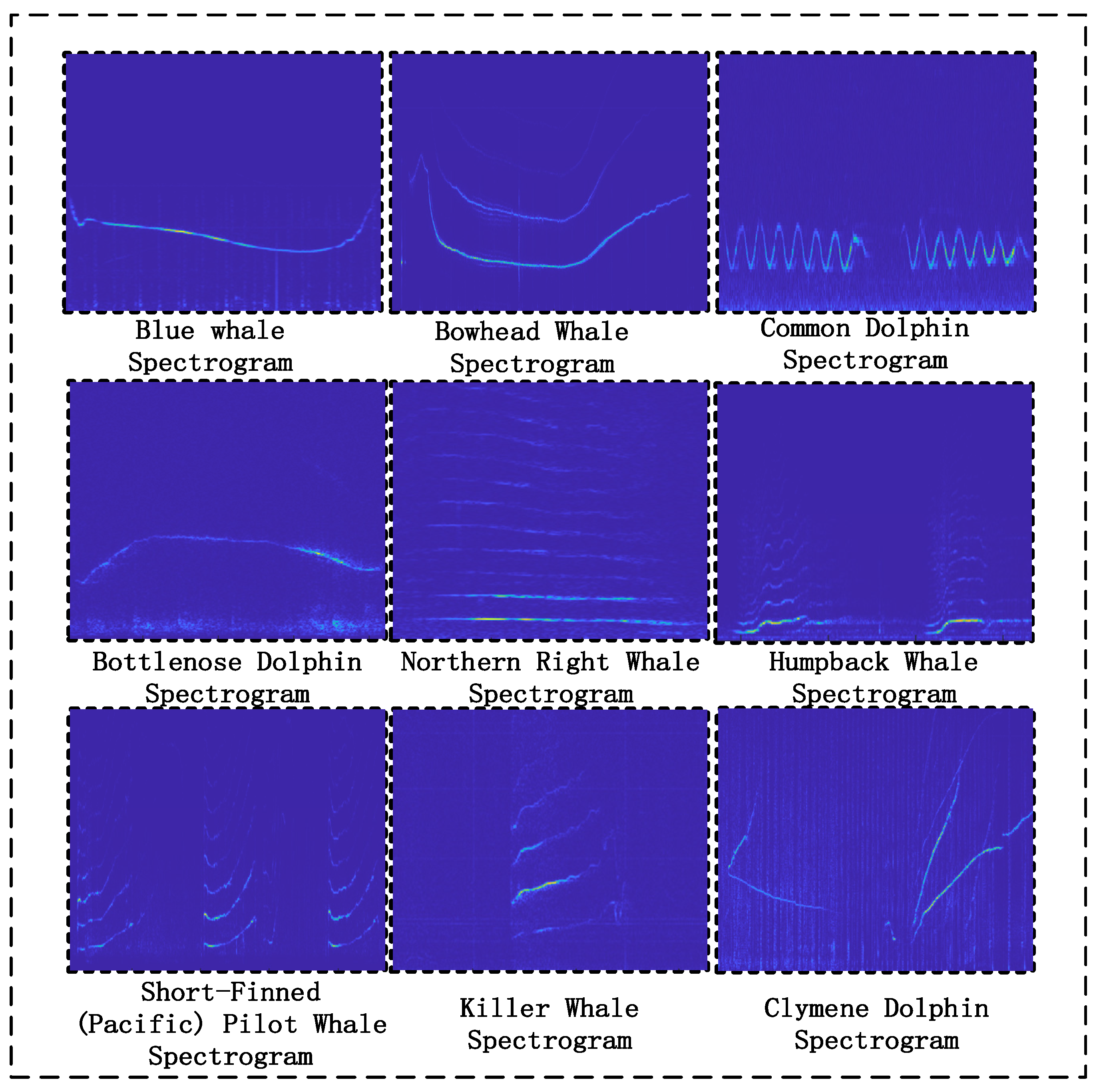

2.2.1. Bowhead Whale Voice Characteristics

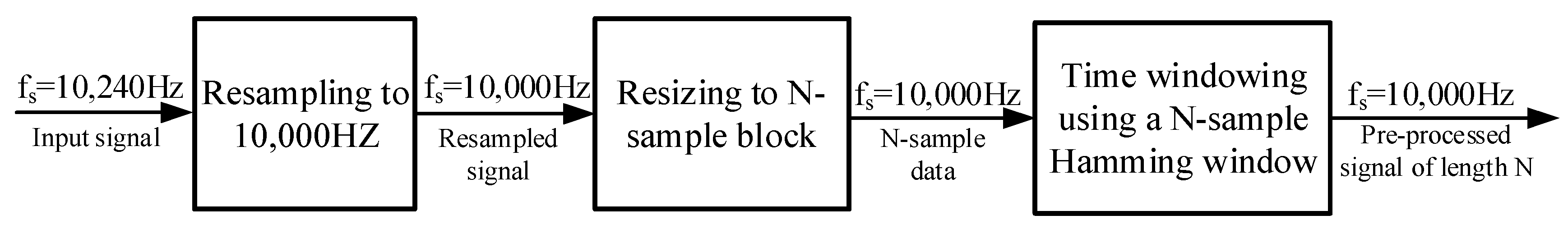

2.2.2. Data Processing

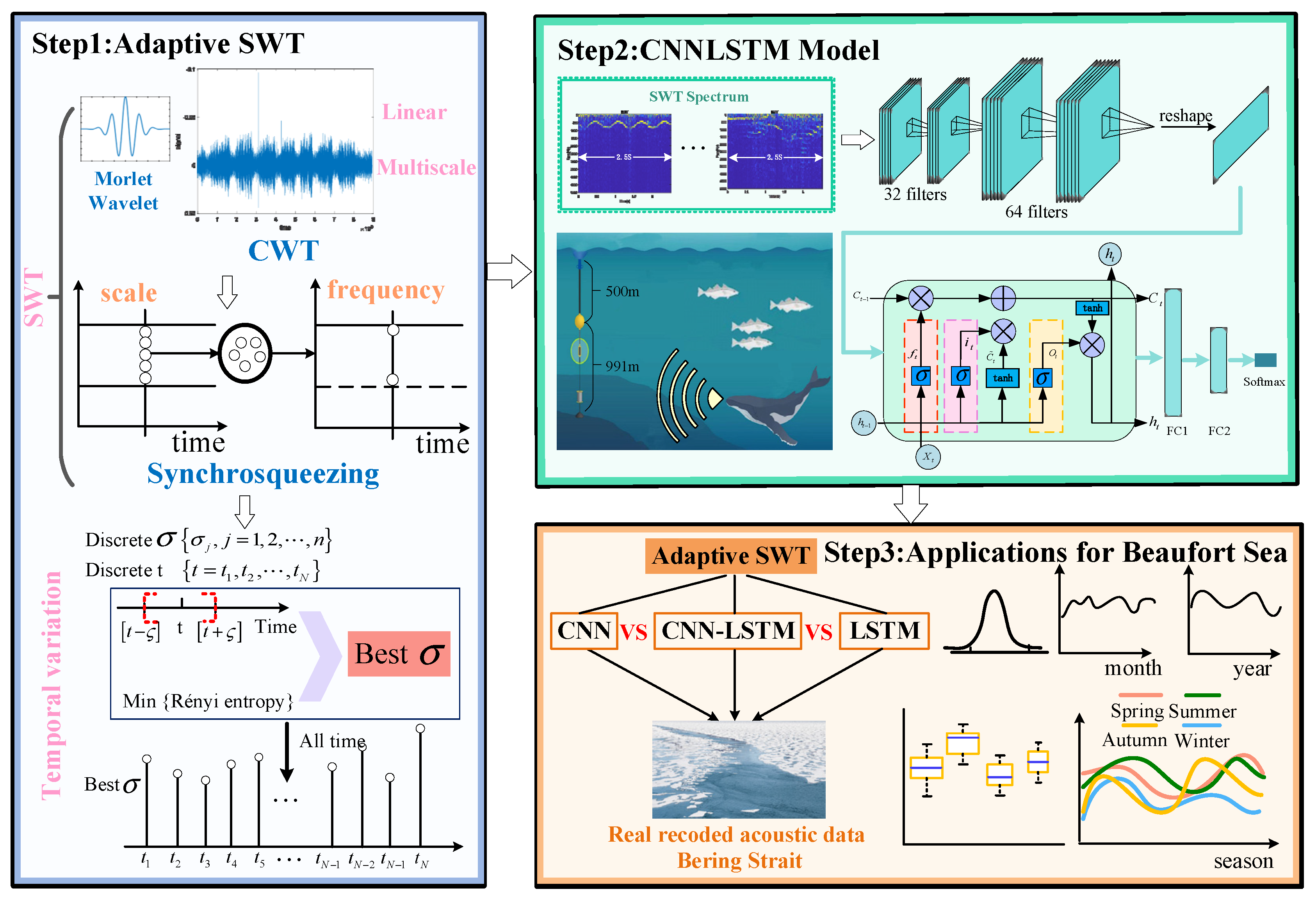

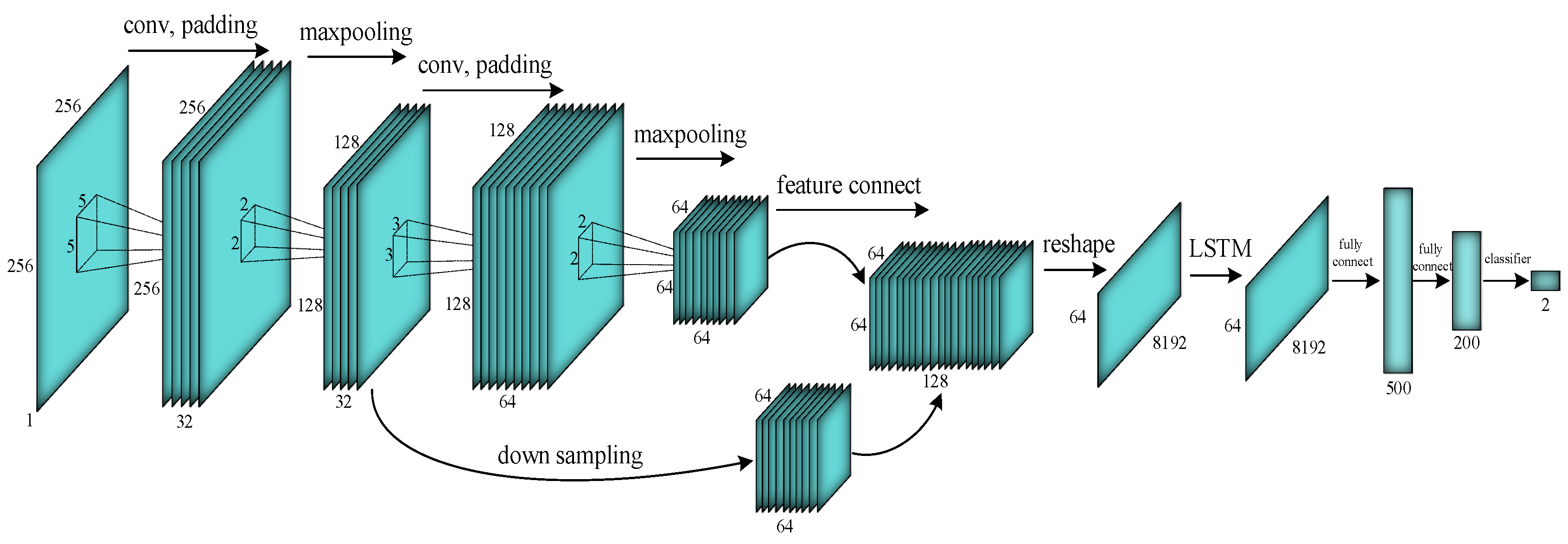

2.3. Model Architecture

2.4. Feature Extraction

2.4.1. Bowhead Whale Whistle Feature Extraction Based on SWT

- (1) Select an appropriate wavelet basis function and perform the continuous wavelet transform (CWT) on the original signal.

- (2) Calculate the instantaneous frequency of the original signal.

- (3) Compress and reorganize the wavelet coefficients along the frequency direction to obtain the synchrosqueezed wavelet transform (SWT).
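The three steps above can be sketched in a few lines of NumPy. This is a minimal illustration with a fixed (non-adaptive) parameter, not the authors' implementation: the analytic Morlet wavelet, its centre frequency `w0`, the threshold `gamma`, the linear output frequency grid, and the function name `synchrosqueezed_cwt` are all assumptions made for the example.

```python
import numpy as np


def synchrosqueezed_cwt(x, fs, centre_freqs, n_bins=251, w0=6.0, gamma=1e-6):
    """Fixed-parameter SWT sketch (Morlet wavelet is an assumption).

    Returns the synchrosqueezed coefficients T (n_bins x len(x)) and the
    linear frequency grid in Hz that indexes the rows of T.
    """
    x = np.asarray(x, dtype=float)
    n = x.size
    xi = 2.0 * np.pi * np.fft.fftfreq(n, d=1.0 / fs)  # angular frequency, rad/s
    x_hat = np.fft.fft(x)

    # One scale per requested centre frequency: a = w0 / (2*pi*f).
    scales = w0 / (2.0 * np.pi * np.asarray(centre_freqs, dtype=float))
    d_scale = np.abs(np.gradient(scales))

    out_freqs = np.linspace(0.0, fs / 2.0, n_bins)
    df = out_freqs[1] - out_freqs[0]
    T = np.zeros((n_bins, n), dtype=complex)

    for a, da in zip(scales, d_scale):
        # Step 1: CWT at scale a, evaluated in the frequency domain.
        psi_hat = np.exp(-0.5 * (a * xi - w0) ** 2) * (xi > 0)
        W = np.fft.ifft(x_hat * psi_hat)
        dW = np.fft.ifft(1j * xi * x_hat * psi_hat)  # derivative of W in time

        # Ignore coefficients that are too small to give a stable phase.
        cols = np.flatnonzero(np.abs(W) > gamma)

        # Step 2: instantaneous frequency (Hz) from the phase derivative.
        f_inst = np.imag(dW[cols] / W[cols]) / (2.0 * np.pi)

        # Step 3: reassign each coefficient to the bin of its instantaneous
        # frequency (column indices are unique here, so += is safe).
        rows = np.rint(f_inst / df).astype(int)
        keep = (rows >= 0) & (rows < n_bins)
        T[rows[keep], cols[keep]] += W[cols[keep]] * a ** -1.5 * da

    return T, out_freqs


# Usage: a 100 Hz tone sampled at 1 kHz collapses onto a single frequency row.
fs = 1000.0
t = np.arange(1000) / fs
tone = np.cos(2.0 * np.pi * 100.0 * t)
T, freqs = synchrosqueezed_cwt(tone, fs, np.geomspace(20.0, 400.0, 64))
peak_freq = freqs[np.argmax(np.sum(np.abs(T) ** 2, axis=1))]
print(peak_freq)
```

The CWT of a pure tone is smeared across many neighbouring scales. The reassignment in step 3 moves all of that energy back to the row of the tone's instantaneous frequency, which is what sharpens the time-frequency ridge.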

2.4.2. Time-Varying Parameter Estimation Based on Rényi Entropy

- (1) Fix a time instant, calculate the Rényi entropy in the local area around it for every candidate parameter value using Formula (7), and obtain the set of Rényi entropy values.

- (2) At that fixed time instant, take the parameter corresponding to the minimum Rényi entropy in the set as the optimal parameter at that moment.

- (3) Repeat steps (1) and (2) for all time instants to find the optimal parameter at each one, which yields the time-varying parameter.

- (4) Smooth the time-varying parameter with a low-pass filter to obtain the final estimate of the time-varying parameter.
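The selection procedure above can be sketched as follows. Several pieces are assumptions made only for illustration, because Formula (7) and the transform's parameter are not reproduced in this excerpt: the Rényi entropy uses the common order α = 3, a Gaussian-window STFT (window width `sigma`, in samples) stands in for the parameterized transform, and a moving average stands in for the low-pass filter. All function names are hypothetical.

```python
import numpy as np


def renyi_entropy(p, alpha=3.0):
    """Renyi entropy (base 2) of a non-negative array, normalized to sum to 1."""
    p = np.abs(np.asarray(p, dtype=float)).ravel()
    total = p.sum()
    if total == 0.0:
        return np.inf
    p = p / total
    return np.log2(np.sum(p ** alpha)) / (1.0 - alpha)


def gaussian_stft_power(x, sigma, nfft=512):
    """Stand-in time-frequency representation: |STFT|^2 with a Gaussian window."""
    half = int(4 * sigma)
    k = np.arange(-half, half + 1)
    window = np.exp(-0.5 * (k / sigma) ** 2)
    padded = np.pad(np.asarray(x, dtype=float), half)
    frames = np.stack(
        [padded[t:t + 2 * half + 1] * window for t in range(len(x))], axis=1
    )
    return np.abs(np.fft.rfft(frames, n=nfft, axis=0)) ** 2


def estimate_time_varying_parameter(x, sigmas, half_width=16, smooth_len=31):
    n = len(x)
    sigmas = np.asarray(sigmas, dtype=float)
    entropy = np.empty((sigmas.size, n))
    for i, sigma in enumerate(sigmas):
        power = gaussian_stft_power(x, sigma)
        for t in range(n):
            # Step 1: local Renyi entropy around time t for this candidate.
            lo, hi = max(0, t - half_width), min(n, t + half_width + 1)
            entropy[i, t] = renyi_entropy(power[:, lo:hi])
    # Steps 2 and 3: per-time minimizer over the candidate set.
    best = sigmas[np.argmin(entropy, axis=0)]
    # Step 4: low-pass smoothing (moving average with edge padding).
    kernel = np.ones(smooth_len) / smooth_len
    padded = np.pad(best, smooth_len // 2, mode="edge")
    smoothed = np.convolve(padded, kernel, mode="valid")
    return best, smoothed


# Usage on a synthetic linear chirp (illustrative data only).
fs = 1000.0
t = np.arange(512) / fs
chirp = np.cos(2.0 * np.pi * (50.0 * t + 300.0 * t ** 2))
best, smoothed = estimate_time_varying_parameter(chirp, [4, 8, 16, 32])
```

A lower Rényi entropy indicates a more concentrated time-frequency distribution, so taking the minimizer at each instant selects the parameter that gives the sharpest local representation. The smoothing step then removes abrupt jumps between neighbouring instants.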

2.5. Neural Network Architecture

3. Results

3.1. Experiment Preparation

3.2. Results of Comparative Experiments

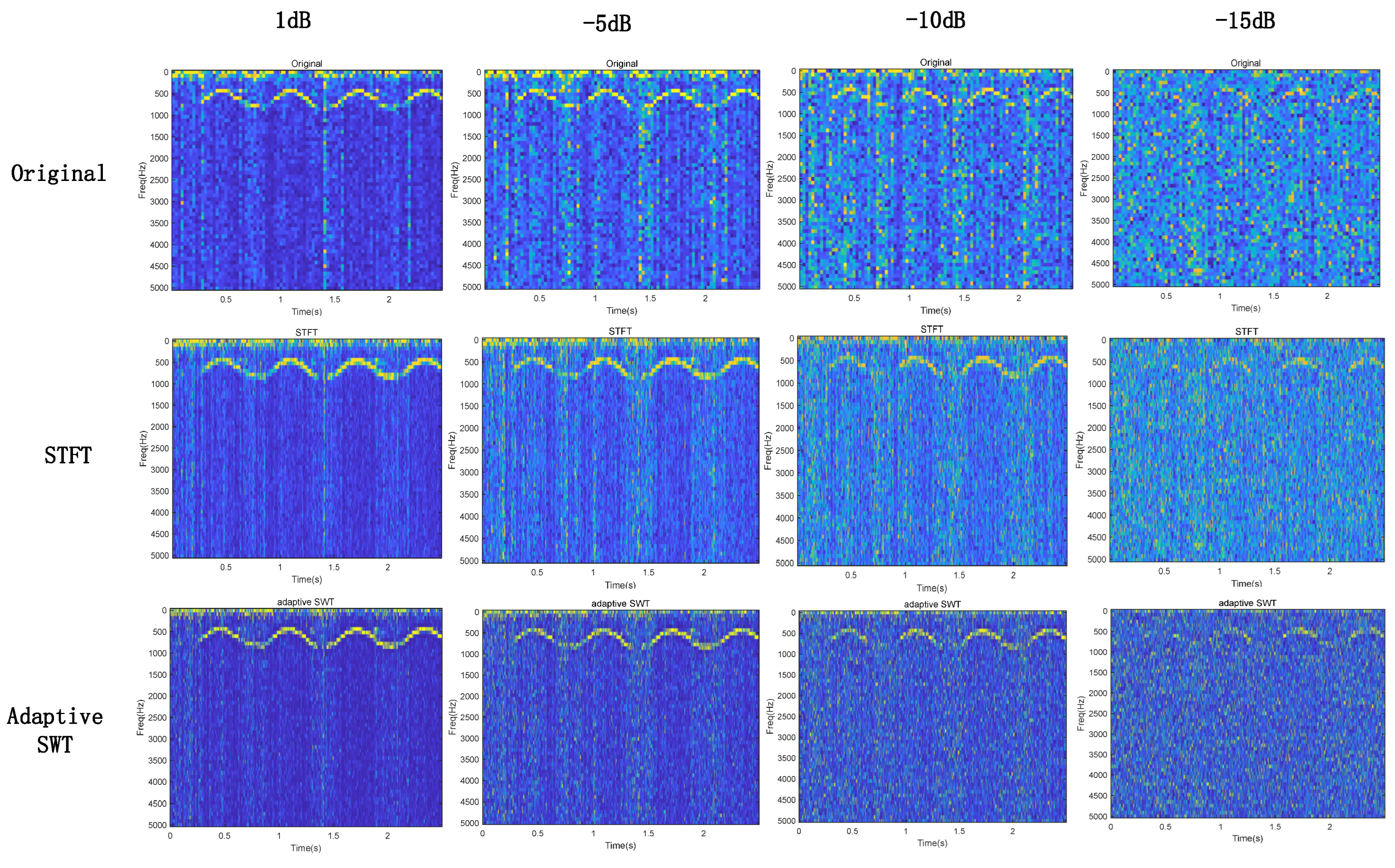

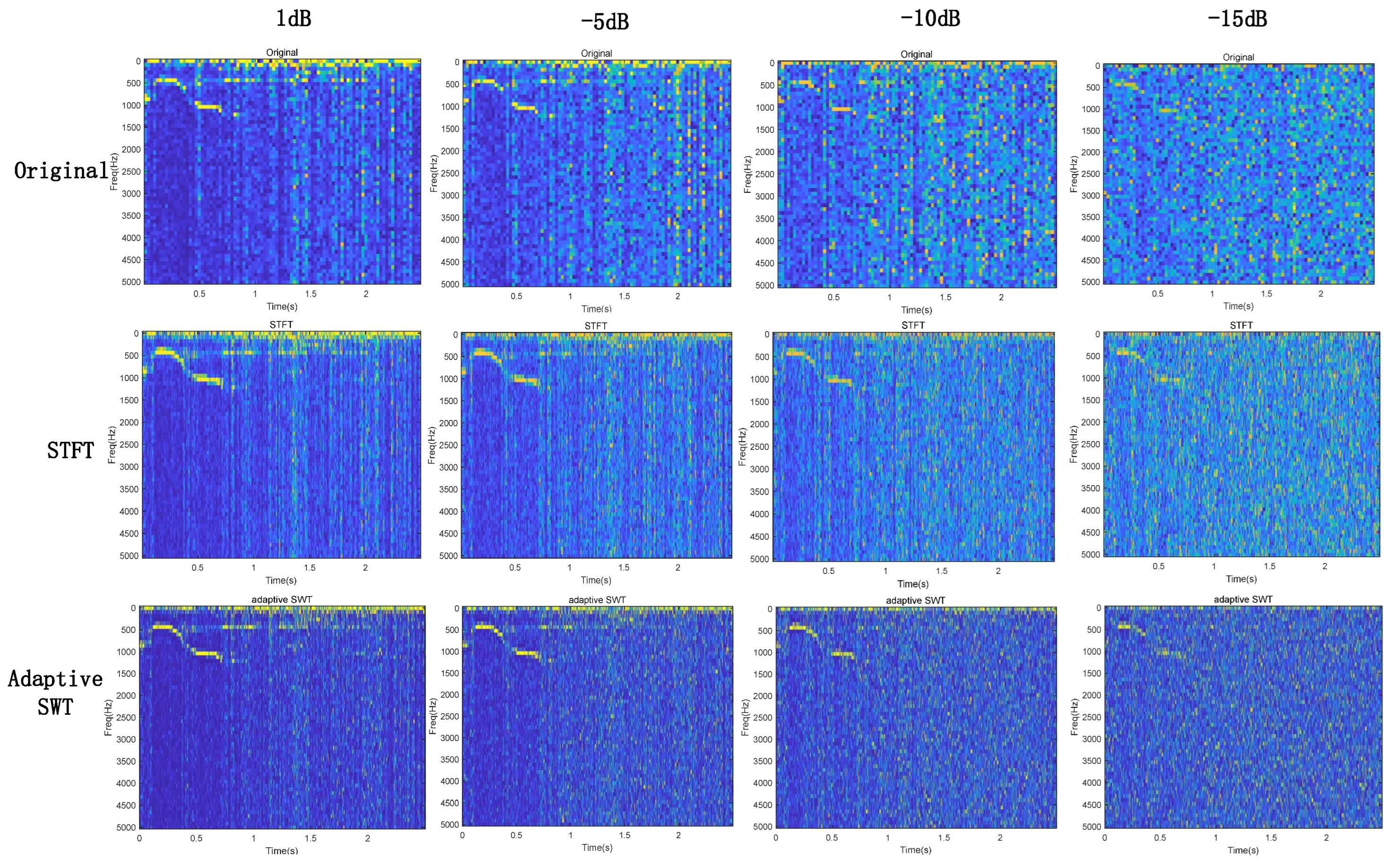

3.2.1. Comparative Experiment Based on the STFT, Fixed Parameter SWT and Adaptive SWT

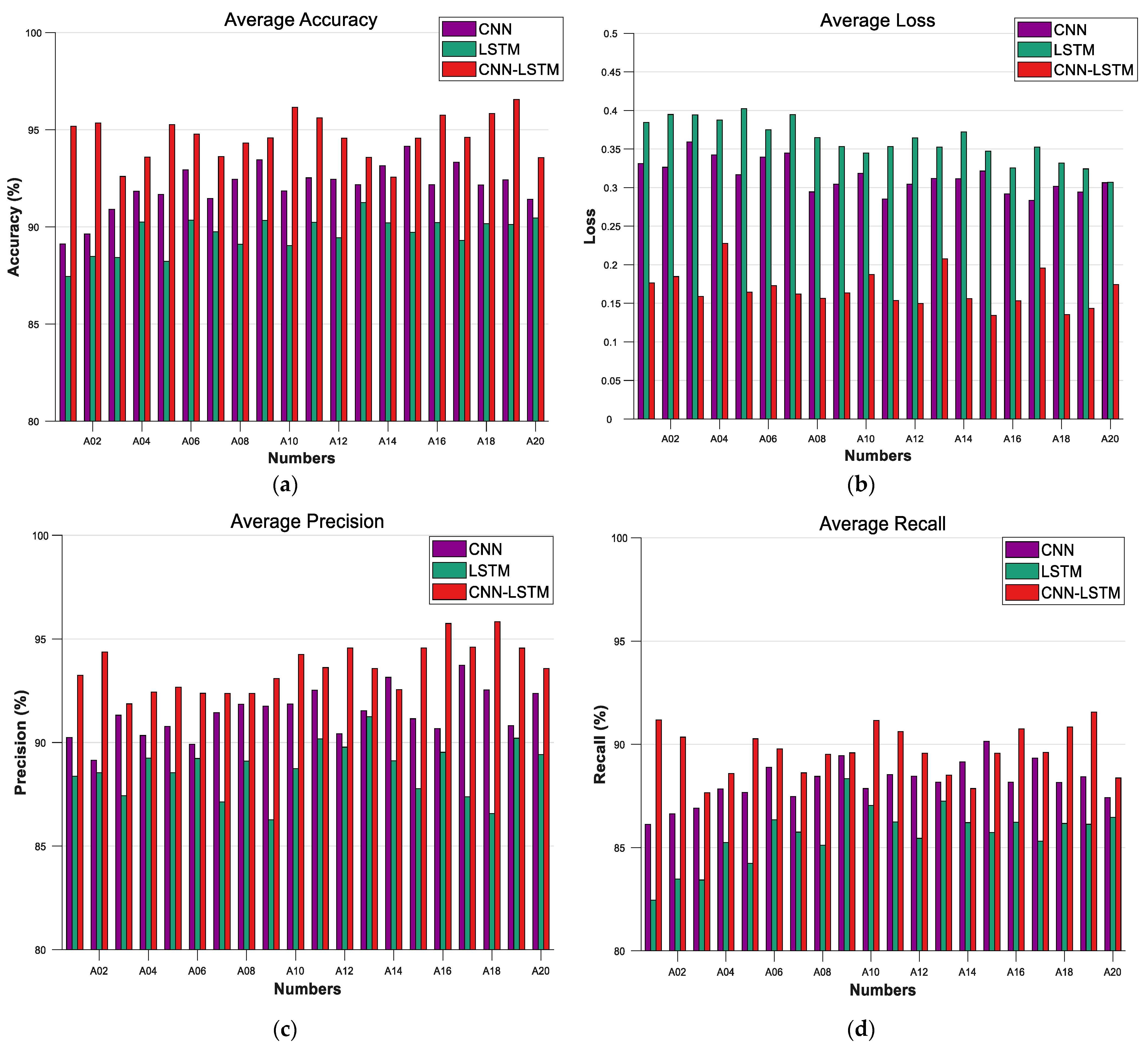

3.2.2. Comparative Experiment Based on the CNN, LSTM and CNN-LSTM

3.2.3. Sensitivity Analysis

3.2.4. Cross Validation

4. Application

4.1. Comparative Experiments Based on Measured Data

4.2. Comparative Experiments with Published Articles

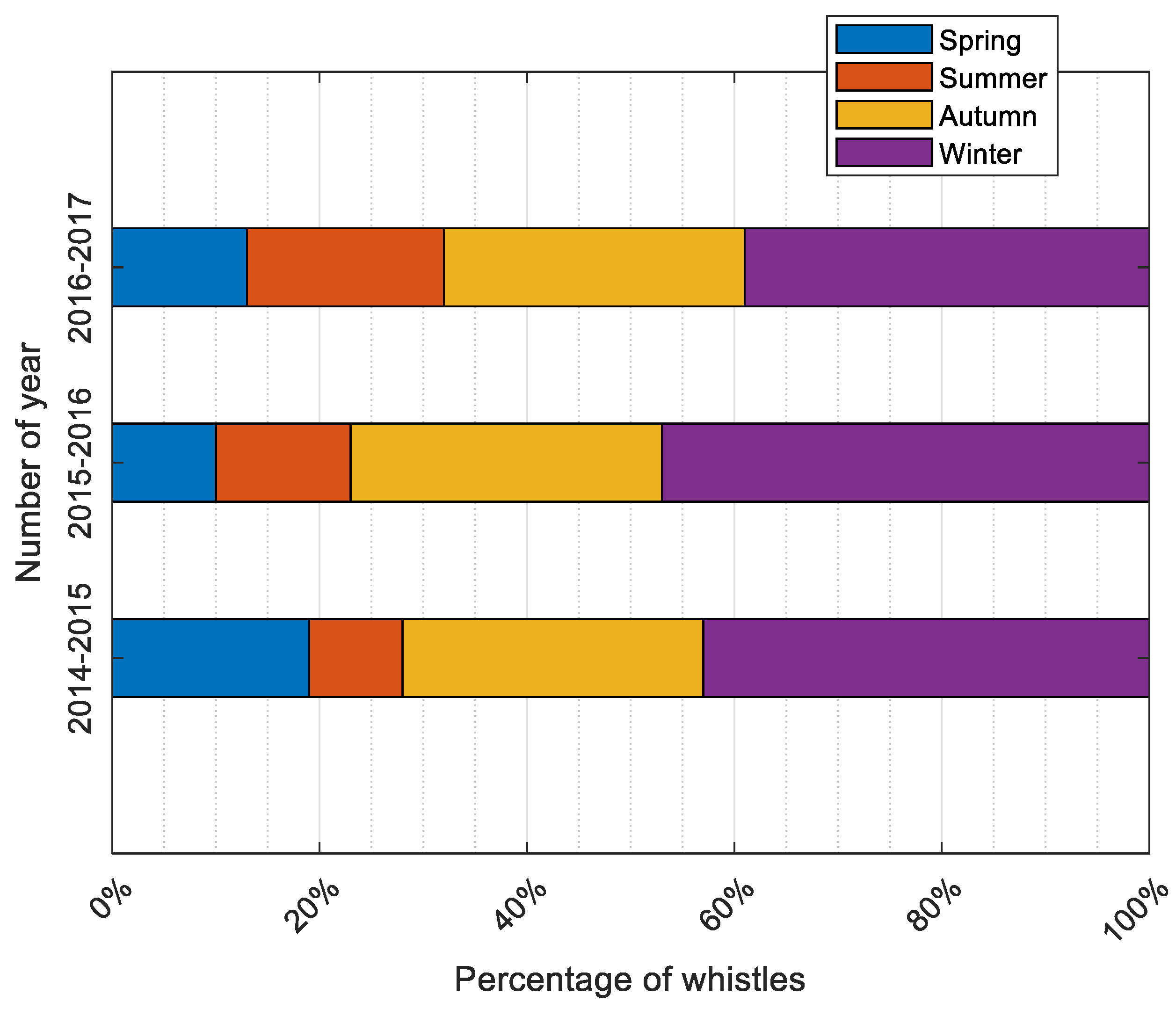

4.3. Comparative Experiments Based on Fisheries Ecology Studies

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Moore, S.E. Marine mammals as ecosystem sentinels. J. Mammal. 2008, 89, 534–540.

2. Laidre, K.L.; Peter Heide-Jørgensen, M.; Gissel Nielsen, T. Role of the bowhead whale as a predator in West Greenland. Mar. Ecol. Prog. Ser. 2007, 346, 285–297.

3. Reeves, R.; Rosa, C.; George, J.C.; Sheffield, G.; Moore, M. Implications of Arctic industrial growth and strategies to mitigate future vessel and fishing gear impacts on bowhead whales. Mar. Policy 2012, 36, 454–462.

4. George, J.C.; Zeh, J.; Suydam, R.; Clark, C. Abundance and Population Trend (1978–2001) of Western Arctic Bowhead Whales Surveyed Near Barrow, Alaska. Mar. Mammal Sci. 2004, 20, 755–773.

5. Jones, N. The Quest for Quieter Seas. Nature 2019, 568, 158–161.

6. Kaklamanis, E.; Purnima, R.C.N.M. Optimal Automatic Wide-Area Discrimination of Fish Shoals from Seafloor Geology with Multi-Spectral Ocean Acoustic Waveguide Remote Sensing in the Gulf of Maine. Remote Sens. 2023, 15, 437.

7. Duane, D.; Godø, O.R.; Makris, N.C. Quantification of Wide-Area Norwegian Spring-Spawning Herring Population Density with Ocean Acoustic Waveguide Remote Sensing (OAWRS). Remote Sens. 2021, 13, 4546.

8. Godin, O.A.; Katsnelson, B.G.; Qin, J.; Brown, M.G.; Zabotin, N.A. Application of time reversal to passive acoustic remote sensing of the ocean. Acoust. Phys. 2017, 63, 309–320.

9. Zhu, C.; Garcia, H.; Kaplan, A.; Schinault, M.; Handegard, N.; Godø, O.; Ratilal, P. Detection, Localization and Classification of Multiple Mechanized Ocean Vessels over Continental-Shelf Scale Regions with Passive Ocean Acoustic Waveguide Remote Sensing. Remote Sens. 2018, 10, 1699.

10. Churnside, J.H.; Naugolnykh, K.; Marchbanks, R.D. Optical remote sensing of sound in the ocean. In Proceedings of the SPIE 9111, Ocean Sensing and Monitoring VI, 91110T; SPIE: New York, NY, USA, 2014.

11. Akulichev, V.A.; Bezotvetnykh, V.V.; Burenin, A.V.; Voytenko, E.A.; Kamenev, S.I.; Morgunov, Y.N.; Polovinka, Y.A.; Strobykin, D.S. Remote acoustic sensing methods for studies in oceanology. Ocean Sci. J. 2006, 41, 105–111.

12. Burtenshaw, J.C.; Oleson, E.M.; Hildebrand, J.A.; McDonald, M.A.; Andrew, R.K.; Howe, B.M.; Mercer, J.A. Acoustic and satellite remote sensing of blue whale seasonality and habitat in the Northeast Pacific. Deep Sea Res. Part II Top. Stud. Oceanogr. 2004, 51, 967–986.

13. Fretwell, P.T.; Jackson, J.A.; Ulloa Encina, M.J.; Häussermann, V.; Perez Alvarez, M.J.; Olavarría, C.; Gutstein, C.S. Using remote sensing to detect whale strandings in remote areas: The case of sei whales mass mortality in Chilean Patagonia. PLoS ONE 2019, 14, e0222498.

14. Garcia, H.A.; Couture, T.; Galor, A.; Topple, J.M.; Huang, W.; Tiwari, D.; Ratilal, P. Comparing Performances of Five Distinct Automatic Classifiers for Fin Whale Vocalizations in Beamformed Spectrograms of Coherent Hydrophone Array. Remote Sens. 2020, 12, 326.

15. Balcazar, N.E.; Tripovich, J.S.; Klinck, H.; Nieukirk, S.L.; Mellinger, D.K.; Dziak, R.P.; Rogers, T.L. Calls reveal population structure of blue whales across the southeast Indian Ocean and the southwest Pacific Ocean. J. Mammal. 2015, 96, 1184–1193.

16. Chapman, R. A Review of “Passive Acoustic Monitoring of Cetaceans”. Trans. Am. Fish. Soc. 2013, 142, 578–579.

17. Campos-Cerqueira, M.; Aide, T.M. Improving distribution data of threatened species by combining acoustic monitoring and occupancy modelling. Methods Ecol. Evol. 2016, 7, 1340–1348.

18. Tervo, O.M.; Christoffersen, M.F.; Parks, S.E.; Møbjerg Kristensen, R.; Teglberg Madsen, P. Evidence for simultaneous sound production in the bowhead whale (Balaena mysticetus). J. Acoust. Soc. Am. 2011, 130, 2257–2262.

19. Ou, H.; Au, W.W.L.; Oswald, J.N. A non-spectrogram-correlation method of automatically detecting minke whale boings. J. Acoust. Soc. Am. 2012, 132, EL317–EL322.

20. Gómez Blas, N.; de Mingo López, L.F.; Arteta Albert, A.; Martínez Llamas, J. Image Classification with Convolutional Neural Networks Using Gulf of Maine Humpback Whale Catalog. Electronics 2020, 9, 731.

21. Xie, Z.; Zhou, Y. The Study on Classification for Marine Mammal Based on Time-Frequency Perception. In Proceedings of the 4th International Conference on Bioinformatics and Biomedical Engineering, Chengdu, China, 18–20 June 2010.

22. Yuanfeng, M.; Chen, K. A time-frequency perceptual feature for classification of marine mammal sounds. In Proceedings of the 9th International Conference on Signal Processing, Beijing, China, 26–29 October 2008.

23. Bahoura, M.; Simard, Y. Blue whale calls classification using short-time Fourier and wavelet packet transforms and artificial neural network. Digit. Signal Process. 2010, 20, 1256–1263.

24. Jiang, B.L.; Duan, F.; Wang, X.; Liu, W.; Sun, Z.; Li, C. Whistle detection and classification for whales based on convolutional neural networks. Appl. Acoust. 2019, 150, 169–178.

25. Ibrahim, A.K.; Zhuang, H.; Erdol, N.; Ali, A.M. A New Approach for North Atlantic Right Whale Upcall Detection. In Proceedings of the 2016 International Symposium on Computer, Consumer and Control (IS3C), Xi’an, China, 4–6 July 2016.

26. Wang, Q.; Zhou, B.; Yu, W. Passive CFAR detection based on continuous wavelet transform of sound signals of marine animal. In Proceedings of the 2017 IEEE International Conference on Signal Processing, Communications and Computing (ICSPCC), Xiamen, China, 22–25 October 2017.

27. Ou, H.; Au, W.W.L.; Van Parijs, S.; Oleson, E.M.; Rankin, S. Discrimination of frequency-modulated Baleen whale downsweep calls with overlapping frequencies. J. Acoust. Soc. Am. 2015, 137, 3024–3032.

28. Adam, O. The use of the Hilbert-Huang transform to analyze transient signals emitted by sperm whales. Appl. Acoust. 2006, 67, 1134–1143.

29. Daubechies, I.; Lu, J.; Wu, H.T. Synchrosqueezed wavelet transforms: An empirical mode decomposition-like tool. Appl. Comput. Harmon. Anal. 2011, 30, 243–261.

30. Luo, X.; Chen, L.; Zhou, H.; Cao, H. A Survey of Underwater Acoustic Target Recognition Methods Based on Machine Learning. J. Mar. Sci. Eng. 2023, 11, 384.

31. Hachicha Belghith, E.; Rioult, F.; Bouzidi, M. Acoustic Diversity Classification Using Machine Learning Techniques: Towards Automated Marine Big Data Analysis. Int. J. Artif. Intell. Tools 2020, 29, 2060011.

32. Yang, H.; Lee, K.; Choo, Y.; Kim, K. Underwater Acoustic Research Trends with Machine Learning: General Background. J. Ocean. Eng. Technol. 2020, 34, 147–154.

33. Mishachandar, B.; Vairamuthu, S. Diverse ocean noise classification using deep learning. Appl. Acoust. 2021, 181, 108141.

34. Yang, H.; Li, J.; Shen, S.; Xu, G. A Deep Convolutional Neural Network Inspired by Auditory Perception for Underwater Acoustic Target Recognition. Sensors 2019, 19, 1104.

35. Li, S.; Jin, X.; Yao, S.; Yang, S. Underwater Small Target Recognition Based on Convolutional Neural Network. In Proceedings of the Global Oceans 2020: Singapore–US Gulf Coast, Biloxi, MS, USA, 5–30 October 2020.

36. Miller, B.S.; Madhusudhana, S.; Aulich, M.G.; Kelly, N. Deep learning algorithm outperforms experienced human observer at detection of blue whale D-calls: A double-observer analysis. Remote Sens. 2022, 9, 104–116.

37. Zhang, L.; Wang, D.; Bao, C.; Wang, Y.; Xu, K. Large-Scale Whale-Call Classification by Transfer Learning on Multi-Scale Waveforms and Time-Frequency Features. Appl. Sci. 2019, 9, 1020.

38. Madhusudhana, S.; Shiu, Y.; Klinck, H.; Fleishman, E.; Liu, X.; Nosal, E.M.; Helble, T.; Cholewiak, D.; Gillespie, D.; Roch, M.A. Improve automatic detection of animal call sequences with temporal context. J. R. Soc. Interface 2021, 18, 20210297.

39. Madhusudhana, S.; Shiu, Y.; Klinck, H.; Fleishman, E.; Liu, X.; Nosal, E.M.; Helble, T.; Cholewiak, D.; Gillespie, D.; Roch, M.A. Temporal context improves automatic recognition of call sequences in soundscape data. J. Acoust. Soc. Am. 2020, 148, 2442.

40. Stafford, K.M.; Lydersen, C.; Wiig, Ø.; Kovacs, K.M. Data from: Extreme diversity in the songs of Spitsbergen’s bowhead whales. Biol. Lett. 2018, 14, 20180056.

41. Erbs, F.; van der Schaar, M.; Weissenberger, J.; Zaugg, S.; André, M. Contribution to unravel variability in bowhead whale songs and better understand its ecological significance. Sci. Rep. 2021, 11, 168.

42. Bu, L.R. Study on Identification and Classification Methods of Whale Acoustic Signals between Whale Species; Tianjin University: Tianjin, China, 2018.

43. Daubechies, I. Ten Lectures on Wavelets; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 1992.

44. Baraniuk, R.G.; Flandrin, P.; Janssen, A.J.E.M.; Michel, O.J.J. Measuring time-frequency information content using the Renyi entropies. IEEE Trans. Inf. Theory 2001, 47, 1391–1409.

45. Stanković, L. A measure of some time–frequency distributions concentration. Signal Process. 2001, 81, 621–631.

46. Zhao, J.; Mao, X.; Chen, L. Speech emotion recognition using deep 1D & 2D CNN LSTM networks. Biomed. Signal Process. Control 2019, 47, 312–323.

47. Wei, Y. Research on Detection and Recognition Technology of Cetacean Call; Harbin Engineering University: Harbin, China, 2022.

48. Forney, K.A.; Barlow, J. Seasonal Patterns in the Abundance and Distribution of California Cetaceans, 1991–1992. Mar. Mammal Sci. 1998, 14, 460–489.

49. Insley, S.J.; Halliday, W.D.; Mouy, X.; Diogou, N. Bowhead whales overwinter in the Amundsen Gulf and Eastern Beaufort Sea. R. Soc. Open Sci. 2021, 8, 202268.

50. Szesciorka, A.R.; Stafford, K.M. Sea ice directs changes in bowhead whale phenology through the Bering Strait. Mov. Ecol. 2023, 11, 8.

51. Chambault, P.; Albertsen, C.M.; Patterson, T.A.; Hansen, R.G.; Tervo, O.; Laidre, K.L.; Heide-Jørgensen, M.P. Sea surface temperature predicts the movements of an Arctic cetacean: The bowhead whale. Sci. Rep. 2018, 8, 9658.

52. Shi, J.; Chen, G.; Zhao, Y.; Tao, R. Synchrosqueezed Fractional Wavelet Transform: A New High-Resolution Time-Frequency Representation. IEEE Trans. Signal Process. 2023, 71, 264–278.

53. Wang, X.-L.; Li, C.-L.; Yan, X. Nonstationary harmonic signal extraction from strong chaotic interference based on synchrosqueezed wavelet transform. Signal Image Video Process. 2018, 13, 397–403.

| Number | Sampling Frequency (Hz) | Whale Frequency Band (Hz) |
|---|---|---|
| 1 | 10,240 | 100–4000 |
| 2 | 10,240 | 500–3000 |
| 3 | 10,240 | 200–2000 |
| 4 | 10,000 | 100–3000 |
| 5 | 10,000 | 100–2500 |
| 6 | 10,000 | 200–2000 |
| … | … | … |
| 55 | 10,240 | 50–2000 |
| 56 | 10,000 | 100–500 |
| 57 | 10,000 | 50–2500 |
| 58 | 10,000 | 100–3500 |
| 59 | 10,240 | 500–4500 |
| 60 | 10,000 | 450–3000 |

| Type | Subtype | Min f (Hz) | Max f (Hz) | Delta f (Hz) | Start f (Hz) | End f (Hz) | Med f (Hz) | Delta Time (s) |
|---|---|---|---|---|---|---|---|---|
| | M | 1055 | 2160 | 1105 | 1724 | 1142 | 1672 | 8.46 |
| | MSG1 | 1077 | 2069 | 1532 | 2002 | 1122 | 2012 | 14.1 |
| | MSG5 | 762 | 2586 | 1823 | 1817 | 820 | 1894 | 10.5 |
| | MSG11 | 1271 | 1771 | 501 | 1722 | 1438 | 1512 | 9.09 |
| | MSG2 | 1163 | 2246 | 1083 | 1962 | 1225 | 1923 | 8.84 |
| | Mo | 1046 | 2015 | 1060 | 1654 | 1125 | 1598 | 7.63 |
| | S | 461 | 872 | 410 | 593 | 803 | 728 | 1.4 |
| | Vigh | 973 | 1605 | 631 | 1461 | 1155 | 1095 | 1 |
| | rumble | 234 | 319 | 84 | 278 | 272 | 279 | 7.3 |
| | Short R | 221 | 298 | 76 | 265 | 258 | 257 | 4.5 |
| | Long R | 266 | 370 | 104 | 309 | 306 | 331 | 14 |
| | sLFdown | 83 | 202 | 119 | 180 | 110 | 147 | 0.0 |
| | sLFconst | 341 | 412 | 71 | 383 | 370 | 376 | 0.3 |
| | sLFconst2 | 589 | 713 | 123 | 673 | 623 | 627 | 0.1 |
| | Minter | 869 | 1153 | 284 | 1135 | 917 | 1049 | 0.6 |
| | MiSG3 | 1137 | 1276 | 139 | 1254 | 1184 | 1198 | 1.2 |
| | Mio | 708 | 1079 | 370 | 1064 | 758 | 958 | 0.3 |
| | sup | 457 | 537 | 80 | 481 | 520 | 504 | 0.0 |

| Layer | Parameters | Output Shape |
|---|---|---|
| Input | 256 × 256 | (256, 256, 1) |
| Conv1 | 5 × 5 conv, filter = 32, padding = 2, strides = 1 | (256, 256, 32) |
| Maxpooling1 | 2 × 2 maxpool, strides = 2 | (128, 128, 32) |
| Conv2 | 3 × 3 conv, filter = 64, padding = 1, strides = 1 | (128, 128, 64) |
| Maxpooling2 | 2 × 2 maxpool, strides = 2 | (64, 64, 64) |
| Feature Connect | Maxpooling2 + Maxpooling1 | (64, 64, 128) |
| LSTM | Activation = “tanh”, Recurrent_activation = “hard_sigmoid”, Return_sequences = True | (8192, 64) |
| Fully Connect1 | 500 hidden neurons | (8192, 500) |
| Fully Connect2 | 200 hidden neurons | (8192, 200) |
| Classifier | softmax | (2, 1) |
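The spatial sizes in the layer table follow from the standard output-size rule for convolution and pooling, floor((size + 2 · padding − kernel) / stride) + 1. The short check below applies that rule to the listed kernel, padding, and stride values. The helper names are hypothetical, and the channel counts are copied from the table rather than derived.

```python
def conv_out(size, kernel, padding, stride):
    """Output length of a convolution along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, window, stride):
    """Output length of an unpadded max-pooling layer along one spatial axis."""
    return (size - window) // stride + 1


size = 256                                             # Input: 256 x 256
conv1 = conv_out(size, kernel=5, padding=2, stride=1)  # Conv1
pool1 = pool_out(conv1, window=2, stride=2)            # Maxpooling1
conv2 = conv_out(pool1, kernel=3, padding=1, stride=1) # Conv2
pool2 = pool_out(conv2, window=2, stride=2)            # Maxpooling2

for name, value in [("Conv1", conv1), ("Maxpooling1", pool1),
                    ("Conv2", conv2), ("Maxpooling2", pool2)]:
    print(f"{name}: {value} x {value}")

# Element counts of the Feature Connect output and of an (8192, 64) tensor.
feature_connect_elems = 64 * 64 * 128
lstm_shape_elems = 8192 * 64
print(feature_connect_elems == lstm_shape_elems)
```

Both convolutions use padding of (kernel − 1) / 2 with stride 1, so they preserve the spatial size, and each 2 × 2 pooling with stride 2 halves it. The final check only shows that the (64, 64, 128) feature map and an (8192, 64) tensor hold the same number of elements; how the tensor is actually fed to the LSTM is not stated in the table.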

| Parameters | Value |
|---|---|
| Activation | ReLU |
| Loss | Categorical cross-entropy |
| Optimizer | Adam |
| Learning rate | 0.001 |
| Dropout | 0.5 |

| Category | Value |
|---|---|
| CPU | Intel Core i9 |
| GPU | NVIDIA GeForce RTX 3070 |
| RAM | 32 GB |
| Software | TensorFlow 2.1 |
| | CUDA 11.1 + cuDNN 8.0 |
| | Python 3.8 |
| | Ubuntu 18.04 |

| Actual Sample | Predicted Positive | Predicted Negative |
|---|---|---|
| Positive | True Positive (TP) | False Negative (FN) |
| Negative | False Positive (FP) | True Negative (TN) |
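The four confusion-matrix cells are usually combined into accuracy, precision, recall, and F1 score. The sketch below uses those standard definitions; which of these metrics the experiments actually report is not shown in this excerpt, and the counts in the usage line are made-up illustrative numbers, not results from the paper.

```python
def classification_metrics(tp, fn, fp, tn):
    """Standard binary-classification metrics from confusion-matrix counts."""
    total = tp + fn + fp + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical counts, for illustration only.
metrics = classification_metrics(tp=90, fn=10, fp=5, tn=95)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Precision penalizes false detections (FP), while recall penalizes missed whistles (FN). F1 is their harmonic mean, which is useful when the positive and negative classes are imbalanced.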

| | SCR of STFT | SCR of SWT | SCR of Adaptive SWT | Increase (STFT to Adaptive SWT) | Percentage Increase (STFT to Adaptive SWT) |
|---|---|---|---|---|---|
| Stationary | 192.235 | 274.832 | 361.794 | 169.559 | 88.20% |
| Nonstationary | 191.364 | 265.649 | 353.508 | 162.144 | 84.73% |
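The last two columns of the SCR table are derived from the first and third: the increase is the adaptive-SWT value minus the STFT value, and the percentage increase is that difference divided by the STFT value. A small sketch of the arithmetic, using the SCR values from the table (the function name `scr_gain` is hypothetical):

```python
def scr_gain(scr_stft, scr_adaptive_swt):
    """Absolute and percentage SCR increase of adaptive SWT over STFT."""
    increase = scr_adaptive_swt - scr_stft
    percent = 100.0 * increase / scr_stft
    return increase, percent


# (SCR of STFT, SCR of adaptive SWT), copied from the table.
scr_values = {
    "Stationary": (192.235, 361.794),
    "Nonstationary": (191.364, 353.508),
}

for case, (stft, adaptive) in scr_values.items():
    increase, percent = scr_gain(stft, adaptive)
    print(f"{case}: increase = {increase:.3f}, percentage = {percent:.2f}%")
```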

| | 1 dB | −5 dB | −10 dB | −15 dB |
|---|---|---|---|---|
| Original | 4.123 | 6.779 | 7.548 | 8.413 |
| STFT | 3.521 | 5.345 | 6.533 | 7.817 |
| Adaptive SWT | 2.557 | 4.581 | 5.954 | 7.234 |

| | 1 dB | −5 dB | −10 dB | −15 dB |
|---|---|---|---|---|
| Original | 4.314 | 6.865 | 8.212 | 9.023 |
| STFT | 3.568 | 5.421 | 7.124 | 8.224 |
| Adaptive SWT | 2.685 | 4.612 | 6.296 | 7.752 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Feng, R.; Xu, J.; Jin, K.; Xu, L.; Liu, Y.; Chen, D.; Chen, L. An Automatic Deep Learning Bowhead Whale Whistle Recognizing Method Based on Adaptive SWT: Applying to the Beaufort Sea. Remote Sens. 2023, 15, 5346. https://doi.org/10.3390/rs15225346
